Abstract

Feature selection (FS) is applied to reduce data dimensionality while retaining as much information as possible. Many optimization methods have been applied to enhance the efficiency of FS algorithms. These approaches reduce the processing time and improve the accuracy of the learning models. In this paper, a method called MPAO, based on the marine predators algorithm (MPA) and the “narrowed exploration” strategy of the Aquila optimizer (AO), is proposed to handle FS, global optimization, and engineering problems. This modification enhances the exploration behavior of the MPA so that it updates and explores the search space more widely. The narrowed exploration of the AO increases the search ability of the MPA, improving its ability to obtain optimal or near-optimal results and helping the original MPA overcome local optima in the problem domain. The performance of the proposed MPAO method is evaluated on FS and global optimization problems using several evaluation criteria, including the maximum value (Max), minimum value (Min), and standard deviation (Std) of the fitness function. Furthermore, the results are compared with those of other meta-heuristic methods on four engineering problems. Experimental results confirm the efficiency of the proposed MPAO method in solving FS, global optimization, and engineering problems.

MSC: 68U35

1. Introduction

The curse of dimensionality is one of the most frequently tackled topics in recent research. Many redundant, irrelevant, or noisy features might contaminate the feature space. Hence, feature selection (FS) has been prominent for many years. The literature classifies FS methods into two main categories: filter and wrapper [1]. Filter methods take advantage of statistical measures of the features; for example, a filter might eliminate or keep features based on their values. Filter methods are fast but less sensitive to the classifier used [2]. Wrapper methods select subsets of the feature space and evaluate the classifier performance on each subset. However, they are computationally expensive and time consuming if a brute-force search is used [3]. This is where metaheuristic algorithms play a clear role: they can search the feature space for the subset that achieves the highest classification accuracy [4].

Metaheuristic algorithms can be classified into four classes: (1) swarm intelligence algorithms, (2) human-based algorithms, (3) chemistry and physics algorithms, and (4) evolution algorithms. Swarm-based algorithms depend on studying the behavior of flocks and how they interact to reach food sources. Examples of such algorithms are the grasshopper optimization algorithm (GOA) [5], Harris hawk optimization [6], snake optimizer (SO) [7], and coot bird optimizer [8]. Human-based algorithms depend on simulating physical and nonphysical human behaviors. This class includes teaching learning-based optimization (TLBO) [9], the social-based algorithm (SBA) [10], and the imperialist competitive algorithm (ICA) [11]. Chemistry and physics algorithms are derived from chemical or physical laws, such as the ion motion algorithm [12], lightning search algorithm [13], and vortex search algorithm [14]. Evolution algorithms imitate the evolution process of biological creatures, such as the genetic algorithm (GA) [15], differential evolution (DE) [16], bacterial foraging optimization (BFO) [17], and memetic algorithm (MA) [18]. However, the no free lunch theorem [19] states that an optimization algorithm that solves some optimization problems well may not be able to solve all problems. That motivates scientists to propose more algorithms or to enhance the established ones.

In 2020, the marine predators algorithm (MPA) was introduced by the authors of [20]. The various foraging strategies of predators in biological interactions inspired the development of MPA, and its mathematical model mimics the foraging behavior of marine predators in nature. MPA accommodates Lévy and Brownian statistical distributions. The Lévy strategy searches the space with small steps associated with long jumps. Meanwhile, the Brownian strategy sweeps the search space in controlled and uniform steps. The virtue of the Lévy strategy is its deep and accurate search, while the Brownian strategy ensures visiting distant areas. This cooperation improved the search ability of MPA significantly. The statistical results showed that MPA outperformed the genetic algorithm (GA), cuckoo search (CS), gravitational search algorithm (GSA), particle swarm optimization (PSO), salp swarm algorithm (SSA), and covariance matrix adaptation evolution strategy (CMA-ES). The MPA has also shown high performance in solving engineering problems. It shows a high convergence rate toward the global optimum and does not require training its parameters. However, according to the “no free lunch” theorem, no single metaheuristic algorithm suits every optimization problem, which encourages applying and adapting such algorithms across different problems. Moreover, a new metaheuristic algorithm named Aquila optimizer (AO) was introduced in [21]. It is inspired by the hunting behavior of one of the most intelligent birds, Aquila, a dark brown bird belonging to the Accipitridae family. The AO was successfully applied to optimize the parameters of PID controllers [22] and multilevel inverters [23].

Based on the preceding review, the novelty and contributions of this study can be summarized in the following points:

- Improve the exploration phase of the MPA algorithm.

- Apply the “narrowed exploration” strategy of the AO in the MPA algorithm.

- Evaluate the proposed method using different optimization problems.

2. Related Work

Although MPA is a recent metaheuristic algorithm, it suffers from early convergence. This drawback affects its accuracy in classification tasks. The authors in [24] addressed this drawback by hybridizing MPA with simulated annealing (SA). SA widened the MPA search space and improved its efficiency in FS tasks on high-dimensional datasets. Many metaheuristic algorithms successfully balance the exploration and exploitation phases by embedding chaos theory, which offers an alternative way to generate random numbers in the algorithm. The authors in [25] introduced a chaotic MPA for feature selection. The practical results showed that the improved MPA achieved the optimal number of features in many experiments. One of MPA’s drawbacks is its unidirectional search for prey. This drawback prompted the authors in [26] to embed opposition-based learning concepts [27] so that the search can examine all possible directions. This improvement saves the MPA from stagnation in local optima. The proposed method achieved the best convergence rate and selected the optimal feature set compared with other competitive algorithms. The limited search ability of MPA is addressed again in [28], where the authors hybridize MPA with the sine cosine algorithm (SCA). Extensive experiments were conducted to evaluate the performance of the hybrid approach, and it showed its superiority in all accuracy measures.

The authors in [29] noticed that the prey movement of MPA depends on two simple strategies: Lévy flight and Brownian motion. The problem search space is often too complex to be searched by those simple strategies, which can trap the native MPA in local optima. They proposed a co-evolutionary cultural mechanism that divides the population into subpopulations. Each subpopulation explores its own search space and shares accumulated experience with the others. In addition, two operators are added to enhance the diversity of the population and allow better experience exchange to support the exploitation of the native MPA. The proposed approach was tested on the FS task and proved its superiority over competitive algorithms. Moreover, it was used to optimize the parameters of the SVM algorithm.

The two most significant drawbacks of the MPA are the lack of population diversity and poor convergence performance. In this regard, Gang et al. [30] tackled the weakness of MPA regarding population diversity. Although MPA is new and successful in many applications, its solutions still need to be more accurate. They proposed an enhanced MPA that adds a neighborhood-based learning strategy and an adaptive technique to control the population size. Their approach was tested on state-of-the-art datasets for solving optimization problems and on real-life applications. Compared with the original MPA, the enhanced version showed superior performance. In 2021, Gang et al. [31] also introduced a hybrid MPA for the shape optimization problem of developable ball surfaces. The hybrid combined MPA with the differential evolution algorithm and a quasi-opposition strategy to help the original MPA jump out of local optima and enrich the population diversity. Their approach solved the shape optimization problem effectively in terms of robustness and precision.

As a new metaheuristic algorithm, MPA was applied in solving engineering problems in [32]. However, its convergence was treated by the following steps: (1) The logistic chaos function was used to ensure the quality of population diversity. (2) An adjustment transition strategy was added to maintain both exploration and exploitation. Moreover, the problem of falling into local optima was solved by updating the step information of predators by modifying the sigmoid function. Finally, after adding the golden sine factor, the proposed MPA achieved a better convergence rate, and its proposed solutions were diverse enough to solve engineering problems effectively.

MPA has been introduced to solve engineering and renewable energy problems such as predicting wind power in [33]. Mohamed et al. optimized the parameters of ANFIS (adaptive neuro-fuzzy inference system) using augmented MPA. In their augmented MPA, they added a mutation operator to the original MPA to overcome its sticking in local optima. The experiments revealed the competency of their modified MPA approach over many time-series forecasting algorithms.

Multilevel thresholding is an essential preprocessing step in image segmentation. Selecting the optimal threshold affects the accuracy of the segmented image. An improved MPA has been proposed in [34]; it proposed a strategy to steer the worst solutions toward the best ones and, at the same time, randomly in the search space to improve the convergence rate and prevent sticking in local optima. Another strategy is added to improve the exploration and exploitation capabilities. The experimental results showed the competency of the proposed MPA over other metaheuristic algorithms.

From the previous works, we can conclude that MPA has several drawbacks that the approaches above tackle, such as premature convergence, unidirectional search for prey, and stagnation in local optima. The motivation of our research is to offset the weaknesses of the original MPA with the strengths of another metaheuristic algorithm, the Aquila optimizer (AO).

The AO was introduced in 2021 to solve continuous optimization problems. A binary version of AO was later used for wrapper-based FS on a COVID-19 medical dataset [35], where transfer functions of different shapes convert AO from its continuous nature into a binary one. That approach proved competitive in several respects, such as increasing accuracy, balancing exploration and exploitation, reducing the number of selected features, and converging quickly. Deep learning techniques have also been used as feature extractors, with AO then applied for feature selection. In [36], the MobileNetV3 deep learning method extracted the features of medical images, and a binary thresholding version of AO selected the most relevant features to detect COVID-19 from X-ray images. The method showed high performance and was suggested for many other application areas. Similarly, the thresholding binary version of AO was applied to intrusion detection in an IoT environment [37]: a CNN-based deep learning technique is used first for feature extraction, and then the binary AO selects the most important features. This research confirmed that binary AO is competitive in areas other than medical applications.

The strengths of the AO algorithm can also compensate for the weaknesses of other metaheuristic algorithms. The hybrid combination of the Harris hawk algorithm with AO enhanced the search ability of the former, and the hybrid approach proposed in [38] proved its competence in solving optimization problems. A hybrid algorithm that combined the exploration of AO and the exploitation of Harris hawk emerged in [39]. The robust search capability of AO is integrated with random opposition-based learning to enhance the Harris hawk optimization algorithm. An intensive performance study tests its exploration, its exploitation, and its tendency to stagnate in local optima. The results show its superiority and its competence in solving industrial engineering problems.

3. Material and Methods

This section briefly describes the marine predators algorithm and Aquila optimizer.

3.1. Marine Predators Algorithm (MPA)

The MPA was presented in [20] and is inspired by the movements of ocean predators. The main steps of MPA are as follows:

- MPA initialization: As with all population-based algorithms, MPA has an initialization step in which the initial solutions are distributed uniformly over the search space, as shown in Equation (1), where l and u are the lower and upper bounds, respectively, and the multiplier is a random vector in [0, 1]. The fittest solution is selected to form a matrix called Elite, shown in Equation (2). The Elite matrix has dimension n × d, where n is the number of search agents and d is the number of problem dimensions. Another matrix called Prey is constructed with the same dimensions as Elite, as shown in Equation (3). The process of MPA is split into three levels based on the velocity ratio between predator and prey.

- Prey moving faster than predator (exploration phase): When the prey is faster than the predator, the predator’s best strategy is to remain stationary. This phase covers the first third of the iterations, where exploration is more important. The prey position is updated by a step size calculated as shown in Equation (4), which uses a vector of random numbers. The new prey position is then obtained as shown in Equation (5), which uses a random vector in [0, 1].

- Predator and prey moving at the same rate (exploitation vs. exploration): When the predator and the prey move at the same speed, they are both on the prowl for prey. This phase occurs during the intermediate stage of the optimization process, when exploration is gradually converted to exploitation. It is critical for both exploration and exploitation. As a result, half of the population is used for exploration and the other half is used for exploitation. During this phase, the predator is in charge of exploration, while the prey is in charge of exploitation. The new positions for the first half of the population (supporting exploitation) are updated as shown in Equation (6), which uses a vector of random numbers based on the Lévy distribution. The new positions for the second half of the population (supporting exploration) are updated as shown in Equation (7), where A is a control parameter calculated as shown in Equation (8).

- Predator moving faster than prey (exploitation): This scenario occurs during the final stage of the optimization process and is typically associated with a high capacity for exploitation. The prey positions are updated as shown in Equation (9). To maintain the search, the effect of fish aggregating devices (FADs) is included. The mathematical model of FADs is defined in Equation (10). Algorithm 1 shows the pseudo code of the MPA.
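
For reference, the update rules behind the steps above are commonly written as follows in the MPA literature [20]. The notation is an assumption made here for illustration and may differ from that of Equations (1)–(10): $R_B$ and $R_L$ denote Brownian and Lévy random vectors, $R$ a uniform random vector in [0, 1], $P$ a constant (often set to 0.5), $S_i$ the step size of agent $i$, $t$ and $T$ the current and maximum iteration, and $\otimes$ element-wise multiplication.

```latex
\begin{align*}
X_0 &= l + R \otimes (u - l) \\[4pt]
\text{Phase 1 } (t < T/3):\quad
  S_i &= R_B \otimes (\mathrm{Elite}_i - R_B \otimes \mathrm{Prey}_i), &
  \mathrm{Prey}_i &\leftarrow \mathrm{Prey}_i + P\, R \otimes S_i \\
\text{Phase 2, first half}:\quad
  S_i &= R_L \otimes (\mathrm{Elite}_i - R_L \otimes \mathrm{Prey}_i), &
  \mathrm{Prey}_i &\leftarrow \mathrm{Prey}_i + P\, R \otimes S_i \\
\text{Phase 2, second half}:\quad
  S_i &= R_B \otimes (R_B \otimes \mathrm{Elite}_i - \mathrm{Prey}_i), &
  \mathrm{Prey}_i &\leftarrow \mathrm{Elite}_i + P\, A \otimes S_i \\
\text{Phase 3 } (t > 2T/3):\quad
  S_i &= R_L \otimes (R_L \otimes \mathrm{Elite}_i - \mathrm{Prey}_i), &
  \mathrm{Prey}_i &\leftarrow \mathrm{Elite}_i + P\, A \otimes S_i \\[4pt]
A &= \left(1 - \frac{t}{T}\right)^{2t/T}
\end{align*}
```

In this form, the control parameter $A$ shrinks toward zero as $t$ approaches $T$, so steps taken around the Elite become progressively smaller in the later phases.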

Algorithm 1.

MPA pseudo code.
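
As a rough illustration of the MPA steps described in this subsection, the following Python sketch implements a simplified MPA loop for minimization. It is not the pseudo code of [20]: the function names (`levy_step`, `mpa`, `sphere`), the parameter values (`P=0.5`, `fads=0.2`), the Mantegna-style Lévy generator, the per-dimension random numbers, and the scalar bounds are all assumptions made for the sake of a short, runnable example.

```python
import math
import random


def levy_step(beta=1.5):
    """Draw one Levy-distributed step with Mantegna's method (an assumed generator)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma = (num / den) ** (1 / beta)
    u = random.gauss(0, sigma)
    v = random.gauss(0, 1) or 1e-12  # guard against an exact zero
    return u / abs(v) ** (1 / beta)


def mpa(fitness, lower, upper, dim, n_agents=20, max_iter=150, P=0.5, fads=0.2, seed=0):
    """Minimize `fitness` over [lower, upper]^dim with a simplified MPA loop."""
    random.seed(seed)
    prey = [[lower + random.random() * (upper - lower) for _ in range(dim)]
            for _ in range(n_agents)]
    fit = [fitness(p) for p in prey]
    best = min(range(n_agents), key=fit.__getitem__)
    elite, elite_fit = prey[best][:], fit[best]

    for t in range(max_iter):
        A = (1 - t / max_iter) ** (2 * t / max_iter)  # adaptive control parameter
        for i in range(n_agents):
            new = []
            for j in range(dim):
                rb, rl, r = random.gauss(0, 1), levy_step(), random.random()
                e, p = elite[j], prey[i][j]
                if t < max_iter / 3:            # phase 1: Brownian exploration
                    x = p + P * r * rb * (e - rb * p)
                elif t < 2 * max_iter / 3:      # phase 2: half Levy, half Brownian
                    if i < n_agents // 2:
                        x = p + P * r * rl * (e - rl * p)
                    else:
                        x = e + P * A * rb * (rb * e - p)
                else:                           # phase 3: Levy exploitation
                    x = e + P * A * rl * (rl * e - p)
                new.append(x)
            # FADs effect: a random jump, or a step along two random agents
            r = random.random()
            if r <= fads:
                new = [x + A * (lower + random.random() * (upper - lower))
                       * (random.random() < fads) for x in new]
            else:
                a, b = random.sample(range(n_agents), 2)
                new = [x + (fads * (1 - r) + r) * (prey[a][j] - prey[b][j])
                       for j, x in enumerate(new)]
            new = [min(max(x, lower), upper) for x in new]  # keep inside the bounds
            f = fitness(new)
            if f < fit[i]:                      # memory saving: keep improvements only
                prey[i], fit[i] = new, f
                if f < elite_fit:
                    elite, elite_fit = new[:], f
    return elite, elite_fit


def sphere(x):
    """Toy objective used only for this example."""
    return sum(v * v for v in x)


best_x, best_f = mpa(sphere, -10.0, 10.0, dim=5)
print(best_f)
```

Because a new position is kept only when it improves the agent's fitness, the returned value can never be worse than the best member of the initial population.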

3.2. Aquila Optimizer (AO)

AO [21] was introduced in 2021 as an optimization algorithm. The algorithm mimics Aquila hunting behavior and has four main stages: initialization, exploration, exploitation, and finally, reaching the optimal solution. The initialization stage starts by initializing population X with N agents as shown in Equation (11).

Equation (11) is defined in terms of the upper and lower bounds of the search space, the number of problem dimensions, and a random value.

The next phase of the AO approach is to either explore or exploit until the best solution is identified. According to [21], there are two methods each for exploration and exploitation. The first technique uses the best agent and the average of all agents to carry out the exploration. The mathematical formulation of this method is as follows:

where T denotes the maximum number of iterations.

The second method, expressed as follows, uses the best agent and the Lévy flight distribution to improve the exploration ability of the solutions.

where u and a second variable are random numbers, while two further parameters are constants. Equation (14) also uses a randomly chosen agent. In addition, y and x are used to mimic the spiral shape, and they are written as:

These spiral expressions depend on two random values and a random number.
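
The narrowed exploration step described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the update form and the constants (`s=0.01`, `beta=1.5`, `omega=0.005`, `big_u=0.00565`, a cycle count drawn from [1, 20], and a base angle of 3π/2) follow the way the AO is usually presented in [21], and the function names `levy` and `narrowed_exploration` are hypothetical.

```python
import math
import random


def levy(beta=1.5, s=0.01):
    """Levy flight term s * u * sigma / |v|**(1/beta), with u, v random in (0, 1]."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)))
    u = 1.0 - random.random()
    v = 1.0 - random.random()  # in (0, 1], which avoids division by zero
    return s * u * sigma / abs(v) ** (1 / beta)


def narrowed_exploration(best, population, omega=0.005, big_u=0.00565):
    """Return a candidate built from the best agent, a random agent, and a spiral term."""
    r1 = random.uniform(1, 20)           # assumed number of search cycles
    theta1 = 3 * math.pi / 2             # assumed base angle of the spiral
    x_r = random.choice(population)      # randomly chosen agent
    new = []
    for j in range(len(best)):
        d1 = j + 1                       # dimension index, starting at 1
        r = r1 + big_u * d1
        theta = -omega * d1 + theta1
        y, x = r * math.cos(theta), r * math.sin(theta)
        new.append(best[j] * levy() + x_r[j] + (y - x) * random.random())
    return new
```

The spiral term can add an offset of up to roughly 20 per dimension, so in practice the returned candidate would be clipped to the search bounds before evaluation.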

The first exploitation technique in [21] is based on the best agent and the average of all agents, and it is computed as:

Two parameters adjust the exploitation, and rand denotes a random value in [0, 1].

In the second exploitation phase, the solution is updated using the best agent, the Lévy flight distribution, and the quality function. That is defined as follows:

In addition, one term represents the various motions used to track the optimal solution, as in Equation (21).

That term is computed from a random value. A second term, on the other hand, decreases from 2 to 0, and it is calculated as:
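
For reference, the four AO update rules described in this subsection are usually written as follows in the AO literature [21]. The symbols are assumptions made here and may differ from those of Equations (12)–(22): $X_{best}$ is the best agent, $X_M$ the mean of all agents, $X_R$ a randomly chosen agent, $rand$ a random value in [0, 1], $\alpha$ and $\delta$ the exploitation adjustment parameters, $UB$ and $LB$ the bounds, $QF$ the quality function, and $G_1$, $G_2$ the motion terms.

```latex
\begin{align*}
X_1(t+1) &= X_{best}(t)\left(1 - \frac{t}{T}\right) + \bigl(X_M(t) - X_{best}(t)\cdot rand\bigr),
  \qquad X_M(t) = \frac{1}{N}\sum_{i=1}^{N} X_i(t) \\
X_2(t+1) &= X_{best}(t)\cdot \mathrm{Levy}(D) + X_R(t) + (y - x)\cdot rand \\
X_3(t+1) &= \bigl(X_{best}(t) - X_M(t)\bigr)\,\alpha - rand + \bigl((UB - LB)\cdot rand + LB\bigr)\,\delta \\
X_4(t+1) &= QF\cdot X_{best}(t) - \bigl(G_1\cdot X(t)\cdot rand\bigr) - G_2\cdot \mathrm{Levy}(D) + rand\cdot G_1 \\
QF(t) &= t^{\frac{2\,rand - 1}{(1 - T)^2}}, \qquad
G_1 = 2\,rand - 1, \qquad
G_2 = 2\left(1 - \frac{t}{T}\right)
\end{align*}
```

With this form, $G_2$ decreases linearly from 2 to 0 over the run, which matches the description above.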

4. Proposed Method

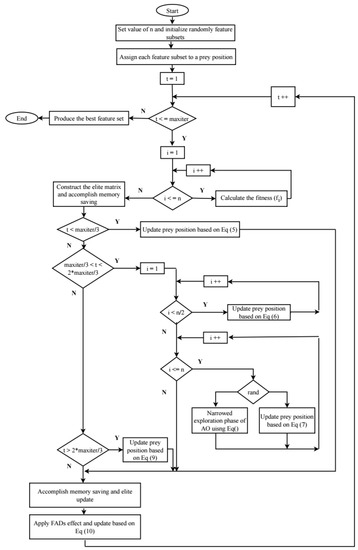

This section describes the proposed MPAO method. This method aims to improve the optimization technique of the original MPA using the narrowed exploration strategy of the AO. This modification enhances the exploration behavior of the MPA so that it updates the search space and explores more regions of the search domain. The narrowed exploration of the AO thus increases the search ability of the MPA, improving its ability to obtain optimal or near-optimal results and helping the original MPA overcome local optima in the problem domain. The choice between the two operators is based on a random variable: if its value is greater than 0.25, the narrowed exploration (Equation (14)) is applied; otherwise, the operators of MPA (Equation (7)) are used.

The optimization process of MPAO starts by setting the values of all parameters and creating the initial population in the search space. Then, the fitness function checks the quality of the solutions using Equation (23); if the current solution is better than the old one, the MPAO saves it as the best solution.

where the first part of the equation is the error of the classification phase (in this study, K-NN is used as the classifier). The second part of the equation is the ratio of selected features, where s is the number of selected features and S denotes the total number of features. The equation also involves a random value.
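
The fitness evaluation described above can be illustrated with a small Python sketch. It assumes the common wrapper form, fitness = λ × error + (1 − λ) × s/S, with a simple K-NN classifier; the weight `lam=0.9`, the binary `mask` encoding, the guard for an empty subset, and the function names are assumptions made for illustration rather than the exact definition of Equation (23).

```python
from collections import Counter


def knn_error(train, test, mask, k=1):
    """Error rate of K-NN using only the features whose mask entry is truthy.

    `train` and `test` are lists of (feature_list, label) pairs.
    """
    idx = [j for j, keep in enumerate(mask) if keep]
    wrong = 0
    for x, label in test:
        # Sort training samples by squared Euclidean distance on selected features
        nearest = sorted(train, key=lambda s: sum((s[0][j] - x[j]) ** 2 for j in idx))[:k]
        predicted = Counter(lbl for _, lbl in nearest).most_common(1)[0][0]
        wrong += predicted != label
    return wrong / len(test)


def fs_fitness(mask, train, test, lam=0.9, k=1):
    """Weighted sum of classification error and selected-feature ratio (lower is better)."""
    if not any(mask):
        return 1.0  # hypothetical guard: an empty subset gets the worst score
    gamma = knn_error(train, test, mask, k)
    return lam * gamma + (1 - lam) * sum(mask) / len(mask)


# Toy data: feature 0 separates the classes, feature 1 is noise.
train = [([0.0, 5.0], 0), ([0.1, -3.0], 0), ([1.0, 4.0], 1), ([0.9, -4.0], 1)]
test = [([0.05, -4.0], 0), ([0.95, 5.0], 1)]
print(fs_fitness([1, 0], train, test), fs_fitness([1, 1], train, test))
```

On this toy data, dropping the noisy feature lowers both the error term and the feature-ratio term, which is the behavior the fitness function is meant to reward.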

After that, the optimization begins by discovering the search space and evaluating the solutions using the fitness function to determine the initial optimal values.

In the second third of the optimization process, the MPAO decides whether to update the solutions using the MPA or the AO operator based on a random value. This step improves the exploration phase and adds more diversity to the search space. Finally, all values obtained by the fitness function are checked; then, the best one is selected and saved. The above steps can be summarized as follows:

- Declare the experiment variables and their values.

- Generate the X population randomly with a specific size and dimension.

- Start the main loop of the MPAO.

- Apply the fitness function for all solutions.

- Return the best value.

The above steps are iterated until reaching the stop condition. The structure of the MPAO is presented in Figure 1.
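
The random switch between the two operators can be sketched in Python as follows. This is only an illustration of the decision rule stated in this section (a random value compared against 0.25); the uniform [0, 1] draw, the operator bodies, their constants, and the names `ao_narrowed`, `mpa_brownian`, and `mpao_update` are assumptions, not the authors' implementation.

```python
import math
import random


def levy(beta=1.5, s=0.01):
    """Levy flight term with u, v drawn from (0, 1] (AO-style form, assumed)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)))
    u, v = 1.0 - random.random(), 1.0 - random.random()
    return s * u * sigma / v ** (1 / beta)


def ao_narrowed(elite, population):
    """AO narrowed exploration around the best solution (spiral constants assumed)."""
    r1, theta1 = random.uniform(1, 20), 3 * math.pi / 2
    x_r = random.choice(population)
    out = []
    for j, e in enumerate(elite):
        r, theta = r1 + 0.00565 * (j + 1), -0.005 * (j + 1) + theta1
        spiral = r * math.cos(theta) - r * math.sin(theta)  # y - x
        out.append(e * levy() + x_r[j] + spiral * random.random())
    return out


def mpa_brownian(prey_i, elite, A, P=0.5):
    """MPA Brownian update around the Elite, scaled by the control parameter A."""
    out = []
    for e, p in zip(elite, prey_i):
        rb = random.gauss(0, 1)
        out.append(e + P * A * rb * (rb * e - p))
    return out


def mpao_update(prey_i, elite, population, A, threshold=0.25):
    """Pick the AO operator when the random draw exceeds the threshold, else MPA."""
    if random.random() > threshold:
        return "AO", ao_narrowed(elite, population)
    return "MPA", mpa_brownian(prey_i, elite, A)
```

With a uniform draw and a threshold of 0.25, the AO operator would be chosen for roughly three quarters of the updates in this phase.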

Figure 1.

Proposed MPAO architecture.

5. Experiments and Discussion

This section evaluates the proposed MPAO using three experiments: solving global optimization problems, selecting the essential features, and solving real engineering problems. The proposed method is compared with nine optimization methods: PSO [40], GA [41], AO [21], MFO [42], MPA [20], SSA [43], GOA [5], slime mold algorithm (SMA) [44], and whale optimization algorithm (WOA) [45]. Table 1 lists the parameter settings for all of them.

Table 1.

Parameter settings.

In the experiments, several performance measures are used to evaluate the proposed method, namely the average, minimum (Min), standard deviation (Std), and maximum (Max) values of the fitness function, as defined in Equations (24)–(26). In addition, the classification accuracy is defined in Equation (27).

where f denotes the value of the objective function and N denotes the size of the sample; the mean of f over the sample also appears in these equations.

where TN and TP denote true negative and true positive results, respectively, and FN and FP denote false negative and false positive results.
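
These measures can be computed with a few lines of Python. The sketch below uses the sample standard deviation (division by N − 1), which is an assumption since the normalization could also be by N; the function names are hypothetical.

```python
import statistics


def summarize(fitness_values):
    """Average, Min, Max, and Std of the fitness values from independent runs."""
    return {
        "avg": statistics.mean(fitness_values),
        "min": min(fitness_values),
        "max": max(fitness_values),
        "std": statistics.stdev(fitness_values),  # sample Std, divides by N - 1
    }


def accuracy(tp, tn, fp, fn):
    """Classification accuracy from the confusion-matrix counts."""
    return (tp + tn) / (tp + tn + fp + fn)


print(summarize([1.0, 2.0, 3.0, 4.0]))
print(accuracy(tp=40, tn=50, fp=5, fn=5))
```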

5.1. Experiment 1: Global Optimization

This experiment discusses the experimental results using the CEC2019 benchmark [46] by comparing the MPAO to some state-of-the-art advanced competitors, including PSO, GA, AO, MFO, SMA, MPA, SSA, GOA, and WOA. Table 2, Table 3, Table 4 and Table 5 report the results of average fitness, Std, Max, and Min over ten test functions of the CEC 2019 benchmark. In all tables, the boldface refers to the best value.

Table 2.

Average of the fitness function.

Table 3.

Average of the standard deviation of the fitness function.

Table 4.

Minimum values of the fitness function.

Table 5.

Maximum values of the fitness function.

Concerning the average fitness computed for all the counterparts, Table 2 shows that the proposed MPAO is superior in six out of 10 functions (F2–F5 and F7–F8), followed by the MPA, which is best in three functions (F3, F6, and F10). On the other hand, the PSO, GA, MFO, and WOA each show the best value in only one out of 10 functions. The SSA and GOA failed to achieve the best value on any function.

The Std values, reported in Table 3, show the stability of the results obtained by the competitors over the test functions. The proposed MPAO shows the lowest Std values in five out of ten functions (F2, F3, F5, F7, and F10), which reflects the stability of the algorithm. The MFO ranks second, being the most stable on only two functions (F4 and F9), whereas the other competitors did not show comparable stability across the functions.

Table 4 reports the Max values computed from each counterpart’s fitness value of the testing functions. The MPAO reports the Max value in the majority of functions (F2–F8), followed by the MPA that shows the maximal over two functions only (F9–F10). The rest of the competing algorithms cannot provide any maximum value in all the testing functions.

Table 5 presents the Min values of fitness computed for the counterparts over the testing functions. The MPAO shows the lowest values in five functions (F2–F5 and F9), followed by PSO and MPA, each of which shows the lowest values in only two functions. The MFO and SMA each obtain the lowest value in only one function, namely F8 and F1, respectively.

5.2. Experiment 2: Feature Selection

This experiment evaluates the proposed method on a set of UCI datasets. These benchmark datasets are collected from distinct fields such as biology, electromagnetics, games, physics, politics, and chemistry. Furthermore, each benchmark has a different number of instances, features, and categories. The description of each benchmark is provided in Table 6.

Table 6.

Description of the UCI datasets.

In this subsection, we present and discuss the experimental results of the comparisons conducted on the feature selection problem. These comparisons involved the proposed MPAO, PSO [40], GA [41], AO [21], MFO [42], SMA [44], MPA [20], SSA [43], GOA [5], and WOA [45] with the previously described evaluation metrics. Table 7 reports the average of the fitness function, calculated for all compared methods over the 15 datasets. The table shows that the proposed MPAO was superior in 13 out of 15 datasets, whereas the PSO achieved the best values in three cases, followed by the MPA and SSA, each of which was best in one dataset. These results indicate that the MPAO was accurate and superior in terms of average fitness. In all tables, the boldface refers to the best value. We next analyze the maximum (Max) and minimum (Min) values of the fitness function, which reveal the worst and best values each algorithm reached.

Table 7.

Results of the average of the fitness value.

Table 8 demonstrates the Min values of fitness obtained by the counterparts over all the datasets. The MPAO, PSO, and MPA showed lower values in most datasets, 13, 11, and 8 of the 15 datasets, respectively, as these algorithms could reach the optimal values.

Table 8.

Results of the minimum value of the fitness value.

On the other side, Table 9 demonstrates the Max values of the fitness function, which were obtained during the conducted experiments using the counterparts across the 15 datasets of the UCI. The MPAO obtained the Max values over most cases (10 out of 15 datasets), followed by the SSA (only in six cases), MPA (in five cases) and WOA (in four cases). The other algorithms, including GA, AO, MFO, and SMA, could not provide any maximal values over all the experiments.

Table 9.

Results of the maximum value of the fitness value.

The results’ stability was calculated for each algorithm and analyzed based on the standard deviation (Std) measure. The Std was computed for the independent experiments over each benchmark by setting the fitness as the input value. In this regard, the lower Std refers to better stability for the results, which means no substantial changes over the experiments. Table 10 demonstrates the Std values, where the MPAO realized the lower value over seven out of 15 datasets. The PSO showed the best values in three datasets, the MFO in two, and the GA and MPA in only one. The other counterparts did not realize the best results over any datasets.

Table 10.

Results of the standard deviation of the fitness value.

The ratios of selected features across all 15 datasets are reported in Table 11. The results in that table are evidence of the efficacy of the MPAO algorithm. It obtained the best results in seven out of 15 datasets, selecting the smallest number of features while retaining high performance. It was followed by the SMA and the WOA, which selected the fewest features in only three and two datasets, respectively, whereas the remaining algorithms were not competitive.

Table 11.

Ratio of the selected feature for all datasets.

The computational time consumed by each algorithm is reported in Table 12. In this context, a smaller value is better. However, a smaller time is not by itself evidence of better performance, as the fastest algorithm is not always the most accurate one. As Table 12 shows, the SMA had the lowest computation time on 11 datasets, followed by the MFO on two datasets. The PSO and GOA each showed the best time on only one dataset. The proposed MPAO showed a higher computation time because of the operator hybridization; however, its performance was better than that of the remaining algorithms.

Table 12.

Computation time by each algorithm.

Furthermore, as previously described, the accuracy assesses the classification quality according to the numbers of true positives, false positives, true negatives, and false negatives. Values of this measure closer to one indicate a higher accuracy. Table 13 reports the values for the compared methods. The proposed MPAO achieved better accuracy when classifying with the selected features. It showed the best accuracy values in 13 of the 15 datasets, followed by the PSO in two, whereas MPA and SSA each obtained the best accuracy in only one dataset. The remaining algorithms showed acceptable accuracy but did not outperform the proposed algorithm.

Table 13.

Results of the accuracy measure for all datasets.

For further analysis, Table 14 shows the results of the Wilcoxon rank sum test. This test checks whether there is a significant difference between the proposed method and the other methods at a significance level of 0.05. Table 14 shows significant differences between the MPAO and AO, MFO, SMA, SSA, GOA, and WOA in most datasets, and significant differences between the MPAO and PSO, GA, and MPA in 46% of the datasets. These results support the superiority of the proposed MPAO.

Table 14.

Results of the Wilcoxon rank sum test for all methods.
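
To make the test concrete, the following Python sketch computes a two-sided Wilcoxon rank sum test with the normal approximation. It is a generic illustration, not the procedure used to produce Table 14: it applies average ranks for ties but no tie or continuity correction, and the function name `rank_sum_test` and the sample values are hypothetical.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test (normal approximation). Returns (z, p)."""
    combined = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        j = i
        # Extend j over a run of tied values and give them their average rank
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    w = sum(r for r, (_, g) in zip(ranks, combined) if g == 0)  # rank sum of sample a
    n1, n2 = len(a), len(b)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Hypothetical fitness values from ten runs of two methods
method_a = [0.10, 0.11, 0.12, 0.13, 0.14, 0.15, 0.16, 0.17, 0.18, 0.19]
method_b = [v + 1.0 for v in method_a]
z, p = rank_sum_test(method_a, method_b)
print(z, p, p < 0.05)
```

A p-value below 0.05 would be read as a significant difference between the two sets of runs at the level used in this study.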

5.3. Experiment 3: Solving Different Real Engineering Problems

This experiment evaluates the proposed MPAO using four well-known engineering issues, including (a) tension/compression spring, (b) rolling element bearing, (c) speed reducer, and (d) gear train design. These issues were handled by the proposed MPAO and some meta-heuristic methods formerly performed in the literature. The following subsection aims to evaluate the effectiveness of the proposed MPAO algorithm by comparing its results with the other methods for solving these optimization issues. In all tables, the boldface refers to the best value.

5.3.1. Tension/Compression Spring Problem

Optimizing a tension/compression spring is a classic multidisciplinary engineering optimization problem. The aim is to minimize the spring weight. The problem has three design variables: the diameter of the wire (d), the mean coil diameter (D), and the number of active coils (N).

This problem has been handled intensively by various optimization algorithms such as MVO [47], GSA [47], WOA [48], GWO [49], MFO [42], SSA [50], and RO [9]. The comparison results of the tension/compression spring problem between the proposed MPAO algorithm and the other methods are listed in Table 15. Table 15 shows that the proposed MPAO obtained the minimum cost value of 0.012665, which ranked first, followed by the GWO algorithm with 0.012666, which ranked second. Both SSA and WOA obtained a similar cost value of 0.0126763, followed by the MFO and RO algorithms with a slightly lower value. In contrast, GSA and MVO obtained the highest cost values, with 0.0127022 for GSA and 0.01279 for MVO, and ranked last. For the tension/compression spring problem, the cost value of the proposed MPAO algorithm is better than those of the other algorithms.
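
The spring problem can be written down compactly in Python. The sketch below uses the formulation that is standard in the engineering-design literature (weight (N + 2)·D·d² with four inequality constraints g ≤ 0); this formulation, the static penalty scheme, the penalty weight, and the sample design point are assumptions for illustration and are not taken from this paper.

```python
def spring_weight(d, D, N):
    """Spring weight to minimize: (N + 2) * D * d^2."""
    return (N + 2) * D * d ** 2


def spring_constraints(d, D, N):
    """Constraint values; a design is feasible when every value is <= 0."""
    g1 = 1 - (D ** 3 * N) / (71785 * d ** 4)                     # minimum deflection
    g2 = ((4 * D ** 2 - d * D) / (12566 * (D * d ** 3 - d ** 4))
          + 1 / (5108 * d ** 2) - 1)                             # shear stress
    g3 = 1 - 140.45 * d / (D ** 2 * N)                           # surge frequency
    g4 = (d + D) / 1.5 - 1                                       # outer diameter
    return [g1, g2, g3, g4]


def penalized_cost(d, D, N, penalty=1e6):
    """Weight plus a quadratic penalty on violated constraints (assumed scheme)."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(d, D, N))
    return spring_weight(d, D, N) + penalty * violation


# A design point often reported in the literature for this problem (assumed here)
print(spring_weight(0.051689, 0.356718, 11.288966))
```

A metaheuristic such as MPAO would minimize `penalized_cost` over the three variables within their bounds, so that infeasible designs are pushed out of contention.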

Table 15.

Results of the Tension/Compression Spring.

5.3.2. Rolling Element Bearing Problem

Another classic multidisciplinary engineering design problem is the rolling element bearing problem, which aims to maximize the dynamic load-carrying capacity of a rolling bearing. The problem involves ten decision variables, and its constraints are based on manufacturing conditions and kinematics. A comparison between the proposed MPAO and the CHHO [51], HHO [51], SCA [52], MFO [42], MVO [53], TLBO [9], and PVS [54] algorithms for solving that problem is given in Table 16. Concerning the optimal costs displayed in Table 16, the proposed MPAO showed the best value for that problem with 85539.192. The MVO algorithm ranked second with a cost value of 84491.266, followed by the HHO, MFO, CHHO, and SCA. On the other hand, the TLBO and PVS algorithms showed the lowest values for that problem, with 81859.74 for TLBO and 81859.741 for PVS. These results indicate the superiority of the proposed MPAO method in solving the rolling element bearing design problem.

Table 16.

Results of the rolling element bearing.

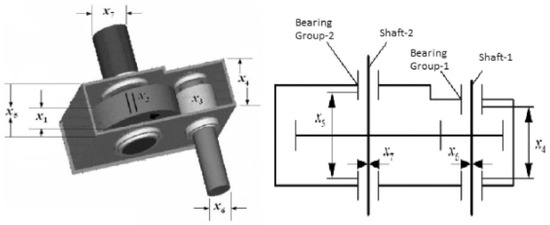
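The bearing problem is a maximization task, whereas the spring, speed reducer, and gear train problems are minimization tasks, so the ranking direction differs between tables. The short sketch below ranks only the values quoted in the paragraph above; the helper name `rank_algorithms` is hypothetical, and algorithms whose values are not quoted in the text are omitted.

```python
from typing import Dict, List, Tuple


def rank_algorithms(results: Dict[str, float], maximize: bool) -> List[Tuple[str, float]]:
    """Return (name, value) pairs ordered from best to worst."""
    return sorted(results.items(), key=lambda item: item[1], reverse=maximize)


# Values quoted in the text for the rolling element bearing problem (maximization).
bearing = {"MPAO": 85539.192, "MVO": 84491.266, "TLBO": 81859.74, "PVS": 81859.741}
bearing_ranking = rank_algorithms(bearing, maximize=True)

for rank, (name, value) in enumerate(bearing_ranking, start=1):
    print(rank, name, value)
```

For the minimization problems, the same helper would be called with `maximize=False`.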

5.3.3. Speed Reducer Problem

The speed reducer is another important engineering design problem. Its aim is to minimize the weight of the speed reducer subject to constraints on the bending stress of the gear teeth, the surface stress, the stresses in the shafts, and the transverse deflections of the shafts. The variables to be optimized are shown in Figure 2. This problem has been addressed extensively by different bio-inspired optimization methods, such as CHHO [51], HHO [51], MDE [55], PSO-DE [56], PSO [57], MBA [58], SSA [57], and ISCA [57]. Table 17 compares the proposed MPAO with these methods on the speed reducer design problem. The results in Table 17 show that the proposed MPAO algorithm is competitive, as it obtained the lowest weight among the compared methods, 2994.4725. CHHO is nearly as competitive, ranking second with 2994.4737, followed by MBA with 2994.4824, PSO-DE with 2996.3481, MDE with 2996.3566, and ISCA with 2997.1295. In contrast, SSA, PSO, and HHO ranked last, as they obtained the highest cost values. These results indicate that the proposed MPAO algorithm outperforms the other methods in obtaining the optimal cost value for the speed reducer problem.

Figure 2.

Speed reducer problem.

Table 17.

Results of the speed reducer problem.

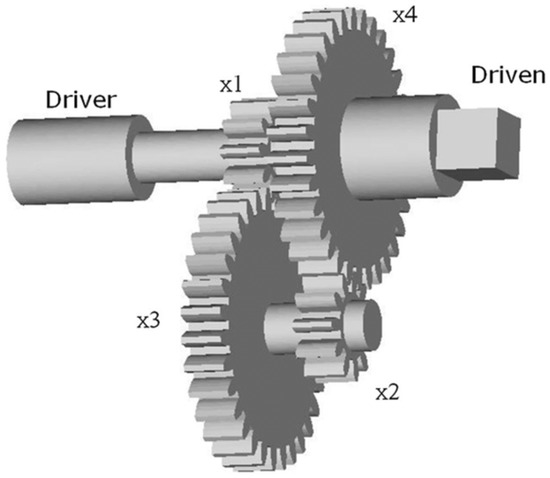
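As a rough illustration of the quantity being minimized, the sketch below evaluates the speed reducer weight using the objective commonly given in the benchmark literature. This is an assumption, not necessarily the exact form used in this paper, and the eleven constraints are omitted. The function name `speed_reducer_weight` and the reference design `x_ref` (a near-optimal point quoted in the literature, not a row of Table 17) are illustrative.

```python
from typing import Sequence


def speed_reducer_weight(x: Sequence[float]) -> float:
    """Weight for x = (b, m, z, l1, l2, d1, d2).

    b: face width, m: module of teeth, z: number of pinion teeth,
    l1, l2: shaft lengths between bearings, d1, d2: shaft diameters.
    Assumed standard benchmark objective; constraints are not checked here.
    """
    b, m, z, l1, l2, d1, d2 = x
    return (0.7854 * b * m ** 2 * (3.3333 * z ** 2 + 14.9334 * z - 43.0934)
            - 1.508 * b * (d1 ** 2 + d2 ** 2)
            + 7.4777 * (d1 ** 3 + d2 ** 3)
            + 0.7854 * (l1 * d1 ** 2 + l2 * d2 ** 2))


# Illustrative near-optimal design from the literature (assumption, not from Table 17).
x_ref = (3.5, 0.7, 17.0, 7.3, 7.71532, 3.35021, 5.28665)
print(f"weight = {speed_reducer_weight(x_ref):.4f}")
```

Under this formulation, the weight changes by only a few units when a single shaft length or diameter moves slightly, which is consistent with the narrow spread between the best values reported above.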

5.3.4. Gear Train Design Problem

The gear train design problem is another engineering optimization problem, which has four variables, as shown in Figure 3. It aims to minimize the teeth ratio and the scalar value of the gear. Therefore, the decision variables are the numbers of teeth on each gear.

Figure 3.

Gear train design problem.

To test the effectiveness of the proposed MPAO algorithm on the gear train design problem, we compared it with six optimization methods: CHHO [51], HHO [51], IMPFA [59], GeneAS [60], Kannan and Kramer [60], and Sandgren [60]. The results of the proposed MPAO and the other methods are listed in Table 18. The proposed MPAO algorithm obtained the best value, 2.701E-12, followed by the IMPFA algorithm with 1.392E-10. Kannan and Kramer, GeneAS, and CHHO provided close results, with 0.144121, 0.144242, and 0.1434, respectively. In contrast, HHO and Sandgren did not perform well and produced the worst-ranked results. The comparative results reveal that the proposed MPAO method handles the gear train design problem with higher precision than the compared methods.

Table 18.

Results of the gear train design problem.
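For reference, the sketch below evaluates the gear train cost in the form commonly used in the benchmark literature, namely the squared deviation of the gear ratio from the target 1/6.931 with integer tooth counts. This formulation is an assumption and is not stated in the text above. The function name `gear_train_cost` and the tooth counts in `teeth_ref` (a best-known design from the literature, not taken from Table 18) are illustrative.

```python
from typing import Sequence

TARGET_RATIO = 1.0 / 6.931  # required gear ratio in the standard benchmark (assumption)


def gear_train_cost(teeth: Sequence[int]) -> float:
    """Squared gear ratio error for teeth = (Ta, Tb, Td, Tf), each usually in [12, 60]."""
    ta, tb, td, tf = teeth
    ratio = (tb * td) / (ta * tf)
    return (TARGET_RATIO - ratio) ** 2


# Illustrative best-known tooth counts from the literature (assumption).
teeth_ref = (49, 19, 16, 43)
print(f"cost = {gear_train_cost(teeth_ref):.3e}")
```

Under this formulation, the cost at `teeth_ref` is of the same order as the value of about 2.7E-12 reported for MPAO above.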

To sum up, the outcomes of the previous experiments and the statistical analyses show that the proposed algorithm outperformed the compared algorithms in most cases and demonstrated its efficiency in solving FS, global optimization, and engineering problems. The efficacy of MPAO was evaluated with different performance metrics, including the Min, Max, and Std of the fitness value, as well as the classification accuracy, number of selected features, computation time, and the Wilcoxon rank sum test. MPAO showed several advantages, such as fast convergence, good exploration of the search space, and the ability to escape from local optima in most cases. However, it requires a higher computation time, a limitation that should be addressed in future work. In general, the better performance of the proposed MPAO can be attributed to its improved exploration and exploitation capabilities, along with the use of the AO parameters.

6. Conclusions

This study proposed an improved marine predators algorithm (MPA), called MPAO, as an efficient optimization technique for global optimization, feature selection (FS), and real-world engineering problems. The proposed algorithm uses the narrowed exploration strategy of the Aquila optimizer (AO) to update the search space and explore more regions of the search domain, which enhances the exploration behavior of the MPA. The narrowed exploration of the AO thus increases the search ability of the MPA, improving its ability to obtain optimal or near-optimal results and helping the original MPA overcome local optima in the search domain. MPAO was evaluated on three types of problems: global optimization, feature selection, and real engineering cases. First, MPAO was evaluated on ten benchmark global optimization functions and outperformed the other algorithms on 60% of the functions. For the FS experiment, a set of UCI datasets and four evaluation criteria were used to assess the effectiveness of MPAO against nine metaheuristic optimization algorithms. Moreover, four engineering optimization problems were considered to demonstrate the superiority of MPAO. The findings showed that MPAO was superior to the other methods in terms of the Max, Min, and Std of the fitness function and the accuracy over the considered FS problems. It outperformed the compared methods on 87% of the datasets in terms of classification accuracy. MPAO also provided better results than the compared methods on the real engineering problems. In the future, the proposed method will be applied to more real-world problems, such as wind speed estimation, business optimization, and large-scale optimization problems.

Author Contributions

Conceptualization, A.A.E.; Data curation, F.H.I., R.M.G. and M.A.G.; Formal analysis, A.A.E., R.M.G. and M.A.G.; Investigation, A.A.E., F.H.I., R.M.G. and M.A.G.; Methodology, A.A.E., F.H.I., R.M.G. and M.A.G.; Resources, A.A.E.; Software, A.A.E.; Validation, A.A.E., R.M.G. and M.A.G.; Visualization, A.A.E., F.H.I. and M.A.G.; Writing—original draft, A.A.E., F.H.I., R.M.G. and M.A.G.; Writing—review and editing, A.A.E., F.H.I., R.M.G. and M.A.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kohavi, R.; John, G.H. Wrappers for feature subset selection. Artif. Intell. 1997, 97, 273–324.

- Liu, H.; Motoda, H. Feature Extraction, Construction and Selection: A Data Mining Perspective; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1998; Volume 453.

- Luukka, P. Feature selection using fuzzy entropy measures with similarity classifier. Expert Syst. Appl. 2011, 38, 4600–4607.

- Talbi, E.G. Metaheuristics: From Design to Implementation; John Wiley & Sons: Hoboken, NJ, USA, 2009.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

- Hussien, A.G.; Amin, M. A self-adaptive Harris Hawks optimization algorithm with opposition-based learning and chaotic local search strategy for global optimization and feature selection. Int. J. Mach. Learn. Cybern. 2022, 13, 309–336.

- Hashim, F.A.; Hussien, A.G. Snake Optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. 2022, 242, 108320.

- Mostafa, R.R.; Hussien, A.G.; Khan, M.A.; Kadry, S.; Hashim, F.A. Enhanced coot optimization algorithm for dimensionality reduction. In Proceedings of the 2022 Fifth International Conference of Women in Data Science at Prince Sultan University (WiDS PSU), Riyadh, Saudi Arabia, 28–29 March 2022; pp. 43–48.

- Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

- Ramezani, F.; Lotfi, S. Social-based algorithm (SBA). Appl. Soft Comput. 2013, 13, 2837–2856.

- Atashpaz-Gargari, E.; Lucas, C. Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 4661–4667.

- Javidy, B.; Hatamlou, A.; Mirjalili, S. Ions motion algorithm for solving optimization problems. Appl. Soft Comput. 2015, 32, 72–79.

- Abualigah, L.; Elaziz, M.A.; Hussien, A.G.; Alsalibi, B.; Jalali, S.M.J.; Gandomi, A.H. Lightning search algorithm: A comprehensive survey. Appl. Intell. 2021, 51, 2353–2376.

- Doğan, B.; Ölmez, T. A new metaheuristic for numerical function optimization: Vortex Search algorithm. Inf. Sci. 2015, 293, 125–145.

- Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1992.

- Rocca, P.; Oliveri, G.; Massa, A. Differential evolution as applied to electromagnetics. IEEE Antennas Propag. Mag. 2011, 53, 38–49.

- Passino, K.M. Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst. Mag. 2002, 22, 52–67.

- Moscato, P.; Mendes, A.; Berretta, R. Benchmarking a memetic algorithm for ordering microarray data. Biosystems 2007, 88, 56–75.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization Algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Aribowo, W.; Supari, B.S.; Suprianto, B. Optimization of PID parameters for controlling DC motor based on the aquila optimizer algorithm. Int. J. Power Electron. Drive Syst. (IJPEDS) 2022, 13, 808–2814.

- Hussan, M.R.; Sarwar, M.I.; Sarwar, A.; Tariq, M.; Ahmad, S.; Shah Noor Mohamed, A.; Khan, I.A.; Ali Khan, M.M. Aquila Optimization Based Harmonic Elimination in a Modified H-Bridge Inverter. Sustainability 2022, 14, 929.

- Khaire, U.M.; Dhanalakshmi, R.; Balakrishnan, K. Hybrid Marine Predator Algorithm with Simulated Annealing for Feature Selection. In Machine Learning and Deep Learning in Medical Data Analytics and Healthcare Applications; CRC Press: Boca Raton, FL, USA, 2022; pp. 131–150.

- Alrasheedi, A.F.; Alnowibet, K.A.; Saxena, A.; Sallam, K.M.; Mohamed, A.W. Chaos Embed Marine Predator (CMPA) Algorithm for Feature Selection. Mathematics 2022, 10, 1411.

- Balakrishnan, K.; Dhanalakshmi, R.; Mahadeo Khaire, U. Analysing stable feature selection through an augmented marine predator algorithm based on opposition-based learning. Expert Syst. 2022, 39, e12816.

- Tizhoosh, H.R. Opposition-based learning: A new scheme for machine intelligence. In Proceedings of the International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06), Vienna, Austria, 28–30 November 2005; Volume 1, pp. 695–701.

- Abd Elaziz, M.; Ewees, A.A.; Yousri, D.; Abualigah, L.; Al-qaness, M.A. Modified marine predators algorithm for feature selection: Case study metabolomics. Knowl. Inf. Syst. 2022, 64, 261–287.

- Jia, H.; Sun, K.; Li, Y.; Cao, N. Improved marine predators algorithm for feature selection and SVM optimization. KSII Trans. Internet Inf. Syst. (TIIS) 2022, 16, 1128–1145.

- Hu, G.; Zhu, X.; Wang, X.; Wei, G. Multi-strategy boosted marine predators algorithm for optimizing approximate developable surface. Knowl.-Based Syst. 2022, 254, 109615.

- Hu, G.; Zhu, X.; Wei, G.; Chang, C.T. An improved marine predators algorithm for shape optimization of developable Ball surfaces. Eng. Appl. Artif. Intell. 2021, 105, 104417.

- Han, M.; Du, Z.; Zhu, H.; Li, Y.; Yuan, Q.; Zhu, H. Golden-Sine dynamic marine predator algorithm for addressing engineering design optimization. Expert Syst. Appl. 2022, 210, 118460.

- Al-qaness, M.A.; Ewees, A.A.; Fan, H.; Abualigah, L.; Abd Elaziz, M. Boosted ANFIS model using augmented marine predator algorithm with mutation operators for wind power forecasting. Appl. Energy 2022, 314, 118851.

- Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M. Hybrid marine predators algorithm for image segmentation: Analysis and validations. Artif. Intell. Rev. 2022, 55, 3315–3367.

- Nadimi-Shahraki, M.H.; Taghian, S.; Mirjalili, S.; Abualigah, L. Binary Aquila Optimizer for Selecting Effective Features from Medical Data: A COVID-19 Case Study. Mathematics 2022, 10, 1929.

- Abd Elaziz, M.; Dahou, A.; Alsaleh, N.A.; Elsheikh, A.H.; Saba, A.I.; Ahmadein, M. Boosting COVID-19 image classification using MobileNetV3 and aquila optimizer algorithm. Entropy 2021, 23, 1383.

- Fatani, A.; Dahou, A.; Al-Qaness, M.A.; Lu, S.; Elaziz, M.A. Advanced feature extraction and selection approach using deep learning and Aquila optimizer for IoT intrusion detection system. Sensors 2021, 22, 140.

- Zhang, Y.J.; Zhao, J.; Gao, Z.M. Hybridized improvement of the chaotic Harris Hawk optimization algorithm and Aquila Optimizer. In Proceedings of the International Conference on Electronic Information Engineering and Computer Communication (EIECC 2021), SPIE, Nanchang, China, 17–19 December 2021; Volume 12172, pp. 327–332.

- Wang, S.; Jia, H.; Abualigah, L.; Liu, Q.; Zheng, R. An improved hybrid aquila optimizer and harris hawks algorithm for solving industrial engineering optimization problems. Processes 2021, 9, 1551.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Mitchell, M. An Introduction to Genetic Algorithms; MIT Press: Cambridge, MA, USA, 1998.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Price, K.; Awad, N.; Ali, M.; Suganthan, P. The 100-Digit Challenge: Problem Definitions and Evaluation Criteria for the 100-Digit Challenge Special Session and Competition on Single Objective Numerical Optimization; Nanyang Technological University: Singapore, 2018.

- Wang, Y.; Li, H.X.; Huang, T.; Li, L. Differential evolution based on covariance matrix learning and bimodal distribution parameter setting. Appl. Soft Comput. 2014, 18, 232–247.

- Gandomi, A.H.; Yang, X.S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Xing, Z.; Jia, H. Multilevel color image segmentation based on GLCM and improved salp swarm algorithm. IEEE Access 2019, 7, 37672–37690.

- Dhawale, D.; Kamboj, V.K.; Anand, P. An improved chaotic harris hawks optimizer for solving numerical and engineering optimization problems. Eng. Comput. 2021, 1–46.

- Kamboj, V.K.; Bhadoria, A.; Gupta, N. A novel hybrid GWO-PS algorithm for standard benchmark optimization problems. INAE Lett. 2018, 3, 217–241.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Savsani, P.; Savsani, V. Passing vehicle search (PVS): A novel metaheuristic algorithm. Appl. Math. Model. 2016, 40, 3951–3978.

- Zhao, W.; Zhang, Z.; Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 2020, 87, 103300.

- Niu, B.; Li, L. A novel PSO-DE-based hybrid algorithm for global optimization. In Proceedings of the International Conference on Intelligent Computing; Springer: Berlin/Heidelberg, Germany, 2008; pp. 156–163.

- Gupta, S.; Deep, K.; Moayedi, H.; Foong, L.K.; Assad, A. Sine cosine grey wolf optimizer to solve engineering design problems. Eng. Comput. 2021, 37, 3123–3149.

- Sadollah, A.; Bahreininejad, A.; Eskandar, H.; Hamdi, M. Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl. Soft Comput. 2013, 13, 2592–2612.

- Tang, C.; Zhou, Y.; Luo, Q.; Tang, Z. An enhanced pathfinder algorithm for engineering optimization problems. Eng. Comput. 2022, 38, 1481–1503.

- Deb, K.; Goyal, M. A combined genetic adaptive search (GeneAS) for engineering design. Comput. Sci. Inform. 1996, 26, 30–45.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).