Abstract

This paper studies a generalized distributed optimization problem for second-order multi-agent systems (MASs) over directed networks. Firstly, an improved distributed continuous-time algorithm is proposed. By using the linear transformation method and Lyapunov stability theory, some conditions are obtained to guarantee that all agents’ states asymptotically reach the optimal solution. Secondly, to reduce unnecessary communication transmissions and control costs, an event-triggered algorithm is designed. Moreover, the convergence of the algorithm is proved, and Zeno behavior is excluded by rigorous theoretical analysis. Finally, a numerical example is given to verify the performance of the proposed algorithms.

Keywords:

distributed optimization; directed graph; event-triggered communication; multi-agent systems

MSC:

34H05; 49J15; 49K15; 93C15

1. Introduction

In recent years, MASs [1] with swarm intelligence have been applied in many fields, such as attitude alignment and consensus [2], automated highway systems [3], flocking [4], and formation control [5,6]. In practical applications, MASs should optimize a global objective and reach consensus under appropriate control protocols. Unlike traditional centralized optimization methods, distributed optimization avoids dependence on a central controller and thereby improves privacy protection [7], reliability [8], and scalability [9].

The main task of distributed optimization is to design appropriate distributed control protocols that not only enable agents to achieve efficient swarm behaviors through local collaboration but also optimize the global objective. Therefore, distributed optimization of MASs is widely used in social welfare maximization [10], resource allocation [11], energy management of microgrids [12,13,14,15], and sensor networks [16]. According to their information updating and transmission rules, existing distributed optimization models can generally be divided into two categories: discrete-time models and continuous-time models. Early research mainly focused on discrete-time models [17,18,19,20,21,22,23]. Subsequently, the distributed optimization problem for MASs with continuous-time models was considered because, with the assistance of modern control theory, it avoids the time-complexity analysis required for discrete-time algorithms. At present, some meaningful results have been obtained. For instance, an optimization problem for MASs over strongly connected weight-balanced networks was studied in [24]. Considering control input constraints, an optimization algorithm with input saturation was designed in [25]. To shorten the convergence time, finite-time and fixed-time optimization algorithms were proposed in [26,27], respectively.

Most distributed optimization problems have been considered for first-order or second-order MASs. In [28], a first-order distributed optimization problem with an information restriction policy was studied. In [29,30], first-order distributed optimization problems over directed networks were discussed. In [31,32], gradient-based algorithms for second-order MASs over undirected networks were proposed. In addition, distributed optimization problems with exogenous disturbances were considered in [33]. However, most distributed optimization algorithms for second-order MASs have been proposed for undirected networks, and designing such algorithms over directed networks remains challenging. This is one of the motivations of this paper.

In practical applications, agents are usually limited by communication resources, and continuous communication inevitably leads to high energy consumption. To reduce unnecessary communication, event-triggered control techniques have been employed in the design of distributed optimal control protocols. For example, event-triggered subgradient and consensus algorithms were proposed to address optimization problems for first-order MASs over undirected networks in [34,35], respectively. In [36], an event-triggered zero-gradient-sum algorithm over directed networks was proposed. Additionally, a distributed optimization problem for second-order MASs over undirected networks was considered based on an event-triggered communication algorithm in [37]. To the best of our knowledge, the distributed optimization problem for second-order MASs over directed networks has not yet been addressed with an event-triggered communication algorithm.

Based on the above discussion, this paper considers the distributed optimization problem for second-order MASs over directed networks. Both continuous-time and event-triggered communication protocols are proposed. The main contributions of this paper can be broadly summarized as follows:

- (1)

- Motivated by [38,39], this paper considers a more general distributed optimization problem, which can be regarded as a generalization of the existing ones in [33,37]. The global optimization objective is a weighted sum of local objective functions, which allows the global objective to be adjusted more flexibly. Thus, it has a wider range of potential applications.

- (2)

- A directed network is constructed based on the considered optimization problem, and a second-order continuous protocol is proposed according to the constructed directed network. Some sufficient conditions are obtained to ensure the solvability of the considered optimization problem.

- (3)

- To reduce the consumption of limited resources, an event-triggered communication protocol is designed. Combining the linear transformation method with Lyapunov stability theory, we prove that the optimization problem can be solved under the proposed protocols.

The remainder of this paper is organized as follows. Some preliminaries are presented in Section 2. In Section 3, the continuous-time communication protocol is proposed. In Section 4, an improved event-triggered protocol is given. A simulation example is presented in Section 5. Finally, conclusions are drawn in Section 6.

Notations. Let $\mathbb{R}^N$ and $\mathbb{R}^{N\times N}$ denote the N-dimensional Euclidean space and the set of $N\times N$ real matrices, respectively. $I_N$ is the identity matrix of dimension N. $\mathbf{1}_N$ and $\mathbf{0}_N$ denote the column vectors with N ones and N zeros, respectively. ⊗ and $\|\cdot\|$ denote the Kronecker product and the Euclidean norm, respectively. $\nabla f(x)$ represents the gradient of function $f(x)$. $A^{T}$ ($x^{T}$) is the transpose of matrix A (vector x), and $A^{-1}$ is the inverse of matrix A. $\mathbb{N}^{+}$ denotes the set of positive integers.

2. Preliminaries

2.1. Graph Theory

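Consider the MASs with N agents, where the interactions among agents are described by a directed graph $\mathcal{G}=(\mathcal{V},\mathcal{E})$, in which $\mathcal{V}=\{v_1,\dots,v_N\}$ is a finite set of vertices and $\mathcal{E}\subseteq\mathcal{V}\times\mathcal{V}$ is the edge set. For a directed edge $(v_j,v_i)\in\mathcal{E}$, $v_j$ and $v_i$ represent the tail vertex and the head vertex, respectively. The neighbor set of vertex $v_i$ is denoted as $\mathcal{N}_i=\{v_j\in\mathcal{V}:(v_j,v_i)\in\mathcal{E}\}$. $\mathcal{A}=[a_{ij}]\in\mathbb{R}^{N\times N}$ is the weighted adjacency matrix of graph $\mathcal{G}$, where $a_{ij}>0$ if $(v_j,v_i)\in\mathcal{E}$, and $a_{ij}=0$ otherwise. The graph is called strongly connected if there is a directed path between any two distinct vertices. The in-degree matrix of the graph is defined as $\mathcal{D}=\operatorname{diag}(d_1,\dots,d_N)$, where $d_i=\sum_{j=1}^{N}a_{ij}$. The corresponding Laplacian matrix $\mathcal{L}\in\mathbb{R}^{N\times N}$ is defined by $\mathcal{L}=\mathcal{D}-\mathcal{A}$. A digraph is called detail-balanced [40] if there exists a positive vector $\xi=[\xi_1,\dots,\xi_N]^{T}$ such that $\xi_i a_{ij}=\xi_j a_{ji}$ for $i,j=1,\dots,N$.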
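The graph notions above can be checked mechanically. The following is a minimal sketch in plain Python (standard library only); the helper names `laplacian`, `is_strongly_connected` and `is_detail_balanced` and the three-agent adjacency matrix are hypothetical illustrations, not part of the paper. It assumes the convention that $a_{ij}>0$ encodes the edge from $v_j$ to $v_i$, builds $\mathcal{L}=\mathcal{D}-\mathcal{A}$ from in-degrees, and tests $\xi_i a_{ij}=\xi_j a_{ji}$.

```python
from collections import deque


def laplacian(adj):
    """L = D - A, where D = diag(d_1, ..., d_N) holds the in-degrees d_i = sum_j a_ij."""
    n = len(adj)
    return [[sum(adj[i][k] for k in range(n) if k != i) if i == j else -adj[i][j]
             for j in range(n)] for i in range(n)]


def _reachable(adj, start, reverse=False):
    """Vertices reachable from `start`; a_ij > 0 encodes the directed edge v_j -> v_i."""
    n = len(adj)
    seen, queue = {start}, deque([start])
    while queue:
        u = queue.popleft()
        for w in range(n):
            # forward: edge u -> w exists if a_wu > 0; reverse: edge w -> u exists if a_uw > 0
            weight = adj[u][w] if reverse else adj[w][u]
            if weight > 0 and w not in seen:
                seen.add(w)
                queue.append(w)
    return seen


def is_strongly_connected(adj):
    # Strongly connected <=> vertex 0 reaches every vertex and every vertex reaches vertex 0.
    n = len(adj)
    return len(_reachable(adj, 0)) == n and len(_reachable(adj, 0, reverse=True)) == n


def is_detail_balanced(adj, xi, tol=1e-12):
    """Check xi_i > 0 and xi_i * a_ij == xi_j * a_ji for all i, j."""
    n = len(adj)
    return all(w > 0 for w in xi) and all(
        abs(xi[i] * adj[i][j] - xi[j] * adj[j][i]) <= tol
        for i in range(n) for j in range(n))


# Hypothetical 3-agent digraph: asymmetric weights, yet detail-balanced for xi = (1, 2, 3).
A = [[0.0, 1.0, 1.0],
     [0.5, 0.0, 0.5],
     [1 / 3, 1 / 3, 0.0]]
xi = [1.0, 2.0, 3.0]
L = laplacian(A)
print(is_strongly_connected(A), is_detail_balanced(A, xi), is_detail_balanced(A, [1.0, 1.0, 1.0]))  # True True False
```

With these data, every row of `L` sums to zero and the weight vector `xi` is also a left null vector of `L`, which can be verified numerically from the same objects.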

2.2. Basic Definition

Definition 1.

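A differentiable function $f:\mathbb{R}^n\to\mathbb{R}$ is called strongly convex if there exists a constant $\mu>0$ such that $(x-y)^{T}(\nabla f(x)-\nabla f(y))\ge\mu\|x-y\|^{2}$ for any $x,y\in\mathbb{R}^n$.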

Definition 2.

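A differentiable function $f:\mathbb{R}^n\to\mathbb{R}$ is called gradient m-Lipschitz if there exists a constant $m>0$ such that $\|\nabla f(x)-\nabla f(y)\|\le m\|x-y\|$ for any $x,y\in\mathbb{R}^n$.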
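For a quadratic cost, the constants in Definitions 1 and 2 are the extreme eigenvalues of its Hessian. The sketch below (plain Python; the matrix `Q`, the linear term `c` and the sampling grid are hypothetical) estimates both constants from sampled point pairs, assuming the inner-product form of strong convexity $(x-y)^{T}(\nabla f(x)-\nabla f(y))\ge\mu\|x-y\|^{2}$. For $Q=\operatorname{diag}(1,4)$ it recovers $\mu=1$ and $m=4$.

```python
import itertools
import math

Q = [[1.0, 0.0], [0.0, 4.0]]   # hypothetical Hessian with eigenvalues 1 and 4
c = [1.0, -2.0]                # hypothetical linear term (does not affect the constants)


def grad(x):
    """Gradient of f(x) = 0.5 * x^T Q x + c^T x, i.e. Q x + c."""
    return [sum(Q[r][k] * x[k] for k in range(2)) + c[r] for r in range(2)]


def estimate_constants(points):
    """Smallest strong-convexity ratio and largest gradient-Lipschitz ratio over all point pairs."""
    mu, m = math.inf, 0.0
    for x, y in itertools.combinations(points, 2):
        d = [x[k] - y[k] for k in range(2)]
        g = [a - b for a, b in zip(grad(x), grad(y))]
        dd = sum(t * t for t in d)
        mu = min(mu, sum(dk * gk for dk, gk in zip(d, g)) / dd)
        m = max(m, math.sqrt(sum(t * t for t in g)) / math.sqrt(dd))
    return mu, m


grid = [(0.5 * i, 0.5 * j) for i in range(-3, 4) for j in range(-3, 4)]
mu_hat, m_hat = estimate_constants(grid)
print(mu_hat, m_hat)  # 1.0 4.0
```

Sampling only gives bounds from inside ($\hat\mu\ge\mu$, $\hat m\le m$); here they are tight because the grid contains pairs aligned with both eigenvectors of `Q`.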

2.3. Problem Statement

In this paper, we mainly study the following optimization problem in a distributed manner:

where is the decision vector and is the local cost function of agent . is a weight parameter satisfying , and is the global optimization function. Our aim is to solve this optimization problem (1) by proposing some distributed algorithms.

Remark 1.

In existing works [26,27,33,36,37,41,42], the global optimization function is given by . However, the optimization problem (1) is considered in this paper. When we choose , the optimization problem (1) becomes , which has the same optimal solution as . Therefore, the optimization problem (1) can be regarded as a generalization of the existing one. Furthermore, the optimization problem (1) is equivalent to the optimization problem with . If we let and , it has with . As Θ is a constant, it has the same optimal solution as problem (1). Hence, the optimization problem (1) is more general and has a wider range of applications.
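For scalar quadratic costs $f_i(x)=a_ix^2+b_ix+c_i$ with $a_i>0$ (a hypothetical choice, similar in form to the quadratic costs of the numerical example), the minimizer of $\sum_i w_if_i(x)$ has the closed form $x^*=-\sum_i w_ib_i/(2\sum_i w_ia_i)$. The sketch below, using exact fractions and hypothetical coefficients, illustrates the scaling facts behind Remark 1, assuming the special choice mentioned there is equal weights: equal weights $1/N$ reproduce the minimizer of the plain sum, multiplying all weights by one positive constant leaves the minimizer unchanged, and unequal weights move it.

```python
from fractions import Fraction as Fr


def weighted_minimizer(costs, weights):
    """Minimizer of sum_i w_i * (a_i x^2 + b_i x + c_i), assuming all a_i > 0 and w_i > 0."""
    num = sum(w * b for (a, b, _), w in zip(costs, weights))
    den = sum(w * a for (a, _, _), w in zip(costs, weights))
    return -num / (2 * den)


# Hypothetical coefficients (a_i, b_i, c_i) for three agents.
costs = [(Fr(1), Fr(-2), Fr(0)), (Fr(2), Fr(4), Fr(1)), (Fr(1, 2), Fr(1), Fr(3))]
N = len(costs)

x_sum = weighted_minimizer(costs, [1] * N)                 # plain sum of f_i
x_avg = weighted_minimizer(costs, [Fr(1, N)] * N)          # equal weights 1/N
x_w = weighted_minimizer(costs, [Fr(1), Fr(2), Fr(3)])     # unequal weights
x_w5 = weighted_minimizer(costs, [Fr(5), Fr(10), Fr(15)])  # same weights scaled by 5
print(x_sum, x_avg, x_w, x_w5)  # -3/7 -3/7 -9/13 -9/13
```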

For the optimization problem (1), we use to represent the estimate of the decision vector by the ith agent. Denote . Assume that the network topology among agents is directed and strongly connected, and denote the corresponding Laplacian matrix by . Then, problem (1) can be rewritten as the following problem:

The proof is similar to the one in [43].

To solve the above problem, the network topology needs to be designed carefully. Based on the weight parameters for , we need to construct a directed graph that is compatible with the distributed optimization problem (2). In [41], a construction method for directed detail-balanced networks was proposed, which is also applicable in this paper; hence, the specific construction algorithm is omitted here. In the constructed network, the adjacency matrix needs to satisfy the following two conditions:

- (1).

- The directed graph is detail-balanced with the weight $\xi$, that is, $\xi_i a_{ij}=\xi_j a_{ji}$ for $i,j=1,\dots,N$.

- (2).

- The directed graph is strongly connected.

Let and be a new undirected graph where the adjacency matrix is , so that the corresponding Laplacian matrix is . Then, the matrix is a symmetric matrix, and its eigenvalues are represented by , satisfying . Define , in which

and satisfying . The matrix is an orthogonal matrix which satisfies .
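The symmetrization step can be illustrated with a hypothetical recipe (an assumption for illustration, not necessarily the construction of [41]): start from symmetric weights $s_{ij}=s_{ji}\ge0$ of a connected undirected graph and set $a_{ij}=s_{ij}/\xi_i$. Then $\xi_ia_{ij}=s_{ij}=\xi_ja_{ji}$, so the digraph is detail-balanced with weight $\xi$ although $\mathcal{A}$ is not symmetric, and scaling row $i$ of the Laplacian by $\xi_i$ returns the symmetric Laplacian of the undirected graph $[s_{ij}]$. The sketch uses exact fractions; all data are hypothetical.

```python
from fractions import Fraction as Fr


def detail_balanced_adjacency(sym, xi):
    """Hypothetical recipe a_ij = s_ij / xi_i, so that xi_i a_ij = s_ij = xi_j a_ji."""
    n = len(xi)
    return [[Fr(sym[i][j]) / xi[i] for j in range(n)] for i in range(n)]


def laplacian(adj):
    """L = D - A with in-degrees d_i = sum_{j != i} a_ij on the diagonal."""
    n = len(adj)
    return [[sum(adj[i][k] for k in range(n) if k != i) if i == j else -adj[i][j]
             for j in range(n)] for i in range(n)]


xi = [Fr(1), Fr(2), Fr(3)]                 # hypothetical positive weights
sym = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]   # hypothetical undirected weights s_ij (complete graph)

A = detail_balanced_adjacency(sym, xi)
L = laplacian(A)
XiL = [[xi[i] * L[i][j] for j in range(3)] for i in range(3)]   # row i scaled by xi_i

print(A[0][1], A[1][0])                                  # 1 1/2
print(XiL == [[2, -1, -1], [-1, 2, -1], [-1, -1, 2]])    # True
```

The first line shows that the constructed digraph is not symmetric; the second confirms that the row-scaled Laplacian is the (symmetric) Laplacian of the complete graph with unit weights.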

To analyze the above problem, the following assumptions on the objective functions, which are widely used (see [24,33,37] for details), are adopted.

Assumption 1.

The objective function of agent i is differentiable and -strongly convex on .

Assumption 2.

The gradient is -Lipschitz.

Assumption 3.

The network topology is directed, strongly connected, and ξ-detail-balanced.

3. The Continuous-Time Communication Protocol

In this section, we assume that the communication among agents is continuous. Based on the directed graph , the following algorithm is proposed:

where denote the position and the velocity states of agent i, respectively. , and are positive parameters. is an auxiliary variable, and the initial value satisfies .

Remark 2.

The distributed optimization algorithm (3) contains not only a consensus term and an optimization term but also the auxiliary variable . Motivated by [37,42], the auxiliary variable , as the integral of , is designed to ensure, through the transmission of local information, that the equilibrium point of (3) is the optimal solution.
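Because the displayed equations of algorithm (3) are not reproduced here, the following is only a hypothetical sketch of the same idea, not algorithm (3) itself: a second-order PI-type scheme in which each agent integrates its local consensus error into an auxiliary variable $y_i$ and starts from $\sum_i\xi_iy_i(0)=0$. Under detail balance $\xi^{T}\mathcal{L}=0$, so $\sum_i\xi_iy_i$ stays at zero, and any equilibrium satisfies consensus and $\sum_i\xi_i\nabla f_i(x^*)=0$, i.e., it minimizes the weighted sum. The dynamics, the gain `k`, the forward-Euler step `dt`, the costs $f_i(x)=(x-c_i)^2$ and the digraph $a_{ij}=1/\xi_i$ on a complete graph are all assumptions chosen for illustration. With equal curvatures, the linearization decouples along the eigenvectors of the symmetrized Laplacian into cubics $s^3+ks^2+(2+\lambda)s+\lambda$, which are Hurwitz for $k=1$ and every $\lambda>0$; no claim is made for general costs or gains.

```python
# Hypothetical sketch, NOT the paper's algorithm (3):
#   x_i' = v_i
#   v_i' = -k v_i - grad f_i(x_i) - sum_j a_ij (x_i - x_j) - y_i
#   y_i' = sum_j a_ij (x_i - x_j),            with sum_i xi_i y_i(0) = 0
XI = [1.0, 2.0, 3.0]                        # hypothetical weights xi_i
C = [1.0, 4.0, -2.0]                        # f_i(x) = (x - c_i)^2, grad f_i(x) = 2 (x - c_i)
A = [[0.0, 1.0, 1.0],                       # a_ij = 1 / xi_i, so xi_i a_ij = xi_j a_ji
     [0.5, 0.0, 0.5],
     [1 / 3, 1 / 3, 0.0]]


def simulate(t_end=200.0, dt=0.01, k=1.0):
    """Forward-Euler integration of the hypothetical dynamics above."""
    n = len(XI)
    x = [3.0, -1.0, 2.0]
    v = [0.0] * n
    y = [0.0] * n                            # satisfies sum_i xi_i y_i(0) = 0
    for _ in range(int(round(t_end / dt))):
        lap = [sum(A[i][j] * (x[i] - x[j]) for j in range(n)) for i in range(n)]
        acc = [-k * v[i] - 2.0 * (x[i] - C[i]) - lap[i] - y[i] for i in range(n)]
        x = [x[i] + dt * v[i] for i in range(n)]
        v = [v[i] + dt * acc[i] for i in range(n)]
        y = [y[i] + dt * lap[i] for i in range(n)]
    return x, v, y


x_star = sum(w * c for w, c in zip(XI, C)) / sum(XI)   # weighted optimum: 0.5
x, v, y = simulate()
print(x_star, [round(xi_, 6) for xi_ in x])            # 0.5 [0.5, 0.5, 0.5]
```

Note that the unweighted optimum of these costs would be $1.0$; the agents instead settle at the $\xi$-weighted optimum $0.5$, and each $y_i$ approaches $-\nabla f_i(x^*)$.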

Remark 3.

Compared with [37], each term of (3) has a weight coefficient that adjusts its influence on the distributed optimization algorithm. It is well known that second-order MASs are more sensitive than first-order MASs, so the stability analysis becomes more difficult. Algorithm (3) is suitable for second-order MASs because of its improved flexibility and finer tunability. In addition, unlike the algorithm in [42], the dynamics of do not use the position information of agents in this paper. Thus, the computational cost is lower than that of the existing one.

Since every local cost function is strongly convex (Assumption 1), the global objective function, as a weighted sum of them with positive weights, is also differentiable and strongly convex. Hence, the optimization problem (1) has a unique optimal solution

According to the definition of Laplacian , the distributed optimization algorithm (3) can be rewritten as the following compact form:

Assumption 4.

For algorithm (3), is satisfied, and there exist parameters , such that and , where , , and is a constant.

Lemma 1.

For algorithm (3), if Assumptions 1 and 2 are satisfied, then there is a unique solution for any initial value on .

Proof.

Based on the strict convexity of function , one can easily see that algorithm (3) has at least one solution. Therefore, we only need to prove the uniqueness of the solution. Let , then one can obtain

Note that is Lipschitz continuous; hence, a solution of exists. Assume that Equation (4) has two different solutions and satisfying the same initial condition . Based on Assumption 1, we can obtain that

where and . Additionally, then, it has

By using the Gronwall inequality, one has

which means that for . Therefore, Lemma 1 is proved. □
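The Gronwall step can be written out in generic notation (the symbols $\Delta$ and $K$ are introduced here for illustration only): if $\Delta(t)$ is the difference of the two solutions, $\Delta(0)=0$, and the right-hand side of (4) is Lipschitz with constant $K>0$, then

$$
\|\Delta(t)\|\le\|\Delta(0)\|+K\int_{0}^{t}\|\Delta(s)\|\,ds
\quad\Longrightarrow\quad
\|\Delta(t)\|\le\|\Delta(0)\|\,e^{Kt}=0,\qquad t\ge0,
$$

so the two solutions coincide for all $t\ge0$.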

If Assumption 1 and the equality hold, then algorithm (4) has an equilibrium point. Therefore, the following equations are satisfied at the equilibrium point :

Since is strongly connected and detail-balanced, it has . Left-multiplying the third equation of (5) by , one has ; equivalently, . In combination with , it has

Because is an invertible diagonal matrix, the first equation of (5) implies . Substituting into the second equation of (5) and left-multiplying by , one obtains , that is, . Hence, is an optimal solution of (2).
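A property of detail-balanced digraphs that is typically used in this kind of equilibrium argument is that the weight vector $\xi$ is a left null vector of the Laplacian $\mathcal{L}=\mathcal{D}-\mathcal{A}$. Written out entrywise, with $d_j=\sum_i a_{ji}$, $a_{jj}=0$ and $\xi_ia_{ij}=\xi_ja_{ji}$:

$$
\left(\xi^{T}\mathcal{L}\right)_j=\xi_j d_j-\sum_{i=1}^{N}\xi_i a_{ij}
=\sum_{i=1}^{N}\left(\xi_j a_{ji}-\xi_i a_{ij}\right)=0,\qquad j=1,\dots,N.
$$

Consequently, any variable driven by a Laplacian term, $\dot y=\mathcal{L}z$, keeps its weighted sum constant, since $\frac{d}{dt}\xi^{T}y=\xi^{T}\mathcal{L}z=0$; an initial condition on the auxiliary variable therefore fixes its weighted sum at the equilibrium.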

We define and , then the system (4) is described as follows:

Denote , and , it has

Then, the variables of equality (7) are partitioned as follows: , , and , , in which and , , . Therefore, equality (7) can be decomposed as follows:

and

Remark 4.

Different from the dynamics in [37], the parameter is added to adjust the changing rate of in the proposed dynamics. Hence, the application scope of the second-order system is generalized. In the meantime, the matrix transformation from system (6) to system (7) solves the difficulties caused by the asymmetry of directed network topology.

Theorem 1.

Proof.

Construct the following Lyapunov function

where

and

Note that if . and if and only if . Then, and if and only if . Taking the time derivatives of , and , one has

and

Therefore, the time derivative of is obtained as

Since

and

As is an orthogonal matrix and , one has .

Therefore, it has that

where , , and . By comparing the above inequalities, it is easy to see that . Thus, we only need to prove , . From the condition of Theorem 1, , and if and only if . Therefore, consensus can be achieved. □

Remark 5.

The distributed optimization problem for MASs over directed networks is more difficult than over undirected networks, mainly in two aspects. The first aspect is the symmetry of the Laplacian matrix of the network topology. The symmetry of the Laplacian matrix of an undirected topology can be fully utilized, as in [33,37], but the Laplacian matrix of a directed topology is usually asymmetric. Therefore, other, more complicated methods need to be adopted to solve the optimization problem over directed networks. The second aspect is that existing optimization problems usually involve only the plain sum of local objective functions rather than a weighted (convex) combination. To solve the more general distributed optimization problem considered here, the directed network needs to be designed carefully in this paper.

Remark 6.

It is not difficult to see that the above algorithm relies on continuous-time communication, which has clear drawbacks. In practical applications, a continuous-time algorithm inevitably incurs high communication costs. Bandwidth, transmission energy, and communication frequency are limited by current industrial capabilities and cannot be used indefinitely. Therefore, in the next section, we propose a new algorithm to overcome this drawback.

4. The Event-Triggered Communication Protocol

Based on the directed communication topology , the following algorithm is proposed:

where denotes the kth event instant of agent i for . Other parameters are consistent with the ones in algorithm (3).

Before analyzing the distributed optimal algorithm, the measurement error of agent i is defined as . Hence, with the definition of , the distributed optimal algorithm (12) for can be rewritten as follows

According to the definition of Laplacian , the distributed optimal algorithm can be rewritten as the following compact matrix–vector form:

where , ,, ⋯,, , , , , , .

We perform a coordinate transformation similar to the previous one. Define and , then the transformed system is described as follows:

Denote and . Then, we divide the variables as follows: and , , where and , , , . Hence, equality (15) can be rewritten as follows:

and

Assumption 5.

For MAS (12), is satisfied, and there exist parameters , such that , , and , where are positive constants, , , , , and ϵ and are two positive constants, and is a positive number for adjustment. Moreover, the event-triggered instants satisfy

where .
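A matching sketch of the event-triggered idea, again hypothetical and not algorithm (12) or condition (18): it reuses the same illustrative dynamics and data as a plain second-order PI-type scheme, but the consensus and integral terms use each agent's last broadcast position $\hat x_j$, and agent $i$ re-broadcasts only when its own measurement error $|\hat x_i-x_i|$ exceeds the illustrative time-decaying threshold $c_0e^{-\beta t}$ (the names `c0`, `beta` and all data are assumptions). Because $\xi^{T}\mathcal{L}\hat x=0$ still holds, the weighted sum of the auxiliary variables stays at zero, and the bounded, decaying error only perturbs an otherwise stable linear system.

```python
import math

# Hypothetical data (same as a plain continuous-time sketch would use):
XI = [1.0, 2.0, 3.0]                                        # weights xi_i
C = [1.0, 4.0, -2.0]                                        # f_i(x) = (x - c_i)^2
A = [[0.0, 1.0, 1.0], [0.5, 0.0, 0.5], [1 / 3, 1 / 3, 0.0]]  # xi_i a_ij = xi_j a_ji


def simulate_event_triggered(t_end=200.0, dt=0.01, k=1.0, c0=0.5, beta=0.1):
    """Forward Euler; coupling terms use last-broadcast positions x_hat (hypothetical trigger)."""
    n = len(XI)
    x, v, y = [3.0, -1.0, 2.0], [0.0] * n, [0.0] * n
    x_hat = x[:]                      # initial broadcast at t = 0 (not counted as an event)
    events, steps = 0, int(round(t_end / dt))
    for step in range(steps):
        threshold = c0 * math.exp(-beta * step * dt)
        for i in range(n):            # each agent checks only its own measurement error
            if abs(x_hat[i] - x[i]) > threshold:
                x_hat[i] = x[i]       # broadcast the current position
                events += 1
        lap = [sum(A[i][j] * (x_hat[i] - x_hat[j]) for j in range(n)) for i in range(n)]
        acc = [-k * v[i] - 2.0 * (x[i] - C[i]) - lap[i] - y[i] for i in range(n)]
        x = [x[i] + dt * v[i] for i in range(n)]
        v = [v[i] + dt * acc[i] for i in range(n)]
        y = [y[i] + dt * lap[i] for i in range(n)]
    return x, events, steps * n


x, events, checks = simulate_event_triggered()
print([round(xi_, 4) for xi_ in x], events, checks)
```

Comparing `events` with `checks` (one potential transmission per agent per integration step, as a continuous scheme sampled at the same step would need) gives a rough picture of the communication saved; the exact counts depend on `c0`, `beta` and `dt`.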

Theorem 2.

Proof.

Choose the same Lyapunov function as in (8). Based on equalities (9)–(11), (16), and (17), we take the time derivatives of , and for , as follows:

and

Therefore, the time derivative of for is obtained as

By using Young’s inequality with , for , one has

Then, we consider the following Lyapunov function

where

Note that and if and only if and . Taking the time derivatives of and for , respectively, one has

Therefore, the time derivative of for is obtained as

By using Young’s inequality, it has

and

Let , then it is clear that

where , , , , and .

Next, we let , where . As and , it has

Moreover, according to the above definition , one has

Based on , it has

Based on , let

where is an arbitrary positive parameter.

Therefore, it has

Consequently, it is clear that

Therefore, agent i can use the event-triggered sampling condition (18) to determine whether to store and update its sampled data.

From the condition of Theorem 2, , and if and only if . Therefore, the states of all agents asymptotically reach consensus and converge to the optimal solution of the optimization problem. □

Remark 7.

Compared with the existing work [37], the selection of the Lyapunov function is different. In our paper, the positive function , inspired by the one in [44], is added to relax the restrictions on the related coefficients in the dynamics.

Remark 8.

In this event-triggered algorithm, the global information and are used by each agent in the trigger function. To avoid using global information, the proposed algorithm will be improved in our future work.

Theorem 3.

For the MAS (12), if all conditions of Theorem 2 are satisfied, then there is no Zeno behavior.

Proof.

For , , the upper right-hand derivative of is given by

According to equality (12), it has

As the functions and are continuous and differentiable on the closed interval , by the mean value theorem there exist points and in such that the following two equalities hold, respectively:

and

Hence, it has

Denote .

Since , considering equality (20), we can obtain

where is a constant. The event instants of agent i are determined by equality (18), and the next event will not be triggered before . Therefore, it has

From equality (23), we can easily obtain that . As the network topology is strongly connected, directed, and detail-balanced,

if and only if

That means all agents’ states achieve consensus, and communication between agents is no longer needed. Then, before all agents achieve consensus. Therefore, Zeno behavior can be excluded. □
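The mechanism behind excluding Zeno behavior can be summarized in generic form (the derivative bound $M$ and the threshold lower bound $\sigma$ below are illustrative assumptions, not the exact quantities appearing in (18)–(23)): if the measurement error is reset at each event, $e_i(t_k^i)=0$, its upper right-hand derivative is bounded by $M>0$ between events, and the triggering threshold stays above some $\sigma>0$ before consensus is reached, then

$$
\|e_i(t)\|\le M\,(t-t_k^i),\quad t\in[t_k^i,t_{k+1}^i)
\quad\Longrightarrow\quad
t_{k+1}^i-t_k^i\ge\frac{\sigma}{M}>0,
$$

so only finitely many events can occur in any finite time interval.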

Remark 9.

The optimization problem with event-triggered communication and data rate constraints was investigated in [18]. To preprocess the information, a vector-valued quantizer with finite quantization levels was introduced, which suggests a promising research direction. In [18], the considered system is a first-order linear difference equation, and the optimization problem is modeled as the sum of all agents’ local convex cost functions. In contrast, we consider a second-order multi-agent system, and the optimization problem is the weighted sum of the agents’ local convex cost functions.

5. Numerical Example

In this section, an economic dispatch example is used to verify the performance of the proposed algorithms (3) and (12).

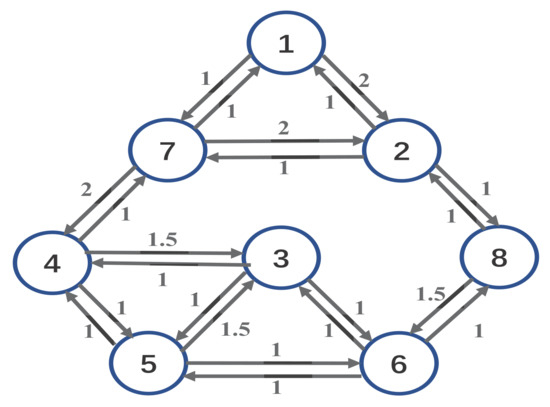

We consider a power system which contains 8 generators and 22 loads. The active power generated by the ith generator is denoted by , and the allocation weight is represented by . The communication topology is constructed as shown in Figure 1.

Figure 1.

The communication topology.

The cost functions are given by the quadratic function [26] , in which , and represent the cost coefficients. Therefore, the economic dispatch problem is given by

In the simulation, we let , , , and and choose the initial states as , , , , , , , and . The cost coefficients and the allocation weights are given in Table 1.

Table 1.

The coefficients.

Case 1.

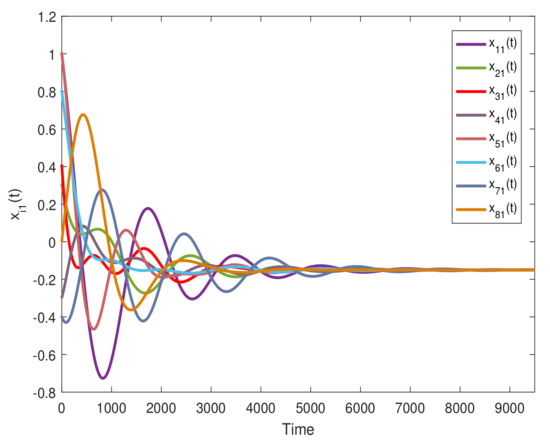

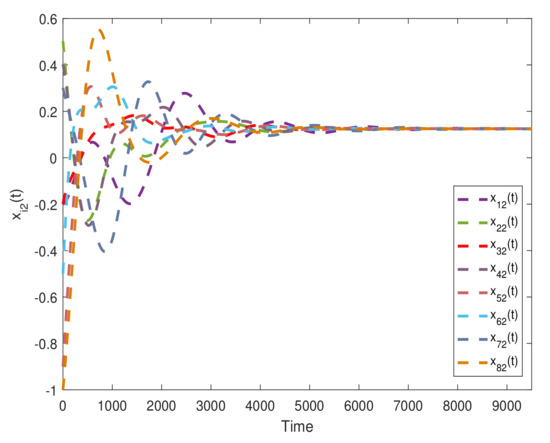

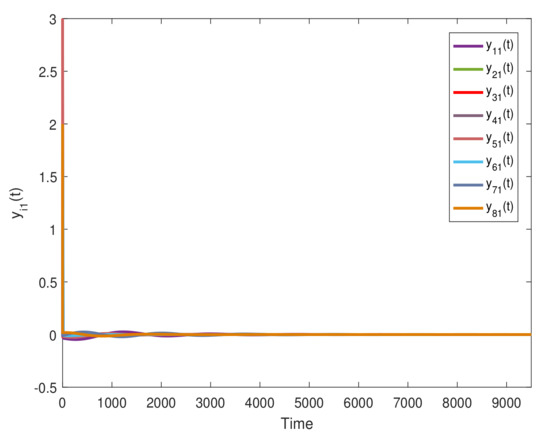

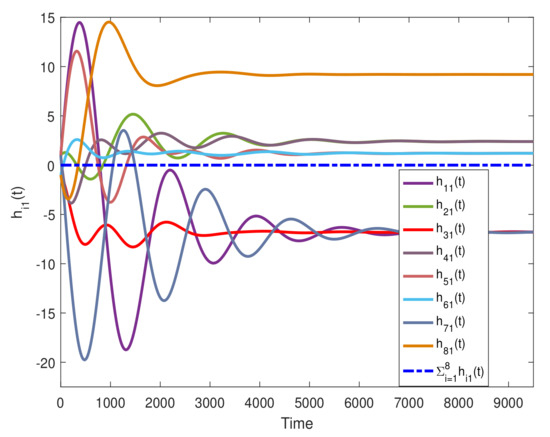

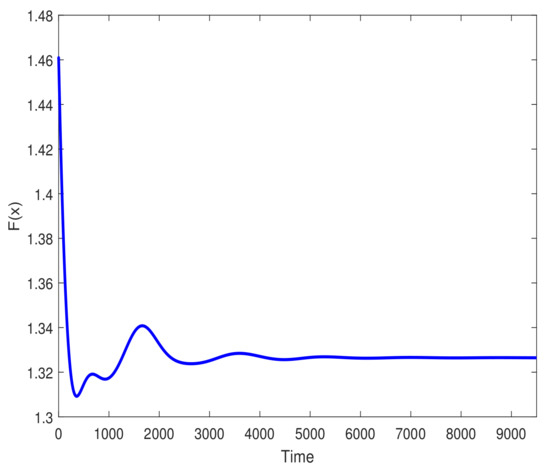

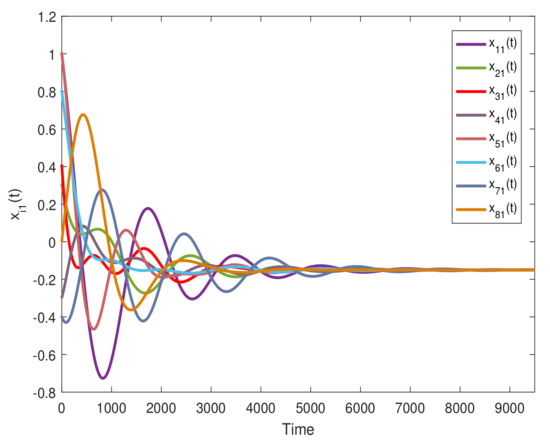

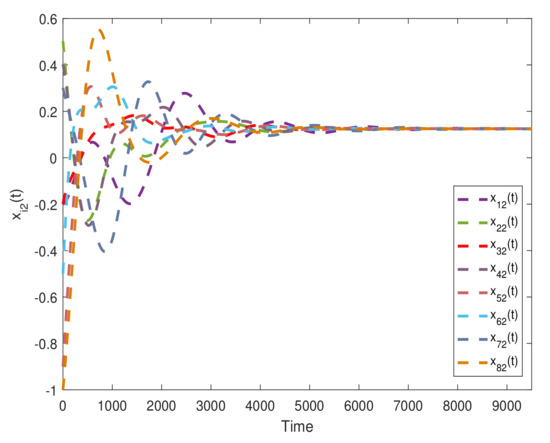

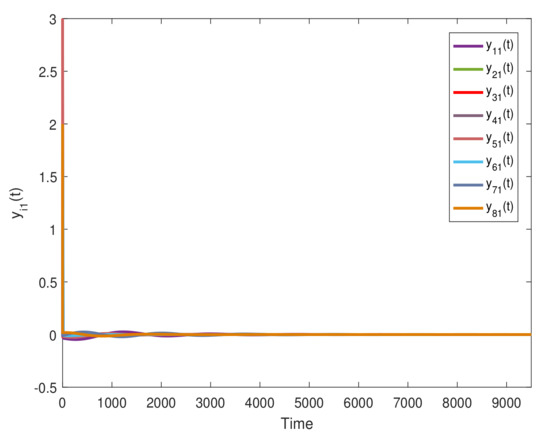

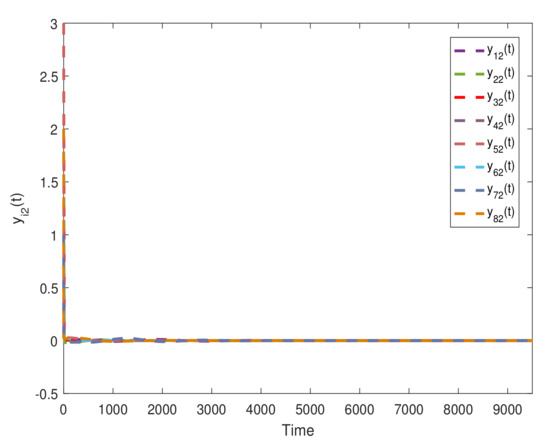

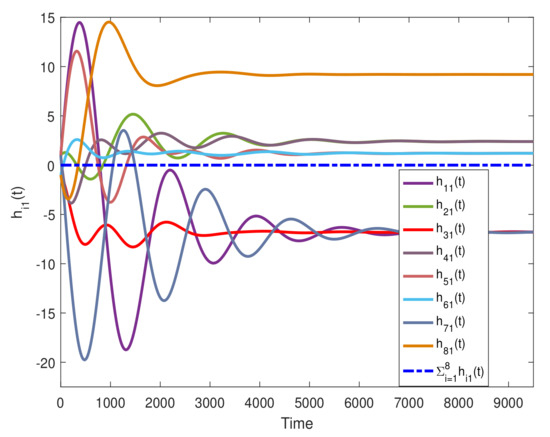

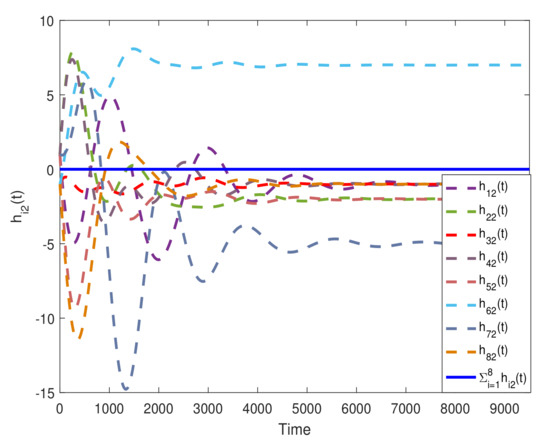

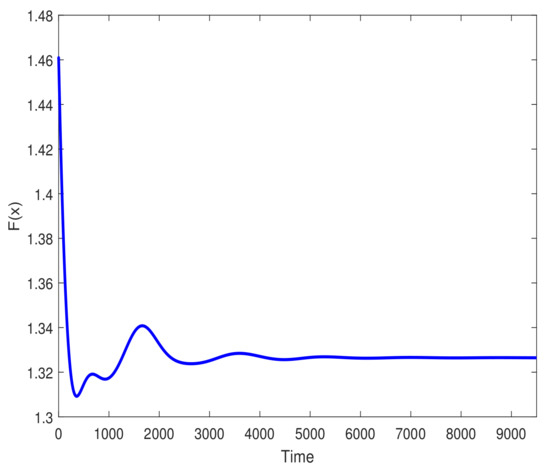

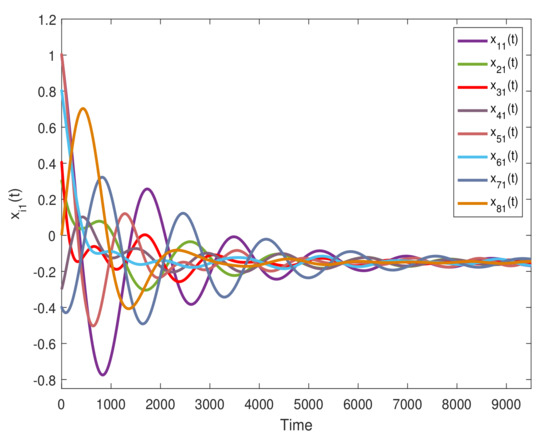

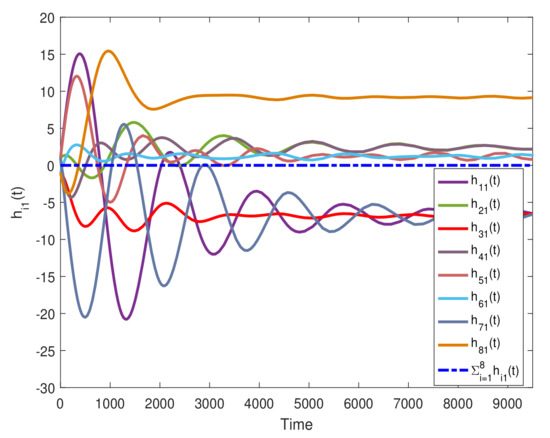

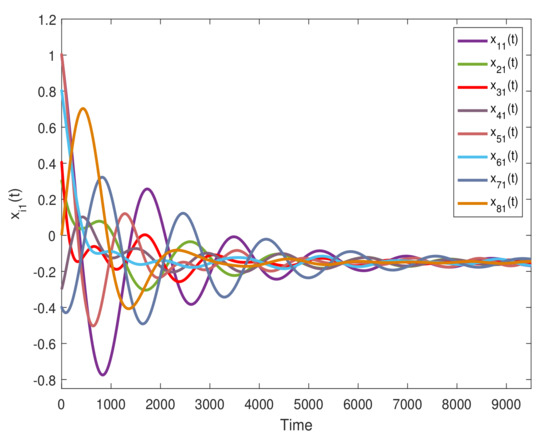

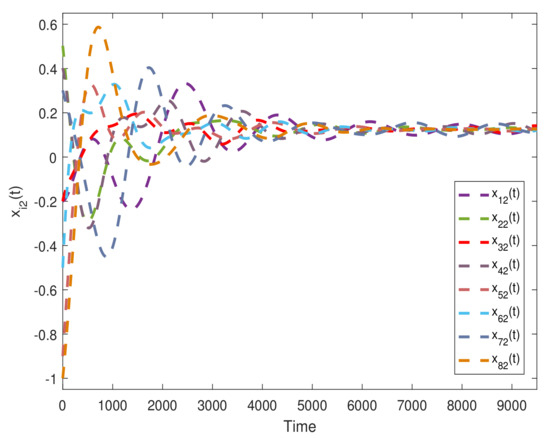

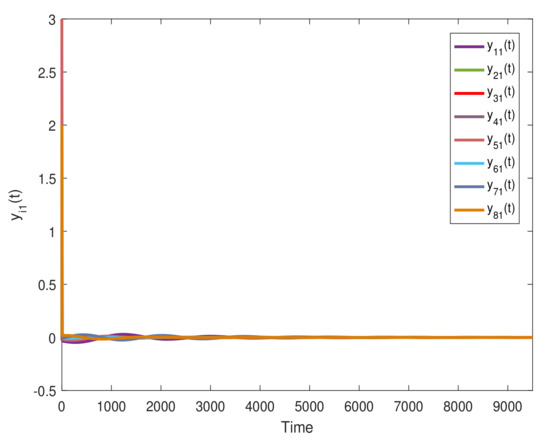

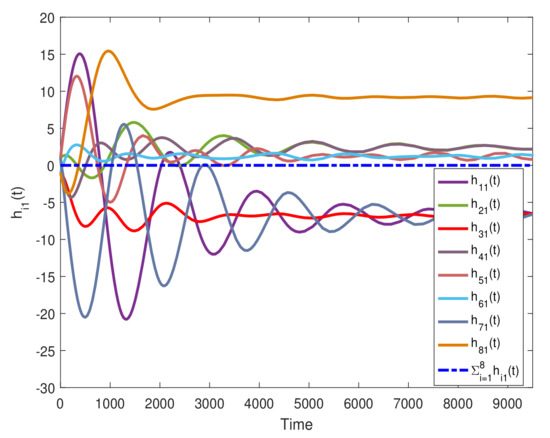

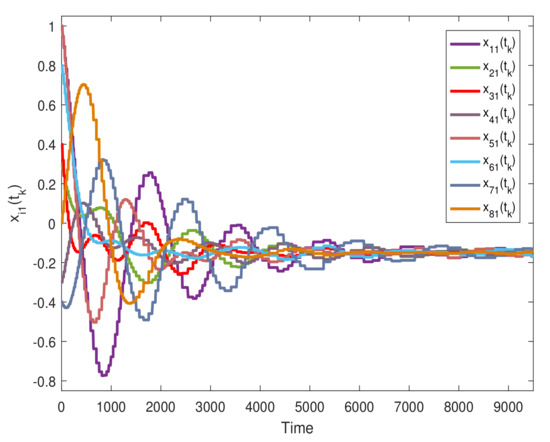

For the optimization problem (24), we use the proposed algorithm (3) with the above parameters. Through calculation, all conditions of Theorem 1 are satisfied. By using MATLAB software for simulation, the states of and are given in Figure 2 and Figure 3, respectively. It is noted that all agents’ states reach consensus and gradually converge to the optimal solution . Figure 4 and Figure 5 show the trajectories of and , respectively. Obviously, and gradually converge to 0. Figure 6 and Figure 7 show the evolution trajectories of and , respectively. From Figure 6 and Figure 7, it can be seen that the condition of is always satisfied. Moreover, gradually converge to some constants. Based on the third equation of (3), that means all agents’ states asymptotically reach the same value. The evolution trajectory of the objective function is shown in Figure 8.

Figure 2.

The states of .

Figure 3.

The states of .

Figure 4.

The trajectories of .

Figure 5.

The trajectories of .

Figure 6.

The trajectories of .

Figure 7.

The trajectories of .

Figure 8.

The cost function .

Case 2.

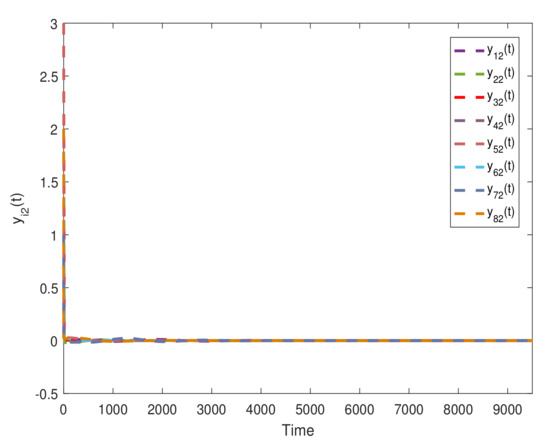

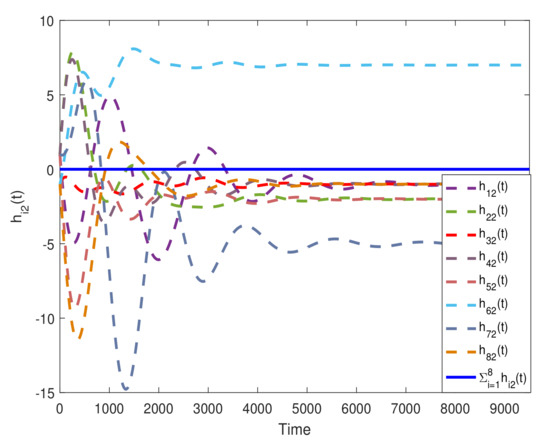

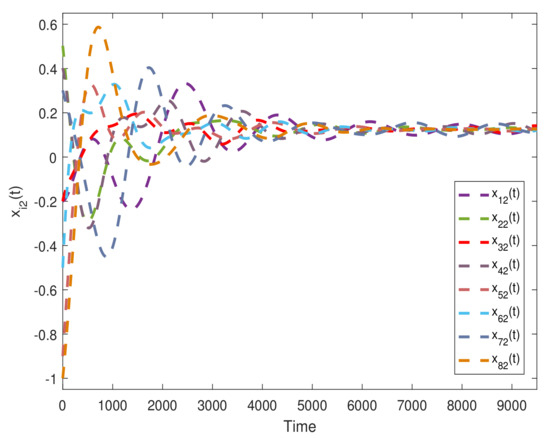

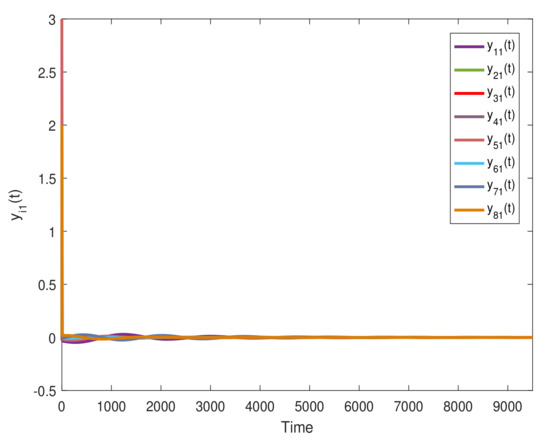

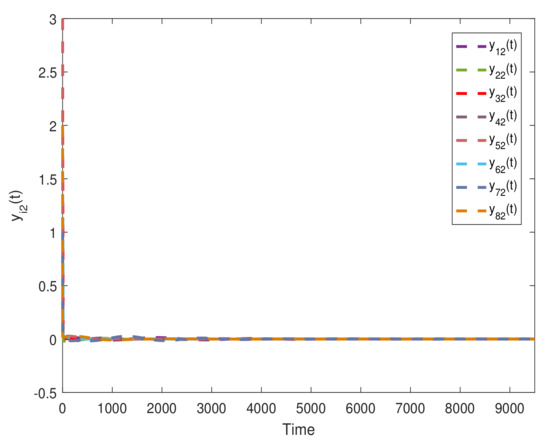

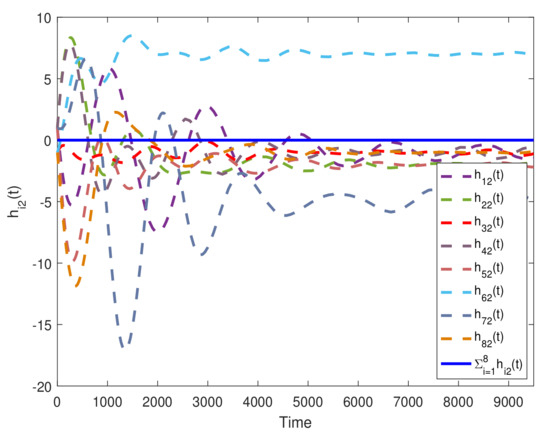

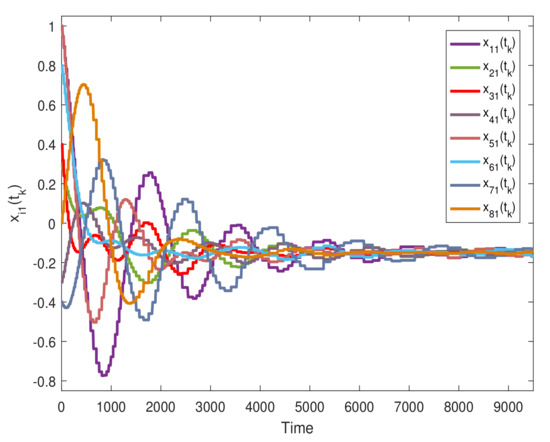

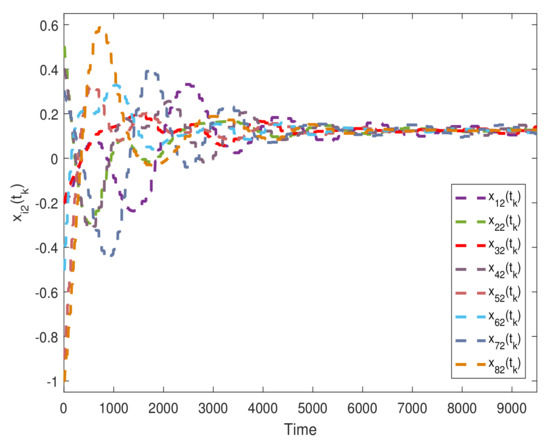

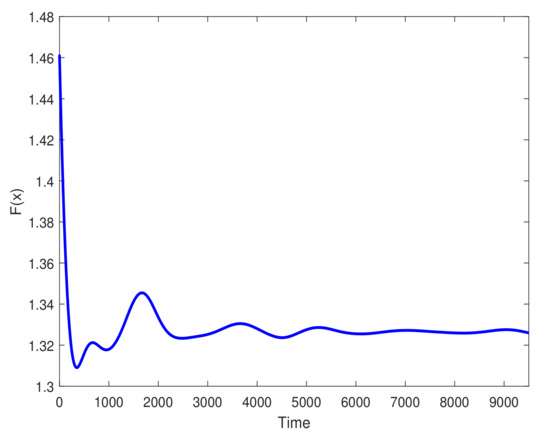

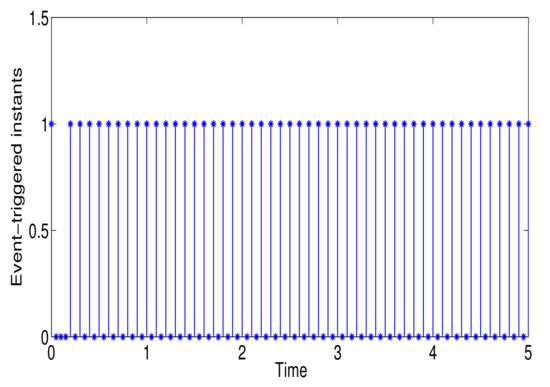

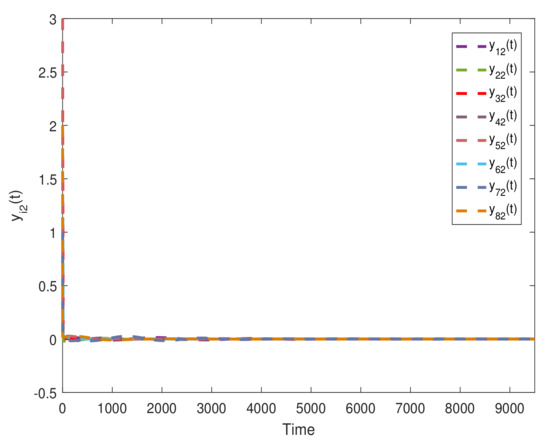

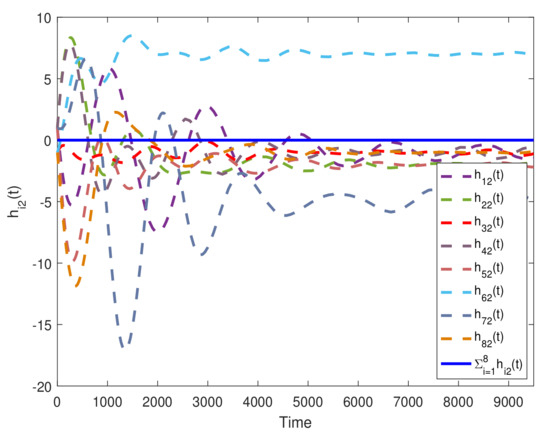

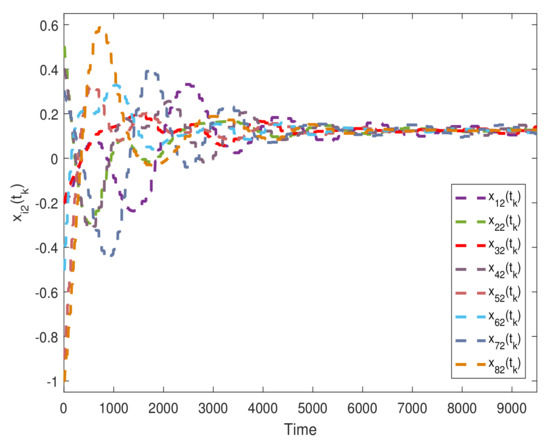

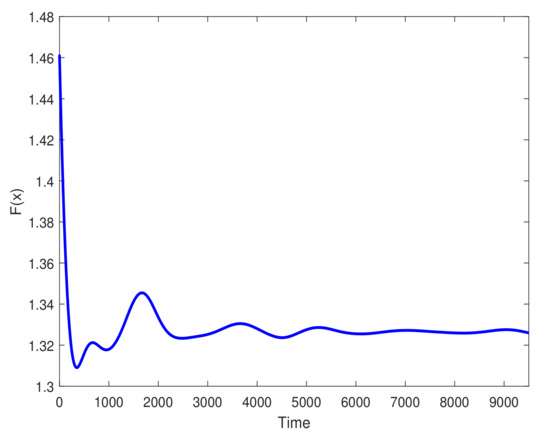

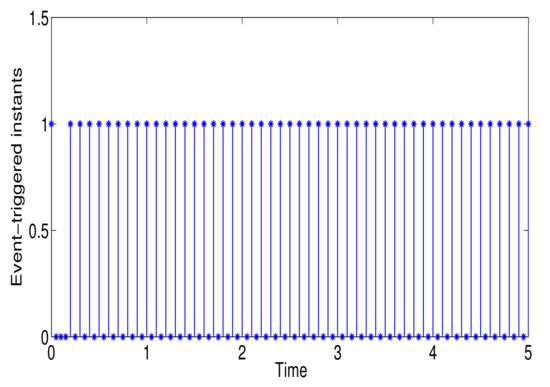

For the optimization problem (24), we use the proposed algorithm (12). All parameters are the same as those in Case 1. Through calculation, all conditions of Theorem 2 are also satisfied. The states of and are given in Figure 9 and Figure 10, respectively, where the optimal solution is asymptotically reached. The trajectories of and are presented in Figure 11 and Figure 12, respectively; they all asymptotically converge to 0. Figure 13 and Figure 14 show the trajectories of and , respectively. The condition of is always satisfied. Figure 15 and Figure 16 describe the trajectories of and , respectively. It can be seen that and converge to the same value in an oscillatory manner. Figure 17 shows the evolution trajectory of the objective function . Figure 18 shows the event-triggered instants, in which 1 and 0 represent trigger and non-trigger, respectively.

Figure 9.

The states of .

Figure 10.

The states of .

Figure 11.

The trajectories of .

Figure 12.

The trajectories of .

Figure 13.

The trajectories of .

Figure 14.

The trajectories of .

Figure 15.

The trajectories of .

Figure 16.

The trajectories of .

Figure 17.

The cost function .

Figure 18.

Event-triggered signals.

Comparing Cases 1 and 2, we find that both the continuous-time communication algorithm (3) and the event-triggered communication algorithm (12) make the states of the MAS achieve consensus and asymptotically reach the optimal solution of the optimization problem (24). That means both algorithms are feasible. However, under the same conditions, the event-triggered communication algorithm effectively reduces the consumption of the agents’ limited resources at the cost of a slower convergence rate. Therefore, in practical applications, the choice between the two methods depends on the specific problem.

Through simulation, we verify that the distributed optimization problem (1) can be solved asymptotically under the proposed continuous-time communication and event-triggered control protocols. This means that the optimal solution can be obtained as . In finite time, only an approximate solution of the optimization problem can be obtained. However, in some practical applications, such as the economic dispatch of smart grids, the optimal allocation is required within a finite time. Therefore, distributed optimization algorithms with finite-time convergence are worth further study.

6. Conclusions

In this paper, compared with existing work, we considered a more general distributed optimization problem for second-order MASs over directed networks. A special directed network was carefully designed, and then an improved distributed optimization algorithm with continuous-time communication was proposed. By using Lyapunov stability theory, some conditions were obtained to ensure the asymptotic convergence of the proposed algorithm. Furthermore, we designed an event-triggered control protocol to reduce the communication burden and analyzed the convergence of the event-triggered control algorithm. In addition, we showed that Zeno behavior is excluded. Finally, numerical simulations were presented to verify the validity of the results. In future work, the finite-time distributed optimization problem over directed networks will be considered.

Author Contributions

Conceptualization, F.Y. and Z.Y.; methodology, F.Y. and Z.Y.; software, D.H.; validation, F.Y., Z.Y. and H.J.; formal analysis, F.Y.; investigation, F.Y.; resources, Z.Y.; data curation, F.Y.; writing—original draft preparation, F.Y.; writing—review and editing, Z.Y.; visualization, D.H.; supervision, H.J.; project administration, Z.Y.; funding acquisition, Z.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (Grant Nos. 62003289, 62163035), in part by the China Postdoctoral Science Foundation (Grant No. 2021M690400), in part by the Special Project for Local Science and Technology Development Guided by the Central Government (Grant No. ZYYD2022A05), and in part by Xinjiang Key Laboratory of Applied Mathematics (Grant No. XJDX1401).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| MASs | Multi-agent systems |

References

- Bonabeau, E.; Dorigo, M.; Theraulaz, G. Swarm Intelligence: From Natural to Artificial Systems; Oxford Press: New York, NY, USA, 1999. [Google Scholar]

- Zhao, H.; Wang, L.; Zhou, H.; Du, D. Consensus for a class of sampled-data heterogeneous multi-agent Systems. Int. J. Control. Autom. Syst. 2021, 19, 1751–1759. [Google Scholar] [CrossRef]

- Bender, J. An overview of systems studies of automated highway systems. IEEE Trans. Veh. Technol. 1991, 40, 82–99. [Google Scholar] [CrossRef]

- Xu, Z.; Liu, H.; Liu, Y. Fixed-time leader-following flocking for nonlinear second-order multi-agent systems. IEEE Access 2020, 8, 86262–86271. [Google Scholar] [CrossRef]

- Aryankia, K.; Selmic, R. Neuro-adaptive formation control and target tracking for nonlinear multi-agent systems with time-delay. IEEE Control. Syst. Lett. 2021, 5, 791–796. [Google Scholar] [CrossRef]

- Li, Q.; Wei, J.; Gou, Q.; Niu, Z. Distributed adaptive fixed-time formation control for second-order multi-agent systems with collision avoidance. Inf. Sci. 2021, 564, 27–44. [Google Scholar] [CrossRef]

- Léauté, T.; Faltings, B. Protecting privacy through distributed computation in multi-agent decision making. J. Artif. Intell. Res. 2013, 47, 649–695. [Google Scholar] [CrossRef]

- Ke, W.; Wang, S. Reliability evaluation for distributed computing networks with imperfect nodes. IEEE Trans. Reliab. 1997, 46, 342–349. [Google Scholar]

- Manjula, K.; Karthikeyan, P. Distributed computing approaches for scalability and high performance. Int. J. Eng. Sci. Technol. 2010, 2, 2328–2336. [Google Scholar]

- Fu, Z.; He, X.; Huang, T.; Abu-Rub, H. A distributed continuous time consensus algorithm for maximize social welfare in micro grid. J. Frankl. Inst. 2016, 353, 3966–3984. [Google Scholar] [CrossRef]

- Madan, R.; Lall, S. Distributed algorithms for maximum lifetime routing in wireless sensor networks. IEEE Trans. Wirel. Commun. 2004, 5, 2185–2193. [Google Scholar] [CrossRef]

- Thirugnanam, K.; Moursi, M.; Khadkikar, V.; Zeineldin, H.; Hosani, M. Energy management of grid interconnected multi-microgrids based on P2P energy exchange: A data driven approach. IEEE Trans. Power Syst. 2021, 36, 1546–1562. [Google Scholar] [CrossRef]

- Karavas, C.; Kyriakarakos, G.; Arvanitis, K.; Papadakis, G. A multi-agent decentralized energy management system based on distributed intelligence for the design and control of autonomous polygeneration microgrids. Energy Convers. Manag. 2015, 103, 166–179. [Google Scholar] [CrossRef]

- Boglou, V.; Karavas, C.; Karlis, A.; Arvanitis, K. An intelligent decentralized energy management strategy for the optimal electric vehicles’ charging in low-voltage islanded microgrids. Int. J. Energy Res. 2022, 46, 2988–3016. [Google Scholar] [CrossRef]

- Karavas, C.; Plakas, K.; Krommydas, K.; Kurashvili, A.; Dikaiakos, C.; Papaioannou, G. A review of wide-area monitoring and damping control systems in Europe. In Proceedings of the 2021 IEEE Madrid PowerTech, Madrid, Spain, 28 June–2 July 2021; pp. 1–6. [Google Scholar]

- Zhang, Y.; Lou, Y.; Hong, Y.; Xie, L. Distributed projection-based algorithms for source localization in wireless sensor networks. IEEE Trans. Wirel. Commun. 2015, 14, 3131–3142. [Google Scholar] [CrossRef]

- Li, C.; Chen, S.; Li, J.; Wang, F. Distributed multi-step subgradient optimization for multi-agent system. Syst. Control Lett. 2019, 128, 26–33. [Google Scholar] [CrossRef]

- Li, H.; Liu, S.; Soh, Y.; Xie, L. Event-triggered communication and data rate constraint for the distributed optimization of multiagent systems. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1908–1919. [Google Scholar] [CrossRef]

- Ma, W.; Fu, M.; Cui, P.; Zhang, H.; Li, Z. Finite-time average consensus based approach for distributed convex optimization. Asian J. Control 2020, 22, 323–333. [Google Scholar] [CrossRef]

- Nedic, A.; Ozdaglar, A. Distributed subgradient methods for multi-agent optimization. IEEE Trans. Autom. Control 2009, 54, 48–61. [Google Scholar] [CrossRef]

- Nedic, A.; Ozdaglar, A.; Parrilo, P. Constrained consensus and optimization in multi-agent networks. IEEE Trans. Autom. Control 2010, 55, 922–938. [Google Scholar] [CrossRef]

- Shi, W.; Ling, Q.; Wu, G.; Yin, W. Extra: An exact first-order algorithm for decentralized consensus optimization. SIAM J. Optim. 2015, 25, 944–966. [Google Scholar] [CrossRef]

- Khatana, V.; Saraswat, G.; Patel, S.; Salapaka, M. Gradient-consensus method for distributed optimization in directed multi-agent networks. In Proceedings of the 2020 American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020; pp. 4689–4694.

- Kia, S.; Cortés, J.; Martínez, S. Distributed convex optimization via continuous time coordination algorithms with discrete-time communication. Automatica 2015, 55, 254–264.

- Xie, Y.; Lin, Z. Global optimal consensus for higher-order multi-agent systems with bounded controls. Automatica 2019, 99, 301–307.

- Chen, G.; Li, Z. A fixed-time convergent algorithm for distributed convex optimization in multi-agent systems. Automatica 2018, 95, 539–543.

- Lin, P.; Ren, W.; Yang, C.; Gui, W. Distributed continuous-time and discrete-time optimization with nonuniform unbounded convex constraint sets and nonuniform stepsizes. IEEE Trans. Autom. Control 2019, 64, 5148–5155.

- Pantoja, A.; Obando, G.; Quijano, N. Distributed optimization with information-constrained population dynamics. J. Frankl. Inst. 2019, 356, 209–236.

- Wang, D.; Chen, Y.; Gupta, V.; Lian, J. Distributed constrained optimization for multi-agent systems over a directed graph with piecewise stepsize. J. Frankl. Inst. 2020, 357, 4855–4868.

- Wang, D.; Wang, Z.; Chen, M.; Wang, W. Distributed optimization for multi-agent systems with constraints set and communication time-delay over a directed graph. Inf. Sci. 2018, 438, 1–14.

- Zhang, Q.; Gong, Z.; Yang, Z.; Chen, Z. Distributed convex optimization for flocking of nonlinear multi-agent systems. Int. J. Control Autom. Syst. 2019, 17, 1177–1183.

- Zhang, Y.; Hong, Y. Distributed optimization design for second-order multi-agent systems. In Proceedings of the 33rd Chinese Control Conference, Nanjing, China, 28–30 July 2014; pp. 1755–1760.

- Tran, N.; Wang, Y.; Yang, W. Distributed optimization problem for double-integrator systems with the presence of the exogenous disturbance. Neurocomputing 2018, 272, 386–395.

- Lü, Q.; Li, H.; Liao, X.; Li, H. Geometrical convergence rate for distributed optimization with zero-like-free event-triggered communication scheme and uncoordinated step-sizes. In Proceedings of the 2017 Seventh International Conference on Information Science and Technology, Da Nang, Vietnam, 16–19 April 2017; pp. 351–358.

- Lü, Q.; Li, H.; Xia, D. Distributed optimization of first-order discrete-time multi-agent systems with event-triggered communication. Neurocomputing 2017, 235, 255–263.

- Chen, W.; Ren, W. Event-triggered zero-gradient-sum distributed consensus optimization over directed networks. Automatica 2016, 65, 90–97.

- Tran, N.; Wang, Y.; Liu, X.; Xiao, J.; Lei, Y. Distributed optimization problem for second-order multi-agent systems with event-triggered and time-triggered communication. J. Frankl. Inst. 2019, 356, 10196–10215.

- Gharesifard, B.; Cortés, J. Distributed continuous-time convex optimization on weight-balanced digraphs. IEEE Trans. Autom. Control 2014, 59, 781–786.

- Yang, S.; Liu, Q.; Wang, J. Distributed optimization based on a multiagent system in the presence of communication delays. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 717–728.

- Wang, L.; Xiao, F. Finite-time consensus problems for networks of dynamic agents. IEEE Trans. Autom. Control 2010, 55, 950–955.

- Yu, Z.; Yu, S.; Jiang, H.; Mei, X. Distributed fixed-time optimization for multi-agent systems over a directed network. Nonlinear Dyn. 2021, 103, 775–789.

- Zhang, Y.; Hong, Y. Distributed optimization design for high-order multi-agent systems. In Proceedings of the 34th Chinese Control Conference, Hangzhou, China, 28–30 July 2015; pp. 7251–7256.

- Gharesifard, B.; Cortés, J. Distributed convergence to Nash equilibria by adversarial networks with undirected topologies. In Proceedings of the 2012 American Control Conference (ACC), Montreal, QC, Canada, 27–29 June 2012; pp. 5881–5886.

- Yi, X.; Yao, L.; Yang, T.; George, J.; Johansson, K. Distributed optimization for second-order multi-agent systems with dynamic event-triggered communication. In Proceedings of the IEEE Conference on Decision and Control (CDC), Miami, FL, USA, 17–19 December 2018; pp. 3397–3402.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).