Abstract

In the Internet of Things (IoT) era, various devices generate massive volumes of video containing rich human relations. However, long-distance transmission of large videos may cause congestion and delays, and the large gap between the visual space and the relation space makes relation analysis difficult. Hence, this study explores an edge-cloud intelligence framework and two algorithms for cooperative relation extraction and analysis from videos in an IoT system. First, we exploit a cooperative mechanism between the edges and the cloud that schedules the relation recognition and analysis subtasks for massive video streams. Second, we propose a Multi-Granularity relation recognition Model (MGM) based on coarse- and fine-granularity features, which establishes a better mapping from visual content to relations and thus identifies relations more accurately. Specifically, we propose an entity graph based on Graph Convolutional Networks (GCN) with an attention mechanism, which supports comprehensive relationship reasoning. Third, we develop a Community Detection based on the Ensemble Learning model (CDEL), which leverages a heterogeneous skip-gram model to perform node embedding and detect communities. Experiments on the SRIV dataset and four movie videos validate that our solution outperforms several competitive baselines.

MSC:

68; 68T45

1. Introduction

With the rapid expansion of the Internet of Things (IoT), various edge devices generate a rapidly growing number of videos. Additionally, emerging intelligent applications require lower network latency and higher accuracy. Therefore, how to efficiently and accurately extract useful information from the massive video data produced by edge devices has become a hot issue [1,2]. The network transmission and computing capabilities of many IoT camera devices are weak and cannot meet the high-bandwidth, low-latency requirements of edge computing tasks [3]. Consequently, relation extraction in an IoT system faces several challenges: (1) limited storage and computing power on the edge nodes; (2) long transmission delays between edge devices and the remote cloud server; and (3) the difficulty of constructing a satisfactory mapping function from the low-level video pixel space to the high-level relationship space.

Intelligent video surveillance systems provide information extraction [4], content analysis [5], and action analysis [6] in the cloud without human intervention. In traditional approaches, the limited computational resources of camera nodes and edge devices, together with raw-data preprocessing, cause computation delays [7]. Because network bandwidth is limited, delivering raw videos to a remote cloud node may cause network congestion and transmission delays [8]. In contrast, edge computing is decentralized, and its computing nodes are closer to the data source [9], so network traffic and latency can be reduced. Camera-edge-cloud architectures have therefore been designed as a feasible approach to video analysis [10,11,12]. In such an architecture, edge nodes share some of the computing tasks of the cloud computing nodes [10], and each edge node is responsible for multiple nearby cameras, whereas the cloud handles the video processing tasks that require massive computing and storage [11]. However, with the growing demand for intelligent processing of massive video data, running Artificial Intelligence (AI) algorithms on edge nodes has become a difficult research problem [12]. In particular, how to allocate tasks among multiple edge computing nodes and coordinate them with the cloud computing platform remains a key difficulty in current research.

Relation extraction and analysis are typical tasks for human-centered videos in multimedia IoT systems [13] and have recently become a popular research focus [14,15,16]. However, the problem has not been fully solved, for the following reasons. First, some efforts have focused on relation recognition based on spatial features or interactions in images, e.g., facial, distance, and object features [17,18]. These works emphasize the image features of a video and neglect temporal features, such as people’s actions and other valuable cues to social relations. Second, some methods treat the problem merely as general video classification [19,20,21,22] and mainly focus on coarse-granularity information such as optical flow [19] and temporal features [21]. In other words, it remains challenging for machines to “read” social relations from visual content. Vicol et al. [23] manually constructed the MovieGraphs dataset to support related research, and automatically extracting relationship graphs from videos with artificial intelligence has become the main challenge for current research. Meanwhile, few studies have further analyzed the extracted relation graph, for example, through community detection, and most existing community discovery algorithms rely on massive labeled data [24,25].

Therefore, we propose a video analysis framework that exploits the collaboration among edge devices, edge nodes, and the cloud. First, the framework combines edge and cloud computing with AI algorithms to create more intelligent IoT systems. By designing the cooperative task division and data flow between the edge and the cloud, network traffic and latency can be reduced; in particular, the video stream is divided into two streams that are transmitted to the edge and cloud nodes, respectively. Second, we propose an MGM model for relation graph extraction based on multi-granularity feature fusion. We employ spatial and temporal networks to learn global features at a coarse granularity and use Mel-Frequency Cepstral Coefficients (MFCC) to extract audio features. Moreover, we construct a knowledge graph to describe the interactions among the detected entities, and a Graph Convolutional Network (GCN) with an attention mechanism, i.e., a Graph Attention Network (GAT), is adopted to select the most significant fine-granularity features in a video clip. Third, we propose a CDEL model that integrates structural and attribute similarities into a unified framework. Unlike general fusion strategies, we first detect communities separately from the topological graph and from the node attributes. Then, a weighted fusion method optimizes the communities, which reduces the inconsistencies between the graph structure and the node attributes.

The main contributions of this paper are summarized as follows.

- We propose an edge computing framework that reduces latency by leveraging the cooperative processing capabilities of edge and cloud nodes for AI algorithms;

- To achieve higher accuracy in video analysis, we propose two AI algorithms based on a multi-granularity GCN and ensemble learning that run in the cloud, while some tasks are processed on edge nodes to prevent network congestion;

- We conduct experiments on several video datasets to demonstrate that our framework and AI algorithms improve the accuracy and latency of relation extraction and analysis from massive videos.

The remainder of this paper is organized as follows. Section 2 reviews related work in three categories. Section 3 presents the edge-cloud intelligence framework. Section 4 describes the AI methods for video analysis on the edge and in the cloud, including relationship graph extraction and analysis. Section 5 describes our experiments and results and presents the essential discussions. Finally, Section 6 concludes the paper.

2. Related Work

This section reviews related work from three aspects: edge computing, relation extraction and recognition, and relation analysis.

2.1. Edge Computing

Owing to its low latency, low energy consumption, and high bandwidth, edge computing has become a hot research topic [5,9,26,27]. As more and more video cameras are deployed to obtain rich information for smart IoT, research on video data analysis has emerged [6,8,9,27,28,29,30]. For example, Yang et al. [27] deployed an end-edge-cloud collaboration to gradually learn the optimal configuration strategy. Recently, deep-learning-based AI algorithms have been successfully applied in many fields, but most of them require powerful GPU computing resources and storage capabilities [6]. Therefore, using edge computing to solve delay-sensitive problems while still producing accurate results in video processing tasks has become a research challenge. Fathy et al. [28] proposed an intelligent adaptive QoS framework to support video streaming in IoT environments. In some industrial fields, porting these algorithms to edge nodes has already been realized [9]; for example, Zhang et al. [12] defined edge intelligent computing and reviewed the relevant systems. In recent years, single video tasks such as object detection have been well addressed in edge computing. Taghavi et al. [29] proposed EdgeMask to solve real-time object segmentation, and Dave et al. [30] used an event-driven and resource-efficient surveillance system to collect personal information. However, relation analysis consists of multiple tasks, e.g., entity recognition, relation extraction, and graph analysis, and current research finds it difficult to balance the accuracy of video processing results against the processing efficiency of multiple edge nodes in an IoT system.

2.2. Relation Extraction and Recognition

There are several studies on relation extraction and recognition from videos [31,32,33,34]. Yuan et al. [31] used a weighted Gaussian distribution and calculated the weight of each relationship based on both shots and scenes. Lv et al. [32] divided a video into stories and extracted the relation graph of the persons according to their temporal distribution within each story. These studies only construct a node-edge graph without edge attributes, a limitation that the relation recognition task can address. Recently, several studies have tackled this issue [18,35]. Li et al. [35] proposed a social relation graph reasoning model driven by hybrid features, and Lin et al. [18] proposed a picture reasoning model for relationship classification. However, these studies analyze relations in static images and cannot be applied to videos. Some studies have tried to recognize relations from video data [22,36,37]. Kukleva et al. [22] designed a model for learning and predicting interactions and relationships from videos. Teng et al. [36] proposed a character and relationship joint learning framework to recognize social relations on a selected part of the MovieGraphs dataset; however, their experimental data required certain textual and manual labels. Lv et al. [37] proposed an attentive sequences recurrent network that identifies relationships directly from the raw video, but the model runs inefficiently. All of these works require immense computing power on the server, which makes them unsuitable for IoT systems. This paper studies how to assign subtasks and optimize the relation extraction model in an IoT system to improve accuracy.

2.3. Relation Graph Analysis

Discovering the key people in surveillance videos and detecting communities are important aspects of massive video processing. Lee et al. [38,39] learned representations of relationship graphs extracted from movies and designed a Char2Vec model for node-edge networks. Lv et al. [32] detected communities from node-edge networks extracted from movies. Many excellent algorithms have been proposed for finding community structures [40,41,42]; however, they typically cannot directly analyze a network whose edges are associated with various relationship types. Pan et al. [25] used both the attribute features and the structure of the social relations among nodes, and Sun et al. [24] proposed a community detection algorithm based on a deep sparse autoencoder that achieved accurate results. However, these methods achieve good results only when their models are trained on large-scale annotated datasets. Recently, many studies have addressed graph representation and analysis, including attribute networks [43] and heterogeneous networks [44,45], and these methods offer useful references for analyzing video relation graphs. Building on them, this paper designs a method for community analysis on relation graphs extracted from in-the-wild video data.

Table 1 compares our work with state-of-the-art efforts in edge computing and video analysis. Some studies have proposed edge computing frameworks [12,28,29] but have not addressed the relation recognition task. Other recent studies address relation recognition and analysis [32,35,36], but they require training on massive data with enormous computing power and do not support edge computing tasks. Our work supports all three scopes.

Table 1.

A summary of main related studies (EC: edge computing; RE: relation extraction; CD: community detection).

3. The Proposed Edge-Cloud Intelligence Framework

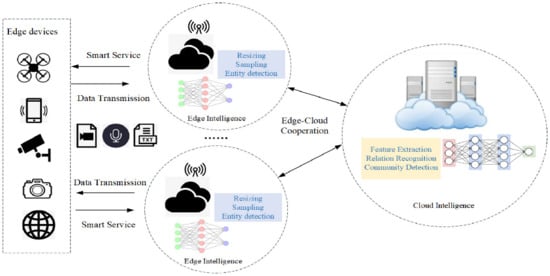

This section first describes the proposed edge-cloud computing architecture for video analysis, depicted in Figure 1. Edge devices, edge nodes, and the cloud cooperate to transmit data and execute AI algorithms. Each edge node can serve multiple cameras, communicate with the cloud, and collaborate with it to complete different tasks using AI algorithms.

Figure 1.

The framework of edge-cloud intelligence for cooperative relation extraction and analysis from videos.

3.1. Framework Overview

In the edge-cloud intelligence framework, the edge nodes are capable of executing AI algorithms, as shown in Figure 1. Because an edge node is close to its edge devices, it shortens the transmission distance and improves the response time. Intelligent processing involves two kinds of collaboration: edge-edge and edge-cloud.

In the proposed edge-cloud framework for delay-sensitive relation recognition from videos, shown in Figure 1, edge devices are deployed throughout the city. They capture video, communicate with edge computing nodes, support the operation of intelligent algorithms, and exchange data with each other via edge-to-edge communication [46]. The edge devices divide the captured video streams into different sub-tasks, and the resulting streams are transmitted over the network to the edge and cloud nodes. The edge nodes then process the collected video sub-tasks, perform some training of intelligent algorithms, and send the computing results over the network to the cloud, which trains larger-scale intelligent algorithms and stores massive amounts of data.

3.2. Definition

The framework includes three main parts: edge devices, edge intelligence, and cloud intelligence.

- Edge devices: These may be static cameras fixed in surveillance areas such as city streets and public places, or mobile devices such as mobile phones and drones. They invoke video tasks, divide them into smaller sub-tasks, compress the videos, and transmit them to the edge nodes. In addition, essential information and videos are sent to the cloud for high-level planning and decision-making with Deep Neural Networks (DNNs), e.g., relation recognition and video analysis algorithms;

- Edge intelligence: This reflects the core functions of edge computing. The edge nodes can run lightweight AI algorithms, have some storage capacity, and are close to the edge devices, so they generate faster query responses. They execute some sub-tasks, e.g., feature extraction and entity detection, and transmit the results to the cloud computing nodes through the network for further analysis;

- Cloud intelligence: The cloud computing platform provides the storage and computing capacity for all videos in the system and is a fixed facility located far from the edge nodes. The cloud node receives videos and processing results from the edge devices and edge nodes to perform more detailed analysis and training, such as relation graph construction, relation recognition, and community detection.

3.3. Collaboration and Workflow

Our edge-cloud intelligence framework requires two kinds of collaboration: edge-edge and edge-cloud. In the edge-edge scenario, multiple edge nodes collaborate with each other to accomplish a task. In the edge-cloud scenario, training is always completed in the cloud, and the trained models are then downloaded to the edge nodes. Sometimes, edge nodes need to retrain an AI model on local video by transfer learning.

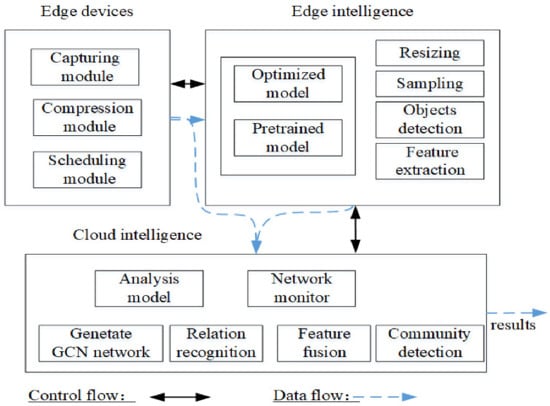

Edge devices communicate with edge nodes via device-to-device links, and each edge node serves multiple edge devices. Video analysis can be divided into subtasks that require fast query responses, such as entity detection and result queries, and subtasks that require heavy computation, preprocessed results, and higher accuracy, such as GCN network training and relation recognition. Accordingly, the workflow of our framework has two pipelines, as shown in Figure 2. The edge pipeline handles tasks for which latency is more important than accuracy and processes them on the edge nodes; for example, simple video preprocessing and entity detection run there, using the computing power and storage space of the edge nodes. In the edge-cloud pipeline, video that needs further processing is compressed and transmitted from the edge devices or edge nodes to the cloud node. A network monitor manages the transmission performance indicators of each edge and cloud node.
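The two pipelines can be pictured as a routing decision made per subtask. The following is a minimal sketch, not the framework's actual scheduler: the `Subtask` fields, the `route` function, and the `EDGE_CAPACITY` budget are hypothetical, and a real deployment would also consult the network monitor's transmission indicators.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical per-edge-node compute budget (arbitrary units, not from the paper).
EDGE_CAPACITY = 10.0


@dataclass
class Subtask:
    name: str
    latency_sensitive: bool  # True if latency matters more than accuracy
    compute_cost: float      # rough compute demand, same arbitrary units


def route(subtasks: List[Subtask], capacity: float = EDGE_CAPACITY) -> Dict[str, List[str]]:
    """Greedily place latency-sensitive subtasks on the edge while they fit the budget;
    everything else is compressed and sent along the edge-cloud pipeline."""
    plan: Dict[str, List[str]] = {"edge": [], "cloud": []}
    used = 0.0
    for task in subtasks:
        if task.latency_sensitive and used + task.compute_cost <= capacity:
            plan["edge"].append(task.name)
            used += task.compute_cost
        else:
            plan["cloud"].append(task.name)
    return plan


if __name__ == "__main__":
    tasks = [
        Subtask("video preprocessing", True, 2.0),
        Subtask("entity detection", True, 6.0),
        Subtask("result query", True, 1.0),
        Subtask("GCN training", False, 50.0),
        Subtask("relation recognition", False, 20.0),
    ]
    print(route(tasks))
```

A greedy rule is used only for readability; any placement policy that trades latency against accuracy could take its place.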

Figure 2.

The collaboration and workflows of the three parts (edge devices, edge intelligence, and cloud intelligence).

4. AI Algorithms for Relation Extraction and Analysis

This section describes our intelligent methods for relation extraction and analysis on edge and cloud nodes. It covers the problem description (Section 4.1), the tasks in edge intelligence (Section 4.2), and the tasks in cloud intelligence (Section 4.3). Table 2 lists the important notations used in this paper.

Table 2.

Important notations.

4.1. Problem Description

We make the fundamental assumption that the relation graph among people consists of five elements: the person set (nodes), the person attribute set (node attributes), the relationships (edges), the relation types among the people (edge types), and the communities obtained by community detection.

Definition 1

(Person Set). Suppose that $n$ is the number of people who appear in a video $V$. The person set is represented as $A = \{a_1, a_2, \ldots, a_n\}$, where $a_i$ is the $i$-th element of $A$.

Definition 2

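(Person Attribute Set). The person attribute set records the age, sex, and profile of each person and is represented as follows:

$$X = \{x_1, x_2, \ldots, x_n\}, \qquad x_i = (\mathrm{age}_i, \mathrm{sex}_i, \mathrm{profile}_i).$$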

Definition 3

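(Relationship Graph). $G$ denotes the relation graph extracted from the video $V$. It is represented as $G = (A, E)$, where each node $a \in A$ and each link $r \in E$ are associated with the mapping functions $\phi(a): A \to T_A$ and $\psi(r): E \to T_R$, respectively. $T_A$ and $T_R$ denote the sets of person types and relation types and satisfy $|T_A| + |T_R| > 2$. The relationship graph not only represents whether relationships exist between pairs of people but also indicates what kinds of relationships they are. $G$ can be described as an $n \times n$ matrix $\mathbf{R}$:

$$\mathbf{R} = \begin{pmatrix} r_{11} & r_{12} & \cdots & r_{1n} \\ r_{21} & r_{22} & \cdots & r_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ r_{n1} & r_{n2} & \cdots & r_{nn} \end{pmatrix},$$

where each element $r_{ij}$ of $\mathbf{R}$ denotes the relation type between persons $a_i$ and $a_j$ and satisfies $r_{ij} \in \mathcal{T}$, where $\mathcal{T}$ is a fixed relation type set.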

Definition 4

(Community Detection). For the above relationship graph $G$, the objective of the community detection task is to divide the person set $A$ into $k$ communities. The set of communities can be expressed as $C = \{C_1, C_2, \ldots, C_k\}$ in accordance with a model $f$, where the model is defined in terms of the structural features $S$, the node attribute features $X$, and the edge category features $T_R$ of $G$:

$$C = f(S, X, T_R).$$

4.2. Tasks in Edge Intelligence

DNN partitioning is widely used in various fields [10,47] to serve different goals, e.g., reducing latency or improving accuracy. In our task, the AI algorithms and video analysis methods can be divided into two parts running at two locations: one on an edge node and the other in the cloud intelligence.

Multiple edge nodes work collaboratively to accomplish compute-intensive tasks, e.g., object detection and intelligent queries. An AI algorithm may be distributed across one or more edge nodes acting as computation nodes, where it is optimally partitioned, offloaded, and executed together with peer sensor nodes [8]. The processing results are then transmitted to the cloud intelligence.

4.2.1. Partition Schemes for Entity Detection

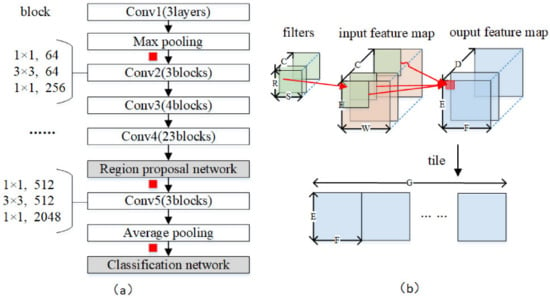

Entity detection is the essential groundwork for relation recognition. In this paper, this task employs a partitioned Faster R-CNN [10], which consists of a region proposal network (RPN) and a classification network, with ResNet101 as the backbone. The partition scheme aims to reduce network transmission by selecting a suitable image size and feature map to transmit. ResNet101 consists of five components, conv1, conv2, conv3, conv4, and conv5, which contain 0, 3, 4, 23, and 3 building blocks, respectively, i.e., 33 building blocks in total [48]. Each block has three units, and each unit contains a convolution layer, a batch normalization layer, and an activation layer. The model can be split after the max pooling layer, after the average pooling layer, and after the RPN, as shown in Figure 3a.
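The component counts above can be checked with a short calculation, and the same layout gives the size of the tensor that would cross the network at each backbone cut point. The sketch assumes the standard ResNet-101 configuration (stride-4 output after the max pooling layer, channel widths 64/256/512/1024/2048); the RPN cut is not modeled, and the helper names are hypothetical.

```python
import math

# Standard ResNet-101 layout: (component, building blocks, output channels, cumulative stride).
# "conv1" here means the 7x7 stride-2 convolution followed by the 3x3 stride-2 max pooling layer.
RESNET101 = [
    ("conv1", 0, 64, 4),
    ("conv2", 3, 256, 4),
    ("conv3", 4, 512, 8),
    ("conv4", 23, 1024, 16),
    ("conv5", 3, 2048, 32),
]


def count_weight_layers(stages=RESNET101) -> int:
    """conv1 + three convolutions per bottleneck block + the final fully connected layer."""
    blocks = sum(n for _, n, _, _ in stages)
    return 1 + 3 * blocks + 1


def cut_point_bytes(height: int, width: int, bytes_per_value: int = 4) -> dict:
    """Approximate size of the feature map transmitted if the backbone is cut after each
    component; ceil division approximates the effect of padded, strided convolutions."""
    sizes = {}
    for name, _, channels, stride in RESNET101:
        h, w = math.ceil(height / stride), math.ceil(width / stride)
        sizes[name] = channels * h * w * bytes_per_value
    return sizes
```

For a 224 × 224 input, `cut_point_bytes(224, 224)["conv1"]` gives 802,816 bytes in float32, compared with 150,528 bytes for the raw RGB image, which illustrates why the transmitted feature maps are quantized.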

Figure 3.

(a) Structure of the entity detection network that combines Faster R-CNN and ResNet101. The red squares represent cut points at which the model can be split into different parts. (b) Dimensionality of the convolutions. C: filter channels; D: map channels; R/S: filter plane height/width; H/W/E/F: feature map height/width; G = D × F.

Compressing and decompressing feature maps costs little compared with running the DNN models themselves; compression is executed on the edge nodes, so the cloud node consumes only negligible computing resources and storage space for this step. Recent studies have shown that uniform 8-bit quantization has a negligible effect on the accuracy of object detection [49]. We therefore convert 32-bit feature maps to 8-bit quantized feature maps. A feature map includes many channels, each of which is a 2D matrix. We tile the channels to convert the 3D tensor into one large 2D matrix, quantize this matrix to integers between 0 and 255, and compress it with PNG; decompression reverses these steps. Each channel is convolved with a 2D filter, as shown in Figure 3b. For example, the input image (feature map) to the first layer of a CNN contains three channels corresponding to its red, green, and blue components.
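The tiling, 8-bit quantization, and compression steps can be sketched with NumPy as follows. This is an illustration under stated assumptions: the quantization range is taken as the minimum and maximum of the tiled matrix, and Python's `zlib` (DEFLATE) stands in for the PNG encoder, since PNG also compresses with DEFLATE but adds row filtering and its own container.

```python
import math
import zlib

import numpy as np


def tile_channels(fmap: np.ndarray) -> np.ndarray:
    """Tile a (C, H, W) feature map into one 2D mosaic, padding with zero channels if needed."""
    c, h, w = fmap.shape
    cols = math.ceil(math.sqrt(c))
    rows = math.ceil(c / cols)
    padded = np.zeros((rows * cols, h, w), dtype=fmap.dtype)
    padded[:c] = fmap
    # (rows, cols, H, W) -> (rows, H, cols, W) -> (rows*H, cols*W)
    return padded.reshape(rows, cols, h, w).transpose(0, 2, 1, 3).reshape(rows * h, cols * w)


def untile_channels(mosaic: np.ndarray, c: int, h: int, w: int) -> np.ndarray:
    """Inverse of tile_channels: recover the first c channels of shape (h, w)."""
    rows, cols = mosaic.shape[0] // h, mosaic.shape[1] // w
    grid = mosaic.reshape(rows, h, cols, w).transpose(0, 2, 1, 3)
    return grid.reshape(rows * cols, h, w)[:c]


def compress(fmap: np.ndarray):
    """Edge side: float32 (C, H, W) -> tiled uint8 mosaic -> DEFLATE bytes plus metadata."""
    mosaic = tile_channels(fmap.astype(np.float32))
    lo, hi = float(mosaic.min()), float(mosaic.max())
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    q = np.round((mosaic - lo) / scale).astype(np.uint8)  # uniform 8-bit quantization
    return zlib.compress(q.tobytes()), (lo, scale, fmap.shape, q.shape)


def decompress(payload: bytes, meta) -> np.ndarray:
    """Cloud side: inflate, untile, and dequantize back to floating point."""
    lo, scale, (c, h, w), qshape = meta
    q = np.frombuffer(zlib.decompress(payload), dtype=np.uint8).reshape(qshape)
    return untile_channels(q.astype(np.float32) * scale + lo, c, h, w)
```

The reconstruction error of each value is bounded by half a quantization step, i.e., `scale / 2`.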

4.2.2. Person Identification

In this section, we design a fusion model to obtain the person set $A$. We use a Faster R-CNN model pre-trained on the Wider Face dataset [50] to detect faces, and all face images are normalized to 112 × 112 pixels. A pre-trained model detects the bodies, and the body images are normalized to 128 × 256 pixels. Face and body features are then extracted using ResNet101 [10]. Finally, the features are fused, and the fused feature set is used as the input to the clustering algorithm presented in Algorithm 1. In the first step of the clustering process, the optimal number of clusters is determined using the cosine distance to measure the similarity of person features [51]. In the second step, each node of the relation graph is labeled to obtain the person set $A$. The basic operation of Algorithm 1 is the cosine similarity between a pair of fused features, so the time complexity is $O(n^2)$, where $n$ is the number of fused features.

Algorithm 1 Person Set A
Input: face and body features $F^{f}$ and $F^{b}$.
Output: person set $A$.
// Concatenate feature vectors and perform normalization operations.
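The body of Algorithm 1 is not reproduced here, so the following is only a sketch of the described steps: concatenation, normalization, and cosine-similarity clustering. It assumes L2 normalization and replaces the unspecified search for the optimal number of clusters with connected components over pairs whose cosine similarity reaches a hypothetical threshold `tau`; the pairwise loop makes the cost quadratic in the number of features.

```python
import numpy as np


def fuse_features(face: np.ndarray, body: np.ndarray) -> np.ndarray:
    """Concatenate face and body features row-wise and L2-normalize each fused vector."""
    fused = np.concatenate([face, body], axis=1)
    norms = np.linalg.norm(fused, axis=1, keepdims=True)
    return fused / np.maximum(norms, 1e-12)


def cluster_persons(fused: np.ndarray, tau: float = 0.8) -> list:
    """Link every pair whose cosine similarity is at least tau (hypothetical threshold)
    and return one person label per detection, numbered in order of first appearance."""
    n = fused.shape[0]
    sim = fused @ fused.T  # cosine similarity, since the rows are unit length
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if sim[i, j] >= tau:
                parent[find(i)] = find(j)  # union the two components
    roots = {}
    return [roots.setdefault(find(i), len(roots)) for i in range(n)]
```

Each resulting label corresponds to one person, i.e., one node of the relation graph.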

4.3. Tasks in Cloud Intelligence

The intelligent cloud server has robust storage and computing capabilities and mainly undertakes the tasks that improve accuracy. To provide more accurate and comprehensive video analysis services, we propose a multi-granularity graph convolutional network that runs in the cloud.

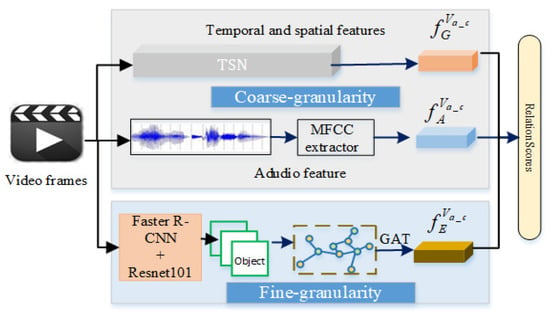

4.3.1. The Architecture of the MGM

The overall architecture of the MGM is shown in Figure 4. It consists of a coarse-granularity feature extraction part and a fine-granularity feature extraction part. The visual branch of the coarse-granularity part takes as input one video clip $v$, which is sampled into $K$ frames for efficiency, $v = \{v_1, v_2, \ldots, v_K\}$. Each frame is resized to 224 × 224 pixels and fed into a temporal segment network (TSN) [52], and 2048-dimensional global features $F_g$ are taken from its last convolutional layer. The audio branch uses MFCC to represent the audio as 39-dimensional features $F_a$. In the fine-granularity part, we use the entity detection results of Faster R-CNN with ResNet101 to obtain 2048-dimensional features, and we use $F_o$ to denote the semantic features of the fine-granularity entities. Then, an entity graph based on a GCN is established to extract fine-granularity features $F_e$, and an attention mechanism selects the most significant entities. Subsequently, the multiple features are fused as the input to the next layer. Finally, the sigmoid cross-entropy loss function is used to optimize the classifier for relation recognition.
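The frame sampling in the coarse-granularity branch follows the segment-based sampling of TSN. A minimal sketch, assuming one frame per equal-length segment (a random offset during training, the segment centre otherwise); the number of segments is a free parameter here because this section does not fix it, and `tsn_sample` is a hypothetical name.

```python
import random


def tsn_sample(num_frames: int, num_segments: int, train: bool = False, rng=None) -> list:
    """Split frame indices [0, num_frames) into num_segments equal-length segments and take
    one frame per segment: a random one when training, the segment centre otherwise."""
    if num_frames < num_segments:
        # Too few frames: repeat indices so that every segment still yields one frame.
        return [i * num_frames // num_segments for i in range(num_segments)]
    rng = rng or random.Random()
    seg_len = num_frames / num_segments
    indices = []
    for k in range(num_segments):
        start = int(round(k * seg_len))
        end = max(start + 1, int(round((k + 1) * seg_len)))
        indices.append(rng.randrange(start, end) if train else (start + end - 1) // 2)
    return indices
```

Sampling a fixed number of frames per clip keeps the cost of the TSN forward pass independent of the clip length.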

Figure 4.

The architecture of the MGM for relation recognition. The coarse-granularity part extracts temporal, spatial, and audio features. The fine-granularity part pays attention to the details of the objects in the video, which are transmitted from edge nodes. The Graph Attention (GAT) model is a GCN based on an attention mechanism.

4.3.2. Relation Recognition

The relationship graph indicates whether there is a relationship between persons $a_i$ and $a_j$. However, it does not specify the relation type, an important and ubiquitous factor in daily life. In this section, we study how to solve this problem.

There is a considerable gap between the abstract relationships among persons and the visual pixels depicting them. Humans can intuitively understand social relationships from videos, but doing so poses many challenges for artificial intelligence. Fine-granularity object information helps in analyzing the relationships between people; for example, the presence of desks, computers, and documents may reflect a “colleague” relationship between two people. This paper therefore proposes a Multi-Granularity relation recognition Model (MGM), which also recognizes entities other than people from a fine-granularity perspective.

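To extract fine-granularity features, we establish a fine-granularity graph whose node set, denoted by $O = \{o_1, o_2, \ldots, o_m\}$, contains the entities detected in one video clip $v$. $\mathbf{B} \in \mathbb{R}^{m \times m}$ is an adjacency matrix that represents how close together objects and persons are in $v$, and $b_{ij}$ is an element of $\mathbf{B}$, where $i, j \in \{1, \ldots, m\}$. $\mathbf{B}$ is initialized with the co-occurrence frequencies of all entity pairs throughout the video clip $v$ and is then normalized to the range $[0, 1]$ via min-max normalization,

$$\hat{b}_{ij} = \frac{b_{ij} - \min(\mathbf{B})}{\max(\mathbf{B}) - \min(\mathbf{B})},$$

where $\min(\mathbf{B})$ and $\max(\mathbf{B})$ are the smallest and largest elements of $\mathbf{B}$.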
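The construction of the normalized co-occurrence matrix can be sketched as below. The sketch assumes that co-occurrence is counted per frame from sets of detected entity labels and that the diagonal is left at zero; the function name and input format are hypothetical.

```python
import numpy as np


def cooccurrence_adjacency(frames: list, entities: list) -> np.ndarray:
    """frames: per-frame sets of detected entity labels; entities: the m graph nodes.
    Returns the m x m co-occurrence counts, min-max normalized to [0, 1]."""
    index = {e: i for i, e in enumerate(entities)}
    m = len(entities)
    counts = np.zeros((m, m), dtype=float)
    for detected in frames:
        present = sorted(index[e] for e in detected if e in index)
        for a in present:
            for b in present:
                if a != b:
                    counts[a, b] += 1.0  # symmetric by construction; diagonal stays zero
    lo, hi = counts.min(), counts.max()
    return (counts - lo) / (hi - lo) if hi > lo else np.zeros_like(counts)
```

For example, if a person and a desk appear together in two frames and a computer joins them in one, the person-desk entry becomes 1.0 and the person-computer entry 0.5.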

We perform a linear transformation on each node feature vector $h_i \in \mathbb{R}^{d}$ and obtain a new feature vector $h_i' = \mathbf{W} h_i$, where $\mathbf{W} \in \mathbb{R}^{d' \times d}$ and $d'$ is the dimensionality of the new feature vector.

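The GAT network employs an attention mechanism to learn the importance of neighboring nodes [53]. Thus, a new feature vector can be obtained:

$$h_i'' = \sigma\Big(\sum_{j \in \mathcal{N}_i} \alpha_{ij}\, h_j'\Big), \qquad \alpha_{ij} = \frac{\exp\big(\mathrm{LeakyReLU}(\mathbf{a}^{\top}[h_i' \,\|\, h_j'])\big)}{\sum_{k \in \mathcal{N}_i} \exp\big(\mathrm{LeakyReLU}(\mathbf{a}^{\top}[h_i' \,\|\, h_k'])\big)},$$

where $\alpha_{ij}$ represents the importance of node $j$ to node $i$, $h_i' = \mathbf{W} h_i$ and $h_j' = \mathbf{W} h_j$ are the transformed node feature vectors, $\|$ denotes concatenation, $\mathcal{N}_i$ is the neighborhood of node $i$ in the fine-granularity graph, and $\sigma$ is a nonlinear activation. The function $\mathbf{a}^{\top}[\cdot \,\|\, \cdot]$ represents a shared attention mechanism, which can in principle compute the attention between all pairs of nodes. If $\hat{b}_{ij} = 0$, then $\alpha_{ij} = 0$.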
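A single attention head of the kind described above can be written in a few lines of NumPy. This is a sketch of the standard GAT layer [53], not the MGM implementation: it assumes that node pairs with zero normalized co-occurrence are masked out, that each node also attends to itself (as in the original GAT), and it uses ELU as the nonlinearity σ.

```python
import numpy as np


def leaky_relu(x: np.ndarray, slope: float = 0.2) -> np.ndarray:
    return np.where(x > 0, x, slope * x)


def elu(x: np.ndarray) -> np.ndarray:
    return np.where(x > 0, x, np.expm1(np.minimum(x, 0.0)))


def gat_layer(H: np.ndarray, B: np.ndarray, W: np.ndarray, a: np.ndarray):
    """Single-head graph attention over m nodes.
    H: (m, d) node features; B: (m, m) normalized co-occurrence adjacency;
    W: (d_out, d) shared linear map; a: (2 * d_out,) attention vector.
    Returns the new features (m, d_out) and the attention matrix (m, m)."""
    Hp = H @ W.T                                   # h_i' = W h_i
    d_out = Hp.shape[1]
    # a^T [h_i' || h_j'] = a_1^T h_i' + a_2^T h_j', computed for all pairs at once.
    scores = leaky_relu((Hp @ a[:d_out])[:, None] + (Hp @ a[d_out:])[None, :])
    mask = (B > 0) | np.eye(len(H), dtype=bool)   # neighbours plus a self-loop
    scores = np.where(mask, scores, -np.inf)      # alpha_ij = 0 where b_ij = 0
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    alpha = np.exp(scores)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)     # softmax over each neighbourhood
    return elu(alpha @ Hp), alpha
```

Multi-head attention, as in the original GAT, would run several such heads and concatenate or average their outputs.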

Finally, the entity feature set $F_e = \{h_1'', h_2'', \ldots, h_m''\}$ is obtained.

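For relation type $c$, we concatenate the three types of features, i.e., the global visual features $F_g$, the audio features $F_a$, and the entity features $F_e$:

$$F = \mathrm{norm}\big(F_g \oplus F_a \oplus F_e\big),$$

where $\oplus$ represents the concatenation of the three vectors for each node, and $\mathrm{norm}(\cdot)$ represents the normalization operation.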

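The last layer then generates the output score for relation type $c$:

$$p_c = \mathrm{sigmoid}\big(\mathbf{u}_c^{\top} F + b_c\big),$$

where $\mathbf{u}_c$ and $b_c$ are the weight vector and bias of the output unit for relation type $c$.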

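The loss function is defined as

$$\mathcal{L} = -\sum_{i=1}^{N_c} \big[\, y_i \log p_i + (1 - y_i) \log (1 - p_i) \,\big],$$

where $y_i$ is the ground truth for the $i$-th class, $p_i$ is the predicted probability, and $N_c$ is the number of relation types.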
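The fusion, output, and loss steps can be sketched as follows, assuming L2 normalization for the normalization operation and one independent sigmoid unit per relation type. The loss is written in the numerically stable logits form max(z, 0) - z*y + log(1 + exp(-|z|)), which equals the sigmoid cross-entropy for p = sigmoid(z); the function names are illustrative.

```python
import numpy as np


def fuse(f_global: np.ndarray, f_audio: np.ndarray, f_entity: np.ndarray) -> np.ndarray:
    """Concatenate the global, audio, and entity feature vectors and L2-normalize the result."""
    f = np.concatenate([f_global, f_audio, f_entity])
    return f / max(np.linalg.norm(f), 1e-12)


def sigmoid_scores(f: np.ndarray, U: np.ndarray, b: np.ndarray) -> np.ndarray:
    """One independent sigmoid output per relation type (multi-label); U has one row per type."""
    return 1.0 / (1.0 + np.exp(-(U @ f + b)))


def sigmoid_cross_entropy(logits: np.ndarray, y: np.ndarray) -> float:
    """Stable form of -sum_i [y_i log p_i + (1 - y_i) log(1 - p_i)] with p = sigmoid(logits)."""
    return float(np.sum(np.maximum(logits, 0) - logits * y + np.log1p(np.exp(-np.abs(logits)))))
```

With the dimensions given above, a 2048-dimensional global feature, a 39-dimensional MFCC feature, and an entity feature of any length are concatenated into a single unit-length vector.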

4.3.3. Relationship Graph Analysis

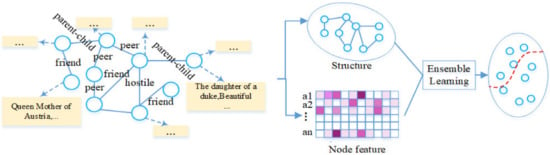

The principal objectives of relationship graph analysis are to discover how people group into social communities and to find the most influential person within each community [33,39]. The extracted relationship graph may include edge attributes, which makes it more complex than a homogeneous network. To address these challenges, this paper proposes a Community Detection based on the Ensemble Learning model (CDEL), as shown in Figure 5.

Figure 5.

The framework of the Community Detection based on the Ensemble Learning (CDEL) model. First, the relationship graph is divided into two parts, i.e., its topological structure and its node features, and candidate partitions are obtained from each. Then, a fusion strategy combines these partitions into the optimal partition.

In the CDEL model, we first divide $G$ into two parts, i.e., its topological structure $S$ and its node feature set $X$. Then, we apply community detection and clustering algorithms to $S$ and $X$ separately to obtain the candidate community partitions $P^{s}$ and $P^{x}$ for each branch. Finally, an ensemble learning algorithm fuses these partitions to obtain the optimal partition $P^{*}$, as elaborated in Algorithm 2.

(1) Structure partitioning.

Dense regions of a graph typically correspond to closely related entities and are therefore considered to belong to the same community. Accordingly, structure-based community detection algorithms [54,55] have achieved excellent results. However, such methods are unsuitable for graphs with edge attributes.

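To overcome the challenge presented by the multiple link types $T_R$, we leverage metapath2vec [45] to learn a representation of the relationship graph. Given the relationship graph $G = (A, E)$, metapath2vec learns $d$-dimensional latent representations $\mathbf{Z} \in \mathbb{R}^{|A| \times d}$, $d \ll |A|$, in which the row $\mathbf{Z}_a$ corresponds to the representation of node $a$; these representations capture the structural and semantic relations among the nodes. In the meta-path-based random walk step, a meta path scheme $\mathcal{P}$ is defined as a path of the form $T_1 \xrightarrow{R_1} T_2 \xrightarrow{R_2} \cdots T_t \xrightarrow{R_t} T_{t+1} \cdots \xrightarrow{R_{l-1}} T_l$, where $R = R_1 \circ R_2 \circ \cdots \circ R_{l-1}$ defines the composite relations between node types $T_1$ and $T_l$. The transition probability at step $i$ is defined as follows:

$$p(a^{i+1} \mid a_t^{i}, \mathcal{P}) = \begin{cases} \dfrac{1}{|N_{t+1}(a_t^{i})|}, & (a^{i+1}, a_t^{i}) \in E \text{ and } \phi(a^{i+1}) = t+1, \\ 0, & (a^{i+1}, a_t^{i}) \in E \text{ and } \phi(a^{i+1}) \neq t+1, \\ 0, & (a^{i+1}, a_t^{i}) \notin E, \end{cases}$$

where $a_t^{i} \in T_t$, and $N_{t+1}(a_t^{i})$ denotes the $T_{t+1}$-type neighborhood of node $a_t^{i}$. In other words, $a^{i+1} \in T_{t+1}$; that is, the flow of the walker is conditioned on the predefined meta path $\mathcal{P}$.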

In our work, the meta path scheme has the form “person-relation-person”. The meta-path-based random walk strategy ensures that the semantic relationships between different nodes are preserved. The output of the network is the low-dimensional matrix $\mathbf{Z}$. Finally, the node features $\mathbf{Z}$ are used as input to the k-means algorithm to detect a community partition $P^{s} = \{P_1^{s}, \ldots, P_k^{s}\}$, which satisfies $\bigcup_{l=1}^{k} P_l^{s} = A$ and $P_l^{s} \cap P_{l'}^{s} = \emptyset$ for $l \neq l'$.
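The meta-path-guided walk can be sketched as below. This sketch assumes that relations are represented as typed intermediate nodes, so that the “person-relation-person” scheme becomes a sequence of node types; the graph encoding and the function name are illustrative, and the resulting walks would then be fed to a (heterogeneous) skip-gram model, which is not shown.

```python
import random


def metapath_walk(adj, node_type, start, scheme, length, rng=None):
    """Meta-path-guided random walk in the style of metapath2vec.

    adj: node -> set of neighbours; node_type: node -> type name;
    scheme: symmetric list of types, e.g. ["person", "relation", "person"].
    The next node is drawn uniformly from the neighbours whose type matches the next
    position in the scheme; all other neighbours have transition probability 0."""
    if scheme[0] != scheme[-1] or node_type[start] != scheme[0]:
        raise ValueError("scheme must be symmetric and begin with the start node's type")
    rng = rng or random.Random()
    period = len(scheme) - 1  # a symmetric scheme repeats with this period
    walk = [start]
    for step in range(1, length):
        wanted = scheme[step % period]
        candidates = sorted(n for n in adj[walk[-1]] if node_type[n] == wanted)
        if not candidates:
            break  # no neighbour of the required type, so the walk stops early
        walk.append(rng.choice(candidates))
    return walk
```

Because the walker only moves to neighbours of the type required by the scheme, every walk alternates between persons and relations, which is what preserves the semantics of the edges.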

(2) Attribute partitioning.

The initial node attributes of the relation graph include the age, sex, and profile of each person, encoded with Bidirectional Encoder Representations from Transformers (BERT) [56].

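The node features $X$ are also input to the k-means algorithm to detect a community partition $P^{x} = \{P_1^{x}, \ldots, P_k^{x}\}$, which satisfies $\bigcup_{l=1}^{k} P_l^{x} = A$ and $P_l^{x} \cap P_{l'}^{x} = \emptyset$ for $l \neq l'$.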
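Both branches end with k-means. A plain Lloyd's-algorithm sketch is shown below; the random initialization, the iteration cap, and the handling of empty clusters are assumptions, since the section does not specify them. Each returned label assigns a node to exactly one community, so the two partition conditions hold by construction.

```python
import numpy as np


def kmeans(X: np.ndarray, k: int, iters: int = 100, seed: int = 0) -> np.ndarray:
    """Plain Lloyd's k-means; returns one community label per row of X."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    labels = np.zeros(len(X), dtype=int)
    for it in range(iters):
        # Squared Euclidean distance from every point to every centre.
        dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        new_labels = dists.argmin(axis=1)
        if it > 0 and np.array_equal(new_labels, labels):
            break  # assignments stopped changing
        labels = new_labels
        for c in range(k):
            members = X[labels == c]
            if len(members):  # keep the old centre if a cluster becomes empty
                centers[c] = members.mean(axis=0)
    return labels
```

The same function serves both branches: it receives the metapath2vec embeddings $\mathbf{Z}$ for the structure partition and the attribute features $X$ for the attribute partition.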

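$N$ is the number of candidate partitions obtained from $G$, denoted $P^{(1)}, \ldots, P^{(N)}$, where $P^{(t)} = \{C_1^{(t)}, C_2^{(t)}, \ldots, C_{k_t}^{(t)}\}$ and $C_j^{(t)}$ is the $j$-th community in $P^{(t)}$. The main concern of the CDEL model is to determine a fusion strategy that guarantees that the final community partition $P^{*}$ is the optimal one.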

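Suppose that the community partition $P^{x}$ is generated by the community cluster detector from the node attributes and that $P^{s}$ is generated based on the community structure. $\mathbf{w} = (w_1, w_2, \ldots, w_N)$ is the weight vector for the community partition fusion, where $w_t$ weights the node pairs that partition $P^{(t)}$ groups together. For each node pair $(a_i, a_j)$ of $A$, the weighted co-association matrix $M$ is defined as

$$M_{ij} = \frac{1}{N} \sum_{t=1}^{N} w_t(a_i, a_j)\, \delta\big(l_i^{(t)}, l_j^{(t)}\big),$$

where $l_i^{(t)}$ is the cluster label of $a_i$ in partition $P^{(t)}$, and $\delta(l_i^{(t)}, l_j^{(t)})$ is 1 if $l_i^{(t)} = l_j^{(t)}$ and 0 otherwise.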
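The weighted co-association matrix can be computed directly from the label vectors, as in this sketch. The weighting functions are passed in as callables (a hypothetical interface); with every weight equal to 1, the result reduces to the classic unweighted co-association matrix, i.e., the fraction of partitions that place two nodes together.

```python
import numpy as np


def weighted_coassociation(partitions, weight_fns) -> np.ndarray:
    """partitions: N label lists over the same n nodes (e.g. the structure and attribute
    partitions); weight_fns: N callables w_t(i, j). M[i, j] is the average of w_t(i, j)
    over the partitions that put i and j in the same community (delta = 1); pairs that a
    partition separates contribute 0 for that partition."""
    N, n = len(partitions), len(partitions[0])
    M = np.zeros((n, n))
    for labels, w in zip(partitions, weight_fns):
        for i in range(n):
            for j in range(n):
                if labels[i] == labels[j]:
                    M[i, j] += w(i, j)
    return M / N
```

The nested loops visit every node pair once per partition, so the cost grows as $N$ times the square of the number of nodes.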

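The weighting function $w_t$ for the topological partition can be defined as the Jaccard similarity coefficient, and for the attribute partition as the related attribute similarity proposed in [57]. For the topological partition,

$$w_t(a_i, a_j) = \frac{|\mathcal{N}(a_i) \cap \mathcal{N}(a_j)|}{|\mathcal{N}(a_i) \cup \mathcal{N}(a_j)|},$$

where $\mathcal{N}(\cdot)$ retrieves the neighbors of a node in $G$. Correspondingly, for the attribute partition, $w_t$ is defined as the related attribute similarity, i.e.,

$$w_t(a_i, a_j) = \frac{x_i^{\top} x_j}{\|x_i\|\,\|x_j\|},$$

where $x_i$ represents the feature vector of node $a_i$, and $\|\cdot\|$ is the norm operator. $C_l^{(t)}$ represents the cluster with label $l$ in $P^{(t)}$; if $l_i^{(t)} = l_j^{(t)} = l$, then $a_i, a_j \in C_l^{(t)}$.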
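The two weighting functions can be sketched as follows. The Jaccard coefficient follows its standard definition; the cosine form of the attribute similarity is an assumption, since the exact formula from [57] is not reproduced in this section.

```python
import numpy as np


def jaccard(neighbors: dict, i, j) -> float:
    """Size of the shared neighbourhood divided by the size of the combined neighbourhood."""
    ni, nj = neighbors.get(i, set()), neighbors.get(j, set())
    union = ni | nj
    return len(ni & nj) / len(union) if union else 0.0


def attribute_similarity(x_i: np.ndarray, x_j: np.ndarray) -> float:
    """Cosine similarity of two node feature vectors (assumed form of the attribute weight)."""
    denom = np.linalg.norm(x_i) * np.linalg.norm(x_j)
    return float(x_i @ x_j / denom) if denom > 0 else 0.0
```

Both functions return values in a bounded range (the Jaccard coefficient in [0, 1], the cosine in [-1, 1]), so structural and attribute evidence contribute on comparable scales to the co-association matrix.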

The matrix $M$ is a similarity matrix for $A$ in the relationship graph $G$, and the complete procedure is given in Algorithm 2. Our model uses a weighted fusion of the structure and attribute information of the relationship graph to obtain more accurate communities. The time complexity of Algorithm 2 is $O(Nm^2)$, where $N$ is the number of candidate partitions and $m$ is the number of nodes in $P^{s}$ and $P^{x}$.

Algorithm 2. Fusion algorithm for CDEL

Input: the structure partition $P^{s}$ and the attribute partition $P^{a}$.
Output: the final community partition.
// Traverse the two partitions. If a node pair is not in the same community, ignore it; otherwise, add its contribution to the weighted co-association matrix M.
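Because the exact weighted co-association formula is not reproduced in this text, the following is only a sketch of one plausible reading of Algorithm 2: for every node pair, each candidate partition $P_i$ contributes its fusion weight $w_i$ times a pair weighting $f_i(u, v)$ (for example, the Jaccard coefficient for the structure partition) when $u$ and $v$ share a community, and contributes nothing otherwise. The product form, the function names, and the toy data are assumptions, and the step that derives the final partition from M is not shown.

```python
from itertools import combinations


def weighted_coassociation(nodes, partitions, weights, pair_weights):
    """
    Hypothetical weighted co-association matrix.

    nodes:        list of node ids
    partitions:   list of dicts, partitions[i][node] = cluster label of node in P_i
    weights:      fusion weight w_i for each candidate partition
    pair_weights: list of functions f_i(u, v) -> float (e.g. Jaccard, similarity)
    Returns M as a dict of dicts with M[u][v] == M[v][u];
    diagonal entries are left at 0.0 (assumption).
    """
    M = {u: {v: 0.0 for v in nodes} for u in nodes}
    for u, v in combinations(nodes, 2):
        total = 0.0
        for labels, w, f in zip(partitions, weights, pair_weights):
            if labels[u] != labels[v]:
                continue  # indicator is 0: not in the same community, ignore
            total += w * f(u, v)  # indicator is 1: add the weighted pair score
        M[u][v] = M[v][u] = total
    return M


if __name__ == "__main__":
    nodes = ["a", "b", "c"]
    structure = {"a": 0, "b": 0, "c": 1}   # toy structure partition
    attribute = {"a": 0, "b": 1, "c": 1}   # toy attribute partition
    one = lambda u, v: 1.0                 # constant pair weight for illustration
    M = weighted_coassociation(nodes, [structure, attribute], [0.6, 0.4], [one, one])
    print(M["a"]["b"], M["b"]["c"], M["a"]["c"])
```

With constant pair weights the matrix reduces to a weighted vote: a and b agree only in the structure partition, b and c only in the attribute partition, and a and c in neither.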

5. Experiment

The experiments mainly verify the effectiveness of the edge-cloud intelligence methods. Our experimental environment consists of a cloud server with Intel(R) Xeon(R) CPU E5-2620 v4 processors running at 2.10 GHz and two TITAN X (Pascal) GPUs, together with two edge nodes, each of which is an NVIDIA Jetson TX2 board.

5.1. Dataset Description

Experimental data were selected to support two tasks: relation recognition and community detection.

The dataset used for relation recognition is Social Relation in Videos (SRIV) [19], a personal relationship video dataset containing 3124 videos with a total duration of 25 h. We also test our MGM model on four real-world movies, i.e., The Shawshank Redemption, Sissi, Kung Fu Hustle, and Forrest Gump, in which complex relations exist between the characters. In total, we analyzed the relationships among 152 roles. For each person pair, we selected 10–20 video clips, each lasting approximately 5–10 s. The video and audio streams are encoded with H.264 and AC3 at bit rates of 7916 kb/s and 320 kb/s, respectively.
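To give a sense of the data volume that motivates processing video near the edge, the stated bit rates can be converted into approximate per-clip sizes. This back-of-the-envelope calculation assumes constant bit rates and decimal units (1 kb = 1000 bits, 1 MB = 10^6 bytes) and ignores container overhead.

```python
VIDEO_KBPS = 7916  # H.264 video bit rate from the dataset description
AUDIO_KBPS = 320   # AC3 audio bit rate from the dataset description


def clip_size_mb(duration_s, video_kbps=VIDEO_KBPS, audio_kbps=AUDIO_KBPS):
    """Approximate clip size in decimal megabytes, assuming constant bit rates."""
    total_kilobits = (video_kbps + audio_kbps) * duration_s
    return total_kilobits * 1000 / 8 / 1e6  # kilobits -> bits -> bytes -> MB


if __name__ == "__main__":
    for seconds in (5, 10):
        print(f"{seconds:>2} s clip: about {clip_size_mb(seconds):.1f} MB")
```

Under these assumptions, a 5 s clip comes to roughly 5.1 MB and a 10 s clip to roughly 10.3 MB.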

For the community detection task, we use the relation networks extracted from the above four movies with the relation network extraction method of previous work [32].

5.2. Evaluation Metrics

To validate the overall performance of our framework, we use the following evaluation metrics.

The relation recognition method is evaluated using the F1 score, balanced accuracy (BalAccuracy), and subset accuracy, defined as follows.

5.2.1. F1 Score

The F1 score is a label-based metric. For the i-th class, it is calculated as

$$\mathrm{F1}_i = \frac{2\,TP_i}{2\,TP_i + FP_i + FN_i},$$

where $TP_i$, $FP_i$, $TN_i$, and $FN_i$ denote the numbers of true positives, false positives, true negatives, and false negatives, respectively, for the i-th class.
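The per-class counts and F1 scores can be computed as in the following sketch, assuming multi-label predictions encoded as 0/1 vectors with one column per relation class. The encoding, the function names, and the convention of returning 0.0 when a class has no positives and no predictions are assumptions for illustration.

```python
def per_class_counts(y_true, y_pred, num_classes):
    """Return (TP, FP, TN, FN) for each class from 0/1 label matrices."""
    counts = []
    for i in range(num_classes):
        tp = fp = tn = fn = 0
        for t, p in zip(y_true, y_pred):
            if t[i] and p[i]:
                tp += 1
            elif p[i]:        # predicted positive, actually negative
                fp += 1
            elif not t[i]:    # predicted negative, actually negative
                tn += 1
            else:             # predicted negative, actually positive
                fn += 1
        counts.append((tp, fp, tn, fn))
    return counts


def f1_per_class(y_true, y_pred, num_classes):
    """F1_i = 2 TP_i / (2 TP_i + FP_i + FN_i); 0.0 if the denominator is 0."""
    scores = []
    for tp, fp, _tn, fn in per_class_counts(y_true, y_pred, num_classes):
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


if __name__ == "__main__":
    y_true = [[1, 0], [1, 1], [0, 1], [0, 0]]  # toy ground truth, 2 classes
    y_pred = [[1, 0], [0, 1], [1, 1], [0, 0]]  # toy predictions
    print(f1_per_class(y_true, y_pred, 2))
```

In the toy example, class 0 has one of each outcome (F1 = 0.5), while class 1 is predicted perfectly (F1 = 1.0).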

5.2.2. BalAccuracy

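To account for the imbalance between positive and negative samples, balanced accuracy (BalAcc) is adopted:

$$\mathrm{BalAcc}_i = \frac{1}{2}\left(\frac{TP_i}{n_i^{+}} + \frac{TN_i}{n_i^{-}}\right),$$

where $n_i^{+}$ and $n_i^{-}$ are the numbers of positive and negative samples of the i-th class, respectively.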
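The following sketch computes balanced accuracy for a single class from binary ground-truth and predicted labels, assuming the standard definition: the mean of the true positive rate (TP over the number of positive samples) and the true negative rate (TN over the number of negative samples). The function name and example labels are hypothetical.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of TP / n_pos and TN / n_neg for binary (0/1) labels of one class."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = sum(1 for t in y_true if t == 0)
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both positive and negative samples are required")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return 0.5 * (tp / n_pos + tn / n_neg)


if __name__ == "__main__":
    # 1 positive and 9 negatives; a classifier that always predicts "negative".
    print(balanced_accuracy([1] + [0] * 9, [0] * 10))
```

For this imbalanced example, plain accuracy would be 0.9 even though the positive sample is missed, whereas balanced accuracy is 0.5, which is why the metric suits skewed relation classes.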

5.2.3. Subset Accuracy

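This metric evaluates whether the predicted label set exactly matches the ground-truth label set [37]. It is defined as follows:

$$\mathrm{SubsetAcc} = \frac{1}{n}\sum_{j=1}^{n} \mathbb{1}\left[\hat{Y}_j = Y_j\right],$$

where $n$ is the number of test samples, $Y_j$ and $\hat{Y}_j$ are the ground-truth and predicted label sets of the j-th sample, and $\mathbb{1}[\cdot]$ is the indicator function.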
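Subset accuracy only rewards a sample when its whole predicted label set is correct, as the following sketch shows. The relation label names are hypothetical and chosen only for illustration.

```python
def subset_accuracy(true_sets, pred_sets):
    """Fraction of samples whose predicted label set equals the ground truth."""
    if len(true_sets) != len(pred_sets) or not true_sets:
        raise ValueError("need two non-empty lists of equal length")
    hits = sum(1 for t, p in zip(true_sets, pred_sets) if set(t) == set(p))
    return hits / len(true_sets)


if __name__ == "__main__":
    truth = [{"friends"}, {"colleagues", "friends"}, {"couple"}]  # hypothetical labels
    preds = [{"friends"}, {"friends"}, {"couple"}]
    print(subset_accuracy(truth, preds))
```

The second sample counts as a miss even though one of its two labels is correct, so the score here is 2/3.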

5.2.4. NMI

The community discovery method is evaluated using the Normalized Mutual Information (NMI), which takes values between 0 and 1 [58].

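Let $R$ denote the set of ground-truth communities and $T$ the set of communities found by the community detection algorithm on the relationship network. Additionally, $r$ and $k$ denote the numbers of communities in $R$ and $T$, respectively. $N$ is the $r \times k$ confusion matrix: its rows represent the real communities, and its columns represent the communities obtained by the algorithm. $S$ is the number of nodes. $N_{ij}$ denotes the number of nodes in both real community i and found community j, and $N_{i\cdot}$ and $N_{\cdot j}$ are the sums of the i-th row and the j-th column of N, respectively. The NMI is calculated as follows:

$$\mathrm{NMI}(R, T) = \frac{2\,I(R, T)}{H(R) + H(T)},$$

where $I(R, T)$ is the mutual information between the two partitions, and $H(R)$ and $H(T)$ are their entropies (with the convention $0 \log 0 = 0$):

$$I(R, T) = \sum_{i=1}^{r}\sum_{j=1}^{k} \frac{N_{ij}}{S}\log\frac{N_{ij}\,S}{N_{i\cdot}\,N_{\cdot j}}, \quad H(R) = -\sum_{i=1}^{r} \frac{N_{i\cdot}}{S}\log\frac{N_{i\cdot}}{S}, \quad H(T) = -\sum_{j=1}^{k} \frac{N_{\cdot j}}{S}\log\frac{N_{\cdot j}}{S}.$$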
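As a sanity check on the metric, the following sketch computes NMI in the confusion-matrix form of Danon et al. [58], $\mathrm{NMI} = 2I(R,T)/(H(R)+H(T))$, from two per-node label lists (ground truth and detected). Building the confusion matrix from label lists, the natural logarithm (the base cancels in the ratio), and returning 1.0 when both partitions consist of a single community are assumptions of this sketch.

```python
import math
from collections import Counter


def nmi(true_labels, found_labels):
    """NMI of two partitions given as per-node label lists."""
    S = len(true_labels)                         # number of nodes
    N = Counter(zip(true_labels, found_labels))  # N[(i, j)]: nodes in real i and found j
    row = Counter(true_labels)                   # row sums N_i.
    col = Counter(found_labels)                  # column sums N_.j
    # Mutual information; empty cells never appear in N, so 0 log 0 is skipped.
    mutual = sum(n / S * math.log(n * S / (row[i] * col[j]))
                 for (i, j), n in N.items())
    h_r = -sum(n / S * math.log(n / S) for n in row.values())
    h_t = -sum(n / S * math.log(n / S) for n in col.values())
    if h_r + h_t == 0:
        return 1.0  # both partitions are a single community: identical
    return 2 * mutual / (h_r + h_t)


if __name__ == "__main__":
    print(nmi([0, 0, 1, 1], [5, 5, 7, 7]))  # same split, different label names
    print(nmi([0, 0, 1, 1], [0, 1, 0, 1]))  # independent split
```

Relabeling communities does not change the score: the first call describes the same split and yields 1, while the second, where the detected split carries no information about the real one, yields 0.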

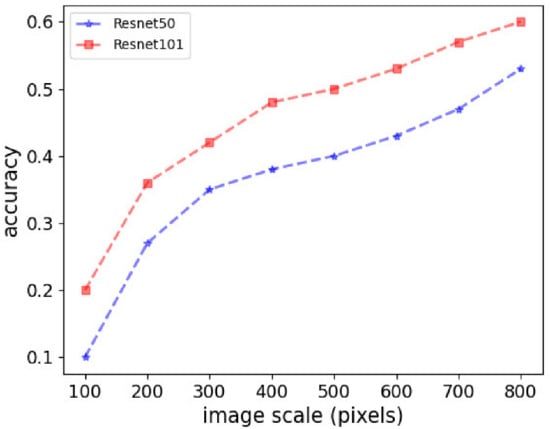

5.3. Entity Detection in Edge Intelligence

To evaluate the computing capacity and accuracy of AI tasks on the edge node, we run the entity detection task on an edge node for a given video clip. We compare two standard feature extraction backbones for the entity recognition model, ResNet50 and ResNet101. ResNet50 has the same five-stage structure as ResNet101, but its 16 building blocks are allocated to the last four stages as 3, 4, 6, and 3 (ResNet101 uses 3, 4, 23, and 3). Figure 6 shows the accuracy of the different algorithms at different video image sizes. The accuracy of entity detection increases with video quality, which indicates that our framework and the edge node provide a stable mechanism for running AI algorithms on extreme edge devices. The accuracy is still far from 100% because it is difficult to capture frontal, clear entity images in real-world video data. The latency may increase as the number of tasks grows, because more pipelines then run in the cloud.
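The block counts can be checked against the network names. In the bottleneck design of He et al., each building block contains three convolutional layers, so adding the first convolution (conv1) and the final fully connected layer recovers the nominal depth. The dictionary and helper below are illustrative.

```python
# Bottleneck blocks per stage (conv2_x .. conv5_x), following He et al. (2016).
STAGES = {
    "ResNet50": [3, 4, 6, 3],
    "ResNet101": [3, 4, 23, 3],
}


def depth(blocks_per_stage, layers_per_block=3):
    """conv1 + 3 convolutional layers per bottleneck block + final FC layer."""
    return 1 + layers_per_block * sum(blocks_per_stage) + 1


if __name__ == "__main__":
    for name, blocks in STAGES.items():
        print(f"{name}: {sum(blocks)} blocks, {depth(blocks)} weighted layers")
```

ResNet50 gives 1 + 3 × 16 + 1 = 50 layers, and ResNet101 gives 1 + 3 × 33 + 1 = 101, so the deeper backbone differs only in the number of blocks in its third block stage.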

Figure 6.

Impact of video pixels on the accuracy of entity detection.

5.4. Relation Recognition in the Cloud Intelligence

We experimentally verified the accuracy of the MGM model on the cloud server. The relationship graphs for the four movies were constructed as described in previous work [32]. These graphs show whether a relationship exists between two people, but not what kind of relationship it is; identifying the relation type is the task of our novel MGM relation recognition method.

To validate MGM, we compare it with six other methods on the SRIV dataset; the results are shown in Table 3. In addition, we conducted ablation experiments on the two innovations of the MGM model, namely the GAT component and the fusion of multiple features.

Table 3.

Comparison with the state-of-the-art methods on the SRIV dataset.

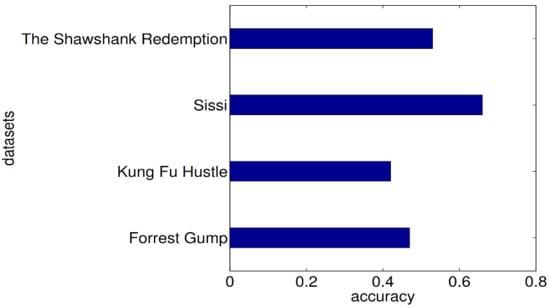

In a video, relations among people typically take complex forms, e.g., they are expressed through people's actions or the objects in the same scene. First, the basic spatial or temporal models considered for comparison, i.e., long short-term memory (LSTM) and TSN, yield relatively low accuracy. In contrast, a fused multi-cue model, i.e., the Multi-Stream model, improves recognition accuracy, because scene and action information is beneficial for relation recognition. However, these models focus only on coarse-grained information and ignore fine-grained information. Second, the two-stage model (TSM) performs better because it considers detailed appearance characteristics and objects, providing more sophisticated features for relation recognition. Finally, our MGM achieves the best performance by combining the TSN and spatio-temporal multi-view (STMV) models with an attention mechanism. For example, the accuracy achieved is 0.7173 (+8.5%), and achieves 0.7431 (+9.3%), suggesting that the attention mechanism can focus on the entities that are most informative about the relation. Moreover, fusing audio features improves performance further; for example, the accuracy increases by 1%, suggesting that audio features reflect the emotions of the moment, which also carry information about the relationship.

Figure 7 shows the results of testing the models trained on SRIV on the four movies. The results vary across movies: our approach yields the worst result on “Kung Fu Hustle” and the best on “Sissi”. This is likely because fine-grained relation cues are more abundant in the latter than in the former.

Figure 7.

The performance on four real-world datasets.

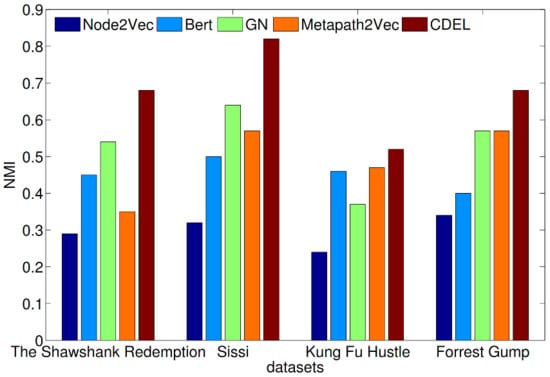

5.5. Community Detection in the Cloud Intelligence

We validate the effectiveness of CDEL on the cloud intelligence server through experiments on graphs constructed from real-world datasets.

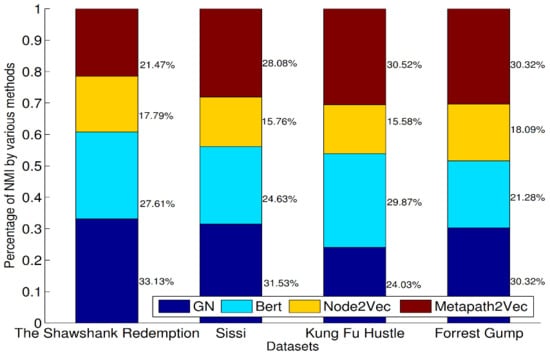

Figure 8 illustrates the comparative results of the various community detection methods. The NMI value of our proposed model is higher than those of the baselines, indicating that it better characterizes the communities formed by the people in videos. The results also show that node attribute features alone cannot accurately represent community features, as node2Vec [61] and Bert [56] achieve the lowest NMI values. Models using structural features, i.e., Girvan Newman (GN) [62] and metapath2vec [45], perform better, likely because they reflect each node's relations with the other nodes in the whole relation graph more comprehensively. Finally, our CDEL model shows the best performance, demonstrating the complementary effect of the weighted fusion of multiple types of relation-graph features.

Figure 8.

NMI values for community detection on real-world datasets.

As shown in Figure 9, the contributions of the different features vary across the four movies. First, the partitions based on graph structure (GN and Metapath2Vec) play a more significant role, each accounting for about 30%. Second, the partition based on Node2vec performs worst on “Sissi”. This result suggests that simple words cannot adequately express relations, whereas video can help mine richer semantics.

Figure 9.

Contributions of the different representations in CDEL to community detection, divided into two types: structure-based features (GN and Metapath2Vec) and attribute-based features (Bert and Node2Vec).

6. Conclusions

This paper proposes an edge-cloud intelligence framework for cooperative relation extraction and analysis from videos. First, we exploit an edge-cloud cooperative mechanism that schedules the subtasks of massive video analysis, so that edge nodes and the cloud jointly complete the AI algorithms by combining their computing power and network transmission capacity. Second, we propose a multi-granularity fusion model to recognize relations. Third, we exploit a weighted fusion strategy for community detection, which provides a comprehensive perspective and innovative services on the relation graph. Finally, experiments on several datasets demonstrate the effectiveness of the proposed framework. However, this work has some limitations. First, we did not consider the different computing power of different edge nodes. Second, the dataset labels were annotated manually and may therefore contain subjective factors.

To address these limitations, we suggest the following future research directions. First, the algorithms could be optimized so that more intelligent algorithms run on the edge nodes for video analysis. Second, future research could collect more realistic datasets and establish a more objective labeling scheme.

Author Contributions

Methodology, J.L.; software, M.L.; writing—original draft, J.L. and L.S.; writing—review and editing, L.S. and Q.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the R&D Program of Beijing Municipal Education Commission (No.KM202211417014) and the Academic Research Projects of Beijing Union University (No.ZK20202215).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jiang, X.; Yu, F.R.; Song, T.; Leung, V.C. A survey on multi-access edge computing applied to video streaming: Some research issues and challenges. IEEE Commun. Surv. Tutor. 2021, 23, 871–903.

- Alfonso, G.P. Application of HMM and Ensemble Learning in Intelligent Tunneling. Mathematics 2022, 10, 1785.

- Patrikar, D.R.; Parate, M.R. Anomaly detection using edge computing in video surveillance system: Review. Int. J. Multimed. Inf. Retr. 2022, 11, 85–110.

- Liu, X.; Liu, W.; Zhang, M.; Chen, J.; Gao, L.; Yan, C.; Mei, T. Social relation recognition from videos via multi-scale spatial-temporal reasoning. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 6–16 June 2019; pp. 3566–3574.

- Sun, H.; Shi, W.; Liang, X.; Yu, Y. VU: Edge computing-enabled video usefulness detection and its application in large-scale video surveillance systems. IEEE Internet Things J. 2020, 7, 800–817.

- Xu, K.; Jiang, X.; Sun, T. Anomaly detection based on stacked sparse coding with intraframe classification strategy. IEEE Trans. Multimed. 2018, 20, 1062–1074.

- Georgiou, T.; Liu, Y.; Chen, W.; Lew, M.S. A survey of traditional and deep learning-based feature descriptors for high dimensional data in computer vision. Int. J. Multimed. Inf. Retr. 2020, 9, 135–170.

- Long, C.; Cao, Y.; Jiang, T.; Zhang, Q. Edge computing framework for cooperative video processing in multimedia IoT systems. IEEE Trans. Multimed. 2018, 20, 1126–1139.

- Ghosh, A.M.; Grolinger, K. Edge-cloud computing for internet of things data analytics: Embedding intelligence in the edge with deep learning. IEEE Trans. Ind. Inform. 2020, 17, 2191–2200.

- Rong, C.; Wang, J.H.; Liu, J.; Wang, J.; Li, F.; Huang, X. Scheduling massive camera streams to optimize large-scale live video analytics. IEEE/ACM Trans. Netw. 2022, 30, 867–880.

- Zhang, B.; Jin, X.; Ratnasamy, S.; Wawrzynek, J.; Lee, E.A. AWStream: Adaptive wide-area streaming analytics. In Proceedings of the 2018 Conference of the ACM Special Interest Group on Data Communication, Budapest, Hungary, 20–25 August 2018; pp. 236–252.

- Zhang, X.; Wang, Y.; Lu, S.; Liu, L.; Shi, W. OpenEI: An open framework for edge intelligence. In Proceedings of the 39th IEEE International Conference on Distributed Computing Systems, Dallas, TX, USA, 7–10 July 2019; pp. 1840–1851.

- Angadi, S.; Nandyal, S. Human identification using histogram of oriented gradients (HOG) and non-maximum suppression (NMS) for ATM video surveillance. Int. J. Inn. Res. Com. Sci. Tech. 2021, 9, IRP1143.

- Yu, H.; Li, H.; Mao, D.; Cai, Q. A relationship extraction method for domain knowledge graph construction. World Wide Web 2020, 23, 735–753.

- Shashank, G.; Ajay, R.S. Maximum correlation based mutual information scheme for intrusion detection in the data networks. Expert Syst. Appl. 2022, 189, 116089.

- Xiong, Z.; Wu, Y.; Ye, C.; Zhang, X.; Xu, F. Color image chaos encryption algorithm combining CRC and nine palace map. Multimed. Tools Appl. 2019, 78, 35–55.

- Zellers, R.; Bisk, Y.; Farhadi, A.; Choi, Y. From recognition to cognition: Visual commonsense reasoning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 6–16 June 2019; pp. 6720–6731.

- Lin, D.; Wang, L.; Shi, G.; Xu, H. Social relationship recognition based on relational self-attention mechanism. In Proceedings of the 25th IEEE International Conference on Computer Supported Cooperative Work in Design, Hangzhou, China, 4–6 May 2022; pp. 798–803.

- Lv, J.; Liu, W.; Zhou, L.; Wu, B.; Ma, H. Multi-stream fusion model for social relation recognition from videos. In Proceedings of the MultiMedia Modeling, 24th International Conference, Bangkok, Thailand, 5–7 February 2018; pp. 355–368.

- Dai, P.; Lv, J.; Wu, B. Two-stage model for social relationship understanding from videos. In Proceedings of the IEEE International Conference on Multimedia and Expo, Shanghai, China, 8–12 July 2019; pp. 1132–1137.

- Xu, T.; Zhou, P.; Hu, L.; He, X. Socializing the videos: A multimodal approach for social relation recognition. ACM Trans. Multimed. Comput. 2021, 17, 23.

- Kukleva, A.; Tapaswi, M.; Laptev, I. Learning interactions and relationships between movie characters. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9849–9858.

- Vicol, P.; Tapaswi, M.; Castrejón, L.; Fidler, S. MovieGraphs: Towards understanding human-centric situations from videos. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8581–8590.

- Li, S.; Jiang, S.; Xu, X.; Han, W.; Zhao, D.; Wang, Z. A weighted network community detection algorithm based on deep learning. Appl. Math. Comput. 2021, 401, 126012.

- Ma, C.X.; Song, J.C.; Zhu, Q.; Maher, K.; Huang, Z.Y.; Wang, H.A. EmotionMap: Visual analysis of video emotional content on a map. J. Comput. Sci. Technol. 2020, 35, 576–591.

- Donta, P.K.; Srirama, S.N.; Amgoth, T.; Annavarapu, C.S.R. Survey on recent advances in IoT application layer protocols and machine learning scope for research directions. Digit. Commun. Netw. 2021.

- Yang, P.; Lyu, F.; Wu, W.; Zhang, N.; Yu, L.; Shen, X.S. Edge coordinated query configuration for low-latency and accurate video analytics. IEEE Trans. Ind. Inform. 2019, 16, 4855–4864.

- Fathy, C.; Saleh, S.N. Integrating deep learning-based IoT and fog computing with software-defined networking for detecting weapons in video surveillance systems. Sensors 2022, 22, 5075.

- Taghavi, S.; Shi, W. EdgeMask: An edge-based privacy preserving service for video data sharing. In Proceedings of the 5th IEEE/ACM Symposium on Edge Computing, San Jose, CA, USA, 12–14 November 2020; pp. 382–387.

- Dave, M.; Rastogi, V.; Miglani, M.; Saharan, P.; Goyal, N. Smart fog-based video surveillance with privacy preservation based on blockchain. Wirel. Pers. Commun. 2022, 124, 1677–1694.

- Yuan, K.; Yao, H.; Ji, R.; Sun, X. Mining actor correlations with hierarchical concurrence parsing. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Dallas, TX, USA, 14–19 March 2010; pp. 798–801.

- Lv, J.; Wu, B.; Zhou, L.; Wang, H. StoryRoleNet: Social network construction of role relationship in video. IEEE Access 2018, 6, 958–969.

- Labatut, V.; Bost, X. Extraction and analysis of fictional character networks: A survey. ACM Comput. Surv. 2019, 52, 89.

- Gao, J.; Qing, L.; Li, L.; Cheng, Y.; Peng, Y. Multi-scale features based interpersonal relation recognition using higher-order graph neural network. Neurocomputing 2021, 456, 243–252.

- Li, L.; Qing, L.; Wang, Y.; Su, J. HF-SRGR: A new hybrid feature-driven social relation graph reasoning model. Vis. Com. 2021, 1–14.

- Teng, Y.; Song, C.; Wu, B. Toward jointly understanding social relationships and characters from videos. Appl. Intell. 2022, 52, 5633–5645.

- Lv, J.; Wu, B. Spatio-temporal attention model based on multi-view for social relation understanding. In Proceedings of the MultiMedia Modeling, 25th International Conference, Thessaloniki, Greece, 8–11 January 2019; pp. 390–401.

- Feng, L.; Bhanu, B. Understanding dynamic social grouping behaviors of pedestrians. IEEE J. Sel. Top. Signal Process. 2015, 9, 317–329.

- Lee, O.J.; Jung, J.J. Story embedding: Learning distributed representations of stories based on character networks. Artif. Intell. 2020, 281, 103235.

- Wang, W.; Du, H.Y.; Bai, L. An overlapping community detection algorithm based on centrality measurement of network node. J. Comput. Dev. 2018, 55, 1619.

- Li, Y.; He, K.; Kloster, K.; Bindel, D.; Hopcroft, J. Local spectral clustering for overlapping community detection. ACM Trans. Knowl. Discov. Data 2018, 12, 17.

- Abbe, E. Community detection and stochastic block models: Recent developments. J. Mach. Learn. Res. 2017, 18, 1–86.

- Sun, H.; He, F.; Huang, J.; Sun, Y.; Li, Y.; Wang, C.; He, L.; Sun, Z.; Jia, X. Network embedding for community detection in attributed networks. ACM Trans. Knowl. Discov. Data 2020, 14, 36.

- Su, X.; Xue, S.; Liu, F.; Wu, J. A comprehensive survey on community detection with deep learning. IEEE T. Neur. Net. Lear. 2022.

- Dong, Y.; Chawla, N.V.; Swami, A. metapath2vec: Scalable representation learning for heterogeneous networks. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 135–144.

- Cao, Y.; Jiang, T.; Chen, X.; Zhang, J. Social-aware video multicast based on device-to-device communications. IEEE Trans. Mob. Comput. 2016, 15, 1528–1539.

- Hu, C.; Bao, W.; Wang, D.; Liu, F. Dynamic adaptive DNN surgery for inference acceleration on the edge. In Proceedings of the 2019 IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 1423–1431.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Sze, V.; Chen, Y.H.; Yang, T.J. Efficient processing of deep neural networks: A tutorial and survey. Proc. IEEE 2017, 105, 2295–2329.

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. WIDER FACE: A face detection benchmark. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5525–5533.

- Zhou, L.; Wu, B.; Lv, J. SRE-Net model for automatic social relation extraction from video. In Proceedings of the 6th CCF Conference, Xi’an, China, 11–13 October 2018; pp. 442–460.

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y. Temporal Segment Networks: Towards Good Practices for Deep Action Recognition. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 20–36.

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Blondel, V.D.; Guillaume, J.L.; Lambiotte, R.; Lefebvre, E. Fast unfolding of communities in large networks. J. Stat. Mech. Theory Exp. 2008, 10, 10008.

- Vazquez, A.F.; Dapena, A.; Souto-Salorio, M.J. Calculation of the Connected Dominating Set Considering Vertex Importance Metrics. Entropy 2019, 20, 87.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Luo, S.; Zhang, Z.; Ma, Y.; Shu, W. Co-association matrix-based multi-layer fusion for community detection in attributed networks. Entropy 2019, 21, 95.

- Danon, L.; Diaz-Guilera, A.; Duch, J.; Arenas, A. Comparing community structure identification. J. Stat. Mech. Theory Exp. 2005, 9, P09008.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Findler, N.V. Short note on a heuristic search strategy in long-term memory networks. Inform. Process. Lett. 1972, 1, 191–196.

- Grover, A.; Leskovec, J. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 855–864.

- Girvan, M.; Newman, M. Community structure in social and biological networks. Proc. Natl. Acad. Sci. USA 2002, 99, 7821–7826.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).