Abstract

In essence, a network is a way of encoding the information of an underlying social management system. Social management systems are ubiquitous, rarely exist in isolation, and exhibit dynamic complexity. For complex social management systems, it is difficult to extract and represent multi-angle features of the data using single-layer non-negative matrix factorization (NMF) alone. Existing deep NMF models that integrate multi-layer information struggle to explain the results obtained after the mid-layer NMF. In this paper, NMF is extended to a multi-layer NMF structure, and the feature representation of the input data is realized through this hierarchical structure. By adding regularization constraints for each layer, the essential features of the data are obtained by characterizing the feature transformation layer by layer. Furthermore, the deep autoencoder and NMF are fused to construct MSDA-NMF, a multi-layer NMF model that integrates the deep autoencoder. On multiple data sets, including HEP-TH, OAG, HEP-PH, Polblog, Orkut and Livejournal, and in comparison with 8 popular NMF models, the proposed model improves the Micro index, the NMI value, and link prediction performance. Furthermore, the robustness of the proposed model is verified.

Keywords:

deep autoencoder; social management systems; multi-layer structure; non-negative matrix factorization; feature representation

MSC:

22-08

1. Introduction

Non-negative matrix factorization (NMF) is a relatively recent matrix factorization method [1]. Since D.D. Lee et al. proposed it as a new method for learning feature subspaces in Nature in 1999 [2], NMF has been widely used in image analysis, text clustering, data mining, speech processing and other areas. As research has deepened, applications have developed from the early analysis of single-structure features to the joint mining of multiple network structures and the layered analysis of multi-source information. In addition, abundant data indicate that models based on a single pairwise interaction may fail to capture complex dependencies between network nodes [3]. For example, the interaction of users with both a video and its enrichments produces a large amount of explicit and implicit relevance feedback, which enables some works to provide personalized and rich multimedia content [4]. Lambiotte, Rosvall et al. [5], in an article published in Nature Physics, described the shortcomings of the traditional network model and of existing idealized higher-order models, and argued that multi-layer network models play an important role in analyzing the various types of interactions in many real complex systems.

In the analysis and mining of multi-relational data, methods based on network representation learning have attracted the attention of many scholars because of their excellent performance in many practical tasks. Network representation learning [6] (also known as network embedding) is an effective network analysis method: it embeds a graph into a low-dimensional, compact space while preserving the structural information of the network. For complex multi-level data, however, a single-layer NMF performs only one decomposition. Although it can reach high accuracy even when a large proportion of the data is corrupted, it is prone to local minima and cannot express the characteristics of the data from multiple angles, so the decomposition results are not always satisfactory [7].

To effectively represent high-order and multi-layer complex data, traditional vector-based machine learning and deep learning algorithms can operate directly on the low-dimensional representations to complete network analysis tasks efficiently, which greatly enriches the choice of algorithms and models for network mining tasks [8,9]. Therefore, how to use an effective deep network structure for the hierarchical feature extraction of complex data, and how to combine the advantages of non-negative matrix factorization and deep networks, have important practical implications for the collaborative discovery of knowledge from multiple information sources.

Therefore, in this paper, NMF is extended to a multi-layer NMF structure, and the feature representation of the input data is realized through this hierarchical structure. By adding regularization constraints for each layer, the essential features of the data are obtained by characterizing the feature transformation layer by layer, and a multi-layer NMF model, MSDA-NMF, that fuses a deep autoencoder with NMF is constructed. The proposed method can effectively improve the detection accuracy and prediction accuracy of social groups in complex social management systems. The main contributions can be summarized as follows:

1. The model integrates the multi-layer structural features of the deep autoencoder.

2. The model introduces a multi-layer NMF structure, which can effectively use a complex hierarchical structure to achieve feature representation of the input data.

3. Evaluation on multiple data sets shows that the proposed method outperforms existing multi-layer NMF methods.

The rest of the paper is organized as follows. Section 2 discusses related work. Section 3 introduces the proposed method through the model description and model optimization. Section 4 presents the experimental results, which are discussed through a parameter sensitivity analysis, a multiclassification experiment, a node clustering experiment, and a link prediction experiment. Finally, Section 5 presents the conclusion.

2. Materials and Methods

2.1. Study on Non-Negative Matrix Factorization

Non-negative matrix factorization is a popular machine learning technique. In essence, an NMF algorithm transforms high-dimensional data into low-dimensional data through a linear combination of variables. Because the factors contain only non-negative values, the interpretability of the model improves dramatically, and its development has attracted widespread attention. In 2001, Lee and Seung put forward the multiplicative iteration algorithm [10], and since then NMF has been widely used in areas such as NLP, CV and recommendation systems. However, the feature matrix and coefficient matrix produced by the decomposition are not sparse enough, so the extracted features are not distinct enough. Researchers have therefore begun to study the sparsity of the feature matrix and coefficient matrix, which also reduces the redundancy of the data in these two matrices. Many methods have been proposed. For example, Hoyer et al. [11] established the objective function from the Euclidean distance between the original data and its reconstruction, added an L1 penalty term, and optimized the feature matrix and coefficient matrix iteratively by gradient descent. Ren et al. [12] first used given reference images to create non-orthogonal, non-negative basis components and then used these components to model the target; a scaling factor is then applied to the constructed model to compensate for the contribution from the disk. Jia et al. [13] proposed a new semi-supervised model that simultaneously learns a similarity matrix with supervised information and generates clustering results. Huang et al. [14] proposed a robust multi-feature collective non-negative matrix factorization (RMCNMF) model to handle noise and sample variation in ECG biometrics.
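To make the multiplicative iteration idea concrete, the following is a minimal sketch of single-layer NMF with multiplicative updates for the Frobenius-norm objective. The function name `nmf_multiplicative`, the random initialization, the fixed iteration count and the small constant `eps` are illustrative assumptions, not details taken from [10].

```python
import numpy as np

def nmf_multiplicative(X, rank, n_iter=200, eps=1e-10, seed=0):
    """Approximate a non-negative X (m x n) as W @ H with W, H >= 0.

    Uses multiplicative updates for ||X - W H||_F^2; `eps` only guards
    against division by zero.
    """
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, rank)) + eps   # feature (basis) matrix
    H = rng.random((rank, n)) + eps   # coefficient matrix
    errors = []
    for _ in range(n_iter):
        # Each factor is rescaled element-wise, so non-negativity is preserved.
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
        errors.append(np.linalg.norm(X - W @ H, "fro") ** 2)
    return W, H, errors

# Toy usage: a random non-negative matrix of rank 4.
rng = np.random.default_rng(1)
X = rng.random((20, 4)) @ rng.random((4, 15))
W, H, errors = nmf_multiplicative(X, rank=4)
```

Because the updates only multiply by non-negative ratios, no projection step is needed to keep the factors non-negative, which is one reason this scheme is a common baseline.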

In summary, many studies approximate complex functions and learn the essential features of a data set from a small number of samples through distributed representations of the input data. Therefore, more and more researchers have focused on deep non-negative matrix factorization based on deep learning.

2.2. Deep Non-Negative Matrix Decomposition Analysis

The development of deep learning has gone through a long process. SVM, Boosting, Logistic Regression (LR) and other methods were proposed successively. The structures of these methods either have no hidden layer or contain only one, so they are collectively referred to as shallow models. In 2006, the Deep Belief Network (DBN) model proposed by Hinton et al. [15] made it possible to construct deep models for learning. Subsequently, deep models such as Deep Boltzmann Machines (DBM) [16] and Fuzzy Deep Belief Networks (FDBN) [17] were proposed. These deep networks with multiple hidden layers have excellent feature learning abilities, and the learned features describe the data more essentially, which is favourable for visualization and classification. Ye et al. [18] proposed a new model for community detection based on deep non-negative matrix factorization, the deep autoencoder-like NMF (DANMF). DANMF adopts an architecture of hierarchical mappings between the original network and the final community assignment and implicitly learns, in its middle layers, the hidden attributes of the original network from low level to high level. De et al. [19] review MF models based on deep learning according to their deep decomposition architecture and introduce their algorithms and applications.

Because of the excellent data learning performance of deep networks, some scholars have in recent years proposed multi-layer non-negative matrix factorization algorithms based on NMF and related algorithms [20]. However, the above methods, including deep NMF models that integrate multi-layer information, struggle to explain the results of the middle-layer NMF.

2.3. Multilayer Non-Negative Matrix Factorization

Unlike single-layer learning, multi-layer NMF reveals more intuitive feature hierarchies through the relationships between the features of each layer, and the layered structure learns meaningful and helpful features. The first model to extend CLRMA to multiple levels was the multi-layer NMF proposed by Cichocki et al. in 2006 [21,22]. At the first level, a low-rank factorization of X is computed. At each subsequent level, the factor obtained at the previous level is factorized again, until the desired number of levels is reached. In 2013, a hierarchical non-negative matrix factorization algorithm was proposed in [23] for hierarchical data representation. This multi-layer NMF obtains a multi-layer structure through multiple iterations of a nonsmooth NMF algorithm. Rajabi et al. [24] proposed using multi-layer NMF (MLNMF) for hyperspectral unmixing, modelling the spectral signature matrix as a product of sparse matrices. Chen et al. [25] proposed a constrained multi-layer NMF method for hyperspectral data processing. In this approach, two constraints are imposed on the objective function at each level: sparsity of the abundance matrix and minimum volume of the spectral matrix. The hierarchical processing decomposes the abundance matrix into a series of matrices, making the sparsity feature more evident and meaningful. Yuan et al. [26] introduced Hoyer projectors to guide the iterative direction of the structure during decomposition and proposed HP-MLNMF, a multi-layer non-negative matrix factorization framework based on Hoyer projection, which completely reconstructed and enhanced the earlier methods.

However, among the above methods, few studies address the description of the middle layers of a multi-layer network or fusion models built on NMF.

2.4. Encoder-Based Model

The basic idea of encoder-based models is to map the context matrix to an embedding matrix and then use the embedding matrix to reconstruct the original context matrix. Deep neural graph representation (DNGR) [27], Structural Deep Network Embedding (SDNE) [28] and MVC-DNE [29] use deep neural networks to incorporate graph structure directly into the encoder algorithm. The basic idea behind these methods is to use autoencoders to compress information about the local neighbourhood of each node. The key to SDNE’s ability to preserve node neighbourhood characteristics is its deep autoencoder with multiple nonlinear layers. The model preserves the first- and second-order proximities of nodes by using Laplacian features in the middle layers of the encoder and a modified autoencoder reconstruction error. In addition to the neighbourhood autoencoder methods mentioned above, there are also encoder methods that iteratively aggregate neighbourhood information to generate node embeddings [30], such as the GraphSAGE algorithm [31]. These encoders rely on node-local neighbourhood characteristics rather than the whole graph, and thereby overcome significant limitations of shallow embedding and autoencoder methods.

To sum up, most research on encoder-based models is based on local graphs, and little of it addresses multi-layer global graphs.

3. Proposed Model

3.1. Model Description

In classical NMF-based methods, there is only one layer of mapping between the original network and the embedded feature representation. The organization patterns of real-world networks are complex and diverse, and the mapping between the original network and the community membership space is likely to contain not only complex hierarchical and structural information but also low-level hidden features, which cannot be extracted using classical shallow NMF-based methods. Deep autoencoders, in turn, are an excellent scheme for bridging the gap between low-level and high-level abstractions of raw data [32]. Inspired by deep autoencoders, we assume that better structural features between nodes (i.e., a more accurate structural feature matrix V) can be obtained by further decomposing the mapping V and extracting features at deeper levels, from a lower to a higher level.

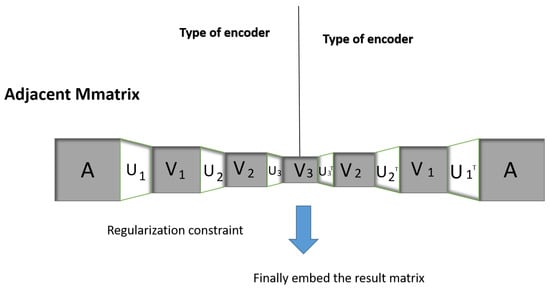

Based on the above discussion, this paper proposes a new model, the multi-layer structure NMF model fused with a deep autoencoder (MSDA-NMF). The model introduces a multi-layer NMF structure and combines a deep autoencoder with NMF. Figure 1 illustrates the encoder component and the decoder component that constitute the deep structure of MSDA-NMF. Similar to a deep autoencoder, the encoder component transforms the original network into a low-dimensional hidden feature matrix captured in the middle layers. Each intermediate layer explains the similarity between nodes at a different order. The decoder is symmetric with the encoder: it reconstructs the original network from the final embedded feature matrix through the hierarchical mappings learned in the encoder component. Unlike traditional NMF-based loss functions, which consider only the decoder component, MSDA-NMF integrates the encoder and decoder components into a unified loss function. In this way, the autoencoder-like NMF can learn the relationships between cross-layer features and intuitively capture the extraction process from first-order to high-order similarity of the network structure in complex data. Using this hierarchical feature extraction algorithm, we study the optimal deep autoencoder-like NMF structure suitable for classification tasks.

Figure 1.

Framework diagram of the MSDA-NMF model.

To make the notation easier to follow, the terms and symbols used in this paper are unified; see Table 1 for details.

Table 1.

Symbol Description.

3.1.1. Model Solution

NMF directly learns a layer of auxiliary matrix and basis matrix . However, real-world networks are often complex and diverse organizational patterns. Therefore, the mapping between the original network and the community member space will probably contain complicated hierarchical and structural information with implicit low-level hidden attributes. It is well known that deep learning can bridge the gap between low-level and high-level abstractions of raw data. In this sense, we propose to factor the mapping further, hoping to add an extra layer of abstraction for each layer of embedding results and extract the similarity between nodes from low order to high order. To be specific, the adjacency matrix A is decomposed into the product of non-negative matrices, as follows:

Thereinto , , request .

A hierarchy in Formula (1) that allows p-level abstract understanding of the original network can look similar to the following Sample decomposition:

We also retain the non-negative constraint . is the embedded characteristic matrix of the i layer, and is the auxiliary matrix of the i layer. By doing so, each layer of abstract captures node similarity of different orders, from first-order proximity to structural identification and finally to community-level similarity. To learn the embedding matrix, we derive the following objective function. This paper uses a three-layer NMF structure, so :

After the optimization of Formula (3), we can obtain the learning result of a network representation of each layer by as [33].
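For orientation, the hierarchy described in this subsection can be written out in the notation of DANMF [18]. The symbols $U_i$ (auxiliary matrix of layer $i$), $V_i$ (embedded feature matrix of layer $i$), the layer sizes $r_i$ and the choice $p = 3$ are assumed here for illustration and may differ from the symbols in Table 1; any regularization terms of the actual objective are omitted from this sketch.

```latex
\begin{aligned}
A &\approx U_1 U_2 \cdots U_p V_p,
  \qquad U_i \in \mathbb{R}_{\ge 0}^{\,r_{i-1}\times r_i},\quad
  V_p \in \mathbb{R}_{\ge 0}^{\,r_p \times n},\quad
  n = r_0 \ge r_1 \ge \cdots \ge r_p, \\[4pt]
V_{p-1} &\approx U_p V_p,\quad \ldots,\quad
V_1 \approx U_2 V_2,\quad
A \approx U_1 V_1, \\[4pt]
\min_{U_i \ge 0,\; V_3 \ge 0}\;
  & \bigl\lVert A - U_1 U_2 U_3 V_3 \bigr\rVert_F^2
  \qquad (p = 3).
\end{aligned}
```

Read from bottom to top, the second line shows how each $V_i$ is itself factorized at the next layer, which is what allows each layer to be interpreted as a progressively more abstract view of the network.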

3.1.2. Fusion Depth-like Autoencoder Matrix Decomposition

It can be seen that Formula (1) is a reconstruction of the original network corresponding to the decoder part of the autoencoder. To improve the representation learning capability of the auto encoder, the encoder components must be integrated into the community detection model based on NMF to form the NMF model similar to the autoencoder. The rationality of the NMF model of class automatic coders is quite simple. For the ideal basis matrix , it is supposed to be able to reconstruct the primal network by reconstructing the mapping with a small error; meanwhile, it should be able to precisely project the primal network to the community membership space by the help of the mapping , that is, . Integrating the encoder component and the decoder component with a unified loss function allows them to know each other during the learning process, from which we can obtain the ideal community members of the node. To accomplish this vision in the depth model, the objective function of the encoder is as follows.

The current deep autoencoder-like matrix factorization model cannot explain what kind of network representation is obtained at the intermediate levels. To enhance the interpretability of the model, we add regularization terms for the different levels. In [34], the second-order similarity matrix of LINE is expressed in the form:

is the logarithm of all the matrix elements, is the degree matrix of graph G, , and b is the negative sampling parameter. is the capacity of the graph. , .
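As an illustration of how such a similarity matrix can be computed from the adjacency matrix, the sketch below assumes the closed form that NetMF [34] gives for LINE, log(vol(G) D^{-1} A D^{-1}) - log b, where vol(G) is the sum of all entries of A. It additionally applies the element-wise truncation max(x, 1) before the logarithm, as NetMF does in practice, so that the result is finite and non-negative. The function name and the treatment of isolated nodes are illustrative assumptions.

```python
import numpy as np

def line_second_order_matrix(A, b=1.0):
    """Truncated log(vol(G) * D^-1 A D^-1 / b) for an adjacency matrix A."""
    A = np.asarray(A, dtype=float)
    degrees = A.sum(axis=1)            # diagonal of the degree matrix D
    vol = A.sum()                      # volume of the graph
    d_inv = np.zeros_like(degrees)
    nonzero = degrees > 0
    d_inv[nonzero] = 1.0 / degrees[nonzero]   # isolated nodes keep 0 (assumption)
    M = (vol / b) * (d_inv[:, None] * A * d_inv[None, :])
    # Truncation max(M, 1) makes the logarithm finite and non-negative.
    return np.log(np.maximum(M, 1.0))

# Toy usage: a triangle graph, where every degree is 2 and vol(G) = 6.
A = np.array([[0, 1, 1],
              [1, 0, 1],
              [1, 1, 0]])
M = line_second_order_matrix(A, b=1.0)
```

For the triangle, each off-diagonal entry equals log(6 * 1/2 * 1/2) = log(1.5), and the diagonal is 0 after truncation.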

The final objective function is:

In this way, the first-order similarity of the network structure can be obtained after first-layer non-negative matrix decompositions, the second-order similarity of the network structure can be obtained after second-layer non-negative matrix decompositions, and higher-order features can be obtained after third-layer non-negative matrix decompositions.
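The unified encoder and decoder loss described in this subsection can be sketched as follows, assuming the DANMF-style formulation of [18]: the decoder term measures how well the product of the layer mappings reconstructs A, and the encoder term measures how well the transposed mappings project A onto the final embedding. The names `Us`, `V` and `autoencoder_like_loss` are hypothetical, and the per-layer regularization terms of the final objective are not included.

```python
import numpy as np
from functools import reduce

def autoencoder_like_loss(A, Us, V):
    """Return (total, decoder, encoder) losses for A ~ U_1 ... U_p V."""
    psi = reduce(np.matmul, Us)                           # composite mapping U_1 U_2 ... U_p
    decoder = np.linalg.norm(A - psi @ V, "fro") ** 2     # reconstruct A from V
    encoder = np.linalg.norm(V - psi.T @ A, "fro") ** 2   # project A onto V
    return decoder + encoder, decoder, encoder

# Toy usage with three layers of sizes 6 -> 4 -> 3 -> 2.
rng = np.random.default_rng(0)
A = rng.random((6, 6))
A = (A + A.T) / 2
Us = [rng.random((6, 4)), rng.random((4, 3)), rng.random((3, 2))]
V = rng.random((2, 6))
total, dec, enc = autoencoder_like_loss(A, Us, V)
```

Minimizing both terms together is what lets the two components inform each other during learning, rather than fitting the decoder alone as in classical NMF.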

3.2. Model Optimization

Optimization problems are highly related to scientific research [35], and in this paper in order to speed up the model’s approximation of the factor matrix, we pretrain each layer to obtain the initial approximation and of the factor matrix. The training time of the model can be significantly reduced by the pretraining process. The effectiveness of pretraining has been proven in deep autoencoder networks. For pretraining, we first decompose the adjacency matrix by minimizing . Then, we decompose the matrix into by minimizing . The third layer is similar. Then, fine-tuning the objective function (6) for each layer by alternately minimizing the objective function proposed in the equation until the update of the objective function is very small, that is, the convergence ends. The updated algorithm is shown in the objective function of Algorithm 1.
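The layer-wise pretraining described above can be sketched as a chain of single-layer factorizations, where the embedding produced by one layer becomes the input of the next. This is a minimal sketch under assumptions: a plain multiplicative-update NMF stands in for whatever shallow solver is actually used, and the names `nmf`, `pretrain_layers` and `layer_dims` are hypothetical.

```python
import numpy as np

def nmf(X, rank, n_iter=300, eps=1e-10, seed=0):
    """Single-layer NMF X ~ U @ V via multiplicative updates."""
    rng = np.random.default_rng(seed)
    U = rng.random((X.shape[0], rank)) + eps
    V = rng.random((rank, X.shape[1])) + eps
    for _ in range(n_iter):
        V *= (U.T @ X) / (U.T @ U @ V + eps)
        U *= (X @ V.T) / (U @ V @ V.T + eps)
    return U, V

def pretrain_layers(A, layer_dims, n_iter=300):
    """Factor A ~ U_1 V_1, then V_1 ~ U_2 V_2, and so on, layer by layer."""
    Us, V = [], np.asarray(A, dtype=float)
    for i, r in enumerate(layer_dims):
        U, V = nmf(V, r, n_iter=n_iter, seed=i)   # V of this layer feeds the next
        Us.append(U)
    return Us, V

# Toy usage: a random undirected graph with 30 nodes and three layers.
rng = np.random.default_rng(42)
B = (rng.random((30, 30)) < 0.2).astype(float)
A = np.triu(B, 1)
A = A + A.T
Us, V = pretrain_layers(A, layer_dims=[16, 8, 4])
```

The resulting factors would then serve only as the starting point for the alternating fine-tuning step; they are not the final solution.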

Algorithm 1: Optimization of the MSDA-NMF model.

The objective function (6) requires a parameter matrix consisting of , and their update rules are introduced in detail below. So here we have .

3.2.1. Subproblems

When is updated, the matrices and are fixed to obtain the objective function is Equation (6).

By introducing Lagrangian multiplication matrix , the following can be obtained:

Let be obtained:

Initialize and update according to the following rules:

3.2.2. Subproblem

When updating , other variables are fixed to receive the following objective function:

Similar to the update matrix , Lagrangian multiplication matrix is introduced to obtain:

Identical to the optimization calculation of , we define the update rules of as follows:

3.2.3. Subproblem

When is updated, other variables are fixed and the following objective function is obtained:

Referring to the optimization algorithm, we define the update rules of as follows:

3.2.4. Subproblem

When updating , fix other variables and obtain the following objective function:

Refer to the optimization algorithm, we define the update rules of as follows:

3.2.5. W Subproblem

When is updated, other variables are fixed and the following objective function is obtained:

Referring to the optimization procedure above, we define the update rule of W as follows:

3.2.6. Model Complexity Analysis

The computational complexity of the five updated formulas in algorithm Algorithm 1 is respectively , , , , . Since , , can be considered input constants, and , the computational complexity is . Most real complex networks are sparse, so only nonzero values are computed in the matrix multiplication of real complex networks. The calculation is simplified to ; here, we use E to represent the number of edges in the complex network. In addition, the model, the matrices , , , are parametric, the space complexity is denoted by . Since , , and are much smaller than n, the calculation method of space complexity is simplified as , but the complexity increases as the embedded dimensions increase.

4. Results and Discussion

In this paper, the MSDA-NMF model is constructed using a complex hierarchical structure to realize the feature representation of the input data. It is compared with 8 methods on 8 different data sets. The experiments are performed on a computer running Windows 7 with a 3.10 GHz CPU and 32.00 GB of RAM.

4.1. Experimental Objects

The parameters of the MSDA-NMF model in this paper include three hyperparameters and , different layer dimensions , and convergence coefficient . In this complex network experiment, we first defined and or , . Based on these parameters, we obtain the optimal parameters of the model. Here, the number of clusters, K, is a variable that changes according to the tags in the data set.

4.2. Data Sets and Comparison Methods

This section provides a brief overview of the open data set and advanced complex network representation learning models used in various fields.

4.2.1. Data Set

We complete the task of multi-label node classification on four popular networks. To better verify the robustness of our model in clustering and link prediction, we additionally use three data sets with ground truth. The statistical characteristics of the data sets are shown in Table 2:

Table 2.

Data set network structure information.

- GR-QC, Hep-TH, Hep-PH [36]: These networks are extracted from arXiv and cover three fields (general relativity and quantum cosmology, high-energy physics theory, and high-energy physics phenomenology). In the collaboration network, the vertices represent authors, and an edge connects two authors who have co-authored a scientific paper. The GR-QC data set is the smallest graph, with 19,309 nodes (5855 authors, 13,454 articles) and 26,169 edges. The HEP-TH data set covers papers from January 1993 to April 2003 (124 months). It begins at the inception of arXiv and therefore essentially represents the entire history of the HEP-TH section; its citation graph covers all citations within a data set of N = 29,555 nodes and E = 352,807 edges. If a paper i cites a paper j, the graph contains a directed edge from i to j. If a paper cites, or is cited by, a paper outside the data set, the graph contains no information about this. The HEP-PH data set is the second citation graph, taken from the HEP-PH section of arXiv, and covers all citations within a data set of N = 30,501 nodes and E = 347,268 edges.

- Open Academic Graph (OAG) [37]: This undirected co-authorship network is formed from a publicly available academic graph indexed by Microsoft Academic and the AMiner website, and includes 67,768,244 authors and 895,368,962 collaboration edges. The vertex labels represent the top-level research fields of each author. The network contains 19 fields (labels), and because authors can publish in different fields, the corresponding vertices can have multiple labels.

- Polblog [38]: Polblog is a network of American political blogs, in which the nodes are blogs. There are 1335 blogs, including 676 liberal blogs and 659 conservative blogs. An edge connects two blogs if there is a hyperlink between them. The labels refer to the different political categories.

- Orkut [39]: Orkut is an online social network in which the nodes are users and the connections between nodes follow the users’ friend relationships. Orkut has multiple prominent communities, including student communities, events, interest-based groups and varsity teams.

- Livejournal [39]: The nodes in the Livejournal data set represent bloggers. If two people are friends, there is an edge between them. Bloggers are divided into groups based on their friendships, and the groups are labelled culture, entertainment, expression, fans, life/style, life/support, games, sports, student life and technology.

- Wikipedia [40]: This is a word co-occurrence network built from Wikipedia. The class labels of this network are POS tags inferred by the Stanford POS-Tagger.

4.2.2. Control Methods

We compared the proposed MSDA-NMF model, which is based on a three-layer NMF, with the eight state-of-the-art network embedding methods listed below.

- M-NMF [41]: M-NMF combines the community structure and the 2-step proximity of nodes within the NMF framework to learn node embeddings. The node representations are kept consistent with the community structure of the network, and an auxiliary community representation matrix links the local characteristics (first- and second-order similarity) with the community structure features, so that they are jointly optimized in a single objective. The embedding dimension in the experiment is set to 128, and the other parameters are set according to the original paper.

- NetMF [34]: NetMF proves that the models with negative sampling (DeepWalk, PTE and LINE) can be regarded as closed-form matrix factorizations, and demonstrates its superiority over DeepWalk and LINE in conventional network analysis and mining tasks.

- AROPE [42]: Within a singular value decomposition framework, this method shifts the embedding vectors across arbitrary orders and learns the higher-order proximity of nodes, thereby further revealing their internal relations.

- DeepWalk [43]: DeepWalk generates random walks starting from each node and treats these walks as sentences in a language model. It then learns the embedding vectors using the Skip-Gram model. In the experiment, the parameters are set according to the original paper.

- Node2vec [44]: This method extends DeepWalk with a biased random walk, introducing two bias parameters p and q to control the walk. All other parameters are the default settings of the algorithm.

- LINE [45]: LINE learns node embeddings by defining two loss functions that preserve first-order and second-order proximity, respectively. The default parameter settings are used in this article, except that the negative ratio is 5.

- SDNE [28]: SDNE uses a deep autoencoder with a semi-supervised architecture to optimize the first- and second-order similarity of nodes, with an explicit objective function that makes clear how the network structure is retained. In the experiment, the parameters are set according to the original paper.

- GAE [30]: The GAE model has advantages in link prediction tasks on citation networks. The algorithm is based on a variational autoencoder and uses the same convolution structure as GCN.

4.2.3. Evaluation Index

The robustness of the proposed model is verified by the following evaluation indicators:

NMI [46]: Normalized mutual information (NMI) measures the agreement between the communities produced by an algorithm and the ground-truth communities, and is an important measure in community discovery. Its value lies between 0 and 1; the higher the value, the more accurate the detection result. When the value is 1, the result is fully consistent with the labelled communities. The formula is as follows:
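As a concrete illustration of the index, the sketch below computes NMI from two label vectors using one common normalization, 2 I(X; Y) / (H(X) + H(Y)). Several normalizations are in use, so this particular form, like the function name `nmi`, is an assumption rather than necessarily the exact definition used in [46].

```python
import numpy as np

def nmi(labels_true, labels_pred):
    """Normalized mutual information, 2*I(X;Y) / (H(X) + H(Y))."""
    t = np.asarray(labels_true).ravel()
    p = np.asarray(labels_pred).ravel()
    n = t.size
    _, ti = np.unique(t, return_inverse=True)
    _, pi = np.unique(p, return_inverse=True)
    # Contingency table: counts of nodes in (true community, found community).
    C = np.zeros((ti.max() + 1, pi.max() + 1))
    np.add.at(C, (ti, pi), 1)
    P = C / n
    pt, pp = P.sum(axis=1), P.sum(axis=0)      # marginal distributions
    nz = P > 0
    mi = np.sum(P[nz] * np.log(P[nz] / np.outer(pt, pp)[nz]))
    ht = -np.sum(pt * np.log(pt))
    hp = -np.sum(pp * np.log(pp))
    if ht + hp == 0:                           # both partitions are a single community
        return 1.0
    return 2.0 * mi / (ht + hp)

same = nmi([0, 0, 1, 1], [1, 1, 0, 0])         # same partition, labels renamed
independent = nmi([0, 0, 1, 1], [0, 1, 0, 1])  # partitions share no information
```

The first call shows that NMI depends only on the partition and not on the label names; the second shows the opposite extreme, where the two partitions are statistically independent.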

AUC [47]: AUC is defined as the area under the receiver operating characteristic (ROC) curve, which was originally used to evaluate the performance of a classifier. Specifically, given a ranking of the unobserved edges, the AUC value is the probability that a randomly chosen missing edge (i.e., an edge in the probe set E^P) receives a higher score than a randomly chosen non-existent edge (i.e., an edge in U - E). In practice, considering the time complexity, we usually compute a score for each unobserved edge rather than build the sorted list. To estimate the AUC, at each step we randomly select a missing edge and a non-existent edge and compare their scores. If, in n independent comparisons, the score of the missing edge is higher n′ times and the two scores are equal n″ times, then AUC can be defined as:
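The sampling procedure described above can be sketched as follows. The estimator assumed here is the standard one for link prediction, (number of comparisons won + 0.5 * number of ties) / n; the function name `sampled_auc` and its arguments are hypothetical, and the scores are assumed to have been computed beforehand by the model under evaluation.

```python
import numpy as np

def sampled_auc(missing_scores, nonexistent_scores, n=10000, seed=0):
    """Estimate AUC from n random (missing edge, non-existent edge) comparisons."""
    rng = np.random.default_rng(seed)
    missing = rng.choice(np.asarray(missing_scores, dtype=float), size=n)
    nonexistent = rng.choice(np.asarray(nonexistent_scores, dtype=float), size=n)
    n_higher = np.count_nonzero(missing > nonexistent)   # missing edge scored higher
    n_equal = np.count_nonzero(missing == nonexistent)   # ties count as half
    return (n_higher + 0.5 * n_equal) / n

perfect = sampled_auc([0.9, 0.8], [0.1, 0.2])   # every missing edge outranks every non-edge
chance = sampled_auc([0.5], [0.5])              # all comparisons are ties
```

A value of 0.5 corresponds to random guessing, so the amount by which the estimate exceeds 0.5 indicates how much better than chance the predictor ranks missing edges.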

4.3. Parameter Sensitivity Analysis

This section analyzes the effects of parameters and of the MSDA-NMF model on the clustering performance. These effects are on the real network, where are the i-level embedding dimensions. To determine the specific parameters of the model, this paper first fixed all the other parameters except the two changing numbers based on the OAG data set. Second, the effect of each change is verified by adjusting the parameters of the two changes. This paper takes the OAG data set as an example to investigate influences of different parameters. The effects of each parameter are explored through varying parameters and simultaneously keeping the others fixed. For example, we observe the effect of , by changing , and fixing with , , and so on. Specifically, we change from 100, 200, 300, 400, 500, and ask for .

Figure 2 shows the influence of dimensions and embedded in different layers on the effects of the three data clustering classes. The lighter the color of the point in the figure is, the larger the NMI value under the point coordinates. The NMI value of the yellow point is the maximum, and the NMI value of the purple point is the minimum. The size of a point also indicates the NMI value of the point. The larger the point is, the greater the NMI value of the point.

Figure 2.

Analysis of the embedding dimension relationship of different layers.

Figure 3a–c shows the performance of the NMI as these parameters change.

Figure 3.

Relationship between and cluster evaluation index NMI. (a) Relationship between , and cluster evaluation index NMI; (b) Relationship between , and cluster evaluation index NMI; (c) Relationship between , and cluster evaluation index NMI.

From the figures, we can see that:

1. In Figure 2, when is approximately 200, is approximately 170 and is 150, NMI is the maximum. If is controlled and remains unchanged, NMI decreases with the increase of , and the dimension of is not lower, but the NMI value is higher.

2. In Figure 3a:

(1) When is less than 30, and is greater than 80 and less than 100, the model has the worst value of robustness.

(2) When is greater than 40 and less than 80, the NMI value tends to be stable with an increase in to less than 50.

(3) When is greater than 50 and is less than 30, the model can obtain better clustering performance at this time.

3. In Figure 3b, when y is in , NMI does not change much, indicating that when is in , and the clustering performance is relatively stable with increasing .

4. In Figure 3c, we notice that in a particular range, when both and increase, NMI tends to be stable, while when and are in the range , NMI reaches its maximum value.

As for the relationship between and the cluster evaluation index NMI, experimental results show that when , and , at this point, more effective network structure features can be obtained, even in low-dimensional space. Therefore, we set the data in the following experiment when , and .

4.4. Multiclassification Experiment

Table 3 illustrates the experimental results.

Table 3.

Performance evaluation of multi-label classification.

In the HEP-TH dataset, the MSDA-NMF () model has a micro result of and a macro result of , just slightly below the optimal values of and for all the comparative models, respectively, but the results of this model are better than all the other comparative models except for the optimal value. The MSDA-NMF () model has a micro result of , better than all comparative models and higher than the AROPE model, which has the highest micro result among them. In the macro comparison, the macro result of this model of is only slightly lower than the optimal value of of the GAE model, but still performs better in the optimal value than the other comparative models.

We also compare the multiclassification results of the MSDA-NMF () and the MSDA-NMF () models. In the HEP-TH data set, the results of the MSDA-NMF () model are lower than those of the three-layer model, which suggests that adding intermediate layers makes MSDA-NMF more effective in the classification experiments. In the other citation network data sets, OAG and HEP-PH, the three-layer model proposed in this paper outperforms the other models.

In addition, the micro and macro results of the MSDA-NMF () model proposed in this paper also outperform the other models in the HEP-PH data set, and its macro result is higher than those of the comparison models in the OAG data set. In the HEP-TH, OAG and HEP-PH data sets, the micro-F1 and macro-F1 multi-classification performance of MSDA-NMF is better than that of NetMF, GAE and other popular feature models. This supports the effectiveness of our network-embedding model.
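To make the difference between the two indicators concrete, the sketch below computes micro-F1 and macro-F1 for a multi-label prediction. It assumes the standard definitions (micro-F1 pools true positives, false positives and false negatives over all labels; macro-F1 averages the per-label F1 scores); the function name and the toy label sets are hypothetical and are not taken from the experiments.

```python
def micro_macro_f1(true_sets, pred_sets, labels):
    """Return (micro-F1, macro-F1) for multi-label predictions given as sets."""
    tp = {c: 0 for c in labels}
    fp = {c: 0 for c in labels}
    fn = {c: 0 for c in labels}
    for truth, pred in zip(true_sets, pred_sets):
        for c in labels:
            if c in pred and c in truth:
                tp[c] += 1
            elif c in pred:
                fp[c] += 1
            elif c in truth:
                fn[c] += 1

    def f1(tp_c, fp_c, fn_c):
        denom = 2 * tp_c + fp_c + fn_c
        return 2 * tp_c / denom if denom else 0.0

    # Micro: pool the counts over all labels, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per label, then take the unweighted mean.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    return micro, macro


# Toy example: the rare label "b" is never predicted.
truth = [{"a"}, {"a"}, {"a"}, {"b"}]
pred = [{"a"}, {"a"}, {"a"}, {"a"}]
micro, macro = micro_macro_f1(truth, pred, labels=["a", "b"])
```

In this toy case micro-F1 is 0.75 while macro-F1 is 3/7, because macro-F1 weights the missed rare label as heavily as the frequent one. This is why the two indicators can rank models differently.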

Notably, the AROPE model performs better than the MSDA-NMF method on the micro-F1 and macro-F1 metrics in our comparison based on the Wikipedia data set. This is probably because Wikipedia is a dense word co-occurrence network, so a relatively low order is sufficient to characterize Wikipedia's network structure. Therefore, the MSDA-NMF method, which is based only on matrix decomposition, performs comparatively poorly in the classification task on such data sets.

4.5. Node Clustering Experiment

In this section, node clustering performance is assessed with normalized mutual information (NMI), a typical metric. We use real data sets (Polblog, Livejournal and Orkut) to assess the clustering performance of the model. NMI ranges from 0 to 1, with a larger value indicating better clustering performance. To verify the clustering effect of the model, the standard K-means algorithm is used. Because the initial value has a significant impact on the clustering result, the clustering is repeated 50 times and the average value is taken as the result.

Figure 4 shows the node clustering performance in terms of NMI. It can be seen from the figure that:
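The evaluation protocol above can be sketched as follows. The sketch assumes the common normalization NMI = 2 I(A; B) / (H(A) + H(B)); it does not implement K-means itself, and the cluster assignments in `runs` are hypothetical stand-ins for the labelings produced by repeated K-means runs.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (natural log) of a labeling."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def nmi(true_labels, pred_labels):
    """Normalized mutual information between two labelings, in [0, 1]."""
    n = len(true_labels)
    joint = Counter(zip(true_labels, pred_labels))
    count_t = Counter(true_labels)
    count_p = Counter(pred_labels)
    mi = 0.0
    for (t, p), c in joint.items():
        mi += (c / n) * math.log(c * n / (count_t[t] * count_p[p]))
    h_sum = entropy(true_labels) + entropy(pred_labels)
    # If both labelings put every node in a single cluster, treat them as identical.
    return 2.0 * mi / h_sum if h_sum > 0 else 1.0


def average_nmi(true_labels, runs):
    """Average NMI over the labelings obtained from repeated clustering runs."""
    return sum(nmi(true_labels, run) for run in runs) / len(runs)


# Hypothetical ground truth and two clustering runs, for illustration only.
truth = [0, 0, 1, 1]
runs = [[1, 1, 0, 0],   # perfect up to a relabeling of clusters
        [0, 1, 0, 1]]   # independent of the ground truth
mean_nmi = average_nmi(truth, runs)
```

NMI is invariant to how cluster ids are numbered, so the first run scores 1 even though its ids are swapped. The second run scores 0, giving an average of 0.5.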

Figure 4.

Evaluation of node clustering performance based on NMI.

1. In the Polblog and Orkut data sets, MSDA-NMF () and MSDA-NMF () obtained better NMI values than all comparison models. In particular, in the Polblog data set, the MSDA-NMF () and MSDA-NMF () models have a clear advantage in NMI value over DeepWalk, the best of the comparison models. This is because our method integrates lower-order structural features and multi-layer features to capture diverse and comprehensive structural features of the network, and can obtain better NMI values in data sets with a lower number of categories.

2. The model in this paper also achieves better results in the Orkut data set, which has fewer categories. Notably, in the Livejournal data set, which has a relatively high number of categories, the NMI value obtained by this model is slightly lower than that obtained by the GAE model. The main reason for this is that GAE has the same convolution structure as GCN and is based on a variational autoencoder, so GAE has better robustness in link prediction for citation networks.

3. SDNE and LINE only retain the proximity between network nodes and cannot effectively preserve the community structure. The random walk-based DeepWalk and Node2vec can better capture the second-order and higher-order similarity. Although AROPE can capture the similarity of different nodes and more global structure information as the length increases, the omission of community structure makes the algorithm ignore module information. For sparse networks and networks without a prominent community structure, however, the modularity of the NMF is constrained by the similarity of nodes to each other. Therefore, its performance is relatively low.

This paper also compares the performance of the MSDA-NMF model with three-layer NMF and the MSDA-NMF model with two-layer NMF in the node clustering experiments. The results show that the NMI values obtained by the MSDA-NMF () model are higher than those obtained by the MSDA-NMF () model in all data sets. This supports the validity of the MSDA-NMF () model in network embedding.

4.6. Link Prediction Experiment

Link prediction predicts which pairs of nodes are likely to form edges, and its accuracy is measured by comparing the predictions with the edges that were actually deleted. In the experiment, we randomly hide the , , , and edges as test data and keep the other edges connected. We use the remaining edges to train the node embedding vectors. We evaluate the effectiveness of our model with the typical AUC (area under the ROC curve) evaluation index.
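The edge-hiding step can be sketched as below. This is an illustrative split under stated assumptions, not the authors' code: the function name `split_edges`, the toy edge list and the 20% fraction are hypothetical, and the sketch does not check that the remaining training graph stays connected.

```python
import random


def split_edges(edges, hide_fraction, seed=0):
    """Randomly hide a fraction of edges; return (training edges, hidden test edges)."""
    rng = random.Random(seed)
    shuffled = list(edges)
    rng.shuffle(shuffled)
    n_hidden = int(round(hide_fraction * len(shuffled)))
    hidden = shuffled[:n_hidden]     # held out as test data
    remaining = shuffled[n_hidden:]  # used to train the node embeddings
    return remaining, hidden


# Toy undirected graph: a ring of 10 nodes, for illustration only.
edges = [(i, (i + 1) % 10) for i in range(10)]
train_edges, test_edges = split_edges(edges, hide_fraction=0.2)
```

The hidden edges play the role of the missing edges when AUC is computed, while node pairs that are absent from the full edge list serve as the nonexistent edges.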

To verify the validity of our proposed model, we first delete the edge on all network data sets. As shown in Table 4:

Table 4.

Experimental results of link prediction.

1. Compared with other algorithms, MSDA-NMF () improves the prediction performance by compared to the optimal prediction model GAE and .

2. Compared to the worst prediction model, MSDA-NMF () has a low prediction performance of compared to the optimal prediction model in the comparison model.

3. In the comparison data set of Orkut and GR-QC:

(1) The proposed MSDA-NMF () and MSDA-NMF () models obtained the optimal prediction results.

(2) However, in the GR-QC data set, the accuracy of the MSDA-NMF () model was higher than that of the MSDA-NMF ().

Notably, in the Polblog data set, neither of the methods proposed in this paper obtains the optimal prediction result. As shown in Table 2, the Polblog data set has a small number of categories, which prevents the model from obtaining better prediction results through its hierarchical operation.

Specifically, to further verify the influence of the proportion of training data on the model, this paper conducted tests through Livejournal and Orkut data. The results in Figure 5 show that our method has a certain superiority over all the mainstream methods for removing different parts of edges in the two data sets. Because networks have different structural characteristics, the remaining edge of the Livejournal data set is close to the optimal level at , and MSDA-NMF () obtains prediction results similar to those of MSDA-NMF (). It can be seen that our method has an excellent performance in link prediction, indicating that network embedding results retain the structural characteristics of data sets.

Figure 5.

Changing the predictive performance of training data ratios.

5. Conclusions and Future Work

This paper introduces NMF into a multi-layer NMF structure and uses the complex hierarchical structure to realize the feature representation of the input data. By adding regularization constraints for each layer, the essential features of the data are obtained by characterizing the feature transformation layer by layer. The deep autoencoder and NMF are then fused to construct MSDA-NMF, a multi-layer NMF model that integrates the deep autoencoder. The model can effectively improve the detection accuracy and prediction accuracy of social groups in the complex social management system. Eight popular models (NetMF, M-NMF, DeepWalk, Node2vec, LINE, SDNE, AROPE and GAE) were compared on eight real data sets to verify the robustness of the algorithm.

Although the proposed method has a good detection effect on the data sets presented in this paper, there are still some problems to be solved; for example, the results of the model are not optimal in networks with a large number of categories. Therefore, handling the multistructure characteristics of multiple categories in real networks and models simultaneously is the key to effectively improving the detection performance of the model in all data sets, and it is also challenging and necessary work [48]. We will take these factors into account in our future work.

Author Contributions

Conceptualization, W.Y.; data curation, X.L.; formal analysis, W.Y. and X.L.; investigation, G.X.; methodology, W.Y.; resources, X.L.; software, G.X.; validation, F.L.; writing—original draft, W.Y. and X.L.; writing—review and editing, X.L. and F.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by the Key R&D program of Zhejiang Province (2022C01083), the National Natural Science Foundation of China (62102262, 62172297, 61902276) and the State Key Development Program of China (No. 2019YFB2101700, No. 2018YFB0804402).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Maisog, J.M.; DeMarco, A.T.; Devarajan, K.; Young, S.; Fogel, P.; Luta, G. Assessing Methods for Evaluating the Number of Components in Non-Negative Matrix Factorization. Mathematics 2021, 9, 2840.

- Lee, D.D.; Seung, S.H. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791.

- Cai, T.; Li, J.; Mian, A.S.; Li, R.; Yu, J.X. Target-aware Holistic Influence Maximization in Spatial Social Networks. IEEE Trans. Knowl. Data Eng. 2020, 34(4), 1–14.

- Stai, E.; Kafetzoglou, S.; Tsiropoulou, E.E.; Papavassiliou, S. A holistic approach for personalization, relevance feedback & recommendation in enriched multimedia content. Multimed. Tools Appl. 2018, 77, 283–326.

- Lambiotte, R.; Rosvall, M.; Scholtes, I. From networks to optimal higher-order models of complex systems. Nat. Phys. 2019, 15, 313–320.

- Cai, H.; Zheng, V.W.; Chang, C.C. A Comprehensive Survey of Graph Embedding: Problems, Techniques, and Applications. IEEE Trans. Knowl. Data Eng. 2018, 30, 1616–1637.

- Shao, W.; Fang, B.; Pu, W.; Ren, M.; Yi, S. A new method of extracting FECG by BSS of sparse signal. Sheng wu yi xue gong cheng xue za zhi = J. Biomed. Eng. 2009, 26, 1206–1210.

- Fan, Z.; Lei, L.; Zhang, K.; Trajcevski, G.; Zhong, T. DeepLink: A Deep Learning Approach for User Identity Linkage. In Proceedings of the IEEE INFOCOM 2018—IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018.

- Xu, G.; Bai, X.; Luo, N.N.X.; Cheng, L.; Zheng, X. SG-PBFT: A secure and highly efficient distributed blockchain PBFT consensus algorithm for intelligent Internet of vehicles. J. Parallel Distrib. Comput. 2022, 164, 1–11.

- Févotte, C.; Idier, J. Algorithms for nonnegative matrix factorization with the β-divergence. Neural Comput. 2011, 23, 2421–2456.

- Hoyer, P.O. Non-negative sparse coding. In Proceedings of the 12th IEEE Workshop on Neural Networks for Signal Processing, Valais, Switzerland, 4–6 September 2002; pp. 557–565.

- Ren, B.; Pueyo, L.; Zhu, G.B.; Debes, J.; Duchêne, G. Non-negative matrix factorization: Robust extraction of extended structures. Astrophys. J. 2018, 852, 104.

- Jia, Y.; Liu, H.; Hou, J.; Kwong, S. Semisupervised adaptive symmetric non-negative matrix factorization. IEEE Trans. Cybern. 2020, 51, 2550–2562.

- Huang, Y.; Yang, G.; Wang, K.; Liu, H.; Yin, Y. Robust Multi-feature Collective Non-Negative Matrix Factorization for ECG Biometrics. Pattern Recognit. 2021, 123, 108376.

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Salakhutdinov, R.; Tenenbaum, J.B.; Torralba, A. Learning with hierarchical-deep models. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1958–1971.

- Zhou, S.; Chen, Q.; Wang, X. Fuzzy deep belief networks for semi-supervised sentiment classification. Neurocomputing 2014, 131, 312–322.

- Ye, F.; Chen, C.; Zheng, Z. Deep autoencoder-like nonnegative matrix factorization for community detection. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 1393–1402.

- De Handschutter, P.; Gillis, N.; Siebert, X. Deep matrix factorizations. arXiv 2020, arXiv:2010.00380.

- Li, X.; Xu, G.; Tang, M. Community detection for multi-layer social network based on local random walk. J. Vis. Commun. Image Represent. 2018, 57, 91–98.

- Cichocki, A.; Zdunek, R. Multilayer nonnegative matrix factorisation. Electron. Lett. 2006, 42, 947–948.

- Cichocki, A.; Zdunek, R. Multilayer nonnegative matrix factorization using projected gradient approaches. Int. J. Neural Syst. 2007, 17, 431–446.

- Song, H.A.; Lee, S.Y. Hierarchical data representation model-multi-layer NMF. arXiv 2013, arXiv:1301.6316.

- Rajabi, R.; Ghassemian, H. Spectral unmixing of hyperspectral imagery using multilayer NMF. IEEE Geosci. Remote. Sens. Lett. 2014, 12, 38–42.

- Chen, L.; Chen, S.; Guo, X. Multilayer NMF for blind unmixing of hyperspectral imagery with additional constraints. Photogramm. Eng. Remote Sens. 2017, 83, 307–316.

- Yuan, Y.; Zhang, Z.; Liu, G. A novel hyperspectral unmixing model based on multilayer NMF with Hoyer’s projection. Neurocomputing 2021, 440, 145–158.

- Cao, S.; Lu, W.; Xu, Q. Deep neural networks for learning graph representations. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Wang, D.; Cui, P.; Zhu, W. Structural deep network embedding. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1225–1234.

- Yang, D.; Wang, S.; Li, C.; Zhang, X.; Li, Z. From properties to links: Deep network embedding on incomplete graphs. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 367–376.

- Kipf, T.N.; Welling, M. Variational graph auto-encoders. arXiv 2016, arXiv:1611.07308.

- Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive representation learning on large graphs. arXiv 2017, arXiv:1706.02216.

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

- Trigeorgis, G.; Bousmalis, K.; Zafeiriou, S.; Schuller, B.W. A deep matrix factorization method for learning attribute representations. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 417–429.

- Qiu, J.; Dong, Y.; Ma, H.; Li, J.; Wang, K.; Tang, J. Network embedding as matrix factorization: Unifying deepwalk, line, pte, and node2vec. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, Los Angeles, CA, USA, 5–9 February 2018; pp. 459–467.

- El-Shorbagy, M.A.; Omar, H.A.; Fetouh, T. Hybridization of Manta-Ray Foraging Optimization Algorithm with Pseudo Parameter Based Genetic Algorithm for Dealing Optimization Problems and Unit Commitment Problem. Mathematics 2022, 10, 2179.

- Leskovec, J.; Kleinberg, J.; Faloutsos, C. Graph evolution: Densification and shrinking diameters. ACM Trans. Knowl. Discov. Data (TKDD) 2007, 1, 2.

- Chaudhuri, K.; Chung, F.; Tsiatas, A. Spectral clustering of graphs with general degrees in the extended planted partition model. In Proceedings of the Conference on Learning Theory, JMLR Workshop and Conference Proceedings, Edinburgh, Scotland, 25–27 June 2012; pp. 35-1–35-23.

- Adamic, L.A.; Glance, N. The political blogosphere and the 2004 US election: Divided they blog. In Proceedings of the 3rd International Workshop on Link Discovery, Chicago, IL, USA, 21–25 August 2005; pp. 36–43.

- Yang, J.; Leskovec, J. Defining and evaluating network communities based on ground-truth. Knowl. Inf. Syst. 2015, 42, 181–213.

- Toutanova, K.; Klein, D.; Manning, C.D.; Singer, Y. Feature-rich part-of-speech tagging with a cyclic dependency network. In Proceedings of the 2003 Human Language Technology Conference of the North American Chapter of the Association for Computational Linguistics, Edmonton, AB, Canada, 27 May–1 June 2003; pp. 252–259.

- Wang, X.; Cui, P.; Wang, J.; Pei, J.; Zhu, W.; Yang, S. Community preserving network embedding. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31, pp. 203–209.

- Zhang, Z.; Cui, P.; Wang, X.; Pei, J.; Yao, X.; Zhu, W. Arbitrary-order proximity preserved network embedding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 2778–2786.

- Perozzi, B.; Al-Rfou, R.; Skiena, S. Deepwalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 701–710.

- Grover, A.; Leskovec, J. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 855–864.

- Tang, J.; Qu, M.; Wang, M.; Zhang, M.; Yan, J.; Mei, Q. Line: Large-scale information network embedding. In Proceedings of the 24th International Conference on World Wide Web, Florence, Italy, 18–22 May 2015; pp. 1067–1077.

- Danon, L.; Díaz-Guilera, A.; Duch, J.; Arenas, A. Comparing community structure identification. J. Stat. Mech. Theory Exp. 2005, 2005, P09008.

- Hanley, J.A.; McNeil, B.J. A method of comparing the areas under receiver operating characteristic curves derived from the same cases. Radiology 1983, 148, 839–843.

- Xu, G.; Dong, W.; Xing, J.; Lei, W.; Liu, J.; Gong, L.; Feng, M.; Zheng, X.; Liu, S. Delay-CJ: A novel cryptojacking covert attack method based on delayed strategy and its detection. Digit. Commun. Netw. 2022, in press.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).