Can Learners’ Use of GenAI Enhance Learning Engagement?—A Meta-Analysis

Abstract

1. Introduction

- Q1: What is the overall effect of learners’ use of GenAI on learning engagement, and how does this effect manifest across its cognitive, behavioral, and affective sub-dimensions?

- Q2: To what extent is this relationship influenced by key moderating variables, including educational stage, duration, learning mode, interaction approach, and the presence of teacher intervention?

- Q3: How do these moderators specifically affect each sub-dimension of learning engagement?

2. Literature Review

2.1. Learning Engagement

2.2. GenAI and Learning Engagement

2.3. Research Gap

3. Materials and Methods

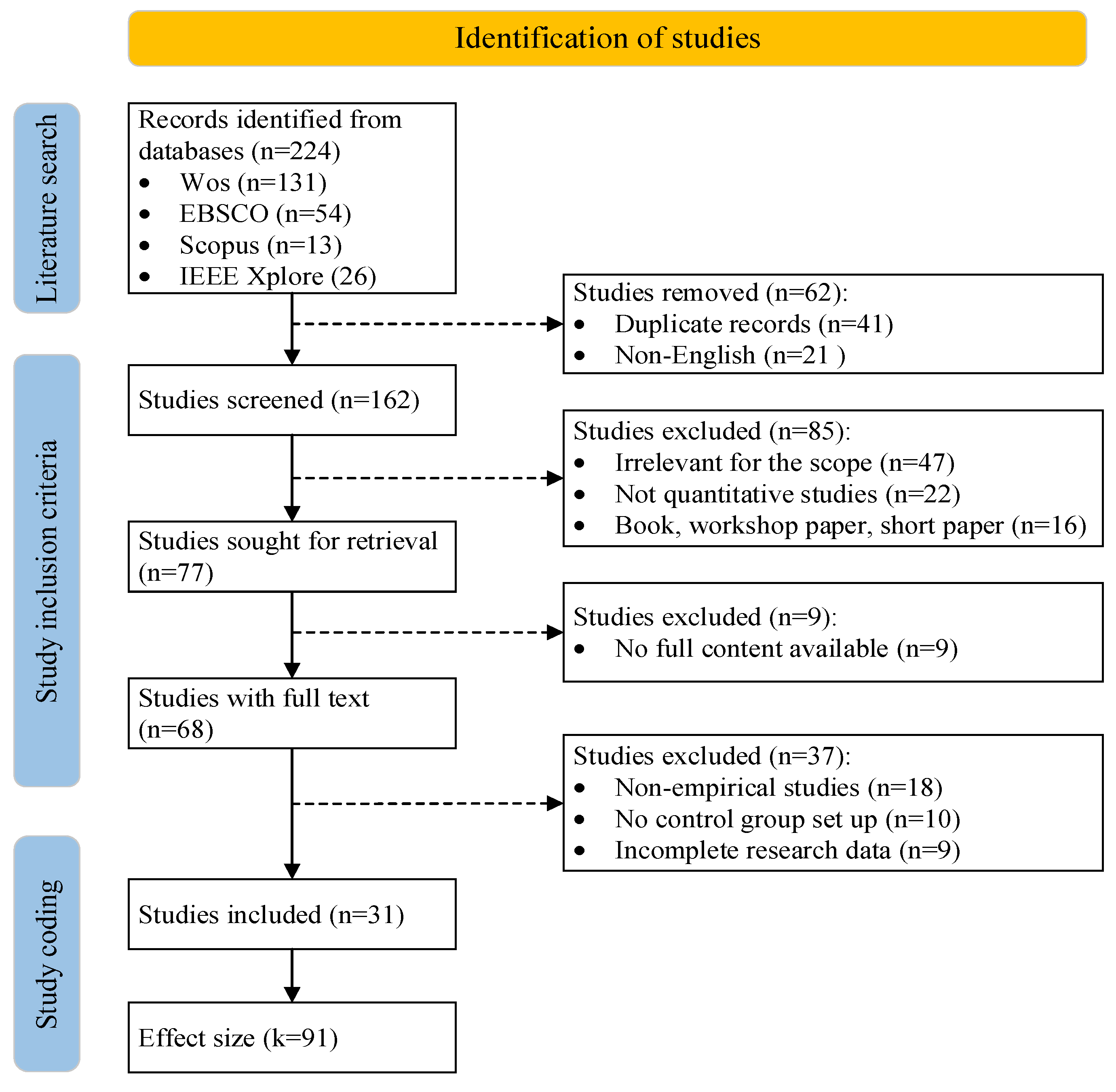

3.1. Literature Search

3.2. Study Inclusion Criteria

3.3. Study Coding

4. Results

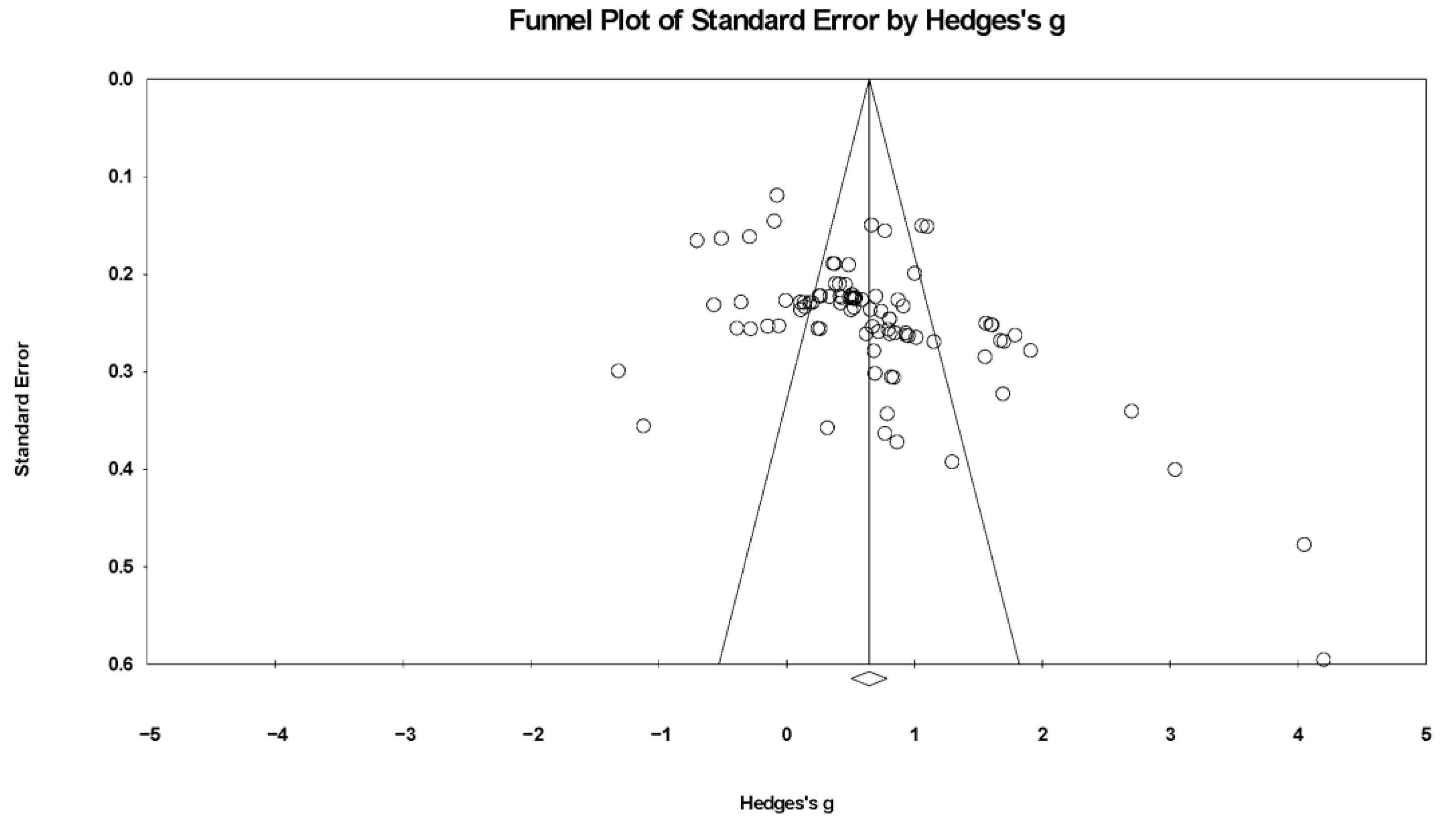

4.1. Publication Bias

4.2. Effect Size and Heterogeneity

4.3. Effect Sizes of Moderator Variables on Learning Engagement

4.4. Effect Sizes of Moderator Variables on the Dimensions of Learning Engagement

5. Discussion

5.1. Responses to the Three Research Questions

5.2. Theoretical Implications

5.3. Practical Implications

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Screening stage | Inclusion criteria | Exclusion criteria | Record count |
|---|---|---|---|
| Initial screening after literature search | 1. Records identified from databases (WoS, EBSCO, Scopus, IEEE Xplore) | 1. Duplicate records (n = 41); 2. Non-English records (n = 21) | Initial: 224; after: 162 |
| Title/abstract screening | 1. Relevant to the research scope | 1. Irrelevant to the scope (n = 47); 2. Not quantitative studies (n = 22); 3. Books, workshop papers, short papers (n = 16) | Initial: 162; after: 77 |
| Full-text retrieval | 1. Full text available | 1. Full text not available (n = 9) | Initial: 77; after: 68 |
| Full-text evaluation | 1. Empirical studies; 2. Control group setup; 3. Complete research data | 1. Non-empirical studies (n = 18); 2. No control group (n = 10); 3. Incomplete research data (n = 9) | Initial: 68; after: 31 |
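The record counts in the screening table can be checked one stage at a time. Each stage should begin with the previous stage's retained total, and its own retained total should equal its initial count minus the listed exclusions. The minimal Python sketch below uses only the counts from the table. The `STAGES` layout and the `check_flow` helper are illustrative and are not part of the study's materials.

```python
# Screening stages from the table: (initial count, exclusion counts, retained count).
STAGES = {
    "Initial screening after literature search": (224, [41, 21], 162),
    "Title/abstract screening": (162, [47, 22, 16], 77),
    "Full-text retrieval": (77, [9], 68),
    "Full-text evaluation": (68, [18, 10, 9], 31),
}


def check_flow(stages):
    """Return (stage, expected, reported) tuples for every inconsistency.

    Two checks are made per stage: the initial count must equal the previous
    stage's retained count, and the retained count must equal the initial
    count minus the sum of the exclusions.
    """
    mismatches = []
    previous_retained = None
    for name, (initial, excluded, retained) in stages.items():
        if previous_retained is not None and initial != previous_retained:
            mismatches.append((name, previous_retained, initial))
        expected = initial - sum(excluded)
        if expected != retained:
            mismatches.append((name, expected, retained))
        previous_retained = retained
    return mismatches


if __name__ == "__main__":
    problems = check_flow(STAGES)
    print("consistent" if not problems else problems)
```

With the table's figures it prints `consistent`, because 224 − 41 − 21 = 162, 162 − 47 − 22 − 16 = 77, 77 − 9 = 68, and 68 − 18 − 10 − 9 = 31.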

| Item | Coding rule | Category | Description |
|---|---|---|---|
| Basic literature information | Article title, authors, publication year, etc. | | |
| Research data | Sample size, mean, standard deviation, etc. | | |
| Dependent variable | Learning engagement | Cognitive development | Conceptual understanding, academic scores, etc. |
| | | Behavioral competence | Interaction frequency, active questioning, etc. |
| | | Affective attitude | Learning interest, motivation, satisfaction, etc. |
| Moderator variable | Educational stage | Basic education | K-12 education, e.g., primary and secondary school. |
| | | Higher education | Education in universities, colleges, etc., e.g., undergraduate and postgraduate education. |
| | | Continuing education | Post-formal education, e.g., professional skill upgrading, lifelong learning. |
| | Duration | <1 day | Actual GenAI usage duration of less than one day. |
| | | 1 day–1 month | Actual GenAI usage duration from one day to one month. |
| | | 1 month–4 months | Actual GenAI usage duration from one month to four months. |
| | Learning mode | Independent learning | Self-directed use of GenAI for learning. |
| | | Collaborative learning | Group-based interaction with GenAI for learning. |
| | Interaction approach | Text-only | GenAI interaction in which both the tool output and the learner's interaction are text-based. |
| | | Multi-modality | GenAI interaction involving two or more modalities, e.g., visuals, sounds, text. |
| | Teacher intervention | Without teacher intervention | Learning with GenAI without teacher support. |
| | | With teacher intervention | Learning with GenAI with teacher support, including help with using the AI, task clarification, subject-matter understanding, technical issue resolution, etc. |
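For each study, the coded research data (sample size, mean, and standard deviation per group) are the inputs to a standardized mean difference. This section does not say which formula or software produced the g values. The sketch below therefore assumes the textbook Hedges' g: Cohen's d computed on the pooled standard deviation, multiplied by the small-sample correction J = 1 − 3/(4·df − 1). `GroupStats`, `hedges_g`, and the example numbers are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class GroupStats:
    n: int        # sample size
    mean: float   # group mean on the engagement measure
    sd: float     # group standard deviation


def hedges_g(treatment: GroupStats, control: GroupStats) -> tuple[float, float]:
    """Return (g, variance of g) for a treatment-versus-control comparison.

    A positive g means the GenAI (treatment) group scored higher.
    """
    n1, n2 = treatment.n, control.n
    df = n1 + n2 - 2
    # Pooled within-group standard deviation.
    sd_pooled = math.sqrt(((n1 - 1) * treatment.sd ** 2 + (n2 - 1) * control.sd ** 2) / df)
    d = (treatment.mean - control.mean) / sd_pooled
    # Small-sample correction factor J (equivalently 1 - 3 / (4 * (n1 + n2) - 9)).
    j = 1 - 3 / (4 * df - 1)
    # Large-sample variance of d, rescaled by J^2 for g.
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d


if __name__ == "__main__":
    g, var_g = hedges_g(GroupStats(30, 3.8, 0.6), GroupStats(30, 3.5, 0.6))
    print(round(g, 3), round(var_g, 4))
```

Take two hypothetical groups of 30 learners with means of 3.8 and 3.5 and a common SD of 0.6. Here d = 0.5, and the correction shrinks it to g ≈ 0.49. Inverse-variance pooling then uses the returned variance to weight each study.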

| Model | k | g | 95% CI lower | 95% CI upper | Q | df | p | I² (%) |
|---|---|---|---|---|---|---|---|---|
| Fixed-effect | 91 | 0.518 *** | 0.471 | 0.565 | 737.969 | 89 | 0.000 | 87.940 |
| Random-effects | 91 | 0.645 *** | 0.506 | 0.783 | | | | |
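The fixed-effect and random-effects rows differ because the random-effects model adds a between-study variance τ² to each study's sampling variance. This shifts weight toward smaller studies and widens the interval. The estimator used is not stated here. The sketch below assumes the common DerSimonian–Laird method and computes I² = (Q − df)/Q × 100. Applying `i_squared` to the reported Q = 737.969 and df = 89 gives 87.94, which matches the table. The effect sizes and variances passed to `pool` are hypothetical.

```python
import math

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def i_squared(q: float, df: int) -> float:
    """Higgins' I^2 in percent: share of variability beyond sampling error."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


def pool(effects, variances):
    """Fixed-effect and DerSimonian-Laird random-effects pooling of effect sizes."""
    k = len(effects)
    w = [1 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    # DerSimonian-Laird moment estimate of the between-study variance tau^2.
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for v in variances]  # random-effects weights
    random_ = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se_fixed = math.sqrt(1 / sw)
    se_random = math.sqrt(1 / sum(w_re))
    return {
        "fixed": (fixed, fixed - Z_95 * se_fixed, fixed + Z_95 * se_fixed),
        "random": (random_, random_ - Z_95 * se_random, random_ + Z_95 * se_random),
        "Q": q,
        "df": df,
        "tau2": tau2,
        "I2": i_squared(q, df),
    }


if __name__ == "__main__":
    # The reported fixed-effect heterogeneity statistics reproduce the tabled I^2.
    print(round(i_squared(737.969, 89), 2))
    # Hypothetical effect sizes and variances, for illustration only.
    print(pool([0.2, 0.9, 0.6, 0.4], [0.02, 0.03, 0.05, 0.04]))
```

When Q is much larger than its degrees of freedom, as in the table, τ² is positive. The random-effects interval is then wider than the fixed-effect one, which is the pattern the two rows show.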

| Learning engagement dimension | k | g | 95% CI lower | 95% CI upper | Q | p |
|---|---|---|---|---|---|---|
| Cognitive development | 38 | 0.952 *** | 0.716 | 1.188 | 7.879 | 0.019 |
| Behavioral competence | 25 | 0.481 *** | 0.195 | 0.767 | | |
| Affective attitude | 28 | 0.546 *** | 0.276 | 0.816 | | |
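The Q and p in this table test whether the three engagement dimensions share a common effect. Under the null hypothesis, the between-group Q follows a chi-square distribution whose df equals the number of subgroups minus one (here, 2). For df = 1 and df = 2 the upper-tail probability has a closed form, so a standard-library sketch is enough. `q_between_p` is a hypothetical helper; SciPy's `scipy.stats.chi2.sf(q, df)` handles the general case.

```python
import math


def q_between_p(q: float, df: int) -> float:
    """Upper-tail chi-square p-value for a between-group Q statistic.

    Only df = 1 and df = 2 are handled, which covers two- and three-level
    moderators; both have closed forms, so no SciPy dependency is needed.
    """
    if q < 0:
        raise ValueError("Q must be non-negative")
    if df == 1:
        # P(chi2_1 > q) = P(|Z| > sqrt(q)) = erfc(sqrt(q / 2))
        return math.erfc(math.sqrt(q / 2))
    if df == 2:
        # A chi-square variable with 2 df is exponential with mean 2.
        return math.exp(-q / 2)
    raise NotImplementedError("use scipy.stats.chi2.sf(q, df) for other df")


if __name__ == "__main__":
    print(round(q_between_p(7.879, 2), 3))
```

`q_between_p(7.879, 2)` returns about 0.0195, which rounds to the reported p = 0.019.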

| Variable | Category | k | g | 95% CI lower | 95% CI upper | Q | p |
|---|---|---|---|---|---|---|---|
| Educational stage | Basic education | 35 | 0.626 *** | 0.386 | 0.865 | 3.956 | 0.038 |
| | Higher education | 46 | 0.826 *** | 0.611 | 1.040 | | |
| | Continuing education | 10 | 0.353 | −0.098 | 0.804 | | |
| Duration | <1 day | 21 | 0.537 *** | 0.225 | 0.849 | 2.704 | 0.029 |
| | 1 day–1 month | 30 | 0.859 *** | 0.600 | 1.118 | | |
| | 1 month–4 months | 40 | 0.647 *** | 0.422 | 0.872 | | |
| Learning mode | Independent learning | 66 | 0.683 *** | 0.507 | 0.858 | 0.048 | 0.826 |
| | Collaborative learning | 25 | 0.720 *** | 0.435 | 1.006 | | |
| Interaction approach | Text-only | 34 | 0.754 *** | 0.505 | 1.002 | 0.355 | 0.551 |
| | Multi-modality | 57 | 0.659 *** | 0.469 | 0.848 | | |
| Teacher intervention | Without teacher intervention | 57 | 0.613 *** | 0.424 | 0.802 | 1.895 | 0.019 |
| | With teacher intervention | 34 | 0.832 *** | 0.584 | 1.079 | | |
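For a two-level moderator such as interaction approach, the between-group Q can be approximated from the subgroup rows alone. The sketch makes two assumptions. First, each 95% CI is a symmetric Wald interval, so SE ≈ (upper − lower)/(2 × 1.96). Second, subgroup estimates are compared with inverse-variance weights, Q = Σ w_j (g_j − ḡ)² with w_j = 1/SE_j². The tabled values are rounded, so the result only approximates the reported Q. The helper names are hypothetical.

```python
Z_95 = 1.959964  # two-sided 95% standard normal quantile


def se_from_ci(lower: float, upper: float) -> float:
    """Approximate standard error implied by a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * Z_95)


def q_between(subgroups):
    """Between-subgroup Q from (g, ci_lower, ci_upper) tuples.

    Each subgroup estimate is weighted by its inverse variance; Q is the
    weighted sum of squared deviations from the weighted mean of the
    subgroup estimates, with len(subgroups) - 1 degrees of freedom.
    """
    weights = [1 / se_from_ci(lo, hi) ** 2 for _, lo, hi in subgroups]
    effects = [g for g, _, _ in subgroups]
    grand = sum(w * g for w, g in zip(weights, effects)) / sum(weights)
    return sum(w * (g - grand) ** 2 for w, g in zip(weights, effects))


# Interaction-approach rows from the moderator table: (g, CI lower, CI upper).
interaction = [
    (0.754, 0.505, 1.002),  # text-only, k = 34
    (0.659, 0.469, 0.848),  # multi-modality, k = 57
]

if __name__ == "__main__":
    print(round(q_between(interaction), 3))
```

The text-only and multi-modality rows give Q ≈ 0.355, in line with the reported value. The p-value then follows from a chi-square distribution with one degree of freedom.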

| Educational stage | Dimension | k | g | 95% CI lower | 95% CI upper | Q | p |
|---|---|---|---|---|---|---|---|
| Basic education | Cognitive development | 15 | 0.777 *** | 0.431 | 1.124 | 1.481 | 0.477 |
| | Behavioral competence | 9 | 0.580 * | 0.133 | 1.026 | | |
| | Affective attitude | 11 | 0.453 * | 0.050 | 0.856 | | |
| Higher education | Cognitive development | 19 | 1.295 *** | 0.908 | 1.681 | 9.360 | 0.009 |
| | Behavioral competence | 12 | 0.381 | −0.092 | 0.853 | | |
| | Affective attitude | 15 | 0.700 *** | 0.278 | 1.122 | | |
| Continuing education | Cognitive development | 4 | 0.353 | −0.078 | 0.783 | 2.102 | 0.350 |
| | Behavioral competence | 4 | 0.537 * | 0.106 | 0.968 | | |
| | Affective attitude | 2 | −0.014 | −0.622 | 0.594 | | |
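The significance marks can be related to the intervals: an estimate whose Wald 95% CI excludes zero is significant at p < 0.05. The sketch below recovers a two-sided z-test from a tabled g and CI, again assuming symmetric Wald intervals. The thresholds in `stars` follow the usual convention (* p < 0.05, ** p < 0.01, *** p < 0.001). They are an assumption, because the tables do not define them.

```python
import math

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def z_test_from_ci(g: float, lower: float, upper: float) -> tuple[float, float]:
    """Two-sided z-test of g = 0 using the SE implied by a symmetric 95% CI."""
    se = (upper - lower) / (2 * Z_95)
    z = g / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


def stars(p: float) -> str:
    """Conventional significance marks (assumed thresholds)."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


if __name__ == "__main__":
    for label, row in [
        ("Higher education, behavioral", (0.381, -0.092, 0.853)),
        ("Basic education, affective", (0.453, 0.050, 0.856)),
    ]:
        z, p = z_test_from_ci(*row)
        print(label, round(z, 2), round(p, 3), stars(p))
```

The two example rows both match the table:

- **Higher education, behavioral competence** (g = 0.381, CI −0.092 to 0.853): p ≈ 0.11, no mark.
- **Basic education, affective attitude** (g = 0.453, CI 0.050 to 0.856): p ≈ 0.028, one mark.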

| Duration | Dimension | k | g | 95% CI lower | 95% CI upper | Q | p |
|---|---|---|---|---|---|---|---|
| <1 day | Cognitive development | 9 | 1.051 *** | 0.516 | 1.587 | 5.417 | 0.047 |
| | Behavioral competence | 4 | 0.192 | −0.579 | 0.963 | | |
| | Affective attitude | 8 | 0.242 | −0.301 | 0.789 | | |
| 1 day–1 month | Cognitive development | 11 | 1.067 *** | 0.615 | 1.519 | 2.636 | 0.268 |
| | Behavioral competence | 9 | 0.525 * | 0.025 | 1.026 | | |
| | Affective attitude | 10 | 0.944 *** | 0.464 | 1.423 | | |
| 1 month–4 months | Cognitive development | 18 | 0.853 *** | 0.522 | 1.184 | 2.898 | 0.035 |
| | Behavioral competence | 12 | 0.545 ** | 0.147 | 0.944 | | |
| | Affective attitude | 10 | 0.409 | −0.023 | 0.841 | | |

| Learning mode | Dimension | k | g | 95% CI lower | 95% CI upper | Q | p |
|---|---|---|---|---|---|---|---|
| Independent learning | Cognitive development | 30 | 0.951 *** | 0.662 | 1.240 | 5.706 | 0.068 |
| | Behavioral competence | 16 | 0.413 * | 0.024 | 0.803 | | |
| | Affective attitude | 20 | 0.548 ** | 0.199 | 0.898 | | |
| Collaborative learning | Cognitive development | 8 | 0.949 *** | 0.593 | 1.306 | 2.879 | 0.237 |
| | Behavioral competence | 9 | 0.620 *** | 0.295 | 0.945 | | |
| | Affective attitude | 8 | 0.550 *** | 0.215 | 0.886 | | |

| Interaction approach | Dimension | k | g | 95% CI lower | 95% CI upper | Q | p |
|---|---|---|---|---|---|---|---|
| Text-only | Cognitive development | 17 | 1.077 *** | 0.677 | 1.476 | 4.151 | 0.125 |
| | Behavioral competence | 11 | 0.533 * | 0.052 | 1.014 | | |
| | Affective attitude | 6 | 0.446 | −0.201 | 1.093 | | |
| Multi-modality | Cognitive development | 21 | 0.892 *** | 0.595 | 1.189 | 4.154 | 0.125 |
| | Behavioral competence | 14 | 0.438 * | 0.075 | 0.801 | | |
| | Affective attitude | 22 | 0.570 *** | 0.281 | 0.859 | | |

| Teacher intervention | Dimension | k | g | 95% CI lower | 95% CI upper | Q | p |
|---|---|---|---|---|---|---|---|
| Without teacher intervention | Cognitive development | 24 | 0.745 *** | 0.457 | 1.034 | 1.502 | 0.472 |
| | Behavioral competence | 15 | 0.473 * | 0.111 | 0.836 | | |
| | Affective attitude | 18 | 0.553 *** | 0.221 | 0.884 | | |
| With teacher intervention | Cognitive development | 14 | 1.372 *** | 0.943 | 1.801 | 9.387 | 0.009 |
| | Behavioral competence | 10 | 0.490 * | −0.001 | 0.981 | | |
| | Affective attitude | 10 | 0.531 * | 0.043 | 1.018 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, K.; Guo, Z. Can Learners’ Use of GenAI Enhance Learning Engagement?—A Meta-Analysis. Educ. Sci. 2025, 15, 1578. https://doi.org/10.3390/educsci15121578