Abstract

This study explored two questions: (1) Can the number of design awards represent the design level of a country? (2) If design awards are not a suitable criterion, are there more appropriate ones? Starting from the common practice of using the number of design awards as a benchmark for evaluating national design capability, the study examines the rationality of this practice against objective data. It reviews existing mechanisms and attempts to establish a more comprehensive one through expert interviews and questionnaires. Six criteria were identified: international activity, designers’ level, future trends, historical impact, lifestyle taste, and environment standards. When these criteria are used to evaluate a country’s design level, the results are consistent with the overall impression of design experts. Conversely, a framework based on the number of design awards diverges significantly from that impression. The results call into question evaluation systems based on design awards. In terms of academic contribution, the new mechanism fills a gap left by existing ones. In terms of practical implications, design stakeholders in various countries gain a benchmark for self-assessment when trying to improve their design level or international reputation. The research also provides a reference for policy formulation and strategy development.

1. Introduction

1.1. “Design Award Phenomenon” in Taiwan

In the past few years, Taiwan has ranked near the top in the number of awards won at major international design competitions. This small island on the edge of East Asia, with a population of just over 20 million, once bore the stigma of being a “Pirate Kingdom” before earning an international reputation for MIT (“Made in Taiwan”) through its strong manufacturing and OEM capabilities.

In recent years, with the injection of government resources, the implementation of relevant policies, and the concerted efforts of private enterprises and academic institutions, Taiwan has committed itself to investing in design. Through continuous talent training, frequent and diverse design activities, exhibitions, and forums, and active participation in international awards, Taiwan has worked hard to raise its design level and international visibility. As a result, Taiwan has won many design awards, and the international reputation of “DIT” (Design in Taiwan) has gradually been established.

The official promoter of Taiwan’s design industry, the Taiwan Design Center, hosted the first International Design Alliance (IDA) conference in 2011 and later helped Taipei win selection as World Design Capital (WDC) 2016. In 2020, the Taiwan Design Center was restructured as the Taiwan Design Research Institute, extending the application of design to government, schools, transportation, and other areas.

Taiwan has also gained the benefits of substantive diplomacy by participating in international organizations and raising its international visibility through design. After years of effort and a considerable cumulative investment of manpower, time, and resources, some benchmark is inevitably needed to evaluate effectiveness.

How should one evaluate the results of Taiwan’s investment in design? Has its overall design level improved? How do other countries rate Taiwanese design? These questions are difficult to answer clearly in qualitative terms. Design awards offer a benchmark for quantitative verification and carry international visibility, credibility, and influence. They therefore fit these evaluation needs well and offer the most direct and convenient solution.

Generally speaking, a design award operates roughly as follows: (1) it attracts as many entries as possible and selects relatively high-quality designs that meet its requirements; (2) through the awards, it demonstrates the design concepts the organizer wants to convey; and (3) through a large number of registrations, it directly or indirectly obtains operating funds or tangible and intangible assets (such as production rights to winning designs), which sustain the award.

Taiwan’s domestic market is small, and exports have become an important lifeline of the economy. International recognition has therefore long been a goal that Taiwan actively pursues. For example, MIT has become an internationally recognized mark of the quality of Taiwanese manufacturing and an important asset for Taiwan’s exports.

The same is true in design. Design originated and developed mainly in Europe and the USA; in Asia, Japan was one of the first countries to enter the era of modern design. As a latecomer, Taiwan has learned design from these leading countries. When evaluating its achievements and growth over the years, it is therefore natural to look to international perspectives, and using the number of international design awards as the benchmark for Taiwan’s investment in design is both an inevitable and a convenient choice. The large number of entries from Taiwan also aligns with the operational goals of these design awards.

Among the many international design awards, Taiwan is most actively involved in four: the “iF Design Award” (hereinafter referred to as “iF”), the “Reddot Design Award” (hereinafter referred to as “Reddot”), the “Good Design Award” (hereinafter referred to as “G-Mark”), and the “International Design Excellence Award” (hereinafter referred to as “IDEA”). According to a public speech by the President of Taiwan, Taiwan initially won few of these four awards (only 16 in 2003), but by 2018 it had won a total of 3825, including 142 top prizes or gold awards. This impressive figure has been presented as concrete evidence of Taiwan’s strong “design power” [1].

According to the “Taiwan Design Power Report 2019”, the fastest way for Taiwan’s design industry to gain recognition is to enhance “company reputation” and obtain “free publicity” through international design awards. As many as 84.1% of design practitioners have entered international design awards, and one-fifth of them enter every year [2].

1.2. World Design Ranking (WDR)

Countries can be ranked by their economic and military power, but can “design power” be ranked? If so, by what criteria should it be evaluated?

According to the “World Design Ranking (WDR)”, published by the Italian A’ Design Award (hereinafter referred to as A’), Taiwan ranks 7th in the world, and the top six are China, the USA, Japan, Italy, Hong Kong, and the UK. Germany, Spain, the Netherlands, Switzerland, Finland, France, and Sweden, which are generally recognized as leading design countries and are home to world-class design masters, trail Taiwan in this ranking at positions between 9th and 37th, and Denmark ranks an astonishing 61st [3].

Other design awards publish similar rankings, in which Taiwan has also performed impressively. Its strong showing in international design awards has become an important achievement of Taiwan’s design policy. A public research report by the Taiwan Design Institute, a semi-official design promotion organization, cited WDR as proof of Taiwan’s leap in “design power” [4]. The local academic community also attaches great importance to the number of design awards and uses it as a key evaluation criterion for design schools and professors.

However, is Taiwan really such a leader in international design rankings? Is it ahead of many recognized design-leading countries? Or, put another way, is it appropriate to use these design awards as a benchmark for evaluating a country’s “design power”, and do the resulting rankings match real-world perceptions?

Perhaps because of the credibility of design awards, or because of complex factors such as politics and vested interests, such questions are rarely raised in research papers. For global design rankings, and especially country-level ones, it is difficult to find comparable and influential benchmarks other than WDR. This lack of mechanisms leaves considerable room for academic research and motivates the present study.

This study attempts to establish a country-based design level evaluation mechanism. It is hoped that this attempt will inspire more design scholars, practitioners, and policy makers to further discuss and participate in dialogue so that future mechanisms can be developed more comprehensively.

When the number of design awards is used to evaluate the design level of a collective unit (such as a school, a company, or a country), and participation in design awards also becomes a collective craze, this study calls the situation the “Design Award Phenomenon” and questions its appropriateness.

Through this study, it is expected that a more macroscopic, comprehensive, and rigorous evaluation system can be established.

2. Materials and Methods

2.1. The Definitions of Good Design

How is “Good Design” defined? This question has long interested design scholars, but the answers are individual and full of competing arguments, viewpoints, and guidelines. For example, the principle “Form Follows Function”, coined by the American architect Louis Sullivan and often associated with the Bauhaus and the foundations of modern design, is still a guiding principle that many designers hold as a standard. In the late 1970s, the designer Dieter Rams remarked: “Good design is subjective and can’t necessarily be measured.” Nevertheless, he formulated his famous “10 Principles of Good Design”, which state that good design must be “Innovative”, “Useful”, “Aesthetic”, “Understandable”, “Unobtrusive”, “Honest”, “Long-lasting”, “Thorough down to the last detail”, “Environmentally-friendly”, and “As little design as possible”. These principles have become iconic and continue to inspire designers around the world [5].

However, other views on the definition of “Good Design” persist. Steve Jobs offered his own: “Design isn’t just what it looks like and feels like. Design is how it works” [6]. His view of good design is more pragmatic.

Some scholars argue that “the most important design elements are those that cannot be specified by a standard”. Many qualities that make a design “important” and “good”, such as its beauty, are often overlooked by the standards used to evaluate designs [7]. Jared Spool, an expert on design and usability, echoes this view: “good design, when it’s done well, becomes invisible. It’s only when it’s done poorly that we notice it.” This is why good design is tricky to define [8].

Over time, guidelines have been developed for various design fields. For example, in 1997 a group of architects, product designers, engineers, environmental designers, and researchers led by Ronald Mace at North Carolina State University developed “the 7 principles of universal design”: “Equitable Use”, “Flexibility in Use”, “Simple and Intuitive Use”, “Perceptible Information”, “Tolerance for Error”, “Low Physical Effort”, and “Size and Space for Approach and Use”. These guidelines aim to build more care and empathy for people and the environment into design [9].

In another example, Jakob Nielsen proposed the “10 usability heuristics for user interface design”, a set of principles formulated for human–computer interaction in modern design. The ten heuristics are: “Visibility of system status”, “Match between system and the real world”, “User control and freedom”, “Consistency and standards”, “Error prevention”, “Recognition rather than recall”, “Flexibility and efficiency of use”, “Aesthetic and minimalist design”, “Help users recognize, diagnose, and recover from errors”, and “Help and documentation”.

These principles are more specific than Dieter Rams’ principles. They are formulated for a particular design field and focus more on design execution [7].

How should “good design” be rated? This has always been controversial; the question is not whether it should be evaluated, but what kind of “standard” should be used [10] (Lin, 2007). Precisely because such standards are hard to set, and different reviewers reach different judgments, the definition of “good design” has remained one of the most interesting and philosophically contested areas of the design profession.

2.2. Purpose of the Design Awards and Mechanism for Participation

Any discussion of “Good Design” must mention design awards. Although each design award has its own purpose, most share the aim of defining, praising, and encouraging “Good Design”, and even of influencing how society sees it.

Design awards have gradually taken shape since the 1950s. They offer certification and encouragement in order to help the public identify high-quality designs and to encourage their production.

Design awards are usually run by specific organizations that employ judges to decide which works meet their award criteria and how to rank them. Through the awards and the judges’ interpretations, the public can more easily understand the value of design. Design awards give practitioners a quantifiable metric that differentiates them from their peers. Promotion gives winning works greater exposure, and design awards offer occasions for business matching. Through the award criteria and feedback, designers can improve their skills. In addition, the publicity surrounding the awards publicly establishes the originality of the winning works.

Design awards are often established to convey certain design values. In particular, awards that focus on special fields help increase public attention to issues in those fields (such as environmental protection, sustainability, and technology). Design awards can also serve as incentives that motivate designers to improve their designs, and they directly or indirectly provide designers with financial benefits (e.g., bonuses or government incentives) [11].

A’ gives a similar explanation of its purpose: a good design competition (or award) exists to discover and promote good designs so that good designers gain more public exposure and reach larger and newer audiences, potential customers, design enthusiasts, and media. Good designers can then create more good designs and obtain more funding, forming a positive cycle. In addition, participating in the award can enhance designers’ social connections and popularity and broaden their vision [12].

According to G-Mark, the award was established to discover and commend good design and thereby enrich our lives and society [13].

To sum up, the three main impacts of design awards are to: (1) raise public awareness of good design; (2) provide incentives for designers and brands to create better designs; and (3) establish and support good design, promoting good taste and cultural influence for educational purposes [11].

Today, the number of design award categories keeps growing. Some awards set themes according to design field (such as green design, service design, and interaction design), and some are established to shape the organizer’s social image or meet its design needs (such as the Lexus Design Award). The categories and goals of design awards are becoming increasingly diverse, and their demands and target groups increasingly niche. The “Design Award Phenomenon” discussed here concerns the more mainstream, general-purpose category.

Depending on how participants enter, design awards can be roughly divided into two types: the “Registration System” and the “Nomination System”. The “Registration System” provides an application channel that lets anyone who wishes to participate sign up. This type is represented by the awards involved in the “Design Award Phenomenon”: iF, Reddot, G-Mark, A’, and others.

“Nomination System” design awards usually provide no channel for self-registration. Instead, the organizer invites experts or committees to nominate participating designs. To ensure fairness, such awards usually forbid nominators from nominating designs they were involved in. This type is represented by the Beazley Designs of the Year (hereinafter referred to as “BDOTY”) and the Pritzker Architecture Prize (hereinafter referred to as “Pritzker”).

There are also hybrid forms, such as “The greatest designs of modern times” by Fortune Magazine, which invites top designers, scholars, and others to each list up to 10 of the best designs and give reasons for each nomination. The top of the ranking is then determined by the number of nominations. For designs nominated less often, where no majority consensus can be reached, the researchers analyze the language of the nominations to determine the remaining rankings against five evaluation criteria: “how adaptable and expandable the product is”; “impact on society or the environment”; “ease of use”; “commercial success”; and “whether it redefined its category” [14].
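The counting stage of this hybrid procedure can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not Fortune’s actual method: the ballots are invented, and the `min_votes` cutoff is a hypothetical stand-in for “majority consensus”. Designs below the cutoff are only collected for the qualitative review against the five criteria, which is not modeled here.

```python
from collections import Counter

MAX_PICKS = 10  # each nominator may list at most 10 designs


def tally_nominations(ballots, min_votes):
    """Split nominated designs into a count-ranked list and a residual group.

    ballots: one list of design names per nominator (hypothetical data).
    min_votes: hypothetical cutoff standing in for "majority consensus".
    """
    counts = Counter()
    for ballot in ballots:
        if len(ballot) > MAX_PICKS:
            raise ValueError("a nominator listed more than 10 designs")
        counts.update(set(ballot))  # count each design once per nominator
    # Rank by nomination count (descending), breaking ties alphabetically.
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    ranked = [(design, n) for design, n in ordered if n >= min_votes]
    # Designs below the cutoff would go on to qualitative review (not modeled).
    residual = sorted(design for design, n in counts.items() if n < min_votes)
    return ranked, residual


ballots = [
    ["Design A", "Design B"],
    ["Design A", "Design C"],
    ["Design A", "Design B", "Design D"],
]
ranked, residual = tally_nominations(ballots, min_votes=2)
print(ranked)    # [('Design A', 3), ('Design B', 2)]
print(residual)  # ['Design C', 'Design D']
```

Only the head of the list is decided by counting; everything else depends on human judgment, which is what makes the scheme a hybrid.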

Through data collection, expert consensus meetings, and similar methods, this study summarized the differences, advantages, and disadvantages of the two categories of design awards, the registration system and the nomination system, as listed in Table 1.

Table 1.

Mechanism for participating in design awards.

The “Design Award Phenomenon” examined in this study involves iF, Reddot, and A’, all of which use the “Registration System”. “Nomination System” design awards include Pritzker, BDOTY, and others. Because of fundamental differences in motivation and mode of participation, the latter fall outside the scope of this study; future research could explore them further.

2.3. The Impact of Design Awards on Academics and Business

The value of design in business, as a way to enhance corporate profits and brand prestige, has been widely recognized by the market and by customers [15]. But what about design awards? Scholars have found that most respondents believe that award-winning designs may not bring direct economic profit to enterprises but should bring indirect benefits. However, the same study found that the effect of design awards on enterprise performance, whether direct or indirect, is not significant. In other words, the indirect benefits of design awards fall short of expectations [16].

Other studies have shown that award-winning designs are usually not the best-selling items. One such study also raises a dilemma: should designers continue to pursue the standards set by design awards, or put more thought into creating business value for their clients [17]? There appears to be a gap between the ideals expressed by design awards and the reality of the commercial market.

The same dilemma appears in design education. When the number of design awards is the main criterion in school evaluations, it inevitably crowds out other values that design education should teach. After graduation, students face real-world challenges that can no longer be solved simply by winning awards. Designers are entering the new era of “Society 5.0”, with its complex and demanding challenges [18]. The real key to survival is whether a designer can adapt to rapid change in a complex environment and meet the challenges of business and execution.

Don Norman, a well-known design scholar, has criticized current design education and called for reform. He argues that it focuses too narrowly on technical and aesthetic skills and lacks a grounding in other disciplines (e.g., sociology, science, and statistics). Lacking knowledge of other fields, designers often propose superficial or oversimplified solutions. Because much design education takes place within this framework, it is difficult for faculty or design award judges to break out of it. As a result, although many designers can produce good-looking and even award-winning designs, they are unable to propose solutions that address the core of problems in the complex real world [19].

Excessive attention to design awards may lead to homogeneity in style and form or to narrow thinking. Academic studies have used AI to analyze the appearance of award-winning designs, making it possible to predict an entry’s likelihood of winning [20]. There are also books by experienced award-winning designers that teach the secrets of winning [21]. When most designers pursue the same award criteria, or when award outcomes can be decoded through appearance or formulaic methods, designers easily become homogenized and lose the will to think, reflect, break through, or even challenge authority. As a result, when facing harsher and more complex challenges, designers may lack the breadth of vision and ability needed to deal with them.

2.4. Design Rankings by Schools, Cities, and Countries

It seems that design scholars and experts are not satisfied merely with defining and debating “Good Design”, or with granting and praising awards. How should one distinguish among good designs? How does one find “Better Design” or “Best Design” among the many good designs? Design rankings emerged in response and have become an important basis for the design community to compare, evaluate, and improve.

Design rankings cover different subjects, such as people, companies, schools, cities, and countries. This study challenges the current practice of assessing national design level by the number of design awards and seeks a more appropriate framework. It therefore focuses on evaluation mechanisms for collective units; those for individuals, companies, and similar subjects are not discussed here.

2.4.1. Design School Rankings

With globalization and marketization, competition among academic institutions has intensified, and school rankings are a product of this context [22]. A good ranking not only helps attract excellent students and faculty but is also an important basis for the public to examine the management quality of academic institutions [23]. Rankings are a convenient reference for school recruitment, students’ choice of schools, and evaluation and resource allocation by the competent authorities.

Design school rankings can be roughly classified into two categories: the “Design Award System” and the “Comprehensive Evaluation System”. The “Design Award System” is represented by the Reddot Design Ranking (hereinafter referred to as RDR), established by Reddot, which ranks evaluated units by the number of Reddot Design Concept awards they obtained in the past five years [24].
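The RDR rule as described (count one type of award over a five-year window, then rank) can be sketched as follows. The `Award` record, the school names, and the choice to treat the current year as the last year of the window are assumptions for illustration; the region filter reflects RDR’s separate “Asia Pacific” and “Americas & Europe” lists.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Award:
    school: str
    region: str  # "Asia Pacific" or "Americas & Europe"
    year: int


def rank_schools(awards, current_year, region, window=5):
    """Rank schools in one region by the awards they won in the last `window` years.

    Assumes the window includes `current_year` (e.g. 2018-2022 for 2022).
    """
    first_year = current_year - window + 1
    counts = Counter(
        a.school
        for a in awards
        if a.region == region and first_year <= a.year <= current_year
    )
    # Highest award count first; ties broken alphabetically for a stable order.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


awards = [  # invented records
    Award("School A", "Asia Pacific", 2022),
    Award("School A", "Asia Pacific", 2019),
    Award("School A", "Asia Pacific", 2016),  # outside the window
    Award("School B", "Asia Pacific", 2021),
    Award("School C", "Americas & Europe", 2022),  # other region
]
print(rank_schools(awards, 2022, "Asia Pacific"))
# [('School A', 2), ('School B', 1)]
```

The entire ranking reduces to a single count of a single award, which is why nothing else about a school (teaching, research, employer views) can affect its position.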

The “Comprehensive Evaluation System” is represented by “The QS World University Rankings by Subject: Art & Design” (hereinafter referred to as QS). Representatives of schools and enterprises take part in the evaluation by listing the design schools they consider best (up to 10 domestic and 30 international institutions per response). The impact of research papers is also used as a benchmark. “Academic Reputation”, “Employer Reputation”, and “Research Impact” are thus the three criteria used to comprehensively evaluate design schools [25].
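By contrast, a comprehensive system combines several indicators into one score. The sketch below uses a weighted sum over the three criteria named above; the weights, the 0 to 100 indicator scaling, and the school data are hypothetical and are not QS’s published methodology.

```python
# Hypothetical weights for illustration only; not QS's published weighting.
WEIGHTS = {
    "academic_reputation": 0.5,
    "employer_reputation": 0.3,
    "research_impact": 0.2,
}


def composite_score(scores, weights=WEIGHTS):
    """Weighted sum of indicator scores, each assumed already scaled 0-100."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(w * scores[name] for name, w in weights.items())


def rank(schools, weights=WEIGHTS):
    """Return school names ordered by composite score, highest first."""
    return sorted(schools, key=lambda s: (-composite_score(schools[s], weights), s))


schools = {  # invented indicator scores
    "School X": {"academic_reputation": 90, "employer_reputation": 70, "research_impact": 60},
    "School Y": {"academic_reputation": 80, "employer_reputation": 95, "research_impact": 85},
}
print(rank(schools))  # ['School Y', 'School X']
```

In this invented example, School X leads on academic reputation (78.0 overall) but School Y ranks first (85.5 overall), illustrating how a multi-criteria score can reorder units that a single indicator would rank differently.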

The latest design school rankings under both systems are shown in Table 2.

Table 2.

Design School Rankings.

Comparing the school rankings produced by the “Design Award System” and the “Comprehensive Evaluation System” reveals several interesting points: (1) the “Design Award System” uses only the count of a single design award as its criterion for the evaluated unit (the school), whereas the “Comprehensive Evaluation System” usually draws on more indicators and asks a substantial number of experts to take part in the assessment; (2) apart from the Royal College of Art, London (UK), the two top-ranked lists do not overlap at all; (3) the Reddot Design Ranking does not compare schools worldwide, but divides them into two major regions, “Asia Pacific” and “Americas & Europe”, and ranks each separately; and (4) the Asian schools in the Reddot Design Ranking do not rank at the top of the QS rankings [24,26].

These observations suggest that ranking design schools by the number of design awards yields considerably different results from ranking them by comprehensive evaluation indicators. The latter combines the views of experts from both academia and industry and therefore represents a broader consensus in the design community. In other words, when the number of design awards is used to evaluate design schools, the results diverge substantially from the consensus of the design community.

Will there be similar conclusions when the unit of assessment is changed from school to country?

2.4.2. Design Cities Ranking

Among the design evaluation mechanisms based on cities, the more well-known and influential ones include World Design Capital® (hereinafter referred to as WDC), UNESCO City of Design (hereinafter referred to as UCD), and The Top 10 Design Cities (hereinafter referred to as T10).

WDC is organized by the World Design Organization® (WDO), and every two years one city is awarded the WDC title. Cities interested in WDC must register with the organizer and show that they use design effectively in economic, social, cultural, environmental, and other aspects of development. Once selected, the city must host a year-long program of events related to sustainable design, urban policy, and innovation [27].

UCD is part of the UNESCO Creative Cities Network, whose fields include “Literature”, “Design”, “Crafts and Folk Art”, “Film”, “Music”, “Media Arts”, and “Gastronomy”. The purpose of UCD is to promote the design value of cities and to use design to improve urban life and solve urban problems. The selected cities communicate with, observe, and learn from one another, stimulating mutual growth [28].

To be selected as a WDC or UCD, a city must submit application documents and pay a registration fee, and the final winner is chosen through a review process. This resembles the “Registration System” design awards described above: only registered cities have the opportunity to be evaluated. Selection therefore means only that a city meets the standards required by WDC or UCD, or presents more competitive conditions than the competing cities.

After examining the relevant goals and evaluation criteria, it can be found that WDC and UCD focus more on the use of design in urban planning, policy formulation, and international activity. In other words, WDC and UCD pay more attention to the city’s enthusiasm for design investment, rather than directly reflecting the city’s overall design level.

A comparison of the WDC and UCD lists shows that almost every city awarded WDC has also been selected as a UCD, the exception being Taipei, since Taiwan is not a member of UNESCO. Moreover, cities with long-standing design scenes (London, New York, Tokyo, Paris, etc.) are missing from both lists.

Based on the above discussion, the following conclusions can be drawn: (1) most cities selected for WDC or UCD actively use design in urban development or participate in relevant international organizations; (2) cities that have not registered for or obtained the WDC/UCD title cannot be said to be inferior to the selected cities in design and creativity; and (3) WDC and UCD are better suited to examining how a city applies design and whether it actively participates in international design organizations; they are not suited to comparing the overall design level of cities.

Organized by Metropolis Magazine, T10 selects the 10 best design cities in the world each year. According to the official website, T10 was assessed using an “unusual approach” in 2018 (Metropolis Magazine, 2018). Although it is unclear what the “usual approach” involves, the “unusual approach” is worth examining.

Metropolis Magazine obtained responses from 80 of the world’s top designers and architects, who nominated cities in three categories: “design powerhouses”, “buzzing cultural hubs”, and “cities that inspire or personally resonate with them”. The T10 list produced this way includes cities famous for design that meet design experts’ expectations, such as Milan, London, and Berlin, as well as cities full of new vitality and cultural fusion, such as Shanghai [29].

T10 set a reasonable evaluation benchmark and obtained feedback from a substantial number of design experts. The results are unsurprising and consistent with the consensus of design professionals. Interestingly, however, the list T10 released in 2019 does not appear to use the same criteria or external expert consensus as in 2018, and its selected cities differ considerably from the 2018 list.

The design cities listed by WDC, UCD, and T10 are shown in Table 3.

Table 3.

List of Design Cities in WDC, UCD, and T10.

In conclusion, the WDC/UCD evaluation mechanisms rely on a registration system in which only cities that apply can be evaluated. Being selected really means that, among the registered cities, these cities best match the evaluation criteria or the judges’ preferences.

In contrast, T10 uses a nomination system that does not depend on cities choosing to apply. Cities around the world are thus automatically included in the evaluation, which makes it a better way to examine the overall design level of cities worldwide. In addition, because a substantial number of design experts took part in the assessment, the results better match the expectations of design professionals.

2.4.3. Design Rankings for Countries

When the scope of assessment is extended to countries or regions, relevant measurement mechanisms are scarce. Most of those that are recent and still active are based on design awards, namely the “iF Ranking” (hereinafter referred to as iF-R), issued by iF, and the “World Design Ranking” (WDR), published by A’. Looking further back, we found the “Design Competitiveness Ranking” (hereinafter referred to as DCR), published by DESIGNIUM-Center for Innovation Design.

iF-R ranks units by the number of iF Design Awards they have won. Its official website states: “Which companies, countries or creatives have won the most iF DESIGN AWARDS? Filter by continent, country or industry”. This suggests that rankings can be filtered by continent, country, or industry. In practice, however, “Country” cannot be used as a ranking category; according to iF employees, this is to avoid unnecessary misunderstandings and comparisons [31].

The WDR is one of the very few country-based design rankings. Countries are compared by the number of A’ Design Awards they have received, with the number of top-level awards (the “Platinum Design Award”) taking priority. In the event of a tie, further items, such as the number of awards at the next level and total points, are included in the comparison. Each award level carries a different number of points, from +2 for the lowest “Iron Design Award” to +6 for the highest “Platinum Design Award”.
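To make this ordering concrete, the following Python sketch ranks hypothetical entries the way the text describes WDR: Platinum count first, then the next award level, then total points. Only the Platinum (+6) and Iron (+2) point values come from the text; the intermediate level names and their point values, the exact tie-breaking sequence after Platinum, the entries, and the function names are assumptions for illustration, not A’’s actual implementation.

```python
from dataclasses import dataclass, field

# Point values per award level, highest level first. Only Platinum (+6) and
# Iron (+2) are given in the text; the three middle levels are ASSUMED.
LEVEL_POINTS = {
    "platinum": 6,
    "golden": 5,   # assumed
    "silver": 4,   # assumed
    "bronze": 3,   # assumed
    "iron": 2,
}


@dataclass
class Entry:
    name: str
    counts: dict = field(default_factory=dict)  # award level -> awards won

    def total_points(self) -> int:
        return sum(pts * self.counts.get(lvl, 0) for lvl, pts in LEVEL_POINTS.items())

    def sort_key(self) -> tuple:
        # Platinum count decides first, then the next level down ("golden"),
        # then total points. Values are negated so an ascending sort puts the best first.
        return (
            -self.counts.get("platinum", 0),
            -self.counts.get("golden", 0),
            -self.total_points(),
        )


def rank(entries):
    """Return (position, name, total points) tuples, best first."""
    ordered = sorted(entries, key=Entry.sort_key)
    return [(i + 1, e.name, e.total_points()) for i, e in enumerate(ordered)]


# Hypothetical entries, not real WDR data.
entries = [
    Entry("A", {"platinum": 2, "golden": 1, "iron": 10}),
    Entry("B", {"platinum": 2, "golden": 3}),
    Entry("C", {"platinum": 3}),
    Entry("D", {"platinum": 2, "golden": 1, "bronze": 1}),
]
for position, name, points in rank(entries):
    print(position, name, points)
```

In this hypothetical example, A has the most points (37) but ranks only third, because award counts are compared before points. The same pattern appears in the 2022 data below, where Taiwan (6052 points) ranks below Hong Kong (3030 points) because it won fewer Platinum awards.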

In the 2022 WDR ranking, China ranks first in the world (1/102/11259), and Hong Kong (5/23/3030) and Taiwan (7/17/6052) are also in the top ten, so Chinese design occupies three places in the Top 10. Other notable countries include Turkey (8/15/1917), the Netherlands (14/10/356), Switzerland (16/8/297), India (20/5/1043), Singapore (21/5/689), Finland (24/4/186), France (28/3/407), and Denmark (61/0/157). The figures in parentheses give each country/region’s overall WDR ranking / number of “Platinum Design Awards” / total WDR score [3] (see Table 4).

Table 4.

Top 28 countries/regions in WDR, 2022.

The DCR was supported by the European Union and was published through the World Economic Forum in 2002, 2005, and 2007. The ranking has seven indicators: the resources companies invest in R&D (“Company Spending on R&D”), companies’ positioning in the global market (“Nature of Competitive Advantage”), their position on the value chain (“Value Chain Breadth”), how they approach innovation (“Capacity for Innovation”), the sophistication of the technology used in product manufacturing (“Production Process Sophistication”), the diversity of marketing strategies (“Extent of Marketing”), and the quality of service to customers (“Degree of Customer Orientation”). Based on these indicators, representatives of each country evaluate their own country [32].

DCR clearly takes a more diverse and rigorous view than WDR. However, the most recent DCR available to this study is more than ten years old and may not reflect how the design field has changed since. Its evaluation criteria also appear oriented toward product design and do not adequately represent other design categories, especially forms of design that have emerged in the past decade (e.g., service design, digital design, and XR design). Moreover, the criteria place heavy emphasis on the attitudes and approaches that companies in each country take toward innovation and technology. These indicators seem more closely related to national economic competitiveness, and their relevance to national design level requires further examination. Countries widely recognized for very high design standards, such as Spain and Italy, may not rank as favorably under such criteria if they invest less in science and technology.

The top-ranked countries in WDR and DCR are shown in Table 5.

Table 5.

Design Countries Rankings.

Can WDR or DCR represent the design level of each country? The latest results of the two rankings differ considerably. WDR uses the number of design awards as its only evaluation index and therefore shares the common defect of registration systems: it is difficult to compare countries comprehensively when their motivations and conditions for participating differ. DCR, for its part, has the limitations described above and may not be suitable as a benchmark for verification either. In the absence of other rankings or academic discussion, this study chose to reconstruct the evaluation framework and invited design experts to evaluate countries using the new benchmark. By taking a more rigorous, diverse, and contemporary viewpoint, this study hopes to uncover new insights.

3. Research Design

This study explored two issues: (1) Can the number of design awards represent the design level of a country? (2) If design awards are not a suitable evaluation benchmark, is there a more appropriate one?

At present, WDR is known to use the number of design awards as the benchmark for ranking design level across countries. How, then, can one verify that its results are appropriate? Without other measurement frameworks for comparison, it is difficult to judge whether the method is reasonable. This study therefore focuses on how design professionals identify benchmarks for national design level and which key factors shape their perception. The new standard derived from these findings is then used to evaluate design levels globally, and the results are compared with the existing rankings to identify possible explanations for any differences.

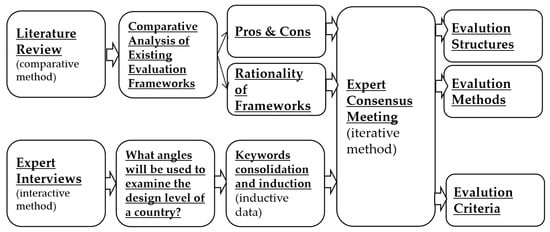

The research steps are divided into the following stages: (1) literature research and interview stage, (2) structure, methods, and criteria induction, (3) pre-test stage, (4) formal questionnaire stage, and (5) statistics and analysis stage (see Figure 1).

Figure 1.

Research process.

In general, when indicators or assessment criteria are designed, the scope of the target population and the ease of obtaining assessment data are taken into account. For global university rankings, for example, it is difficult to obtain data from universities worldwide or to verify its accuracy, so alternative evaluation criteria must be considered. QS chose university reputation as a measurement criterion, which has become a defining characteristic of its ranking [33].

The same applies to assessing the design level of each country. Some criteria would require objective data, such as the “employment status of designers” and “the operation status of design companies” in various countries. Given the difficulty and workload of collecting such data across many countries, this study settled for the next best option and used a qualitative “impression score” as the basis of evaluation.

3.1. Literature Review and Expert Interview

In the absence of a comparison mechanism, and in order to verify whether WDR’s evaluation framework is reasonable, this study draws on the spirit of “Grounded Theory” to construct a new framework for comparison: starting from the collected empirical data, a theory that stays close to the essence of the data is derived through systematic analysis. The research adopts four common methods of “Grounded Theory”: “inductive data”, “iterative method”, “interactive method”, and “comparative method” [34].

Starting from the literature review and expert interviews, the research seeks to identify the key factors that affect experts’ evaluations of each country’s design level. First, design evaluation mechanisms for other units (such as cities and schools) were reviewed to find elements worth drawing on when evaluating countries.

During the literature review, we compared and analyzed the existing design evaluation mechanisms to identify the advantages (pros) and disadvantages (cons) of each and to clarify the rationale behind their architectures. Expert consensus meetings then selected the evaluation frameworks whose results were more in line with expert perception. Next, the common characteristics of these frameworks were identified, and an evaluation framework and method suitable for this study were constructed.

Interviews with relevant experts are very helpful for establishing an evaluation framework that matches the cognition of design experts. The interviews used open-ended questions to find out from which angle(s) a design expert approaches the evaluation of a country’s design level, and which key factors influence the expert’s judgment. Example interview topics include: (1) How do you evaluate the design level of a country? (2) When someone says a country’s design level is high or low, what do you think is the key factor that makes the difference? (3) Countries are often evaluated and ranked by economic strength, military strength, and so on. If there were a national “design strength” evaluation, how should it be carried out?

3.2. Structure, Methods, and Criteria Induction

During the literature review, it was found that all design rankings that use a registration system (e.g., RDR, WDC, UCD, WDR) share the drawback that unregistered units cannot be evaluated. The expert consensus meeting concluded that such rankings cannot comprehensively examine the design levels of schools, cities, or countries worldwide and are therefore unsuitable as a model for this study.

QS/T10 uses a variety of evaluation criteria and involves a number of experts, and its results are more in line with experts’ perceptions. However, we also found that QS/T10 cannot include every evaluated unit (school or city) in its ranking. QS/T10 asks experts to list a certain number of the best design schools and cities that meet the evaluation criteria and then ranks them by cumulative nominations. This method is very effective at identifying the top design schools and cities that most experts agree on, but it makes the middle and fall-behind groups hard to rank. Filling this gap may require other objective data, and because acquiring objective data globally demands considerable manpower, time, and money, that may have to wait until future research conditions permit. This study therefore adopts the QS/T10 rating framework with a minor modification: instead of simply listing the best units they have in mind, experts rate every country against the assessment criteria. In this way, units in the middle and fall-behind groups can be evaluated as well.
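The contrast between the QS/T10 list-based tally and this study’s modification can be sketched in Python. In the tally, each expert names a few top units and units are ranked by how often they are named; in the modified version, every expert rates every unit, so each unit receives a mean score. The experts, units, and scores below are hypothetical, and a simple count and a simple mean are assumed as the aggregation rules; neither is claimed to be the exact QS/T10 formula.

```python
from collections import Counter
from statistics import mean

# Hypothetical nominations: each expert lists the top units they have in mind.
nominations = {
    "expert_1": ["P", "Q"],
    "expert_2": ["P", "R"],
    "expert_3": ["Q", "P"],
}

# Hypothetical ratings: each expert scores EVERY unit on a 7-point scale.
ratings = {
    "expert_1": {"P": 7, "Q": 6, "R": 4, "S": 2},
    "expert_2": {"P": 6, "Q": 5, "R": 5, "S": 3},
    "expert_3": {"P": 7, "Q": 6, "R": 3, "S": 2},
}


def tally_ranking(nominations):
    """List-based cumulative ranking: units never named receive no score at all."""
    counts = Counter(unit for picks in nominations.values() for unit in picks)
    return counts.most_common()


def rating_ranking(ratings):
    """Rating-based ranking: every unit gets a mean score, including weaker ones."""
    units = {u for scores in ratings.values() for u in scores}
    means = {u: mean(scores[u] for scores in ratings.values()) for u in units}
    return sorted(means.items(), key=lambda item: item[1], reverse=True)


print(tally_ranking(nominations))
print(rating_ranking(ratings))
```

Unit S never appears in the tally, so it cannot be placed at all, whereas the rating-based version still positions it at the bottom; this is the gap in the middle and fall-behind groups that the modification is meant to close.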

The interviewees were mainly design experts with a good understanding of the international design landscape. Key points from the interviews were excerpted and then reviewed, screened, refined, and summarized in expert consensus meetings, from which the criteria for later evaluations were derived. The expert consensus meeting also determined which countries and design fields would be assessed.

The steps used to establish the evaluation framework are shown in Figure 2.

Figure 2.

Steps to establish an evaluation framework, based on the four common methods of “Grounded Theory”: “inductive data”, “iterative method”, “interactive method”, and “comparative method”.

The interviews showed that the perspectives design experts take when assessing a country’s design level fall roughly into five indicators: “International Activity Index”, “International Learning Index”, “Top Design Index”, “Daily Design Index”, and “Cross-border Business Index”. Each indicator can be further divided into a “People” and a “Things” category, yielding a total of 10 evaluation criteria (see Table 6).

Table 6.

Criteria derived from the first stage induction.

3.3. Pre-Test

With reference to the WDR, five countries/regions were selected for evaluation through an expert consensus meeting: Taiwan (7/17/6052), Turkey (8/15/1917), India (20/5/1043), France (28/3/407), and Denmark (61/0/157). The figures in parentheses give each country/region’s overall WDR ranking for the year / number of “Platinum Design Awards” won / total WDR score.

These countries were selected on two conditions: (1) the selection must include countries/regions that experts recognize as leading, mid-level, and fall-behind in design; and (2) countries/regions whose WDR ranking diverges from the design level perceived by experts are preferred.

Based on the summarized assessment criteria, a questionnaire was designed and distributed. Then, after preliminary statistical analysis of the results, the evaluation criteria were further re-examined. Factors with low reliability/validity or overlapping effects were removed. Afterwards, the next stage of questionnaire and analysis was carried out using the restructured evaluation criteria.

3.4. Formal Questionnaire

Based on the questionnaire results and statistics from the pre-test stage, together with respondents’ feedback, this study further consolidated the benchmarks into three major indicators and six evaluation criteria, as shown in Table 7.

Table 7.

Three major indicators and six evaluation criteria used to evaluate the national design level.

The questionnaire has three parts: basic information, an introduction to the questionnaire, and the evaluation content. The basic information section collects respondents’ profiles, such as gender, age, education level, and design professional qualifications. In the evaluation section, respondents rate each country on the six “Criteria” and also give each country a comprehensive design evaluation, the “Overall Impression”, which is used for comparison and reference. All items use a 7-point Likert scale. The questionnaire results are also compared with and analyzed against the WDR.
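One way to hold and check the evaluation data is sketched below in Python: each response records one respondent’s 7-point scores for one country on “Overall Impression” (f0) and the six criteria (f1 to f6, the labels used in Table 8 and Section 4.4), and per-country means are computed across respondents. The class and function names, and the choice to reject incomplete or out-of-range responses, are assumptions for illustration rather than the study’s actual procedure.

```python
from dataclasses import dataclass
from statistics import mean

# Item labels as used in Table 8: f0 is the overall impression, f1-f6 the criteria.
ITEMS = {
    "f0": "Overall Impression",
    "f1": "International Activity",
    "f2": "Designers Level",
    "f3": "Future Trends",
    "f4": "Historical Impact",
    "f5": "Life Style Taste",
    "f6": "Environment Standard",
}
SCALE = range(1, 8)  # 7-point Likert scale: 1 (lowest) to 7 (highest)


@dataclass
class Rating:
    respondent: str
    country: str
    scores: dict  # item code -> Likert score

    def __post_init__(self):
        # Reject responses that skip an item, add an unknown one, or leave the scale.
        missing = ITEMS.keys() - self.scores.keys()
        extra = self.scores.keys() - ITEMS.keys()
        if missing or extra:
            raise ValueError(f"missing {sorted(missing)}, unknown {sorted(extra)}")
        for item, value in self.scores.items():
            if value not in SCALE:
                raise ValueError(f"{item}={value} is outside the 7-point scale")


def country_means(ratings):
    """Mean score per (country, item) across all respondents."""
    grouped = {}
    for r in ratings:
        for item, value in r.scores.items():
            grouped.setdefault((r.country, item), []).append(value)
    return {key: mean(values) for key, values in grouped.items()}
```

Keeping “Overall Impression” as a separate item (f0) alongside the criteria makes it straightforward to compare the intuitive overall score with the criterion scores later, as the analysis in Section 4 does.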

Because these topics require a considerable understanding of design in various countries, the respondents are mainly design experts who have observed the international design scene over the long term or have substantial experience in international design activities.

After discussion at the expert consensus meeting, nine countries/regions were selected for evaluation: South Korea (17/7/972), the USA (2/74/4690), India (20/5/1043), Denmark (61/0/157), Taiwan (7/17/6052), France (28/3/407), Japan (3/48/2574), South Africa (32/3/118), and Turkey (8/15/1917). The figures in parentheses give each country/region’s overall WDR ranking for the year / number of “Platinum Design Awards” won / total WDR score.

To select the countries/regions for this stage, the expert consensus meeting first consulted the WDR list and chose two to four countries that the experts considered to be in each of the leading, middle, and fall-behind tiers of international design. In addition to countries such as Japan and the United States, whose WDR rankings differ little from expert cognition, countries whose WDR rankings differ greatly from expert cognition (such as Denmark, France, and India) were also selected for comparison. The follow-up questionnaires and statistical analysis are intended to reveal the correlation between the evaluation criteria and national rankings.

3.5. Statistics and Analysis

To compare design strength across countries/regions and to identify the correlation between each criterion and the overall evaluation, this study interprets the data using multidimensional scaling (MDS), ANOVA, and regression analysis.
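The study does not state which MDS algorithm or software it used. As a simpler, swapped-in illustration of the core idea (recovering a low-dimensional map whose inter-point distances approximate observed dissimilarities), the sketch below implements classical (Torgerson) MDS in plain Python: it double-centers the squared distance matrix and extracts the top two eigenvectors by power iteration with deflation. The function names and the toy input are hypothetical.

```python
import math


def double_center(d):
    """B = -1/2 * J D^2 J, where J is the centering matrix."""
    n = len(d)
    sq = [[d[i][j] ** 2 for j in range(n)] for i in range(n)]
    row = [sum(r) / n for r in sq]
    col = [sum(sq[i][j] for i in range(n)) / n for j in range(n)]
    total = sum(row) / n
    return [[-0.5 * (sq[i][j] - row[i] - col[j] + total) for j in range(n)]
            for i in range(n)]


def top_eigen(b, iters=2000):
    """Eigenpair of largest magnitude of symmetric matrix b, by power iteration.

    For Euclidean distances B is positive semidefinite, so this is also the
    largest eigenvalue.
    """
    n = len(b)
    v = [float(i + 1) for i in range(n)]  # deterministic start vector
    lam = 0.0
    for _ in range(iters):
        w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0, [0.0] * n
        v = [x / norm for x in w]
        # Rayleigh quotient v^T B v gives the eigenvalue estimate.
        lam = sum(v[i] * sum(b[i][j] * v[j] for j in range(n)) for i in range(n))
    return lam, v


def classical_mds(d, dims=2):
    """Return n points in `dims` dimensions whose distances approximate d."""
    b = double_center(d)
    n = len(b)
    coords = [[0.0] * dims for _ in range(n)]
    for k in range(dims):
        lam, v = top_eigen(b)
        scale = math.sqrt(max(lam, 0.0))
        for i in range(n):
            coords[i][k] = v[i] * scale
        # Deflate: remove this component before finding the next one.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords
```

Given distances between four corners of a 3 by 4 rectangle, the returned coordinates reproduce those distances up to rotation and reflection. Iterative variants that report Stress, like the configuration summarized in Table 9, fit the map differently, so this sketch should be read as an analogue rather than a reproduction of the study’s analysis.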

The questionnaire was carried out in two stages, collecting 76 questionnaires in the first stage and 106 in the second. In the second stage, male and female respondents were nearly equal in number (50.9% and 49.1%); most respondents were aged 40–60 (58.5%) or 20–40 (30.2%); the highest educational attainment was mainly graduate school (68.9%) or university (25.5%); and in terms of design professional experience, senior professionals made up the majority (64.2%), followed by those with three to six years of experience (12.3%).

4. Findings and Discussions

4.1. Descriptive Statistics

The questions are divided into two parts. The first part evaluates the overall design level (“Overall Impression”) of nine countries/regions; in the second, respondents rate the design status of each country/region on the six criteria summarized in this study. The mean “Overall Impression” scores, in descending order, are: Japan (6.27), France (6.13), Denmark (6.12), USA (6.04), South Korea (5.25), Taiwan (4.80), Turkey (4.05), South Africa (3.96), and India (3.80).

Scores on the six criteria vary across countries/regions but generally echo the “Overall Impression” scores, with differences on individual criteria. Countries with high “Overall Impression” scores generally perform well on the individual criteria as well, but the country with the highest “Overall Impression” score does not necessarily score highest on every criterion. Even within the top-scoring group, countries’ strengths differ from criterion to criterion, and the same holds for the middle and fall-behind countries (see Table 8).

Table 8.

The scores and statistical pressure coefficients obtained by each country/region in the overall (f0—“Overall Impression”) and individual (f1~f6) criteria.

4.2. MDS (Multidimensional Scaling) Analysis

The descriptive statistics suggest a degree of correlation between the “Overall Impression” scores that design experts gave each country/region and the individual criterion scores. But how should this correlation be interpreted? What are the strengths and weaknesses of each country? How does each criterion contribute to differences in the overall score distribution? These questions are difficult to answer from descriptive statistics alone, so this study adopts MDS to analyze the questionnaire results further.

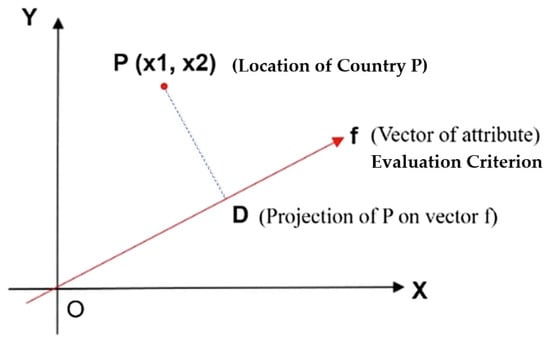

Through MDS analysis, each measured unit is placed at a position in space. Together with the axes formed by the evaluation criteria, these positions make it possible to delineate subgroups among the units and identify their similarities and differences.

Another important piece of information in an MDS plot is the OD distance. On the coordinate plane, each country’s point has an orthogonal projection D onto each criterion vector. The distance from the origin O to the projection point D indicates how strongly that criterion characterizes the country (see Figure 3).

Figure 3.

OD distance on MDS plot.
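The OD distance is the scalar projection of a country’s point p onto a criterion vector v, that is, OD = (p · v) / |v|. A minimal Python sketch, assuming the MDS coordinates of the country and the criterion vector are already known; the function names are hypothetical. The sign is an illustrative addition: a negative value means D falls on the opposite side of O from the direction the criterion vector points.

```python
import math


def od_distance(point, criterion):
    """Signed length OD of the orthogonal projection of `point` onto the
    direction of `criterion` (a vector starting at the origin O)."""
    norm = math.hypot(*criterion)
    if norm == 0:
        raise ValueError("criterion vector must be non-zero")
    return sum(p * c for p, c in zip(point, criterion)) / norm


def projection_point(point, criterion):
    """Coordinates of the projection point D itself."""
    scale = sum(p * c for p, c in zip(point, criterion)) / sum(c * c for c in criterion)
    return tuple(scale * c for c in criterion)
```

For example, a country at (3, 4) has an OD distance of 3.0 on a criterion vector pointing along (1, 0) and 4.0 on one pointing along (0, 2); only the direction of the criterion vector matters, not its length.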

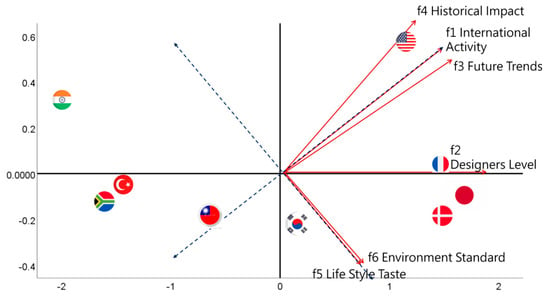

We also found that two criteria, “f1-International Activity” and “f5-Life Style Taste”, are roughly 90 degrees apart in space. Taking these two as new axes forms a new coordinate system with four quadrants (see Figure 4, Table 9).

Figure 4.

MDS chart.
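The re-axing step described above can be sketched in Python: check that the two criterion vectors are close to perpendicular, then express each country’s position as its scalar projections onto them and read off the quadrant. Which criterion serves as the horizontal axis and which direction counts as positive in Figure 4 are not stated, so both are left as parameters; the function names and the convention of assigning points exactly on an axis to the lower-numbered quadrant are assumptions.

```python
import math


def angle_deg(u, v):
    """Angle between two 2-D criterion vectors, in degrees."""
    dot = u[0] * v[0] + u[1] * v[1]
    cos = dot / (math.hypot(*u) * math.hypot(*v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def new_quadrant(point, x_axis, y_axis):
    """Quadrant (1-4, counterclockwise from +x/+y) of `point` when the two
    criterion vectors serve as new axes.

    Coordinates are scalar projections onto each axis, which is only a faithful
    change of coordinates when the two vectors are close to perpendicular.
    """
    x = (point[0] * x_axis[0] + point[1] * x_axis[1]) / math.hypot(*x_axis)
    y = (point[0] * y_axis[0] + point[1] * y_axis[1]) / math.hypot(*y_axis)
    if x >= 0 and y >= 0:
        return 1
    if x < 0 and y >= 0:
        return 2
    if x < 0 and y < 0:
        return 3
    return 4
```

Checking the angle first is a deliberate design choice: if the two vectors were far from perpendicular, projections onto them would overlap in meaning and the quadrants would no longer separate countries cleanly.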

Table 9.

Stress and squared correlation (RSQ) in distances, and stimulus coordinates of the configuration derived in two dimensions (Stress = 0.01158, RSQ = 0.99976).
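Table 9 reports Stress = 0.01158 and RSQ = 0.99976. In SPSS’s ALSCAL procedure, whose output headings this caption resembles, Stress is reported as Kruskal’s Stress formula 1 and RSQ as the squared correlation between the disparities and the configuration distances. The study does not state its formulas, so reading the values this way is an assumption. The sketch below computes both from paired lists of disparities and fitted distances; the function names are hypothetical and the inputs would come from the MDS fit, which is not reproduced here.

```python
import math


def kruskal_stress1(disparities, distances):
    """Kruskal's Stress formula 1: sqrt(sum((d - dhat)^2) / sum(d^2))."""
    num = sum((d - dh) ** 2 for dh, d in zip(disparities, distances))
    den = sum(d ** 2 for d in distances)
    return math.sqrt(num / den)


def rsq(disparities, distances):
    """Squared Pearson correlation between disparities and distances."""
    n = len(distances)
    mx = sum(disparities) / n
    my = sum(distances) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(disparities, distances))
    sxx = sum((a - mx) ** 2 for a in disparities)
    syy = sum((b - my) ** 2 for b in distances)
    return sxy ** 2 / (sxx * syy)
```

Under Kruskal’s commonly cited guidelines, Stress values below about 0.025 indicate an excellent fit, so a value around 0.012 together with an RSQ close to 1 suggests the two-dimensional configuration represents the data well.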

Under this new coordinate system, the positions of the nine countries/regions fall roughly into the following groups:

- Leading Countries

France, Japan, and Denmark, all of which fall into the new 2nd quadrant, are leading design countries that score high on all attributes. The United States falls into the new 1st quadrant because of its mediocre performance on “Environment Standard” and “Life Style Taste”, but its scores on the other criteria are impressively high, so it remains a design leader. All of these countries have high “Overall Impression” scores (from 6.04 for the USA to 6.27 for Japan), which supports their classification as the leading design countries.

Among the design-leading groups, France, Japan, and Denmark are quite close in the MDS chart, and they all scored high in each criterion.

Japan: Except for “Historical Impact”, where it is slightly behind the United States and France, Japan ranked 1st or 2nd on all criteria, making it the most well-rounded leading design country in this study. Its position in the MDS chart also echoes its highest “Overall Impression” score.

France and Denmark: The two countries are very close to Japan in the MDS chart. Their projections onto each criterion axis fall at quite high levels, which is consistent with the statistical data. Denmark has many world-class design masters and classic, timeless design works. High-quality design can also be seen almost everywhere in Denmark, where “Good Design” is integrated into the daily life and environment of ordinary citizens. These are all reasons why Denmark is a design leader. France has many influential international design celebrities, creative and surprising design works, high-profile emerging designers, the ability to lead future design trends, and a populace that cares about taste in daily life. These are the key strengths that keep French design at the forefront. Respondents rated Denmark and France highly across the board, and their “Overall Impression” scores trail Japan’s by only a slight margin, confirming that experts regard both countries as design powerhouses.

USA: The USA is the only country in the leading group that falls in the new 1st quadrant (though on the edge of the 2nd quadrant). American designers are often invited by other countries to carry out design projects, give speeches, teach, and take part in other activities. Many American designs have also played a pivotal role in design history and become “Iconic Designs”. In addition, futuristic design innovations often originate in the USA. This is reflected in the MDS chart, where the USA occupies the highest position on the “International Activity”, “Historical Impact”, and “Future Trends” axes. The descriptive statistics likewise show that the USA has the highest score among the nine countries/regions on these criteria.

How can the USA fall into the new 1st quadrant when it ranks 1st on multiple criteria? As the MDS chart shows, the USA’s positions on the “Life Style Taste” and “Environment Standard” axes fall to the left (relative to the origin). Its statistical scores on these criteria, 4.99 and 5.31 respectively, are also unremarkable. One possible reason is that, compared with other leading design countries, the United States is perceived as less detailed and sophisticated, whether in the everyday goods Americans choose or in the public spaces they live in. In addition, US consumers seem to value price and durability over quality, which may also contribute to the mediocre performance on these criteria.

Overall, the composition of the leading design group is highly consistent with experts’ perceptions of global design, and the criterion scores and MDS distribution reasonably explain each country’s design status. The gaps between these countries are very small, so their final order can be said to be decided by fine details.

- Mid-level

South Korea and Taiwan, with overall impression scores of 5.25 and 4.80 respectively, both fall roughly inside or near the new 4th quadrant and are the mid-level design countries/regions.

South Korea and Taiwan are relatively close to each other on the MDS chart: South Korea lies in the new 4th quadrant, and Taiwan in the new 3rd quadrant, next to the 4th. Both also obtain mid-level scores of about 4 to 5 points on each criterion, so they are classified here as “Mid-level”.

The MDS chart shows that South Korea and Taiwan lag behind the leading group on the four criteria axes of “International Activity”, “Designers Level”, “Future Trends”, and “Historical Impact”. South Korea outperforms Taiwan, especially on “Life Style Taste” and “Environment Standard”, where it is not inferior to the USA. The “Mid-level” classification of South Korea and Taiwan based on the six criteria echoes their “Overall Impression” scores.

The difference in evaluation between South Korea and Taiwan may be roughly attributed to the following: (a) South Korea began modernizing earlier; (b) international brands such as LG and Samsung, together with the global influence of K-pop, drove the vigorous development of related industries; and (c) Seoul took the lead in making design the focus of urban development and in raising the design level of its public construction.

In recent years, Taiwan has vigorously promoted the value of design through the design organization established by the government and has also received a lot of international design awards. However, most of the design activities, exposure, and projects are still confined to the island. International participation is limited to exhibitions, events, international conferences, several specific design awards, etc. Perhaps pushing designers to the international stage will be the key to bringing Taiwan closer to a design-leading country.

- Fall-behind

Turkey, South Africa, and India, with overall impression scores of 4.05, 3.96, and 3.80 respectively, all fall inside the new 3rd quadrant and form the fall-behind group. Some survey participants noted that they were not familiar with the design status of these countries.

All three countries in this group lie in the new 3rd quadrant of the MDS chart; Turkey and South Africa are very close to each other, while India is the furthest from all other countries. In the descriptive statistics, Turkey and South Africa score between 3.30 and 3.92 on each criterion. Achieving a breakthrough on one or two criteria may be the key to whether Turkey and South Africa can advance in the rankings.

India differs most from the other two countries in this group on “Life Style Taste” and “Environment Standard”, on which it obtained the lowest scores of all nine countries/regions. These scores may correlate to a considerable extent with India’s weaker overall economic conditions, quality of life, environmental conditions, and so on. How might India use design to transform its international image? Improving the overall economy, or upgrading the design of affordable goods, may be better suited to the country’s current conditions.

The design rankings of the above countries/regions are derived from the MDS spatial distribution of each criterion (f1~f6). The results echo the corresponding overall evaluation values (f0). The leading countries score from the USA (6.04) to Japan (6.27); mid-level includes South Korea (5.25) and Taiwan (4.80); and fall-behind ranges from India (3.80) to Turkey (4.05) (see Table 10).

Table 10.

Analysis and comparison of 3 items: “Overall Impression” evaluation, descriptive statistical analysis, MDS.

4.3. Design Awards Ranking vs Design Ranking as Perceived by Experts

This study found that when evaluating the design level of a country, whether it is the overall impression of experts’ intuition (“Overall Impression”) or the results of questionnaire analysis measured by six criteria, all point to the same conclusion: The leading groups are Japan, France, Denmark, and the USA; the mid-level groups are South Korea and Taiwan; and the fall-behind groups are Turkey, South Africa, and India.

In contrast, rankings that use design awards as the only factor (such as WDR, iF Ranking, and Reddot Design Ranking) show a considerable gap between their results and design experts’ perceptions of the current status.

Taking WDR as an example, among the 114 countries/regions on its global list, the United States and Japan still occupy leading positions (2nd and 3rd, respectively). However, Taiwan, in the mid-level group, and Turkey, in the fall-behind group, are both in the WDR Top 10 (7th and 8th, respectively). More surprisingly, India, in the fall-behind group, ranks much higher on WDR (20th) than the design powerhouses France and Denmark (28th and 61st, respectively). When the number of design awards is the only criterion for a global design ranking, the results clearly diverge from the cognition of design experts (see Table 11).

Table 11.

The overall impressions of experts on the design levels of different countries, in comparison with WDR and DCR Ranking.
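One way to put a single number on this gap is Spearman’s rank correlation between the experts’ “Overall Impression” order and the WDR order for the nine countries. The Python sketch below uses the scores and WDR positions reported in this paper; the calculation itself is an illustrative addition, not part of the study’s reported analysis, and the function names are hypothetical. It uses the no-ties formula, which is valid here because neither list contains ties.

```python
# Overall Impression means and 2022 WDR overall positions, as reported in the text.
overall_impression = {
    "Japan": 6.27, "France": 6.13, "Denmark": 6.12, "USA": 6.04,
    "South Korea": 5.25, "Taiwan": 4.80, "Turkey": 4.05,
    "South Africa": 3.96, "India": 3.80,
}
wdr_position = {
    "Japan": 3, "France": 28, "Denmark": 61, "USA": 2,
    "South Korea": 17, "Taiwan": 7, "Turkey": 8,
    "South Africa": 32, "India": 20,
}


def ranks(values, best_is_high):
    """Rank 1 = best. Assumes no ties, which holds for both lists above."""
    ordered = sorted(values, key=values.get, reverse=best_is_high)
    return {name: i + 1 for i, name in enumerate(ordered)}


def spearman_rho(a, b):
    """Spearman's rho for untied ranks: 1 - 6 * sum(d^2) / (n (n^2 - 1))."""
    n = len(a)
    d2 = sum((a[k] - b[k]) ** 2 for k in a)
    return 1 - 6 * d2 / (n * (n * n - 1))


expert = ranks(overall_impression, best_is_high=True)
wdr = ranks(wdr_position, best_is_high=False)
rho = spearman_rho(expert, wdr)
print(f"Spearman rho = {rho:.3f}")
```

With these inputs the sketch prints a rho of about 0.18, far from the value of 1 that perfect agreement would give. Most of the disagreement comes from France and Denmark, which experts place 2nd and 3rd among the nine but WDR places 7th and 9th.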

The reason for such a gap may lie in applicants’ motivation for entering design awards. For well-known awards such as iF, Reddot, G-Mark, and A’, applicants must fill in many forms and documents and then pay a registration fee. Participants must therefore be motivated enough to spend considerable time, effort, and money. Typically, they hope to use the awards to prove their design abilities or as a means of marketing, publicity, and obtaining subsidies.

Designers who have already established a considerable reputation, and brands and manufacturers that do not need awards to prove their design quality, have less motivation to apply. These “Registration System” design awards therefore mainly attract those who need awards to establish their reputation or achieve certain publicity effects.

At the national level, the countries or regions most eager to gain international recognition and break through the status quo are the most active participants (such as China and Taiwan), while some European countries already established as global leaders are much less active. At present, Asian countries (especially Chinese-dominated regions) are the main participants in design awards, whereas European countries are relatively indifferent. It is therefore not surprising that these design powerhouses do not stand out in such awards.

Design awards appeal to those who hope to gain recognition through them and to serve commercial and marketing purposes, so there is an interdependent “supply-demand” relationship between design awards and applicants. Admittedly, design awards remain an important driving force for improving design. However, winning an award only represents an affirmation of the design; it does not mean that award-winning designs are better than those that were not awarded. If this premise is ignored and the number of awards is used as the benchmark for comparing design capabilities, the practice strays far from the true purpose and value of design awards and falls into the myth of chasing awards.

Comparing the results of this study with the DCR yields interesting findings. Although the DCR dates from more than a decade ago and uses different criteria, it produces surprisingly similar results. For the sample of nine countries in this study, the ranking order is Japan, France, Denmark, the United States, South Korea, Taiwan, Turkey, South Africa, and India, and the ranking of DCR is Japan, Denmark, the United States, France, Taiwan, India, and South Africa. Turkey does not appear in the 2007 DCR ranking and therefore cannot be compared. The only two pairs with slight differences in ranking are South Korea/France and India/South Africa. If the rankings are divided into leading, middle, and fall-behind groups, only South Korea is placed differently.

Given the time difference of more than ten years and the differences in evaluation criteria and methods, the similarity between the two rankings is worth exploring. This study puts forward the following bold inferences: (1) although many new design fields have emerged in the past decade, the global design ranking seems not to have changed much; (2) the indicators adopted by DCR are mostly related to industrial and technological innovation, and its evaluators assess their own countries, which may account for the slight differences in ranking; and (3) to some extent, the newly constructed evaluation framework of this study is verified and supported by the DCR.

4.4. The Influence of Each Criterion on the Overall Evaluation

In addition to descriptive statistics and MDS analysis, this study used ANOVA and regression analysis to identify associations between the six criteria and the overall design evaluation. The relevant findings are as follows:

Overall design evaluation was significantly and positively associated with Criterion 1, “International Activity”, in eight of nine countries (South Korea: p < 0.01, USA: p < 0.001, Denmark: p < 0.05, Taiwan: p < 0.001, France: p < 0.001, Japan: p < 0.01, South Africa: p < 0.001, Turkey: p < 0.01), followed by Criterion 2, “Designers Level”, which had a significant positive association in seven countries (South Korea: p < 0.01, USA: p < 0.001, Taiwan: p < 0.01, France: p < 0.01, Japan: p < 0.01, South Africa: p < 0.05, Turkey: p < 0.01). Criterion 3, “Future Trends” (South Korea: p < 0.05, France: p < 0.05, Japan: p < 0.01), Criterion 4, “Historical Impact” (Taiwan: p < 0.05, France: p < 0.05, Turkey: p < 0.05), and Criterion 6, “Environment Standards” (Denmark: p < 0.001), each had a significant positive effect on the overall design evaluation of one to three countries/regions. Criterion 5, “Lifestyle Taste”, did not significantly affect the overall design evaluation of any country/region in this study.
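The significance pattern reported above can be tabulated to check the per-criterion counts and to look up which criteria mattered for a given country. The sketch below encodes only the p-value thresholds stated in the text (regression coefficients and effect sizes are not reported here, so they are not included). The names `SIGNIFICANCE`, `countries_affected`, and `criteria_for` are illustrative, and ordering criteria by their p-value threshold is merely a reading convenience, not a measure of how strongly each criterion influences the evaluation.

```python
# Significance thresholds (p < value) for each criterion, as listed in Section 4.4.
# A country absent from a criterion's entry showed no significant association.
SIGNIFICANCE: dict[str, dict[str, float]] = {
    "International Activity": {
        "South Korea": 0.01, "USA": 0.001, "Denmark": 0.05, "Taiwan": 0.001,
        "France": 0.001, "Japan": 0.01, "South Africa": 0.001, "Turkey": 0.01,
    },
    "Designers Level": {
        "South Korea": 0.01, "USA": 0.001, "Taiwan": 0.01, "France": 0.01,
        "Japan": 0.01, "South Africa": 0.05, "Turkey": 0.01,
    },
    "Future Trends": {"South Korea": 0.05, "France": 0.05, "Japan": 0.01},
    "Historical Impact": {"Taiwan": 0.05, "France": 0.05, "Turkey": 0.05},
    "Lifestyle Taste": {},
    "Environment Standards": {"Denmark": 0.001},
}


def countries_affected() -> dict[str, int]:
    """Number of countries in which each criterion was significant."""
    return {criterion: len(by_country) for criterion, by_country in SIGNIFICANCE.items()}


def criteria_for(country: str) -> list[str]:
    """Criteria significant for one country, smallest p-value threshold first.

    Ties are broken alphabetically. A smaller threshold does not imply a larger
    effect; the ordering only makes the table easier to read.
    """
    hits = [
        (by_country[country], criterion)
        for criterion, by_country in SIGNIFICANCE.items()
        if country in by_country
    ]
    return [criterion for _, criterion in sorted(hits)]


if __name__ == "__main__":
    print(countries_affected())
    print(criteria_for("Denmark"))
```

Running it reproduces the counts given in the text (8, 7, 3, 3, 0, and 1 countries for Criteria 1 to 6) and shows that India does not appear under any criterion in the reported results.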

These results suggest that a country’s overall design evaluation is strongly influenced by international activity and designers’ level. Because of differences in background, culture, economy, and other factors, the criteria affect each country’s evaluation differently. For example, Denmark was the only country whose overall rating was significantly affected by “Environment Standards” (p < 0.001). Respondents had a very good impression of Danish design standards in architecture, transportation, public construction, and so on, which raised Denmark’s overall evaluation.

“Future Trends” has a significant impact on the overall evaluation of Japan, South Korea, and France (Japan: p < 0.01, South Korea: p < 0.05, France: p < 0.05). Japan and South Korea lead in future-oriented fields such as high-tech and interactive design, and French design has an avant-garde, experimental style. These are all key factors contributing to the high overall evaluations of these countries.

4.5. The Influence of Subjectivity and Objectivity on Evaluation

The authoritative QS ranking of global design schools, the T10 design ranking of global cities, and the design ranking among countries in this study all rely heavily on the experts’ impressions of the evaluated objects.

Because of limited manpower, funding, and other resources, it was quite difficult to obtain objective information and data on the countries concerned, so the questionnaires had to be administered without providing background information. As partial compensation, response time was not limited, and respondents were free to consult reference materials. Interestingly, respondents still relied mostly on their own impressions of the countries when responding.

How do international organizations with relatively ample resources handle similar issues? QS uses five criteria in total. Among them, “Academic Reputation” is based on respondents’ lists of domestic and foreign schools they consider excellent in the field (excluding their own), and “Employer Reputation” is based on lists of domestic and foreign schools that respondents (employers) consider excellent or prefer when hiring. This list is not broken down by faculty area [17].

T10 uses a similar method: after the evaluation criteria are formulated, nearly a hundred design experts are asked to nominate the cities they consider most suitable, and the ranking is then calculated.

The above assessment methods contain a considerable subjective component. Could these subjective impressions lead to biased or misinterpreted results? Subjective impressions rest on respondents’ observation, learning, contact, and interaction with the evaluated object over many years, and are shaped by factors such as education, culture, life, and work experience. Judgments built on this accumulated experience can therefore also be said to be grounded in some objective content.

Subjective judgments, especially those of experts, have long been an important basis for design rankings. However, such judgments are affected by the evaluator’s familiarity with the subject being assessed. This approach is therefore effective for ranking the leading group, but for mid-level or fall-behind groups, its power to discriminate may decline because evaluators are less familiar with them.

Because design quality is difficult to quantify, its evaluation still relies on the subjective judgment of experts. Design evaluations based on subjective impressions may be imperfect, but they remain necessary until a better method emerges. To avoid bias introduced by a few individuals, enough experts should take part in the evaluation and reach sufficient consensus to make the results more reliable and authoritative.
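One common way to quantify the “sufficient consensus” mentioned above is Kendall’s coefficient of concordance W, which ranges from 0 (no agreement) to 1 (complete agreement) among raters who rank the same set of items. This study does not state that it used W; the sketch below is only an illustration of how agreement among experts could be checked, and it assumes that each expert ranks every country with no ties. The rankings in the example are hypothetical.

```python
def kendalls_w(rankings: list[list[int]]) -> float:
    """Kendall's W for m raters who each rank the same n items (no ties).

    rankings[r][i] is the rank (1 = best) that rater r gives to item i.
    W = 12 * S / (m^2 * (n^3 - n)), where S is the sum of squared deviations
    of each item's rank total from the expected total m * (n + 1) / 2.
    """
    m = len(rankings)
    if m < 2:
        raise ValueError("need at least two raters")
    n = len(rankings[0])
    if n < 2:
        raise ValueError("need at least two items")
    for ranks in rankings:
        # Each rater must use every rank 1..n exactly once (no ties, no gaps).
        if sorted(ranks) != list(range(1, n + 1)):
            raise ValueError("each rater must rank items 1..n without ties")

    # Total rank received by each item across all raters.
    totals = [sum(ranks[i] for ranks in rankings) for i in range(n)]
    expected_total = m * (n + 1) / 2
    s = sum((t - expected_total) ** 2 for t in totals)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Hypothetical example: three experts each rank four countries A, B, C, D.
experts = [
    [1, 2, 3, 4],
    [1, 3, 2, 4],
    [2, 1, 3, 4],
]
print(round(kendalls_w(experts), 3))
```

A value close to 1 indicates strong agreement; what counts as “sufficient” remains a judgment call that would need to be fixed before the survey rather than after seeing the results.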

4.6. The Impact of Outstanding Performance in a Few Fields on the Overall Evaluation

The evaluation framework adopted in this study is modeled mainly on the QS and T10 mechanisms, and evaluation is based on a country’s overall design status, covering its various design fields (e.g., visual, product, interior, architecture, digital, and fashion).

Respondents may be familiar with one or more specific design field(s) of a country, but not others. In this case, they may rate based on their impressions of the familiar domain(s), a situation that is prone to bias.

Existing design evaluation mechanisms (such as WDR, WDC, T10, and QS) are often used as references for evaluating the design level of countries, cities, schools, and so on, and several of them are regarded as authorities in the field. For example, QS has long been the most important, and sometimes the only, reference for many design students choosing a school. However, these mechanisms evaluate overall design level and are not subdivided by design field, so the bias described here is unavoidable: outstanding performance in a few fields can produce an advantage in the overall evaluation.

The New School (Parsons School of Design, hereinafter Parsons) has ranked among the top three in the QS world rankings for many years. Although Parsons’ design departments are generally well regarded, only a few of them, such as fashion, are especially prominent and internationally renowned. National Cheng Kung University focuses mainly on science and engineering, but owing to the outstanding performance of a few design departments, such as architecture and industrial design, it was listed in the QS Global Top 100. The case of National Taiwan University is even more unusual: without a substantive design department, and merely by offering courses such as Design Thinking in the manner of the Stanford D-School, it has also entered the QS Top 100 [17].

A similar situation arose in this research. Some respondents said they gave Denmark relatively high marks mainly because they were impressed by classic Danish furniture design, yet these respondents were less familiar with the country’s other design fields.

Without subdividing by design field, such results are unavoidable. Reducing this bias requires evaluating each field separately and then aggregating the results, although the time, manpower, and other resources required would be considerable.
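The field-by-field procedure described above can be sketched as follows: ratings are first averaged within each design field, and the overall score is the unweighted mean of those field averages, so a single well-known field (such as Danish furniture) does not dominate simply because more respondents rated it. Equal field weights, the 0 to 10 scale, and the `min_ratings` cutoff are assumptions made for illustration, and the ratings are hypothetical; the function names are not from this study.

```python
from statistics import mean


def pooled_score(ratings_by_field: dict[str, list[float]]) -> float:
    """Naive overall score: the mean of all ratings, regardless of field."""
    return mean(r for scores in ratings_by_field.values() for r in scores)


def field_level_score(ratings_by_field: dict[str, list[float]], min_ratings: int = 1) -> float:
    """Average within each field first, then weight the field means equally.

    Fields with fewer than `min_ratings` ratings are skipped as too thinly covered.
    """
    field_means = [
        mean(scores)
        for scores in ratings_by_field.values()
        if len(scores) >= min_ratings
    ]
    if not field_means:
        raise ValueError("no field has enough ratings")
    return mean(field_means)


# Hypothetical ratings (0-10) for one country, grouped by design field.
ratings = {
    "furniture": [9, 9, 9, 9],
    "visual": [6],
    "architecture": [7, 5],
}
print(pooled_score(ratings))
print(field_level_score(ratings))
print(field_level_score(ratings, min_ratings=2))
```

With these made-up numbers, pooling every rating gives about 7.71 because the four furniture ratings outweigh the rest, while the field-level score is 7 (or 7.5 when the single visual rating is excluded as too thin). The extra cost mentioned above comes from needing enough ratings in every field for the field means to be meaningful.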

5. Conclusions, Implications, and Limitations

5.1. The Design Award Cannot Comprehensively Reflect the Real State of the Design World, Which Is the Inevitable Result of Its Own Structure

This study found that when the number of design awards is used as the only criterion for global design ranking or evaluation, the results diverge considerably from experts’ perceptions. Such a ranking mechanism ignores many other, more important factors and reflects only a comparison among applicants. Those who did not enter, for whatever reason, are excluded entirely, so the mechanism cannot ensure fair competition across the whole field.

Winning a design award only means that a relatively high-quality design was selected from the works submitted at that time. More precisely, the works selected are those that best matched the award’s selection criteria and preferences. Designs that were not submitted, for whatever reason, cannot be compared through this type of mechanism. Snubs are therefore a natural occurrence in design awards.

Greater caution is required when the number of design awards is used as the benchmark for evaluating any unit. Because each award has its own scope and significance, none is comprehensive enough, and most reflect the state of design in only one aspect.

Based on the above, this study concludes that design awards cannot fully reflect the real state of design, and that this is a structural necessity. Using the number of design awards as a criterion for evaluating a country’s design level can easily lead to bias. In addition, because of differences in motivation, countries at different stages of design development differ markedly in their willingness to participate in design awards. An award-based evaluation mechanism therefore lacks the fairness and comparability needed for all designs to compete on the same stage, and design awards are not appropriate as the single or primary criterion for assessing design levels across countries.

Although this study uses only the country as the unit of comparison, the same logic allows a bold speculation: similar conclusions could also be reached if the evaluation unit were changed (e.g., to schools or cities).

5.2. Six Criteria for Evaluating the Design Level of a Country

Since the number of design awards is an inappropriate benchmark for global design evaluation, and more influential alternative mechanisms are lacking, this study attempted to construct a new evaluation mechanism. Following Grounded Theory, expert interviews were conducted and six indicators were derived: international activity, designers’ level, future trends, historical impact, lifestyle taste, and environment standards. Questionnaire surveys and statistical analyses based on these six criteria produced results that echoed the overall design evaluations of design experts.

The six criteria affect each country’s overall design evaluation to varying degrees. Almost every country was significantly influenced by “International Activity” and “Designers Level”, while differences in background, culture, economy, and so on mean that the other criteria influence each country to varying degrees. Policy makers seeking to improve their country’s overall evaluation can refer to the framework proposed in this study, focus strategically on the most influential criteria, and optimize their use of resources.

5.3. Reflections on Taiwan’s “Design Award Phenomenon”

The “Design Award Phenomenon” mentioned in this study is most pronounced in Asian countries/regions, such as Taiwan. The government cites the number of design awards as a symbol of national “design power”, and it is also an important criterion for evaluating design companies and design schools. The craze for design awards has become a collective social phenomenon.

The “Taiwan Design Power Report 2019” identified two contradictory phenomena: (1) Taiwanese designers hope that winning international design awards will bring benefits such as increased prestige and free publicity; more than 80% of them have entered such awards, and one-fifth enter regularly every year. (2) Yet design awards are not the primary consideration when design firms measure their own performance [2].

Why are so many Taiwanese design firms willing to spend time and money on design awards that are not their primary performance consideration? Part of the reason is peer competition (when other design firms are competing for awards, it seems impossible not to win one too), and part is client demand (award-winning designs are easier to market). It also stems from the lack of an objective understanding of what makes a good design, designer, or design firm (without award credentials, it is difficult to conclude that a design, designer, or firm is good). Firms thus pursue awards largely out of necessity, yet awards are difficult to convert directly into actual revenue; this creates a dilemma and forms a vicious circle.

A similar situation has occurred in Taiwan’s academic circles, where the number of design awards is used as a benchmark for evaluating design schools and even as a condition for professors’ promotion. An educational policy targeting design awards can indeed stimulate students to create better designs, and its results are easy to review in the short term. However, too much emphasis on winning prizes may crowd out other values that design education needs to teach.

The real challenges of a design career are the problems, changes, and complex realities that students will inevitably face after graduation. Students with a solid foundation, a sound perspective, and a broad view of the world have a better chance of surviving in, coping with, and adapting to the real world. These aspects of design education cannot be taught as technical skills, but they should not be overlooked.

In Taiwan, using the number of design awards as the benchmark for measuring individual, collective, and even national design levels has become the norm. Although this is convenient for evaluators, it ignores many other, more important aspects of design. The lack of follow-up support after an award is won also makes it difficult to extend the award’s value beyond the immediate goal. According to this study, this evaluation mechanism is prone to bias, and its results differ significantly from the judgments of design experts.