Abstract

The development of digital teaching competence is one of the fundamental requirements of what is known as the “knowledge society”. This quantitative study evaluates, from an expert point of view, the design of a training itinerary for non-university teachers, built on a t-MOOC architecture and aimed at improving their digital teaching competence (DTC). A quantitative validation design was established using the expert judgement technique. To measure the participants' level of expertise, the expert competence index (K) was calculated for a random sample of 292 teachers from preschools and primary schools in Andalusia (Spain). Only the responses of experts who scored ≥0.8 on the expert competence index were then retained. The results support the validity of the resulting tool (the t-MOOC) and show uniform criteria among the experts who took part in the evaluation. Finally, the need to structure personalised training plans around reference models is discussed.

1. Introduction

Today’s society is undergoing profound and diverse changes brought about by advances in information and communication technologies (ICT), which have given rise to a new technological era.

This technological context generates new ways of communicating and, with them, new leadership styles and frameworks that govern the spread of new cultures, which pose growing challenges [1].

This social impact creates collaborative and communicative environments, which have also brought improvements to education [2,3]. However, mere inclusion in this technological era does not equalize users' opportunities for access and use, and visible inequalities remain between different levels of competence [4]. These demands require constant updating and generate uncertainty, largely because it is not known what the immediate future will look like.

The presence of ICT in education is steadily increasing, so the digital competences that teachers must possess go beyond mere mastery of teaching content and methodologies, as the Horizon Reports indicate [5].

These developments led the European Commission in 2006 to regard digital competence as essential for the critical and safe use of ICT. It is understood as a set of transversal competences that a society must possess in order to function effectively in the knowledge society [6].

In response to these demands, in 2013 the European Commission stressed the importance of “reorienting education” as a way to achieve teaching excellence in current environments, which led to the introduction of ICT into the educational framework. This, in turn, led to the creation of international training plans capable of effectively integrating digital competence among teachers and ensuring common training in this competence [7]. ICT can therefore be said to have become an indispensable resource for teachers, whose level of competence will be essential in providing quality education in virtual environments [8].

The introduction of these means and resources by teachers requires constant reflection on the factors that may hinder their correct application. According to [9], it is necessary to review the concept of literacy and to develop new forms of identification that facilitate greater and better access to the competences demanded by society. The ongoing need to introduce a literacy model fosters a digital culture that promotes digital literacy, e-learning, e-inclusion, e-health, and digital solutions in these fields.

Given these demands and the need to develop digital competences and strategies, official bodies and institutions began to draw up lists of essential competences, including digital competence [10], and with it new terms such as digital teaching competence (DTC). DTC refers to the development of the knowledge, skills, and strategies needed to address educational problems using digital technology [11,12].

Digital teaching competence spans several dimensions and therefore requires reference frameworks to guide its correct application, as detailed in [13]. These include the ISTE standards, the UNESCO framework, and the INTEF framework, each of which has been analysed in different studies.

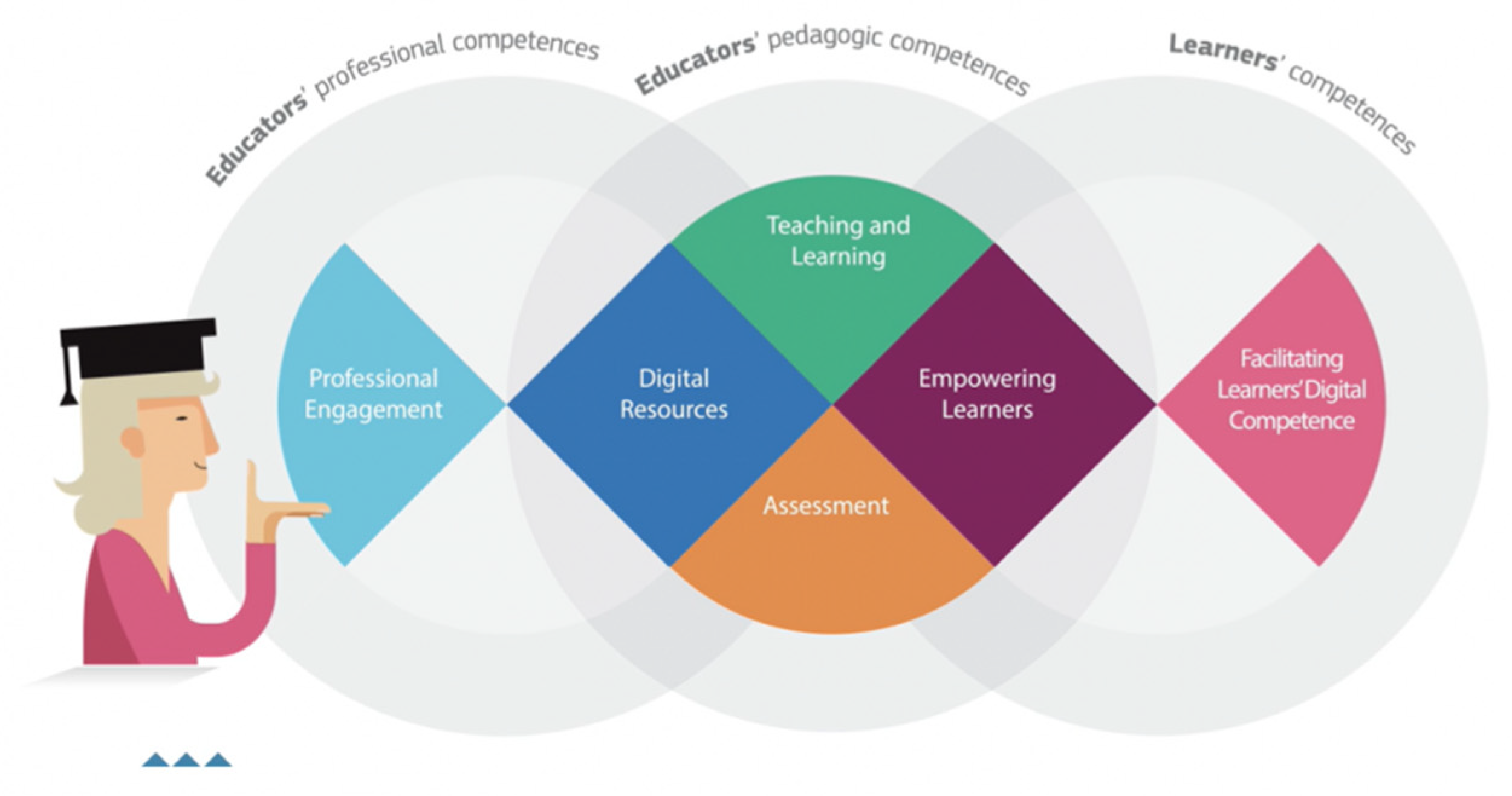

In this study, we focus on the framework provided by the European Union: DigCompEdu (European Framework for the Digital Competence of Educators). This framework comprises six areas (Figure 1).

Figure 1.

DigCompEdu Framework. JRC.

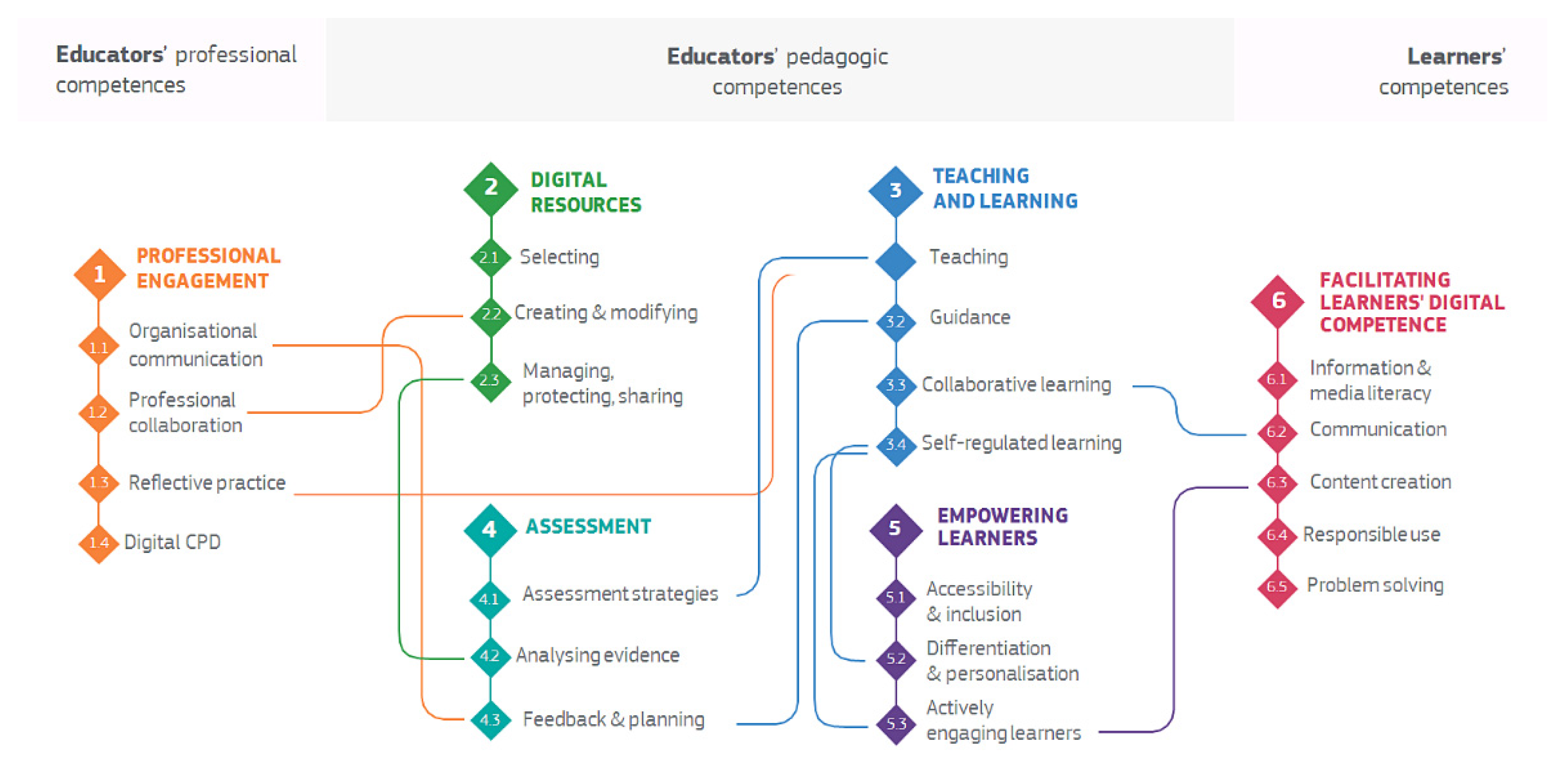

DigCompEdu is a competence model comprising six areas (Figure 2) and 22 competences in total, which teachers must acquire in order to promote productive, inclusive, and integrative learning strategies through the use of digital tools [14].

Figure 2.

Overview of the DigCompEdu Framework. JRC.

As proposed by [15,16,17,18,19,20], massive open online courses (MOOCs) can (a) offer global learning in which learner participation and engagement come into play; (b) enable access to public and shared content, thus generating more emergent knowledge; (c) have an impact on higher educational stages; and (d) improve educational quality and design. MOOCs could therefore be said to provide an impetus for advancing the 2030 Agenda and the Sustainable Development Goals (SDGs) [21].

As noted above, many reference frameworks for digital competence have emerged in recent years. These frameworks should be understood as guides for the development of this competence, but they must also go further and serve as a basis for learning strategies that foster the digital development of individuals.

This research builds on DigCompEdu, one of the most representative digital competence frameworks currently available. The research questions are as follows: Can training itineraries based on the DigCompEdu framework be designed to promote digital competence? Are t-MOOCs valid and reliable training instruments for the development of this competence?

2. Methods

2.1. Objective

The main objective of this research was to carry out an expert evaluation of a training design for the development of digital teaching competence (DTC) based on the DigCompEdu reference framework.

2.2. Context/Material

We evaluated a t-MOOC training environment developed to further DTC in line with the DigCompEdu framework. The content was hosted on Moodle (https://www.dipromooc.es/, accessed on 1 December 2022). The advantages of this learning management system (LMS) include [22]:

- Diverse opportunities for learners to develop greater autonomy, commitment, and practice;

- The use of various digital resources that improve skills in the use of information;

- The possibility of generating new knowledge, exchanging learning experiences, and searching for information.

The training action designed to improve DTC under the DigCompEdu framework is described below. Each competence area included a video pill describing it. After viewing the video, the educator worked through the content and finished by completing a final activity or task. For each competence and level, 4 to 6 activities were available, of which the teacher had to choose and complete 2.
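
The unit structure described above (a video pill, the content, and a pool of 4 to 6 final activities from which the teacher completes 2) can be sketched as a small data model. This is a minimal, hypothetical illustration, not the DIPROMOOC platform's implementation: the class name `CompetenceUnit`, its fields, and the example competence and level labels are assumptions introduced here.

```python
from dataclasses import dataclass, field

# Hypothetical constraints taken from the description of the t-MOOC:
# each competence/level unit offers 4 to 6 activities, and the teacher
# must choose and complete exactly 2 of them.
MIN_ACTIVITIES = 4
MAX_ACTIVITIES = 6
REQUIRED_CHOICES = 2


@dataclass
class CompetenceUnit:
    """Illustrative (hypothetical) model of one competence/level unit."""

    competence: str
    level: str
    activities: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject units whose activity pool falls outside the 4-6 range.
        if not MIN_ACTIVITIES <= len(self.activities) <= MAX_ACTIVITIES:
            raise ValueError("a unit must offer between 4 and 6 activities")

    def is_valid_choice(self, chosen: list[str]) -> bool:
        """True if the teacher picked exactly 2 distinct activities from the pool."""
        return (
            len(chosen) == REQUIRED_CHOICES
            and len(set(chosen)) == REQUIRED_CHOICES
            and all(activity in self.activities for activity in chosen)
        )


# Example unit; the activity names echo the task types listed for the t-MOOC.
unit = CompetenceUnit(
    competence="2.1 Selecting digital resources",
    level="A1",
    activities=["concept map", "group forum", "blog", "PLE"],
)
print(unit.is_valid_choice(["blog", "group forum"]))  # True
print(unit.is_valid_choice(["blog", "blog"]))         # False
```

Validating the pool size when the unit is created keeps the "4 to 6 activities" rule in one place, so every later choice check can assume a well-formed unit.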

A didactic guide was provided that explained how to identify the tasks, gave recommendations for use, and included a checklist for rating the quality of each submission (Likert scale, 1–6 points); tutors used a final rubric in the final evaluation.

The t-MOOC included tasks of different kinds: creating concept maps, participating in group forums, designing blogs, building personal learning environments (PLEs), developing e-activities for students, and building learning communities, among others.

In total, the t-MOOC included [23]:

- A total of sixty-six learning modules (3 per competence);

- Two hundred and thirty e-activities included in the modules;

- One general didactic animation;

- Six specific didactic animations, one per competence area;

- Twenty-two specific animations, one for each competence;

- Twenty-four infographics for the different modules;

- Eleven polymedia for the different learning modules.
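
These counts are consistent with the structure of DigCompEdu, which groups 22 competences into six areas: with three modules per competence, 22 × 3 = 66 modules. The sketch below reproduces that arithmetic as a simple cross-check. It assumes, as the wording of the list suggests, one area animation per area and one competence animation per competence; the dictionary and function names are illustrative.

```python
# Number of competences in each of the six DigCompEdu areas (22 in total).
DIGCOMPEDU_AREAS = {
    "Professional engagement": 4,
    "Digital resources": 3,
    "Teaching and learning": 4,
    "Assessment": 3,
    "Empowering learners": 3,
    "Facilitating learners' digital competence": 5,
}

MODULES_PER_COMPETENCE = 3  # as stated for the t-MOOC


def tmooc_inventory(areas: dict[str, int]) -> dict[str, int]:
    """Derive the t-MOOC's structural counts from the framework's shape."""
    competences = sum(areas.values())
    return {
        "areas": len(areas),
        "competences": competences,
        "learning_modules": competences * MODULES_PER_COMPETENCE,
        # Assumption: one didactic animation per area and one per competence.
        "area_animations": len(areas),
        "competence_animations": competences,
    }


print(tmooc_inventory(DIGCOMPEDU_AREAS))
# {'areas': 6, 'competences': 22, 'learning_modules': 66,
#  'area_animations': 6, 'competence_animations': 22}
```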

2.3. Procedure

The sample selected was random, and the questionnaire was sent to all ICT coordinators in publicly funded preschools and primary schools of the Department of Educational Development and Vocational Training of the Andalusian Regional Government (Spain).

A total of 364 e-mails were sent for data collection. The instrument was made available to teachers for two weeks, and 292 responses were obtained. Of the respondents, 52% were male and 48% female; their average age was 50 years.

To measure the sample members' level of expert knowledge [24,25], participants were asked to self-assess their level of knowledge [26] based on criteria such as publications on the subject under analysis, years of experience, training, and professional career.

To this end, we calculated the expert competence index (K), which has been used in studies of a similar nature [27,28]. The index is obtained with the formula K = ½ (Kc + Ka), where Kc is the “knowledge coefficient”, reflecting the expert's knowledge of the research topic, and Ka is the “argumentation coefficient”, reflecting the sources on which each expert bases their criteria. A K value of 0.8 or above is considered to indicate a high level of expert competence [26].
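
A minimal sketch of the K calculation follows. The text does not detail how Kc and Ka were scored; the sketch assumes the usual formulation in this literature, in which Kc is the expert's self-rated knowledge on a 0–10 scale multiplied by 0.1 and Ka is the sum of the weights obtained across the sources of argumentation (at most 1). The function names are hypothetical.

```python
HIGH_COMPETENCE_THRESHOLD = 0.8  # K >= 0.8 is read as high expert competence


def knowledge_coefficient(self_rating: int) -> float:
    """Kc: assumed to be a 0-10 self-rating scaled to the 0-1 range."""
    if not 0 <= self_rating <= 10:
        raise ValueError("self-rating must be between 0 and 10")
    return self_rating / 10


def expert_competence(kc: float, ka: float) -> float:
    """K = 1/2 (Kc + Ka), with both coefficients on a 0-1 scale."""
    for name, value in (("Kc", kc), ("Ka", ka)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1]")
    return 0.5 * (kc + ka)


def has_high_competence(k: float) -> bool:
    """Apply the K >= 0.8 cut-off."""
    return k >= HIGH_COMPETENCE_THRESHOLD


# Hypothetical expert: self-rating 9/10 and argumentation coefficient 0.8.
k = expert_competence(knowledge_coefficient(9), 0.8)
print(round(k, 2), has_high_competence(k))  # 0.85 True
```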

To refine the expert selection process, we retained only those respondents who scored K ≥ 0.8. This identified 50 experts, representing 17.12% of the 292 responses obtained. The gender distribution of the selected sample is broadly similar to that of the full set of responses.
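
The selection step and the reported proportions can be checked with a few lines of arithmetic. The sketch below applies the K ≥ 0.8 cut-off to a hypothetical list of scores (not study data) and recomputes, from the figures given in the text, the share of responses retained (50 of 292) and the response rate to the e-mails sent (292 of 364). The helper names are illustrative.

```python
def select_experts(k_scores: list[float], threshold: float = 0.8) -> list[int]:
    """Return the indices of respondents whose K meets the cut-off."""
    return [i for i, k in enumerate(k_scores) if k >= threshold]


def percentage(part: int, whole: int) -> float:
    """Percentage rounded to two decimals, as reported in the text."""
    return round(100 * part / whole, 2)


# Hypothetical K scores for five respondents (not study data).
print(select_experts([0.85, 0.79, 0.80, 0.62, 0.93]))  # [0, 2, 4]

print(percentage(50, 292))   # 17.12 -> experts retained out of all responses
print(percentage(292, 364))  # 80.22 -> responses out of e-mails sent
```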

Of the experts, 59% were male and 41% female, and their average age was 54 years. Although all of them work in preschools and primary schools, 24% (12 subjects) also work as associate professors at Andalusian public universities.

Finally, the vast majority of the experts selected (92.4%) indicated that they had teaching experience with, had published on, or had participated in research or working groups related to ICT and/or digital literacy and competence, both for teachers and learners.

2.4. Instrument

To meet the objectives of our study, we adapted the instrument designed in [29], originally used to assess self-concept, management, and planning in relation to a different technology. We selected this instrument because of its adequate internal consistency, both overall and in each of its dimensions, as corroborated by other studies that have shown the importance of these dimensions in the university population.

The resulting instrument consisted of 18 items distributed across four dimensions (see Table 1) related to the architecture of the t-MOOC.

Table 1.

Dimensions and items of the instrument.

Reliability was calculated with Cronbach’s alpha, which yielded a high value (0.985) for the instrument as a whole. Reliability by dimension was also high: technical and aesthetic aspects (0.996), ease of use (0.901), diversity of resources and activities (0.956), and quality of content (0.979).
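
For reference, Cronbach's alpha for k items is α = k/(k − 1) · (1 − Σσᵢ² / σₜ²), where σᵢ² is the variance of item i and σₜ² is the variance of the respondents' total scores. The following is a minimal pure-Python sketch of that formula, not the software used in the study; the toy score matrix is illustrative.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[j][i] is respondent i's score on item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs a score from every respondent")
    # Total score of each respondent across all items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    # Sum of the item variances (sample variances, n - 1 denominator).
    item_variance = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Toy data: three items answered by three respondents.
scores = [
    [2, 4, 3],
    [3, 5, 4],
    [2, 4, 4],
]
print(round(cronbach_alpha(scores), 3))  # 0.964
```

Using sample variances for both the items and the totals gives the same alpha as using population variances, because the n/(n − 1) factor cancels in the ratio.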

The instrument used a six-point Likert-type scale, from 1 (most negative) to 6 (most positive). The first four response options were labelled as follows: 1. MN = Very negative/Strongly disagree/Very difficult; 2. N = Negative/Disagree/Difficult; 3. R− = Fairly negative/Moderately disagree/Moderately difficult; 4. R+ = Fairly positive/Moderately agree/Moderately easy. The items are grouped into four dimensions, shown in Table 1.

The questionnaire was administered online using the Google Forms tool: https://cutt.ly/PzZsfCV (accessed on 11 October 2022).

3. Results

Before presenting the results, we checked the reliability of the questionnaire using Cronbach’s alpha (0.925) and McDonald’s omega (0.963). Both indicate excellent overall reliability of the expert questionnaire.
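
Unlike alpha, McDonald's omega is computed from a factor model. Under a single-factor model with standardised loadings λᵢ and uniquenesses θᵢ = 1 − λᵢ², omega total is ω = (Σλᵢ)² / ((Σλᵢ)² + Σθᵢ). The sketch below assumes the loadings have already been estimated elsewhere (for example, with a factor-analysis package) and that all items are scored in the same direction; the loading values in the example are hypothetical, not those of this study.

```python
def mcdonald_omega(loadings: list[float]) -> float:
    """Omega total from the standardised loadings of a one-factor model."""
    if not loadings:
        raise ValueError("at least one loading is required")
    # Assumes same-direction items, so loadings lie strictly between 0 and 1.
    if any(not 0.0 < lam < 1.0 for lam in loadings):
        raise ValueError("standardised loadings must lie strictly between 0 and 1")
    common = sum(loadings) ** 2                       # (sum of loadings)^2
    unique = sum(1.0 - lam ** 2 for lam in loadings)  # sum of uniquenesses
    return common / (common + unique)


# Hypothetical loadings for a four-item scale.
print(round(mcdonald_omega([0.8, 0.8, 0.8, 0.8]), 3))  # 0.877
```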

The mean scores and corresponding standard deviations for the four dimensions of the instrument, together with an overall rating, are shown below (Table 2).

Table 2.

Mean rating data and standard deviation obtained by experts.

The data show that every dimension was rated positively. The quality of the contents (5.41) and the technical aspects of the t-MOOC (5.23) stand out in particular. The remaining dimensions, ease of use and diversity of resources and activities, also obtained good scores.
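
The summary statistics in Table 2 are means and standard deviations of the experts' ratings on the 1–6 scale. The text does not state exactly how the item ratings were aggregated; the sketch below assumes one common approach (each expert's dimension score is the mean of their item ratings, and the mean and sample standard deviation are then taken across experts) and uses made-up ratings.

```python
from statistics import mean, stdev


def dimension_summary(
    ratings: dict[str, list[list[int]]],
) -> dict[str, tuple[float, float]]:
    """Mean and sample SD per dimension.

    ratings[dimension] holds one list per expert with that expert's item
    ratings (1-6). Each expert's dimension score is the mean of their items.
    """
    summary = {}
    for dimension, experts in ratings.items():
        for expert in experts:
            if any(not 1 <= r <= 6 for r in expert):
                raise ValueError("ratings must be on the 1-6 scale")
        scores = [mean(expert) for expert in experts]
        summary[dimension] = (mean(scores), stdev(scores))
    return summary


# Made-up ratings from three experts on a two-item dimension.
example = {"Ease of use": [[5, 6], [4, 5], [6, 6]]}
m, sd = dimension_summary(example)["Ease of use"]
print(f"M = {m:.2f}, SD = {sd:.2f}")  # M = 5.33, SD = 0.76
```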

The scores obtained for the individual items in each dimension are presented below.

For Dimension 1 (technical and aesthetic aspects), two fundamental, interrelated aspects stand out (see Table 3): the experts gave high scores both to the functioning of the t-MOOC as a whole and to its technical functioning, judging that the tool worked correctly and adequately and allowed users to navigate its architecture easily and comfortably.

Table 3.

Mean score and standard deviation of the experts for the items of the dimension “Technical and aesthetic aspects”.

Table 4 shows the mean and standard deviation for the items of the second dimension, ease of use:

Table 4.

Mean score and standard deviation of the experts for the items of the dimension “Ease of use”.

Most item scores were high (M > 5), with the exception of the item “Using the t-MOOC produced was fun”, which obtained a lower score (4.70). This indicates that the tool’s architecture should be reviewed to make it more attractive to future users.

In line with the previous dimensions, the item scores in Dimension 3 (diversity of resources and activities) (see Table 5) exceeded a mean of 5. The high score for the item “The materials, readings, animations, videos… offered in the t-MOOC are clear and appropriate” (5.29) suggests that the selected materials are suitable for producing quality learning situations. Similarly, the score for the item “From your point of view, how would you evaluate the accessibility/usability of the t-MOOC we have presented to you?” (5.27) suggests that the architecture designed for the t-MOOC favours accessibility and usability, an aspect of vital importance in the design of this type of material.

Table 5.

Mean score and standard deviation of the experts on the items of the dimension “Diversity of resources and activities”.

The mean scores for the last dimension of the instrument (quality of contents) are presented in Table 6. The items in this dimension obtained the highest scores in the whole instrument.

Table 6.

Mean score and standard deviation given by the experts for the items of the dimension “Quality of contents”.

4. Discussion

The value of this research is supported by the effectiveness of the procedure used: the experts’ evaluations made considerable improvements possible in several aspects of the t-MOOC. To reinforce the aspects of its architecture that obtained very high scores, such as navigation and technical functioning, the improved version of the training action will include:

- Less linear structure.

- Modification of some tasks.

- Presentation of contents including complementary material.

Authors such as [30] point out that MOOCs should have a clear course structure that facilitates navigation and orientation.

5. Conclusions

Several researchers advocate designing training actions that use a variety of tools to present information and that require learners to complete activities in each module in order to progress to the next level [31]. This way of developing training actions makes it necessary to rethink how the resources used in online training are designed [32,33,34]. According to the experts’ assessments, the tool evaluated here makes it possible to train teachers in DTC within the DigCompEdu framework and therefore supports the proposed training plan.

This, in turn, favours the acquisition of the skills demanded by today’s digital society [35,36].

Finally, this pilot experience can guide institutions in defining strategies for training teachers in digital competences. In this sense, the present study offers the possibility of treating the training environment as a training plan whose architecture supports teacher training at different levels linked to the European Framework for the Digital Competence of Educators (DigCompEdu) [37].

The main limitations of this study relate to the use of self-perception questionnaires. Nevertheless, the large number of responses obtained and the selection of experts through the expert competence coefficient strengthen its scientific value. As a line of future research, the use of qualitative evaluation instruments is recommended, for example qualitative evaluation by the experts or by the students participating in the training action. Moreover, the environment was evaluated almost exclusively by experts of Spanish nationality, so it is suggested that the experience be repeated with international experts; in that case, the training action should first be contextualised.

Author Contributions

Conceptualization, J.J.G.-C. and A.P.-R.; methodology, A.P.-R.; software, M.S.-H.; validation, L.M.-P., J.J.G.-C. and M.S.-H.; formal analysis, A.P.-R.; investigation, J.J.G.-C.; writing—review and editing, L.M.-P.; funding acquisition, J.J.G.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science, Innovation and Universities (Spain), grant number RTI2018-097214-B-C31.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data are available on the project page https://grupotecnologiaeducativa.es/ (accessed on 1 December 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cabero-Almenara, J.; Fernández-Batanero, J.M.; Pérez, M.C. Conocimiento de las TIC aplicadas a las personas con discapacidades. Construcción de un instrumento de diagnóstico. Magis Rev. Int. Investig. Educ. 2016, 8, 157–176.

- Cabero-Almenara, J.; Gutiérrez-Castillo, J.J.; Palacios-Rodríguez, A.; Barroso-Osuna, J. Development of the Teacher Digital Competence Validation of DigCompEdu Check-In Questionnaire in the University Context of Andalusia (Spain). Sustainability 2020, 12, 6094.

- Cabero-Almenara, J.; Romero-Tena, R.; Palacios-Rodríguez, A. Evaluation of Teacher Digital Competence Frameworks through Expert Judgement: The Use of the Expert Competence Coefficient. J. New Approaches Educ. Res. 2020, 9, 275–293.

- Casillas Martín, S.; Cabezas González, M.; García Peñalvo, F.J. Digital competence of early childhood education teachers: Attitude, knowledge and use of ICT. Eur. J. Teach. Educ. 2020, 43, 210–223.

- Adams, V.; Burger, S.; Crawford, K.; Setter, R. Can You Escape? Creating an Escape Room to Facilitate Active Learning. J. Nurses Prof. Dev. 2018, 34, E1.

- Ferrari, A. DIGCOMP: A Framework for Developing and Understanding Digital Competence in Europe; JRC-IPTS: Sevilla, Spain, 2013.

- Europeo, C. Recomendación del Consejo de 22 de Abril de 2013 Sobre el Establecimiento de la Garantía Juvenil. Off. J. Eur. Union 2013. Available online: https://bit.ly/3CWA0z7 (accessed on 1 December 2022).

- Salinas, J. Innovación docente y uso de las TIC en la enseñanza universitaria. Rev. Univ. Soc. Conoc. 2004, 1, 1–16.

- Coll, C. Las competencias en la educación escolar: Algo más que una moda y mucho menos que un remedio. Aula Innovación Educ. 2020, 161, 34–39.

- INTEF. Marco Común de Competencia Digital Docente. Enero 2017; Instituto Nacional de Tecnologías Educativas y Formación del Profesorado: Madrid, Spain, 2017.

- Casal, L.; Barreira, E.M.; Mariño, R.; García, B. Competencia Digital Docente del profesorado de FP de Galicia. Pixel-Bit. Rev. Medios Educ. 2021, 61, 165–196.

- Cabero-Almenara, J.; Barroso-Osuna, J.; Palacios-Rodríguez, A.; Llorente-Cejudo, C. Marcos de Competencias Digitales para docentes universitarios: Su evaluación a través del coeficiente competencia experta. Rev. Electrónica Interuniv. Form. Profr. 2020, 23, 1–18.

- Calderón-Garrido, D.; Gustems-Carnicer, J.; Carrera, X. Digital technologies in music students on primary teacher training degrees in Spain: Teachers’ habits and profiles. Int. J. Music. Educ. 2020, 38, 613–624.

- Redecker, C.; Punie, Y. European Framework for the Digital Competence of Educators: DigCompEdu; Joint Research Centre: Ispra, Italy, 2017.

- Kady, H.R.; Vadeboncoeur, J.A. Massive Open Online Courses (MOOCs); EBSCO Publishing: Ipswich, MA, USA, 2013.

- Watson, S.L.; Watson, W.R.; Yu, J.H.; Alamri, H.; Mueller, C. Learner profiles of attitudinal learning in a MOOC: An explanatory sequential mixed methods study. Comput. Educ. 2017, 114, 274–285.

- García-Peñalvo, F.J.; García-Holgado, A.; Vázquez-Ingelmo, A.; Seoane-Pardo, A.M. Usability test of WYRED platform. In Learning and Collaboration Technologies. Design, Development and Technological Innovation. 5th International Conference, LCT 2018, Held as Part of HCI International 2018, Las Vegas, NV, USA; Zaphiris, P., Ioannou, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2018.

- Zawacki-Richter, O.; Bozkurt, A.; Alturki, U.; Aldraiweesh, A. What research says about MOOCs—An explorative content analysis. Int. Rev. Res. Open Distrib. Learn. 2018, 19, 242–259.

- Cornelius, S.; Calder, C.; Mtika, P. Understanding learner engagement on a blended course including a MOOC. Res. Learn. Technol. 2019, 27, 2097.

- Deng, R.; Benckendorff, P.; Gannaway, D. Learner engagement in MOOCs: Scale development and validation. Br. J. Educ. Technol. 2020, 51, 245–262.

- Hueso, J.J. Creación de una red neuronal artificial para predecir el comportamiento de las plataformas MOOC sobre la agenda 2030 y los objetivos para el desarrollo sostenible. Vivat Acad. Rev. Commun. 2022, 155, 61–89.

- Evgenievich Egorov, E.; Petrovna Prokhorova, M.; Evgenievna Lebedeva, T.; Aleksandrovna Mineeva, O.; Yevgenyevna Tsvetkova, S. Moodle LMS: Positive and Negative Aspects of Using Distance Education in Higher Education Institutions. Propósitos Represent. 2021, 9, e1104.

- Cabero-Almenara, J.; Barragán-Sánchez, R.; Palacios-Rodríguez, A.; Martín-Párraga, L. Diseño y Validación de t-MOOC para el Desarrollo de la Competencia Digital del Docente No Universitario. Tecnologías 2021, 9, 84.

- Landeta, J. El Método Delphi: Una Técnica de Previsión Para la Incertidumbre; Ariel: Barcelona, Spain, 1999.

- Blasco, J.E.; López, A.; Mengual, S. Validación mediante método Delphi de un cuestionario para conocer las experiencias e interés hacia las actividades acuáticas con especial atención al windsurf. Ágora Para Educ. Física Deporte 2010, 12, 75–96.

- López Gómez, E. El método Delphi en la investigación actual en educación: Una revisión teórica y metodológica. [The Delphi method in current educational research: A theoretical and methodological review]. Educ. XX1 2018, 21, 17–40.

- Cabero-Almenara, J.; Tena-Romero, R. Diseño de un t-MOOC para la formación en competencias digitales docentes: Estudio en desarrollo (Proyecto DIPROMOOC). Innoeduca Int. J. Technol. Educ. Innov. 2020, 6, 4–13.

- Lamm, K.; Powell, A.; Lombardini, L. Identifying Critical Issues in the Horticulture Industry: A Delphi Analysis during the COVID-19 Pandemic. Horticulturae 2021, 7, 416.

- Martínez-Martínez, A.; Olmos-Gómez, M.D.C.; Tomé-Fernández, M.; Olmedo-Moreno, E.M. Analysis of Psychometric Properties and Validation of the Personal Learning Environments Questionnaire (PLE) and Social Integration of Unaccompanied Foreign Minors (MENA). Sustainability 2019, 11, 2903.

- Moore, R.; Blackmon, S. From the learner’s perspective: A systematic review of MOOC learner experiences (2008–2021). Comput. Educ. 2022, 190, 104596.

- Baeza-González, A.; Lázaro-Cantabrana, J.-L.; Sanromà-Giménez, M. Evaluación de la competencia digital del alumnado de ciclo superior de primaria en Cataluña. Pixel-Bit. Rev. Medios Educ. 2022, 64, 265–298.

- Guillén-Gámez, F.D.; Mayorga-Fernández, M.J.; Álvarez-García, F.J. A study on the actual use of digital competence in the practicum of education degree. Technol. Knowl. Learn. 2018, 25, 667–684.

- Guillén-Gámez, F.D.; Mayorga-Fernández, M.J. Identification of variables that predict teachers’ attitudes toward ICT in higher education for teaching and research: A study with regression. Sustainability 2020, 12, 1312.

- García-Prieto, F.; López-Aguilar, D.; Delgado-García, M. Competencia digital del alumnado universitario y rendimiento académico en tiempos de COVID-19. Pixel-Bit. Rev. Medios Educ. 2022, 64, 165–199.

- Colomo Magaña, A.; Colomo Magaña, E.; Guillén-Gámez, F.D.; Cívico Ariza, A. Analysis of Prospective Teachers’ Perceptions of the Flipped Classroom as a Classroom Methodology. Societies 2022, 12, 98.

- Gabarda Méndez, V.; Cuevas Monzonís, N.; Colomo Magaña, E.; Cívico Ariza, A. Competencias Clave, Competencia Digital Y formación Del Profesorado: Percepción De Los Estudiantes De Pedagogía. Profesorado 2022, 26, 7–27.

- Barragán Sánchez, R.; Llorente Cejudo, C.; Aguilar Gavira, S.; Benítez Gavira, R. Autopercepção inicial e nível de competência digital de professores universitários. Texto Livre 2021, 15, e36032.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).