Rewired Leadership: Integrating AI-Powered Mediation and Decision-Making in Higher Education Institutions

Abstract

1. Introduction

1.1. The Rhetorical Crossroads of Tradition and Transformation

1.2. Conceptual Model Context: Bridging Human–AI Interaction Through Integrated Theory

1.3. AI Mediation for Education 5.0

1.4. Conceptual Foundations

1.4.1. Media Richness Theory (MRT)

1.4.2. Social Presence Theory (SPT)

1.4.3. Technology Acceptance Models (TAMs and UTAUT)

1.4.4. Trust Theory

1.4.5. Ethically Aligned Design and Machine Agency

1.4.6. The Central Role of Trust

1.4.7. The Paradox of Leadership in the Digital Age

1.5. Research Gap: From Functionality to Relational Legitimacy

1.5.1. Research Question

1.5.2. Research Objectives

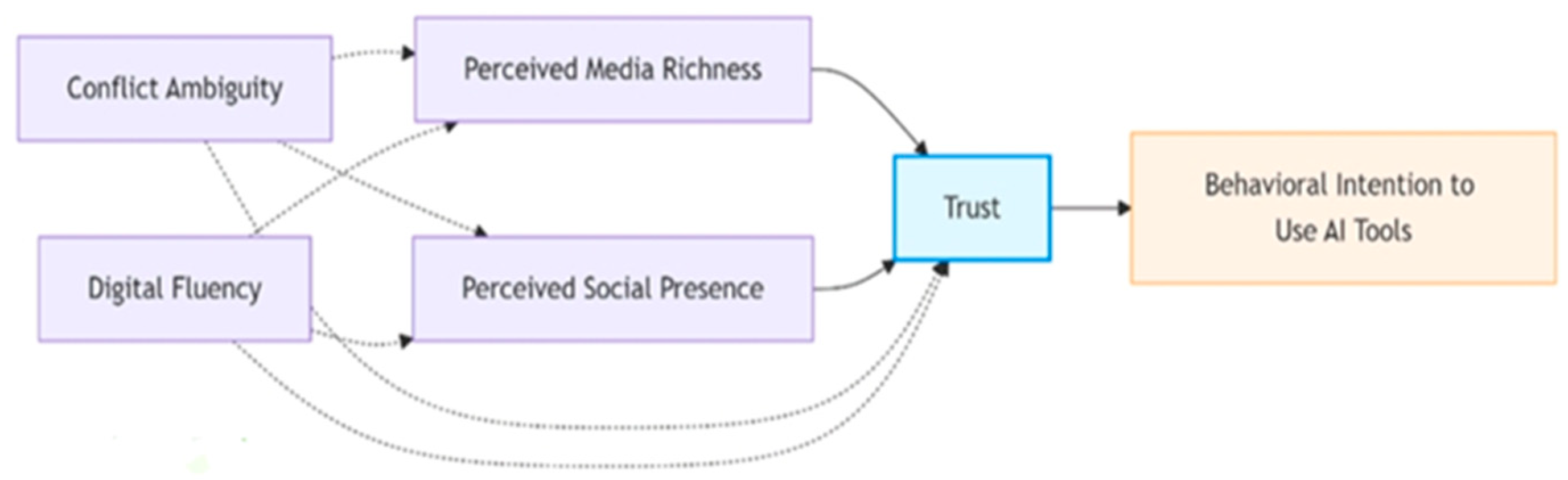

- Perception and trust: Assess how students’ perceptions of media richness and social presence influence trust in AI mediation tools.

- Trust intention: Determine how trust impacts behavioral intentions to adopt and use such tools.

- Moderators: Examine how conflict uncertainty and digital fluency moderate these relationships.

- Qualitative nuance: Explore students’ ethical concerns, emotional expectations, and perceptions through open-ended responses.

- Model validation: Empirically test the proposed integrated conceptual model on a diverse sample of university students, examining the hypothesized relationships shown as directional pathways in Figure 1.
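
The pathways these objectives describe (perception shaping trust, trust shaping intention, and digital fluency moderating that link) can be illustrated with a minimal sketch on synthetic data. Everything here is an assumption made for illustration: the variable names, the effect sizes in the simulated data, and the use of simple one-predictor slopes with a median split in place of a full interaction-term regression. It is not the study's dataset or its estimation procedure.

```python
import random
from statistics import mean, median


def slope(x, y):
    """Least-squares slope of y regressed on a single predictor x."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


# Synthetic respondents (hypothetical; standardized scores).
rng = random.Random(0)
n = 4000
richness = [rng.gauss(0, 1) for _ in range(n)]  # perceived media richness
fluency = [rng.gauss(0, 1) for _ in range(n)]   # digital fluency (moderator)

# Assumed data-generating process: richness raises trust, and trust raises
# intention more strongly for digitally fluent respondents.
trust = [0.5 * r + rng.gauss(0, 0.5) for r in richness]
intention = [
    (0.6 + 0.2 * f) * t + rng.gauss(0, 0.5)
    for t, f in zip(trust, fluency)
]

path_a = slope(richness, trust)   # perception -> trust
path_b = slope(trust, intention)  # trust -> intention (pooled sample)

# Moderation check: compare the trust -> intention slope in the lower and
# upper halves of the fluency distribution.
cut = median(fluency)
low = [(t, y) for t, y, f in zip(trust, intention, fluency) if f <= cut]
high = [(t, y) for t, y, f in zip(trust, intention, fluency) if f > cut]
slope_low = slope([t for t, _ in low], [y for _, y in low])
slope_high = slope([t for t, _ in high], [y for _, y in high])

print(f"perception -> trust slope: {path_a:.2f}")
print(f"trust -> intention slope:  {path_b:.2f}")
print(f"low-fluency slope: {slope_low:.2f}, high-fluency slope: {slope_high:.2f}")
```

A steeper slope in the high-fluency group than in the low-fluency group is the pattern a moderating effect would produce; a regression with an explicit trust × fluency term would test the same idea without discarding information through the split.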

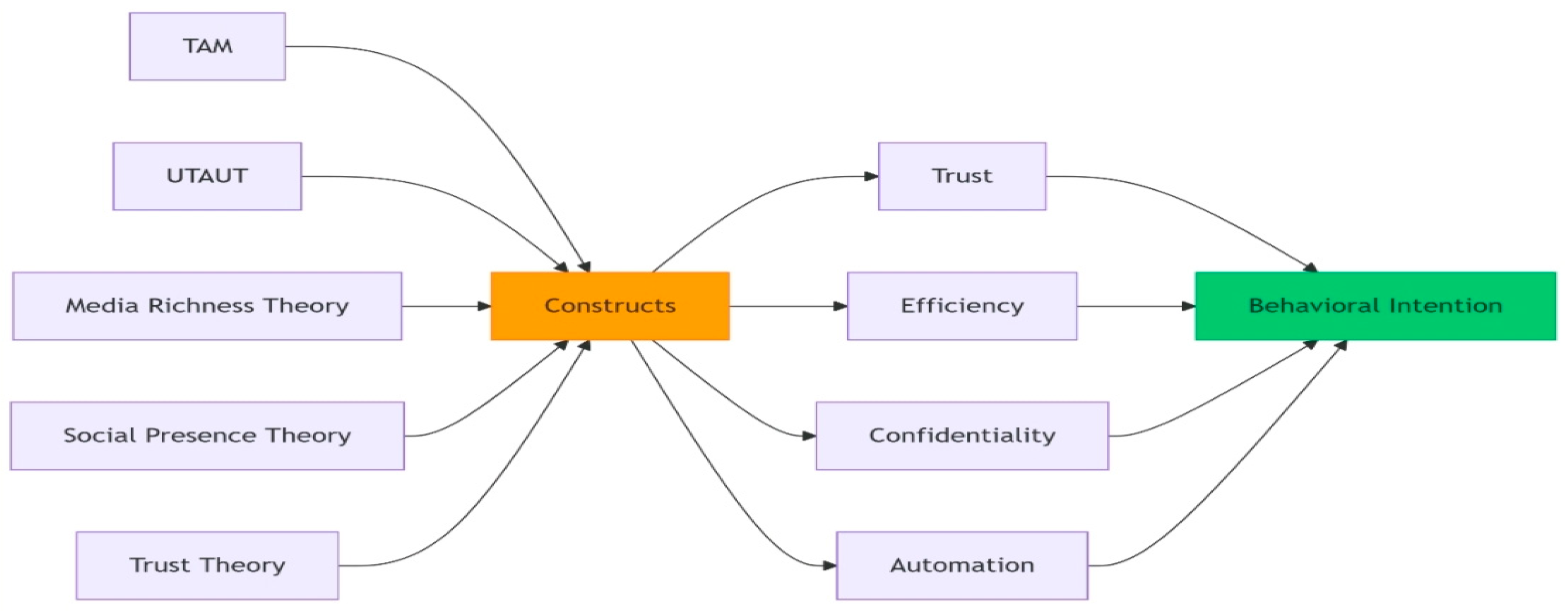

- (1) Digital transformation and leadership in higher education establishes the broader institutional context, highlighting how AI integration influences leadership discourse, trust dynamics, and communicative practices.

- (2) The application of AI in educational mediation and governance provides the conceptual foundation for constructs such as automation, confidentiality, and explainability.

- (3) Media richness and communicative effectiveness in digital environments elucidates the constructs of perceived media richness and social presence.

- (4) Trust and social presence in human–AI interaction constitutes the theoretical core of the model’s mediating mechanism, trust, as shaped by emotional perception and ethical interpretation.

- (5) Technology acceptance frameworks within institutional settings inform the outcome variable of behavioral intention while also incorporating the contextual moderators of digital fluency and conflict ambiguity.

2. Theoretical Foundations

2.1. Classical Foundations and Digital Disruption

2.1.1. Media Richness Theory (MRT): Reconfiguring Richness for AI

2.1.2. AI-Driven “Pseudo-Richness” and Its Limitations

2.1.3. Reframing Richness: Channel Expansion and the Role of Digital Fluency

2.2. Toward a Redefined AI-Mediated Richness

2.2.1. Social Presence Theory (SPT): Trust, Relationality, and the AI Black Box

2.2.2. AI and the Presence Deficit

2.3. Paradoxical Successes: Presence Through Hyper-Relevance

2.4. Trust as the Mediating Construct

2.5. Technology Acceptance (TAM/UTAUT): Adoption Barriers as Trust Failures

2.6. Low Perceived Usefulness: When AI Lacks Contextual Intelligence

2.7. Low Perceived Ease of Use: When Rich Tools Are Hard to Navigate

2.8. Social Influence: Cultural Friction and Institutional Resistance

2.9. Facilitating Conditions: Inequity in Infrastructure and Access

3. Materials and Methods

3.1. Participants and Sampling

- -

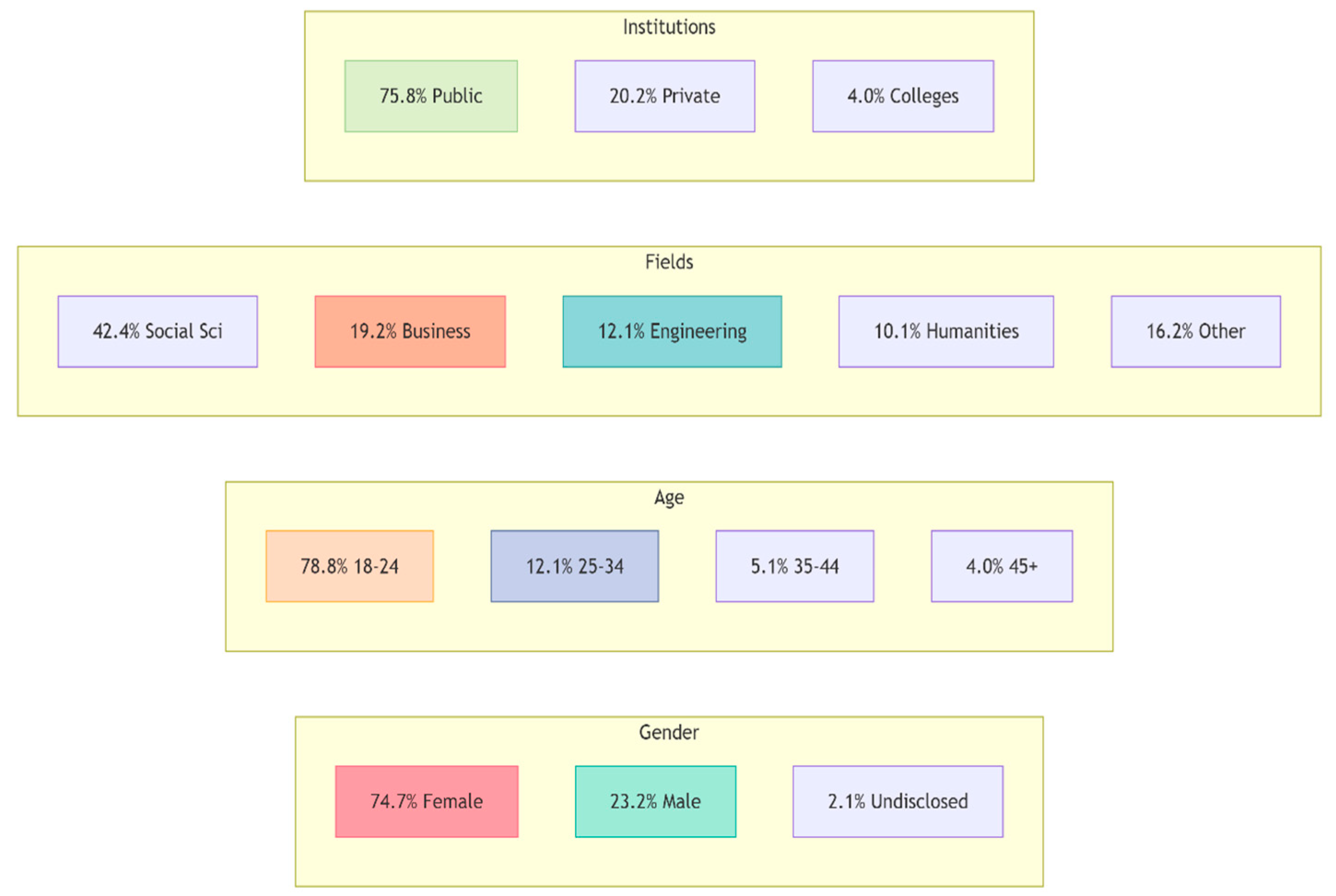

- Gender: 74.7% female, 23.2% male, 2.1% preferred not to disclose.

- -

- Age: 78.8% aged 18–24; 12.1% aged 25–34; 5.1% aged 35–44; 4% aged 45+.

- -

- Fields of study: 42.4% social sciences, 19.2% business/economics, 12.1% engineering, 10.1% humanities, 16.2% other.

- -

- Institution type: 75.8% Public universities, 20.2% private institutions, 4% colleges.

- -

- Level of study: 60.6% undergraduate (bachelor), 34.5% postgraduate (master), 4.9% other (e.g., PhD, non-specified)

3.2. Instrumentation and Constructs

- Media richness: personalization, ease of use, multimodal cues.

- Social presence: authenticity, empathy.

- Trust: integrity, competence, benevolence.

- Confidentiality: perceived data protection.

- Behavioral intention: willingness to adopt AI-powered mediation.
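
The construct list above can be sketched as a scoring map that turns one respondent's ratings into a score per construct. The construct and dimension names follow the list; the 1-5 Likert scale, the one-rating-per-dimension layout, the snake_case keys, and the use of a simple mean are assumptions for illustration rather than the instrument's documented scoring rule.

```python
from statistics import mean

# Construct -> dimensions, taken from the list above (keys are hypothetical).
CONSTRUCTS = {
    "media_richness": ["personalization", "ease_of_use", "multimodal_cues"],
    "social_presence": ["authenticity", "empathy"],
    "trust": ["integrity", "competence", "benevolence"],
    "confidentiality": ["perceived_data_protection"],
    "behavioral_intention": ["willingness_to_adopt"],
}


def score_constructs(response):
    """Average the available dimension ratings for each construct.

    Dimensions missing from the response are skipped; a construct with no
    answered dimensions is left out of the result.
    """
    scores = {}
    for construct, dimensions in CONSTRUCTS.items():
        ratings = [response[d] for d in dimensions if d in response]
        if ratings:
            scores[construct] = mean(ratings)
    return scores


# Hypothetical respondent on an assumed 1-5 scale.
example = {
    "personalization": 4,
    "ease_of_use": 5,
    "multimodal_cues": 3,
    "authenticity": 2,
    "empathy": 3,
    "integrity": 4,
    "competence": 4,
    "benevolence": 3,
    "perceived_data_protection": 2,
    "willingness_to_adopt": 3,
}

scores = score_constructs(example)
for construct, value in scores.items():
    print(f"{construct}: {value:.2f}")
```

Keeping the mapping in one dictionary makes it easy to see which dimensions feed each construct and to change the aggregation rule in a single place.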

- Media richness (e.g., “How easy do you think it is to use AI-based mediation tools in conflict resolution?”).

- Social presence and trust (e.g., “Would you trust AI systems to deliver impartial outcomes in mediation between students and/or staff?”).

- Confidentiality (e.g., “How confident are you that AI tools can maintain confidentiality during the mediation process?”).

- Behavioral intention (e.g., “Are you willing to use AI-based mediation tools to resolve conflicts with students and/or colleagues?”).

- “What changes would you like to see in the current mediation practices at your institution?”

- “If given a choice between AI-based mediation and human mediation, which would you prefer and why?”

- “What do you believe are the potential benefits and challenges of using AI systems for conflict resolution between students and/or staff?”

3.3. Quantitative Analysis

- 74.2% of students found mediation techniques effective.

- 63.6% expressed concern about the confidentiality of AI tools.

- 41.4% showed positive behavioral intention to use AI tools.

- 68.7% supported automation when combined with human oversight.

- Trust × Intention: ρ = 0.49, p < 0.001

- Automation × Intention: ρ = 0.54, p < 0.001

- Efficiency × Intention: ρ = 0.47, p < 0.001
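
The ρ values above read as rank correlations between ordinal survey responses. As a minimal sketch, assuming they are Spearman coefficients (the excerpt does not name the coefficient), ρ can be computed by ranking both variables with averaged ties and taking the Pearson correlation of the ranks. The response vectors below are made up for illustration and do not reproduce the reported values; the significance test is omitted.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical 1-5 Likert responses from eight respondents.
trust = [1, 2, 2, 3, 4, 4, 5, 3]
intention = [2, 1, 3, 3, 4, 5, 5, 2]

rho = spearman_rho(trust, intention)
print(f"Spearman rho (trust vs. intention): {rho:.2f}")
```

Rank-based correlation suits Likert-type items because it depends only on the ordering of responses, not on the assumption that the gaps between scale points are equal.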

3.4. Qualitative Analysis

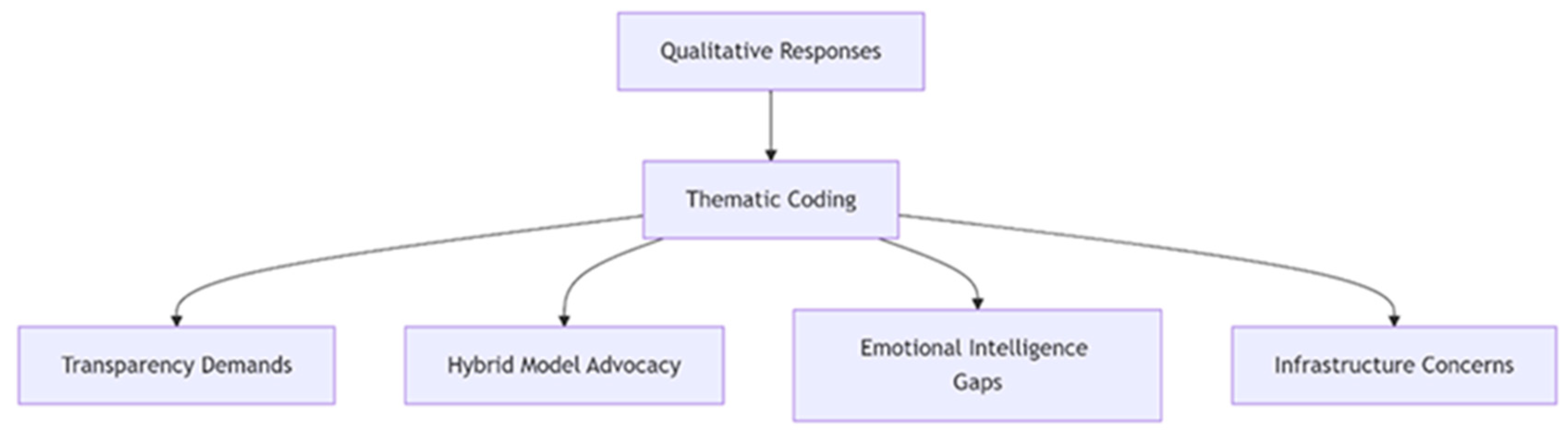

- Explainability concerns and demand for transparency.

- Preference for hybrid human–AI models.

- Emotional intelligence deficits in AI.

- Institutional infrastructure and readiness gaps.

3.5. Ethical Considerations

3.6. Analytical Tools

3.7. Alignment with Research Question

4. Results

4.1. Theoretical Framework for AI-Powered Mediation Acceptance in Higher Education

4.2. Interpretation of Key Predictors in Light of Theoretical Models

4.3. Integration of Quantitative and Qualitative Findings

5. Discussion

5.1. Implications for Theory

5.2. Practical Implications for Higher Education Institutions (HEIs)

5.3. Limitations

5.4. Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Triantari, S. Leadership: Leadership Theories from the Aristotelian Orator to the Modern Leader; K. & M. Stamoulis: Thessaloniki, Greece, 2020; ISBN 978-960-656-012-5. [Google Scholar]

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic Review of Research on Artificial Intelligence Applications in Higher Education—Where Are the Educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39. [Google Scholar] [CrossRef]

- Battisti, D. Second-Person Authenticity and the Mediating Role of AI: A Moral Challenge for Human-to-Human Relationships? Philos. Technol. 2025, 38, 28. [Google Scholar] [CrossRef]

- Bolden, R.; Gosling, J.; O’Brien, A.; Peters, K.; Ryan, M.; Haslam, S.A. Academic Leadership: Changing Conceptions, Identities and Experiences in UK Higher Education; Leadership Foundation for Higher Education: London, UK, 2012; ISBN 978-1-906627-35-5. [Google Scholar]

- Liden, R.C.; Wang, X.; Wang, Y. The Evolution of Leadership: Past Insights, Present Trends, and Future Directions. J. Bus. Res. 2025, 186, 115036. [Google Scholar] [CrossRef]

- Avolio, B.J.; Kahai, S.S. Adding the “E” to E-Leadership: How It May Impact Your Leadership. Organ. Dyn. 2003, 31, 325–338. [Google Scholar] [CrossRef]

- Oncioiu, I.; Bularca, A.R. Artificial Intelligence Governance in Higher Education: The Role of Knowledge-Based Strategies in Fostering Legal Awareness and Ethical Artificial Intelligence Literacy. Societies 2025, 15, 144. [Google Scholar] [CrossRef]

- Guo, Y.; Dong, P.; Lu, B. The Influence of Public Expectations on Simulated Emotional Perceptions of AI-Driven Government Chatbots: A Moderated Study. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 50. [Google Scholar] [CrossRef]

- Flavián, C.; Belk, R.W.; Belanche, D.; Casaló, L.V. Automated Social Presence in AI: Avoiding Consumer Psychological Tensions to Improve Service Value. J. Bus. Res. 2024, 175, 114545. [Google Scholar] [CrossRef]

- Guo, K. The Relationship Between Ethical Leadership and Employee Job Satisfaction: The Mediating Role of Media Richness and Perceived Organizational Transparency. Front. Psychol. 2022, 13, 885515. [Google Scholar] [CrossRef]

- Fraser-Burgess, S.; Heybach, J.; Metro-Roland, D. Emerging Ethical Pathways and Frameworks: Integration, Disruption, and New Ethical Paradigms. In The Cambridge Handbook of Ethics and Education, 1st ed.; Kristjánsson, K., Gregory, M., Eds.; Cambridge University Press: Cambridge, UK, 2024; pp. 593–867. [Google Scholar]

- Daft, R.L.; Lengel, R.H. Organizational Information Requirements, Media Richness and Structural Design. Manag. Sci. 1986, 32, 554–571. [Google Scholar] [CrossRef]

- Gunawardena, C.N. Social Presence Theory and Implications for Interaction and Collaborative Learning in Computer Conferences. Int. J. Educ. Telecommun. 1995, 1, 147–166. [Google Scholar]

- Short, J.; Williams, E.; Christie, B. The Social Psychology of Telecommunications; John Wiley & Sons: Hoboken, NJ, USA, 1976. [Google Scholar]

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Forcael, E.; Garcés, G.; Lantada, A.D. Convergence of Educational Paradigms into Engineering Education 5.0. In Proceedings of the 2023 World Engineering Education Forum—Global Engineering Deans Council (WEEF-GEDC), Monterrey, Mexico, 23–27 October 2023; pp. 1–8. [Google Scholar] [CrossRef]

- Broo, D.G.; Kaynak, O.; Sait, S.M. Rethinking engineering education at the age of Industry 5.0. J. Ind. Inf. Integr. 2021, 25, 100311. [Google Scholar] [CrossRef]

- Shahidi Hamedani, S.; Aslam, S.; Mundher Oraibi, B.A.; Wah, Y.B.; Shahidi Hamedani, S. Transitioning towards tomorrow’s workforce: Education 5.0 in the landscape of Society 5.0—A systematic literature review. Educ. Sci. 2024, 14, 1041. [Google Scholar] [CrossRef]

- Calvetti, D.; Mêda, P.; de Sousa, H.; Chichorro Gonçalves, M.; Amorim Faria, J.M.; Moreira da Costa, J. Experiencing Education 5.0 for civil engineering. Procedia Comput. Sci. 2024, 232, 2416–2425. [Google Scholar] [CrossRef]

- Wang, Z. Media richness and continuance intention to online learning platforms: The mediating role of social presence and the moderating role of need for cognition. Front. Psychol. 2022, 13, 950501. [Google Scholar] [CrossRef] [PubMed]

- Oh, C.S.; Bailenson, J.N.; Welch, G.F. A systematic review of social presence: Definition, antecedents, and implications. Front. Robot. AI 2018, 5, 114. [Google Scholar] [CrossRef]

- Kreijns, K.; Xu, K.; Weidlich, J. Social presence: Conceptualization and measurement. Educ. Psychol. Rev. 2022, 34, 139–170. [Google Scholar] [CrossRef] [PubMed]

- Luo, Y.; Sun, L. The Effects of Social and Spatial Presence on Learning Engagement in Sustainable E-Learning. Sustainability 2025, 17, 4082. [Google Scholar] [CrossRef]

- Mari, A.; Mandelli, A.; Algesheimer, R. Empathic voice assistants: Enhancing consumer responses in voice commerce. J. Bus. Res. 2024, 175, 114566. [Google Scholar] [CrossRef]

- Zhai, C.; Wibowo, S.; Li, L.D. The effects of over-reliance on AI dialogue systems on students’ cognitive abilities: A systematic review. Smart Learn. Environ. 2024, 11, 28. [Google Scholar] [CrossRef]

- Ahmad, S.F.; Han, H.; Alam, M.M.; Rehmat, M.; Irshad, M.; Arraño-Muñoz, M.; Ariza-Montes, A. Impact of artificial intelligence on human loss in decision making, laziness and safety in education. Humanit. Soc. Sci. Commun. 2023, 10, 17. [Google Scholar] [CrossRef]

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Unified Theory of Acceptance and Use of Technology: A Synthesis and the Road Ahead. J. Assoc. Inf. Syst. 2016, 17, 328–376. [Google Scholar] [CrossRef]

- Lee, A.T.; Ramasamy, R.K.; Subbarao, A. Understanding Psychosocial Barriers to Healthcare Technology Adoption: A Review of TAM Technology Acceptance Model and Unified Theory of Acceptance and Use of Technology and UTAUT Frameworks. Healthcare 2025, 13, 250. [Google Scholar] [CrossRef]

- Alarcon, G.M.; Lyons, J.B.; Christensen, J.C.; Klosterman, S.L.; Bowers, M.A.; Ryan, T.J. The Effect of Propensity to Trust and Perceptions of Trustworthiness on Trust Behaviors in Dyads. Behav. Res. 2018, 50, 1906–1920. [Google Scholar] [CrossRef]

- Cetinkaya, N.E.; Krämer, N. Between Transparency and Trust: Identifying Key Factors in AI System Perception. Behav. Inf. Technol. 2025; online ahead of print. [Google Scholar] [CrossRef]

- Aydoğan, R.; Baarslag, T.; Gerding, E. Artificial Intelligence Techniques for Conflict Resolution. Group Decis. Negot. 2021, 30, 879–883. [Google Scholar] [CrossRef]

- Sundar, S.S.; Lee, E. Rise of Machine Agency: A Framework for Studying the Psychology of Human–AI Interaction (HAII). J. Comput.-Mediat. Commun. 2020, 25, 74–88. [Google Scholar] [CrossRef]

- Castellanos-Reyes, D.; Richardson, J.C.; Maeda, Y. The Evolution of Social Presence: A Longitudinal Exploration of the Effect of Online Students’ Peer-Interactions Using Social Network Analysis. Internet High. Educ. 2024, 61, 100939. [Google Scholar] [CrossRef]

- IEEE. Ethically Aligned Design: A Vision for Prioritizing Human Well-Being with Autonomous and Intelligent Systems, 2nd ed.; IEEE Standards Association: Piscataway, NJ, USA, 2019; Available online: https://standards.ieee.org/wp-content/uploads/import/documents/other/ead_v2.pdf (accessed on 30 April 2025).

- Vieriu, A.M.; Petrea, G. The Impact of Artificial Intelligence (AI) on Students’ Academic Development. Educ. Sci. 2025, 15, 343. [Google Scholar] [CrossRef]

- Đerić, E.; Frank, D.; Milković, M. Trust in Generative AI Tools: A Comparative Study of Higher Education Students, Teachers, and Researchers. Information 2025, 16, 622. [Google Scholar] [CrossRef]

- Bankins, S.; Formosa, P.; Griep, Y.; Richards, D. AI Decision Making with Dignity? Contrasting Workers’ Justice Perceptions of Human and AI Decision Making in a Human Resource Management Context. Inf. Syst. Front. 2022, 24, 857–875. [Google Scholar] [CrossRef]

- Marvi, R.; Foroudi, P.; AmirDadbar, N. Dynamics of User Engagement: AI Mastery Goal and the Paradox Mindset in AI–Employee Collaboration. Int. J. Inf. Manag. 2025, 83, 102908. [Google Scholar] [CrossRef]

- Xiao, Y.; Yu, S. Can ChatGPT Replace Humans in Crisis Communication? The Effects of AI-Mediated Crisis Communication on Stakeholder Satisfaction and Responsibility Attribution. Int. J. Inf. Manag. 2025, 80, 102835. [Google Scholar] [CrossRef]

- Chan, C.K.Y. AI as the Therapist: Student Insights on the Challenges of Using Generative AI for School Mental Health Frameworks. Behav. Sci. 2025, 15, 287. [Google Scholar] [CrossRef] [PubMed]

- Sposato, M. Artificial Intelligence in Educational Leadership: A Comprehensive Taxonomy and Future Directions. Int. J. Educ. Technol. High. Educ. 2025, 22, 20. [Google Scholar] [CrossRef]

- Sethi, S.S.; Jain, K. AI Technologies for Social Emotional Learning: Recent Research and Future Directions. J. Res. Innov. Teach. Learn. 2024, 17, 213–225. [Google Scholar] [CrossRef]

- Ciechanowski, L.; Przegalinska, A.; Magnuski, M.; Gloor, P. In the Shades of the Uncanny Valley: An Experimental Study of Human–Chatbot Interaction. Future Gener. Comput. Syst. 2019, 92, 539–548. [Google Scholar] [CrossRef]

- Murire, O.T. Artificial Intelligence and Its Role in Shaping Organizational Work Practices and Culture. Adm. Sci. 2024, 14, 316. [Google Scholar] [CrossRef]

- Kezar, A.; Eckel, P.D. The Effect of Institutional Culture on Change Strategies in Higher Education: Universal Principles or Culturally Responsive Concepts? J. High. Educ. 2002, 73, 435–460. [Google Scholar] [CrossRef]

- Aldosari, A.M.; Alramthi, S.M.; Eid, H.F. Improving Social Presence in Online Higher Education: Using Live Virtual Classrooms to Confront Learning Challenges during the COVID-19 Pandemic. Front. Psychol. 2022, 13, 994403. [Google Scholar] [CrossRef]

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion Paper: “So What If ChatGPT Wrote It?” Multidisciplinary Perspectives on Opportunities, Challenges and Implications of Generative Conversational AI for Research, Practice and Policy. Int. J. Inf. Manag. 2023, 71, 102642. [Google Scholar] [CrossRef]

- Ngo, A.; Kocoń, J. Integrating Personalized and Contextual Information in Fine-Grained Emotion Recognition in Text: A Multi-Source Fusion Approach with Explainability. Inf. Fusion 2025, 118, 102966. [Google Scholar] [CrossRef]

- Aly, M. Revolutionizing online education: Advanced facial expression recognition for real-time student progress tracking via deep learning model. Multimed. Tools Appl. 2025, 84, 12575–12614. [Google Scholar] [CrossRef]

- Chen, A.; Evans, R.; Zeng, R. Editorial: Coping with an AI-saturated world: Psychological dynamics and outcomes of AI-mediated communication. Front. Psychol. 2024, 15, 1479981. [Google Scholar] [CrossRef] [PubMed]

- Lim, J.S.; Hong, N.; Schneider, E. How Warm-Versus Competent-Toned AI Apologies Affect Trust and Forgiveness through Emotions and Perceived Sincerity. Comput. Hum. Behav. 2025, 172, 108761. [Google Scholar] [CrossRef]

- Carbonell, G.; Barbu, C.-M.; Vorgerd, L.; Brand, M.; Molnar, A. The Impact of Emotionality and Trust Cues on the Perceived Trustworthiness of Online Reviews. Cogent Bus. Manag. 2019, 6, 1586062. [Google Scholar] [CrossRef]

- Carlson, J.R.; Zmud, R.W. Channel expansion theory and the experiential nature of media richness perceptions. Acad. Manag. J. 1999, 42, 153–170. [Google Scholar] [CrossRef]

- Zhang, L.; Mo, L.; Sun, X.; Zhou, Z.; Ren, J. How visual and mental human-likeness of virtual influencers affects customer–brand relationship on e-commerce platform. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 200. [Google Scholar] [CrossRef]

- Rapanta, C.; Bhatt, I.; Bozkurt, A.; Chubb, L.A.; Erb, C.; Forsler, I.; Gravett, K.; Koole, M.; Lintner, T.; Örtegren, A.; et al. Critical GenAI literacy: Postdigital configurations. Postdigit. Sci. Educ. 2025; online ahead of print. [Google Scholar] [CrossRef]

- Hassani, H.; Silva, E.S.; Unger, S.; TajMazinani, M.; MacFeely, S. Artificial intelligence (AI) or intelligence augmentation (IA): What is the future? AI 2020, 1, 143–155. [Google Scholar] [CrossRef]

- Ifenthaler, D.; Yau, J.Y.K. Utilising learning analytics to support study success in higher education: A systematic review. Educ. Technol. Res. Dev. 2020, 68, 1961–1990. [Google Scholar] [CrossRef]

- Zafar, M. Normativity and AI moral agency. AI Ethics 2025, 5, 2605–2622. [Google Scholar] [CrossRef]

- Holmes, W.; Bialik, M.; Fadel, C. Artificial Intelligence in Education: Promises and Implications for Teaching and Learning; Global Series; Globethics Publications: Geneva, Switzerland, 2019. [Google Scholar] [CrossRef]

- Mattioli, M.; Cabitza, F. Not in my face: Challenges and ethical considerations in automatic face emotion recognition technology. Mach. Learn. Knowl. Extr. 2024, 6, 2201–2231. [Google Scholar] [CrossRef]

- Choung, H.; Seberger, J.S.; David, P. When AI is perceived to be fairer than a human: Understanding perceptions of algorithmic decisions in a job application context. Int. J. Hum.-Comput. Interact. 2023, 40, 7451–7468. [Google Scholar] [CrossRef]

- Wang, Y.; Gong, D.; Xiao, R.; Wu, X.; Zhang, H. A systematic review on extended reality-mediated multi-user social engagement. Systems 2024, 12, 396. [Google Scholar] [CrossRef]

- Cummings, J.J.; Wertz, E.E. Capturing social presence: Concept explication through an empirical analysis of social presence measures. J. Comput.-Mediat. Commun. 2023, 28, zmac027. [Google Scholar] [CrossRef]

- Jim, J.R.; Talukder, M.A.R.; Malakar, P.; Kabir, M.M.; Nur, K.; Mridha, M.F. Recent advancements and challenges of NLP-based sentiment analysis: A state-of-the-art review. Nat. Lang. Process. J. 2024, 6, 100059. [Google Scholar] [CrossRef]

- Papa, R.; Jackson, K.M. (Eds.) AI Transforms Twentieth-Century Learning. In Artificial Intelligence, Human Agency and the Educational Leader; Springer: Cham, Switzerland, 2021; pp. 1–32. [Google Scholar] [CrossRef]

- Ki, S.; Park, S.; Ryu, J.; Kim, J.; Kim, I. Alone but not isolated: Social presence and cognitive load in learning with 360 virtual reality videos. Front. Psychol. 2024, 15, 1305477. [Google Scholar] [CrossRef]

- Hsu, A.; Chaudhary, D. AI4PCR: Artificial intelligence for practicing conflict resolution. Comput. Hum. Behav. Artif. Hum. 2023, 1, 100002. [Google Scholar] [CrossRef]

- Li, B.J.; Lee, E.W.J.; Goh, Z.H.; Tandoc, E. From frequency to fatigue: Exploring the influence of videoconference use on videoconference fatigue in Singapore. Comput. Hum. Behav. Rep. 2022, 7, 100214. [Google Scholar] [CrossRef]

- Denzin, N.K.; Lincoln, Y.S. (Eds.) The SAGE Handbook of Qualitative Research, 5th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2018; ISBN 978-1-4833-4980-0. [Google Scholar]

- Sun, X.; Yang, Y.; Song, Y. Unlocking the Synergy: Increasing Productivity through Human–AI Collaboration in the Industry 5.0 Era. Comput. Ind. Eng. 2025, 200, 110657. [Google Scholar] [CrossRef]

- Kinchin, N. “Voiceless”: The procedural gap in algorithmic justice. Int. J. Law Inf. Technol. 2024, 32, eaae024. [Google Scholar] [CrossRef]

- Wanner, J.; Herm, L.V.; Heinrich, K.; Janiesch, C. The effect of transparency and trust on intelligent system acceptance: Evidence from a user-based study. Electron. Mark. 2022, 32, 2079–2102. [Google Scholar] [CrossRef]

- Cao, N.; Cheung, S.-O.; Li, K. Perceptive biases in construction mediation: Evidence and application of artificial intelligence. Buildings 2023, 13, 2460. [Google Scholar] [CrossRef]

- Al-Adwan, A.S.; Li, N.; Al-Adwan, A.; Abbasi, G.A.; Albelbisi, N.A.; Habibi, A. Extending the technology acceptance model (TAM) to predict university students’ intentions to use metaverse-based learning platforms. Educ. Inf. Technol. 2023, 28, 15381–15413. [Google Scholar] [CrossRef]

- Ibrahim, F.; Münscher, J.-C.; Daseking, M.; Telle, N.-T. The technology acceptance model and adopter type analysis in the context of artificial intelligence. Front. Artif. Intell. 2025, 7, 1496518. [Google Scholar] [CrossRef]

- Yusuf, A.; Pervin, N.; Román-González, M. Generative AI and the future of higher education: A threat to academic integrity or reformation? Evidence from multicultural perspectives. Int. J. Educ. Technol. High. Educ. 2024, 21, 21. [Google Scholar] [CrossRef]

- Oppong, M. Leveraging predictive analytics to enhance student retention: A case study of Georgia State University. ResearchGate, 2024; accessed online. [Google Scholar] [CrossRef]

- Lopes, S.; Chalil Madathil, K.; Bertrand, J.; Brady, C.; Li, D.; McNeese, N. Effect of mental fatigue on trust and workload with AI-enabled infrastructure visual inspection systems. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Atlanta, GA, USA, 10–14 October 2022; Sage: Los Angeles, CA, USA, 2022; Volume 66, pp. 285–286. [Google Scholar] [CrossRef]

- Mosakas, K. On the moral status of social robots: Considering the consciousness criterion. AI Soc. 2021, 36, 429–443. [Google Scholar] [CrossRef]

- Dong, X.; Wang, Z.; Han, S. Mitigating learning burnout caused by generative artificial intelligence misuse in higher education: A case study in programming language teaching. Informatics 2025, 12, 51. [Google Scholar] [CrossRef]

- Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862. [Google Scholar] [CrossRef]

- Selwyn, N. The future of AI and education: Some cautionary notes. Eur. J. Educ. 2022, 57, 620–631. [Google Scholar] [CrossRef]

- Bittle, K.; El-Gayar, O. Generative AI and academic integrity in higher education: A systematic review and research agenda. Information 2025, 16, 296. [Google Scholar] [CrossRef]

- Buijsman, S.; Carter, S.E.; Bermúdez, J.P. Autonomy by design: Preserving human autonomy in AI decision-support. Philos. Technol. 2025, 38, 97. [Google Scholar] [CrossRef]

- Sadek, M.; Mougenot, C. Challenges in value-sensitive AI design: Insights from AI practitioner interviews. Int. J. Hum.-Comput. Interact. 2024; accessed online. [Google Scholar] [CrossRef]

- Ulven, J.B.; Wangen, G. A systematic review of cybersecurity risks in higher education. Future Internet 2021, 13, 39. [Google Scholar] [CrossRef]

- Creswell, J.W.; Creswell, J.D. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches, 5th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2018. [Google Scholar]

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101. [Google Scholar] [CrossRef]

- Yin, R.K. Case Study Research: Design and Methods, 4th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2009. [Google Scholar]

- Boateng, G.O.; Neilands, T.B.; Frongillo, E.A.; Melgar-Quiñonez, H.R.; Young, S.L. Best practices for developing and validating scales for health, social, and behavioral research: A primer. Front. Public Health 2018, 6, 149. [Google Scholar] [CrossRef]

- DeVellis, R.F.; Thorpe, C.T. Scale Development: Theory and Applications, 5th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2021. [Google Scholar]

- Nunnally, J.C.; Bernstein, I.H. Psychometric Theory, 3rd ed.; McGraw-Hill: New York, NY, USA, 1994. [Google Scholar]

- Vaske, J.J.; Beaman, J.; Sponarski, C.C. Rethinking internal consistency in Cronbach’s alpha. Leis. Sci. 2016, 39, 163–173. [Google Scholar] [CrossRef]

- Fošner, A. University students’ attitudes and perceptions towards AI tools: Implications for sustainable educational practices. Sustainability 2024, 16, 8668. [Google Scholar] [CrossRef]

- Bulathwela, S.; Pérez-Ortiz, M.; Holloway, C.; Cukurova, M.; Shawe-Taylor, J. Artificial intelligence alone will not democratise education: On educational inequality, techno-solutionism and inclusive tools. Sustainability 2024, 16, 781. [Google Scholar] [CrossRef]

- Nazaretsky, T.; Mejia-Domenzain, P.; Swamy, V.; Frej, J.; Käser, T. The critical role of trust in adopting AI-powered educational technology for learning: An instrument for measuring student perceptions. Comput. Educ. Artif. Intell. 2025, 8, 100368. [Google Scholar] [CrossRef]

- Al-Zahrani, A.M.; Alasmari, T.M. Exploring the impact of artificial intelligence on higher education: The dynamics of ethical, social, and educational implications. Humanit. Soc. Sci. Commun. 2024, 11, 912. [Google Scholar] [CrossRef]

- Hair, J.F.; Babin, B.J.; Anderson, R.E.; Black, W.C. Multivariate Data Analysis, 8th ed.; Cengage Learning: Boston, MA, USA, 2019. [Google Scholar]

- Bond, M.; Khosravi, H.; De Laat, M.; Bergdahl, N.; Negrea, V.; Oxley, E.; Pham, P.; Siemens, S.W.C.G. A meta systematic review of artificial intelligence in higher education: A call for increased ethics, collaboration, and rigour. Int. J. Educ. Technol. High. Educ. 2024, 21, 4. [Google Scholar] [CrossRef]

- Rogers, E.M. Diffusion of Innovations, 5th ed.; Free Press: New York, NY, USA, 2003. [Google Scholar]

- Bates, T.; Cobo, C.; Mariño, O.; Wheeler, S. Can artificial intelligence transform higher education? Int. J. Educ. Technol. High. Educ. 2020, 17, 42. [Google Scholar] [CrossRef]

- Stacks, D.W.; Salwen, M.B. (Eds.) An Integrated Approach to Communication Theory and Research, 2nd ed.; Routledge: New York, NY, USA, 2008. [Google Scholar] [CrossRef]

- Viberg, O.; Hatakka, M.; Bälter, O.; Mavroudi, A. The current landscape of learning analytics in higher education. Comput. Hum. Behav. 2018, 89, 98–110. [Google Scholar] [CrossRef]

- Choung, H.; David, P.; Ross, A. Trust in AI and its role in the acceptance of AI technologies. Int. J. Hum.-Comput. Interact. 2022, 39, 1727–1739. [Google Scholar] [CrossRef]

- Zhou, M.; Liu, L.; Feng, Y. Building citizen trust to enhance satisfaction in digital public services: The role of empathetic chatbot communication. Behav. Inf. Technol. 2025; accessed online. [Google Scholar] [CrossRef]

- Pew Research Center. How Americans View Data Privacy: The Role of Technology Companies, AI and Regulation—Plus Personal Experiences with Data Breaches, Passwords, Cybersecurity and Privacy Policies; Pew Research Center: Washington, DC, USA, 2023; Available online: https://www.pewresearch.org/internet/2023/10/18/how-americans-view-data-privacy/ (accessed on 10 April 2025).

- Willems, J.; Schmid, M.J.; Vanderelst, D.; Vogel, D.; Ebinger, F. AI-Driven Public Services and the Privacy Paradox: Do Citizens Really Care about Their Privacy? Public Manag. Rev. 2022, 24, 2116–2134. [Google Scholar] [CrossRef]

- Hancock, P.A.; Kessler, T.T.; Kaplan, A.D.; Stowers, K.; Brill, J.C.; Billings, D.R.; Schaefer, K.E.; Szalma, J.L. How and Why Humans Trust: A Meta-Analysis and Elaborated Model. Front. Psychol. 2023, 14, 1081086. [Google Scholar] [CrossRef]

- Mustofa, R.H.; Kuncoro, T.G.; Atmono, D.; Hermawan, H.D.; Sukirman. Extending the technology acceptance model: The role of subjective norms, ethics, and trust in AI tool adoption among students. Comput. Educ. Artif. Intell. 2025, 8, 100379. [Google Scholar] [CrossRef]

- Stahl, B.C. Embedding responsibility in intelligent systems: From AI ethics to responsible AI ecosystems. Sci. Rep. 2023, 13, 7586. [Google Scholar] [CrossRef]

- Renick, J.; Wegemer, C.M.; Reich, S.M. Relational principles for enacting social justice values in educational partnerships. J. High. Educ. Outreach Engagem. 2024, 28, 135–152. [Google Scholar]

- Kamalov, F.; Santandreu Calonge, D.; Gurrib, I. New era of artificial intelligence in education: Towards a sustainable multifaceted revolution. Sustainability 2023, 15, 12451. [Google Scholar] [CrossRef]

- Kozak, J.; Fel, S. How sociodemographic factors relate to trust in artificial intelligence among students in Poland and the United Kingdom. Sci. Rep. 2024, 14, 28776. [Google Scholar] [CrossRef]

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An Integrative Model of Organizational Trust. Acad. Manag. Rev. 1995, 20, 709–734. [Google Scholar] [CrossRef]

- Saranya, A.; Subhashini, R. A systematic review of explainable artificial intelligence models and applications: Recent developments and future trends. Decis. Anal. J. 2023, 7, 100230. [Google Scholar] [CrossRef]

- Baker, R.S.; Hawn, A. Algorithmic bias in education. Int. J. Artif. Intell. Educ. 2022, 32, 1052–1092. [Google Scholar] [CrossRef]

- Almheiri, H.M.; Ahmad, S.Z.; Khalid, K.; Ngah, A.H. Examining the impact of artificial intelligence capability on dynamic capabilities, organizational creativity and organization performance in public organizations. J. Syst. Inf. Technol. 2025, 27, 1–20. [Google Scholar] [CrossRef]

- Blanka, C.; Krumay, B.; Rueckel, D. The interplay of digital transformation and employee competency: A design science approach. Technol. Forecast. Soc. Change 2022, 178, 121575. [Google Scholar] [CrossRef]

- Díaz-Rodríguez, N.; Del Ser, J.; Coeckelbergh, M.; López de Prado, M.; Herrera-Viedma, E.; Herrera, F. Connecting the dots in trustworthy artificial intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Inf. Fusion 2023, 99, 101896. [Google Scholar] [CrossRef]

- Tzirides, A.O.; Zapata, G.; Kastania, N.P.; Saini, A.K.; Castro, V.; Ismael, S.A.; You, Y.; dos Santos, T.A.; Searsmith, D.; O’Brien, C.; et al. Combining human and artificial intelligence for enhanced AI literacy in higher education. Comput. Educ. Open 2024, 6, 100184. [Google Scholar] [CrossRef]

- Liu, B.L.; Morales, D.; Roser-Chinchilla, J.; Sabzalieva, E.; Valentini, A.; Vieira do Nascimento, D.; Yerovi, C. Harnessing the Era of Artificial Intelligence in Higher Education: A Primer for Higher Education Stakeholders; UNESCO International Institute for Higher Education in Latin America and the Caribbean: Paris, France; Caracas, Venezuela, 2023; Available online: https://unesdoc.unesco.org/ark:/48223/pf0000386670 (accessed on 10 April 2025).

- Hofstede, G. Culture’s Consequences: Comparing Values, Behaviors, Institutions and Organizations Across Nations, 2nd ed.; SAGE Publications: Thousand Oaks, CA, USA, 2001.

- Dell’Aquila, E.; Ponticorvo, M.; Limone, P. Psychological foundations for effective human–computer interaction in education. Appl. Sci. 2025, 15, 3194.

- Honnibal, M.; Montani, I.; Van Landeghem, S.; Boyd, A. spaCy: Industrial-Strength Natural Language Processing in Python. 2020. Available online: https://spacy.io (accessed on 10 April 2025).

- Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, E.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised Cross-lingual Representation Learning at Scale. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020), Online, 5–10 July 2020; pp. 8440–8451.

- Chen, S.; Wang, C.; Chen, Z.; Wu, Y.; Liu, S.; Chen, Z.; Li, J.; Kanda, N.; Yoshioka, T.; Xiao, X.; et al. WavLM: Large-Scale Self-Supervised Pre-Training for Full-Stack Speech Processing. IEEE J. Sel. Top. Signal Process. 2022, 16, 1505–1518.

- Chai, C.P. Comparison of Text Preprocessing Methods. Nat. Lang. Eng. 2022, 29, 509–553.

- Bunt, H.; Petukhova, V.; Gilmartin, E.; Pelachaud, C.; Fang, A.; Keizer, S.; Prévot, L. The ISO Standard for Dialogue Act Annotation, Second Edition. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; Calzolari, N., Béchet, F., Blache, P., Choukri, K., Cieri, C., Declerck, T., Goggi, S., Isahara, H., Maegaard, B., Mariani, J., et al., Eds.; European Language Resources Association: Marseille, France, 2020; pp. 549–558, ISBN 979-10-95546-34-4. Available online: https://aclanthology.org/2020.lrec-1.69/ (accessed on 1 April 2025).

- Nguyen, G.; Dlugolinsky, S.; Bobák, M.; Tran, V.; García, Á.L.; Heredia, I.; Malík, P.; Hluchý, L. Machine Learning and Deep Learning Frameworks and Libraries for Large-Scale Data Mining: A Survey. Artif. Intell. Rev. 2019, 52, 77–124.

- Navarro, G. A Guided Tour to Approximate String Matching. ACM Comput. Surv. 2001, 33, 31–88.

- Suh, K.S. Impact of Communication Medium on Task Performance and Satisfaction: An Examination of Media-Richness Theory. Inf. Manag. 1999, 35, 295–312.

- Walther, J.B. Interpersonal Effects in Computer-Mediated Interaction: A Relational Perspective. Commun. Res. 1992, 19, 52–90.

- Ma, L.; Zhang, X.; Xu, Y.; Liu, C. Perceived Social Fairness and Trust in Government Serially Mediate the Effect of Governance Quality on Subjective Well-Being. Sci. Rep. 2024, 14, 14321.

- Triantari, S.; Vavouras, E. Decision-making in the modern manager-leader: Organizational ethics, business ethics, and corporate social responsibility. Cogito 2024, 16, 7–15.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gkanatsiou, M.A.; Triantari, S.; Tzartzas, G.; Kotopoulos, T.; Gkanatsios, S. Rewired Leadership: Integrating AI-Powered Mediation and Decision-Making in Higher Education Institutions. Technologies 2025, 13, 396. https://doi.org/10.3390/technologies13090396
