1. Introduction

Trajectory computation is the foundation of mission planning and decision-making (MPDM) for rocket-powered vehicle swarms, because trajectory simulation validates the outcomes of MPDM and thereby raises the mission success rate. Since MPDM for a rocket-powered vehicle swarm must run in real time, the speed of trajectory computation is increasingly critical. MPDM therefore poses a new challenge: computing the trajectories of a rocket-powered vehicle swarm in real time.

Based on existing research, the trajectory prediction problem can be classified into two types according to perspective: predicting the trajectory of the own flight vehicle and predicting the trajectory of the target flight vehicle. The former typically involves seeking the optimal trajectory for a flight vehicle under certain constraints. The latter aims to predict the future trajectory within a specific time frame based on the historical trajectory of the target, for the purpose of intent recognition. Although this research addresses trajectory prediction for the own flight vehicle, theoretical methods from both categories can benefit each other. Related research in both categories is therefore summarized below.

For target flight vehicle trajectory prediction, there are currently two main methods. One method is based on the principle of the Kalman filter (KF). This theoretical approach can be used to predict the future state parameters under the constraints of known target vehicle parameters, Gaussian white noise distribution, and associated measurement noise. However, due to the diversity of target vehicle maneuvers, KF methods based on a single dynamical model often struggle to predict accurately. Thus, methods like multi-model filtering [1,2,3] and unscented Kalman filtering [4,5,6] have been proposed to address this issue. The other approach is based on deep learning. In order to overcome the inability of traditional filtering methods to adapt to the varying model, numerous scholars have explored deep neural networks (DNNs) for model-free trajectory prediction. Considering the temporal characteristics of trajectory data, the recurrent neural network represented by long short-term memory (LSTM) has been widely used to train trajectory prediction models. D. Lui designed two distinct model architectures using LSTM, trained with significant offline real trajectory data to predict the positions and velocities of the flight vehicle from raw radar measurement data, and compared their predictions against the interactive multiple model, concluding that data-driven models have good generalization capabilities [7]. Similar work has been done in [8,9,10].

The methods for predicting the trajectory of the own flight vehicle are also mainly classified into two categories. One type includes traditional analytical methods and numerical integration methods. In the works of [11,12,13,14,15,16], the analytic solution of the glide trajectory for a hypersonic vehicle is derived by a perturbation method and spectral decomposition method. The accuracy of the analytical solution depends on the accuracy and complexity of the model, and thus the derivation process of analytical solutions is often extremely complicated, and the accuracy of results is difficult to guarantee. The numerical integration method is different from the analytical method. Although the high accuracy of its calculation results can be guaranteed, it requires a large amount of computation and a long computation time. The common numerical integration methods include the Runge–Kutta methods and so on. In addition, some intelligent optimization algorithms have been combined to rapidly solve the rocket-powered trajectory parameters. Wei et al. [17] numerically solved trajectory parameters through polynomial fitting and the Levenberg–Marquardt (L-M) search method. Furthermore, in the work of [18], a method combining the BP neural network and L-M algorithm was proposed to achieve the accurate and fast computation of a rocket-powered trajectory. In this method, the BP neural network is used to quickly predict the initial values of the rocket-powered trajectory parameters, and the L-M algorithm is used for further numerical optimization calculation. Based on the high efficiency of gradient search and the stochastic nature of particle swarm search, a hybrid particle swarm algorithm was proposed for the optimization calculation of the rendezvous trajectory of the ascent section of a launch vehicle [19]. The second category involves DNN-based approaches. Taking advantage of the excellent fitting ability of neural networks to nonlinear models, researchers have applied them to fit and approximate missile trajectories for rapid prediction. Dong et al. [20] utilized BP neural networks to fit the coefficients of missile trajectory equations to rapidly generate trajectory curves, but in this method, the predictive accuracy remains constrained by the precision of the equations, failing to fully leverage the excellent fitting capability of the neural network. Wang et al. [21] developed a neural network structure with two hidden layers, trained on traditional model-generated trajectory data. In this way, the fast prediction of rocket-powered trajectories can be realized within a small error margin and its solving time is only one-fourth of the traditional iterative calculation algorithms. Wang et al. [22] used the optimal trajectory data obtained through pseudo-spectral methods to train deep neural networks, obtaining optimal trajectory neural network models. Compared to traditional methods, this approach not only meets accuracy requirements but also exhibits good generalization. Similar works using DNN-based onboard controllers for real-time optimization control include [23,24]. In addition, there have been preliminary DNN-based algorithms developed to solve the problem of precise landing [25,26,27] and the orbit transfer of spacecraft [28,29,30,31].

Leveraging the strong generalization ability of deep learning methods and the inherent efficiency of matrix-based computation, many studies have developed DNN-based approaches to enable onboard applications that meet real-time computing requirements [22,23,31,32]. Building on the success of deep learning techniques in rapidly generating optimal control solutions, this paper proposes a real-time trajectory computation method based on deep neural networks for rocket-powered vehicles. This method ensures high accuracy while achieving millisecond-level trajectory computation, and compared to traditional iterative calculation methods, it better meets the real-time requirements of MPDM for rocket-powered vehicles. Moreover, the models in [21,22] exhibit predictive errors in the kilometer range in three-dimensional space, whereas the model in this paper is more accurate, with average errors within the hundred-meter range. The DNN-based trajectory prediction model obtained in this paper takes the starting and target positions of rocket-powered vehicles as input and outputs three-dimensional trajectory scatter data with a time sequence. The main contributions of this paper are in two aspects:

To fully leverage the advantages of deep neural networks (DNNs), this paper proposes a technical framework based on the segmented trajectory characteristics of rocket-powered vehicles, consisting of data generation and segmentation, segmented training, and multi-model fusion prediction. Monte Carlo simulation experiments were conducted on the final trajectory generator, confirming the effectiveness of the proposed approach.

To support the practical training of the proposed DNN-based framework, trajectory data is generated using the traditional iterative calculation method. In order to ensure that the dataset covers arbitrary launch conditions within the national territory, characteristic parameters for trajectory computation are determined based on trajectory principles, allowing for effective range definition and gridding. Additionally, the data is segmented in accordance with the phase-based training strategy, while ensuring logical consistency across different trajectory segments.

Overall, this research addresses the central problem that the computation time of the traditional method cannot meet the real-time decision-making and rapid-response needs of flight vehicles, which gives it considerable engineering significance.

The rest of the paper is arranged as follows. Section 2 describes the problem and the research idea. Section 3 details the creation of the dataset and the implementation of the segmentation strategy. Section 4 describes the process of determining the neural network structure and training hyperparameters, and obtains the relevant neural network model. Section 5 describes the multi-scaled neural network model cooperation strategy and performs the associated numerical simulations, evaluating the performance of the DNN-based trajectory prediction model. The main work of this paper is summarized in Section 6.

3. Homemade Dataset and Preprocessing

3.1. Determination of Rocket-Powered Trajectory Calculation Characteristic Parameters

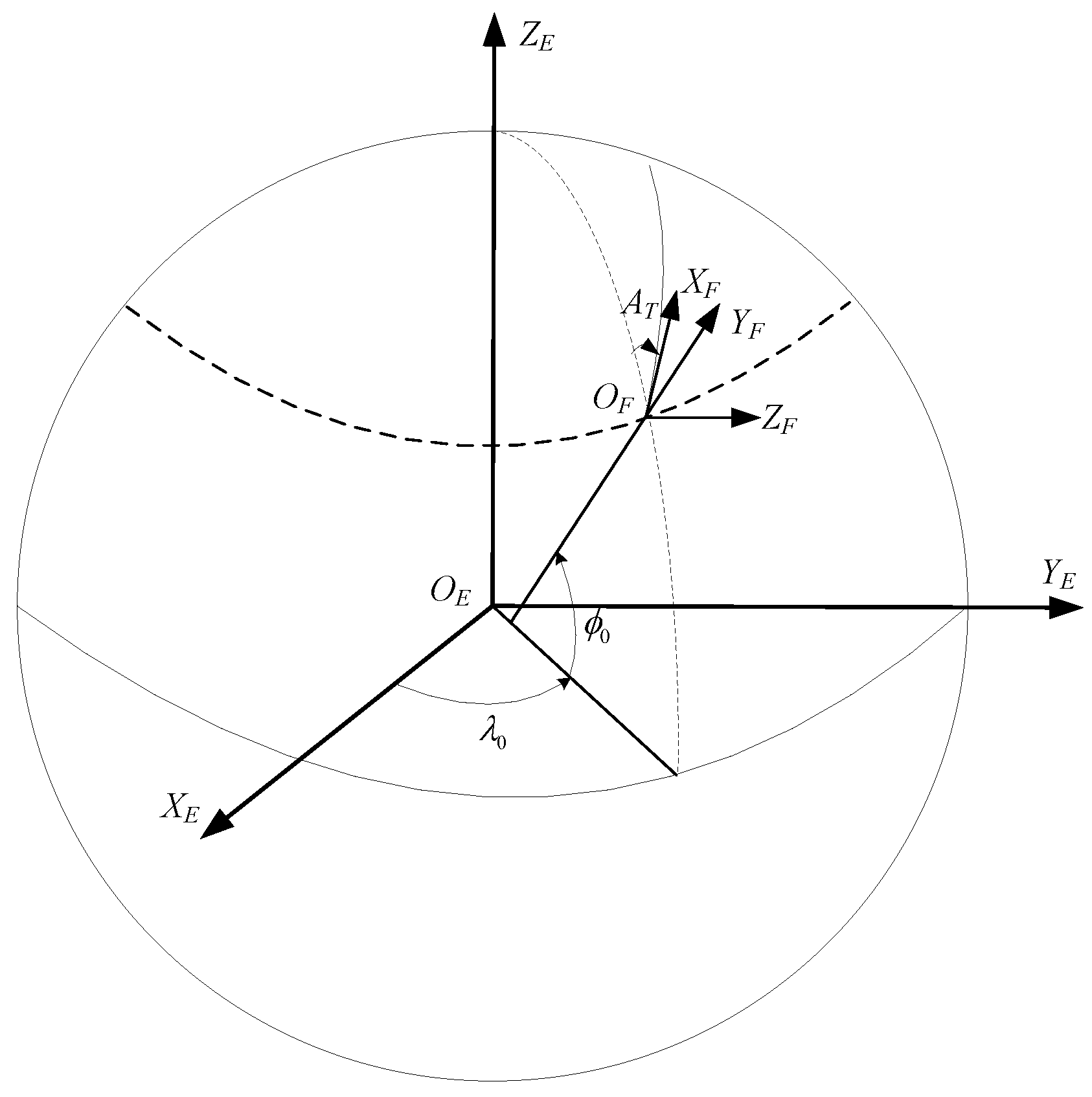

To describe the positions and the law of motion of the flight vehicle, the Earth-Centered–Earth-Fixed (ECEF) system and the Launch Coordinate system (LCS) are commonly employed. The sample data involved in this paper are generated in the LCS. Therefore, the LCS is used as the reference system to explore how the rocket-powered trajectory calculation characteristic parameters are determined.

Figure 2 shows the ECEF system and the LCS. The origin of the LCS is the starting point of the flight vehicle. The y axis is defined by a vertical line through the starting point, with the upward direction positive, and the x axis is perpendicular to the y axis and points in the direction of aiming. At the starting point, the angle between the x axis and due north along the astronomical meridian is referred to as the geodetic azimuth of the starting point. The z axis together with the x and y axes forms a right-handed rectangular coordinate system. After the longitude, latitude, and altitude of the starting point and the target point are determined, the geodetic azimuth can be calculated through the inverse geodetic algorithm. Then, the position of the LCS is completely determined, and thus a standard rocket-powered trajectory curve is determined.

In order to acquire a large volume of samples, the rocket-powered trajectory calculation characteristic parameters need to be gridded at specific intervals and arranged in combinations to form multiple sets of initial parameters. To ensure broad and representative sample coverage, the scope of each characteristic parameter must be determined prior to the gridding. If the latitude, longitude, and altitude of the starting point and target point were taken as characteristic parameters, the scope of the latitude and longitude of the target point could not be precisely determined. Therefore, based on the principles of geodetic direct and inverse calculation, this study utilizes the latitude, longitude, and altitude of the starting point, the range, the geodetic azimuth, and the altitude of the target point as characteristic parameters. Furthermore, considering the Earth as a homogeneous rotating ellipsoid and assuming the same flight environment for each rocket-powered trajectory, the variation in longitude at the starting point has minimal influence on the flight trajectory. That is, once the other characteristic parameters are determined, the standard trajectory remains consistent at each longitude. Consequently, the longitude of the starting point is removed, and the latitude and altitude of the starting point, the range, the geodetic azimuth, and the altitude of the target point are chosen as the final characteristic parameters. For ease of description, the relevant parameter symbols are defined as shown in Table 1.

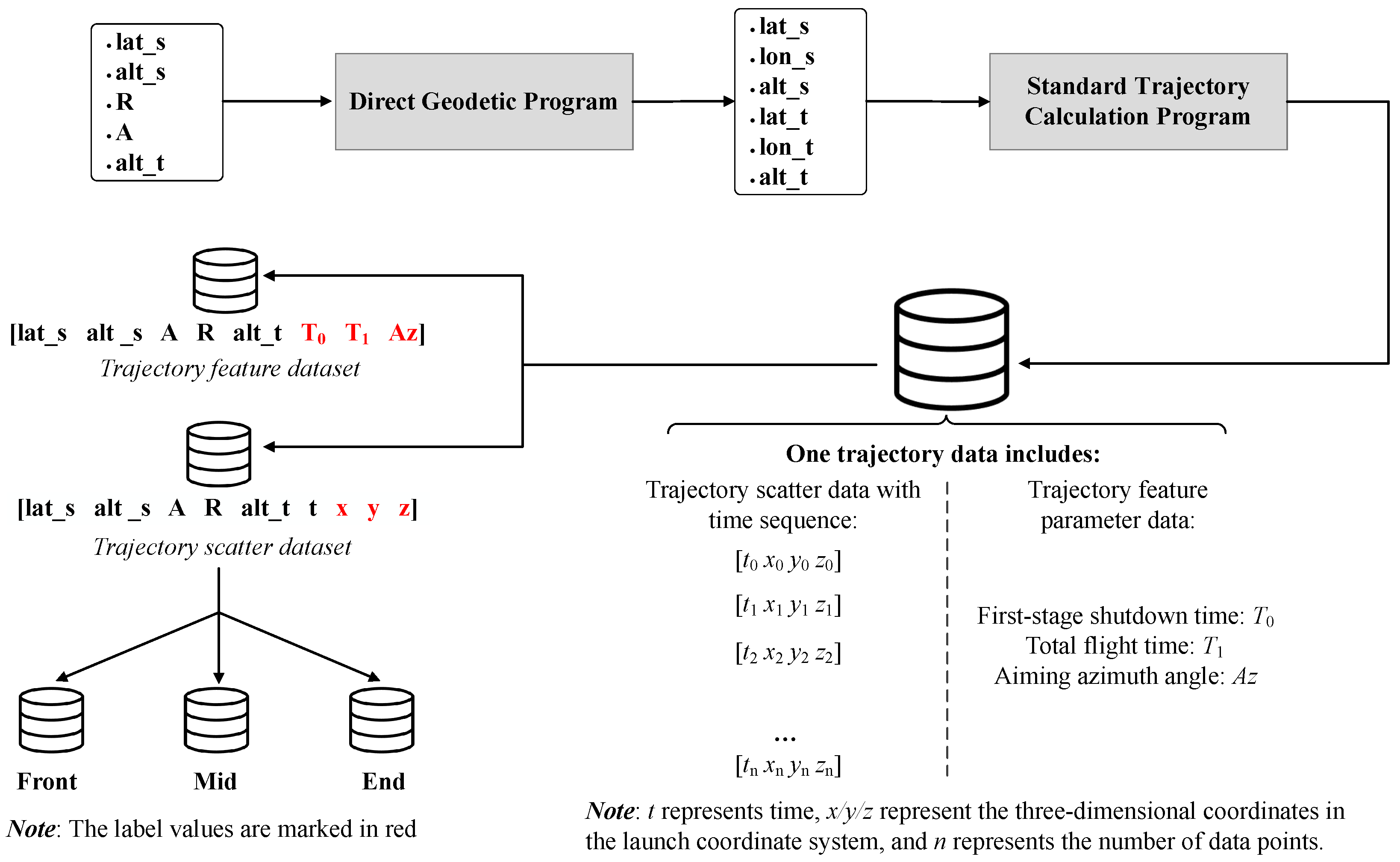

3.2. Training Data Generation

For DNNs, the quality of the sample data sets the upper limit for the predictive performance of the model. Hence, training samples should strive to cover all possible scenarios of the problem. After determining the rocket-powered trajectory calculation characteristic parameters, the scope and interval of each parameter are further defined. These intervals are established based on a comprehensive assessment of the hardware conditions of the computing platform and the training effects obtained with different intervals.

Following the ranges and intervals outlined in Table 2 for gridding, a total of 599,508 sets of rocket-powered trajectory initial parameters are generated. Should all initial parameters prove valid, this would result in 599,508 individual trajectories. For a fixed starting point, the gridding of the range and the geodetic azimuth is depicted in Figure 3.
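The gridding step described above can be sketched as a Cartesian product of per-parameter grids. The parameter names, ranges, intervals, and units below are hypothetical placeholders chosen for illustration; they are not the values of Table 2.

```python
import itertools

import numpy as np


def build_parameter_grid(ranges):
    """Return every combination of gridded characteristic parameters.

    ranges maps a parameter name to (start, stop, step); stop is inclusive.
    """
    axes = {
        name: np.arange(start, stop + step / 2, step)
        for name, (start, stop, step) in ranges.items()
    }
    names = list(axes)
    combos = itertools.product(*(axes[n] for n in names))
    return [dict(zip(names, map(float, c))) for c in combos]


# Hypothetical ranges and intervals, for illustration only.
example_ranges = {
    "start_latitude_deg": (20.0, 40.0, 10.0),
    "start_altitude_m": (0.0, 2000.0, 1000.0),
    "range_m": (300e3, 500e3, 100e3),
    "azimuth_deg": (0.0, 270.0, 90.0),
    "target_altitude_m": (0.0, 4000.0, 2000.0),
}
grid = build_parameter_grid(example_ranges)
```

Each dictionary in `grid` is one set of initial parameters; the total count is the product of the number of grid points per parameter, which is how a figure such as 599,508 arises from the ranges and intervals of Table 2.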

After obtaining multiple sets of initial parameters, the corresponding geographic coordinates of the starting point and the target point are computed by the direct geodetic algorithm. Then, the trajectory data is calculated using a standard trajectory calculation program, which applies fourth-order Runge–Kutta numerical integration to the motion model of a flight vehicle. Its solution process rests on the following assumptions: (1) Under the action of the control system, the vehicle is always in an instantaneous torque equilibrium state; (2) The Euler angles involved in the computation are considered to be small quantities, meaning that their sine values are approximated by their radian values, and their cosine values are taken as 1. When products of these angle values appear, they are treated as higher-order terms and thus neglected. Upon acquiring the raw trajectory data, further processing is required to derive both trajectory feature data samples and trajectory scatter data samples (processed into three segments: Front, Mid, and End), where the trajectory scatter data unfolds each trajectory in chronological sequence. The specific workflow for sample generation is illustrated in Figure 4.
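As a minimal sketch of the fourth-order Runge–Kutta integration mentioned above, the following code advances a state vector with a fixed step. The dynamics function `toy_dynamics` is a deliberately simple stand-in (vertical motion under constant gravity) and is an assumption for illustration; it is not the motion model used by the standard trajectory calculation program.

```python
import numpy as np


def rk4_step(f, t, y, h):
    """Advance the state y by one classical fourth-order Runge-Kutta step."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def integrate(f, y0, t0, t1, h):
    """Integrate dy/dt = f(t, y) from t0 to t1 with fixed step h."""
    n_steps = int(round((t1 - t0) / h))
    ts = t0 + h * np.arange(n_steps + 1)
    ys = np.empty((n_steps + 1, len(y0)))
    ys[0] = y0
    for i in range(n_steps):
        ys[i + 1] = rk4_step(f, ts[i], ys[i], h)
    return ts, ys


def toy_dynamics(t, state):
    """Toy model (assumption): [height, vertical speed] under constant gravity."""
    g = 9.80665  # standard gravity, m/s^2
    return np.array([state[1], -g])


ts, ys = integrate(toy_dynamics, np.array([0.0, 100.0]), 0.0, 10.0, 1.0)
```

Each step costs four evaluations of the dynamics, which is one reason iterative trajectory solvers built on such integration become expensive when many trajectories must be computed.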

3.3. Segmented Training Strategy Based on Domain Knowledge

The segmented training strategy refers to segmenting trajectory scatter data into three segments, as shown in Figure 4, to train neural networks of different structures and obtain the corresponding models. The strategy is designed based on domain-specific knowledge of the dynamics and segmented aerodynamic effects of a rocket-powered vehicle. According to whether the main engine of the flight vehicle is working or not, the flight trajectory is divided into active and passive segments. Furthermore, based on the extent of aerodynamic forces acting on the vehicle, the passive segment can be divided into the free-flight segment and the re-entry segment. Thus, the flight process of the vehicle is sequentially divided into an active segment, a free-flight segment, and a re-entry segment.

In the active segment, the main forces acting on the flight vehicle include gravity, engine thrust, aerodynamic forces, control forces, and the corresponding moments they generate. During the free-flight segment, the influence of gravity on the flight vehicle is significantly greater than that of aerodynamic forces. In contrast, in the re-entry segment the braking effect of the aerodynamic force on the flight vehicle is much greater than the influence of gravity, resulting in intense aerodynamic heating and conditions markedly distinct from those in the free-flight segment. Overall, the force conditions experienced by the vehicle vary significantly across the different flight phases, resulting in distinct differential equations of motion for each segment. Therefore, during model training, neural networks with different structures are required to fit the nonlinear relationships corresponding to the equations of motion in each phase, thereby improving the overall prediction accuracy of the trained model. In conclusion, the segmented training strategy is theoretically supported and enhances the interpretability of the multi-scaled neural network model.

For the trajectory scatter data, segmentation boundaries are defined as follows. For each trajectory, the scatter data from time 0 up to the first-stage shutdown time forms the Front sample (for the active segment). Given that the free-flight segment typically constitutes 80% to 90% of the total trajectory time and the maximum flight time in the samples is 666.1 s, the combined duration of the re-entry and active segments is at most approximately 133 s. Using the first-stage shutdown time as the endpoint of the active segment, the minimum shutdown time among all samples is 58.6 s, implying that the maximum duration of the re-entry segment in the samples is approximately 74.4 s. Given a time interval of 1 s between scatter data points, the last 80 rows of scatter data for each trajectory are selected as the End sample (for the re-entry segment), while the remaining intermediate scatter data is assigned to the Mid sample (for the free-flight segment).
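The segmentation rule above can be sketched as follows. The column layout `[t, x, y, z]`, the function name `split_trajectory`, and the choice to include the row at the shutdown time in the Front sample are assumptions made for illustration.

```python
import numpy as np

END_ROWS = 80  # last 80 rows (1 s spacing) form the End sample


def split_trajectory(scatter, shutdown_time, end_rows=END_ROWS):
    """Split one trajectory into Front, Mid, and End samples.

    scatter: array of shape (n, 4) with columns [t, x, y, z], sorted by t.
    shutdown_time: first-stage shutdown time of this trajectory, in seconds.
    """
    t = scatter[:, 0]
    # Rows with t <= shutdown_time belong to the active segment (Front).
    n_front = int(np.searchsorted(t, shutdown_time, side="right"))
    # The End sample starts end_rows before the last row, never inside Front.
    end_start = max(len(scatter) - end_rows, n_front)
    return scatter[:n_front], scatter[n_front:end_start], scatter[end_start:]


# Bound used in the text: at most 20% of 666.1 s outside free flight,
# minus the minimum shutdown time of 58.6 s.
max_non_free_s = 0.2 * 666.1
max_reentry_s = max_non_free_s - 58.6
```

Here `max_reentry_s` evaluates to roughly 74.6 s (74.4 s if the 133 s figure is rounded first), which is why 80 rows at 1 s spacing are enough to cover the re-entry segment of every sample.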

4. DNN Optimization

The trajectory scatter data samples (Front, Mid, and End) along with the feature data samples are used to train Model_Front, Model_Mid, Model_End, and Model_Feature, respectively. Model_Front, Model_Mid, and Model_End share the same input and output definitions, while Model_Feature has its own input and output definitions. Once the inputs and outputs of the neural networks are determined, the remaining hyper-parameters for training the DNNs need to be defined. The primary parameters involved in the training process are listed in Table 3.

The approach for determining the structure and training parameters of Model_Front, Model_Mid, Model_End, and Model_Feature is essentially the same. Thus, the subsequent optimization process will be elucidated using the training of Model_Front as an illustrative example.

4.1. Feature Normalization

After determining the sample features and labels, normalizing the data is crucial to eliminate the influence of the base unit and value ranges among the features. In this work, a Min-Max normalization technique is employed, linearly transforming the raw data columns to fall within the [0, 1] range. The normalization formula is expressed as

$$X' = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$

where $X$ is the original data, $X'$ is the normalized data, $X_{\min}$ is the minimum value of each feature, and $X_{\max}$ is the maximum value of each feature.
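A minimal sketch of column-wise Min-Max normalization is given below. The function names are hypothetical, and the guard for constant columns is an added assumption to avoid division by zero; the minimum and maximum would normally be fitted on the training set and reused at inference time.

```python
import numpy as np


def fit_min_max(data):
    """Return the per-column minimum and maximum of a 2-D array."""
    return data.min(axis=0), data.max(axis=0)


def min_max_normalize(data, col_min, col_max):
    """Map each column linearly onto [0, 1] using the fitted min and max."""
    # Constant columns get a span of 1 so they map to 0 instead of NaN.
    span = np.where(col_max > col_min, col_max - col_min, 1.0)
    return (data - col_min) / span


def min_max_denormalize(norm, col_min, col_max):
    """Invert min_max_normalize to recover values in the original units."""
    return norm * (col_max - col_min) + col_min
```

The inverse mapping matters in this setting because the network predicts normalized labels, which have to be converted back to physical units before errors in meters can be evaluated.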

4.2. Loss Function Design

The prediction of trajectories is fundamentally a regression problem, where the Mean Squared Error (MSE) function is commonly utilized as the loss function. However, concerning the trajectory scatter data in the LCS, the z-label data values are relatively smaller compared to the x-label and y-label. As a result, the traditional MSE function tends to diminish the impact of errors associated with the z-label on the weight updates during error backpropagation, leading to suboptimal weight updates. To address this imbalance, a weighting matrix is introduced to amplify the error contribution of z-label values, enabling the better learning of z-label values.

Let m denote the number of rows of data in a batch. From the corresponding predicted value matrix and real value matrix, the variance loss matrix is obtained by Equations (7) and (8). A weight matrix w is then defined, whose entries are the error weights corresponding to the x-label, y-label, and z-label, respectively. The final average loss function is formed by weighting the variance loss matrix with w and summing the elements of the resulting matrix.

In this way, the learning degree of each label during the error backpropagation process can be adjusted by fine-tuning w.
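One plausible form of such a weighted loss is sketched below. The normalization by the batch size m and the example weights are assumptions for illustration, not the paper's exact definition or tuned values.

```python
import numpy as np


def weighted_mse(pred, true, weights):
    """Weighted mean squared error over a batch.

    pred, true: arrays of shape (m, 3) holding the x, y, z labels per row.
    weights: array of shape (3,) with the error weights for x, y, z.
    """
    squared_error = (pred - true) ** 2      # shape (m, 3)
    weighted = squared_error * weights      # weights broadcast over rows
    return weighted.sum() / pred.shape[0]   # average over the m rows (assumed)


# Hypothetical weights that amplify the z-label error contribution.
example_weights = np.array([1.0, 1.0, 10.0])
```

With equal weights this reduces to an ordinary squared-error loss summed over the three labels; raising the third weight increases the gradient contribution of z-label errors relative to the x-label and y-label errors.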

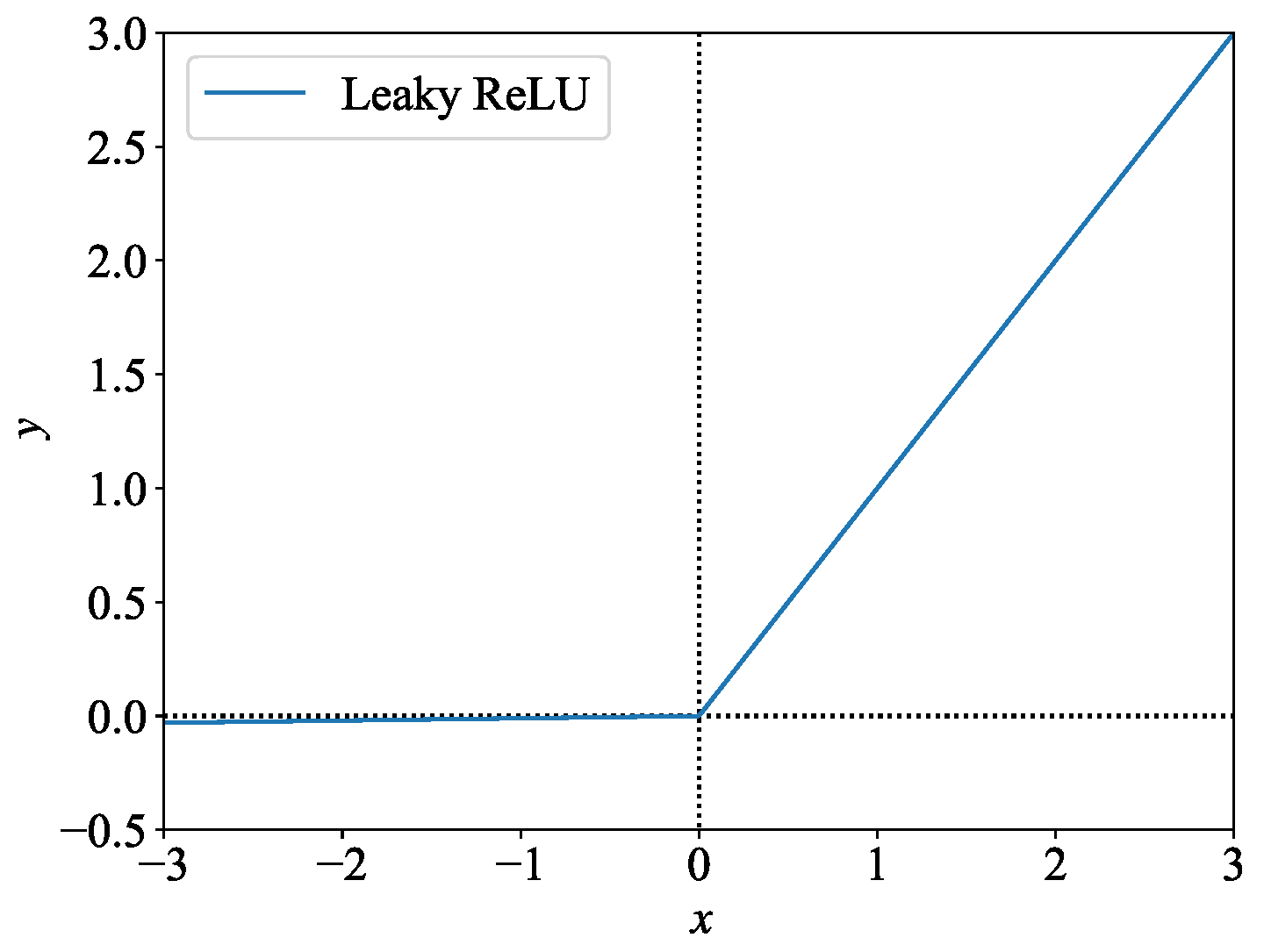

4.3. Activation Function, Optimizer, and Learning Rate

4.3.1. Selection of Activation Function

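To avoid the problem of neuron death, the LeakyReLU function is chosen as the activation function, as illustrated in Figure 5. The specific expression is

$$f(x) = \begin{cases} x, & x \ge 0 \\ \alpha x, & x < 0 \end{cases}$$

where $\alpha$ is a small positive slope coefficient.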

4.3.2. Selection of Optimizer

Compared to other optimizers (like Stochastic Gradient Descent, SGD), the Adam optimizer performs better when dealing with large datasets [33]. Given the large number of data samples involved in this study, the Adam optimizer is chosen.

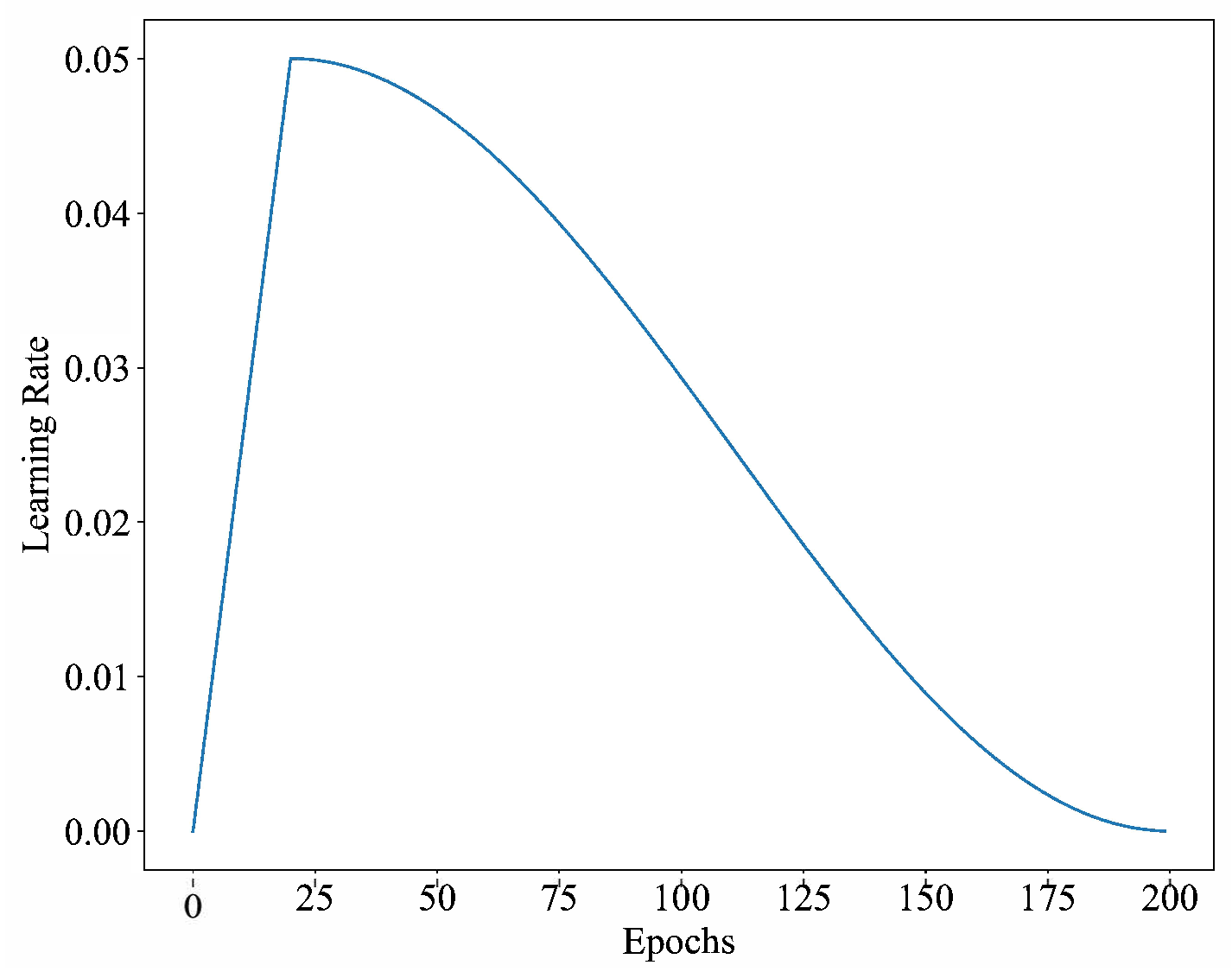

4.3.3. Learning Rate (LR)

To improve the training efficiency and effectiveness, a dynamically varying learning rate is used, following a schedule called “cosine_warmup”. At the beginning of training, the learning rate increases linearly to the specified value and then decreases in a cosine fashion.

In Figure 6, the vertical axis represents the learning rate, whose maximum value is set to 0.05; the horizontal axis represents the epochs, for a total of 200. During the first 1/10th of the epochs, the learning rate increases linearly from 0 to 0.05, after which it cosine-decays from 0.05 to 0.
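The schedule described for Figure 6 can be sketched as a function of the epoch index. The function name and the per-epoch (rather than per-step) update are assumptions for illustration; the default values follow the figure description (200 epochs, peak 0.05, warmup over the first tenth).

```python
import math


def cosine_warmup_lr(epoch, total_epochs=200, max_lr=0.05, warmup_frac=0.1):
    """Learning rate with linear warmup followed by cosine decay to zero."""
    warmup_epochs = int(total_epochs * warmup_frac)
    if warmup_epochs > 0 and epoch < warmup_epochs:
        # Linear ramp from 0 up to max_lr over the warmup epochs.
        return max_lr * epoch / warmup_epochs
    # Cosine decay from max_lr at the end of warmup to 0 at total_epochs.
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return 0.5 * max_lr * (1.0 + math.cos(math.pi * progress))


schedule = [cosine_warmup_lr(e) for e in range(201)]
```

The warmup keeps early updates small while the weights are still far from a good region, and the cosine tail reduces the step size smoothly toward the end of training.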

4.4. Determination of Network Structure

The network architecture is a crucial factor influencing the training effectiveness of a neural network, setting the lower limit for the training effectiveness. Increasing the number of hidden layers or the number of neurons per layer increases the total number of neurons in the network, enhancing the model's complexity and fitting capability. Too few hidden layers or neurons can result in underfitting, while an excessive number can prolong the training time and potentially lead to overfitting. In this paper, assuming the same number of neurons per layer, the number of hidden layers and the number of neurons per layer are determined by cross-validation.

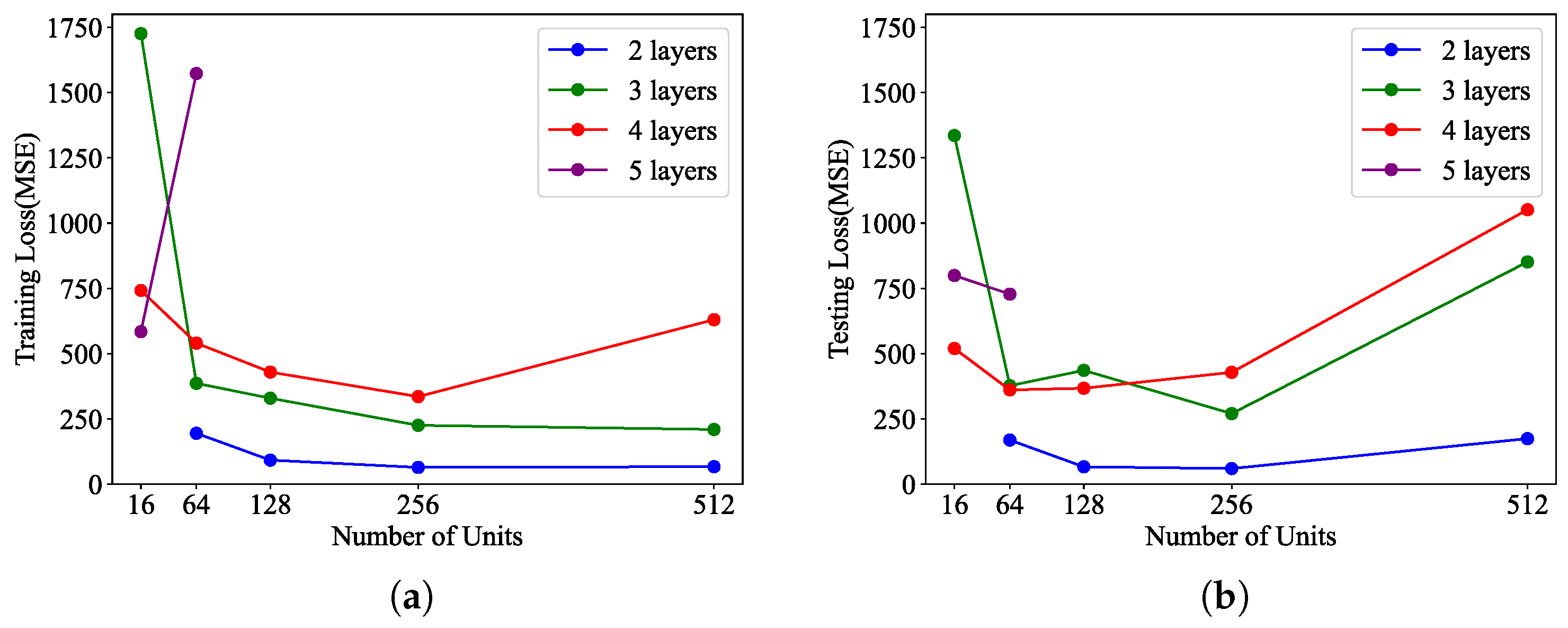

As illustrated in Figure 7, the training error and testing error corresponding to different combinations of layers and units are recorded and analyzed. The results lead to the following conclusions: first, with a fixed number of units, an increase in layers results in an increase in training and testing errors; second, with a fixed number of layers, an increase in units produces a decreasing-then-increasing or decreasing-then-stabilizing trend in training and testing errors. Based on the above analysis, a network structure with 2 hidden layers and 256 units per layer is selected. This architecture achieves low training and testing errors, indicating superior learning performance and generalization capability.

4.5. Determination of Learning Rate

The learning rate (LR) is a tuning parameter that affects the convergence speed and learning effectiveness of deep neural networks. To determine a suitable LR that allows the model to achieve optimal loss without compromising training speed, training experiments with different LR values are conducted on the network structure defined in Section 4.4.

The outcomes are illustrated in Figure 8, and several conclusions can be drawn: a smaller LR can decrease fluctuations in training and validation losses, but an excessively small LR will significantly slow down the convergence speed. Therefore, to strike a balance between accuracy and computational load, a learning rate of 0.001 is selected for subsequent experiments.

4.6. Summary of Training Parameters

Following the above optimization ideas for model training, the optimal architecture and training parameters of all models are finalized as summarized in Table 4, where n denotes the number of sampling interval rows in the original samples.

5. Simulation Results

5.1. Multi-DNN Cooperation Strategy

The ranges of t for Model_Front, Model_Mid, and Model_End are determined by the maximum and minimum values of the time features in their corresponding training sets. Specifically, the range of t for each of the Front, Mid, and End datasets is the interval spanned by the time features of that dataset, and in the inference process the ranges of t for the inputs to Model_Front, Model_Mid, and Model_End are determined from these intervals.

A complete trajectory prediction process is shown in Algorithm 1.

Algorithm 1: Multi-DNN cooperation.
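The cooperation of the segment models can be illustrated with the following sketch, which is not the paper's Algorithm 1. It assumes, without confirmation from the source, that a feature model returns the end time of the Front segment (`t1`) and the total flight time (`t_total`), that the End segment covers the last 80 s, and that each segment model is a callable mapping `(params, times)` to an array of shape `(n, 3)`. All function and parameter names are hypothetical.

```python
import numpy as np


def predict_trajectory(params, model_feature, model_front, model_mid,
                       model_end, end_duration=80.0, dt=1.0):
    """Stitch three segment models into one time-ordered trajectory.

    Assumptions (not confirmed by the source): model_feature(params)
    returns (t1, t_total); each segment model maps (params, times) to an
    array of shape (n, 3) holding x, y, z.
    """
    t1, t_total = model_feature(params)
    times = np.arange(0.0, t_total + dt / 2, dt)
    front = times <= t1                               # active segment
    end = (times > t_total - end_duration) & ~front   # re-entry segment
    mid = ~front & ~end                               # free-flight segment
    xyz = np.empty((len(times), 3))
    for mask, model in ((front, model_front), (mid, model_mid),
                        (end, model_end)):
        if mask.any():
            xyz[mask] = model(params, times[mask])
    # One row per time step: [t, x, y, z].
    return np.column_stack([times, xyz])
```

Because every time step is assigned to exactly one segment model, the three predictions can be evaluated as three batched forward passes and concatenated without any iterative integration.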

5.2. Analysis of Prediction Accuracy

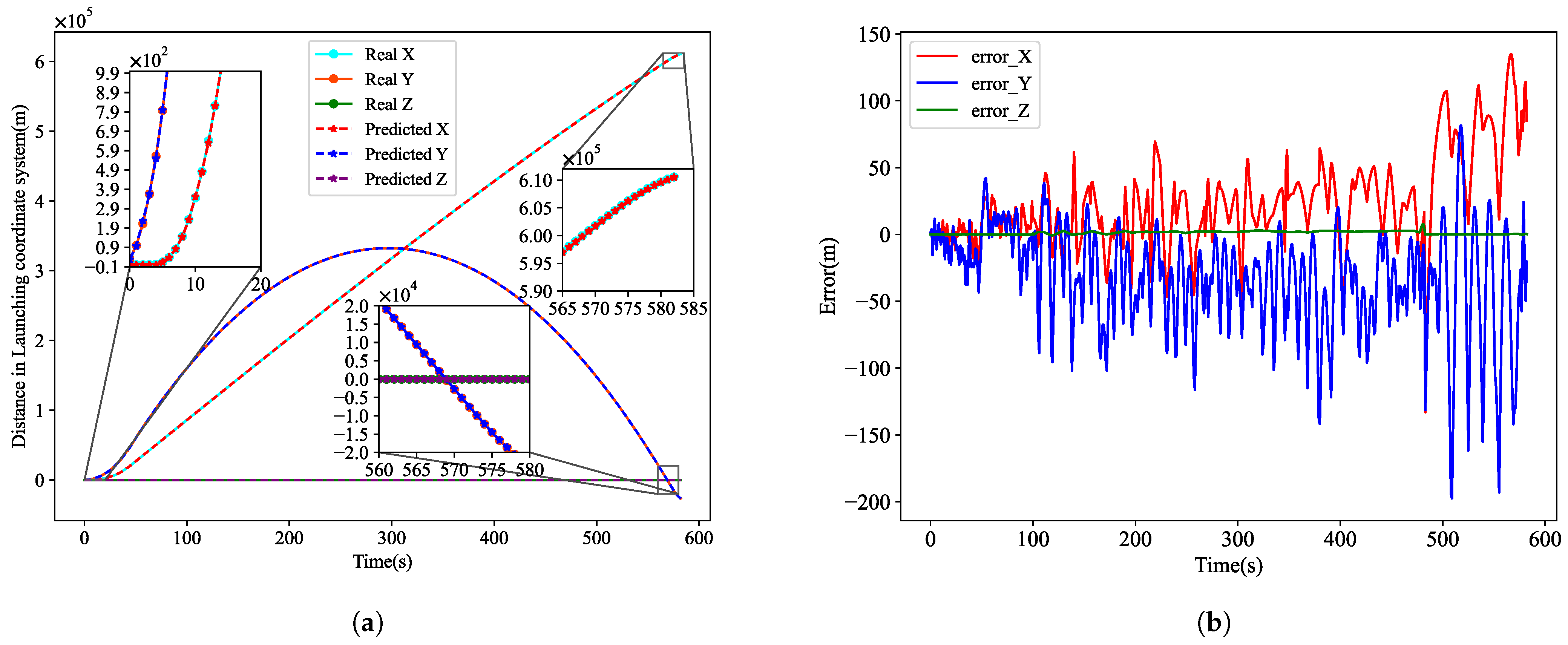

To evaluate the performance of the “trajectory generator” based on multi-scale network fusion, a set of initial parameters [42°,1000,210,611111,4000] (not in the training and testing sets) is randomly selected. The prediction accuracy of the “trajectory generator” is assessed by comparing the predicted trajectory with the trajectory obtained through the traditional iterative calculation method.

Figure 9a illustrates the comparison of the distance at each moment in the x, y, and z directions in the LCS. Notably, the distances in the z direction are mostly around 0. Figure 9b shows the corresponding errors. The errors are analyzed as follows:

(1) The z directional values are primarily close to 0 and differ significantly from those in the x and y directions. Yet the prediction error in the z direction is minimal, so the learning effectiveness in the z direction has not been significantly affected by the large difference in the labels, indicating the effectiveness of the loss function designed in Section 4.2.

(2) Errors in the x and y directions tend to increase toward the later stages of the flight. This trend is due to the increasing label values in the x and y directions over flight time. Consequently, the magnitude of the mean squared error becomes considerably larger, resulting in relatively poorer convergence compared to earlier flight stages and finally causing a slight increase in errors.

Figure 9c displays a comparison of the complete flight trajectory in the LCS, while Figure 9d illustrates the Euclidean distance between the actual and predicted positions at each moment, representing the prediction error in three-dimensional space. Figure 9d shows that the Euclidean distance increases slightly over flight time, similarly to the marginal increase in errors in the x and y directions depicted in Figure 9b. Overall, the majority of instances correspond to Euclidean distances below 100 m, with distances ranging between 100 m and 200 m in the later stages of flight. Such predictive accuracy is viable for providing trajectory simulation verification for the process of MPDM for multiple rocket-powered vehicles.
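The two error measures discussed above (per-axis error and three-dimensional Euclidean distance at each moment) can be computed as in the following sketch, assuming predicted and reference positions are stored as arrays of shape `(n, 3)` in the same units; the function name is hypothetical.

```python
import numpy as np


def position_errors(pred_xyz, true_xyz):
    """Return per-axis absolute errors and Euclidean distance per time step.

    pred_xyz, true_xyz: arrays of shape (n, 3) with x, y, z positions.
    """
    diff = pred_xyz - true_xyz
    per_axis = np.abs(diff)               # shape (n, 3)
    distance = np.linalg.norm(diff, axis=1)  # shape (n,)
    return per_axis, distance
```

The Euclidean distance is never smaller than the largest per-axis error at the same time step, so the three-dimensional curve bounds the per-axis curves from above.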

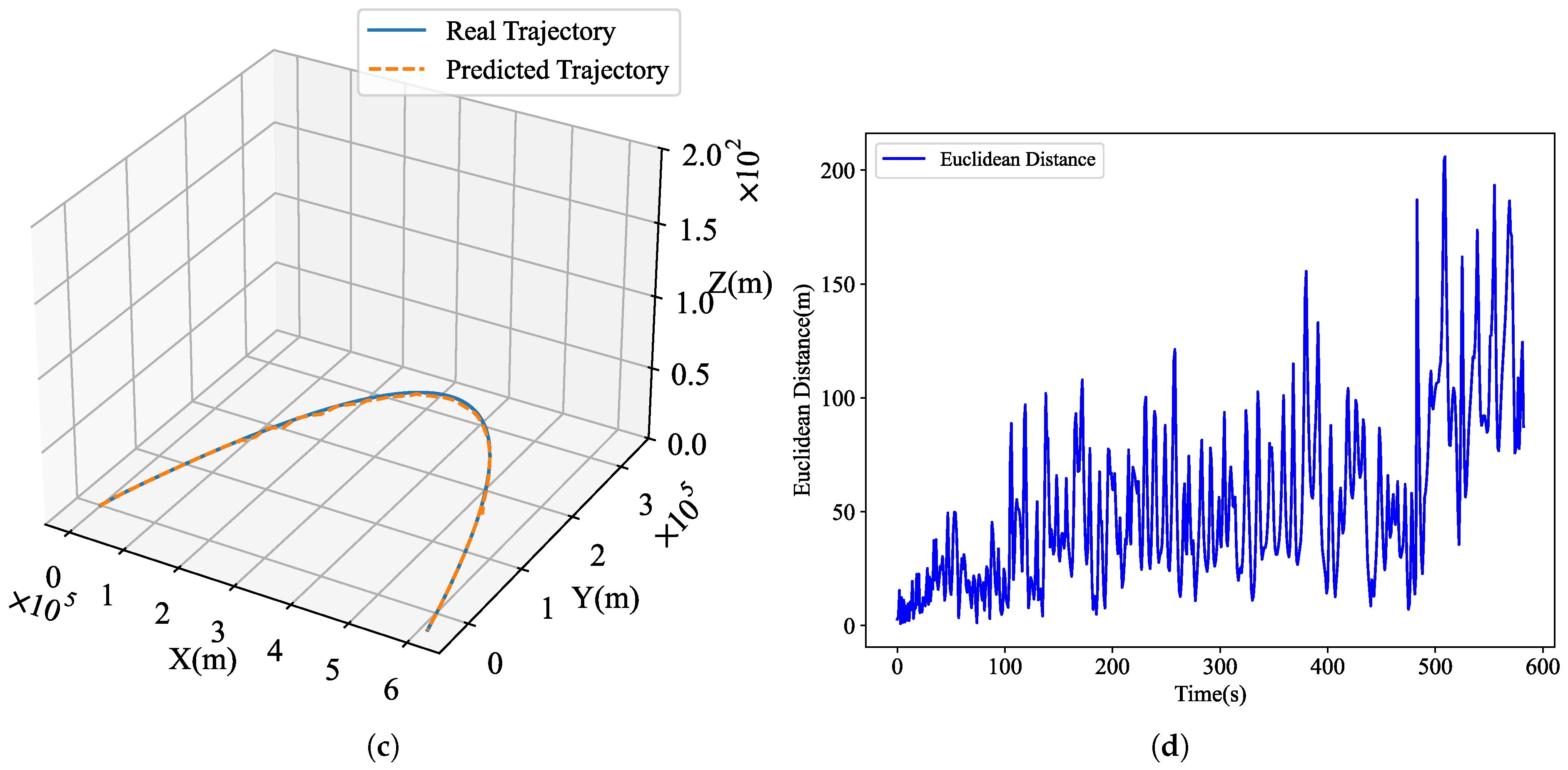

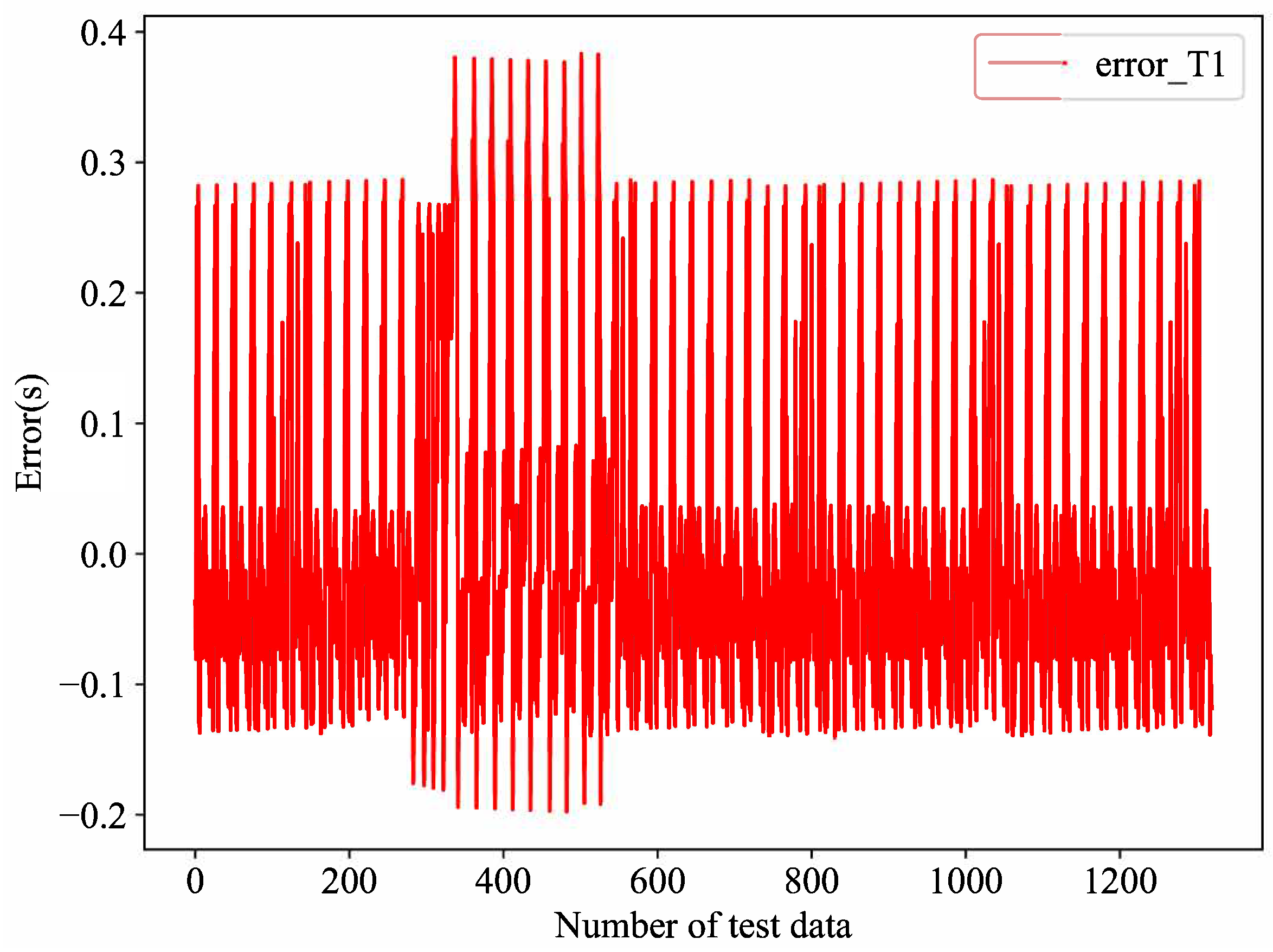

5.3. Monte Carlo Simulation

To further validate the generalization capability of the models, Monte Carlo simulations and error analyses are conducted. A total of 1320 sets of trajectory calculation parameters are randomly generated. The trajectory data predicted by the DNN-based method is compared with that computed by the traditional iterative calculation method to derive the statistical details of the associated errors.

Before comparing the trajectory data, it is important to briefly outline the predictive accuracy of Model_Feature, as Algorithm 1 relies on its prediction of T1. Figure 10 illustrates the T1 prediction error curve for the 1320 test cases, with a maximum error not exceeding 0.33 s. This error margin satisfies the accuracy requirements for the overall trajectory prediction.

Next, the maximum, minimum, median, and average values of the absolute error corresponding to each trajectory are statistically analyzed, and the resulting box plots are shown in Figure 11.

Based on the box plot of maximum values, the maximum error in the x and y directions for each predicted trajectory is around 200 m. This indicates good stability in the model predictions, without any significantly anomalous results. Within the median box plot, the median error in the x and y directions mostly lies around 20 m. This signifies a high level of prediction accuracy for the majority of the time sequence data, as also evident from the box plot of the mean values. The specific maximum, minimum, median, and mean values of the errors are listed in Table 5.
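The per-trajectory statistics behind such box plots can be sketched as follows, assuming the absolute errors of one trajectory are stored as an array of shape `(n, 3)`; the function name is hypothetical, and the values in the usage below are made up purely to exercise the code.

```python
import numpy as np


def per_trajectory_stats(abs_errors):
    """Summarize the absolute errors of one trajectory, per axis.

    abs_errors: array of shape (n, 3) with |error| in x, y, z per time step.
    Returns a dict of arrays of shape (3,).
    """
    return {
        "max": abs_errors.max(axis=0),
        "min": abs_errors.min(axis=0),
        "median": np.median(abs_errors, axis=0),
        "mean": abs_errors.mean(axis=0),
    }


# Made-up errors for a three-step trajectory, for illustration only.
example = np.array([[1.0, 2.0, 0.0], [3.0, 2.0, 0.5], [8.0, 5.0, 1.0]])
stats = per_trajectory_stats(example)
```

Collecting these dictionaries over all Monte Carlo cases gives one distribution per statistic and axis, which is what each box in a box plot summarizes.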

5.4. Validation of Segmentation Strategy

The main innovation of this paper lies in utilizing a segmented training strategy to enhance the prediction accuracy. To demonstrate the feasibility and effectiveness of this strategy, models trained without segmentation are used for prediction on the same Monte Carlo simulation data as in Section 5.3, obtaining statistical information on the corresponding prediction errors. The network structure and training parameters employed in the non-segmented training are listed in Table 6.

To better highlight the effectiveness of the segmented training strategy, Table 7 shows the prediction errors of the DNN models corresponding to the segmented and non-segmented training strategies under the same Monte Carlo experiments.

From Table 7, it is evident that the maximum and average prediction errors of the models trained without segmentation are approximately ten times higher than those of the models trained with the segmentation strategy. This demonstrates that the segmented training strategy is effective in reducing the overall prediction errors and enhancing prediction stability.

5.5. Analysis of Calculation Time

The aim of this study is to achieve the rapid prediction of rocket-powered trajectory at the millisecond level while ensuring accuracy, meeting the real-time requirements of mission planning and decision-making. The number of computational parameters and multiply-accumulate operations (MACs) of the trajectory generator are counted, with the results being 345.548 K and 0.34779 M, respectively. An actual inference time testing program was run to measure the solving time of the “trajectory generator” when predicting different numbers of trajectories. Meanwhile, to make a comparison, the solving time of the traditional iterative calculation method was also recorded. The traditional method was primarily executed on an Intel(R) Core(TM) i7-10700 CPU, while the inference time of the DNN-based method was tested on an NVIDIA GeForce GTX 1050 Ti GPU.

According to the results in Table 8, the DNN-based method achieves a millisecond-level trajectory solution. In particular, the solving time for 1000 rocket-powered trajectories shows that the DNN-based method has a significant advantage in large-scale rocket-powered trajectory calculations and can meet the real-time requirement of MPDM for a rocket-powered vehicle swarm.

6. Conclusions

This paper introduced a DNN-based method for predicting the trajectory of a rocket-powered vehicle, which achieves millisecond-level rapid trajectory prediction while ensuring prediction accuracy, thus meeting the real-time requirements of mission planning and decision-making for rocket-powered vehicles. The final trajectory generator is obtained through the fusion of multi-DNN models, which were developed using a segmented training strategy based on the characteristics of actual trajectory segments. The trajectory generator can predict any single trajectory launched within the national territory in milliseconds while maintaining a prediction error at the scale of 100 m. Moreover, it can simultaneously predict 1000 full trajectories in just 321 milliseconds, showcasing excellent scalability and computational efficiency. Simulation results verify the effectiveness of the segmented training strategy, while comprehensive analysis further confirms the proposed method’s strengths in prediction accuracy, generalization ability, stability, and runtime performance.

The method proposed in this paper is applicable to trajectory prediction for various types of aircraft; however, when formulating a segmentation strategy, it is essential to consider the specific trajectory characteristics of each type of aircraft. Additionally, in future work, distributed model inference methods can be employed to further reduce the time required for trajectory generation in large-scale scenarios.