Abstract

A cooperative game negotiation strategy that accounts for multiple constraints is proposed for distributed multi-spacecraft approach missions with impulsive maneuvers in the presence of defending spacecraft. It is a dual-stage decision-making method consisting of offline trajectory planning and online distributed negotiation. In the trajectory planning stage, a relative orbital dynamics model is first established based on the Clohessy–Wiltshire (CW) equations, and the state transition equations for impulsive maneuvers are derived. Subsequently, a multi-objective optimization model is formulated and solved with the NSGA-II algorithm, using the constraint dominance principle (CDP) to handle the constraints and generate a Pareto front for each spacecraft. In the distributed negotiation stage, the negotiation among spacecraft is modeled as a cooperative game, and a potential function is constructed to analyze the existence and global convergence of the Nash equilibrium. In addition, a simulated annealing negotiation strategy is developed to iteratively select the optimal comprehensive approach strategy from the Pareto fronts. Simulation results demonstrate that the proposed method effectively optimizes the approach trajectories of multiple spacecraft under complex constraints and, through iterative inter-satellite negotiation, converges to a Nash equilibrium. The simulated annealing negotiation strategy further enhances global search performance and avoids entrapment in local optima. Finally, Monte Carlo simulations demonstrate the effectiveness and robustness of the dual-stage decision-making method.

1. Introduction

With the rapid development of space technology, space competition has become increasingly intense, and threats to space security are rising. In response to the complex and changing space situation, countries around the world are competing to enhance spacecraft performance and have achieved significant breakthroughs in computational capability, maneuverability, and level of intelligence. Against this backdrop, orbital games [1] have received widespread attention, with typical application scenarios including orbital pursuit–evasion [2], orbital defense [3], and orbital pursuit–defense [4]. However, a single satellite is limited by its computational resources, maneuvering energy, and payload capacity, which clearly restricts its ability to execute on-orbit tasks. Therefore, the cooperative deployment and application of multi-spacecraft systems has become a research hotspot in aerospace, and high-performance distributed cooperative control technologies are needed. Distributed spacecraft cooperative control methods are fundamental to tasks such as on-orbit servicing, space target surveillance, and orbital games. Given the complexity of future orbital tasks, as well as the high operational costs and limited resources of spacecraft, trajectory planning must ensure spacecraft safety while optimizing objectives such as fuel consumption and time efficiency. The flight trajectories of multiple spacecraft therefore need to be optimized to achieve the best cooperative approach strategy.

Spacecraft trajectory planning methods have been widely studied. Traditional planning methods can generally be divided into two types: indirect methods and direct methods. Indirect methods adopt an analytical optimization approach based on optimal control theory: they reformulate the optimal control problem as a boundary value problem (BVP) and derive the first-order necessary conditions (FONCs) for optimal trajectories using variational principles. However, as spacecraft missions grow more complex, trajectory planning problems also grow in size and difficulty, and more constraints are introduced. These complexities make deriving and numerically solving the FONCs considerably harder and raise the computational cost. Moreover, indirect methods are highly sensitive to initial guesses, which limits their use in real-world engineering scenarios [5]. Direct methods discretize the state and control variables, transforming the continuous-time optimal control problem into a finite-dimensional nonlinear programming (NLP) problem that can be solved with numerical optimization techniques. Although direct methods sacrifice some accuracy compared with indirect methods, they have gained widespread attention owing to their numerical robustness, efficiency, and ability to handle complex constraints. Gradient-based techniques [6] use numerical optimization algorithms such as sequential quadratic programming (SQP) to solve trajectory planning problems, while convexification approaches [7] transform non-convex optimization problems into convex ones to improve computational efficiency. Some researchers have combined direct and indirect methods to exploit their complementary advantages in complex spacecraft trajectory planning problems [8].
However, because they typically rely on gradient information, direct methods tend to converge to local optima, and their computational cost increases as constraints are added.

Heuristic methods provide alternative approaches to trajectory optimization; representative techniques such as Genetic Algorithms (GA) [9], Particle Swarm Optimization (PSO) [10], and Ant Colony Optimization (ACO) [11] have been applied successfully to complex optimization problems. Such methods are particularly effective for the nonlinear, multi-modal, non-convex, or discontinuous optimization problems that are common in engineering. Their strong global search capability mitigates sensitivity to initial conditions, and they have performed well in engineering applications [11]. Early work on spacecraft trajectory planning typically optimized a single objective, such as fuel consumption or flight time. In recent years, researchers have increasingly turned to multi-objective trajectory optimization, whose solution can be viewed as the process of determining the Pareto front. Advanced multi-objective optimization algorithms, such as MOPSO [12] and NSGAII [13,14,15], have already been successfully applied to single-spacecraft trajectory optimization; these methods explore the solution space through iterative optimization. To improve the computational efficiency of spacecraft trajectory planning, the Clohessy–Wiltshire (CW) equations are typically adopted as the underlying model [13,15,16]. The CW equations are obtained by linearizing the exact nonlinear relative equations of motion in the Local Vertical Local Horizontal (LVLH) coordinate system. The simplifications made in this derivation leave the model insufficiently accurate for elliptical orbits, limiting its applicability to circular or near-circular orbits and short-range approach scenarios.
However, because the CW equations are linear, the relationship between impulsive maneuvers and terminal states can be derived explicitly, so the quality of each candidate solution can be evaluated rapidly in every iteration without numerical integration. This not only improves computational efficiency but also facilitates future engineering implementation for on-orbit applications. Therefore, this study focuses on approach decision-making methods for near-circular orbits based on the CW equations. Future work could extend the proposed trajectory planning method to elliptical orbits by reducing the complexity of solving the nonlinear dynamic equations and improving computational efficiency [17]. In addition, intelligent methods such as imitation learning [18] and reinforcement learning [19] could be incorporated to achieve approach control for long-distance, high-eccentricity orbits.

When faced with more complex space missions, single-spacecraft systems encounter significant limitations in computational resources, maneuvering energy, and payload capacity. In contrast, multi-spacecraft systems offer high fault tolerance, flexibility, and efficiency, and have gradually become a research focus in the aerospace field. They are widely applied in tasks such as formation flying, orbit maintenance, and deep space exploration [20]. Centralized control requires a central node to gather global information and make unified decisions; however, limitations in spacecraft sensing, communication, and computing capabilities make efficient and reliable centralized on-orbit decision-making challenging. In comparison, distributed control spreads computational tasks across multiple nodes, with each node processing only information from itself and its neighbors. This significantly reduces computational complexity, improves efficiency, and better suits highly dynamic cooperative operations. In [21], distributed path planning for spacecraft formation reconfiguration was approximately seven times more computationally efficient than a centralized method, which suggests that distributed control is a key technological trend for complex tasks in future highly dynamic space environments. In recent years, numerous spacecraft swarm control methods have emerged, but most are based on continuous maneuvering models and primarily target tasks such as formation maintenance and orbit adjustment. Common methods include leader–follower control [22], artificial potential fields [23], and swarm control [24]. These methods often fail to meet the demands of long-distance maneuvering and rapid target approach under an impulsive maneuvering mode.
To achieve coordinated target approach for multi-spacecraft, there is an urgent need to develop a cooperative path-planning method suitable for the impulsive maneuvering mode.

Distributed negotiation strategies are often used to balance tasks in multi-spacecraft systems. In [25], a networked game model based on a game-theoretic negotiation mechanism is proposed, in which individual actions are updated iteratively through cooperation with neighbors until a Nash equilibrium is reached. Similarly, in [26], the self-organizing task allocation problem in multi-spacecraft systems was modeled as a potential game, and a distributed task allocation algorithm based on game learning was proposed. Inspired by these works, this study uses a distributed negotiation method to determine the cooperative approach strategy. However, because of information asymmetry among spacecraft under distributed conditions, decentralized computation struggles to plan approach paths from a global perspective, so global optimality cannot be guaranteed. To address this, this paper studies cooperative approach strategies for multi-spacecraft systems under distributed conditions. To cope with complex constraints, opponent interception, information asymmetry, and the tendency to fall into local optima, a cooperative game negotiation strategy combining offline trajectory planning and online distributed negotiation is proposed. The main contributions are as follows:

- A multi-objective optimization model considering multiple constraints is established, and a constraint-handling mechanism based on the constraint dominance principle (CDP) is introduced. Combining this mechanism with NSGAII yields the NSGAII-CDP algorithm, which efficiently generates the Pareto front and thereby a set of approach paths that satisfy the constraints.

- The negotiation strategy among multi-spacecraft is modeled as a cooperative game, with defined players, strategy space, and local reward functions. Based on this, the existence and convergence of the Nash equilibrium under distributed conditions are theoretically analyzed and verified by constructing an exact potential function.

- A distributed negotiation strategy based on simulated annealing is proposed, which mitigates the tendency of the negotiation to become trapped in local optima and improves global optimization performance.

The remaining sections of this paper are organized as follows. Section 2 describes the multi-spacecraft coordinated approach problem and the relative dynamics model. Section 3 presents the multi-objective optimization algorithm, including the optimization variables, objective functions, constraints, mathematical model, and the design of NSGAII-CDP. Section 4 presents the distributed negotiation strategy based on simulated annealing and discusses the existence and convergence of the Nash equilibrium. Section 5 demonstrates the effectiveness and superiority of the proposed method through numerical simulations. Finally, Section 6 draws the conclusions of this paper.

2. Multi-Spacecraft Coordinated Approach

2.1. Problem Description

In the scenario of a multi-spacecraft approach, pursuers are required to evade the defenders while approaching the target to accomplish a coordinated approach task. Due to constraints such as fuel consumption and mission requirements, it is assumed that the target cannot autonomously evade potential threats.

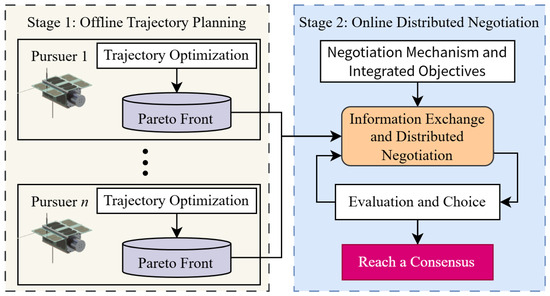

To accomplish the cooperative approach task for the pursuers, a cooperative game negotiation strategy is proposed, which is a dual-stage decision-making method that includes offline trajectory planning and online distributed negotiation. Figure 1 illustrates the cooperative game negotiation strategy.

Figure 1.

Illustration of cooperative game negotiation strategy.

Stage 1: A multi-objective optimization algorithm is used to plan the pursuers' approach trajectories under complex, multi-constrained conditions. Flight time, fuel consumption, and terminal distance are considered together to generate a Pareto front that represents a set of feasible approach paths.

Stage 2: A distributed negotiation strategy is proposed by establishing a decentralized communication and decision-making mechanism among the pursuers. By taking the total mission cost into account, the globally optimal collaborative approach strategy is determined through information exchange and distributed negotiation.

2.2. Relative Dynamics Model

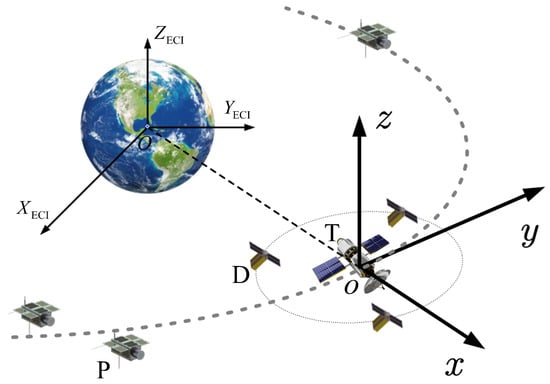

To describe the motion of the pursuers and the defenders relative to the target, an LVLH coordinate system is established at the target, as shown in Figure 2. The origin o is located at the center of mass of the target. The x-axis points from the Earth's center toward the target's center of mass, the y-axis is perpendicular to the x-axis within the orbital plane and points positively in the direction of flight, and the z-axis is aligned with the normal of the target's orbital plane, completing a right-handed frame. In addition, the Earth-Centered Inertial (ECI) coordinate system is defined with its origin O at the Earth's center of mass.

Figure 2.

Orbital coordinate system.

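Under the assumption of a near-circular orbit and neglecting perturbations, the relative motion can be expressed using the CW equations:

$$
\begin{cases}
\ddot{x} - 2\omega\dot{y} - 3\omega^{2}x = a_x \\
\ddot{y} + 2\omega\dot{x} = a_y \\
\ddot{z} + \omega^{2}z = a_z
\end{cases}
$$

where $\omega$ is the orbital angular velocity of the target, $(x, y, z)$ are the relative position components, and $(a_x, a_y, a_z)$ is the three-axis thrust acceleration.

Assuming the spacecraft performs no orbital maneuvers ($a_x = a_y = a_z = 0$), the relative state can be determined using the state transition matrix as follows:

$$
\mathbf{X}(t) = \boldsymbol{\Phi}(t - t_0)\,\mathbf{X}(t_0)
$$

Here, $\mathbf{X}(t_0) = [x_0, y_0, z_0, \dot{x}_0, \dot{y}_0, \dot{z}_0]^{\mathsf{T}}$ is the initial relative state, and $\boldsymbol{\Phi}(\tau)$ is the state transition matrix:

$$
\boldsymbol{\Phi}(\tau) = \begin{bmatrix} \boldsymbol{\Phi}_{rr}(\tau) & \boldsymbol{\Phi}_{rv}(\tau) \\ \boldsymbol{\Phi}_{vr}(\tau) & \boldsymbol{\Phi}_{vv}(\tau) \end{bmatrix}
$$

The sub-matrices are given by

$$
\boldsymbol{\Phi}_{rr}(\tau) = \begin{bmatrix} 4 - 3\cos\omega\tau & 0 & 0 \\ 6(\sin\omega\tau - \omega\tau) & 1 & 0 \\ 0 & 0 & \cos\omega\tau \end{bmatrix}, \quad
\boldsymbol{\Phi}_{rv}(\tau) = \frac{1}{\omega}\begin{bmatrix} \sin\omega\tau & 2(1 - \cos\omega\tau) & 0 \\ 2(\cos\omega\tau - 1) & 4\sin\omega\tau - 3\omega\tau & 0 \\ 0 & 0 & \sin\omega\tau \end{bmatrix}
$$

$$
\boldsymbol{\Phi}_{vr}(\tau) = \begin{bmatrix} 3\omega\sin\omega\tau & 0 & 0 \\ 6\omega(\cos\omega\tau - 1) & 0 & 0 \\ 0 & 0 & -\omega\sin\omega\tau \end{bmatrix}, \quad
\boldsymbol{\Phi}_{vv}(\tau) = \begin{bmatrix} \cos\omega\tau & 2\sin\omega\tau & 0 \\ -2\sin\omega\tau & 4\cos\omega\tau - 3 & 0 \\ 0 & 0 & \cos\omega\tau \end{bmatrix}
$$

When the spacecraft performs $n$ orbital maneuvers at times $t_1 < t_2 < \cdots < t_n$ with velocity increments $\Delta\mathbf{v}_1, \ldots, \Delta\mathbf{v}_n$, the relative state for $t \ge t_n$ propagates as

$$
\mathbf{X}(t) = \boldsymbol{\Phi}(t - t_0)\,\mathbf{X}(t_0) + \sum_{k=1}^{n} \boldsymbol{\Phi}(t - t_k)\,\mathbf{B}\,\Delta\mathbf{v}_k, \qquad \mathbf{B} = \begin{bmatrix} \mathbf{0}_{3\times 3} \\ \mathbf{I}_{3} \end{bmatrix} \tag{8}
$$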

The final relative state after a series of orbital maneuvers can be determined from (8).
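As a minimal sketch (not the authors' implementation), the closed-form CW state transition matrix for the LVLH axes of Figure 2 (x radial, y along-track, z orbit-normal) and the impulsive propagation in (8) can be written in plain Python. The names `cw_stm`, `mat_vec`, and `propagate` are illustrative assumptions; impulses are modeled as instantaneous velocity jumps, and every impulse time is assumed not to exceed the evaluation time.

```python
import math


def cw_stm(omega, tau):
    """6x6 CW state transition matrix; state = [x, y, z, vx, vy, vz] in LVLH."""
    s, c = math.sin(omega * tau), math.cos(omega * tau)
    wt = omega * tau
    return [
        [4 - 3 * c, 0.0, 0.0, s / omega, 2 * (1 - c) / omega, 0.0],
        [6 * (s - wt), 1.0, 0.0, 2 * (c - 1) / omega, (4 * s - 3 * wt) / omega, 0.0],
        [0.0, 0.0, c, 0.0, 0.0, s / omega],
        [3 * omega * s, 0.0, 0.0, c, 2 * s, 0.0],
        [6 * omega * (c - 1), 0.0, 0.0, -2 * s, 4 * c - 3, 0.0],
        [0.0, 0.0, -omega * s, 0.0, 0.0, c],
    ]


def mat_vec(m, v):
    """Dense matrix-vector product on nested lists."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def propagate(x0, t0, t, impulse_times, dvs, omega):
    """Relative state at time t: free drift of x0 plus each impulse drifted from its own time."""
    x = mat_vec(cw_stm(omega, t - t0), x0)
    for tk, dv in zip(impulse_times, dvs):
        kick = [0.0, 0.0, 0.0, *dv]  # B * dv: an impulse changes velocity only
        drifted = mat_vec(cw_stm(omega, t - tk), kick)
        x = [xi + di for xi, di in zip(x, drifted)]
    return x


# Example with a near-GEO mean motion (illustrative value, rad/s)
OMEGA = 7.2921e-5
state = propagate([1000.0, -5000.0, 200.0, 0.1, -0.2, 0.05], 0.0, 8000.0,
                  [3000.0], [(0.3, -0.1, 0.2)], OMEGA)
```

Because the system is linear and time-invariant, the result equals drifting to each impulse, adding the velocity jump, and drifting onward, which is why each candidate trajectory can be scored without numerical integration.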

3. Multi-Objective Optimization

This section takes the ith pursuer as an example to illustrate the design and application of the multi-objective optimization algorithm. The optimization variables consist of the time intervals between consecutive impulses and the velocity increments associated with each impulse.

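To simplify imposing the maximum velocity increment on each impulse, the kth velocity increment vector $\Delta\mathbf{v}_k$ is expressed in spherical coordinates $(\rho_k, \varphi_k, \theta_k)$, where $\rho_k$ denotes the magnitude of the velocity increment, $\varphi_k$ is the angle between the velocity increment vector and the x-y plane, and $\theta_k$ is the angle between the projection of the vector onto the x-y plane and the x-axis. Therefore, $\Delta\mathbf{v}_k$ is defined as

$$
\Delta\mathbf{v}_k = \rho_k \begin{bmatrix} \cos\varphi_k\cos\theta_k \\ \cos\varphi_k\sin\theta_k \\ \sin\varphi_k \end{bmatrix}
$$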

Let $\Delta\mathbf{v}_k^{\mathrm{ECI}}$ denote the velocity increment obtained by transforming $\Delta\mathbf{v}_k$ into the Earth-Centered Inertial (ECI) coordinate system. The optimization variables are collected into a single parameter vector containing the time intervals between impulses and the spherical components (magnitude and two angles) of each velocity increment.
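The spherical parameterization lends itself to a simple decoder. The sketch below assumes, for illustration only, a flat decision vector laid out as `[dt_1, rho_1, phi_1, theta_1, dt_2, ...]` with angles in radians and each interval measured from the previous impulse (or from the initial time); the ECI conversion is omitted because it requires the target's inertial state. The names `spherical_to_dv` and `decode` are hypothetical.

```python
import math


def spherical_to_dv(rho, phi, theta):
    """LVLH velocity increment from magnitude rho, elevation phi (from the x-y plane)
    and azimuth theta (from the x-axis, measured in the x-y plane)."""
    return (rho * math.cos(phi) * math.cos(theta),
            rho * math.cos(phi) * math.sin(theta),
            rho * math.sin(phi))


def decode(params, t0=0.0):
    """Split [dt_1, rho_1, phi_1, theta_1, dt_2, ...] into impulse times and dv vectors."""
    if len(params) % 4 != 0:
        raise ValueError("expected four variables per impulse")
    times, dvs, t = [], [], t0
    for k in range(0, len(params), 4):
        dt, rho, phi, theta = params[k:k + 4]
        t += dt  # interval measured from the previous impulse (or from t0)
        times.append(t)
        dvs.append(spherical_to_dv(rho, phi, theta))
    return times, dvs


times, dvs = decode([600.0, 0.5, 0.0, 0.0, 1200.0, 0.8, math.pi / 2, 0.0])
```

Since `rho` is the impulse magnitude, the single-impulse limit becomes a plain box bound on one variable, which is the convenience the spherical form is meant to provide.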

3.1. Objective Functions

Considering the timeliness of space game tasks, it is required that pursuers complete the approach task within a relatively short period. Furthermore, the total fuel consumption during the mission and the successful completion of the approach task are also crucial. Therefore, three optimization objectives are defined as follows:

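- The total flight time:

  $$f_1 = t_f - t_0$$

- The total fuel consumption:

  $$f_2 = \sum_{k=1}^{n} \left\|\Delta\mathbf{v}_k\right\|$$

- The terminal distance:

  $$f_3 = d_i(t_f)$$

  where $d_i(t)$ denotes the distance between the ith pursuer and the target, and $t_f$ specifies the terminal moment of the mission.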
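The three objectives can be evaluated directly once a trajectory has been propagated. This is a minimal sketch under two stated assumptions: fuel consumption is measured by the sum of impulse magnitudes (a common proxy for impulsive maneuvers), and `final_state` is the pursuer's LVLH state relative to the target at the terminal time. The function name `objectives` is hypothetical.

```python
import math


def objectives(t0, tf, dvs, final_state):
    """Return (f1, f2, f3): total flight time, total |dv| as a fuel proxy, terminal distance.
    final_state = [x, y, z, vx, vy, vz] relative to the target at time tf."""
    f1 = tf - t0
    f2 = sum(math.hypot(*dv) for dv in dvs)
    f3 = math.hypot(*final_state[:3])  # the target sits at the LVLH origin
    return f1, f2, f3


result = objectives(0.0, 3600.0, [(3.0, 4.0, 0.0), (0.0, 0.0, 1.0)],
                    [3.0, 4.0, 12.0, 0.0, 0.0, 0.0])
```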

3.2. Constraints

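Firstly, the relative dynamics constraint between the pursuer and the target must be satisfied; that is, the relative state must evolve according to the CW dynamics, which under impulsive maneuvers take the form of the propagation equation (8).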

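The initial relative state should satisfy the following constraint:

$$
\mathbf{X}(t_0) = \mathbf{X}_0
$$

where $\mathbf{X}_0$ denotes the given initial relative state of the pursuer with respect to the target.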

The maneuvering times should satisfy the following constraints:

$$
\Delta t_{\min} \le t_k - t_{k-1} \le \Delta t_{\max}, \quad k = 1, \ldots, n; \qquad t_f - t_0 \le T_{\max}
$$

where $t_k$ represents the maneuvering moment of the kth impulse and $t_0$ is the initial time. $\Delta t_{\min}$ defines the minimum time interval either between two impulses or between the first impulse and the initial time; this ensures that the pursuer has sufficient time for iterative optimization or attitude adjustment before executing a maneuver. $\Delta t_{\max}$ represents the maximum time interval, while $T_{\max}$ denotes the maximum total mission time.

Due to limited thruster capacity, the velocity increment of each impulse and the total velocity increment should satisfy the following constraints:

$$
\left\|\Delta\mathbf{v}_k\right\| \le \Delta v_{\max}, \quad k = 1, \ldots, n; \qquad \sum_{k=1}^{n} \left\|\Delta\mathbf{v}_k\right\| \le \Delta V_{\max}
$$

where $\Delta v_{\max}$ represents the maximum velocity increment of a single impulse, and $\Delta V_{\max}$ stands for the maximum total velocity increment available from the thruster.

In addition, to ensure that the pursuer avoids interception, the following passive safety constraints must be satisfied:

$$
d_{ij}(t) \ge d_{\mathrm{safe}}, \quad \forall t \in [t_0, t_f], \; j = 1, \ldots, M
$$

where $d_{ij}(t)$ denotes the distance between the ith pursuer and the jth defender, $d_{\mathrm{safe}}$ represents the safe distance, and $M$ is the number of defenders.

Finally, to achieve the final approach operation, corresponding terminal constraints are established, which mainly include constraints on the terminal distance and terminal relative velocity.

The terminal distance constraint requires that, at the final moment, the distance $d_i(t_f)$ between the pursuer and the target not exceed the specified distance $d_f$ for the approach operation to be accomplished:

$$
d_i(t_f) \le d_f
$$

The terminal relative velocity constraint requires that, at the final moment, the relative velocity between the pursuer and the target not exceed the specified relative velocity $v_f$:

$$
v_i(t_f) \le v_f
$$

where $v_i(t)$ denotes the magnitude of the relative velocity between the ith pursuer and the target.
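One convenient way to feed these constraints to a constraint-handling scheme is to express each as a non-negative violation amount that is zero when the constraint holds. The sketch below does that for the time, velocity-increment, passive-safety, and terminal constraints; the limit names in `lim` and the numbers in `LIMITS` are hypothetical illustrations (not the values of Table 3), and `min_defender_dist` is assumed to be the minimum pursuer–defender distance over the trajectory, computed elsewhere, for example by sampling the propagated states.

```python
def constraint_violations(intervals, total_time, dv_norms, min_defender_dist,
                          terminal_dist, terminal_speed, lim):
    """Amount by which each constraint is violated (0.0 means satisfied).
    lim is a dict of hypothetical limit names, e.g. lim["dt_min"]."""
    g = []
    for dt in intervals:                                   # impulse spacing
        g.append(max(0.0, lim["dt_min"] - dt))
        g.append(max(0.0, dt - lim["dt_max"]))
    g.append(max(0.0, total_time - lim["T_max"]))          # mission duration
    for dv in dv_norms:                                    # single-impulse limit
        g.append(max(0.0, dv - lim["dv_max"]))
    g.append(max(0.0, sum(dv_norms) - lim["dV_max"]))      # total delta-v budget
    g.append(max(0.0, lim["d_safe"] - min_defender_dist))  # passive safety
    g.append(max(0.0, terminal_dist - lim["d_f"]))         # terminal distance
    g.append(max(0.0, terminal_speed - lim["v_f"]))        # terminal relative velocity
    return g


LIMITS = {"dt_min": 600.0, "dt_max": 20000.0, "T_max": 86400.0, "dv_max": 2.0,
          "dV_max": 5.0, "d_safe": 10000.0, "d_f": 1000.0, "v_f": 0.5}
g = constraint_violations([300.0, 5000.0], 40000.0, [1.5, 1.0], 25000.0, 800.0, 0.2, LIMITS)
```

Here the first interval is shorter than the assumed minimum spacing, so only that entry is non-zero.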

3.3. Mathematical Model

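The mathematical model of the multi-objective optimization problem (MOOP) studied in this work can be expressed as follows:

$$
\begin{aligned}
\min_{\mathbf{x}} \quad & \mathbf{F}(\mathbf{x}) = \left[f_1(\mathbf{x}),\, f_2(\mathbf{x}),\, f_3(\mathbf{x})\right]^{\mathsf{T}} \\
\text{s.t.} \quad & \text{the dynamics, initial-state, maneuver-time, velocity-increment, passive-safety, and terminal constraints of Section 3.2}
\end{aligned} \tag{21}
$$

where $\mathbf{x}$ is the vector of optimization variables (impulse time intervals and spherical velocity-increment components), and $f_1$, $f_2$, and $f_3$ are the total flight time, total fuel consumption, and terminal distance, respectively.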

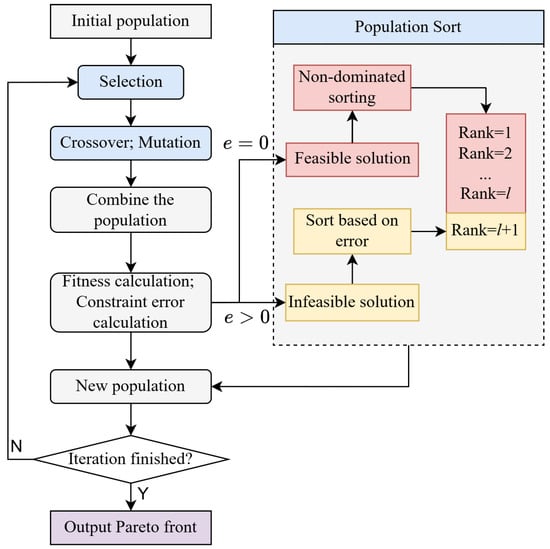

3.4. NSGAII-CDP

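NSGAII is an improved multi-objective evolutionary algorithm (MOEA) based on NSGA [27]. In NSGAII, Simulated Binary Crossover (SBX) and Polynomial Mutation are applied directly to continuous variables, which eliminates additional encoding and decoding steps and improves the efficiency and adaptability of the algorithm. Specifically, given two parent individuals $x_1$ and $x_2$, two offspring individuals $c_1$ and $c_2$ are generated using the SBX operator:

$$
c_1 = 0.5\left[(1 + \beta)x_1 + (1 - \beta)x_2\right], \qquad c_2 = 0.5\left[(1 - \beta)x_1 + (1 + \beta)x_2\right] \tag{22}
$$

where $u$ is a random variable uniformly distributed between 0 and 1, denoted as $u \sim U(0, 1)$, and the spread factor $\beta$ is determined randomly from $u$ and the distribution index $\eta_c$ according to (23):

$$
\beta = \begin{cases} (2u)^{\frac{1}{\eta_c + 1}}, & u \le 0.5 \\ \left(\dfrac{1}{2(1 - u)}\right)^{\frac{1}{\eta_c + 1}}, & u > 0.5 \end{cases} \tag{23}
$$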
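A minimal sketch of SBX applied variable by variable, following the spread-factor rule in (23). Clipping children back into the variable bounds is an assumed practical choice (the text does not state how bound violations are treated), and `sbx` is a hypothetical name.

```python
import random


def sbx(parent1, parent2, lower, upper, eta_c=20.0, rng=None):
    """Simulated binary crossover applied variable by variable.
    Children are clipped to [lower, upper] (an assumed practical choice)."""
    rng = rng or random.Random()
    child1, child2 = [], []
    for x1, x2, lo, hi in zip(parent1, parent2, lower, upper):
        u = rng.random()
        if u <= 0.5:
            beta = (2.0 * u) ** (1.0 / (eta_c + 1.0))
        else:
            beta = (1.0 / (2.0 * (1.0 - u))) ** (1.0 / (eta_c + 1.0))
        c1 = 0.5 * ((1.0 + beta) * x1 + (1.0 - beta) * x2)
        c2 = 0.5 * ((1.0 - beta) * x1 + (1.0 + beta) * x2)
        child1.append(min(max(c1, lo), hi))
        child2.append(min(max(c2, lo), hi))
    return child1, child2


rng = random.Random(42)
kids = sbx([100.0, 0.5], [300.0, 1.5], [0.0, 0.0], [1e6, 10.0], eta_c=15.0, rng=rng)
```

A larger `eta_c` concentrates `beta` near 1, so children stay closer to their parents; when no clipping occurs, each pair of children preserves the parents' mean.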

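Individual $f$ undergoes polynomial mutation and is transformed into

$$
f' = f + \delta\,(f^{U} - f^{L}) \tag{24}
$$

where $f^{U}$ and $f^{L}$ denote the variable's upper and lower bounds, and $\delta$ is determined randomly from $u \sim U(0, 1)$ and the distribution index $\eta_m$ according to (25):

$$
\delta = \begin{cases} (2u)^{\frac{1}{\eta_m + 1}} - 1, & u < 0.5 \\ 1 - \left[2(1 - u)\right]^{\frac{1}{\eta_m + 1}}, & u \ge 0.5 \end{cases} \tag{25}
$$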
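The mutation step can be sketched in the same style. This assumes the simple (non-bounded-aware) form of polynomial mutation, a per-variable mutation probability defaulting to 1/n (a common choice, not stated in the text), and clipping into the bounds; `polynomial_mutation` is a hypothetical name.

```python
import random


def polynomial_mutation(individual, lower, upper, eta_m=20.0, p_m=None, rng=None):
    """Polynomial mutation in the simple form f' = f + delta * (f_U - f_L).
    Each variable mutates with probability p_m (default 1/n, an assumption);
    the result is clipped back into [lower, upper]."""
    rng = rng or random.Random()
    p_m = 1.0 / len(individual) if p_m is None else p_m
    mutated = []
    for f, lo, hi in zip(individual, lower, upper):
        if rng.random() < p_m:
            u = rng.random()
            if u < 0.5:
                delta = (2.0 * u) ** (1.0 / (eta_m + 1.0)) - 1.0
            else:
                delta = 1.0 - (2.0 * (1.0 - u)) ** (1.0 / (eta_m + 1.0))
            f = min(max(f + delta * (hi - lo), lo), hi)
        mutated.append(f)
    return mutated


rng = random.Random(7)
child = polynomial_mutation([1200.0, 0.4, 0.1, 2.0], [600.0, 0.0, -1.57, -3.14],
                            [20000.0, 2.0, 1.57, 3.14], rng=rng)
```

Since `delta` lies in [-1, 1], the perturbation never exceeds the variable's full range, and a large `eta_m` keeps most mutations small.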

Furthermore, common constraint-handling techniques include the CDP, adaptive penalty methods, and adaptive trade-off models, among which CDP has been reported to perform best [28]. Therefore, this paper adopts CDP to handle the multiple constraints; it consists of the following three basic rules [29]:

- Any feasible solution is preferred to any infeasible solution.

- Among two feasible solutions, the one with a better objective function value is preferred.

- Among two infeasible solutions, the one with a smaller constraint violation is preferred.
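The three rules translate into a pairwise comparison. With three objectives, "a better objective function value" in the second rule is usually read as Pareto dominance, as in Deb's constrained NSGA-II; this sketch assumes that reading, with all objectives minimized. The names `dominates` and `cdp_prefers` are hypothetical.

```python
def dominates(fa, fb):
    """Pareto dominance for minimization: no worse everywhere, strictly better somewhere."""
    return all(a <= b for a, b in zip(fa, fb)) and any(a < b for a, b in zip(fa, fb))


def cdp_prefers(fa, ea, fb, eb):
    """True if solution a (objectives fa, total violation ea) is preferred to b under CDP."""
    if ea == 0.0 and eb > 0.0:      # rule 1: feasible beats infeasible
        return True
    if ea > 0.0 and eb == 0.0:
        return False
    if ea > 0.0 and eb > 0.0:       # rule 3: smaller violation wins
        return ea < eb
    return dominates(fa, fb)        # rule 2: both feasible, compare objectives
```

Usage: `cdp_prefers((9, 9, 9), 0.0, (1, 1, 1), 0.5)` is true because feasibility outranks objective values, however good the infeasible solution looks.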

For this purpose, an error operator e is introduced to calculate the overall degree of constraint violation independently of the objective function. e is defined as follows:

During population sorting, solutions are first divided into feasible and infeasible ones according to whether e equals zero. Feasible solutions are ranked by non-dominated sorting, while infeasible solutions are sorted in ascending order of constraint violation and placed after the feasible ones. The flowchart of NSGAII-CDP is shown in Figure 3.
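The sorting step can be sketched as follows. The exact form of the error operator e is not reproduced in this text, so `total_violation` assumes the common choice of summing positive constraint violations; the naive front-peeling loop stands in for NSGAII's fast non-dominated sort and omits crowding distance. All function names are hypothetical.

```python
def total_violation(g):
    """Assumed error operator e: sum of positive constraint violations."""
    return sum(max(0.0, gi) for gi in g)


def dominates(fa, fb):
    return all(a <= b for a, b in zip(fa, fb)) and any(a < b for a, b in zip(fa, fb))


def non_dominated_fronts(objs):
    """Naive non-dominated sorting; returns fronts as lists of indices into objs."""
    remaining, fronts = list(range(len(objs))), []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(objs[j], objs[i]) for j in remaining if j != i)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts


def cdp_order(objs, errors):
    """Feasible solutions (e == 0) first, front by front; then infeasible ones by ascending e."""
    feasible = [i for i, e in enumerate(errors) if e == 0.0]
    infeasible = sorted((i for i, e in enumerate(errors) if e > 0.0), key=lambda i: errors[i])
    order = []
    for front in non_dominated_fronts([objs[i] for i in feasible]):
        order.extend(feasible[k] for k in front)
    return order + infeasible


objs = [(1.0, 5.0), (2.0, 2.0), (3.0, 1.0), (4.0, 4.0), (0.0, 0.0), (0.5, 0.5)]
errors = [total_violation(g) for g in ([0.0], [-1.0], [0.0], [0.0], [2.0], [0.3])]
order = cdp_order(objs, errors)  # -> [0, 1, 2, 3, 5, 4]
```

The two infeasible solutions land last even though their objective values dominate every feasible one, which is exactly the behavior the three CDP rules prescribe.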

Figure 3.

Flowchart of NSGAII-CDP.

4. Distributed Negotiation

A distributed cooperative game negotiation method is employed among the pursuers: based on their existing Pareto fronts, they jointly negotiate an optimal comprehensive approach strategy that balances differences in flight time among pursuers, total flight time, and total fuel consumption.

4.1. Communication Topology

The communication topology of the multi-spacecraft system is modeled with an undirected graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where $\mathcal{V} = \{v_1, \ldots, v_N\}$ is the set of nodes and $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$ is the set of edges. Node $v_i$ corresponds to the ith pursuer, and an edge $(v_i, v_j) \in \mathcal{E}$ indicates that the ith pursuer can exchange information with the jth pursuer.

Due to communication resource constraints, each spacecraft can communicate with only a limited number of other spacecraft. An adjacency matrix $\mathbf{A} = [a_{ij}] \in \mathbb{R}^{N \times N}$ is defined to describe inter-satellite communication:

$$
a_{ij} = \begin{cases} 1, & (v_i, v_j) \in \mathcal{E} \\ 0, & \text{otherwise} \end{cases}
$$

Since the communication network is undirected, the adjacency matrix is symmetric, i.e., $a_{ij} = a_{ji}$ for all $i, j$. The communication topology is assumed to contain at least one spanning tree, which ensures that the communication network is connected.
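Both assumptions on the adjacency matrix (symmetry and the existence of a spanning tree, which for an undirected graph is the same as connectivity) can be checked mechanically. A minimal sketch using breadth-first search; the 4-pursuer ring is a hypothetical topology, not the one in Figure 5.

```python
from collections import deque


def is_valid_topology(adj):
    """Check that an adjacency matrix is symmetric (undirected) and connected."""
    n = len(adj)
    if n == 0:
        return False
    if any(adj[i][j] != adj[j][i] for i in range(n) for j in range(n)):
        return False
    seen, queue = {0}, deque([0])  # breadth-first search from node 0
    while queue:
        i = queue.popleft()
        for j in range(n):
            if adj[i][j] and j not in seen:
                seen.add(j)
                queue.append(j)
    return len(seen) == n          # connected iff every node was reached


ring = [[0, 1, 0, 1],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [1, 0, 1, 0]]
```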

4.2. Distributed Cooperative Game Model

To achieve efficient strategy negotiation, this section models the cooperative game problem as $G = \{\mathcal{N}, \mathcal{S}, \mathcal{U}\}$, where $\mathcal{N} = \{1, \ldots, N\}$ represents the set of players (i.e., the pursuers), each of which acts as an independent decision-maker. $\mathcal{S} = S_1 \times \cdots \times S_N$ represents the strategy space, where $S_i$ is the Pareto front of the ith pursuer, i.e., its feasible strategy set obtained through multi-objective optimization, and $s = (s_1, \ldots, s_N) \in \mathcal{S}$ represents the combination of strategies of all players. $\mathcal{U} = \{U_1, \ldots, U_N\}$ denotes the payoff functions of all players, and $U_i(s_i, s_{-i})$ represents the individual payoff of the ith player, where $s_{-i}$ denotes the strategies of the other players. During the cooperative game, each pursuer continuously adjusts its strategy and exchanges information with the other players to maximize its own payoff $U_i$.

The pursuers seek a balance that gives priority to arriving as simultaneously as possible, while also minimizing the total flight time and reducing their respective fuel consumption as much as possible. Therefore, the utility function is defined as

where the quantities being balanced are the total flight time and total fuel consumption of the ith pursuer and the total flight time of the jth pursuer. Dimensionless normalization parameters, including c, are introduced to standardize these indicators, and corresponding coefficients serve as weighting factors. The overall optimization objective is obtained through a weighted linear summation, so different weighting schemes can be set according to varying requirements.

4.3. Nash Equilibrium Existence and Convergence

Each pursuer optimizes its strategy based on its payoff function to achieve an optimal planning outcome. The system reaches a Nash equilibrium [30] when no individual pursuer can unilaterally adjust its strategy to gain a greater benefit. Importantly, at the end of each round of the cooperative game, the pursuers must exchange their strategies so that all of them share a consistent view of the current strategy profile.

4.3.1. Existence of Nash Equilibrium

Theorem 1.

Every game with a finite number of players and a finite set of action profiles has at least one Nash equilibrium, possibly in mixed strategies [31].

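In the negotiation and decision-making process of the pursuers, the number of pursuers is finite, and the Pareto front obtained from multi-objective optimization is also finite. By Theorem 1, a Nash equilibrium therefore exists in the distributed negotiation process. Moreover, because G is an exact potential game (Section 4.3.2), a pure-strategy Nash equilibrium is guaranteed: any strategy profile that maximizes the potential function over the finite strategy space is such an equilibrium.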

4.3.2. Convergence of Nash Equilibrium

To further analyze the global convergence to the Nash equilibrium, a potential function $\Psi(s)$ is constructed:

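Analysis indicates that the following condition holds for all pursuers:

$$
U_i(s_i^{g+1}, s_{-i}) - U_i(s_i^{g}, s_{-i}) = \Psi(s_i^{g+1}, s_{-i}) - \Psi(s_i^{g}, s_{-i}), \quad \forall i \in \mathcal{N} \tag{31}
$$

where $\Psi$ is the potential function in (30), and $s_i^{g}$ and $s_i^{g+1}$ represent the strategy choices of the ith pursuer in the gth and (g+1)th iterations, respectively. Therefore, G is a potential game with an exact potential function [32].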

This study adopts a best-response selection strategy, which can be described as

$$
s_i^{g+1} = \arg\max_{s_i \in S_i} U_i(s_i, s_{-i}^{g}) \tag{32}
$$

Each pursuer selects its most advantageous strategy based on the currently available partial information. In other words, each round of decision-making maximizes the current payoff $U_i$, so the payoff of each individual is non-decreasing after each round of the game. According to (31), the following can be derived:

$$
\Psi(s_i^{g+1}, s_{-i}^{g}) \ge \Psi(s_i^{g}, s_{-i}^{g}) \tag{33}
$$

Since the strategy space is finite, the potential function $\Psi$ in (30) is bounded, and by (33) it never decreases when a pursuer updates its strategy (with pursuers updating one at a time and switching only on a strict improvement). The strategy adjustment process therefore terminates after a finite number of improvements; when no single player can unilaterally change its strategy to obtain a higher payoff, the system has reached a stable state, which corresponds to a Nash equilibrium. Consequently, with a sufficient number of iterations, the decision-making process is guaranteed to converge to a Nash equilibrium, which is a local maximum of the potential function and may, but need not, be the globally optimal solution.
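The convergence argument can be checked on a toy instance. The payoff below is a hypothetical stand-in for the utility of Section 4.2, whose exact form is not reproduced here: each pursuer trades its own fuel against the squared arrival-time gaps to its communication neighbours. With that form, `potential` is an exact potential in the sense of (31), so one-at-a-time best responses as in (32) can only raise it. The candidate lists `CANDS` (flight time, fuel) and the weight are made-up numbers.

```python
def local_utility(i, profile, cands, adj, w):
    """Hypothetical payoff of pursuer i: -(own fuel) - w * squared arrival-time gaps
    to its neighbours. cands[i][k] = (flight_time, fuel) of candidate k."""
    t_i, fuel_i = cands[i][profile[i]]
    gaps = sum((t_i - cands[j][profile[j]][0]) ** 2
               for j in range(len(adj)) if adj[i][j])
    return -fuel_i - w * gaps


def potential(profile, cands, adj, w):
    """Exact potential of the toy game: each neighbouring pair is counted once."""
    n = len(adj)
    fuel = sum(cands[i][profile[i]][1] for i in range(n))
    gaps = sum((cands[i][profile[i]][0] - cands[j][profile[j]][0]) ** 2
               for i in range(n) for j in range(i + 1, n) if adj[i][j])
    return -fuel - w * gaps


def best_response_dynamics(cands, adj, w, profile, max_rounds=50):
    """Pursuers revise one at a time, switching only on a strict improvement."""
    profile = list(profile)
    history = [potential(profile, cands, adj, w)]
    for _ in range(max_rounds):
        improved = False
        for i in range(len(cands)):
            best_u = local_utility(i, profile, cands, adj, w)
            for k in range(len(cands[i])):
                trial = profile[:i] + [k] + profile[i + 1:]
                u = local_utility(i, trial, cands, adj, w)
                if u > best_u + 1e-12:
                    profile, best_u, improved = trial, u, True
            history.append(potential(profile, cands, adj, w))
        if not improved:
            break
    return profile, history


CANDS = [[(100.0, 5.0), (120.0, 3.0)],
         [(110.0, 4.0), (120.0, 2.5)],
         [(120.0, 2.0), (100.0, 6.0)]]
ADJ = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
final, hist = best_response_dynamics(CANDS, ADJ, 0.001, [0, 0, 1])  # final -> [1, 1, 0]
```

In this instance the dynamics settle with all three pursuers on their 120 s candidates, and `hist` is non-decreasing, as (33) predicts. Sequential updates matter here: if all pursuers revised simultaneously, the potential argument would no longer apply directly.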

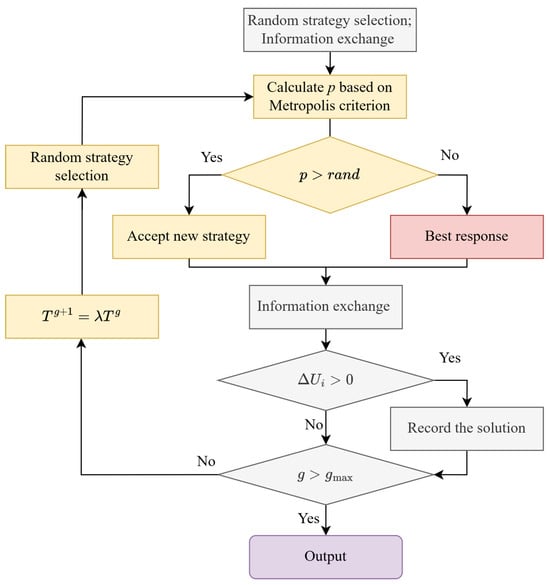

4.4. Simulated Annealing Distributed Negotiation

However, owing to incomplete information in decision-making and the mutual influence of strategies, it is difficult to converge to the globally optimal solution. Therefore, a distributed cooperative game negotiation strategy with a simulated annealing mechanism is designed to escape local optima and search for better solutions. The process of simulated annealing cooperative game negotiation is shown in Figure 4.

Figure 4.

The process of simulated annealing cooperative game negotiation.

In each round of the cooperative game, a pursuer randomly selects a candidate strategy from its Pareto front and calculates the resulting payoff. The candidate strategy is then accepted with probability p; if it is not accepted, the pursuer updates its strategy by best response according to (32). The acceptance probability p is determined by the Metropolis criterion:

$$
p = \begin{cases} 1, & \Delta U \ge 0 \\ \exp\left(\dfrac{\Delta U}{T}\right), & \Delta U < 0 \end{cases} \tag{34}
$$

where T represents the annealing temperature, and $\Delta U$ denotes the payoff difference between the candidate strategy and the current strategy. From (34), it can be concluded that

- When $\Delta U \ge 0$, the candidate strategy is always accepted.

- When $\Delta U < 0$, the candidate strategy is accepted with the probability given by (34).

- The value of p depends on the temperature T and the payoff difference $\Delta U$: the higher the temperature T, the larger p; conversely, the worse the payoff of the new strategy, the smaller p. Therefore, at very high temperatures a new strategy still has a high probability of being accepted, even if its payoff is significantly lower.
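The acceptance rule can be sketched in a few lines. Because payoffs are maximized, this sketch assumes the sign convention `delta_u = U(candidate) - U(current)`, so improvements are always accepted; the function names are hypothetical.

```python
import math
import random


def acceptance_probability(delta_u, temperature):
    """Metropolis rule for payoff maximization: delta_u = U(candidate) - U(current)."""
    if delta_u >= 0.0:
        return 1.0
    return math.exp(delta_u / temperature)


def metropolis_accept(delta_u, temperature, rng=random):
    """Draw the accept/reject decision for one candidate strategy."""
    return rng.random() < acceptance_probability(delta_u, temperature)
```

For example, a candidate that is worse by 1.0 is accepted with probability about 0.37 at T = 1 but about 0.90 at T = 10, matching the three observations above.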

To ensure proper convergence of the algorithm, the temperature T is annealed using exponential decay:

$$
T_{g+1} = \lambda T_g, \qquad 0 < \lambda < 1
$$

where $T_g$ and $T_{g+1}$ represent the annealing temperature in the gth and (g+1)th iterations, respectively, and $\lambda$ is the annealing parameter that sets the decay rate. Algorithm 1 illustrates the iterative process by which each pursuer finds a cooperative approach strategy.

Algorithm 1.

Simulated Annealing Distributed Negotiation Process.
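The body of Algorithm 1 is not reproduced in this text, so the following is only one plausible reading of the procedure described in Section 4.4: a random candidate from the pursuer's Pareto front, the Metropolis test (34), a best-response fallback (32) when the candidate is rejected, and exponential cooling. It runs on a hypothetical toy game (fuel versus squared arrival-time gaps to neighbours) with made-up candidates, simulates the pursuers sequentially inside one process rather than exchanging strategies over the communication graph, and additionally keeps the best profile seen, which is an assumed (common) simulated annealing practice.

```python
import math
import random


def local_utility(i, profile, cands, adj, w):
    """Hypothetical payoff: -(own fuel) - w * squared arrival-time gaps to neighbours."""
    t_i, fuel_i = cands[i][profile[i]]
    gaps = sum((t_i - cands[j][profile[j]][0]) ** 2 for j in range(len(adj)) if adj[i][j])
    return -fuel_i - w * gaps


def potential(profile, cands, adj, w):
    """Exact potential of the toy game (each neighbouring pair counted once)."""
    n = len(adj)
    fuel = sum(cands[i][profile[i]][1] for i in range(n))
    gaps = sum((cands[i][profile[i]][0] - cands[j][profile[j]][0]) ** 2
               for i in range(n) for j in range(i + 1, n) if adj[i][j])
    return -fuel - w * gaps


def sa_negotiation(cands, adj, w, profile, t0=1.0, lam=0.95, rounds=200, seed=0):
    """Random candidate + Metropolis test, else best response; cooling T <- lam * T."""
    rng = random.Random(seed)
    profile = list(profile)
    best, best_pot = list(profile), potential(profile, cands, adj, w)
    temp = t0
    for _ in range(rounds):
        for i in range(len(cands)):
            current = local_utility(i, profile, cands, adj, w)
            k = rng.randrange(len(cands[i]))          # random point of i's Pareto front
            trial = profile[:i] + [k] + profile[i + 1:]
            delta = local_utility(i, trial, cands, adj, w) - current
            if delta >= 0.0 or rng.random() < math.exp(delta / temp):
                profile = trial                        # Metropolis acceptance
            else:                                      # otherwise fall back to best response
                k_best = max(range(len(cands[i])), key=lambda c: local_utility(
                    i, profile[:i] + [c] + profile[i + 1:], cands, adj, w))
                profile = profile[:i] + [k_best] + profile[i + 1:]
            pot = potential(profile, cands, adj, w)
            if pot > best_pot:                         # remember the best profile seen
                best, best_pot = list(profile), pot
        temp *= lam                                    # exponential cooling
    return best, best_pot


CANDS = [[(100.0, 5.0), (120.0, 3.0)],
         [(110.0, 4.0), (120.0, 2.5)],
         [(120.0, 2.0), (100.0, 6.0)]]
ADJ = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
best, best_pot = sa_negotiation(CANDS, ADJ, 0.001, [0, 0, 1])
```

Early on, a high temperature lets pursuers accept worse candidates and leave a poor equilibrium; as T shrinks, rejections dominate and the loop behaves like plain best-response dynamics.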

5. Simulation Verification

5.1. Problem Configuration

To demonstrate the effectiveness of the proposed method, this section adopts the collaborative detection of high-orbit targets as the application scenario, where four pursuers are required to accomplish a coordinated approach detection mission under multiple constraints, while the target is guarded by four defenders. The pursuers must satisfy the following constraints:

- Approach the target to within the required detection distance;

- Achieve a terminal relative velocity below the required limit;

- Synchronize the arrival times of all pursuers as closely as possible;

- Successfully evade interception by the defenders.

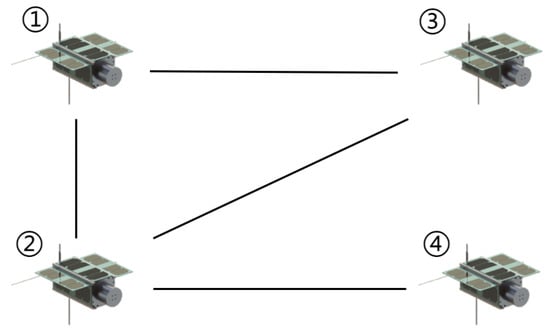

Space perturbations such as the J2 perturbation, third-body gravitational forces, and solar radiation pressure may reduce control efficiency and increase trajectory deviations. To validate the reliability of the proposed decision-making method, a high-fidelity dynamics simulation environment was established. At the start of the simulation, the pursuers execute the mission by following the approach trajectories generated by the cooperative game negotiation strategy. Once the target perceives the threat, the defenders immediately initiate an interception maneuver based on Lambert guidance, aiming to intercept the pursuers within 8 hours. Faced with this interception threat, the pursuers renegotiate and replan their cooperative approach trajectories. For simplicity of analysis, this section assumes that the defenders execute only one interception maneuver; if the defenders adjusted their interception strategies multiple times, the pursuers would need to renegotiate their approach strategies each time to maintain mission robustness in complex environments. The orbital elements of all spacecraft at the initial time are listed in Table 1, and the maneuver performed by the defenders at the beginning is given in Table 2. The communication topology of the pursuers is shown in Figure 5, and the adjacency matrix is defined as

Table 1.

Orbital elements at the initial time.

Table 2.

The maneuver performed by the defenders.

Figure 5.

The communication topology of the pursuers.

During the multi-objective optimization stage, the NSGAII-CDP algorithm is used to find the feasible solution set. The main simulation parameters are shown in Table 3. In the distributed negotiation stage, the simulated annealing game negotiation method is employed to achieve distributed decision-making. The main simulation parameters are shown in Table 4.

Table 3.

Simulation parameters for the multi-objective optimization stage.

Table 4.

Simulation parameters for the distributed negotiation stage.

5.2. Simulation Results and Analysis

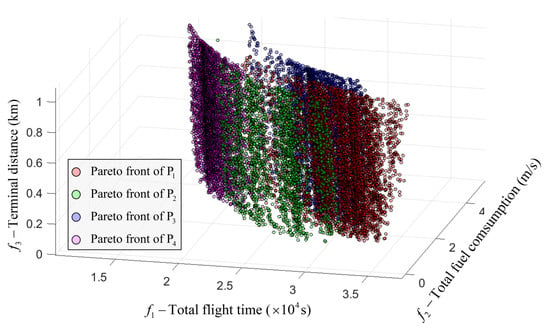

First, the NSGAII-CDP algorithm is applied to solve the MOOP, yielding the trade-off among the optimization objectives in (21), namely, the Pareto front. Figure 6 illustrates the Pareto front obtained from trajectory optimization for each pursuer.

Figure 6.

Pareto front of pursuers.

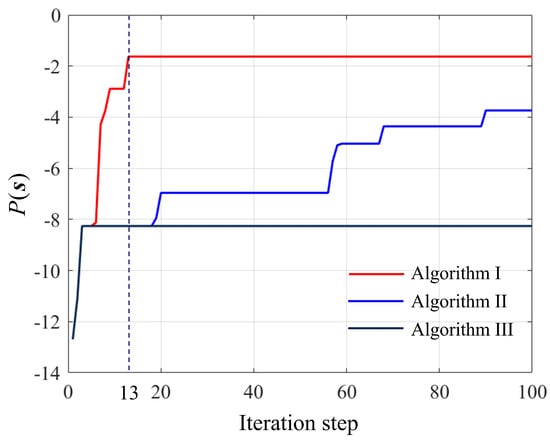

Subsequently, the proposed distributed cooperative game negotiation strategy based on simulated annealing is employed to iteratively optimize the cooperative strategy and reach a comprehensive optimal solution. To demonstrate its advantages, it was compared with a random negotiation strategy and a traditional negotiation strategy. Figure 7 shows the evolution of the potential function (30) during the iterations. The traditional strategy converges but becomes trapped in a local optimum and fails to discover better solutions. Although the random strategy can escape local optima, both its optimization efficiency and its final performance are inferior to those of the proposed strategy. The corresponding Pareto optimal solution set for the pursuers is presented in Table 5.

Figure 7.

Changes in the potential function during the iterative process.

Table 5.

Pareto optimal solution obtained through negotiation.
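The algorithm listings are not reproduced in this section. The following is a minimal sketch of simulated annealing negotiation over per-pursuer Pareto fronts, under several assumptions: each front is a list of (flight time, fuel) pairs; `potential` is a hypothetical weighted sum with a synchronization penalty that stands in for Equation (30); one randomly chosen pursuer revises its choice per round; and acceptance uses the Metropolis rule with geometric cooling. If the game is an exact potential game, a pursuer's unilateral utility change equals the change in the potential. On that basis, accepting only non-increasing moves gives a greedy, best-response-like scheme, and the Metropolis term is what allows occasional uphill moves out of local optima. For brevity, the sketch evaluates the global potential centrally; a distributed version would exchange choices over the communication topology in Figure 5.

```python
import math
import random


def potential(choice, fronts, w_time=0.5, w_fuel=0.5, w_sync=1.0):
    """Hypothetical stand-in for the potential function; lower is better.

    choice[i] indexes the point pursuer i selects; fronts[i][k] = (flight_time, fuel).
    """
    times = [fronts[i][k][0] for i, k in enumerate(choice)]
    fuels = [fronts[i][k][1] for i, k in enumerate(choice)]
    mean_time = sum(times) / len(times)
    mean_fuel = sum(fuels) / len(fuels)
    sync_gap = max(times) - min(times)  # penalize unequal arrival times
    return w_time * mean_time + w_fuel * mean_fuel + w_sync * sync_gap


def sa_negotiation(fronts, iters=2000, t0=1.0, alpha=0.995, seed=0):
    """Simulated annealing negotiation; returns the best joint choice found."""
    rng = random.Random(seed)
    choice = [rng.randrange(len(front)) for front in fronts]  # random initial strategy
    phi = potential(choice, fronts)
    best, best_phi = list(choice), phi
    temp = t0
    for _ in range(iters):
        i = rng.randrange(len(fronts))                # pursuer allowed to revise
        candidate = list(choice)
        candidate[i] = rng.randrange(len(fronts[i]))  # propose another point on its front
        new_phi = potential(candidate, fronts)
        delta = new_phi - phi
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            choice, phi = candidate, new_phi
            if phi < best_phi:
                best, best_phi = list(choice), phi
        temp *= alpha  # geometric cooling
    return best, best_phi
```

The weights inside `potential` are illustrative only; changing `w_time` and `w_fuel` is one way to mimic different weighting schemes.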

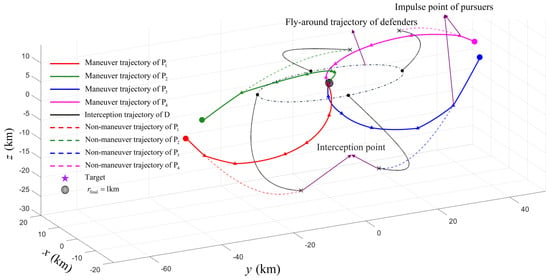

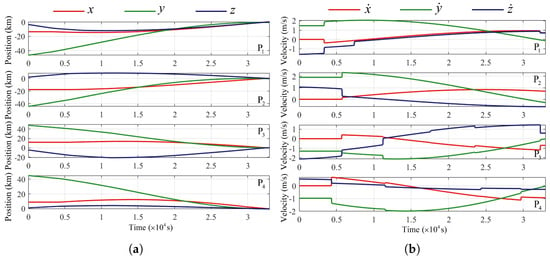

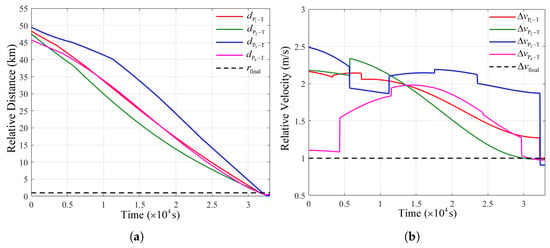

Furthermore, Table 6 lists the orbital maneuver strategies corresponding to the Pareto optimal solutions in Table 5, including the time intervals between consecutive impulses and the velocity increments in the ECI frame. Figure 8 shows the relative motion trajectories of all spacecraft: the interception trajectories of the defenders executing the maneuver scheme in Table 2, and the avoidance and approach trajectories of the pursuers executing the maneuver scheme in Table 6. Figure 9 shows the relative state of the pursuers during the approach; Figure 9a,b show the three-axis relative position and velocity, respectively. In addition, Figure 10a,b show the distance and velocity of the pursuers relative to the target.

Table 6.

Orbital maneuver strategies corresponding to the Pareto optimal solutions in Table 5.

Figure 8.

Orbital maneuver trajectories corresponding to the Pareto optimal solutions in Table 5.

Figure 9.

Relative state variations. (a) Relative position variations. (b) Relative velocity variations.

Figure 10.

Relative distance and velocity variations. (a) Distance variations of the pursuers relative to the target. (b) Velocity variations of the pursuers relative to the target.
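The maneuver schemes in Table 6 are sequences of impulses separated by coast arcs. In the CW relative dynamics model on which the method is built, such a sequence can be propagated in closed form by alternating an instantaneous velocity jump with the CW state transition matrix. The sketch below assumes that the state is expressed in the target's LVLH frame (x radial outward, y along-track, z cross-track) and that each Δv has already been rotated from ECI into LVLH, a step omitted here. `cw_stm` and `propagate_impulsive` are hypothetical names.

```python
import numpy as np


def cw_stm(n: float, t: float) -> np.ndarray:
    """6x6 Clohessy-Wiltshire state transition matrix for mean motion n over time t.

    State ordering: [x, y, z, vx, vy, vz] in LVLH (x radial, y along-track, z cross-track).
    """
    s, c, nt = np.sin(n * t), np.cos(n * t), n * t
    return np.array([
        [4 - 3 * c,       0, 0,      s / n,           2 * (1 - c) / n,      0],
        [6 * (s - nt),    1, 0,      2 * (c - 1) / n, (4 * s - 3 * nt) / n, 0],
        [0,               0, c,      0,               0,                    s / n],
        [3 * n * s,       0, 0,      c,               2 * s,                0],
        [6 * n * (c - 1), 0, 0,      -2 * s,          4 * c - 3,            0],
        [0,               0, -n * s, 0,               0,                    c],
    ])


def propagate_impulsive(x0, n, maneuvers):
    """Apply each (dv_lvlh, coast_time) pair: instantaneous dv, then coast via the STM.

    Returns the final state and the state history after each coast arc.
    """
    x = np.asarray(x0, dtype=float).copy()
    history = [x.copy()]
    for dv, coast in maneuvers:
        x[3:] += np.asarray(dv, dtype=float)  # impulsive velocity change
        x = cw_stm(n, coast) @ x              # closed-form coast arc
        history.append(x.copy())
    return x, history
```

A quick consistency check: with the drift-free condition vy0 = -2n·x0 and no impulses, the relative state returns to its initial value after one orbital period 2π/n.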

An integrated analysis of Tables 5 and 6 and Figures 8–10 reveals the following: (1) Without maneuvering, the pursuers would be intercepted by the defenders. (2) By executing the specified maneuver sequences, the pursuers avoided the defenders and approached the target. (3) At the terminal time, the pursuers satisfied the mission's terminal constraints, with the terminal distance and relative velocity within the prescribed limits (). (4) The maneuver time intervals and velocity increments satisfied the predefined constraints, and neither the total flight time nor the total fuel consumption exceeded the mission requirements. (5) The total flight times of all pursuers were nearly identical.
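Items (3) and (4) amount to a feasibility check on each pursuer's final relative state and impulse sequence. The following is a minimal sketch under several assumptions. The limit names in `Limits` are hypothetical, and the paper's actual limit values are not reproduced here. Flight time is taken as the sum of the impulse intervals, and fuel is measured as the sum of Δv magnitudes. All quantities must share consistent units.

```python
import math
from dataclasses import dataclass


@dataclass
class Limits:
    """Hypothetical constraint bounds (values are mission inputs, not from the paper)."""
    d_max: float         # terminal distance bound
    v_max: float         # terminal relative-speed bound
    dt_min: float        # minimum interval between consecutive impulses
    dv_max: float        # per-impulse velocity-increment bound
    t_total_max: float   # total flight-time bound
    dv_total_max: float  # total fuel (sum of |dv|) bound


def check_constraints(r_f, v_f, intervals, dvs, lim: Limits) -> dict:
    """Return a pass/fail flag for each terminal and maneuver constraint."""
    dv_norms = [math.hypot(*dv) for dv in dvs]
    return {
        "terminal_distance": math.hypot(*r_f) <= lim.d_max,
        "terminal_speed": math.hypot(*v_f) <= lim.v_max,
        "impulse_spacing": all(dt >= lim.dt_min for dt in intervals),
        "impulse_magnitude": all(d <= lim.dv_max for d in dv_norms),
        "total_time": sum(intervals) <= lim.t_total_max,
        "total_fuel": sum(dv_norms) <= lim.dv_total_max,
    }
```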

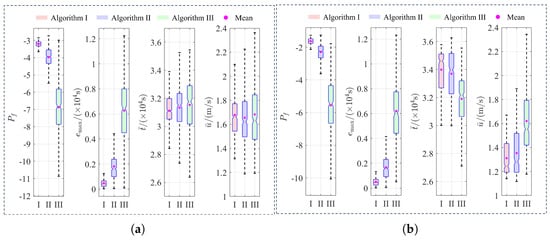

To further validate the effectiveness of the proposed method and to evaluate the performance of the simulated annealing distributed negotiation strategy, Monte Carlo simulations were conducted on the existing Pareto fronts under two different weighting schemes. For each weighting scheme, 1000 Monte Carlo runs were performed. In each run, the initial strategy was selected at random, and distributed optimization was then carried out using Algorithm , Algorithm , and Algorithm . To evaluate the strategies comprehensively, several key metrics were recorded for each run: the potential function value after convergence , the maximum approach time difference , the average flight time , and the average fuel consumption . Figure 11 shows the distribution of these metrics under the two weighting schemes, and Table 7 summarizes their statistics, including the mean, standard deviation, maximum, and minimum.

Figure 11.

Results of 1000 Monte Carlo simulations. (a) Case 1: . (b) Case 2: .

Table 7.

Analysis of the results of 1000 Monte Carlo simulations.
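The Monte Carlo protocol described above can be sketched as follows. The sketch rests on several assumptions not taken from the paper. Each pursuer's Pareto front is a list of (flight time, fuel) pairs. `negotiate` is any callable that maps a random initial index vector to a converged one, such as one of the three negotiation algorithms. The metrics are computed from the selected front points, and the summary uses the sample standard deviation. The potential function value is omitted because Equation (30) is not reproduced here, and all helper names are hypothetical.

```python
import random
import statistics


def run_metrics(choice, fronts):
    """Per-run metrics for one converged joint choice."""
    times = [fronts[i][k][0] for i, k in enumerate(choice)]
    fuels = [fronts[i][k][1] for i, k in enumerate(choice)]
    return {
        "dt_max": max(times) - min(times),     # maximum approach time difference
        "t_mean": statistics.fmean(times),     # average flight time
        "fuel_mean": statistics.fmean(fuels),  # average fuel consumption
    }


def summarize(values):
    """Mean, sample standard deviation, maximum, and minimum of one metric."""
    return {
        "mean": statistics.fmean(values),
        "std": statistics.stdev(values),
        "max": max(values),
        "min": min(values),
    }


def monte_carlo(fronts, negotiate, runs=1000, seed=0):
    """Run `negotiate` from random initial strategies and summarize each metric."""
    rng = random.Random(seed)
    records = []
    for _ in range(runs):
        initial = [rng.randrange(len(front)) for front in fronts]  # random initial strategy
        records.append(run_metrics(negotiate(initial), fronts))
    return {key: summarize([rec[key] for rec in records]) for key in records[0]}
```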

Based on Figure 11 and Table 7, the distributions and statistics of the potential function values indicate that the simulated annealing distributed negotiation strategy achieves significantly better optimization performance than the traditional strategy. In Case 1, Algorithm achieved and improvements in the mean value of compared with Algorithm and Algorithm , respectively. In Case 2, the corresponding improvements in the mean value of for Algorithm I were and , respectively. These results show that the proposed simulated annealing negotiation strategy delivers superior optimization efficiency. Moreover, the smaller standard deviation and narrower range between the extreme values indicate that the simulated annealing strategy yields a more concentrated distribution of , deeper optimization, and greater stability and robustness. The simulated annealing strategy also shows a clear advantage in controlling the maximum approach time difference , with a lower mean and reduced variability, which improves the coordination of the spacecraft. Further analysis of the and data reveals a trade-off: longer total flight times result in lower total fuel consumption, and vice versa. In practice, the weighting scheme should therefore be chosen to balance these objectives according to mission requirements.

Overall, the proposed dual-stage decision-making method based on cooperative game negotiation effectively enhances distributed optimization capability and enables a coordinated and safe approach by the pursuers.

6. Conclusions

This study proposes a dual-stage decision-making method based on cooperative game negotiation for cooperative multi-spacecraft approach missions. In the multi-objective optimization stage, a multi-objective optimization model incorporating multiple constraints was constructed and solved with the NSGAII-CDP algorithm to obtain the Pareto front. In the distributed negotiation stage, the negotiation among spacecraft was modeled as a cooperative game, and the existence and global convergence of the Nash equilibrium were analyzed. Furthermore, a simulated annealing distributed negotiation strategy was designed to iteratively select the optimal comprehensive approach strategy from the Pareto fronts. Simulation results validated the feasibility of the proposed method, and extensive Monte Carlo simulations further demonstrated its superiority and stability. The method provides a reliable and effective solution for distributed multi-spacecraft trajectory optimization under multiple constraints. Future research will address cooperative multi-spacecraft approach under nonlinear dynamic constraints, particularly real-time control when the defenders perform complex maneuvers.

Author Contributions

Methodology, S.F.; formal analysis, S.F.; resources, W.L.; writing—original draft, S.F.; writing—review and editing, S.F. and X.Z.; supervision, W.L.; project administration, X.Z.; funding acquisition, X.Z. and W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fund Project of Key Laboratory of Space Intelligent Control Technology, grant number HTKJ2023KL502009, and the National Key Research and Development Program of the Ministry of Science and Technology of China, grant number 2024YFE0116500.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy reasons.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhao, L.; Dang, Z.; Zhang, Y. Orbital game: Concepts, principles and methods. J. Command. Control 2021, 7, 215–224.

- Fan, S.; Liao, W.; Zhang, X.; Chen, J. Close-range pursuit-evasion game control method of dual satellite based on deep reinforcement. J. Chin. Inert. Technol. 2024, 32, 1240–1249.

- Liu, Y.; Li, R.; Hu, L.; Cai, Z. Optimal solution to orbital three-player defense problems using impulsive transfer. Soft Comput. 2018, 22, 2921–2934.

- Li, Z.Y. Orbital Pursuit–Evasion–Defense Linear-Quadratic Differential Game. Aerospace 2024, 11, 443.

- Chai, R.; Savvaris, A.; Tsourdos, A.; Chai, S.; Xia, Y. A review of optimization techniques in spacecraft flight trajectory design. Prog. Aerosp. Sci. 2019, 109, 100543.

- Wang, J.; Xiao, Y.; Ye, D.; Wang, Z.; Sun, Z. Research on optimization method for flyby observation mission adapted to satellite on-board computation. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2024, 238, 629–644.

- Zhao, Z.; Shang, H.; Dong, Y.; Wang, H. Multi-phase trajectory optimization of aerospace vehicle using sequential penalized convex relaxation. Aerosp. Sci. Technol. 2021, 119, 107175.

- Boyarko, G.; Yakimenko, O.; Romano, M. Optimal Rendezvous Trajectories of a Controlled Spacecraft and a Tumbling Object. J. Guid. Control Dyn. 2011, 34, 1239–1252.

- Zhou, Y.; Yan, Y.; Huang, X.; Kong, L. Mission planning optimization for multiple geosynchronous satellites refueling. Adv. Space Res. 2015, 56, 2612–2625.

- Zhou, Y.; Yan, Y.; Huang, X.; Kong, L. Optimal scheduling of multiple geosynchronous satellites refueling based on a hybrid particle swarm optimizer. Aerosp. Sci. Technol. 2015, 47, 125–134.

- Mac, T.T.; Copot, C.; Tran, D.T.; De Keyser, R. Heuristic approaches in robot path planning: A survey. Robot. Auton. Syst. 2016, 86, 13–28.

- Liu, Y.; Liu, X.; Cai, G.; Chen, J. Trajectory planning and coordination control of a space robot for detumbling a flexible tumbling target in post-capture phase. Multibody Syst. Dyn. 2021, 52, 281–311.

- Du, R.H.; Liao, W.H.; Zhang, X. Multi-objective optimization of angles-only navigation and closed-loop guidance control for spacecraft autonomous noncooperative rendezvous. Adv. Space Res. 2022, 70, 3325–3339.

- Samsam, S.; Chhabra, R. Multi-impulse smooth trajectory design for long-range rendezvous with an orbiting target using multi-objective non-dominated sorting genetic algorithm. Aerosp. Sci. Technol. 2022, 120, 107285.

- Xu, G.; Zeng, N.; Chen, D. A multi-segment multi-pulse maneuver control method for micro satellite. J. Phys. Conf. Ser. 2023, 2472, 012037.

- Wu, J.; Xu, X.; Yuan, Q.; Han, H.; Zhou, D. A Multi-Stage Optimization Approach for Satellite Orbit Pursuit–Evasion Games Based on a Coevolutionary Mechanism. Remote Sens. 2025, 17, 1441.

- Chihabi, Y.; Ulrich, S. Analytical spacecraft relative dynamics with gravitational, drag and third-body perturbations. Acta Astronaut. 2021, 185, 42–57.

- Parmar, K.; Guzzetti, D. Interactive imitation learning for spacecraft path-planning in binary asteroid systems. Adv. Space Res. 2021, 68, 1928–1951.

- Zhao, L.; Zhang, Y.; Dang, Z. PRD-MADDPG: An efficient learning-based algorithm for orbital pursuit-evasion game with impulsive maneuvers. Adv. Space Res. 2023, 72, 211–230.

- Yue, C.; Lu, L.; Wu, Y.; Cao, Q.; Cao, X. Research Progress and Prospect of the Key Technologies for On-orbit Spacecraft Swarm Manipulation. J. Astronaut. 2023, 44, 817–828.

- Sarno, S.; D’Errico, M.; Guo, J.; Gill, E. Path planning and guidance algorithms for SAR formation reconfiguration: Comparison between centralized and decentralized approaches. Acta Astronaut. 2020, 167, 404–417.

- Wang, T.; Huang, J. Leader-following consensus of multiple spacecraft systems with disturbance rejection over switching networks by adaptive learning control. Int. J. Robust Nonlinear Control 2022, 32, 3001–3020.

- Guan, Y.; Zhang, X.; Chen, D.; Fan, S. Artificial potential field-based method for multi-spacecraft loose formation control. J. Phys. Conf. Ser. 2024, 2746, 012053.

- Li, S.; Ye, D.; Sun, Z.; Zhang, J.; Zhong, W. Collision-Free Flocking Control for Satellite Cluster With Constrained Spanning Tree Topology and Communication Delay. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 4134–4146.

- Liu, L.; Dong, Z.; Su, H.; Yu, D. A Study of Distributed Earth Observation Satellites Mission Scheduling Method Based on Game-Negotiation Mechanism. Sensors 2021, 21, 6660.

- Sun, C.; Wang, X.; Qiu, H.; Zhou, Q. Game theoretic self-organization in multi-satellite distributed task allocation. Aerosp. Sci. Technol. 2021, 112, 106650.

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Mahbub, M.S. A comparative study on constraint handling techniques of NSGAII. In Proceedings of the 2020 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Istanbul, Turkey, 12–13 June 2020; pp. 1–5.

- Deb, K. An efficient constraint handling method for genetic algorithms. Comput. Methods Appl. Mech. Eng. 2000, 186, 311–338.

- Fudenberg, D.; Tirole, J. Game Theory; MIT Press: Cambridge, MA, USA, 1991.

- Jiang, A.X.; Leyton-Brown, K. A Tutorial on the Proof of the Existence of Nash Equilibria. 2007. Available online: https://www.cs.ubc.ca/~jiang/papers/NashReport.pdf (accessed on 4 July 2025).

- Carbonell-Nicolau, O.; McLean, R.P. Refinements of Nash equilibrium in potential games. Theor. Econ. 2014, 9, 555–582.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).