Abstract

Accurate prediction of urban grid-scale population inflow is crucial for smart city management and emergency response. However, existing methods struggle to model spatial heterogeneity and quantify prediction uncertainty, limiting their accuracy and decision-support capabilities. This paper proposes PDCDM (POI-enhanced Dual-Dimensional Conditional Diffusion Model), which integrates urban functional semantic awareness with conditional diffusion modeling. The model captures urban functional attributes through multi-scale Point of Interest (POI) feature representations and incorporates them into the diffusion generation process. A dual-dimensional Transformer architecture is employed to decouple the modeling of temporal dependencies and inter-grid interactions, enabling adaptive fusion of grid-level features with dynamic temporal patterns. Building upon this dual-dimensional modeling framework, the conditional diffusion mechanism generates probabilistic predictions with explicit uncertainty quantification. Validation on a real-world urban dataset demonstrates that PDCDM significantly outperforms existing state-of-the-art methods in prediction accuracy and uncertainty quantification. Comprehensive ablation studies validate the effectiveness of each component and confirm the model’s practicality in complex urban scenarios.

1. Introduction

Urban grid-scale population inflow prediction plays a pivotal role in smart city management and emergency decision-making. Based on historical flow data, population inflow prediction models estimate future inflow across urban grids, which is critical for optimizing urban planning, improving traffic control, enhancing emergency response, and allocating public service resources efficiently [,]. With accelerating urbanization and increasing demands for fine-grained urban management, population inflow prediction has emerged as a key research priority in urban computing and smart city domains []. However, urban population mobility exhibits significant spatiotemporal heterogeneity, as the underlying temporal data are governed by both periodic temporal regularities and urban spatial functional structures, thereby posing substantial challenges for accurate prediction.

Existing urban population prediction methods are primarily grounded in two paradigms: traditional time series analysis and deep learning techniques [,]. Traditional statistical models, including Autoregressive Integrated Moving Average (ARIMA) [], Vector Autoregression (VAR) [], and the Kalman filter [], offer a sound theoretical basis and interpretability when capturing linear temporal trends, but struggle with complex nonlinear relationships and high-dimensional spatial features. The emergence of deep learning methods has enabled new breakthroughs in complex forecasting tasks. Recurrent Neural Networks (RNNs) [] and their variants, such as Long Short-Term Memory (LSTM) [] and Gated Recurrent Unit (GRU) [], have demonstrated strong capabilities in modeling sequential dependencies. Furthermore, graph-based approaches, including Graph Neural Networks (GNNs) [], Graph Attention Networks (GATs) [], and Spatiotemporal Graph Convolutional Networks (STGCNs) [], have achieved significant progress in modeling spatial correlations. However, these methods predominantly rely on deterministic frameworks that output only point estimates without quantifying predictive uncertainty [,]. This limitation is particularly critical in practical urban management applications: decision-makers require not only predicted values but also assessments of prediction reliability and potential risks to formulate robust management strategies.

Despite the substantial progress achieved in spatiotemporal modeling, most existing studies continue to employ deterministic architectures that produce point estimates and neglect explicit uncertainty quantification. In contrast, some recent studies have begun to incorporate probabilistic reasoning into graph-based spatiotemporal frameworks. For example, Wang et al. [] proposed the Prob-GNN framework for quantifying spatiotemporal uncertainty in urban travel flow prediction, marking one of the first attempts to embed probabilistic graph neural networks into traffic forecasting. Similarly, Gao et al. [] developed an uncertainty-aware probabilistic graph learning model that explicitly captures prediction variance across multiple forecast steps for traffic risk assessment. While these approaches have demonstrated the potential of probabilistic GNNs (PGNNs) to model spatiotemporal uncertainties, they still rely on indirect approximations for uncertainty estimation and suffer from limited calibration fidelity, leaving notable gaps in robust and scalable probabilistic prediction.

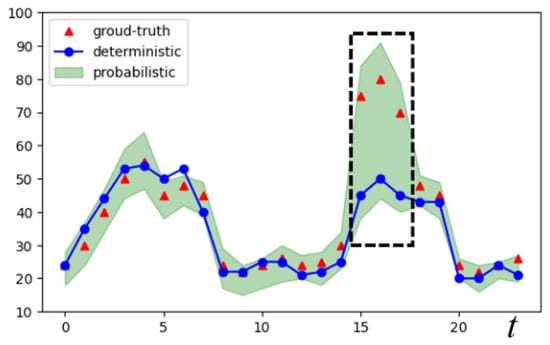

Uncertainty quantification is critical for urban prediction tasks. Existing uncertainty modeling approaches, such as Bayesian Neural Networks [,] and Deep Gaussian Processes [], attempt to incorporate uncertainty estimation into neural network frameworks but often suffer from high computational complexity, convergence difficulties, or overly restrictive assumptions. In practical applications, uncertainty information provides direct value for decision-making. For instance, Figure 1 illustrates the predicted passenger flow at subway stations. As shown in the black-boxed region, deterministic methods fail to indicate prediction reliability. In contrast, the probabilistic approach reveals substantially higher uncertainty (depicted by the green shaded area), suggesting potential passenger flow surges in this region. Therefore, developing methods that simultaneously deliver accurate predictions and reliable uncertainty estimates is of paramount importance.

Figure 1.

Motivation of probabilistic prediction.

In recent years, the advent of generative artificial intelligence has engendered novel paradigms for addressing complex prediction problems. Recent studies have investigated Variational Autoencoders (VAEs) [] and Generative Adversarial Networks (GANs) [] as effective tools for probabilistic representation and sample-based uncertainty modeling. Huang et al. [] proposed a VAE-based generative model of urban human mobility that combines a latent variable architecture with a sequence-to-sequence structure, enabling realistic reconstruction and simulation of mobility trajectories under data sparsity. Mo, Fu, and Di [] developed PhysGAN-TSE, which explicitly quantifies uncertainty by integrating stochastic traffic flow models into the adversarial training process. These studies demonstrate the effectiveness of generative models in representing probabilistic distributions of urban dynamics. However, their adversarial or variational inference mechanisms often lead to unstable training, insufficient probabilistic calibration, and limited scalability in large-scale forecasting tasks.

Building on the progress of generative modeling, diffusion models have recently emerged as a powerful and theoretically grounded alternative. As a distinctive class of generative methods, diffusion models typically offer more stable training and higher sample quality than VAEs and GANs. Representative variants include Denoising Diffusion Probabilistic Models (DDPMs) [,], Score-based Generative Models [,], and SDE-based Diffusion Models []. These approaches achieve probabilistic forecasting by iteratively perturbing and reconstructing data through controlled noise processes. Recent studies have further demonstrated the effectiveness of diffusion mechanisms for time-series forecasting. Biloš et al. [] introduced a function-space diffusion framework that models temporal dynamics as continuous stochastic processes. Their method defines perturbation and denoising operations directly on continuous functions, allowing the model to handle irregularly sampled sequences and produce calibrated probabilistic forecasts through stochastic process diffusion. Yuan et al. [] extended the use of diffusion mechanisms to spatio-temporal point processes by proposing a unified probabilistic framework capable of learning complex joint spatial-temporal distributions. They incorporated a spatio-temporal co-attention module to capture interdependent features between event time and location, yielding significant performance improvements across tasks such as epidemic spread, urban mobility, and crime prediction. Although these studies reveal the strong capability of diffusion mechanisms for modeling stochastic temporal dependencies, to the best of our knowledge no existing research has applied diffusion models to urban grid-scale population inflow prediction or used functional semantic information, such as POI attributes, as conditional guidance.

Urban functional heterogeneity is a key factor influencing population mobility patterns, as well as a core challenge for diffusion models in urban prediction. Studies in spatial econometrics and geographic information science [,] demonstrate that different functional zones, due to variations in service types, facility density, and spatial accessibility, exhibit distinctly different population agglomeration dynamics and temporal variations. POIs, as direct proxies for urban functions [], encode rich spatial semantics: commercial areas exhibit significant commuting attractiveness on weekdays, areas near educational facilities display typical tidal flow patterns, and residential zones form complementary population flow dynamics with employment centers. These spatial functional attributes not only shape the magnitude and temporal distribution of population inflow but also determine the degree and spatial variability of prediction uncertainty.

Given the limitations of existing methods and the practical demands of urban forecasting tasks, this study faces three core challenges: (1) How to quantify uncertainty while providing accurate predictions to meet risk assessment needs in urban management decision-making; (2) How to effectively integrate information about urban spatial heterogeneity, particularly the semantic constraints imposed by POI functional characteristics on population flows; (3) How to design a probabilistic modeling framework suited to the characteristics of urban grid data, handling high-dimensional time-series data in real-world scenarios while maintaining computational efficiency.

Building upon the above analysis, this study proposes PDCDM, a novel urban grid inflow prediction model. PDCDM innovatively integrates urban functional semantic information into the conditional diffusion generation process by extracting static POI features from each grid as conditional guidance. The model employs a dual-dimensional Transformer architecture to separately capture temporal dependencies and inter-grid feature correlations, achieving progressive feature fusion through residual connections. The main contributions of this study can be summarized as follows:

- Introduction of conditional diffusion models to urban grid-scale population inflow prediction, simultaneously achieving accurate point estimates and reliable uncertainty quantification through a probabilistic generative framework, thereby offering a novel modeling paradigm for risk-sensitive urban management decisions.

- Design of a POI-enhanced conditional encoding framework that systematically incorporates urban functional semantics into the diffusion process, enabling the model to capture zone-specific population agglomeration patterns and enhance spatial prediction adaptability.

- Design of a decoupled dual-dimensional attention mechanism that separately models temporal dependencies and inter-grid correlations, thereby enhancing model representation of complex spatiotemporal patterns.

- Comprehensive validation on real-world urban datasets demonstrating that the proposed model outperforms baseline approaches in both prediction accuracy and uncertainty quantification, with systematic ablation studies confirming the effectiveness of each component and the model’s practical utility in complex urban scenarios.

2. Materials and Methods

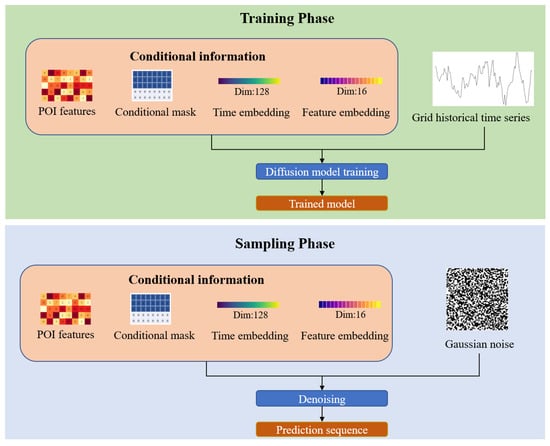

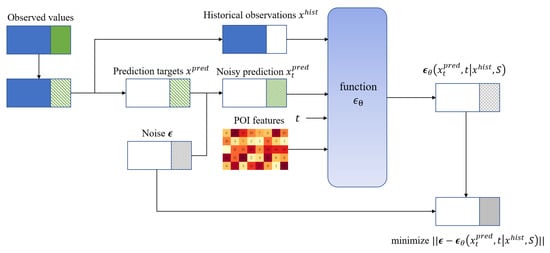

The core methodology leverages historical population inflow time series as model input, with static grid-level POI features as the primary conditioning signal, enabling predictions that capture actual urban functional zone characteristics. Figure 2 provides an overview of the complete PDCDM workflow, consisting of two major phases: the Training Phase and the Sampling Phase. In the training phase, the historical grid inflow sequences and POI-based conditional information (including the conditional mask, time embeddings, and feature embeddings) are jointly encoded and passed through the conditional denoising network to learn the diffusion process. The model is optimized to recover the true inflow distribution from noisy representations, and the best-performing checkpoint is retained as the trained model. During the sampling phase, Gaussian noise is iteratively denoised using the trained model, guided by the same conditional information to progressively reconstruct the future population inflow over each prediction step. The internal model architecture and implementation details are described later in Section 2.4.

Figure 2.

The overall workflow.

2.1. Problem Formulation

The objective of urban grid population flow prediction is to infer the joint future state of population flow across all grid cells given historical observations. Let $\mathbf{X}$ denote the historical population flow data over $L$ time steps, defined as follows:

$$\mathbf{X} = \{\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_L\}$$

where $\mathbf{X}$ represents the historical observation sequence and $L$ is the number of time steps. The population inflow across all grid cells at time step $t$ is denoted as:

$$\mathbf{x}_t = \left[x_t^1, x_t^2, \ldots, x_t^N\right] \in \mathbb{R}^N$$

where $\mathbf{x}_t$ represents the population inflow across all grid cells at time step $t$, $N$ is the number of grid cells, and $x_t^i$ denotes the inflow to grid $i$. The goal is to predict population inflow over the next $H$ time steps based on historical observations. The prediction captures the future inflow across all grids, given by:

$$\hat{\mathbf{Y}} = \{\hat{\mathbf{y}}_1, \hat{\mathbf{y}}_2, \ldots, \hat{\mathbf{y}}_H\}$$

where $\hat{\mathbf{Y}}$ represents the predicted population inflow sequence, $H$ denotes the number of prediction time steps, and $\hat{\mathbf{y}}_k$ denotes the prediction at the $k$-th future time step.

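Each prediction $\hat{\mathbf{y}}_k$ comprises the population inflow across $N$ grid cells, defined as:

$$\hat{\mathbf{y}}_k = \left[\hat{y}_k^1, \hat{y}_k^2, \ldots, \hat{y}_k^N\right] \in \mathbb{R}^N$$

where $\hat{y}_k^i$ denotes the predicted population inflow for grid cell $i$ at time step $k$.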

Given historical observations, the goal is to predict population inflow across all grid cells over a future time window. Considering spatial dependencies between grids and POI-based functional characteristics, we define the target prediction set as:

$$\mathbf{Y} = \{\mathbf{y}_1, \mathbf{y}_2, \ldots, \mathbf{y}_H\}, \qquad \mathbf{y}_k = \mathbf{x}_{L+k}$$

where $\mathbf{Y}$ represents the target prediction set, $L$ denotes the length of the observation window, $H$ denotes the length of the prediction window, and $\mathbf{y}_k$ represents the ground-truth population inflow at the $k$-th prediction time step. During training, the ground-truth targets supervise the model; during testing, ground-truth labels are not required. The model input comprises two main components: historical flow dynamics and static grid-level POI features.

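Historical flow dynamics comprise the historical population inflow observations across all grid cells during the observation window, defined as:

$$\mathbf{X}^{\mathrm{obs}} = \left[\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_L\right] \in \mathbb{R}^{N \times L}$$

where $\mathbf{X}^{\mathrm{obs}}$ represents the historical flow dynamics matrix. Each observation can be represented as a three-tuple:

$$\left(x_t^i,\ t,\ m_t^i\right), \qquad m_t^i \in \{0, 1\}$$

where $x_t^i$ denotes the observed population inflow for grid cell $i$ at time step $t$, $t$ is the temporal position index, and $m_t^i$ is the observation mask, where $m_t^i = 1$ indicates a valid observation and $m_t^i = 0$ indicates a missing value.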

Static grid-scale POI features are constructed from urban POI data, represented as:

$$\mathbf{P} = \left[\mathbf{p}_1, \mathbf{p}_2, \ldots, \mathbf{p}_N\right]^{\top} \in \mathbb{R}^{N \times d_p}$$

where $\mathbf{P}$ denotes the complete POI feature matrix and $d_p$ is the feature dimension. The $i$-th row, $\mathbf{p}_i \in \mathbb{R}^{d_p}$, represents the static POI feature vector for grid cell $i$, encoding the grid’s spatial location and functional attributes derived from POI distributions. These static characteristics remain unchanged throughout the entire prediction process and are used to capture the population attraction differences of different functional areas.

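The model output is the population inflow prediction sequence over the next $H$ time steps. Because the model follows a probabilistic prediction paradigm, it can generate multiple prediction samples to quantify uncertainty. Through repeated random sampling, we obtain:

$$\left\{\hat{\mathbf{Y}}^{(s)}\right\}_{s=1}^{S}, \qquad \hat{\mathbf{Y}}^{(s)} = \left\{\hat{\mathbf{y}}_1^{(s)}, \hat{\mathbf{y}}_2^{(s)}, \ldots, \hat{\mathbf{y}}_H^{(s)}\right\}$$

where $\hat{\mathbf{Y}}^{(s)}$ represents the prediction sequence obtained by the $s$-th sampling, $S$ is the number of samples, and $\hat{\mathbf{y}}_k^{(s)}$ represents the predicted value at time step $k$ of the $s$-th sample.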

2.2. Data Preparation

To evaluate the proposed framework for the multivariate spatiotemporal prediction of urban grid population inflow, we use a Beijing dataset from Amap (Gaode Map), a Chinese navigation and mapping service operated by Alibaba Group, alongside the corresponding POI data. The model input comprises two main components: time-series population inflow across grid cells and grid-level contextual features. Prior research [] has shown that geographical factors such as land use, topography, and urban planning significantly influence population distribution patterns. Accordingly, we incorporate static POI features as spatial attributes for grid cells to enhance inflow prediction.

The population inflow data consist of grid-level records for Beijing, collected via the Amap API and spanning a period of 10 months from September 2023 to June 2024. Observations are recorded at 15 min intervals, yielding 96 time steps per day. To focus on areas with high activity, the top 10% of grid cells are selected based on their average inflow intensity.

To capture spatial heterogeneity and functional characteristics, we extracted 64-dimensional POI features from Beijing POI data. These features encompass a variety of urban elements, such as transport hubs, leisure and recreation facilities, and commercial districts. Taking into account the spatial spillover effects of urban functions, whereby POI distributions in neighboring grids influence population flows in target grids, we use Kernel Density Estimation (KDE) to aggregate neighborhood POI features spatially. Specifically, for each grid cell $i$ and POI category $c$, we identify the $K$ nearest POI points of that category and compute the spatial density using a Gaussian kernel:

$$D_{i,c} = \sum_{j=1}^{K} \exp\left(-\frac{d_{ij}^{2}}{2h^{2}}\right) \mathbb{I}\left(d_{ij} > r\right)$$

where $d_{ij}$ denotes the distance from the center of grid cell $i$ to the $j$-th POI, $h$ is the kernel bandwidth parameter, $r$ is the exclusion radius, and $\mathbb{I}(\cdot)$ is the indicator function. We only consider POI points at distances greater than $r$ to avoid double-counting POI density within the grid itself, ensuring that the KDE features better capture spatial dependencies and neighborhood characteristics. These neighborhood features reflect the functional intensity of surrounding areas, enabling the model to better encode local geographic context.
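The neighborhood density can be sketched for a single grid cell and POI category as follows. This is a minimal illustration, assuming planar coordinates in metres, an unnormalized sum of Gaussian kernel weights, and that the $K$ nearest POIs are selected before the exclusion radius is applied; the function name `kde_neighborhood_density` and its parameters are hypothetical.

```python
import math


def kde_neighborhood_density(grid_center, poi_points, k, bandwidth, exclusion_radius):
    """Gaussian-kernel neighborhood density of one POI category (hypothetical helper).

    grid_center: (x, y) of the grid-cell center, in metres (assumed planar coordinates).
    poi_points: list of (x, y) locations of the POIs in one category.
    """
    cx, cy = grid_center
    # Distances from the grid center to every POI, keeping the k nearest.
    nearest = sorted(math.hypot(px - cx, py - cy) for px, py in poi_points)[:k]
    # Skip POIs inside the exclusion radius so in-grid POIs are not counted twice.
    return sum(
        math.exp(-(d * d) / (2.0 * bandwidth * bandwidth))
        for d in nearest
        if d > exclusion_radius
    )


# Distances are 50, 100 and 300 m; the 50 m POI falls inside the exclusion radius.
density = kde_neighborhood_density(
    (0.0, 0.0), [(100.0, 0.0), (0.0, 300.0), (50.0, 0.0)],
    k=3, bandwidth=200.0, exclusion_radius=75.0,
)
```

Repeating this for every category yields one density feature per category for each grid cell.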

Finally, the static features for each grid cell comprise two components: (1) POI category counts within the grid cell and spatial coordinates (reflecting intrinsic functional attributes), and (2) KDE-based neighborhood density features (capturing surrounding spatial context) []. All POI features undergo log1p transformation and Z-score standardization to stabilize numerical ranges and accelerate model convergence. Additionally, to encode geographic location, we apply 2D sine-cosine positional encoding to the latitude and longitude coordinates of grid centers, generating 32-dimensional spatial embeddings. To ensure spatial alignment between time-series observations and static features, we align the datasets by taking the intersection of grid IDs, ensuring that each grid cell has complete temporal records and corresponding static features.

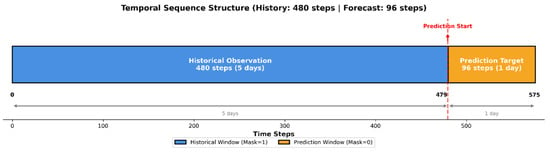

Since the model uses a time-series forecasting framework, the data is converted into fixed-length sequences using a sliding window approach. Each sequence comprises 576 time steps, consisting of a 480-step historical window (five days of observations) and a 96-step prediction window (one-day forecast horizon). This configuration is suitable for urban planning scenarios where decision-makers require short-term forecasts based on recent observations. Figure 3 illustrates the sliding window partitioning scheme.
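A minimal sketch of the sliding-window segmentation, assuming a stride of one day (96 steps) between consecutive window starts; the text does not state the stride, and `sliding_windows` is a hypothetical helper.

```python
def sliding_windows(series, hist_len=480, pred_len=96, stride=96):
    """Cut a time-ordered series into (history, target) pairs of fixed length.

    series: list of per-step values (one grid's inflow, or per-step grid vectors).
    stride: step between consecutive window starts (illustrative assumption).
    """
    window = hist_len + pred_len  # 576 steps in total
    pairs = []
    for start in range(0, len(series) - window + 1, stride):
        chunk = series[start:start + window]
        pairs.append((chunk[:hist_len], chunk[hist_len:]))
    return pairs


# Eight days of 15-minute steps (96 per day) for a single grid cell.
steps = list(range(8 * 96))
pairs = sliding_windows(steps)
```

With eight days of data and a one-day stride, three complete 576-step windows fit.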

Figure 3.

Time series segmentation structure.

To stabilize model training and accelerate convergence, we applied Z-score normalization to the temporal inflow data at the grid level:

$$\tilde{x}_t^i = \frac{x_t^i - \mu_i}{\sigma_i}$$

where $x_t^i$ denotes the observed inflow for grid cell $i$ at time step $t$, and $\mu_i$ and $\sigma_i$ are the mean and standard deviation for grid cell $i$ across the entire observation period, respectively.
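The per-grid normalization, together with the inverse transform needed to report predictions in original units, can be sketched as follows. This assumes the population standard deviation and substitutes 1.0 for the standard deviation of a constant grid to avoid division by zero; the helper names are hypothetical.

```python
import math


def fit_grid_zscore(inflow):
    """Per-grid mean and standard deviation over the whole observation period.

    inflow: list of per-grid series, inflow[i][t] = observed inflow of grid i at step t.
    """
    stats = []
    for series in inflow:
        mu = sum(series) / len(series)
        sigma = math.sqrt(sum((x - mu) ** 2 for x in series) / len(series))
        stats.append((mu, sigma if sigma > 0 else 1.0))  # avoid dividing by zero
    return stats


def apply_zscore(inflow, stats):
    return [[(x - mu) / sigma for x in series] for series, (mu, sigma) in zip(inflow, stats)]


def invert_zscore(normalized, stats):
    """Map model outputs back to inflow counts for evaluation."""
    return [[z * sigma + mu for z in series] for series, (mu, sigma) in zip(normalized, stats)]


inflow = [[10.0, 20.0, 30.0], [5.0, 5.0, 5.0]]
stats = fit_grid_zscore(inflow)
normalized = apply_zscore(inflow, stats)
```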

To enable conditional generation in the diffusion model, we construct two binary masks for each sequence. The observation mask ($\mathbf{M}^{\mathrm{obs}}$) indicates data availability. Since our dataset is complete, $\mathbf{M}^{\mathrm{obs}}$ is set to 1 at all time steps. The ground-truth mask ($\mathbf{M}^{\mathrm{gt}}$) distinguishes between historical and future time steps: $\mathbf{M}^{\mathrm{gt}}$ is set to 1 during the observation window (conditioning region) and 0 during the prediction window (target region). This masking strategy enables the model to learn conditional mappings from observed historical data to unobserved future values.
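A sketch of the two masks for one 576-step sample, laid out as [grid][time step]. The third mask, `target_mask`, is an assumption for illustration: it marks positions that are observed but hidden from the condition, i.e., the positions the model must generate. The helper name is hypothetical.

```python
def build_masks(num_grids, hist_len=480, pred_len=96):
    """Observation and ground-truth masks for one sample, shaped [grid][time step]."""
    total = hist_len + pred_len
    # The dataset is complete, so every position is observed.
    obs_mask = [[1] * total for _ in range(num_grids)]
    # 1 inside the historical (conditioning) window, 0 inside the prediction window.
    gt_mask = [[1 if t < hist_len else 0 for t in range(total)] for _ in range(num_grids)]
    # Positions the model must generate: observed but hidden from the condition.
    target_mask = [[o - g for o, g in zip(o_row, g_row)] for o_row, g_row in zip(obs_mask, gt_mask)]
    return obs_mask, gt_mask, target_mask


obs_mask, gt_mask, target_mask = build_masks(num_grids=3)
```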

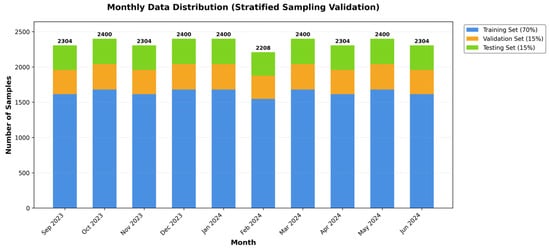

To ensure robust generalization across temporal patterns, we use a stratified monthly sampling strategy to partition the dataset. Specifically, we split the 10-month dataset into training (70%), validation (15%), and test (15%) sets, drawing samples proportionally from each month. For each month $m$, we calculate the total number of days $D_m$, generate candidate samples based on time step indices, and allocate them proportionally to the three subsets. This stratified approach ensures that each month is proportionally represented in all subsets, mitigating temporal bias from seasonal variations or specific time periods. Unlike spatial partitioning strategies, our approach is temporal-only: all grid cells are shared across training, validation, and test sets, but with non-overlapping time windows to prevent data leakage. Figure 4 illustrates the dataset partitioning scheme across months. Table 1 defines the structure and dimensions of the input data, while Table 2 summarizes the statistical information and partitioning configuration of the final dataset. The final dataset comprises 23,434 time series samples, covering 5853 high-traffic grids and a 10-month observation period.
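The stratified monthly split can be sketched as below. This is a minimal illustration, assuming samples are indexed in chronological order and that each month is cut into contiguous train/validation/test blocks; the rounding rule and the helper name are assumptions. Because adjacent 576-step windows can share raw time steps, a practical pipeline may also need to drop windows that straddle a block boundary.

```python
from collections import defaultdict


def stratified_monthly_split(sample_months, ratios=(0.70, 0.15, 0.15)):
    """Assign sample indices to train/val/test month by month.

    sample_months[i] is the month key (e.g. "2023-09") of sample i; samples are
    assumed to be in chronological order, so each month is split into contiguous blocks.
    """
    by_month = defaultdict(list)
    for idx, month in enumerate(sample_months):
        by_month[month].append(idx)

    train, val, test = [], [], []
    for month in sorted(by_month):
        idxs = by_month[month]
        n_train = round(len(idxs) * ratios[0])
        n_val = round(len(idxs) * ratios[1])
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


months = ["2023-09"] * 20 + ["2023-10"] * 20
train, val, test = stratified_monthly_split(months)
```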

Figure 4.

Distribution of monthly data.

Table 1.

Input Data Structure and Dimensions.

Table 2.

Dataset Statistics and Split Configuration.

2.3. Conditional Diffusion Model

This study employs diffusion probabilistic models as our generative framework for predicting grid-scale population inflow. Specifically, we leverage a conditional variant of the Denoising Diffusion Probabilistic Model (DDPM) to forecast population inflow across grid cells. Below, we present the formulation of the original DDPM and describe how we adapt it for conditional grid-scale population inflow forecasting by incorporating POI spatial features as conditional inputs.

2.3.1. Denoising Diffusion Probabilistic Models

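The goal is to learn a model distribution $p_\theta(\mathbf{x}_0)$ that approximates the data distribution $q(\mathbf{x}_0)$. Let $\mathbf{x}_t$ ($t = 1, \ldots, T$) denote the latent variables at different diffusion time steps, where $\mathbf{x}_0$ represents the original data and all $\mathbf{x}_t$ reside in the same space $\mathcal{X}$. Diffusion probabilistic models are latent variable models composed of two processes: the forward process and the reverse process. The forward process is defined by the following Markov chain:

$$q(\mathbf{x}_{1:T} \mid \mathbf{x}_0) := \prod_{t=1}^{T} q(\mathbf{x}_t \mid \mathbf{x}_{t-1}), \qquad q(\mathbf{x}_t \mid \mathbf{x}_{t-1}) := \mathcal{N}\left(\sqrt{1-\beta_t}\,\mathbf{x}_{t-1},\ \beta_t \mathbf{I}\right)$$

and $\beta_t$ is a small positive constant that represents a noise level. Sampling of $\mathbf{x}_t$ has the closed form $q(\mathbf{x}_t \mid \mathbf{x}_0) = \mathcal{N}\left(\mathbf{x}_t;\ \sqrt{\bar{\alpha}_t}\,\mathbf{x}_0,\ (1-\bar{\alpha}_t)\mathbf{I}\right)$, where $\alpha_t := 1 - \beta_t$ and $\bar{\alpha}_t := \prod_{i=1}^{t} \alpha_i$. Then, $\mathbf{x}_t$ can be expressed as $\mathbf{x}_t = \sqrt{\bar{\alpha}_t}\,\mathbf{x}_0 + \sqrt{1-\bar{\alpha}_t}\,\boldsymbol{\epsilon}$, where $\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. On the other hand, the reverse process denoises $\mathbf{x}_t$ to recover $\mathbf{x}_0$, and is defined by the following Markov chain:

$$p_\theta(\mathbf{x}_{0:T}) := p(\mathbf{x}_T) \prod_{t=1}^{T} p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t), \qquad \mathbf{x}_T \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$$

$$p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t) := \mathcal{N}\left(\mathbf{x}_{t-1};\ \boldsymbol{\mu}_\theta(\mathbf{x}_t, t),\ \sigma_\theta(\mathbf{x}_t, t)^2\,\mathbf{I}\right)$$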

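Ho et al. [] proposed DDPM with the following parameterization:

$$\boldsymbol{\mu}_\theta(\mathbf{x}_t, t) = \frac{1}{\sqrt{\alpha_t}}\left(\mathbf{x}_t - \frac{\beta_t}{\sqrt{1-\bar{\alpha}_t}}\,\boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t)\right), \qquad \sigma_\theta(\mathbf{x}_t, t) = \tilde{\beta}_t^{1/2}, \qquad \tilde{\beta}_t = \begin{cases} \dfrac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t}\,\beta_t, & t > 1 \\ \beta_1, & t = 1 \end{cases}$$

where $\boldsymbol{\epsilon}_\theta$ is a trainable denoising function. We denote $\boldsymbol{\mu}_\theta(\mathbf{x}_t, t)$ and $\sigma_\theta(\mathbf{x}_t, t)$ as $\boldsymbol{\mu}^{\mathrm{DDPM}}\left(\mathbf{x}_t, t, \boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t)\right)$ and $\sigma^{\mathrm{DDPM}}(\mathbf{x}_t, t)$, respectively. The denoising function $\boldsymbol{\epsilon}_\theta$ also corresponds to a rescaled score model for score-based generative models []. Under this parameterization, the reverse process is trained by minimizing the following loss function:

$$\mathcal{L}(\theta) = \mathbb{E}_{\mathbf{x}_0 \sim q(\mathbf{x}_0),\ \boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I}),\ t}\left\|\boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t)\right\|_2^2, \qquad \mathbf{x}_t = \sqrt{\bar{\alpha}_t}\,\mathbf{x}_0 + \sqrt{1-\bar{\alpha}_t}\,\boldsymbol{\epsilon}$$

The denoising function $\boldsymbol{\epsilon}_\theta$ is designed to estimate the noise vector $\boldsymbol{\epsilon}$ that has been added to the corrupted input $\mathbf{x}_t$. This training objective can also be interpreted as a weighted form of denoising score matching, which is widely used for training score-based generative models.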
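The DDPM quantities above can be checked numerically with the scalar sketch below. It assumes a linear $\beta$ schedule with 50 steps purely for illustration (the schedule used by PDCDM is not stated here), and the helper names are hypothetical. When the true noise is supplied as $\boldsymbol{\epsilon}_\theta$, the $t = 1$ mean recovers $\mathbf{x}_0$.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule beta_1..beta_T (an illustrative choice)."""
    return [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{i<=t} (1 - beta_i), stored at list index t - 1."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def q_sample(x0, t, alpha_bars, noise):
    """Closed-form forward step: x_t = sqrt(alpha_bar_t) x_0 + sqrt(1 - alpha_bar_t) eps."""
    a = alpha_bars[t - 1]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]


def ddpm_mean(x_t, t, betas, alpha_bars, eps_pred):
    """mu_theta(x_t, t) = (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t)."""
    beta, a = betas[t - 1], alpha_bars[t - 1]
    return [(x - beta / math.sqrt(1.0 - a) * e) / math.sqrt(1.0 - beta) for x, e in zip(x_t, eps_pred)]


def ddpm_sigma(t, betas, alpha_bars):
    """sigma_t = sqrt(beta_tilde_t), with beta_tilde_1 = beta_1 as in the text."""
    if t == 1:
        return math.sqrt(betas[0])
    return math.sqrt((1.0 - alpha_bars[t - 2]) / (1.0 - alpha_bars[t - 1]) * betas[t - 1])


rng = random.Random(0)
betas = linear_betas(50)
alpha_bars = cumulative_alphas(betas)
x0 = [0.5, -1.2, 2.0]
noise = [rng.gauss(0.0, 1.0) for _ in x0]
x1 = q_sample(x0, 1, alpha_bars, noise)
```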

2.3.2. Conditional Multivariate Time Series Forecasting Based on PDCDM

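We now consider the more general multi-grid time series prediction task. Given historical observations $\mathbf{x}_0^{\mathrm{co}}$ and a prediction target $\mathbf{x}_0^{\mathrm{ta}}$, we generate the prediction target by conditioning on the historical observations $\mathbf{x}_0^{\mathrm{co}}$. Here, both $\mathbf{x}_0^{\mathrm{co}}$ and $\mathbf{x}_0^{\mathrm{ta}}$ correspond to the same spatial domain across all grids. Therefore, the goal of probabilistic prediction is to use the model distribution $p_\theta(\mathbf{x}_0^{\mathrm{ta}} \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P})$ to estimate the true conditional distribution $q(\mathbf{x}_0^{\mathrm{ta}} \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P})$, where $\mathbf{P}$ denotes the static grid POI features.

Unlike general conditional generation tasks, the temporal split between historical observations and prediction targets in forecasting tasks is fixed. Specifically, $\mathbf{x}_0^{\mathrm{co}}$ corresponds to historical time steps $\{1, \ldots, L\}$, and similarly $\mathbf{x}_0^{\mathrm{ta}}$ corresponds to future time steps $\{L+1, \ldots, L+H\}$, where $L$ and $H$ are the observation and prediction window lengths. This fixed temporal division reflects the causal nature of forecasting, where only past information can be used to predict the future. We define an observation mask $\mathbf{m}^{\mathrm{co}} \in \{0, 1\}^{N \times (L+H)}$ to indicate the positions of historical observations (with value 1 for historical steps and 0 for prediction steps). Correspondingly, we define a prediction mask $\mathbf{m}^{\mathrm{ta}}$ such that $\mathbf{m}^{\mathrm{ta}} = \mathbf{1} - \mathbf{m}^{\mathrm{co}}$.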

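To leverage the diffusion model $p_\theta$, we note that in the unconditional case, the reverse process $p_\theta(\mathbf{x}_{0:T})$ defines the data model distribution over the entire sequence. It is therefore natural to extend the reverse process to the conditional setting:

$$p_\theta(\mathbf{x}^{\mathrm{ta}}_{0:T} \mid \mathbf{x}^{\mathrm{co}}_0, \mathbf{P}) := p(\mathbf{x}^{\mathrm{ta}}_T) \prod_{t=1}^{T} p_\theta(\mathbf{x}^{\mathrm{ta}}_{t-1} \mid \mathbf{x}^{\mathrm{ta}}_t, \mathbf{x}^{\mathrm{co}}_0, \mathbf{P}), \qquad \mathbf{x}^{\mathrm{ta}}_T \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$$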

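However, existing diffusion models are generally designed for data generation and do not take conditional observations or spatial features as inputs. To use the diffusion model for prediction, we define the conditional denoising function $\boldsymbol{\epsilon}_\theta(\mathbf{x}_t^{\mathrm{ta}}, t \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P}, \mathbf{m}^{\mathrm{co}})$, which takes as input the noisy sequence $\mathbf{x}_t^{\mathrm{ta}}$ and diffusion step $t$, with historical observations $\mathbf{x}_0^{\mathrm{co}}$, POI features $\mathbf{P}$, and observation mask $\mathbf{m}^{\mathrm{co}}$ as conditions. Specifically, during the forward diffusion process, we add Gaussian noise only to the prediction targets while keeping the historical observations intact. In the reverse denoising process, we concatenate the noisy prediction targets with the original historical observations before feeding them into the network, using the following parameterization:

$$\boldsymbol{\mu}_\theta(\mathbf{x}_t^{\mathrm{ta}}, t \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P}) = \boldsymbol{\mu}^{\mathrm{DDPM}}\left(\mathbf{x}_t^{\mathrm{ta}}, t, \boldsymbol{\epsilon}_\theta(\mathbf{x}_t^{\mathrm{ta}}, t \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P}, \mathbf{m}^{\mathrm{co}})\right), \qquad \sigma_\theta(\mathbf{x}_t^{\mathrm{ta}}, t \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P}) = \sigma^{\mathrm{DDPM}}(\mathbf{x}_t^{\mathrm{ta}}, t)$$

where $\boldsymbol{\mu}^{\mathrm{DDPM}}$ and $\sigma^{\mathrm{DDPM}}$ are defined in Section 2.3.1. This design ensures that historical observations remain unchanged throughout the denoising process, thus avoiding information loss while fully leveraging both the historical information and the POI features of each grid to guide the generation of prediction targets.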
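The sampling phase and the resulting uncertainty estimate can be sketched for one grid as follows. `dummy_eps_model` is a hypothetical placeholder for the trained conditional denoiser, the $\beta$ schedule is illustrative, and summarizing the $S$ samples by a per-step median with a 5% to 95% band is one common choice rather than the paper's stated procedure. The history is passed unchanged at every step and never noised.

```python
import math
import random


def conditional_sample(eps_model, x_co, pred_len, betas, rng):
    """Ancestral DDPM sampling over the target region only.

    eps_model(x_ta, t, x_co) stands in for the conditional denoiser; the history
    x_co is passed unchanged at every step and is never noised.
    """
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)

    x = [rng.gauss(0.0, 1.0) for _ in range(pred_len)]  # x_T ~ N(0, I)
    for t in range(len(betas), 0, -1):
        eps = eps_model(x, t, x_co)
        beta, a = betas[t - 1], alpha_bars[t - 1]
        mean = [(xi - beta / math.sqrt(1.0 - a) * ei) / math.sqrt(1.0 - beta) for xi, ei in zip(x, eps)]
        if t > 1:
            sigma = math.sqrt((1.0 - alpha_bars[t - 2]) / (1.0 - a) * beta)
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added at the final step
    return x


def quantile(values, q):
    """Linear-interpolation empirical quantile."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def summarize(samples, q_low=0.05, q_high=0.95):
    """Per-step (lower, median, upper) across S sampled trajectories."""
    return [(quantile(v, q_low), quantile(v, 0.5), quantile(v, q_high)) for v in zip(*samples)]


def dummy_eps_model(x_ta, t, x_co):
    return [0.0] * len(x_ta)  # placeholder for the trained network


rng = random.Random(42)
betas = [1e-4 + (0.02 - 1e-4) * i / 49 for i in range(50)]  # illustrative schedule
history = [0.3, 0.1, -0.2, 0.4]
samples = [conditional_sample(dummy_eps_model, history, 6, betas, rng) for _ in range(20)]
bands = summarize(samples)
```

The width of each band is the per-step uncertainty signal discussed in Figure 1.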

2.3.3. Model Training

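Our training objective is to recover the original prediction targets from their noisy versions. Since the forward diffusion process is a Markov chain with fixed Gaussian transitions, only the reverse denoising process needs to be learned, and optimizing it amounts to making the learned reverse transitions closely approximate the true posterior distribution. Because $\mathbf{x}_t^{\mathrm{ta}}$ is a known linear combination of $\mathbf{x}_0^{\mathrm{ta}}$ and $\boldsymbol{\epsilon}$, predicting the original clean data is mathematically equivalent to predicting the added noise, and in practice the noise prediction formulation has been found to yield superior performance. Therefore, we adopt the noise prediction objective, and the loss function is formulated as follows:

$$\mathcal{L}(\theta) = \mathbb{E}_{\mathbf{x}_0,\ \boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I}),\ t}\left\|\left(\boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta(\mathbf{x}_t^{\mathrm{ta}}, t \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P}, \mathbf{m}^{\mathrm{co}})\right) \odot \mathbf{m}^{\mathrm{ta}}\right\|_2^2, \qquad \mathbf{x}_t^{\mathrm{ta}} = \sqrt{\bar{\alpha}_t}\,\mathbf{x}_0^{\mathrm{ta}} + \sqrt{1-\bar{\alpha}_t}\,\boldsymbol{\epsilon}$$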

The training process is shown in Figure 5.

Figure 5.

Self-supervised training procedure of PDCDM. The complete sequence is split into historical observations (blue, $L$ steps) and prediction targets (green hatched, $H$ steps). Historical observations and POI features serve as conditional inputs. Prediction targets are corrupted with Gaussian noise and fed into the denoising network along with diffusion step $t$. The network predicts the noise $\boldsymbol{\epsilon}_\theta$ and is trained by minimizing its difference from the true noise $\boldsymbol{\epsilon}$.
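One training step can be sketched as a single Monte Carlo estimate of the noise-prediction loss for one grid. The schedule and helper names are hypothetical; because only target positions are noised and scored, averaging over the target region plays the role of the mask $\mathbf{m}^{\mathrm{ta}}$ (up to a constant factor). The `oracle` denoiser exists only to check that a perfect noise predictor yields zero loss.

```python
import math
import random


def training_loss(eps_model, x_co, x_ta, alpha_bars, rng):
    """Single-sample Monte Carlo estimate of the noise-prediction loss.

    Only the target region x_ta is noised; the history x_co is passed to the
    denoiser unchanged, so the squared error is computed on target positions only.
    """
    t = rng.randint(1, len(alpha_bars))  # diffusion step drawn uniformly from 1..T
    noise = [rng.gauss(0.0, 1.0) for _ in x_ta]
    a = alpha_bars[t - 1]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x_ta, noise)]
    eps_pred = eps_model(x_t, t, x_co)
    return sum((e - p) ** 2 for e, p in zip(noise, eps_pred)) / len(x_ta)


betas = [1e-4 + (0.02 - 1e-4) * i / 49 for i in range(50)]  # illustrative schedule
alpha_bars, prod = [], 1.0
for beta in betas:
    prod *= 1.0 - beta
    alpha_bars.append(prod)

x_co, x_ta = [0.2, 0.5, 0.1], [0.7, -0.3]


def oracle(x_t, t, cond):
    """Recovers the exact noise from the known clean target (for checking only)."""
    a = alpha_bars[t - 1]
    return [(xt - math.sqrt(a) * x0) / math.sqrt(1.0 - a) for xt, x0 in zip(x_t, x_ta)]


def zeros(x_t, t, cond):
    return [0.0] * len(x_t)
```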

2.4. PDCDM Model Architecture

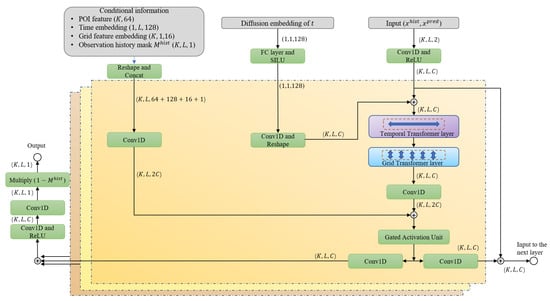

To achieve this conditional denoising objective, we design a denoising network that integrates temporal grid features with POI semantic information. As illustrated in Figure 6, the network employs a DiffWave-based residual block architecture [] and incorporates a dual-dimension Transformer attention mechanism to capture long-range dependencies across both temporal and grid dimensions. Via gated activation units and cross-layer skip connections, the network adaptively fuses static POI features with dynamic grid-level temporal patterns, thereby enabling multi-scale feature aggregation.
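The gated residual block can be sketched at a single (grid, time) position as below. This follows the general DiffWave-style pattern (step embedding added to the input, conditional features added before a tanh/sigmoid gate, output split into residual and skip paths, residual scaled by $1/\sqrt{2}$); the exact PDCDM wiring is not specified here, so treat the layout as an assumption. The `mix` argument is a hypothetical placeholder for the dual-dimension attention, which needs all positions at once.

```python
import math


def matvec(weight, vec):
    """Dense layer without bias: weight is a list of rows."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weight]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v)) if v >= 0 else math.exp(v) / (1.0 + math.exp(v))


def residual_block(x, step_emb, cond, w_mid, w_cond, w_out, mix=lambda h: h):
    """One DiffWave-style residual block at a single (grid, time) position.

    x, step_emb: length-C vectors; cond: projected conditional features.
    w_mid, w_cond: map to 2C channels; w_out: maps C -> 2C.
    """
    c = len(x)
    h = mix([a + b for a, b in zip(x, step_emb)])  # inject the diffusion step
    h = [a + b for a, b in zip(matvec(w_mid, h), matvec(w_cond, cond))]  # add condition
    gate, filt = h[:c], h[c:]
    h = [sigmoid(g) * math.tanh(f) for g, f in zip(gate, filt)]  # gated activation unit
    out = matvec(w_out, h)
    residual, skip = out[:c], out[c:]
    # Residual path feeds the next block; skip paths are summed across blocks.
    return [(a + r) / math.sqrt(2.0) for a, r in zip(x, residual)], skip


def zero_matrix(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


C = 2
nxt, skip = residual_block(
    [1.0, -2.0], [0.1, 0.1], [0.5], zero_matrix(2 * C, C), zero_matrix(2 * C, 1), zero_matrix(2 * C, C)
)
```

With all-zero weights the gate output is zero, so the block reduces to scaling its input by $1/\sqrt{2}$.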

Figure 6.

PDCDM Network Architecture.

2.4.1. Input Processing and Encoding

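We define the denoising function as $\boldsymbol{\epsilon}_\theta(\mathbf{x}_t^{\mathrm{ta}}, t \mid \mathbf{x}_0^{\mathrm{co}}, \mathbf{P}, \mathbf{m}^{\mathrm{co}})$, which takes as input the noisy prediction target, diffusion step, historical observations, POI features, and observation mask. During training, the prediction target $\mathbf{x}_0^{\mathrm{ta}}$ is progressively corrupted to $\mathbf{x}_t^{\mathrm{ta}}$ through the forward diffusion process, while the historical observations remain unchanged. During sampling, the prediction region is initialized as pure Gaussian noise $\mathbf{x}_T^{\mathrm{ta}} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. We construct dual-channel inputs through masking operations:

$$\mathbf{X}_{\mathrm{cond}} = \mathbf{m}^{\mathrm{co}} \odot \mathbf{x}_0^{\mathrm{co}}, \qquad \mathbf{X}_{\mathrm{noisy}} = \mathbf{m}^{\mathrm{ta}} \odot \mathbf{x}_t^{\mathrm{ta}}$$

where $\odot$ denotes element-wise multiplication. $\mathbf{X}_{\mathrm{cond}}$ and $\mathbf{X}_{\mathrm{noisy}}$ are concatenated along the feature dimension to form the model input $\mathbf{X}_{\mathrm{in}} \in \mathbb{R}^{2 \times N \times (L+H)}$, which is then passed through a 1D convolutional layer followed by ReLU activation for initial feature fusion:

$$\mathbf{H}^{(0)} = \mathrm{ReLU}\left(\mathrm{Conv1D}(\mathbf{X}_{\mathrm{in}})\right) \in \mathbb{R}^{C \times N \times (L+H)}$$

where $C$ is the number of hidden channels. This design enables adaptive learning of feature representations by fusing conditioning information from historical observations with noisy prediction targets. Subsequent blocks progressively refine these representations.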
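The masking and initial fusion can be sketched for one grid as follows. This assumes a kernel size of 1 for the Conv1D layer (so it acts as a per-position linear map over the two input channels); the weights and helper names are hypothetical.

```python
def dual_channel_input(x_co, x_ta_noisy, m_co):
    """Build [X_cond, X_noisy] for one grid over L + H steps.

    x_co: history values (entries in the prediction window are ignored).
    x_ta_noisy: noisy target values (entries in the history window are ignored).
    m_co: 1 for historical steps, 0 for prediction steps.
    """
    m_ta = [1 - m for m in m_co]
    x_cond = [m * x for m, x in zip(m_co, x_co)]
    x_noisy = [m * x for m, x in zip(m_ta, x_ta_noisy)]
    return [x_cond, x_noisy]


def pointwise_conv_relu(channels, weight, bias):
    """Conv1D with kernel size 1 (a per-position linear map over channels) plus ReLU."""
    length = len(channels[0])
    return [
        [max(0.0, b + sum(w * ch[pos] for w, ch in zip(row, channels))) for pos in range(length)]
        for row, b in zip(weight, bias)
    ]


x_in = dual_channel_input([1.0, 2.0, 0.0, 0.0], [9.0, 9.0, 0.5, -0.5], [1, 1, 0, 0])
h0 = pointwise_conv_relu(x_in, weight=[[1.0, 1.0], [0.0, -1.0]], bias=[0.0, 0.0])
```

The noisy values in the history window (9.0 here) are zeroed by the mask, so they never leak into the network input.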

2.4.2. Diffusion Timestep Embedding

To inform the network of the current diffusion step and enable adaptive denoising, we encode the timestep $t$ into a continuous high-dimensional vector. Following the sinusoidal positional encoding with exponentially scaled frequencies, we employ 64 sine and 64 cosine functions:

$$\mathbf{e}(t) = \left[\sin\left(10^{\frac{4j}{63}}\, t\right)_{j=0}^{63},\ \cos\left(10^{\frac{4j}{63}}\, t\right)_{j=0}^{63}\right]$$

where $j = 0, 1, \ldots, 63$, generating a 128-dimensional vector $\mathbf{e}(t) \in \mathbb{R}^{128}$. This embedding is then projected to the hidden dimension via a fully connected layer with SiLU activation. The resulting timestep embedding is broadcast and added to each grid location across the feature and temporal dimensions, thereby providing step-conditional information to the network.
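A sketch of the step embedding and its projection, assuming the frequencies $10^{4j/63}$ for $j = 0, \ldots, 63$ (spanning 1 to $10^4$); the hidden size of 4 and the zero weights in the usage line are purely illustrative, and the helper names are hypothetical.

```python
import math


def diffusion_step_embedding(t, num_freqs=64):
    """128-dim embedding of diffusion step t: 64 sines followed by 64 cosines."""
    freqs = [10.0 ** (4.0 * j / (num_freqs - 1)) for j in range(num_freqs)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


def silu(v):
    """SiLU activation v * sigmoid(v), written to avoid overflow for large |v|."""
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    e = math.exp(v)
    return v * e / (1.0 + e)


def project_step_embedding(emb, weight, bias):
    """Fully connected layer followed by SiLU: 128 dims -> hidden size."""
    return [silu(b + sum(w * e for w, e in zip(row, emb))) for row, b in zip(weight, bias)]


emb = diffusion_step_embedding(t=7)
hidden = project_step_embedding(emb, weight=[[0.0] * 128 for _ in range(4)], bias=[0.0, 1.0, -1.0, 2.0])
```

In the network, the projected vector would be broadcast over all grid cells and time positions before being added to the features.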

2.4.3. Conditional Information Encoding

To incorporate the spatial semantic information of static POIs within the grid into temporal prediction, we feed the POI feature as conditional information into the network. In addition to the POI feature, we also utilize temporal embeddings, feature embeddings, and conditional masks collectively as the conditional information input into the network, guiding the training process.

The temporal embedding captures the positional information and periodic patterns of the time series. For each time index $l$, we apply a 128-dimensional sinusoidal encoding:

$$\mathbf{s}(l) = \left[\sin\left(\frac{l}{\tau^{j/64}}\right)_{j=0}^{63},\ \cos\left(\frac{l}{\tau^{j/64}}\right)_{j=0}^{63}\right]$$

where $\tau = 10000$. The grid feature embedding employs a 16-dimensional learnable embedding for each of the $N$ grid locations to distinguish different grids. The observation mask $\mathbf{m}^{\mathrm{co}}$ indicates which positions are conditional inputs. All conditional information (temporal embeddings, grid embeddings, observation masks, and POI features) is concatenated along the channel dimension:

$$\mathbf{C} = \mathrm{Concat}\left(\mathbf{s},\ \mathbf{E}_{\mathrm{grid}},\ \mathbf{m}^{\mathrm{co}},\ \mathbf{P}\right) \in \mathbb{R}^{209 \times N \times (L+H)}$$

where the total dimension is 209 (128 temporal + 16 grid + 1 mask + 64 POI channels). The concatenated conditional features are then projected to the network hidden dimension via a 1 × 1 convolutional layer, enabling effective fusion of multi-source information. Concatenating the raw features directly, rather than fusing them beforehand with an additional parameterized module, avoids extra parameters and the associated overfitting risk, allowing the network to adaptively learn task-specific contribution weights for different conditional features.
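The channel layout of the conditional input at a single (grid, time) position can be sketched as below. The 64 POI channels are taken from the 64-dimensional POI features of Section 2.2, which is consistent with the stated total of 209; the ordering of the slots, the hidden size of 1 in the usage line, and the helper names are illustrative assumptions.

```python
import math

TIME_DIM, GRID_DIM, MASK_DIM, POI_DIM = 128, 16, 1, 64  # 128 + 16 + 1 + 64 = 209


def time_embedding(l, dim=TIME_DIM, tau=10000.0):
    """Sinusoidal encoding of time index l: sin(l / tau**(j/64)) then cos(...), j = 0..63."""
    half = dim // 2
    return ([math.sin(l / tau ** (j / half)) for j in range(half)]
            + [math.cos(l / tau ** (j / half)) for j in range(half)])


def side_info(l, grid_emb, mask_value, poi_vec):
    """Channel-wise concatenation of the conditional features for one (grid, time) position."""
    if len(grid_emb) != GRID_DIM or len(poi_vec) != POI_DIM:
        raise ValueError("unexpected grid-embedding or POI dimension")
    return time_embedding(l) + list(grid_emb) + [float(mask_value)] + list(poi_vec)


def conv1x1(vec, weight):
    """A 1 x 1 convolution at one position is a matrix-vector product (bias omitted)."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weight]


feat = side_info(l=3, grid_emb=[0.1] * GRID_DIM, mask_value=1, poi_vec=[0.0] * POI_DIM)
projected = conv1x1(feat, [[1.0] + [0.0] * (len(feat) - 1)])  # hidden size 1, for illustration
```

The POI slot is identical for every time step of a grid, since the POI features are static.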

2.4.4. Dual-Dimension Transformer Attention Mechanism

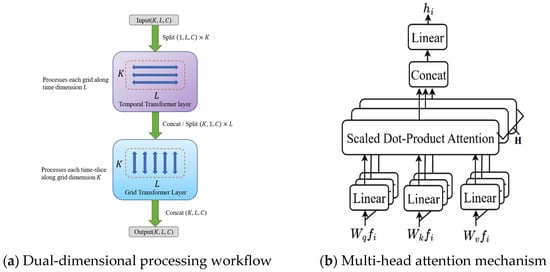

To capture spatiotemporal dependencies, the fused features pass through a dual-dimensional Transformer attention mechanism. As shown in Figure 7, this mechanism consists of a temporal Transformer layer followed by a grid Transformer layer.

Figure 7.

Dual-dimensional Transformer Attention Mechanism. (a) Dual-dimensional processing flow: $N$ represents the number of spatial grids and $L+H$ is the length of the temporal sequence. Temporal dependencies are first modeled independently for each grid along the time dimension ($L+H$), and interactions between grids are then modeled along the grid dimension ($N$); (b) Multi-head attention structure: features are projected via $W_Q$, $W_K$, and $W_V$ to compute attention and aggregate information.

The Temporal Transformer layer models sequence dependencies along the temporal dimension. Given the input features, it first reshapes them into independent time series, one per grid, and then applies self-attention along the temporal dimension to compute correlation weights between every pair of time steps. Temporal attention enables the model to adaptively focus on the time steps most relevant to each prediction target given the context of historical observations, capturing periodic patterns (such as daily peaks and weekly rhythms) and long-range temporal dependencies.
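A minimal NumPy sketch of the temporal layer follows, assuming features shaped (C, N, L) for C channels, N grids, and L time steps. It uses a single attention head with random projection weights, whereas the paper's layer is multi-head; all names are hypothetical. The perturbation check confirms the property described above: each grid is processed as its own sequence, so changing one grid leaves the outputs of the others untouched.

```python
import numpy as np

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over axis -2 of x: (..., S, C)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

def temporal_attention(h, wq, wk, wv):
    """h: (C, N, L). Treat each grid as an independent sequence of L time steps."""
    seqs = np.transpose(h, (1, 2, 0))          # (N, L, C): a batch of N sequences
    out = self_attention(seqs, wq, wk, wv)     # attention across L within each grid
    return np.transpose(out, (2, 0, 1))        # back to (C, N, L)

rng = np.random.default_rng(0)
C, N, L = 8, 4, 10
wq, wk, wv = (rng.normal(scale=C ** -0.5, size=(C, C)) for _ in range(3))
h = rng.normal(size=(C, N, L))
out = temporal_attention(h, wq, wk, wv)

# Perturb grid 3 only: temporal attention never mixes grids, so grids 0-2 are unaffected.
h2 = h.copy()
h2[:, 3, :] += 1.0
out2 = temporal_attention(h2, wq, wk, wv)
print(np.allclose(out[:, :3], out2[:, :3]))   # True
print(np.allclose(out[:, 3], out2[:, 3]))     # False
```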

The Grid Transformer layer models multi-grid interactions along the grid dimension. It splits the temporally processed features into independent snapshots, one per time step, each containing all grids. A global self-attention mechanism is then applied along the grid dimension to compute correlation weights between the sampled grid cells. Unlike methods based on predefined adjacency graphs, global attention learns dependencies between arbitrary grid pairs in a data-driven manner, without requiring a manually specified spatial adjacency. Because the attention weights are computed from the features at each time step, the Grid Transformer layer adjusts the interaction strength between grid pairs dynamically over time.
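The sketch below contrasts global grid attention with a graph-restricted variant on a single time-step snapshot. It assumes a single head, random projection weights, and a hypothetical chain adjacency used purely for contrast; none of these come from the paper. Under global attention every grid pair receives a nonzero weight, whereas masking with a predefined adjacency forces the weights of non-neighbors to zero.

```python
import numpy as np

def attention_weights(x, wq, wk, adjacency=None):
    """x: (N, C) features of all grids at one time step -> (N, N) attention weights."""
    scores = (x @ wq) @ (x @ wk).T / np.sqrt(wq.shape[1])
    if adjacency is not None:
        scores = np.where(adjacency, scores, -np.inf)     # graph-restricted variant
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    return w / w.sum(axis=-1, keepdims=True)

def grid_attention(h, wq, wk, wv):
    """h: (C, N, L). Each time step is an independent snapshot of all N grids."""
    snapshots = np.transpose(h, (2, 1, 0))                # (L, N, C)
    out = np.stack([attention_weights(s, wq, wk) @ (s @ wv) for s in snapshots])
    return np.transpose(out, (2, 1, 0))                   # back to (C, N, L)

rng = np.random.default_rng(0)
C, N, L = 8, 6, 5
wq, wk, wv = (rng.normal(scale=C ** -0.5, size=(C, C)) for _ in range(3))

snapshot = rng.normal(size=(N, C))
w_global = attention_weights(snapshot, wq, wk)
idx = np.arange(N)
chain = np.abs(idx[:, None] - idx[None, :]) <= 1          # hypothetical adjacency with self-loops
w_graph = attention_weights(snapshot, wq, wk, adjacency=chain)
print((w_global > 0).all())    # True: every grid pair receives a weight
print(w_graph[0, 5])           # 0.0: the non-adjacent pair is excluded
print(grid_attention(rng.normal(size=(C, N, L)), wq, wk, wv).shape)   # (8, 6, 5)
```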

The sequential design of dual-dimensional attention lets information propagate along both dimensions. Temporal attention first models time-dependent relationships within each grid, and grid attention then models interactions across all grids. This “time-first, grid-second” order enables the network to learn the temporal evolution within each grid before capturing synergistic effects between grids. After both layers, the representation of one grid at a given time step can depend on other grids at other time steps, which neither layer achieves on its own; this allows the network to model the complex dependency structures of multi-grid data more effectively.
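To illustrate why the stacking order matters, the sketch below composes a simplified temporal layer and grid layer with residual connections. The simplifications (single-head attention, random weights, no layer normalization or feed-forward sublayers) are assumptions for illustration only. It perturbs grid 2 at time step 0 and checks whether the output for grid 0 at time step 7 reacts.

```python
import numpy as np

def attend(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over axis -2 of x: (..., S, C)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    s = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    w = np.exp(s - s.max(axis=-1, keepdims=True))
    return (w / w.sum(axis=-1, keepdims=True)) @ v

def temporal_layer(h, params):
    """h: (C, N, L). Attend over time within each grid, plus a residual connection."""
    out = attend(np.transpose(h, (1, 2, 0)), *params)       # (N, L, C)
    return h + np.transpose(out, (2, 0, 1))

def grid_layer(h, params):
    """Attend over grids within each time step, plus a residual connection."""
    out = attend(np.transpose(h, (2, 1, 0)), *params)       # (L, N, C)
    return h + np.transpose(out, (2, 1, 0))

def dual_block(h, p_time, p_grid):
    """Time first, grid second."""
    return grid_layer(temporal_layer(h, p_time), p_grid)

rng = np.random.default_rng(0)
C, N, L = 8, 4, 10

def random_params():
    return tuple(rng.normal(scale=C ** -0.5, size=(C, C)) for _ in range(3))

p_time, p_grid = random_params(), random_params()
h = rng.normal(size=(C, N, L))
h_pert = h.copy()
h_pert[:, 2, 0] += 1.0                        # perturb grid 2 at time step 0

def reacts(layer):
    """Does the output at grid 0, time step 7 change under the perturbation?"""
    return not np.allclose(layer(h)[:, 0, 7], layer(h_pert)[:, 0, 7])

print(reacts(lambda x: temporal_layer(x, p_time)))        # False: different grid
print(reacts(lambda x: grid_layer(x, p_grid)))            # False: different time step
print(reacts(lambda x: dual_block(x, p_time, p_grid)))    # True: reached via grid 2 at step 7
```

The temporal layer first spreads the change across all time steps of grid 2, and the grid layer then carries it to grid 0, which is the cross-grid, cross-time path described above.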

3. Experimental Settings

3.1. Dataset

To evaluate the effectiveness of the proposed PDCDM, we conduct comprehensive experiments on a real-world dataset of grid-level population inflows in Beijing, spanning 10 months from September 2023 to June 2024. Table 3 summarizes the dataset statistics.

Table 3.

Statistics of the dataset.

We adopt the same stratified monthly sampling strategy described in Section 2.2 for dataset partitioning.

3.2. Evaluation Metrics

We choose the Continuous Ranked Probability Score (CRPS) as the metric for evaluating probabilistic prediction performance. CRPS is commonly used to measure the compatibility of an estimated probability distribution $F$ with an observation $x$:

$$\mathrm{CRPS}(F, x) = \int_{\mathbb{R}} \left(F(z) - \mathbb{1}(x \le z)\right)^2 \, dz,$$

where $\mathbb{1}(x \le z)$ is an indicator function that equals one if $x \le z$ and zero otherwise. A smaller CRPS indicates better performance.
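With a finite set of forecast samples, the CRPS integral is usually estimated from the empirical distribution of the samples. The sketch below uses the identity $\mathrm{CRPS}(F, x) = \mathbb{E}|X - x| - \tfrac{1}{2}\mathbb{E}|X - X'|$, where $X$ and $X'$ are independent draws from $F$. This estimator is an assumption: this section does not state how CRPS is computed or aggregated, and some diffusion forecasting codebases (for example, CSDI's) instead approximate it by averaging quantile losses over a grid of quantile levels. The function name is hypothetical.

```python
import numpy as np

def crps_from_samples(samples, obs):
    """Exact CRPS of the empirical distribution of `samples` against `obs`.

    samples: (S, ...) draws from the predictive distribution; obs: (...) observations.
    Uses CRPS = E|X - x| - 0.5 * E|X - X'|. Memory grows as S^2, fine for tens of samples.
    """
    samples = np.asarray(samples, dtype=float)
    obs = np.asarray(obs, dtype=float)
    term1 = np.abs(samples - obs).mean(axis=0)
    term2 = np.abs(samples[:, None] - samples[None, :]).mean(axis=(0, 1))
    return term1 - 0.5 * term2

print(crps_from_samples([0.0, 1.0], 0.0))     # 0.25
print(crps_from_samples([3.0], 1.0))          # 2.0: one sample reduces to the absolute error

# Per-position CRPS for 15 hypothetical samples over 4 grids and 96 steps, then averaged.
rng = np.random.default_rng(0)
truth = rng.normal(size=(4, 96))
forecast = truth + rng.normal(size=(15, 4, 96))
print(crps_from_samples(forecast, truth).mean())
```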

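In addition, we use the Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) to evaluate deterministic prediction performance. Let $y_i$ be the label and $\hat{y}_i$ the predicted value for the $i$-th of $n$ evaluated points:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2},$$

where smaller values indicate better performance.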
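A short sketch of the two point metrics follows. How a point forecast is derived from a probabilistic model is not specified in this section; taking the per-position median of the generated samples, as done below, is a common choice and an assumption here. The sample data are hypothetical.

```python
import numpy as np

def mae(y, y_hat):
    """Mean absolute error over all evaluated points."""
    return float(np.mean(np.abs(np.asarray(y) - np.asarray(y_hat))))

def rmse(y, y_hat):
    """Root mean squared error over all evaluated points."""
    return float(np.sqrt(np.mean((np.asarray(y) - np.asarray(y_hat)) ** 2)))

y = np.array([1.0, 2.0, 3.0])
y_hat = np.array([1.0, 2.0, 5.0])
print(mae(y, y_hat))     # 2/3, about 0.667
print(rmse(y, y_hat))    # sqrt(4/3), about 1.155

# For a probabilistic model, one possible point forecast is the per-position sample median.
rng = np.random.default_rng(0)
samples = y + rng.normal(scale=0.1, size=(15, 3))   # 15 hypothetical forecast samples
point = np.median(samples, axis=0)
print(mae(y, point) <= rmse(y, point))   # True: RMSE is never smaller than MAE
```

RMSE weights large errors more heavily than MAE, so a gap between the two indicates that errors are concentrated in a few positions, as in the toy example where a single point contributes all of the error.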

3.3. Implementation Details

In our experiments, we set the batch size to 8 and used the Adam optimizer for parameter updates. The initial learning rate was set to 0.001 and the weight decay coefficient to 1e-6 to prevent overfitting. A multi-step learning rate decay strategy multiplied the learning rate by 0.1 at 75% and at 90% of the training process, enabling finer-grained parameter tuning in the later stages. Training used an early stopping mechanism that evaluated model performance on the validation set every 10 epochs and terminated training if the validation loss failed to improve for several consecutive evaluations.

To address the computational challenges posed by high-dimensional data, we randomly sampled 100 grids per batch (out of 5853 grids in total) during training. This approach substantially reduced computational cost while preserving the model’s generalization capability. During validation, we used a 5% grid sampling rate (approximately 293 grids), which avoids memory overflow while yielding stable performance estimates.

Additionally, we employ a quadratic noise schedule for the forward diffusion process:

$$\beta_t = \left(\frac{T-t}{T-1}\sqrt{\beta_{\min}} + \frac{t-1}{T-1}\sqrt{\beta_{\max}}\right)^2, \quad t = 1, \ldots, T,$$

where $T$ denotes the total number of diffusion steps, and $\beta_{\min}$ and $\beta_{\max}$ represent the minimum and maximum noise levels, respectively. The raw inflow data are normalized using z-score standardization to ensure comparability across different grids and time steps.
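The schedules and sampling steps above can be sketched as follows. The number of diffusion steps (50) and the noise bounds ($10^{-4}$ and $0.5$) are hypothetical values borrowed from common CSDI-style configurations, since this section does not report them; the helper names are also hypothetical. Squaring a linear interpolation between the square roots of the minimum and maximum noise levels yields a quadratic schedule, and the grid-sampling lines mirror the 100-grid training batches and the 5% validation subset described above.

```python
import numpy as np

def quadratic_beta_schedule(num_steps, beta_min, beta_max):
    """Linearly interpolate sqrt(beta) between the bounds, then square."""
    return np.linspace(np.sqrt(beta_min), np.sqrt(beta_max), num_steps) ** 2

def learning_rate(progress, base_lr=1e-3, milestones=(0.75, 0.9), gamma=0.1):
    """Multi-step decay: multiply by gamma at each milestone (fraction of training done)."""
    return base_lr * gamma ** sum(progress >= m for m in milestones)

# Hypothetical diffusion settings, not taken from the paper.
betas = quadratic_beta_schedule(num_steps=50, beta_min=1e-4, beta_max=0.5)
alpha_bars = np.cumprod(1.0 - betas)        # cumulative product of (1 - beta_t)
print(betas[0], betas[-1], alpha_bars[-1])

print([learning_rate(p) for p in (0.5, 0.8, 0.95)])   # approximately 1e-3, 1e-4, 1e-5

# Random grid subsets: 100 grids per training batch, 5% of all grids for validation.
rng = np.random.default_rng(0)
total_grids = 5853
train_batch_grids = rng.choice(total_grids, size=100, replace=False)
val_grids = rng.choice(total_grids, size=round(0.05 * total_grids), replace=False)
print(len(train_batch_grids), len(val_grids))         # 100 293
```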

3.4. Baseline Models

Both state-of-the-art probabilistic and deterministic models are included for the performance comparison.

Probabilistic baselines. The following methods are implemented as baselines for forecasting:

- SSSD [], which is a diffusion model that incorporates structured state space models (S4) for probabilistic time series imputation and forecasting.

- DiffusionTS [], which is a Transformer-based diffusion model for general time series generation that can also perform conditional forecasting.

- CSDI [], which is a diffusion-based non-autoregressive model first proposed for multivariate time series imputation.

Deterministic baselines. We choose some popular and state-of-the-art methods for comparison:

- PatchTST [], which is a Transformer model that segments each time series into subseries-level patches used as input tokens and processes channels independently to capture long-term temporal dependencies.

- iTransformer [], which is an inverted Transformer architecture that treats each variate as a token to effectively model inter-variate dependencies in multivariate time series.

- TimeMixer [], which is a multi-scale mixing model that decomposes time series into macro and micro temporal patterns for multi-resolution feature fusion and forecasting.

- TimesNet [], which is a 2D convolution-based model that reshapes 1D time series into 2D tensors to capture intra-period and inter-period variations.

- FEDformer [], which is a frequency-domain Transformer that applies attention mechanisms via Fourier transforms to capture periodic patterns.

4. Results

4.1. Performance Comparison

Probabilistic modeling approaches for urban grid-level population inflow forecasting remain underexplored. Therefore, we benchmark PDCDM against state-of-the-art probabilistic and deterministic methods from the time series forecasting domain, including probabilistic approaches (SSSD, DiffusionTS, CSDI) and deterministic approaches (PatchTST, iTransformer, TimeMixer, TimesNet, FEDformer). We employ the Continuous Ranked Probability Score (CRPS) as the primary metric for probabilistic forecasting performance, alongside MAE and RMSE to assess point prediction accuracy across all models.

As shown in Table 4, PDCDM achieves the best performance across all evaluation metrics. Compared to the strongest baseline, PDCDM reduces MAE by 11.7%, RMSE by 14.9%, and CRPS by 8.6%. Among the probabilistic baselines, DiffusionTS is highly competitive. As a Transformer-based diffusion model for time series, DiffusionTS effectively captures temporal dependencies, highlighting the strengths of diffusion models for sequential modeling. However, it does not account for urban spatial heterogeneity, which limits its performance on grid-level forecasting tasks. SSSD performs poorly on our forecasting task, as its structured state space model struggles with high-dimensional spatial data. While CSDI demonstrates strong capability in spatial modeling, its imputation-oriented design does not fully exploit the autoregressive nature of forecasting, which limits its predictive accuracy. Beyond point forecasting accuracy, PDCDM achieves the lowest CRPS of all compared methods. Because CRPS jointly rewards calibration and sharpness, this indicates that PDCDM’s predictive distributions are both better calibrated and more tightly concentrated around the observations, confirming that PDCDM delivers forecasts that are not only accurate but also well calibrated.

Table 4.

Experimental results of different mainstream methods on the test set. The best result is in bold, the second-best result is underlined, and “-” indicates that the model does not support probabilistic prediction.

Inference Time. Diffusion-based prediction methods are generally much slower than other methods because inference requires iterative denoising, although they deliver superior probabilistic prediction performance. We therefore focus on comparing the inference speeds of the diffusion-based methods: SSSD, DiffusionTS, CSDI, and the proposed PDCDM. Table 5 reports the average inference time of these four diffusion models when generating different numbers of samples for a single grid. SSSD is extremely time-consuming due to its recurrent state space architecture, and our model achieves approximately 30-fold acceleration over it, owing to its non-autoregressive, parallelized architecture. Despite employing a decoupled Transformer structure, DiffusionTS still incurs a high inference time, requiring 103.783 s to generate 30 samples. PDCDM achieves efficient inference through parallelization along the temporal dimension and an optimized network design, generating 30 samples in just 13.894 s. This places it on par with CSDI (10.662 s), which also employs a non-autoregressive architecture.

Table 5.

Time cost (in seconds) of diffusion-based models (SSSD, DiffusionTS, CSDI, PDCDM).
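The cost structure behind Table 5 can be seen in a bare-bones ancestral DDPM sampler. This is a generic sketch, not PDCDM's sampler: the denoiser is a hypothetical placeholder that predicts zero noise (a real model would also receive the conditional features), the 50-step quadratic schedule is assumed, and the 96-step output matches the forecasting horizon (timesteps 480–576) shown in Figure 8. Every diffusion step requires one network evaluation; stacking the samples along a batch axis, as here, lets all samples share those evaluations.

```python
import numpy as np

def sample_forecasts(denoiser, shape, n_samples, betas, seed=0):
    """Ancestral DDPM sampling; all samples are denoised together, one call per step."""
    rng = np.random.default_rng(seed)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal((n_samples, *shape))        # start from pure Gaussian noise
    calls = 0
    for t in range(len(betas) - 1, -1, -1):             # t = T-1, ..., 0 (0-indexed)
        eps = denoiser(x, t)                            # predicted noise, same shape as x
        calls += 1
        coef = betas[t] / np.sqrt(1.0 - alpha_bars[t])
        x = (x - coef * eps) / np.sqrt(alphas[t])       # posterior mean
        if t > 0:                                       # no noise is added at the final step
            var = betas[t] * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t])
            x = x + np.sqrt(var) * rng.standard_normal(x.shape)
    return x, calls

def dummy_denoiser(x, t):
    """Hypothetical placeholder for the conditional network; predicts zero noise."""
    return np.zeros_like(x)

betas = np.linspace(np.sqrt(1e-4), np.sqrt(0.5), 50) ** 2    # hypothetical schedule
samples, calls = sample_forecasts(dummy_denoiser, shape=(1, 96), n_samples=30, betas=betas)
print(samples.shape, calls)   # (30, 1, 96) 50
```

Wall-clock time therefore scales with the number of diffusion steps times the cost of one network call on the batched samples, which is why architectures that process the whole horizon in parallel within each call are faster.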

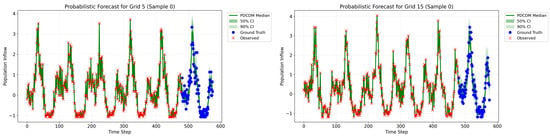

Visualization. To illustrate PDCDM’s forecasting performance, we visualize the probabilistic prediction distributions for representative grids in Figure 8. The model quantifies uncertainty by generating 15 prediction samples, from which we derive the median prediction (green solid line) and two confidence intervals: the 50% CI (dark green shaded area, spanning the 25th–75th percentiles) and the 90% CI (light green shaded area, spanning the 5th–95th percentiles). As shown in Figure 8, during the historical period (timesteps 0–480, marked with red crosses), observed values cluster tightly around the median prediction and fall almost entirely within the confidence intervals, demonstrating the model’s ability to accurately capture historical patterns. More critically, during the forecasting horizon (timesteps 480–576, marked with blue circles), ground truth values across all grids predominantly lie within the 90% confidence interval, validating the model’s probabilistic reliability. Across different grids, PDCDM maintains consistent prediction quality while successfully capturing spatial heterogeneity.

Figure 8.

Probabilistic forecasting visualization.

Notably, the confidence interval width adapts to prediction difficulty: during stable periods, narrower intervals reflect higher predictive certainty, while during volatile periods (e.g., the peak region in Grid 5 at timesteps 500–570), the model produces wider uncertainty bounds, demonstrating its capability to capture prediction uncertainty. Furthermore, the visualizations clearly reveal PDCDM’s ability to learn periodic patterns in population inflow data: regular oscillations observed during the historical period are effectively extrapolated to the forecasting horizon. For instance, in Grid 15’s forecasting period, the model accurately captures the fluctuation pattern between timesteps 520–550, with the median prediction closely aligning with ground truth. This demonstrates the model’s capacity to learn and extrapolate long-range temporal dependencies in extended forecasting scenarios. These adaptive uncertainty quantification capabilities and precise spatiotemporal pattern recognition enable PDCDM to provide reliable decision support for practical applications such as urban planning.
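The median and the 50% and 90% intervals plotted in Figure 8 can be computed from raw samples along these lines. The ground truth and the 15 samples below are synthetic placeholders, and the linear-interpolation percentile rule is NumPy's default rather than a choice documented in the paper; with only 15 samples, the 5th and 95th percentiles are interpolated between the two smallest and the two largest draws, respectively. The hypothetical `coverage` helper measures the fraction of ground-truth points inside a band, one way to quantify the statement that ground truth predominantly lies within the 90% interval.

```python
import numpy as np

def summarize_samples(samples):
    """samples: (S, H) forecasts for one grid -> median plus 50% and 90% bands."""
    q = np.percentile(samples, [5, 25, 50, 75, 95], axis=0)    # (5, H)
    return {"median": q[2], "ci50": (q[1], q[3]), "ci90": (q[0], q[4])}

def coverage(truth, lower, upper):
    """Fraction of ground-truth points inside the closed band [lower, upper]."""
    return float(np.mean((truth >= lower) & (truth <= upper)))

rng = np.random.default_rng(0)
horizon = 96                                       # timesteps 480-576
truth = rng.normal(size=horizon)                   # synthetic ground truth
samples = truth + rng.normal(size=(15, horizon))   # 15 synthetic forecast samples
summary = summarize_samples(samples)
lower90, upper90 = summary["ci90"]
lower50, upper50 = summary["ci50"]
print(f"90% band coverage: {coverage(truth, lower90, upper90):.2f}")
print(f"50% band coverage: {coverage(truth, lower50, upper50):.2f}")
```

Reporting band width alongside coverage would make the adaptive-width behavior described above measurable as well, since a wide band trivially achieves high coverage.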

4.2. Ablation Study

To systematically evaluate the contribution of each component to the overall performance, we conduct ablation studies by removing individual modules from PDCDM. Table 6 summarizes the results across different configurations.

Table 6.

Ablation study of different modules in the model.

PDCDM comprises three core components: the POI feature fusion module, the temporal Transformer layer, and the spatial (grid) Transformer layer. We remove each component individually to assess its contribution to probabilistic forecasting performance, evaluated using MAE and CRPS.

The complete PDCDM achieves the best performance across all metrics. Removing the temporal Transformer layer yields the most severe performance degradation, with MAE and CRPS increasing to 0.421 and 0.464, corresponding to error increases of 84.6% and 98.2%, respectively. The temporal Transformer leverages self-attention to construct temporal representations for each grid, adaptively capturing periodic patterns and long-range dependencies. Without it, the model loses its capacity to model temporal dynamics, confirming that temporal dependency modeling is critical for accurate forecasting.

Removing the spatial Transformer layer causes MAE and CRPS to rise to 0.331 and 0.362, increasing errors by 45.2% and 55.4% relative to PDCDM. The spatial Transformer learns inter-grid dependencies through global self-attention. Its removal reduces the model to processing grids independently, preventing it from capturing cross-regional flow propagation and spatial coordination effects.

Removing the POI feature fusion module increases MAE and CRPS to 0.269 and 0.261, representing error increases of 18.0% and 12.0% relative to PDCDM. POI features encode urban functional attributes to provide static semantic context. Without them, the model cannot capture function-driven flow dynamics, reducing its generalization capability across heterogeneous functional zones and limiting prediction interpretability.

5. Conclusions

This paper presents PDCDM, a probabilistic forecasting framework for urban grid population inflow. PDCDM pioneers the application of conditional diffusion models to grid-scale population forecasting, overcoming the limitations of traditional deterministic approaches. Beyond delivering accurate point forecasts, it quantifies prediction uncertainty to support risk-aware urban management decisions. In particular, the model represents predictive uncertainty adaptively through its probabilistic diffusion mechanism, as reflected in its lowest CRPS among all compared methods and in confidence intervals that contain most observations while widening during volatile periods.

The model incorporates a POI-enhanced conditional encoding framework that systematically integrates urban functional semantics into the diffusion process. By constructing multi-scale static POI feature representations, PDCDM effectively captures population aggregation patterns across diverse functional zones, enhancing prediction accuracy in spatially heterogeneous urban areas. Furthermore, a dual-dimensional attention mechanism decouples temporal and grid feature modeling, with separate Transformer-based pathways for temporal dependencies and inter-grid spatial correlations, while residual connections enable progressive feature fusion. Experiments on a real-world urban dataset demonstrate that PDCDM outperforms eight state-of-the-art probabilistic and deterministic forecasting methods.

Although the current experimental validation uses grid-level inflow data from Beijing owing to dataset availability, the PDCDM framework is in principle generalizable to other cities or countries: it depends only on grid-scale population inflow time series and the corresponding POI features, both of which are widely obtainable in urban environments. For regions lacking sufficient historical data, PDCDM could be extended through transfer learning, cross-city pretraining, or regional similarity analysis to leverage prior knowledge from data-rich areas. Such strategies could enhance the model’s adaptability and applicability across diverse urban contexts.

However, several limitations should be acknowledged. First, due to GPU memory constraints, a 5% grid sampling strategy was adopted for validation and testing, which may introduce minor sampling bias into the performance assessment. Second, the model relies on static POI data that do not capture temporal variations, and it excludes external factors such as weather conditions and calendar effects (e.g., holidays and special events). Its performance also depends on the accuracy and representativeness of the POI data; if the POI information deviates from the actual distribution of urban functions, prediction errors may arise. Incorporating these factors could further improve prediction accuracy. Future work will address these limitations through full-scale evaluation on complete grid sets and by extending the conditional framework to integrate dynamic environmental and temporal factors, thereby enhancing the model’s robustness and applicability in real-world urban management scenarios.

Author Contributions

Conceptualization, Zhiming Gui and Zhenji Gao; Formal analysis, Zhiming Gui and Yuanchao Zhong; Investigation, Zhiming Gui and Yuanchao Zhong; Methodology, Zhiming Gui and Yuanchao Zhong; Resources, Zhenji Gao; Software, Yuanchao Zhong; Supervision, Zhenji Gao; Validation, Yuanchao Zhong; Visualization, Yuanchao Zhong; Writing—original draft, Yuanchao Zhong; Writing—review and editing, Zhiming Gui. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the China National Geological Survey project “Research on the Tracking and Development Strategy of Geological Survey Information Technology” under grant number 20250009.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to legal and privacy restrictions. The data are not publicly available as they contain commercially sensitive information subject to confidentiality agreements.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| POI | Point of Interest |
| DDPM | Denoising Diffusion Probabilistic Models |
| MAE | Mean Absolute Error |
| RMSE | Root Mean Square Error |
| CRPS | Continuous Ranked Probability Score |

References

- Wolniak, R.; Stecuła, K. Artificial Intelligence in Smart Cities—Applications, Barriers, and Future Directions: A Review. Smart Cities 2024, 7, 1346–1389.

- Xie, P.; Li, T.; Liu, J.; Du, S.; Yang, X.; Zhang, J. Urban Flow Prediction from Spatiotemporal Data Using Machine Learning: A Survey. Inf. Fusion 2020, 59, 1–12.

- Zheng, Y.; Capra, L.; Wolfson, O.; Yang, H. Urban Computing: Concepts, Methodologies, and Applications. ACM Trans. Intell. Syst. Technol. 2014, 5, 38.

- Lim, B.; Zohren, S. Time-Series Forecasting with Deep Learning: A Survey. Philos. Trans. R. Soc. A 2021, 379, 20200209.

- Kontopoulou, V.I.; Panagopoulos, A.D.; Kakkos, I.; Matsopoulos, G.K. A Review of ARIMA vs. Machine Learning Approaches for Time Series Forecasting in Data Driven Networks. Future Internet 2023, 15, 255.

- Box, G.E.P.; Pierce, D.A. Distribution of Residual Autocorrelations in Autoregressive-Integrated Moving Average Time Series Models. J. Am. Stat. Assoc. 1970, 65, 1509–1526.

- Stock, J.H.; Watson, M.W. Vector Autoregressions. J. Econ. Perspect. 2001, 15, 101–115.

- Khodarahmi, M.; Maihami, V. A Review on Kalman Filter Models. Arch. Comput. Methods Eng. 2023, 30, 727–747.

- Lipton, Z.C.; Berkowitz, J.; Elkan, C. A Critical Review of Recurrent Neural Networks for Sequence Learning. arXiv 2015, arXiv:1506.00019.

- Graves, A. Long Short-Term Memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Graves, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45. ISBN 978-3-642-24797-2.

- Dey, R.; Salem, F.M. Gate-Variants of Gated Recurrent Unit (GRU) Neural Networks. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; pp. 1597–1600.

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. arXiv 2018, arXiv:1710.10903.

- Yu, B.; Yin, H.; Zhu, Z. Spatio-Temporal Graph Convolutional Networks: A Deep Learning Framework for Traffic Forecasting. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 3634–3640.

- Bond-Taylor, S.; Leach, A.; Long, Y.; Willcocks, C.G. Deep Generative Modelling: A Comparative Review of VAEs, GANs, Normalizing Flows, Energy-Based and Autoregressive Models. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7327–7347.

- Tyralis, H.; Papacharalampous, G. A Review of Predictive Uncertainty Estimation with Machine Learning. Artif. Intell. Rev. 2024, 57, 94.

- Wang, Q.; Wang, S.; Zhuang, D.; Koutsopoulos, H.; Zhao, J. Uncertainty Quantification of Spatiotemporal Travel Demand With Probabilistic Graph Neural Networks. IEEE Trans. Intell. Transp. Syst. 2024, 25, 8770–8781.

- Gao, X.; Jiang, X.; Haworth, J.; Zhuang, D.; Wang, S.; Chen, H.; Law, S. Uncertainty-Aware Probabilistic Graph Neural Networks for Road-Level Traffic Crash Prediction. Accid. Anal. Prev. 2024, 208, 107801.

- Hernandez-Lobato, J.M.; Adams, R. Probabilistic Backpropagation for Scalable Learning of Bayesian Neural Networks. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 June 2015; pp. 1861–1869.

- Goan, E.; Fookes, C. Bayesian Neural Networks: An Introduction and Survey. In Case Studies in Applied Bayesian Data Science: CIRM Jean-Morlet Chair, Fall 2018; Mengersen, K.L., Pudlo, P., Robert, C.P., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 45–87. ISBN 978-3-030-42553-1.

- Damianou, A.; Lawrence, N.D. Deep Gaussian Processes. In Proceedings of the Sixteenth International Conference on Artificial Intelligence and Statistics, Scottsdale, AZ, USA, 29 April–1 May 2013; pp. 207–215.

- Kingma, D.P.; Welling, M. An Introduction to Variational Autoencoders. Found. Trends® Mach. Learn. 2019, 12, 307–392.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

- Huang, D.; Song, X.; Fan, Z.; Jiang, R.; Shibasaki, R.; Zhang, Y.; Wang, H.; Kato, Y. A Variational Autoencoder Based Generative Model of Urban Human Mobility. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 425–430.

- Mo, Z.; Fu, Y.; Di, X. Quantifying Uncertainty In Traffic State Estimation Using Generative Adversarial Networks. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 2769–2774.

- Yang, Y.; Jin, M.; Wen, H.; Zhang, C.; Liang, Y.; Ma, L.; Wang, Y.; Liu, C.; Yang, B.; Xu, Z.; et al. A Survey on Diffusion Models for Time Series and Spatio-Temporal Data. arXiv 2024, arXiv:2404.18886.

- Nichol, A.Q.; Dhariwal, P. Improved Denoising Diffusion Probabilistic Models. In Proceedings of the 38th International Conference on Machine Learning, Virtual Event, 18–24 July 2021; pp. 8162–8171.

- Song, Y.; Ermon, S. Improved Techniques for Training Score-Based Generative Models. Adv. Neural Inf. Process. Syst. 2020, 33, 12438–12448.

- Song, Y.; Sohl-Dickstein, J.; Kingma, D.P.; Kumar, A.; Ermon, S.; Poole, B. Score-Based Generative Modeling through Stochastic Differential Equations. arXiv 2021, arXiv:2011.13456.

- Song, J.; Meng, C.; Ermon, S. Denoising Diffusion Implicit Models. arXiv 2022, arXiv:2010.02502.

- Biloš, M.; Rasul, K.; Schneider, A.; Nevmyvaka, Y.; Günnemann, S. Modeling Temporal Data as Continuous Functions with Stochastic Process Diffusion. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 2452–2470.

- Yuan, Y.; Ding, J.; Shao, C.; Jin, D.; Li, Y. Spatio-Temporal Diffusion Point Processes. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 3173–3184.

- Yuan, N.J.; Zheng, Y.; Xie, X.; Wang, Y.; Zheng, K.; Xiong, H. Discovering Urban Functional Zones Using Latent Activity Trajectories. IEEE Trans. Knowl. Data Eng. 2015, 27, 712–725.

- Wang, J.; Wu, J.; Wang, Z.; Gao, F.; Xiong, Z. Understanding Urban Dynamics via Context-Aware Tensor Factorization with Neighboring Regularization. IEEE Trans. Knowl. Data Eng. 2020, 32, 2269–2283.

- Psyllidis, A.; Gao, S.; Hu, Y.; Kim, E.-K.; McKenzie, G.; Purves, R.; Yuan, M.; Andris, C. Points of Interest (POI): A Commentary on the State of the Art, Challenges, and Prospects for the Future. Comput. Urban Sci. 2022, 2, 20.

- Nieves, J.J.; Stevens, F.R.; Gaughan, A.E.; Linard, C.; Sorichetta, A.; Hornby, G.; Patel, N.N.; Tatem, A.J. Examining the Correlates and Drivers of Human Population Distributions across Low- and Middle-Income Countries. J. R. Soc. Interface 2017, 14, 20170401.

- Xie, Z.; Yan, J. Kernel Density Estimation of Traffic Accidents in a Network Space. Comput. Environ. Urban Syst. 2008, 32, 396–406.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Song, Y.; Ermon, S. Generative Modeling by Estimating Gradients of the Data Distribution. Adv. Neural Inf. Process. Syst. 2019, 32.

- Kong, Z.; Ping, W.; Huang, J.; Zhao, K.; Catanzaro, B. DiffWave: A Versatile Diffusion Model for Audio Synthesis. arXiv 2021, arXiv:2009.09761.

- Alcaraz, J.L.; Strodthoff, N. Diffusion-Based Time Series Imputation and Forecasting with Structured State Space Models. Trans. Mach. Learn. Res. arXiv 2022, arXiv:2208.09399.

- Yuan, X.; Qiao, Y. Diffusion-TS: Interpretable Diffusion for General Time Series Generation. arXiv 2024, arXiv:2403.01742.

- Tashiro, Y.; Song, J.; Song, Y.; Ermon, S. CSDI: Conditional Score-Based Diffusion Models for Probabilistic Time Series Imputation. Adv. Neural Inf. Process. Syst. 2021, 34, 24804–24816.

- Nie, Y.; Nguyen, N.H.; Sinthong, P.; Kalagnanam, J. A Time Series Is Worth 64 Words: Long-Term Forecasting with Transformers. arXiv 2023, arXiv:2211.14730.

- Liu, Y.; Hu, T.; Zhang, H.; Wu, H.; Wang, S.; Ma, L.; Long, M. iTransformer: Inverted Transformers Are Effective for Time Series Forecasting. arXiv 2024, arXiv:2310.06625.

- Wang, S.; Wu, H.; Shi, X.; Hu, T.; Luo, H.; Ma, L.; Zhang, J.Y.; Zhou, J. TimeMixer: Decomposable Multiscale Mixing for Time Series Forecasting. arXiv 2024, arXiv:2405.14616.

- Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; Long, M. TimesNet: Temporal 2D-Variation Modeling for General Time Series Analysis. arXiv 2023, arXiv:2210.02186.

- Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. FEDformer: Frequency Enhanced Decomposed Transformer for Long-Term Series Forecasting. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 27268–27286.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).