Spatiotemporal Analysis of Urban Perception Using Multi-Year Street View Images and Deep Learning

Abstract

1. Introduction

2. Background and Related Work

2.1. Urban Spatial Perception Measurement Method

2.2. Street View Imagery in Urban Studies

2.3. Time Series Street View Research

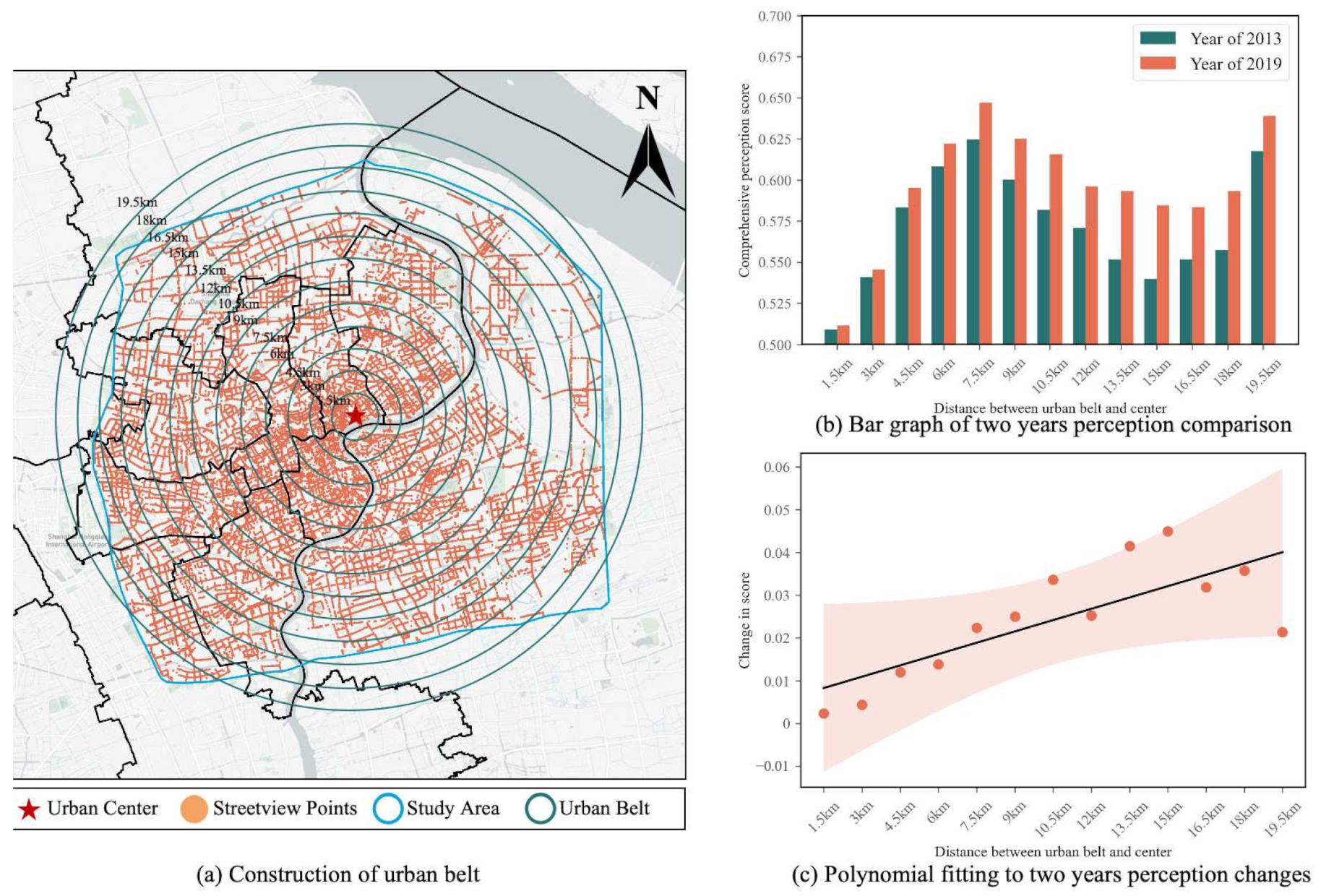

3. Study Area and Data Sources

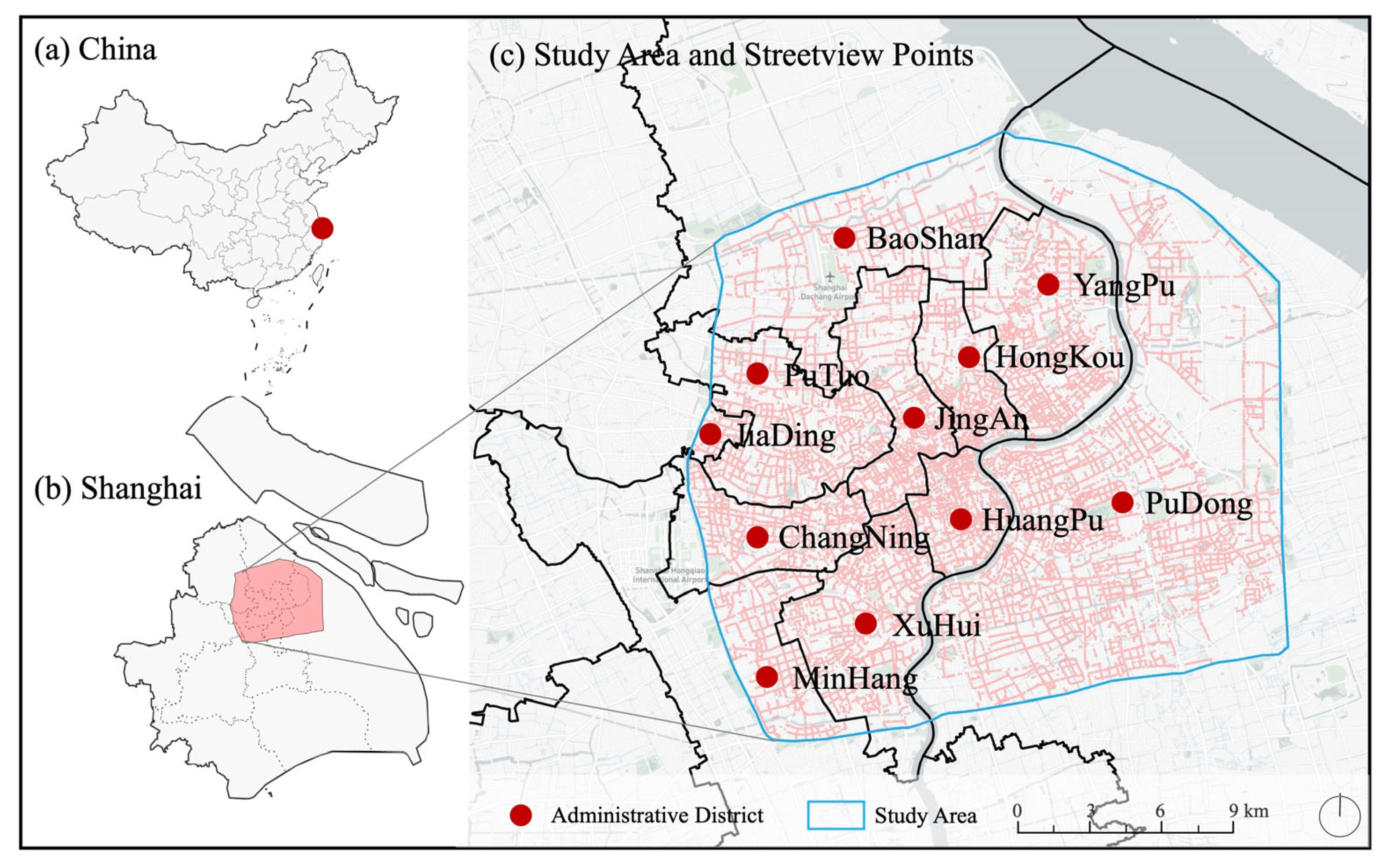

3.1. Study Area

3.2. MIT Place Pulse Dataset

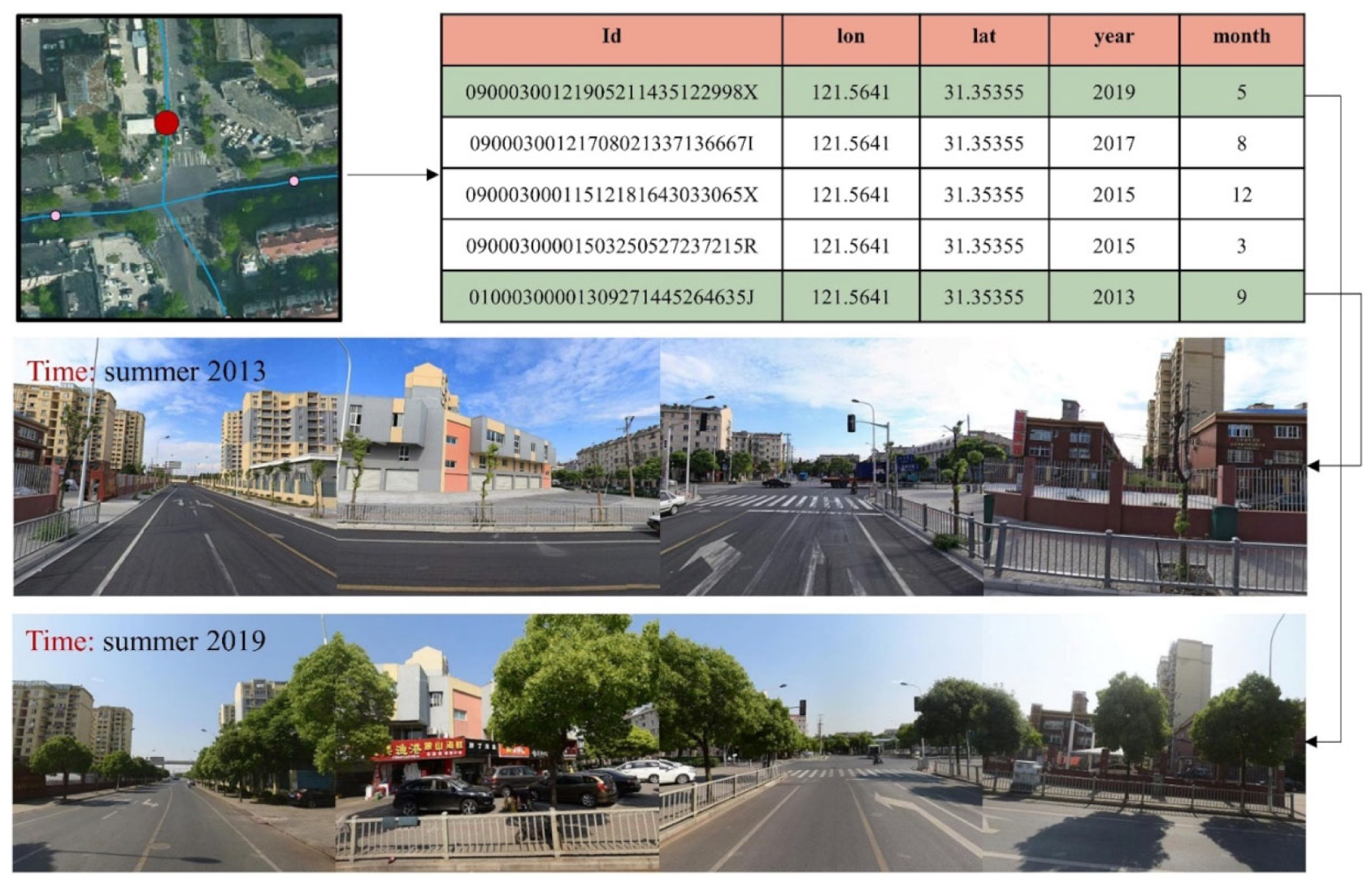

3.3. Historical Street View Data of Shanghai

4. Methodology

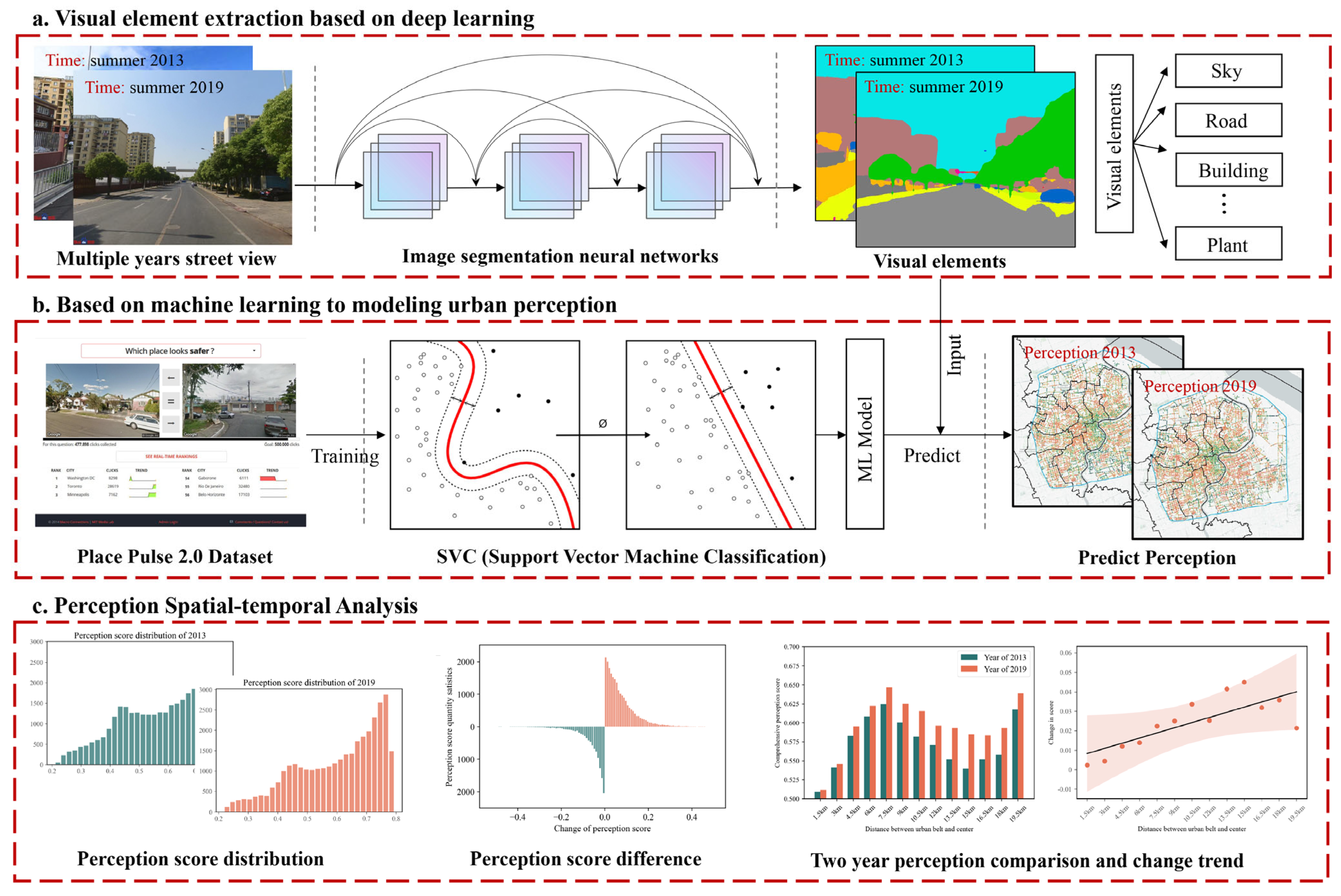

4.1. Visual Element Extraction Based on Deep Learning

4.2. MIT Place Pulse Dataset Preprocessing to Obtain a Single Scene Perception Score

4.3. Using Machine Learning to Model Urban Perception

5. Results

5.1. Average Urban Perception Prediction Results

5.2. Spatial and Temporal Changes in Street View Elements and Average Urban Perception

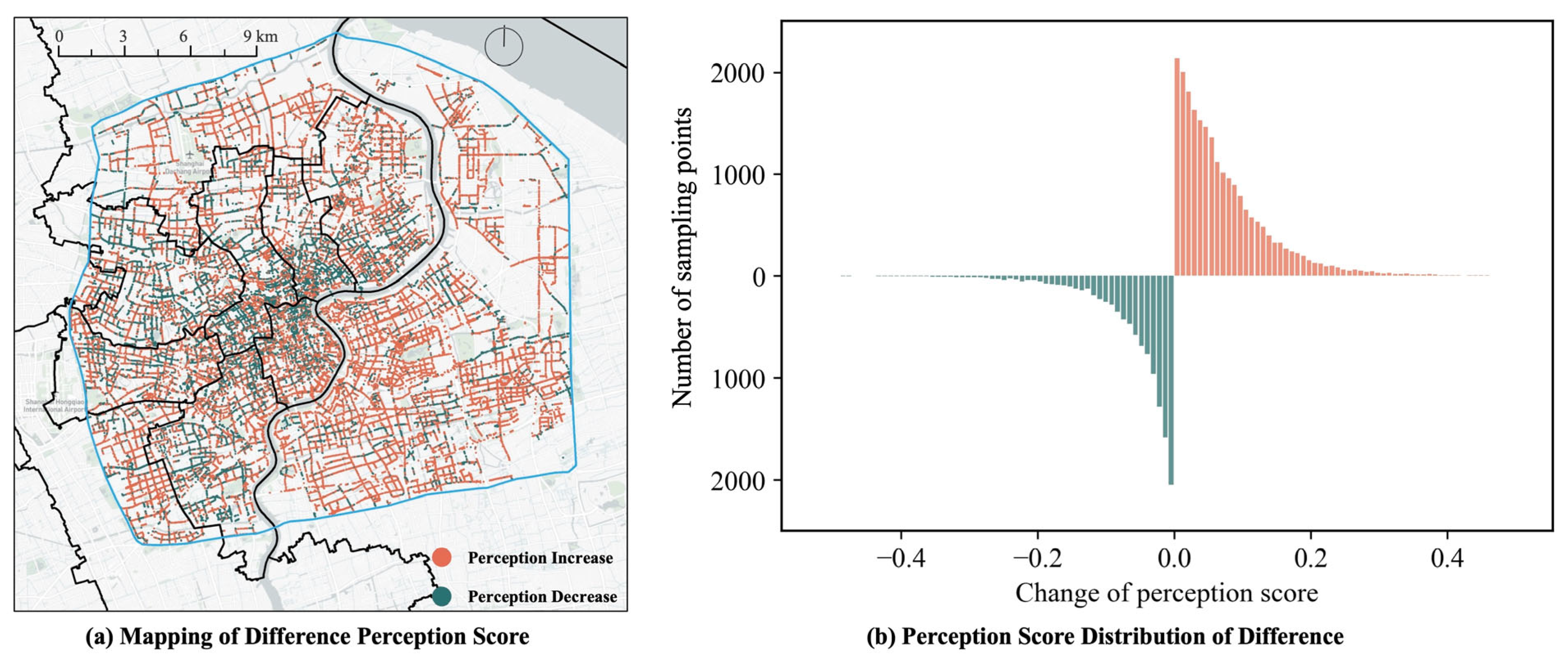

5.3. Recognition Results of Spatiotemporal Changes in Urban Perception

5.4. The Geographical Distribution of Urban Spatial Perception Enhancement

5.5. Correlation Analyses on Differences in Visual Elements and Their Effect on Perception

6. Discussion

6.1. Summary of the Research Results

6.2. The Scientific Contribution of the Practical Approach

6.3. Policy Recommendations Based on Urban Perception

6.3.1. Strengthening Perception-Oriented Policy Incentives

6.3.2. Integrating Natural Elements into Urban Design

6.3.3. Advancing Spatial Justice Through Urban Development

6.3.4. Establishing an Urban Ecological Corridor Network

6.3.5. Diversifying Land Use and Promoting Functional Integration

6.4. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Continent | Number of Cities | Number of Images |
|---|---|---|
| Asia | 7 | 11,342 |
| Africa | 3 | 5,069 |
| Australia | 2 | 6,082 |
| Europe | 22 | 38,636 |
| North America | 15 | 33,691 |
| South America | 7 | 16,168 |
| Total | 56 | 110,988 |

| Perception | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Safety | 0.6783 | 0.6847 | 0.6783 | 0.6737 |
| Lively | 0.6777 | 0.6784 | 0.6777 | 0.6777 |
| Beautiful | 0.7239 | 0.7342 | 0.7239 | 0.7186 |
| Wealthy | 0.6237 | 0.6295 | 0.6237 | 0.6191 |
| Depressing | 0.6190 | 0.6279 | 0.6190 | 0.6115 |
| Boring | 0.5912 | 0.5924 | 0.5912 | 0.5889 |
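In the table above, Accuracy and Recall coincide for every perception (for example, 0.6783 for Safety). That is exactly what support-weighted averaging produces: weighting each class's recall by its share of true samples gives the sum over classes of (n_c / n)(TP_c / n_c), which reduces to (sum of TP_c) / n, i.e. accuracy. The averaging scheme is not stated in this section, so the following is only a sketch under that assumption, written in plain Python rather than with a metrics library; `weighted_metrics` is a hypothetical helper, and the labels can be whatever class codes the classifier emits.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall and F1 (assumed averaging scheme)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    n = len(y_true)
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)      # number of true samples per class
    predicted = Counter(y_pred)    # number of predictions per class
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct predictions per class

    accuracy = sum(tp.values()) / n
    precision = recall = f1 = 0.0
    for c in labels:
        weight = support[c] / n    # class weight = its share of the true labels
        p_c = tp[c] / predicted[c] if predicted[c] else 0.0
        r_c = tp[c] / support[c] if support[c] else 0.0
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        precision += weight * p_c
        recall += weight * r_c     # sums to accuracy by construction
        f1 += weight * f_c
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

With `y_true = [0, 0, 0, 1]` and `y_pred = [0, 0, 1, 1]`, accuracy and weighted recall are both 0.75 (up to floating-point rounding) while weighted precision is 0.875, so the example shows the same Recall = Accuracy relationship seen in every row of the table.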

| Number | Visual Elements | 2013 Mean | 2019 Mean | 2013 Max | 2019 Max | 2013 Min | 2019 Min | 2013 S.D. | 2019 S.D. |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Road | 0.233 | 0.225 | 0.458 | 0.431 | 1.74E-06 | 6.94E-06 | 0.083 | 0.069 |
| 2 | Plant | 0.222 | 0.259 | 0.792 | 0.801 | 8.68E-07 | 8.68E-07 | 0.128 | 0.139 |
| 4 | Building | 0.192 | 0.184 | 0.879 | 0.838 | 5.21E-06 | 8.68E-07 | 0.129 | 0.134 |
| 5 | Sky | 0.177 | 0.173 | 0.548 | 0.584 | 8.68E-07 | 3.47E-06 | 0.107 | 0.109 |
| 6 | Sidewalk | 0.068 | 0.060 | 0.368 | 0.346 | 8.68E-07 | 8.68E-07 | 0.054 | 0.044 |
| 7 | Wall | 0.023 | 0.023 | 0.676 | 0.878 | 8.67E-07 | 8.68E-07 | 0.044 | 0.046 |
| 8 | Fence | 0.012 | 0.014 | 0.302 | 0.353 | 8.68E-07 | 8.68E-07 | 0.020 | 0.023 |
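The table above lists, per visual element, the mean, maximum, minimum, and standard deviation of per-image values for 2013 and 2019. Assuming each value is the fraction of an image's pixels that a semantic segmentation model assigns to that element (the definition is not spelled out in this section), the statistics could be produced roughly as in this sketch. The `CLASS_NAMES` mapping is hypothetical and would have to be replaced by the label set of the segmentation model actually used; masks are plain nested lists to keep the example dependency-free, and the sample standard deviation (`statistics.stdev`) is likewise an assumption.

```python
from statistics import mean, stdev

# Hypothetical class-ID -> element mapping; real IDs depend on the segmentation model's label set.
CLASS_NAMES = {0: "road", 1: "plant", 2: "building", 3: "sky"}


def element_proportions(mask):
    """Share of all pixels in one segmentation mask (list of rows of class IDs) per element."""
    total = sum(len(row) for row in mask)
    counts = {name: 0 for name in CLASS_NAMES.values()}
    for row in mask:
        for class_id in row:
            name = CLASS_NAMES.get(class_id)
            if name is not None:   # pixels of unmapped classes count only toward the total
                counts[name] += 1
    return {name: count / total for name, count in counts.items()}


def summarize(masks, element):
    """Mean, max, min and sample SD of one element's proportion across images (needs >= 2 masks)."""
    values = [element_proportions(m)[element] for m in masks]
    return {"mean": mean(values), "max": max(values), "min": min(values), "sd": stdev(values)}
```

Running `summarize` once over the 2013 masks and once over the 2019 masks for each element would yield one row shaped like those in the table.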

| Variable | Unstandardized B | Unstandardized Std. Error | Standardized Beta | t | p |
|---|---|---|---|---|---|
| (Constant) | 0.003 | 0 | - | 10.199 | 0.000 *** |
| Differ Tree | 0.659 | 0.006 | 0.571 | 113.341 | 0.000 *** |
| Differ Road | 0.126 | 0.004 | 0.086 | 30.236 | 0.000 *** |
| Differ Building | −0.087 | 0.006 | −0.068 | −15.219 | 0.000 *** |
| Differ Sky | −0.652 | 0.006 | −0.423 | −108.136 | 0.000 *** |
| Differ Sidewalk | −0.033 | 0.005 | −0.017 | −6.241 | 0.000 *** |
| Differ Plant | 0.061 | 0.008 | 0.019 | 7.656 | 0.000 *** |
| Differ Wall | −0.256 | 0.007 | −0.103 | −36.058 | 0.000 *** |
| Differ Fence | −0.07 | 0.009 | −0.019 | −7.485 | 0.000 *** |
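The regression table reports unstandardized coefficients (B) alongside standardized coefficients (Beta). In a linear regression, Beta_j = B_j × s(x_j) / s(y), where s is the standard deviation, which is why Differ Tree (B = 0.659, Beta = 0.571) and Differ Sky (B = −0.652, Beta = −0.423) have similar B magnitudes but clearly different Betas. The sketch below assumes ordinary least squares with an intercept, which the table's layout suggests but this section does not state; `ols` and `standardized_betas` are hypothetical helpers in plain Python, and they omit standard errors, t values, and p values.

```python
from statistics import stdev


def ols(X, y):
    """OLS with an intercept; X is a list of predictor rows. Returns [intercept, b1, ..., bk].

    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination with partial pivoting.
    """
    rows = [[1.0] + list(r) for r in X]      # prepend the intercept column
    k = len(rows[0])
    # Augmented normal-equation matrix [X'X | X'y]
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear or there are too few observations")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:                     # zero out this column in every other row
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def standardized_betas(coefs, X, y):
    """Beta_j = B_j * sd(x_j) / sd(y); the intercept has no standardized counterpart."""
    sd_y = stdev(y)
    return [b * stdev([row[j] for row in X]) / sd_y for j, b in enumerate(coefs[1:])]
```

Whether sample or population standard deviations are used makes no difference to Beta, because the n − 1 versus n factor appears in both s(x_j) and s(y) and cancels in the ratio.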

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhong, W.; Wang, L.; Han, X.; Gao, Z. Spatiotemporal Analysis of Urban Perception Using Multi-Year Street View Images and Deep Learning. ISPRS Int. J. Geo-Inf. 2025, 14, 390. https://doi.org/10.3390/ijgi14100390
