Abstract

Multispectral object detection is a fundamental task with a wide range of practical applications. In particular, combining visible (RGB) and infrared (IR) images offers complementary information that improves detection performance under different weather conditions. However, existing methods generally align features across modalities using the region proposals of two-stage detectors, which are often slow and unsuitable for large-scale applications. To overcome this challenge, we introduce a novel one-stage oriented detector for RGB-infrared object detection called the Layer-wise Cross-Modality calibration and Aggregation (LCMA) detector. LCMA employs a layer-wise strategy to achieve cross-modality alignment by using the proposed inter-modality spatial-reduction attention (ISRA). Moreover, we design a Gated Coupled Filter (GCF) in each layer to capture semantically meaningful features while ensuring that well-aligned foreground object information is obtained before the features are forwarded to the detection head. This removes the need for a region proposal step for alignment, enabling direct category and bounding-box predictions in a unified one-stage oriented detector. Extensive experiments on three challenging datasets demonstrate that the proposed LCMA outperforms state-of-the-art methods in terms of both accuracy and computational efficiency, which confirms the efficacy of our approach in exploiting multi-modality information for robust and efficient multispectral object detection.

1. Introduction

Object detection in aerial imagery is an essential computer vision task that entails identifying and localizing objects in images captured from high-altitude platforms, including satellites or unmanned aerial vehicles [1,2]. Although numerous object detection methods based on visible images have shown exceptional performance in well-lit conditions [3,4], they often encounter difficulties in accurately detecting objects in low-light or other challenging lighting conditions, restricting their deployment [5,6]. To this end, visible (RGB) and infrared (IR) imaging have emerged as a promising alternative for all-weather sensing applications, especially object detection [7,8,9,10].

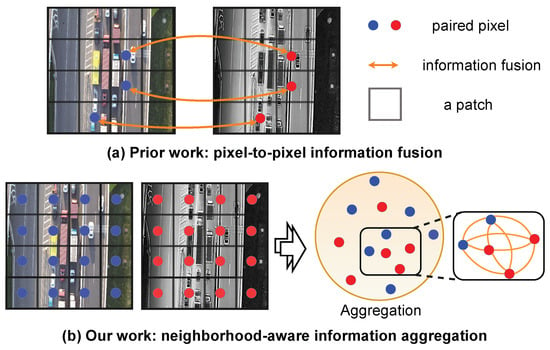

Existing methods assume that RGB-IR images are fully geometrically aligned and fuse features directly in a pixel-to-pixel manner. In practice, because different sensors are not fully synchronized, cross-modality images inevitably exhibit weak misalignment at the pixel level. Furthermore, pixel-to-pixel fusion [11,12,13] fails to consider the rich intra- and inter-modality contextual information, as illustrated in Figure 1.

Figure 1.

Comparison of various fusion methods (a,b).

Recently, many cross-modality object detection methods have relied on two-stage detectors [11,14,15,16,17] to deal with alignment. However, aligning cross-modality features in the region proposal stage incurs a significant computational cost, and the computational burden of the detection head scales linearly with the number of input modalities. Although the state-of-the-art two-stage TSFADet [15] achieves promising results and outperforms the AR-CNN [18] and CIAN [19] models, it still struggles to meet the growing demand for high-speed object detection. Thus, we pose the following question: Can we develop an efficient one-stage object detector for RGB-IR images?

To this end, we propose an efficient one-stage oriented detector for RGB-IR object detection: the layer-wise cross-modality calibration and aggregation (LCMA) detector. Because a weakly misaligned pixel typically corresponds to a pixel in its neighborhood in the other modality, LCMA calibrates weakly misaligned RGB-IR features in a neighborhood-aware manner using a layer-wise strategy; that is, the features are calibrated and aggregated layer by layer during the interaction process. To calibrate pixels at low cost, we embed inter-modality spatial-reduction attention (ISRA) into each layer, which calibrates multi-modality features by neighborhood-aware aggregation and reduces the computational burden by sharing cross-modal attention weights.

Moreover, the large amount of redundant information in RGB-IR images, e.g., RGB images with insufficient lighting, introduces many unnecessary computations. To tackle this issue, we design a Gated Coupled Filter (GCF) module embedded in each layer. It couples the multi-modality features to enable inter-channel communication and filters redundant information using an MLP, so that ISRA and GCF jointly ensure that the features fed to the detection head are calibrated and semantically informative. Combined with the layer-wise strategy, the proposed LCMA avoids sending all multi-modality features to a region extraction stage for alignment. Instead, it directly predicts the class and bounding box of each object, achieving efficient one-stage object detection. LCMA thus delivers high detection speed together with improved detection accuracy, making it suitable for practical applications.

The main contributions of this paper are as follows:

- We propose a one-stage RGB-IR object detection framework, aligning multispectral images with contextual information to improve performance and efficiency.

- An ISRA module is embedded in each layer to calibrate multi-modal images at the feature level through neighborhood-aware aggregation.

- Together, the calibration and filtering performed by the ISRA and GCF modules and the design of the detection head form an efficient one-stage oriented detector.

- Extensive experiments on three RGB-IR object detection benchmarks highlight LCMA’s superiority, demonstrating significant performance gains and increased speed.

2. Related Work

2.1. Oriented Object Detection

In recent years, aerial imagery has gained significant interest, leading to the development of benchmarks including DOTA [20] for large-scale aerial image object detection that accounts for direction. In particular, object detection methods based on the rotation framework [4,21,22] and OR-CNN [23] have demonstrated prominent performance by utilizing multiple anchor frames with varying angles and scales. Recently, researchers have proposed a range of revolutionary frameworks to augment the efficacy of detectors. Among these, there are R3Det [24], S2A-Net [25], and AO2-DETR [26], which focus on one-stage and anchor-free directional detection. To improve the efficiency and availability of detectors, we devise a two-stream rotation detector based on the one-stage detection framework.

A prevalent approach in this domain involves the utilization of rotation-based frameworks such as those presented in [4,21,22] and OR-CNN [23]. These methods leverage multiple anchor frames characterized by varying angles and scales, facilitating robust detection performance in scenarios where object orientation plays a significant role.

The quest for enhanced detection efficacy and real-time performance has spurred the development of revolutionary frameworks aimed at augmenting the capabilities of detectors [27]. Notable among these advancements are R3Det [24], S2A-Net [25], and AO2-DETR [26]. These methods diverge from traditional paradigms by focusing on one-stage and anchor-free directional detection, streamlining the detection process while maintaining high accuracy [28].

In response to the imperative for efficient and accessible multispectral object detection frameworks, we propose a novel two-stream rotation detector built upon the foundation of the one-stage detection framework. By capitalizing on the advantages of one-stage detection while integrating specialized mechanisms tailored to handle object orientation, our approach aims to balance detection accuracy, computational efficiency, and real-time performance in aerial imagery analysis.

2.2. Cross-Modality Fusion

Multispectral imaging has emerged as a crucial tool for analyzing complex scenes, leveraging both visible (RGB) and infrared (IR) modalities to capture diverse aspects of the environment [29,30]. The fusion of multispectral inputs plays a pivotal role in enhancing the interpretability and utility of the acquired data, with numerous methodologies devised to address various multi-modal configurations, including RGB-IR [31], RGB+Depth [32], RGB+Lidar [33], and multispectral pedestrian detection [34].

Recent advancements in multispectral object detection have yielded remarkable performance gains [27,35,36,37] across diverse modalities. However, existing methodologies primarily focus on pixel-to-pixel feature fusion and on weighting the importance of each modality [38,39,40], overlooking the inherent pixel-level misalignment in RGB-IR images, which can lead to inaccurate pixel-to-pixel correspondence [15]. Zhang et al. [18] were the first to address this alignment problem, introducing a region feature alignment (RFA) module that aligns features across modalities at the region level. Similarly, Xie et al. [14] proposed a methodology that projects features from both modalities into a latent space, enabling the capture of non-local cross-modality information and thereby mitigating the impact of misalignment. Additionally, MBNet [11] devised an illumination-aware method to address the inherent imbalance between RGB-IR modalities, thereby enhancing the robustness of multispectral object detection systems. Although these advances, which range from region-level alignment to latent-space projection, have improved the accuracy and robustness of multispectral object detection in real-world scenarios, pixel-level misalignment in RGB-IR images remains a key obstacle.

Despite these efforts, existing alignment frameworks are hampered by the two-stage detector design, which severely limits inference speed, particularly in large-scale applications. Moreover, pixel-to-pixel fusion is inadequate because it ignores the rich inter- and intra-modality contextual information around each pixel.

3. Methodology

3.1. Overall Architecture

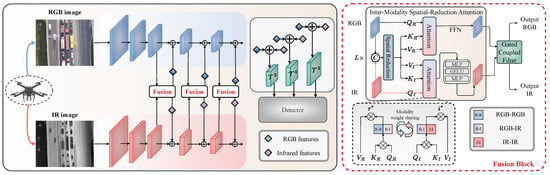

Our intention is to develop an efficient one-stage oriented detector that can accurately detect objects in RGB-IR images. Traditional two-stage RGB-IR detectors rely on proposals for cross-modality calibration. To address this, we propose a two-stream network that uses a layer-wise strategy with the ISRA module to align features efficiently across the two modalities. A GCF module follows it in each layer to filter out redundant or non-informative background information. As illustrated in Figure 2, the two-stream backbone takes RGB-IR images as inputs, denoted by and , respectively, where n represents the number of layers in the backbone. To facilitate multi-scale feature extraction, the FPN [41] is integrated following the backbone, with the dimensions of the three features remaining unchanged after extraction. The backbone comprises five layers in total, with the ISRA and GCF modules integrated into the final three layers of the two-stream feature extraction network as follows:

Figure 2.

The overall architecture of LCMA. Our model comprises a two-stream feature extraction network, an inter-modality spatial-reduction attention (ISRA) module, a Gated Coupled Filter (GCF) module, and a detector. The ISRA and GCF modules are combined into fusion blocks. ISRA leverages a cross-modal attention mechanism to aggregate and align features from the RGB-IR modalities. The GCF module filters out redundant multimodal features.

Following feature extraction, the network is further bolstered by feature reintegration, which enhances its ability to extract and integrate features across multiple modalities. Furthermore, the ISRA module uses neighborhood-aware aggregation by mining contextual information within and between modalities, as shown in Figure 1. The resulting outputs denoted as and , are generated. The powerful impact of this fusion on alignment is demonstrated in Figure 3, where the heterogeneity of features between the two modalities is effectively mitigated. To ensure detection speed, we utilize their summation as follows: , where denotes aggregated features. These features have the same granularity as the single-modality features. Then, the final three layers of features () are fused and input into detection head.

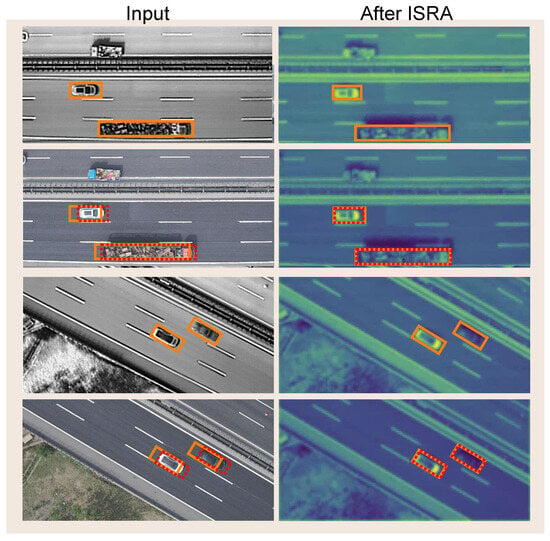

Figure 3.

Visualization of the cross-modality alignment. The first row shows the original input RGB-IR images, and the second row shows the RGB-IR features after ISRA.

3.2. Inter-Modality Spatial-Reduction Attention

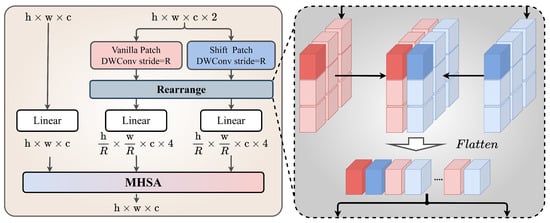

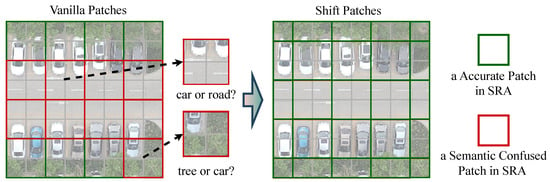

As illustrated in Figure 4, the proposed ISRA module comprises inter-modality spatial-reduction attention, and an inter-modality shared attention mechanism, introduced in this section. The ISRA block comprises L layers. We represent the input for the layer as for RGB and for IR, where . To identify the calibration position between RGB-IR features, we compute the attention map for each feature concerning features from the other modality. Specifically, we transform the RGB-IR features into a query denoted as , with representing the number of patches, where h and w denote the height and width of the input image. Next, we combine the RGB-IR features into a single entity denoted as , serving as both a key and a value , with . Consequently, we construct the similarity matrix for each modality, where . The calibration position is deduced by calculating the similarity difference between neighbouring RGB-IR pixels. On the other hand, inter-modality consensus features and intra-modality features are aggregated using spatial attention to aid in unimodal feature representation. Obviously, the transformers in cross-modality fusion lead to a significant computational burden. The cost can be simplified as: , where is a feed-forward network and is self-attention. Previous studies (e.g., SRA [42]) only consider patch-to-patch interaction, resulting in the insufficient expressive power of the model, as illustrated in Figure 5.

Figure 4.

Illustration of Spatial Reduction. The rearrange operation arranges the information of the original patch and the shift patch together by corresponding pixel points. MHSA stands for multi-head attention mechanism included in .

Figure 5.

Illustration of the shift patch operation used to compute self-attention in the ISRA architecture. The use of original, unaligned patches can lead to inaccurate semantic representations by separating target objects. To address this, we shift the patches by half a patch unit to the upper-left corner. As shown on the right, this results in an enhanced patch that better connects and encompasses the boundaries of the target.

To minimize computational overhead and find the calibration position between RGB-IR features, we apply a depthwise convolution with a stride of R to reduce the spatial dimension of the key and value matrices before the attention operation. We then shift the vanilla patch by half the patch size toward the upper left and aggregate the original and shifted patches, which together better describe the feature map for pixel calibration. The ISRA module is defined as:

where FFN is the feed-forward network, and its design refers to the architecture of the transformer.

Implementation details of : The main difference between and is the computation. Since the computation process of the key () and value () is the same, we mainly describe the computation process of for simplicity. The core components are shown in Figure 4, and the formula is defined as:

where denotes the reduced patch obtained by shifting the patch by half the patch size toward the upper-left corner to alleviate semantic confusion in the patch (as shown in Figure 5). We then use depthwise convolution (DWConv) to reduce the dimension of the two informative features and propose a rearrangement method to aggregate the corresponding pixels of the two features, as illustrated on the left of Figure 4.
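The shift, reduction, and rearrangement steps can be sketched on a single-channel feature map as follows. This is a minimal illustration under assumptions that are not taken from the paper: average pooling with stride R stands in for the learned depthwise convolution, the shifted patch grid wraps around at the border, and the reduced original and shifted maps are interleaved position by position. All function names are hypothetical.

```python
def shift_patch_grid_upper_left(fmap, offset):
    """Resample a 2D map so that the patch grid moves `offset` pixels up-left.

    Border pixels wrap around (an assumption made for simplicity).
    """
    h, w = len(fmap), len(fmap[0])
    return [[fmap[(i - offset) % h][(j - offset) % w] for j in range(w)]
            for i in range(h)]


def reduce_by_stride(fmap, r):
    """Average non-overlapping r x r patches (stand-in for a stride-r DWConv)."""
    h, w = len(fmap), len(fmap[0])
    out = []
    for i in range(0, h - r + 1, r):
        row = []
        for j in range(0, w - r + 1, r):
            patch = [fmap[i + di][j + dj] for di in range(r) for dj in range(r)]
            row.append(sum(patch) / (r * r))
        out.append(row)
    return out


def shifted_reduction_tokens(fmap, r):
    """Interleave reduced tokens from the original and half-patch-shifted grids."""
    original = reduce_by_stride(fmap, r)
    shifted = reduce_by_stride(shift_patch_grid_upper_left(fmap, r // 2), r)
    tokens = []
    for row_o, row_s in zip(original, shifted):
        for v_o, v_s in zip(row_o, row_s):
            tokens.extend([v_o, v_s])  # pair corresponding positions
    return tokens


# Toy 4 x 4 map with values 0..15 and reduction rate R = 2.
fmap = [[float(4 * i + j) for j in range(4)] for i in range(4)]
tokens = shifted_reduction_tokens(fmap, r=2)
print(len(tokens), tokens[:2])
```

With R = 2, the 16 input pixels become 4 original and 4 shifted tokens, so the key and value sequences are half the length of the unreduced input even though two views of each region are kept.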

Subsequently, the cross-attention calibration features are obtained by passing through a function and ,

where denotes softmax and is the scaling factor. The and . The cross-modality feature is calibrated and aggregated through ISRA, while the computational cost is greatly reduced.
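The attention step described here follows the standard scaled dot-product form, softmax(QK^T / sqrt(d)) V. The sketch below illustrates that form in plain Python, with queries taken from one modality and keys and values taken from a reduced token set. The function names and toy inputs are assumptions for illustration, not the authors' implementation.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d)) V for lists of token vectors."""
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[c] for w, v in zip(weights, values))
               for c in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Toy example: 2 RGB query tokens attend over 3 reduced key/value tokens.
q_rgb = [[1.0, 0.0], [0.0, 1.0]]
kv = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
calibrated = cross_attention(q_rgb, kv, kv)
```

Each output token is a convex combination of the value tokens, so a query that matches no key in particular (for example an all-zero query) simply receives the mean of the values.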

Analysis of ISRA mechanism. Four matrix modules can be naturally inferred when computing the cross-modality correlation matrix, as shown in the right of Figure 2. The attention formula is calculated as follows:

When calculating the cross-modality attention, we only calculate the and then obtain the attention matrix by transposing. The inter-modality correlation matrix modules are shared according to the cross-modality characteristics, which can reduce computational complexity. With the above settings, , the computational complexity of ISRA is:

where R is the spatial reduction rate of the ISRA. Compared to the standard transformer modules, these modules reduce computational complexity () to () while maintaining accuracy. ISRA is therefore well suited to handling multi-modality high-resolution feature maps with large . By adjusting the value of R, the amount of attention computation can be kept from growing with the number of input modalities.
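One way to read the sharing step is that the RGB-to-IR similarity matrix is computed once and the IR-to-RGB scores are taken from its transpose rather than recomputed. The sketch below illustrates only this reuse. It assumes that both directions use the same token embeddings, which is a simplification made here for illustration; the names are hypothetical.

```python
def similarity(a_tokens, b_tokens):
    """Dot-product similarity S[i][j] between token i of a and token j of b."""
    return [[sum(x * y for x, y in zip(a, b)) for b in b_tokens]
            for a in a_tokens]


def transpose(matrix):
    """Return the transpose of a rectangular list-of-lists matrix."""
    return [list(col) for col in zip(*matrix)]


rgb_tokens = [[1.0, 2.0], [0.0, 1.0]]
ir_tokens = [[3.0, 0.0], [1.0, 1.0], [0.0, 2.0]]

rgb_to_ir = similarity(rgb_tokens, ir_tokens)  # computed once
ir_to_rgb = transpose(rgb_to_ir)               # reused, not recomputed
```

Under this assumption the transposed matrix equals the similarity computed in the opposite direction, so one of the two cross-modal matrix products is saved.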

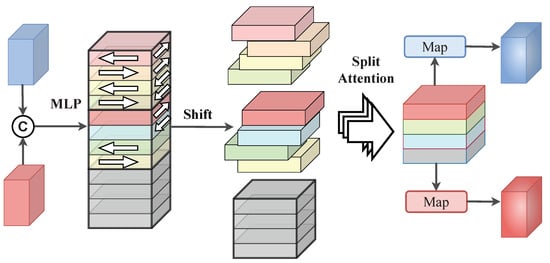

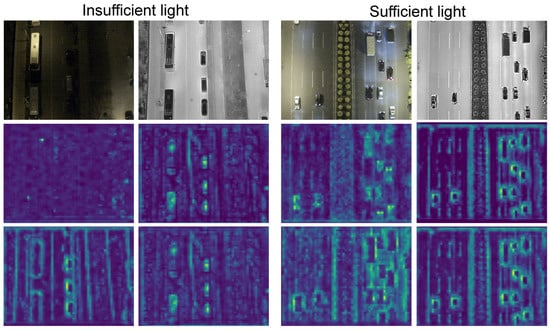

3.3. Gated Coupled Filter

Insufficient and redundant information in RGB-IR images, e.g., RGB images under partial illumination, often leads to unnecessary computation [43]. To this end, we propose the GCF module, which couples multi-modality features and employs an MLP to filter out redundant features (as shown in Figure 6). Given the features produced by ISRA as input, we first concatenate the RGB-IR features as consensus features and extend the channel to by an MLP, where the first two parts are then shifted in eight directions along the channel axis. Then, we leverage split-attention [44], which couples each modality to incorporate visual information, and the refined coupled features are calculated as:

Figure 6.

Illustration of gated coupled filter module.

In , the first two parts are shifted along the channel axis in eight directions. The extended feature mapping is divided into three parts along the channel dimension, as shown by the following formula:

Finally, we reverse to RGB-IR modalities as follows:

where is layer. Through the reverse operation, the is transformed to and , where the number of channels is the same as the dimension of the corresponding backbone. Hence, the GCF module filters out insufficient RGB-IR features by distributing adaptive weights across the entire feature map, thus providing refined features for the next layer and working collaboratively with the ISRA module.

3.4. One-Stage Oriented Detector

The annotation boxes in DroneVehicle include the object orientation. Inspired by CSL [45], we convert angle regression into a classification problem over the 180-degree angle range, which allows the network to learn the orientation of each object. We use only a one-stage oriented detection head to accelerate detection.

3.5. Loss Function

In the domain of multispectral object detection, the formulation of the multitask loss function during the training phase is articulated as follows:

where , , and are hyperparameters that balance the contributions of each respective loss term during training. Here, denotes the classification loss in focal loss [46], while represents the confidence loss, capturing the certainty of detections. The bounding-box loss, , is based on the SmoothL1 function, widely recognized for its robust performance in bounding-box regression [47] tasks:
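For reference, the Smooth L1 function is commonly defined piecewise: quadratic for small residuals and linear for large ones. The sketch below uses the common threshold parameter `beta` (default 1.0), which is an assumption rather than a setting reported here, and a generic weighted sum to mirror the multitask form described in the text. The function names are hypothetical.

```python
def smooth_l1(residual, beta=1.0):
    """Smooth L1: 0.5 * x^2 / beta if |x| < beta, else |x| - 0.5 * beta."""
    x = abs(residual)
    if x < beta:
        return 0.5 * x * x / beta
    return x - 0.5 * beta


def box_regression_loss(predicted, target, beta=1.0):
    """Mean Smooth L1 over the coordinates of one predicted box."""
    terms = [smooth_l1(p - t, beta) for p, t in zip(predicted, target)]
    return sum(terms) / len(terms)


def weighted_total(losses, weights):
    """Weighted sum of loss terms, one weight per term."""
    return sum(w * l for w, l in zip(weights, losses))
```

The quadratic region keeps gradients small near the target, while the linear region limits the influence of outlier boxes, which is the usual motivation for this loss in bounding-box regression.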

Furthermore, the circular symmetric loss is defined to handle the periodicity inherent in angular measurements, which is crucial for precise object localization:

Here, represents a periodic window function, r is the radius, and is the angle of the current bounding box. The periodic nature of is defined by for and , ensuring the function repeats every T interval to maintain angular resolution. This structured loss function formulation uniquely addresses the challenges of bounding-box localization, object classification, and confidence scoring within multispectral object detection. Prior research underscores the importance of such a comprehensive loss function in enhancing the performance of detection models.
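The window-based angle label can be illustrated with a short sketch. It assumes 180 one-degree bins, a Gaussian window, and a radius of 6 bins, which is a common configuration for circular smooth labels; these values and the function name are assumptions, not settings reported here.

```python
import math


def circular_smooth_label(angle_bin, num_bins=180, radius=6, sigma=2.0):
    """Encode an angle bin as a soft label that wraps around the period."""
    label = [0.0] * num_bins
    for k in range(num_bins):
        # Circular distance between bin k and the ground-truth bin.
        diff = abs(k - angle_bin)
        dist = min(diff, num_bins - diff)
        if dist < radius:
            label[k] = math.exp(-(dist ** 2) / (2 * sigma ** 2))
    return label


label = circular_smooth_label(angle_bin=179)
```

Because distance is measured around the circle, bins 178 and 0 receive the same weight for a ground-truth angle in bin 179, so a prediction just across the period boundary is penalized no more than one just inside it.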

4. Analysis of ISRA Module

A standard transformer module consists of a self-attention module and a feed-forward network (FFN). We stitch together the features of the two modalities to obtain an input feature of size , where , and its complexity is calculated as:

where r is the expansion ratio of the FFN, and and are the channels of the keys and values, respectively. More specifically, the transformers set and , and the cost can be simplified as:
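As a worked example of this kind of count, consider the widely used FLOP estimate for a standard transformer block on n tokens with d channels, key and value widths d_k and d_v, and FFN expansion ratio r. The symbols n and d and the choice r = 4 are assumed here for illustration and may differ from the notation and settings used in this paper.

$$
\begin{aligned}
\mathcal{O}(\mathrm{MSA}) &= 2nd(d_k + d_v) + n^2(d_k + d_v),\\
\mathcal{O}(\mathrm{FFN}) &= 2nd^2 r,\\
\mathcal{O}(\mathrm{block}) &= \mathcal{O}(\mathrm{MSA}) + \mathcal{O}(\mathrm{FFN}) = 12nd^2 + 2n^2 d \quad (d_k = d_v = d,\; r = 4).
\end{aligned}
$$

Under these assumptions, if two modalities with N tokens each are concatenated along the token axis, then n = 2N and the quadratic term grows from 2N^2 d to 8N^2 d, a fourfold increase over a single modality.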

Using vanilla transformers in cross-modality fusion leads to a significant computational burden. Previous studies have used vanilla spatial-reduction attention (SRA) patches (e.g., linear SRA [42]), which can split objects across patches and thus represent object information incorrectly. Moreover, they consider only patch-to-patch interaction, which limits the expressive power of the model because cross-patch connectivity is missing.

When calculating the cross-modality attention, we only calculate the cross-modality attention and then obtain the attention matrix by transposing. The inter-modality correlation matrix modules are shared according to the cross-modality characteristics, which can reduce computational complexity. With the above settings, the computational complexity of the ISRA module is as follows:

where R is the spatial reduction rate of the ISRA. Compared to the standard transformer modules, these modules reduce computational complexity ( to ) while maintaining accuracy. ISRA is designed to handle multi-modality high-resolution feature maps with large . By adjusting the value of R, the amount of attention calculated is not impacted by the number of input modalities.
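The effect of the reduction rate can be illustrated with a rough count of attention cost. The sketch below assumes that R is a per-side stride, so that keys and values shrink from n to n / R^2 tokens, and it counts only the two attention matrix products. These modeling choices, the toy sizes, and the function name are assumptions for illustration, not the paper's exact complexity formula.

```python
def attention_matmul_cost(num_queries, num_keys, channels):
    """Multiply-adds for Q K^T plus the product of attention weights and V."""
    return 2 * num_queries * num_keys * channels


n, d, R = 80 * 80, 256, 4  # tokens, channels, reduction rate (toy values)
full = attention_matmul_cost(n, n, d)
reduced = attention_matmul_cost(n, n // (R * R), d)
print(full // reduced)  # prints 16
```

In this simplified count the saving is exactly R^2, which is why a larger R can offset the extra tokens introduced by a second modality.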

5. Experiments and Results

5.1. Dataset and Implementation Details

DroneVehicle. The dataset [48] was captured by a drone and covers a variety of aerial scenes, including daytime, nighttime, and nighttime with artificial lighting. A total of 17,990 RGB-IR image pairs are used for training, 1469 pairs for validation, and 8980 pairs for testing. The dataset contains five vehicle categories that are visually similar to one another. The annotated boxes are oriented to facilitate fine-grained target identification.

LLVIP. This is a recently released paired RGB-IR pedestrian dataset [49] for low-light scenarios. The images were taken by surveillance cameras at 26 street locations, mostly in low-light or no-light conditions, and all image pairs are strictly aligned in space and time. The dataset contains 33,672 images, i.e., 16,836 image pairs.

FLIR. The FLIR ADAS dataset [50] is a collection of images for object detection that covers both day and night scenarios. The original release contained many misaligned image pairs, which hindered network training. A later, cleaned version removed these misaligned pairs, leaving 5142 precisely aligned image pairs, of which 4129 are used for training and 1013 for testing. The dataset contains three object categories: person, car, and bicycle.

OBB. The concept of Oriented Bounding Box (OBB) [51] has proven to be of paramount importance in a variety of applications ranging from collision detection to shape recognition. One of the fundamental aspects of an OBB is the definition of its rotation angle () characterized by the tuple , where conveys the angle of rotation. The DroneVehicle dataset provides the OBB information, while the LLVIP does not provide it.
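A common way to parameterize an oriented box is by its center, size, and rotation angle. The sketch below converts such a five-parameter box to its four corner points. The parameter order (cx, cy, w, h, theta), the use of degrees, and the rotation convention (counterclockwise in a y-up frame) are assumptions for illustration, since datasets differ in their angle definitions.

```python
import math


def obb_to_corners(cx, cy, w, h, theta_deg):
    """Return the four corners of a box rotated by theta_deg about its center."""
    t = math.radians(theta_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    half_offsets = [(-w / 2, -h / 2), (w / 2, -h / 2),
                    (w / 2, h / 2), (-w / 2, h / 2)]
    corners = []
    for dx, dy in half_offsets:
        # Rotate the corner offset, then translate to the box center.
        x = cx + dx * cos_t - dy * sin_t
        y = cy + dx * sin_t + dy * cos_t
        corners.append((x, y))
    return corners


corners = obb_to_corners(10.0, 10.0, 4.0, 2.0, 90.0)
```

Converting to corner points is convenient when computing overlaps between oriented boxes or when drawing them, because it does not depend on how the angle range is defined.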

Implementation Details. We evaluate the accuracy of RGB-IR object detection using the COCO-style metrics mAP@0.5 and mAP@.5:.95. The two-stream network, with its one-stage oriented detection head, is initialized with pre-trained weights. Preprocessing consists of two steps: first, all images (RGB and infrared) are resized to a fixed resolution of 640 × 640 pixels to ensure uniform processing; then, pixel values are normalized to the range [0, 1] by dividing by 255. The detector and FPN [41] are designed based on standard object detection frameworks. We employ mosaic data augmentation during training to improve network performance. Stochastic gradient descent (SGD) was used for optimization with a momentum of 0.850 and a weight decay of 0.001. The batch size was set to 8, and the network was trained for 50 epochs with a learning rate of 0.01, which was later adjusted to 0.03. Our experimental parameter settings are aligned with those of [15] to ensure experimental consistency. Training and testing were conducted on an RTX3090.

5.2. Comparisons with State-of-the-Art Methods

5.2.1. Comparison Methods

To validate the effectiveness of our proposed method, we conducted a rigorous series of experiments comparing its performance against nine unimodal object detectors (YOLOv5, RetinaNet [46], Faster RCNN [3], RoITransformer [22], S2ANet [25], Oriented R-CNN [23], ReDet [4], Gliding Vertex [21], and DETR [52]) and twelve multi-modality object detectors (GAFF [53], DCMNet [14], CSSA [54], ImageBind [55], ProbEn [56], Halfway Fusion [57], CIAN [19], AR-CNN [18], TSFADet [15], CALNet [58], DAIK [59], and C2Former [27]). Both quantitative and qualitative analyses were conducted on three distinct datasets. All data used in our analyses were obtained from publicly available resources and codes provided by the respective authors. Red and Blue indicate the top and second-top values, respectively.

5.2.2. DroneVehicle

We compared our method with competing methods on the test set of the DroneVehicle dataset (shown in Table 1). Since existing studies offer few cross-modal oriented detection methods for aerial scenes, we evaluated our method against eight other state-of-the-art methods: six single-modal and two multi-modal. Among the multispectral approaches, TSFADet achieved the highest detection accuracy (70.44% mAP). In contrast, our proposed detector achieved 77.19% mAP, an improvement of 6.75 percentage points over the state-of-the-art method.

Table 1.

Comprehensive experiments on the DroneVehicle dataset. We compare the LCMA method with single-modality and multi-modality detectors. For a fair comparison, the two-stream backbone of the multispectral detector is set to ResNet50 and DarkNet53. OBB indicates an oriented bounding box.

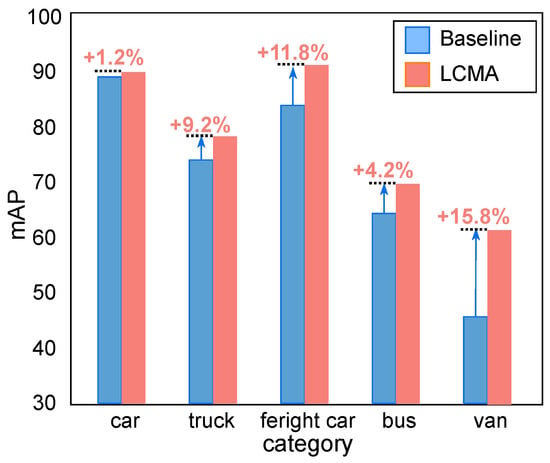

Moreover, our method outperforms state-of-the-art methods in all categories of the DroneVehicle dataset in terms of accuracy. Specifically, our method improves the accuracy of car, truck, freight car, bus, and van by 0.23%, 7.68%, 5.46%, 0.19%, and 8.26% on mAP@0.5, respectively, further demonstrating the usefulness of neighbor fusion for fine-grained target classification.

As shown in the validation results in Table 1, we evaluated our method against eleven other advanced methods, including five multi-modal methods. Methods that use RGB-IR images outperformed the single-modal methods. Among the multispectral methods, Cascade-TSFADet achieved the best prior detection accuracy (73.90% mAP). Our proposed LCMA detector achieves 78.28% mAP, which is superior to the other methods and improves on the state-of-the-art method by 4.38% mAP.

5.2.3. LLVIP & FLIR

Table 2 compares the performance of our LCMA-based approach with state-of-the-art methods on the LLVIP dataset. Specifically, our method achieves a 2.5% improvement in mAP@.5:.95 over DCMNet, highlighting the effectiveness of our approach in addressing the complexities of multispectral object detection. This enhancement reflects our method’s ability to improve the alignment between RGB-IR sensor data, even in the challenging low-light conditions characteristic of the LLVIP dataset. Recent one-stage detectors such as RetinaNet and CSSA streamline the detection pipeline by directly predicting object bounding boxes and class probabilities from feature maps. Our model also achieves a 0.6% improvement over the pre-trained ImageBind model [55], demonstrating the superiority of LCMA in multispectral object detection.

Table 2.

Comprehensive experiments on the LLVIP dataset. The last column is the input modality.

- FLIR

Table 3 presents the comparative results of LCMA-base against six benchmark algorithms, including YOLOv5, Faster R-CNN, Halfway Fusion, GAFF, ProbEn, and CSSA, on the FLIR dataset. Our method achieves state-of-the-art performance, outperforming existing approaches by 1.3% in mAP@0.5 and 0.9% in mAP@.5:.95. Notably, both Halfway Fusion and GAFF yield lower performance than unimodal infrared (IR) detection, indicating that suboptimal cross-modal fusion may introduce interference and degrade overall accuracy. This observation underscores that ineffective modality integration can compromise detection performance, even falling below that of single-modality systems.

Table 3.

Comparative experiments on the FLIR dataset.

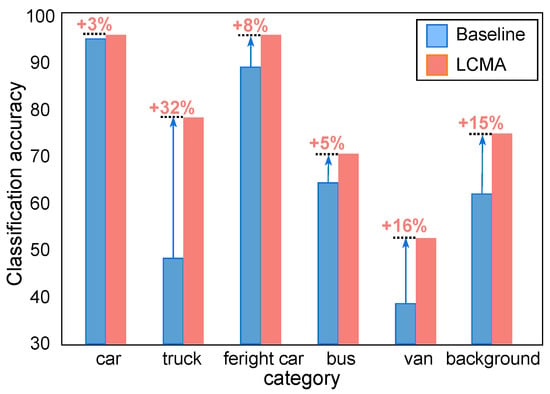

- Classification Performance

Conflicting information between different modalities can create semantic ambiguity and significantly affect the categorization of objects. To investigate the performance of LCMA in addressing cross-modal semantic conflicts, we compared the classification accuracy of LCMA and the baseline model on the DroneVehicle dataset (shown in Figure 7). This dataset consists of five classes of automobiles, presenting a significant challenge for fine-grained classification due to the substantial ambiguity among classes. The fine-grained classification further compounds the classification confusion problem. Figure 8 illustrates that the baseline model has difficulty classifying trucks and vans. In contrast, LCMA improves the classification accuracy of trucks and vans by 32% and 16%, respectively, and by 15.8% on average for each category.

Figure 7.

Classification accuracy histogram on the DroneVehicle val dataset. The baseline uses a simple concatenation-based fusion strategy.

Figure 8.

Classification accuracy histogram on the DroneVehicle val dataset. Comparison shows the improvement over the baseline two-stream detector.

5.2.4. Speed and Memory

To assess the efficiency and feasibility of the LCMA algorithm in practical applications, we compared the performance of various detectors on a single NVIDIA GV100 GPU, considering both speed and accuracy metrics. All detectors were tested under identical conditions with a batch size of 1 (as shown in Table 4). Among the multispectral detectors examined, LCMA-tiny achieved an accuracy of 73.89% mAP@0.5 at a speed of 43.86 frames per second (FPS), which is fast enough for video scenes. The full LCMA model achieves a 4.38% mAP gain over the best competing algorithm. As shown in Table 5, the LCMA variants differ in memory requirements and performance characteristics. The LCMA-ResNet model, which requires 199.5 M of memory, achieves a frame rate of 20.0 FPS and a mAP@0.5 of 76.35%. In contrast, the LCMA-tiny model uses only 82.8 M of memory and delivers a significantly higher FPS of 43.9, although with a slightly lower mAP@0.5 of 73.89%. These results highlight the trade-offs between model size, memory consumption, and performance, demonstrating the versatility of the LCMA models across different configurations.
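Frames per second and per-image latency are reciprocal at a batch size of 1, which makes the reported speeds easy to translate. The helper below is a small illustrative sketch with a hypothetical function name; the FPS values are those quoted above.

```python
def latency_ms(fps):
    """Per-image latency in milliseconds for a frame rate at batch size 1."""
    return 1000.0 / fps


for name, fps in [("LCMA-ResNet", 20.0), ("LCMA-tiny", 43.86)]:
    print(f"{name}: {latency_ms(fps):.1f} ms per image")
```

At these rates, LCMA-ResNet takes 50.0 ms per image and LCMA-tiny about 22.8 ms per image.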

Table 4.

Speed vs. accuracy on the DroneVehicle dataset using a single GV100 GPU with a batch size of 1. Speed is reported in FPS.

Table 5.

The performance of LCMA models under different configurations.
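
The speed comparison above relies on frames-per-second measurements at batch size 1. The following is a minimal sketch of how such a measurement could be taken, assuming the detector is exposed as a plain Python callable. `measure_fps`, `latency_ms`, and `dummy_detector` are hypothetical names, and the dummy workload merely stands in for a real model. On a GPU, the device would additionally need to be synchronized before each clock reading, which this CPU-only sketch omits.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object],
                frames: Sequence[object],
                warmup: int = 5) -> float:
    """Average frames per second when frames are processed one at a time."""
    for frame in frames[:warmup]:
        infer(frame)  # warm-up runs are excluded from the timing
    start = time.perf_counter()
    for frame in frames:
        infer(frame)  # batch size 1: a single frame per call
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


def latency_ms(fps: float) -> float:
    """Convert a frame rate into the average per-frame latency in milliseconds."""
    return 1000.0 / fps


def dummy_detector(frame):
    # Placeholder workload standing in for a real detector (assumption).
    return sum(frame)


frames = [list(range(1000)) for _ in range(50)]
fps = measure_fps(dummy_detector, frames)
print(f"{fps:.1f} FPS, {latency_ms(fps):.3f} ms per frame")
```

Under this conversion, the reported 43.86 FPS corresponds to roughly 22.8 ms per frame, and 20.0 FPS corresponds to 50 ms per frame.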

5.3. Ablation Studies

To analyze the effectiveness of the key components in detail, we perform ablation experiments on the DroneVehicle dataset. The role of each module in LCMA-base is verified by removing critical components from the full version. The baseline is LCMA-base without the fusion block.

5.3.1. Effectiveness of Inter-Modality Spatial-Reduction Attention

To verify the effectiveness of the neighborhood-aware aggregation of ISRA, we use an LCMA detector without a fusion block as the baseline and run all experiments under the same conditions. Rows 4 and 5 of Table 6 show that switching from SRA to ISRA improves accuracy by 0.53% mAP@0.5. In addition, we removed the GCF module to evaluate the ISRA module alone, and rows 2 and 3 show that this variant is 1.08% higher than Baseline+SRA. These two observations show that ISRA effectively improves performance and captures the attention in the patches.

Table 6.

Ablation study of the ISRA and GCF modules on the DroneVehicle dataset.

5.3.2. Effectiveness of Gated Coupled Filter

To verify the validity of the GCF module of LCMA, we first remove ISRA and construct LCMA w/o ISRA using only cross-modality neighborhood-aware aggregation. We use the same training and inference configuration as LCMA (shown in Table 6). Rows 1 and 2 show that LCMA w/o ISRA is 1.75% mAP@0.5 higher than the baseline, indicating that GCF remains effective without the help of cross-modality aggregation. Finally, we remove the GCF module, and rows 3 and 4 show that LCMA is 1.18% mAP@0.5 higher than LCMA w/o GCF. Thus, in conjunction with ISRA, GCF aggregates and calibrates multispectral features by filtering out uninformative information.

5.4. Quantitative Visualization

To quantitatively assess the feature alignment capability of the fusion block, we visualized its feature maps. Because the last three feature layers have low resolution, alignment performance cannot be discerned from them. We remove the fusion block from the first layer of features of the feature extraction network, i.e., the first-layer RGB and IR features to be fused, to better demonstrate the alignment performance. The experimental results are shown in Figure 3, where we select an input image that exhibits a weak misalignment problem and accurately align its features through the neighborhood-aware aggregation performed by ISRA. The results indicate that the neighborhood-aware fusion adopted by ISRA can solve the cross-modal weak misalignment problem by effectively combining contextual semantic information within and across modalities.

5.5. Comparing the Impact of Diverse Transformer Backbones

The results in Table 7 highlight the performance of various backbones on the DroneVehicle dataset, with DarkNet53 outperforming the others. Several factors contribute to DarkNet53’s superior performance:

Table 7.

Comparison of multiple backbones on the DroneVehicle dataset.

- One-Stage Oriented Detectors

DarkNet53 is well suited to one-stage detectors, which are often used in scenarios requiring real-time processing, such as drone-based applications. Its architecture is optimized for tasks involving oriented bounding boxes, making it efficient in handling drone data.

- Handling of Small Objects

The DroneVehicle dataset includes many small objects, which can be challenging to detect. Transformers like Vision Transformer (ViT) and its variants (PVT, PVTv2, Swin-T) often act similarly to low-pass filters. This characteristic can cause them to overlook small objects, leading to lower detection performance.

However, DarkNet53 maintains high resolution throughout its layers, improving its ability to detect small objects accurately. This advantage stems primarily from its architectural design: DarkNet53 retains high-resolution feature maps and performs multiscale feature fusion more effectively than transformer-based models, allowing for finer-grained spatial details to be preserved throughout the network—a critical property for detecting small objects in cluttered aerial scenes. Furthermore, its convolutional inductive bias favors local feature interactions, which proves particularly beneficial for capturing subtle appearance cues of small targets that are often lost in the global self-attention mechanisms of transformers.

- Efficiency

Transformers typically have slower inference speeds than networks like DarkNet53. The attention mechanisms in transformers can introduce latency and inconsistencies in real-time applications. DarkNet53’s design aligns more with efficient and fast inference, making it more suitable for practical deployment in drone-based detection systems.

In summary, while transformer-based backbones offer strong performance in many vision tasks, the specific requirements of oriented bounding box detection in drone data favor DarkNet53. Its tailored design for one-stage detectors, superior handling of small objects, and efficient inference make it the best choice among the evaluated backbones.
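
The low-pass behaviour mentioned in the small-object discussion above can be illustrated with a toy one-dimensional example. The following sketch is only an analogy under stated assumptions: a moving average stands in for a generic low-pass filter (it is not a model of self-attention), and the signal, window size, and function name are hypothetical.

```python
from typing import List


def moving_average(signal: List[float], window: int) -> List[float]:
    """Simple low-pass filter: mean over a centred window with zero padding."""
    half = window // 2
    n = len(signal)
    smoothed = []
    for i in range(n):
        total = 0.0
        for j in range(i - half, i + half + 1):
            if 0 <= j < n:
                total += signal[j]
        smoothed.append(total / window)
    return smoothed


# One row of a toy response map: a 1-pixel "small object" and a
# 9-pixel "large object", both with the same amplitude.
signal = [0.0] * 40
signal[5] = 1.0
for k in range(20, 29):
    signal[k] = 1.0

smoothed = moving_average(signal, window=5)
small_peak = max(smoothed[0:12])
large_peak = max(smoothed[15:35])
print(f"small object peak after filtering: {small_peak:.2f}")
print(f"large object peak after filtering: {large_peak:.2f}")
```

After filtering, the narrow response is attenuated to one fifth of its amplitude while the wide response keeps its full peak, which is the sense in which low-pass behaviour penalizes small objects more than large ones.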

5.6. Discussion

5.6.1. Visualization

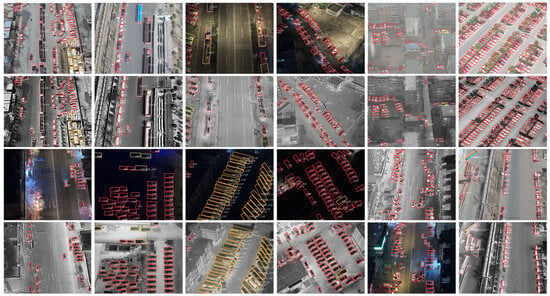

Figure 9 visualizes the detection results of LCMA on DroneVehicle, showing that LCMA produces accurate detections even for small objects. In addition, under varying lighting conditions, LCMA can adaptively filter out low-quality features in both the RGB and IR images, as shown in Figure 10. However, degraded multispectral image quality may significantly impair the model’s performance. Therefore, integrating low-light enhancement algorithms could improve LCMA’s performance under challenging lighting conditions. Future research may explore a unified framework that combines multispectral image enhancement and fusion techniques, further enhancing the robustness and versatility of LCMA.

Figure 9.

Visualization results of LCMA on DroneVehicle. Odd rows show RGB images and even rows show the corresponding IR images, so each RGB-IR pair appears as two vertically adjacent rows.

Figure 10.

Visualization of the feature maps of one channel in layer three before and after the fusion block. The first row is the input image, the second is the pre-fusion feature, and the third is the post-fusion feature.

5.6.2. Deployment Analysis

Our algorithm uses less memory than traditional transformer-based models, mainly due to the cross-modal weight-sharing mechanism, which reduces memory requirements by sharing weights across modalities. Additionally, the spatial-reduction attention mechanism minimizes the memory overhead associated with image operations, further enhancing the model’s efficiency. The GCF module also includes a gating mechanism that filters out irrelevant background features, which reduces the computational load and makes the model more suitable for deployment on UAV platforms. By focusing computational resources on relevant features, the gating mechanism improves accuracy and supports the model’s feasibility in resource-constrained environments.
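
The memory effect of spatial reduction can be made concrete with a small calculation. The following sketch assumes the mechanism follows the scheme commonly used in pyramid vision transformers, where keys and values are downsampled by a reduction ratio along each spatial side before attention is computed. The function name, the 80 × 80 token grid, and the ratio of 8 are hypothetical choices for illustration, not settings reported for LCMA.

```python
def attention_map_entries(height: int, width: int, reduction: int = 1) -> int:
    """Entries in one head's attention map for a height x width token grid.

    Queries keep the full resolution, while keys and values are assumed to be
    downsampled by `reduction` along each spatial side.
    """
    queries = height * width
    keys = (height // reduction) * (width // reduction)
    return queries * keys


# Hypothetical 80 x 80 token grid with and without spatial reduction.
full = attention_map_entries(80, 80)
reduced = attention_map_entries(80, 80, reduction=8)
saving = full // reduced

print(f"full attention map:    {full:,} entries")
print(f"reduced attention map: {reduced:,} entries")
print(f"reduction factor:      {saving}x")
```

Because the key and value sequence shrinks by the square of the reduction ratio, the attention map in this example is 64 times smaller than with full attention.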

As shown in Table 4, LCMA-tiny achieves 43.9 FPS, meeting the real-time requirements of target detection. This frame rate allows the model to operate effectively on UAVs, balancing high detection performance and efficient resource usage in practical deployment scenarios. We also compared configurations with different memory footprints, as presented in Table 5, where LCMA-tiny, with a capacity of 82.9 M, is suited to UAV platforms with limited resources. This memory requirement is relatively small compared with that of many models, making LCMA-tiny feasible for deployment on UAV devices.

5.6.3. Limitation

While the LCMA demonstrates promising results in accuracy and computational efficiency for RGB-infrared object detection, several limitations should be addressed in future work. First, although the one-stage design eliminates the need for region proposals and alignment steps, the layer-wise cross-modality calibration and spatial-reduction attention mechanisms may still be sensitive to variations in image quality and discrepancies between modalities, particularly under extreme lighting or weather conditions. These factors limit the generalization in real-world scenarios with highly variable environmental conditions. Furthermore, although LCMA performs well on benchmark datasets, its effectiveness in environments with heavy occlusions or complex background clutter, common in dynamic real-world settings, may require further refinement to improve performance under such challenging conditions.

In particular, the current framework struggles with scenarios where both modalities degrade simultaneously, such as when thermal diffusion blurs infrared object boundaries while RGB textures are lost under occlusion. Additionally, semantic conflicts arise when strong infrared backgrounds contradict RGB cues. Enhancing robustness to such cross-modal interference remains a key challenge.

6. Conclusions

In this paper, we propose an efficient one-stage oriented detector for RGB-IR object detection named LCMA. The core of the approach is a layer-wise strategy that enables cross-modality calibration and removes the need for region proposal steps to align cross-modality features. The method achieves cross-modality calibration and aggregation through the neighborhood-aware aggregation of ISRA, while the GCF filters out uninformative features, ensuring that informative and well-aligned features are fed into the detector. The experimental results demonstrate that LCMA improves both detection accuracy and processing speed.

Author Contributions

Conceptualization, X.H., Y.G. and C.T.; Methodology, X.H., T.Y. (Tingzhou Yan), H.L. and Y.G.; Software, X.H., T.Y. (Tingzhou Yan) and Z.L.; Validation, T.Y. (Tong Yang), H.L., Z.R., Z.L. and J.J.; Formal analysis, T.Y. (Tingzhou Yan), Y.G., Z.L. and C.T.; Investigation, T.Y. (Tong Yang), T.Y. (Tingzhou Yan), H.L., Z.L. and J.J.; Resources, T.Y. (Tingzhou Yan) and J.J.; Data curation, H.L., Z.R. and J.J.; Writing—original draft, T.Y. (Tong Yang), Z.L. and C.T.; Writing—review & editing, X.H., T.Y. (Tong Yang) and Y.G.; Supervision, Z.R.; Project administration, Z.R. and C.T.; Funding acquisition, Z.R. and C.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the fund of science project of scientific research project of CPECC Co., Ltd.: Research on key technologies of typical application of power engineering survey based on artificial intelligence under grant DG2-L03-2024, and in part by Natural Science Foundation of Shandong Province under grant ZR2021LZH001, and in part by the Natural Science Foundation of Jiangsu Province under grant BK20220075.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

Author Tong Yang was employed by the company Inner Mongolia Ecological Environment Big Data Co., Ltd., Hohhot, China. Authors Tingzhou Yan and Hongtao Li were employed by the company Hubei Communications Planning and Design Institute Co., Ltd., Wuhan, China. Authors Yang Ge and Zhenwen Ren were employed by the company China Energy Engineering Group Jiangsu Power Design Institute Co., Ltd., Nanjing, China. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.