Abstract

Low-illumination conditions significantly degrade the performance of vision-based garbage recognition systems in practical smart city applications. To address this issue, this paper presents a garbage recognition framework that combines low-light image enhancement with attention-guided feature learning. A multi-branch low-light enhancement network (MBLLEN) is employed as the enhancement backbone, and a Convolutional Block Attention Module (CBAM) is integrated to alleviate local over-enhancement and guide feature responses under uneven illumination. The enhanced images are then used as inputs for a deep learning-based garbage classification model. In addition, a lightweight encryption mechanism is considered at the system level to support secure data transmission in practical deployment scenarios. Experiments conducted on a self-collected low-light garbage dataset show that the proposed framework achieves improved image quality and recognition performance compared with baseline approaches. These results suggest that integrating low-light enhancement with attention-guided feature learning can be beneficial for garbage recognition tasks under challenging illumination conditions.

1. Introduction

Applying computer-vision-based garbage recognition to road sweepers, particularly in low-illumination environments, enables real-time, dynamic identification of road waste. The recognition results can be used to adjust key components such as the fan speed, sweep pan, and water spray volume, maintaining effective cleaning while reducing energy consumption. They also support adaptive control of the sweeper: its operation can be modified according to the type and coverage of the waste detected by real-time video sensors, which can improve cleaning efficiency and reduce water usage. In the context of smart city development, this technology not only raises the level of intelligence in urban cleaning operations but also offers more efficient and environmentally sustainable solutions for urban environmental management.

Garbage identification under low-illumination conditions presents several unique challenges, primarily due to the difficulty in acquiring clear visual information caused by insufficient lighting. Traditional image recognition systems are often ineffective in low-light environments because they rely on adequate lighting to capture clear and detailed images. When lighting is inadequate, images may become blurred, distorted, or even completely unrecognizable, significantly compromising the accuracy and reliability of garbage identification. Moreover, capturing color under low-light conditions presents a considerable challenge. Color plays a crucial role in distinguishing between different types of waste, but under dim lighting, the colors captured by the camera can become distorted, making the sorting process more difficult. Additionally, fluctuating lighting conditions, such as those caused by streetlights or passing vehicles, can further destabilize image quality, increasing the complexity of waste identification.

In recent years, the application of deep learning to waste classification has yielded promising results. For example, Zhang et al. (2022) developed a YOLO-based network that efficiently classifies five types of recyclable waste with both high speed and high accuracy [1,2]. Likewise, White et al. (2020) implemented convolutional neural networks in smart trash bins, classifying six types of waste with an accuracy of 97% [3,4]. Furthermore, Alrayes et al. (2023) employed a Vision Transformer for automatic waste sorting, obtaining an accuracy of 95.8% on the TrashNet dataset [5,6,7]. These approaches, however, typically rely on sufficient lighting for the model to learn the visual features of the waste effectively.

Despite these efforts, existing low-light garbage recognition approaches still face notable limitations. Many methods focus primarily on improving recognition models without explicitly addressing the degradation of visual features caused by uneven illumination and local overexposure. Conversely, low-light image enhancement methods are often evaluated independently of downstream recognition tasks, and their impact on semantic feature learning is not fully explored. As a result, the potential of coupling low-light enhancement with task-oriented feature attention remains insufficiently investigated in the context of garbage recognition.

In practice, as Xiao et al. (2020) point out [8,9,10], real-world scenarios often involve low-light conditions caused by changes in the time of day, weather, or a lack of artificial lighting. Smart waste detection devices operating in such environments struggle to capture usable images, which makes waste identification difficult. Chen et al. (2022) tested a recyclable garbage detection robot under different lighting conditions and found that low-light scenes increased the difficulty of garbage classification [11,12]. Gundupalli et al. (2016) likewise observed a decline in the recognition performance of their recyclable waste detection robot in low light [13,14]. Although Yadav et al. (2021) suggest that a high-quality camera with a low-light lens could mitigate these problems, it would significantly raise costs [15,16]. Adding artificial lighting is another option, but it is not always feasible and is limited to specific settings. Advances in deep learning offer an alternative: recognition models optimized for low-light conditions can be trained on large amounts of data to identify and sort waste in dim scenes. Neural networks trained in this way can be more robust to blurry or low-contrast images and can still extract meaningful information from them.

Given the above analysis, this work adopts MBLLEN as the low-light enhancement backbone due to its multi-branch architecture, which is effective in modeling diverse illumination patterns. However, MBLLEN may still introduce local over-enhancement in regions with uneven lighting, which can negatively affect downstream recognition. To address this issue, CBAM is incorporated to guide the network toward discriminative feature regions while suppressing over-enhanced or less informative responses. This combination enables the enhancement network to better serve the subsequent garbage recognition task under low-illumination conditions. Specifically, the main contributions of this paper can be summarized as follows:

- (1) We propose a waste detection model optimized for low-light conditions. The model extracts more information from low-light images, thereby improving recognition accuracy.

- (2) We introduce an attention mechanism into the multi-branch low-light enhancement network. By integrating multiple parallel enhancement pathways, the network captures image features more comprehensively and accurately, and feature fusion improves image clarity and visibility.

- (3) With the attention mechanism, the network attends to the salience of features in different channels while retaining the advantages of multi-branch parallelism, thereby improving its robustness and generalization.

The remainder of this paper is organized as follows. Section 2 reviews related work on garbage recognition and low-light image enhancement. Section 3 describes the proposed methodology, including the attention-enhanced low-light enhancement network and the garbage recognition model. Section 4 presents the experimental setup and evaluation results under low-illumination conditions. Section 5 discusses the experimental findings and limitations of the proposed approach. Finally, Section 6 concludes the paper and outlines directions for future work.

2. Related Works

2.1. Object Detection and Waste Detection Models

Waste identification is the automatic identification and classification of different types of waste using computer vision, a critical step toward environmental protection and more efficient resource recycling. Recent advances in deep learning have markedly improved the accuracy of waste identification. For example, Aishwarya used the YOLO algorithm to detect custom objects and developed a method for detecting non-biodegradable waste in garbage bins, reaching an accuracy of 75% for metal detection [17,18].

White et al. (2020) deployed convolutional neural networks in smart bins to classify six types of waste with 97% accuracy [3]. Alrayes et al. (2023) used a Vision Transformer for automatic garbage sorting, achieving 95.8% accuracy on the TrashNet dataset [5].

Although these methods have achieved promising performance in waste detection and classification, most of them are developed and evaluated under normal or well-controlled illumination conditions. Their performance may degrade significantly in low-light environments, where visual features are severely distorted. Moreover, these approaches primarily focus on improving detection architectures, without explicitly considering the impact of illumination degradation on feature representation.

2.2. Low-Illumination Image Detection Algorithm

Recent advances in deep learning, particularly convolutional neural networks (CNNs), are tackling the challenge of garbage recognition in low-light conditions. Through enhancing image quality and adapting models to variable lighting, these techniques strive to improve recognition accuracy, providing promising applications in environmental protection and resource recycling, even in complex environments.

Lore et al. pioneered the enhancement and denoising of low-light images using a deep autoencoder trained on synthetic data [19,20]. Building on Retinex theory, Chen et al. combined a decomposition network and an illumination adjustment network with the BM3D algorithm for noise removal, achieving end-to-end image enhancement. Ignatov et al. introduced content, color, and texture perceptual loss functions and applied a residual convolutional neural network to improve the quality of low-quality mobile phone photos [21,22].

Kim et al. designed a global enhancement network and a local enhancement network to improve the sharpness of both paired and unpaired images, using CycleGAN for the unpaired case [23,24]. Wang et al. were the first to use a global illumination estimate of the input image as a prior, feeding global and local features together into an encoder-decoder (codec) network for detail reconstruction, an approach that has become a common and effective image enhancement method [25,26]. Its main advantage is that it exploits the global features of the input image during reconstruction, which improves the quality of the result and helps meet the needs of specific application fields.

Jiang Zetao et al. performed the step-by-step reconstruction of global and local features, utilizing a multi-reconstruction variational autoencoder for progressive enhancement, which effectively improved the enhancement results [27]. Zhang et al. decomposed the image into light and reflection components, employing separate convolutional neural networks to adjust the light components and eliminate degradation effects on the reflection components, thereby achieving superior image enhancement [28,29].

To enhance image quality, Wang et al. proposed convolutional neural networks that integrate local and global features to estimate intermediate illumination images [30,31]; this approach alleviates overexposure and edge artifacts.

Because RAW images retain more information, including camera parameters, Chen et al. proposed an end-to-end fully convolutional network that reconstructs and enhances images through convolutional, pooling, and related layers, working directly from the original RAW input data to the final output image [32,33]. This approach avoids problems caused by flaws in the camera's built-in ISP algorithm and achieves better brightness enhancement and noise reduction. Ignatov et al. used a pyramid convolutional neural network to convert RAW images directly to RGB, but at a high cost in running time and resource consumption.

While these low-light image enhancement methods are effective in improving visual quality, they are typically evaluated using image-level metrics such as PSNR and SSIM [34,35,36]. Their influence on downstream high-level vision tasks, such as object recognition and garbage classification, is not always explicitly analyzed. As a result, it remains unclear how enhancement strategies can be optimally integrated with recognition models to improve semantic performance under low-illumination conditions.

In contrast to the above studies, this work focuses on coupling low-light image enhancement with garbage recognition in a unified framework. By introducing an attention mechanism into a multi-branch enhancement network, the proposed method aims to reduce the adverse effects of uneven illumination on feature learning, thereby improving recognition performance in low-light scenarios.

3. Methods

3.1. Multi-Channel Image Enhancement Algorithm Based on Attention Mechanism

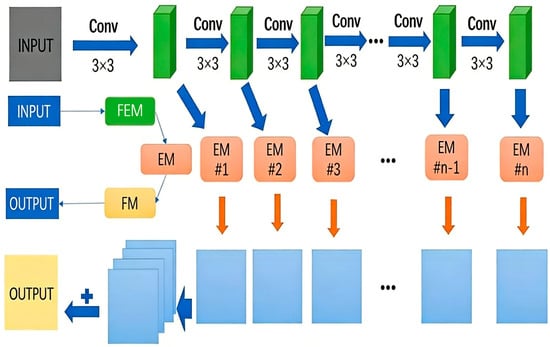

As shown in Figure 1, the MBLLEN consists of three modules: the feature extraction module (FEM), the enhancement module (EM), and the fusion module (FM).

Figure 1.

MBLLEN structure.

The FEM is a single-stream network with 10 convolutional layers, each using 3×3 kernels, a stride of 1, and ReLU nonlinearity, without pooling operations. Its input is a low-light color image. The output of each layer serves both as the input to the next layer and as the input to the corresponding subnet in the EM.

The EM comprises as many subnets as there are layers in the FEM. Each subnet takes the output of one FEM layer as input and generates a color image of the same size as the original low-light image. Each subnet has a symmetric structure with convolution and deconvolution operations. The first convolutional layer uses eight kernels with a stride of 1 and ReLU activation. It is followed by three convolutional and three deconvolutional layers, also with a stride of 1 and ReLU activation, with 16, 16, 16, 16, 8, and 3 kernels, respectively. During training, all subnets are trained simultaneously but independently, without sharing any parameters.

The FM takes all outputs of the EM and produces the final enhanced image. The EM outputs are concatenated along the color-channel dimension and merged with a 1×1 convolution, which computes a weighted sum with learnable weights.
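The fusion step amounts to a per-pixel linear map over the concatenated EM outputs. The following minimal NumPy sketch illustrates this, assuming K subnet outputs of shape (3, H, W) and a hypothetical weight matrix `weight` of shape (3, 3K) standing in for the learned 1×1 kernel; it is an illustration, not the MBLLEN training code.

```python
import numpy as np


def fuse_em_outputs(em_outputs, weight, bias):
    """Fuse K enhancement-subnet outputs with a 1x1 convolution.

    em_outputs: list of K arrays, each of shape (3, H, W).
    weight:     (3, 3 * K) array, one weight per (output, input) channel
                pair of the 1x1 kernel (hypothetical placeholder values).
    bias:       (3,) array.
    Returns an array of shape (3, H, W).
    """
    # Concatenate along the color-channel dimension -> (3K, H, W).
    stacked = np.concatenate(em_outputs, axis=0)
    # A 1x1 convolution is a weighted sum over all stacked channels,
    # computed independently at every pixel.
    fused = np.einsum("oc,chw->ohw", weight, stacked)
    return fused + bias[:, None, None]


# Usage: ten subnets (one per FEM layer) that all return the same image.
rng = np.random.default_rng(0)
img = rng.random((3, 4, 4))
outputs = [img] * 10
# Weights that average the ten copies channel by channel.
w = np.zeros((3, 30))
for k in range(10):
    w[:, 3 * k:3 * k + 3] = np.eye(3) / 10
fused = fuse_em_outputs(outputs, w, np.zeros(3))
print(np.allclose(fused, img))  # True
```

In the trained network the weights are learned, so the fusion can favor whichever subnets (and hence FEM depths) produce the most useful enhancement.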

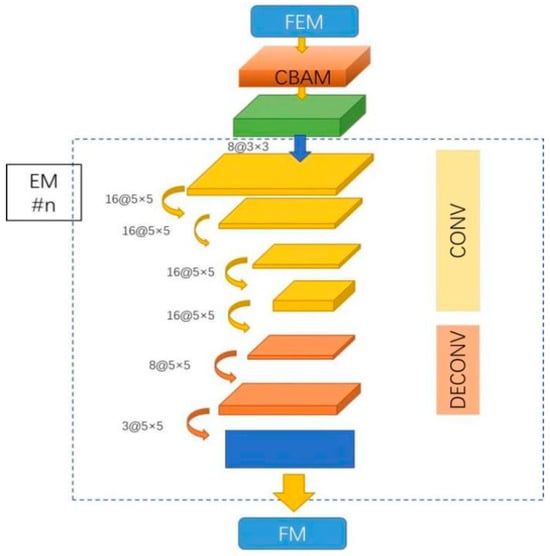

MBLLEN is a deep neural network designed for low-light image enhancement, capable of improving the quality of low-light images while preserving high levels of detail. However, the network may introduce local overexposure during the enhancement process, which can negatively affect the visual quality of the resulting image and reduce the performance of image-related tasks. To address this issue, we introduce an attention mechanism to the MBLLEN, which helps selectively focus on important features of the input image and adjust the processing across different regions, thereby improving the overall enhancement process.

The attention mechanism is intended to reduce fluctuations in pixel intensity in regions prone to overexposure, making the enhancement more localized and controlled. Specifically, the attention weights act on localized regions of the image, so that feature representations are adjusted in a targeted manner. This allows critical areas to be enhanced without unnecessarily altering other parts of the image.

Among the available attention mechanisms, such as self-attention, global spatial coordinate attention, and attention matrix CNNs, we chose CBAM for this study. CBAM is a lightweight yet effective attention mechanism that combines channel-wise and spatial attention. This dual strategy allows the model to focus on important features both along the channel dimension (which feature maps are relevant) and along the spatial dimension (which regions within the feature maps are relevant). By emphasizing these key features, CBAM helps MBLLEN enhance the image while limiting local overexposure. Because CBAM is lightweight, it adds little computational cost, which makes it well suited to low-light enhancement tasks where precise local adjustments are needed.

Incorporating the CBAM attention mechanism into the MBLLEN thus improves the model’s image processing capabilities, effectively mitigating the negative effects of local overexposure and producing better-enhanced images with improved visual quality and task performance.

In this paper, the CBAM attention module is introduced into the FEM feature extraction module to extract more accurate feature information and enhance the adaptability of the network under uneven illumination.

First, the CBAM attention module is defined. CBAM comprises two components: a channel attention mechanism, which helps the network model correlations between channels, and a spatial attention mechanism, which helps it model correlations between local areas. Accordingly, CBAM is implemented here as two submodules. The first is the channel attention module (CAM), which weights the features of different channels by learning inter-channel dependencies. A global average pooling operation (the squeeze operation) first produces a channel descriptor; an MLP with one hidden layer and ReLU nonlinearity then computes the channel weight vector, which is combined with the original input feature map (through element-wise multiplication and addition) to produce a channel-wise gated feature map. The second is the spatial attention module (SAM), which weights the features of local areas by learning spatial dependencies. As in CAM, a squeeze operation is applied first; however, instead of computing a single global context, SAM obtains a context vector for each pixel by applying two successive convolutions. This context vector is then weighted by an MLP, as in CAM, yielding explicit weights for the spatial features of each region. Finally, the outputs of the channel attention module and the spatial attention module are multiplied to obtain the CBAM output, which combines channel and spatial attention information.
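For reference, a minimal NumPy sketch of the standard CBAM formulation (Woo et al., 2018) follows. It applies channel attention (average- and max-pooled descriptors passed through a shared MLP) and then spatial attention (a k×k convolution over channel-pooled maps). This differs in detail from the variant described above, which combines the two module outputs by multiplication; the weights are random placeholders rather than trained parameters, and the 7×7 kernel and reduction ratio r = 2 are assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """Channel weights of shape (C, 1, 1) for features x of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) are the shared MLP weights,
    where r is the channel reduction ratio.
    """
    avg = x.mean(axis=(1, 2))  # squeeze by global average pooling -> (C,)
    mx = x.max(axis=(1, 2))    # squeeze by global max pooling -> (C,)

    def mlp(v):
        # Shared MLP with one hidden layer and ReLU.
        return w2 @ np.maximum(w1 @ v, 0.0)

    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]


def spatial_attention(x, kernel):
    """Spatial weights of shape (1, H, W); kernel has shape (2, k, k), k odd."""
    # Pool across channels: average map and max map, each (H, W).
    maps = np.stack([x.mean(axis=0), x.max(axis=0)])
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(maps, ((0, 0), (p, p), (p, p)))  # zero padding keeps H, W
    h, w = maps.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            # Sliding-window cross-correlation, as in a CNN conv layer.
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]


def cbam(x, w1, w2, kernel):
    """Standard CBAM: channel attention first, then spatial attention."""
    x = x * channel_attention(x, w1, w2)
    return x * spatial_attention(x, kernel)


# Usage with random placeholder weights.
rng = np.random.default_rng(0)
C, H, W, r = 8, 6, 6, 2
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
y = cbam(x, w1, w2, kernel)
print(y.shape)  # (8, 6, 6)
```

Because both gates lie in (0, 1), CBAM can only attenuate responses. This is consistent with its intended role here of suppressing over-enhanced or less informative features rather than amplifying them.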

The CBAM is then inserted between the FEM and the EM. After feature extraction in the FEM, the attention mechanism identifies crucial features, adjusts the focus across regions of different brightness, and reweights the input features, thereby improving the effectiveness of FEM feature extraction.

The processing steps are as follows:

- (1) The input image is passed through the FEM convolutions.

- (2) The FEM output is normalized.

- (3) The CBAM attention module extracts features from the normalized result.

- (4) The mean of the CBAM output is computed and subtracted from each of its elements, giving a mean-centered CBAM feature map.

- (5) The original FEM output, the normalized result, and the CBAM result are combined and output.
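The procedure above can be sketched for one FEM output as follows. The specifics are assumptions not stated in the text: normalization is taken to be per-channel standardization, the mean is computed over the whole CBAM output, and "combined" is implemented as channel concatenation. The attention module is passed in as a callable, so any CBAM implementation can be plugged in.

```python
import numpy as np


def normalize(feat, eps=1e-5):
    """Per-channel zero-mean, unit-variance normalization (assumed form)."""
    mean = feat.mean(axis=(1, 2), keepdims=True)
    std = feat.std(axis=(1, 2), keepdims=True)
    return (feat - mean) / (std + eps)


def fem_cbam_block(fem_out, attention):
    """Normalize, attend, center, and combine one FEM output of shape (C, H, W).

    attention: callable mapping (C, H, W) -> (C, H, W), e.g. a CBAM.
    Returns the combined features of shape (3C, H, W).
    """
    normed = normalize(fem_out)          # normalize the FEM output
    att = attention(normed)              # attention on the normalized result
    att_centered = att - att.mean()      # subtract the mean (assumed global)
    # Combine the three results by channel concatenation (assumed).
    return np.concatenate([fem_out, normed, att_centered], axis=0)


# Usage with a hypothetical stand-in for CBAM (simple sigmoid self-gating).
def gate(f):
    return f / (1.0 + np.exp(-f))


rng = np.random.default_rng(1)
fem_out = rng.random((4, 5, 5))
combined = fem_cbam_block(fem_out, gate)
print(combined.shape)  # (12, 5, 5)
```

Keeping the raw FEM output alongside the normalized and attended versions lets the downstream EM subnet fall back on unmodified features if the attention branch suppresses something useful.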

The structure of the multi-branch low-light enhancement network based on the attention mechanism is shown in Figure 2.

Figure 2.

Network structure for introducing the attention mechanism.

3.2. Data Preparation

Images from the PASCAL Visual Object Classes (PASCAL VOC) dataset are used to generate a large number of synthetic low-light images for training the low-light enhancement network. Compared with normal-light images, low-light images have lower brightness and more noise. To simulate brightness degradation, each channel of a normally exposed image is adjusted using gamma correction. In addition, Poisson noise with a peak value of 200 is added to simulate sensor noise under low-illumination conditions. The same low-light image generation procedure is applied consistently throughout the experiments.
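The degradation described above can be sketched in NumPy as follows. The gamma value is not given in the text, so `gamma=2.5` is a placeholder; a single gamma is applied to all channels here, although a per-channel value could be drawn instead. The Poisson step scales intensities to a peak of 200 "photon counts", samples, and rescales, so a lower peak produces stronger noise.

```python
import numpy as np


def synthesize_low_light(img, gamma=2.5, peak=200.0, rng=None):
    """Darken a normally exposed RGB image and add Poisson noise.

    img:   float array in [0, 1], shape (H, W, 3).
    gamma: exponent > 1 applied to every channel (placeholder value).
    peak:  Poisson peak value (200 in the text); lower means noisier.
    """
    rng = np.random.default_rng() if rng is None else rng
    dark = np.power(img, gamma)  # gamma > 1 darkens values in [0, 1]
    # Scale to counts, sample shot noise, scale back to [0, 1].
    noisy = rng.poisson(dark * peak) / peak
    return np.clip(noisy, 0.0, 1.0)


# Usage on a random "normal-light" image.
rng = np.random.default_rng(42)
clean = rng.uniform(0.2, 1.0, size=(32, 32, 3))
low = synthesize_low_light(clean, rng=rng)
print(low.mean() < clean.mean())  # True
```

Pairing each synthetic image with its clean original yields the supervised (low-light, normal-light) pairs needed to train the enhancement network.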

The self-collected TrashBig dataset is used for garbage recognition and contains eight categories: battery, cardboard, drugs, glass, metal, paper, plastic, and vegetable. The dataset consists of approximately 4000 images for training and 480 images for validation, with around 60 images per category in the validation set. No single category dominates the dataset, which helps reduce category bias during training and evaluation.

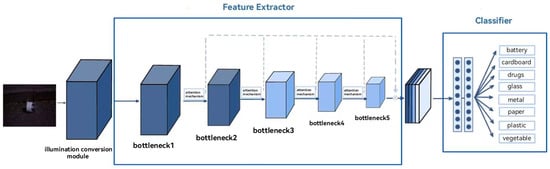

3.3. Model

The network model constructed in this paper consists of two parts: a feature extractor and a classifier. The overall structure is shown in Figure 3. The feature extractor uses ResNet101 as its backbone, which is organized into five stages (referred to here as bottlenecks). Attention modules are added to different bottlenecks, and the features extracted by these modules are integrated through feature fusion (shown by the broken line in Figure 3) to extract feature information from the input image:

$$F = E(I)$$

where $I$ is the input image, $F$ is the extracted feature, and $E$ stands for the feature extractor. The classifier, consisting of two fully connected layers and a Softmax layer, classifies the extracted features to obtain the final score of the image for each category:

$$S = C(F)$$

where $S$ is the vector of category scores and $C$ stands for the classifier.
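A minimal NumPy sketch of the classifier head (two fully connected layers followed by Softmax) is given below. The ReLU between the two layers, the hidden width of 512, and the 2048-dimensional input (the pooled output size of the last ResNet101 stage) are assumptions. With multi-bottleneck fusion the actual feature size may differ, and the weights are random placeholders.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def classify(features, w1, b1, w2, b2):
    """Two fully connected layers followed by Softmax.

    features: (N, D) pooled features from the extractor.
    Returns (N, K) per-category scores that sum to 1 for each image.
    """
    hidden = np.maximum(features @ w1 + b1, 0.0)  # FC + ReLU (activation assumed)
    return softmax(hidden @ w2 + b2)              # FC + Softmax


# Usage: 2 images, 2048-d features, 8 TrashBig categories.
rng = np.random.default_rng(0)
feats = rng.standard_normal((2, 2048))
w1, b1 = rng.standard_normal((2048, 512)) * 0.01, np.zeros(512)
w2, b2 = rng.standard_normal((512, 8)) * 0.01, np.zeros(8)
scores = classify(feats, w1, b1, w2, b2)
print(scores.shape)  # (2, 8)
```

The predicted category for each image is then the index of its largest score, e.g. `scores.argmax(axis=1)`.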

Figure 3.

Overall architecture of the proposed garbage recognition framework.

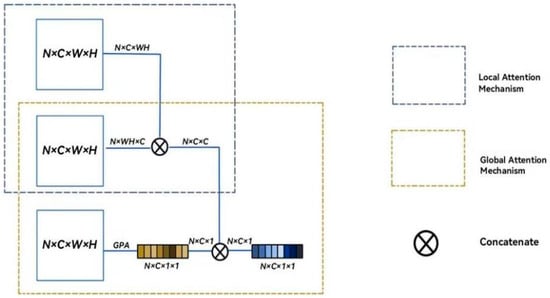

The concept of the attention mechanism is inspired by studies on human vision, where humans selectively focus on specific areas of the retina based on immediate needs, allocating limited processing resources to the most important regions. In the context of waste image classification, there can be considerable variation in feature representation within the same waste category, which can hinder accurate classification. To address this, it is essential to focus precisely on the critical areas of the image. Inspired by this idea, we incorporate an attention mechanism module that directs the network to focus on the feature regions most relevant for classification, thereby improving the feature extraction process. The specific structure of the attention mechanism is illustrated in Figure 4.

Figure 4.

Schematic diagram of the attention mechanism used in this study. N, C, H, and W denote the batch size, channel number, and spatial dimensions (height and width) of the feature map, respectively.

Here, we denote the features extracted from each bottleneck by $X_i \in \mathbb{R}^{N \times C \times H \times W}$. The local attention module is constructed using the Gram matrix: $X_i$ is reshaped to $\hat{X}_i \in \mathbb{R}^{N \times C \times HW}$ and multiplied by its transpose to obtain a local feature of size $N \times C \times C$:

$$L_i = \hat{X}_i \hat{X}_i^{\top}$$

This operation captures the correlations between elements of the feature map, amplifying large responses and suppressing small ones. In other words, it highlights the importance of each part, making features that help determine the category more prominent while suppressing features that interfere with the judgment.

Then, global average pooling (GAP) is applied to $X_i$, which summarizes the information in $X_i$ over all spatial positions and yields a global feature of size $N \times C \times 1 \times 1$:

$$G_i = \mathrm{GAP}(X_i)$$

$G_i$ captures the dominant response of each channel of $X_i$ and serves as the global attention component. The local and global features are then fused to obtain the overall feature after attention:

$$Y_i = W \ast \mathrm{Concat}(L_i, G_i) + b$$

where $W$ is a $1 \times 1$ convolution kernel, $b$ is the bias, and Concat denotes the fusion operation, which uses feature information at different scales to avoid information loss and further improve the robustness of the model.
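The local (Gram-matrix) and global (GAP) branches and their 1×1 convolutional fusion can be sketched in NumPy as below. The text does not specify how the C×C Gram matrix and the 1×1 pooled vector are brought to a common shape before concatenation. This sketch therefore makes two assumptions: the Gram matrix is row-normalized with softmax and used to reweight the channels of the original features, and the GAP vector is broadcast back to H×W. The weights are random placeholders.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def gram_global_attention(x, w, b):
    """x: (N, C, H, W) bottleneck features; w: (C_out, 2C); b: (C_out,).

    Returns fused features of shape (N, C_out, H, W).
    """
    n, c, h, wd = x.shape
    flat = x.reshape(n, c, h * wd)               # (N, C, HW)
    gram = flat @ flat.transpose(0, 2, 1)        # (N, C, C) channel correlations
    # Assumption: softmax-normalize each Gram row and use it to mix channels.
    local = (softmax(gram, axis=-1) @ flat).reshape(n, c, h, wd)
    # Global branch: GAP -> (N, C, 1, 1), broadcast to H x W for concatenation.
    glob = np.broadcast_to(x.mean(axis=(2, 3), keepdims=True), x.shape)
    cat = np.concatenate([local, glob], axis=1)  # (N, 2C, H, W)
    # 1x1 convolution: per-pixel linear map over channels, plus bias.
    return np.einsum("oc,nchw->nohw", w, cat) + b[None, :, None, None]


# Usage with random placeholder weights.
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 16, 7, 7))
w = rng.standard_normal((16, 32)) * 0.1
y = gram_global_attention(x, w, np.zeros(16))
print(y.shape)  # (2, 16, 7, 7)
```

As a sanity check, a 1×1 kernel that selects only the global half of the concatenated channels reproduces the GAP features exactly, which confirms that the concatenation order is local first, then global.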

4. Experimental Validation

4.1. Image Evaluation Indicators

- (1) Improved structural similarity

The original structural similarity index measures the similarity between two images in terms of brightness, contrast, and structure. The structural similarity between images X and Y is calculated as follows:

$$\mathrm{SSIM}(X,Y) = \frac{2\mu_X\mu_Y + C_1}{\mu_X^2 + \mu_Y^2 + C_1} \cdot \frac{2\sigma_X\sigma_Y + C_2}{\sigma_X^2 + \sigma_Y^2 + C_2} \cdot \frac{\sigma_{XY} + C_3}{\sigma_X\sigma_Y + C_3} \qquad (1)$$

where $\mu_X$ and $\mu_Y$ are the mean pixel values of images X and Y, $\sigma_X$ and $\sigma_Y$ are the standard deviations of their pixel values, $\sigma_{XY}$ is the covariance between the two images, and $C_1$, $C_2$, and $C_3$ are three constants.

In Formula (1), the three factors measure the brightness, contrast, and structure similarity between the images, respectively.

The literature extends this measure to image fusion by computing the structural similarity between the fused image (F) and each of the two source images. The more similar the structure of the fused image is to the source images, the closer the resulting value is to 1.
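The three-factor structural similarity described above can be computed as follows. This is a simplified sketch that treats the whole image as a single window. Standard SSIM implementations instead average the index over local (typically 11×11 Gaussian) windows. The constants follow the common choices $C_1 = (0.01L)^2$, $C_2 = (0.03L)^2$, $C_3 = C_2/2$, which are assumptions because the text does not state them.

```python
import numpy as np


def ssim_global(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Three-factor SSIM computed over the whole image as one window."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    c3 = c2 / 2
    mx, my = x.mean(), y.mean()
    sx, sy = x.std(), y.std()
    sxy = ((x - mx) * (y - my)).mean()  # covariance
    lum = (2 * mx * my + c1) / (mx**2 + my**2 + c1)      # brightness
    con = (2 * sx * sy + c2) / (sx**2 + sy**2 + c2)      # contrast
    struct = (sxy + c3) / (sx * sy + c3)                 # structure
    return float(lum * con * struct)


# Usage: identical images score 1; a noisy copy scores below 1.
rng = np.random.default_rng(0)
a = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
noisy = np.clip(a + rng.normal(0, 20, size=a.shape), 0, 255)
print(round(ssim_global(a, a), 6))   # 1.0
print(ssim_global(a, noisy) < 1.0)   # True
```

With $C_3 = C_2/2$ the contrast and structure factors collapse into the familiar two-term SSIM expression, which is why this choice is common.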

- (2) PSNR

The peak signal-to-noise ratio (PSNR) is the ratio of the peak signal power to the noise power in the fused image, and can be used to measure the degree of distortion introduced by multi-focus image fusion.

PSNR is defined as

$$\mathrm{PSNR} = 10 \log_{10} \frac{r^2}{\mathrm{MSE}}$$

where r is the maximum pixel value of the fused image; if an image is represented with n bits, then $r = 2^n - 1$. MSE is the mean squared error, computed between the fused image F and the two source images A and B. For a source image X (X = A or B), it is given by

$$\mathrm{MSE}_{XF} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( X(i,j) - F(i,j) \right)^2$$

where M and N are the height and width of the image, and $X(i,j)$ and $F(i,j)$ are the pixel values of images X and F at position $(i,j)$. This formula computes the average squared difference between corresponding pixels of the two images; a lower MSE indicates a smaller difference between the original and processed images.

In general, a larger PSNR indicates that the fused image is closer to the source images, with less distortion introduced by the fusion process, whereas a smaller PSNR indicates greater distortion. A higher PSNR therefore reflects better image quality and better performance of the multi-focus image fusion algorithm.
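For the enhancement experiments, PSNR is naturally computed against a single reference, for example the normal-light image from which a synthetic low-light input was generated. A minimal sketch of this single-reference form, with the peak value derived from the bit depth as in the definition above:

```python
import numpy as np


def mse(x, f):
    """Mean squared error between two images of equal shape (M x N)."""
    diff = x.astype(np.float64) - f.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(reference, result, n_bits=8):
    """PSNR in dB, with r = 2**n_bits - 1 as the peak pixel value."""
    r = 2 ** n_bits - 1
    err = mse(reference, result)
    if err == 0:
        return float("inf")  # identical images: no distortion
    return float(10.0 * np.log10(r ** 2 / err))


# Usage: an 8-bit image off by one gray level everywhere has MSE = 1.
ref = np.full((4, 4), 100, dtype=np.uint8)
out = ref + 1
print(round(psnr(ref, out), 2))  # 48.13
```

Since PSNR is logarithmic in the MSE, a fixed relative PSNR gain corresponds to a progressively larger reduction in squared error as PSNR increases.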

4.2. Low Illumination Enhancement Effect

Table 1 presents the experimental results for the two models. The model with the attention mechanism outperforms the model without it on the evaluated data; specifically, its PSNR is approximately 3% higher, which indicates that the attention mechanism has a positive influence on overall performance.

Table 1.

Experimental results of low illumination enhancement.

Evaluating the enhancement effect from a subjective, perceptual standpoint is also essential, because image enhancement quality is frequently judged subjectively and individuals have different esthetic preferences regarding visual effects. After enhancement, one can check whether the overall image is clearer, whether details are more prominent, whether colors are fuller and more natural, and whether the visual appeal is improved. The images before and after enhancement are compared to identify the differences and to judge whether the enhancement meets expectations. The enhanced image can also be compared with other similar images to judge whether it is better.

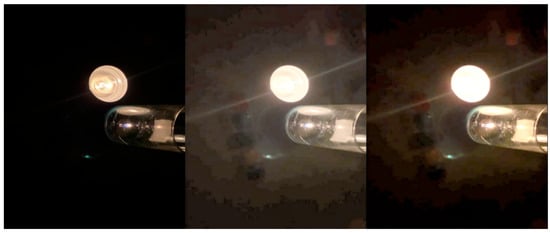

The visual examples are provided to qualitatively illustrate the effects of low-light enhancement. As shown in Figure 5 and Figure 6, the proposed method improves the visibility of local structures compared with the original low-light inputs, with clearer edges and more discernible details in certain regions. These visual results suggest that the enhancement process can increase local contrast and improve perceptual clarity under moderately low-illumination conditions. The visual comparisons are intended to complement the quantitative metrics and demonstrate representative enhancement behaviors rather than to serve as an exhaustive evaluation.

Figure 5.

Enhanced example 1. Left: original image; right: enhanced image.

Figure 6.

Enhanced example 2. Left: original image; right: enhanced image.

Figure 7 and Figure 8 present challenging cases captured under extreme low-light conditions, where large portions of the scene remain unrecoverable due to severe darkness. In such cases, the baseline method exhibits noticeable overexposure in bright regions, resulting in the loss of internal structural details. By contrast, after introducing the attention mechanism, some internal line structures within the bright regions become partially visible. Although overexposure is not completely eliminated in the final enhanced images, it is alleviated to a certain extent, indicating the potential of the attention-enhanced approach to mitigate local over-enhancement in difficult illumination scenarios.

Figure 7.

MBLLEN enhancement effect.

Figure 8.

Enhanced effects of our model.

4.3. Identification Test

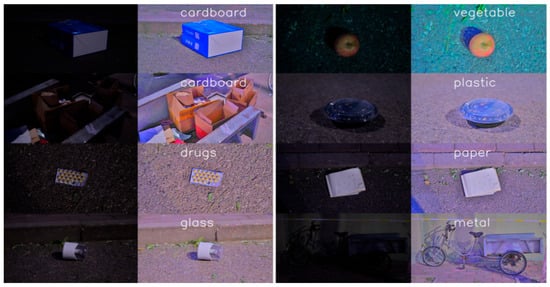

All models in this paper were trained and tested on NVIDIA GeForce RTX 3060 GPUs. The proposed garbage recognition model is evaluated on the self-collected TrashBig dataset, which includes 8 categories: battery, cardboard, drugs, glass, metal, paper, plastic, and vegetable. The dataset is designed for richness and diversity, representing real-world scenarios with waste items captured under various environmental conditions, such as different lighting, backgrounds, and levels of occlusion. The training set consists of approximately 4000 images, providing a broad range of examples for model training. The validation set contains around 60 images per category (about 480 images in total), so that the model is evaluated across diverse scenarios. Data augmentation was also applied to further increase the diversity of the training data. This dataset is intended to help the model handle challenges such as low-light conditions, object overlap, and category variation, and thus to improve its robustness and accuracy in real-world applications.

The proposed model is compared with the Dark-Waste, Faster R-CNN, and Swin-Transformer object detection models on our dataset, using the precision, recall, and F1 score of the four models measured on the test data. The experimental results are shown in Table 2.

Table 2.

P, R, and F1-score of the four models on the TrashBig dataset.

Because its feature extractor generalizes poorly to the feature variations of garbage objects, Faster R-CNN achieves relatively low precision. As a Transformer-based model, Swin-T can capture long-range dependencies in image data, which leads to more balanced precision and recall. Like Dark-Waste, the proposed model focuses on reducing false positives during training, which may slightly compromise its ability to detect all positive instances. By incorporating an attention mechanism, the model learns more effectively from low-illumination images, improving performance under such challenging conditions. As a result, the proposed model achieves the highest precision and F1 score.
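The precision/recall trade-off discussed above follows directly from the metric definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. A minimal sketch of per-category and macro-averaged scores is shown below. For detection models, the true positive, false positive, and false negative counts would come from matching predictions to ground-truth boxes (e.g. at an IoU threshold), a step not shown here. The category counts in the usage example are made-up illustrative numbers, not results from Table 2.

```python
def prf1(tp, fp, fn):
    """Precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


def macro_prf1(counts):
    """counts: dict category -> (tp, fp, fn). Returns unweighted class means."""
    per_class = [prf1(*c) for c in counts.values()]
    n = len(per_class)
    return tuple(sum(v[i] for v in per_class) / n for i in range(3))


# Usage with hypothetical counts for two categories.
counts = {"battery": (50, 5, 10), "glass": (40, 10, 20)}
p, r, f1 = macro_prf1(counts)
print(round(p, 4), round(r, 4))  # 0.8545 0.75
```

A model that suppresses false positives raises precision at the cost of some missed objects (lower recall); F1 rewards the balance between the two.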

Table 3 reports a detailed evaluation of the Illumination Conversion (IC) module combined with different models on the TrashBig dataset, with particular attention to the performance of the “Proposed” method in waste classification. The results show that the IC module improves the illumination quality of low-light images, which in turn improves the classification performance of the models.

Table 3.

Test results of IC combined with different models on TrashBig.

Specifically, integrating the IC module with classic models such as Faster R-CNN (FRCNN), YOLOv5, and Dark-Waste yielded substantial performance improvements across multiple categories. For instance, the AP values for the “battery” and “cardboard” categories reached 89.5% and 80.1%, respectively, clearly outperforming the other models. The IC module particularly strengthened the models’ discriminative ability under low-light conditions, improving classification accuracy. For the “drugs” and “vegetable” categories, the AP values were 76.8% and 77.3%, respectively, which are also improvements over the other methods.
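The per-category AP values discussed above summarize each category's precision–recall curve in a single number. The exact AP protocol used for Table 3 (interpolation scheme, IoU threshold) is not specified here, so the following is only a sketch of one common variant, the all-point interpolated AP used by PASCAL VOC from 2010 onward. It assumes each detection has already been marked as a true or false positive, and the function name `average_precision` is hypothetical.

```python
import numpy as np


def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP for one category (PASCAL VOC 2010+ style).

    scores: confidence score of each detection of this category.
    is_true_positive: 1 if the detection was matched to a previously
        unmatched ground-truth box (e.g., IoU >= 0.5), else 0; the matching
        step is assumed to have been done already.
    num_ground_truth: number of ground-truth objects (must be positive).
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_true_positive, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_ground_truth
    precision = tp_cum / (tp_cum + fp_cum)

    # Add sentinels, then make precision non-increasing as recall grows.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]

    # Area under the interpolated curve: sum over points where recall changes.
    idx = np.nonzero(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


# Three detections of one category, two ground-truth objects.
ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_ground_truth=2)
print(round(100 * ap, 1))  # 83.3
```

Averaging the per-category AP over all eight categories gives the mean AP (mAP). Other protocols, such as the 11-point VOC 2007 variant or COCO's averaging over several IoU thresholds, produce different numbers from the same detections.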

Although the IC module also brought notable improvements when applied to other models, the “Proposed” method, which combines the IC module with the new model design, consistently performed best across all categories. This was especially evident for the “plastic” and “paper” categories, further supporting the effectiveness of the proposed method under low-light conditions. By making objects more recognizable, the IC module leads to more accurate classification in low-light environments.

These experimental results indicate that the Illumination Conversion (IC) module can effectively improve waste classification under low-light conditions and may also support object recognition tasks in other illumination-limited environments.
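Since the enhanced images are fed to the downstream recognition model, combining the IC module with FRCNN, YOLOv5, Dark-Waste, or the proposed model can be viewed as placing the same enhancement step in front of each model. The sketch below shows only that composition. The helper names `with_enhancement`, `gamma_enhance`, and `mean_brightness` are hypothetical. Plain gamma correction stands in for the IC module, which in the paper is a learned network, so this code says nothing about the IC module's actual behavior.

```python
import numpy as np


def gamma_enhance(image, gamma=0.5):
    """Stand-in enhancer: brighten a float image in [0, 1] by gamma correction.

    This is NOT the paper's IC module; it only occupies the same
    preprocessing slot so the composition can be demonstrated.
    """
    return np.clip(image, 0.0, 1.0) ** gamma


def with_enhancement(enhance, model):
    """Wrap any recognition model so every input is enhanced first."""
    def enhanced_model(image):
        return model(enhance(image))
    return enhanced_model


def mean_brightness(image):
    """Toy 'model' that just reports mean intensity, for illustration."""
    return float(np.mean(image))


dark = np.full((4, 4, 3), 0.04)  # a uniformly dark RGB image
wrapped = with_enhancement(gamma_enhance, mean_brightness)
print(mean_brightness(dark), wrapped(dark))
```

Keeping the enhancer separate from the recognizer is what makes the Table 3 comparison possible: the same enhancement stage can be paired with each detector without changing the detector itself.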

Figure 9 shows qualitative results of our model in real-world low-light environments: a series of garbage recognition scenarios captured under low light, with each panel labeled with the category of the identified object. Starting from the top left, the first panel shows a cardboard box clearly identified against a dim background, followed by an apple recognized on grass. These examples show that the model can identify and classify waste under low-light conditions. The subsequent panels depict various types of waste, including cardboard in bins, plastic covers on roads, and paper on lawns, and the labels “cardboard,” “plastic,” and “paper” show the material category predicted for each item. In the lower-right corner, a piece of glass and a bicycle are also identified in a nighttime scene, suggesting that the model can handle more complex and dynamic environments. Overall, these examples illustrate the practicality and effectiveness of our model for garbage identification in low-light settings.

Figure 9.

Image detection examples. Eight pairs of images are shown. In each pair, the left image is the original low-light input and the right image is the enhanced image with the recognition results.

5. Discussion

The experimental results indicate that integrating low-light image enhancement with attention-guided feature learning can improve garbage recognition performance under low-illumination conditions. However, the effectiveness of the proposed approach is inherently constrained by environmental complexity. In particular, extreme illumination variations, strong glare, and deep shadows may limit the recoverability of visual information, which in turn affects recognition accuracy. In addition, practical scenarios often involve object occlusion, overlapping waste items, and substantial intra-class variability in terms of shape, color, and size, further increasing the difficulty of reliable classification. From a data perspective, issues such as class imbalance and annotation noise may also restrict the generalization ability of the model. These observations suggest that future improvements should consider both more robust enhancement strategies and better handling of real-world data uncertainties.

6. Conclusions

This paper studies garbage recognition under low-illumination conditions by integrating low-light image enhancement with attention-guided feature learning. An attention-enhanced multi-branch low-light enhancement network is employed to alleviate uneven illumination and local overexposure, and the enhanced images are used for subsequent garbage recognition. Experimental results on a self-collected low-light garbage dataset indicate that the proposed enhancement–recognition pipeline achieves consistent improvements in image quality and recognition performance compared with baseline methods. Nevertheless, the performance of garbage recognition systems can still be affected by complex environmental factors such as severe illumination variations, object occlusion, and overlapping waste items, as well as dataset-related issues including class imbalance and annotation noise. Addressing these challenges remains an important direction for future research, particularly for improving robustness under more diverse real-world conditions.

Author Contributions

Conceptualization, Z.L. and Y.D.; methodology, Z.L. and Y.D.; software, W.W.; validation, Z.L., Y.D., C.Z., W.W. and S.A.; formal analysis, Y.D. and S.A.; investigation, C.Z. and S.A.; resources, Z.L. and C.Z.; data curation, C.Z., W.W. and S.A.; writing—original draft preparation, Y.D.; writing—review and editing, Z.L., Y.D. and W.W.; visualization, W.W. and S.A.; supervision, Z.L.; project administration, Z.L. and Y.D.; funding acquisition, Z.L.; Z.L. and Y.D. contributed equally to this work and share first authorship. All authors have read and agreed to the published version of the manuscript and agree to be accountable for all aspects of the work.

Funding

This work was supported in part by the Special Fund for High-Quality Development of the Ministry of Industry and Information Technology (Grant No. TC220A04A-182); the National Natural Science Foundation of China (Grant No. 62271045); the Key Program for International Science and Technology Cooperation Projects of China (Grant No. 2022YFE0112300); the Fundamental Research Funds for the Central Universities (Grants No. FRF-IDRY-23-027 and FRF-KST-25-008); the Young Teaching Backbone Talent Program of the University of Science and Technology Beijing (Grant No. JXGG202509); and the CCF-NSFOCUS “Kunpeng” Research Fund (Grant No. CCF-NSFOCUS 202417). The author is also supported by the 2024 Xiaomi Young Scholar Program.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Zhang, Q.; Yang, Q.; Zhang, X.; Wei, W.; Bao, Q.; Su, J.; Liu, X. A multi-label waste detection model based on transfer learning. Resour. Conserv. Recycl. 2022, 181, 106235. [Google Scholar] [CrossRef]

- Hu, Y.; Zhan, P.; Xu, Y.; Zhao, J.; Li, Y.; Li, X. Temporal representation learning for time series classification. Neural Comput. Appl. 2021, 33, 3169–3182. [Google Scholar] [CrossRef]

- White, G.; Cabrera, C.; Palade, A.; Li, F.; Clarke, S. WasteNet: Waste classification at the edge for smart bins. arXiv 2020, arXiv:2006.05873. [Google Scholar] [CrossRef]

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing geometric factors in model learning and inference for object detection and instance segmentation. IEEE Trans. Cybern. 2021, 52, 8574–8586. [Google Scholar] [CrossRef]

- Alrayes, F.S.; Asiri, M.M.; Maashi, M.S.; Nour, M.K.; Rizwanullah, M.; Osman, A.E.; Drar, S.; Zamani, A.S. Waste classification using vision transformer based on multilayer hybrid convolution neural network. Urban Clim. 2023, 49, 101483. [Google Scholar] [CrossRef]

- Qu, Y.; Ou, Y.; Xiong, R. Low illumination enhancement for object detection in self-driving. In Proceedings of the 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1738–1743. [Google Scholar]

- Martinec, D.; Herman, I.; Šebek, M. On the necessity of symmetric positional coupling for string stability. IEEE Trans. Control. Netw. Syst. 2016, 5, 45–54. [Google Scholar] [CrossRef]

- Xiao, Y.; Jiang, A.; Ye, J.; Wang, M.-W. Making of night vision: Object detection under low-illumination. IEEE Access 2020, 8, 123075–123086. [Google Scholar] [CrossRef]

- Zhang, M.; Xu, S.; Song, W.; He, Q.; Wei, Q. Lightweight underwater object detection based on yolo v4 and multi-scale attentional feature fusion. Remote. Sens. 2021, 13, 4706. [Google Scholar] [CrossRef]

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, Singapore, 20–27 January 2020; Volume 34, pp. 12993–13000. [Google Scholar]

- Chen, X.; Huang, H.; Liu, Y.; Li, J.; Liu, M. Robot for automatic waste sorting on construction sites. Autom. Constr. 2022, 141, 104387. [Google Scholar] [CrossRef]

- Jiang, X.; Hu, H.; Qin, Y.; Hu, Y.; Ding, R. A real-time rural domestic garbage detection algorithm with an improved YOLOv5s network model. Sci. Rep. 2022, 12, 16802. [Google Scholar] [CrossRef] [PubMed]

- Gundupalli, S.P.; Hait, S.; Thakur, A. Automated municipal solid waste sorting for recycling using a mobile manipulator. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Houston, TX, USA, 23–26 August 2026; American Society of Mechanical Engineers: Livingston, NJ, USA, 2016; Volume 50152, p. V05AT07A045. [Google Scholar]

- Ma, W.; Yu, J.; Wang, X. An improved faster R-CNN based spam detection and classification method. Comput. Eng. 2021, 8, 294–300. [Google Scholar]

- Yadav, S.; Shanmugam, A.; Hima, V.; Suresh, N. Waste classification and segregation: Machine learning and iot approach. In Proceedings of the 2021 2nd International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 28–30 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 233–238. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Aishwarya, A.; Wadhwa, P.; Owais, O.; Vashisht, V. A waste management technique to detect and separate non-biodegradable waste using machine learning and YOLO algorithm. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 443–447. [Google Scholar]

- Zhang, C.J.; Zhu, L.; Yu, L. Review of attention mechanism in convolutional neural networks. Comput. Eng. Appl. 2021, 57, 64–72. [Google Scholar]

- Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662. [Google Scholar] [CrossRef]

- Jung, H.; Kim, Y.; Jang, H.; Ha, N.; Sohn, K. Unsupervised deep image fusion with structure tensor representations. IEEE Trans. Image Process. 2020, 29, 3845–3858. [Google Scholar] [CrossRef] [PubMed]

- Ignatov, A.; Kobyshev, N.; Timofte, R.; Vanhoey, K.; Van Gool, L. Dslr-quality photos on mobile devices with deep convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3277–3285. [Google Scholar]

- Liang, X.; Chen, X.; Ren, K.; Miao, X.; Chen, Z.; Jin, Y. Low-light image enhancement via adaptive frequency decomposition network. Sci. Rep. 2023, 13, 14107. [Google Scholar] [CrossRef]

- Kim, H.U.; Koh, Y.J.; Kim, C.S. Global and local enhancement networks for paired and unpaired image enhancement. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXV 16. Springer International Publishing: Cham, Switzerland, 2020; pp. 339–354. [Google Scholar]

- Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. A general U-shaped transformer for image restoration. In Proceedings of the 2022 IEEE CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17662–17672. [Google Scholar]

- Wang, W.; Wei, C.; Yang, W.; Liu, J. Gladnet: Low-light enhancement network with global awareness. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 751–755. [Google Scholar]

- Shao, M.W.; Li, L.; Meng, D.Y.; Zuo, W.M. Uncertainty guided multi-scale attention network for raindrop removal from a single image. IEEE Trans. Image Process. 2021, 30, 4828–4839. [Google Scholar] [CrossRef]

- Qian, Y.; Jiang, Z.; He, Y.; Zhang, S.; Jiang, S. Multi-scale error feedback network for low-light image enhancement. Neural Comput. Appl. 2022, 34, 21301–21317. [Google Scholar] [CrossRef]

- Zhang, S.; Lam, E.Y. An effective decomposition-enhancement method to restore light field images captured in the dark. Signal Process. 2021, 189, 108279. [Google Scholar] [CrossRef]

- Wang, J.; Yang, Y.; Hua, Y. Image quality enhancement using hybrid attention networks. IET Image Process. 2022, 16, 521–534. [Google Scholar] [CrossRef]

- Khan, R.; Akbar, S.; Khan, A.; Marwan, M.; Qaisar, Z.H.; Mehmood, A.; Shahid, F.; Munir, K.; Zheng, Z. Dental image enhancement network for early diagnosis of oral dental disease. Sci. Rep. 2023, 13, 5312. [Google Scholar] [CrossRef]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307. [Google Scholar] [CrossRef]

- Xu, J.; Hou, Y.; Ren, D.; Liu, L.; Zhu, F.; Yu, M.; Wang, H.; Shao, L. Star: A structure and texture aware retinex model. IEEE Trans. Image Process. 2020, 29, 5022–5037. [Google Scholar] [CrossRef] [PubMed]

- Ese, A.K.; Tutsoy, O. Medical image reasoning with the convolutional neural network-based fuzzy logic. In Proceedings of the 4th International Congress on Engineering Sciences and Multidisciplinary, Baghdad, Iraq, 3–4 June 2022. [Google Scholar]

- Xiang, F.; Jian, Z.; Liang, P.; Gu, X.Q. Robust image fusion with block sparse representation and online dictionary learning. IET Image Process. 2018, 12, 345–353. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, X.; Zhang, Q.; Li, C.; Huang, F.; Tang, X.; Li, Z. Dual self-attention with co-attention networks for visual question answering. Pattern Recognit. 2021, 117, 107956. [Google Scholar] [CrossRef]

- He, X.; Wang, Y.; Zhao, S.; Chen, X. Co-attention fusion network for multimodal skin cancer diagnosis. Pattern Recognit. 2023, 133, 108990. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.