Abstract

An event-based social network (EBSN) is a novel platform on which users establish social relationships and match interests by participating in offline events. However, event recommendation faces severe challenges. These include extreme data sparsity caused by the short life cycles of events, and the dynamic interplay between individual and group preferences on fine-grained features. Existing methods ignore users’ diverse and personalized preferences across different event features such as venue and organizer. We first conduct a data analysis showing that users not only have different preferences for different features but, more importantly, that these preferences dynamically influence the behavior of the different groups they belong to. Therefore, in this work, we propose a novel Multiple Attention Group Event Recommendation (MAGER) framework based on Neural Collaborative Filtering to address these challenges. MAGER first employs fine-grained feature attention to generate personalized event representations, and then dynamically aggregates member preferences and influences through a group-level attention mechanism. More importantly, a heterogeneous attention structure integrates these learning modules, enabling more accurate representations of events, users, and groups. We conduct extensive experiments on three real-world datasets. The results show that MAGER substantially improves both user and group recommendation effectiveness over the baselines in terms of HR@K and NDCG@K. Specifically, for user recommendation, HR@5 improves by 3.63–13.07% and NDCG@K by 5.63–18.55%; for group recommendation, HR@5 improves by 2.05–19.02% and NDCG@K by 2.51–36.12% across the three datasets.

1. Introduction

1.1. Research Background

Event-based Social Networks (EBSNs), such as Douban-Event (http://beijing.douban.com/, accessed on 23 November 2025) and Meetup (http://www.meetup.com/, accessed on 23 November 2025), are a novel type of social network that has gradually attracted increasing attention from academia [] and industry [,,,]. Unlike traditional social networks, EBSNs let users participate in offline or online events. Users not only establish social relationships in the network but also match interests and interact offline by attending various events. Events have short life cycles and strong timeliness, so appropriate events usually need to be recommended before they start, giving users time to decide whether to participate [,]. However, events rarely accumulate enough historical interactions before they are recommended. Event recommendation therefore suffers from severe interaction sparsity, which seriously degrades the performance of recommender systems in EBSN scenarios.

1.2. Analysis of Existing Problems

In event recommendation scenarios, previous studies mainly focus on exploiting event context features to alleviate the data sparsity problem. For example, Du et al. [], Liao et al. [], and Wang et al. [] all propose methods that model event features using multidimensional context information, improving recommendation performance to a certain extent. Furthermore, Macedo et al. [] propose a multi-context learning-to-rank framework that jointly considers users’ social relationships, event content, and temporal preferences, improving recommendation accuracy. Du et al. [] exploit the intrinsic correlation between event organizers and event content to recommend events to groups, alleviating the feature sparsity caused by insufficient event text. Such specific features can effectively alleviate the sparse interaction problem and improve the interpretability of recommendations []. However, these methods still adopt a feature-stacking strategy at the individual level, ignoring users’ personalized preferences for fine-grained features from the group perspective.

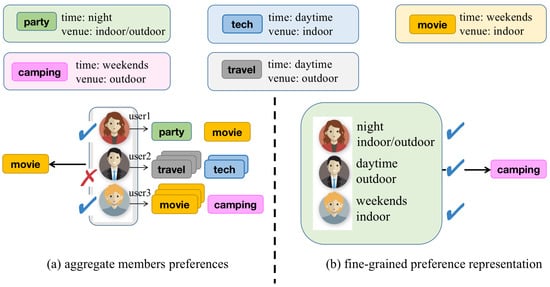

Intuitively, users have different degrees of preference for different features such as venue and time. For instance, as shown in Figure 1a, user2 prefers to participate in daytime events and prefers outdoor events to indoor ones, whereas user3 prefers weekend events and favors indoor ones. A difference in a single feature may significantly affect a user’s behavior, which shows that exploiting fine-grained features plays a non-negligible role in accurately capturing user interests and behavior patterns. To address this, Lai et al. [] calculate a user’s preference scores separately for different features of the target event, for example by proposing a soft spatial constraint to compute spatiotemporal preference scores. However, these per-feature preference scores are computed separately and then simply superimposed linearly. A concise strategy that effectively unifies user preferences across multiple features is still lacking. More importantly, as shown in Figure 1b, users’ preferences for fine-grained features are a decisive factor in group decisions and behavior.

Figure 1.

A case study: the influence of a user’s interests in event features on their group.

Unlike simple aggregation methods based on historical interactions, such as AGREE [], which measure member preferences only at the user–item interaction level, accounting for members’ fine-grained feature preferences can help a group discover events that better match its consensus intentions, enhancing group satisfaction. In group recommendation, members have different interests and exert different influence on group behavior. Some studies [,] have begun to use deep learning methods to model group behavior. For example, ConsRec [] uses hypergraph learning to reduce the complexity of the aggregation process. However, these methods often ignore the importance of fine-grained features under sparse interactions. We argue that such coarse-grained strategies are ineffective at learning member preferences and yield imprecise group representations.

1.3. Solutions and Advantages

To show why fine-grained features matter for event recommendation, we quantify the interaction sparsity between users/groups and events, and between users/groups and fine-grained features. We calculate the sparsity of user/group–event interactions and user/group–feature interactions in the Douban-Event and Meetup datasets; the results are shown in Table 1. For each dataset, we randomly select 1000 users and 1000 groups and compute the proportion of events, and of each fine-grained feature, that they have interacted with. Here, the variables represent the organizer, venue, week, and hour features, respectively, as defined in Section 3. Table 1 shows that in Douban-Event the event proportion is the smallest, indicating that user–event interactions are the sparsest. The feature proportions are relatively larger, meaning that user/group interactions with each feature are denser. By contrast, in Meetup the organizer proportion is smaller than the event proportion. This is because many of the events attended by a user/group are organized by the same organizer, which leads to very low organizer-proportion values.
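The proportions in Table 1 follow the definition in its caption. Each one is the number of distinct events (or distinct organizers, venues, weeks, or hours) a user or group has participated in, divided by the corresponding total. A minimal sketch of that computation is below. The function name, the dictionary layout of event features, and the toy data are hypothetical and serve only as an illustration.

```python
from typing import Dict, Iterable, Mapping

# Feature names follow Section 3; the string keys themselves are an assumption.
FEATURES = ("organizer", "venue", "week", "hour")


def interaction_proportions(
    attended: Iterable[str],
    event_features: Mapping[str, Mapping[str, str]],
    totals: Mapping[str, int],
) -> Dict[str, float]:
    """Share of all events, and of all values of each feature, that one user/group touched."""
    attended = set(attended)
    result = {"event": len(attended) / totals["event"]}
    for feat in FEATURES:
        # Distinct feature values (e.g. distinct organizers) across the attended events.
        distinct = {event_features[e][feat] for e in attended}
        result[feat] = len(distinct) / totals[feat]
    return result


# Hypothetical toy data: 3 attended events out of 100 in the dataset.
events = {
    "e1": {"organizer": "o1", "venue": "v1", "week": "w5", "hour": "h19"},
    "e2": {"organizer": "o1", "venue": "v2", "week": "w6", "hour": "h19"},
    "e3": {"organizer": "o2", "venue": "v3", "week": "w6", "hour": "h19"},
}
totals = {"event": 100, "organizer": 10, "venue": 20, "week": 7, "hour": 24}
p = interaction_proportions(["e1", "e2", "e3"], events, totals)
# p["event"] = 0.03, p["organizer"] = 0.2, p["venue"] = 0.15
```

In this toy example every feature-level proportion exceeds the event-level one, because the attended events share organizers, days, and hours.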

Table 1.

The proportion of events, and of each feature, that a randomly selected user and group have interacted with in the Douban-Event Beijing dataset (the is the ratio of the number of events the user/group has participated in to the total number of events; the is the ratio of the number of organizers the user/group has participated with to the total number of organizers; and so on).

In summary, user/group interactions with fine-grained features are denser than user/group–event interactions and are therefore beneficial for event recommendation, which makes mining fine-grained features crucial. This paper therefore addresses the extreme sparsity of user/group–event interactions by exploiting user/group preferences for fine-grained event features, and proposes a multiple-attention-based group recommendation framework. Specifically, we tackle two key challenges. I. The extreme sparsity of user/group–event interactions: events in EBSNs usually have short life cycles, making it difficult to accumulate historical feedback. II. The lack of a group-oriented, member-level fine-grained feature fusion framework: insufficient exploration of users’ diverse preferences for event features leads existing methods to neglect the impact of members’ dynamic fine-grained preferences on group decision-making.

1.4. Main Work and Structure

In this paper, we propose a multiple attention mechanism. It first obtains personalized event representations through fine-grained feature modeling, and then aggregates them at the group level based on member influence to derive precise group preference representations. Building on this foundation, we present a group event recommendation framework named MAGER (Multi-level Attention Group Event Recommendation). Inspired by Neural Collaborative Filtering, the framework captures interaction information between events and users/groups simultaneously. Specifically, MAGER first generates initial user embeddings with a time-decay mechanism. It then obtains event embeddings through fine-grained attention across different features, and a further attention module integrates members’ event preferences with their influence within the group to generate the aggregated group representation. This hierarchical attention mechanism not only addresses the challenges caused by sparse interaction data in event recommendation but also captures user preferences precisely at the feature level, providing a more nuanced basis for group decision-making.

The main contributions of this work can be summarized as follows:

- We conduct a data analysis demonstrating that user/group interactions with fine-grained features are denser than their interactions with events. Exploiting these features therefore helps alleviate the accuracy degradation caused by interaction sparsity. Furthermore, we explore how members’ diverse preferences for features influence the behavior of different groups.

- We first propose a group recommendation framework that integrates fine-grained feature attention with member weight attention, capable of capturing both personalized interests of group members and their relative influence within the group.

- To address the challenges of sparse textual content and insufficient interactions, we design a fine-grained feature attention module that extracts users’ latent interest features from multiple features.

- We conduct extensive experiments on three real-world EBSN datasets. The results show that MAGER significantly improves both recommendation effectiveness and efficiency compared with state-of-the-art methods.

The remaining sections of this paper are organized as follows. Section 2 reviews related work on event recommendation in EBSNs and on group recommendation. Section 3 defines the problem, formalizing our task and introducing all the notation used in our model. Section 4 presents a data analysis case study. Section 5 describes the proposed MAGER framework in detail. Section 6 reports the experimental results and the corresponding analyses. Section 7 provides a discussion of this paper.

2. Related Work

2.1. Event Recommendation in EBSNs

Event recommendation in EBSNs has emerged as a critical research area, addressing challenges such as cold start and data sparsity. Existing studies propose diverse methodologies that enhance recommendation accuracy by leveraging multi-dimensional contextual information and advanced machine learning techniques [,]. One prominent approach integrates multiple contextual signals to address the cold-start problem: Macedo et al. [] combine content, collaborative, social, location, and temporal signals to rank events, demonstrating superior performance. Lai et al. [] further distinguish preference factors from spatial–temporal constraints, using factorization machines to model user decisions under implicit feedback. Liu et al. [] unify interactions among users, events, groups, and locations in a pairwise matrix factorization framework. These methods highlight the importance of multi-source contextual data in mitigating the transient nature of events.

Representation learning and graph-based models have gained traction for their ability to model heterogeneous relationships [,]. Zhang et al. [] employ a denoising framework to attenuate noise in contextual features, enhancing robustness against data sparsity. Liu et al. [] refine embeddings by propagating information through a user-event-intent graph, addressing feature sparsity via contextual intent nodes. Jiang et al. [] extract semantic features from meta-graphs using convolutional networks and attention mechanisms, revealing latent user-event preferences. These works underscore the potential of graph structures in capturing complex entity interactions.

Social influence is increasingly recognized as a vital factor. Taking into account the influence of adjacent events and users, Sun et al. [] propose a social event matching method based on graph neural networks. They design an affinity calculation model to predict the affinity between users and new events while considering bilateral constraints extracted from user and event attributes. Liang et al. [] introduce a prefiltering algorithm to balance global satisfaction and individual fairness, addressing biases in group recommendations. Additionally, considering the significance of organizers, TrinhHuy et al. [] estimate the participant scale of events for organizers. They cluster participants with K-means and train a classification model on the constructed dataset to predict the scale of new events. Han et al. [] distinguish organizer roles and dynamic influence patterns, modeling their impact on user preferences through topic models.

2.2. Group Recommendation

Group recommendation focuses on providing items that meet the collective preferences of multiple users. Recent studies tackle challenges such as data sparsity and dynamic group behavior using graph models and attention. Li et al. [] model user preferences by connecting sequences with the dynamic influence of group partners through dynamic graph structures. Liang et al. [] enhance fuzzy preference profiling by representing user–group–item interactions as heterogeneous graphs, achieving robust performance with incomplete data. They also propose a hierarchical attention network for cross-domain group recommendation [], transferring knowledge from data-rich domains to sparse target domains via adversarial learning. Huang et al. [] propose a two-tower group recommendation framework that leverages similar-user features and multi-scale contrastive learning to model user–group interactions in EBSNs more effectively. Topic models have also attracted attention in group recommendation: Du et al. [] propose a topic-model-based method that alleviates cold-start issues by modeling organizer–content correlations.

Graph-based models dominate group recommendation research owing to their ability to capture complex relationships. Liao et al. [] integrate explicit and implicit information via multi-head attention on dual user–event graphs. Deng et al. [] further incorporate users’ long- and short-term preferences using graph attention networks, fusing temporal dynamics with heterogeneous attributes for richer group representations. For occasional groups with limited interaction data, Guo et al. [] employ hypergraph embedding to exploit group overlaps and user–user interactions, enhancing representation learning through hierarchical hyperedge embedding. Recommendation models based on hypergraph convolution have also been developed. Zhao et al. [] disentangle member–item interactions via a masked autoencoder, mitigating noise through degree-sensitive masking and disentangled hypergraph convolutions. Wu et al. [] explore group consensus through multi-view learning, using hypergraph convolution for efficient aggregation. Among attention-based methods, Yin et al. [] leverage attention mechanisms and bipartite graph embeddings to model users’ global and local social influence, adapting weights dynamically across groups. Huang et al. [] enhance group semantic features via multi-attention networks, integrating co-occurrence, social, and descriptive attributes to refine preference learning.

For a more detailed comparison, we select several representative group recommendation methods and compare them with MAGER in Table 2 to highlight our novelty and differences. First, these group recommendation methods all aggregate member preferences to generate group representations. They differ in network architecture: CVTM uses a probabilistic generative model and HyperGroup uses a graph convolutional network, whereas MAGER uses a neural collaborative filtering architecture. The advantages of this architecture include a simple structure, stable training, and suitability for sparse data. Second, none of the compared methods aggregates member preferences from the perspective of fine-grained features. For example, VTGAE [] uses event spatiotemporal features but only concatenates them to generate event embeddings, which is how most methods handle item features. This approach ignores the variability of users’ or groups’ preferences for different features. In summary, MAGER not only aggregates fine-grained features, member preferences, and group-level feature preferences. More importantly, it forms a dependency among its three attention modules, enabling it to capture the dynamic preferences of users and groups over different features. This distinguishes it significantly from general co-attention networks.

Table 2.

Comparison of different methods. “agg.” denotes aggregation; “dynamic preference” denotes the initial embedding of a user or group. Note: ✓: used; ✗: not used.

3. Problem Definition

We use a boldface capital letter to denote a matrix (e.g., X), a boldface lowercase letter to denote a vector (e.g., u), a calligraphic capital letter to denote a set (e.g., ), a capital letter to denote a quantity (e.g., M), and a lowercase letter to denote a scalar (e.g., g). The notation used in MAGER is summarized in Table 3.

Table 3.

Notations of MAGER parameters.

A group set is represented as , an event set as , and a user set as . Suppose that a group has M members, i.e., , and that an event has four features: organizer , venue , day of week , and hour of day . Specifically, the organizer is the initiator of the event, the venue is the location of the event, and the starting time of the event is regarded as a two-dimensional time pattern consisting of “week” and “hour”. Therefore, we define an event participation record as:

An Event Participation Record. When a user participates in an event, we create an event participation record . Likewise, when a group participates in an event, we create an event participation record . Here, w and h form a two-dimensional coordinate of the time at which event e starts, i.e., day of week w and hour of day h.
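As a concrete illustration, a participation record can be stored as a small immutable object. The binary interaction matrices X (group–event) and Y (user–event) of the next paragraph can then be built from a list of such records. The class name, the field names, and the integer encoding of week and hour below are assumptions for this sketch, not the paper’s implementation.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Participation:
    """One participation record: who attended which event, plus that event's features."""
    participant: str  # a user id or a group id (hypothetical naming)
    event: str
    organizer: str
    venue: str
    week: int  # day of week, assumed encoded as 0-6
    hour: int  # hour of day, assumed encoded as 0-23


def interaction_matrix(
    records: Sequence[Participation],
    participants: Sequence[str],
    events: Sequence[str],
) -> List[List[int]]:
    """Binary participant-event matrix: entry (i, j) is 1 iff a record links them."""
    row = {p: i for i, p in enumerate(participants)}
    col = {e: j for j, e in enumerate(events)}
    mat = [[0] * len(events) for _ in participants]
    for r in records:
        mat[row[r.participant]][col[r.event]] = 1
    return mat


records = [
    Participation("u1", "e1", "o1", "v1", week=5, hour=19),
    Participation("u2", "e2", "o1", "v2", week=6, hour=14),
]
Y = interaction_matrix(records, ["u1", "u2"], ["e1", "e2", "e3"])
# Y == [[1, 0, 0], [0, 1, 0]]; passing group records and group ids yields X instead.
```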

There are two forms of participation interaction: user–event and group–event. We use X to denote the group–event interaction matrix, i.e., , where indicates that group has participated in event , creating a participation record . We use Y to denote the user–event interaction matrix, i.e., , where indicates that user has participated in event , creating a participation record . Our task is to recommend a ranked list of events to a group or a user, i.e., to perform both the group recommendation task and the personalized recommendation task. Formally, the task is stated as follows:

Input: User set , Group set , Event set , user–event interaction , group–event interaction .

Output: Two functions, and , which map an event to a real-valued score for each user or each group.
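Given either scoring function, recommendation reduces to ranking candidate events by predicted score and keeping the top K. HR@K and NDCG@K, the metrics reported in the Abstract, then check whether a held-out event appears in that list and how high it ranks. The sketch below assumes the common leave-one-out protocol with a single held-out positive per user or group. The function names and toy scores are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def rank_events(score: Callable[[str], float], candidates: Sequence[str], k: int) -> List[str]:
    """Top-k candidate events in descending order of predicted score."""
    return sorted(candidates, key=score, reverse=True)[:k]


def hit_ratio_at_k(ranked: Sequence[str], held_out: str) -> float:
    """1.0 if the held-out event appears in the top-k list, else 0.0."""
    return 1.0 if held_out in ranked else 0.0


def ndcg_at_k(ranked: Sequence[str], held_out: str) -> float:
    """With one relevant event, IDCG = 1 and DCG = 1 / log2(rank + 1) for a 1-based rank."""
    for pos, event in enumerate(ranked):  # pos is 0-based, so rank + 1 == pos + 2
        if event == held_out:
            return 1.0 / math.log2(pos + 2)
    return 0.0


scores = {"e1": 0.2, "e2": 0.9, "e3": 0.5, "e4": 0.1}  # hypothetical model outputs
top3 = rank_events(lambda e: scores[e], list(scores), k=3)  # ["e2", "e3", "e1"]
print(hit_ratio_at_k(top3, "e3"), round(ndcg_at_k(top3, "e3"), 4))  # 1.0 0.6309
```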

4. Data Analysis and Motivation

Previous studies ignore the impact of users’ personalized preferences for fine-grained item features on group decision-making. For instance, aggregation-based methods such as AGREE [], ConsRec [], and PGR-PM [] merely learn members’ dynamic preferences toward items. We argue that users not only have different preferences for different fine-grained features, but these preferences also dynamically influence the behavior of the different groups they belong to. For example, a student may personally prefer attending events on weekends, and their family group frequently attends weekend events. Their social group, however, may focus only on party-related events regardless of timing. This suggests that the student carries a high weight in the family group, whose decisions follow the student’s preferences. In the social group, however, the student’s time preference no longer matters; rather, the student aligns with the will of the social group. This indicates that a user’s preferences for fine-grained features change dynamically depending on the group they are in.

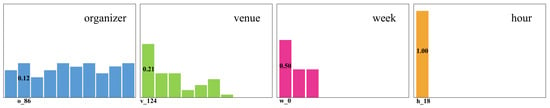

To elaborate further, we conduct a case study that uses a distribution chart to quantify the heterogeneity of individual preferences over fine-grained features. Specifically, we randomly select a user and statistically analyze the distribution of the fine-grained features of the events they participated in, as shown in Figure 2. The user participated in 18 events in total. These events were held by 10 different organizers at 7 different venues, on 3 different days of the week, and all within the same hour of the day. This distribution shows that the user exhibits distinct preferences across event features: for example, the user’s preferences over organizers are diverse, whereas their preference for the hour feature is highly concentrated.
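The per-feature statistics behind a chart such as Figure 2 amount to counting, for each feature, how often each value occurs among the user’s attended events. A minimal sketch follows. The “concentration” score, the share of events on the most frequent value, is an illustrative summary added here and is not a measure defined in the paper. The toy events are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Mapping

FEATURES = ("organizer", "venue", "week", "hour")


def feature_distributions(attended: List[Mapping[str, str]]) -> Dict[str, Counter]:
    """For each feature, count how often each value occurs among the attended events."""
    return {f: Counter(ev[f] for ev in attended) for f in FEATURES}


def concentration(counter: Counter) -> float:
    """Share of events that fall on the single most frequent value (1.0 = fully concentrated)."""
    return counter.most_common(1)[0][1] / sum(counter.values())


attended = [  # hypothetical events attended by one user
    {"organizer": "o1", "venue": "v1", "week": "w5", "hour": "h19"},
    {"organizer": "o2", "venue": "v1", "week": "w6", "hour": "h19"},
    {"organizer": "o3", "venue": "v2", "week": "w6", "hour": "h19"},
    {"organizer": "o1", "venue": "v3", "week": "w0", "hour": "h19"},
]
dist = feature_distributions(attended)
print(len(dist["organizer"]), concentration(dist["organizer"]), concentration(dist["hour"]))
# 3 0.5 1.0
```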

Figure 2.

The distribution of user preferences for fine-grained features of event.

Moreover, we show that an individual user assigns significantly different attention weights to event features when participating in different groups. (1) Figure 3a shows how a single user’s preference for specific features changes across groups. We select the same user and randomly pick two groups he has participated in, namely group 1 and group 2. We take the feature values this user interacted with most frequently, namely organizer o_86, venue v_124, week w_0, and hour h_18, and count how often each of these values appears in the events attended by group 1 and group 2. Each group participated in 10 events in total. Group 1 shows no preference for o_86 or v_124, and group 2 shows no preference for o_86. This indicates that a single user’s preferences for fine-grained features can change significantly as he moves between groups. (2) Figure 3b presents a heat map of the attention weights assigned to event features when group 1 and group 2 participate in the same event. The two groups give significantly different attention weights to the features.
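Step (1) above can be sketched as follows. The sketch finds the user’s most frequent value of each feature and then counts how many of a group’s events carry that value. A count of zero corresponds to the “no preference” cases described for Figure 3a. The function names and toy data are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Mapping

FEATURES = ("organizer", "venue", "week", "hour")
Event = Mapping[str, str]


def top_values(user_events: List[Event]) -> Dict[str, str]:
    """The user's most frequently attended value of each feature."""
    return {f: Counter(ev[f] for ev in user_events).most_common(1)[0][0] for f in FEATURES}


def frequency_in_group(group_events: List[Event], targets: Mapping[str, str]) -> Dict[str, int]:
    """How many of a group's events carry each of the user's top feature values."""
    return {f: sum(ev[f] == v for ev in group_events) for f, v in targets.items()}


user_events = [  # hypothetical history of one user
    {"organizer": "o1", "venue": "v1", "week": "w0", "hour": "h18"},
    {"organizer": "o1", "venue": "v1", "week": "w0", "hour": "h18"},
    {"organizer": "o2", "venue": "v2", "week": "w5", "hour": "h18"},
]
group_events = [  # hypothetical events attended by one of the user's groups
    {"organizer": "o3", "venue": "v1", "week": "w0", "hour": "h18"},
    {"organizer": "o3", "venue": "v4", "week": "w0", "hour": "h20"},
]
targets = top_values(user_events)  # {'organizer': 'o1', 'venue': 'v1', 'week': 'w0', 'hour': 'h18'}
print(frequency_in_group(group_events, targets))
# {'organizer': 0, 'venue': 1, 'week': 2, 'hour': 1}
```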

Figure 3.

The distribution of attention weights that a single user assigns to the different features when participating in different groups. (a) user preference for features across groups; (b) weight heatmap of features for groups.

These findings support our motivation: users not only exhibit dynamic preferences for fine-grained features, but these preferences also influence the decision-making of their groups.

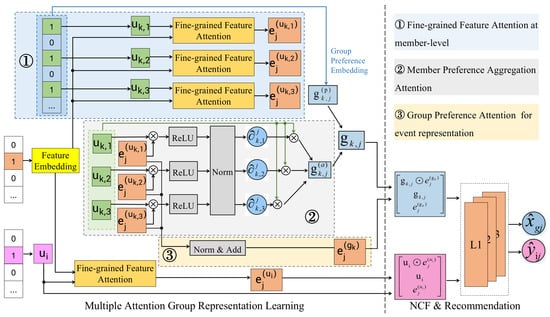

5. Methodology

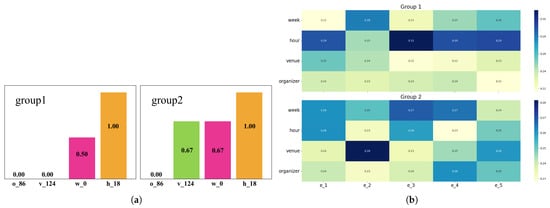

Figure 4 shows the differences between MAGER and a general co-attention network. First, MAGER uses cross-feature multi-attention to capture the fine-grained features of events rather than simply aggregating them through an MLP. Second, MAGER includes two attention layers, one on the user side and one on the group side, whereas most general co-attention networks contain only a single user–item attention layer. Most importantly, MAGER’s attention computation depends on the candidate event context rather than statically weighting members or historical behaviors. The overall architecture of MAGER is shown in Figure 5, which illustrates the recommendation tasks in this paper, i.e., recommending satisfying events to groups or users. MAGER is divided into three components. The first is Multiple Attention Group Representation Learning. The second is Neural Collaborative Filtering for modeling group–event and user–event interactions. The third is Recommendation and Optimization for learning model parameters. In the first component, we design three attention modules. The first obtains the event representation from fine-grained features (organizer, venue, week, and hour). The second generates the group’s aggregated preference embedding from the preferences of its members. The third obtains the group-level event representation from fine-grained features.

Figure 4.

The comparison between the general co-attention network for group recommendation and the MAGER framework.

Figure 5.

The framework of MAGER.

Furthermore, to generate the initial user embeddings, we account for the real-world observation that the interests of users and groups change over time, and design a time-decay mechanism to generate dynamic user preference representations. We assume that the historical interaction sequence of user u is , where represents an interacted event and is its timestamp. The influence of each interaction decreases over time, so we define the time decay weight as follows:

where denotes the attenuation rate. Then the user’s dynamic preference embedding is

where is the embedding of an event that user u has interacted with, obtained from the “Fine-grained Feature Attention” module. The dynamic user preference embedding is used to generate the aggregated group preference representation.
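A minimal sketch of the time-decay mechanism follows. It assumes an exponential weight exp(-λ(t_now - t_i)) with attenuation rate λ and a normalized weighted average of the attended-event embeddings. Both the exponential form and the normalization are assumptions for illustration rather than the paper’s stated equations.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def decay_weights(timestamps: Sequence[float], now: float, rate: float) -> List[float]:
    """Assumed exponential decay: an interaction (now - t) time units old gets exp(-rate * (now - t))."""
    return [math.exp(-rate * (now - t)) for t in timestamps]


def dynamic_preference(history: Sequence[Tuple[Vector, float]], now: float, rate: float) -> Vector:
    """Time-decayed, normalized average of the embeddings of the events in the history."""
    embeddings = [emb for emb, _ in history]
    weights = decay_weights([t for _, t in history], now, rate)
    total = sum(weights)
    dim = len(embeddings[0])
    return [sum(w * emb[d] for w, emb in zip(weights, embeddings)) / total for d in range(dim)]


# Hypothetical history of (event embedding, timestamp) pairs; rate = ln 3 makes the
# older event count one third as much as the newer one.
history = [([1.0, 0.0], 0.0), ([0.0, 1.0], 1.0)]
p_u = dynamic_preference(history, now=1.0, rate=math.log(3))  # ≈ [0.25, 0.75]
```

The same routine would apply to the group consensus embedding in Section 5.1.3, with the group’s history in place of the user’s.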

5.1. Multiple Attention Group Representation Learning

In this section, we introduce the core module of our framework, the Multiple Attention Group Representation Learning module. It consists of three attention structures. (1) Fine-grained feature-based attentive event representation, which learns event representations from event features and the target user through an attention mechanism. (2) Member embedding aggregation, which estimates the group’s preference for an event through an aggregation function. (3) Group representation and group-attentive event representation, which obtains the final group embedding and the corresponding event embedding. This three-level hierarchical attention structure, combined with joint modeling of user- and group-level recommendation, is unique to our framework and specifically addresses the challenges in EBSNs.

5.1.1. Fine-Grained Feature-Based Attention for Event Representation

In EBSN scenarios, different users may have varying preferences for different event features. Therefore, when modeling event representations, simply averaging all features or summing them with fixed weights may fail to capture users’ personalized preferences adequately. To address this, we introduce a feature-based attention mechanism in this module to learn refined event representations, thereby better modeling users’ preference weights for different event features.

In this module, we learn event representations based on the different features and the target user. We compute a weighted sum of the feature embeddings, in which the weights represent the influence of each feature on the user’s preference for the event. Intuitively, a feature that appears more often in a user’s preference behavior should receive a larger weight in the event representation. We assume that event has four fine-grained features , with embedding vectors , , , and , respectively. An independent attention head is assigned to each feature as follows:

Then the influence weight of each feature is obtained through feature-level attention. Taking the organizer feature as an example, the formula is as follows:

where is a learnable weight matrix and is a learnable vector. The final event representation is then the attention-weighted sum of the four feature embeddings.
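Under explicit assumptions, the feature-level attention can be sketched in plain Python. The sketch scores each feature as h^T ReLU(W [u ; e_f]), where u is the target user embedding and e_f is a feature embedding. It normalizes the scores with a softmax and returns the weighted sum of the feature embeddings. The concatenated input, the ReLU, and the single shared W are assumptions. The paper assigns an independent head to each feature, which would replace the shared W with one matrix per feature.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Sequence[float]) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Sequence[float]) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def feature_attention(
    user: Vector, features: Dict[str, Vector], W: Matrix, h: Vector
) -> Tuple[Dict[str, float], Vector]:
    """Weight each feature embedding by its relevance to the target user, then sum."""
    names = list(features)
    scores = []
    for name in names:
        hidden = [max(0.0, v) for v in matvec(W, user + features[name])]  # ReLU(W [u ; e_f])
        scores.append(sum(a * b for a, b in zip(h, hidden)))  # h^T ReLU(...)
    weights = softmax(scores)
    dim = len(features[names[0]])
    event = [sum(w * features[n][d] for w, n in zip(weights, names)) for d in range(dim)]
    return dict(zip(names, weights)), event


# Hypothetical 2-d embeddings; W simply copies e_f, and h rewards its first coordinate.
user = [1.0, 0.0]
features = {"organizer": [1.0, 0.0], "venue": [0.0, 1.0], "week": [0.0, 0.0], "hour": [0.0, 0.0]}
W = [[0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
h = [1.0, 0.0]
alpha, e_v = feature_attention(user, features, W, h)
# organizer gets the largest weight: e / (e + 3) ≈ 0.475
```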

5.1.2. Member of Group Embedding Aggregation

After learning the feature-based attentive event representation, we introduce a group representation module that integrates the interests of group members to generate the final group embedding. Group recommendation differs from personalized recommendation. A group consists of multiple members who may show varying interest in the same event, so effectively aggregating members’ interests is a critical challenge. Inspired by [], we use a neural attention network that highlights the influence of group members on the target event when aggregating member embeddings. In this module, for each group , the embedding of each member is derived through attention-weighted computation with the target event. We then assign each member a weight measuring their influence on the target event. The final group embedding is the weighted sum of the members’ embeddings under these weights.

Specifically, our goal is to learn a member-level attention weight that quantifies the influence of member in group . This weight jointly considers the member’s personal interests and their social influence within the group. For instance, in social networks certain members (e.g., opinion leaders) exert greater influence on group event decisions, so their weights should be higher. Based on this, we define the group embedding representation as follows:

where is the aggregated dynamic preference representation of group , denotes the dynamic preference embedding of member , and denotes the number of members of group . We use the event embedding obtained from the “Fine-grained Feature Attention” module to compute the influence weight of member . The attention weight of each member is then:

where is a learnable weight matrix, is a learnable vector, and denotes the concatenation operation.
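The member-level attention can be sketched in the same hedged way. For each member j, the sketch scores the concatenation of the member’s dynamic preference embedding and the target event embedding as h^T ReLU(W [p_j ; e]). It then applies a softmax over the members and returns the weighted sum of member embeddings as the aggregated group embedding. The ReLU and the exact score form are assumptions in the spirit of the cited attention network, not the paper’s verified equation.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def softmax(scores: Sequence[float]) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def aggregate_members(
    members: Sequence[Vector], event: Vector, W: Matrix, h: Vector
) -> Tuple[Vector, Vector]:
    """Event-conditioned attention over members; returns (weights, aggregated embedding)."""
    scores = []
    for p in members:
        x = list(p) + list(event)  # concatenation [p_j ; e]
        hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]  # ReLU(W x)
        scores.append(sum(a * b for a, b in zip(h, hidden)))  # h^T ReLU(W x)
    beta = softmax(scores)
    dim = len(members[0])
    g_agg = [sum(b * p[d] for b, p in zip(beta, members)) for d in range(dim)]
    return beta, g_agg


# Hypothetical group of two members; W adds the member's and the event's first coordinates.
members = [[1.0, 0.0], [0.0, 1.0]]
event = [1.0, 0.0]
beta, g_agg = aggregate_members(members, event, W=[[1.0, 0.0, 1.0, 0.0]], h=[1.0])
# member 0 matches the event better: beta ≈ [0.731, 0.269]
```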

5.1.3. Group Representation and Group-Attention Event Representation

Besides the group embedding vector derived from member embedding aggregation, the group’s own preference embedding cannot be ignored. In some scenarios, a group’s event decision is influenced not only by the individual interests of its members but also by the group’s consensus preferences, particularly when members share a common purpose. For instance, a group formed by a reading enthusiast and a travel enthusiast might ultimately choose to watch a movie together. In such cases, relying solely on individual member preferences may fail to capture the group’s holistic intent. Thus, for group recommendation tasks, we integrate both the member-aggregated embedding and the group consensus preference embedding into the final group representation. The group consensus preference embedding, denoted as , can be learned from group–event historical interactions. Like the user dynamic preference embedding, it is calculated with a time-decay mechanism as follows:

where the sum runs over the historical interaction sequence of the group, each term is scaled by a time-decay weight, and each event embedding in the sequence, corresponding to an event the group interacted with, is obtained from the “Fine-grained Feature Attention” module. We then define the final group embedding as follows:

where the member-aggregated term is the group’s dynamic aggregation representation obtained in the previous subsection by aggregating the members’ embeddings.
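The consensus embedding and its fusion with the member-aggregated view can be sketched as follows. The exact decay function and fusion operator are not recoverable from the text here, so this sketch assumes exponential decay normalized to sum to 1, and element-wise addition of the two group views; `decay_rate`, the helper names, and the toy history are hypothetical.

```python
import math


def time_decay_weights(timestamps, now, decay_rate):
    """Assumed exponential decay: exp(-rate * age), normalized to sum to 1."""
    raw = [math.exp(-decay_rate * (now - t)) for t in timestamps]
    total = sum(raw)
    return [r / total for r in raw]


def consensus_embedding(event_embeddings, timestamps, now, decay_rate=0.1):
    """Time-decay-weighted sum of the embeddings of events the group attended."""
    weights = time_decay_weights(timestamps, now, decay_rate)
    dim = len(event_embeddings[0])
    return [sum(w * e[d] for w, e in zip(weights, event_embeddings)) for d in range(dim)]


def final_group_embedding(aggregated, consensus):
    """Assumed fusion: element-wise sum of the member-aggregated and consensus views."""
    return [a + c for a, c in zip(aggregated, consensus)]


# Toy history (hypothetical): two past events, the second one more recent.
history = [[1.0, 0.0], [0.0, 1.0]]
times = [0.0, 10.0]
consensus = consensus_embedding(history, times, now=10.0, decay_rate=0.1)
group = final_group_embedding([0.5, 0.5], consensus)
print(consensus, group)
```

Under this assumption the more recent event dominates the consensus embedding, which matches the intent of a time-decay mechanism; a concatenation or gated fusion would be equally plausible alternatives.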

After modeling both the aggregation of member interests and the group consensus preferences, we further generate a group-specific event representation to better characterize the group’s consensus interest in different events. To achieve this, we introduce a “Norm and Add” (Normalization and Weighted Summation) module that integrates the personalized event embeddings of group members and produces the final group event representation. Specifically, we first use the “Fine-grained Feature Attention” module to obtain an event embedding for each member. These embeddings capture how strongly each member prefers the various event features. However, since different members exert different levels of influence on group decisions, we further normalize these member-specific event representations and assign appropriate weights, so that the final group event representation reflects the collective preference trend of the group. The event representation for the group is thus defined as follows:

where the left-hand side is the event embedding for the group and each term in the sum is a feature embedding of the event (e.g., venue or organizer). The weight of each feature is computed as follows:

where the weight matrix is learnable and the final group representation serves as the query. After obtaining the final group representations and the corresponding event representations, we can predict group preferences.
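A minimal sketch of the “Norm and Add” step, under assumptions: each feature embedding is L2-normalized (our reading of “Norm”), scored against the group representation with a bilinear form through the learnable matrix `W`, turned into weights with a softmax, and summed (“Add”). The normalization choice, the bilinear score, the helper names, and the toy features are hypothetical illustrations, not the paper's exact equations.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (assumed 'Norm' step)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def bilinear(left, W, right):
    """Compute left^T W right for a matrix W stored as a list of rows."""
    return sum(left[i] * sum(W[i][j] * right[j] for j in range(len(right)))
               for i in range(len(left)))


def group_event_representation(feature_embs, group_emb, W):
    """Normalize features, score each against the group, softmax, then weighted sum ('Add')."""
    normed = [l2_normalize(f) for f in feature_embs]
    scores = [bilinear(group_emb, W, f) for f in normed]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(normed[0])
    event = [sum(w * f[d] for w, f in zip(weights, normed)) for d in range(dim)]
    return event, weights


# Toy feature embeddings (hypothetical) for one event.
features = {"venue": [3.0, 0.0], "organizer": [0.0, 2.0], "time": [1.0, 1.0]}
identity = [[1.0, 0.0], [0.0, 1.0]]
group_emb = [0.0, 1.0]  # a group whose representation points toward the organizer direction
event_vec, feat_weights = group_event_representation(list(features.values()), group_emb, identity)
print(dict(zip(features, feat_weights)))
```

Normalizing before scoring keeps a feature with a large raw norm (here, venue) from dominating the attention purely because of its scale.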

5.2. Interaction Learning with Neural Collaborative Filtering

We adopt Neural Collaborative Filtering (NCF) to model group–event and user–event interactions. NCF is a deep-learning-based approach that learns complex interaction relationships between users/groups and target events through nonlinear transformations in Multi-Layer Perceptrons (MLP), thereby enhancing recommendation accuracy. Specifically, for each group–event interaction pair, we first obtain the embeddings of the group and the event via the representation learning module; these embeddings can be acquired from a pre-trained embedding layer. The group and event embeddings are then fed into a pooling layer to further learn their interaction. The output embedding vectors of the pooling layer for users and groups are as follows:

where the two equations correspond to the two recommendation tasks: group recommendation and user recommendation.

After the pooling layer, we feed the interaction representation into fully connected layers to learn the complex relationships between users/groups and events and to compute the final prediction score. The primary role of the fully connected layers is to perform nonlinear transformations that enhance the model’s expressive capacity. Specifically, we construct independent fully connected networks for the target group and the target user and obtain the final hidden representation through layer-wise computation. The output of the l-th layer is defined as follows:

where the weight matrix of the l-th layer maps input features to the hidden space, and the bias vector of the l-th layer increases the model’s learning capacity. The ReLU activation function introduces nonlinearity, giving the model stronger expressive capability. After obtaining the hidden representation of the final layer, we compute the recommendation scores with a single-layer linear transformation. The predicted score of the target group for an event and the predicted score of the target user for an event are calculated as follows:

where the prediction layer for group recommendation and the prediction layer for user recommendation each have their own weight parameters.
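The pooling, fully connected, and prediction steps can be sketched end to end. The pooling operator is not specified in the visible text, so this sketch assumes the common NCF/AGREE-style form [target ⊙ event ; target ; event]; layer sizes, random initialization, and helper names are hypothetical. It keeps separate towers for groups and users, as described above.

```python
import random


def dense_relu(W, b, x):
    """One fully connected layer: ReLU(W x + b)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + bi) for row, bi in zip(W, b)]


def pooling(target_emb, event_emb):
    """Assumed pooling: [target * event (element-wise) ; target ; event]."""
    return [t * e for t, e in zip(target_emb, event_emb)] + target_emb + event_emb


def predict(target_emb, event_emb, layers, w_out):
    """Pooling -> stacked ReLU layers -> single linear prediction layer."""
    x = pooling(target_emb, event_emb)
    for W, b in layers:
        x = dense_relu(W, b, x)
    return sum(w * xi for w, xi in zip(w_out, x))


def init_layers(sizes, seed=0):
    """Randomly initialize (W, b) pairs for consecutive layer sizes."""
    rng = random.Random(seed)
    return [([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


dim = 4
group_layers = init_layers([3 * dim, 8, 4], seed=1)  # independent tower for groups
user_layers = init_layers([3 * dim, 8, 4], seed=2)   # independent tower for users
w_group, w_user = [0.5] * 4, [0.5] * 4               # separate prediction-layer weights
g, u, e = [0.1] * dim, [0.2] * dim, [0.3] * dim      # toy embeddings
score_group = predict(g, e, group_layers, w_group)
score_user = predict(u, e, user_layers, w_user)
print(score_group, score_user)
```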

5.3. Recommendation and Optimization

We adopt the Regression-based Pairwise Loss (RPL) [] as the optimization objective to learn model parameters, jointly modeling group–event and user–event interactions. The core idea of this loss is to optimize the preference ranking of users or groups across events through pairwise comparisons, enabling the model to better capture their true interests. To learn group preferences effectively, we define the group–event loss function as follows:

where the sum runs over the group–event training triplets of group g. Each triplet indicates that group g has interacted with event j but not with a sampled non-interacted event, and the two predicted scores are the model’s preference scores of group g for event j and for the non-interacted event, respectively. The objective is to make the predicted score of the interacted event j higher than that of the non-interacted event. Concretely, the loss minimizes the squared deviation of the score difference from 1, which enforces a margin of approximately 1 between the two predicted scores and enhances the model’s discriminative capability.
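The loss just described can be written directly in code: for each triplet it accumulates (score_pos - score_neg - 1)^2. This is a minimal sketch of that formula on plain lists of predicted scores; the function name and the toy scores are hypothetical, and the combined objective is assumed to be the unweighted sum of the group and user terms.

```python
def rpl_loss(pos_scores, neg_scores):
    """Regression-based pairwise loss: sum over triplets of (y_pos - y_neg - 1)^2."""
    if len(pos_scores) != len(neg_scores):
        raise ValueError("each positive score needs a matching negative score")
    return sum((p - n - 1.0) ** 2 for p, n in zip(pos_scores, neg_scores))


# Hypothetical predicted scores for single (group, positive event, negative event) triplets.
print(rpl_loss([2.0], [1.0]))  # margin exactly 1 -> 0.0
print(rpl_loss([1.0], [1.0]))  # no margin -> 1.0
print(rpl_loss([3.0], [1.0]))  # margin 2 overshoots -> also 1.0

# Assumed joint objective: group-event term plus user-event term.
total = rpl_loss([2.0, 1.5], [1.0, 1.0]) + rpl_loss([0.8], [0.1])
```

Note the regression character of the loss: a margin larger than 1 is penalized just like a margin smaller than 1, so the scores are pulled toward a fixed gap rather than pushed apart indefinitely.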

Similarly, for the user–event recommendation task, we apply the same optimization strategy, defined as follows:

By maintaining a margin of approximately 1 between the scores of interacted and non-interacted events, the model effectively learns the user’s true preferences. To optimize the model, we employ Stochastic Gradient Descent (SGD) to minimize the combined group–event and user–event losses.

In recommendation tasks, another widely used pairwise learning method is Bayesian Personalized Ranking (BPR) []. However, BPR has a potential issue in multi-layer models: its loss can be reduced simply by scaling up the hidden weights, which leads to a trivial solution and requires strict regularization to constrain the parameters. In contrast, RPL directly enforces a fixed margin between positive and negative samples, which prevents such trivial solutions and enables stable optimization without additional regularization. This property makes the loss particularly suitable for integration with our fine-grained attention mechanism, as it provides clearer and more robust optimization signals.
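The scaling argument can be checked numerically with a toy sketch. Multiplying all scores by a factor stands in for scaling up the prediction-layer weights (with a linear prediction layer and no bias, doing so multiplies every score by the same factor). The scores below are hypothetical; the point is only that BPR keeps decreasing as the scale grows, whereas RPL has its minimum near a margin of 1 and grows again beyond it.

```python
import math


def bpr_loss(pos, neg):
    """BPR: sum of -log(sigmoid(y_pos - y_neg)), written as log(1 + exp(-diff))."""
    return sum(math.log1p(math.exp(-(p - n))) for p, n in zip(pos, neg))


def rpl_loss(pos, neg):
    """RPL: sum of (y_pos - y_neg - 1)^2."""
    return sum((p - n - 1.0) ** 2 for p, n in zip(pos, neg))


pos, neg = [0.6, 0.9], [0.2, 0.4]  # toy scores; positives already ranked higher
scales = [1.0, 2.0, 10.0]          # stand-in for scaling up the network's weights
bpr = [bpr_loss([s * p for p in pos], [s * n for n in neg]) for s in scales]
rpl = [rpl_loss([s * p for p in pos], [s * n for n in neg]) for s in scales]
for s, b, r in zip(scales, bpr, rpl):
    print(f"scale={s:>4}: BPR={b:.4f}  RPL={r:.4f}")
```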

6. Experiments

6.1. Data Preparation

Experiments are conducted on three real-world datasets that are popular in EBSN studies: the Meetup dataset [], the Douban-Event dataset [], and the Yelp dataset (https://www.kaggle.com/datasets/yelp-dataset/yelp-dataset, accessed on 23 November 2025). Their basic statistics are shown in Table 4. For these datasets, we collect event data from 1 January 2015 to 31 December 2016, including the participants, start time, venue, and organizer of each event. Since these datasets lack group information, we generate synthetic groups under the following condition: users who participated in the same event, at the same time and location, more than three times. We define the group recommendation task as recommending events to ephemeral groups of users. Notably, we generate groups of different sizes (ranging from 2 to 6) to validate the effectiveness of the method across group sizes.
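One way to implement the group-generation condition is to count, for every candidate set of users, how many events they attended together and keep the sets above the threshold. This is a hypothetical sketch, not the authors' preprocessing code: it assumes each event key already encodes the event, start time, and venue, and it enumerates user combinations per event, which grows combinatorially and would need pruning on large events.

```python
from collections import Counter
from itertools import combinations


def synthetic_groups(event_attendees, group_size, threshold=3):
    """Return user tuples of `group_size` that co-attended more than `threshold` events.

    `event_attendees` maps an event key, assumed here to encode (event, start time, venue),
    to the set of users who attended it.
    """
    counts = Counter()
    for attendees in event_attendees.values():
        for combo in combinations(sorted(attendees), group_size):
            counts[combo] += 1
    return sorted(combo for combo, c in counts.items() if c > threshold)


# Hypothetical attendance log: users a and b attend four events together, c only two of them.
log = {
    ("e1", "2015-03-01 19:00", "v1"): {"a", "b", "c"},
    ("e2", "2015-04-12 14:00", "v2"): {"a", "b", "c"},
    ("e3", "2015-06-20 10:00", "v1"): {"a", "b"},
    ("e4", "2015-09-05 18:30", "v3"): {"a", "b", "d"},
}
print(synthetic_groups(log, group_size=2))  # [('a', 'b')]
```

Calling the function with `group_size` from 2 to 6 yields candidate groups of each size used in the experiments.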

Table 4.

Basic statistics of Douban-Event and Meetup Datasets. Note: # denotes number of.

6.2. Experimental Methods

6.2.1. Evaluation Methods

We employ HR (Hit Ratio) and NDCG (Normalized Discounted Cumulative Gain) to evaluate the Top-N (N = 5, 10, 15, 20) recommendation performance of our model and the baselines. HR measures whether the test event is ranked within the Top-N list. The formula for HR is as follows:

NDCG evaluates the ranking quality of the recommended events by considering their positions in the list. NDCG is the normalized version of DCG, which discounts the contribution of lower-ranked events (later positions yield smaller gains). The formula for DCG is as follows:

where the graded relevance of the event at position i of the recommendation list equals 1 if that event appears in the test set, and 0 otherwise.
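The two metrics can be sketched with binary relevance as defined above. This is a minimal illustration, not the evaluation script used in the experiments: it assumes HR counts a hit if any held-out event appears in the top N, uses the gain 1 / log2(i + 1) at 1-based position i, and normalizes by the ideal DCG. The list and event IDs are hypothetical.

```python
import math


def hit_ratio_at_n(ranked, test_items, n):
    """1.0 if any held-out event appears in the top-n list, else 0.0."""
    return 1.0 if any(item in test_items for item in ranked[:n]) else 0.0


def dcg_at_n(ranked, test_items, n):
    """DCG with binary relevance: position i (1-based) contributes 1 / log2(i + 1) on a hit."""
    return sum(1.0 / math.log2(i + 1)
               for i, item in enumerate(ranked[:n], start=1) if item in test_items)


def ndcg_at_n(ranked, test_items, n):
    """DCG divided by the ideal DCG (all held-out events ranked first)."""
    ideal_hits = min(len(test_items), n)
    idcg = sum(1.0 / math.log2(i + 1) for i in range(1, ideal_hits + 1))
    return dcg_at_n(ranked, test_items, n) / idcg if idcg > 0 else 0.0


ranked = ["e3", "e7", "e1", "e9", "e2"]  # hypothetical top-5 list
test_items = {"e1"}                      # the held-out event
print(hit_ratio_at_n(ranked, test_items, 5), hit_ratio_at_n(ranked, test_items, 2))  # 1.0 0.0
print(ndcg_at_n(ranked, test_items, 5))  # 0.5, since the hit is at position 3: 1 / log2(4)
```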

For the dataset split, we employ the time-aware validation method [] to evaluate MAGER and the baselines. Given a user profile, i.e., the collection of the user’s participation records, we rank the records by their timestamps. The earliest 70% of the records are used as the training set, and the most recent 30% as the test set.
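The per-user time-aware split amounts to a sort followed by a cut. This short sketch assumes records are (event_id, timestamp) pairs; the function name and toy records are hypothetical. It uses integer arithmetic for the cut point so that floating-point rounding never shifts a record across the boundary.

```python
def time_aware_split(records, train_percent=70):
    """Sort one user's (event_id, timestamp) records by time; earliest share -> train, rest -> test."""
    ordered = sorted(records, key=lambda record: record[1])
    cut = len(ordered) * train_percent // 100  # integer arithmetic avoids float rounding surprises
    return ordered[:cut], ordered[cut:]


# Hypothetical participation records (event id, Unix timestamp), deliberately unsorted.
records = [("e%d" % i, ts) for i, ts in enumerate([50, 10, 90, 30, 70, 20, 100, 60, 40, 80])]
train, test = time_aware_split(records)
print(len(train), len(test))  # 7 3
```

Every test record is later than every training record, which mirrors the deployment setting where events must be recommended before they happen.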

6.2.2. Baselines

To justify the effectiveness of our model, we compare our model MAGER with the following state-of-the-art baselines:

STARec [] is a recent time-aware model that distinguishes between users’ social preferences and individual interests, explicitly modeling the inconsistency between social preferences and actual decisions.

CVTM [] is a topic-model-based group recommendation model that captures the correlation between content information and venues.

GBGCN [] addresses the group-buying recommendation problem in social e-commerce and constructs directed heterogeneous graphs that integrate users’ initiation and participation behaviors with social network data.

AGREE [] introduces a model that dynamically adapts group member weights through an attention mechanism to address preference aggregation in group recommendation, integrating NCF to capture group–item interactions.

HyperGroup [] uses hierarchical hyperedge embedding and graph neural networks to enhance group representation learning through user–user interactions and hypergraph-based group similarity.

ConsRec [] addresses consensus learning through multi-view (member-level aggregation, item-level tastes, and group-level preferences) fusion and employs a hypergraph neural network for member interest aggregation.

GroupIM [] proposes a novel framework that addresses group interaction sparsity through data-driven regularization strategies, leveraging user preference covariance and contextual relevance.

GroupAV [] introduces a multi-view attentive variational network for group recommendation that employs Variational AutoEncoder (VAE) and density-based preference modeling to address user interest drift.

DHMAE [] integrates a disentangled hypergraph neural network with a graph masked autoencoder to eliminate reliance on data augmentation and address noise by reconstructing masked node/hyperedge features.

6.3. Experimental Result and Analysis

6.3.1. Effectiveness of MAGER

We compare the performance of MAGER with the baselines in top-N user/group recommendation on the Douban-Event, Meetup, and Yelp datasets, as shown in Table 5, Table 6, Table 7, Table 8 and Table 9, reporting HR@N and NDCG@N. To assess the significance of our improvements, we conduct t-tests; Table 10 reports the p-values for the differences in HR and NDCG between MAGER and the baselines on the Shanghai and Phoenix datasets. MAGER’s improvements are statistically significant (p < 0.01) over all baselines for group recommendation on both datasets.

Table 5.

Recommendation performance of MAGER and baselines for Group/User Recommendation on HR and NDCG metrics on Douban-Event Shanghai dataset (bold: best; underline: runner-up).

Table 6.

Recommendation performance of MAGER and baselines for Group/User Recommendation on HR and NDCG metrics on Douban-Event Beijing dataset (bold: best; underline: runner-up).

Table 7.

Recommendation performance of MAGER and baselines for Group/User Recommendation on HR and NDCG metrics on Meetup Phoenix dataset (bold: best; underline: runner-up).

Table 8.

Recommendation performance of MAGER and baselines for Group/User Recommendation on HR and NDCG metrics on Meetup Chicago dataset (bold: best; underline: runner-up).

Table 9.

Recommendation performance of MAGER and baselines for Group/User Recommendation on HR and NDCG metrics on Yelp dataset (bold: best; underline: runner-up).

Table 10.

p-values of the t-tests (top@5 to top@20) comparing the performance of MAGER with all baselines.

Recommendation on Douban-Event. From the results in Table 5 and Table 6, we observe the following: (1) In the user recommendation task, MAGER consistently and significantly outperforms all baselines; for example, on Shanghai it improves HR@5 by 13.07% and NDCG@5 by 18.55% over STARec, and on Beijing it improves HR@5 by 12.85% over STARec and NDCG@5 by 17.97% over GroupAV. (2) In the group recommendation task, MAGER also consistently and significantly outperforms all baselines; for example, on Shanghai it improves HR@5 by 19.02% over GroupAV and NDCG@5 by 36.12% over ConsRec, and on Beijing it improves HR@5 by 9.71% over GroupAV and NDCG@5 by 20.35% over ConsRec. (3) AGREE and ConsRec are both aggregation-based methods, but ConsRec performs significantly better than AGREE. A possible reason is the denser group–event interactions in Douban-Event (Table 4), which enable ConsRec to achieve better recommendation effectiveness. (4) GroupIM is a contrastive learning-based method that generates contrastive views with random strategies, which can impair its performance. CVTM and GBGCN do not use a member aggregation strategy, which limits their effectiveness in group recommendation. (5) GroupAV achieves the best results among the baselines for group recommendation, possibly because it learns group preferences from multiple views. MAGER nevertheless outperforms GroupAV, because it not only exploits the multi-view preferences of the group but also learns member and group preferences for fine-grained features. This is especially helpful in EBSN scenarios, where using only event ID embeddings substantially reduces recommendation effectiveness. (6) STARec generally performs best among the baselines in user recommendation. The main reason is that STARec models rich contextual information, such as location, time, and social relationships, which is highly relevant in EBSNs. Another important reason is that STARec is time-aware, which fits the EBSN scenario and allows it to capture dynamic user/group preferences.

Recommendation on Meetup. From the results in Table 7 and Table 8, we observe the following: (1) In the user recommendation task, MAGER consistently and significantly outperforms all baselines; for example, on Phoenix it improves HR@5 by 4.17% and NDCG@5 by 12.77% over ConsRec, and on Chicago it improves HR@5 by 4.92% over ConsRec and NDCG@5 by 8.60% over DHMAE. (2) In the group recommendation task, MAGER also consistently and significantly outperforms all baselines; for example, it improves HR@5 by 5.97% and NDCG@5 by 6.68% over GroupAV on Phoenix, and HR@5 by 10.89% and NDCG@5 by 4.79% over GroupAV on Chicago. (3) CVTM performs better on Meetup than on Douban-Event, possibly because Meetup contains more event text information that can be mined. (4) On both datasets, ConsRec outperforms DHMAE in user recommendation but underperforms it in group recommendation, suggesting that the hypergraph-based method (DHMAE) is more effective than the aggregation-based method (ConsRec) for group recommendation.

Recommendation on Yelp. From the results in Table 9, we observe the following: (1) In the user recommendation task, MAGER consistently and significantly outperforms all baselines, improving HR@5 by 3.63% and NDCG@5 by 5.63% over ConsRec. (2) In the group recommendation task, MAGER likewise consistently and significantly outperforms all baselines, improving HR@5 by 2.05% and NDCG@5 by 2.51% over ConsRec. (3) On Yelp, ConsRec outperforms all other baselines, probably because it focuses on preference consistency among group members: the businesses in Yelp mainly consist of shopping centers, restaurants, and hotels, which align well with the consistent member preferences that ConsRec models. (4) AGREE performs considerably better on Yelp than on Douban-Event and Meetup. A possible reason is that only venue and time features are available in Yelp, and the absence of further features (e.g., organizer) greatly diminishes the advantage of MAGER. Nevertheless, the fine-grained feature attention method still outperforms simple concatenation.

6.3.2. Contribution of Different Components

We conduct two types of ablation studies. The first compares the contributions of different event features to the recommendation performance of MAGER, as well as different feature fusion methods. The second compares the influence of the different attention components on MAGER.

(1) We first employ the Leave-One-Feature-Out method [] to evaluate the impact of different event features on MAGER’s recommendation performance. This method removes one feature at a time and observes the resulting change in recommendation performance to quantify that feature’s importance. The experimental results in Table 11, Table 12 and Table 13 show that the organizer feature has the most significant influence on recommendation, indicating that the organizer plays a critical role in modeling group/user preferences. This is likely because users tend to follow events hosted by specific organizers (e.g., familiar communities, institutions, or individuals), which strongly influences their participation decisions.
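The Leave-One-Feature-Out procedure reduces to a loop that re-evaluates the model without each feature and records the metric drop. In this sketch, `evaluate` is a hypothetical callable standing in for a full train-and-test run; the `toy_evaluate` function and its gains are invented purely to make the example runnable and are not measurements from this paper.

```python
def leave_one_feature_out(features, evaluate):
    """Metric drop when each feature is removed in turn (larger drop = more important feature).

    `evaluate` is a hypothetical callable that retrains/evaluates the model on a feature subset
    and returns a metric such as HR@10.
    """
    full_score = evaluate(list(features))
    return {f: full_score - evaluate([g for g in features if g != f]) for f in features}


# Purely illustrative stand-in for a training run; these numbers are invented, not measurements.
toy_gain = {"venue": 0.02, "time": 0.01, "organizer": 0.05}


def toy_evaluate(subset):
    return 0.30 + sum(toy_gain[f] for f in subset)


drops = leave_one_feature_out(["venue", "time", "organizer"], toy_evaluate)
ranking = sorted(drops, key=drops.get, reverse=True)
print(ranking)  # ['organizer', 'venue', 'time'] for these toy gains
```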

Table 11.

The performance of different MAGER variants on Douban-Event dataset (bold: best; underline: runner-up).

Table 12.

The performance of different MAGER variants on Meetup dataset (bold: best; underline: runner-up).

Table 13.

The performance of different MAGER variants on Yelp dataset (bold: best; underline: runner-up).

In addition, we design the AverageFeatureEmbedding, ConcatenateFeatureEmbedding, and NoFeatures variants to evaluate the effectiveness of the proposed fine-grained feature attention method. Among these variants, Concat.FeatureEmbedding performs best, because concatenation retains complete feature information and works well in nonlinear models such as NCF. The NoFeatures variant uses only event ID embeddings and performs worst, which shows the importance of fine-grained features. To verify the importance of the dynamic update mechanism, we design the MAGER-Static variant, which uses static group and user embeddings. The results demonstrate that the dynamic update mechanism improves MAGER’s performance.

(2) MAGER has three key attention components: the Fine-grained Feature Attention for event representation (FFA), the Member Aggregation Attention for group preference representation (MAA), and the Group Preference Attention for event representation (GPA). Table 14 shows that the variant without GPA (w/o GPA) performs best among the ablated variants, indicating that GPA has the least impact on MAGER. For group recommendation, MAGER must generate both group representations and the corresponding event representations; because the event representation depends on the target group and its members, GPA is applied when generating event representations. In w/o GPA, we generate event representations with the simple ConcatenateFeatureEmbedding method, while user recommendation still uses FFA to generate event representations. Accordingly, removing GPA has a relatively small impact overall and leaves user recommendation unchanged. In contrast, w/o MAA performs worst, which indicates that attention-based dynamic aggregation of group members’ preferences is the most important component of MAGER. Note that w/o MAA aggregates group members’ preferences by simple averaging. Finally, we design a NoMultiAttention variant, denoted Ave.Feature and Ave.Member, in which all three attention modules of MAGER are replaced by simple averaging: averaging feature embeddings, averaging member preference embeddings, and averaging feature embeddings for group preference. Ave.Feature and Ave.Member performs much worse than MAGER, and even worse than removing any single attention component, which confirms the effectiveness of our multi-attention structure.

Table 14.

The influence of attention component for MAGER (bold: best; underline: runner-up).

6.3.3. Sensitivity to Data Sparsity

To investigate MAGER’s performance under data sparsity, we conduct a comparative experiment on sparse training sets derived from Douban-Event Shanghai and Meetup Phoenix. The sparse training sets are obtained by randomly removing 5% to 20% of the original training data in increments of 5%, while keeping the test sets unchanged []. Notably, if all of a user’s training interactions are removed, that user’s test data are removed as well. We report only the results with 20% less training data. As shown in Table 15, the performance of all methods degrades as the training sets become sparser. Nevertheless, MAGER consistently achieves the best performance in both group and user recommendation, which confirms its effectiveness under sparse data.
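The sparsification protocol can be sketched as random removal of training pairs followed by a consistency filter on the test set. This is a hypothetical sketch: interactions are assumed to be (user, event) pairs, and rounding the number of removed records to the nearest integer is our own choice. The seeded `random.Random` instance makes each sparse variant reproducible.

```python
import random


def sparsify(train, test, drop_ratio, seed=0):
    """Randomly remove a fraction of (user, event) training pairs.

    Test pairs are kept unchanged, except for users whose training data were removed entirely.
    """
    rng = random.Random(seed)
    n_drop = round(len(train) * drop_ratio)
    dropped = set(rng.sample(range(len(train)), n_drop))
    kept_train = [pair for i, pair in enumerate(train) if i not in dropped]
    remaining_users = {user for user, _ in kept_train}
    kept_test = [pair for pair in test if pair[0] in remaining_users]
    return kept_train, kept_test


# Hypothetical interactions; user "c" has a single training record and may lose it.
train = [("a", "e1"), ("a", "e2"), ("a", "e3"), ("b", "e1"), ("b", "e4"),
         ("b", "e5"), ("c", "e2"), ("a", "e6"), ("b", "e6"), ("a", "e7")]
test = [("a", "e8"), ("b", "e9"), ("c", "e10")]
for ratio in (0.05, 0.10, 0.15, 0.20):
    kept_train, kept_test = sparsify(train, test, ratio, seed=42)
    print(ratio, len(kept_train), len(kept_test))
```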

Table 15.

Sensitivity to data sparsity of MAGER (for 20% less training data).

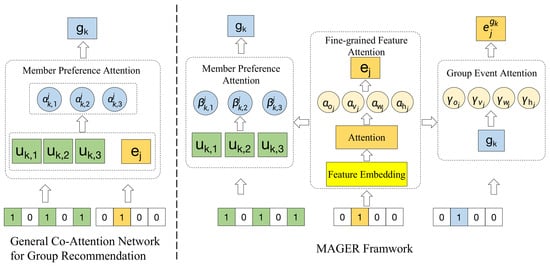

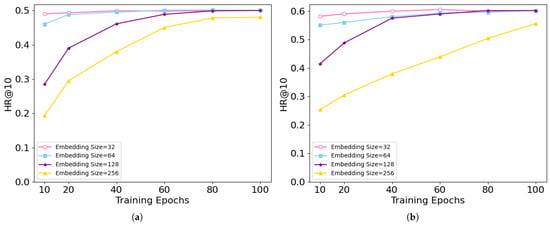

6.3.4. Influence of Different Embedding Sizes

Figure 6 presents the impact of embedding size on MAGER’s group recommendation performance, measured by HR@10, on the Douban-Event Shanghai and Meetup Phoenix datasets. An embedding size of 128 yields the most stable convergence and the highest final performance. In contrast, embedding sizes of 32 and 64 converge relatively quickly at first but improve little in later training stages, ultimately approaching, but not surpassing, the 128 setting. This suggests that while smaller embeddings let the proposed attention-based neural collaborative filtering quickly capture group-level participation preferences, they lack sufficient representational capacity for finer-grained preference modeling. Notably, when the embedding size is increased to 256, performance during training remains significantly lower than with 128. This degradation indicates that excessively large embeddings introduce redundancy and noise, impairing both convergence efficiency and preference representation quality and leading to suboptimal recommendations. These findings highlight the importance of carefully tuning the embedding size to balance model expressiveness and generalization ability.

Figure 6.

The performance of MAGER with different embedding sizes on Douban-Event Shanghai and Meetup Phoenix datasets for group recommendation task. (a) Douban-Event Shanghai; (b) Meetup Phoenix.

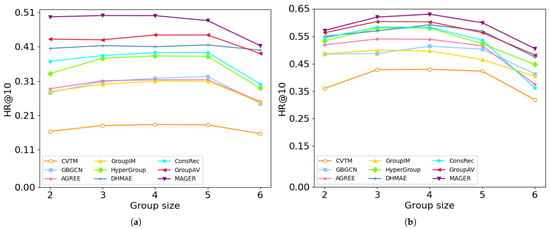

6.3.5. Influence of Group Size

Figure 7 illustrates the performance of the group recommendation methods under different group sizes on the Douban-Event and Meetup datasets. The results show minimal differences in recommendation performance across methods and group sizes, for the following possible reasons: (1) The group formation method plays a crucial role. In our experimental data, groups were created by selecting users with similar interests, leading to strong preference homogeneity among group members. This keeps collective preferences stable regardless of group size and thus minimizes performance fluctuations for each method. (2) The relatively small group sizes used in the experiments also contribute to this result, since small groups limit the effect of group size on the recommendation models. Significantly larger groups (for example, more than 10 people) might introduce greater diversity in group preferences, which could widen the performance differences between methods; a larger group is likely to experience more conflicts of interest among its members, making collective preference prediction more complex and affecting recommendation quality. (3) The trends in Figure 7 show that for HR@10, almost all methods decline slightly when the group size is 6. One possible reason is that HR measures the proportion of hits in the recommendation list; as the group size increases, individual member interests become more diverse, making it harder for the list to satisfy all members simultaneously. For instance, with a group size of 2 the list only needs to cover the interests of two members, whereas with a group size of 6 it must satisfy six members at once, leading to a lower hit rate.

Figure 7.

The performance of MAGER for different group sizes for group recommendation task. (a) Douban-Event Shanghai; (b) Meetup Phoenix.

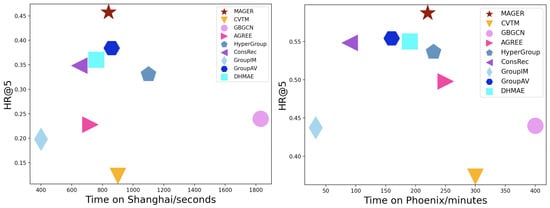

6.3.6. Efficiency Study

We evaluate the efficiency of MAGER by comparing its total running time (training plus testing) with that of all baselines on two datasets. Figure 8 shows HR@5 against running time (seconds/minutes). All experiments are conducted on Nvidia Tesla V100 GPUs with 32 GB of memory. MAGER runs relatively efficiently on both datasets while still achieving the best recommendation accuracy among all methods. Since Shanghai and Phoenix differ in size, this indicates that MAGER remains efficient across datasets of different scales.

Figure 8.

Efficiency study.

7. Discussion

In this work, we propose MAGER, a group event recommendation framework based on multiple attention mechanisms, which effectively captures user and group preferences at the fine-grained feature level through a heterogeneous attention structure. Experiments on three real-world datasets demonstrate that MAGER significantly outperforms existing methods in both group and user recommendation tasks, validating the importance of feature-level attention and the heterogeneous attention structure in modeling dynamic group preferences.

7.1. Theoretical and Practical Implications

This work demonstrates that the Fine-grained Feature Attention (FFA) module plays a crucial role in capturing user and group preferences in EBSNs. A key theoretical insight from our empirical analysis is that, among all event features, the organizer exerts the most dominant influence on both user and group behaviors. This finding suggests that users rely heavily on organizer credibility when selecting events, which substantially shapes group preferences. Therefore, future EBSN recommendation models should avoid treating all features uniformly and should instead explicitly differentiate the contributions of individual features, especially organizer-related signals, when modeling user and group decisions. This insight deepens our theoretical understanding of how fine-grained features affect group preferences in EBSN scenarios. From a practical perspective, MAGER performs robustly under sparse interactions, which are common on real-world platforms such as Douban-Event and Meetup, where new events emerge frequently and user interaction histories are limited. By accurately learning feature-level preferences even when user histories are short, MAGER improves recommendation quality in dynamic, data-sparse settings. This highlights the practical value of incorporating feature-aware attention mechanisms in EBSN recommenders, particularly on platforms with fast-evolving event life cycles. Overall, this study provides both theoretical insights into feature-specific preference modeling and practical evidence of adaptability to sparse and dynamic social settings.

7.2. Limitations

Despite its advantages, MAGER has several limitations. First, MAGER does not explicitly capture latent correlations among different features, which may limit the richness of event representations. Second, our experiments are mainly conducted on two structurally similar EBSNs (Douban-Event and Meetup), so further validation is needed on more heterogeneous platforms. Third, although the hierarchical attention design improves performance, its computational cost increases with the number of event features, which may limit scalability in large-scale applications. More importantly, the use of synthetic groups is a key limitation. In this study, groups are generated from users who have participated in the same event more than three times. As discussed in Section 6.3.5, this construction leads to strong preference homogeneity among group members, which minimizes performance variation across group sizes and may mask the true challenges of modeling dynamic group preferences. Future work should therefore explore more realistic or dynamic group formation mechanisms to better reflect real-world group behaviors and to evaluate more rigorously the model’s capability to handle preference diversity.

7.3. Future Work

Future work could explore more diverse group construction methods, such as forming groups based on social or geographical criteria with minimal overlap in historical activities, to evaluate the model’s robustness under preference conflicts and low-consistency conditions. Additionally, introducing a cross-feature attention mechanism to explicitly model correlations between features, e.g., organizer and venue, and incorporating this information into NCF could further enrich event representations. Finally, applying MAGER to a wider range of heterogeneous EBSN platforms and integrating multi-modal event features would help validate its generalizability and practical applicability.

Author Contributions

Conceptualization, X.H. and X.M.; methodology, X.H.; software, X.H.; validation, X.H., X.M., and Y.Z.; formal analysis, X.H. and X.M.; investigation, X.H.; resources, X.M.; writing—original draft preparation, X.H.; writing—review and editing, X.H. and X.M.; supervision, X.M. and Y.Z.; project administration, X.H.; funding acquisition, X.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by the authors.

Data Availability Statement

The datasets, models, and code used in this research are available upon request from the corresponding author, subject to reasonable conditions.

Acknowledgments

This study would not have been possible without the encouragement and ongoing support of Beijing University of Posts and Telecommunications.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lan, T.; Guo, L.; Li, X.; Chen, G. Research on the Prediction System of Event Attendance in an Event-Based Social Network. Wirel. Commun. Mob. Comput. 2022, 2022, 1701345.

- Manikandan, G.; Sam, R.S.; Gilbert, S.F.; Srikanth, K. Event-Based Social Networking System With Recommendation Engine. Int. J. Intell. Inf. Technol. (IJIIT) 2024, 20, 1–16.

- Zhang, A.X.; Bhardwaj, A.; Karger, D. Confer: A conference recommendation and meetup tool. In Proceedings of the 19th ACM Conference on Computer Supported Cooperative Work and Social Computing Companion, San Francisco, CA, USA, 27 February–2 March 2016; pp. 118–121.

- Müngen, A.A.; Kaya, M. A novel method for event recommendation in meetup. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Sydney, Australia, 31 July–3 August 2017; pp. 959–965.

- Wang, Z.; Zhang, Y.; Li, Y.; Wang, Q.; Xia, F. Exploiting social influence for context-aware event recommendation in event-based social networks. In Proceedings of the IEEE INFOCOM 2017—IEEE Conference on Computer Communications, Atlanta, GA, USA, 1–4 May 2017; pp. 1–9.

- Du, Y.; Meng, X.; Zhang, Y.; Lv, P. GERF: A Group Event Recommendation Framework Based on Learning-to-Rank. IEEE Trans. Knowl. Data Eng. 2020, 32, 674–687.

- Pham, T.A.N.; Li, X.; Cong, G.; Zhang, Z. A general graph-based model for recommendation in event-based social networks. In Proceedings of the 2015 IEEE 31st International Conference on Data Engineering, Seoul, Republic of Korea, 13–17 April 2015; pp. 567–578.

- Liao, Y.; Lam, W.; Bing, L.; Shen, X. Joint modeling of participant influence and latent topics for recommendation in event-based social networks. ACM Trans. Inf. Syst. (TOIS) 2018, 36, 1–31.

- Wang, Y.; Tang, J. Event2Vec: Learning event representations using spatial-temporal information for recommendation. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Macau, China, 14–17 April 2019; pp. 314–326.

- Macedo, A.Q.; Marinho, L.B.; Santos, R.L. Context-Aware Event Recommendation in Event-based Social Networks. In Proceedings of the ACM RecSys, Vienna, Austria, 16–20 September 2015; pp. 123–130.

- Du, Y.; Meng, X.; Zhang, Y. CVTM: A Content-Venue-Aware Topic Model for Group Event Recommendation. IEEE Trans. Knowl. Data Eng. 2020, 32, 1290–1303.

- Han, X.; Meng, X.; Zhang, Y. Exploiting multiple influence pattern of event organizer for event recommendation. Inf. Process. Manag. 2025, 62, 103966.

- Lai, Y.; Zhang, Y.; Meng, X.; Du, Y. Preference and Constraint Factor Model for Event Recommendation. IEEE Trans. Knowl. Data Eng. 2022, 34, 4982–4993.

- Cao, D.; He, X.; Miao, L.; An, Y.; Yang, C.; Hong, R. Attentive group recommendation. In Proceedings of the ACM SIGIR, Ann Arbor, MI, USA, 8–12 July 2018; pp. 645–654.

- Guo, L.; Yin, H.; Wang, Q.; Cui, B.; Huang, Z.; Cui, L. Group Recommendation with Latent Voting Mechanism. In Proceedings of the 2020 IEEE 36th International Conference on Data Engineering (ICDE), Dallas, TX, USA, 20–24 April 2020; pp. 121–132.

- He, Z.; Chow, C.Y.; Zhang, J.D. GAME: Learning Graphical and Attentive Multi-view Embeddings for Occasional Group Recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’20), Virtual, 25–30 July 2020; pp. 649–658.

- Wu, X.; Xiong, Y.; Zhang, Y.; Jiao, Y.; Zhang, J.; Zhu, Y.; Yu, P.S. ConsRec: Learning consensus behind interactions for group recommendation. In Proceedings of the ACM Web Conference 2023 (WWW), Austin, TX, USA, 30 April–4 May 2023; pp. 240–250.

- Zhang, S.; Meng, X.; Zhang, Y. Variational Type Graph Autoencoder for Denoising on Event Recommendation. ACM Trans. Inf. Syst. 2025, 43.

- Liu, C.Y.; Zhou, C.; Wu, J.; Xie, H.; Hu, Y.; Guo, L. CPMF: A collective pairwise matrix factorization model for upcoming event recommendation. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1532–1539.

- Liu, F.; Cheng, Z.; Zhu, L.; Gao, Z.; Nie, L. Interest-aware message-passing GCN for recommendation. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 1296–1305.

- Mo, Y.; Li, B.; Wang, B.; Yang, L.T.; Xu, M. Event recommendation in social networks based on reverse random walk and participant scale control. Future Gener. Comput. Syst. 2018, 79, 383–395.

- Liu, D.; He, M.; Luo, J.; Lin, J.; Wang, M.; Zhang, X.; Pan, W.; Ming, Z. User-Event Graph Embedding Learning for Context-Aware Recommendation. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 14–18 August 2022; pp. 1051–1059.

- Jiang, X.; Sun, H.; Zhang, B.; He, L.; Jia, X. A novel meta-graph-based attention model for event recommendation. Neural Comput. Appl. 2022, 34, 14659–14682.

- Sun, B.; Wei, X.; Cui, J.; Wu, Y. Social activity matching with graph neural network in event-based social networks. Int. J. Mach. Learn. Cybern. 2023, 14, 1989–2005.

- Liang, Y. FAER: Fairness-Aware Event-Participant Recommendation in Event Based Social Networks. IEEE Trans. Big Data 2024, 10, 655–668.

- TrinhHuy, T.; Trinh, T.; NguyenViet, H.; Emara, T.Z.; VuongThi, N. Event scale prediction in event-based social networks. In Proceedings of the International Conference on Industrial Networks and Intelligent Systems, Da Nang, Vietnam, 20–21 February 2025; pp. 29–41.

- Li, R.; Meng, X.; Zhang, Y. Group-Aware Dynamic Graph Representation Learning for Next POI Recommendation. IEEE Trans. Knowl. Data Eng. 2025, 37, 2614–2625.

- Liang, R.; Zhang, Q.; Wang, J. Hierarchical fuzzy graph attention network for group recommendation. In Proceedings of the 2021 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Luxembourg, 11–14 July 2021; pp. 1–6.

- Liang, R.; Zhang, Q.; Wang, J.; Lu, J. A hierarchical attention network for cross-domain group recommendation. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 3859–3873.

- Huang, X.; Zhou, Z.; Li, J.; Xiong, N.N.; Yi, Y.; Liu, J.; Liao, G. An Effective Multi-Scale Contrastive Learning System for Online Group Recommendation Services in Event-Based Social Networks. IEEE Trans. Serv. Comput. 2025, 18, 2616–2631.

- Liao, G.; Deng, X.; Wan, C.; Liu, X. Group event recommendation based on graph multi-head attention network combining explicit and implicit information. Inf. Process. Manag. 2022, 59, 102797.

- Deng, X.; Liao, G.; Zeng, Y. Group event recommendation based on a heterogeneous attribute graph considering long- and short-term preferences. J. Intell. Inf. Syst. 2023, 61, 271–297.

- Guo, L.; Yin, H.; Chen, T.; Zhang, X.; Zheng, K. Hierarchical Hyperedge Embedding-Based Representation Learning for Group Recommendation. ACM Trans. Inf. Syst. 2021, 40, 3.

- Zhao, Y.; Zhang, H.; Bai, Q.; Nie, C.; Yuan, X. DHMAE: A Disentangled Hypergraph Masked Autoencoder for Group Recommendation. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’24), Washington, DC, USA, 14–18 July 2024; pp. 914–923.

- Yin, H.; Wang, Q.; Zheng, K.; Li, Z.; Yang, J.; Zhou, X. Social influence-based group representation learning for group recommendation. In Proceedings of the 2019 IEEE 35th International Conference on Data Engineering (ICDE), Macao, China, 8–11 April 2019; pp. 566–577.

- Huang, Z.; Xu, X.; Zhu, H.; Zhou, M. An efficient group recommendation model with multiattention-based neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 4461–4474.

- Zhang, J.; Gao, C.; Jin, D.; Li, Y. Group-buying recommendation for social e-commerce. In Proceedings of the 2021 IEEE 37th International Conference on Data Engineering (ICDE), Chania, Greece, 19–22 April 2021; pp. 1536–1547.

- Sankar, A.; Wu, Y.; Wu, Y.; Zhang, W.; Yang, H.; Sundaram, H. GroupIM: A Mutual Information Maximization Framework for Neural Group Recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 1279–1288.

- Yang, W.; Xu, J.; Zhou, R.; Chen, L.; Li, J.; Zhao, P.; Liu, C. Multi-view Attentive Variational Learning for Group Recommendation. In Proceedings of the 2024 IEEE 40th International Conference on Data Engineering (ICDE), Utrecht, The Netherlands, 13–16 May 2024; pp. 5022–5034.

- Ma, Y.; Jiang, Z.Z.; Sun, M.; Yuan, Y.; Wang, G. Where Shall We Go: Point-of-Interest Group Recommendation With User Preference Embedding. IEEE Trans. Big Data 2025, 11, 1614–1627.

- Wang, X.; He, X.; Nie, L.; Chua, T.S. Item Silk Road: Recommending Items from Information Domains to Social Users. In Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’17), Shinjuku, Japan, 7–11 August 2017; pp. 185–194.

- Wu, J.; Wang, X.; Gao, X.; Chen, J.; Fu, H.; Qiu, T. On the Effectiveness of Sampled Softmax Loss for Item Recommendation. ACM Trans. Inf. Syst. 2024, 42, 98.

- Ji, W.; Meng, X.; Zhang, Y. STARec: Adaptive Learning with Spatiotemporal and Activity Influence for POI Recommendation. ACM Trans. Inf. Syst. 2021, 40, 65.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).