Abstract

Deep learning-based synthetic aperture radar (SAR) target recognition often suffers from overfitting under few-shot conditions, making it difficult to fully exploit the discriminative features contained in limited samples. Moreover, SAR targets frequently exhibit highly similar background scattering patterns, which further increase intra-class variations and reduce inter-class separability, thereby constraining the performance of few-shot recognition. To address these challenges, this paper proposes an adaptive contrastive metric (ACM) network with background suppression for few-shot SAR target recognition. Specifically, a spatial squeeze-and-excitation (SSE) attention module is introduced to adaptively highlight salient scattering structures of the target while effectively suppressing noise and irrelevant background interference, thus enhancing the robustness of feature representation. In addition, an ACM module is designed, where query samples are compared not only with their corresponding support class but also with the remaining classes. This enables explicit suppression of confusing background features and enlarges inter-class margins, thereby improving the discriminability of the learned feature space. The experimental results on publicly available SAR target recognition datasets demonstrate that the proposed method achieves significant improvements in background suppression and consistently outperforms several state-of-the-art metric-based few-shot learning approaches, validating the effectiveness and generalizability of the proposed framework.

1. Introduction

Synthetic aperture radar (SAR), as an active microwave imaging technology, can acquire ground object information in all-weather, all-day conditions, with advantages such as strong penetration, high anti-interference capability, and fine resolution. Unlike optical or infrared imaging, SAR does not rely on natural illumination or weather conditions, and thus remains stable in complex environments. It has been widely applied in military reconnaissance [], disaster monitoring [], resource exploration [], and environmental sensing []. However, SAR imagery also faces unique challenges, including speckle noise interference, high similarity between targets and backgrounds, and difficulties in sample acquisition. These issues severely constrain the performance of deep learning–based automatic target recognition (ATR). Therefore, achieving robust SAR target recognition under limited-sample conditions has become a critical problem in intelligent remote sensing interpretation.

Traditional SAR target recognition methods [,,,,] primarily include template matching, statistical modeling, and shallow machine learning approaches. By extracting geometric structures, scattering characteristics, or texture information, these methods significantly advanced the early development of automatic SAR image interpretation and laid an important foundation for subsequent research. With the evolution of pattern recognition and computational intelligence, researchers began to integrate feature engineering with classification models to further improve recognition accuracy and efficiency. Nevertheless, the true breakthrough came with the rise of deep learning, which opened a new chapter for SAR target recognition. In particular, the adoption of convolutional neural networks (CNNs) and other end-to-end feature learning methods [,,,,,,] has enabled models to automatically learn hierarchical representations directly from raw SAR data, greatly enhancing the expressive power and discriminative capacity of feature extraction, and driving SAR intelligent recognition into a new stage of development.

The abundance of data has been a cornerstone of deep learning’s remarkable success, providing models with sufficient samples and diverse information to better capture data characteristics and learn effective representations. However, when training data are limited, CNN-based deep models are prone to overfitting, resulting in degraded recognition performance. Compared with optical imagery, the acquisition of SAR data poses greater challenges in practical applications: on the one hand, SAR image collection is constrained by sensor platforms, observation conditions, and security considerations; on the other hand, annotating SAR data requires domain expertise, making the process both time-consuming and costly. To address the bottleneck of insufficient SAR samples, researchers have explored a wide range of strategies. To alleviate the high cost of annotation, semi-supervised learning [] exploits a mixture of limited labeled samples and abundant unlabeled data to improve model performance and generalization, while active learning focuses on labeling the most informative samples to maximize efficiency under limited annotation budgets. For cases where large-scale SAR samples are difficult to obtain, methods such as data augmentation [], data generation [], and transfer learning [] have been widely applied to enrich sample diversity, synthesize training data, or transfer knowledge from other domains. These strategies open up new avenues for SAR target recognition and provide solid support for achieving efficient recognition under limited-sample conditions.

Under limited-sample conditions, conventional deep learning approaches often fail to achieve satisfactory performance. To address this challenge, researchers have increasingly turned to few-shot learning (FSL) as an effective paradigm for handling data scarcity. Mainstream FSL methods can be broadly categorized into three groups: (1) metric-based methods, which construct a metric space and perform classification by comparing similarities between samples; (2) optimization-based methods, which leverage meta-learning frameworks to quickly adapt to new classes across tasks; and (3) model-based methods, which employ generative models or external memory mechanisms to enrich sample representations. Among these approaches, metric-based FSL stands out for its simplicity, computational efficiency, and strong discriminative capability even with extremely limited samples, making it particularly suitable for SAR target recognition. Therefore, this study adopts metric-based FSL as its core methodology to explore new ways of enhancing SAR target recognition under limited-sample conditions.

To tackle the aforementioned challenges, this paper proposes an adaptive contrastive metric (ACM) network with background suppression for few-shot SAR target recognition. Unlike conventional metric-based methods that primarily rely on limited support samples for direct matching, our framework leverages a spatial squeeze-and-excitation (SSE) attention module to selectively emphasize the salient scattering structures of the target while mitigating irrelevant background, thereby reinforcing the robustness of feature representation. Furthermore, an ACM module is developed, which explicitly incorporates contrasts with the remaining classes. This design enables the suppression of confusing background features and the enlargement of inter-class margins. By jointly optimizing feature robustness and discriminability, the proposed method provides a novel solution to improve recognition performance under few-shot conditions.

The main contributions of this study can be summarized as follows:

We propose a few-shot SAR target recognition method that integrates an ACM network with background suppression, effectively alleviating the problems of overfitting and insufficient inter-class separability under limited training samples.

An SSE attention module is introduced to adaptively emphasize the salient scattering structures of the target while suppressing noise and irrelevant background, thereby improving the robustness and discriminability of feature representation.

An ACM module is proposed and combined with the image-to-class (I2C) module to construct a discriminative metric module. This module is not only used to evaluate the similarity between query samples and their corresponding support classes but is also explicitly extended to incorporate comparisons with other classes. Through this design, background interference can be effectively suppressed, inter-class separability is enhanced, and the model’s accuracy in target recognition tasks is significantly improved.

The remainder of this paper is organized as follows: Section 2 provides a brief review of research progress on few-shot SAR target recognition and metric-based FSL methods. Section 3 presents a detailed description of the proposed framework and its core modules. Section 4 analyzes the experimental results to validate the effectiveness of the method. Section 5 concludes this work and outlines future research directions.

2. Related Works

2.1. Metric-Based FSL Algorithms

In recent years, metric learning has become one of the mainstream approaches in FSL. Koch et al. [] first proposed the Siamese network, which measures the similarity between sample pairs for one-shot image recognition. Subsequently, Vinyals et al. [] introduced matching networks, which employ an attention mechanism to establish matching relationships between support and query sets. Snell et al. [] proposed prototypical networks, which construct a metric space using class prototypes. Sung et al. [] further developed relation networks, learning a nonlinear metric function to improve few-shot classification performance. In addition, Li et al. [] introduced distribution consistency constraints and designed a covariance metric network to enhance feature distribution modeling.

Recently, attention and contrastive learning mechanisms have been increasingly integrated into metric-based frameworks to enhance discriminative capability. For example, DeepEMD [] introduced a differentiable Earth Mover’s Distance to achieve fine-grained instance-level matching, while Few-shot Embedding Adaptation Transformer (FEAT) [] employed set-to-set embedding adaptation with Transformer-based attention to improve task-specific representation learning. Beyond these attention-based extensions, contrastive frameworks such as SimCLR [] and Contrastive Language–Image Pre-training (CLIP) [] further expanded the metric-learning paradigm by explicitly optimizing intra-class compactness and inter-class separability through contrastive alignment. These advances bridge traditional prototype-based FSL and modern contrastive representation learning, providing a broader conceptual foundation for our proposed adaptive contrastive metric framework.

However, most of the above methods mainly rely on global image-level features for metric computation, which limits their ability to capture fine-grained discriminative information. This drawback becomes particularly evident under complex backgrounds or when inter-class similarity is high. To address this issue, Li et al. [] revisited local feature descriptors and proposed an I2C metric that effectively alleviates the shortcomings of global metrics, offering a new perspective for metric-based FSL.

2.2. Metric-Based FSL Algorithms for SAR ATR

In recent years, few-shot SAR target recognition has emerged as a significant research direction in intelligent remote sensing interpretation. Early studies primarily focused on transfer learning and cross-domain adaptation, where deep transfer learning [,] and cross-modal knowledge transfer [] were employed to mitigate the performance degradation caused by limited samples. Subsequently, extensive efforts have been devoted to metric learning frameworks, including transductive prototypical attention reasoning networks and discriminative metric networks [], Fourier- or SVD-based feature reconstruction metrics [,,], as well as prototype-based and cosine prototype learning approaches [,], all of which improve discriminability under few-shot settings. Meanwhile, meta-learning concepts have been introduced into SAR recognition, such as hyperparameter-based fast adaptation [], which further enhances task-level adaptability. Building on these advances, researchers have increasingly emphasized the integration of structural features and prior knowledge, for example, scattering attribute-based feature modeling [], a transformer-enhanced few-shot SAR-ATR model [], and Siamese subspace classification networks []. These approaches aim to better capture the physical scattering properties and geometric relations of SAR targets. In parallel, some studies have investigated imaging conditions and geometric priors, such as optimal azimuth angle selection [], to improve recognition stability under limited-sample scenarios.

Despite these advances, few-shot SAR target recognition remains highly challenging due to the unique imaging characteristics of SAR data. The presence of speckle noise, complex and highly similar background scattering, and view-dependent target structures leads to large intra-class variations and small inter-class margins. Moreover, the limited availability of labeled samples restricts deep models from fully exploiting discriminative scattering features, while strong correlations between targets and their surrounding backgrounds often cause overfitting to contextual cues rather than intrinsic target structures. To address these challenges, this paper proposes an adaptive contrastive metric (ACM) network with background suppression, which incorporates a spatial squeeze-and-excitation (SSE) module to emphasize salient scattering regions and suppress irrelevant background interference, and an ACM module to perform cross-class contrastive alignment that explicitly enlarges inter-class separability and mitigates background confusion. Together, these designs enhance the robustness, discriminability, and generalization capability of few-shot SAR target recognition under complex imaging conditions.

3. Method

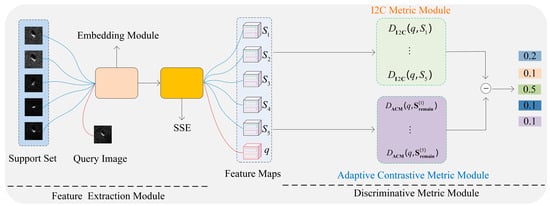

The proposed method consists of three main components: a feature extraction module, an SSE attention module, and a discriminative metric module. First, both the support samples and query samples are passed through the embedding module to extract deep feature representations, thereby constructing a more discriminative feature space. The SSE module models the spatial distribution of feature maps by generating an attention map that emphasizes target regions and suppresses background noise, thereby improving feature robustness and discriminability. The discriminative metric module is composed of two submodules: the I2C metric, which measures the similarity between a query sample and its corresponding support class, and the ACM module, which evaluates the similarity between the query sample and other non-target support classes. To enhance discriminability, the final metric result is obtained by subtracting the latter from the former, which explicitly pulls the query sample closer to its positive class while pushing it farther away from the other classes. During training, a cross-entropy loss is employed to optimize the overall metric result. The overall framework of the proposed approach is illustrated in Figure 1.

Figure 1.

The architecture of the proposed method.
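As a rough illustration of how the two submodule outputs are combined and trained, the following minimal sketch subtracts a non-target score from a target score for each class and applies a softmax cross-entropy loss. The function names and the numeric scores are hypothetical and chosen only for illustration; how the two scores are actually produced is described in Section 3.3.

```python
import math

def discriminative_scores(i2c_scores, acm_scores):
    """Per class: target similarity (I2C) minus non-target similarity (ACM)."""
    return [pos - neg for pos, neg in zip(i2c_scores, acm_scores)]

def cross_entropy(scores, label):
    """Softmax cross-entropy of one query over the per-class scores."""
    peak = max(scores)  # subtract the maximum for numerical stability
    log_norm = peak + math.log(sum(math.exp(s - peak) for s in scores))
    return log_norm - scores[label]

# Hypothetical 3-way episode for a single query whose true class is 0.
i2c = [2.0, 1.5, 1.0]   # similarity to each candidate class
acm = [0.5, 1.0, 1.5]   # similarity to the classes other than the candidate
scores = discriminative_scores(i2c, acm)
loss = cross_entropy(scores, label=0)
```

In this toy case the subtraction widens the gap between the true class and the others (from 0.5 to 1.0 between the first two classes), which is the margin-enlarging effect the framework aims for.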

3.1. Embedding Module

Given a SAR image $X$, it is first fed into the embedding module to extract feature representations, which can be formulated as

$$F = f_{\theta}(X),$$

where $\theta$ denotes the learnable parameters of the embedding module and $F \in \mathbb{R}^{C \times H \times W}$ represents the output feature map. Here, $C$ is the number of channels while $H$ and $W$ correspond to the spatial dimensions.

The embedding module comprises four convolutional blocks designed to progressively extract discriminative features from SAR images. The first two blocks each include a convolutional layer, batch normalization (BN), leaky ReLU activation, and a pooling operation. The convolutional layers use a 3 × 3 kernel with a stride of 1 and padding of 1 to preserve spatial dimensions, while pooling reduces resolution. The last two blocks consist of a convolutional layer, BN, and leaky ReLU without pooling, to retain more spatial details.
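The spatial size of the output feature map follows from the block layout. The sketch below traces it for an 84 × 84 input (the example resolution mentioned in Section 4.2), assuming a 3 × 3 kernel with stride 1 and padding 1 and assuming 2 × 2 pooling with stride 2; the pooling window is not stated in the text and is an assumption here.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output side length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, window=2):
    """Output side length of non-overlapping pooling (assumed 2 x 2)."""
    return size // window

def embedding_output_size(size):
    """Four blocks: the first two pool, the last two do not."""
    for has_pool in (True, True, False, False):
        size = conv_out(size)
        if has_pool:
            size = pool_out(size)
    return size

side = embedding_output_size(84)
num_descriptors = side * side  # one local descriptor per spatial location
```

Under these assumptions the side length goes 84, 42, 21, 21, 21, so each image would yield 441 spatial locations for the local-descriptor metric.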

3.2. SSE Attention Module

To enhance the model’s ability to focus on crucial spatial regions, we incorporate an SSE attention module. This module takes an input feature tensor $F$ of shape $C \times H \times W$ and produces a refined feature tensor $\hat{F}$ of the same size. The operation of this module can be summarized in three steps:

1. Spatial Information Squeeze: We first employ a bias-free $1 \times 1$ convolutional layer with weights $W_{sq}$ to reduce the channel dimension of the input features from $C$ to $1$, generating an intermediate feature map $Q \in \mathbb{R}^{1 \times H \times W}$. This operation aggregates information from all channels at each individual spatial location $(i, j)$, which can be depicted as

$$Q(i, j) = \sum_{c=1}^{C} W_{sq}^{(c)} \, F_c(i, j).$$

2. Attention Weight Excitation: The intermediate feature map $Q$ is then passed through a Sigmoid activation function $\sigma(\cdot)$ to produce a spatial attention weight map $A$ with values in the range $(0, 1)$, which can be expressed as

$$A = \sigma(Q).$$

3. Feature Reweighting: Finally, adaptive modulation of the features is achieved by performing element-wise multiplication between the attention map $A$ and the original input features $F$. This enhances the feature responses in salient regions while suppressing those in non-salient ones, which can be represented as

$$\hat{F}_c(i, j) = A(i, j) \, F_c(i, j), \quad c = 1, \dots, C.$$
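The three steps above can be sketched in plain Python on nested lists. This is a minimal illustration, not the authors' implementation: the function name `sse_attention`, the per-channel weight vector standing in for a bias-free 1 × 1 convolution, and the toy input are all assumptions.

```python
import math

def sse_attention(features, squeeze_weights):
    """features: C x H x W nested lists; squeeze_weights: one weight per channel."""
    channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    # Squeeze: a bias-free 1x1 convolution collapses the C channels into one map.
    squeezed = [[sum(squeeze_weights[c] * features[c][i][j] for c in range(channels))
                 for j in range(width)] for i in range(height)]
    # Excitation: the sigmoid maps every location to a weight in (0, 1).
    attention = [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in squeezed]
    # Reweighting: every channel is scaled by the same spatial attention map.
    refined = [[[attention[i][j] * features[c][i][j] for j in range(width)]
                for i in range(height)] for c in range(channels)]
    return attention, refined

# Toy 2-channel, 2 x 2 input with hypothetical squeeze weights.
toy = [[[1.0, 0.0], [0.0, -1.0]],
       [[1.0, 0.0], [0.0, -1.0]]]
attention, refined = sse_attention(toy, squeeze_weights=[1.0, 1.0])
```

Locations with a strong positive squeezed response keep most of their magnitude, while locations with a negative response are attenuated, and the output keeps the input shape.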

3.3. Discriminative Metric Module

I2C metric module: In traditional metric-based FSL, most approaches rely on image-level feature representations to compute class similarity. However, under few-shot conditions, global features often fail to accurately capture the true distribution of classes. To address this issue, Li et al. proposed the DN4 algorithm, which applies the I2C similarity with a metric based on local descriptors. This approach provides richer and more discriminative fine-grained feature representations.

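Specifically, given a query sample $q$ and the support set $S_c$ of class $c$, their local descriptors are extracted by the embedding module and the SSE module, which can be expressed as

$$L_q = [x_1, \dots, x_m] \in \mathbb{R}^{C \times m}, \qquad L_{S_c} = [\hat{x}_1, \dots, \hat{x}_{Km}] \in \mathbb{R}^{C \times Km},$$

where $m$ denotes the number of local descriptors per image, and $K$ is the number of support samples.

The image-to-class similarity metric based on local descriptors can then be expressed as

$$\mathrm{I2C}(q, c) = \sum_{i=1}^{m} \sum_{\hat{x} \in \mathcal{N}_k(x_i)} \cos(x_i, \hat{x}),$$

where $\mathcal{N}_k(x_i)$ represents the set of $k$ nearest neighbors of query descriptor $x_i$ among all support descriptors. In our experiments, $k$ is set to 3. The cosine similarity is defined as

$$\cos(x_i, \hat{x}) = \frac{x_i^{\top} \hat{x}}{\lVert x_i \rVert \, \lVert \hat{x} \rVert}.$$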

This local descriptor–based metric effectively captures fine-grained scattering patterns and spatial structural information, making it more discriminative than global representations. Moreover, the I2C metric module introduces no additional learnable parameters, which helps mitigate the risk of overfitting under few-shot conditions.
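A minimal sketch of such a local-descriptor image-to-class score is given below: for every query descriptor, the cosine similarities to its k nearest support descriptors of one class are summed. The function names and the two-dimensional toy descriptors are illustrative assumptions; the search is brute force rather than optimized.

```python
import math

def cosine(a, b):
    """Cosine similarity between two descriptors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0

def i2c_similarity(query_descriptors, class_descriptors, k=3):
    """Sum, over query descriptors, of the top-k cosine similarities to one class."""
    total = 0.0
    for q in query_descriptors:
        sims = sorted((cosine(q, s) for s in class_descriptors), reverse=True)
        total += sum(sims[:k])
    return total

# Toy example: two query descriptors scored against two candidate classes.
query = [[1.0, 0.0], [0.0, 1.0]]
class_a = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
class_b = [[1.0, 1.0], [1.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
score_a = i2c_similarity(query, class_a, k=2)
score_b = i2c_similarity(query, class_b, k=2)
```

Because the score only sorts and sums similarities, it has no trainable weights of its own, in line with the remark above that the I2C metric adds no learnable parameters.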

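Adaptive contrastive metric module: The support set feature can be expressed as

$$S = \{ s_{c,i} \} \in \mathbb{R}^{N \times K \times D}, \quad c = 1, \dots, N, \; i = 1, \dots, K,$$

where $N$ is the number of classes, $K$ is the number of samples per class, and $D$ is the feature dimension.

(1) Intra-Class Remainder Set Construction

For any sample $s_{c,i}$ from class $c$, construct the intra-class remainder set by excluding this sample, which can be represented as

$$R_{c,i}^{\mathrm{intra}} = \{ s_{c,j} \mid j \neq i \},$$

where $R_{c,i}^{\mathrm{intra}}$ is arranged as a matrix of size $(K-1) \times D$, representing all sample features in class $c$ except $s_{c,i}$.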

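(2) Intra-Class Feature Aggregation

Apply attentive pooling to the intra-class remainder set $R_{c,i}^{\mathrm{intra}}$ (the samples of class $c$ other than $s_{c,i}$) to obtain the intra-class summary vector, which can be expressed as

$$z_{c,i}^{\mathrm{intra}} = \sum_{j \neq i} \alpha_j \, s_{c,j}.$$

The attention weights $\alpha_j$ are computed from the remainder-set features using a learnable parameter. Attentive pooling performs a data-driven weighted aggregation of intra-class samples, making the aggregation focus more on the intra-class elements relevant to $s_{c,i}$.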

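(3) Inter-Class Remainder Set Construction

For class $c$, gather all samples from all other classes to serve as the inter-class remainder set:

$$R_{c}^{\mathrm{inter}} = \{ s_{c',j} \mid c' \neq c, \; j = 1, \dots, K \}.$$

This forms a matrix of size $(N-1)K \times D$, encompassing the features of all samples not belonging to class $c$.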

(4) Inter-Class Feature Aggregation

Apply max pooling to the inter-class remainder set $R_{c}^{\mathrm{inter}}$ (all support features not belonging to class $c$) to obtain the inter-class summary vector, which can be denoted as

$$z_{c}^{\mathrm{inter}}[d] = \max_{r \in R_{c}^{\mathrm{inter}}} r[d], \quad d = 1, \dots, D.$$

Max pooling selects the most significant response across classes for each dimension, thereby preserving the “most distinctive” inter-class cues. For greater robustness, this can be replaced with average pooling or attentive pooling without changing the overall framework.

(5) Dual-Level Feature Fusion

Concatenate the intra-class and inter-class summary vectors and feed them into a lightweight fusion network to obtain the enhanced representation for $s_{c,i}$, which can be indicated as

$$\tilde{s}_{c,i} = g\big([\, z_{c,i}^{\mathrm{intra}} \,;\, z_{c}^{\mathrm{inter}} \,]\big),$$

where $[\,\cdot\,;\,\cdot\,]$ denotes vector-level concatenation, and $g$ is a two-layer MLP:

$$g(u) = W_2 \, \phi(W_1 u),$$

where $\phi$ denotes the nonlinear activation. If the desired output dimension is to match the original feature dimension $D$, let $W_1 \in \mathbb{R}^{h \times 2D}$, $W_2 \in \mathbb{R}^{D \times h}$, where $h$ is the hidden dimension.

This step establishes a learnable interactive mapping between the “intra-class consistency summary” and the “inter-class discriminative summary”, outputting $\tilde{s}_{c,i}$ as the refined feature intended for downstream metric learning/matching tasks.

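(6) Final Remainder Set Construction

For class $c$, aggregate all its enhanced representations:

$$\tilde{S}_c = [\tilde{s}_{c,1}, \dots, \tilde{s}_{c,K}] \in \mathbb{R}^{K \times D},$$

and stack them along the class dimension to obtain

$$\tilde{S} = \mathrm{Stack}(\tilde{S}_1, \dots, \tilde{S}_N) \in \mathbb{R}^{N \times K \times D},$$

where $\mathrm{Stack}(\cdot)$ denotes stacking along the class dimension.

The ACM module uses these enhanced representations to score the query sample $q$ against the non-target support classes, and the proposed discriminative metric subtracts this score from the I2C similarity:

$$M(q, c) = \mathrm{I2C}(q, c) - \mathrm{ACM}(q, \mathcal{C} \setminus \{c\}).$$

Here, $\mathcal{C}$ denotes the set of all support classes, and $\mathcal{C} \setminus \{c\}$ represents the remaining support classes. By introducing the ACM module, the relation between the query sample and both target and non-target classes can be jointly evaluated, which enhances the discriminative power of the metric function. This lightweight design also helps suppress background-related features and reduces the risk of overfitting under few-shot settings.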
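Steps (1) to (6) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the attention score is assumed to be a softmax over dot products with a learnable vector `w`, the MLP is assumed to use ReLU with bias terms, all function names are hypothetical, and the sketch requires at least two samples per class so that the intra-class remainder set is non-empty.

```python
import math

def attentive_pool(vectors, w):
    """Softmax-weighted sum of vectors; scoring by w . v is an assumed form."""
    logits = [sum(wi * vi for wi, vi in zip(w, v)) for v in vectors]
    peak = max(logits)
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    return [sum(e / total * v[d] for e, v in zip(exps, vectors))
            for d in range(len(vectors[0]))]

def max_pool(vectors):
    """Element-wise maximum over a set of vectors."""
    return [max(column) for column in zip(*vectors)]

def mlp(u, w1, b1, w2, b2):
    """Two-layer MLP with an assumed ReLU hidden activation."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, u)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(w2, b2)]

def enhance_support(support, w, w1, b1, w2, b2):
    """support[c][i] is the D-dim feature of sample i in class c (N x K x D)."""
    enhanced = []
    for c, class_samples in enumerate(support):
        # Steps (3)-(4): pool every sample that does not belong to class c.
        inter = [s for o, other in enumerate(support) if o != c for s in other]
        inter_summary = max_pool(inter)
        rows = []
        for i in range(len(class_samples)):
            # Steps (1)-(2): pool the class-c samples other than sample i.
            intra = [s for j, s in enumerate(class_samples) if j != i]
            intra_summary = attentive_pool(intra, w)
            # Step (5): concatenate both summaries and fuse them.
            rows.append(mlp(intra_summary + inter_summary, w1, b1, w2, b2))
        enhanced.append(rows)  # Step (6): stack along the class dimension.
    return enhanced

# Toy 2-way 3-shot support set with D = 2 and hand-picked (hypothetical) weights:
# w = 0 gives uniform attention; the MLP computes intra summary minus inter summary.
support = [[[1.0, 0.0], [3.0, 0.0], [5.0, 0.0]],
           [[0.0, 2.0], [0.0, 4.0], [0.0, 6.0]]]
w1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
      [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
w2 = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]]
enhanced = enhance_support(support, [0.0, 0.0], w1, [0.0] * 4, w2, [0.0] * 2)
```

With these hand-picked weights the enhanced feature of the first sample is the mean of its two classmates minus the element-wise maximum of the other class, which makes the contrast between intra-class and inter-class summaries easy to inspect; trained weights would of course differ.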

4. Experiments

4.1. Datasets

The MSTAR dataset, jointly released by the Defense Advanced Research Projects Agency (DARPA) and the U.S. Air Force, is one of the most representative benchmark datasets in the field of SAR target recognition and is widely used in automatic target recognition research. It was collected using X-band spotlight SAR imaging with a resolution of 0.3 m and includes side-looking SAR images of more than ten typical ground targets, such as 2S1, BMP-2, BRDM2, BTR-60, BTR-70, D-7, T-62, T-72, ZIL-131, and ZSU-234. For each target class, a large number of images were acquired under different azimuth angles (0–360°), depression angles, and imaging conditions. With its diverse target categories, complex imaging scenarios, and wide angular coverage, the MSTAR dataset also contains occluded and structurally similar targets, making it a valuable benchmark for evaluating models in few-shot recognition, inter-class similarity discrimination, and background suppression. Consequently, it has become the most widely used and one of the most challenging public benchmark datasets for SAR target recognition research.

The OpenSARShip dataset is a publicly available SAR benchmark dataset for ship recognition, released by Shanghai Jiao Tong University. It is constructed from Sentinel-1 satellite C-band SAR imagery and contains a large number of ship samples collected under diverse scenarios, covering various types of civilian and commercial vessels. Each image has a spatial resolution of approximately 10 m and spans a wide range of environments, including ports, coastal areas, and open sea routes. The dataset provides precise bounding box annotations and class labels for ship targets, enabling tasks such as detection, classification, and FSL. With its large scale, diverse categories, and complex scene variations, OpenSARShip serves as an important benchmark for evaluating the robustness and generalization of models in challenging maritime environments. Consequently, it has become one of the most widely used open datasets in SAR-based ship recognition research.

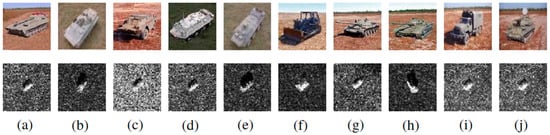

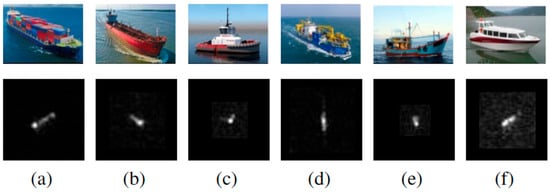

Examples of optical and SAR images from the MSTAR and OpenSARShip datasets are shown in Figure 2 and Figure 3, while the corresponding training and testing set partitions are presented in Table 1 and Table 2.

Figure 2.

MSTAR dataset: (a) 2S1; (b) BMP-2; (c) BRDM-2; (d) BTR-60; (e) BTR-70; (f) D-7; (g) T-62; (h) T-72; (i) ZIL-131; (j) ZSU-234.

Figure 3.

OpenSARShip dataset: (a) Cargo; (b) Tanker; (c) Tug; (d) Dredging; (e) Fishing; (f) Passengers.

Table 1.

Training and testing partition of the MSTAR dataset.

Table 2.

Training and testing partition of the OpenSARShip dataset.

4.2. Experimental Setup

The training and inference experiments in this study were conducted in a Linux environment with the following hardware and software configurations: the server is equipped with 2 × Intel Xeon Platinum 8558 CPUs (96 cores in total) with a maximum frequency of 4.0 GHz; 2.0 TB of RAM; and 2 × NVIDIA H20 GPUs (each with 97,871 MiB of memory). The CUDA version is 12.4, and the driver version is 550.144.03. The experimental environment was built using Python 3.8 and PyTorch 1.13 in Linux (Ubuntu). This configuration provides stable computational support for model training and inference, ensuring the reliability and reproducibility of the experimental results. The input and output channel numbers of each convolutional layer in the feature extraction network are listed in Table 3.

Table 3.

Input and output channel configuration of each convolutional layer in the feature extraction network.

Additionally, each image is resized to a fixed resolution (e.g., 84 × 84), converted into a tensor format, and normalized to the range of [−1, 1]. This procedure helps mitigate the effects of resolution differences between datasets and stabilizes the training process. The same preprocessing steps are applied across all datasets to ensure experimental consistency and reproducibility.
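The preprocessing described above can be sketched in plain Python. The sketch assumes 8-bit single-channel amplitude images and nearest-neighbor resampling; the interpolation method and input bit depth are not stated in the text, and both function names are hypothetical.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbor resize of a 2-D list of pixels (assumed interpolation)."""
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]

def normalize(image):
    """Map 8-bit intensities in [0, 255] to [-1, 1] via (x / 255 - 0.5) / 0.5."""
    return [[(p / 255.0 - 0.5) / 0.5 for p in row] for row in image]

# Toy 2 x 2 chip resized to the 84 x 84 example resolution, then normalized.
chip = [[0, 255], [255, 0]]
resized = resize_nearest(chip, 84, 84)
prepared = normalize(resized)
```

Mapping to a symmetric range around zero keeps the input statistics comparable across the two datasets, which is the stabilizing effect mentioned above.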

4.3. Contrast Experiments

On the MSTAR (5-way) and OpenSARShip (3-way) benchmarks, we compare our method against metric-based baselines (ProtoNet, Baseline++, DN4, Meta-Baseline, CPN) as well as a Transformer-based model (ACL) [] and a generative model (TFH) []. As reported in Table 4 and Table 5, our approach achieves the best accuracy across most n-shot settings, e.g., it improves over the strongest prior baseline by 2.61% in 5-way 1-shot and 2.55% in 3-way 2-shot. The generative TFH does not surpass our method largely because its reconstruction/likelihood objectives tend to preserve background energy and speckle patterns, which weakens discriminative margins under severe background similarity and very low shots; it is also more sensitive to resolution/domain shifts across SAR datasets. The Transformer ACL underperforms in the extreme low-shot regime due to its higher data hunger and weaker inductive bias for small, noisy SAR sets, leading to overfitting and unstable attention to clutter. In contrast, our framework—combining SSE for background suppression with ACM for explicit cross-class contrast—directly enlarges inter-class margins while stabilizing features in cluttered scenes, yielding higher mean accuracy and lower variance.

Table 4.

Performance comparison of the proposed method and other algorithms on the MSTAR dataset.

Table 5.

Performance comparison of the proposed method and other algorithms on the OpenSARShip dataset.

4.4. Ablation Experiments

To verify the effectiveness of the proposed SSE and ACM modules, a series of experiments were conducted on the MSTAR and OpenSARShip datasets, as shown in Table 6 and Table 7. It can be observed that incorporating the SSE and ACM modules into the I2C framework leads to a significant improvement in classification accuracy. Specifically, the SSE attention module adaptively emphasizes salient scattering structures of the targets while suppressing noise and irrelevant background, thereby enhancing the robustness and discriminability of feature representations. The ACM discriminative metric module, which considers all support classes, evaluates not only the similarity between query samples and their corresponding target classes but also explicit comparisons with non-target classes, effectively reducing background interference and improving inter-class separability. These results demonstrate the effectiveness and generalization capability of the proposed modules in improving SAR target recognition performance.

Table 6.

Accuracy comparison between the ACM module and the I2C metric module on the MSTAR Dataset.

Table 7.

Accuracy comparison between the ACM module and the I2C metric module on the OpenSARShip Dataset.

During the metric process, it is necessary to select an appropriate hyperparameter $k$ to identify the $k$ most relevant nearest neighbors for each local descriptor of the query image. To determine the optimal value of $k$, we conducted a series of experiments on the MSTAR dataset (5-way 5-shot) and the OpenSARShip dataset (3-way 5-shot), comparing classification performance under different settings. As shown in Table 8, the model achieved the highest accuracy when $k = 3$. Therefore, $k$ is fixed to 3 in this study.

Table 8.

Accuracy comparison on the MSTAR (5-way 5-shot) and OpenSARShip (3-way 5-shot) datasets with different $k$ values.

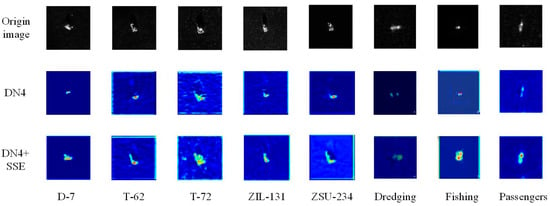

4.5. Visualization


To evaluate the effectiveness of the SSE module, we employed Grad-CAM to visualize the feature responses on the MSTAR and OpenSARShip datasets and compared them with the results obtained without using SSE. As shown in Figure 4, the incorporation of SSE enables the model to better focus on target regions, leading to more accurate localization. This improvement arises because SSE considers both the spatial position of each target and its surrounding contextual information during feature extraction. In contrast, the model without SSE exhibits more scattered attention regions and is more susceptible to background clutter.

Figure 4.

Grad-CAM visualization of samples on the MSTAR dataset and the OpenSARShip dataset.

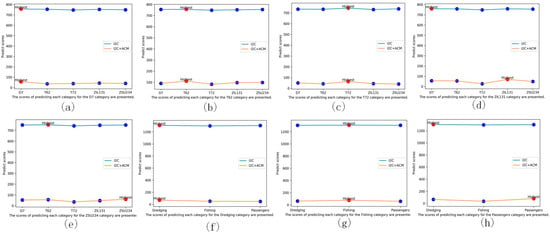

To further verify the effectiveness of the ACM module, Figure 5 illustrates the prediction score distribution of each category when misclassified as other categories. It can be observed that without ACM, the prediction scores among the different categories are relatively similar, mainly due to the high background similarity between targets and the large proportion of background regions in SAR images. After introducing ACM, the scores of each category misclassified as others decrease significantly, and the score differences between categories become more distinct. Taking ZIL131, ZSU234, and Passengers as examples, the model without ACM tends to be misled by background interference, resulting in close prediction scores across categories. In contrast, with ACM, the model correctly identifies targets by simultaneously measuring the similarity between query samples and both target and non-target classes, thereby effectively reducing the influence of background clutter on metric computation. This substantially enhances inter-class discriminability and improves the overall metric performance of the model.

Figure 5.

The prediction scores of the query sample and each support class (similarity scores are calculated under the I2C metric module and ACM module on the MSTAR (a–e) and OpenSARShip (f–h) datasets). (a) D7. (b) T62. (c) T72. (d) ZIL131. (e) ZSU234. (f) Dredging. (g) Fishing. (h) Passengers.

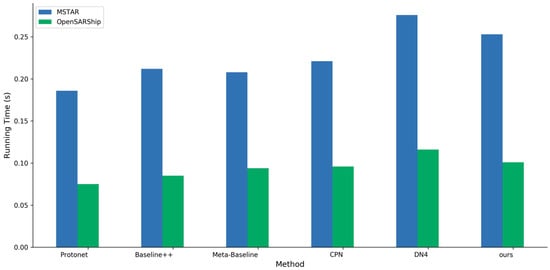

4.6. Running Time

Figure 6 presents the average running time per episode for the different algorithms on the MSTAR (5-way 5-shot) and OpenSARShip (3-way 5-shot) datasets. Each episode consists of 75 query samples for MSTAR and 45 query samples for OpenSARShip. As shown in the figure, our method achieves a slightly lower running time than DN4, primarily due to the use of broadcast mechanisms and matrix operations. Although the proposed approach involves a marginally higher computational cost compared with the simplest baselines, it consistently achieves the highest recognition accuracy on both MSTAR and OpenSARShip datasets, demonstrating superior overall performance.

Figure 6.

Running time of different algorithms on the MSTAR and the OpenSARShip dataset.
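The episode sizes quoted above (75 queries for 5-way, 45 for 3-way) are consistent with 15 query samples per class; that per-class figure is an inference from the totals, not a number stated in the text. A minimal episode sampler under that assumption, with a hypothetical function name and a hypothetical sample pool, could look like this:

```python
import random

def sample_episode(samples_by_class, n_way, k_shot, n_query, rng):
    """Draw an N-way K-shot episode with n_query query samples per class."""
    classes = rng.sample(sorted(samples_by_class), n_way)
    support, query = [], []
    for label in classes:
        picked = rng.sample(samples_by_class[label], k_shot + n_query)
        support += [(item, label) for item in picked[:k_shot]]
        query += [(item, label) for item in picked[k_shot:]]
    return support, query

# Hypothetical pool: 10 classes with 40 sample identifiers each.
pool = {f"class_{c}": list(range(40)) for c in range(10)}
rng = random.Random(0)
support, query = sample_episode(pool, n_way=5, k_shot=5, n_query=15, rng=rng)
```

A 5-way 5-shot episode drawn this way holds 25 support samples and 75 query samples; a 3-way 5-shot episode would hold 15 and 45.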

5. Conclusions

This paper proposes an ACM network for few-shot SAR target recognition. By incorporating a spatial attention mechanism, the method adaptively highlights the salient scattering structures of the target while attenuating noise and irrelevant background interference, thereby enhancing the robustness of feature representations. Furthermore, an adaptive contrastive metric module is designed in which query samples are compared not only with their corresponding support classes but also with the remaining classes, effectively enlarging inter-class margins and strengthening feature discriminability. The experimental results on public SAR datasets demonstrate that the proposed method consistently outperforms several state-of-the-art metric-based few-shot learning approaches in terms of background suppression and recognition accuracy, validating its effectiveness.

In terms of practical significance, the proposed method suppresses strong background clutter in real-world SAR applications (via the SSE module) and addresses the inherent challenge of high inter-class similarity among SAR targets (via the ACM module), which improves recognition reliability in complex environments. Regarding limitations, the scalability of the method to real-world, large-scale data remains to be validated. Future research will therefore focus on cross-domain few-shot learning to enhance the model’s generalization capability. In parallel, we will optimize the algorithm to improve real-time performance and achieve a better trade-off between recognition accuracy and processing speed. Beyond accuracy, we will also evaluate the practical benefits and performance gains that the proposed method brings to real-world SAR recognition tasks. The code of this paper will be released at https://github.com/Daniel123jia (accessed on 1 November 2025).

Author Contributions

R.C. contributed the central idea, analyzed most of the data, and wrote the initial draft of the paper. C.H. and F.Y. contributed the idea of the simulation experiment and provided constructive suggestions. J.Z. contributed to the revisions of the paper and polished the language. All authors discussed the results and revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The OpenSARShip dataset is available at https://opensar.sjtu.edu.cn/ (accessed on 1 November 2025), and the MSTAR dataset is available at https://www.sdms.afrl.af.mil/index.php?collection=mstar (accessed on 1 November 2025).

Acknowledgments

The authors would like to thank the Defense Advanced Research Projects Agency (DARPA) and the U.S. Air Force Research Laboratory (AFRL) for providing the MSTAR dataset, and Shanghai Jiao Tong University for releasing the OpenSARShip dataset. We also sincerely appreciate the valuable comments and suggestions from the anonymous reviewers, which have greatly improved the quality and completeness of this paper.

Conflicts of Interest

Authors Rui Cai, Chao Huang, and Feng Yu were employed by AVIC Xi’an Aircraft Industry Group Company Ltd. The remaining author declares that the study was conducted without any commercial or financial relationships that could be interpreted as a potential conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).