Abstract

Digital image watermarking is a vital tool for copyright protection and content authentication. However, most existing methods perform well only under single noise types, while real-world applications often involve composite noises with multiple distortions, leading to poor robustness. To address this issue, we propose a robust image watermarking scheme. To improve performance under combined noise conditions, a two-stage training strategy is introduced: in the first stage, noise intensity increases gradually to stabilize training; in the second stage, mixed strong noises are applied to enhance generalization against complex attacks. In addition, a strength-balanced watermark optimization algorithm is employed during the testing stage to improve visual quality while maintaining strong robustness. Furthermore, to improve robustness against JPEG compression, we adopt a differentiable fine-grained JPEG module that accurately simulates real compression and enables gradient backpropagation during training. Experimental results demonstrate the superiority of the proposed method under various single and combined distortions. Under noise-free conditions, it achieves 0% bit error rate (BER) and 53.55 dB PSNR. Under composite distortions, our scheme maintains a low average BER of 2.40% and a PSNR of 42.70 dB.

1. Introduction

With the explosive growth of digital content, protecting intellectual property rights and ensuring the integrity of information have become particularly important [,]. The image-blind watermarking technique serves as a key tool, offering robust copyright protection and verification for digital images with high imperceptibility [,]. However, with the diversification of multimedia applications and technological advances, traditional watermarking schemes gradually show their limitations, especially in dealing with combined noise conditions and maintaining balance between robustness and imperceptibility [].

Traditional digital watermarking schemes are usually divided into pixel-domain and frequency-domain schemes. Pixel-domain schemes embed the watermark by adjusting the pixel data of the image, through processes such as pixel value adjustment, pixel block shifting, and pixel feature encoding [,,,]. These schemes are favored for their good imperceptibility, but exhibit low robustness against various attacks. In contrast, frequency-domain schemes embed the watermark by adjusting coefficients in the transform domain of the image, through processes such as DCT coefficient adjustment [,] and DWT coefficient modification [,]. These schemes improve attack resistance at the expense of imperceptibility.

The swift progress in deep learning has led researchers to adopt it in the field of digital watermarking, resulting in numerous innovative schemes [,,,,]. These schemes mainly focus on improving models [], training strategies [], and noise layer design [] to enhance model performance. However, these models tend to perform poorly under combined noise conditions. This is because withstanding several types of noise at once requires more watermark residual information to maintain a high decoding rate, which reduces the imperceptibility of the watermarked image.

In deep learning models, the noise layer is essential for enabling the model to effectively learn various noise features and withstand different types of distortions. Consequently, it is important to identify functions that accurately model real-world noise. For most differentiable digital distortions, researchers have proposed mathematical functions that improve the robustness of the model under such distortions. JPEG compression, however, presents a challenge because it involves non-differentiable operations such as quantization and truncation []. As a result, existing models exhibit a low watermark-decoding rate under JPEG compression distortion, failing to meet practical application standards for watermark decoding.

Following the research and analysis presented above, we can summarize the limitations and challenges faced by current image-watermarking schemes: existing schemes fail to effectively balance watermark imperceptibility and robustness under combined noise conditions. Moreover, since JPEG compression involves non-differentiable operations, it restricts the model’s ability to learn noise distribution []. Consequently, most models exhibit poor robustness against JPEG compression, limiting their practical application. To address the above issues, we propose a novel two-stage training scheme for model training. In the testing phase, we introduce a strength-balanced watermarking optimization algorithm, enabling the model to achieve an optimal trade-off between watermarking imperceptibility and robustness under combined noise conditions. To enhance the model’s robustness against JPEG compression, we introduce a differentiable fine-grained JPEG compression module, closely mimicking real JPEG compression. Experimental results demonstrate superior outcomes compared to previous schemes.

In summary, the main contributions of this paper include the following three aspects:

- A novel two-stage watermarking model-training strategy is proposed, which effectively ensures the robustness of watermarking under combined noise conditions.

- The strength-balanced watermarking optimization algorithm is proposed to optimize imperceptibility and robustness of watermarks in the testing phase.

- A differentiable JPEG compression module is introduced to enhance the model’s robustness against JPEG compression distortions.

The remainder of this paper is organized as follows: Section 2 provides a brief review of related work. Section 3 details the proposed scheme. Section 4 outlines the experimental setup, evaluation metrics, and details of the experiment results. Finally, Section 5 presents the conclusion of our study.

2. Related Work

2.1. Image Watermarking Based on Deep Learning

The deep learning-based watermarking model was first proposed by Kandi et al. [] in 2017. By employing autoencoder convolutional neural networks (CNNs), it achieves better imperceptibility and robustness than traditional schemes. HiDDeN [] was the first work to introduce adversarial networks to image-blind watermarking, and also the first end-to-end scheme using neural networks. The end-to-end framework enables joint learning of the encoder and decoder, but training both modules together makes it challenging to optimize the trade-off between watermark robustness and imperceptibility. Liu et al. [] proposed the TSDL training strategy, which consists of two phases: a noise-free end-to-end adversarial training stage and a noise-aware decoder-only training stage. This scheme effectively counters black-box noise. However, further improvements are required in terms of watermark robustness and imperceptibility under combined noise conditions. Table 1 compares representative deep watermarking methods along several critical dimensions, including robustness to single and composite distortions, imperceptibility, use of differentiable JPEG approximation, training strategy, and generalization capability. The comparison underscores limitations of existing frameworks in jointly addressing practical challenges such as strong mixed noise and non-differentiable transformations. Motivated by these schemes, we design a novel two-stage training strategy. By using different noise strengths and loss functions in the different training stages, the robustness and imperceptibility of watermarked images can be significantly improved.

Table 1.

Comparison of existing deep image watermarking methods across key features including robustness, imperceptibility, differentiable JPEG use, and training strategy.

2.2. Strength Factor

The strength factor has been explored by several scholars within the realm of deep learning-based watermarking schemes. Redmark [] and TSDL [] employed the strength factor to control the robustness and imperceptibility of watermarking, yet the overall improvement was limited. MBRS [] used the strength factor during testing to adjust PSNR values for different models, enabling a clearer comparison of watermark-decoding rates. However, in-depth exploration of the strength factor remains insufficient. Later, the Adaptor scheme [] proposed an adaptive strength factor training strategy that introduces a novel loss function during the training phase, aiming to ensure high watermark-decoding rates while maximizing PSNR and SSIM values for each image. Nevertheless, this scheme requires retraining the model for different datasets and therefore lacks generalizability. To address this limitation, we propose a strength-balanced watermarking optimization algorithm to generate watermarked images with high imperceptibility against various types and intensities of distortions.

2.3. JPEG Simulations

Among the various types of distortions faced by watermarking, existing deep learning schemes cannot effectively measure watermarking performance under JPEG compression []. This limitation arises because the rounding and truncation operations inherent in JPEG compression are non-differentiable. Consequently, scholars have proposed several schemes. HiDDeN [] introduced the JPEG-Mask and JPEG-Drop schemes as alternatives to actual JPEG compression. The two-stage training scheme proposed by TSDL [] represents an improvement over simulation schemes. However, since only the decoder is trained in the second stage, information loss after compression occurs, affecting final performance. MBRS [] proposes a training strategy that combines real and simulated JPEG compression and has achieved significant results. However, when trained jointly with other noises, the robustness of MBRS [] against JPEG compression decreases because adding other noises distorts the original combined training mechanism. To address these challenges, this paper introduces a refined JPEG compression function module, which is integrated into the noise layer for direct application during training, similar to other differentiable noise types.

3. Methodology

3.1. Model Architecture

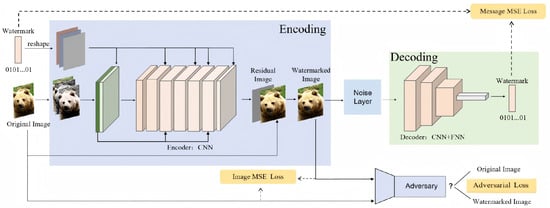

The overall architecture of our model is shown in Figure 1. It consists of four parts. (1) Encoder: The encoder, with its own trainable parameters, takes the original image $I_o$ and the original watermark $W$ as inputs and outputs a residual image $I_r$; the watermarked image $I_w$ is then obtained as follows:

$$I_w = I_o + I_r$$

(2) Noise layer: A joint noise layer is constructed by fitting a mathematical function to each type of noise. Its input is the watermarked image and its output is the noisy image. (3) Decoder: The decoder, with its own parameters, takes the noisy image as input and outputs the decoded watermark. (4) Adversary: The adversary, with its own parameters, is trained to distinguish the watermarked image from the original image. This pushes the encoder to fuse the features of the watermark and the original image more effectively, and thus improves the quality of the watermarked image.

Figure 1.

Overall framework of our scheme. The framework has four modules: (1) encoder, (2) decoder, (3) noise layer, and (4) adversary.

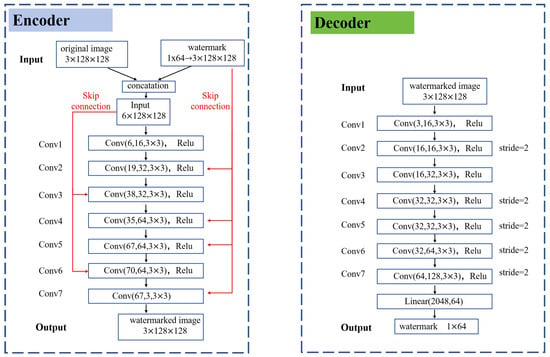

Encoder. The encoder architecture of our model is shown on the left of Figure 2. The encoder E is a trainable network that embeds the watermark into the original image. The watermark is first expanded redundantly to match the dimensions of the original image. This expanded watermark is then concatenated with the cover image to generate a residual image, which is added to the cover image to produce the watermarked image. The encoder consists of seven convolutional layers. To capture features from both the watermark and cover image at multiple levels, outputs from layers 2, 4, 5, and 7 are concatenated with the watermarked image, respectively, while outputs from convolutional layers 3 and 6 are spliced with the original encoded image. Utilizing these multi-level convolutional mappings allows for more efficient learning of the watermark pattern. An MSE loss function is applied during training of the encoder, aimed at minimizing the difference between the watermarked image and the original image.

Figure 2.

Detailed structure diagram of encoder and decoder; both encoder and decoder consist of seven convolutional blocks. Symmetric skip connections in the encoder allow the encoder to fuse multi-scale features.

Decoder. The decoder D is trained to extract the watermark from a noisy image. As shown on the right of Figure 2, the main structure of the decoder is similar to that of the encoder, using seven convolutional layers and one fully connected layer. To ensure the final output has the same structure as the original watermark, the strides of Conv1 and Conv3 are set to the default value of 1, whereas the strides of the remaining layers are set to 2 to reduce the feature tensor size. After Conv7, the remaining features are flattened, and a dense layer outputs a one-dimensional tensor with the same size as the original watermark. We also apply an MSE loss function to train the decoder, minimizing the difference between the recovered watermark and the original watermark so that each bit matches.
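The effect of the stride pattern described above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' code: it assumes "same"-style padding (so a stride of 2 halves each spatial side) and a hypothetical 128 × 128 input, since the actual input size is not restated here.

```python
def feature_size(input_size: int, strides: list[int]) -> int:
    """Spatial side length after a stack of convolutions.

    Assumption: "same"-style padding, so each layer maps a side of
    length n to ceil(n / stride).
    """
    size = input_size
    for stride in strides:
        size = -(-size // stride)  # ceiling division
    return size


# Conv1 and Conv3 use stride 1; the remaining five layers use stride 2.
DECODER_STRIDES = [1, 2, 1, 2, 2, 2, 2]

# Hypothetical 128-pixel input side (an assumption for illustration).
side = feature_size(128, DECODER_STRIDES)
print(side, side * side)  # side length and positions per channel before flattening
```

Under these assumptions the five stride-2 layers shrink each side by a factor of 32, which keeps the flattened tensor fed to the dense layer small.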

Adversary. In our scheme, the adversary consists of three convolutional layers followed by a pooling layer for classification. The adversary is used to predict whether an image contains watermark information. It is trained by minimizing the following loss function. Update parameters to minimize

And update parameters to minimize

Noise layer. The noise layer is a crucial component of the model for achieving robustness. Common differentiable noises, such as crop, Gaussian filtering (GF), Gaussian noise (GN), resize, and salt and pepper (S&P) noise, can be directly modeled using existing mathematical functions. However, for non-differentiable noise types like JPEG compression, existing fitting functions are not particularly effective. To address this issue, we introduce the refined JPEG compression module designed by Reich et al. []. To the best of our knowledge, this is the first application of this module in the field of watermarking. The module provides differentiable versions of quantization, rounding down (floor), truncation (clipping), and other operations, so it can be used directly to train deep learning models while closely matching real JPEG compression. Specifically, for differentiable quantization, the JPEG compression module uses the polynomial approximation proposed by Shin et al. [] to approximate the rounding function in the quantization operation.
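To make the idea of polynomial rounding concrete, the sketch below uses the cubic surrogate commonly associated with Shin et al., round(x) + (x − round(x))³. That the module uses exactly this form is an assumption here, and the function names are hypothetical. The point is that hard rounding has zero gradient almost everywhere, while the surrogate has a nonzero gradient away from integers and stays within 0.125 of the true rounded value.

```python
import math


def hard_round(x: float) -> float:
    """Round half up; stands in for the non-differentiable rounding in JPEG."""
    return float(math.floor(x + 0.5))


def poly_round(x: float) -> float:
    """Cubic surrogate: nearest integer plus the cubed residual (assumed form)."""
    nearest = hard_round(x)
    return nearest + (x - nearest) ** 3


def poly_round_grad(x: float) -> float:
    """Derivative of the surrogate, treating the nearest integer as a constant."""
    nearest = hard_round(x)
    return 3.0 * (x - nearest) ** 2


for value in (2.0, 2.3, 2.5):
    print(value, hard_round(value), poly_round(value), poly_round_grad(value))
```

In an autograd framework the same expression would be written on tensors, with the rounded term detached from the computation graph.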

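In the quantization step, a given JPEG quality Q is mapped to a scale factor s. The scale factor s is calculated as follows:

$$ s = \begin{cases} \left\lfloor \dfrac{5000}{Q} \right\rfloor, & Q < 50 \\ \lfloor 200 - 2Q \rfloor, & \text{otherwise} \end{cases} $$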

Since the scale factor s and the corresponding quantization table need to be stored as integers, a differentiable floor function is necessary. The JPEG compression module uses polynomial rounding to approximate the floor (downward rounding) function, which offers advantages over alternative approximation schemes.

For differentiable quantization table (QT) clipping, the JPEG compression module employs a differentiable clipping technique to constrain values within an appropriate range.
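For reference, the non-differentiable computation that these surrogates replace can be written out directly. The sketch below assumes the standard libjpeg-style quality mapping and table scaling, with entries clipped to [1, 255]; the function names are hypothetical, and the module discussed above would substitute differentiable floor and clipping operations for the hard ones shown here.

```python
# First row of the standard JPEG luminance quantization table.
LUMA_QT_ROW0 = [16, 11, 10, 16, 24, 40, 51, 61]


def quality_to_scale(quality: int) -> int:
    """Map a JPEG quality in [1, 100] to the integer scale factor s."""
    if not 1 <= quality <= 100:
        raise ValueError("quality must be in [1, 100]")
    if quality < 50:
        return 5000 // quality
    return 200 - 2 * quality


def scale_table(table: list[int], quality: int) -> list[int]:
    """Scale quantization entries, floor them, and clip to [1, 255]."""
    s = quality_to_scale(quality)
    scaled = []
    for entry in table:
        value = (entry * s + 50) // 100      # hard floor (non-differentiable)
        scaled.append(min(max(value, 1), 255))  # hard clip (non-differentiable)
    return scaled


for q in (30, 50, 70):
    print(q, quality_to_scale(q), scale_table(LUMA_QT_ROW0, q))
```

Lower quality values give a larger s and therefore coarser quantization steps, which is why the low-quality settings are the harder test cases for watermark recovery.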

3.2. Model Training and Testing Strategy

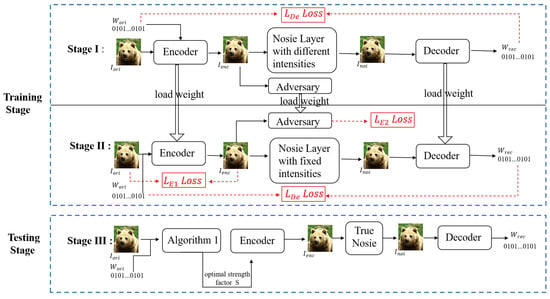

The strategy employed in our scheme comprises three steps: two training stages and a testing stage for adaptive control of watermarking performance through strength factor adjustment. During training, the method learns to generate residual images that are robust to various high-intensity distortions. At test time, a strength-balanced watermarking optimization algorithm is applied to adaptively produce high-quality watermarked images under diverse noise conditions. The entire model training and testing process is shown in Figure 3.

Figure 3.

Two-stage training and adaptive testing framework for robust watermarking. Stage I stabilizes feature learning under variable distortions, while Stage II refines imperceptibility and robustness via multi-loss optimization; Stage III applies strength-balanced embedding at inference time.

In the first training stage, the model is trained under various strong noise distortion conditions. To enhance stability and accelerate training, only the loss function of the decoder is used in this phase, as represented by the following equation.

$$\mathcal{L}_{D} = \mathrm{MSE}(W, W')$$

where $W$ and $W'$ denote the original and decoded watermarks, respectively.

At the same time, to improve robustness of the model under combined noise conditions, we choose to train a single model that can withstand a variety of noises, rather than training separate models for each type of noise. The noise layer we used is shown as follows. When training the model, the watermarked image is randomly distorted by one of the noises in the noise pool before decoding, which ensures that the model has good robustness to various types of noises. By covering such aggressive distortions early in training, the model learns generalized robustness across diverse attack scenarios.

As shown in corresponding variables in Table 2, during the training process, the model randomly selects different types of noises with varying intensities each time. Consequently, the model learns generalized features under diverse noise conditions. The first stage of training is designed to enable the decoder to efficiently learn robust features for various types of noise.

Table 2.

The parameter settings of the noise pool in different training stages.
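The random selection from the noise pool can be sketched as follows. This is an illustration only: the three distortions, their intensity ranges, and all function names are hypothetical placeholders rather than the settings of Table 2, and images are represented as flat lists of pixel values in [0, 1] to keep the example dependency-free.

```python
import random


def gaussian_noise(pixels, sigma, rng):
    """Add zero-mean Gaussian noise and clamp to [0, 1]."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


def salt_and_pepper(pixels, ratio, rng):
    """Replace each pixel by 0 or 1 with probability `ratio`."""
    return [rng.choice((0.0, 1.0)) if rng.random() < ratio else p for p in pixels]


def identity(pixels, strength, rng):
    """No distortion; strength is ignored."""
    return list(pixels)


# (name, function, (min strength, max strength)); ranges are assumptions.
NOISE_POOL = [
    ("identity", identity, (0.0, 0.0)),
    ("gaussian_noise", gaussian_noise, (0.01, 0.10)),
    ("salt_and_pepper", salt_and_pepper, (0.01, 0.10)),
]


def apply_random_noise(pixels, rng):
    """Pick one distortion and one intensity at random, as in stage one."""
    name, distort, (low, high) = rng.choice(NOISE_POOL)
    strength = rng.uniform(low, high)
    return name, strength, distort(pixels, strength, rng)


rng = random.Random(0)
watermarked = [0.5] * 16
name, strength, noisy = apply_random_noise(watermarked, rng)
print(name, round(strength, 3), len(noisy))
```

Drawing a fresh distortion type and intensity per step means a single decoder sees the whole pool during training, instead of one model being trained per noise type.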

In the second stage, building upon the robust decoder obtained from the first stage of training, the model is further trained using all the loss functions. This ensures that the model benefits from both initial robustness and optimization across all parameters.
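The difference between the two stages can be summarized as a choice of loss terms. The sketch below is an assumption-laden illustration: it treats "all the loss functions" as a weighted sum of the encoder MSE, the decoder MSE, and an adversarial term, and the weight names and default values are hypothetical rather than the paper's settings.

```python
def mse(a, b):
    """Mean squared error between two equally long sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def stage_loss(stage, cover, watermarked, bits, decoded,
               adversarial_term=0.0, w_enc=1.0, w_dec=1.0, w_adv=1.0):
    """Stage 1: decoder loss only. Stage 2: weighted sum of all terms.

    The weights are hypothetical placeholders, not the paper's values.
    """
    decoder_loss = mse(bits, decoded)
    if stage == 1:
        return w_dec * decoder_loss
    if stage == 2:
        encoder_loss = mse(cover, watermarked)
        return w_enc * encoder_loss + w_dec * decoder_loss + w_adv * adversarial_term
    raise ValueError("stage must be 1 or 2")


cover, watermarked = [0.0, 0.0], [1.0, 1.0]
bits, decoded = [1.0, 0.0], [1.0, 1.0]
print(stage_loss(1, cover, watermarked, bits, decoded))
print(stage_loss(2, cover, watermarked, bits, decoded, adversarial_term=0.25))
```

In stage one the image-quality terms do not contribute, so gradients push only toward decodability; stage two reintroduces them once the decoder is already robust.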

In this stage, our focus shifts toward enhancing imperceptibility while preserving robustness. To achieve this balance, we fix the distortion parameters at moderate levels, representative of realistic but less extreme perturbations, as shown in Table 2. This design choice enables the model to prioritize perceptual quality without sacrificing resilience under practical conditions, improving the quality of watermarked images while maintaining the robustness of the watermark.

While both our method and TSDL [] adopt a staged training paradigm, their objectives and design philosophies differ. TSDL [] first prioritizes generating high-fidelity watermarked images and only subsequently incorporates robustness considerations. In contrast, our staged design is a targeted technical strategy to achieve strong resilience against composite distortions. The first stage directly optimizes for robustness by exposing the model to increasingly severe synthetic noise and differentiable approximations of common attacks, thereby learning distortion-invariant watermark representations early in training. In the second stage, the focus shifts toward enhancing imperceptibility while preserving robustness.

In the testing phase, to further improve the quality of the watermarked image, we designed a strength-balanced watermarking optimization algorithm that balances the quality of the watermarked image against decoding accuracy; the procedure is shown in Algorithm 1. The core principle is that, since the watermarked image is obtained by directly adding the residual image to the original image, the robustness and imperceptibility of the watermark are mainly determined by the residual image. Therefore, we use a strength factor S to adjust the weight of the residual image during the testing phase. The relationship can be represented as follows:

$$I_w = I_o + S \times I_r$$

where $I_o$, $I_r$, and $I_w$ denote the original, residual, and watermarked images, respectively.

By adjusting S, we aim to identify, for each noise condition, a value that ensures watermark imperceptibility while maintaining an acceptable decoding error rate. In our implementation, the strength-balancing algorithm adopts a sequential search strategy: it starts from a small watermark strength and incrementally increases S by a fixed step until either the extracted watermark achieves the target bit error rate or the maximum number of attempts is reached. This design gives a deterministic upper bound on runtime: for each image, the algorithm performs at most a fixed number of iterations, which is set to 50 in our experiments. The strength factor S is bounded and optimized independently for each test image. Because the search stops at the first S that satisfies the target BER threshold, it selects the weakest embedding found at the chosen step size, and hence the best visual quality, that still preserves robustness.

Algorithm 1.

Method of the strength-balanced watermarking optimization.
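The sequential search described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a reproduction of Algorithm 1: the starting strength, step size, and target BER are hypothetical defaults, `distort` and `decode` are placeholder callables standing in for the noise layer and the trained decoder, and images are flat lists of pixel values.

```python
def bit_error_rate(bits, decoded):
    """Fraction of positions where the decoded bit differs from the original."""
    return sum(1 for b, d in zip(bits, decoded) if b != d) / len(bits)


def embed(cover, residual, strength):
    """Watermarked image = cover + strength * residual."""
    return [c + strength * r for c, r in zip(cover, residual)]


def search_strength(cover, residual, bits, distort, decode,
                    target_ber=0.0, start=0.1, step=0.1, max_attempts=50):
    """Increase the strength by a fixed step until the target BER is met.

    Returns (strength, ber, attempts). If the target is never met, the last
    strength tried is returned. start/step/target_ber are assumed defaults.
    """
    strength, ber = start, 1.0
    for attempt in range(1, max_attempts + 1):
        strength = start + (attempt - 1) * step
        decoded = decode(distort(embed(cover, residual, strength)))
        ber = bit_error_rate(bits, decoded)
        if ber <= target_ber:
            return strength, ber, attempt
    return strength, ber, max_attempts
```

Because the loop stops at the first strength that meets the target, each image receives the weakest residual that still decodes under the given distortion, and the iteration cap bounds the per-image cost.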

4. Experiments

To validate effectiveness of the proposed scheme, this section provides a detailed introduction to the experimental process and results. The content encompasses five main parts: First, we describe the datasets and explain how we chose the evaluation metrics. Second, we detail the experimental steps, including model training and testing setups. Third, we analyze the scheme’s performance in terms of watermark invisibility and robustness. Fourth, we compare our scheme with state-of-the-art schemes to show its advantages. Finally, ablation experiments verify the effectiveness of our refined JPEG compression module and explain why our model is simple yet effective.

4.1. Datasets and Evaluation Metrics

Datasets. Similar to other schemes, we randomly selected 10,000 natural images from the COCO dataset [] as the training set and an additional 1000 images as the validation set. We used 5000 further COCO [] images, disjoint from the training and validation sets, as the test set. To better demonstrate the scheme’s generalization ability, we also randomly selected 1000 images of different categories from the Mini-ImageNet [] dataset as a second test set. The evaluation is based on the average performance metrics reported on the test sets.

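Metrics. To evaluate the performance of our scheme, we use a series of quantitative metrics. The robustness of the watermark is measured by the bit error rate (BER), which is the fraction of bits that differ between the original watermark $W$ and the extracted watermark $W'$. The imperceptibility of watermarked images is measured by the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity (SSIM) index:

$$\mathrm{BER} = \frac{1}{L}\sum_{i=1}^{L} \left| W_i - W'_i \right| \times 100\%$$

$$\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MAX}^2}{\mathrm{MSE}}$$

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $L$ is the watermark length, $\mathrm{MAX}$ is the maximum pixel value of the test image, and $\mathrm{MSE}$ refers to the Mean Squared Error. $\mu$, $\sigma^2$, and $\sigma_{xy}$ refer to the averages, variances, and covariance of the images $x$ and $y$, respectively. $C_1$ and $C_2$ are two constants to prevent unstable results. For better result presentation, we adopt the average strength factor S obtained from the test set as the final result. In the comparison tables with other schemes, the best-performing values are highlighted in bold.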
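The metrics can be computed in a few lines. The sketch below uses the standard definitions of BER and PSNR; for SSIM it computes a single global window over the whole image, which is a simplification of the usual locally windowed SSIM and is labeled as such. The constants k1 = 0.01 and k2 = 0.03 are the conventional defaults, assumed here rather than taken from the text.

```python
import math


def bit_error_rate(original_bits, extracted_bits):
    """Fraction of watermark bits that were decoded incorrectly."""
    wrong = sum(1 for a, b in zip(original_bits, extracted_bits) if a != b)
    return wrong / len(original_bits)


def mse(x, y):
    """Mean squared error between two flat pixel lists."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)


def psnr(original, watermarked, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(original, watermarked)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


def ssim_global(x, y, max_value=255.0, k1=0.01, k2=0.03):
    """SSIM over one global window (simplified; no sliding window)."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * max_value) ** 2, (k2 * max_value) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


print(bit_error_rate([1, 0, 1, 1], [1, 0, 0, 1]))
print(psnr([0, 0, 0, 0], [1, 1, 1, 1]))
```

A uniform error of one gray level on an 8-bit image gives an MSE of 1 and a PSNR of about 48.13 dB, which is a useful reference point when reading the PSNR values reported in the tables.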

Baseline. Our baselines for comparison are HiDDeN [], MBRS [], Adaptor [], and MCFN []. These works all use convolutional neural networks as the model framework and use strength factors to control the experimental results. HiDDeN [] and MBRS [] have released the source code of their models, but we were unable to reproduce the best performance they reported. Adaptor [] and MCFN [] are not yet open-source. Therefore, to respect their reported results, we directly use the results published in MCFN []. Following standard practice in deep image watermarking, we report the mean BER and PSNR over the entire test set. All baselines are evaluated under identical distortion settings to ensure a fair comparison.

4.2. Implementation Details

The model training in this scheme is divided into two stages. During training and testing, all images are uniformly resized to . To maintain consistency with other schemes, we randomly embed bits of information into each image. The entire training process is conducted on NVIDIA GeForce RTX 3090.

In the first stage, the initial learning rate is set to , with a batch size 32, and the weight factor is 10. These parameters were set based on the HiDDeN []. Additionally, the learning rate is automatically halved every 20 epochs to ensure rapid convergence in the early training stages and more precise learning of watermark robustness features. In the second stage, the initial learning rate is set to and a reduced batch size 8, weight factor is 6.18, is 10, and is 0.001.
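The step schedule used in the first stage (halving every 20 epochs) can be written as a one-line function. The initial learning rate of 1e-3 in the usage lines is a hypothetical placeholder for illustration, not the value used in the experiments.

```python
def learning_rate(epoch: int, initial_lr: float, halve_every: int = 20) -> float:
    """Learning rate at a zero-based epoch under periodic halving."""
    return initial_lr * 0.5 ** (epoch // halve_every)


# Hypothetical initial learning rate; the schedule shape is what matters here.
for epoch in (0, 19, 20, 40, 99):
    print(epoch, learning_rate(epoch, 1e-3))
```

Over 100 epochs this gives four halvings, so the final learning rate is one sixteenth of the initial one.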

Both stages involve training for 100 epochs and employ the Adam optimizer with default hyperparameters. For the noise layer, we use a randomly mixed high-intensity noise layer, as described earlier. This scheme aims to enhance the model’s resistance to various distortions by exposing it to different noises, thereby improving the robustness in practical applications.

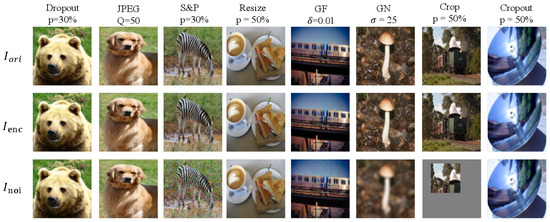

4.3. Imperceptibility and Robustness Tests

We evaluate the imperceptibility and robustness of our scheme using a model trained under combined noise conditions. Extensive tests were conducted with varying noise intensities, and Figure 4 shows the visual quality results for each noise. Each column shows the test results for one specific noise, whose name and intensity are given in the first row. The next two rows show the original image and the watermarked image, which are almost visually indistinguishable, showing that our model has good invisibility. The bottom row shows the noisy image produced by the specific attack.

Figure 4.

Visual comparisons of the experimental results under different traditional noises. Each column corresponds to a type of noise. Top: original image; middle: encoded image; bottom: noisy image.

Table 3 shows quantitative results for robustness and imperceptibility of our blind-watermarking scheme under various single distortions on 1000 Mini-ImageNet images. The metrics include BER, PSNR, SSIM, and a strength factor S which collectively assess both the fidelity of the watermarked image and the accuracy of watermark extraction.

Table 3.

Results of robustness and imperceptibility against one single distortion under 1000 images in Mini-ImageNet dataset.

In the Identity case, our scheme achieves a PSNR of 53.55 dB and a BER of less than 0.01%, which demonstrates the high imperceptibility and robustness of the scheme. Under different types of noise, the performance varies due to their distinct impacts on image structure and frequency content. Overall, the average performance across all distortions shows a BER of 2.40%, a PSNR of 42.70 dB, an SSIM of 0.953, and an S of 0.227, demonstrating consistent performance under diverse attack scenarios.

Table 4 shows the robustness and imperceptibility results of our watermarking scheme under two types of combined noise distortions, tested on 1000 images from the Mini-ImageNet [] dataset. Each row represents a different combination of noise attacks, such as SP followed by Crop, or JPEG compression combined with GF. The PSNR values for all test cases are above 30 dB, which means the watermarked image remains visually very close to the original image, even after multiple distortions. The BER values are kept below 5%, showing that the watermark can still be accurately extracted under complex attack conditions. These results show that our scheme is effective under both single and real-world combined distortions, where multiple attacks occur sequentially. This makes our scheme more practical and reliable for real applications.

Table 4.

The robustness and imperceptibility results of two types of combined noise across 1000 images in the Mini-ImageNet dataset. The meaning of ‘SP(0.1) + Crop(0.5)’ is that the watermarked image is first processed by SP(0.1) and then processed by Crop(0.5) to obtain a noisy image containing two types of distortions.
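The sequential meaning of a label such as ‘SP(0.1) + Crop(0.5)’ can be illustrated by composing two distortion functions in order. The sketch below is illustrative only: images are nested lists of pixel values in [0, 1], the crop is taken from the image center (an assumption; the cropping position used in the experiments is not stated here), and all function names are hypothetical.

```python
import random


def salt_and_pepper(ratio, rng):
    """Return a distortion that sets each pixel to 0 or 1 with probability `ratio`."""
    def apply(image):
        return [[rng.choice((0.0, 1.0)) if rng.random() < ratio else p for p in row]
                for row in image]
    return apply


def center_crop(ratio):
    """Return a distortion that keeps a centered window of `ratio` times each side."""
    def apply(image):
        height, width = len(image), len(image[0])
        new_h = max(1, round(height * ratio))
        new_w = max(1, round(width * ratio))
        top, left = (height - new_h) // 2, (width - new_w) // 2
        return [row[left:left + new_w] for row in image[top:top + new_h]]
    return apply


def chain(*distortions):
    """Apply distortions left to right, as in 'SP(0.1) + Crop(0.5)'."""
    def apply(image):
        for distort in distortions:
            image = distort(image)
        return image
    return apply


rng = random.Random(0)
watermarked = [[0.5] * 8 for _ in range(8)]
noisy = chain(salt_and_pepper(0.1, rng), center_crop(0.5))(watermarked)
print(len(noisy), len(noisy[0]))
```

With a crop ratio of 0.5 applied per side, a quarter of the image area survives, so the decoder must recover the watermark from 25% of the already noise-corrupted pixels.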

4.4. Comparison with the State of the Art

We compare our scheme with several state-of-the-art watermarking schemes, including HiDDeN [], MBRS [], and Adaptor [], which also use strength factors to balance imperceptibility and robustness. To fully evaluate the performance of our scheme, we conduct extensive experiments on 5000 images from the COCO [] dataset under different noise types and intensities. We adopt commonly used noise from prior watermarking studies. These noises are categorized into two types: differentiable and non-differentiable. Differentiable noise includes Crop, Dropout, and Gaussian noise. Non-differentiable noise includes JPEG compression.

4.4.1. Testing of Differentiable Noise

Differentiable noise can be categorized into geometric noise and non-geometric noise. Crop noise is one of the most common geometric distortions, where part of the image is removed. This can seriously affect watermark extraction because the embedded watermark may be partially or completely lost. In our experiments, we test three crop ratios R of 0.3, 0.5, and 0.7, meaning that 9%, 25%, and 49% of the watermarked image is kept, respectively. As shown in Table 5, when , our scheme achieves a BER of only 0.02%, while HiDDeN [], MBRS [], and Adaptor [] have BERs of 15.8%, 9.0%, and 0.86%, respectively. This shows that our scheme can still recover the watermark accurately even when a large portion of the image is cropped. At the same time, our PSNR value reaches 43.52 dB, which is higher than all other schemes, indicating better visual quality.

Table 5.

Comparison of experimental results for different crop ratios under 5000 images in COCO dataset.

Even at smaller crop ratios like , our scheme maintains a low BER and high PSNR, outperforming others.

In non-geometric noise, we choose Gaussian noise and Dropout for comparative evaluation. These noises affect the pixel values directly and can reduce both image quality and watermark extraction accuracy.

Gaussian noise adds random noise to each pixel, which affects the signal strength of the watermark. We test three noise levels: of , , and . From Table 6, at of , our scheme maintains a BER of 0.00% and a PSNR of 43.54 dB, outperforming others. Even at high noise levels, our watermark remains fully recoverable.

Table 6.

Comparison of experimental results of Gaussian noise at different noise levels on 5000 images in the COCO dataset.

Dropout noise randomly removes pixels from the image, which may destroy part of the embedded watermark. In our experiments, we test three dropout ratios R of 0.3, 0.5, and 0.7. As shown in Table 7, when , our scheme achieves a BER of 0.00%, while HiDDeN [] has a BER of 23.5%, MBRS [] is 0.0051%, and Adaptor [] is 0.0050%. This shows that our scheme can still recover the watermark perfectly even when 70% of the pixels are removed. At the same time, our PSNR reaches 50.33 dB, which is higher than all other schemes, indicating excellent visual quality.

Table 7.

Comparison of experimental results of dropout at different ratios under 5000 images in COCO dataset.

This improvement comes from our proposed strength-balanced optimization algorithm, which dynamically adjusts the watermark strength during testing. It ensures strong robustness without sacrificing image quality. These results demonstrate that our scheme is more effective than existing approaches in handling differentiable distortions, both geometric (especially severe cropping) and non-geometric.

4.4.2. Testing of Non-Differentiable Noise

JPEG compression is a common non-differentiable distortion that reduces image quality by removing high-frequency details. This can damage the watermark signal, especially when the compression quality is low. In our experiments, we test three JPEG quality factors Q of 30, 50, and 70. Lower values mean stronger compression and more loss of image information.

As shown in Table 8, at , our scheme achieves a BER of 0.041%, which is much lower than HiDDeN [] (39.8%) and MBRS [] (0.61%), showing strong robustness even under heavy compression. At , our BER drops to 0.001%, indicating nearly perfect watermark recovery. In addition, our PSNR values are slightly lower than those of Adaptor [] and MBRS [], but still above 36 dB, meaning the visual quality remains good. For example, at , our PSNR is 36.16 dB, close to Adaptor’s [], while maintaining a very low BER.

Table 8.

Robustness and imperceptibility test results for JPEG compression on 5000 images from the COCO dataset.

4.4.3. Testing of Combined Noise

To compare our scheme fairly with other schemes under combined noise conditions, we use the same dataset as MCFN [] and randomly select 5000 images as the test set. Table 9 reports each metric for each scheme under the different noises; our scheme achieves a higher PSNR than the other schemes and also excels in the robustness tests.

Table 9.

Results of robustness and imperceptibility compared with different models on 5000 images from the COCO dataset.

To further illustrate the performance of our scheme, we provide a detailed analysis based on the results shown in Table 9. Under Dropout (), our scheme achieves a PSNR of 50.80 dB, which is higher than HiDDeN [] (35.04 dB), MBRS [] (41.6 dB), Adaptor [] (44.53 dB), and MCFN [] (49.75 dB). Similarly, under Crop (), our scheme maintains a high PSNR of 48.46 dB, outperforming all other schemes. In terms of JPEG compression (), our scheme achieves a PSNR of 36.31 dB, which is significantly higher than other schemes. Notably, our scheme also achieves the lowest bit error rate (BER) across all noise types. This comprehensive evaluation confirms that our scheme not only enhances the quality of watermarked images but also ensures robust watermarking under various types of noise conditions.
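For reference, the two metrics compared throughout these tables can be computed as in the following sketch. It assumes the standard definitions (PSNR over 8-bit pixel values with a peak of 255, BER as the percentage of mismatched bits); the paper's own evaluation code is not shown in this section, and the function names are illustrative.

```python
import math

def psnr(cover, marked, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((c - m) ** 2 for c, m in zip(cover, marked)) / len(cover)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)

def ber(sent_bits, decoded_bits):
    """Bit error rate in percent between embedded and decoded messages."""
    errors = sum(s != d for s, d in zip(sent_bits, decoded_bits))
    return 100.0 * errors / len(sent_bits)

cover = [100] * 64
marked = [101] * 64  # every pixel changed by one gray level
print(round(psnr(cover, marked), 2))     # 48.13
print(ber([1, 0, 1, 1], [1, 0, 0, 1]))   # 25.0
```

Changing every pixel by a single gray level already corresponds to about 48 dB, which gives a sense of how small the embedded residual must be to reach the PSNR values reported above.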

4.5. Ablation Experiments

To further validate our design, we conduct ablation experiments that independently assess the two-stage training strategy, the network architecture design, and the differentiable JPEG compression module.

4.5.1. Two-Stage Training Strategy

The two-stage training strategy is introduced to improve model robustness under strong and mixed noise conditions. To verify its necessity, we train the same network in a single stage with the combined total loss, omitting the initial pre-training phase. Under this setting, the watermark decoding rate during training stabilizes at around 50%, which for binary watermark bits is close to chance level. This poor performance suggests that the model struggles to learn robustness against multiple strong distortions simultaneously when all losses are applied from the beginning, because the conflicting gradients from the different loss terms hinder stable convergence. In contrast, our two-stage approach first stabilizes feature learning in the pre-training stage and then enhances robustness in the fine-tuning stage, leading to significantly better generalization.
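The contrast between the two stages can be sketched as a per-epoch noise plan. The sketch below is illustrative only: the noise pool, the linear ramp, the use of a single distortion per epoch in the first stage, and the name `noise_plan` are all assumptions, since this section only states that noise intensity grows gradually in the first stage and that mixed strong noises are applied in the second.

```python
import random

NOISES = ("gaussian", "dropout", "crop", "jpeg")  # assumed noise pool

def noise_plan(epoch, stage1_epochs, rng):
    """Return (noise names, relative strength in [0, 1]) for one epoch."""
    if epoch < stage1_epochs:
        # Stage 1 (assumed): one distortion at a time, strength ramped linearly.
        strength = (epoch + 1) / stage1_epochs
        return (rng.choice(NOISES),), strength
    # Stage 2 (assumed): several distortions combined at full strength.
    k = rng.randint(2, len(NOISES))
    return tuple(rng.sample(NOISES, k)), 1.0

rng = random.Random(0)
for epoch in range(6):
    names, strength = noise_plan(epoch, stage1_epochs=4, rng=rng)
    print(epoch, names, round(strength, 2))
```

The single-stage ablation described above corresponds to skipping the first branch entirely, so that the network faces combined full-strength distortions from the first epoch.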

4.5.2. Network Architecture Design

We evaluate the impact of network architecture by replacing our lightweight design with the more complex model used in MBRS []. During training, the MBRS [] model reaches a BER of 0 on the training set, while its BER on the validation set is as high as 20%, indicating that the model overfits and fails to learn the mixed noise features effectively. Excessive model complexity may be the cause. MBRS contains a large number of attention modules, which tend to focus on feature extraction from the original image. In contrast, our scheme uses only a combination of convolutional layers and watermark embedding, allowing the model to focus on learning the residual image, which helps it converge and achieve better results.

4.5.3. Differentiable JPEG Compression Module

To verify the advantage of the differentiable JPEG compression layer used in our scheme, we take the MBRS [] scheme and replace its original mixed training scheme with our noise layer. We also compare with JPEG-SS, the scheme used in HiDDeN []; the results are shown in Table 10. They show that our module more closely matches real JPEG compression and yields better results in the robustness test. This is because our module more closely simulates the actual quantization and rounding operations in standard JPEG encoding, while still allowing gradient approximation for training. As a result, the model becomes more resilient to JPEG compression noise.
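The role of gradient approximation can be seen by comparing hard rounding with a smooth surrogate. The sketch below uses the cubic approximation round(x) + (x - round(x))^3, a surrogate commonly used in differentiable JPEG simulations; whether the proposed module uses this or another surrogate is not stated in this section, so the code should be read as an illustration of the general idea rather than of the authors' module.

```python
def hard_round_grad(x):
    """Derivative of round(x): zero almost everywhere, so no gradient flows."""
    return 0.0

def cubic_round(x):
    """Smooth surrogate: equals round(x) at integers, with a non-zero slope elsewhere."""
    r = round(x)
    return r + (x - r) ** 3

def cubic_round_grad(x):
    """Derivative of the cubic surrogate with respect to x."""
    return 3.0 * (x - round(x)) ** 2

x = 2.3  # a DCT coefficient divided by its quantization step
print(round(x), hard_round_grad(x))
print(round(cubic_round(x), 3), round(cubic_round_grad(x), 3))
```

With hard rounding the encoder receives no training signal through the quantization step, whereas the surrogate stays close to the true rounded value while passing a small gradient back to the encoder.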

Table 10.

Comparison among MBRS, JPEG-SS, and the differentiable JPEG compression of our scheme.

5. Discussion

Our approach delivers strong robustness and visual quality under a wide range of common image degradations, but several limitations warrant discussion. As shown in Table 11, since the same encoder–decoder architecture is shared between Stage 1 and Stage 2, the total number of parameters in our model is the sum of the parameters used across both stages, amounting to 0.84 M. The overall computational complexity is 3.98G FLOPs, corresponding to the full two-stage inference pipeline. Although our model architecture is lightweight, with fewer parameters and lower FLOPs than other baselines, the sequential training process approximately doubles the total training time compared to single-stage approaches. At inference time, an additional latency arises from our adaptive strength calibration, which tailors watermark intensity to each input image to balance detectability and imperceptibility. Though this step is key to consistent performance across diverse content, it may be prohibitive in latency-sensitive applications. Future efforts could replace this iterative search with a learned predictor to retain benefits while improving speed.

Table 11.

Comparison of model parameters, FLOPs, and inference time on 5000 images from the COCO dataset.

Generalization behavior also reveals both strengths and boundaries. Evaluations on the ImageNet [] dataset show performance comparable to that on in-domain benchmarks, suggesting the model captures transferable features rather than overfitting to specific textures or structures. This resilience is likely reinforced by the aggressive and varied distortion augmentation used during the first training stage. Nevertheless, like most data-driven watermarking schemes, our method assumes that test-time distortions resemble those seen during training. It handles mild, low-intensity unseen perturbations reasonably well but struggles when confronted with stronger unseen distortions. These limitations define the current boundary of our method’s applicability.

For large-scale datasets, the per-image adaptive strength optimization in our inference pipeline introduces non-negligible latency. As shown in Table 11, our method incurs higher inference latency than the baseline approaches, especially under severe distortions that require more iterations. While this component is critical for robustness, it limits scalability. Future work will explore efficient alternatives that reduce runtime without compromising performance.
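The source of this latency can be illustrated with a hypothetical per-image search. The sketch assumes that the optimization tries candidate strength factors in increasing order and stops at the first one whose decoded BER is acceptable; the actual strength-balanced algorithm may differ, and `calibrate_strength`, `decode_ber`, and the toy BER curve are invented for illustration.

```python
def calibrate_strength(decode_ber, strengths, max_ber=0.0):
    """Return the smallest candidate strength whose BER is within `max_ber`.

    `decode_ber(s)` stands for one full embed, distort, and decode pass at
    strength `s`, so every loop iteration costs one such pass.
    """
    for s in sorted(strengths):
        if decode_ber(s) <= max_ber:
            return s
    return max(strengths)  # fall back to the strongest embedding

def toy_ber(s):
    """Toy stand-in: BER (in percent) falls linearly and reaches 0 at s >= 0.5."""
    return max(0.0, 10.0 - 20.0 * s)

print(calibrate_strength(toy_ber, [0.2, 0.4, 0.6, 0.8, 1.0]))  # 0.6
```

Under this assumption, harsher distortions push the first acceptable strength further along the candidate list, which is consistent with the longer runtimes reported for severe distortions. A learned predictor would replace the loop with a single forward pass.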

From a practical standpoint, our scheme is well-suited for copyright protection and content provenance tracking in digital media distribution. By ensuring reliable watermark recovery even after common editing operations, it enables creators and platforms to verify ownership or trace unauthorized reuse. Moreover, the core principles of our framework offer a reference design that can be adapted to video watermarking.

6. Conclusions

In this paper, to further improve the robustness and imperceptibility of watermarking under combined noise conditions, we propose a two-stage training strategy that yields a model with high robustness to multiple types of noise. In the testing stage, we employ a strength-balanced watermark optimization algorithm to further improve imperceptibility while maintaining high robustness. Moreover, to enhance robustness to JPEG compression, we introduce a differentiable fine-grained JPEG compression module. Experimental results show that our scheme outperforms existing schemes and demonstrates significant practical value. Specifically, the scheme allows the strength factor to be selected according to the application scenario to achieve the best performance. In future studies, we will continue to optimize the algorithm to improve its efficiency and extend the scheme so that it works effectively under more complex distortion conditions, such as print-and-scan or print-and-shoot scenarios.

Author Contributions

Conceptualization, H.D. and X.Y.; methodology, H.D. and F.C.; software, H.D.; validation, P.G., R.L., and X.Y.; formal analysis, F.C.; investigation, H.D.; resources, X.Y.; data curation, H.D.; writing—original draft preparation, H.D.; writing—review and editing, H.D.; visualization, P.G.; supervision, F.C.; project administration, H.D.; funding acquisition, X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant 62271496.

Data Availability Statement

The data presented in this study are available upon request from the corresponding authors.

Acknowledgments

We sincerely appreciate all the anonymous reviewers for their insightful and constructive comments to improve this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hu, K.; Wang, M.; Ma, X.; Chen, J.; Wang, X.; Wang, X. Learning-based image steganography and watermarking: A survey. Expert Syst. Appl. 2024, 249, 123715.

- Hosny, K.M.; Magdi, A.; ElKomy, O.; Hamza, H.M. Digital image watermarking using deep learning: A survey. Comput. Sci. Rev. 2024, 53, 100662.

- Szepanski, W. A signal theoretic method for creating forgery-proof documents for automatic verification. In Proceedings of the Carnahan Conference on Crime Countermeasures, Lexington, KY, USA, 16–18 May 1979; pp. 101–109.

- Cox, I.; Miller, M.; Bloom, J.; Fridrich, J.; Kalker, T. Digital Watermarking and Steganography; Morgan Kaufmann Publishers: Amsterdam, The Netherlands, 2008.

- Singh, O.P.; Singh, A.K.; Srivastava, G.; Kumar, N. Image watermarking using soft computing techniques: A comprehensive survey. Multimed. Tools Appl. 2021, 80, 30367–30398.

- Mukherjee, D.P.; Maitra, S.; Acton, S.T. Spatial domain digital watermarking of multimedia objects for buyer authentication. IEEE Trans. Multimed. 2004, 6, 1–15.

- Karybali, I.G.; Berberidis, K. Efficient spatial image watermarking via new perceptual masking and blind detection schemes. IEEE Trans. Inf. Forensics Secur. 2006, 1, 256–274.

- Hua, G.; Xiang, Y.; Zhang, L.Y. Informed histogram-based watermarking. IEEE Signal Process. Lett. 2020, 27, 236–240.

- Zhou, N.R.; Hu, L.L.; Huang, Z.W.; Wang, M.M.; Luo, G.S. Novel multiple color images encryption and decryption scheme based on a bit-level extension algorithm. Expert Syst. Appl. 2024, 238, 122052.

- Hamidi, M.; Haziti, M.E.; Cherifi, H.; Hassouni, M.E. Hybrid blind robust image watermarking technique based on DFT-DCT and Arnold transform. Multimed. Tools Appl. 2018, 77, 27181–27214.

- Zhou, N.R.; Wu, J.W.; Chen, M.X.; Wang, M.M. A Quantum Image Encryption and Watermarking Algorithm Based on QDCT and Baker map. Int. J. Theor. Phys. 2024, 63, 100.

- Parah, S.A.; Sheikh, J.A.; Akhoon, J.A.; Loan, N.A.; Bhat, G.M. Information hiding in edges: A high capacity information hiding technique using hybrid edge detection. Multimed. Tools Appl. 2018, 77, 185–207.

- Rabie, T.; Kamel, I. Toward optimal embedding capacity for transform domain steganography: A quad-tree adaptive-region approach. Multimed. Tools Appl. 2017, 76, 8627–8650.

- Zhu, J. HiDDeN: Hiding data with deep networks. arXiv 2018, arXiv:1807.09937.

- Wen, B.; Aydore, S. ROMark: A robust watermarking system using adversarial training. arXiv 2019, arXiv:1910.01221.

- Luo, X.; Zhan, R.; Chang, H.; Yang, F.; Milanfar, P. Distortion agnostic deep watermarking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 13548–13557.

- Mun, S.M.; Nam, S.H.; Jang, H.; Kim, D.; Lee, H.K. Finding robust domain from attacks: A learning framework for blind watermarking. Neurocomputing 2019, 337, 191–202.

- Ahmadi, M.; Norouzi, A.; Karimi, N.; Samavi, S.; Emami, A. ReDMark: Framework for residual diffusion watermarking based on deep networks. Expert Syst. Appl. 2020, 146, 113157.

- Zhang, C.; Benz, P.; Karjauv, A.; Sun, G.; Kweon, I.S. UDH: Universal deep hiding for steganography, watermarking, and light field messaging. Adv. Neural Inf. Process. Syst. 2020, 33, 10223–10234.

- Liu, Y.; Guo, M.; Zhang, J.; Zhu, Y.; Xie, X. A novel two-stage separable deep learning framework for practical blind watermarking. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1509–1517.

- Jia, Z.; Fang, H.; Zhang, W. MBRS: Enhancing robustness of DNN-based watermarking by mini-batch of real and simulated JPEG compression. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 41–49.

- Wallace, G.K. The JPEG still picture compression standard. Commun. ACM 1991, 34, 30–44.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Kandi, H.; Mishra, D.; Gorthi, S.R.S. Exploring the learning capabilities of convolutional neural networks for robust image watermarking. Comput. Secur. 2017, 65, 247–268.

- Wang, B.; Wu, Y.; Wang, G. Adaptor: Improving the robustness and imperceptibility of watermarking by the adaptive strength factor. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 6260–6272.

- Reich, C.; Debnath, B.; Patel, D.; Chakradhar, S. Differentiable JPEG: The devil is in the details. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Tucson, AZ, USA, 28 February–4 March 2024; pp. 4126–4135.

- Shin, R.; Song, D. JPEG-resistant adversarial images. In Proceedings of the NIPS 2017 Workshop on Machine Learning and Computer Security, Long Beach, CA, USA, 8 December 2017; Volume 1, p. 8.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami Beach, FL, USA, 20–25 June 2009; pp. 248–255.

- Wu, Y.; Wang, B.; Wang, G.; Liao, X. MCFN: Multi-scale Crossover Feed-forward Network for high performance watermarking. Neurocomputing 2025, 622, 129282.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).