Explainable AI for Federated Learning-Based Intrusion Detection Systems in Connected Vehicles

Abstract

1. Introduction

1.1. Contributions

- V2I Federated IDS: We develop a unified FL-based intrusion detection framework that secures both in-vehicle (Controller Area Network, CAN) and external (IP-based) communications. By jointly modeling internal and external data streams, the dual-layer design covers threat behaviors that span both in-vehicle and vehicle-to-infrastructure (V2I) interactions.

- Federated Explainability Aggregation: A novel explainability aggregation mechanism is introduced to combine client-side SHAP and LIME explanations into a global interpretability map. Unlike existing studies that apply explainability post hoc, our approach embeds XAI computation directly into the federated training process. This integration allows continuous learning of globally significant features and provides transparency during model convergence.

- Personalized and Adaptive FL-XAI Framework: Each participating vehicle maintains a personalized model variant that dynamically adapts to its specific operational and communication conditions. The central server aggregates explanation maps from all clients to guide personalized updates, ensuring context-aware threat detection and consistent interpretability across diverse vehicular environments.

1.2. Organization of the Paper

2. Background and Related Work

2.1. FL in IoT and Vehicular Networks

2.2. Explainable AI in Security-Critical and Federated Systems

2.3. Explainability in FL and Open Challenges

3. Proposed Method

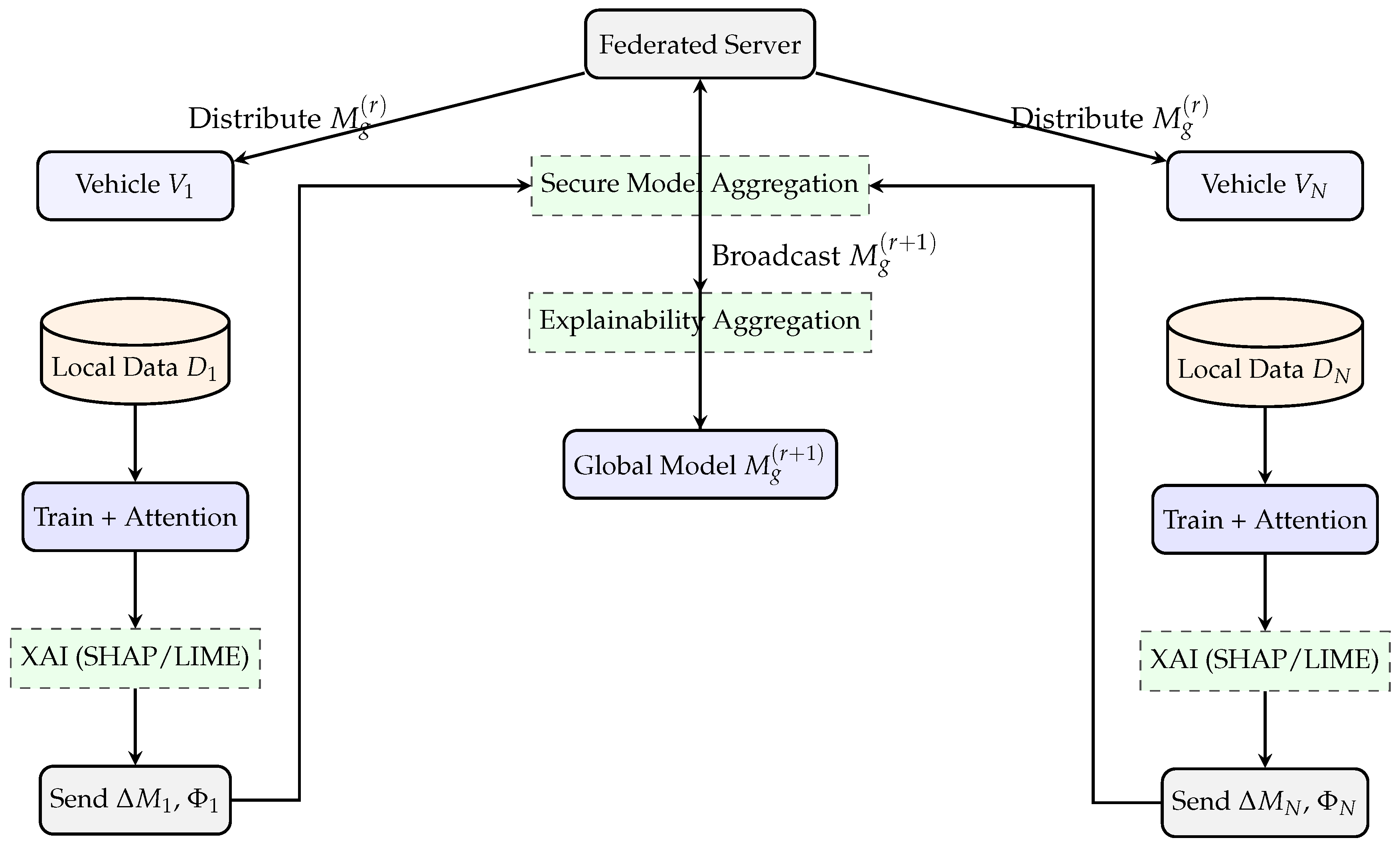

3.1. Federated Explainability Aggregation Mechanism

3.2. Explainable AI Integration

- Attention-based interpretability: During local training, attention modules assign dynamic weights to temporal and feature-level dependencies, enabling the visualization of influential CAN IDs and network attributes that drive the detection outcomes.

- Local explanations: Post-training, SHAP and LIME are applied to derive feature attribution scores and instance-level rationales. These local explanations help engineers understand the causes of detected anomalies, identify potential misclassifications, and mitigate false alarms.

- Federated aggregation of explanations: The server collects high-importance features from all clients’ SHAP results and constructs a global interpretability map. This federated map uncovers system-wide intrusion trends and cross-client feature relevance without requiring any raw data exchange.
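The server-side aggregation of explanations described above can be sketched as follows. This is a minimal illustration, not the exact rule used in this work: it assumes each client reports a dictionary of mean absolute SHAP scores per feature, keeps only each client's top-k features, and combines them with a sample-size-weighted mean. The function names, the feature names, and the choice of k are all hypothetical.

```python
from collections import defaultdict


def top_k_features(importance, k):
    """Keep the k features with the largest importance score."""
    ranked = sorted(importance.items(), key=lambda kv: kv[1], reverse=True)
    return dict(ranked[:k])


def aggregate_explanations(client_maps, client_sizes, k=3):
    """Combine per-client top-k importance maps into one global map.

    Assumption: a feature's global score is the sample-size-weighted mean of
    the normalized scores reported by the clients. A client that did not
    report a feature contributes zero for it.
    """
    total = sum(client_sizes)
    global_map = defaultdict(float)
    for importance, n in zip(client_maps, client_sizes):
        top = top_k_features(importance, k)
        norm = sum(top.values()) or 1.0  # guard against an all-zero map
        for name, score in top.items():
            global_map[name] += (n / total) * (score / norm)
    # Return features ordered from most to least important.
    return dict(sorted(global_map.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical mean |SHAP| scores from two vehicles (feature names are made up).
clients = [
    {"can_id": 0.50, "dlc": 0.10, "pkt_rate": 0.30, "ttl": 0.05},
    {"can_id": 0.20, "dlc": 0.05, "pkt_rate": 0.60, "ttl": 0.15},
]
sizes = [100, 300]
global_map = aggregate_explanations(clients, sizes, k=2)
print(global_map)
```

Only feature names and scalar scores leave each client in this sketch, which is consistent with the requirement that no raw data be exchanged.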

3.3. Architecture

Algorithm 1. Explainable FL-based Intrusion Detection System for Connected Vehicles (XAI-FL-IDS)

- Line 1: The global model is initialized at the server. This model will be iteratively refined through federated training.

- Lines 2–3: For each communication round, the current global model is distributed to all N client vehicles participating in training.

- Lines 4–5: Each vehicle loads its private local dataset, which is composed of samples from the CICIoV2024 and CICEVSE2024 datasets. The local model is initialized using the global model received from the server.

- Lines 6–8: Each vehicle trains the local model for E epochs using stochastic gradient descent (SGD) with a fixed learning rate. During training, attention weights are computed to capture the most influential input features, enabling built-in interpretability.

- Line 9: After training, SHAP (SHapley Additive exPlanations) is applied to the local model to compute global feature importance scores, resulting in an explanation map for each client.

- Line 10: Optionally, LIME is applied to generate local, instance-specific explanations to support case-level interpretability. In our experiments, this optional step was applied.

- Line 11: Each client sends its model update and local explanation map to the central server.

- Line 12: The server aggregates all client model updates to construct a new global model. A robust aggregation rule, such as Krum, may be used to enhance resistance to adversarial updates.

- Line 13: In parallel, the server aggregates local explanation maps to generate a global interpretability map, summarizing the most important features across all clients.

- Line 14: The server uses the global explanation map to guide attention-based weighting or refinement of the next-round global model.

- Line 15: After all R communication rounds, the algorithm returns the final global model and its associated global explanation map.
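Line 12 names Krum as one possible robust aggregation rule. The sketch below illustrates the standard Krum selection step on flattened update vectors. It is an illustration under stated assumptions rather than the configuration used in the experiments: the number of tolerated adversarial clients `num_byzantine` and the toy update values are hypothetical.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two flattened updates."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def krum(updates, num_byzantine):
    """Select a single client update with the Krum rule.

    Each update is scored by the sum of squared distances to its
    n - f - 2 nearest other updates; the update with the lowest score
    is returned together with its index.
    """
    n = len(updates)
    f = num_byzantine
    if n < 2 * f + 3:
        raise ValueError("Krum requires at least 2f + 3 updates")
    closest = n - f - 2
    scores = []
    for i, u in enumerate(updates):
        dists = sorted(
            squared_distance(u, v) for j, v in enumerate(updates) if j != i
        )
        scores.append(sum(dists[:closest]))
    best = min(range(n), key=scores.__getitem__)
    return best, updates[best]


# Four similar (hypothetical) honest updates and one outlier.
updates = [
    [1.0, 1.0],
    [1.1, 0.9],
    [0.9, 1.1],
    [1.0, 1.05],
    [10.0, -10.0],  # stands in for an adversarial update
]
index, chosen = krum(updates, num_byzantine=1)
print(index, chosen)
```

Because the outlier is far from every other update, its score is dominated by large distances and it is never selected; the returned update would then replace the plain average as the new global model for that round.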

3.4. Time Complexity Analysis

4. Experimental Setup

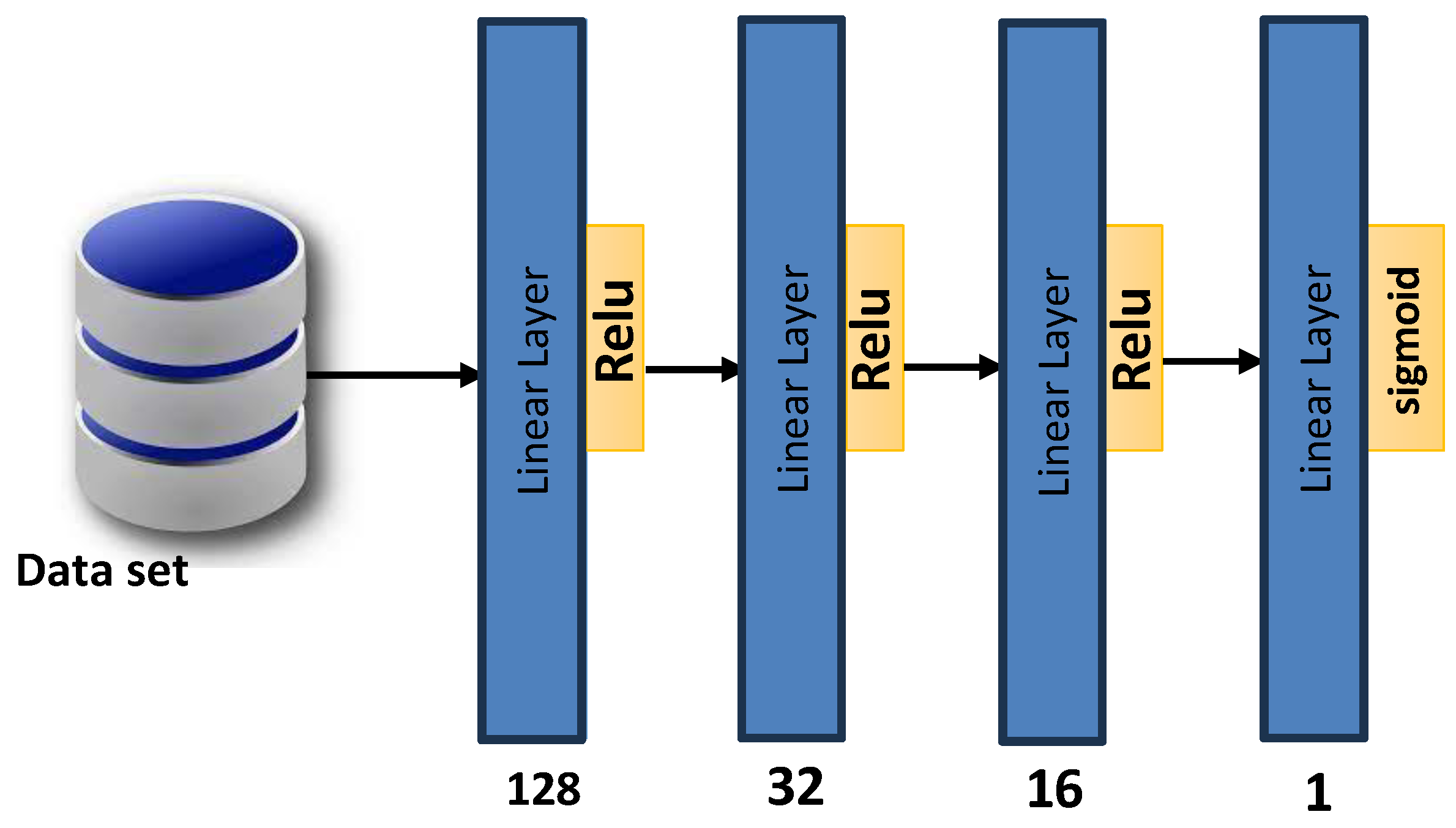

4.1. Local Model

- An input layer of size 128 corresponding to the number of features extracted from each dataset sample.

- A first hidden layer with 32 neurons and ReLU activation.

- A second hidden layer with 16 neurons and ReLU activation.

- An output layer with a single neuron and sigmoid activation, producing a binary classification output indicating benign or malicious traffic.
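The layer sizes listed above can be made concrete with a small forward-pass sketch. It uses only the Python standard library so that the shapes are easy to inspect; the weight initialization, the 0.5 decision threshold, and the convention that an output near 1 means malicious are assumptions, not details taken from the experiments.

```python
import math
import random

LAYER_SIZES = [128, 32, 16, 1]  # input, hidden 1, hidden 2, output


def init_params(sizes, seed=0):
    """Random weights and zero biases for each dense layer."""
    rng = random.Random(seed)
    params = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        scale = math.sqrt(2.0 / fan_in)  # He-style scaling (an assumption)
        weights = [
            [rng.gauss(0.0, scale) for _ in range(fan_in)]
            for _ in range(fan_out)
        ]
        biases = [0.0] * fan_out
        params.append((weights, biases))
    return params


def forward(params, x):
    """ReLU on the two hidden layers, sigmoid on the single output neuron."""
    h = x
    last = len(params) - 1
    for idx, (weights, biases) in enumerate(params):
        z = [
            sum(w * v for w, v in zip(row, h)) + b
            for row, b in zip(weights, biases)
        ]
        if idx < last:
            h = [max(0.0, v) for v in z]
        else:
            h = [1.0 / (1.0 + math.exp(-v)) for v in z]
    return h[0]


def count_params(params):
    """Total number of trainable weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in params)


params = init_params(LAYER_SIZES)
sample = [0.5] * 128  # placeholder feature vector
prob_malicious = forward(params, sample)
label = "malicious" if prob_malicious >= 0.5 else "benign"
print(count_params(params), label)
```

With these layer sizes the network has 4673 trainable parameters (4128 + 528 + 17), which keeps the per-round model update small enough for transmission from resource-constrained vehicles.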

4.2. Simulation Environment

4.3. Datasets Description

4.4. Evaluation Metrics

- Accuracy: The ratio of correctly classified samples to the total number of samples. Although commonly used, accuracy can be misleading on imbalanced datasets. With TP, TN, FP, and FN denoting the numbers of true positives, true negatives, false positives, and false negatives, accuracy is calculated as Equation (7):

  $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{7}$$

- Precision: The ratio of true positive predictions to all predicted positive instances. High precision indicates a low false alarm rate and is crucial for reducing unnecessary alerts in IDS. Precision is calculated as Equation (8):

  $$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{8}$$

- Recall (Detection Rate): The ratio of true positive predictions to all actual positive instances. High recall means fewer missed attacks, which is critical in safety-critical systems like connected vehicles. Recall is calculated as Equation (9):

  $$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{9}$$

- F1-Score: The harmonic mean of precision and recall. It provides a single metric that balances false positives and false negatives, which is especially useful when the classes are imbalanced. F1-Score is calculated as Equation (10):

  $$\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{10}$$

- False Positive Rate (FPR): The proportion of benign traffic incorrectly flagged as malicious. FPR is calculated as Equation (11):

  $$\mathrm{FPR} = \frac{FP}{FP + TN} \tag{11}$$

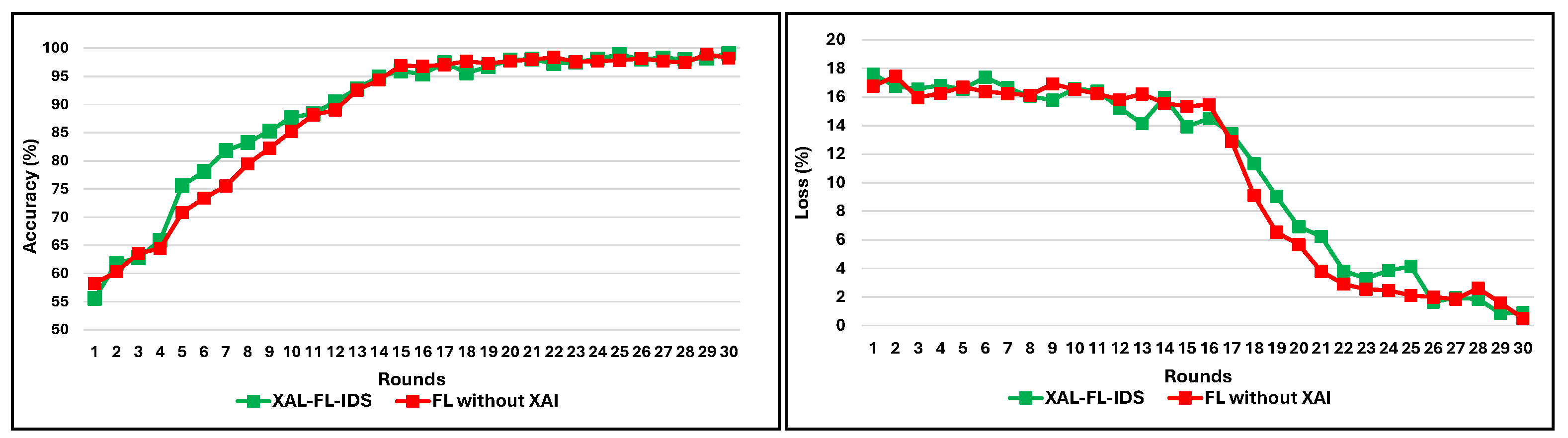

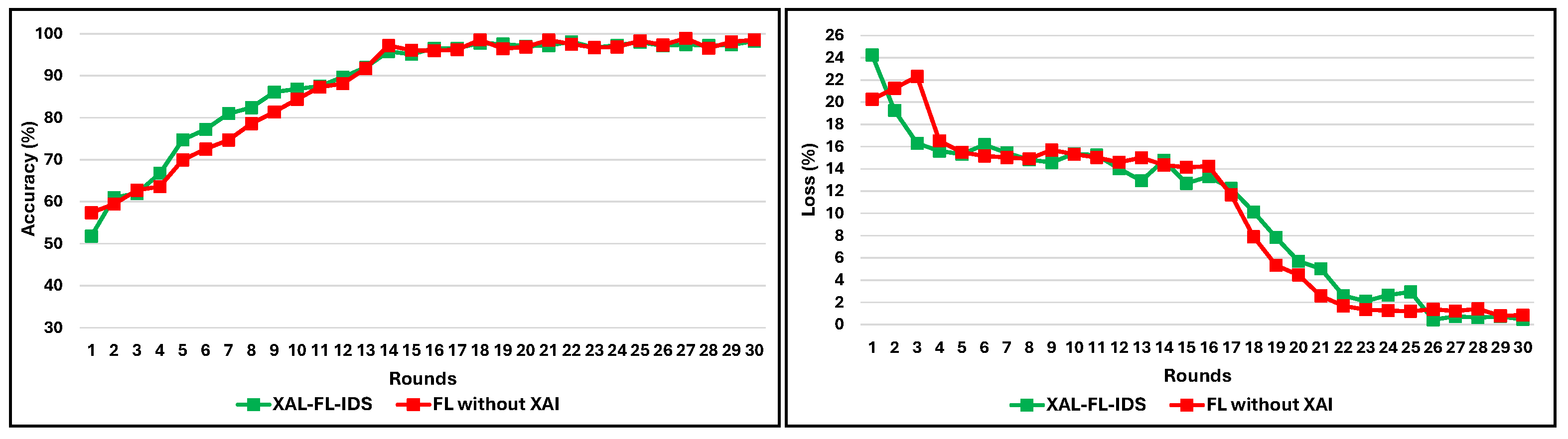

- Training Convergence and Round-wise Accuracy: In FL, we also track model accuracy and loss across communication rounds to assess convergence behavior and training stability.
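The metrics above follow directly from the four confusion-matrix counts. The sketch below computes them with the standard definitions; the function name and the example counts are hypothetical and are not results from this work.

```python
def ids_metrics(tp, tn, fp, fn):
    """Compute IDS evaluation metrics from confusion-matrix counts.

    tp/fn count malicious samples that were detected/missed;
    tn/fp count benign samples that were passed/flagged.
    Ratios with an empty denominator are reported as 0.0.
    """
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "fpr": fp / (fp + tn) if fp + tn else 0.0,
    }


# Hypothetical imbalanced test set: 100 malicious and 900 benign samples.
metrics = ids_metrics(tp=90, tn=880, fp=20, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In this made-up example accuracy is 0.97 while precision is only about 0.82, which illustrates why accuracy alone can be misleading when benign traffic dominates.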

5. Results and Discussion

5.1. Training Convergence and Learning Dynamics

5.2. Classification Performance Comparison

6. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kurachi, Y.; Kubo, K.; Fujii, T. Cybersecurity for 5G-V2X: Attack Surfaces and Mitigation Techniques. In Proceedings of the 93rd IEEE Vehicular Technology Conference (VTC Spring), Virtual, 25 April–19 May 2021.

2. Duan, Z.; Mahmood, J.; Yang, Y.; Berwo, M.A.; Yassin, A.K.A.; Bhutta, M.N.M.; Chaudhry, S.A. TFPPASV: A Three-Factor Privacy Preserving Authentication Scheme for VANETs. Secur. Commun. Netw. 2022, 2022, 8259927.

3. Sudhina Kumar, G.K.; Krishna Prakasha, K.; Balachandra, M.; Rajarajan, M. Explainable Federated Framework for Enhanced Security and Privacy in Connected Vehicles Against Advanced Persistent Threats. IEEE Open J. Veh. Technol. 2025, 6, 1438–1463.

4. Taheri, R.; Gegov, A.; Arabikhan, F.; Ichtev, A.; Georgieva, P. Explainable Artificial Intelligence for Intrusion Detection in Connected Vehicles. Commun. Comput. Inf. Sci. (CCIS) 2025, 2768, 162–176.

5. Baidar, R.; Maric, S.; Abbas, R. Hybrid Deep Learning–Federated Learning Powered Intrusion Detection System for IoT/5G Advanced Edge Computing Network. arXiv 2025, arXiv:2509.15555.

6. Taheri, R.; Pooranian, Z.; Martinelli, F. Enhancing the Robustness of Federated Learning-Based Intrusion Detection Systems in Transportation Networks. In Proceedings of the 2025 IEEE International Conference on High Performance Computing and Communications (HPCC), Exeter, UK, 13–15 August 2025.

7. Buyuktanir, B.; Altinkaya, Ş.; Karatas Baydogmus, G.; Yildiz, K. Federated Learning in Intrusion Detection: Advancements, Applications, and Future Directions. Clust. Comput. 2025, 28, 1–25.

8. Almaazmi, K.I.A.; Almheiri, S.J.; Khan, M.A.; Shah, A.A.; Abbas, S.; Ahmad, M. Enhancing Smart City Sustainability with Explainable Federated Learning for Vehicular Energy Control. Sci. Rep. 2025, 15, 23888.

9. Abdallah, E.E.; Aloqaily, A.; Fayez, H. Identifying Intrusion Attempts on Connected and Autonomous Vehicles: A Survey. Procedia Comput. Sci. 2023, 220, 307–314.

10. Luo, F.; Wang, J.; Zhang, X.; Jiang, Y.; Li, Z.; Luo, C. In-Vehicle Network Intrusion Detection Systems: A Systematic Survey of Deep Learning-Based Approaches. PeerJ Comput. Sci. 2023, 9, e1648.

11. Temitope, A.; Onumanyi, A.J.; Zuccoli, F.; Kolog, E.A. Federated Learning-Based Intrusion Detection for Secure In-Vehicle Communication Networks. In Proceedings of the 14th International Conference on Ambient Systems, Networks and Technologies (ANT), Leuven, Belgium, 23–25 April 2025; pp. 1082–1090.

12. Abdel Hakeem, S.A.; Kim, H. Advancing Intrusion Detection in V2X Networks: A Survey on Machine Learning, Federated Learning, and Edge AI for Security. IEEE Trans. Intell. Transp. Syst. 2025; Early Access.

13. Huang, K.; Wang, H.; Ni, L.; Xian, M.; Zhang, Y. FED-IoV: Privacy-Preserving Federated Intrusion Detection with a Lightweight CNN for Internet of Vehicles. IEEE Internet Things J. 2024; Forthcoming.

14. Xie, N.; Jia, W.; Li, Y.; Li, L.; Wen, M. IoV-BCFL: An Intrusion Detection Method for IoV Based on Blockchain and Federated Learning. Ad Hoc Netw. 2024, 163, 103590.

15. Almansour, S.; Dhiman, G.; Alotaibi, B.; Gupta, D. Adaptive Personalized Federated Learning with Lightweight Convolutional Network for Intrusion Detection in IoV. Sci. Rep. 2025, 15, 35604.

16. Nwakanma, C.I.; Ahakonye, L.A.C.; Njoku, J.N.; Odirichukwu, J.C.; Okolie, S.A.; Uzondu, C.; Ndubuisi Nweke, C.C.; Kim, D.-S. Explainable Artificial Intelligence for Intrusion Detection and Mitigation in Intelligent Connected Vehicles: A Review. Appl. Sci. 2023, 13, 1252.

17. Chamola, V.; Hassija, V.; Sulthana, A.R.; Ghosh, D.; Dhingra, D.; Sikdar, B. A Review of Trustworthy and Explainable Artificial Intelligence (XAI). IEEE Access 2023, 11, 78994–79015.

18. Saheed, Y.K.; Chukwuere, J.E. XAIEnsembleTL-IoV: An Explainable Ensemble Transfer Learning Framework for Zero-Day Botnet Attack Detection in Internet of Vehicles. Electronics 2024, 13, 1134.

19. Alabbadi, A.; Bajaber, F. An Intrusion Detection System over IoT Data Streams Using eXplainable Artificial Intelligence. Sensors 2025, 25, 847.

20. Fatema, K.; Dey, S.K.; Anannya, M.; Khan, R.T.; Rashid, M.M.; Su, C.; Mazumder, R. FedXAI-IDS: A Federated Learning Approach with SHAP-Based Explainable Intrusion Detection for IoT Networks. Future Internet 2025, 17, 234.

21. Zhang, H.; Wu, B.; Yuan, X.; Pan, S.; Tong, H.; Pei, J. Trustworthy Graph Neural Networks: Aspects, Methods, and Trends. Proc. IEEE 2024, 112, 97–139.

22. Wu, B.; Li, J.; Yu, J.; Bian, Y.; Zhang, H.; Chen, C.; Hou, C.; Fu, G.; Chen, L.; Xu, T. A Survey of Trustworthy Graph Learning: Reliability, Explainability, and Privacy Protection. arXiv 2022, arXiv:2205.10014.

23. Sanjalawe, Y.; Allehyani, B.; Kurdi, G.; Makhadmeh, S.; Jaradat, A.; Hijazi, D. Forensic Analysis of Cyberattacks in Electric Vehicle Charging Systems Using Host-Level Data. Comput. Mater. Contin. 2025, 85, 3289–3320.

24. Uddin, M.A.; Chu, N.H.; Rafeh, R.; Barika, M. A Scalable Hierarchical Intrusion Detection System for Internet of Vehicles. arXiv 2025, arXiv:2505.16215.

| Reference | Technique/Approach | Main Outcome |
|---|---|---|
| Temitope et al. [11] | FL-based IDS for secure in-vehicle communication networks | Demonstrated effective privacy-preserving detection in V2X environments. |
| Huang et al. [13] | Federated IDS using lightweight MobileNet-Tiny CNN for IoV | Achieved >98% accuracy with minimal latency on edge devices. |
| Xie et al. [14] | IoV-BCFL: Blockchain-based Federated Learning for secure parameter exchange | Improved trust and auditability of federated model updates. |
| Almansour et al. [15] | Adaptive Personalized FL with depthwise convolutional bottleneck network | +5% precision gain vs. FedAvg; higher recall and F1-score. |
| Nwakanma et al. [16] | Survey on Explainable AI for connected vehicle security | Highlighted open challenges in defining explanation granularity and assessing interpretability quality. |
| Saheed and Chukwuere [18] | XAIEnsembleTL-IoV: Ensemble Transfer Learning with SHAP-based attribution | Detected zero-day botnet attacks and provided interpretable feature attributions. |
| Alabbadi and Bajaber [19] | Real-time IDS with LIME-based explanations | Enabled on-the-fly interpretability for detected anomalies. |
| Fatema et al. [20] | FedXAI-IDS: Federated Learning with SHAP-based explanations | Achieved interpretable, privacy-preserving intrusion detection with maintained confidentiality. |
| Proposed (This Work) | XAI-FL-IDS: Federated Learning with SHAP and LIME integration and attention-based interpretability | 99.07% accuracy (CICEVSE2024) and 96.71% (CICIoV2024); low FPR, high F1-score, and enhanced model transparency. |

| Dataset | Model | Accuracy (%) | FPR (%) |
|---|---|---|---|
| CICEVSE2024 | Centralized IDS [23] | 99.26 | 1.81 |
| CICEVSE2024 | FL without XAI [24] | 98.23 | 2.11 |
| CICEVSE2024 | Local Model Only | 99.31 | 1.07 |
| CICEVSE2024 | XAI-FL-IDS (Proposed) | 99.07 | 1.31 |
| CICIoV2024 | Centralized IDS [23] | 97.71 | 2.33 |
| CICIoV2024 | FL without XAI [24] | 96.14 | 3.21 |
| CICIoV2024 | Local Model Only | 97.53 | 2.19 |
| CICIoV2024 | XAI-FL-IDS (Proposed) | 96.71 | 2.67 |

| Dataset | Model | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| CICEVSE2024 | Centralized IDS [23] | 97.19 | 97.15 | 97.15 |
| CICEVSE2024 | FL without XAI [24] | 97.24 | 97.11 | 97.17 |
| CICEVSE2024 | Local Model Only | 94.8 | 95.5 | 95.2 |
| CICEVSE2024 | XAI-FL-IDS (Proposed) | 98.9 | 98.4 | 98.5 |
| CICIoV2024 | Centralized IDS [23] | 99.9 | 99.9 | 99.9 |
| CICIoV2024 | FL without XAI [24] | 99.9 | 99.9 | 99.9 |
| CICIoV2024 | Local Model Only | 97.63 | 98.11 | 98.02 |
| CICIoV2024 | XAI-FL-IDS (Proposed) | 98.24 | 97.92 | 98.56 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Taheri, R.; Jafari, R.; Gegov, A.; Arabikhan, F.; Ichtev, A. Explainable AI for Federated Learning-Based Intrusion Detection Systems in Connected Vehicles. Electronics 2025, 14, 4508. https://doi.org/10.3390/electronics14224508
