ADPGAN: Anti-Compression Attention-Based Diffusion Pattern Steganography Model Using GAN

Abstract

1. Introduction

2. Related Work

2.1. Image Steganography

2.2. Dense-Net

2.3. Attention Mechanism

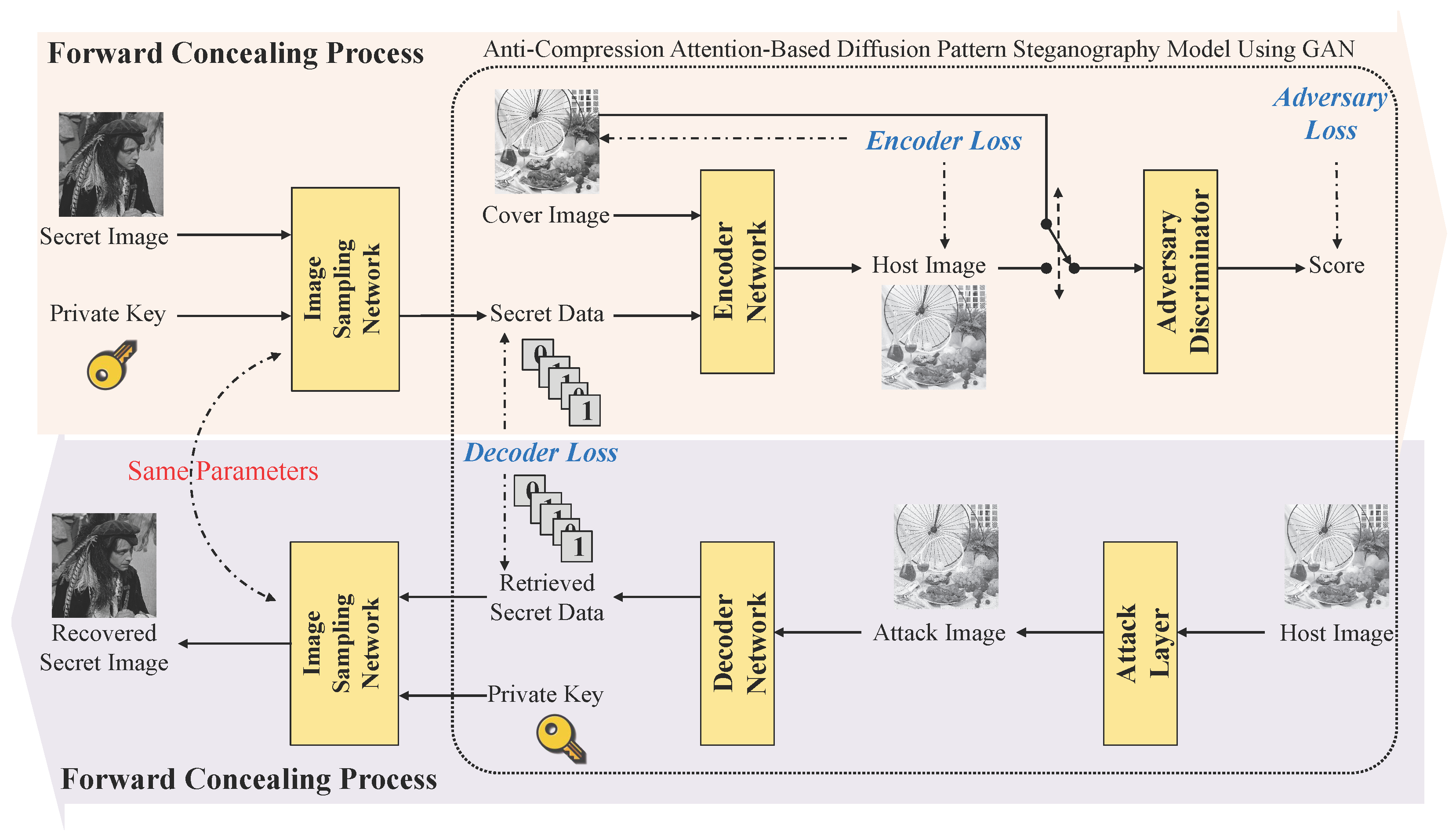

3. Proposed Method

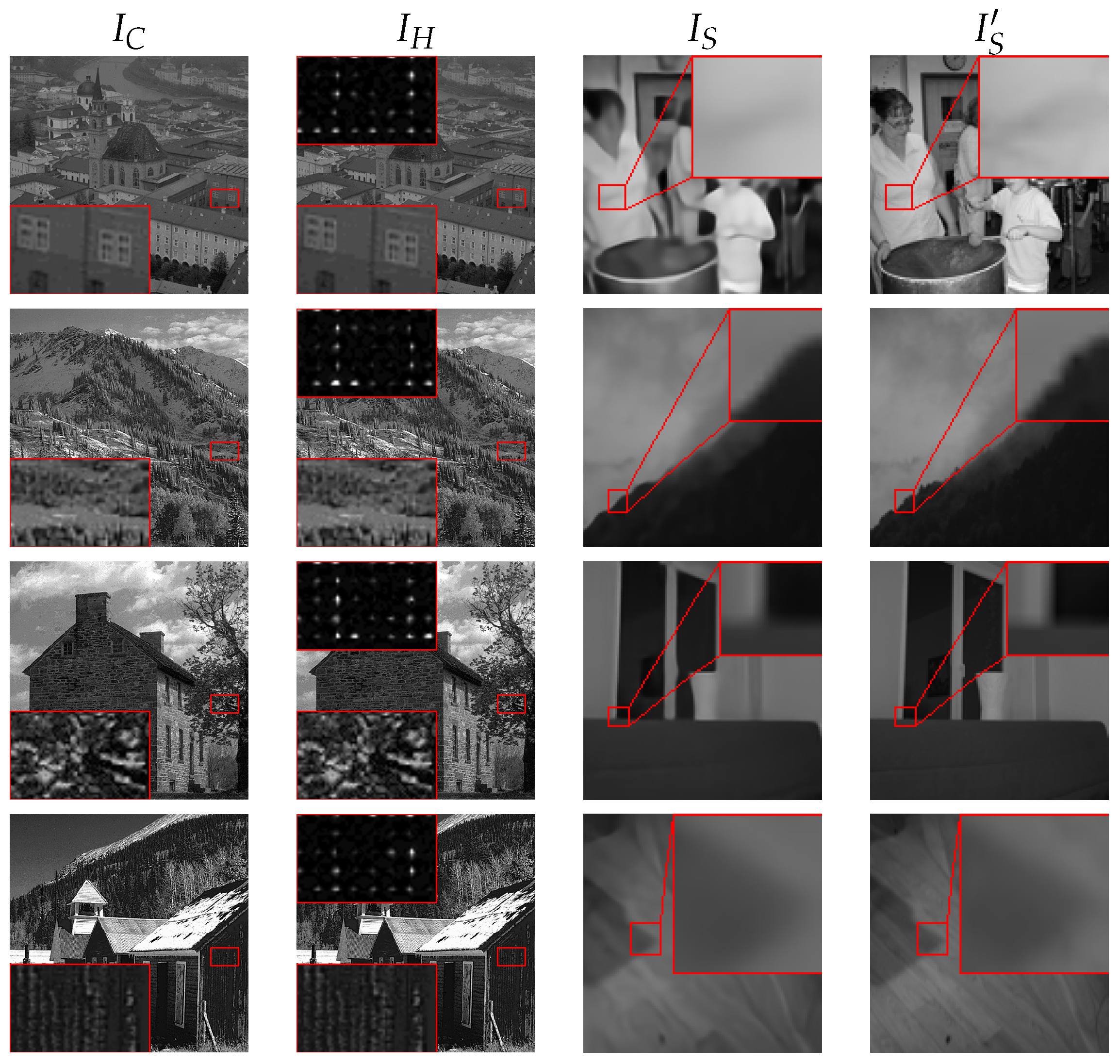

3.1. Image Sampling Network

3.2. Anti-Compression Attention-Based Diffusion Pattern Steganography Model Using GAN

3.2.1. Encoder Network

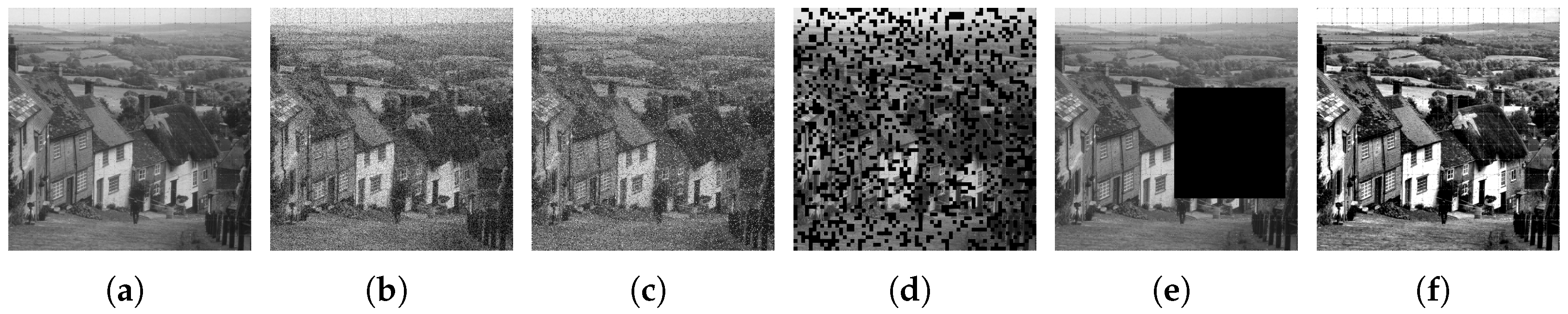

3.2.2. Attack Network

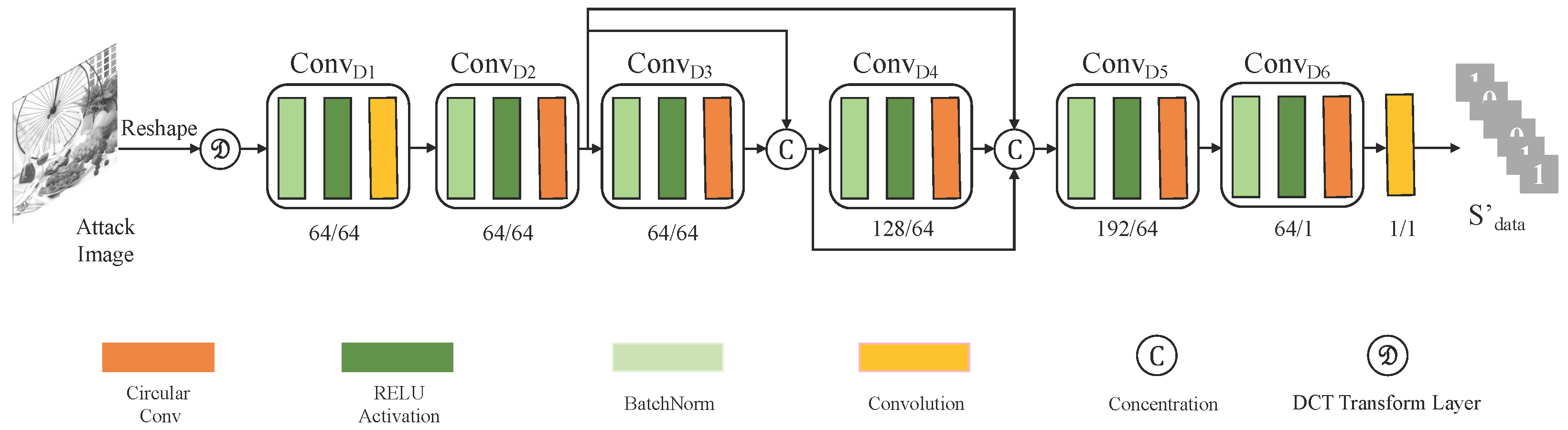

3.2.3. Decoder Network

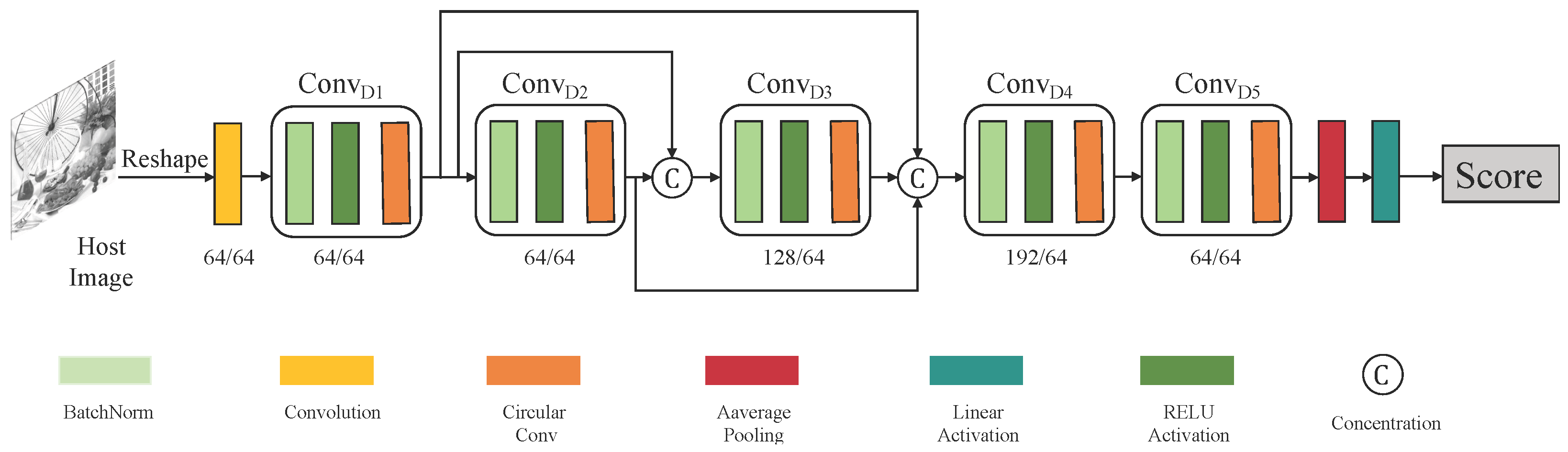

3.2.4. Adversary Discriminator

3.3. Training Objective

4. Experimental Results

4.1. Experimental Setup and Evaluation Metrics

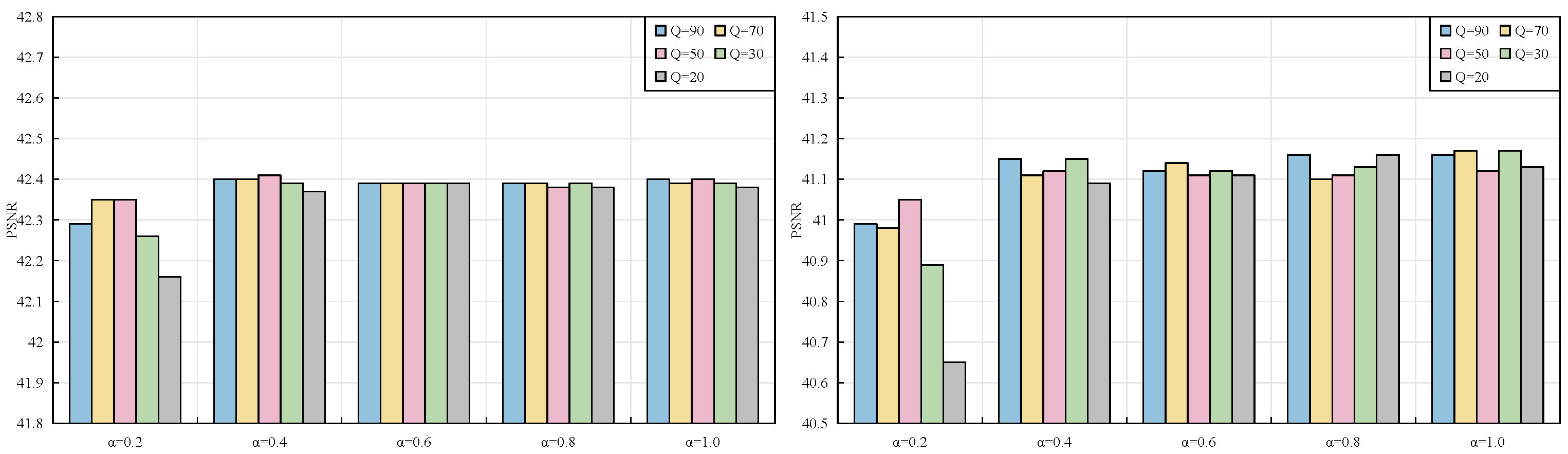

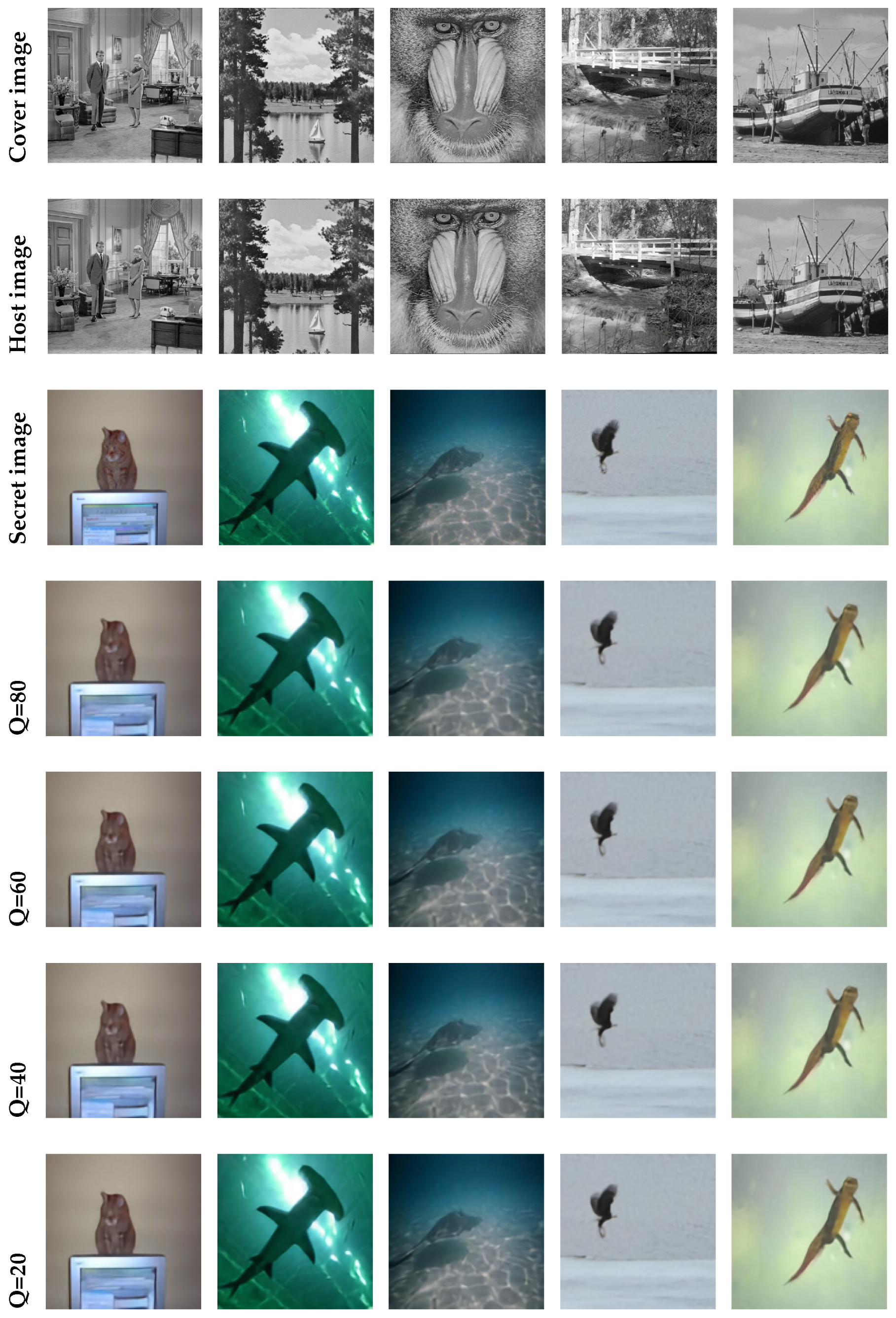

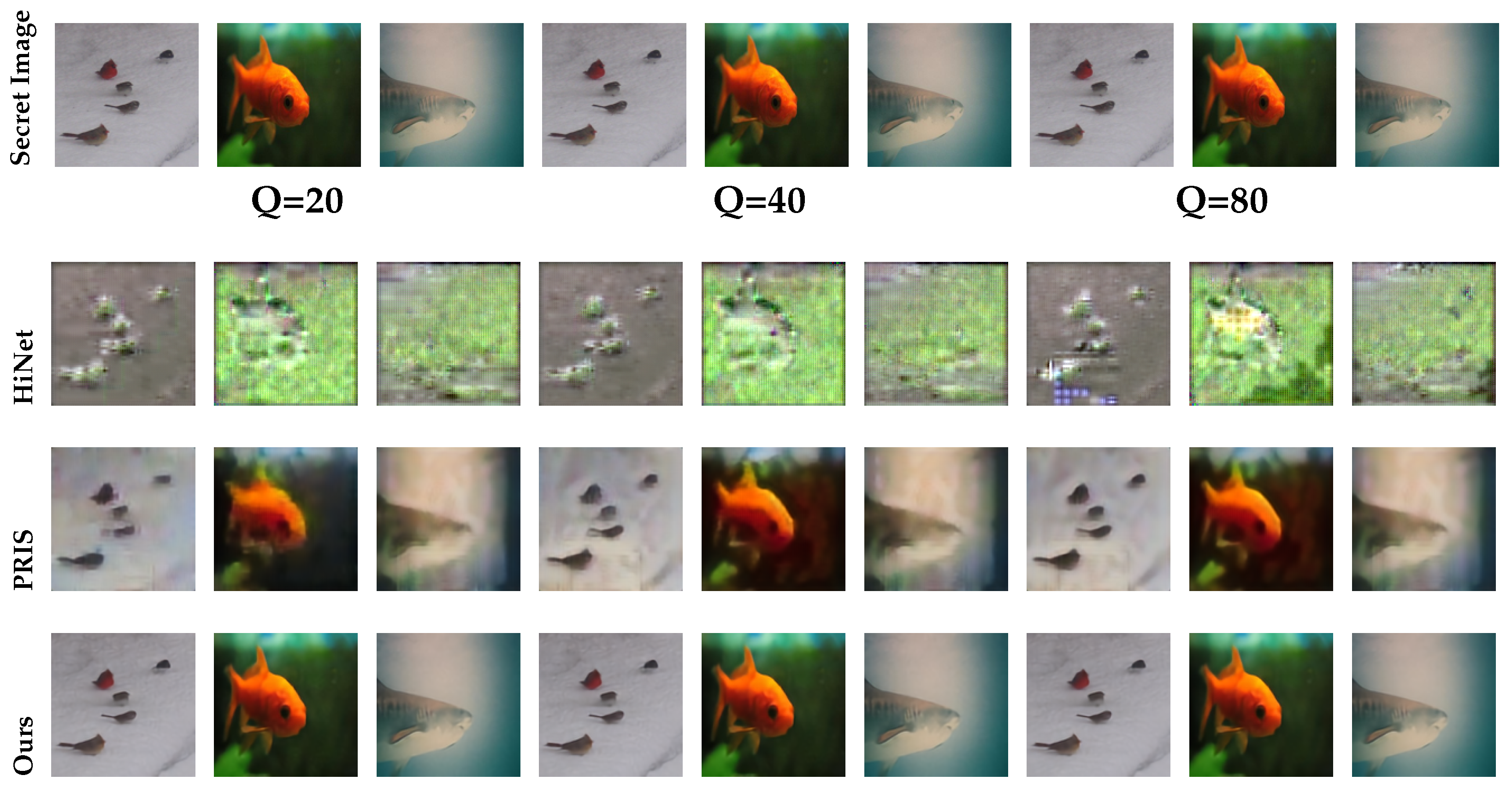

4.2. Framework Invisibility and Robustness Evaluation

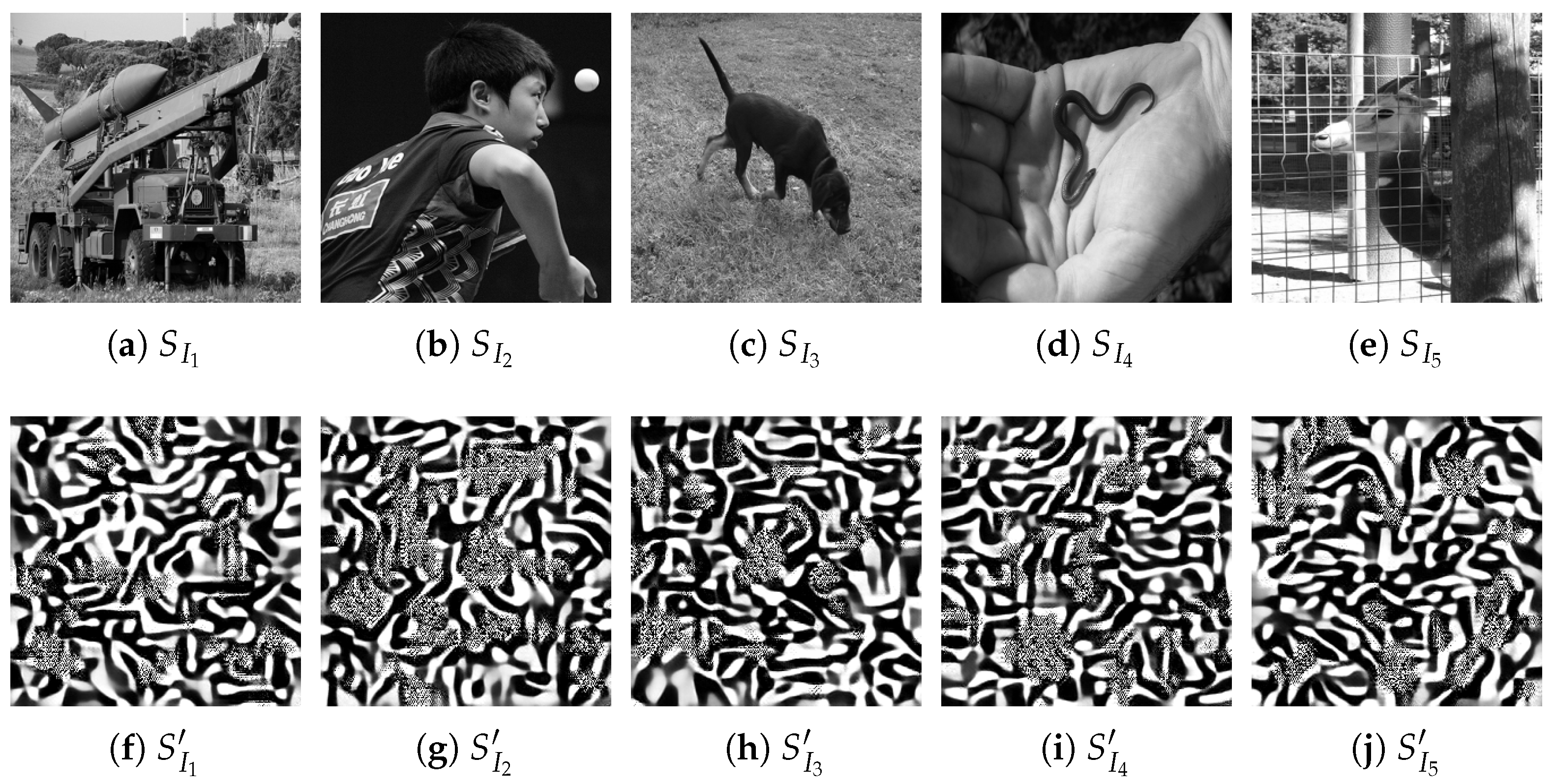

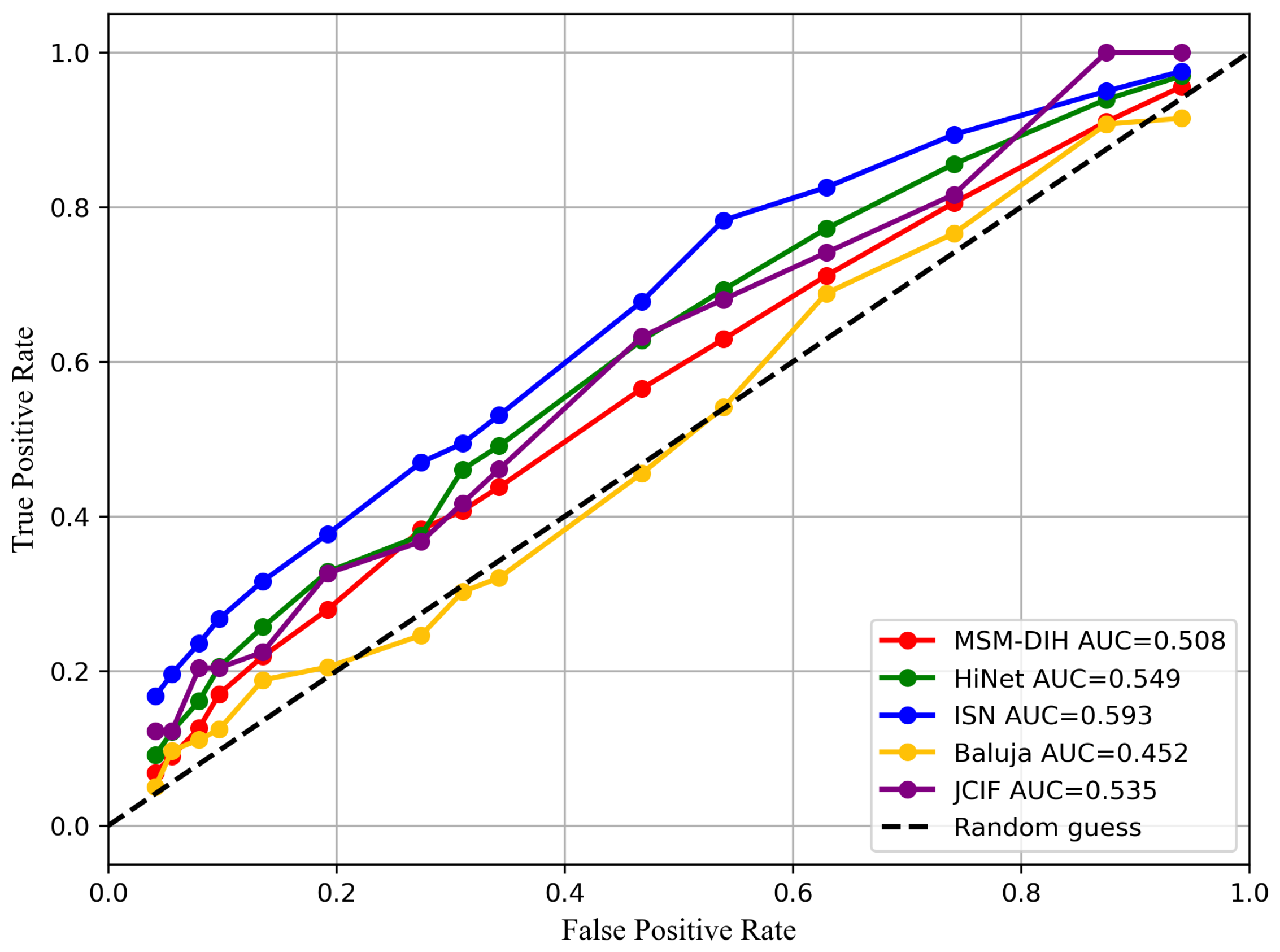

4.3. Framework Security Evaluation

4.4. Ablation Study

4.5. RGB Visualization

4.6. Steganographic Analysis

4.7. Comparison with State-of-the-Art

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Anand, A.; Singh, A.K. A Hybrid Optimization-Based Medical Data Hiding Scheme for Industrial Internet of Things Security. IEEE Trans. Ind. Informat. 2023, 19, 1051–1058.

- [2] Melman, A.; Evsutin, O.; Smirnov, D. An Image Watermarking Algorithm in DCT Domain Based on Optimal Patterns. In Proceedings of the 2023 XVIII International Symposium Problems of Redundancy in Information and Control Systems (REDUNDANCY), Moscow, Russia, 24–27 October 2023; pp. 1–5.

- [3] Kamal, A.A. Searchable encryption of image based on secret sharing scheme. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 1495–1503.

- [4] Peng, Y.; Fu, C.; Cao, G.; Song, W.; Chen, J.; Sham, C.-W. JPEG-compatible joint image compression and encryption algorithm with file size preservation. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 105.

- [5] Yang, H.; Xu, Y.; Liu, X. DKiS: Decay weight invertible image steganography with private key. Neural Netw. 2025, 185, 107148.

- [6] Singh, H.K.; Baranwal, N.; Singh, K.N.; Singh, A.K. GANMarked: Using secure GAN for information hiding in digital images. IEEE Trans. Consum. Electron. 2024, 70, 6189–6195.

- [7] Ahmadi, M.; Norouzi, A.; Karimi, N.; Samavi, S.; Emami, A. ReDMark: Framework for residual diffusion watermarking based on deep networks. Expert Syst. Appl. 2020, 146, 113157.

- [8] Yang, H.; Xu, Y.; Liu, X.; Ma, X. PRIS: Practical robust invertible network for image steganography. Eng. Appl. Artif. Intell. 2024, 133, 108419.

- [9] Jia, Z.; Fang, H.; Zhang, W. MBRS: Enhancing robustness of DNN-based watermarking by mini-batch of real and simulated JPEG compression. In Proceedings of the 29th ACM International Conference on Multimedia, New York, NY, USA, 20–24 October 2021; pp. 41–49.

- [10] Chen, Z.; Liu, Y.; Ke, G.; Wang, J.; Zhao, W.; Lo, S. A region-selective anti-compression image encryption algorithm based on deep networks. Int. J. Comput. Intell. Syst. 2024, 17, 117.

- [11] Mielikainen, J. LSB matching revisited. IEEE Signal Process. Lett. 2006, 13, 285–287.

- [12] Jing, J.; Deng, X.; Xu, M.; Wang, J.; Guan, Z. HiNet: Deep Image Hiding by Invertible Network. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 4713–4722.

- [13] Lu, S.-P.S. Large-capacity Image Steganography Based on Invertible Neural Networks. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10811–10820.

- [14] Cui, Q.; Tang, W.; Zhou, Z.; Meng, R.; Nan, G.; Shi, Y.-Q. Meta Security Metric Learning for Secure Deep Image Hiding. IEEE Trans. Dependable Secur. Comput. 2024, 21, 4907–4920.

- [15] Tembhare, N.P.; Tembhare, P.U.; Chauhan, C.U. Chest X-ray analysis using deep learning. Int. J. Sci. Technol. Eng. 2023, 11, 1441–1447.

- [16] Huang, G.; Liu, Z.; Pleiss, G.; van der Maaten, L.; Weinberger, K.Q. Convolutional networks with dense connectivity. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 8704–8716.

- [17] Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. J. Mach. Learn. Res. 2011, 15, 315–323.

- [18] Szegedy, C.C. Rethinking the inception architecture for computer vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- [19] Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; Volume 42, pp. 2011–2023.

- [20] Tan, J.; Liao, X.; Liu, J.; Cao, Y.; Jiang, H. Channel Attention Image Steganography With Generative Adversarial Networks. IEEE Trans. Netw. Sci. Eng. 2022, 9, 888–903.

- [21] Wang, J.; Wang, H.; Zhang, J.; Wu, H.; Luo, X.; Ma, B. Invisible Adversarial Watermarking: A Novel Security Mechanism for Enhancing Copyright Protection. ACM Trans. Multimed. Comput. Commun. Appl. 2025, 21, 1–22.

- [22] Xie, S.; Zhao, C.; Sun, N.; Li, W.; Ling, H. Picking watermarks from noise (PWFN): An improved robust watermarking model against intensive distortions. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024.

- [23] Huang, J.; Luo, T.; Li, L.; Yang, G.; Xu, H.; Chang, C.-C. ARWGAN: Attention-guided robust image watermarking model based on GAN. IEEE Trans. Instrum. Meas. 2023, 72, 5018417.

- [24] Xiao, M.; Zheng, S.; Liu, C.; Lin, Z.; Liu, T.-Y. Invertible rescaling network and its extensions. Int. J. Comput. Vis. 2023, 131, 134–159.

- [25] Lepcha, D.C.; Goyal, B.; Dogra, A.; Goyal, V. Image super-resolution: A comprehensive review, recent trends, challenges and applications. Inf. Fusion 2023, 91, 230–260.

- [26] Dabas, P.; Khanna, K. A study on spatial and transform domain watermarking techniques. Int. J. Comput. Appl. 2013, 71, 38–41.

- [27] Wang, D.; Yang, G.; Chen, J.; Ding, X. GAN-based adaptive cost learning for enhanced image steganography security. Expert Syst. Appl. 2024, 249, 123471.

- [28] Agustsson, E.A.; Timofte, R. Ntire 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

- [29] Krizhevsky, A. Learning multiple layers of features from tiny images. Tech. Rep. 2009. Available online: https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 6 October 2025).

- [30] Everingham, M.M. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results. PASCAL VOC2012 Workshop. 2012. Available online: http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html (accessed on 6 October 2025).

- [31] Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- [32] Fdez-Vidal, X.R. Visual distinctness metric for coder performance evaluation. In Proceedings of the Visual Distinctness Metric Conference, 2014; Available online: http://decsai.ugr.es/cvg/CG/base.htm (accessed on 6 October 2025).

- [33] Bas, P.P. Break our steganographic system: The ins and outs of organizing BOSS. In International Workshop on Information Hiding; Springer: Berlin/Heidelberg, Germany, 2011; pp. 59–70.

- [34] Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- [35] Li, R.Z.; Liu, Q.L.; Liu, L.F. Novel image encryption algorithm based on improved logistic map. IET Image Process. 2019, 13, 125–134.

- [36] Zeng, J.; Tan, S.; Liu, G.; Li, B.; Huang, J. WISERNet: Wider Separate-Then-Reunion Network for Steganalysis of Color Images. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2735–2748.

- [37] Xu, G.; Wu, H.-Z.; Shi, Y.-Q. Structural Design of Convolutional Neural Networks for Steganalysis. IEEE Signal Process. Lett. 2016, 23, 708–712.

- [38] Boroumand, M.; Chen, M.; Fridrich, J. Deep Residual Network for Steganalysis of Digital Images. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1181–1193.

- [39] Deng, X.; Chen, B.; Luo, W.; Luo, D. Fast and Effective Global Covariance Pooling Network for Image Steganalysis. In Proceedings of the ACM Workshop on Information Hiding and Multimedia Security, New York, NY, USA, 3–5 July 2019; pp. 230–234.

- [40] Zhang, R.; Zhu, F.; Liu, J.; Liu, G. Depth-Wise Separable Convolutions and Multi-Level Pooling for an Efficient Spatial CNN-Based Steganalysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 1138–1150.

- [41] Boehm, B. StegExpose—A Tool for Detecting LSB Steganography. arXiv 2014, arXiv:1410.6656v1.

- [42] Mun, S.-M.S.; Nam, S.-H.N.; Jang, H.-U.J.; Kim, D.K.; Lee, H.-K.L. A robust blind watermarking using convolutional neural network. arXiv 2017, arXiv:1704.03248.

- [43] Zhong, X.X.; Huang, P.-C.H.; Mastorakis, S.M.; Shih, F.Y. An automated and robust image watermarking scheme based on deep neural networks. IEEE Trans. Multimed. 2020, 23, 1951–1961.

- [44] Luo, X.Y.; Zhan, R.H.; Chang, H.W.; Yang, F.Y.; Milanfar, P. Distortion agnostic deep watermarking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 13548–13557.

- [45] Chen, B.B.; Wu, Y.W.; Coatrieux, G.C.; Chen, X.C.; Zheng, Y.H. JSNet: A simulation network of JPEG lossy compression and restoration for robust image watermarking against JPEG attack. Comput. Vis. Image Underst. 2020, 197, 103015.

- [46] Li, Z.Z.; Zhang, X.S.; Yang, Y.I.; Gong, X.X. Mixed Order Attention Watermark Network Against JPEG Compression. In Proceedings of the 2024 21st International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), Chengdu, China, 14–16 December 2024; pp. 1–7.

- [47] Baluja, S. Hiding Images within Images. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1685–1697.

- [48] Guan, Z.; Jing, J.; Deng, X.; Xu, M.; Jiang, L.; Zhang, Z.; Li, Y. DeepMIH: Deep Invertible Network for Multiple Image Hiding. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 372–390.

- [49] Xu, Y.; Mou, C.; Hu, Y.; Xie, J.; Zhang, J. Robust Invertible Image Steganography. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7865–7874.

- [50] Yu, J.; Zhang, X.; Xu, Y.; Zhang, J. CRoSS: Diffusion Model Makes Controllable, Robust and Secure Image Steganography. In Proceedings of the 37th International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 10–16 December 2023.

- [51] Xu, Y.; Zhang, X.; Meng, X.; Mou, C.; Zhang, J. Diffusion-Based Hierarchical Image Steganography. In Proceedings of the 2025 IEEE International Conference on Multimedia and Expo (ICME), Nantes, France, 30 June–4 July 2025.

| Parameter | Value | Description |
|---|---|---|
| (W, H) | (32, 32) | Size of training image |
| (M, N) | (8, 8) | Size of divided image part |
| (S, R) | (4, 4) | Size of reshaped binary data |
| Epoch | 150 | Training epoch number |
| LR | | Learning rate |
| | 0.4 | Encoder’s loss function weights |
| | 0.6 | Decoder’s loss function weights |
| | | Discriminator’s loss function weights |
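To make the sizes in the table above concrete, here is a minimal sketch that splits a 32 × 32 image into 8 × 8 parts and reshapes a 16-bit message into a 4 × 4 array. The helper names `split_into_parts` and `reshape_bits`, the row-major ordering, and the use of plain nested lists are assumptions made for illustration; the table does not say how the networks consume these arrays.

```python
# Sizes taken from the parameter table; everything else is illustrative.
W, H = 32, 32   # size of training image
M, N = 8, 8     # size of divided image part (assumed width M, height N)
S, R = 4, 4     # size of reshaped binary data (assumed S rows, R columns)


def split_into_parts(image, m=M, n=N):
    """Split a 2-D list of pixels into non-overlapping parts, row-major."""
    height, width = len(image), len(image[0])
    if height % n or width % m:
        raise ValueError("image size must be a multiple of the part size")
    parts = []
    for top in range(0, height, n):
        for left in range(0, width, m):
            parts.append([row[left:left + m] for row in image[top:top + n]])
    return parts


def reshape_bits(bits, s=S, r=R):
    """Reshape a flat list of s*r bits into s rows of r bits."""
    if len(bits) != s * r:
        raise ValueError("expected exactly s * r bits")
    return [bits[i * r:(i + 1) * r] for i in range(s)]


# Hypothetical inputs: a synthetic gradient image and an alternating message.
image = [[(x + y) % 256 for x in range(W)] for y in range(H)]
parts = split_into_parts(image)
message = reshape_bits([1, 0] * 8)
print(len(parts), len(message), len(message[0]))
```

With these sizes the image yields (32 / 8) × (32 / 8) = 16 parts, and the message array holds 4 × 4 = 16 bits.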

| | Image Quality (PSNR/SSIM) | Robustness (BER), Q = 90 | Q = 70 | Q = 50 | Q = 30 | Q = 20 |
|---|---|---|---|---|---|---|
| 0.2 | | | | | | |
| 0.4 | | | | | | |
| 0.6 | | 0 | 0 | 0 | 0 | |
| 0.8 | | 0 | 0 | 0 | 0 | 0 |
| 1.0 | | 0 | 0 | 0 | 0 | 0 |
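The table above reports image quality as PSNR and robustness as BER. As a reminder of what those two numbers measure, the sketch below uses the standard definitions: PSNR = 10·log10(MAX² / MSE) between cover and stego pixels, and BER as the fraction of message bits that differ after extraction. The function names and the nested-list image representation are illustrative assumptions, not the authors' evaluation code; SSIM is omitted because it needs a windowed implementation.

```python
import math


def psnr(cover, stego, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2-D images."""
    flat_cover = [p for row in cover for p in row]
    flat_stego = [p for row in stego for p in row]
    if not flat_cover or len(flat_cover) != len(flat_stego):
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(flat_cover, flat_stego)) / len(flat_cover)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def bit_error_rate(sent, received):
    """Fraction of bit positions where the extracted message differs."""
    if not sent or len(sent) != len(received):
        raise ValueError("bit sequences must be non-empty and the same length")
    errors = sum(1 for a, b in zip(sent, received) if a != b)
    return errors / len(sent)


# Hypothetical data: every pixel shifted by one grey level, two of four bits flipped.
cover = [[100, 100], [100, 100]]
stego = [[101, 101], [101, 101]]
print(round(psnr(cover, stego), 2), bit_error_rate([1, 0, 1, 1], [1, 1, 1, 0]))
```

A BER of 0, as in most rows of the table, means every embedded bit was recovered after the attack.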

| Image Size | PSNR | | SSIM | | BER |
|---|---|---|---|---|---|
| | 29.78 | 48.06 | 0.851 | 0.97 | 0 |
| | 29.66 | 42.90 | 0.847 | 0.981 | 0 |
| | 29.69 | 38.72 | 0.847 | 0.951 | 0 |

| Datasets | PSNR | | SSIM | | BER |
|---|---|---|---|---|---|
| COCO | 29.76 | 41.88 | 0.851 | 0.976 | 0 |
| BOSSBase (v1.01) | 29.67 | 42.19 | 0.850 | 0.958 | 0 |
| ImageNet | 29.66 | 42.90 | 0.846 | 0.963 | 0 |

| | Image Quality (PSNR/SSIM) | |
|---|---|---|
| 1 | 8.561/−0.0136 | 6.228/0.0163 |
| 2 | 6.738/−0.0259 | 5.536/0.0160 |
| 3 | 8.659/−0.0307 | 6.177/0.0156 |
| 4 | 8.127/−0.0005 | 5.760/0.0125 |
| 5 | 7.896/0.0001 | 5.992/0.0224 |

| Attack | Robustness (% BER) | ||||||||||||||
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Gaussian Noise () | Salt & Pepper Noise (%) | Grid Crop (%) | Cropping (%) | Sharpening (Radius) | |||||||||||
| 40 | 45 | 50 | 10 | 15 | 20 | 30 | 40 | 50 | 10 | 20 | 30 | 30 | 40 | 50 | |
| ADPGAN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |||||||

| SE Block | Cir_Conv | ConvBNReLU | Image Quality (PSNR/SSIM) | BER (Q = 20) | BER (Q = 10) |
|---|---|---|---|---|---|
| A = 1.0 | | | | | |
| ✓ 1 | ✓ | × 2 | 28.00/0.840 | 0 | 0 |
| × | ✓ | ✓ | 29.08/0.8543 | 0 | 0 |
| × | × | × | 28.90/0.854 | 0 | 0 |
| ✓ | × | ✓ | 28.53/0.847 | 0 | 0 |
| ✓ | ✓ | ✓ | 28.19/0.841 | 0 | 0 |
| A = 0.2 | | | | | |
| ✓ | ✓ | × | 40.76/0.997 | 0.260 | 0.321 |
| × | ✓ | ✓ | 41.89/0.987 | 0.201 | 0.304 |
| × | × | × | 41.58/0.981 | 0.213 | 0.288 |
| ✓ | × | ✓ | 41.39/0.980 | 0.244 | 0.314 |
| ✓ | ✓ | ✓ | 40.96/0.979 | 0.195 | 0.260 |

| Model | A = 0.2: Image Quality (PSNR/SSIM) | A = 0.2: BER (Q = 20) | A = 0.2: BER (Q = 10) | A = 1.0: Image Quality (PSNR/SSIM) | A = 1.0: BER (Q = 20) | A = 1.0: BER (Q = 10) |
|---|---|---|---|---|---|---|
| | 41.51/0.989 | 0.285 | 0.302 | 28.57/0.853 | 0 | 0 |
| | 39.38/0.965 | 0.264 | 0.326 | 27.59/0.843 | 0.004 | 0.007 |
| | 40.05/0.971 | 0.230 | 0.305 | 27.28/0.834 | 0 | 0.002 |
| ADPGAN | 40.96/0.976 | 0.195 | 0.260 | 28.19/0.849 | 0 | 0 |

| Model | A = 0.2: Image Quality (PSNR/SSIM) | A = 0.2: BER (Q = 20) | A = 0.2: BER (Q = 10) | A = 1.0: Image Quality (PSNR/SSIM) | A = 1.0: BER (Q = 20) | A = 1.0: BER (Q = 10) |
|---|---|---|---|---|---|---|
| ADPGAN | 40.96/0.985 | 0.195 | 0.260 | 28.19/0.849 | 0 | 0 |
| | 38.25/0.980 | 0.275 | 0.335 | 26.70/0.820 | 0.176 | 0.245 |
| | 40.25/0.982 | 0.226 | 0.292 | 28.70/0.838 | 0 | 0.005 |
| | 51.06/0.999 | 0.433 | 0.449 | 47.29/0.995 | 0.292 | 0.364 |

| Quality Factor | PSNR | | SSIM | | BER |
|---|---|---|---|---|---|
| 90 | 34.99 | 42.41 | 0.919 | 0.990 | 0 |
| 80 | 35.04 | 42.80 | 0.923 | 0.993 | 0 |
| 70 | 35.33 | 42.73 | 0.931 | 0.991 | 0 |
| 60 | 34.71 | 42.50 | 0.899 | 0.992 | |
| 50 | 35.31 | 42.19 | 0.934 | 0.989 | |
| 40 | 35.16 | 41.50 | 0.929 | 0.992 | |
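To show why a lower quality factor is a harsher attack, the sketch below maps a quality factor to quantization step sizes using the quality-scaling convention of the widely used IJG libjpeg encoder. This is an assumption: the table does not state which JPEG implementation produced the compressed images. `BASE_ROW` is only the first row of the example luminance table from the JPEG standard (Annex K), used as sample data, and the function names are illustrative.

```python
# First row of the example luminance quantization table from the JPEG
# standard (ITU-T T.81, Annex K); used here only as sample data.
BASE_ROW = [16, 11, 10, 16, 24, 40, 51, 61]


def quality_scale(quality):
    """Map a quality factor in 1..100 to a percentage scale (IJG convention)."""
    quality = max(1, min(100, quality))
    if quality < 50:
        return 5000 // quality
    return 200 - 2 * quality


def scale_row(row, quality):
    """Scale quantization steps for a quality factor, clamped to 1..255."""
    scale = quality_scale(quality)
    return [max(1, min(255, (step * scale + 50) // 100)) for step in row]


# Smaller quality factors give larger steps, so more coefficient detail is lost.
for q in (90, 50, 20):
    print(q, scale_row(BASE_ROW, q))
```

Under this convention the steps at Q = 50 equal the base table, and they grow quickly below 50, which is consistent with low quality factors being the hardest setting for message recovery.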

| DIH Methods | XuNet [37] | | | SRNet [38] | | | CovPoolNet [39] | | | ZhuNet [40] | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MSM-DIH [14] | 76.6 | 1.00 | 38.8 | 36.6 | 52.4 | 44.5 | 8.00 | 5.00 | 6.50 | 12.8 | 9.00 | 10.9 |
| HiNet [12] | 13.8 | 14.2 | 14.0 | 0.40 | 0.00 | 0.20 | 3.30 | 0.20 | 1.75 | 0.00 | 8.20 | 4.10 |
| ISN [13] | 10.4 | 19.4 | 14.9 | 0.40 | 0.20 | 0.30 | 3.80 | 4.00 | 3.90 | 7.20 | 43.4 | 25.3 |
| | 67.8 | 26.9 | 47.4 | 89.1 | 7.30 | 48.2 | 51.1 | 23.1 | 37.1 | 0.00 | 49.1 | 24.1 |

| Method | 30 | 50 | 70 | 90 |
|---|---|---|---|---|
| Mun [42] | 37.8% | 36.1% | 34.4% | 32.2% |
| HiDDeN [12] | 34.6% | 32.6% | 31.3% | 30.9% |
| Zhong [43] | 9.3% | 6.8% | 4.5% | 2.3% |
| Luo [44] | 23.9% | 17.6% | 11.2% | 4.3% |
| CRWNet [45] | 25.7% | 24.5% | 23.8% | 22.6% |
| ReDMark [7] | 9.8% | 6.7% | 2.3% | 1.3% |
| MOANet [46] | 6.9% | 4.8% | 2.2% | 1.3% |
| ADPGAN | 0 | 0 | 0 | 0 |

| Methods | Clean | Gaussian Noise () | | | Gaussian Denoiser () [3] | | | JPEG Compression (Q) | | | JPEG Enhancer (Q) [3] | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | 10 | 20 | 30 | 10 | 20 | 30 | 20 | 40 | 80 | 20 | 40 | 80 |
| Baluja [47] | 34.24 | 10.30 | 7.54 | 6.92 | 7.97 | 6.10 | 5.49 | 6.59 | 8.33 | 11.92 | 5.21 | 6.98 | 9.88 |
| ISN [13] | 41.83 | 12.75 | 10.98 | 9.93 | 11.94 | 9.44 | 6.65 | 7.15 | 9.69 | 13.44 | 5.88 | 8.08 | 11.63 |
| DeepMIH [48] | 42.98 | 12.91 | 11.54 | 10.23 | 11.87 | 9.32 | 6.87 | 7.03 | 9.78 | 13.23 | 5.59 | 8.21 | 11.88 |
| RIIS [49] | 43.78 | 26.03 | 18.89 | 15.85 | 20.89 | 15.97 | 13.92 | 22.03 | 25.41 | 26.02 | 13.88 | 16.74 | 20.13 |
| CRoSS [50] | 23.79 | 21.89 | 20.19 | 18.77 | 21.39 | 21.24 | 21.02 | 21.74 | 22.74 | 23.51 | 20.60 | 21.222 | 21.19 |
| HIS [51] | 28.39 | 23.49 | 21.88 | 20.02 | 21.73 | 22.41 | 21.98 | 23.21 | 26.11 | 26.23 | 22.42 | 23.23 | 23.15 |
| Ours | 40.26 | 40.25 | 40.26 | 40.24 | 39.47 | 39.28 | 39.02 | 39.70 | 39.77 | 40.24 | 38.12 | 38.24 | 38.55 |

| Framework | CPU | GPU | RAM (G) | Training Time (H) | Test Time (S) | Param (M) | Memory (M) |
|---|---|---|---|---|---|---|---|
| JCIF | Intel Core i9 | GeForce RTX 4090 | 64 | 171 (99) | 5.04 | 5.24 (2.02) | 20.04 (7.77) |
| | Intel Core i9 | GeForce RTX 4090 | 64 | 103 (65) | 5.66 | 22.82 (18.46) | 87.40 (70.79) |
| JCIF | Intel Core i7 | − | 16 | − | 8.32 | 5.24 (2.02) | 20.04 (7.77) |
| | Intel Core i7 | − | 16 | − | 9.48 | 22.82 (18.46) | 87.40 (70.79) |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Z.-Q.; Huang, Y.-H.; Chen, X.-Y.; Lo, S.-L. ADPGAN: Anti-Compression Attention-Based Diffusion Pattern Steganography Model Using GAN. Electronics 2025, 14, 4426. https://doi.org/10.3390/electronics14224426
