Abstract

Text-based CAPTCHAs remain a widely deployed mechanism for mitigating automated attacks across web platforms. However, the increasing effectiveness of convolutional neural networks (CNNs) and advanced computer vision models poses significant challenges to their reliability as a security measure. This study presents a comprehensive forensic and security-oriented analysis of text-based CAPTCHA systems, focusing on how individual and combined visual distortion features affect human usability and machine solvability. A real-world dataset comprising 45,166 CAPTCHA samples was generated under controlled conditions, integrating diverse anti-recognition, anti-segmentation, and anti-classification features. Recognition performance was systematically evaluated using both a CNN-based solver and actual human interaction data collected through an online exam platform. Results reveal that while traditional features such as warping and distortion still degrade machine accuracy to some extent, newer features like the hollow scheme and multi-layer structures offer better resistance against CNN-based attacks while maintaining human readability. Correlation and SHAP-based analyses were employed to quantify feature influence and identify configurations that optimize human–machine separability. This work contributes a publicly available dataset and a feature-impact framework, enabling deeper investigations into adversarial robustness, CAPTCHA resistance modeling, and security-aware human interaction systems. The findings underscore the need for adaptive CAPTCHA mechanisms that are both human-centric and resilient against evolving AI-based attacks.

1. Introduction

The internet has transformed significantly in recent decades, shifting from the static, information-sharing Web 1.0 to the interactive, user-focused Web 2.0. Services in almost every sector, including banking, education, and commerce, are now delivered through fast online processes. Nearly every web service involves some form of registration, login, or payment. Users access and interact with web applications by entering their usernames and passwords, bank account or wallet details, ID numbers, and similar unique information into online forms. This widens the attack surface of web applications in several ways and gives attackers an open door. Abuse of such services through automated methods is often the first step towards more sophisticated attacks that can yield substantial revenue for attackers [1]. For instance, setting up a new email account requires completing an online form. If spammers had to complete each form manually, the process would take too long for them to create many fictitious addresses. However, it is easy to write a program (an automated bot) that completes such forms quickly without human involvement [2]. As another example, in a brute-force attack an attacker guesses passwords by trial and error, often generating candidates from the user’s personal information, and repeats this until successful, which may take hours, days, months, or even years [3]. Various security measures have been developed to reduce the attack surface and prevent automated abuse, and one of the most frequently used is CAPTCHA [4]. CAPTCHA, short for Completely Automated Public Turing test to tell Computers and Humans Apart, is a challenge that humans can answer easily but automated programs find difficult.
They are employed in communication channels to verify the “presence of a human” [5]. Modern web applications increasingly encounter challenges related to data privacy, interoperability, and scalability. These factors directly shape the design and deployment of authentication mechanisms, particularly CAPTCHA systems. Therefore, developing CAPTCHA solutions that are both secure and user-friendly is essential for maintaining resilience against automated attacks while ensuring seamless human interaction. CAPTCHAs are commonly employed to prevent dictionary attacks, search engine bots, spam comments, and fraudulent voting activities, as well as to protect website registrations and email addresses from malicious web scrapers.

Many kinds of CAPTCHA have been introduced since the first real-world Automated Turing Test, a system created by AltaVista to stop bots from automatically registering web pages. Ahn et al. later introduced the concept of a CAPTCHA along with several useful suggestions for Automated Turing Tests [6]. CAPTCHAs, which have steadily gained popularity as a class of human interaction proofs, can be divided into two general categories: optical character recognition (OCR) based and non-OCR based. OCR-based CAPTCHAs are mostly text-based, whereas non-OCR CAPTCHAs rely primarily on multimedia such as images, audio, and video [7]. Non-OCR schemes can be further categorized as cognitive/behavioral-based, video-based, audio-based, and image-based. Cognitive-based techniques employ possession (something you own), knowledge (something you know), and biometric (something you are) factors, with or without sensors such as an accelerometer or gyroscope [8]. In video-based CAPTCHAs, a short video is shown and the user answers related questions, such as the background color of a wall or the number of dogs lying down [9]. Audio-based CAPTCHAs play spoken letters or numbers against background noise at random intervals [10]. Image-based CAPTCHAs use pictures of objects such as bridges, vehicles, and pedestrian crossings; the user is expected to identify the theme and select the images that match a specified keyword, or those that do not, as instructed [11]. Within these categories, numerous CAPTCHA systems have been proposed.
Google reCAPTCHA v2 presents a checkbox labeled “I’m not a robot” and collects extensive information about the user, such as mouse movements and clicks, keyboard navigation, browser language, cookies retained over the past six months, touch events on touch-enabled devices, and installed plugins. Google reCAPTCHA v3, by contrast, is an invisible, non-interactive Turing test that collects user data without displaying a checkbox and returns a score reflecting how likely the user is to be a human rather than a bot [12]. Game-oriented CAPTCHAs use text-based concept markers. A bot with computer vision capabilities can easily read the text within the game, but to solve the CAPTCHA it must also infer the relationships between the concepts, either by searching online or by querying a database [13]. Text-based CAPTCHAs belong to the OCR-based class. They protect web applications against bots by generating distorted text, rendered as an image, that humans can read but modern computer programs cannot, and then checking the user’s answer.

Every CAPTCHA type has its own mix of benefits and drawbacks. While many studies try to make CAPTCHA schemes more secure, attack methods, some of which report success rates of up to 100%, form a separate line of research. Text-based CAPTCHAs, whose features are detailed in the next section, have been used for many years and remain the most popular type [8,14,15]. Guidelines and recommendations such as those issued by the World Wide Web Consortium (W3C) aim to make websites accessible to everyone; to improve user experience, accessible design prioritizes content, presentation, functionality, and interaction. However, because they are inherently unfriendly, CAPTCHA tests can cause frustration, poor user experience, user abandonment, and lower website conversion rates [16]. Audio-based CAPTCHAs are criticized for being available mostly in English, so they require English skills and a broad vocabulary [17]. Video-based CAPTCHAs take time to watch, and with limited bandwidth the video may take longer to download [18]. Acien et al. [19] argue that Google’s algorithms process private data, raising serious questions about compliance with recent laws such as the European General Data Protection Regulation (GDPR). Prince & Isasi [20] announced that Cloudflare, one of the largest providers of content delivery network and cybersecurity services, stopped using reCAPTCHA because of privacy concerns. According to Narayanaswamy [21], a limitation of game-based CAPTCHAs is that players may not read the game’s rules, or may not understand a game that has no explicit rules, and may therefore take incorrect steps to complete the CAPTCHA. Ababtain and Engels [22] highlight that gesture-based CAPTCHAs, a behavior-based type that relies on accelerometer readings, cause several problems, such as control issues and difficulty for some elderly users in adjusting the device’s direction.
They found that older adults (those over 60) had usability problems, took longer to complete the CAPTCHA, and occasionally gave up and skipped the task. Chang et al. [23] presented a method for breaking commonly used slider CAPTCHAs, achieving success rates between 87.5% and 100%. Their experiments show that slider CAPTCHAs, a form of behavior-verification CAPTCHA, fail to reliably distinguish humans from bots on the basis of mouse operation behavior. Gutub and Kheshaifaty [24] note that graphic-based CAPTCHAs offer a human authentication tool with multiple images to process, but these take longer to load, causing noticeable delays on current networks and in resource-constrained settings.

Given the usability issues mentioned above, text-based CAPTCHA schemes remain useful and applicable in security systems [25]. They are widely used because most people learn to recognize characters from a young age and because text-based CAPTCHAs are simple enough for users worldwide to understand with little guidance [26]. Matsuura et al. [27] highlight that text-based CAPTCHAs are the most widely used type because they are affordable and easy to use. Compared with other types, text-based CAPTCHAs are very easy to create [28] and require fewer resources at little or no cost to the provider, while also being lightweight and usable for the person solving them. Conti et al. [29] also emphasize that many users still prefer text-based CAPTCHAs because of their familiarity, perceived security, and sense of control.

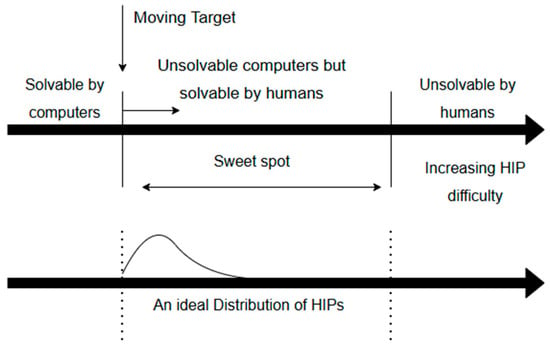

Security requirements and service providers push authentication designers to make CAPTCHAs harder, by adding more distortion elements, to thwart bots and automated programs, while usability considerations limit designers to schemes that are only as difficult as necessary. Striking a balance between these competing demands is therefore difficult. Bursztein et al. [30] conducted a comprehensive study on CAPTCHA usability and reported that most research concentrated on making CAPTCHAs hard for machines while paying little attention to usability, and that humans frequently find them hard to complete. Although text-based CAPTCHAs are the most popular type in web applications, Rahman [31] notes that they share certain common flaws: optical character recognition (OCR) can read them when they contain only a few characters, character classes, or digits, yet when noise and distortion are added to defeat OCR, they often become difficult for humans to recognize as well. As illustrated in Figure 1, the key to CAPTCHA design lies in exploiting the gap in recognition ability between human users and computers.

Figure 1.

Using CAPTCHA/HIP for human and computer classification based on their difference in recognition ability [32].

Figure 1 illustrates the distribution of difficulty levels for Human Interactive Proofs (HIPs), highlighting the ideal challenge point. On the left side, simple CAPTCHAs that can be easily solved by computers are represented. The central region, referred to as the “sweet spot,” denotes CAPTCHAs that are solvable by humans but remain challenging for machines. On the right side, CAPTCHAs that are unsolvable for both humans and machines are depicted.

The literature and real-world cases show that text-based CAPTCHA is still the most popular type. However, the security and usability dilemma is still a problem. The reliability of CAPTCHAs is constantly being tested due to machines’ ever-advancing visual recognition algorithms. To ensure that CAPTCHAs remain effective, new designs and techniques are required that are easily perceivable by the human eye while posing challenges for machines. Today, numerous datasets exist to assess and enhance machines’ ability to solve CAPTCHA tests. However, the specific techniques applied in the images used within these datasets and the effectiveness of different features remain unclear. This lack of clarity prevents a definitive understanding of which CAPTCHA features can be successfully solved using machine learning or image recognition techniques. Additionally, these datasets provide limited information on human-centric metrics such as users’ reading success and readability. Most research focuses primarily on the performance of machines in solving CAPTCHAs, thereby neglecting the fundamental goal of CAPTCHAs—to make recognition easier for humans while making it more difficult for machines. Ensuring this balance is crucial for CAPTCHAs to serve as a reliable human–robot distinction mechanism. Moreover, with AI-powered algorithms, CAPTCHAs that are difficult for humans to solve are often relatively easy for machines, contradicting the core philosophy of CAPTCHA. Consequently, rather than reinforcing the statement “I am not a robot,” this situation increasingly aligns with the notion of “I am not human.”

This paper presents an in-depth study of the security features used to create text-based CAPTCHAs and their effects on usability and security. We combined different security features to generate text-based CAPTCHAs and analyzed which design features make a CAPTCHA easier for humans to read but harder for machine learning models to solve. We also identified which features become ineffective when combined with others.

This paper has the following major contributions:

- Identifying which CAPTCHA features are more difficult for machines to solve while remaining easily readable for users.

- A dual evaluation using both a CNN-based solver and real human interaction data to jointly analyze usability and robustness.

- Development of a dataset derived from real-world CAPTCHA implementations, which possesses features that allow for the evaluation of both user efficiency and the success of machine learning algorithms in solving CAPTCHA tests. This dataset provides a framework for simultaneously assessing human and machine performance in CAPTCHA design, offering a valuable resource for future CAPTCHA research and analysis.

The study is organized as follows: Section 1 provides a general definition of CAPTCHA tests and discusses existing challenges. Section 2 reviews academic studies related to the problem statement. Section 3 describes our methodology in detail, including the features used in the CAPTCHAs and the development of the proposed dataset. Section 4 presents our findings, examining usability and security in terms of human and machine performance on our dataset. Finally, Section 5 discusses the findings and explores future research directions for improving CAPTCHA tests.

2. Related Work

For many years, research has addressed both the resistance of CAPTCHA schemes to automated solving and their usability. Usability is typically measured by solving time and solving accuracy [33,34]. As Chow et al. [26] emphasized, text-based CAPTCHAs are simple enough for users worldwide to understand with little guidance; nevertheless, the accuracy with which users solve them is increasingly important.

A text-based CAPTCHA generator with an optimizer that maximizes the degree of distortion is used in an experimental investigation by Alsuhibany [35] to improve the accuracy of human CAPTCHA solvers. Fifty-three volunteers participated in the experiment using a pre–post-test design. Although the evaluation’s findings demonstrated that the optimization technique greatly improved the created CAPTCHAs’ solvability, the study focused solely on usability and neglected to check for security. Furthermore, compared to our research, the experimental investigation had a significantly smaller sample size.

Raman et al. [36] conducted a study to uncover the usability problems with CAPTCHAs and to offer a way to assess how well security and usability are balanced in CAPTCHAs. They obtained evidence through a literature review, which served as the foundation for creating a list of pertinent usability issues and features. These noted characteristics and issues established the basis for creating the comprehensive CAPTCHA usability model. The applicability and accuracy of the developed model have not been tested experimentally, and a CAPTCHA dataset that can be made available for use has not been created.

Brodić and Amelio [37], in their research, examined the usability of text-based CAPTCHAs on tablet computers, focusing on user performance and demographic factors. The study involving 125 Internet users evaluated the completion time and success rate of solving text-based CAPTCHAs containing only letters or numbers. Participants were categorized based on gender, age, education level, and Internet experience. The data collected were analyzed using association rule mining to identify patterns linking demographic characteristics with CAPTCHA solving efficiency.

Alsuhibany and Alnoshan [38] compared the usability and security of interactive and text-based Arabic CAPTCHA schemes. To assess usability, they ran an experiment on mobile devices, measuring efficiency (the time taken to solve a CAPTCHA) and effectiveness (the accuracy of the characters entered for text-based CAPTCHAs). The study generated 5000 samples, and participants were presented with 20 different interfaces containing randomly selected CAPTCHA images with varying distortion levels. For security evaluation, the study applied preprocessing, segmentation, and recognition techniques to assess CAPTCHA robustness; for text-based CAPTCHAs, all 5000 samples were processed with a Google OCR API. Although the study considers both usability and security, its dataset contains only Arabic letters and its evaluation covers only mobile devices.

Kumar et al. [39] developed a Hindi-language-based CAPTCHA and evaluated its security and usability. By successfully breaking 20 existing schemes, they established design benchmarks, including appropriate colors, varied backgrounds, multiple noisy patches, and controlled distortions. The new CAPTCHA consists of five to eight characters, generated using five different segmentation methods. For security testing, 1000 samples were analyzed using multiple attack algorithms and image processing tools (e.g., binarization, filters, and morphology), but none could segment or recognize the CAPTCHA. Usability testing with 55 users on 550 samples showed a 90% accuracy rate, with an average solving time of 10 s per CAPTCHA. Although the study covers both usability and security, it does not report how individual security features affect CAPTCHA usability and security, information that could guide researchers and developers. The study was also limited to the Hindi alphabet and does not provide a dataset.

Chatrangsan and Tangmanee [40] examined the impact of letter spacing, disturbing line orientation, and disturbing line color on the usability and robustness of text-based CAPTCHAs. A total of 240 CAPTCHAs, generated under 12 different conditions (3 letter spacings × 2 line orientations × 2 line colors), were tested by undergraduate students. Participants solved CAPTCHAs within an application, and their responses were recorded for usability analysis. For security evaluation, the same 240 CAPTCHAs were processed using the GSA CAPTCHA Breaker to measure robustness. Results showed that participants achieved an 88.8% accuracy rate, with no significant differences among the three factors, while the automated tool successfully recognized 39.6% of the CAPTCHAs. Although the study addresses both usability and security, it does not analyze the effect of security features on usability. Additionally, its contribution to CAPTCHA resistance is limited to three security features, and it does not provide a dataset for future research.

Olanrewaju et al. [41] developed a CAPTCHA system and evaluated it experimentally. A total of 220 students participated, recognizing and entering various types of CAPTCHA codes through a web interface; during the test, participants submitted answers to five randomly generated CAPTCHAs. For each CAPTCHA, the solving time and response time were recorded for both solved and unsolved cases. The study used a quantitative approach to assess the success rate and accuracy of the different CAPTCHA types and to evaluate the usability of the developed text-based CAPTCHAs incorporating accented characters, using a total of 1108 CAPTCHA codes. Although incorporating accented characters into existing text-based CAPTCHAs is expected to enhance their resistance to attacks, the authors did not evaluate the security of these CAPTCHA types.

Alrasheed and Alsuhibany [42] conducted a study to enhance the usability and security of handwritten Arabic CAPTCHA schemes. Five perturbation models (EOT, SGTCS, JSMA, IAN, and CTC) were applied to meaningful Arabic words constructed for the study. The security of the resulting CAPTCHAs was evaluated using the Google Vision API. For usability evaluation, 294 participants were asked to solve CAPTCHAs, and solving time and accuracy were used as metrics.

To better position this study within the existing literature, Table 1 summarizes and compares recent CAPTCHA-related works in terms of their objectives, research gaps, problems, and limitations. The comparison highlights how this study advances prior work by integrating large-scale usability testing with CNN-based solvability analysis.

Table 1.

Comparison of previous studies on text-based CAPTCHA security and usability.

As shown in Table 1, previous studies have generally focused on either usability or security in isolation. In contrast, this research integrates both dimensions through a unified evaluation framework, offering deeper insights into how specific CAPTCHA characteristics affect human performance and algorithmic solvability. Building upon the strengths of prior works while addressing their limitations, this study proposes a more comprehensive and effective approach to CAPTCHA design.

A thorough examination was conducted on the various security features employed in text-based CAPTCHAs, assessing their respective impacts on usability and security. Multiple protection mechanisms were incorporated to generate diverse CAPTCHA instances, followed by extensive analyses to determine which design factors enhance human readability while increasing resistance to machine learning-based attacks. Unlike earlier studies that often relied on solving specific CAPTCHA datasets or small-scale samples, our approach combines real human performance data with CNN-driven evaluation within a unified, explainable framework. This methodology enables quantifiable assessment of human–machine separability across multiple feature combinations. Moreover, we present a large-scale dataset capturing genuine user interactions in realistic environments, providing a valuable resource for future research and comparative studies.

3. Methodology

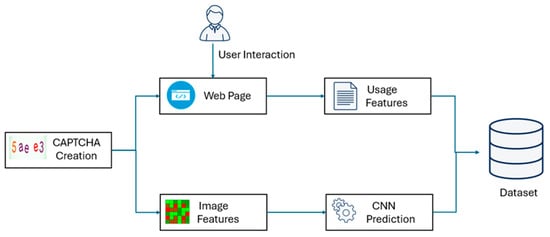

This section outlines the methodology for collecting, processing, and analyzing CAPTCHA data. The proposed pipeline integrates user interaction with CAPTCHA challenges, extracting both usage-based and image-based features. A convolutional neural network (CNN) is employed to predict CAPTCHA outcomes, while all relevant data is stored for further analysis. Additionally, human usability is considered to assess the balance between security and accessibility in CAPTCHA design. There are 6 major steps involved in our methodology, as seen in Figure 2.

Figure 2.

CAPTCHA data collection and analysis pipeline.

- Defining security features of text-based CAPTCHA: Defining which security features are used in literature and real-world examples.

- Creation of the dataset and use by participants: Generating the dataset using pre-defined security features and creating an environment to evaluate the usability of the dataset.

- CNN-based CAPTCHA solving: Attacking the CAPTCHAs in the dataset to solve them.

- Evaluation: Evaluating the usability and security of the CAPTCHAs according to security features.

- Recommendations: Providing developers with recommendations, based on our study, for deploying CAPTCHAs that are both secure and usable.

3.1. Defining Security Features of Text-Based CAPTCHA

Because text-based CAPTCHA was the first type used against automated attacks and remains popular, it is the most studied type in the literature. Many studies have successfully measured the security of text-based CAPTCHAs by attacking them with machine learning and optical character recognition (OCR) techniques, which has led researchers to try out and implement new features in text-based CAPTCHAs. Character recognition attacks typically apply three steps to CAPTCHA images. Preprocessing converts the image to black and white while removing as much noise as possible. Segmentation then divides the word in the CAPTCHA image into individual characters. Finally, recognition identifies each character [38]. Security features applied to a CAPTCHA are intended to disrupt one or more of these steps and thus prevent recognition of the characters. Based on the literature, we defined the security features as variations in image distortion parameters. Several key factors influence both the difficulty and the ease of use of a text-reading CAPTCHA [32,43,44,45]. Table 2 shows a sample created for this study with each evaluated security feature.
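The first two attack stages can be illustrated with a minimal sketch. It assumes (these are not details from the study) that an image is a 2-D list of grayscale values in [0, 255] with dark text on a light background, that a fixed global threshold suffices for preprocessing, and that segmentation splits characters at empty columns of a vertical projection profile. The recognition stage, a classifier applied to each segment, is omitted.

```python
# Minimal sketch of the preprocessing and segmentation stages of a character
# recognition attack. Assumptions: 2-D list of grayscale pixels in [0, 255],
# dark text on light background, fixed global threshold. Not the study's code.

def binarize(gray, threshold=128):
    """Preprocessing: map each pixel to 1 (ink) or 0 (background)."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]

def column_profile(binary):
    """Vertical projection: number of ink pixels in each column."""
    return [sum(col) for col in zip(*binary)]

def segment(binary):
    """Segmentation: split at ink-free columns; returns (start, end) column spans."""
    spans, start = [], None
    for x, count in enumerate(column_profile(binary)):
        if count and start is None:
            start = x                      # entering a character
        elif not count and start is not None:
            spans.append((start, x))       # leaving a character
            start = None
    if start is not None:                  # character touches the right border
        spans.append((start, len(binary[0])))
    return spans

# Two glyphs separated by blank columns are split into two spans.
gray = [
    [0, 0, 255, 255, 0, 0, 255, 255, 255],
    [0, 255, 255, 255, 0, 0, 255, 255, 255],
    [0, 0, 255, 255, 0, 255, 255, 255, 255],
]
print(segment(binarize(gray)))
```

When characters overlap so that no ink-free column separates them, this simple projection method returns a single span covering both, which is why overlapping is classed as an anti-segmentation feature.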

Table 2.

CAPTCHA samples created from each security feature.

- Character Set: Selection of numbers and letters used in the CAPTCHA.

- Affine and Related Transformations: Modifications applied to characters, such as translation, rotation, and scaling (which are affine) as well as hollowing and twisting.

- Perspective Transformations and Image Warping: Distortions applied to the image, including global warping (affecting entire characters) and local warping (at the pixel level). A two-layer structure, which combines two conventional single-layer CAPTCHAs, can also be applied.

- Adversarial Clutter: Adding random elements like lines, dots, or geometric shapes that overlap with the characters. By removing the space between adjacent characters, overlapping makes it harder for computers to distinguish between different characters.

- Background and Foreground Textures: The use of textures to create a colored image from bi-level or grayscale masks generated in previous steps.
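The affine part of the character-level transformations above (translation, rotation, and scaling) can be written as a single 2×3 matrix applied to point coordinates. The sketch below is illustrative only: the function names are hypothetical, it transforms coordinates rather than resampling pixels, and hollowing and twisting, which are not affine, are outside its scope.

```python
import math

# Hypothetical helpers illustrating an affine map (rotation + uniform scaling
# + translation). A real generator would resample pixels, not just points.

def affine_matrix(angle_deg=0.0, scale=1.0, tx=0.0, ty=0.0):
    """Return the 2x3 matrix [[a, b, tx], [c, d, ty]] for rotate-scale-translate."""
    a = math.radians(angle_deg)
    cos_s, sin_s = math.cos(a) * scale, math.sin(a) * scale
    return [[cos_s, -sin_s, tx], [sin_s, cos_s, ty]]

def apply_affine(m, points):
    """Map each (x, y) to (m00*x + m01*y + m02, m10*x + m11*y + m12)."""
    return [
        (m[0][0] * x + m[0][1] * y + m[0][2], m[1][0] * x + m[1][1] * y + m[1][2])
        for x, y in points
    ]

# Rotate the corners of a unit square by 15 degrees and shift them slightly.
corners = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(apply_affine(affine_matrix(angle_deg=15, tx=0.5), corners))
```

In an image library such as Pillow, the same kind of mapping is applied with `Image.transform` and the `AFFINE` method; note that Pillow expects the coefficients of the inverse mapping (from output to input pixels).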

All CAPTCHA samples were generated as five-character alphanumeric strings uniformly drawn from [A–Z, 0–9], ensuring a consistent difficulty level across all images.
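The text-generation rule above can be sketched in a few lines. The choice of Python’s `secrets` module is an assumption (the study does not state which random generator it used); it is shown because CAPTCHA strings should not be predictable from earlier outputs. The sketch also computes the size of the answer space, which bounds the success rate of blind guessing.

```python
import secrets
import string

# Assumption: secrets is used for unpredictability; the study's RNG is unspecified.
ALPHABET = string.ascii_uppercase + string.digits  # the [A-Z, 0-9] set, 36 symbols
LENGTH = 5

def random_captcha_text(length=LENGTH):
    """Draw each character independently and uniformly from ALPHABET."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))

KEYSPACE = len(ALPHABET) ** LENGTH  # 36**5 possible strings

print(random_captcha_text(), KEYSPACE)
```

With 36^5 = 60,466,176 equally likely strings, a single blind guess succeeds with probability of roughly 1.7 × 10^-8, so any practical attack has to rely on recognition rather than guessing.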

3.2. Creation of the Dataset and Use by Participants

Considering the security features described above, we generated the CAPTCHAs for the dataset as part of this study. As shown in Table 2, the literature generally defines 13 different security features that can be used to generate a CAPTCHA. A CAPTCHA may include just one of these features or all 13. The number of distinct schemes that use k of the n = 13 features is given by the binomial coefficient in Equation (1):

$$C(n,k) = \binom{n}{k} = \frac{n!}{k!\,(n-k)!} \qquad (1)$$

Using a single security feature yields C(13,1) = 13 schemes, using two features yields C(13,2) = 78 schemes, and applying all 13 features yields exactly one scheme.
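The scheme counts given by Equation (1) can be checked directly with the standard library; the sketch also includes k = 7, the number of features used per CAPTCHA in this study.

```python
from math import comb

N_FEATURES = 13  # security features listed in Table 2

# Number of distinct schemes that use exactly k of the 13 features.
schemes = {k: comb(N_FEATURES, k) for k in (1, 2, 7, 13)}
print(schemes)
```

Summed over every k from 1 to 13, the total is 2^13 − 1 = 8191 non-empty feature combinations, which illustrates why exhaustively testing every scheme with real users is impractical.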

Shibbir et al. [46] classified CAPTCHAs containing between 0 and 6 security features as “moderately secure” and those with 7 or more features as secure. On this basis, each CAPTCHA created for this study was designed to include 7 security features. By Equation (1), C(13,7) = 1716 different schemes are possible. Selecting the 7 features fully at random, however, could produce CAPTCHAs containing only anti-preprocessing features. Moreover, because usability was to be evaluated from real user interactions, enough user inputs might not be collected for each of the 1716 schemes. We therefore applied a semi-random selection method: five features were fixed, one was chosen from a mutually exclusive pair (deformation or blurring), and one additional feature was selected at random from the remaining options. The fixed features were wrapping and distortion from the anti-classification category, warping and rotation from the anti-preprocessing category, and overlapping characters from the anti-segmentation category. Deformation and blurring were never applied together, because preliminary tests showed that combining them reduced text readability. The final, randomly added feature increased variation. As a result, each CAPTCHA contained exactly seven active features: five fixed, one from the deformation/blurring pair, and one random. This approach ensured a balanced distribution of security elements while maintaining acceptable readability. Although this configuration simulates real-world CAPTCHA designs, it limits the ability to isolate the effect of each individual distortion type. Future versions of the dataset will include controlled subsets to evaluate single-factor impacts.
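The semi-random selection rule can be sketched as follows. The five fixed features and the exclusive pair use the names given in the text; the six names in `OPTIONAL` are hypothetical placeholders, since the full list of 13 features appears only in Table 2. We read “the remaining options” as the six features that are neither fixed nor part of the deformation/blurring pair.

```python
import random

# Fixed features and exclusive pair as named in the text (spelling as in the study).
FIXED = ["wrapping", "distortion", "warping", "rotation", "overlapping"]
EXCLUSIVE_PAIR = ["deformation", "blurring"]
# HYPOTHETICAL placeholder names for the six remaining features listed in Table 2.
OPTIONAL = ["hollow", "two_layer", "scaling", "twisting", "noise_lines", "background_texture"]

def select_features(rng=random):
    """Return the 7 active features: 5 fixed, 1 of the exclusive pair, 1 random extra."""
    chosen = list(FIXED)
    chosen.append(rng.choice(EXCLUSIVE_PAIR))  # deformation XOR blurring
    chosen.append(rng.choice(OPTIONAL))        # one extra feature for variation
    return chosen

print(select_features(random.Random(42)))
```

Under this reading, the procedure can produce only 2 × 6 = 12 distinct seven-feature configurations out of the C(13,7) = 1716 possible, which is consistent with the stated trade-off: enough user inputs per configuration, at the cost of isolating single-feature effects.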

To create the dataset, CAPTCHAs were added to the user login process for the online exams held after in-service training sessions at a public university. Each time a user attempted to log in, the system generated a CAPTCHA meeting the specified security criteria and stored it in the database in base64 format. Data from a total of 2780 anonymized user sessions were included in the analysis. For each CAPTCHA displayed, the system recorded whether it was solved correctly or incorrectly, along with its security features. Multiple incorrect entries for the same CAPTCHA indicate that a user made several attempts to solve it. In total, the dataset contains 45,166 text-based CAPTCHAs and 67,758 operation log entries. The features captured for each log entry are listed in Table 3.
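Because one CAPTCHA can appear in several log entries, human usability metrics must be aggregated per CAPTCHA. The sketch below assumes a simplified log of `(captcha_id, solved_correctly)` tuples in chronological order; these field names are hypothetical and do not reproduce the schema in Table 3.

```python
from collections import defaultdict

def summarize_attempts(log):
    """Aggregate (captcha_id, solved_correctly) log entries per CAPTCHA.

    Hypothetical simplified schema; entries are assumed to be in time order.
    """
    attempts = defaultdict(list)
    for captcha_id, correct in log:
        attempts[captcha_id].append(bool(correct))
    n = len(attempts)
    return {
        "captchas": n,
        # share of CAPTCHAs answered correctly on the first try
        "first_try_rate": sum(o[0] for o in attempts.values()) / n,
        # share of CAPTCHAs answered correctly on any attempt
        "eventual_rate": sum(any(o) for o in attempts.values()) / n,
        "mean_attempts": sum(len(o) for o in attempts.values()) / n,
    }

log = [("c1", False), ("c1", True), ("c2", True), ("c3", False)]
print(summarize_attempts(log))
```

Applied to the reported totals, 67,758 log entries over 45,166 CAPTCHAs correspond to about 1.50 entries per CAPTCHA on average.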

Table 3.

Feature sections of the generated dataset.

Each CAPTCHA image was assigned a unique identifier (CAPTCHA_ID). The CNN model and the human participants attempted the same set of 45,166 CAPTCHA images: the CNN first attempted all images, and the same images were subsequently shown to human users through the web interface. Because a single image could receive multiple user attempts, these interactions produced 67,758 log entries. Human responses were matched to their corresponding CAPTCHA_IDs, allowing a direct comparison of human and machine performance on identical samples.
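One way to express this matching step is sketched below using only the standard library. The field names `captcha_id` and `human_correct` are hypothetical stand-ins for the columns listed in Table 3. The sketch shows that every human log entry inherits the single CNN outcome of its image, so an image attempted several times contributes several rows to any per-entry statistic.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class LogEntry:
    captcha_id: str        # hypothetical field names, not the real Table 3 schema
    human_correct: bool

def join_logs(logs, cnn_correct):
    """Attach the per-image CNN outcome to every human log entry for that image."""
    joined = []
    for entry in logs:
        if entry.captcha_id not in cnn_correct:
            raise KeyError(f"No CNN result for {entry.captcha_id}")
        joined.append((entry.captcha_id, entry.human_correct,
                       cnn_correct[entry.captcha_id]))
    return joined

# Image c1 was attempted twice by users, image c2 once.
logs = [LogEntry("c1", False), LogEntry("c1", True), LogEntry("c2", False)]
cnn = {"c1": True, "c2": True}
rows = join_logs(logs, cnn)
attempts = Counter(entry.captcha_id for entry in logs)
print(len(rows), attempts["c1"])
```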

In this study, all experiments were conducted within an operational CAPTCHA system used by real users under authentic deployment conditions. The interaction data were collected from live user sessions rather than simulated environments, ensuring that the evaluation reflects real-world user behavior.

3.3. Convolutional Neural Network-Based CAPTCHA Solving Model

CAPTCHA systems are widely used as a security measure against bot attacks on the internet, and both humans and machine learning algorithms now attempt to solve them. Traditional CAPTCHA systems aim to filter out bots by relying on humans' ability to recognize visual or textual patterns. However, with the significant advances in deep learning in recent years, machines have become increasingly capable of solving these tests [47]. Convolutional neural networks (CNNs) have achieved remarkable success, particularly in image processing, and are recognized as one of the most powerful techniques in modern machine learning [48,49,50]. Because CNNs share weights across spatial positions, they require far fewer parameters than fully connected networks, which simplifies optimization and allows faster convergence [51]. Owing to their ability to learn, abstract, and recognize visual patterns, CNNs offer significant advantages in tasks such as CAPTCHA solving [52].

Based on these advantages, a CNN-based solver was adopted in this study, since convolutional architectures represent the most widely used and reliable baseline for CAPTCHA recognition. This allows direct comparison with prior works and reliable benchmarking. Human performance data were collected in parallel to reflect real usability, enabling a dual-perspective evaluation of machine solvability versus human readability under identical conditions. Alternative solvers such as Transformer-based models are considered for future extensions.

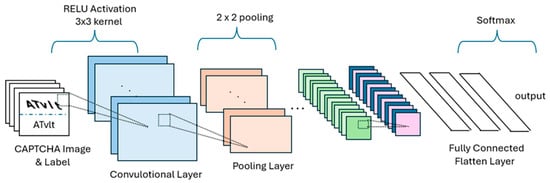

CNN-based models take CAPTCHA images as input, pass them through a series of transformations that extract meaningful features, and ultimately generate probability predictions for each CAPTCHA character to reconstruct the correct text. In the CNN model developed for this study, successive layers process the image at increasing levels of abstraction, enabling the model to learn character patterns. These layers are summarized in Figure 3.

Figure 3.

An overview of the CAPTCHA solving model based on CNN.

A single CNN architecture, summarized in Figure 3, was employed to evaluate the machine solvability of text-based CAPTCHAs. This design choice was made to maintain a controlled comparison between human and machine performance rather than to benchmark different model families. While this limits architectural diversity, it enables a more focused analysis of human–machine solvability under uniform model conditions.

Model Training and Validation Configuration

In this study, a convolutional neural network (CNN) model was trained on the dataset produced at our university. The dataset consists of 45,166 CAPTCHA images, each resized to 50 × 200 pixels and converted to single-channel grayscale. The images were randomly divided into training (80%), validation (10%), and test (10%) subsets, so that 90% of the data was used for model development and the remaining 10% was held out for testing.

No additional data augmentation was applied because the dataset already contained a sufficiently large number of samples, ensuring adequate variability for training and evaluation. Before training, all images were normalized by scaling pixel intensity values from the 0–255 range to the [0, 1] range.
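The split and normalization steps can be sketched with NumPy as below. Drawing a single shuffled index permutation with `np.random.default_rng(42)` is an assumption; the text states only the proportions and the seed value, not how the split was implemented.

```python
import numpy as np

def split_indices(n_samples, seed=42):
    """Shuffle indices once, then cut them into 80% train, 10% validation, 10% test."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(0.8 * n_samples)
    n_val = int(0.1 * n_samples)
    train = order[:n_train]
    val = order[n_train:n_train + n_val]
    test = order[n_train + n_val:]          # remainder, absorbing rounding
    return train, val, test

def normalize(images):
    """Scale uint8 intensities from 0-255 to [0, 1] and append a channel axis."""
    return (np.asarray(images).astype(np.float32) / 255.0)[..., np.newaxis]

train, val, test = split_indices(45_166)
print(len(train), len(val), len(test))
```

Using one permutation guarantees that the three subsets are disjoint, so no image used for testing was seen during training.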

Each CAPTCHA contains five characters, and every character belongs to one of 36 possible classes (26 lowercase letters + 10 digits). The target labels were therefore encoded as (5 × 36) one-hot matrices, and the output layer of the CNN produced a matching (5 × 36) array of class probabilities, one row per character position.
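The label encoding can be illustrated with a short NumPy sketch. The ordering of the 36-character alphabet (lowercase letters first, then digits) is an assumption; any fixed ordering works as long as encoding and decoding share it.

```python
import string

import numpy as np

# 36-character alphabet: 26 lowercase letters + 10 digits (ordering is an assumption).
ALPHABET = string.ascii_lowercase + string.digits
CHAR_TO_INDEX = {ch: i for i, ch in enumerate(ALPHABET)}
N_CHARS, N_CLASSES = 5, len(ALPHABET)

def encode_label(text):
    """Turn a 5-character answer into a (5, 36) one-hot matrix."""
    if len(text) != N_CHARS:
        raise ValueError(f"expected {N_CHARS} characters, got {len(text)}")
    one_hot = np.zeros((N_CHARS, N_CLASSES), dtype=np.float32)
    for position, ch in enumerate(text):
        one_hot[position, CHAR_TO_INDEX[ch]] = 1.0
    return one_hot

def decode_prediction(probs):
    """Pick the most probable class at each of the 5 positions."""
    return "".join(ALPHABET[i] for i in np.argmax(probs, axis=-1))

label = encode_label("a7k2z")
print(label.shape, decode_prediction(label))
```

The same `decode_prediction` function applies unchanged to the CNN's (5 × 36) softmax output, since `argmax` over the last axis selects the most likely character at each position.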

The model was trained using the Adam optimizer with an initial learning rate of 0.001 for up to 100 epochs, using mini-batches of 32 samples. Early stopping halted training when the validation loss did not improve for 10 consecutive epochs.
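The patience-based stopping rule can be made concrete with a small framework-free sketch. It roughly mirrors the behavior of a Keras `EarlyStopping(monitor="val_loss", patience=10)` callback, but it is an illustration of the rule, not the library's implementation; the validation-loss curve used here is hypothetical.

```python
def early_stopping_epoch(val_losses, patience=10, max_epochs=100, min_delta=0.0):
    """Return (epochs_run, best_epoch) for a patience-based early-stopping rule.

    Training stops once the validation loss has failed to improve on the best
    value seen so far for `patience` consecutive epochs, or after `max_epochs`.
    Epochs are 1-indexed.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    epochs_run = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        epochs_run = epoch
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return epochs_run, best_epoch

# Hypothetical curve: loss improves for 20 epochs, then plateaus.
losses = [1.0 / e for e in range(1, 21)] + [0.06] * 80
print(early_stopping_epoch(losses))
```

With a patience of 10, a model whose validation loss last improved at epoch 20 stops after epoch 30, well before the 100-epoch ceiling.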

To ensure reproducibility of results, all random processes were fixed by setting the random seed to 42 for NumPy (v2.3), Python (v3.12), and TensorFlow (v2.18) environments.

3.4. Assessing CAPTCHA Features for Security and Usability

CAPTCHAs, widely used in security systems, are designed to distinguish between humans and machines. However, advancements in artificial intelligence and machine learning have made solving CAPTCHAs easier, potentially reducing their effectiveness. This study investigates how different CAPTCHA features impact human readability and machine solvers’ ability to bypass them. To achieve this, a real dataset was created, and factors affecting both human and machine performance were analyzed.

During the analysis, Pearson correlation analysis and the SHAP (SHapley Additive exPlanations) method were employed to assess the impact of CAPTCHA features on human and machine performance. The Pearson correlation coefficient identifies linear relationships between variables and was used to measure how strongly specific CAPTCHA features are associated with human and machine performance. The Pearson correlation matrix presents pairwise linear relationships between all variables in a dataset [53], quantifying these relationships through correlation coefficients [54]. However, because this method captures only linear relationships, it may overlook more complex interactions. Therefore, SHAP analysis was applied to evaluate the contribution of each CAPTCHA feature to human and machine performance [55]. SHAP measures the marginal contribution of each feature to the model's prediction for a given dataset instance, averaged over all possible combinations of the other features [56]. Based on Shapley values, this approach quantifies the individual effect of each CAPTCHA feature on solving performance, identifying which factors make CAPTCHAs harder for humans while facilitating machine solvers, or vice versa.
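The Shapley value underlying SHAP can be computed exactly for a handful of features by enumerating every coalition. The brute-force sketch below illustrates the definition only: the SHAP library uses faster model-specific approximations, and the toy value function, including its scores, is entirely hypothetical rather than derived from this study's data.

```python
from itertools import combinations
from math import factorial

def shapley_values(players, value):
    """Exact Shapley values by enumerating every coalition (feasible for few features).

    phi_i = sum over S not containing i of |S|! (n - |S| - 1)! / n! * (v(S + {i}) - v(S))
    """
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi

# Hypothetical value function in which two features interact.
def toy_value(coalition):
    score = 0.0
    if "color_variation" in coalition:
        score += 0.5
    if "hollow_scheme" in coalition:
        score += 0.2
    if {"color_variation", "noisy_background"} <= coalition:
        score += 0.2   # interaction credit, shared equally by the pair
    return score

features = ["color_variation", "hollow_scheme", "noisy_background"]
phi = shapley_values(features, toy_value)
print({name: round(v, 3) for name, v in phi.items()})
```

The interaction term is split evenly between the two participating features, and the attributions sum to the difference between the full-coalition and empty-coalition values, which is the additivity property that gives SHAP its name.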

In this study, Pearson correlation analysis was used to determine linear relationships, whereas SHAP analysis provided deeper insights into how specific features influence CAPTCHA solving performance. The findings contribute to improving CAPTCHA designs, making them more secure while ensuring they remain readable for humans but challenging for automated systems. To ensure the statistical validity and robustness of this analytical approach, the following methodological clarifications are necessary:

In the correlation analysis, Pearson’s r was applied only to continuous variables such as accuracy rates. For binary or categorical features, the correlation values were interpreted as indicative trends rather than strict linear measures. No data reweighting or statistical normalization was performed; correlations were evaluated in proportional terms to describe general tendencies.
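When both variables are binary (feature present or absent, solved or not), Pearson's r reduces to the phi coefficient of their 2 × 2 contingency table. This is one reason such values are better read as association trends. The NumPy sketch below checks that equivalence on hypothetical presence indicators.

```python
import numpy as np

def pearson_matrix(columns):
    """Pairwise Pearson correlation matrix; each dict entry is one variable."""
    names = list(columns)
    data = np.vstack([np.asarray(columns[name], dtype=float) for name in names])
    return names, np.corrcoef(data)   # rows of `data` are variables

def phi_coefficient(x, y):
    """Phi coefficient computed from the 2x2 contingency table of two binary variables."""
    x = np.asarray(x, dtype=bool)
    y = np.asarray(y, dtype=bool)
    # Cast counts to float so the product below cannot overflow on large logs.
    n11 = float(np.sum(x & y))
    n10 = float(np.sum(x & ~y))
    n01 = float(np.sum(~x & y))
    n00 = float(np.sum(~x & ~y))
    denom = np.sqrt((n11 + n10) * (n01 + n00) * (n11 + n01) * (n10 + n00))
    return (n11 * n00 - n10 * n01) / denom

# Hypothetical presence indicators for two features across eight log entries.
color_variation = [1, 1, 0, 0, 1, 0, 1, 0]
hollow_scheme = [0, 1, 1, 0, 0, 1, 0, 1]
names, r = pearson_matrix({"color_variation": color_variation,
                           "hollow_scheme": hollow_scheme})
print(names, round(r[0, 1], 3), round(phi_coefficient(color_variation, hollow_scheme), 3))
```

A feature that is present in every record, such as the five fixed features, has zero variance, so its Pearson correlation with any other variable is undefined (`np.corrcoef` returns NaN). This is consistent with excluding those features from individual analysis.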

To ensure the robustness of the SHAP-based interpretation, the model was trained three times using different random seeds. The resulting feature importance rankings remained consistent, with variance below 0.02 across runs. Additionally, pairwise feature dependencies were examined using Spearman's correlation, which showed low inter-feature collinearity (|ρ| < 0.35), supporting the stability of the SHAP attributions.
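Both checks can be sketched with NumPy alone. Spearman's ρ is computed as the Pearson correlation of tie-averaged ranks, which matters here because binary features contain many ties. The per-feature variance uses the population variance (`ddof=0`), which is an assumption, since the text does not say which estimator was used. The importance scores in the usage example are hypothetical.

```python
import numpy as np

def average_ranks(values):
    """1-based ranks in which tied values share the mean of their ranks."""
    x = np.asarray(values, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    inverse = inverse.ravel()
    return (np.bincount(inverse, weights=ranks) / counts)[inverse]

def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of tie-averaged ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

def max_abs_pairwise_rho(features):
    """Largest |rho| over all feature pairs; columns of `features` are features."""
    features = np.asarray(features, dtype=float)
    n = features.shape[1]
    return max(abs(spearman_rho(features[:, i], features[:, j]))
               for i in range(n) for j in range(i + 1, n))

def importance_variance(runs):
    """Per-feature variance of importance scores across runs (rows are random seeds)."""
    return np.var(np.asarray(runs, dtype=float), axis=0)

# Hypothetical mean |SHAP| scores for three features from three seeds.
runs = [[0.50, 0.20, 0.10],
        [0.52, 0.19, 0.11],
        [0.49, 0.21, 0.09]]
print(importance_variance(runs).max() < 0.02)
```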

Further statistical validation was performed to strengthen the reliability of the SHAP-based feature attributions. Bootstrap resampling with 1000 iterations was applied to estimate confidence intervals for the feature importance values, providing a measure of the statistical stability of the reported SHAP contributions. Moreover, multicollinearity among input features was evaluated using the variance inflation factor (VIF), confirming that no strong inter-feature dependencies biased the SHAP results. These procedures collectively enhance the statistical credibility and reproducibility of the interpretability analysis.
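A minimal NumPy sketch of both procedures follows. It assumes a percentile bootstrap of each feature's mean |SHAP| value and computes VIFs from the diagonal of the inverse correlation matrix, which equals 1 / (1 − R²ⱼ) for each feature j. The paper does not state which bootstrap variant or VIF implementation was used, and the inputs below are simulated.

```python
import numpy as np

def bootstrap_mean_abs_ci(shap_column, n_boot=1000, alpha=0.05, seed=42):
    """Percentile bootstrap CI for one feature's mean |SHAP| value."""
    rng = np.random.default_rng(seed)
    values = np.abs(np.asarray(shap_column, dtype=float))
    n = len(values)
    # Resample instances with replacement; one resample at a time keeps memory low.
    boot_means = np.array([values[rng.integers(0, n, size=n)].mean()
                           for _ in range(n_boot)])
    lower, upper = np.quantile(boot_means, [alpha / 2, 1 - alpha / 2])
    return float(values.mean()), float(lower), float(upper)

def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2), the j-th diagonal entry of the inverse correlation matrix."""
    corr = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    return np.diag(np.linalg.inv(corr))

# Hypothetical inputs: simulated SHAP values and three independent features.
rng = np.random.default_rng(0)
demo_shap = rng.normal(0.10, 0.05, size=2_000)
mean, lower, upper = bootstrap_mean_abs_ci(demo_shap)
independent = rng.normal(size=(5_000, 3))
print(round(mean, 3), round(lower, 3), round(upper, 3))
print(variance_inflation_factors(independent).round(3))
```

Independent features give VIFs close to 1. A commonly cited rule of thumb treats values above about 10 as a sign of strong multicollinearity.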

In the initial stage of the model, Conv2D layers are used to extract fundamental features from CAPTCHA images. These layers detect visual patterns such as edges, corners, curves, and textures. This study employed 32 filters of size 3 × 3, and the Rectified Linear Unit (ReLU) activation function was chosen. BatchNormalization was applied to normalize the output of each layer, enhancing the model’s stability. Normalization improves learning and generalization capabilities.

MaxPooling2D layers with a 2 × 2 window were used to reduce the size of the feature maps, keeping the maximum value within each window to preserve the strongest activations. To reduce overfitting, dropout randomly deactivated a proportion of neurons during training; a dropout rate of 10% (0.1) was used to encourage the model to learn diverse features. The multi-dimensional output of the convolutional and pooling layers was then flattened by a Flatten layer and passed to Dense layers, which combined the extracted features for final character classification.

In the final phase of the model, each character within the CAPTCHA was predicted separately. Because each CAPTCHA consists of five characters, a classifier with X classes was created for each character position, where X is the size of the character set (X = 36: 26 lowercase letters and 10 digits). The final Dense layer outputs 5 × X values, which are reshaped into a (5, X) array so that each position is predicted individually. A Softmax activation, applied over the X classes at each position, yields the probability distribution from which the class of each character is determined.
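The layer sequence described above, combined with the settings from the Model Training and Validation Configuration subsection, can be sketched in TensorFlow/Keras. Several details are assumptions because the text does not specify them: the number of convolutional blocks (two), `"same"` padding, and the 128-unit hidden Dense layer. The sketch is therefore not the authors' exact model.

```python
import numpy as np
import tensorflow as tf

layers = tf.keras.layers
N_CHARS, N_CLASSES = 5, 36          # five positions; 26 lowercase letters + 10 digits
INPUT_SHAPE = (50, 200, 1)          # grayscale 50 x 200 images

def conv_block(x, filters=32):
    """Conv2D(3x3, ReLU) -> BatchNormalization -> MaxPooling2D(2x2) -> Dropout(0.1)."""
    x = layers.Conv2D(filters, (3, 3), padding="same", activation="relu")(x)
    x = layers.BatchNormalization()(x)
    x = layers.MaxPooling2D((2, 2))(x)
    return layers.Dropout(0.1)(x)

def build_solver(n_blocks=2, dense_units=128):
    """Hypothetical depth and width; the text does not state how many blocks were stacked."""
    inputs = tf.keras.Input(shape=INPUT_SHAPE)
    x = inputs
    for _ in range(n_blocks):
        x = conv_block(x)
    x = layers.Flatten()(x)
    x = layers.Dense(dense_units, activation="relu")(x)
    x = layers.Dense(N_CHARS * N_CLASSES)(x)       # 5 x 36 values in one vector
    x = layers.Reshape((N_CHARS, N_CLASSES))(x)     # -> (5, 36)
    outputs = layers.Softmax(axis=-1)(x)            # per-position class probabilities
    return tf.keras.Model(inputs, outputs)

tf.keras.utils.set_random_seed(42)   # seeds Python, NumPy and TensorFlow together
model = build_solver()
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
    loss="categorical_crossentropy",
    metrics=["accuracy"],
)
early_stop = tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=10)
# Training call (data arrays not shown):
# model.fit(x_train, y_train, validation_data=(x_val, y_val),
#           epochs=100, batch_size=32, callbacks=[early_stop])

probs = model(np.zeros((1, *INPUT_SHAPE), dtype="float32"))
print(probs.shape)
```

Applying the softmax after the reshape, rather than directly to the Dense output, ensures that probabilities sum to one separately at each of the five character positions.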

4. Results and Discussion

This section presents a detailed analysis of CAPTCHA security and usability using our dataset. We examine the impact of various security features on human and machine performance, highlighting key trends in CAPTCHA effectiveness. Our findings suggest a critical challenge in CAPTCHA design: while modern CAPTCHA solvers powered by machine learning have significantly improved, human accuracy remains relatively low. This paradox necessitates a reevaluation of CAPTCHA security mechanisms to balance robustness against automated attacks while ensuring usability for human users.

Before detailing the feature-by-feature analysis, it is useful to note some general usability metrics and limitations identified in this study. In addition to accuracy, the average solving time per participant was 8.15 s, providing an additional measure of CAPTCHA usability. However, user abandonment rates could not be systematically measured in this study and are identified as a limitation. Furthermore, since all CAPTCHA samples combined multiple distortions such as warping, rotation, and overlapping characters, isolating their individual effects was not possible. Future experiments will include single-factor CAPTCHA variants, comprehensive timing data, and abandonment metrics to better quantify human usability and the impact of individual distortion types.

Table 4 presents the number of CAPTCHA instances in which each security feature was applied (True) and those where it was not used (False). This distribution reflects a carefully structured dataset designed to evaluate the impact of different security features on usability and security.

Table 4.

Distribution of CAPTCHA security features in the dataset.

As shown in Table 4, certain features such as warping, overlapping, rotation, wrapping, and distortion were applied to all CAPTCHA instances (67,758 True, 0 False) and were excluded from individual feature-isolation analysis. Meanwhile, other features such as textured background, connected characters, hollow scheme, color variation, multi-layer structure, and noisy background were present in varying proportions. This distribution indicates that the dataset was carefully balanced to test the effectiveness of different CAPTCHA security features in resisting automated attacks.

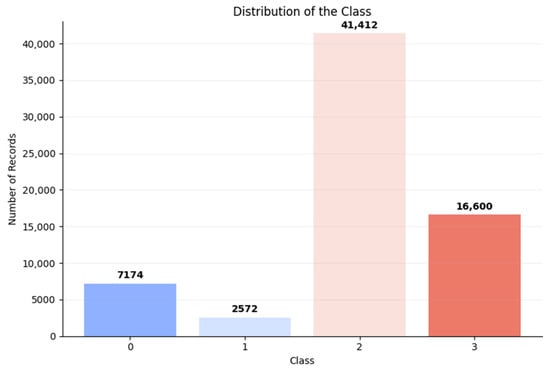

To further evaluate CAPTCHA security, we assessed solving performance by both humans and machines, categorizing the outcomes into four distinct classes, as presented in Table 5. The distribution of records across these classes is illustrated in Figure 4.

Table 5.

Classification of CAPTCHA solving performance by humans and machines.

Figure 4.

Distribution of CAPTCHA classification in the dataset.

It is important to clarify that the four classes presented in Table 5 and Figure 4 were defined after model inference, for comparative analysis only. Classes 1 and 2 represent cases in which only the human or only the CNN solver succeeded, and they were the focus of the SHAP and correlation analyses. Classes 0 (both succeeded) and 3 (both failed) were excluded because they provide limited interpretive value. Since these classes were created post hoc and were not used in CNN training, class imbalance does not affect the model. Differences in the number of samples between Classes 1 and 2 were treated descriptively, by comparing proportions rather than applying statistical weighting.
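The post hoc labeling can be sketched as a pure function of the two outcomes recorded for each log entry. The class codes follow Table 5, while the function names and the sample rows are illustrative.

```python
from collections import Counter

# Class codes as defined in Table 5.
CLASS_NAMES = {
    0: "both succeed",
    1: "human succeeds, machine fails",
    2: "machine succeeds, human fails",
    3: "both fail",
}

def outcome_class(human_correct, machine_correct):
    """Post hoc label for one log entry; never used as a CNN training target."""
    if human_correct and machine_correct:
        return 0
    if human_correct:
        return 1
    if machine_correct:
        return 2
    return 3

def class_proportions(rows):
    """Share of Class 1 and Class 2 among the entries kept for SHAP/correlation analysis."""
    labels = [outcome_class(h, m) for h, m in rows]
    kept = Counter(label for label in labels if label in (1, 2))
    total = sum(kept.values())
    return {label: kept[label] / total for label in (1, 2)} if total else {}

# Hypothetical (human_correct, machine_correct) pairs.
rows = [(True, False), (False, True), (False, True), (True, True), (False, False)]
print([outcome_class(h, m) for h, m in rows], class_proportions(rows))
```

Reporting proportions within the retained subset, rather than reweighting, matches the descriptive treatment of the Class 1/Class 2 size difference.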

Following this classification, we examined how each security feature in the dataset influenced different CAPTCHA classifications, particularly focusing on how CAPTCHA complexity affects humans and machines differently. This analysis provides crucial insights into improving both security and usability in CAPTCHA design.

To quantify these effects, we analyzed a total of 67,758 CAPTCHA records during the initial evaluation phase and found that the CNN-based machine learning model correctly solved 48,586 instances, whereas human users solved only 9,746. Based on these numbers, the overall machine accuracy was 71.71%, while human accuracy was markedly lower at just 14.38%.
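The reported percentages can be reproduced directly from the counts. Note that the denominator is the 67,758 log entries, including repeated attempts on the same image, not the 45,166 unique images.

```python
TOTAL_RECORDS = 67_758     # log entries analyzed
MACHINE_CORRECT = 48_586   # entries the CNN solved
HUMAN_CORRECT = 9_746      # entries users solved

def accuracy_percent(correct, total):
    """Share of correct entries, as a percentage rounded to two decimals."""
    return round(100.0 * correct / total, 2)

machine_acc = accuracy_percent(MACHINE_CORRECT, TOTAL_RECORDS)
human_acc = accuracy_percent(HUMAN_CORRECT, TOTAL_RECORDS)
ratio = round(MACHINE_CORRECT / HUMAN_CORRECT, 1)   # machine successes per human success
print(machine_acc, human_acc, ratio)
```

The CNN therefore solved roughly five entries for every one solved by a human.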

This performance gap between humans and machines can be largely attributed to methodological and dataset differences. In our experiment, any case where a user refreshed the CAPTCHA without submitting an answer was also recorded as a failed attempt, as this reflects real-world usability difficulty. Furthermore, our CAPTCHA dataset included a larger number of concurrent security features than prior works. For example, Chatrangsan and Tangmanee [40] achieved 88% accuracy using only three visual parameters (three letter spacings × two line orientations × two line colors), whereas every CAPTCHA in our dataset combined seven concurrent security features. In addition, our participant pool consisted of users from a broad range of ages and educational backgrounds, rather than only university students, providing a more realistic measure of usability.

These findings indicate that while CAPTCHA systems are designed to distinguish humans from automated bots, modern machine learning models now outperform human users in solving them. This paradox suggests that CAPTCHAs, instead of filtering out automated programs, may increasingly exclude human users. Consequently, CAPTCHA security must be evaluated not only in terms of differentiating humans from bots but also in terms of its resilience against machine learning-based solvers.

The high success rate of machine-based CAPTCHA solvers highlights potential security vulnerabilities in current CAPTCHA designs. These results emphasize the necessity of updating and improving CAPTCHA mechanisms to maintain their effectiveness. Given that machine learning models can solve most existing CAPTCHAs, there is a growing need for more sophisticated and adaptive CAPTCHA strategies. Additionally, usability improvements should be considered to enhance human success rates and overall user experience.

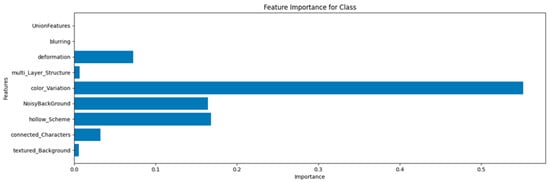

To gain further insight, we conducted a more detailed analysis of the CAPTCHA classes defined in Table 5, identifying the most effective security features. Since warping, overlapping, rotation, wrapping, and distortion were applied together in all CAPTCHA instances, we represented them as UnionFeatures in Figure 5. The most influential features impacting human performance, regardless of machine accuracy, are illustrated in Figure 5.

Figure 5.

Most influential features in class determination.

Figure 5 illustrates the impact of different security features on CAPTCHA classification using a CNN-based analysis. The results indicate that color variation (0.55) has the most significant influence on CAPTCHA difficulty, making it the strongest determining factor. Additionally, hollow scheme (0.167) and noisy background (0.164) also play a crucial role in enhancing security. Deformation (0.072) has a moderate effect, whereas connected characters (0.032) and multi-layer structure (0.006) contribute minimally to classification outcomes. On the other hand, textured background (0.005) and blurring (0.0) show little to no impact, suggesting that these features do not effectively differentiate between human and machine solvers.

This analysis demonstrates that color variation, noisy backgrounds, and hollow schemes significantly influence CAPTCHA classification. In contrast, some commonly used security measures, such as blurring and textured backgrounds, appear to have little effect on performance. These findings indicate that CAPTCHA designers should prioritize features that make CAPTCHAs harder for machines but remain legible for human users.

Since the ideal outcome is Class 1 (humans succeed, machines fail) and the least desirable outcome is Class 2 (machines succeed, humans fail), we conducted a class-based analysis to determine which features most effectively challenge machines while minimizing human difficulty. This analysis provides a deeper understanding of how CAPTCHA designs can be optimized to balance security and usability effectively.

4.1. Class-Based Analyses

4.1.1. Humans Succeed, Machines Fail (Class 1)

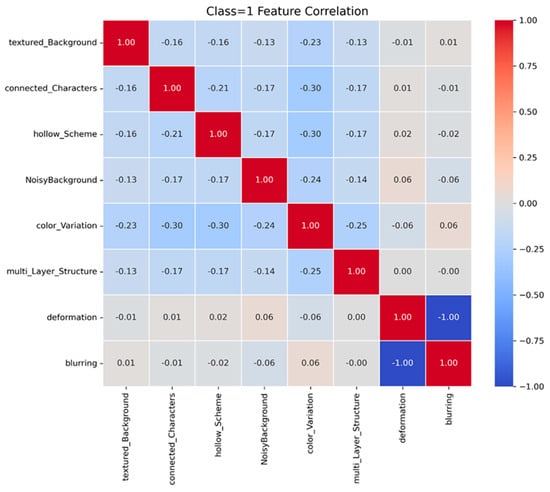

Figure 6 illustrates correlations among security features for Class 1 CAPTCHAs. Notably, color variation, connected characters, and hollow scheme show negative correlations with each other (e.g., −0.30). When these features are used in combination, human accuracy may be adversely affected. CAPTCHA design should balance features that are difficult for machines but still reasonably solvable by humans.

Figure 6.

Class 1 feature correlation.

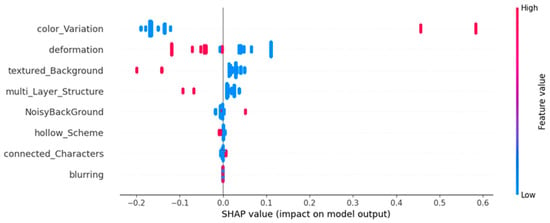

Figure 7 illustrates the SHAP values describing each feature's impact on the model, where higher SHAP values indicate a stronger positive effect on human success (in this type of plot, point color encodes the feature value, with red denoting high values). Color variation emerges as the most influential feature; where its SHAP values are high, the predicted likelihood of human success increases substantially. Deformation, textured background, multi-layer structure, and noisy background also contribute to human success, though their impact is less consistent. In contrast, connected characters and hollow scheme occasionally show negative SHAP values, suggesting that in certain contexts these features make CAPTCHAs more challenging for humans. Blurring, on the other hand, has virtually no effect on the model's predictions, indicating its minimal relevance in distinguishing human solvers from machines.

Figure 7.

SHAP values for Class 1.

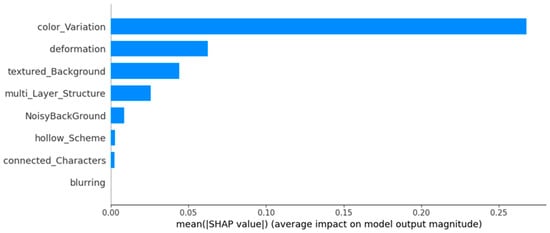

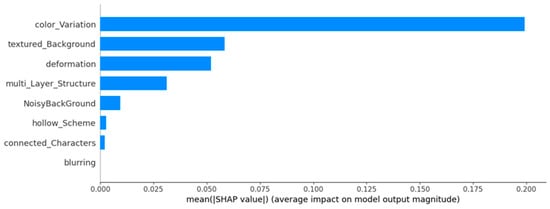

Figure 8 presents the average SHAP values for each feature in Class 1. Color variation stands out as the most impactful, followed by deformation and textured background. Multi-layer structure and noisy background have lesser influence, while hollow scheme, connected characters, and blurring show minimal effects. Overall, this suggests that features such as color variation and shape distortion are more decisive in enhancing security without overly compromising human usability.

Figure 8.

Mean SHAP values for Class 1.

4.1.2. Machines Succeed, Humans Fail (Class 2)

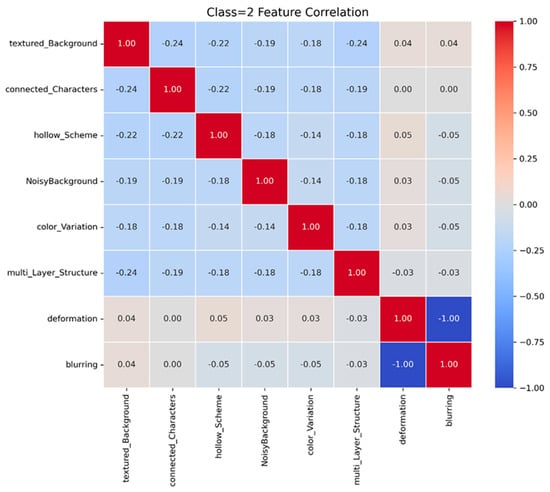

Figure 9 displays correlations among security features for Class 2 CAPTCHAs. Textured background, connected characters, hollow scheme, and multi-layer structure exhibit notable negative correlations. When combined, they may reduce machine accuracy. Meanwhile, color variation and noisy background also appear to limit machine success but must be balanced carefully to avoid excessively hindering human solvers.

Figure 9.

Class 2 feature correlation.

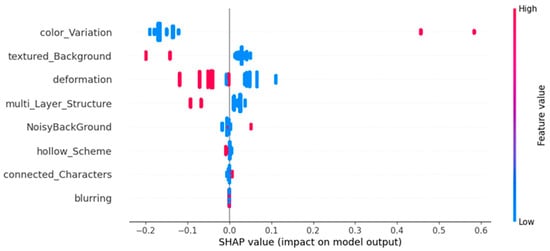

Figure 10 presents the SHAP values for each feature in Class 2, highlighting their influence on machine performance. High SHAP values for color variation significantly increase the model's confidence, indicating that these CAPTCHAs are easier for machines to solve. Additionally, textured background, deformation, and multi-layer structure contribute to the model's predictive strength, further reinforcing machine success. In contrast, hollow scheme, connected characters, and blurring have minimal impact, suggesting that some traditional security measures may no longer be as effective against modern machine learning-based attacks.

Figure 10.

SHAP values for Class 2.

Figure 11 presents the average SHAP values for Class 2, highlighting the features that contribute to making CAPTCHAs more solvable by machines. Color variation stands out as the most dominant factor, significantly increasing machine success. Textured background and deformation also play notable roles, though their influence is somewhat weaker. Multi-layer structure and noisy background exhibit moderate effects, while hollow scheme, connected characters, and blurring remain the least impactful, indicating their limited effectiveness in resisting machine-based solvers.

Figure 11.

Mean SHAP values for Class 2.

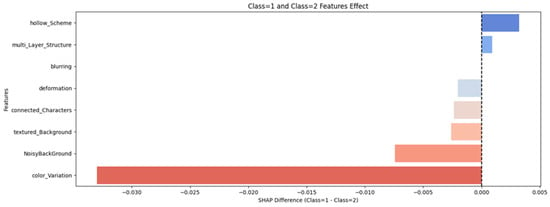

4.1.3. Key Feature Differences Between Human-Solvable and Machine-Solvable CAPTCHAs

Figure 12 compares Class 1 (humans succeed, machines fail) and Class 2 (machines succeed, humans fail), emphasizing the most decisive features in CAPTCHA classification. Color variation has the strongest influence, shifting outcomes toward Class 2, suggesting that increased color variation makes CAPTCHAs more challenging for humans while remaining relatively easier for machines. Similarly, noisy background, textured background, and connected characters contribute to this shift, indicating that these features tend to hinder human solvers more than machine solvers. In contrast, hollow scheme and multi-layer structure show a positive correlation with Class 1, meaning that they assist human users while posing greater difficulty for automated solvers. Blurring and deformation, on the other hand, exhibit minimal overall impact on CAPTCHA classification, suggesting that they are less influential in distinguishing between human and machine performance.

Figure 12.

SHAP impact of features on Class 1 vs. Class 2 CAPTCHA classification.

These observations were further examined using SHAP analysis. Because SHAP values were computed separately for each outcome class, a single feature can contribute positively to two opposing outcomes. For example, color variation improves human readability (positive SHAP in Class 1) but simultaneously enhances CNN recognition (positive SHAP in Class 2). This dual effect indicates that some visual cues benefit both perception systems in different ways. Future extensions will incorporate bootstrap confidence intervals and collinearity checks to quantify SHAP stability.

Qualitatively, multi-layer and hollow effects also improved human readability more than color–noise combinations did, suggesting that controlled deformation is preferable to random visual clutter.

In summary, adding color variation or a noisy background tends to disadvantage human users while benefiting automated solvers. On the other hand, features like hollow scheme and multi-layer structure favor human success while challenging machines. To develop effective and user-friendly CAPTCHA systems, it is essential to prioritize features that strengthen Class 1 while carefully managing those that contribute to Class 2.

4.2. Ethical and Privacy Considerations

The collection and analysis of human response data adhered strictly to privacy principles. All data used in this study were derived from pre-existing interaction logs generated during online exams. No demographic or personally identifying information was recorded. All data were fully anonymized prior to analysis, so that no user interaction could be traced back to an individual. The analysis was conducted solely on these anonymized datasets, in line with data minimization principles.

5. Conclusions and Future Work

This study presents a comprehensive analysis comparing the ability of humans and machines to solve CAPTCHAs, utilizing real-world data to ensure practical relevance. The experimental findings support the hypothesis that each additional feature incorporated during CAPTCHA generation increases the difficulty of recognition for both human users and automated systems. By systematically analyzing how different CAPTCHA features affect readability, this research identifies optimal feature combinations that effectively enhance resistance against automated solvers while preserving human usability. Furthermore, the study provides valuable insights into the design of human-solvable but machine-resistant CAPTCHAs, which are essential for strengthening cybersecurity measures against adversarial attacks. A key contribution of this work is the identification of feature correlations that play a decisive role in determining the complexity and effectiveness of different CAPTCHA classes.

The findings of this study highlight the disparity between human and machine performance in solving CAPTCHAs. Real-world dataset analysis reveals that while a moderately complex machine learning algorithm achieves over 70% success in CAPTCHA recognition, human participants successfully solve only about 14%. Furthermore, specific CAPTCHA features influence human and machine performance differently. Elements such as color variation, noisy background, textured background, connected characters, and deformation increase difficulty for both humans and machines, but they pose a greater challenge to human users. In contrast, features like hollow scheme and multi-layer structure primarily hinder automated solvers while having a lesser impact on human users. These insights emphasize the need for a strategic balance in CAPTCHA design, ensuring that security mechanisms remain effective against automated attacks while maintaining accessibility for legitimate users.

Building upon these results, the combination of CNN-based solver results and real human interaction data provides complementary insights. While the CNN solver quantifies computational vulnerability, human response data reveal usability constraints. This combined approach strengthens forensic interpretability and supports designing adaptive CAPTCHA mechanisms that balance security and human accessibility.

While this study focuses on a single CNN-based solver, it is important to note that different deep learning architectures may exhibit distinct recognition behaviors and robustness levels. For instance, ResNet models could offer better generalization under varying distortions due to their residual connections, whereas Transformer-based or hybrid CNN–RNN architectures might capture more complex sequential dependencies in text patterns. Future work will explore these architectures to provide a broader understanding of CAPTCHA vulnerability across diverse solver families.

As the experiments were performed in a live deployment, external factors such as network latency and device heterogeneity were naturally incorporated. However, future work will include a more systematic analysis of these environmental parameters to better quantify their influence on both human and machine performance.

Despite these complementary insights, the overall usability–security balance of text-based CAPTCHAs continues to decline. Even though design elements such as color variation and hollow schemes temporarily aid human perception, they also enhance CNN feature extraction. Building upon these findings, future research will focus on integrating Generative AI techniques to dynamically generate HIP challenges that optimize the balance between security and usability. The planned approach involves training generative models to create CAPTCHAs that adapt their complexity based on user interaction data while remaining resistant to state-of-the-art machine learning-based solvers, including deep learning and OCR-driven attacks. The effectiveness of these AI-generated CAPTCHAs will be assessed through performance evaluations, incorporating metrics such as accuracy, usability, and resilience against adversarial AI models. Moreover, future work will explore adaptive CAPTCHA mechanisms that leverage real-time behavioral analysis and adversarial threat detection to dynamically adjust CAPTCHA difficulty. This approach can improve security while ensuring an intuitive and seamless user experience. The insights gained from this study will serve as a foundation for the next generation of CAPTCHA systems, contributing to developing more robust, intelligent, and adaptive security mechanisms in the face of evolving cyber threats.

Funding

This research received no external funding.

Informed Consent Statement

This study involved a secondary analysis of pre-existing, fully anonymized log data. No personally identifiable information was collected or accessed by the researchers. Therefore, participant informed consent was not required for this type of retrospective analysis.

Data Availability Statement

The dataset and test code are accessible at this link: https://osf.io/yw7ar/?view_only=9d6bbed6ca74402793c3a387aba8146c (accessed on 19 March 2025).

Acknowledgments

We would like to express our special thanks to Harun Keçeci for his time and effort on the online exam system.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Hernández-Castro, C.J.; Barrero, D.F.; R-Moreno, M.D. BASECASS: A methodology for CAPTCHAs security assurance. J. Inf. Secur. Appl. 2021, 63, 103018. [Google Scholar] [CrossRef]

- Gao, Y.; Xu, G.; Li, L.; Luo, X.; Wang, C.; Sui, Y. Demystifying the underground ecosystem of account registration bots. In Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Singapore, 14–18 November 2022; ACM: Singapore, 2022; pp. 897–909. [Google Scholar] [CrossRef]

- Dave, K.T. Brute-force Attack “Seeking but Distressing”. Int. J. Innov. Eng. Technol. (IJIET) 2013, 2, 75–78. [Google Scholar]

- ASüzen, A. UNI-CAPTCHA: A Novel Robust and Dynamic User-Non-Interaction CAPTCHA Model Based on Hybrid biLSTM+Softmax. J. Inf. Secur. Appl. 2021, 63, 103036. [Google Scholar] [CrossRef]

- Kumarasubramanian, A.; Ostrovsky, R.; Pandey, O.; Wadia, A. Cryptography Using Captcha Puzzles. In Public-Key Cryptography—PKC 2013; Kurosawa, K., Hanaoka, G., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7778, pp. 89–106. [Google Scholar] [CrossRef]

- Von Ahn, L.; Blum, M.; Hopper, N.J.; Langford, J. CAPTCHA: Using Hard AI Problems for Security. In Advances in Cryptology—EUROCRYPT 2003; Biham, E., Ed.; in Lecture Notes in Computer Science; Springer: Berlin, Heidelberg, 2003; Volume 2656, pp. 294–311. [Google Scholar] [CrossRef]

- Igbekele, E.O.; Adebiyi, A.A.; Ibikunle, F.A.; Adebiyi, M.O.; Oludayo, O.O. Research trends on CAPTCHA: A systematic literature. Int. J. Electr. Comput. Eng. 2021, 11, 4300–4312.

- Dinh, N.T.; Hoang, V.T. Recent advances of Captcha security analysis: A short literature review. Procedia Comput. Sci. 2023, 218, 2550–2562.

- Johri, E.; Dharod, L.; Joshi, R.; Kulkarni, S.; Kundle, V. Video Captcha Proposition based on VQA, NLP, Deep Learning and Computer Vision. In Proceedings of the 2022 5th International Conference on Advances in Science and Technology (ICAST), Mumbai, India, 2–3 December 2022; IEEE: New York, NY, USA, 2022; pp. 196–200.

- Alqahtani, F.H.; Alsulaiman, F.A. Is image-based CAPTCHA secure against attacks based on machine learning? An experimental study. Comput. Secur. 2020, 88, 101635.

- Sukhani, K.; Sawant, S.; Maniar, S.; Pawar, R. Automating the Bypass of Image-based CAPTCHA and Assessing Security. In Proceedings of the 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kharagpur, India, 6–8 July 2021; IEEE: New York, NY, USA, 2021; pp. 1–8.

- Gaggi, O. A study on Accessibility of Google ReCAPTCHA Systems. In Open Challenges in Online Social Networks; ACM: Barcelona, Spain, 2022; pp. 25–30.

- Umar, M.; Ayyub, S. A Review on Evolution of various CAPTCHA in the field of Web Security. Int. J. Res. Appl. Sci. Eng. Technol. 2022, 10, 72–78.

- Zhang, J.; Tsai, M.-Y.; Kitchat, K.; Sun, M.-T.; Sakai, K.; Ku, W.-S.; Surasak, T.; Thaipisutikul, T. A secure annuli CAPTCHA system. Comput. Secur. 2023, 125, 103025.

- Wan, X.; Johari, J.; Ruslan, F.A. Adaptive CAPTCHA: A CRNN-Based Text CAPTCHA Solver with Adaptive Fusion Filter Networks. Appl. Sci. 2024, 14, 5016.

- Jeberson, W.; Jeberson, K. CAPTCHA: Impact of Users with Learning Disabilities, and Implementation of Dynamic Game based Captcha to Improve the Access for Users with Learning Disabilities. In Proceedings of the 2024 2nd International Conference on Disruptive Technologies (ICDT), Greater Noida, India, 15–16 March 2024; IEEE: New York, NY, USA, 2024; pp. 1624–1630.

- Mathai, A.; Nirmal, A.; Chaudhari, P.; Deshmukh, V.; Dhamdhere, S.; Joglekar, P. Audio CAPTCHA for Visually Impaired. In Proceedings of the 2021 International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Mauritius, Mauritius, 7–8 October 2021; IEEE: New York, NY, USA, 2021; pp. 1–5.

- Sheikh, S.A.; Banday, M.T. A Novel Animated CAPTCHA Technique based on Persistence of Vision. IJACSA 2022, 13.

- Acien, A.; Morales, A.; Fierrez, J.; Vera-Rodriguez, R. BeCAPTCHA-Mouse: Synthetic mouse trajectories and improved bot detection. Pattern Recognit. 2022, 127, 108643.

- Prince, M.; Isasi, S. Moving from reCAPTCHA to hCaptcha. The Cloudflare Blog. Available online: https://blog.cloudflare.com/moving-from-recaptcha-to-hcaptcha/ (accessed on 10 February 2025).

- Narayanaswamy, A. CAPTCHAs in reality: What might happen if they are easily broken? J. Stud. Res. 2022, 11.

- Ababtain, E.; Engels, D. Gestures Based CAPTCHAs the Use of Sensor Readings to Solve CAPTCHA Challenge on Smartphones. In Proceedings of the 2019 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 5–7 December 2019; IEEE: New York, NY, USA, 2019; pp. 113–119.

- Chang, G.; Gao, H.; Pei, G.; Luo, S.; Zhang, Y.; Cheng, N.; Tang, Y.; Guo, Q. The robustness of behavior-verification-based slider CAPTCHAs. J. Inf. Secur. Appl. 2024, 81, 103711.

- Gutub, A.; Kheshaifaty, N. Practicality analysis of utilizing text-based CAPTCHA vs. graphic-based CAPTCHA authentication. Multimed. Tools Appl. 2023, 82, 46577–46609.

- Bhuiyan, T.A.U.H.; Wadud, M.A.H.; Mustafa, H.A. Secured Text Based CAPTCHA using Customized CNN with Style Transfer and GAN based Approach. In Proceedings of the 2024 2nd International Conference on Information and Communication Technology (ICICT), Dhaka, Bangladesh, 21–22 October 2024; IEEE: New York, NY, USA, 2024; pp. 135–139.

- Chow, Y.-W.; Susilo, W.; Thorncharoensri, P. CAPTCHA Design and Security Issues. In Advances in Cyber Security: Principles, Techniques, and Applications; Li, K.-C., Chen, X., Susilo, W., Eds.; Springer: Singapore, 2019; pp. 69–92.

- Matsuura, Y.; Kato, H.; Sasase, I. Adversarial Text-Based CAPTCHA Generation Method Utilizing Spatial Smoothing. In Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM), Madrid, Spain, 7–11 December 2021; IEEE: New York, NY, USA, 2021; pp. 1–6.

- Ouyang, Z.; Zhai, X.; Wu, J.; Yang, J.; Yue, D.; Dou, C.; Zhang, T. A cloud endpoint coordinating CAPTCHA based on multi-view stacking ensemble. Comput. Secur. 2021, 103, 102178.

- Conti, M.; Pajola, L.; Tricomi, P.P. Turning captchas against humanity: Captcha-based attacks in online social media. Online Soc. Netw. Media 2023, 36, 100252.

- Bursztein, E.; Bethard, S.; Fabry, C.; Mitchell, J.C.; Jurafsky, D. How Good Are Humans at Solving CAPTCHAs? A Large Scale Evaluation. In Proceedings of the 2010 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 16–19 May 2010; IEEE: New York, NY, USA, 2010; pp. 399–413.

- Rahman, R.U. Survey on Captcha Systems. J. Glob. Res. Comput. Sci. 2012, 3, 54–58.

- Chellapilla, K.; Larson, K.; Simard, P.Y.; Czerwinski, M. Building Segmentation Based Human-Friendly Human Interaction Proofs (HIPs). In Human Interactive Proofs; Baird, H.S., Lopresti, D.P., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3517, pp. 1–26.

- Brodić, D.; Janković, R. Usability Analysis of the Specific Captcha Types. In International Scientific Conference; Technical University of Gabrovo: Gabrovo, Bulgaria, 2016.

- Bursztein, E.; Martin, M.; Mitchell, J.C. Text-Based Captcha Strengths and Weaknesses. In Proceedings of the 18th ACM Conference on Computer and Communications Security (CCS ’11), Chicago, IL, USA, 17–21 October 2011; ACM: New York, NY, USA, 2011; pp. 125–138.

- Alsuhibany, S.A. Evaluating the usability of optimizing text-based CAPTCHA generation. Int. J. Adv. Comput. Sci. Appl. 2016, 7. Available online: https://www.researchgate.net/profile/Suliman-Alsuhibany/publication/307547347_Evaluating_the_Usability_of_Optimizing_Text-based_CAPTCHA_Generation/links/57c8303a08aefc4af34eaeb6/Evaluating-the-Usability-of-Optimizing-Text-based-CAPTCHA-Generation.pdf (accessed on 10 April 2025).

- Raman, J.; Umapathy, K.; Huang, H. Security and User Experience: A Holistic Model for Captcha Usability Issues. In Proceedings of the Southern Association for Information Systems Conference, Atlanta, GA, USA, 23–24 March 2018.

- Brodić, D.; Amelio, A. Exploring the usability of the text-based CAPTCHA on tablet computers. Connect. Sci. 2019, 31, 430–444.

- Alsuhibany, S.A.; Alnoshan, A.A. Interactive Handwritten and Text-Based Handwritten Arabic CAPTCHA Schemes for Mobile Devices: A Comparative Study. IEEE Access 2021, 9, 140991–141001.

- Kumar, M.; Jindal, M.K.; Kumar, M. Design of innovative CAPTCHA for Hindi language. Neural Comput. Appl. 2022, 34, 4957–4992.

- Chatrangsan, M.; Tangmanee, C. Robustness and user test on text-based CAPTCHA: Letter segmenting is not too easy or too hard. Array 2024, 21, 100335.

- Olanrewaju, O.T.; Omilabu, A.A.; Nwufoh, C.V.; Adewale, F.O.; Osunade, O. An Accented Character-based Captcha System with Usability Test Using Solving Time and Response Time. IJITCS 2025, 17, 96–104.

- Alrasheed, G.; Alsuhibany, S.A. Enhancing Security of Online Interfaces: Adversarial Handwritten Arabic CAPTCHA Generation. Appl. Sci. 2025, 15, 2972.

- Hindle, A.; Godfrey, M.W.; Holt, R.C. Reverse Engineering CAPTCHAs. In Proceedings of the 2008 15th Working Conference on Reverse Engineering, Antwerp, Belgium, 15–18 October 2008; IEEE: New York, NY, USA, 2008; pp. 59–68.

- Tang, M.; Gao, H.; Zhang, Y.; Liu, Y.; Zhang, P.; Wang, P. Research on Deep Learning Techniques in Breaking Text-Based Captchas and Designing Image-Based Captcha. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2522–2537.

- Wang, P.; Gao, H.; Guo, X.; Xiao, C.; Qi, F.; Yan, Z. An Experimental Investigation of Text-based CAPTCHA Attacks and Their Robustness. ACM Comput. Surv. 2023, 55, 1–38.

- Shibbir, M.N.I.; Rahman, H.; Ferdous, M.S.; Chowdhury, F. Evaluating the security of CAPTCHAs utilized on Bangladeshi websites. Comput. Secur. 2024, 140, 103774.

- Xu, Z.; Yan, Q. Boosting the transferability of adversarial CAPTCHAs. Comput. Secur. 2024, 145, 104000.

- Nemavhola, A.; Chibaya, C.; Viriri, S. A Systematic Review of CNN Architectures, Databases, Performance Metrics, and Applications in Face Recognition. Information 2025, 16, 107.

- Nemavhola, A.; Viriri, S.; Chibaya, C. A Scoping Review of Literature on Deep Learning Techniques for Face Recognition. Hum. Behav. Emerg. Technol. 2025, 2025, 5979728.

- Rangel, G.; Cuevas-Tello, J.C.; Nunez-Varela, J.; Puente, C.; Silva-Trujillo, A.G. A Survey on Convolutional Neural Networks and Their Performance Limitations in Image Recognition Tasks. J. Sens. 2024, 2024, 2797320.

- Wanda, P.; Jie, H.J. DeepProfile: Finding fake profile in online social network using dynamic CNN. J. Inf. Secur. Appl. 2020, 52, 102465.

- Lu, S.; Huang, K.; Meraj, T.; Rauf, H.T. A novel CAPTCHA solver framework using deep skipping Convolutional Neural Networks. PeerJ Comput. Sci. 2022, 8, e879.

- Khalil, N.Z.; Kong, N.; Fricke, H. The influence of GNP on the mechanical and thermomechanical properties of epoxy adhesive: Pearson correlation matrix and heatmap application in data interpretation. Polym. Compos. 2024, 45, 8997–9018.

- Domingo, D.; Kareem, A.B.; Okwuosa, C.N.; Custodio, P.M.; Hur, J.-W. Transformer Core Fault Diagnosis via Current Signal Analysis with Pearson Correlation Feature Selection. Electronics 2024, 13, 926.

- Panda, C.; Mishra, A.K.; Dash, A.K.; Nawab, H. Predicting and explaining severity of road accident using artificial intelligence techniques, SHAP and feature analysis. Int. J. Crashworthiness 2023, 28, 186–201.

- Prendin, F.; Pavan, J.; Cappon, G.; Del Favero, S.; Sparacino, G.; Facchinetti, A. The importance of interpreting machine learning models for blood glucose prediction in diabetes: An analysis using SHAP. Sci. Rep. 2023, 13, 16865.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).