Optimized Implementation of YOLOv3-Tiny for Real-Time Image and Video Recognition on FPGA

Abstract

1. Introduction

2. Related Work

2.1. Embedded AI and Real-Time Inference

2.2. Dedicated Hardware Accelerators for Neural Network Inference

2.3. FPGA-Based Accelerators for Neural Network Inference

2.3.1. DPU-Based Solutions: Vitis AI and NVDLA

Vitis AI

NVDLA

2.3.2. Streaming Dataflow Tools

FINN

NN2FPGA

fpgaConvNet

2.4. Applications in Robotics and Drones

2.5. Gap Analysis

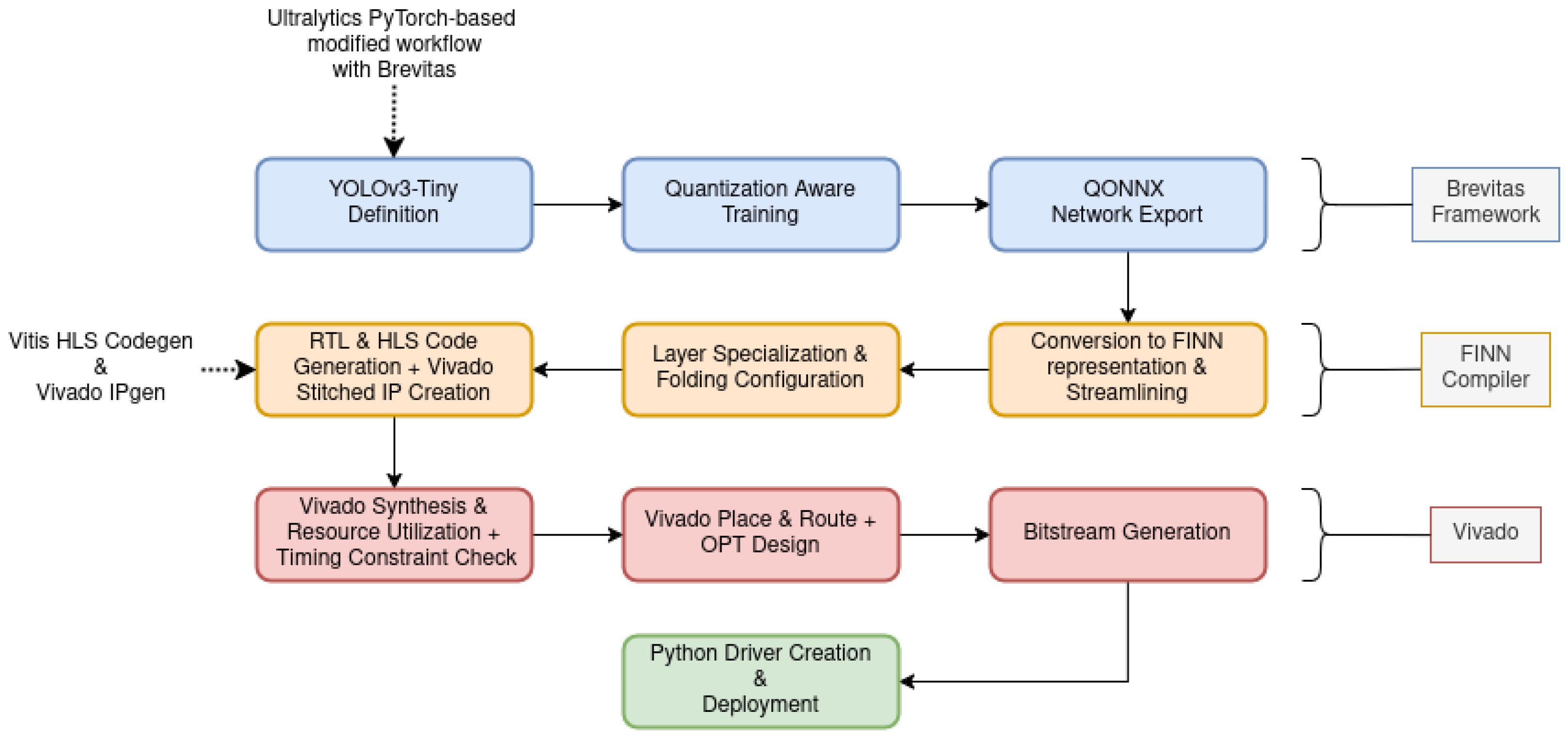

3. Materials and Methods

3.1. Model Selection and Architectural Adaptations

3.1.1. Integration of Brevitas for Quantization-Aware Training and Model Definition

3.1.2. Dataset Selection: VisDrone

3.1.3. Training Environment: Kaggle Notebooks

3.1.4. Training Parameters

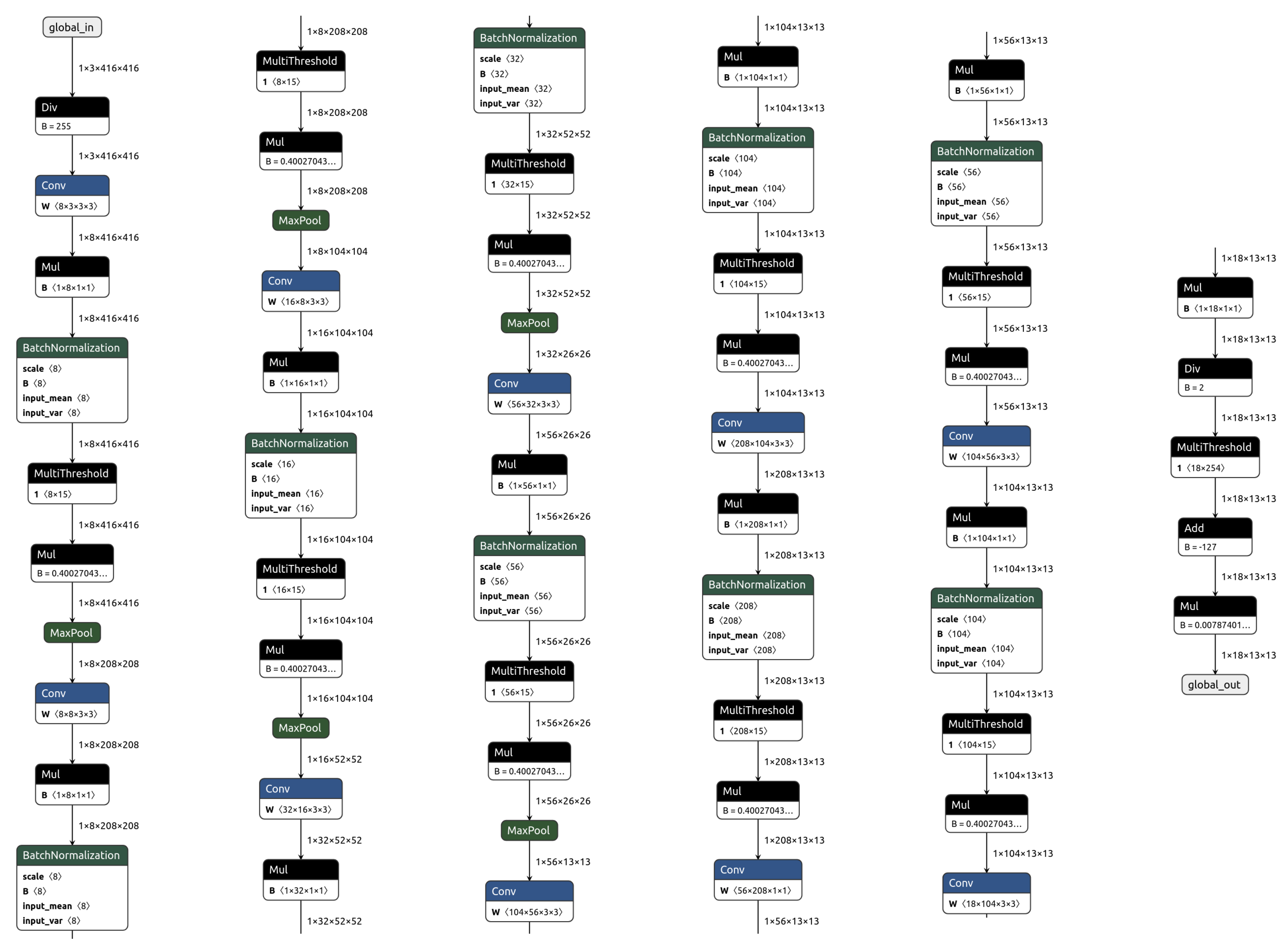

3.2. Exporting the Model to ONNX

```bash
python export.py \
    --weights /path/to/train_run/weights/best.pt \
    --img 416 \
    --include finn_onnx
```

3.3. Coral AI Board Export

3.4. FINN Transformations: From ONNX to Synthesizable IP

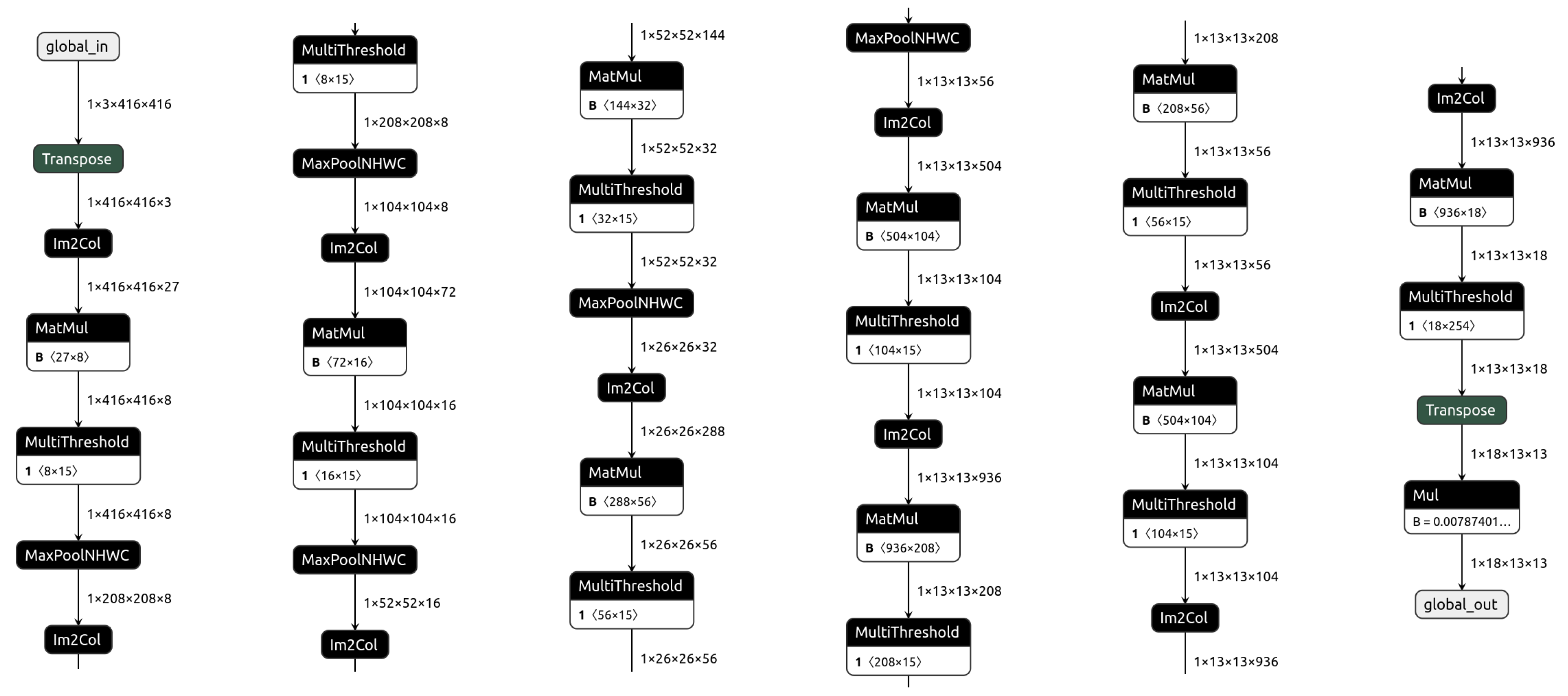

3.4.1. Transformation of ONNX Representation into a FINN Model Representation

3.4.2. Streamlining

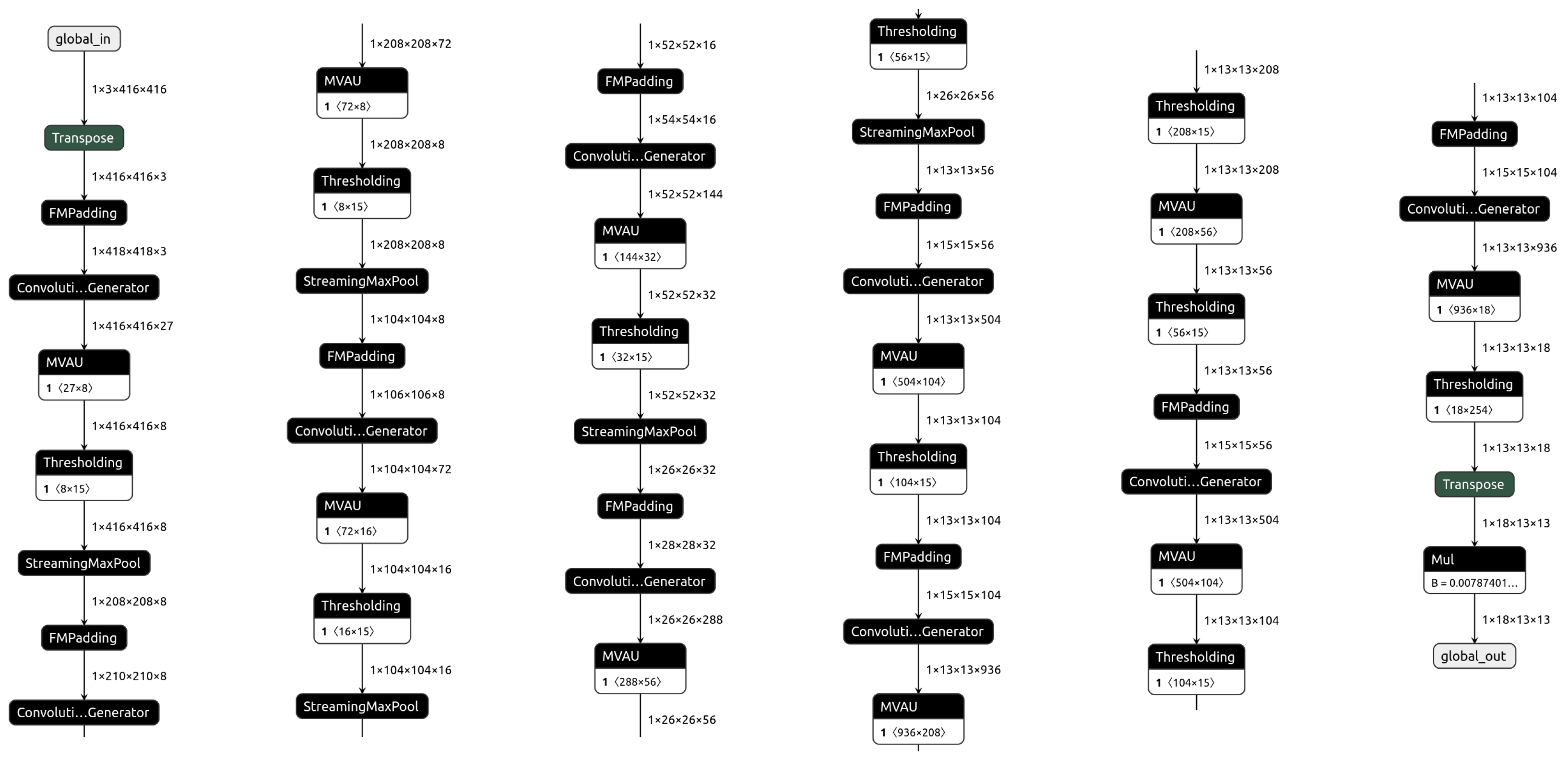

3.4.3. Hardware Mapping Transformation

3.4.4. Dataflow Partition

3.5. Layer Specialization: RTL vs. HLS Implementations

3.5.1. Matrix-Vector Unit (MVU) Implementations

3.5.2. RTL Implementation

3.5.3. HLS Implementation

3.5.4. Parallel Window Mode in SWG

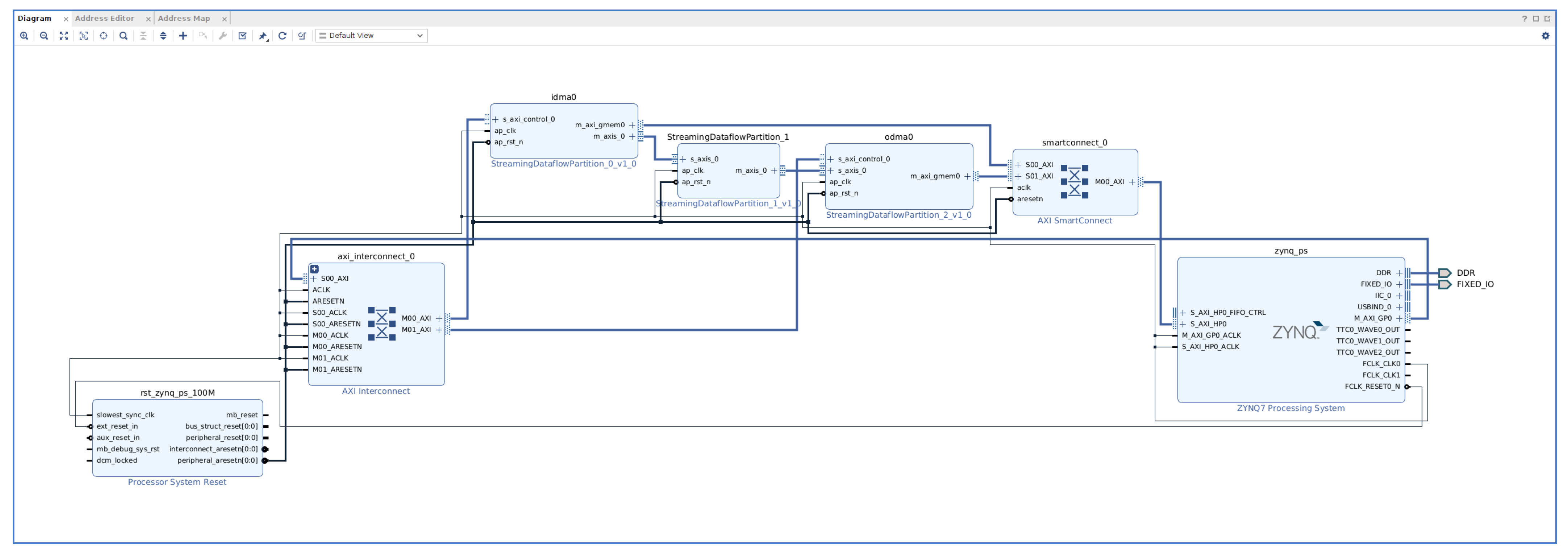

3.6. Folding Strategies

3.6.1. Maximizing Performance Within Resource Constraints

3.6.2. Equalizing Resource Allocation Across Layers

3.7. FIFO Integration and Data Buffering

3.7.1. Implementation Challenges

3.7.2. Initial FIFO Search Failure and StreamingMaxPool Issue

3.8. Weight Storage Optimization

3.9. Vitis HLS Code Generation

3.10. IP Stitching and Vivado Synthesis

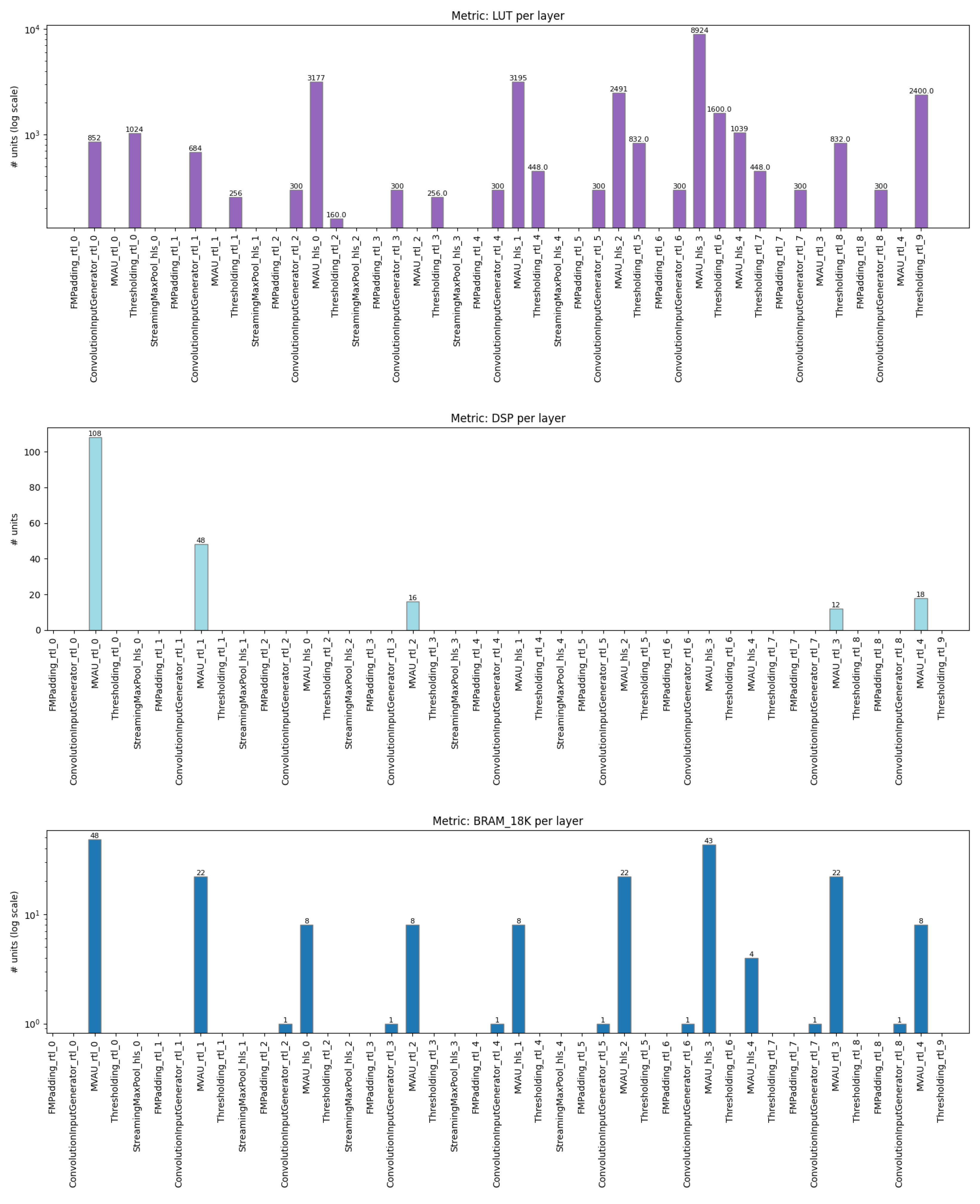

3.10.1. Vivado Synthesis and Implementation

3.10.2. PYNQ Driver Integration

- A Python class that interfaces with the IP cores via MMIO.

- Functions to handle data packing and communication with the DMA.

- A class to upload the generated bitfile onto the FPGA, specifying the target clock frequency (adjustable at runtime) and the batch size of frames to be sent to the DMA.
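For sub-byte datatypes, data packing means placing several values in each byte before the buffer is handed to the DMA. The minimal sketch below packs unsigned 4-bit values two per byte. The nibble order (first value in the low nibble), the zero-padding of odd-length inputs, and the helper names `pack_uint4` and `unpack_uint4` are hypothetical choices for illustration; the driver generated by FINN defines its own packing layout.

```python
from typing import Sequence


def pack_uint4(values: Sequence[int]) -> bytes:
    """Pack unsigned 4-bit values two per byte, first value in the low nibble.

    Hypothetical layout for illustration; odd-length input is zero-padded.
    """
    if any(v < 0 or v > 15 for v in values):
        raise ValueError("values must fit in 4 unsigned bits")
    padded = list(values) + [0] * (len(values) % 2)
    return bytes(lo | (hi << 4) for lo, hi in zip(padded[0::2], padded[1::2]))


def unpack_uint4(data: bytes, count: int) -> list[int]:
    """Inverse of pack_uint4: recover the first `count` 4-bit values."""
    out: list[int] = []
    for byte in data:
        out.extend((byte & 0x0F, byte >> 4))
    return out[:count]


packed = pack_uint4([1, 2, 15, 0, 7])
print(packed.hex())  # 210f07
```

Packing of this kind halves the number of bytes transferred for 4-bit data compared with storing one value per byte.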

3.11. Streaming Driver for TPU-like Implementations

3.11.1. Development Approach

3.11.2. Pipeline Architecture and Multithreading

- Capture Thread: Responsible for capturing video frames from a specified source (e.g., webcam or video file) using OpenCV. Captured frames are preprocessed and enqueued for inference.

- Frame Dispatcher Thread: Retrieves preprocessed frames from the capture queue, formats them into binary messages with appropriate headers, and dispatches them to the InferServer via WebSockets.

- Post-processing Thread: Collects inference results from the inference result queue, matches them with the original frames, and performs necessary post-processing tasks such as bounding box rendering.

- Display Thread: Renders the post-processed frames to the user interface, enabling the real-time visualization of inference results.
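The four-thread pipeline can be sketched with Python's standard `threading` and `queue` modules. In this minimal version, assumed for illustration, frames are plain integers, the WebSocket round trip to the InferServer is replaced by a hypothetical local stub `fake_infer`, and a `None` sentinel shuts each stage down in order.

```python
import queue
import threading

SENTINEL = None  # marks end of stream on every queue


def fake_infer(frame: int) -> int:
    """Hypothetical stand-in for the round trip to the FPGA InferServer."""
    return frame * 2


def run_pipeline(num_frames: int) -> list[tuple[int, int, int]]:
    capture_q: queue.Queue = queue.Queue(maxsize=8)  # bounded: back-pressure on capture
    result_q: queue.Queue = queue.Queue()
    display_q: queue.Queue = queue.Queue()
    originals: dict[int, int] = {}  # frame_id -> original frame, for matching results
    originals_lock = threading.Lock()
    shown: list[tuple[int, int, int]] = []

    def capture() -> None:
        for frame_id in range(num_frames):
            frame = frame_id + 100  # stands in for a captured, preprocessed image
            with originals_lock:
                originals[frame_id] = frame
            capture_q.put((frame_id, frame))
        capture_q.put(SENTINEL)

    def dispatch() -> None:
        while (item := capture_q.get()) is not SENTINEL:
            frame_id, frame = item
            result_q.put((frame_id, fake_infer(frame)))  # real client: send over WebSocket
        result_q.put(SENTINEL)

    def postprocess() -> None:
        while (item := result_q.get()) is not SENTINEL:
            frame_id, result = item
            with originals_lock:
                frame = originals.pop(frame_id)  # match result to its original frame
            display_q.put((frame_id, frame, result))  # real client: draw bounding boxes
        display_q.put(SENTINEL)

    def display() -> None:
        while (item := display_q.get()) is not SENTINEL:
            shown.append(item)  # real client: render to the user interface

    threads = [threading.Thread(target=f) for f in (capture, dispatch, postprocess, display)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shown


print(run_pipeline(3))  # [(0, 100, 200), (1, 101, 202), (2, 102, 204)]
```

Each stage blocks only on its own input queue, so a slow stage throttles the stages upstream of it; bounding the capture queue is one way to cap memory use when inference falls behind the camera.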

3.11.3. Communication Protocol

- JSON Messages: Utilized for control commands such as model loading (load_model) and status inquiries (status). These messages enable on-the-fly configuration and monitoring of the InferServer.

- Binary Messages: Employed for transmitting inference requests and results. Binary messages consist of a custom header followed by payload data, facilitating efficient and compact data exchange.

3.11.4. JSON Command Handling

- load_model: Initiates the loading of a new neural network model onto the FPGA board. The model is provided in a custom .fnn format, which bundles everything needed for configuration and deployment: the bitstream, the hardware handoff file, a dictionary defining the input and output shapes, and model metadata such as name and version.

- status: Retrieves the current status of the server, including model loading state, queue sizes, and active client connections.
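Dispatching the two JSON commands can be sketched as follows, assuming each control message is a JSON object whose `command` field names the operation. The field names, the `ControlState` class, and the reply format are hypothetical, and the `load_model` branch only records the model name instead of programming the board.

```python
import json
from typing import Any, Optional


class ControlState:
    """Hypothetical server state reported by the `status` command."""

    def __init__(self) -> None:
        self.model_loaded = False
        self.model_name: Optional[str] = None
        self.queue_sizes = {"requests": 0, "results": 0}
        self.clients = 0


def reply(**fields: Any) -> str:
    return json.dumps(fields)


def handle_json(raw: str, state: ControlState) -> str:
    """Dispatch one JSON control message and return a JSON reply string."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return reply(ok=False, error="invalid JSON")
    if not isinstance(msg, dict):
        return reply(ok=False, error="expected a JSON object")

    command = msg.get("command")  # field name is an assumption
    if command == "status":
        return reply(ok=True, model_loaded=state.model_loaded,
                     model_name=state.model_name,
                     queue_sizes=state.queue_sizes, clients=state.clients)
    if command == "load_model":
        # A real server would decode and verify the .fnn payload and program
        # the FPGA here; this sketch only records the model name.
        state.model_name = msg.get("name", "unnamed")
        state.model_loaded = True
        return reply(ok=True, loaded=state.model_name)
    return reply(ok=False, error=f"unknown command: {command}")


state = ControlState()
print(handle_json('{"command": "load_model", "name": "yolov3-tiny"}', state))
print(handle_json('{"command": "status"}', state))
```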

3.11.5. Binary Inference Requests and Responses

- Header (16 bytes):

  - frame_id (4 bytes, integer): Unique identifier for the frame.

  - width (4 bytes, integer): Width of the frame.

  - height (4 bytes, integer): Height of the frame.

  - channels (2 bytes, short): Number of color channels.

  - dtype_code (2 bytes, short): Data type code indicating the format of the payload.

- Payload: Raw image data bytes.
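The 16-byte header maps directly onto Python's `struct` module. The sketch below assumes little-endian byte order, signed integer types, and a hypothetical `dtype_code` of 0 for 8-bit pixel data, since the byte order and the code values are not specified above; `encode_request` and `decode_request` are illustrative names.

```python
import struct

# frame_id, width, height: 4-byte ints; channels, dtype_code: 2-byte shorts.
# Little-endian ("<") with no padding is an assumption; it yields exactly 16 bytes.
HEADER = struct.Struct("<iiihh")
DTYPE_UINT8 = 0  # hypothetical code for 8-bit unsigned pixel data


def encode_request(frame_id: int, width: int, height: int, channels: int,
                   payload: bytes, dtype_code: int = DTYPE_UINT8) -> bytes:
    """Build a binary inference request: 16-byte header followed by raw pixels."""
    expected = width * height * channels  # valid for one byte per element
    if dtype_code == DTYPE_UINT8 and len(payload) != expected:
        raise ValueError(f"payload is {len(payload)} bytes, expected {expected}")
    return HEADER.pack(frame_id, width, height, channels, dtype_code) + payload


def decode_request(message: bytes) -> tuple[int, int, int, int, int, bytes]:
    """Split a message back into its five header fields and the payload."""
    frame_id, width, height, channels, dtype_code = HEADER.unpack_from(message, 0)
    return frame_id, width, height, channels, dtype_code, message[HEADER.size:]


msg = encode_request(7, 416, 416, 3, bytes(416 * 416 * 3))
print(HEADER.size, len(msg))  # 16 519184
```

For a 416 × 416 × 3 uint8 frame the header adds only 16 bytes to a 519,168-byte payload.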

3.11.6. Inference Processing Workflow

3.11.7. Frame Capture and Preprocessing

3.11.8. Dispatching Inference Requests

3.11.9. Batch Processing on the Server

3.11.10. Post-Processing and Result Visualization

3.11.11. Dynamic Board Configuration Using .fnn Format

3.11.12. Structure of the .fnn File

- model.bit: The bitstream file for FPGA configuration.

- model.hwh: The hardware handoff file detailing the FPGA’s hardware configuration.

- model.pkl: A pickle file containing model shape dictionaries and other metadata.

- model_info.json: A JSON file providing essential information such as maximum clock frequency, model name, and the dataset on which the model was trained.
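The four files above can be bundled as sketched below. The sketch assumes the .fnn container is a ZIP archive that is base64-encoded so it can travel inside a JSON `load_model` message; the ZIP container, the helper name `build_fnn`, and the `model_info.json` keys are assumptions, and the file contents are placeholders.

```python
import base64
import io
import json
import zipfile

REQUIRED_FILES = ("model.bit", "model.hwh", "model.pkl", "model_info.json")


def build_fnn(files: dict[str, bytes]) -> str:
    """Bundle the model files into a base64-encoded .fnn string.

    Assumes the .fnn container is a ZIP archive; refuses incomplete bundles.
    """
    missing = [name for name in REQUIRED_FILES if name not in files]
    if missing:
        raise ValueError(f"missing files: {missing}")
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    return base64.b64encode(buffer.getvalue()).decode("ascii")


# Placeholder contents and hypothetical metadata keys, for illustration only
info = {"name": "yolov3-tiny", "max_clock_mhz": 200, "dataset": "VisDrone"}
fnn = build_fnn({
    "model.bit": b"<bitstream bytes>",
    "model.hwh": b"<hardware handoff XML>",
    "model.pkl": b"<pickled shape dictionaries>",
    "model_info.json": json.dumps(info).encode(),
})
```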

3.11.13. Loading and Verifying the Model

- Model Extraction: Decodes the base64-encoded .fnn file and extracts its contents into a designated directory.

- Integrity Verification: Checks for the presence of all required files (model.bit, model.hwh, model.pkl, model_info.json) to confirm that the model archive is complete before any of its contents are used.

- Metadata Parsing: Loads and verifies the model_info.json file to extract configuration parameters such as clock frequency and model metadata.

- FPGA Configuration: Utilizes the PYNQ driver to load the bitstream onto the FPGA, configuring the hardware accelerator with the new model.

- Queue Initialization: Initializes the inference request queue and starts the inference worker thread to begin processing incoming data.
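The loading steps can be condensed into a sketch that uses only the standard library, assuming the .fnn file is a base64-encoded ZIP archive (the container format is an assumption). FPGA programming and worker start-up are left as comments because they need the board, and the required `name` key in `model_info.json` is a hypothetical check; `load_fnn` is an illustrative name.

```python
import base64
import io
import json
import tempfile
import zipfile
from pathlib import Path
from typing import Optional

REQUIRED_FILES = ("model.bit", "model.hwh", "model.pkl", "model_info.json")


def load_fnn(fnn_b64: str, target_dir: Optional[Path] = None) -> dict:
    """Extract, verify and parse an .fnn archive; return model_info.json contents.

    Assumes the archive is a base64-encoded ZIP. Steps 4 and 5 need the board
    and are only indicated by comments.
    """
    if target_dir is None:
        target_dir = Path(tempfile.mkdtemp(prefix="fnn_"))

    # 1. Model extraction (zipfile strips absolute and ".." member path parts)
    raw = base64.b64decode(fnn_b64, validate=True)
    with zipfile.ZipFile(io.BytesIO(raw)) as archive:
        archive.extractall(target_dir)

    # 2. Integrity verification: all required files must be present
    missing = [name for name in REQUIRED_FILES if not (target_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"incomplete .fnn archive, missing: {missing}")

    # 3. Metadata parsing (the required "name" key is a hypothetical check)
    info = json.loads((target_dir / "model_info.json").read_text())
    if "name" not in info:
        raise ValueError("model_info.json lacks a 'name' field")

    # 4. FPGA configuration, e.g. pynq.Overlay(str(target_dir / "model.bit"))
    # 5. Queue initialization and start of the inference worker thread
    return info
```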

4. Results

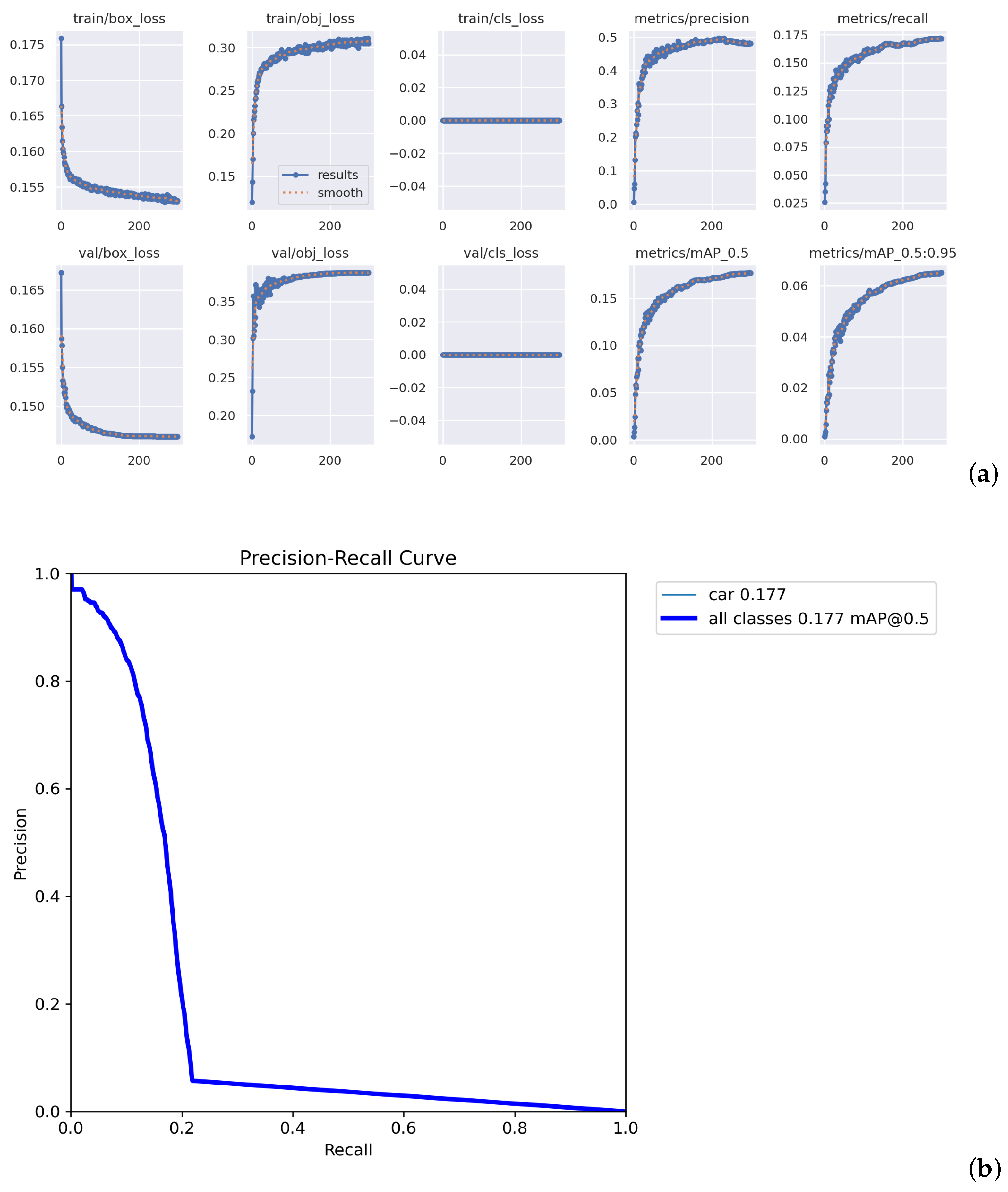

4.1. Network Training Results

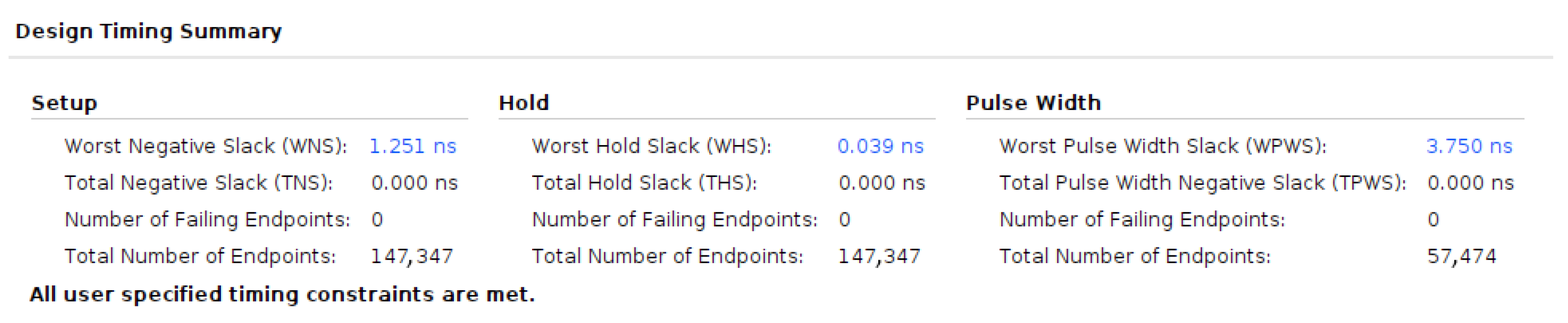

4.2. FPGA Implementation Results

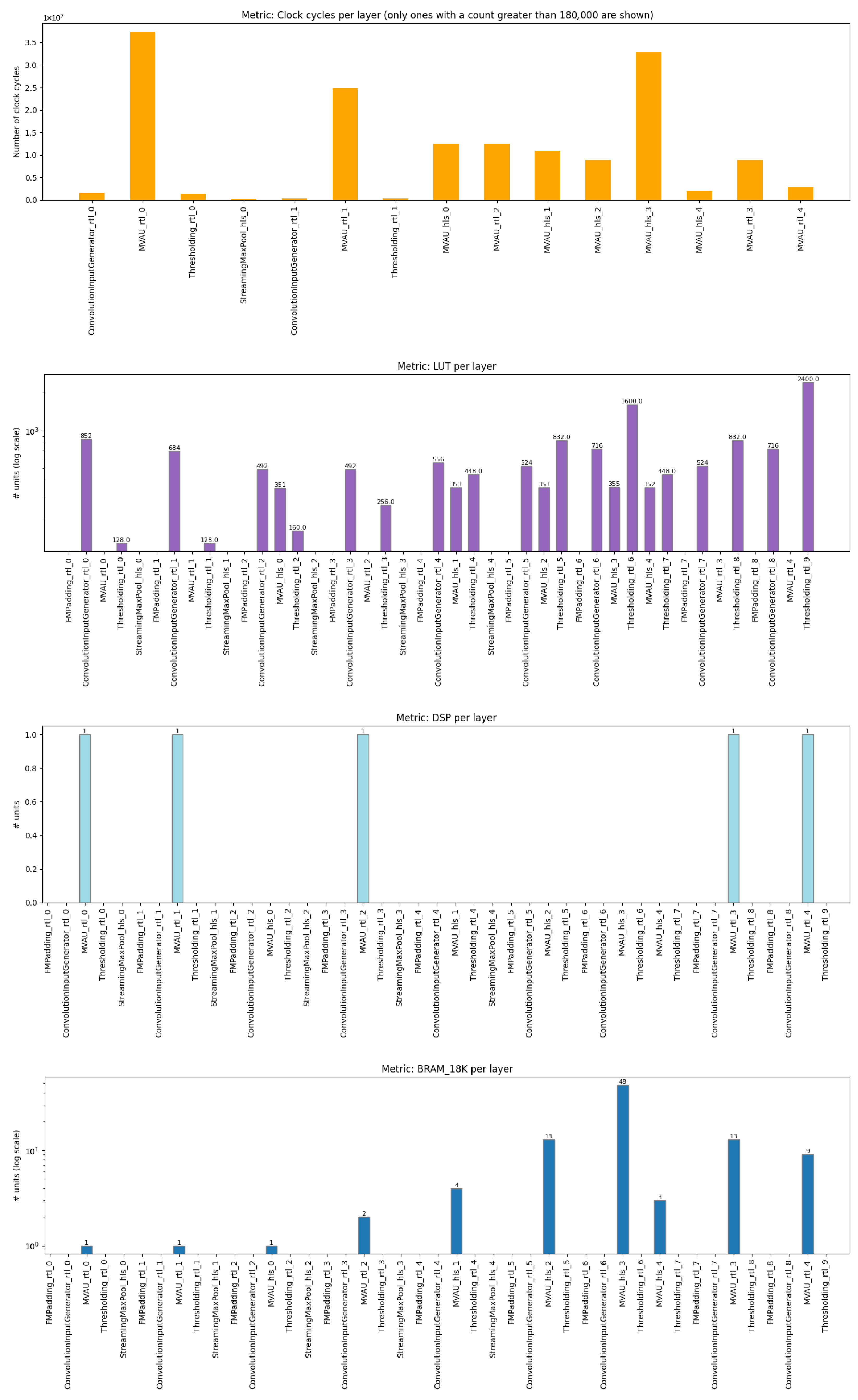

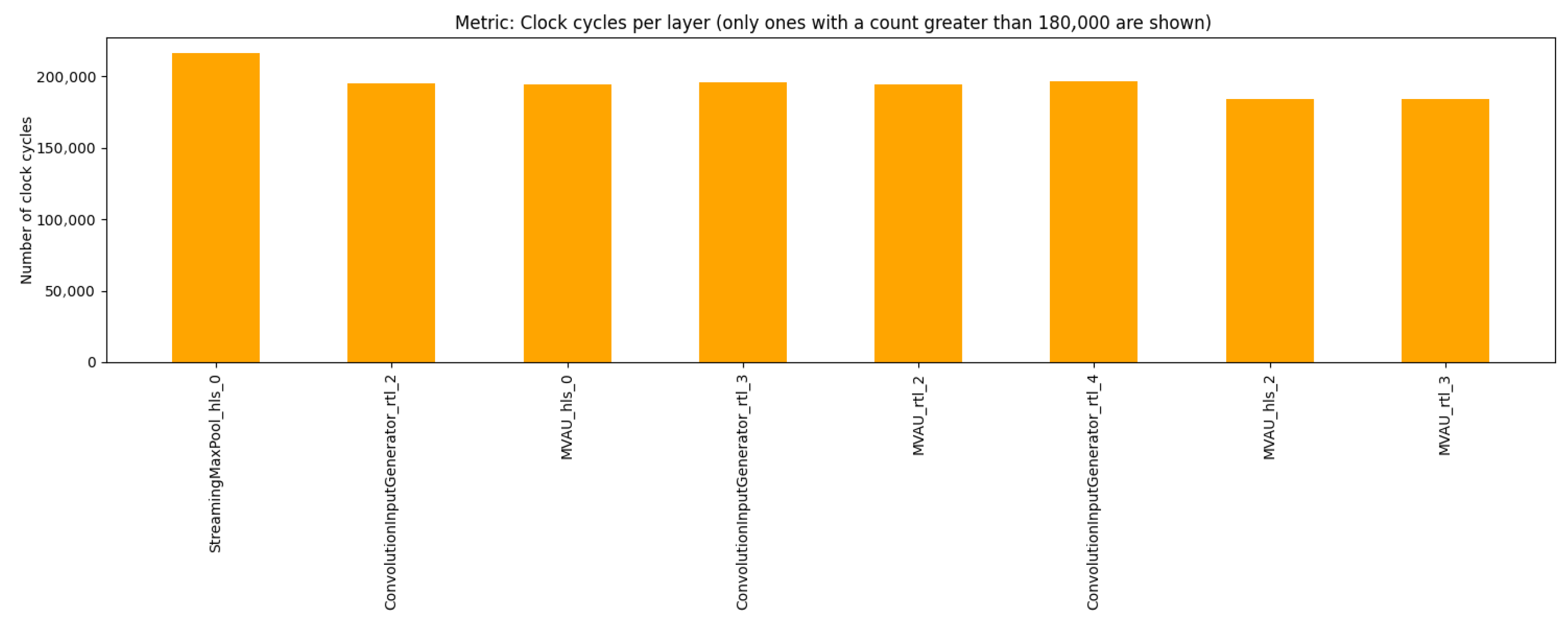

4.2.1. Synthesis Reports

4.2.2. RTL Simulation

4.2.3. MAC Operations

4.2.4. PL Resource Utilization

4.2.5. Performance Estimation, Simulation, and Evaluation

4.2.6. Frequency Scaling Performance Variations

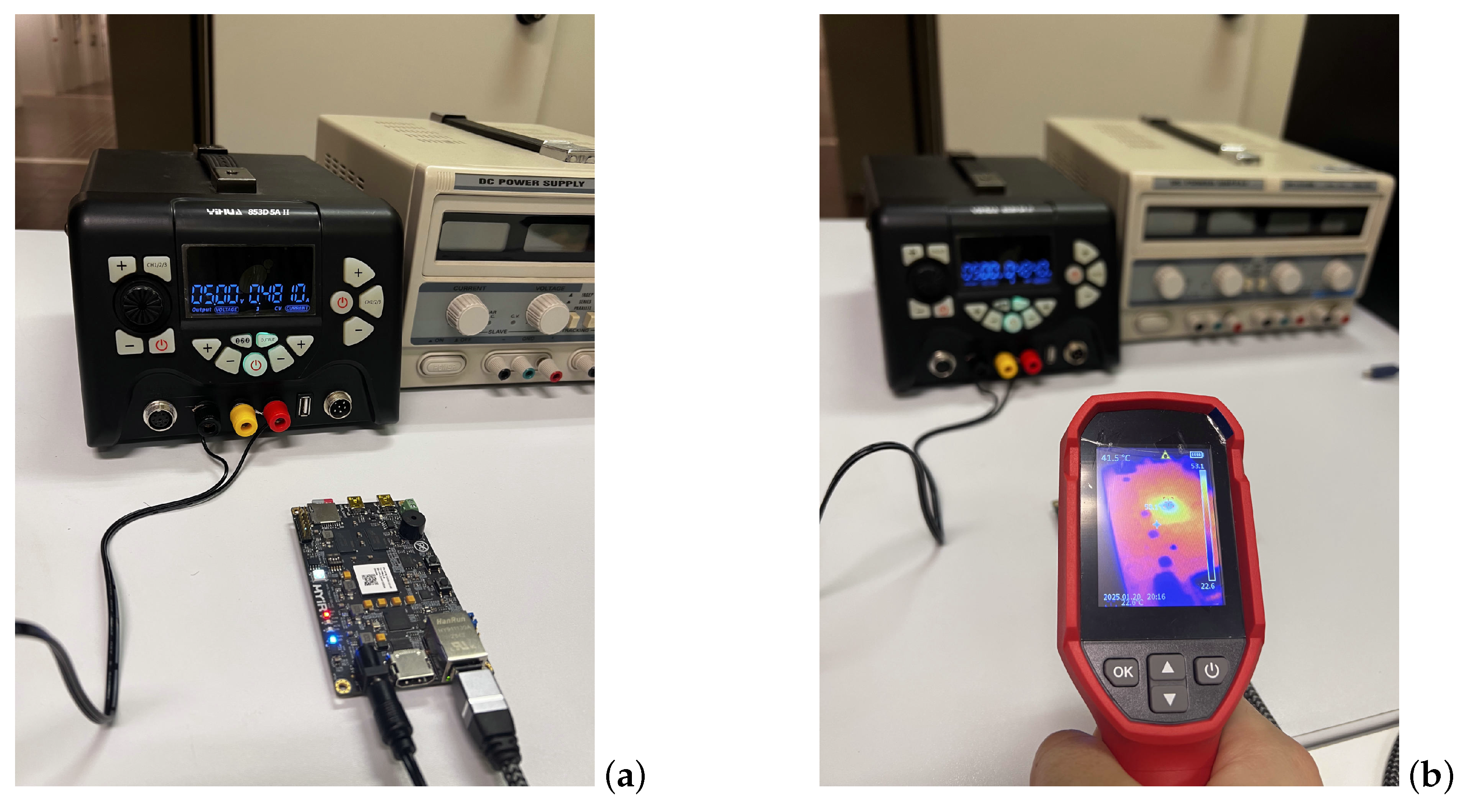

4.3. Power Consumption

4.3.1. Vivado Power Estimation

4.3.2. Measured Power Consumption

4.4. End-to-End System Throughput and Latency

4.5. Real-World Application Results

5. Discussion

5.1. Comparison with Coral TPU

5.2. Comparison with Existing YOLOv3-Tiny FPGA Implementations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AOI | Area of Interest |
| ASIC | Application-Specific Integrated Circuit |
| AXI | Advanced eXtensible Interface |
| BN | Batch Normalization |
| BNN | Binarized Neural Network |
| CIG | Convolution Input Generator |
| CLB | Configurable Logic Block |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| CNN | Convolutional Neural Network |
| COCO | Common Objects in Context |
| CPU | Central Processing Unit |
| CUDA | Compute Unified Device Architecture |
| DL | Deep Learning |
| DMA | Direct Memory Access |
| DNN | Deep Neural Network |
| DPU | Deep-Learning Processing Unit |
| DSP | Digital Signal Processing |
| FPGA | Field-Programmable Gate Array |
| FPS | Frames per Second |
| GPU | Graphics Processing Unit |
| HLS | High-Level Synthesis |
| HTTP | HyperText Transfer Protocol |
| IMU | Inertial Measurement Unit |
| IoT | Internet of Things |
| IoU | Intersection over Union |
| IP | Intellectual Property |
| ISA | Instruction Set Architecture |
| LUT | Lookup Table |
| MAC | Multiply-Accumulate |
| mAP | Mean Average Precision |
| MMIO | Memory-Mapped I/O |
| MVU | Matrix-Vector Multiplication Unit |
| NMS | Non-Maximum Suppression |
| NPU | Neural Processing Unit |
| NVDLA | NVIDIA Deep Learning Accelerator |
| ONNX | Open Neural Network Exchange |
| OOM | Out of Memory |
| PE | Processing Element |
| PL | Programmable Logic |
| PS | Processing System |
| PTQ | Post-Training Quantization |
| QAT | Quantization-Aware Training |
| QONNX | Quantized Open Neural Network Exchange |
| RTL | Register Transfer Level |
| RTOS | Real-Time Operating System |
| SIMD | Single Instruction, Multiple Data |
| SoC | System on a Chip |
| SWG | Sliding Window Generator |
| TCP | Transmission Control Protocol |
| TNS | Total Negative Slack |
| TOPS | Tera Operations Per Second |
| TPU | Tensor Processing Unit |
| WNS | Worst Negative Slack |
| XSA | Xilinx Support Archive |
| YOLO | You Only Look Once |

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

- Chen, J.; Ran, X. Deep Learning With Edge Computing: A Review. Proc. IEEE 2019, 107, 1655–1674.

- Lane, N.D.; Bhattacharya, S.; Mathur, A.; Georgiev, P.; Forlivesi, C.; Kawsar, F. Squeezing Deep Learning into Mobile and Embedded Devices. IEEE Pervasive Comput. 2017, 16, 82–88.

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D.; Agrawal, G.; Bajwa, R.; Bates, S.; Bhatia, S.; Boden, N.; Borchers, A.; et al. In-datacenter performance analysis of a tensor processing unit. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; pp. 1–12.

- Umuroglu, Y.; Fraser, N.J.; Gambardella, G.; Blott, M.; Leong, P.; Jahre, M.; Vissers, K. FINN: A Framework for Fast, Scalable Binarized Neural Network Inference. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 65–74.

- Katkuri, A.V.R.; Madan, H.; Khatri, N.; Abdul-Qawy, A.S.H.; Patnaik, K.S. Autonomous UAV navigation using deep learning-based computer vision frameworks: A systematic literature review. Array 2024, 23, 100361.

- Rejeb, A.; Abdollahi, A.; Rejeb, K.; Treiblmaier, H. Drones in agriculture: A review and bibliometric analysis. Comput. Electron. Agric. 2022, 198, 107017.

- Han, S.; Liu, X.; Mao, H.; Pu, J.; Pedram, A.; Horowitz, M.A.; Dally, W.J. EIE: Efficient Inference Engine on Compressed Deep Neural Network. In Proceedings of the 2016 ACM/IEEE 43rd Annual International Symposium on Computer Architecture (ISCA), Seoul, Republic of Korea, 18–22 June 2016; pp. 243–254.

- Lamberti, L.; Bellone, L.; Macan, L.; Natalizio, E.; Conti, F.; Palossi, D.; Benini, L. Distilling Tiny and Ultra-fast Deep Neural Networks for Autonomous Navigation on Nano-UAVs. IEEE Internet Things J. 2024, 11, 33269–33281.

- Lamberti, L.; Niculescu, V.; Barciś, M.; Bellone, L.; Natalizio, E.; Benini, L.; Palossi, D. Tiny-PULP-Dronets: Squeezing Neural Networks for Faster and Lighter Inference on Multi-Tasking Autonomous Nano-Drones. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Incheon, Republic of Korea, 13–15 June 2022; pp. 287–290.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018.

- Blott, M.; Preußer, T.B.; Fraser, N.J.; Gambardella, G.; O’Brien, K.; Umuroglu, Y.; Leeser, M.; Vissers, K. FINN-R: An end-to-end deep-learning framework for fast exploration of quantized neural networks. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2018, 11, 1–23.

- Calì, R. Performance-Focused Implementation of Neural Networks for Real-Time Image and Video Recognition on Hybrid FPGA-CPU Architectures. Available online: https://tesi.univpm.it/handle/20.500.12075/20897 (accessed on 29 August 2025).

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 122–138.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding. In Proceedings of the International Conference on Learning Representations (ICLR), San Juan, Puerto Rico, 2–4 May 2016.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2704–2713.

- Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized Neural Networks: Training Deep Neural Networks with Weights and Activations Constrained to +1 or −1. arXiv 2016.

- Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 525–542.

- Zhou, S.; Wu, Y.; Ni, Z.; Zhou, X.; Wen, H.; Zou, Y. DoReFa-Net: Training low bitwidth convolutional neural networks with low bitwidth gradients. arXiv 2017, arXiv:1606.06160.

- Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J.S. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105, 2295–2329.

- NVIDIA. Jetson GPU Family, Enables Powerful Computational Capabilities for Embedded Solutions. Available online: https://www.nvidia.com/it-it/autonomous-machines/embedded-systems/ (accessed on 29 August 2025).

- STMicroelectronics. STM32N6: Our Very Own NPU in the Most Powerful STM32 to Inaugurate a New Era of Computing. Available online: https://blog.st.com/stm32n6/ (accessed on 29 August 2025).

- Google. Google’s Edge TPU family, Coral AI. Available online: https://coral.ai/ (accessed on 29 August 2025).

- Hailo. Hailo-8 M.2 AI Acceleration Module. Available online: https://hailo.ai/products/ai-accelerators/hailo-8-m2-ai-acceleration-module/ (accessed on 29 August 2025).

- Zhang, C.; Li, P.; Sun, G.; Guan, Y.; Xiao, B.; Cong, J. Optimizing FPGA-based accelerator design for deep convolutional neural networks. In Proceedings of the ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA), Monterey, CA, USA, 22–24 February 2015; pp. 161–170.

- Qiu, J.; Wang, J.; Yao, S.; Guo, K.; Li, B.; Zhou, E.; Yu, J.; Tang, T.; Xu, N.; Song, S.; et al. Going deeper with embedded FPGA platform for convolutional neural networks. In Proceedings of the ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA), Monterey, CA, USA, 21–23 February 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 26–35.

- Nurvitadhi, E.; Venkatesh, G.; Marr, J.; Huang, R.; Sim, J.; Esmaeilzadeh, H. Can FPGAs beat GPUs in accelerating deep neural networks? In Proceedings of the ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA), Monterey, CA, USA, 22–24 February 2017; pp. 5–14.

- Venieris, S.I.; Bouganis, C.S. fpgaConvNet: A Framework for Mapping Convolutional Neural Networks on FPGAs. In Proceedings of the 2016 IEEE 24th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Washington, DC, USA, 1–3 May 2016; pp. 40–47.

- Venieris, S.I.; Bouganis, C.S. fpgaConvNet: A toolflow for mapping diverse convolutional neural networks on FPGAs. arXiv 2017, arXiv:1711.08740.

- Liu, Z.; Dou, Y.; Jiang, J.; Xu, J.; Li, S.; Zhou, Y.; Xu, Y. Throughput-Optimized FPGA Accelerator for Deep Convolutional Neural Networks. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2017, 10, 1–23.

- Li, R. Dataflow & Tiling Strategies in Edge-AI FPGA Accelerators: A Comprehensive Literature Review. arXiv 2025.

- Yan, F.; Koch, A.; Sinnen, O. A survey on FPGA-based accelerator for ML models. arXiv 2024.

- Chen, H.; Hao, C. DGNN-Booster: A Generic FPGA Accelerator Framework For Dynamic Graph Neural Network Inference. In Proceedings of the 2023 IEEE 31st Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Marina Del Rey, CA, USA, 8–11 May 2023; pp. 195–201.

- Carpegna, A.; Savino, A.; Carlo, S.D. Spiker+: A Framework for the Generation of Efficient Spiking Neural Networks FPGA Accelerators for Inference at the Edge. IEEE Trans. Emerg. Top. Comput. 2025, 13, 784–798.

- Nag, S.; Susskind, Z.; Arora, A.; Bacellar, A.T.L.; Dutra, D.L.C.; Miranda, I.D.S.; Kailas, K.; John, E.B.; Breternitz, M.; Lima, P.M.V.; et al. LogicNets vs. ULEEN: Comparing two novel high throughput edge ML inference techniques on FPGA. In Proceedings of the 2024 IEEE 67th International Midwest Symposium on Circuits and Systems (MWSCAS), Springfield, MA, USA, 11–14 August 2024; pp. 1206–1211.

- Jiang, Y.; Vaicaitis, A.; Dooley, J.; Leeser, M. Efficient Neural Networks on the Edge with FPGAs by Optimizing an Adaptive Activation Function. Sensors 2024, 24, 1829.

- Bosio, R.; Minnella, F.; Urso, T.; Casu, M.R.; Lavagno, L.; Lazarescu, M.T.; Pasini, P. NN2FPGA: Optimizing CNN Inference on FPGAs With Binary Integer Programming. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2024, 44, 1807–1818.

- Xilinx. Xilinx DPU Datasheet. Available online: https://docs.amd.com/r/en-US/pg338-dpu (accessed on 29 August 2025).

- NVIDIA. NVIDIA Deep Learning Accelerator (NVDLA). Available online: https://nvdla.org/ (accessed on 29 August 2025).

- Xilinx. Vitis AI: Support for Zynq-7000 Devices. Available online: https://adaptivesupport.amd.com/s/article/76742?language=en_US (accessed on 29 August 2025).

- Cesarano, G. FPGA Implementation of a Deep Learning Inference Accelerator for Autonomous Vehicles. Master’s Thesis, Politecnico di Torino, Turin, Italy, 2018.

- Wang, L. ZYNQ-NVDLA. Available online: https://github.com/LeiWang1999/ZYNQ-NVDLA?tab=readme-ov-file (accessed on 29 August 2025).

- Marino, V. Hardware Acceleration of AdderNet via High-Level Synthesis for FPGA. Master’s Thesis, Politecnico di Torino, Turin, Italy, 2024.

- Montgomerie-Corcoran, A.; Toupas, P.; Yu, Z.; Bouganis, C.S. SATAY: A Streaming Architecture Toolflow for Accelerating YOLO Models on FPGA Devices. arXiv 2023.

- Toupas, P.; Yu, Z.; Bouganis, C.S.; Tzovaras, D. SMOF: Streaming Modern CNNs on FPGAs with Smart Off-Chip Eviction. arXiv 2024.

- Kadi, M.A.; Rudolph, P.; Gohringer, D.; Hubner, M. Dynamic and partial reconfiguration of Zynq 7000 under Linux. In Proceedings of the 2013 International Conference on Reconfigurable Computing and FPGAs (ReConFig), Cancun, Mexico, 9–11 December 2013; pp. 1–5, ISSN 2325-6532.

- Ma, Y.; Xu, Q.; Song, Z. Resource-Efficient Optimization for FPGA-Based Convolution Accelerator. Electronics 2023, 12, 4333.

- Pistellato, M.; Bergamasco, F.; Bigaglia, G.; Gasparetto, A.; Albarelli, A.; Boschetti, M.; Passerone, R. Quantization-Aware NN Layers with High-throughput FPGA Implementation for Edge AI. Sensors 2023, 23, 4667.

- Fraga-Lamas, P.; Ramos, L.; Mondéjar-Guerra, V.; Fernández-Caramés, T.M. A Review on IoT Deep Learning UAV Systems for Autonomous Obstacle Detection and Collision Avoidance. Remote Sens. 2019, 11, 2144.

- Lahmeri, M.A.; Kishk, M.A.; Alouini, M.S. Artificial Intelligence for UAV-Enabled Wireless Networks: A Survey. IEEE Open J. Commun. Soc. 2021, 2, 1015–1040.

- Zhou, L.; Yin, H.; Zhao, H.; Wei, J.; Hu, D.; Leung, V.C. A Comprehensive Survey of Artificial Intelligence Applications in UAV-Enabled Wireless Networks. Digit. Commun. Netw. 2024.

- Maqueda, A.I.; Loquercio, A.; Gallego, G.; García, N.; Scaramuzza, D. Event-based vision meets deep learning on steering prediction for self-driving cars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5419–5427.

- Deng, J.; Shi, Z.; Zhuo, C. Energy-Efficient Real-Time UAV Object Detection on Embedded Platforms. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2020, 39, 3123–3127.

- Chen, T.; Du, Z.; Sun, N.; Wang, J.; Wu, C.; Chen, Y.; Temam, O. DianNao: A small-footprint high-throughput accelerator for ubiquitous machine-learning. SIGARCH Comput. Archit. News 2014, 42, 269–284.

- Choi, K.; Sobelman, G.E. An Efficient CNN Accelerator for Low-Cost Edge Systems. ACM Trans. Embed. Comput. Syst. 2022, 21, 1–20.

- Wang, Y.; Liao, Y.; Yang, J.; Wang, H.; Zhao, Y.; Zhang, C.; Xiao, B.; Xu, F.; Gao, Y.; Xu, M.; et al. An FPGA-based online reconfigurable CNN edge computing device for object detection. Microelectron. J. 2023, 137, 105805.

- Procaccini, M.; Sahebi, A.; Giorgi, R. A survey of graph convolutional networks (GCNs) in FPGA-based accelerators. J. Big Data 2024, 11, 163.

- Calì, R. Thesis Code Repository. Available online: https://github.com/sn0wst0rm/FINN-VisDrone-YOLO (accessed on 29 August 2025).

- Günay, B.; Okcu, S.B.; Bilge, H.Ş. LPYOLO: Low Precision YOLO for Face Detection on FPGA. arXiv 2022.

- Okcu, S.B. Low Precision (Quantized) YOLOv5, Modified Version of Ultralytics YOLOv5 Repo, Implementing Quantization Modules for PyTorch Using Brevitas. 2024. Available online: https://github.com/sefaburakokcu/quantized-yolov5 (accessed on 29 August 2025).

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

- Kaggle. Kaggle: Your Machine Learning and Data Science Community. Available online: https://www.kaggle.com/ (accessed on 29 August 2025).

- Roeder, L. Netron, Visualizer for Neural Network, Deep Learning and Machine Learning Models. Available online: https://netron.app/ (accessed on 29 August 2025).

- feranick. Edge TPU Runtime Library (Libedgetpu). 2025. Available online: https://github.com/feranick/libedgetpu (accessed on 29 August 2025).

- Mrahorovic, M. Multi-Packed DSPs for MVU/VVU Layers · Xilinx/Finn · Discussion #1021. Available online: https://github.com/Xilinx/finn/discussions/1021 (accessed on 29 August 2025).

- Xilinx Research Labs. Convolution Input Generator—FINN Documentation. Available online: https://finn.readthedocs.io/en/latest/internals.html#rtl-convolutioninputgenerator (accessed on 29 August 2025).

- Borras, H. Questions About the FIFO Depth Between Layers · Xilinx/Finn · Discussion #383. Available online: https://github.com/Xilinx/finn/discussions/383#discussioncomment-1449610 (accessed on 29 August 2025).

- Veripool. Verilator Software. Available online: https://www.veripool.org/verilator/ (accessed on 29 August 2025).

| Layer Type | Input | Output | Kernel | Stride | Activation |
|---|---|---|---|---|---|
| QuantConv | 416 × 416 × 3 | 416 × 416 × 8 | 3 × 3 | 1 | QuantReLU |
| MaxPooling | 416 × 416 × 8 | 208 × 208 × 8 | 2 × 2 | 2 | - |
| QuantConv | 208 × 208 × 8 | 208 × 208 × 8 | 3 × 3 | 1 | QuantReLU |
| MaxPooling | 208 × 208 × 8 | 104 × 104 × 8 | 2 × 2 | 2 | - |
| QuantConv | 104 × 104 × 8 | 104 × 104 × 16 | 3 × 3 | 1 | QuantReLU |
| MaxPooling | 104 × 104 × 16 | 52 × 52 × 16 | 2 × 2 | 2 | - |
| QuantConv | 52 × 52 × 16 | 52 × 52 × 32 | 3 × 3 | 1 | QuantReLU |
| MaxPooling | 52 × 52 × 32 | 26 × 26 × 32 | 2 × 2 | 2 | - |
| QuantConv | 26 × 26 × 32 | 26 × 26 × 56 | 3 × 3 | 1 | QuantReLU |
| MaxPooling | 26 × 26 × 56 | 13 × 13 × 56 | 2 × 2 | 2 | - |
| QuantConv | 13 × 13 × 56 | 13 × 13 × 104 | 3 × 3 | 1 | QuantReLU |
| MaxPooling | 13 × 13 × 104 | 13 × 13 × 104 | 2 × 2 | 1 | - |
| QuantConv | 13 × 13 × 104 | 13 × 13 × 208 | 3 × 3 | 1 | QuantReLU |
| QuantConv | 13 × 13 × 208 | 13 × 13 × 56 | 1 × 1 | 1 | QuantReLU |
| QuantConv | 13 × 13 × 56 | 13 × 13 × 104 | 3 × 3 | 1 | QuantReLU |
| QuantConv | 13 × 13 × 104 | 13 × 13 × 18 | 3 × 3 | 1 | QuantHardTanh |

| Package | Version |
|---|---|
| matplotlib | 3.5.1 |
| numpy | 1.26.3 |
| opencv-python | 4.10.0.84 |
| pillow | 11.1.0 |
| PyYAML | 6.0.2 |
| requests | 2.32.3 |
| scipy | 1.15.0 |
| torch | 1.13.1 |
| torchvision | 0.14.1 |
| tqdm | 4.67.1 |
| brevitas | 0.9.0 |
| tensorboard | 2.15.2 |
| pandas | 2.2.3 |
| seaborn | 0.13.2 |
| thop | 0.1.1.post2209072238 |

| Parameter | Value |
|---|---|
| Number of Epochs | 300 |
| Batch Size | 64 |
| Image Size | 416 × 416 |
| Optimizer | SGD |
| Patience | 30 |

| DSP Type | Activations: [4,4]-bit (U)INT; Weights: [4,4]-bit INT | Activations: (4,8]-bit (U)INT; Weights: (4,8]-bit INT |
|---|---|---|
| HLS DSP | 1 MAC/DSP | 1 MAC/DSP |
| RTL DSP48E1 | 4 MAC/DSP | 2 MAC/DSP |
| RTL DSP48E2 | 4 MAC/DSP | 2 MAC/DSP |
| RTL DSP58 | 3 MAC/DSP | 3 MAC/DSP |

| Metric | YOLOv3-Tiny Original | YOLOv3-Tiny Pruned |
|---|---|---|
| Precision (P) | 67.06% | 48.16% |
| Recall (R) | 38.44% | 17.16% |
| mAP@0.5 | 41.76% | 17.68% |
| Box loss | 0.1220 | 0.1531 |
| Object loss | 0.1653 | 0.3049 |
| Classification loss | 0 | 0 |

| Layer | MAC Operations | Weights | Data Type |
|---|---|---|---|
| MVAU_rtl_0 | 37,380,096 | 216 | 8b–8b |
| MVAU_rtl_1 | 24,920,064 | 576 | 4b–4b |
| MVAU_hls_0 | 12,460,032 | 1152 | 4b–4b |
| MVAU_rtl_2 | 12,460,032 | 4608 | 4b–4b |
| MVAU_hls_1 | 10,902,528 | 16,128 | 4b–4b |
| MVAU_hls_2 | 8,858,304 | 52,416 | 4b–4b |
| MVAU_hls_3 | 32,902,272 | 194,688 | 4b–4b |
| MVAU_hls_4 | 1,968,512 | 11,648 | 4b–4b |
| MVAU_rtl_3 | 8,858,304 | 52,416 | 4b–4b |
| MVAU_rtl_4 | 2,847,312 | 16,848 | 4b–8b |
| Total (8 b–8 b MACs; 8 b weights) | 37,380,096 | 17,064 | — |
| Total (4 b–4 b MACs; 4 b weights) | 113,330,048 | 333,632 | — |
| Total (4 b–8 b MACs) | 2,847,312 | — | — |
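The counts in this table follow from the layer dimensions: a k × k convolution from C_in to C_out channels producing an H × W map has k²·C_in·C_out weights and H·W·k²·C_in·C_out MACs. The sketch below recomputes them from the architecture table and adds a folded-cycle estimate of the form (C_out/PE)·(k²·C_in/SIMD)·H·W, the usual cycle count for a FINN matrix-vector unit. The helper names and the PE/SIMD values in the example are hypothetical.

```python
def conv_cost(out_h: int, out_w: int, k: int, c_in: int, c_out: int) -> tuple[int, int]:
    """Return (MACs, weights) for a bias-free k x k convolution."""
    weights = k * k * c_in * c_out
    return out_h * out_w * weights, weights


def folded_cycles(out_h: int, out_w: int, k: int, c_in: int, c_out: int,
                  pe: int, simd: int) -> int:
    """Cycles per frame for an MVU with `pe` output lanes and `simd` input lanes."""
    mw, mh = k * k * c_in, c_out  # matrix width (inputs) and height (outputs)
    if mh % pe or mw % simd:
        raise ValueError("PE must divide C_out and SIMD must divide k*k*C_in")
    return (mh // pe) * (mw // simd) * out_h * out_w


# (out_h, out_w, kernel, c_in, c_out) for each QuantConv in the architecture table
LAYERS = [
    (416, 416, 3, 3, 8), (208, 208, 3, 8, 8), (104, 104, 3, 8, 16),
    (52, 52, 3, 16, 32), (26, 26, 3, 32, 56), (13, 13, 3, 56, 104),
    (13, 13, 3, 104, 208), (13, 13, 1, 208, 56), (13, 13, 3, 56, 104),
    (13, 13, 3, 104, 18),
]

costs = [conv_cost(*layer) for layer in LAYERS]
print(costs[0])                  # (37380096, 216)
print(sum(m for m, _ in costs))  # 153557456
print(folded_cycles(*LAYERS[0], pe=8, simd=27))  # 173056: one output pixel per cycle
```

With PE = SIMD = 1 the cycle count equals the MAC count; raising PE·SIMD divides it, which is the lever folding uses to balance layers against the DSP and LUT budget.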

| Resource | Estimated | Actual | Available | Util% |
|---|---|---|---|---|
| BRAM_18K | 200 | 138 | 140 | 98.57 |
| LUT | 26,694 | 41,605 | 53,200 | 78.20 |
| DSP | 202 | 204 | 220 | 92.73 |
| URAM | 0 | — | — | — |

| Metric | Estimated | RTLSim | Actual (FINN) |
|---|---|---|---|
| Total Cycles | 4,586,895 (critical path) | — | — |
| Max Cycles (Node) | 216,320 (StreamingMaxPool_hls_0) | 741,670 | — |
| Latency (ms) | 45.87 | 7.41 | 11.56 |
| Throughput (FPS) | 462.28 | 134.83 | 104.39 |
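The estimated column can be reproduced from the cycle counts by assuming a 100 MHz clock: latency is the critical-path cycle count divided by the clock frequency, and throughput is the clock frequency divided by the cycle count of the slowest node, since in a streaming dataflow design a new frame can enter only as fast as that node accepts one. The function name `dataflow_estimates` is illustrative; the same throughput formula applied to the RTL-simulation cycle count gives 134.83 FPS.

```python
def dataflow_estimates(total_cycles: int, max_node_cycles: int,
                       f_clk_hz: float) -> tuple[float, float]:
    """Return (latency in ms, throughput in FPS) for a streaming dataflow design.

    Latency counts every cycle along the critical path; throughput is bounded
    by the slowest node, which accepts a new frame every `max_node_cycles`.
    """
    latency_ms = total_cycles / f_clk_hz * 1e3
    fps = f_clk_hz / max_node_cycles
    return latency_ms, fps


lat, fps = dataflow_estimates(4_586_895, 216_320, 100e6)
print(f"{lat:.2f} ms, {fps:.2f} FPS")  # 45.87 ms, 462.28 FPS
```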

| Clock (MHz) | Batch | Runtime (ms) | Throughput (FPS) | DRAM In (MB/s) | DRAM Out (MB/s) |
|---|---|---|---|---|---|
| 50 | 1 | 22.774 | 43.909 | 22.796 | 0.134 |
| 50 | 100 | 1915.531 | 52.205 | 27.103 | 0.159 |
| 100 | 1 | 11.564 | 86.473 | 44.894 | 0.263 |
| 100 | 100 | 957.986 | 104.386 | 54.194 | 0.318 |
| 200 | 1 | 6.068 | 164.786 | 85.552 | 0.501 |
| 200 | 100 | 479.189 | 208.686 | 108.343 | 0.635 |
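The DRAM columns are consistent with frame rate multiplied by frame size in bytes, that is, megabytes per second: one 416 × 416 × 3 8-bit input frame is 519,168 bytes, and 43.909 FPS × 519,168 B ≈ 22.796 MB/s. The sketch below assumes one byte per element for both the input image and the 13 × 13 × 18 output tensor, an assumption that reproduces both columns; the constant and function names are illustrative.

```python
IN_BYTES = 416 * 416 * 3   # one 8-bit RGB input frame
OUT_BYTES = 13 * 13 * 18   # one output tensor, assuming 1 byte per element


def dram_bandwidth_mb_s(fps: float, bytes_per_frame: int) -> float:
    """Bandwidth in MB/s (10**6 bytes per second) at a given frame rate."""
    return fps * bytes_per_frame / 1e6


for fps in (43.909, 104.386, 208.686):
    print(f"{fps:8.3f} FPS -> in {dram_bandwidth_mb_s(fps, IN_BYTES):7.3f} MB/s, "
          f"out {dram_bandwidth_mb_s(fps, OUT_BYTES):.3f} MB/s")
```

Because bytes per frame are fixed, DRAM traffic scales linearly with throughput, and even at 200 MHz the input stream stays around 108 MB/s.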

| Parameter | 50 MHz (ms) | 100 MHz (ms) | 200 MHz (ms) |
|---|---|---|---|
| Preprocessing | | | |
| Driver Exec | | | |
| Rescale | | | |
| Detect | | | |
| NMS | | | |
| Box Process | | | |
| Total Postprocess † | | | |

| Name | Power (W) |
|---|---|
| top_wrapper | 3.129 |
| top_i | 3.129 |
| StreamingDataflowPartition_1 | 1.554 |
| inst | 1.554 |
| axi_interconnect_0 | 0.004 |
| s00_couplers | 0.003 |
| idma0 | 0.019 |
| inst | 0.019 |
| odma0 | 0.012 |
| inst | 0.012 |
| smartconnect_0 | 0.042 |
| inst | 0.042 |
| zynq_ps | 1.497 |
| inst | 1.497 |

| On-Chip Component | Power (W) | Used | Available | Utilization (%) |
|---|---|---|---|---|
| Clocks | 0.124 | 4 | — | — |
| Slice Logic | 0.428 | 114,377 | — | — |
| LUT as Logic | 0.384 | 37,928 | 53,200 | 71.29 |
| CARRY4 | 0.025 | 4605 | 13,300 | 34.62 |
| Register | 0.014 | 49,049 | 106,400 | 46.10 |
| F7/F8 Muxes | 0.002 | 1749 | 53,200 | 3.29 |
| LUT as Distributed RAM | 0.002 | 2478 | 17,400 | 14.24 |
| LUT as Shift Register | <0.001 | 1215 | 17,400 | 6.98 |
| Others | 0.000 | 2531 | — | — |
| Signals | 0.612 | 94,868 | — | — |
| Block RAM | 0.262 | 138 | 140 | 98.57 |
| DSPs | 0.207 | 204 | 220 | 92.73 |
| PS7 | 1.496 | 1 | — | — |
| Static Power | 0.229 | | | |
| Total | 3.358 | | | |

| Configuration | Power (W) |
|---|---|
| Idle (no bitstream) | 1.9 |
| 50 MHz | 2.12 |
| 100 MHz | 2.38 |
| 200 MHz | 2.55 |

| Clock (MHz) | Batch Size | Throughput (FPS) | Power (W) | Efficiency (FPS/W) |
|---|---|---|---|---|
| 50 | 1 | 43.91 | 2.12 | 20.71 |
| 50 | 100 | 52.21 | 2.12 | 24.63 |
| 100 | 1 | 86.47 | 2.38 | 36.32 |
| 100 | 100 | 104.39 | 2.38 | 43.86 |
| 200 | 1 | 164.79 | 2.55 | 64.63 |
| 200 | 100 | 208.69 | 2.55 | 81.88 |

| Metric | Coral Edge TPU (250 MHz) | Coral Edge TPU (500 MHz) | FINN on Z-Turn Board (50 MHz) | FINN on Z-Turn Board (100 MHz) | FINN on Z-Turn Board (200 MHz) |
|---|---|---|---|---|---|
| Latency (ms) | 9.69 | 6.73 | 22.77 ‡ | 11.56 ‡ | 6.07 ‡ |
| Throughput (FPS) | 103.2 | 148.7 | 43.91 | 86.47 | 164.8 |
| Power (W) | 1.2 † | 2.4 † | 2.12 | 2.38 | 2.55 |
| Efficiency (FPS/W) | 86.0 | 62.0 | 20.7 | 36.3 | 64.5 |

| Reference | FPGA Board | Precision | Clock (MHz) | LUT (units) | DSP (units) | BRAM (units) | Latency (ms) | Throughput (FPS) | Power (W) | Efficiency (FPS/W) |
|---|---|---|---|---|---|---|---|---|---|---|
| [12] FINN-R | PYNQ-Z1 | 1W3A | 100 | 46.5 K | — | 280 (18 K) | 33.44 | 29.9 | 2.5 | 11.96 |
| [48] SATAY | VCU110 | 8W16A | 220 | 127 K | 1780 | 2090.5 (36 K) | 14.3 | 69.93 | 15.4 | 4.54 |
| [48] SATAY | VCU118 | 8W16A | 255 | 431 K | 6687 | 2148 (36 K) | 6.8 | 147.06 | 42.9 | 3.43 |
| [63] LPYOLO | Z7020 | 4W4A | 100 | 39.4 K | 203 | 91 (18 K) | 52.3 | 19.12 | 2.4 | 7.97 |
| This Work | Z7020 | 4W4A | 200 | 37.9 K | 204 | 138 (18 K) | 4.79 | 208.77 | 2.55 | 81.85 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Calì, R.; Falaschetti, L.; Biagetti, G. Optimized Implementation of YOLOv3-Tiny for Real-Time Image and Video Recognition on FPGA. Electronics 2025, 14, 3993. https://doi.org/10.3390/electronics14203993
