Dynamic Visual Privacy Governance Using Graph Convolutional Networks and Federated Reinforcement Learning

Abstract

1. Introduction

- Legacy architectures: Previous approaches are built solely on legacy architectures, which lack the feature representation capabilities of modern state-of-the-art (SOTA) vision backbones such as the Vision Transformer (ViT) [5].

- Failure to model label correlations: Previous approaches do not model the correlations and co-occurrence patterns among privacy attributes (e.g., the “passport” attribute is highly correlated with “face”).

- Static user preference models: User preference models are typically static and collective, derived from one-off user studies, so they cannot adapt to the dynamic and deeply personal nature of individual privacy boundaries.

- To overcome legacy architectures, we establish a new SOTA baseline by employing a modern ConvNeXt [6] backbone and advocate a more balanced evaluation protocol that emphasizes not only mAP but also the Overall F1-score (OF1), which is more representative of real-world performance in privacy-sensitive applications.

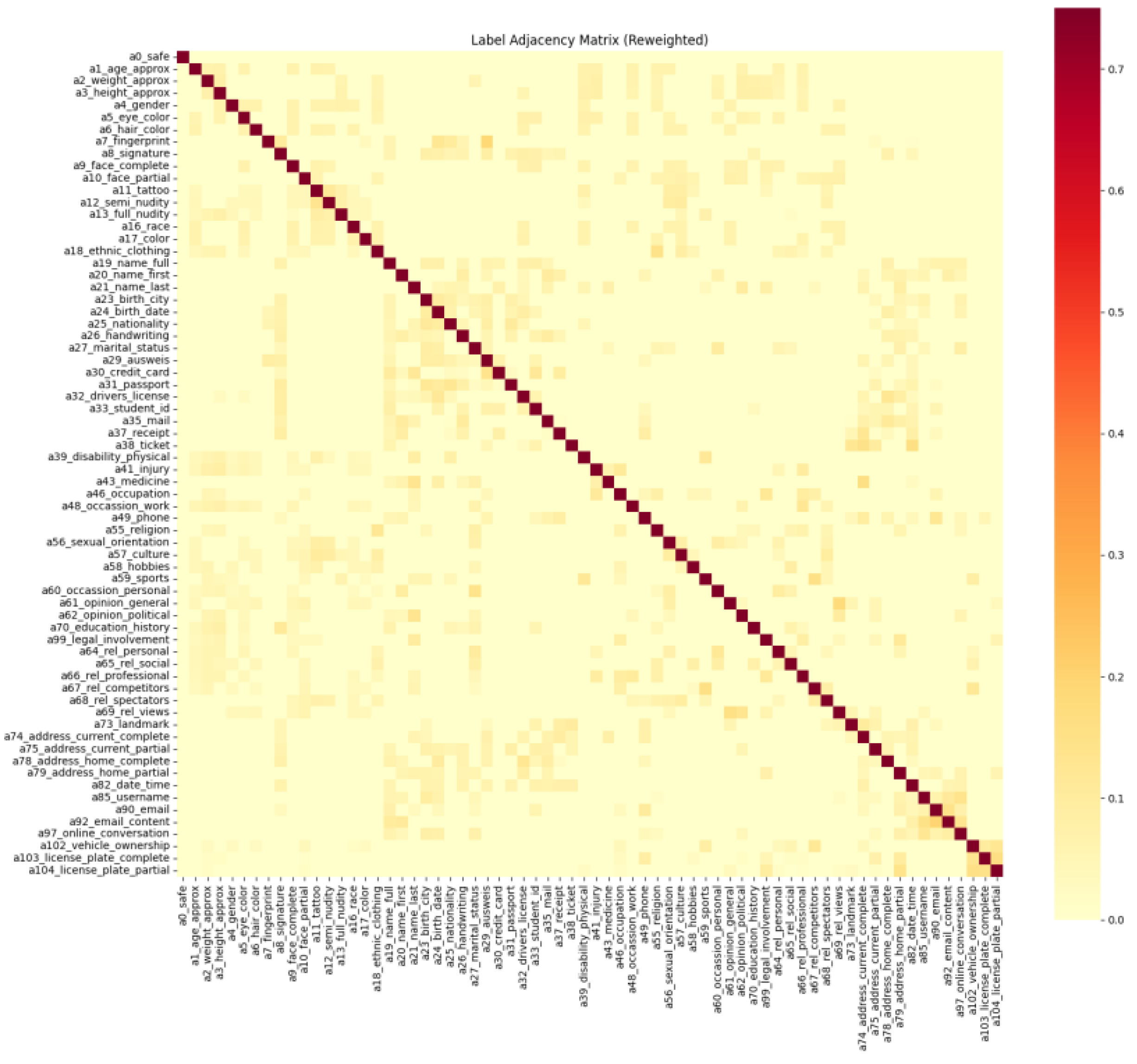

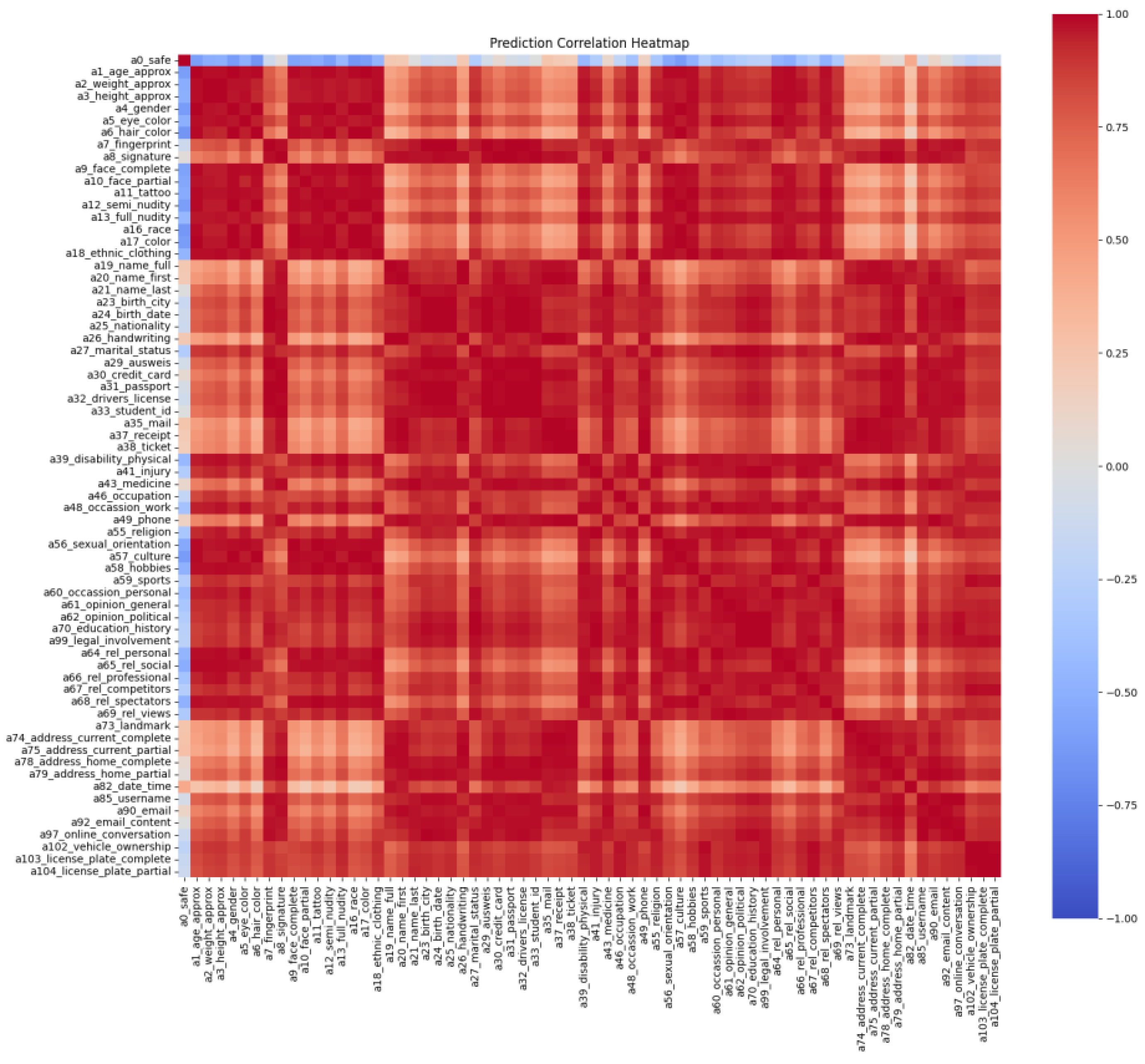

- To model label correlations, we introduce a Graph Convolutional Network (GCN) as the classifier head to explicitly leverage label correlations for more coherent and accurate predictions, a technique proven effective in general multi-label contexts [7].

- To replace the static user preference models, we design a dynamic and privacy-preserving framework that integrates Federated Learning (FL) [8] and Reinforcement Learning (RL) [9]. FL ensures that user preference models are trained on-device to preserve privacy, while RL allows the system to adapt continuously to each user’s evolving privacy feedback, treating each user as a unique individual.
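
As a rough illustration of the federated half of the last contribution, the sketch below averages per-device model parameters weighted by local sample counts, in the style of FedAvg (McMahan et al.). This is an assumption made for illustration: the aggregation rule, the flat parameter lists, and the names `fed_avg`, `client_weights`, and `client_sizes` are hypothetical and are not taken from the paper.

```python
def fed_avg(client_weights, client_sizes):
    """Return the sample-weighted average of per-client parameter vectors."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        for j in range(dim):
            # Each client contributes in proportion to its local data size.
            averaged[j] += weights[j] * size / total
    return averaged


# Three hypothetical devices; only parameters are shared, never raw feedback.
clients = [[0.0, 1.0], [1.0, 1.0], [4.0, 0.0]]
sizes = [10, 30, 10]
global_weights = fed_avg(clients, sizes)
```

In this toy setting the server only ever sees the three parameter vectors, which is the property the FL component relies on.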

2. Related Work

2.1. Visual Privacy Prediction

2.2. Modern Architectures for Visual Recognition

2.3. Multi-Label Recognition with Label Correlations

2.4. Personalized and Privacy-Preserving Learning

3. Proposed Methods

3.1. Privacy Attribute Recognition

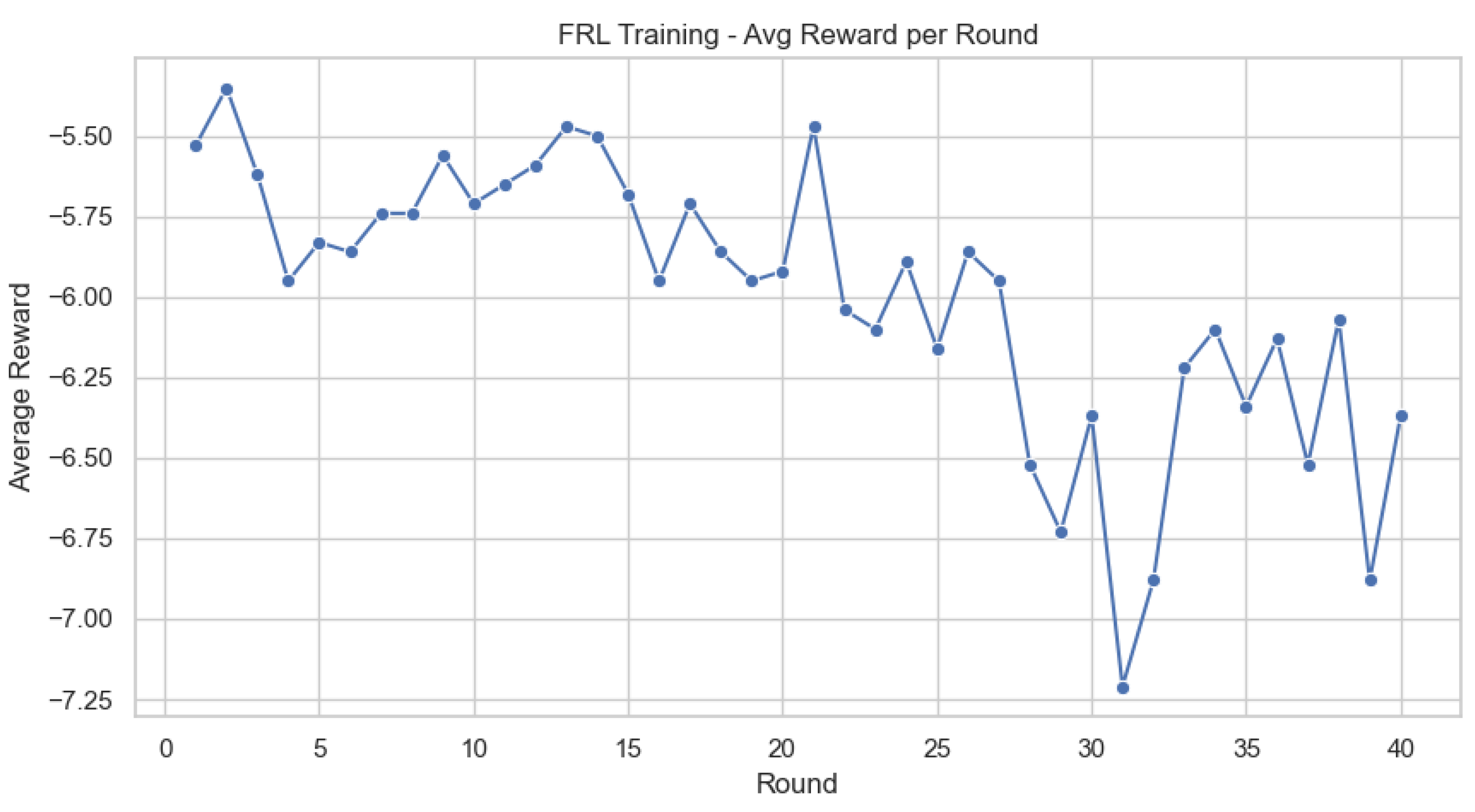

3.2. Dynamic and Personalized Governance via FRL

3.2.1. Simulation Environment

3.2.2. Agent Architecture

3.2.3. Two-Stage Learning Framework

| Algorithm 1: Online Fine-tuning from Corrective Feedback |
|---|
| Require: agent π, current state s, user feedback F, learning rate α, number of episodes E, confidence threshold τ |
| Ensure: updated agent π |
| 1: Initialize optimizer for agent π with learning rate α |
| 2: Get prediction confidences P from state s |
| 3: I_conf ← indices of P with confidence above τ // Select indices of high-confidence predictions |
| 4: if I_conf is empty then return π |
| 5: Initialize target vector T and weight vector W for indices in I_conf |
| 6: for each index i in I_conf do |
| 7: if F marks suggestion i as correct then |
| 8: T_i ← 1.0, W_i ← 5.0 // Reinforce correct suggestions |
| 9: else if F marks suggestion i as incorrect then |
| 10: T_i ← 0.0, W_i ← 10.0 // Penalize incorrect suggestions more heavily |
| 11: end if |
| 12: end for |
| 13: Initialize loss function L = BCEWithLogitsLoss(weight = W) |
| 14: for e = 1 to E do |
| 15: Get prediction scores Z ← π(s) |
| 16: Z_conf ← select scores from Z with indices I_conf |
| 17: loss ← L(Z_conf, T) |
| 18: Update agent π by minimizing loss via gradient descent |
| 19: end for |
| 20: return updated agent π |
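
The steps of Algorithm 1 can be sketched in plain Python as follows. This is a minimal toy version under stated assumptions, not the authors' implementation: the agent is reduced to a list of trainable logits, feedback is passed as two hypothetical index sets (`accepted`, `rejected`), the threshold test is taken to be a strict `>`, plain gradient descent stands in for the unspecified optimizer, and indices without explicit feedback are given weight 0. The loss mirrors a mean-reduced, element-weighted binary cross-entropy on logits.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def weighted_bce_with_logits(logits, targets, weights):
    """Mean of w * BCE(sigmoid(z), t), written in a numerically stable form."""
    losses = [
        w * (max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z))))
        for z, t, w in zip(logits, targets, weights)
    ]
    return sum(losses) / len(losses)


def online_finetune(logits, confidences, accepted, rejected,
                    lr=0.1, episodes=20, tau=0.5):
    """Toy Algorithm 1: the agent's logits are themselves the parameters."""
    selected = [i for i, p in enumerate(confidences) if p > tau]
    if not selected:
        return list(logits)
    # Assumption: indices with no explicit feedback get weight 0 (no update).
    targets = [1.0 if i in accepted else 0.0 for i in selected]
    weights = [5.0 if i in accepted else 10.0 if i in rejected else 0.0
               for i in selected]
    updated = list(logits)
    n = len(selected)
    for _ in range(episodes):
        for k, i in enumerate(selected):
            # Gradient of the weighted, mean-reduced loss with respect to z_i.
            grad = weights[k] * (sigmoid(updated[i]) - targets[k]) / n
            updated[i] -= lr * grad
    return updated


before = [0.2, 0.4, -1.0]
after = online_finetune(before, confidences=[0.9, 0.8, 0.1],
                        accepted={0}, rejected={1})
loss_before = weighted_bce_with_logits(before[:2], [1.0, 0.0], [5.0, 10.0])
loss_after = weighted_bce_with_logits(after[:2], [1.0, 0.0], [5.0, 10.0])
```

The accepted suggestion's logit moves up, the rejected one moves down about twice as fast per unit of error because of the 10.0 weight, and the low-confidence third entry is left untouched.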

3.3. Dynamic Risk Assessment

4. Experiments

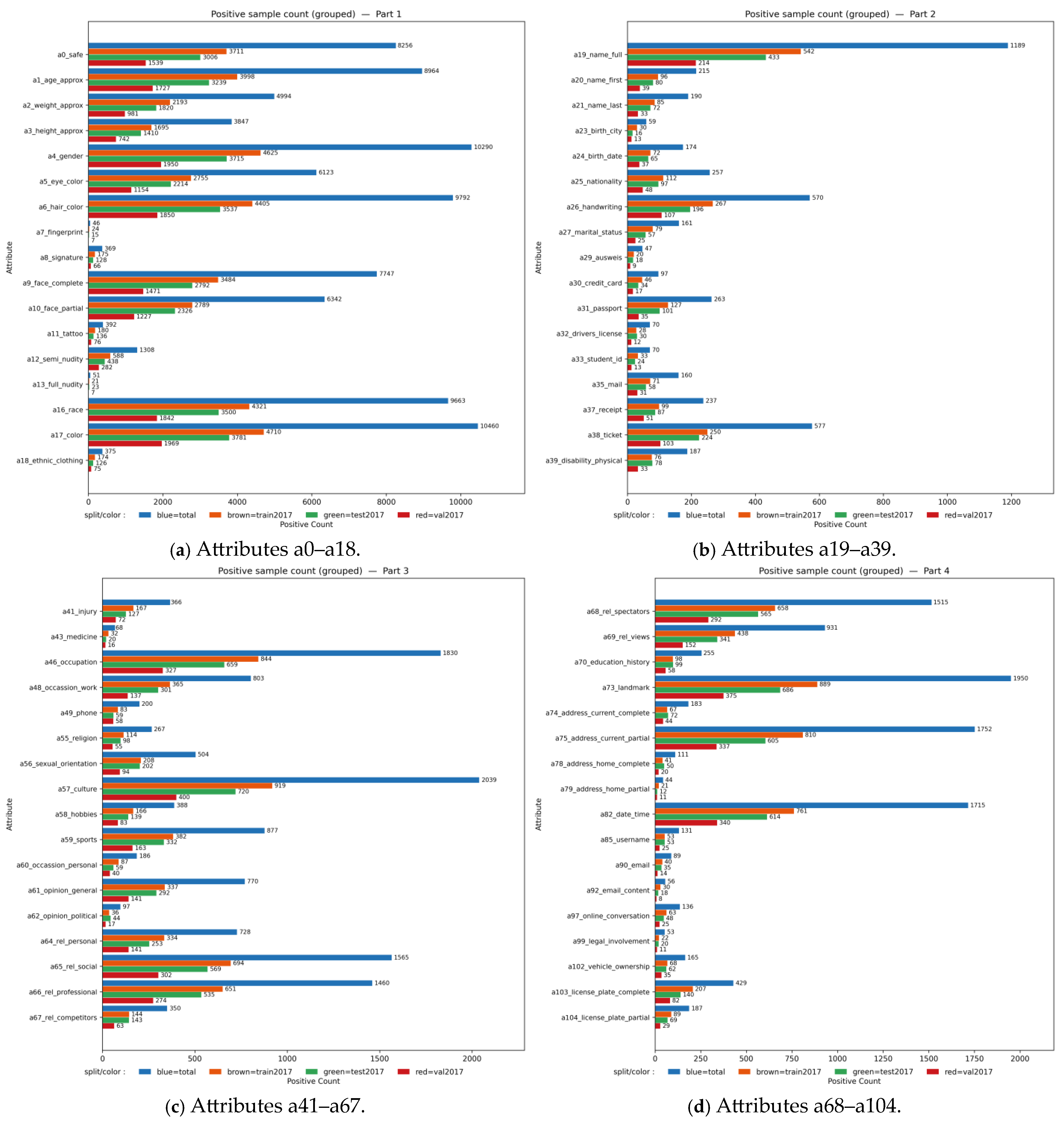

4.1. Experimental Setup

4.2. A New SOTA Architecture with the ConvNeXt-Base Model

4.3. Structured Label Correlations with GCN

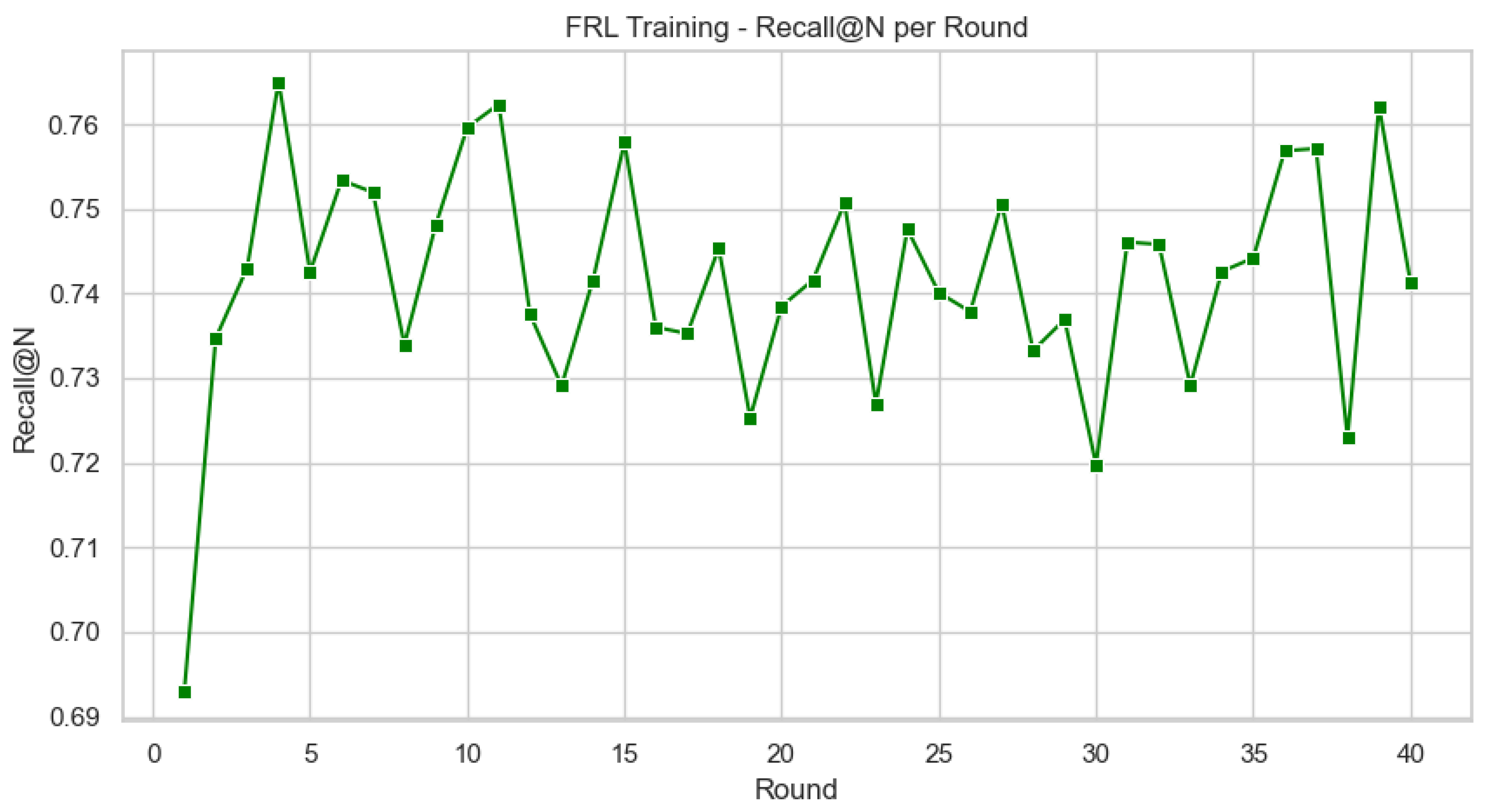

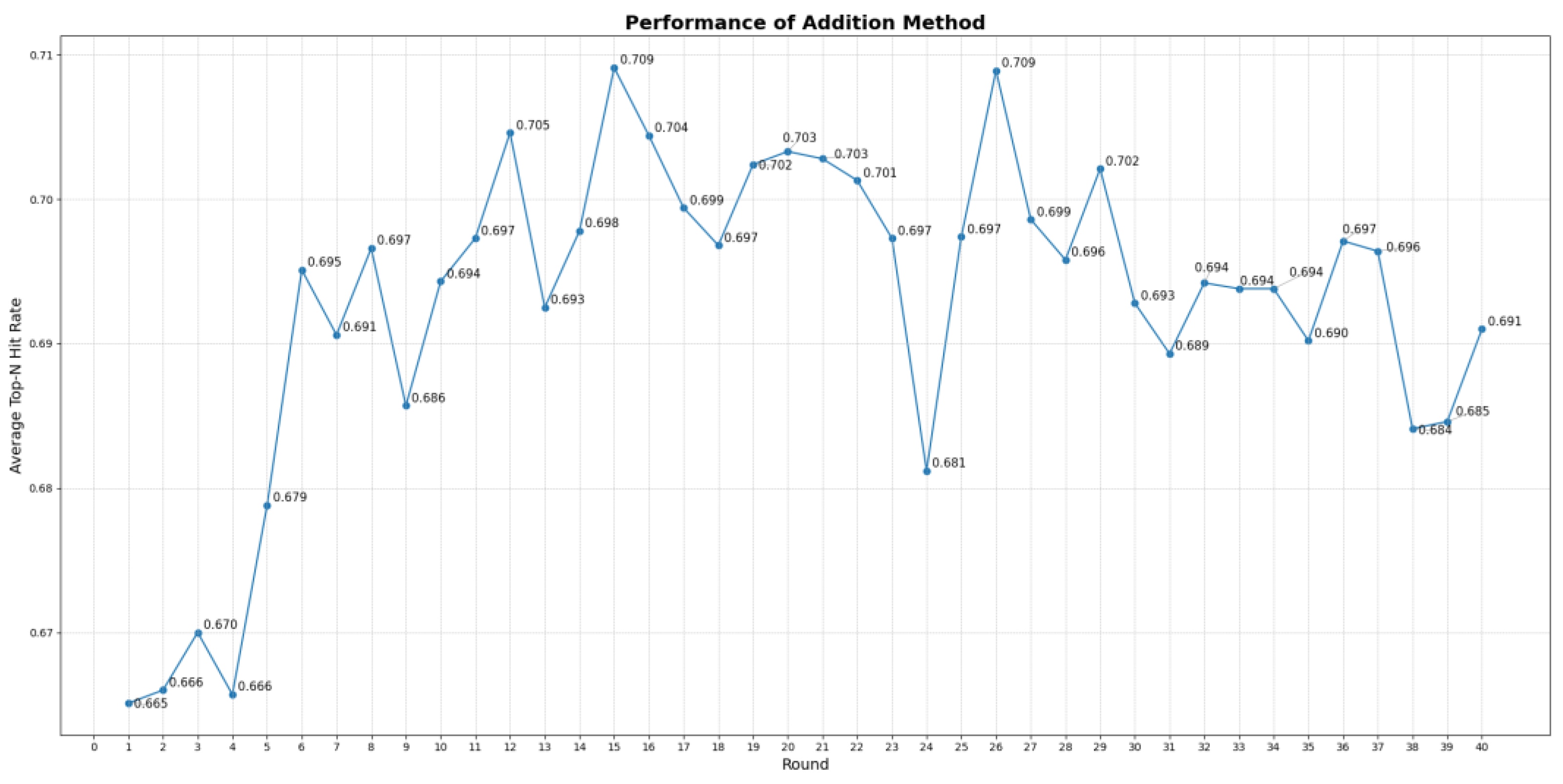

4.4. Dynamic Personalization with FRL

4.5. Computational Cost

- Average Classifier Latency: 19.1322 ms/image

- Average RL Agent Latency: 0.5352 ms/image

- Average Total Inference Latency: 19.6675 ms/image
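
The three latency figures are mutually consistent, which a few lines of arithmetic make explicit. The sketch assumes the classifier and the RL agent run sequentially on one image at a time; the variable names and the derived throughput and share are illustrative quantities, not numbers reported in the paper.

```python
classifier_ms = 19.1322
rl_agent_ms = 0.5352
total_ms = classifier_ms + rl_agent_ms   # matches the reported total up to rounding

throughput = 1000.0 / total_ms           # images per second for a single stream
rl_share = rl_agent_ms / total_ms        # fraction of latency due to the RL agent

print(f"total={total_ms:.4f} ms, throughput={throughput:.1f} img/s, "
      f"RL share={rl_share:.1%}")
```

Under these assumptions the RL agent accounts for under 3% of per-image latency, so the classifier backbone dominates the cost.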

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| mAP | mean Average Precision |
| OP | Overall Precision |
| OR | Overall Recall |
| OF1 | Overall F1-score |
| GCN | Graph Convolutional Network |
| FL | Federated Learning |
| RL | Reinforcement Learning |
| FRL | Federated Reinforcement Learning |
| CNN | Convolutional Neural Network |
| ViT | Vision Transformer |
| ResNet | Residual Network |
| GNN | Graph Neural Network |
| MLR | Multi-Label Image Recognition |
| VISPR | Visual Privacy Dataset |
| PSSA | Privacy-Specific Spatial Attention |
| CLIP | Contrastive Language–Image Pre-training |
| GloVe | Global Vectors for Word Representation |
| PMI | Positive Pointwise Mutual Information |
| KNN | k-Nearest Neighbors |
| PMI-KNN | PMI-based kNN graph |
| MLP | Multi-Layer Perceptron |
| DQN | Deep Q-Network |
| AMP | Automatic Mixed Precision |
| BCEWithLogitsLoss | Binary Cross-Entropy with Logits Loss |
| pos_weight | positive class weighting |
| Adam | Adaptive Moment Estimation |
| AdamW | Adam with decoupled Weight Decay |
| GELU | Gaussian Error Linear Unit |
| LeakyReLU | Leaky Rectified Linear Unit |
| SVM | Support Vector Machine |
| NLP | Natural Language Processing |

References

1. Gross, R.; Acquisti, A. Information revelation and privacy in online social networks. In Proceedings of the 2005 ACM Workshop on Privacy in the Electronic Society, Alexandria, VA, USA, 7 November 2005; pp. 71–80.
2. Kokolakis, S. Privacy attitudes and privacy behaviour: A review of current research on the privacy paradox phenomenon. Comput. Secur. 2017, 64, 122–134.
3. Jiang, H.; Zuo, J.; Lu, Y. Connecting Visual Data to Privacy: Predicting and Measuring Privacy Risks in Images. Electronics 2025, 14, 811.
4. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
5. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
6. Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.
7. Chen, Z.-M.; Wei, X.-S.; Wang, P.; Guo, Y. Multi-label image recognition with graph convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 5177–5186.
8. Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R. Advances and open problems in federated learning. Found. Trends Mach. Learn. 2021, 14, 1–210.
9. Qi, J.; Zhou, Q.; Lei, L.; Zheng, K. Federated reinforcement learning: Techniques, applications, and open challenges. arXiv 2021, arXiv:2108.11887.
10. Orekondy, T.; Schiele, B.; Fritz, M. Towards a visual privacy advisor: Understanding and predicting privacy risks in images. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3686–3695.
11. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.
12. Chen, T.; Xu, M.; Hui, X.; Wu, H.; Lin, L. Learning semantic-specific graph representation for multi-label image recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 522–531.
13. Guo, H.; Zheng, K.; Fan, X.; Yu, H.; Wang, S. Visual attention consistency under image transforms for multi-label image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 729–739.
14. Lanchantin, J.; Wang, T.; Ordonez, V.; Qi, Y. General multi-label image classification with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16478–16488.
15. Zhao, J.; Yan, K.; Zhao, Y.; Guo, X.; Huang, F.; Li, J. Transformer-based dual relation graph for multi-label image recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 163–172.
16. Pati, S.; Kumar, S.; Varma, A.; Edwards, B.; Lu, C.; Qu, L.; Wang, J.J.; Lakshminarayanan, A.; Wang, S.-h.; Sheller, M.J. Privacy preservation for federated learning in health care. Patterns 2024, 5, 100974.
17. Brockman, G.; Cheung, V.; Pettersson, L.; Schneider, J.; Schulman, J.; Tang, J.; Zaremba, W. OpenAI Gym. arXiv 2016, arXiv:1606.01540.
18. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.
19. Fallah, A.; Mokhtari, A.; Ozdaglar, A. Personalized federated learning: A meta-learning approach. arXiv 2020, arXiv:2002.07948.
20. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.
21. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.
22. Yao, L.; Mao, C.; Luo, Y. Graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 7370–7377.
23. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.
24. Fang, Y.; Wang, W.; Xie, B.; Sun, Q.; Wu, L.; Wang, X.; Huang, T.; Wang, X.; Cao, Y. Eva: Exploring the limits of masked visual representation learning at scale. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 19358–19369.

| Method | Model | mAP (%) | OP | OR | OF1 |
|---|---|---|---|---|---|
| [3] | ResNet-50 + PSSA | 46.88 | 0.720 | 0.690 | 0.700 |
| Ours | ConvNeXt-Base + GCN | 52.88 | 0.713 | 0.837 | 0.770 |
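
For readers unfamiliar with the OP, OR, and OF1 columns, the sketch below computes them under the usual multi-label convention, which is assumed here rather than quoted from the paper: counts are pooled over all (image, label) pairs, and OF1 is the harmonic mean of OP and OR. The function names and the toy label matrices are hypothetical.

```python
def overall_metrics(y_true, y_pred):
    """Micro-averaged precision, recall, and F1 over all (image, label) pairs."""
    tp = sum(t and p for row_t, row_p in zip(y_true, y_pred)
             for t, p in zip(row_t, row_p))
    predicted = sum(sum(row) for row in y_pred)   # all positive predictions
    actual = sum(sum(row) for row in y_true)      # all ground-truth positives
    op = tp / predicted if predicted else 0.0
    orr = tp / actual if actual else 0.0
    of1 = 2 * op * orr / (op + orr) if (op + orr) else 0.0
    return op, orr, of1


def harmonic_f1(precision, recall):
    return 2 * precision * recall / (precision + recall)


# Two toy images with three labels each (1 = attribute present).
y_true = [[1, 0, 1], [0, 1, 1]]
y_pred = [[1, 1, 1], [0, 0, 1]]
op, orr, of1 = overall_metrics(y_true, y_pred)

# Consistency check against the "Ours" row: OP = 0.713, OR = 0.837.
ours_of1 = harmonic_f1(0.713, 0.837)
```

Plugging the "Ours" row's OP and OR into the harmonic mean reproduces the reported OF1 of 0.770 to three decimals, which supports reading OF1 this way.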

| Backbone | Configuration | mAP (%) | OP | OR | OF1 |
|---|---|---|---|---|---|
| ResNet-50 | Oversample + Dropout | 50.53 | 0.685 | 0.815 | 0.744 |
| EVA-02 Base [24] | Baseline (AdamW) | 48.40 | 0.815 | 0.730 | 0.770 |
| Swin-Small | Baseline | 50.64 | 0.811 | 0.726 | 0.766 |
| ConvNeXt-Base | ConvNeXt-Base + GCN | 52.88 | 0.713 | 0.837 | 0.770 |

| Backbone | Label Embedding | Graph Construction | mAP (%) | OP | OR | OF1 |
|---|---|---|---|---|---|---|
| ConvNeXt-Base | GloVe (300d) | Binary (tau = 0.4) | 52.96 | 0.716 | 0.797 | 0.755 |
| ConvNeXt-Base | GloVe (300d) | PMI-KNN (k = 15) | 52.44 | 0.679 | 0.854 | 0.757 |
| ConvNeXt-Base | CLIP (512d) | PMI-KNN (k = 15) | 52.88 | 0.713 | 0.837 | 0.770 |
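
One plausible reading of the "PMI-KNN" graph construction is sketched below: label co-occurrence counts are turned into positive pointwise mutual information scores, and each label keeps edges to its k highest-scoring peers. The exact procedure is not given in this text, so the symmetrization step, the unweighted 0/1 edges, and the names `ppmi_knn_graph` and `label_sets` are assumptions.

```python
import math


def ppmi_knn_graph(label_sets, num_labels, k):
    """Build a symmetric 0/1 adjacency: each label keeps its k highest-PPMI peers."""
    n = len(label_sets)
    count = [0] * num_labels
    co = [[0] * num_labels for _ in range(num_labels)]
    for labels in label_sets:
        for i in labels:
            count[i] += 1
            for j in labels:
                if i != j:
                    co[i][j] += 1
    adj = [[0] * num_labels for _ in range(num_labels)]
    for i in range(num_labels):
        scores = []
        for j in range(num_labels):
            if co[i][j] > 0:
                # PMI = log( P(i, j) / (P(i) * P(j)) ), estimated from counts.
                pmi = math.log(co[i][j] * n / (count[i] * count[j]))
                if pmi > 0:                      # keep positive PMI only
                    scores.append((pmi, j))
        for _, j in sorted(scores, reverse=True)[:k]:
            adj[i][j] = adj[j][i] = 1            # symmetrize (an assumption)
    return adj


# Toy annotations: 0 = face, 1 = passport, 2 = landscape.
label_sets = [{0, 1}, {0, 1}, {0}, {2}, {2}]
adj = ppmi_knn_graph(label_sets, num_labels=3, k=1)
```

Here "face" and "passport" co-occur more often than chance and become neighbors, while "landscape" stays isolated.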

| Head | mAP (%) | OP | OR | OF1 |
|---|---|---|---|---|
| CNN only (Linear) | 44.01 | 0.234 | 0.934 | 0.374 |
| GCN only | 38.48 | 0.805 | 0.375 | 0.511 |
| Ours | 52.88 | 0.713 | 0.837 | 0.770 |
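
To make the idea of a GCN classifier head concrete, the sketch below follows the general recipe of [7]: a two-layer GCN maps label embeddings to one classifier vector per label, and image features are scored against those vectors. It is an illustrative sketch only. The layer count, the LeakyReLU slope of 0.2, the symmetric normalization, and all function names are assumptions, and how the "Ours" head combines CNN and GCN outputs is not specified in this text.

```python
import math


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def normalize(adj):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 with added self-loops."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn_head(features, label_emb, adj, w1, w2):
    """Map label embeddings to per-label classifiers, then score image features."""
    a = normalize(adj)
    hidden = matmul(matmul(a, label_emb), w1)
    hidden = [[x if x > 0 else 0.2 * x for x in row] for row in hidden]  # LeakyReLU
    classifiers = matmul(matmul(a, hidden), w2)   # shape: labels x feature_dim
    return [sum(f * c for f, c in zip(features, row)) for row in classifiers]


identity = [[1.0, 0.0], [0.0, 1.0]]
features = [2.0, 4.0]                              # hypothetical backbone output
independent = gcn_head(features, identity, [[0, 0], [0, 0]], identity, identity)
coupled = gcn_head(features, identity, [[0, 1], [1, 0]], identity, identity)
```

With no edges the two labels are scored independently; adding an edge pulls their classifiers together, which is the mechanism by which label correlations can shape predictions.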

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, C.; Lu, W.-X.; Chang, R.-I. Dynamic Visual Privacy Governance Using Graph Convolutional Networks and Federated Reinforcement Learning. Electronics 2025, 14, 3774. https://doi.org/10.3390/electronics14193774
