Abstract

The JAYA algorithm has been widely applied due to its simplicity and efficiency but is prone to entrapment in sub-optimal solutions. This study introduces the Lévy flight mechanism and proposes the CLJAYA-LF algorithm, which integrates large-step and small-step Lévy movements with a multi-strategy particle update mechanism. The large-step strategy enhances global exploration and helps escape local optima, while the small-step strategy improves fine-grained local search accuracy. Extensive experiments on the CEC2017 benchmark suite and real-world engineering optimization problems demonstrate the effectiveness of CLJAYA-LF. In 50-dimensional benchmark problems, it outperforms JAYA, JAYA-LF, and CLJAYA in 15 of 22 functions with lower mean fitness and competitive variance; in 100-dimensional problems, it achieves smaller variance in 17 of 24 functions. For engineering applications, CLJAYA-LF attains a mean of 16.9 and variance of 0.332 for the Step-cone Pulley, $1.44 \times 10^{-15}$ and $3.14 \times 10^{-15}$ for the Gear Train, and 0.535 and 0.0498 for the Planetary Gear Train, surpassing most JAYA variants. These results indicate that CLJAYA-LF delivers superior optimization performance while maintaining robust stability across dimensions and problem types, demonstrating significant potential for complex and high-dimensional optimization scenarios.

1. Introduction

Optimization techniques play a crucial role in today’s rapidly advancing technological era, with applications spanning engineering, healthcare, transportation, energy systems, and financial modeling. These methods improve efficiency, reduce costs, and enhance system performance. As the demand for optimization continues to grow, optimization algorithms have become a central focus in both academic research and industrial applications [1].

Traditional optimization methods, such as linear programming [2], dynamic programming [3], and gradient-based approaches [4], perform well for structured, low-dimensional, and convex problems. However, they face significant challenges when applied to complex real-world scenarios, which often feature multimodal landscapes with numerous local optima, high dimensionality, and nonlinear or discontinuous objective functions. Moreover, dynamic and stochastic environments, such as traffic systems and financial markets, add further complexity. These limitations highlight the need for novel approaches capable of effectively handling complex optimization tasks.

Nature-inspired algorithms have emerged to address these challenges, particularly excelling in large-scale, multimodal, and complex optimization problems [5,6]. These algorithms are valued for their simplicity, robust global search capabilities, and adaptability. Their core principle is to simulate natural laws or scientific phenomena, integrating randomized search with heuristic strategies to explore vast solution spaces efficiently while balancing global exploration and local exploitation.

Among evolutionary mechanism-based algorithms, the Genetic Algorithm (GA) [4,5,6] simulates natural selection and genetic variation to optimize objective functions, performing well on small- to medium-scale problems but prone to local optima in high-dimensional complex tasks. Evolution Strategies (ES) [7] emphasize individual variation and survival-of-the-fittest principles, improving robustness in complex landscapes. Differential Evolution (DE) [8,9] utilizes differences among individuals to guide optimization, achieving notable performance in continuous spaces. The recently proposed Alpha Evolution Algorithm [10,11] introduces adaptive evolution strategies to dynamically adjust search directions. The Beetle Antennae Search (BAS) [12] mimics beetle foraging behavior, offering simple yet effective global search but relatively slower convergence. While each algorithm has its strengths, single evolutionary algorithms may struggle to balance exploration and exploitation in high-dimensional multimodal problems, motivating the development of multi-strategy enhancements.

In swarm intelligence algorithms, Particle Swarm Optimization (PSO) [13,14,15] mimics bird flock foraging, combining simplicity and fast convergence, though it is prone to premature convergence. Artificial Bee Colony (ABC) [16] models collaborative foraging behavior to improve information sharing and solution quality. Grey Wolf Optimizer (GWO) [17] simulates wolf pack dynamics, enhancing global search through hierarchical guidance and cooperation. Algorithms such as Harris Hawks Optimization (HHO) [18] and Dwarf Mongoose Optimization (DMO) [19] utilize predatory or social behaviors to improve exploration in complex problems. The Egret Swarm Optimization Algorithm (ESOA) [20] combines sit-and-wait and aggressive strategies for efficient global search, demonstrating strong convergence and stability. PSO converges quickly but is susceptible to local optima, ABC exploits population information effectively but may lack local refinement, and GWO or HHO improve global exploration at the cost of increased computational complexity. This motivates hybrid or multi-strategy algorithms to balance efficiency, diversity, and convergence.

Human-inspired algorithms, including HEOA [21], TLBO [22,23], and HOA [24], simulate human learning, knowledge sharing, and decision-making, exhibiting strong adaptability in dynamic environments. The CTCM algorithm [25] models inter-tribal competition and intra-tribal cooperation, surpassing many advanced metaheuristics in convergence speed, stability, and global search capability.

Physics-inspired algorithms, such as Simulated Annealing [26], Water Flow Optimizer [27], Fick Law Algorithm (FLA) [28], and SABO [29], offer alternative search strategies by simulating natural or physical processes. These approaches are effective for certain constrained and continuous-space problems but often exhibit limited stability in high-dimensional nonlinear scenarios.

Despite these advances, most existing methods require careful parameter tuning, are sensitive to initial solutions, and are prone to local optima. The JAYA algorithm [30,31] mitigates some of these issues by using only the best and worst individuals to guide search, avoiding extra parameter settings. Nevertheless, JAYA can still reduce population diversity if the best individual is trapped in a local optimum. To address this, JAYA-LF [32] incorporates Lévy Flight-based random perturbations, and CLJAYA [33] employs multi-strategy learning to improve population information usage and search efficiency.

Building on these developments, this study proposes the CLJAYA-LF algorithm, which combines CLJAYA’s multi-strategy update mechanism with Lévy Flight’s large- and small-step perturbations. By preserving the guiding roles of the best and worst individuals, it overcomes the drawbacks of previous approaches, significantly enhancing the overall performance. The algorithm is evaluated on CEC2017 benchmark functions and real-world engineering optimization problems, demonstrating superior performance in both low- and high-dimensional tasks, validating its effectiveness and applicability in complex optimization scenarios. The main contributions of this work are summarized as follows:

- Integration of Lévy Flights into the CLJAYA Framework: Lévy Flights are incorporated into the CLJAYA framework. This integration enriches the diversity of particles and boosts both the exploration and exploitation capabilities of the algorithm.

- Enhanced Global Exploration: Using the large-step movement strategy of Lévy Flights, the CLJAYA-LF algorithm enables particles to break free from local optima. Consequently, it enhances the algorithm’s global exploration ability and helps avert premature convergence towards suboptimal solutions.

- Improved Local Exploitation: The algorithm uses the small-step random movement strategy of Lévy Flights to strengthen local exploitation. This addresses the problem that particles face a restricted search area when they approach the best or worst solutions.

- Superior Performance Evaluation: In tests on the CEC2017 benchmark suite, CLJAYA-LF surpasses existing advanced optimization methods. The results validate its effectiveness and robustness in both low- and high-dimensional optimization tasks.

The structure of the paper is as follows. Section 2 offers a concise introduction to the relevant works for this paper. Section 3 explains the proposed CLJAYA-LF. Section 4 presents the applications of CLJAYA-LF in numerical optimization. Section 5 presents the applications of CLJAYA-LF in engineering optimization. Finally, Section 6 draws conclusions from the research findings and results.

2. Related Works

2.1. CLJAYA Algorithm

The JAYA algorithm requires only two parameters, the population size N and the maximum number of iterations, to adjust the position of each solution in the search space, gradually guiding it towards the optimal solution. During the update process, each solution is encouraged to move away from the current worst solution and towards the best solution found so far. This update process can be expressed by the following equation:

$$x_{i,j}^{\prime\,k} = x_{i,j}^{k} + r_{1}\left(x_{best,j}^{k} - \left|x_{i,j}^{k}\right|\right) - r_{2}\left(x_{worst,j}^{k} - \left|x_{i,j}^{k}\right|\right) \tag{1}$$

where k denotes the iteration number of the algorithm, $x_{i,j}^{k}$ represents the j-th variable of the i-th particle, $x_{best,j}^{k}$ is the j-th variable of the best solution, and $x_{worst,j}^{k}$ is the j-th variable of the worst solution. Furthermore, $x_{i,j}^{\prime\,k}$ is the updated value of the j-th variable for iteration k. The parameters $r_{1}$ and $r_{2}$ are random variables generated from a uniform distribution on $[0, 1]$.
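The update described above can be illustrated with a short sketch. It assumes the standard JAYA form (attraction to the best solution, repulsion from the worst, both weighted by uniform random numbers) and a greedy acceptance step; the function names `jaya_update` and `sphere` and the toy population are illustrative assumptions, not taken from the paper.

```python
import random


def jaya_update(x, best, worst, rng=random):
    """Return a JAYA candidate for one solution x (a list of floats)."""
    candidate = []
    for xj, bj, wj in zip(x, best, worst):
        r1 = rng.random()  # uniform random weight in [0, 1)
        r2 = rng.random()
        # Move towards the best solution and away from the worst one.
        candidate.append(xj + r1 * (bj - abs(xj)) - r2 * (wj - abs(xj)))
    return candidate


def sphere(x):
    """Toy objective (assumed for illustration): sum of squares."""
    return sum(v * v for v in x)


rng = random.Random(0)
population = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(6)]
best = min(population, key=sphere)
worst = max(population, key=sphere)

trial = jaya_update(population[0], best, worst, rng)
# Greedy acceptance: keep the candidate only if it improves the fitness.
kept = trial if sphere(trial) < sphere(population[0]) else population[0]
```

Because the only inputs are the current best and worst solutions, the update needs no algorithm-specific control parameters, which is the property the text emphasizes.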

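Although the JAYA algorithm is intuitively simple, it relies on a single learning strategy and does not fully utilize the information from the entire population. If the best particle becomes trapped in a local optimum, other particles may also be drawn into this local optimum, thereby reducing population diversity and hindering the ability to escape from local optima. To address this limitation, the Comprehensive Learning JAYA Algorithm (CLJAYA) introduces three distinct learning strategies to enhance particle diversity and improve the algorithm’s search efficiency. The update rule for CLJAYA [33] is shown in (2) and (3):

$$x_{i,j}^{\prime} = \begin{cases} x_{i,j} + \varphi_{1}\left(x_{best,j} - \left|x_{i,j}\right|\right) - \varphi_{2}\left(x_{worst,j} - \left|x_{i,j}\right|\right), & p_{switch} \le 1/3 \\ x_{i,j} + \varphi_{3}\left(x_{best,j} - \left|x_{i,j}\right|\right) - \varphi_{4}\left(M_{j} - \left|x_{i,j}\right|\right), & 1/3 < p_{switch} \le 2/3 \\ x_{i,j} + \varphi_{5}\left(x_{best,j} - x_{i,j}\right) + \varphi_{6}\left(x_{p,j} - x_{q,j}\right), & \text{otherwise} \end{cases} \tag{2}$$

and

$$M = \frac{1}{N}\sum_{i=1}^{N} x_{i} \tag{3}$$

In these equations, $\varphi_{1}$ and $\varphi_{2}$ follow the normal distribution, and $\varphi_{3}$, $\varphi_{4}$, $\varphi_{5}$, and $\varphi_{6}$ follow the uniform distribution on $[0, 1]$. $x_{best,j}$ represents the value of the j-th variable in the current best individual, and $x_{worst,j}$ denotes the value of the j-th variable in the worst individual. M is the mean position of the current population, p and q are random integers with $p \ne q \ne i$, and $p_{switch}$ is a random variable that follows a uniform distribution on $[0, 1]$.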
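A minimal sketch of the three-strategy update may help make the roles of the best solution, the worst solution, the population mean M, and the two random partners p and q concrete. The switching thresholds of 1/3 and 2/3, the function name `cljaya_update`, and the toy data are assumptions made for illustration; the sketch follows the description above (normal weights in the first strategy, uniform weights in the other two) rather than any reference implementation.

```python
import random


def cljaya_update(i, pop, best, worst, rng):
    """Return a candidate for pop[i] using one of three learning strategies."""
    n, dim = len(pop), len(pop[i])
    # Mean position M of the current population, one value per variable.
    mean = [sum(ind[j] for ind in pop) / n for j in range(dim)]
    # Two distinct partners p and q, both different from i (strategy 3).
    p, q = rng.sample([k for k in range(n) if k != i], 2)
    p_switch = rng.random()  # selects the strategy for this particle
    x = pop[i]
    out = []
    for j in range(dim):
        if p_switch <= 1 / 3:
            # Strategy 1: best/worst guidance with normal weights.
            a, b = rng.gauss(0, 1), rng.gauss(0, 1)
            v = x[j] + a * (best[j] - abs(x[j])) - b * (worst[j] - abs(x[j]))
        elif p_switch <= 2 / 3:
            # Strategy 2: best/mean guidance with uniform weights.
            a, b = rng.random(), rng.random()
            v = x[j] + a * (best[j] - abs(x[j])) - b * (mean[j] - abs(x[j]))
        else:
            # Strategy 3: best guidance plus a random difference vector.
            a, b = rng.random(), rng.random()
            v = x[j] + a * (best[j] - x[j]) + b * (pop[p][j] - pop[q][j])
        out.append(v)
    return out


rng = random.Random(42)
pop = [[rng.uniform(-5, 5) for _ in range(2)] for _ in range(5)]
cost = lambda x: sum(v * v for v in x)  # toy objective, assumed
best, worst = min(pop, key=cost), max(pop, key=cost)
candidate = cljaya_update(0, pop, best, worst, rng)
```

Strategies 2 and 3 draw on the whole population (through the mean and the random partners), which is how the comprehensive learning mechanism avoids relying on the best and worst individuals alone.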

2.2. Lévy Flight

Lévy Flight is a non-Gaussian random walk characterized by frequent small steps and occasional long jumps [34,35]. In the early stages of the search process, large step sizes allow the population to broadly explore the solution space, thereby improving the likelihood of escaping local optima and reducing the risk of premature convergence. In contrast, in the later stages, smaller steps facilitate refined exploitation near promising regions, leading to more effective convergence toward the global optimum. As a result, Lévy Flight has been widely integrated into metaheuristic algorithms [36,37,38].

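The probability density function (PDF) of the Lévy distribution can be expressed as

$$L(s) \sim |s|^{-1-\beta}, \quad 0 < \beta \le 2$$

where s denotes the step length, and $\beta$ is the stability parameter controlling the tail heaviness of the distribution. Smaller values of $\beta$ favor long jumps, while larger values result in more local steps.

The step length s is typically represented as

$$s = \frac{u}{|v|^{1/\beta}}$$

where u and v are random variables sampled from normal distributions, $u \sim N(0, \sigma_{u}^{2})$ and $v \sim N(0, 1)$. The scaling parameter $\sigma_{u}$ ensures the Lévy distribution satisfies its stability condition and is defined as

$$\sigma_{u} = \left\{ \frac{\Gamma(1+\beta)\,\sin(\pi\beta/2)}{\Gamma\left(\frac{1+\beta}{2}\right)\beta\,2^{(\beta-1)/2}} \right\}^{1/\beta}$$

where $\Gamma(\cdot)$ represents the Gamma function.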

This formulation not only guarantees the stability of the Lévy distribution but also provides an effective balance between exploration and exploitation: large jumps enhance global exploration, while small steps strengthen local exploitation, thereby improving the overall optimization performance of the algorithm.
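The step generation described in this subsection can be sketched with Mantegna's method, which is the usual way such steps are sampled. The function names `levy_sigma` and `levy_step`, the choice of a unit-variance denominator variable, and the value 1.5 for the exponent are assumptions for illustration.

```python
import math
import random


def levy_sigma(beta):
    """Scale of the numerator normal variable in Mantegna's method."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta, rng):
    """Draw one heavy-tailed step s = u / |v|^(1/beta)."""
    u = rng.gauss(0, levy_sigma(beta))
    v = rng.gauss(0, 1) or 1e-12  # guard against an exact zero
    return u / abs(v) ** (1 / beta)


rng = random.Random(0)
steps = [abs(levy_step(1.5, rng)) for _ in range(10000)]
steps.sort()
median_step = steps[len(steps) // 2]  # typical (small) step
largest_step = steps[-1]              # rare long jump
```

Comparing `median_step` with `largest_step` shows the behavior the text relies on: most steps are small, while a few are orders of magnitude larger.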

3. The Proposed CLJAYA-LF

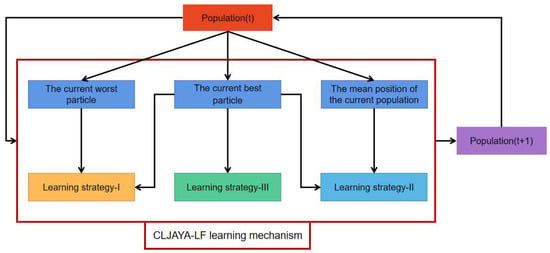

This section presents the proposed CLJAYA-LF algorithm in detail. The CLJAYA-LF framework is shown in Figure 1. The population update in CLJAYA-LF is accomplished through the comprehensive learning mechanism, which incorporates three distinct learning strategies. The Comprehensive Learning JAYA Algorithm (CLJAYA) offers several notable advantages. First, by integrating multiple learning strategies, CLJAYA enhances its global search capability and mitigates the risk of being trapped in local optima. Second, it requires no algorithm-specific parameter tuning, which simplifies both use and debugging.

Figure 1. CLJAYA-LF framework diagram.

Furthermore, CLJAYA ingeniously fuses the traditional JAYA strategy with the mean solution strategy and the best-solution-guided strategy. This synergy enables the algorithm to dynamically adjust search directions and step sizes, thereby accelerating convergence and improving accuracy.

In CLJAYA’s Strategy 1, the particle update is formulated as

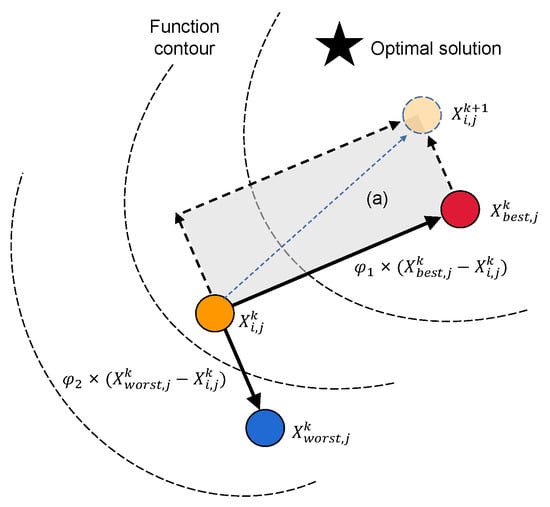

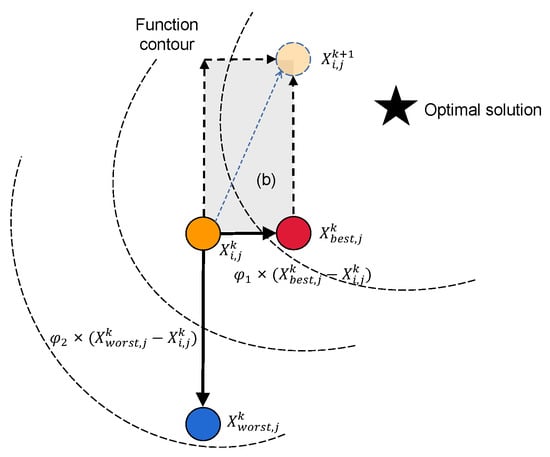

where are uniformly distributed random variables. Due to the limited range of and , the particle step size is constrained, especially when a particle is close to the best or worst positions, potentially causing the updated position to fall within the shaded regions shown in Figure 2 and Figure 3. Specifically,

Figure 2.

Particle update strategy 1 near worst particle.

Figure 3.

Particle update strategy 1 near best particle.

- When a particle is near the worst point , since , the update step is mainly determined by . If is small, the particle cannot effectively move away from the worst point, limiting global exploration.

- When a particle is near the best point , since , the update step is primarily governed by . If is small, the particle struggles to escape the local optimum, and its updated position may remain confined within a local region.

Moreover, the absolute value operation further restricts the flexibility of the update direction, causing the particle positions to be constrained within a certain region and thus forming the shaded areas.

To overcome this limitation, the Lévy flight mechanism is incorporated into Strategy 1. By introducing random perturbations drawn from a Lévy distribution into the update step, particles gain enhanced jump capability, allowing them to escape local optima while still performing small-scale local searches. Theoretically, the heavy-tailed property of Lévy flights enables particles to balance global exploration and local exploitation, thereby improving the overall algorithm performance. The updated formula is expressed as

where the additional term represents a random number drawn from a Lévy distribution. It should be noted that, compared to the original CLJAYA algorithm, the only additional parameter required in the equation is the power-law exponent of the Lévy distribution. Although this change is relatively simple, the new distribution significantly enhances the overall performance of the solution during optimization. Particle update strategies 2 and 3 inherit the update strategy of the original CLJAYA algorithm, fully utilizing population information to update particle positions based on the average position M of population particles [39].

The CLJAYA-LF algorithm, summarized in Algorithm 1, proceeds by initializing the population, computing the fitness values, and then iteratively updating solutions using multiple strategies based on randomly selected individuals. After each update, the fitness is evaluated, and the population is revised if improvements are found. The process continues until convergence, and the best solution is returned. In the CLJAYA-LF algorithm, several key parameters and variables are used to control the optimization process. The population size N determines the number of particles, while the maximum number of iterations controls when the algorithm terminates. The problem dimension specifies the number of decision variables for each particle, and the lower and upper bounds of each variable constrain the search space.

Algorithm 1. The CLJAYA-LF algorithm.

During the iterative update process, the updated particle positions obtained with the selected strategy are stored as candidate solutions. In Strategy 3, two randomly selected particle positions introduce diversity into the population. Uniform random numbers control the step size and direction in Strategies 2 and 3, while a uniformly distributed switch variable determines which strategy is applied for each particle. After each update, the fitness value of the new particle is computed, and the best fitness value found in each iteration is recorded for monitoring convergence.
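The overall loop (initialization, strategy selection, bound handling, greedy replacement, and convergence logging) can be sketched as follows. This is a sketch under stated assumptions, not the authors' implementation: in particular, it assumes that the Lévy perturbation enters Strategy 1 by replacing its two random weights with Lévy-distributed steps, that the three strategies are chosen with equal probability, and that out-of-range variables are clipped to the bounds. All names (`cljaya_lf`, `levy_step`, `history`) are hypothetical.

```python
import math
import random


def levy_step(beta, rng):
    """Heavy-tailed step via Mantegna's method (assumed sampler)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma = (num / den) ** (1 / beta)
    v = rng.gauss(0, 1) or 1e-12  # guard against an exact zero
    return rng.gauss(0, sigma) / abs(v) ** (1 / beta)


def cljaya_lf(f, dim, lb, ub, n=20, max_iter=200, beta=1.5, seed=0):
    """Minimize f over [lb, ub]^dim; return (best_x, best_f, history)."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    fit = [f(x) for x in pop]
    history = []  # best fitness recorded after every iteration
    for _ in range(max_iter):
        best = pop[fit.index(min(fit))][:]
        worst = pop[fit.index(max(fit))][:]
        mean = [sum(x[j] for x in pop) / n for j in range(dim)]
        for i in range(n):
            x = pop[i]
            p, q = rng.sample([k for k in range(n) if k != i], 2)
            p_switch = rng.random()
            new = []
            for j in range(dim):
                if p_switch <= 1 / 3:
                    # ASSUMPTION: Levy steps replace the Strategy 1 weights.
                    a, b = levy_step(beta, rng), levy_step(beta, rng)
                    v = x[j] + a * (best[j] - abs(x[j])) - b * (worst[j] - abs(x[j]))
                elif p_switch <= 2 / 3:
                    # Strategy 2: guidance by the best and the mean position.
                    a, b = rng.random(), rng.random()
                    v = x[j] + a * (best[j] - abs(x[j])) - b * (mean[j] - abs(x[j]))
                else:
                    # Strategy 3: best guidance plus a random difference.
                    a, b = rng.random(), rng.random()
                    v = x[j] + a * (best[j] - x[j]) + b * (pop[p][j] - pop[q][j])
                new.append(min(max(v, lb), ub))  # clip to the bounds
            f_new = f(new)
            if f_new < fit[i]:  # greedy replacement
                pop[i], fit[i] = new, f_new
        history.append(min(fit))
    k = fit.index(min(fit))
    return pop[k], fit[k], history


best_x, best_f, history = cljaya_lf(
    lambda x: sum(v * v for v in x), dim=5, lb=-10.0, ub=10.0, max_iter=50, seed=1
)
```

Because a particle is replaced only when its candidate is better, the recorded `history` never increases, which makes it suitable for plotting convergence curves.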

4. Applications of CLJAYA-LF on Numerical Optimization

To evaluate the performance of the CLJAYA-LF algorithm, this section presents a comprehensive evaluation using the CEC2017 benchmark suite [40]. The CEC2017 test set comprises optimization problems derived from real-world scenarios, mathematical models, and artificially constructed benchmark challenges, offering significant complexity and diversity. It consists of 29 functions, categorized into four types: unimodal functions (F1 and F3), which assess algorithm performance in simple scenarios with a single global optimum; simple multimodal functions (F4–F10), which evaluate the algorithm’s ability to escape local optima and identify the global optimum; hybrid functions (F11–F20), in which the decision variables are divided into groups that are each evaluated by a different basic function; and composition functions (F21–F30), which blend several basic functions into a single landscape with many interacting local optima. These diverse function types collectively enable a comprehensive evaluation of the algorithm’s performance under varied conditions, making the CEC2017 benchmark well suited for assessing the effectiveness of the CLJAYA-LF algorithm.

Notably, to ensure the fairness and credibility of the performance evaluation, all parameter settings in this study adhere strictly to the standards specified in the relevant references. Specifically, the parameter configurations of all comparative algorithms follow the settings documented in the corresponding reference literature to maintain consistency with the original validation conditions. Meanwhile, the test parameters for the CEC2017 benchmark suite and the experimental parameters for various engineering problems are all directly adopted from the verified and mature configurations in the relevant references, without any arbitrary adjustments. This practice not only guarantees a high degree of consistency between the experimental conditions of this study and the literature benchmarks, eliminating interference from parameter deviations on the evaluation results, but also establishes a unified and comparable experimental framework for the performance comparison between the CLJAYA-LF algorithm and other comparative algorithms, laying a solid foundation for the objectivity and rigor of the subsequent evaluation conclusions.

Performance Comparison of Multiple Algorithms on CEC2017 Test Suite

To compare the proposed method with well-established algorithms of the same kind, we selected several widely used optimization algorithms: the PSO algorithm [13], HHO algorithm [18], DMO algorithm [19], SABO algorithm [29], JAYA algorithm [30], JAYA-LF algorithm [32], and CLJAYA algorithm [33]. To ensure a fair comparison on the 50-dimensional optimization problems of CEC2017, the population size and the maximum number of function evaluations for all algorithms were set to 100 and 1000, respectively. Each algorithm was independently run 100 times on each test function, and the mean absolute error (Mean) and standard deviation (STD) were recorded for each test function.
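The Mean and STD statistics described above can be computed as in the following sketch. The helper name `summarize_runs`, the use of the sample standard deviation, and the stand-in optimizer are assumptions for illustration; a real experiment would call the actual algorithm once per independent run.

```python
import statistics


def summarize_runs(optimizer, f_star, runs):
    """Mean absolute error and standard deviation over independent runs.

    optimizer(seed) is assumed to return the best objective value found
    in one run; f_star is the known optimum of the test function.
    """
    errors = [abs(optimizer(seed) - f_star) for seed in range(runs)]
    # ASSUMPTION: sample standard deviation (n - 1 in the denominator).
    return statistics.mean(errors), statistics.stdev(errors)


# Stand-in optimizer (hypothetical): pretends run k ends at 100 + k.
mean_err, std_err = summarize_runs(lambda seed: 100 + seed, f_star=100, runs=3)
```

Using a different seed for each run keeps the runs independent while making the whole experiment reproducible.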

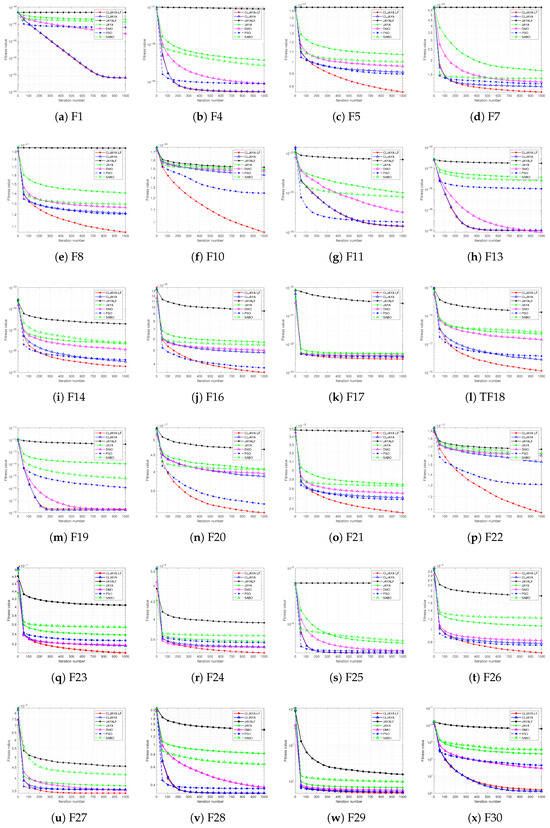

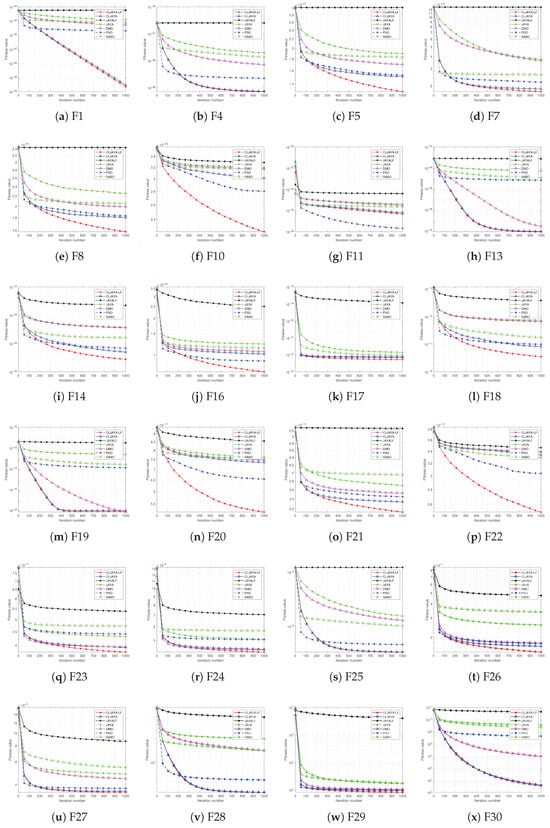

In Figure 4, it can be seen that in the optimization of unimodal functions (F1, F3), the proposed CLJAYA-LF reaches lower fitness values than the existing JAYA, JAYA-LF, and CLJAYA on F1, demonstrating the best performance. In the optimization of simple multimodal functions (F4–F10), CLJAYA-LF performs significantly better than JAYA, JAYA-LF, and CLJAYA on F4, F5, F7, F8, and F10. In the optimization of hybrid functions (F11–F20), CLJAYA-LF performs significantly better than JAYA, JAYA-LF, CLJAYA, and other algorithms on F11, F14, F16–F18, and F20. In the optimization of composition functions (F21–F30), CLJAYA-LF significantly outperforms JAYA, JAYA-LF, CLJAYA, and other algorithms on F21–F29. Its performance is slightly inferior to CLJAYA on F30. Therefore, CLJAYA-LF demonstrates robust and superior optimization capabilities, making it an effective algorithm for solving complex optimization problems.

Figure 4. Several convergence curves obtained using CLJAYA-LF, JAYA, CLJAYA, and other algorithms on 50-dimensional problems of the CEC2017 test suite. Panels are labeled as (a) F1, (b) F4, (c) F5, (d) F7, (e) F8, (f) F10, (g) F11, (h) F13, (i) F14, (j) F16, (k) F17, (l) F18, (m) F19, (n) F20, (o) F21, (p) F22, (q) F23, (r) F24, (s) F25, (t) F26, (u) F27, (v) F28, (w) F29, (x) F30.

Table 1 allows the variance of CLJAYA-LF to be examined on the 22 functions where it performs better. On 15 of these 22 functions, CLJAYA-LF exhibits variance comparable to that of the other methods, indicating that the algorithm not only achieves superior optimization performance but also maintains stability similar to that of existing methods, with relatively balanced variance control.

Table 1. CEC2017 50D: algorithmic performance comparison.

We conducted a comparative performance analysis of the proposed CLJAYA-LF algorithm on the 100-dimensional optimization problems from CEC2017. For both CLJAYA-LF and the other algorithms, the population size was set to 150, and the maximum number of function evaluations was set to 1000. Each algorithm was independently executed 100 times on each test function. The relevant results are presented in Figure 5 and Table 2.

Figure 5. Several convergence curves obtained using CLJAYA-LF, JAYA, CLJAYA, and other algorithms on 100-dimensional problems of the CEC2017 test suite. Panels are labeled as (a) F1, (b) F4, (c) F5, (d) F7, (e) F8, (f) F10, (g) F11, (h) F13, (i) F14, (j) F16, (k) F17, (l) F18, (m) F19, (n) F20, (o) F21, (p) F22, (q) F23, (r) F24, (s) F25, (t) F26, (u) F27, (v) F28, (w) F29, (x) F30.

Table 2. CEC2017 100D: algorithmic performance comparison.

In Figure 5, it can be observed that the proposed CLJAYA-LF algorithm reaches lower fitness values than the existing algorithms, achieving the best performance on F1, F4, F5, F7, F8, F10, F13, F14, F16, F17, F18, F20, and F21–F29. Although its performance is slightly inferior to CLJAYA on F30, its performance at 100 dimensions improves significantly relative to the results at 50 dimensions.

From the analysis of Table 2, the following conclusions can be drawn: CLJAYA-LF performs better on 24 functions, and its variance is smaller than that of the existing methods on 17 of these functions. This indicates that the algorithm not only achieves superior optimization performance but also maintains stability, with relatively balanced variance control. Compared to the 50-dimensional problems, the proposed algorithm shows better performance in the 100-dimensional case, suggesting that the method is well suited to high-dimensional optimization problems and outperforms existing algorithms on them.

5. Applications of CLJAYA-LF on Engineering Optimization

5.1. Step-Cone Pulley Problem

The main objective of this optimization problem is to minimize the weight of a four-step cone pulley by adjusting five design variables [41]. These variables include the diameters of the four steps of the pulley and the width of the pulley. During the optimization process, 11 non-linear constraints must be met to ensure that the pulley design meets practical performance requirements. One key constraint is that the output power of the pulley system must be at least 0.75 horsepower (hp). These nonlinear constraints involve limitations on the pulley’s dimensions, material strength requirements, transmission ratio conditions, compatibility with other components, and overall system efficiency. These constraints ensure that the designed pulley is not only lightweight but also has excellent mechanical performance and durability, meeting the demands of real-world engineering applications.

The experimental results are shown in Table 3. The mean value obtained by the proposed algorithm is $1.69 \times 10^{1}$, and the variance is $3.32 \times 10^{-1}$. These values are significantly smaller than the corresponding values of all other comparative algorithms, which demonstrates that the proposed algorithm has better stability and reliability and indicates a stronger global search ability. The worst value reflects the algorithm’s risk resistance under adverse conditions, and the median reflects the algorithm’s anti-interference performance, that is, its adaptability. The worst value of the proposed algorithm is $1.78 \times 10^{1}$, which is slightly lower than that of the CLJAYA algorithm. The median is $1.69 \times 10^{1}$, which is equal to the median of the CLJAYA algorithm, and both are significantly lower than those of the remaining algorithms. This indicates that the proposed algorithm is superior to existing algorithms in terms of adaptability. The best value can be used to measure the potential of the algorithm. The best value of the proposed algorithm is slightly higher than that of the CLJAYA and DMO algorithms. However, the DMO algorithm has poor stability, as its STD is high. Therefore, the proposed algorithm has potential similar to that of the CLJAYA algorithm.

Table 3. Results of different algorithms for step-cone pulley problem.

5.2. Gear Train Design Problem

The gear system design problem is a classic example in the field of structural optimization. The objective is to determine the optimal number of teeth for each gear through proper parameter design, achieving performance optimization while meeting the design requirements. This problem involves four integer decision variables, each representing the number of teeth in a gear. The mathematical formulation of this problem is presented in [42].
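For orientation, the objective of this problem can be sketched in a few lines. The sketch assumes the classic formulation that is common in the literature (squared deviation of the gear ratio from the target 1/6.931, with four tooth counts typically bounded between 12 and 60); it is not copied from [42], and the function name `gear_train_error` and the ordering of the variables are illustrative assumptions.

```python
def gear_train_error(teeth):
    """Squared gear-ratio error for tooth counts (x1, x2, x3, x4).

    ASSUMPTION: classic form f = (1/6.931 - (x2 * x3) / (x1 * x4))^2.
    """
    x1, x2, x3, x4 = teeth
    return (1 / 6.931 - (x2 * x3) / (x1 * x4)) ** 2


# A frequently cited integer design for this formulation.
design = (49, 16, 19, 43)
error = gear_train_error(design)
```

Because the tooth counts are integers, an optimizer working in a continuous space typically rounds each variable before evaluating this objective.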

As shown in Table 4, the mean of the proposed algorithm is $1.44 \times 10^{-15}$, and the STD is $3.14 \times 10^{-15}$. Compared with algorithms such as JAYA, JAYA-LF, CLJAYA, DMO, SABO, and PSO, the mean and variance of the proposed algorithm are significantly smaller. However, there is still a certain gap compared to the HHO algorithm. This demonstrates that, compared to the existing JAYA and its improved variants, the proposed algorithm performs well in terms of stability, reliability, and global search ability, although its overall performance is currently inferior to that of the HHO algorithm.

Table 4. Results of different algorithms for gear train design problem.

Among the evaluation indicators of algorithm performance, the worst value directly reflects the algorithm’s ability to resist risks under adverse conditions, while the median mainly reflects the algorithm’s anti-interference performance, which is directly related to its adaptability. The worst value of the proposed algorithm is $1.93 \times 10^{-14}$, and the median is $2.14 \times 10^{-16}$. Compared with algorithms such as JAYA, JAYA-LF, CLJAYA, DMO, SABO, and PSO, these two key indicators are significantly lower. However, compared to the HHO algorithm, there is still room for improvement. This indicates that, in terms of adaptability, the proposed algorithm has clear advantages among the existing algorithms and is only slightly inferior to the HHO algorithm.

The best value serves as a crucial criterion for assessing an algorithm’s potential. The comparison shows that the best value of the proposed algorithm is marginally inferior to that of the CLJAYA, PSO, and HHO algorithms. Given this outcome, the potential of the proposed algorithm should be explored more thoroughly by further strengthening its capacity to seek the optimal solution, thereby improving its overall performance.

5.3. Planetary Gear Train Design Problem

This problem aims to minimize the maximum error in the gear transmission ratio through optimization design. To achieve this, the total number of teeth for each gear in the automatic planetary transmission system must first be determined. The maximum transmission ratio error refers to the deviation between the actual and ideal transmission ratios. By minimizing this error, the transmission efficiency of the system can be improved, ensuring smooth vehicle operation under different working conditions [43].

As shown in Table 5, CLJAYA-LF exhibits high stability and reliability on this problem. Its best value is $5.26 \times 10^{-1}$, which indicates performance similar to that of algorithms such as CLJAYA and JAYA in terms of finding the optimal solution. Its standard deviation of $4.98 \times 10^{-2}$ is low relative to CLJAYA and JAYA, meaning that it has lower variability and higher consistency across multiple runs and is more effective in handling random factors. The mean value of $5.35 \times 10^{-1}$ is lower than that of CLJAYA, showing strong overall performance. In addition, the worst-case value of $8.90 \times 10^{-1}$ is better than those of JAYA-LF and JAYA, highlighting its robustness under adverse conditions. In conclusion, CLJAYA-LF performs strongly and is suitable for applications that require stability and reliability.

These observations are in line with the step-cone pulley results in Section 5.1. There, the best value of CLJAYA-LF ($1.62 \times 10^{1}$) is slightly worse than CLJAYA’s best value ($1.61 \times 10^{1}$), and the standard deviation ($3.32 \times 10^{-1}$) suggests that CLJAYA-LF exhibits small fluctuations across multiple runs, offering high stability. These two indicators indicate comparable optimization capabilities. The mean value ($1.69 \times 10^{1}$), which is close to the best value, confirms its stable and reliable optimization results. The median value ($1.70 \times 10^{1}$) shows that most of the results are close to the optimal solution. Even in the worst case ($1.78 \times 10^{1}$), CLJAYA-LF still maintains a high solution quality compared to other methods, making it a stable and efficient optimization algorithm suitable for complex problems.

Table 5. Results of different algorithms for planetary gear train design problem.

6. Conclusions

In this study, the CLJAYA-LF algorithm was proposed, and its performance was rigorously verified through numerous experiments. By incorporating the Lévy Flight method, the CLJAYA-LF algorithm enhances its global search capabilities while maintaining favorable local search performance, effectively resolving the issue of traditional algorithms being prone to being trapped in local optima. The experimental results indicate that the CLJAYA-LF algorithm demonstrates outstanding performance in terms of stability, efficiency, and global optimization ability. It can achieve excellent results in various engineering problems, especially showing significant advantages when dealing with complex non-linear problems. The low standard deviation obtained from multiple runs reflects its high stability, and the characteristic of rapidly converging to better solutions makes it suitable for scenarios with high requirements for real-time performance and efficiency. In conclusion, the CLJAYA-LF algorithm breaks through traditional limitations, improving optimization accuracy and efficiency, thus possessing both theoretical value and broad application prospects.

Although CLJAYA-LF improves on the original JAYA algorithm and its existing variants, its local exploitation ability still lags behind that of algorithms such as Harris hawks optimization (HHO). In future work, we plan to introduce techniques such as reinforcement learning to adjust the update strategy dynamically, with the aim of strengthening local exploitation and further improving overall performance.

Author Contributions

Writing—original draft preparation, X.S.; writing—review and editing, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Guangxi Science and Technology Major Program under GuikeAA24263033 and GuikeAA24263013.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).