Abstract

Web3.0 aims to foster an environment built on user trust and content verifiability. However, the proliferation of fake news undermines this trust and disrupts social ecosystems, making the effective alignment of visual-textual semantics and accurate content verification a pivotal challenge. Existing methods still struggle with deep cross-modal interaction and the adaptive calibration of cross-modal discrepancies. To address this, we introduce the Bidirectional Semantic Enhancement and Adversarial Network (BSEAN). BSEAN first extracts features using large pre-trained models: a hybrid encoder for text and the Swin Transformer for images. It then employs a Bidirectional Modality Mapping Network, governed by cycle consistency, to achieve preliminary semantic alignment. Building on this, a Semantic Enhancement and Calibration Network explores inter-modal dependencies and quantifies semantic deviations to enhance discriminative capability. Finally, a Dual Adversarial Learning framework bolsters event generalization and representation consistency through adversarial training with event and modality discriminators. Experiments on the public Weibo and Twitter datasets show that BSEAN outperforms the compared methods across all reported metrics, demonstrating its efficacy in handling deep cross-modal interaction and dynamic modality calibration within Web3.0 social networks.

1. Introduction

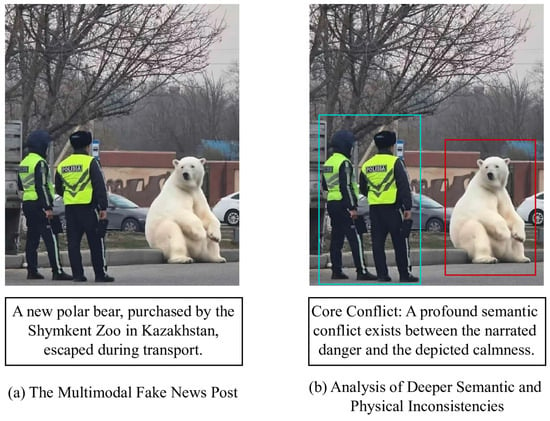

The proliferation of social media since the advent of the Web 2.0 era has substantially facilitated information dissemination, transforming users from passive consumers into active content creators and distributors []. Social media platforms such as Weibo and Twitter, characterized by their immediacy, interactivity, and diverse content modalities (including text, images, and videos), have significantly expanded the scope and influence of information propagation. This convenience, however, has also enabled the rapid creation and widespread circulation of fake news. A sophisticated example is presented in Figure 1, which shows a social media post claiming a polar bear escaped during transport in Kazakhstan. As analyzed in Figure 1b, a stark semantic conflict exists between the textual narrative, which implies danger and urgency (“escaped”), and the visual scene, which depicts unnatural calmness. The polar bear’s relaxed, human-like posture and the police officers’ non-urgent, observational stance contradict the expected behavior of both the animal and the authorities in such a crisis. An effective detection system must possess a deeper reasoning capability to assess behavioral plausibility, situational context, and potential physical inconsistencies, which is the central challenge this paper aims to address.

Figure 1. Example of fake news.

Fake news [] is formally defined as deliberately fabricated information intended to achieve specific objectives—such as generating web traffic, manipulating public opinion, or securing financial gain—and whose falsity can be verified by reliable external sources [,]. This type of information is often highly deceptive, propagates at an accelerated rate, and can affect a large user base within a short period []. In the Web3.0 era, the internet is evolving from an “Internet of Information” to an “Internet of Value.” This paradigm establishes an underlying infrastructure for trust and value transfer through blockchain technology. Its core principles are leveraged to enhance cybersecurity and data integrity, forming a foundational layer of trust for the new web [], while cryptocurrencies and token economies facilitate the direct and efficient circulation of value, enabling direct incentives for producers (users). However, the rapid proliferation of generative AI within this new landscape has significantly lowered the barrier to entry for fabricating sophisticated fake videos, images, and text. Technologies such as deepfakes are capable of fundamentally disrupting human perception. In recent years, negative incidents stemming from fake news have become increasingly prevalent, both domestically and internationally. Such misinformation not only compromises the content security of social networks and erodes the bedrock of social trust but also undermines the credibility of governments and related industries, and can even foment social discord and disrupt critical socio-political processes [,,]. A notable case in 2024 saw a Hong Kong-based company incur a fraudulent loss of HKD 200 million due to a deepfake video, highlighting the severe threat posed to personal and corporate financial security. 
Consequently, in the Web3.0 era, the development of more accurate multimodal fake news detection techniques is of paramount practical importance for sanitizing the online environment, bolstering social trust, and preserving the integrity of the information ecosystem, which requires not only verifying on-chain provenance but also scrutinizing the semantic consistency of the content itself.

Current research on fake news detection is predominantly centered on content-based approaches. These methods aim to extract discriminative features from the textual and visual elements of a news item to assess its authenticity. The quality and representativeness of these features are, thus, decisive factors in the final detection performance. Early research efforts were largely focused on the analysis of unimodal information. For textual content, deep learning models, particularly Recurrent Neural Networks (RNNs) and their variants, have been widely applied [,]. These models typically convert text into vector representations using pretrained word embeddings and then employ RNNs to capture contextual dependencies and deep semantic information within the text sequences for classification. In this context, large pretrained language models (PLMs) like BERT often function as powerful text feature extractors [,]. A common practice is to utilize the output of BERT’s final layer as the definitive textual representation. While straightforward, this approach may not fully exploit the hierarchical semantic information encoded in the model’s intermediate layers, which can be essential for a comprehensive understanding of complex or intentionally misleading narratives.

For visual content in news, unimodal detection methods centered on Convolutional Neural Networks (CNNs) have also been developed [,]. These approaches typically leverage CNN architectures (e.g., VGG) pretrained on large-scale image datasets to extract visual features [], which are subsequently employed to ascertain image authenticity or its relevance to a specific event. Notably, the explicit features extracted by pretrained models, whether text- or image-based, can be influenced to some extent by the training data distribution. Specific types of fake news, such as those concerning sensitive domains or exhibiting particular emotional biases [,], may present certain distributional characteristics within datasets. If a model overrelies on these superficial, distribution-related features, its generalization capabilities when encountering novel, differently distributed data may be compromised.

Although unimodal methods have provided valuable insights and technical approaches for fake news detection within their respective domains, the evolution of information dissemination formats has seen purveyors of fake news increasingly employ multimodal strategies, such as combining text and images, to enhance deceptiveness and virality. They may exploit subtle inconsistencies between text and images, misleading cues from visual elements, or ambiguities arising from their combination to achieve deceptive ends. In such scenarios, relying solely on the analysis of a single modality struggles to comprehensively capture this cross-modal complexity and potential deceptiveness, thereby exposing the inherent limitations of unimodal detection methods.

To overcome the deficiencies of unimodal approaches, multimodal fake news detection has emerged, aiming to enhance detection accuracy and robustness by comprehensively analyzing multiple information sources, such as text and images. Although multimodal methods demonstrate superior potential compared to their unimodal counterparts, existing research still confronts several key challenges:

- Insufficient Cross-Modal Semantic Alignment and Deep Interaction: When processing features from different modalities [,], many multimodal models involve a fusion operation. However, simplistic concatenation or shallow interaction mechanisms are often insufficient to bridge the semantic gap between text and images and fail to adequately explore deep cross-modal semantic correlations. Even with the introduction of attention mechanisms [,], the efficacy of interaction remains constrained without effective initial alignment of features within a shared semantic space.

- Lack of Calibration Capability for Cross-Modal Semantic Discrepancies: In real-world multimodal news, varying degrees of semantic discrepancy, or even outright contradiction, can exist between text and images. Most current models lack a mechanism to dynamically assess and calibrate these discrepancies. Consequently, they cannot adaptively adjust the contribution of each modality based on the degree of visual-textual consistency, rendering them susceptible to interference from inconsistent or misleading information.

- Need for Enhanced Robustness and Generalization in Feature Representations: Fake news encompasses diverse event themes and manipulation tactics. If a model overfits to specific patterns prevalent in the training data, its generalization ability will be limited. This restricts its adaptability to varying event contexts and differences in modal information quality, and it hinders the learning of stable, discriminative features for identifying fake news.

To address the aforementioned challenges, this paper introduces the Bidirectional Semantic Enhancement and Adversarial Network (BSEAN), a novel model for multimodal fake news detection. BSEAN is designed to systematically enhance the model’s joint understanding of visual-textual information and its capability to identify inconsistencies through a multistage process. In the feature extraction stage, BSEAN employs the BERT pre-trained model to extract multi-level semantic features from text and integrates Text-CNN to enhance local short-text information. For the visual modality, the Swin Transformer pre-trained model is utilized to extract regional features from images.

The effective verification of consistency between textual and visual information is crucial in discerning multimodal fake news. To emulate the human cognitive process of cross-modal verification, which involves comparing content and identifying discrepancies, BSEAN operates as follows. First, the Bidirectional Modality Mapping Network establishes a preliminary alignment between textual and visual features in a shared semantic space via bidirectional mapping, aiming to capture richer cross-modal semantic correlations. Subsequently, the Semantic Enhancement and Calibration Network is employed. This network first deepens the model’s understanding through semantic interaction, then quantifies the deviation between modalities and dynamically recalibrates their contributions to mitigate potential conflicts. Furthermore, an adversarial learning strategy integrated within the Dual Adversarial Learning framework enhances the model’s adaptability across diverse event scenarios.

The main contributions of this paper can be summarized as follows:

- We designed a bidirectional modality mapping method that, by promoting cross-modal feature transformation, aims to achieve effective alignment of textual and visual features within a shared semantic space, providing a high-quality semantic foundation for subsequent interaction;

- We designed a unified Semantic Enhancement and Calibration Network (SECN). This network explores cross-modal relationships in depth through a semantic interaction mechanism and, via a deviation calibration strategy, dynamically adjusts modal contributions based on perceived semantic differences. Together, these mechanisms enable a refined understanding of cross-modal information and robust adaptation to potential semantic conflicts, thereby enhancing the model’s ability to discern semantic inconsistencies;

- Through extensive comparative experiments and ablation studies against several state-of-the-art benchmark models on public Weibo and Twitter multimodal fake news datasets, the proposed BSEAN model demonstrates significant performance improvements across key evaluation metrics, validating its effectiveness in the task of multimodal fake news detection.

2. Related Work

This section systematically reviews the relevant research progress in the field of fake news detection, primarily delineating advancements from two perspectives: unimodal information processing and multimodal information analysis.

2.1. Unimodal Detection Methods

In the early era of the internet, news was predominantly presented in textual form; consequently, a substantial body of research focused on extracting effective cues from textual content to discern fake news. Prior to the application of deep learning techniques in this domain, researchers commonly relied on feature engineering methods []. These methods involved extracting statistical or linguistic features such as Term Frequency-Inverse Document Frequency (TF-IDF), N-grams, and sentiment lexicon scores [,], and subsequently employing traditional machine learning classifiers like Support Vector Machines (SVMs), Logistic Regression, and Decision Trees for veracity assessment. While these methods achieved a degree of success, the feature extraction process was time-consuming, labor-intensive, and heavily reliant on domain expertise, often failing to capture the deeper semantic information within texts.

The advent of deep learning precipitated a paradigm shift. Ma et al. [] pioneered the application of Recurrent Neural Networks (RNNs) and their variants, such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs), to the task of fake news detection. These models demonstrated the ability to automatically learn context-dependent semantic representations from text sequences, significantly enhancing detection performance. Recognizing that veracity assessment often necessitates background knowledge extending beyond the text itself, some studies began to explore effective ways to incorporate external knowledge into models. For instance, Dun et al. [] linked entities within news text to knowledge graphs and utilized attention mechanisms to fuse contextually relevant information associated with these entities, thereby augmenting the model’s comprehension capabilities. Xu et al. [] focused on the internal structure of evidence texts, employing graph neural networks to capture long-range dependencies between evidence snippets and, thus, identify and eliminate redundant or irrelevant evidential information. Liao et al. [] designed a multi-step evidence retrieval and enhancement framework that simulates human online fact-checking behaviors, iteratively retrieving and filtering relevant evidence to aid judgment. More recently, following the remarkable success of Large Language Models (LLMs), research has also begun to investigate leveraging their powerful text understanding and knowledge reasoning capabilities to improve the accuracy of fake news detection [].

Concurrently, given the increasingly significant role of images in the propagation of fake news—for instance, through tampering, splicing, or association with misleading text—image-based fake news detection methods have also garnered attention. Such approaches typically address the problem from two perspectives: first, detecting traces of manipulation within the image itself, and second, analyzing whether the semantic content of the image aligns with common sense or the accompanying textual descriptions. For example, Qi et al. [] proposed a method combining spatial domain features for semantic understanding with frequency domain features for detecting tampering traces. Other works directly utilize Convolutional Neural Networks (CNNs), such as VGG and ResNet, pre-trained on large-scale image datasets, to extract deep visual features for subsequent classification tasks []. However, relying solely on unimodal information—be it textual or visual—for fake news detection inherently faces limitations. Perpetrators of fake news often skillfully exploit the combination and manipulation of information across different modalities, making it difficult for single-modality analysis to reveal the inherent deceptiveness.

2.2. Multimodal Detection Methods

With the increasing prevalence of multimodal content such as images and text on social media, leveraging multimodal information for fake news detection has become both a mainstream research trend and a practical necessity. Compared to unimodal approaches, multimodal methods can integrate cues from diverse information sources and analyze news content from a more comprehensive perspective, giving them the potential to capture complementary information and inconsistencies across modalities more accurately.

Early multimodal methods typically employed relatively direct feature-level fusion strategies. Specifically, they would first independently extract features using unimodal feature extractors [,]—such as RNN/BERT for text and CNNs for images—and then merge these heterogeneous features into a unified representation through simple operations like feature concatenation, element-wise addition, or averaging, before finally feeding this representation into a classifier for judgment. For instance, Wang et al. [] used Text-CNN to extract textual features and, after concatenating them with image features, also introduced an event discrimination sub-task to learn shared feature representations across events. The work by Singhal et al. [] demonstrated that employing powerful pre-trained language models (e.g., BERT) as text encoders can significantly enhance detection performance due to their rich prior knowledge, even without relying on complex fusion mechanisms or auxiliary tasks. However, such simplistic “early fusion” or “late fusion” strategies often struggle to fully exploit the complex intrinsic dependencies and subtle semantic interactions between features from different modalities. This can lead to information redundancy or allow noise from one modality to adversely affect the overall judgment, thereby limiting further improvements in model performance.

To overcome the limitations of simple fusion strategies, researchers began to explore more sophisticated cross-modal interaction mechanisms, among which the attention mechanism has gained widespread favor due to its ability to dynamically assign weights to different information segments and capture long-range dependencies. The core idea is to allow information from one modality, such as keywords in text, to guide the model’s focus towards specific, relevant regions or aspects in another modality, like an image, thereby achieving selective enhancement and alignment of cross-modal information. For example, Jin et al. [] proposed an RNN model incorporating an attention mechanism, which, after fusing textual and social context features, utilized linear attention to enhance the representation of image features. Wu et al. [] drew inspiration from human reading habits to design a multimodal cross-attention network that simulates the process of humans alternately attending to text and images, learning cross-modal dependencies via a cross-attention mechanism. Qi et al. [] introduced an attention-based multimodal fusion network aimed at facilitating higher-level information exchange at both the lexical and visual object levels. These methods, through various forms of attention mechanisms, have indeed promoted cross-modal information interaction and fusion to a certain extent.

Simultaneously, considering that a common characteristic of fake news is the inconsistency or weak correlation between textual descriptions and accompanying visual content, many research efforts have framed the fake news detection problem as a task of measuring and judging visual-textual consistency. The core of such methods lies in assessing whether the information conveyed by the textual and visual modalities points to the same fact or event. For instance, Shang et al. [] constructed an object-aware visual encoder to extract salient object features from news images and utilized principles from Generative Adversarial Networks (GANs) to evaluate the consistency between visual and textual features, thereby determining if the image matched the textual content. Xue et al. [] judged consistency by calculating the similarity between overall semantic representations of images and text. Zhou et al. [] also identified potential inconsistencies by comparing the differences between latent features extracted from images and text. These approaches directly address cross-modal semantic contradictions or deviations and exhibit good discriminative power for certain types of fake news.

Building on attention mechanisms, the Transformer architecture has advanced multimodal fusion by capturing long-range dependencies and fine-grained cross-modal interactions via self-attention, which dynamically weights contextual information across modalities—critical for social media data with complex text–image–user interaction relationships. Recent work on attention-guided Transformers further enhances such capabilities: Selvam et al. [] leveraged a unified framework integrating Transformer-based NLP and graph neural networks to process multimodal features (textual semantics and social network structures) for fake account identification, demonstrating the effective alignment of heterogeneous information and improved discriminative power for deceptive patterns in social media. These models represent a shift from heuristic fusion to end-to-end hierarchical attention modeling, strengthening adaptability to complex fake content scenarios.

Despite the significant advancements in multimodal fake news detection, existing research still confronts several critical challenges in achieving ideal detection performance. Firstly, effective cross-modal semantic alignment and deep interaction remain a core technical bottleneck. Although many methods employ attention mechanisms, if features from different modalities are not effectively represented in a shared or well-aligned semantic space prior to interaction, the efficacy of subsequent interactions is often constrained, making it difficult to truly capture deep, fine-grained semantic correlations and subtle inconsistencies between modalities. Secondly, current models generally lack the capability for adaptive calibration of cross-modal semantic discrepancies. In real-world news data, the semantic relationship between text and images is complex and varied, potentially exhibiting partial consistency, complete inconsistency, or even deliberately fabricated contradictions. Most models struggle to dynamically and adaptively adjust the contribution weights of each modality in the final decision-making process based on the degree of visual-textual consistency in specific samples, rendering them susceptible to interference from noise or misleading information. Furthermore, the generalization ability of models and their robustness to specific event themes and modalities require further improvement to ensure stable detection performance when faced with diverse and evolving fake news.

While our approach leverages established components such as pre-trained encoders and cross-attention, its primary novelty lies in their synergistic integration into a multi-stage verification framework designed to emulate human-like reasoning. Unlike prior works that often rely on direct fusion or shallow interaction, BSEAN introduces a dedicated network to explicitly model and adaptively calibrate the semantic discrepancies between modalities. The specifics of this calibration mechanism, which builds upon and refines earlier alignment and gating concepts, will be detailed in the subsequent sections.

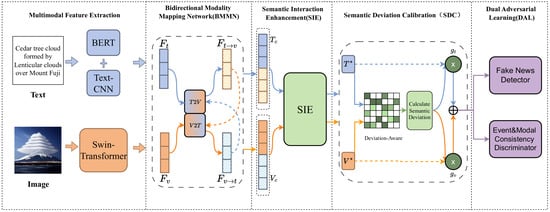

3. Methodology

To address the challenges delineated above, the BSEAN model proposed in this paper aims to construct a more comprehensive framework for multimodal fake news detection. Initially, the model leverages large-scale pre-trained models for multimodal feature extraction. Next, a Bidirectional Modality Mapping Network (BMMN) utilizes cycle-consistency constraints to achieve robust cross-modal feature alignment and mutual mapping, thereby establishing a foundational basis for subsequent deep interaction. Building upon this, we introduce the Semantic Enhancement and Calibration Network (SECN) to facilitate deep interaction and adaptive fusion. The SECN operates in two stages. First, the Semantic Interaction Enhancement (SIE) stage uses self-attention and bidirectional cross-attention mechanisms for deep visual-textual semantic interaction. Second, the Semantic Deviation Calibration (SDC) stage adaptively adjusts the contribution of each modality based on their semantic deviation, effectively handling potential inconsistencies. Finally, a Dual Adversarial Learning (DAL) framework is integrated to enhance the model’s event generalization capability and the consistency of modal representations, thus offering a more holistic and generalizable solution for multimodal fake news detection. The overall architecture of the proposed BSEAN model is illustrated in Figure 2. This section will provide a detailed description of the model from the following aspects.

Figure 2. Overall architecture of the proposed BSEAN model.

3.1. Feature Extraction

Multimodal news encompasses both textual and visual modalities. To comprehensively capture the deep semantic information within text, this paper employs a text encoding method that combines a pre-trained language model with local feature enhancement. Specifically, the BERT pre-trained model is used to extract three hierarchical levels of features, which are then augmented by Text-CNN to enhance local short-text features. For the image encoder, the Swin Transformer [] pre-trained model, renowned for its outstanding performance in visual tasks, is utilized.

3.1.1. Text Encoder

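First, multi-level feature extraction is performed using BERT. Given an input text $T = \{w_1, w_2, \dots, w_n\}$, the 12-layer encoder of the BERT model is employed to obtain semantic features at different levels of abstraction:

$$H^{(l)} = \mathrm{BERT}^{(l)}(T) = \left[h^{(l)}_1, h^{(l)}_2, \dots, h^{(l)}_n\right], \quad l = 1, \dots, 12$$

Let $h^{(l)}_i \in \mathbb{R}^{d}$ represent the embedding vector for the $i$-th word at layer $l$, where $d$ is the embedding dimension. To integrate shallow syntactic and deep semantic information, the outputs from BERT’s layers are grouped and summed to generate three sets of multi-level features, corresponding to shallow, intermediate, and deep features, respectively:

$$H_k = \sum_{l \in \mathcal{G}_k} H^{(l)}, \quad k \in \{\text{shallow}, \text{mid}, \text{deep}\}$$

where $\mathcal{G}_k$ denotes the set of layers assigned to group $k$.

Subsequently, for each feature set $H_k$, multi-scale convolutional kernels are applied to extract local semantics, and max-pooling is used to aggregate key information: $c_k = \mathrm{MaxPool}(\mathrm{Conv}_{s}(H_k))$. Here, $\mathrm{Conv}_{s}$ represents convolutional kernels of varying window sizes $s$. Finally, these multi-level features are fused through a concatenation operation to form the final textual representation:

$$F_t = \mathrm{Concat}\left(c_{\text{shallow}}, c_{\text{mid}}, c_{\text{deep}}\right)$$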
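The grouping, convolution, and pooling steps above can be sketched in plain Python. This is a minimal illustration, not the paper's implementation: the equal-sized contiguous layer groups, the window sizes `(2, 3)`, and the averaging filter that stands in for a learned convolution kernel are all assumptions, and the helper names are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]  # shape: (sequence length, feature dimension)


def sum_layers(layers: List[Matrix]) -> Matrix:
    """Element-wise sum of several layer outputs of identical shape."""
    n, d = len(layers[0]), len(layers[0][0])
    return [[sum(layer[i][j] for layer in layers) for j in range(d)] for i in range(n)]


def group_layers(layer_outputs: List[Matrix], n_groups: int = 3) -> List[Matrix]:
    """Split layer outputs into contiguous groups and sum each group.

    Equal-sized contiguous groups are an assumption of this sketch.
    """
    size = len(layer_outputs) // n_groups
    return [sum_layers(layer_outputs[g * size:(g + 1) * size]) for g in range(n_groups)]


def conv_max_pool(features: Matrix, window: int) -> List[float]:
    """Average inside a sliding token window, then max-pool over positions.

    The averaging filter is a stand-in for a learned convolution kernel.
    """
    n, d = len(features), len(features[0])
    windows = [
        [sum(features[i + k][j] for k in range(window)) / window for j in range(d)]
        for i in range(n - window + 1)
    ]
    return [max(w[j] for w in windows) for j in range(d)]


def encode_text(layer_outputs: List[Matrix], windows: Sequence[int] = (2, 3)) -> List[float]:
    """Concatenate pooled multi-scale features from every layer group."""
    out: List[float] = []
    for group in group_layers(layer_outputs):
        for w in windows:
            out.extend(conv_max_pool(group, w))
    return out


# Toy input: 12 "layers", 4 tokens, 2-dimensional features.
layers = [[[float(l + t), float(l - t)] for t in range(4)] for l in range(12)]
text_vec = encode_text(layers)
```

With three groups, two window sizes, and two feature dimensions, `text_vec` has 3 × 2 × 2 = 12 entries. In practice the layer outputs would come from a pre-trained BERT encoder and the filters would be trained.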

3.1.2. Image Encoder

The Swin Transformer adopted in this paper is an efficient architecture for visual tasks. By introducing hierarchical feature maps and a shifted window multi-head self-attention mechanism, it not only ensures the capture of global semantics but also preserves detailed information while reducing computational complexity. An input image $I$ is encoded by the Swin-T, outputting an image region feature matrix $R \in \mathbb{R}^{M \times d_r}$, where $M$ is the number of image regions and $d_r$ is the feature dimension. To match the textual feature space, a fully connected layer is employed to project the visual features into a unified dimension $d$, thereby achieving effective alignment with the textual features. The resulting projected features are denoted as $F_v \in \mathbb{R}^{M \times d}$.

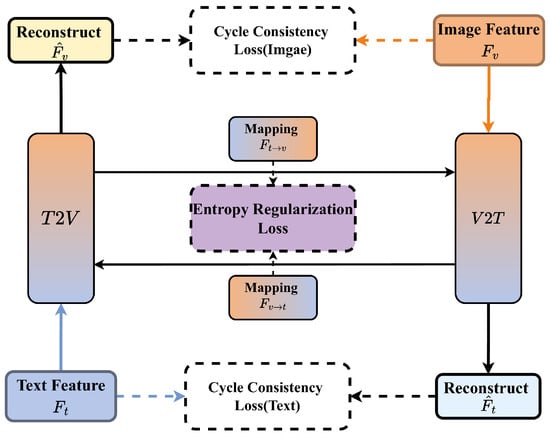

3.2. Bidirectional Modality Mapping Network

To achieve efficient knowledge transfer between textual and visual modalities, we designed a Bidirectional Modality Mapping Network (BMMN). This network employs a multi-head attention mechanism to realize bidirectional mapping between modalities. Concurrently, a cycle consistency constraint strategy is introduced to ensure that the mapped features maintain semantic consistency and stability. This module is illustrated in Figure 3.

Figure 3. BMMN Network.

3.2.1. Cross-Modal Bidirectional Feature Mapping

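Given the textual features $F_t$ and visual features $F_v$, cross-modal mapping is achieved through Text-to-Visual (T2V) and Visual-to-Text (V2T) transformations. For T2V mapping, the textual features serve as the Query, while the visual features act as the Key and Value. This is computed as follows:

$$F_{t \to v} = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}, \qquad \mathrm{head}_i = \mathrm{softmax}\!\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) V_i$$

where $h$ is the number of attention heads, $d_k$ is the per-head dimension, $W^{O}$ is the output projection, and $Q_i = F_t W_i^{Q}$, $K_i = F_v W_i^{K}$, $V_i = F_v W_i^{V}$ represent the linearly transformed feature matrices. Similarly, the Visual-to-Text (V2T) mapping process generates $F_{v \to t}$. Finally, the dimensions of the mapped features are aligned through normalization.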
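As a rough illustration of the bidirectional mapping, the sketch below implements scaled dot-product cross-attention in plain Python and applies it in both directions. It uses a single head and identity projection matrices for brevity, which is a simplification of the multi-head mechanism described above; the function names and toy features are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query_feats: Matrix, context_feats: Matrix,
                    w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head attention: queries from one modality, keys/values from the other."""
    q = matmul(query_feats, w_q)
    k = matmul(context_feats, w_k)
    v = matmul(context_feats, w_v)
    scale = math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights


identity = [[1.0, 0.0], [0.0, 1.0]]                    # placeholder projections
text_feats = [[1.0, 0.0], [0.0, 1.0]]                  # 2 tokens
visual_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # 3 image regions

# T2V: text is the Query, visual features are Key and Value.
t2v, attn_t2v = cross_attention(text_feats, visual_feats, identity, identity, identity)
# V2T: the roles are swapped.
v2t, attn_v2t = cross_attention(visual_feats, text_feats, identity, identity, identity)
```

Each mapped output has one row per query element, so `t2v` has two rows and `v2t` has three, and every row of the attention matrices sums to one.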

3.2.2. Cycle Consistency and Regularization Optimization

To ensure that the mapped features retain the semantic information of their original modalities, a cycle consistency constraint is introduced. This involves reconstructing the mapped features $F_{t \to v}$ and $F_{v \to t}$ back to their original modalities, yielding $\hat{F}_t$ and $\hat{F}_v$, respectively. The cycle consistency loss encourages consistency between the features before and after mapping. Furthermore, to prevent attention over-fitting, entropy regularization is applied to the attention matrices, promoting diversity in the attention distribution. The influence of this regularization is controlled by corresponding hyperparameters. The overall loss for this module combines the cycle consistency loss with these weighted regularization terms.
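One plausible way to combine these terms is sketched below. The exact loss is not recoverable from the text, so everything here is an assumption: mean squared error is used as the reconstruction distance, the entropy term is subtracted so that more diverse attention lowers the loss, and the two weights `lam_cyc` and `lam_ent` are hypothetical stand-ins for the paper's hyperparameters.

```python
import math
from typing import List

Matrix = List[List[float]]


def mse(a: Matrix, b: Matrix) -> float:
    """Mean squared error between two equally shaped feature matrices."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def attention_entropy(weights: Matrix) -> float:
    """Mean Shannon entropy of the attention rows (each row sums to 1)."""
    rows = [-sum(p * math.log(p) for p in row if p > 0.0) for row in weights]
    return sum(rows) / len(rows)


def bmmn_loss(f_t: Matrix, f_t_rec: Matrix, f_v: Matrix, f_v_rec: Matrix,
              attn_t2v: Matrix, attn_v2t: Matrix,
              lam_cyc: float = 1.0, lam_ent: float = 0.01) -> float:
    """Cycle-consistency loss minus a weighted attention-entropy bonus (assumed form)."""
    cycle = mse(f_t, f_t_rec) + mse(f_v, f_v_rec)
    entropy = attention_entropy(attn_t2v) + attention_entropy(attn_v2t)
    return lam_cyc * cycle - lam_ent * entropy


feats = [[1.0, 2.0]]
uniform = [[0.5, 0.5]]   # maximally diverse attention over two keys
peaked = [[1.0, 0.0]]    # attention collapsed onto one key

loss_uniform = bmmn_loss(feats, feats, feats, feats, uniform, uniform)
loss_peaked = bmmn_loss(feats, feats, feats, feats, peaked, peaked)
loss_bad_rec = bmmn_loss(feats, [[0.0, 0.0]], feats, feats, uniform, uniform)
```

Under these assumptions, perfect reconstruction with uniform attention gives the lowest loss of the three cases, while collapsed attention or a poor reconstruction both increase it.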

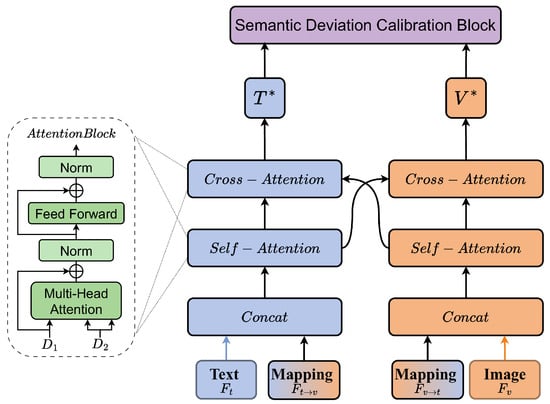

3.3. Semantic Enhancement and Calibration Network

To capture cross-modal semantic discrepancies, enhance local semantic features between text and images, and optimize feature representations, we designed the Semantic Enhancement and Calibration Network (SECN), the structure of which is illustrated in Figure 4. The network first fuses information within a self-attention sub-layer and then passes the result to a cross-attention sub-layer, yielding cross-modally semantically enhanced features. These features are then calibrated based on semantic discrepancies to produce the final fused representation.

Figure 4. The architecture diagram of SIE.

3.3.1. Semantic Interaction Enhancement

This module aims to capture intra-modal semantic relationships and cross-modal interaction information through self-attention and cross-attention mechanisms. Its structure, as depicted on the left side of Figure 4 (the attention mechanism module), involves mapping an input feature $X_q$ to the Q (Query) space, and another input feature $X_{kv}$ to the K (Key) and V (Value) spaces. These are then propagated forward through a multi-head attention mechanism and a feed-forward neural network, with each component connected via a residual link and a normalization module. The process can be represented as

$$Z' = \mathrm{LN}\big(X_q + \mathrm{MHA}(X_q, X_{kv}, X_{kv})\big), \qquad Z = \mathrm{LN}\big(Z' + \mathrm{FFN}(Z')\big)$$

where $\mathrm{MHA}(\cdot)$ denotes the multi-head attention operation, $\mathrm{FFN}(\cdot)$ represents the feed-forward neural network, $\mathrm{LN}(\cdot)$ is the normalization module, and $Z$ is the output of this module.
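The attention module described here follows the familiar attention, residual, normalization, feed-forward pattern. The sketch below is a minimal plain-Python version under simplifying assumptions: a single head without learned Query/Key/Value projections, post-normalization, and a two-layer ReLU feed-forward network with hypothetical weights `w1` and `w2`. Passing the same input twice gives self-attention; passing two different inputs gives cross-attention.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Matrix, b: Matrix) -> Matrix:
    """Residual connection: element-wise sum."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(x: Matrix, eps: float = 1e-5) -> Matrix:
    """Normalize each row to zero mean and (approximately) unit variance."""
    out = []
    for row in x:
        mu = sum(row) / len(row)
        var = sum((v - mu) ** 2 for v in row) / len(row)
        out.append([(v - mu) / math.sqrt(var + eps) for v in row])
    return out


def attention(q_in: Matrix, kv_in: Matrix) -> Matrix:
    """Scaled dot-product attention without learned projections (simplification)."""
    scale = math.sqrt(len(q_in[0]))
    k_t = [list(col) for col in zip(*kv_in)]
    weights = [softmax([s / scale for s in row]) for row in matmul(q_in, k_t)]
    return matmul(weights, kv_in)


def feed_forward(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    hidden = [[max(0.0, v) for v in row] for row in matmul(x, w1)]  # ReLU
    return matmul(hidden, w2)


def attention_block(q_in: Matrix, kv_in: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Attention and feed-forward sub-layers, each with residual link and normalization."""
    h = layer_norm(add(q_in, attention(q_in, kv_in)))
    return layer_norm(add(h, feed_forward(h, w1, w2)))


text = [[1.0, 0.0], [0.5, 2.0]]                # 2 tokens
visual = [[0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]  # 3 regions
w1 = [[0.5, -0.5, 1.0, 0.0], [0.0, 1.0, -1.0, 0.5]]
w2 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 1.0]]

self_out = attention_block(text, text, w1, w2)     # intra-modal enhancement
cross_out = attention_block(text, visual, w1, w2)  # text attends to visual context
```

The output always keeps the shape of the Query input, which is why the textual branch can absorb visual context without changing its sequence length.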

Prior to cross-modal interaction, semantic information from different perspectives is first integrated by fusing the original features with their corresponding mapped features. The textual branch comprises the original textual features and the cross-modally mapped features produced by the BMMN; similarly, the visual branch consists of the original visual features and their cross-modally mapped counterparts. In each branch, the two feature sets are concatenated along the sequence dimension, so the sequence length of the concatenated features is the sum of the sequence lengths of the individual features.

Subsequently, the concatenated textual and visual features are fed into separate self-attention modules, where intra-modal contextual enhancement is performed via the self-attention mechanism.

To achieve deep cross-modal information learning, a cross-attention layer is employed to strengthen the model’s profound understanding and alignment of local and global semantics between visual and textual features. Specifically, for the textual branch, its self-attention enhanced features are used as the Query to attend to the visual semantic information contained within the enhanced features of the visual branch (serving as Key and Value). This generates a more comprehensive textual representation infused with visual context. Under the guidance of visual features, the textual features learn and integrate relevant visual scene and object information. Symmetrically, the same process occurs in the visual branch: visual features, guided by textual features, learn and associate with relevant descriptive or conceptual textual information. Through this bidirectional, mutually guided cross-attention mechanism, the model can effectively capture and model complex correspondences and complementary information between images and text. This process is as follows:
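The concatenation, self-attention, and cross-attention steps described in this subsection can be sketched in a few lines of plain Python. This is a deliberately reduced illustration: it uses a single attention head with a residual connection and omits the learned Q/K/V projections, the feed-forward network, and normalization. The function names `attend` and `sie_forward` are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, keys_values):
    """Single-head scaled dot-product attention with a residual connection.

    Simplification: no learned projections, no FFN, no normalization.
    """
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys_values]
        weights = softmax(scores)
        context = [sum(w * v[j] for w, v in zip(weights, keys_values))
                   for j in range(d)]
        out.append([qi + ci for qi, ci in zip(q, context)])
    return out


def sie_forward(text, text_mapped, image, image_mapped):
    """Concatenate each branch, apply self-attention, then bidirectional cross-attention."""
    text_cat = text + text_mapped        # concatenation along the sequence axis
    image_cat = image + image_mapped
    text_self = attend(text_cat, text_cat)
    image_self = attend(image_cat, image_cat)
    text_enh = attend(text_self, image_self)    # text queries, visual keys/values
    image_enh = attend(image_self, text_self)   # visual queries, text keys/values
    return text_enh, image_enh
```

Each branch keeps its own (concatenated) sequence length after cross-attention, because the output has one row per query.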

3.3.2. Semantic Deviation Calibration

To mitigate inherent semantic differences between modalities, incomplete informational correspondence, or even deliberately fabricated semantic contradictions, this paper introduces a Semantic Deviation Calibration (SDC) module. This module adaptively evaluates, adjusts, and calibrates the multimodal features obtained after deep interaction, based on perceived semantic deviations.

The SDC module first assesses whether a semantic deviation exists between the enhanced textual features and the enhanced visual features, denoted here by $\tilde{T}$ and $\tilde{V}$. We quantify this deviation by the cosine distance between them:

$$d = 1 - \frac{\tilde{T} \cdot \tilde{V}}{\lVert \tilde{T} \rVert \, \lVert \tilde{V} \rVert}.$$

Based on the quantified semantic deviation $d$, the model learns to generate a gating weight for each of the textual and visual modalities. These weights dynamically adjust the contribution of each modal feature in the subsequent fusion process according to the degree of deviation. Specifically, modal information exhibiting greater deviation is appropriately suppressed, while information with higher consistency is preserved or enhanced, thereby achieving adaptive feature calibration.

Finally, the calibrated textual and visual features, weighted by these deviation-dependent gates, are fused to produce a unified multimodal representation M.
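To make the calibration idea concrete, the sketch below computes the cosine distance between two pooled feature vectors, turns it into one gate per modality, and concatenates the gated vectors. The gate form (a sigmoid of an affine function of the deviation), its parameters `slope_*` and `bias_*`, the use of pooled vectors, and fusion by concatenation are all assumptions made for illustration; the learned gating layers of the model are not reproduced here.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity; 0 for aligned vectors, 2 for opposite ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v + 1e-12)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sdc_fuse(text_vec, image_vec,
             slope_t=-2.0, bias_t=2.0, slope_v=-2.0, bias_v=2.0):
    """Gate each modality by the semantic deviation, then concatenate.

    The scalar affine gate parameters are hypothetical stand-ins for learned layers.
    A negative slope makes the gate shrink as the deviation grows.
    """
    d = cosine_distance(text_vec, image_vec)
    g_t = sigmoid(slope_t * d + bias_t)
    g_v = sigmoid(slope_v * d + bias_v)
    fused = [g_t * x for x in text_vec] + [g_v * x for x in image_vec]
    return d, g_t, g_v, fused
```

Under these assumptions, a consistent pair receives gates close to 1, while a contradictory pair is attenuated before fusion.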

3.4. Dual Adversarial Learning

To enhance the model’s discriminative capability concerning event relevance and modality consistency, we construct an adversarial learning framework between a fake news discriminator and two auxiliary discriminators: an event discriminator and a modality consistency discriminator. First, the fake news discriminator applies a Softmax layer after a fully connected layer to distinguish between true and fake news. The event and modality consistency discriminators are then trained adversarially through a Gradient Reversal Layer (GRL), so that the event and the modality of a feature become indistinguishable to their respective discriminators. The fake news discriminator and its loss function are represented as

$$\hat{y} = \big[\mathrm{Softmax}(\mathrm{FC}(M))\big]_{\mathrm{true}}, \qquad \mathcal{L}_{f} = -\big[\, y \log \hat{y} + (1 - y) \log (1 - \hat{y}) \,\big],$$

where $\hat{y}$ is the predicted probability of the news being “true”, and $y$ is the true label. Similarly, the loss functions for the event discriminator and the modality consistency discriminator are denoted as $\mathcal{L}_{e}$ and $\mathcal{L}_{m}$, respectively (the subscripts are notation adopted here). With the introduction of the GRL, the feature extractor is trained to become insensitive to event labels, producing feature distributions that the event discriminator cannot tell apart across event categories. This aims to mitigate the model’s reliance on specific event scenarios. Concurrently, the modality consistency discriminator aligns the cross-modal distributions of textual and visual features in the latent space, thereby suppressing modal bias. The final objective function is formulated as

$$\mathcal{L} = \mathcal{L}_{f} + \lambda_{e} \mathcal{L}_{e} + \lambda_{m} \mathcal{L}_{m},$$

where the parameters $\lambda_{e}$ and $\lambda_{m}$ balance the adversarial losses from the event and modality consistency tasks against the primary fake news detection task, and take negative values. During adversarial training, the feature extractor and fake news discriminator minimize the detection loss while simultaneously maximizing the losses of the event and modality consistency discriminators.
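The two ingredients of this training scheme, gradient reversal and a weighted objective with negative adversarial weights, can be illustrated without a deep learning framework. In the sketch below, `GradientReversal` exposes hand-written `forward` and `backward` methods instead of hooking into automatic differentiation, and the weights `w_event` and `w_modality` are hypothetical values chosen only to show the sign convention.

```python
import math


class GradientReversal:
    """Identity on the forward pass; scales the gradient by -scale on the backward pass."""

    def __init__(self, scale=1.0):
        self.scale = scale

    def forward(self, features):
        return list(features)

    def backward(self, grad_output):
        return [-self.scale * g for g in grad_output]


def binary_cross_entropy(p_true, label):
    """Cross-entropy for one sample; label is 1 for true news and 0 for fake news."""
    eps = 1e-12  # guards against log(0)
    return -(label * math.log(p_true + eps)
             + (1 - label) * math.log(1 - p_true + eps))


def total_objective(loss_fake, loss_event, loss_modality,
                    w_event=-0.5, w_modality=-0.5):
    """Detection loss plus adversarial terms weighted by negative (hypothetical) weights."""
    return loss_fake + w_event * loss_event + w_modality * loss_modality
```

Because the adversarial weights are negative, lowering the total objective raises the event and modality losses, which is the same effect the reversed gradient has on the feature extractor.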

4. Experiments

4.1. Datasets

To validate the performance of the BSEAN model, this paper utilizes two datasets collected from different social media platforms: Weibo and Twitter.

The Weibo dataset, originally collected and curated by Jin et al. [], comprises confirmed fake news items verified by Sina Weibo’s official rumor debunking platform between May 2012 and January 2016. These news items primarily originated from posts by ordinary users and were authenticated by a panel of experts. The authentic news items in this dataset were sourced from official reports by Xinhua News Agency, an authoritative Chinese news organization.

The Twitter dataset, constructed by Boididou et al. [], is frequently employed for multimedia information credibility verification tasks on platforms like MediaEval. It focuses on identifying fake posts on social media and includes a development set for model training (approximately 5000 news items, covering over 10 rumor events) and a test set for evaluation (approximately 2000 news items, encompassing over 30 rumor events).

During the data preprocessing phase, to ensure the rigor of the experiments and the quality of the data, we removed text-only posts, duplicate images, data with broken links, and excessively low-quality images from both datasets. Textual content underwent standardization, including the removal of punctuation, numbers, special characters, and stopwords or overly short words. Through these preprocessing steps, we obtained refined visual-textual datasets suitable for training and evaluating our model.
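A minimal sketch of the text standardization step is given below. It assumes whitespace-tokenized English text, an illustrative stopword subset, and a minimum token length of two characters; Chinese posts from Weibo would additionally require word segmentation, which is not shown. The function name `clean_text` is hypothetical.

```python
import re

# Illustrative subset only; a full stopword list would be used in practice.
STOPWORDS = {"the", "a", "an", "is", "in", "of", "to", "and"}


def clean_text(text, min_len=2):
    """Lowercase, strip punctuation/digits/special characters, drop stopwords and short tokens."""
    text = text.lower()
    # Replace anything that is not a word character or whitespace, plus digits and underscores.
    text = re.sub(r"[^\w\s]|\d|_", " ", text)
    tokens = text.split()
    return [tok for tok in tokens if tok not in STOPWORDS and len(tok) >= min_len]
```

For example, `clean_text("BREAKING!!! 3 polar bears escaped in the city #news")` keeps only the content words of the post.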

4.2. Experimental Setup

In the feature extraction stage, we employed a pre-trained BERT model to extract textual features. Its 12-layer outputs were integrated into three sets of multi-level features. These were subsequently processed by a Text-CNN network containing three kernel sizes, 3, 4, and 5 (each with 128 filters), ultimately generating a 256-dimensional textual representation. For the visual modality, a pre-trained Swin-T model was used to process input images, and the output regional features were projected to 256 dimensions via a fully connected layer.

Regarding the model architecture, the multi-head attention mechanisms in all modules were configured with eight attention heads, each having a dimension of 32. For the key hyperparameters in our proposed modules, we set the loss weights in the Bidirectional Modality Mapping Network (BMMN) to and , with an entropy regularization weight of . In the DAL framework, the weights for the event and modality adversarial losses were set to and , respectively. During training, we used the AdamW optimizer with an initial learning rate set to and a batch size of 64. The model was trained for a maximum of 100 epochs. To prevent overfitting and select the best model, we used an early stopping mechanism with a patience of 10 epochs, monitoring the F1-score on the validation set. All experiments were implemented in the PyTorch 2.3.0 framework and conducted on an NVIDIA GeForce RTX 3090 GPU with 24 GB of memory.
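The early stopping rule described above (at most 100 epochs, patience of 10, validation F1-score as the monitored quantity) can be sketched as follows. The class and function names are hypothetical, and the list of per-epoch validation scores stands in for an actual training loop.

```python
class EarlyStopping:
    """Stops training once the monitored score has not improved for `patience` epochs."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best_score = float("-inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_f1):
        """Record one validation F1-score; return True when training should stop."""
        if val_f1 > self.best_score:
            self.best_score = val_f1
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run_training(val_f1_per_epoch, max_epochs=100, patience=10):
    """Iterate over precomputed validation scores as a stand-in for real training."""
    stopper = EarlyStopping(patience)
    last_epoch = -1
    for epoch, val_f1 in enumerate(val_f1_per_epoch[:max_epochs]):
        last_epoch = epoch
        if stopper.step(epoch, val_f1):
            break
    return stopper.best_epoch, stopper.best_score, last_epoch
```

The checkpoint saved at `best_epoch` would be the one used for testing; the sketch only tracks its index and score.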

4.3. Evaluation Metrics

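To comprehensively evaluate the proposed method, the experiments use Accuracy, Precision, Recall, and F1-score to assess the model’s performance. Precision, Recall, and F1-score are also computed separately for true news and fake news. Taking fake news as the positive class, the metrics are defined as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP},$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},$$

where $TP$ (true positives) is the number of fake news items correctly classified as fake; $TN$ (true negatives) is the number of true news items correctly classified as true; $FP$ (false positives) is the number of true news items incorrectly classified as fake; and $FN$ (false negatives) is the number of fake news items incorrectly classified as true.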
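As a worked illustration of these definitions, the sketch below derives all metrics from the four confusion-matrix counts, including the per-class scores and their macro average. The counts used in the usage note are made up for the example and are not results from the experiments.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 for one class, with 0.0 returned for empty denominators."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def evaluate(tp, tn, fp, fn):
    """Accuracy plus per-class and macro-averaged F1, with fake news as the positive class."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    fake = precision_recall_f1(tp, fp, fn)
    # For the true-news class the roles swap: TN acts as TP, FN as FP, FP as FN.
    real = precision_recall_f1(tn, fn, fp)
    return {
        "accuracy": accuracy,
        "fake": fake,
        "real": real,
        "macro_f1": (fake[2] + real[2]) / 2,
    }
```

For hypothetical counts `evaluate(tp=40, tn=45, fp=5, fn=10)`, the accuracy is 0.85, the fake-news F1 is 80/95, and the true-news F1 is 90/105.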

4.4. Comparative Experiments

To validate the effectiveness of the proposed BSEAN model, this paper compares it with several representative approaches in the field of fake news detection. The baseline models include the following:

Visual-Only: employs only the pre-trained Swin Transformer model to extract image features, followed by a simple classification.

Text-Only: utilizes only the pre-trained BERT model and Text-CNN to extract textual features, followed by a simple classification.

CLIP []: leverages the powerful visual and text encoders of CLIP to extract deep semantic features from the image and text, respectively. Subsequently, the features from both modalities are concatenated and passed through a classification layer to determine the news veracity.

EANN []: extracts textual and visual features using Text-CNN and VGG-19, respectively. These features are concatenated and fed into a fake news classifier. It incorporates an adversarial network to learn event-agnostic multimodal features for fake news detection.

MVAE []: extracts textual and visual features using Bi-LSTM and VGG-19. After concatenation, a Variational Auto-encoder (VAE) learns a shared multimodal representation to capture complex cross-modal relationships.

MCNN []: combines the ELA (Error Level Analysis) algorithm and ResNet50 to extract image tampering and semantic features. BERT and Bi-GRU are used for textual feature extraction. An attention mechanism explores the overall news features.

ENRoBERTa []: achieves cross-modal information complementarity through efficient bimodal fusion and targeted preprocessing (ELA). Cross-modal heterogeneous data undergo a deep feature fusion module to complete the final detection task.

MCAN []: extracts spatial domain features using VGG-19 and textual features using BERT. It also incorporates DCT (Discrete Cosine Transform) to extract frequency domain features. Self-attention and co-attention modules are used to fuse multimodal information.

The comparative experimental results are presented in Table 1. Observation and analysis of these results yield the following findings:

Table 1. Comparative experimental results.

- The model proposed in this paper outperforms most baseline models across the evaluation metrics. In terms of accuracy, it improves on the other models on both the Weibo and Twitter datasets, with overall accuracies of 90.1% and 88.3%, respectively. The F1-scores further indicate stable discriminative ability for fake news detection. Although the accuracy improvement over some baselines, such as MCAN on Weibo, is marginal, BSEAN achieves the highest Macro-F1 score in Table 1, which indicates a more balanced performance across the real and fake news categories. Furthermore, as discussed in Section 4.7, the full model attains a lower Expected Calibration Error (ECE) than its ablated variants, which means its prediction confidences are more reliable.

- The data in the table show that fake news detection tasks relying solely on either textual or visual unimodal information perform poorly, as unimodal approaches struggle to capture rich semantic information. Moreover, detection tasks relying solely on the textual modality perform better than those relying solely on the visual modality. This is likely because the information provided by the textual modality is often more complete for semantic understanding than that directly obtainable from the visual modality.

- Among the multimodal baselines, the CLIP-based model demonstrates the potential of pre-trained vision-language models, yet its results also suggest that relying solely on powerful general-purpose representations is limiting. EANN, through its event adversarial neural network, fuses textual and visual features and excels at mining explicit and implicit common features across events. However, the removal of event-specific features can diminish its identification capability. MVAE’s multimodal variational autoencoder generates fused features via a self-supervised loss, enhancing generalization. Nevertheless, its simple fusion of modal information and lack of sophisticated interaction lead to suboptimal performance, and its sensitivity to hyperparameters further degrades overall efficacy. MCNN improves detection performance by analyzing social media features and the consistency of multimodal data. However, it does not fully exploit the synergy between modalities, which limits how effectively the model can use the fused features. ENRoBERTa achieves cross-modal information complementarity through efficient feature extraction and contrastive learning, effectively improving detection accuracy. However, its feature fusion is not deep enough for complex modal interaction scenarios. MCAN deeply fuses multimodal features through a multi-layer co-attention stack. This approach learns interdependencies between cross-modal features, underscoring the importance of capturing cross-modal semantics.

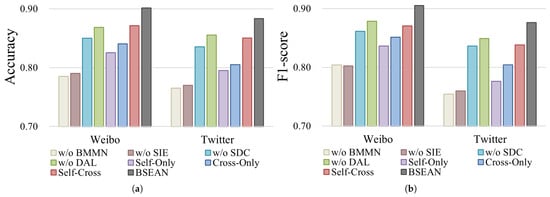

4.5. Ablation Study Analysis

This study explores the effectiveness of each component of the BSEAN model by evaluating performance after the removal of individual components. The following variants were created:

w/o BMMN: In this variant, the Bidirectional Modality Mapping Network (BMMN) module was removed. This means that textual and visual features, before entering the Semantic Interaction Enhancement module, no longer undergo explicit cross-modal mapping and cycle consistency constraints.

w/o SIE: The attention mechanisms within the Semantic Interaction Enhancement (SIE) module were removed. Instead, the input features were directly concatenated and fed into the Semantic Deviation Calibration module. Furthermore, to investigate the effectiveness of the bidirectional cross-attention mechanism within the SIE module, several variants with common attention mechanisms were designed to observe changes in model performance: Self-Only, Cross-Only, and Self-Cross.

w/o SDC: In this variant, the Semantic Deviation Calibration (SDC) module was removed. This implies that the deeply interacted features obtained after semantic interaction enhancement are directly concatenated without undergoing quantification of semantic deviation and adaptive adjustment of gating weights.

w/o DAL: This variant removed the adversarial learning mechanism from the discriminators. The final loss function no longer includes the adversarial terms from the event relevance discriminator and the modality consistency discriminator, retaining only the loss from the fake news discriminator.

The experimental results are shown in Figure 5. It can be observed that removing any module leads to a decline in model performance.

Figure 5. Ablation study results, showing performance after removing individual modules of the model and, for the SIE module, after substituting different attention mechanism variants. (a) Accuracy on different datasets. (b) F1-score on different datasets.

Removing the BMMN module results in a significant performance degradation. This indicates that effective initial semantic alignment is crucial for subsequent deep cross-modal interaction, directly impacting the ability to capture rich cross-modal semantic correlations. The model can more effectively align and fuse features from different modalities, as BMMN’s bidirectional mapping and cycle consistency constraint mechanisms effectively mitigate cross-modal heterogeneity. This allows the model to better learn modality-agnostic semantic representations, laying a solid foundation for subsequent deep semantic interaction.

Removing the SIE module or using its variants with other attention mechanisms causes a substantial drop in model performance, particularly in the F1-score. This corroborates our assertion regarding the lack of deep cross-modal interaction in current methods. The SIE module, through its cross-modal interaction attention mechanism, deepens the understanding of intra-modal contexts and promotes the deep fusion of cross-modal information. It enables textual features to “attend to” and absorb visual semantics from image scenes, and vice versa, thereby generating more discriminative cross-modal features. This deep, attention-driven interaction is key to understanding potential contradictions and correlations in multimodal fake news, significantly enhancing the model’s ability to discriminate between true and fake news.

The absence of the SDC module leads to a decline in model performance, especially when handling samples with incompletely consistent modal information. This highlights the effectiveness of SDC in addressing inherent cross-modal semantic discrepancies. By quantifying the deviation through cosine distance between textual and visual features and adaptively adjusting the contribution of each modal feature based on this deviation, SDC can suppress modal features that are less informative or contradictory while enhancing highly consistent information. This prevents noise or misleading information from a single modality from negatively impacting the final discriminative outcome.

After removing the DAL module, all performance metrics decreased. The event relevance discriminator, by compelling the feature extractor to be insensitive to event labels, helps the model learn more generalizable fake news features rather than overfitting to specific event scenarios. Simultaneously, the modality consistency discriminator effectively suppresses modal bias by aligning the distributions of textual and visual features in the latent space. This dual adversarial mechanism works synergistically to significantly enhance the model’s generalization ability and robustness, enabling it to exhibit stronger discriminative power when faced with diverse fake news.

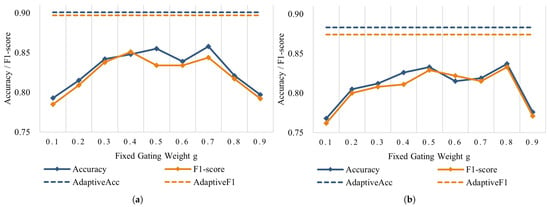

4.6. Quantitative Analysis of SDC Module Weights

To evaluate the effectiveness of the adaptive gating mechanism within the SDC module, we conducted a sensitivity experiment. We established a series of fixed gating weights (ranging from 0.1 to 0.9 with a step of 0.1), which replaced the gating weights that the SDC module otherwise computes dynamically from the semantic deviation. The experimental results are illustrated in Figure 6, from which the following observations can be made:

Figure 6. Quantitative analysis of SDC module weights. The solid lines represent the changes in Accuracy and F1-score with fixed weights, while the dashed lines at the top indicate the values achieved with adaptive weights. (a) On the Weibo dataset. (b) On the Twitter dataset.

When the fixed gating weight g approaches 0.1 or 0.9, the model’s accuracy and F1-score exhibit a sharp decline. This indicates that the excessive suppression of modal features leads to the filtering or loss of substantial critical semantic information, causing the model to face information scarcity during the fusion stage. In such scenarios, the model is almost unable to effectively utilize visual-textual features for fake news discrimination, and its performance may even be inferior to that of baseline models relying solely on a single modality, as even unimodal information is inappropriately attenuated. This validates the importance of moderately preserving and utilizing modal features.

As the fixed weight g increases, the model’s performance (accuracy and F1-score) initially rises, then plateaus, and eventually decreases slightly. Peak performance is reached when g is between 0.6 and 0.8. This range represents the best performance achievable by a static gating mechanism on each dataset: it suppresses noise and inconsistent information to some extent while retaining sufficient effective features. However, even the optimal fixed weight yields a performance significantly lower than that achieved by the complete BSEAN model. This reveals the inherent limitation of a fixed-weight mechanism: it is a global, static decision incapable of making fine-grained adjustments based on the cross-modal semantic deviations of different samples.

In stark contrast to the performance curves under all fixed weights, the SDC module employing adaptive weights consistently maintains the highest accuracy and F1-score throughout the experiment, with its performance point exceeding the peak achievable by any fixed weight. BSEAN’s adaptive gating mechanism dynamically generates gating weights for textual and visual features based on the specific semantic deviation (i.e., the degree of cross-modal consistency or conflict) calculated from the visual-textual features of each input sample. This implies the following:

- When visual-textual information is highly consistent and mutually supportive, the weight values tend to be higher, ensuring that this strong, consistent evidence is fully utilized.

- When significant deviations or even contradictions exist between visual and textual information, the weight values tend to be lower to appropriately suppress this potential noise or conflicting information, preventing it from misleading the model’s discrimination.

- This context-aware dynamic adjustment capability enables the model to simulate the human cognitive process of “weighing” and “filtering” evidence when processing multimodal information, thereby achieving effective fusion of heterogeneous information.

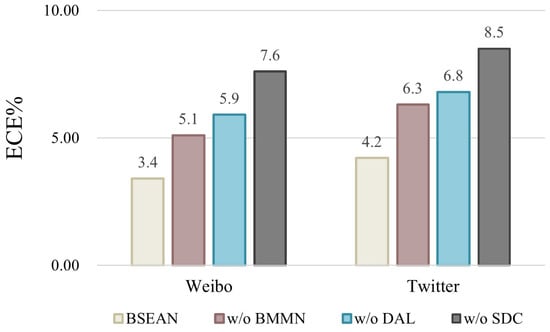

4.7. Investigation into Model Reliability via Calibration Analysis

While accuracy and F1-score are pivotal for assessing discriminative power, the trustworthiness of a model’s predictions is equally critical for its deployment in high-stakes domains like fake news detection. For this purpose, we employ the Expected Calibration Error (ECE) metric. A model is considered well-calibrated if its predicted confidence aligns with its empirical accuracy, and a lower ECE score indicates better calibration. This analysis aims to explore how the architectural components of BSEAN contribute to the trustworthiness of its predictions.
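For reference, ECE is commonly computed by grouping predictions into equal-width confidence bins and averaging the gap between accuracy and mean confidence in each bin, weighted by bin size. The sketch below follows that standard recipe; the choice of 10 bins is an assumption, as the number of bins used in the experiments is not stated.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |accuracy - mean confidence| over equal-width confidence bins.

    confidences: predicted probability of the chosen class, in [0, 1].
    correct: 1 if the prediction was right, 0 otherwise.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        # A confidence of exactly 1.0 is placed in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, hit))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(h for _, h in members) / len(members)
        ece += len(members) / n * abs(accuracy - avg_conf)
    return ece
```

A model that is 90% confident and right 9 times out of 10 scores an ECE near zero, whereas one that is 95% confident but right only half the time scores 0.45.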

The results, presented in Figure 7, detail the calibration performance of BSEAN and its ablated variants. Our full BSEAN model achieved ECE scores of 3.4% on the Weibo dataset and 4.2% on the Twitter dataset. Among the tested configurations, the complete model exhibits the most favorable calibration properties, suggesting that its predictive probabilities are more reliable than those of its incomplete counterparts.

Figure 7. Calibration analysis of BSEAN and its variants.

The primary finding from this analysis emerges from the ablation study. A significant increase in ECE was observed when the Semantic Deviation Calibration (SDC) module was removed (w/o SDC), reaching 7.6% on Weibo and 8.5% on Twitter, compared with 3.4% and 4.2% for the full model. This provides a strong indication that the SDC module plays a crucial role in improving the model’s calibration. By explicitly addressing semantic conflicts between modalities, the SDC module appears to help regulate the model’s confidence, leading to more dependable probability estimates, especially in ambiguous cases. Similarly, the removal of the DAL and BMMN modules also resulted in poorer calibration, suggesting that generalization and initial feature alignment are contributing factors to overall model reliability.

In summary, this investigation into model calibration suggests that the architectural choices within BSEAN, particularly the inclusion of the SDC module, not only enhance its discriminative accuracy but also contribute positively to the reliability of its predictions. While further research involving broader cross-model calibration comparisons is warranted, these findings position BSEAN as a promising approach that considers both performance and trustworthiness.

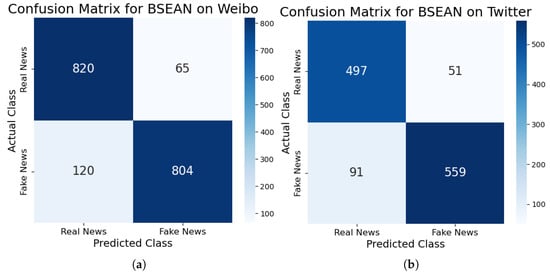

4.8. Analysis of Model Boundaries

To provide a more granular analysis of our model’s performance and address the identification of specific failure patterns, we conducted a detailed error analysis. This involves both a quantitative breakdown of classification results via confusion matrices and a qualitative investigation into the root causes of misclassifications.

Figure 8 presents the confusion matrices of the BSEAN model on the Weibo and Twitter test sets. These visualizations offer a clear breakdown of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN).

Figure 8. Confusion matrices of the BSEAN model on the (a) Weibo and (b) Twitter datasets.

The matrices reveal a key insight: On both datasets, the number of false negatives (FN) is significantly higher than the number of false positives (FP). This indicates that the primary challenge for BSEAN is not in misidentifying real news, but rather in failing to detect sophisticated or nuanced fake news. This underscores the difficulty of the task and motivates a deeper analysis of these “missed” fakes. To understand the practical boundaries of our model, we manually reviewed a sample of the false negative cases and identified two predominant failure patterns:

Difficulty with Satirical and Ironic Content: A significant portion of the false negatives involved satire, where the literal meaning of the text is inconsistent with its pragmatic, deceptive intent. For example, a post might pair a mundane image with text like, “Following the latest city policy, our skies have never been clearer,” intending to sarcastically critique pollution. BSEAN, which excels at finding direct semantic contradictions, struggles to capture this type of high-level linguistic nuance, thus misclassifying the post as real.

Out-of-Context Image-Text Pairs: Another common failure pattern occurs when a real, unaltered image is repurposed with a misleading textual narrative. For instance, a well-documented, emotive photograph of two children from one country can be falsely re-contextualized as depicting victims of a natural disaster in another. Our model can confirm that the image is authentic and the text is grammatically sound, and may even find a thematic link. However, it lacks the external world knowledge or fact-checking capability to verify the spatio-temporal context, leading to an incorrect classification.

5. Case Study

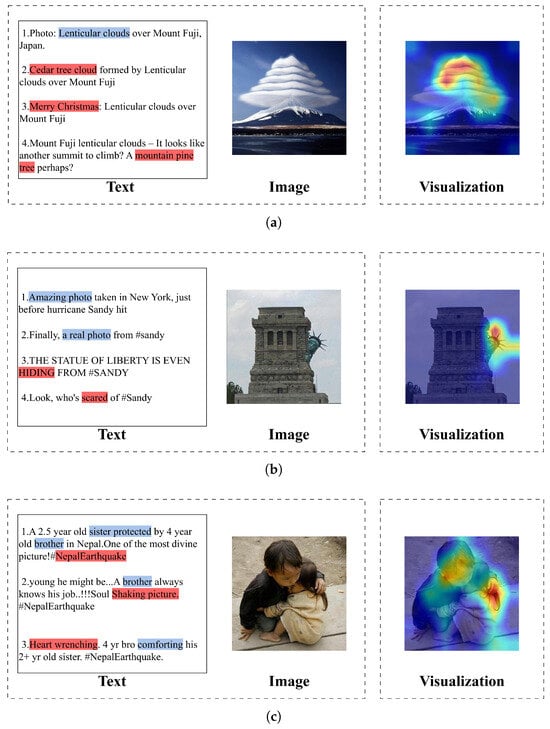

To provide a concrete understanding of BSEAN’s capabilities and limitations, we present three distinct case studies in Figure 9. We visualize the model’s attention via heatmaps and report its classification confidence, linking these outputs to our quantitative analyses.

Figure 9. Case studies illustrating BSEAN’s decision-making process. (a,b) are success cases demonstrating the detection of semantic inconsistencies. (c) is a failure case that reveals the model’s operational boundaries. In the text boxes, blue indicates thematically aligned keywords, while red indicates conflicting or false keywords.

From these cases, it can be observed that the model extracts key information from both visual and textual modalities to understand the semantic associations between them. Focusing solely on either modality can easily lead to misclassification. The success cases in Figure 9a,b demonstrate the model’s core strength in detecting various forms of semantic deviation.

In the first case (Figure 9a), the model is faced with an image of lenticular clouds paired with a conflicting textual narrative (e.g., describing it as a Cedar tree cloud). This suggests that the model learns to identify discrepancies between the narrative conveyed by the text (describing a “spectacle” with incorrect terms) and the factual content of the image. The model’s attention visualization supports this, as it focuses on the core visual element to assess its semantic alignment with the text, correctly classifying the post as Fake (confidence: 94.7%). This sensitivity enables the effective identification of superficially consistent but factually conflicting information.

Similarly, in the second case (Figure 9b), the model scrutinizes the semantic consistency between the actions attributed to the entity by the text (HIDING, scared) and the static, inanimate nature of the image (the Statue of Liberty). When the textual description conflicts with the inherent attributes of the visual content, the model identifies this semantic deviation and discerns the false information arising from the image–text discrepancy, leading to a high-confidence Fake classification (confidence: 91.2%).

The third case (Figure 9c) serves as a crucial example of an ‘out-of-context’ failure pattern, clearly defining the model’s operational boundary. The post combines an emotive image of two children with a false narrative about the “#NepalEarthquake”. BSEAN misclassifies this post as Real, but with a confidence of only 56.93%, close to the 50% chance level of a binary decision. The model correctly identifies the high semantic harmony between the visual and textual content, which explains the Real prediction. However, the low confidence signals the model’s “uncertainty” when faced with a challenge beyond its scope (external fact-checking). It demonstrates that while BSEAN excels at detecting internal semantic inconsistencies, it lacks the external knowledge for provenance verification, a clear direction for future work.

6. Discussion

Our study, while demonstrating the efficacy of BSEAN on established benchmarks, operates within a rapidly evolving information ecosystem. It is, therefore, pertinent to discuss the broader context of our model’s capabilities, particularly concerning emerging challenges such as AI-Generated Content (AIGC) and adversarial threats.

A key consideration for any contemporary detection model is its applicability to AIGC. Although the datasets utilized in this work predate the widespread use of generative AI, the architectural design of BSEAN is inherently suited to addressing this challenge. AIGC often exhibits subtle cross-modal inconsistencies—for instance, a synthetic image may lack perfect semantic coherence with a detailed textual narrative. BSEAN’s core mechanism, the Semantic Enhancement and Calibration Network (SECN), is engineered precisely to identify such fine-grained semantic deviations. By focusing on deep logical congruence rather than superficial features, our model possesses a foundational capability to detect the subtle incongruities common in AI-generated fakes, positioning it as a strong candidate for future validation on AIGC-centric datasets.

Furthermore, ensuring model robustness is paramount for real-world deployment. While this study did not include explicit adversarial attack evaluations, the Dual Adversarial Learning (DAL) framework is an integral component designed to bolster intrinsic resilience. By promoting the learning of event-agnostic features, the DAL module mitigates the risk of overfitting to spurious correlations within the training data. This process of learning disentangled representations enhances the model’s stability against distribution shifts and certain data perturbations. This design choice serves as a foundational step towards building a more adversarially robust system, providing a solid basis for future investigations into specialized defense mechanisms against targeted white-box and black-box attacks. Similarly, another critical dimension of robustness for real-world deployment is the model’s cross-lingual generalizability. While our study provides a strong validation on major English and Chinese benchmarks, assessing its efficacy across more diverse cultural and linguistic contexts remains a crucial direction for future work.

7. Conclusions

In this paper, we addressed the pressing challenge of multimodal fake news detection within the Web3.0 ecosystem by proposing a novel framework, the Bidirectional Semantic Enhancement and Adversarial Network (BSEAN). Our primary contribution lies in a multi-stage approach that emulates human-like verification by first achieving robust cross-modal alignment, then deeply exploring semantic inconsistencies via a dedicated enhancement and calibration mechanism, and, finally, learning generalizable features through a dual adversarial strategy. Extensive experiments on the Weibo and Twitter datasets demonstrate that BSEAN significantly outperforms existing state-of-the-art methods, validating its effectiveness in identifying subtle cross-modal semantic conflicts. While this work establishes a strong foundation for content-based analysis, future iterations could further align with the Web3.0 paradigm by integrating on-chain provenance data for a more holistic and trustworthy detection framework.

Author Contributions

Conceptualization and Design: Y.X., C.Z., and Z.C.; Methodology: C.Z. and Y.X.; Formal Analysis: H.P. and K.L.; Investigation: C.Z. and B.Z.; Resources: Z.C. and Z.Y.; Writing—Original Draft Preparation: C.Z. and X.S.; Writing—Review and Editing: Y.X., C.Z., and Z.C.; Visualization: K.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Major Science and Technology Special Project of Henan Province under Grant No. 251100210100; the Scientific Research Team Development Project of Zhongyuan University of Technology under Grant No. K2025TD002; the National Key Research and Development Project of China under Grant No. 2023YFB2703603; the Key Research Project for Higher Education of Henan Province under Grant No. 24A520059; and the 2024 Scientific and Technological Research Project in Henan Province under Grant No. 242102210136.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).