In this section, we explore the principles of gradient-guided fuzz testing for efficiently discovering vulnerabilities in binary programs and introduce our adaptive gradient-guided fuzz testing framework.

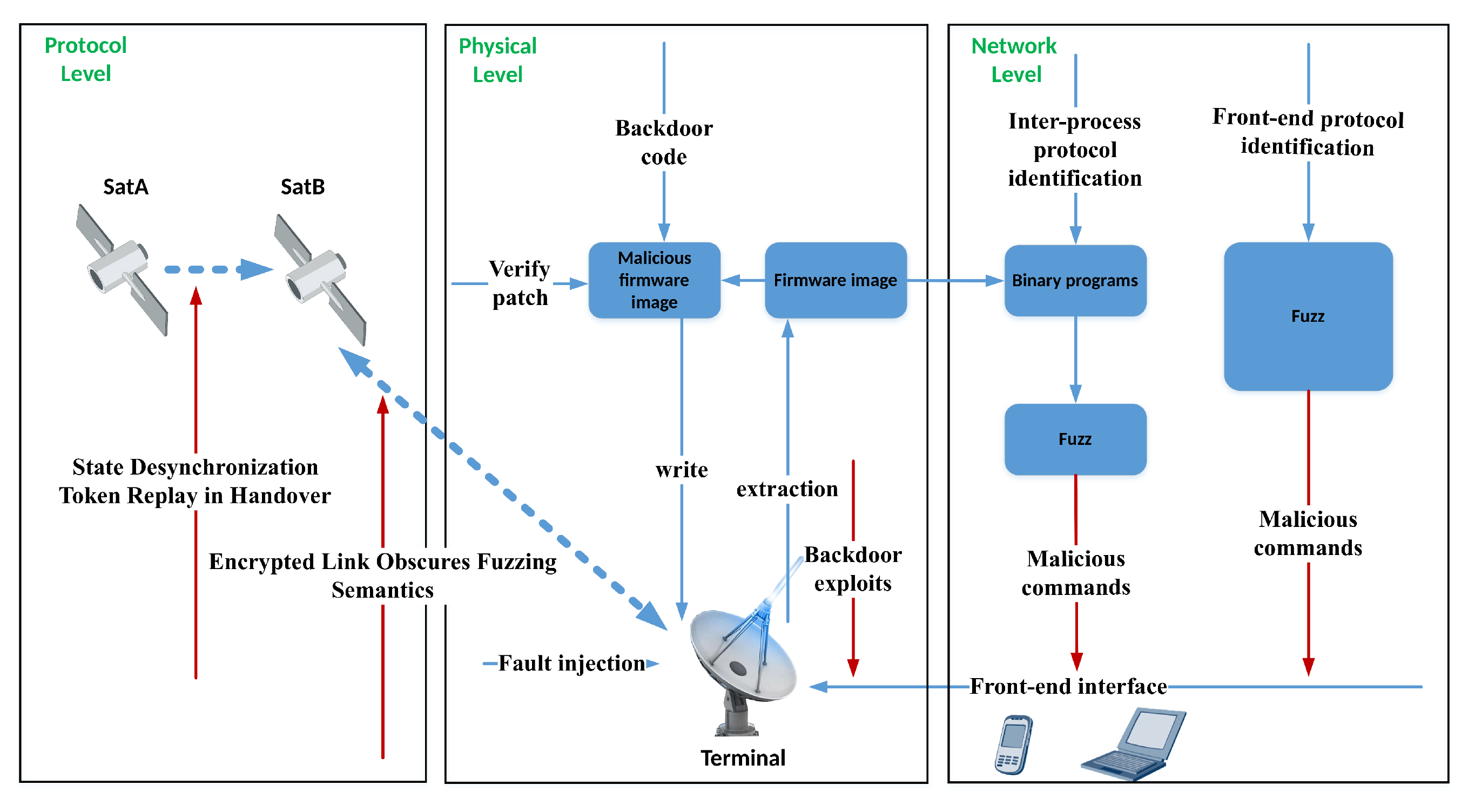

In summary, this threat model captures a spectrum of attack vectors: highly effective but hardware-dependent physical attacks, widely accessible but semantically opaque software attacks, and dynamic protocol-level threats that are both difficult to monitor and test. Collectively, these observations illustrate the unique challenges posed by SIGT systems—from physical tampering to protocol-level complexity. To address these issues, we introduce an adaptive fuzzing framework capable of dynamically navigating such deeply layered attack surfaces.

4.1. Gradient-Guided Fuzz Testing

Fuzz testing is a dynamic analysis vulnerability discovery technique that continuously sends mutated data to the target program to check if these inputs cause program errors or crashes [23]. Current mainstream binary fuzz testing tools, such as American fuzzy lop (AFL), adopt coverage-guided methods combined with the principles of evolutionary optimization algorithms, retaining only those inputs most likely to produce new code coverage to improve seed quality. However, evolutionary optimization algorithms tend to get stuck in local optima during the computation process [24], leading to a gradual decrease in efficiency in exploring new code paths. Gradient-guided optimization algorithms effectively address this issue [25].

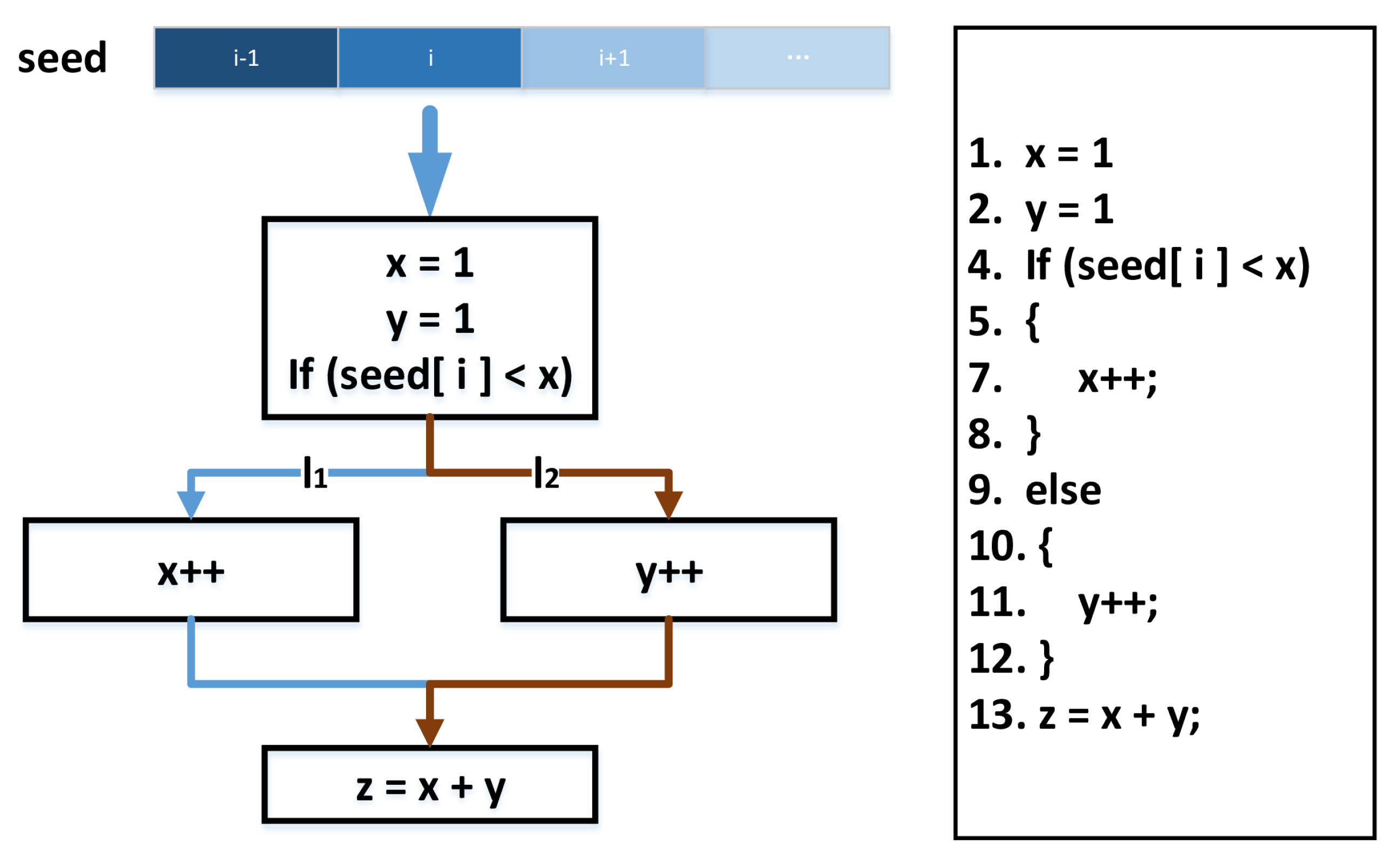

The principle of achieving efficient fuzz testing using gradient guidance is illustrated in Figure 6. For the seed provided as input to the target program, the ith byte typically corresponds to a reference point of a conditional branch statement in the program. Therefore, if we can identify, through gradient guidance, the byte in each seed most likely to change the outcome of the branch statement and mutate it accordingly, we may explore the sibling path of the original branch. For example, in Figure 6, mutating the value of seed[i] to be greater than x can transition the seed exploration path from l1 to l2. The gradient-guided algorithm meets this requirement in two steps: it first constructs a surrogate function that relates seed bytes to their coverage paths, and it then uses this function to calculate the gradient corresponding to each byte, thereby identifying the most promising bytes to mutate. However, due to the complexity of real-world programs, constructing a function that can smoothly represent this causal relationship is highly challenging.
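The idea can be illustrated with a minimal Python sketch. It is not the surrogate used by any particular tool: the sigmoid surrogate, the branch position `i = 2`, the constant `x = 100`, the temperature, and the step size are all assumptions chosen to mirror the `seed[i] > x` example. Gradients are estimated by finite differences, and the byte with the largest gradient magnitude is pushed in the direction that raises the surrogate.

```python
import math

def surrogate(seed, i=2, x=100, temperature=16.0):
    """Smooth stand-in for the branch `seed[i] > x` (near 0 on l1, near 1 on l2)."""
    return 1.0 / (1.0 + math.exp(-(seed[i] - x) / temperature))

def byte_gradients(f, seed, eps=1.0):
    """Central finite-difference estimate of df/d(seed[k]) for every byte k."""
    grads = []
    for k in range(len(seed)):
        up = list(seed)
        up[k] += eps
        down = list(seed)
        down[k] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads

def mutate_hottest_byte(f, seed, step=32):
    """Mutate the byte with the largest |gradient| along the gradient sign."""
    grads = byte_gradients(f, seed)
    k = max(range(len(seed)), key=lambda j: abs(grads[j]))
    direction = 1 if grads[k] > 0 else -1
    mutated = bytearray(seed)
    mutated[k] = min(255, max(0, mutated[k] + direction * step))
    return k, bytes(mutated)

seed = bytes([10, 20, 90, 40])          # seed[2] = 90 takes path l1 (90 <= 100)
position, mutated = mutate_hottest_byte(surrogate, seed)
print(position, list(mutated))          # byte 2 is selected and raised above x
```

Only the byte that feeds the branch has a nonzero gradient here, so it is the one selected. In a real program the surrogate must be learned, which is the difficulty the paragraph above points out.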

4.2. Neural Program Smoothing Fuzz Testing

Given the limitations of current gradient-guided methods in fuzzing programs, She et al. proposed a fuzz testing tool called NEUZZ based on neural program smoothing [26,27]. NEUZZ is a pioneering framework that integrates deep learning into fuzzing by modeling how input bytes affect code coverage. It trains a neural network to learn the mapping from inputs to edge coverage and uses gradient backpropagation to identify mutation positions. This method utilizes a neural network model [28] to learn the functional relationship between input seed bytes and output coverage edges. It takes the byte sequences of each initial seed as input and outputs a vector representation of the corresponding coverage bitmap. Through the neural network [29,30], it captures the implicit correlations between seed byte sequences and program branches, and it then performs gradient backpropagation to identify the input bytes most likely to influence control flow transitions [31]. Subsequent mutations are guided by the magnitude of these gradients, enabling targeted exploration of previously unreached paths.

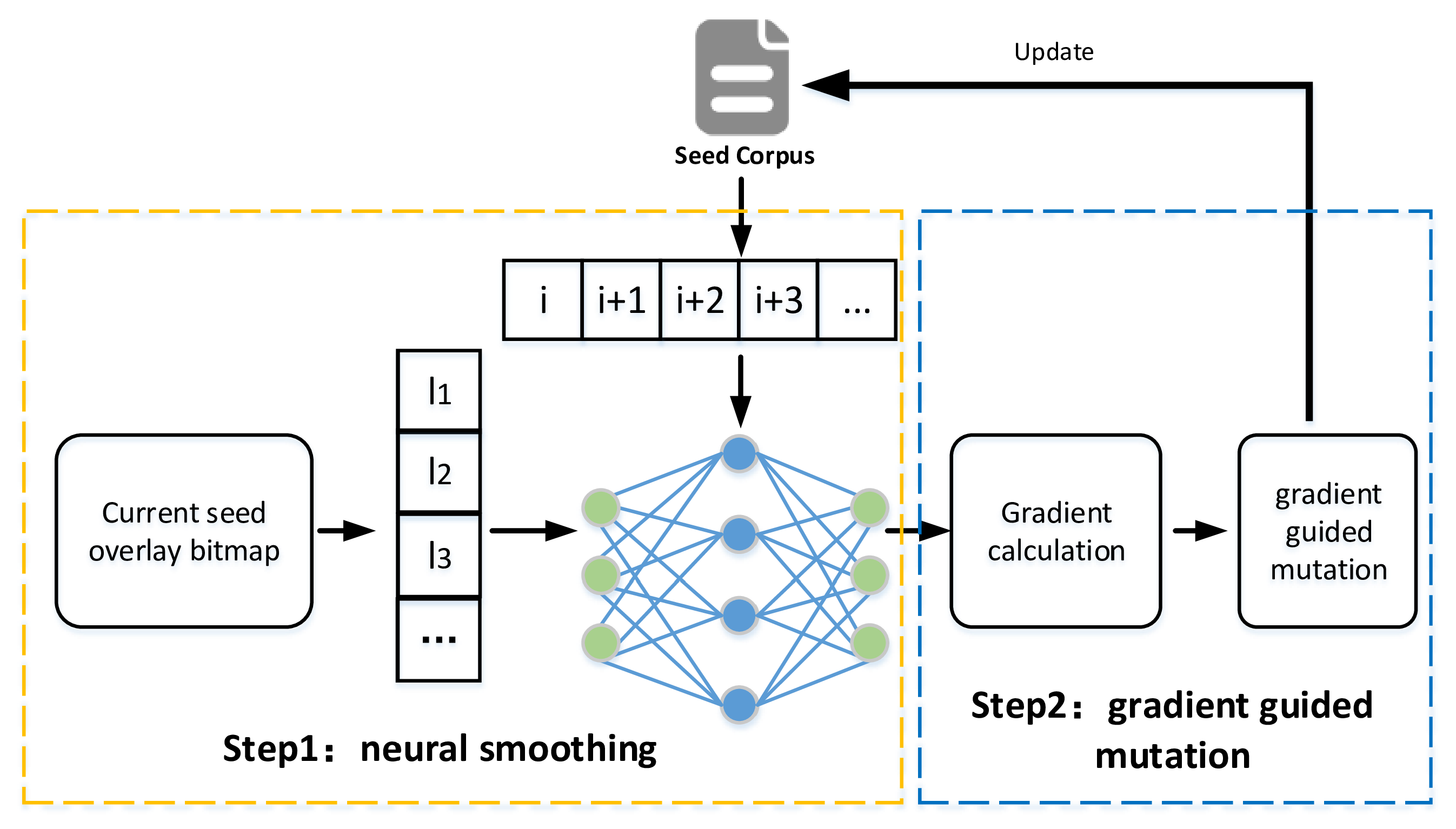

The specific process of NEUZZ is illustrated in Figure 7, and it can be divided into two stages: neural program smoothing and gradient-guided mutation. In the smoothing stage, NEUZZ constructs a mapping function between the seed byte positions and the edge bitmap:

$f: \{0\mathrm{x}00, \ldots, 0\mathrm{xFF}\}^{m} \rightarrow \{0, 1\}^{n}$

All the initial seeds are first executed to collect the set of covered edges, which defines the output bitmap's dimensionality n. Each input seed is recorded as a byte sequence of length m, which is the input dimensionality. For any given execution, if an edge is triggered, the corresponding bit in the output vector is set to 1; otherwise, it is set to 0. For example, a seed activating edge1 but not edge2 yields an output like [1, 0, …]. The accumulated input–output pairs from all the seeds are then used to train the neural network. After training, gradient-based mutation is performed by computing the derivative of the output edge bitmap with respect to each input byte. These gradients serve as indicators of each byte's mutation potential.
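The construction of the training pairs can be sketched as follows. This is an illustrative sketch rather than NEUZZ's actual code: the function name `build_training_pairs`, the zero-padding of short seeds to length m, the scaling of bytes to [0, 1], and the string edge identifiers are assumptions made for the example.

```python
def build_training_pairs(seeds, traces):
    """Turn seeds and their edge traces into (input vector, bitmap) pairs.

    seeds  -- list of bytes objects (the initial seed pool)
    traces -- list of sets; traces[k] holds the edge IDs hit by seeds[k]
    """
    edge_ids = sorted(set().union(*traces))      # all covered edges: n outputs
    column = {edge: j for j, edge in enumerate(edge_ids)}
    m = max(len(s) for s in seeds)               # input dimensionality

    inputs, bitmaps = [], []
    for seed, trace in zip(seeds, traces):
        padded = seed.ljust(m, b"\x00")          # assumption: pad with zeros
        inputs.append([b / 255.0 for b in padded])
        row = [0] * len(edge_ids)
        for edge in trace:
            row[column[edge]] = 1                # edge triggered -> bit set to 1
        bitmaps.append(row)
    return edge_ids, inputs, bitmaps

seeds = [b"AB", b"ABC"]
traces = [{"edge1"}, {"edge1", "edge2"}]
edge_ids, inputs, bitmaps = build_training_pairs(seeds, traces)
print(edge_ids, bitmaps)
```

The first seed activates edge1 but not edge2, so its bitmap row has a 1 followed by a 0, matching the example in the text.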

To implement the smoothing step, NEUZZ uses a fully connected feedforward neural network with a single hidden layer, typically consisting of 4096 neurons with ReLU activation. The output layer uses a sigmoid function to predict the edge activation probabilities. This lightweight design ensures both modeling capacity and computational efficiency.
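A dependency-free sketch of this architecture is given below, assuming a much smaller hidden layer (8 units instead of 4096) and random weights standing in for trained ones. The training loop is omitted. The function names are hypothetical. The sketch shows the forward pass (ReLU hidden layer, sigmoid output) and the gradient of one output edge with respect to every input byte, which is the quantity used later to rank mutation positions.

```python
import math
import random

def init_model(m, hidden, n, seed=0):
    """Random weights as a stand-in for a trained model (assumption)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(m)] for _ in range(hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(n)]
    return w1, [0.0] * hidden, w2, [0.0] * n

def forward(model, x):
    """Return hidden pre-activations and sigmoid edge probabilities."""
    w1, b1, w2, b2 = model
    pre = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(w1, b1)]
    hidden = [max(0.0, p) for p in pre]                       # ReLU
    logits = [sum(w * v for w, v in zip(row, hidden)) + b
              for row, b in zip(w2, b2)]
    probs = [1.0 / (1.0 + math.exp(-z)) for z in logits]      # sigmoid
    return pre, probs

def input_gradient(model, x, edge):
    """d probs[edge] / d x[k] for every input byte k, via the chain rule."""
    w1, _, w2, _ = model
    pre, probs = forward(model, x)
    d_sigmoid = probs[edge] * (1.0 - probs[edge])
    grad = [0.0] * len(x)
    for j, p in enumerate(pre):
        if p > 0.0:                        # ReLU passes gradient only if active
            for k in range(len(x)):
                grad[k] += d_sigmoid * w2[edge][j] * w1[j][k]
    return grad

model = init_model(m=4, hidden=8, n=3)
x = [0.1, 0.5, 0.9, 0.3]                   # normalized seed bytes
grad = input_gradient(model, x, edge=1)
print([round(g, 4) for g in grad])
```

In practice a deep learning framework would compute the same gradient automatically; the explicit chain rule is written out here only to show what is being differentiated.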

The NEUZZ authors report that the neural network structure plays a secondary role compared to input diversity: experiments using deeper networks or wider hidden layers achieved only marginal improvements in coverage while significantly increasing training cost and risk of overfitting. Conversely, removing the hidden layer degraded performance, due to limited nonlinear modeling capacity. These findings indicate that a one-hidden-layer architecture is sufficient to approximate the mapping from seed bytes to coverage edges, offering a good trade-off between accuracy, generalization, and overhead in fuzzing scenarios [26].

Experimental results demonstrate that NEUZZ achieves up to three times the edge coverage compared to traditional fuzzers like AFL over a 24 h run. It is particularly effective on large programs, where its learning-guided approach allows it to generalize internal branching logic and escape coverage plateaus. This advantage arises from the neural network’s ability to generalize over the collective edge-activation patterns of all seed inputs [32,33]. The quality and diversity of the initial seed set directly impact the model’s ability to capture complex branching behavior [34,35]. When the seed pool includes enough inputs to cover a broad range of sibling edge clusters, the neural model can guide mutations toward unexplored conditional branches more effectively. Conversely, a narrow seed distribution limits learning capacity and causes early saturation.

For Satellite Internet Ground Terminals (SIGTs), where binaries are large and obfuscated, NEUZZ’s gradient-based exploration framework offers significant advantages. Its learned model can navigate the deep and complex control-flow structures typical of SIGT binaries, triggering deeper paths and discovering subtle logic vulnerabilities. These properties make NEUZZ—and its successors, like MAFUZZ—particularly well-suited for security analysis in embedded and closed-source systems.

Building on NEUZZ, MAFUZZ retains the surrogate modeling framework: a feedforward neural network with a single hidden layer is trained to approximate the mapping from seed byte sequences to coverage edge vectors. The model is optimized via standard backpropagation, and the gradient of the predicted coverage vector with respect to the input bytes is used to locate high-impact mutation positions.

Unlike conventional software, SIGT firmware often contains highly discrete control logic, such as hardcoded state machines and nested branches, which may introduce gradient discontinuities. To maintain the effectiveness of gradient-guided mutation in such scenarios, MAFUZZ restricts mutation to high-confidence bytes (i.e., those with large gradient magnitudes), reduces reliance on weak or noisy gradients, and emphasizes seed corpus diversity during training. This ensures that the model can generalize over discontinuous coverage landscapes and still provide meaningful directional guidance for mutation.
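One simple way to restrict mutation to high-confidence bytes is sketched below. The selection rule is an assumption for illustration, not the rule MAFUZZ necessarily uses: positions are ranked by gradient magnitude, at most `top_k` are kept, and any position whose magnitude falls below a fraction `min_fraction` of the largest one is discarded as weak or noisy. Both parameters are hypothetical.

```python
def select_mutation_bytes(gradient, top_k=3, min_fraction=0.25):
    """Return byte positions with the largest |gradient|, dropping weak ones."""
    magnitudes = [abs(g) for g in gradient]
    peak = max(magnitudes, default=0.0)
    if peak == 0.0:
        return []                       # flat gradient: no confident position
    ranked = sorted(range(len(gradient)),
                    key=lambda k: magnitudes[k], reverse=True)
    return [k for k in ranked[:top_k] if magnitudes[k] >= min_fraction * peak]

gradient = [0.01, -0.8, 0.3, 0.0, 0.05]
print(select_mutation_bytes(gradient))  # positions 1 and 2 pass the cutoff
```

Position 4 is within the top three by rank but is dropped because its magnitude (0.05) is below a quarter of the peak (0.8). When the gradient is flat, the function returns no positions at all.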

4.4. Adaptive Gradient-Guided Fuzzing

While NEUZZ demonstrates strong performance in gradient-guided fuzzing, its reliance on a static neural program smoothing process limits its ability to discover new execution paths once the initial sibling edge clusters are exhausted. In particular, when the initial seed pool lacks diversity, the neural network can only guide mutations within a constrained edge space, leading to early saturation and coverage stagnation.

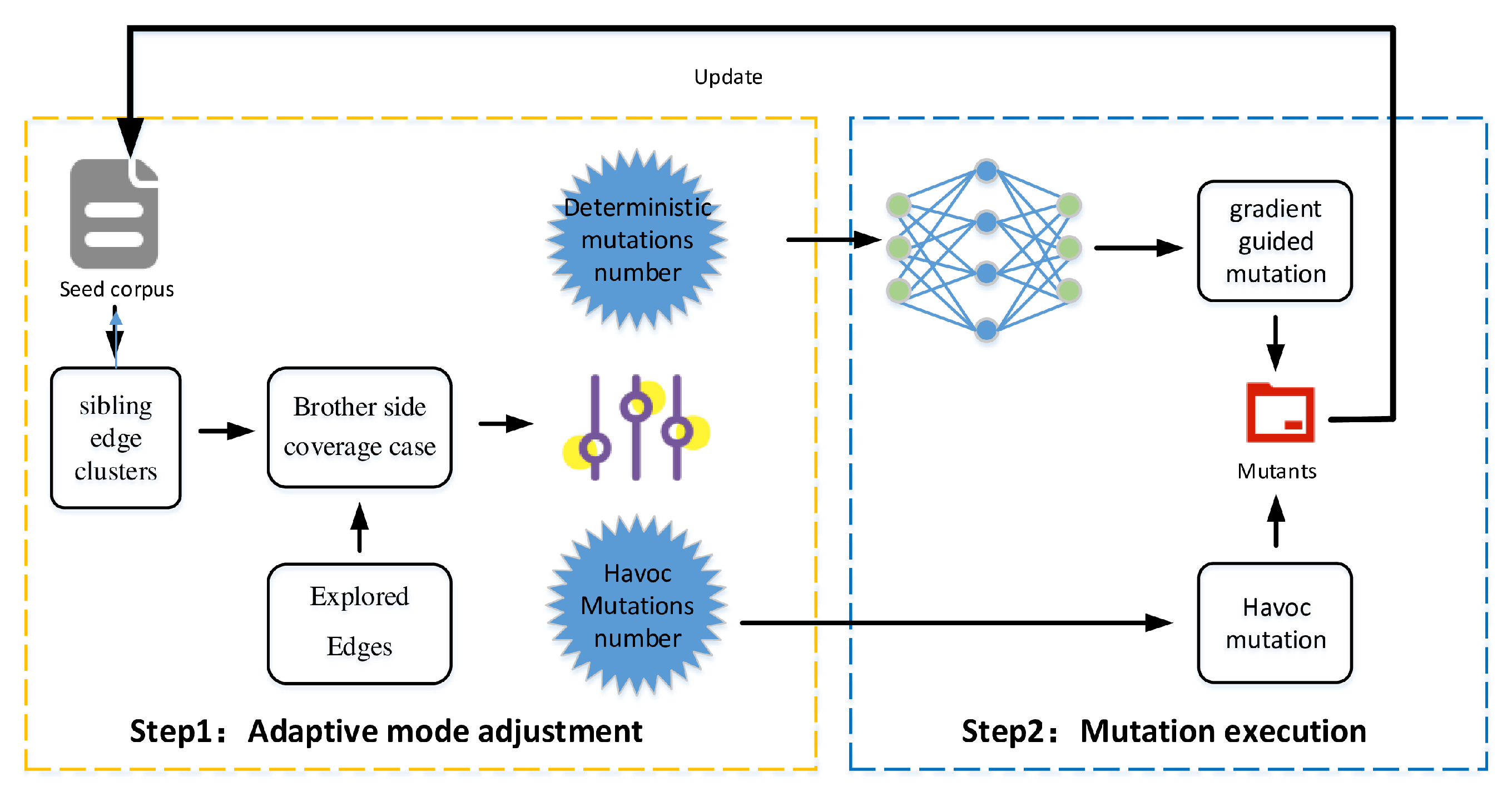

To address this limitation, we propose a novel adaptive gradient-guided fuzzing framework—MAFUZZ—that introduces two key enhancements to the traditional NEUZZ architecture: (1) integration of a Havoc mutation mode, and (2) a dynamic controller to adjust the balance between gradient-guided and random mutation strategies during fuzzing.

Hybrid mutation design. MAFUZZ extends the NEUZZ mutation pipeline by incorporating AFL-style Havoc mutation operations, such as random bit flips, byte insertions, and deletions. These random mutations enable the exploration of previously unreachable sibling edge clusters, complementing the precision of gradient-guided mutation. When the neural model becomes saturated, Havoc provides a mechanism to escape local minima by generating novel seed variants.
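The three operations named above can be sketched in a few lines of Python. This is a simplified illustration, not AFL's Havoc stage, which stacks many more operators. The function name `havoc`, the number of stacked rounds, and the uniform choice among operators are assumptions.

```python
import random

def havoc(seed, rounds=4, rng=None):
    """Apply a stack of random bit flips, byte insertions, and deletions."""
    if rng is None:
        rng = random.Random()
    data = bytearray(seed)
    for _ in range(rounds):
        op = rng.choice(("flip", "insert", "delete"))
        if op == "flip" and data:
            pos = rng.randrange(len(data))
            data[pos] ^= 1 << rng.randrange(8)        # flip one random bit
        elif op == "insert":
            data.insert(rng.randrange(len(data) + 1), rng.randrange(256))
        elif op == "delete" and len(data) > 1:        # never empty the seed
            del data[rng.randrange(len(data))]
    return bytes(data)

variant = havoc(b"GET /", rng=random.Random(1))
print(variant)
```

Passing a seeded `random.Random` makes a run reproducible, which helps when a crashing variant has to be regenerated.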

Adaptive mode regulation. Instead of statically applying both mutation strategies, MAFUZZ dynamically adjusts their usage during fuzzing based on real-time coverage feedback. After each round of seed execution, the algorithm analyzes the proportion of explored edges in sibling clusters and accordingly adjusts the number of gradient-guided and Havoc mutations for the next round. When the average saturation ratio is low, the controller favors gradient mutation for efficiency; when the ratio is high, it emphasizes Havoc to increase diversity.

Mutation control algorithm. The mutation strategy control algorithm lies at the heart of MAFUZZ’s adaptiveness. It evaluates the edge coverage status of the current seed pool and dynamically adjusts the balance between gradient-guided and Havoc mutation modes. The process consists of three stages, outlined as follows and corresponding to the structure of Algorithm 1:

| Algorithm 1 Adaptive mutation pattern modulation |
| --- |
| Input: seeds, program, guideNum, randNum |
| Output: guideNum, randNum |
| 1: for each seeds[i] in seeds do |
| 2: Edge[i] ← SeedExecution(seeds[i]) |
| 3: totalEdge[j] ← totalEdge[j] + Edge[i] |
| 4: end for |
| 5: correspRelation ← getEdgeRelation(program) |
| 6: emptyArray ← correspRelation |
| 7: for j in totalEdge do |
| 8: for edge in correspRelation do |
| 9: if totalEdge[j] = edge then |
| 10: emptyArray[edge] ← 1 |
| 11: end if |
| 12: end for |
| 13: for each cluster i in emptyArray do |
| 14: ratio[i] ← (flagged edges in cluster i) / (edges in cluster i) |
| 15: averageRatio ← mean of ratio over all clusters |
| 16: end for |
| 17: end for |
| 18: if averageRatio is low then |
| 19: guideNum ← guideNum × (1 + averageRatio) |
| 20: randNum ← randNum × (1 − averageRatio) |
| 21: end if |
| 22: if averageRatio is high then |
| 23: guideNum ← guideNum × (1 − averageRatio) |
| 24: randNum ← randNum × (1 + averageRatio) |
| 25: end if |
| 26: return guideNum, randNum |

(1) Edge coverage aggregation (lines 1–4). Each seed in the seed pool is executed on the target binary, and the edges it triggers are recorded. These edges are aggregated into a global set, totalEdge, representing the complete set of coverage edges observed in the current round. This forms the empirical basis for evaluating exploration saturation.

(2) Sibling edge cluster analysis (lines 5–12). Using static analysis (e.g., disassembling with objdump), the control flow graph (CFG) of the program is extracted. From this, all sibling edge clusters—sets of control-flow-related edges—are constructed as correspRelation. A zero-initialized structure emptyArray is created to match this layout.

The algorithm then flags each edge in totalEdge within its respective cluster. For each sibling cluster, the ratio of visited edges is computed. Averaging across all the clusters yields the global averageRatio, which quantifies the current round’s overall exploration depth.

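(3) Mutation count adjustment (lines 18–26). Finally, the controller rescales guideNum and randNum by factors of (1 ± averageRatio): when the average saturation ratio is low, guideNum is increased and randNum is decreased; when it is high, the opposite adjustment is applied. This feedback loop allows MAFUZZ to dynamically balance exploitation and exploration as fuzzing progresses, improving coverage across structurally diverse programs.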
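The controller logic described above can be sketched in Python as follows. Several details are assumptions made for the example and are not specified in the text: the cutoff `threshold = 0.5` that separates low from high saturation, the use of a single if/else in place of two separate conditions, the rounding to integers with a floor of one mutation per mode, and the representation of sibling clusters as sets of edge identifiers.

```python
def average_saturation(total_edges, sibling_clusters):
    """Mean fraction of visited edges per sibling cluster (averageRatio)."""
    ratios = [len(cluster & total_edges) / len(cluster)
              for cluster in sibling_clusters if cluster]
    return sum(ratios) / len(ratios) if ratios else 0.0

def adjust_mutation_counts(guide_num, rand_num, average_ratio, threshold=0.5):
    """Rescale gradient-guided and Havoc mutation counts for the next round."""
    if average_ratio < threshold:
        # Low saturation: the model still has unexplored siblings to target.
        guide_num *= 1 + average_ratio
        rand_num *= 1 - average_ratio
    else:
        # High saturation: shift budget toward random mutation for diversity.
        guide_num *= 1 - average_ratio
        rand_num *= 1 + average_ratio
    return max(1, round(guide_num)), max(1, round(rand_num))

total_edges = {"a", "b", "c"}                       # edges hit this round
clusters = [{"a", "b"}, {"c", "d"}, {"e", "f"}]     # sibling edge clusters
ratio = average_saturation(total_edges, clusters)   # (1.0 + 0.5 + 0.0) / 3
print(ratio, adjust_mutation_counts(100, 100, ratio))
```

With one cluster fully explored, one half explored, and one untouched, the average ratio is 0.5, so under the assumed threshold the budget shifts toward Havoc. The floor of one mutation per mode keeps either strategy from being switched off completely when averageRatio approaches 1.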

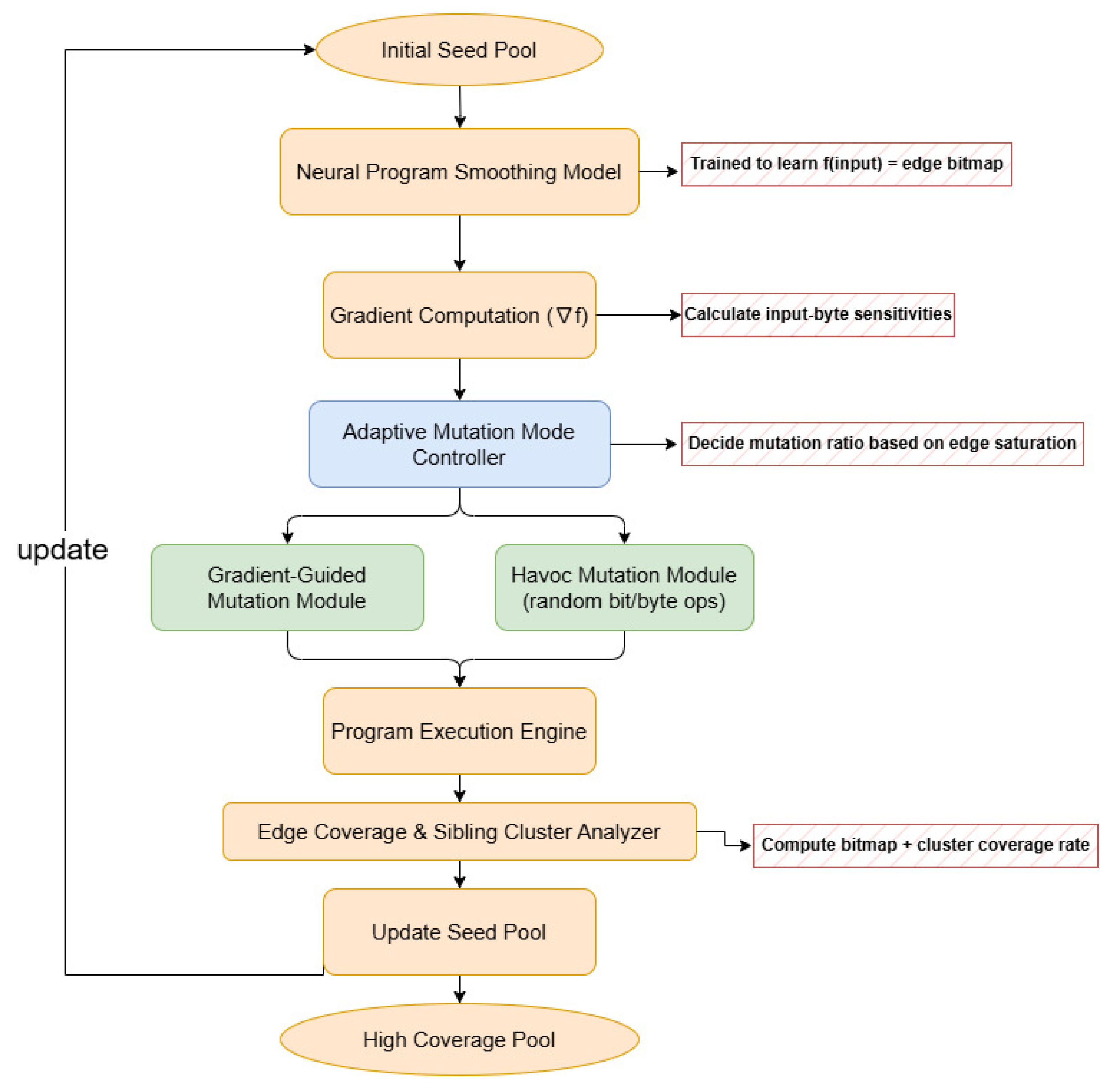

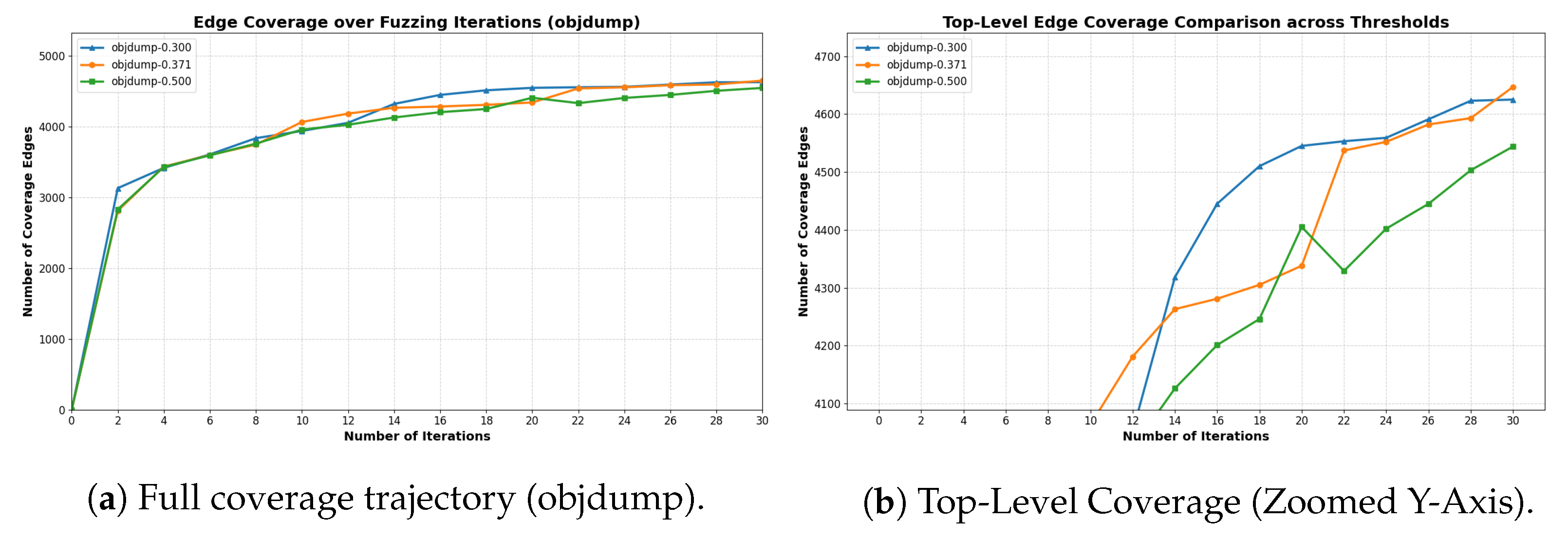

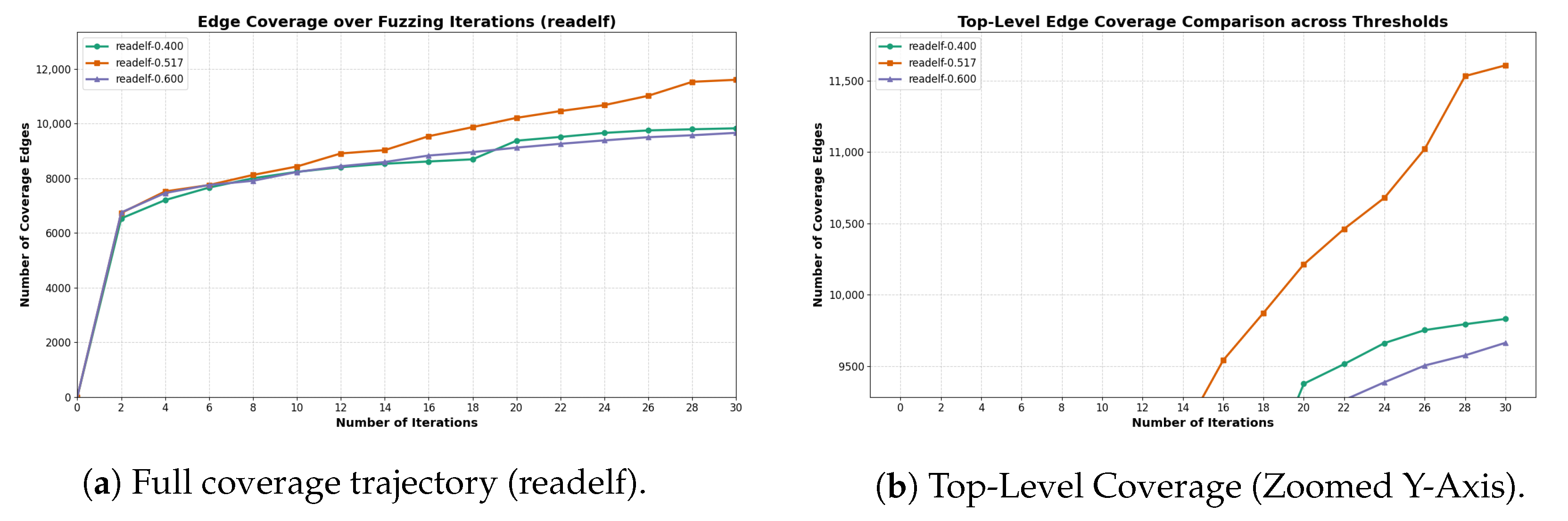

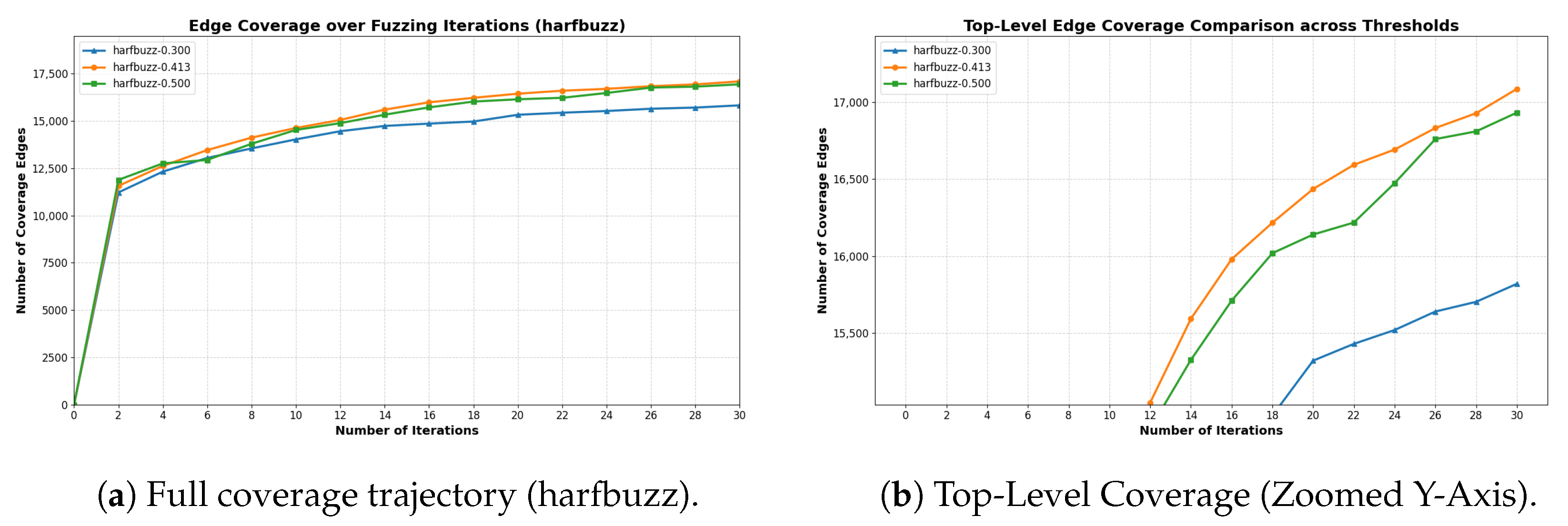

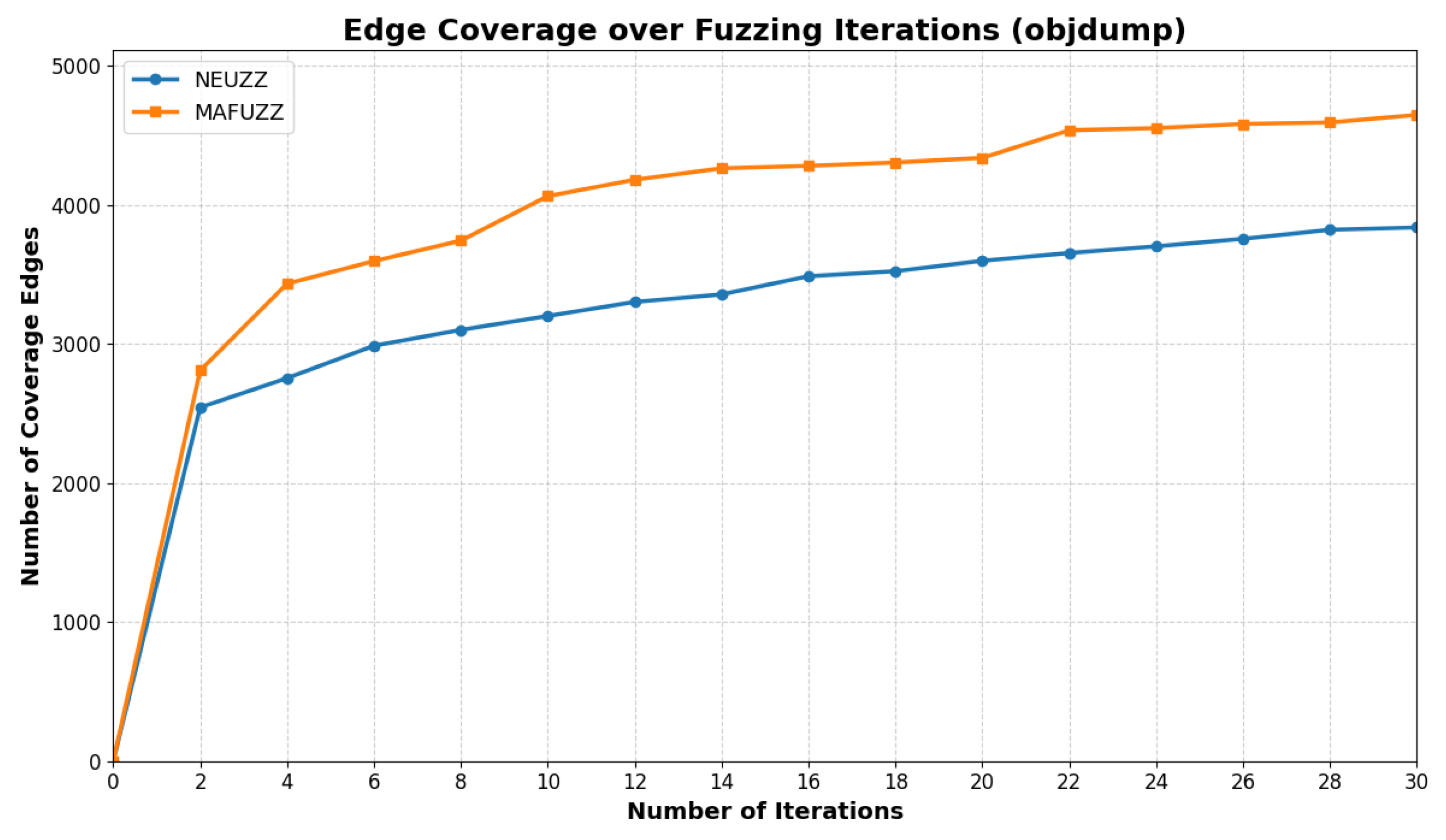

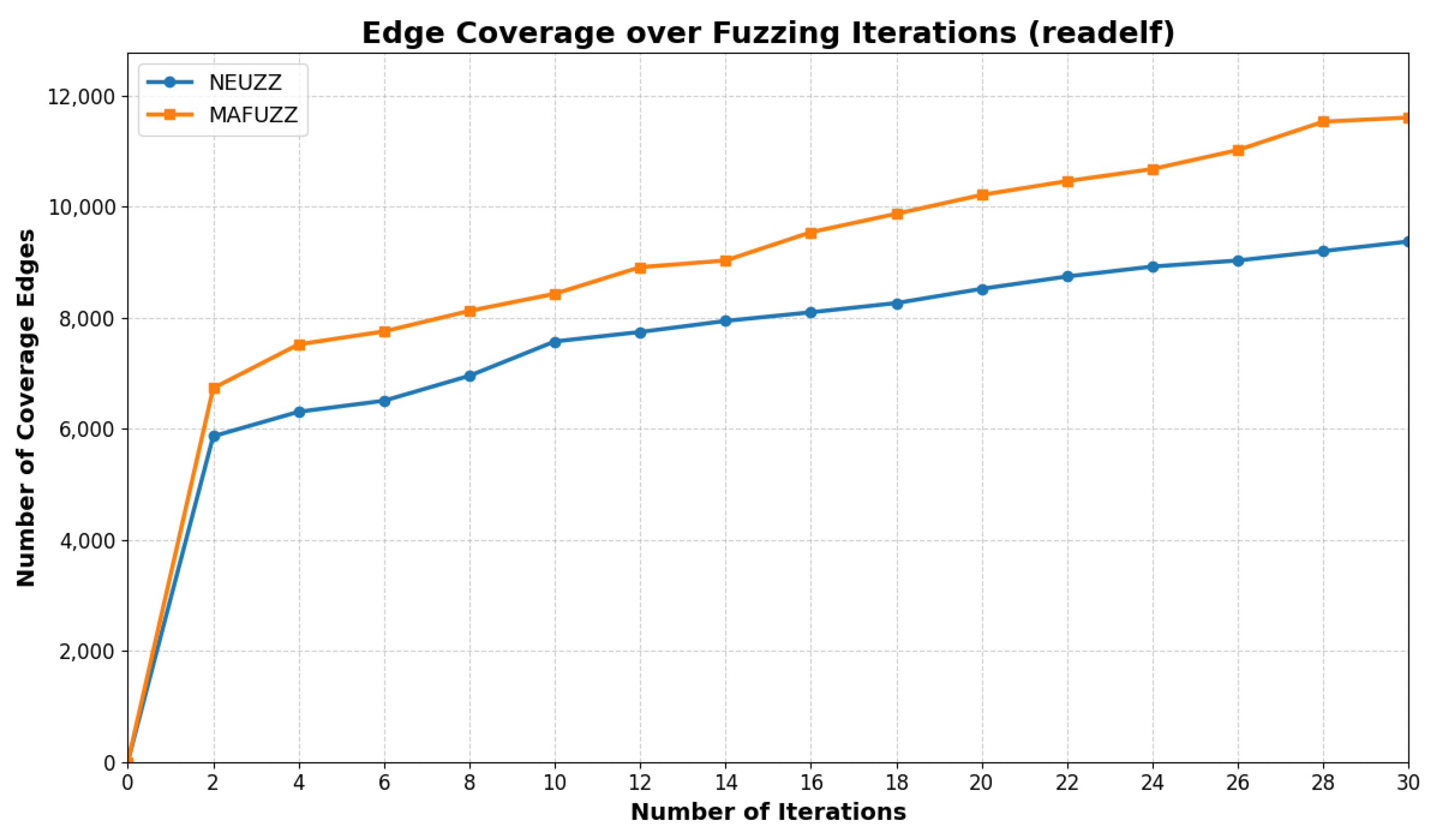

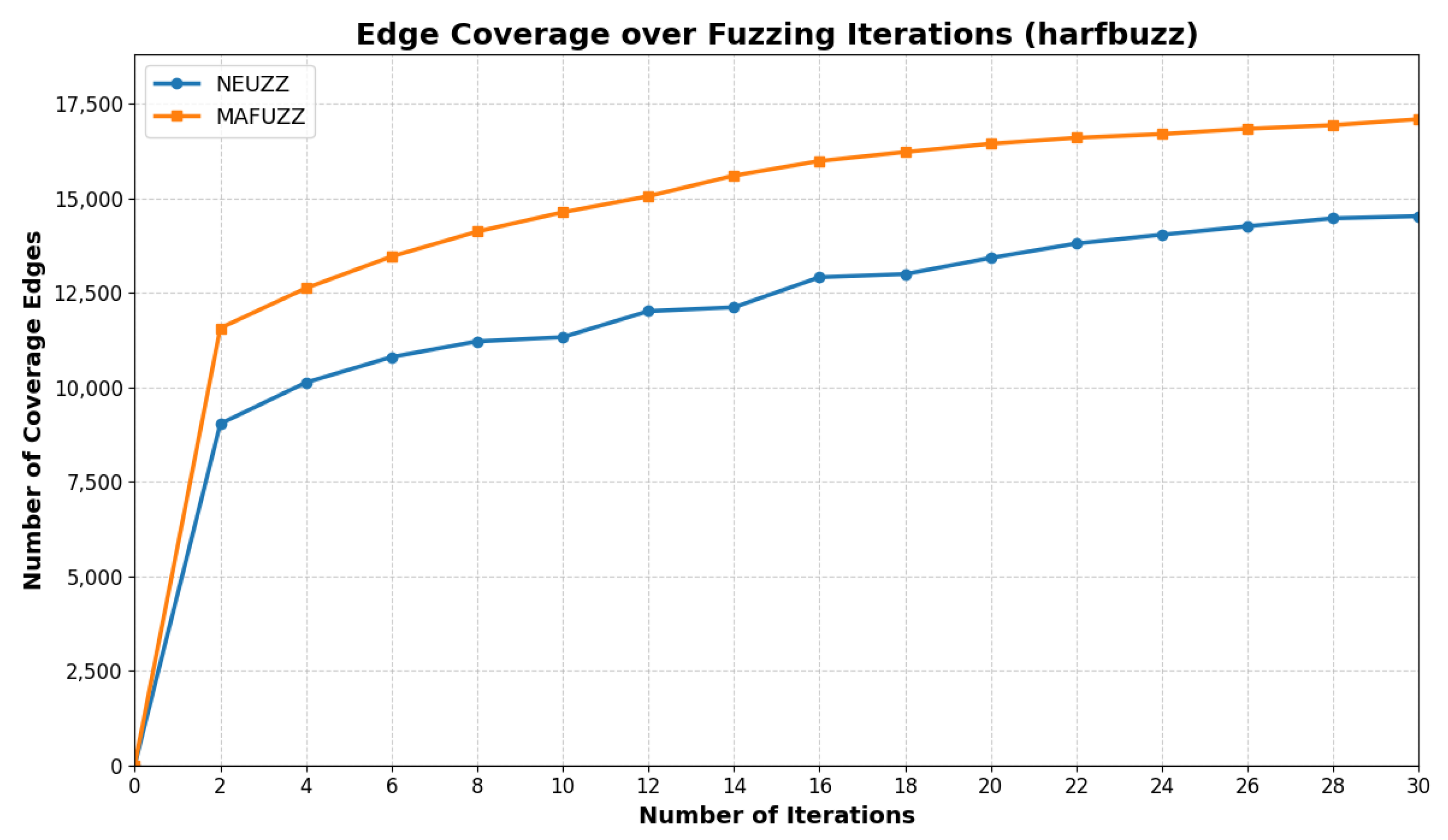

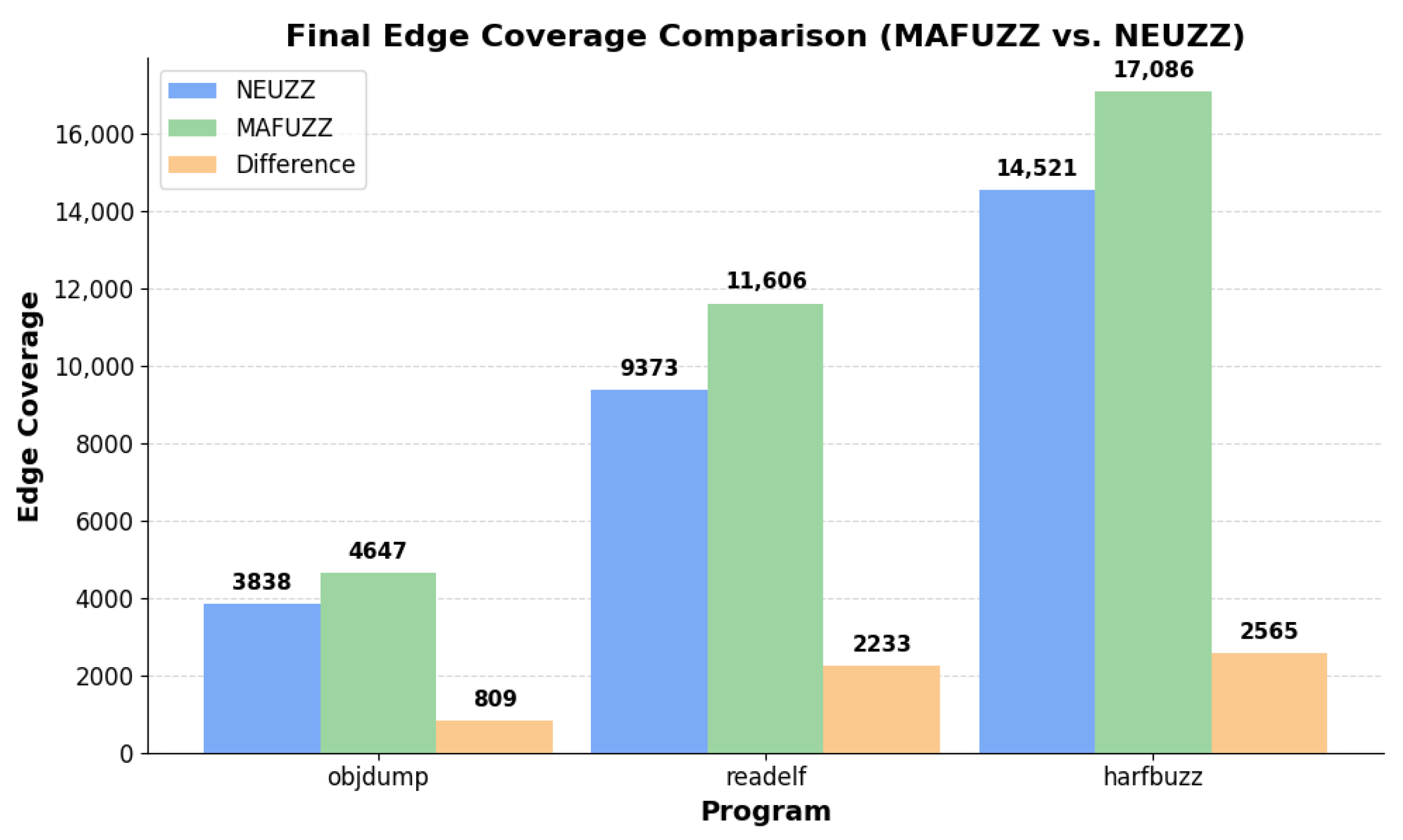

Figure 8 presents the architectural differences between NEUZZ and our proposed framework MAFUZZ. While retaining NEUZZ’s neural smoothing and gradient-guided mutation modules, MAFUZZ introduces two key enhancements. First, a Havoc mutation module is incorporated to explore novel edge clusters that are unreachable via gradient-guided paths. Second, an adaptive controller dynamically regulates the ratio of guided versus random mutations based on the saturation level of explored edge clusters in the seed pool. This closed-loop architecture improves fuzzing efficiency by balancing precision and diversity in input mutations.

Framework overview. Figure 9 presents the overall workflow of MAFUZZ. Starting from an initial seed pool, inputs are processed by a neural smoothing model to estimate coverage gradients. An adaptive controller adjusts the ratio of gradient-guided and Havoc mutations based on coverage feedback (the two mutation types are applied sequentially, with gradient-guided mutation followed by Havoc). Mutated seeds are executed, and edge results are used to update the seed pool, forming a closed loop that continuously refines mutation strategies to improve path coverage.

Summary. MAFUZZ introduces a hybrid and adaptive mutation framework that combines the precision of gradient guidance with the exploratory strength of Havoc mutation. By dynamically regulating the two strategies according to the real-time saturation of edge clusters, MAFUZZ enhances the diversity and depth of seed mutation. This adaptive control improves path coverage efficiency and robustness across different program complexities.