Abstract

The industrial-scale growth of Internet of Things (IoT) applications, a significant number of which are based on the concept of federation, presents unique security challenges due to their distributed nature and the need for secure communication between components from different administrative domains. A federation may be created for the duration of a mission, such as military operations or Humanitarian Assistance and Disaster Relief (HADR) operations. These missions often occur in very difficult or even hostile environments, posing additional challenges for ensuring reliability and security. The heterogeneity of devices, protocols, and security requirements in different domains further complicates the secure distribution of data streams in federated IoT environments. Effective dissemination of data streams in such environments should also provide the flexibility to filter and search for patterns in real time, so that critical events or threats (e.g., fires and hostile objects) can be detected as the information needs of end users change. This paper presents a novel and practical framework for secure and reliable data stream dissemination in federated IoT environments, leveraging blockchain, Apache Kafka brokers, and microservices. To authenticate IoT devices and verify data streams, we have integrated a hardware and software IoT gateway with the Hyperledger Fabric (HLF) blockchain platform, which records the distinguishing features of IoT devices (fingerprints). We analyze our platform’s security, focusing on secure data distribution, and formally discuss potential attack vectors and ways to mitigate them through the platform’s design. We assess the effectiveness of the proposed framework through extensive performance tests in two setups: a cloud-based Amazon Web Services (AWS) environment and a resource-constrained Raspberry Pi environment.
Implementing our framework in the AWS cloud infrastructure has demonstrated that it is suitable for processing audiovisual streams in environments that require immediate interoperability. The results are promising, as the average time it takes for a consumer to read a verified data stream is on the order of seconds. The measured time for complete processing of an audiovisual stream corresponds to approximately 25 frames per second (fps). The results obtained also confirmed the computational stability of our framework. Furthermore, we have confirmed that our environment can be deployed on resource-constrained commercial off-the-shelf (COTS) platforms while maintaining low operational costs.

1. Introduction

We are observing an industrial-scale increase in the use of the Internet of Things (IoT) in both the civilian and military spheres, and a significant number of these deployments are multi-domain. Multi-domain IoT environments such as intelligent transportation, smart power grids, resilient smart cities, advanced healthcare, and hybrid military operations increasingly adopt a federated concept. Establishing a federation aims to enable different entities to use shared resources and exchange (disseminate) information securely and efficiently without relying on a central authority, thus facilitating cooperation and increasing the resilience of the entire system.

Federated IoT environments are distributed, with their components and IoT devices located in different places and belonging to various entities. An illustrative example is the formation of a federation comprising NATO countries and non-NATO mission actors (Federated Mission Networking, FMN) []. In this model, each actor retains control over its capabilities and operations while accepting and meeting the requirements outlined in pre-negotiated and agreed-upon arrangements, such as the security policy. Another example involves the interaction of civilian services and military forces, which form a federation to provide humanitarian assistance during natural disasters (Humanitarian Assistance and Disaster Relief, HADR).

This collaboration necessitates a system architecture capable of processing data streams in real-time, filtering and searching for patterns to identify critical events (e.g., traffic congestion) or various threats (e.g., fire and hostile entities). Such requirements underscore the need for a system that can dynamically distribute data in response to the evolving information demands of end users (contextual dissemination). One approach to achieve this is by ranking information and services based on the value of information (VoI), a subjective measure that assesses the value of information to its users. Platforms like VOICE [] utilize VoI to rank information objects (IOs) and data producers according to their relevance and usefulness.

The aforementioned system presents unique security and reliability challenges due to its distributed nature and the necessity for secure communication between components from different administrative domains. The heterogeneity of devices and protocols makes the requirements for secure data stream distribution even harder to fulfill. The basic problem remains: how do we carry out the acquisition and fusion of data from various sources with different levels of trust and operate in computing environments with varying degrees of reliability and security? To solve it, we first need to answer the following sub-questions:

- Security gap: How do we implement secure data distribution among participants in a federated environment, adhering to the data-centric security paradigm []? This involves ensuring robust data protection starting from the point of origin, maintaining integrity throughout its entire life cycle, and facilitating granular access control mechanisms to enforce strict data permissions.

- Identity management gap: How do we manage the identity of devices? How do we identify devices?

- Real-time data access gap: How do we enable the processing and sharing of relevant IOs based on their VoI, importance, and relevance within a specified time range?

- Resource allocation gap: How do we dynamically allocate resources to effectively manage trade-offs in a data dissemination environment while taking customer key performance indicators (KPIs) into account?

- Network integration and interoperability gap: How do we organize interconnections, especially between unclassified systems (civilian systems) and military systems?

- Resilience and centralization gap: How do we ensure data availability in constrained (partially isolated) environments?

To close the gaps mentioned above effectively, it is essential to integrate various concepts and technologies while considering the security requirements of federated IoT environments. This integration involves implementing a data-centric authentication mechanism that employs a unique identity (fingerprint) that can be reliably stored within a distributed ledger (distributed ledger technology, DLT). Additionally, it involves establishing a data stream processing system built on a lightweight and manageable pool of microservices, complemented by context-based real-time data dissemination technologies. Consequently, this paper presents a multi-layered framework architecture aimed at ensuring the secure and reliable dissemination of data streams within a multi-organizational federation environment. Our framework implements data-centric authentication based on the unique identities of IoT devices. We consider our main contributions to be as follows:

- We have developed a novel and practical framework for the secure and dynamic dissemination of data streams within a multi-organizational federation environment, utilizing Hyperledger Fabric [], Apache Kafka as the data queuing technology, and microservice processing logic that verifies and disseminates data streams (implemented with the Kafka Streams API library in Java [] and the Sarama library in Go []);

- We have integrated a hardware-software IoT gateway with a DLT (Hyperledger Fabric) to authenticate IoT devices and verify data streams, which involves the deployment of the fingerprint enrichment layer in conjunction with the protocol forwarder (proxy) component;

- We validated the effectiveness of the proposed framework by conducting extensive performance tests in two setups: the Amazon Web Services cloud-based and the Raspberry Pi resource-constrained environment.

The rationale for utilizing Kafka is grounded in its implementation of a publish–subscribe model and its adherence to a commercial off-the-shelf (COTS) approach. These are crucial for addressing the needs and constraints of federated IoT environments, where we emphasize interoperability between military and civilian systems. Moreover, we have adopted the Kafka Streams API library, a decision motivated by the inherent advantages of Kafka technology (the library leverages Kafka’s built-in mechanisms), including its fault tolerance and low operational costs associated with infrastructure deployment.

Furthermore, we have opted to integrate Hyperledger Fabric technology into our system, which requires that all participating parties be known to each other. This results in a permissioned blockchain that employs public key infrastructure. Our solution also features a single global instance of the distributed ledger, enabling the seamless transfer (mobility) of devices between organizations within the federation. This allows these devices to leverage another organization’s infrastructure for secure data dissemination. Additionally, private data channels can be established between organizations, ensuring that the identities of selected devices remain confidential from others.

By integrating these proposed solutions, multi-domain IoT environments can ensure secure and efficient distribution of data streams while effectively addressing the unique challenges associated with IoT devices and heterogeneous networks. This involves the implementation of end-to-end encryption to prevent unauthorized access during transmission, robust authentication measures for both devices and data, and utilizing blockchain technology to maintain data integrity and accountability. Additionally, achieving optimal performance and resource utilization can be facilitated through context-aware data dissemination, prioritizing information based on its significance and relevance. Furthermore, processing data streams locally at edge gateways can help minimize latency and enhance security.

The remainder of the article is structured as follows: Section 2 provides an overview of the relevant research that serves as the foundation for our solution. Section 3 details our multi-layered framework architecture, highlighting its key components and the security and reliability mechanisms employed to enhance confidentiality, integrity, availability, and accountability for data: in-process, in-transit, and at-rest. Section 4 outlines our experimental framework’s proposed message types and key operations. Section 5 presents a high-level security risk assessment considering several security and reliability threats. Section 6 introduces two configurations of our environment: one cloud-based and the other resource-constrained, along with benchmarks for latency metrics. Section 7 includes a thorough discussion of the results we obtained. Section 8 summarizes our conclusions and delineates our goals for future work. Lastly, the abbreviations used throughout this publication are defined at the end.

2. Related Work

This section presents related works that have had the greatest impact on the proposed framework architecture for secure and reliable data stream dissemination in federated IoT environments. These works address the basic problems related to the following:

- Securing data processed by IoT devices with the usage of distributed ledger technology and blockchain mechanisms;

- Behavior-based IoT device identification (IoT distinctive features);

- The integration of heterogeneous military and civilian systems based on IoT devices, where specific KPIs must be achieved (e.g., zero-day interoperability).

Additionally, at the end of this section, we briefly discuss our solution against the analyzed works.

2.1. Blockchain-Based Device and Data Protection Mechanisms

The literature presents numerous attempts to integrate the IoT and blockchain (distributed ledger) technology. Ref. [] describes the challenges and benefits of integrating blockchain with the IoT and its impact on the security of processed data. Similarly, in [,], a proposal for a four-tier structural model of blockchain and the IoT is presented.

Guo et al. [] proposed a mechanism for authenticating IoT devices in different domains, where cooperating DLTs operating in the master–slave mode were used for data exchange. Xu et al. [] presented the DIoTA framework based on a private Hyperledger Fabric blockchain, which was used to protect the authenticity of data processed by IoT devices.

Ref. [] proposed an access control mechanism for devices, which used the Ethereum public blockchain placed in the Fog layer and public key infrastructure based on elliptic curves. Furthermore, NIST defined attribute-based access control (ABAC) [] as a logical access control method that authorizes actions based on the attributes of both the publisher and subscriber, requested operations, and the specific context (current situational awareness).

H. Song et al. [] proposed a blockchain-based ABAC system that employs smart contracts to manage dynamic policies, which are crucial in environments with frequently changing attributes, such as the IoT. Additionally, Lu Ye et al. [] introduced an access control scheme that integrates blockchain with ciphertext-policy attribute-based encryption, featuring fine-grained attribute revocation specifically designed for cloud health systems.

2.2. Fingerprint Sampling Techniques

In addition to classification methods for identifying a group or type of similar IoT devices [], an interesting area of research is fingerprint techniques [,], which aim to identify a unique image of a device’s identity through the appropriate selection of its distinctive features. The fundamental premise of fingerprint methods is the occurrence of manufacturing errors and configuration differences, which imply that no two devices are identical. Consequently, the main challenge associated with fingerprinting techniques is the selection of non-ephemeral parameters that make it possible to distinguish devices uniquely. Generally, three main classes of fingerprint methods can be distinguished for IoT devices:

- Hardware/Software class: hardware and software features of the device;

- Flow class: characteristics of generated network traffic;

- RF class: characteristics of generated radio signals.

The authors of the LAAFFI framework [] presented a protocol designed to authenticate devices in federated environments based on unique hardware and software parameters extracted from a given IoT device.

Concerning distinctive radio features, Sanogo et al. [] evaluated the power spectral density parameter. Ref. [] proposes using neural networks to identify devices based on a physical unclonable function in combination with radio features: frequency offset, in-phase (I) and quadrature (Q) imbalance, and channel distortion.

Charyyev et al. [] proposed the LSIF fingerprint technique, where the Nilsimsa hash function was used to determine a unique IoT device network flow. In contrast, [] demonstrated the inter-arrival time (IAT) differences between successively received data packets as a unique identification parameter.
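As a minimal illustration of the flow class, the sketch below derives inter-arrival times from packet timestamps and summarizes them. The function names and the choice of summary statistics (mean and population standard deviation) are assumptions made for illustration; they are not taken from the cited works.

```python
from statistics import mean, pstdev


def inter_arrival_times(timestamps):
    """Return the differences between successive packet arrival times (seconds)."""
    ordered = sorted(timestamps)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]


def iat_summary(timestamps):
    """Summarize IATs as (mean, population std dev); a hypothetical feature vector."""
    iats = inter_arrival_times(timestamps)
    if not iats:
        raise ValueError("at least two timestamps are required")
    return mean(iats), pstdev(iats)
```

A real fingerprint would likely use a richer description of the IAT distribution; the two statistics above only show where such features come from.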

2.3. Reliable Data Stream Dissemination

In addressing one of the primary challenges of deploying various IoT devices within federated environments, which necessitates the processing of vast data streams in a secure, reliable, and context-dependent manner, we undertook a thorough analysis of relevant literature on this subject. Our emphasis was also on developing solutions to the sub-questions outlined in Section 1, particularly those concerning the gaps in real-time data access, network integration, interoperability, and security, all of which can be effectively tackled with a data-centric approach.

Notably, NATO countries continually refine their requirements (KPIs) to address the mentioned challenges. Moreover, they establish research groups dedicated to identifying optimal solutions for coalition data dissemination systems within the framework of Federated Mission Networking.

Jansen et al. [] developed an experimental environment involving four organizations, where data are distributed across two configurations. The first configuration employs two types of MQTT brokers: Mosquitto and VerneMQ. In contrast, the second configuration operates without brokers, broadcasting MQTT streams using a connectionless protocol (e.g., user datagram protocol, UDP).

Suri et al. [] performed an analysis and performance evaluation of eight data exchange systems utilized in mobile tactical networks, revealing that the authors’ DisService protocol significantly outperforms alternatives such as Redis and RabbitMQ. Additionally, another study [] suggests a data exchange system for IoT devices based on the MQTT protocol, incorporating elliptic curve cryptography for data security.

Furthermore, Yang et al. [] introduced a system architecture designed for anonymized data exchange among participants, leveraging the Federation-as-a-Service cloud service model and built upon the Hyperledger Fabric.

2.4. Discussion

Although the previously mentioned publications provide solutions for data access management, data exchange systems, and device/data authentication methods, a notable gap exists for a data dissemination system tailored for dynamic and distributed environments, such as the federated IoT. Furthermore, there is a lack of systems that incorporate device authentication techniques based on unique fingerprints while ensuring data protection in accordance with the data-centric paradigm.

In our literature analysis, we have not identified a solution that effectively combines components of a distributed ledger (specifically, Hyperledger Fabric), data queue systems (like Apache Kafka), and stream processing microservices to address the identified gap in data dissemination.

Most of the reviewed publications rely on a trusted third-party infrastructure and a private DLT to enhance the security of processed data. In contrast to the approaches taken by Guo et al. (master–slave chain) [] and Xu et al. (DIoTA framework) [], our solution utilizes a single global instance of the distributed ledger. This allows for the seamless transfer of devices between organizations within the federation, enabling these devices to use another organization’s infrastructure for secure data dissemination.

Refs. [,] do not assess data queue technologies like Apache Kafka. Moreover, they concentrate solely on efficient data exchange and overlook the security of data streams. In our proposed solution, it is feasible to implement attribute-based access control while concurrently adhering to the data-centric paradigm [].

Furthermore, we have prioritized interoperability between military and civilian systems, particularly considering the limitations of such environments. To this end, our proposed system incorporates recommendations from the NATO IST-150 working group [], which examined disconnected, intermittent, and limited (DIL) tactical networks. Our system employs a publish–subscribe model and utilizes commercial off-the-shelf (COTS) components that are widely available, thereby minimizing operational costs, which is crucial for ensuring immediate interoperability.

Additionally, our work distinguishes the key used for securing the communication channel of IoT devices from the key used for data authenticity protection. Unlike the DIoTA framework, which employs an HMAC-based commitment scheme with randomly generated keys for message authentication, we propose using the unique fingerprint samples of the device. Specifically, we propose to utilize a sealing key as a hybrid identity image based on a combination of several fingerprint method classes.

Finally, our framework (specifically, stream microservices) can be enhanced with components that analyze, classify, and share data streams based on the specific VoI that IOs provide to consumers (context), as well as their relevance [].

3. Framework Design

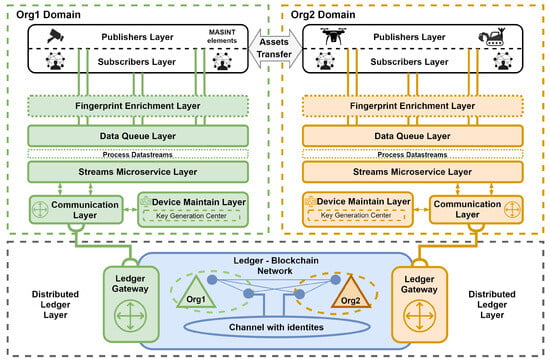

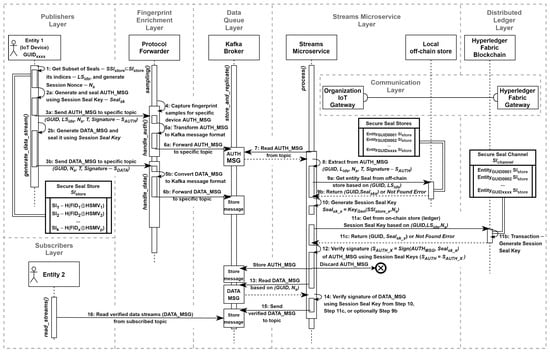

This section outlines our multilayered framework architecture for the reliable dissemination of data streams (messages) within a multi-organizational federation environment that requires the data-centric security approach. Figure 1 depicts the overall structure of the system for a federation comprising two organizations: Org1 and Org2. We adopted specific technologies for the individual layers of our system. In the data queue layer [], we deployed the Apache Kafka stream-processing platform []. For the distributed ledger layer, we selected the Hyperledger Fabric solution. Finally, for the streams microservice layer, we implemented the same stream processing logic twice, utilizing the Kafka Streams API library in Java [] and the Sarama library in Go [].

Figure 1. Proposed framework general overview.

Apache Kafka brokers ingest, merge, and replicate data streams generated by publishers (producers) and provide access to these data for subscribers (consumers). Simultaneously, the proposed system facilitates the verification of data streams based on device fingerprints (identities), which are redundantly stored in a distributed Hyperledger Fabric blockchain. Additionally, a hardware–software IoT gateway has been introduced to support the verification process, enabling stream processing microservices to communicate with the distributed ledger. This approach allows devices to leverage the data queue layers of various organizations, ensuring secure and reliable data dissemination while adhering to predefined policies, such as one-to-many relationships. As part of our pluggable architecture, we have identified the following layers:

- Publisher Layer: This encompasses entities that facilitate the data-centric protection of generated (produced) data streams through the sealing process, where the sealing key is derived from the device’s fingerprint (identity) defined during the registration operation managed by the device maintain layer;

- Subscriber Layer: This is made up of authenticated and authorized entities that read (consume) available and verified data streams from the data queue layer according to the access policy (e.g., one to many);

- Data Queue Layer: This is composed of distributed data queues that facilitate the acquisition, merging, storage, and replication of data streams transmitted from the publisher layer, subsequently making them accessible to the subscriber layer;

- Fingerprint Enrichment Layer: This can transport connectionless protocols like UDP by employing a protocol forwarder that converts data streams into a connection-oriented format (e.g., transmission control protocol, TCP). This layer is essential due to the constraints of the connection types supported by the technologies available for the data queue layer. Moreover, it facilitates device behavior-based authentication by utilizing analyzers to gather various features, including network and radio characteristics, and by enriching messages with entity fingerprint samples;

- Distributed Ledger Layer: This enables the interchangeable deployment of various DLTs. Furthermore, it reliably stores the identities of devices belonging to organizations within the federation and retains information about entity data quality and subscriber data context dissemination [];

- Communication Layer: This enables the streams microservice and the device maintain layer to communicate with the distributed ledger via a hardware–software IoT gateway;

- Streams Microservice Layer: This is tasked with verifying sealed data streams originating from entities within the publisher layer. Additionally, it has the capability to analyze, categorize, and disseminate streams pertinent to these entities, and enrich them (e.g., by detecting objects during image processing);

- Device Maintain Layer: This manages the device registration operation, with additional responsibilities including updating and revoking identities. The registration process is initiated with the establishment of the entity’s identity through the use of fingerprint methods.

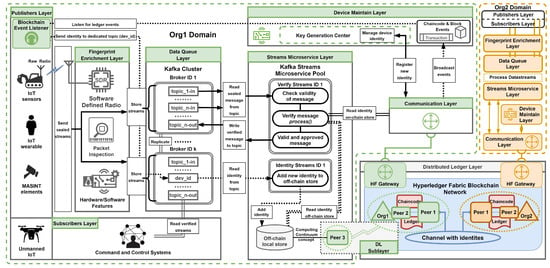

Figure 2 illustrates our detailed architecture, where entities representing the publisher’s layer ensure the security of their data streams by sealing them with identities registered within the device maintenance layer. These sealed streams are transmitted using the available (supported) communication protocols and mediums to the data queue layer (Kafka Cluster). In this layer, the streams are stored under a specific topic, such as topic_1-in. Additionally, the fingerprint enrichment layer participates in (proxies) the transmission by transforming connectionless messages into a connection-oriented message format.

Figure 2. Proposed framework detailed overview.

The subsequent step occurs in the streams microservice layer, which sequentially retrieves messages from the brokers to verify the sealed messages. The verify streams microservice queries the distributed ledger layer (Hyperledger Fabric) through the communication layer (IoT gateway) to obtain an image of the device’s identity. Next, the identity extracted from the message is compared with the one stored in the ledger.

Once a message is verified and approved, it is written back to the Kafka Cluster under a dedicated topic, such as topic_1-out, making it accessible to entities representing the subscriber’s layer. Optionally, any messages rejected during this process can be directed to a separate topic to aid in identifying and detecting potentially malicious devices.
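The verification and routing steps described above can be sketched as follows. This is a simplified illustration rather than the actual microservice: the topics are plain Python lists, the ledger query is replaced by a dictionary lookup, and the message keys (`device_id`, `identity`) and the topic name `topic_1-rejected` are hypothetical.

```python
import hmac


def route_message(message, ledger_identities):
    """Return the destination topic for one message read from topic_1-in.

    `ledger_identities` stands in for a query to the distributed ledger and
    maps a device ID to its registered identity (bytes).
    """
    registered = ledger_identities.get(message["device_id"])
    # Constant-time comparison of the identity carried by the message
    # with the identity stored for that device.
    if registered is not None and hmac.compare_digest(registered, message["identity"]):
        return "topic_1-out"
    return "topic_1-rejected"  # hypothetical name for the optional rejected topic


def process(inbound, ledger_identities):
    """Sequentially verify messages and collect them per output topic."""
    topics = {"topic_1-out": [], "topic_1-rejected": []}
    for message in inbound:
        topics[route_message(message, ledger_identities)].append(message)
    return topics
```

Messages from unknown devices and messages whose identity does not match the registered one both end up in the rejected topic, where they can later be inspected.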

As previously mentioned, a device identity image is critical to the verification process. The device maintenance layer encompasses a registration operation through the key generation center, during which the device identity is established using hybrid fingerprint techniques. These techniques determine the specific hardware and software features, generated network characteristics, and radio signals related to the device. Once the identity is defined, it is redundantly added (stored) in the distributed ledger layer through the execution of chaincode (a group of smart contracts). Successful registration is contingent upon achieving consensus among the participating federated organizations.
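One way to picture a hybrid identity is as a digest computed over features from all three fingerprint classes. The sketch below is only an assumption about how such a combination could look: the feature names are invented, SHA-256 over canonical JSON is our choice for illustration, and it presumes that the features have already been reduced to stable (non-ephemeral) values.

```python
import hashlib
import json


def hybrid_identity(hw_sw, flow, rf):
    """Combine three feature classes into one hex digest (illustrative only)."""
    # Canonical JSON (sorted keys, fixed separators) makes the digest
    # independent of the order in which features were collected.
    canonical = json.dumps(
        {"hw_sw": hw_sw, "flow": flow, "rf": rf},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical feature values for a single device.
identity = hybrid_identity(
    hw_sw={"cpu_serial": "0000abcd", "os": "linux"},
    flow={"iat_bucket": 3},
    rf={"freq_offset_bucket": 12},
)
```

In the framework, the resulting identity would then be submitted to the ledger through chaincode; that step is outside the scope of this sketch.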

Moreover, within our framework, we have delineated two categories of data stores: the on-chain store and the off-chain store. The on-chain store refers to the ledger, while the off-chain store encompasses local storage utilized by applications and microservices, such as those leveraging the Kafka Streams API library. One of our key proposals is to employ the off-chain store as a micro-caching mechanism to store identities from the on-chain store temporarily. This mechanism can significantly mitigate the delays associated with ledger queries and improve the overall performance of an individual instance of the verify streams microservice.
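The micro-caching idea can be illustrated with a small time-limited cache placed in front of a ledger query function. The class name, the `query_ledger` callable, and the time-to-live value are assumptions introduced for this sketch; the actual off-chain store is the local state of the stream processing library.

```python
import time


class IdentityCache:
    """Off-chain micro-cache placed in front of a ledger query function."""

    def __init__(self, query_ledger, ttl_seconds=30.0, clock=time.monotonic):
        self._query_ledger = query_ledger  # callable: device_id -> identity
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # device_id -> (identity, expiry time)

    def get(self, device_id):
        """Return a cached identity, querying the ledger only on a miss or expiry."""
        now = self._clock()
        cached = self._entries.get(device_id)
        if cached is not None and cached[1] > now:
            return cached[0]
        identity = self._query_ledger(device_id)
        self._entries[device_id] = (identity, now + self._ttl)
        return identity
```

With such a cache, repeated verifications for the same device within the time-to-live window avoid the round trip to the ledger, at the cost of briefly serving an identity that may have been updated or revoked in the meantime.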

To realize this, we propose a minor extension to our environment. In the publisher’s layer, dedicated listening applications, known as blockchain event listeners, can be utilized to handle events emitted from the distributed ledger layer. These events pertain to ledger operations, including device registration, updates, and revocations. Furthermore, a dedicated topic, such as dev_id, can be established specifically for storing these events. Subsequently, the identity streams microservice can be deployed to add or update identities in the off-chain store. Additionally, by enforcing appropriate retention policies for this designated topic, we can preserve historical event-based records of device identity changes, so that the topic functions as a blockchain topic.
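A minimal sketch of how the identity streams microservice might apply such events to the off-chain store is given below. The event layout (`type`, `device_id`, `identity`) and the event type names are hypothetical, and the store is modeled as a plain dictionary.

```python
def apply_identity_event(store, event):
    """Apply one ledger event to the off-chain store (a plain dict here)."""
    kind = event["type"]
    device_id = event["device_id"]
    if kind in ("register", "update"):
        store[device_id] = event["identity"]
    elif kind == "revoke":
        store.pop(device_id, None)  # revoking an unknown device is a no-op
    else:
        raise ValueError(f"unknown event type: {kind}")
    return store


def replay(events):
    """Rebuild the store by replaying the retained events of the dev_id topic in order."""
    store = {}
    for event in events:
        apply_identity_event(store, event)
    return store
```

Because the store is derived purely from the ordered events, a new microservice instance can reconstruct it by replaying the topic from the beginning, provided that the retention policy keeps the relevant history.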

Alternatively, the off-chain store can be integrated with the continuum computing concept (CC) [], which involves providing computing capabilities across the diverse layers of an IoT system (edge, fog, and cloud). The fundamental notion of this concept is to relocate high-performance cloud-based services to lower layers. While this escalates resource management’s complexity, it facilitates deploying services that demand ubiquitous and efficient computing capabilities. The concept also aims to optimize resource allocation, ensuring that devices perform service tasks as close as possible to data sources.

In the realm of computing modeling, our system is designed to process incoming requests sequentially to minimize the decay of information objects. Our architecture facilitates the dynamic deployment of microservices at all layers of the IoT system, offering both horizontal and vertical scaling for resource allocation. It also accommodates various deployment contexts.

The upcoming headings will provide an in-depth overview of the mentioned layers, along with mechanisms that enhance confidentiality, integrity, availability, and accountability for data: in-process, in-transit, and at-rest. Furthermore, a thorough analysis of the built-in security and reliability features associated with each specified technology will be provided.

3.1. Publisher’s Layer

The publisher layer encompasses entities that facilitate the data-centric protection of generated data streams through symmetric encryption and sealing operations. This leverages digital signatures and the device’s hybrid identity. Although the selection of encryption and digital signature algorithms is not the primary focus of this study, it invites consideration of the potential applications of lightweight and quantum-resilient cryptography. In 2022, NIST selected several quantum-resistant digital signature algorithms for standardization [], including FALCON and SPHINCS+. These two algorithms rely on distinct mathematical approaches: lattices and hash functions, respectively. In our research, however, we opted for well-established algorithms such as AES for encryption and HMAC for signatures. Devices with limited resources, like Raspberry Pi, have adequate computational power to perform the cryptographic operations required by these algorithms.
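The sealing operation can be illustrated with HMAC-SHA256 from the Python standard library. This is a sketch under stated assumptions: the function names are ours, the sealing key is passed in as opaque bytes (its derivation from the device fingerprint is not shown), the tag is simply appended to the payload, and the AES encryption step is omitted because it requires a third-party library.

```python
import hashlib
import hmac

TAG_LENGTH = 32  # size of an HMAC-SHA256 tag in bytes


def seal(payload: bytes, sealing_key: bytes) -> bytes:
    """Append an HMAC-SHA256 tag to the payload."""
    tag = hmac.new(sealing_key, payload, hashlib.sha256).digest()
    return payload + tag


def unseal(sealed: bytes, sealing_key: bytes) -> bytes:
    """Return the payload if the tag verifies; raise ValueError otherwise."""
    if len(sealed) < TAG_LENGTH:
        raise ValueError("message too short to contain a seal")
    payload, tag = sealed[:-TAG_LENGTH], sealed[-TAG_LENGTH:]
    expected = hmac.new(sealing_key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("seal verification failed")
    return payload
```

A verifier that holds the same sealing key recovers the payload with `unseal`; any modification of the payload or the tag causes verification to fail.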

3.2. Subscriber’s Layer

The subscriber layer comprises entities that have been granted authorized access to the data queue layer. Our framework’s architecture is designed to ensure the reliable dissemination of data across all levels of command: tactical, operational, and strategic. Additionally, the framework enables seamless data sharing across different domains within a federation, which includes allied forces and civilian institutions, fostering collaboration and coordinated efforts toward common objectives. Moreover, our system adeptly manages data security along the path from producer to consumer, while also implementing fine-grained access control mechanisms.

3.3. Data Queue Layer

The data queue layer holds a pivotal position within our system, performing several important functions:

- Storing and replicating sealed data streams within the layer;

- Storing invalid records to trigger a detection mechanism of potentially malicious entities;

- Intermediating within the micro-caching mechanism by linking the publisher layer (blockchain event listeners) with the streams microservice layer.

The publisher’s layer comprises numerous data sources, each generating real-time data streams that require processing. To facilitate the seamless processing of these messages, the Apache Kafka solution has been used. For example, Kul et al. [] introduced a framework that leverages Kafka and neural networks to monitor (track) vehicles. In their study, the dataset was represented as data streams captured by CCTV.

Apache Kafka is a stream-message system that utilizes a producer–broker–consumer (publish–subscribe) model and classifies messages based on their topics. The Kafka Cluster is composed of message brokers that acquire, merge, and store data generated from the publisher layer (producers), and make it available to the subscriber layer (consumers). The layer is designed for the availability and reliability of data records, thanks to built-in synchronization and distributed data replication between brokers. Furthermore, it leverages serialization and compression mechanisms, such as lz4 and gzip, making messages payload format- and protocol-independent.

Furthermore, Kafka technology incorporates built-in components and mechanisms for defining and managing entity authorization through access control lists (ACLs). Essentially, ACLs specify which entities can access specific resources (topics) and which operations (e.g., READ and WRITE) they may perform on those resources. Establishing a distinct principal for each entity (device) and assigning only the necessary ACLs supports debugging and auditing, because each operation can be traced to the entity that executed it.
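The per-principal ACL idea can be illustrated with a minimal, deny-by-default model. This is only a sketch of the concept, not Kafka's authorizer API: the `AclEntry` and `AclAuthorizer` classes, the topic names, and the audit log structure are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AclEntry:
    principal: str  # one principal per device, e.g. "User:ORG1-SENSOR-0001"
    topic: str      # hypothetical topic name
    operation: str  # "READ" or "WRITE"


class AclAuthorizer:
    """Deny-by-default check over a set of allow entries (illustrative only)."""

    def __init__(self, entries):
        self._entries = set(entries)
        self.audit_log = []  # every decision is recorded for later auditing

    def is_allowed(self, principal, topic, operation):
        allowed = AclEntry(principal, topic, operation) in self._entries
        self.audit_log.append((principal, topic, operation, allowed))
        return allowed


acls = AclAuthorizer([
    AclEntry("User:ORG1-SENSOR-0001", "auth-msgs", "WRITE"),
    AclEntry("User:ORG2-CONSUMER-0001", "verified-streams", "READ"),
])
```

Because each device has its own principal, every entry in `audit_log` identifies exactly which entity attempted which operation.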

However, large Kafka Cluster topologies that involve multiple publishing and subscribing entities (numerous topics) often encounter significant challenges in managing entity authorization. It is feasible to implement a more intricate authorization hierarchy within the cluster, but this can impose an additional operational burden. In a previous article [], we explored the application of attribute-based access control (ABAC) in our environment. This solution can alleviate the authorization burden on the Kafka Cluster, thereby freeing its internal resources and allowing the streams microservice layer to manage access control more efficiently.

3.4. Fingerprint Enrichment Layer

A notable limitation of Kafka technology is its dependence on connection-oriented protocols, specifically the TCP. In contrast, our framework requires the ability to communicate with entities utilizing connectionless protocols, such as the UDP. To address this challenge, we propose the introduction of a fingerprint enrichment layer. A fundamental component of this layer is a protocol forwarder, which is designed to handle connectionless messages and convert them into a connection-oriented message format.

Furthermore, the mentioned layer can contribute to device behavior-based (fingerprint-compliance) authentication. This can be accomplished through the utilization of dedicated components, referred to as analyzers, which gather radio signals, network flows, and hardware features. For the radio fingerprinting analyzer, we propose employing a software-defined radio. This system replaces or supplements traditional hardware components, such as mixers, filters, amplifiers, and detectors, with software-based digital signal processing techniques, providing flexibility, cost-effectiveness, versatile wideband reception, and enhanced interoperability within our architecture and radio communication systems. For the network analyzer, we can capture distinctive features from the headers of TCP/IP layers through comprehensive packet inspection. A detailed description of the proposed analyzer’s subsystem is beyond the scope of this publication and will be the subject of our future research.

3.5. Distributed Ledger Layer

Our experimental framework features a pluggable structure that allows for the interchangeable deployment of different DLTs. The distributed ledger layer performs several functions:

- Redundant and reliable storing of all identities of devices belonging to organizations that participate in the federation;

- Redundant and reliable storing of information about entities regarding the value of information and context dissemination;

- Secure handling of the chaincode (smart contracts) execution (transaction steps) during the device registration operation;

- Obtaining approvals (transaction authorizations) under endorsement policy from participating organizations;

- Generating events related to actions on the distributed ledger (blockchain);

- Being an integrated part of the verification process of devices and sealed messages.

Based on a performance comparison, we have opted to integrate the Hyperledger Fabric technology with our system. This particular technology has achieved a transaction throughput of 10,000 transactions per second (tps), as documented in []. It is noteworthy that the Ethereum ledger had a lower throughput. However, for Ethereum, only the proof of work consensus protocol was examined; the less energy-intensive and more scalable proof of stake consensus was not covered in these experiments.

The Fabric DLT adopts the practical Byzantine fault tolerance consensus algorithm, which mandates that all participating parties know of one another. As a consequence, it is a permissioned blockchain where public key infrastructure is deployed. To register the identity of devices, complex business logic is executed using chaincode, which can be written in several languages (Go, Java, and Node.js). Moreover, the target execution environment for chaincode is a Docker container, and its resources can be controlled (e.g., limited and isolated) through the Linux kernel feature cgroups. Also, private data channels can be created between organizations, where the identity of selected devices can be hidden from other organizations.

The on-chain store used in Fabric technology consists of systematically organized structures: world state and transaction log. The world state serves as the ledger’s current state database, while the transaction log acts as a change data capture mechanism. This mechanism incrementally records both approved and rejected transactions, ensuring that data at rest are secure and accountable.
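The relationship between the world state and the transaction log can be sketched as follows. This is a simplified in-memory model, not Fabric's implementation: the `Ledger` class, its `submit` method, and the transaction fields are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    world_state: dict = field(default_factory=dict)  # current value per key
    tx_log: list = field(default_factory=list)       # append-only history

    def submit(self, tx_id, key, value, approved):
        # Every transaction is appended to the log, whether approved or rejected.
        self.tx_log.append(
            {"tx_id": tx_id, "key": key, "value": value, "valid": approved}
        )
        # Only approved transactions change the current state.
        if approved:
            self.world_state[key] = value
        return approved
```

A rejected transaction therefore leaves the world state untouched but remains visible in the log, which is what makes the stored data accountable.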

3.6. Communication Layer

The hardware–software IoT gateway manages communication between the device maintain layer, the streams microservice layer, and the distributed ledger layer via an interface to the Hyperledger Fabric Gateway services [] that run on the ledger nodes. The communication layer facilitates seamless communication exchange for the following actions:

- Performing queries to the on-chain store to read the examined identity from the distributed ledger layer during the verification process;

- Participating in entity identities registering, updating, and revoking operations called by the device maintain layer;

- Broadcasting of events generated by the distributed ledger layer as a result of approved transactions and blocks.

The IoT gateway utilizes a dynamic connection profile with the distributed ledger layer. This profile leverages the internal capabilities of ledger nodes to detect alterations in the network topology in real-time, thus ensuring the seamless functioning of the streams microservices layer, even in the event of node failure. Furthermore, the checkpointing mechanism proves to be beneficial, as it enables uninterrupted monitoring of ledger events without the risk of data loss due to connection interruptions.

3.7. Streams Microservice Layer

The streams microservice layer is responsible for verifying sealed messages originating from entities within the publisher’s layer. This layer is notable for executing complex operations on individual messages (records) in a sequential manner. To enhance the efficiency of message (stream) processing, selecting an appropriate framework or library is essential. Evaluations conducted by Karimov et al. [] and Poel et al. [] assessed various solutions designed for this purpose. Both studies concluded that the Apache Flink framework outperformed alternatives such as Kafka Streams API, Spark Streaming, and Structured Streaming, earning the highest overall ranking.

However, our proposed system architecture leverages the Streams API library to define custom verification processing logic. This choice is based on the inherent advantages of Kafka technology, including its failover and fault tolerance features. The Streams API library offers two approaches for implementing processing logic: the high-level Streams DSL and the low-level Processor API. We chose the Processor API for its pluggable architecture, which facilitates the deployment of various types of local off-chain stores. Moreover, the library employs a semantic guarantee pattern that ensures each message is processed exactly once from start to finish, thereby preventing any loss or duplication of messages in the event of a stream processor failure.

We opted against using the Spark and Structured Streaming frameworks as they rely on micro-batching, which processes messages within fixed time windows. Similarly, we did not consider the Apache Flink framework because it requires a separate processing cluster, which would increase operational costs for infrastructure deployment and negatively impact interoperability.

It is essential to highlight that our experimental system can further leverage the Kafka Streams API to support a range of tasks, including data enrichment, data quality assessment, and data context dissemination. In particular, this capability can be applied to object detection and classification during image processing. Figure 3 showcases the integration of our system for the mentioned use case.

Figure 3.

Message enrichment within our experimental system.

3.8. Device Maintain Layer

The primary function of the device maintain layer is to manage device registration operations, with additional responsibilities including updating and revoking device identity. The registration process begins with establishing the entity’s identity through hybrid fingerprint techniques. Entities of the publisher’s layer will use these identities during the sealing process, where the device identity image, working as a key, will be used with digital signatures to seal data streams sent to the data queue layer.

In the process of defining entity identity, we advocate for the use of a confidential computing strategy [] that incorporates the defense-in-depth and hardware root of trust concepts. This strategy involves the implementation of multiple heterogeneous security layers (countermeasures) that are built on highly reliable hardware, firmware, and software components. These countermeasures are essential for executing critical security functions, such as session key generation and the secure storage of cryptographic materials, ensuring that any adverse operations not detected by one technology can still be identified and mitigated by another.

To safeguard data across their various states, whether in process, in transit, or at rest, secure enclaves, including trusted execution environments (TEEs), hardware security modules (HSMs), or trusted platform modules (TPMs), can be employed. TEEs provide a secure area within the processor, whereas HSMs are specialized hardware created specifically for key storage. Meanwhile, TPMs are hardware chips that offer a range of security functions, including secure key storage and platform (entity) integrity checks.

The specific procedures for key management fall outside the scope of this article. Instead, we propose a general procedure for defining the entity key (identity). During the registration operation, the device administrator places the device in an RF-shielded chamber to minimize potential interference that could impact the radio waves emitted by the device. Using specialized software and measurement equipment, the distinct characteristics of the device undergo a series of tests to establish a unique identity profile. In this study, we proposed a hybrid approach that combines several fingerprinting methods, primarily based on the parameters of the generated radio signals. The rationale for this selection includes the following:

- Limitations arising from the heterogeneity of the environment and the need to maintain the mobility of IoT devices;

- Devices’ vulnerability to extreme environmental factors (e.g., temperature and humidity);

- Autonomy from the protocols used in the network.

The complete entity management process is conducted through the communication layer. Once the identity is established, it can be securely stored using the designated storage solutions on the device. Furthermore, the identity will also be recorded in the distributed ledger layer and may optionally be retained in the off-chain stores of the streams microservice layer.

4. Framework Basic Operations

This section delineates the main operations of our experimental framework, conscientiously examining the interrelationships among system layers and the flow of messages. Notably, we have integrated certain elements conceptualized by Jarosz et al. concerning a novel LAAFFI protocol [].

4.1. Security Mechanisms and Message Types

As outlined in Section 3.4, the fingerprint enrichment layer utilizes the protocol forwarder component, which is specifically designed to manage connectionless streams and convert them into a connection-oriented format. This process employs an ETL (extract, transform, and load) mechanism, where a series of functions is applied to the extracted data, allowing them to be transformed into a standardized format. Moreover, the producer utilizing a data serialization mechanism is solely responsible for determining how to convert the data from a specific protocol (such as MQTT) into a byte representation. In contrast, the consumer defines how to interpret the byte string received from the broker through the deserialization process.
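A minimal sketch of such an extract, transform, and load step is shown below. The pipe-delimited datagram layout, the JSON encoding, and the helper names are assumptions made for illustration; only the `handle_auth` name is borrowed from the sequence diagram, and its body here is hypothetical.

```python
import json


def extract(datagram):
    """Split a raw connectionless datagram into its text fields (assumed layout)."""
    return datagram.decode("utf-8").strip().split("|")


def transform(fields):
    """Map the extracted fields onto a standardized record."""
    guid, nonce, indices, timestamp, signature = fields
    return {
        "guid": guid,
        "nonce": nonce,
        "indices": [int(i) for i in indices.split(",")],
        "timestamp": int(timestamp),
        "signature": signature,
    }


def serialize(record):
    """Producer side: decide how the record becomes bytes."""
    return json.dumps(record, sort_keys=True).encode("utf-8")


def deserialize(payload):
    """Consumer side: decide how the bytes are interpreted."""
    return json.loads(payload.decode("utf-8"))


def handle_auth(datagram):
    """Return a (key, value) pair of bytes ready to be loaded into a broker."""
    record = transform(extract(datagram))
    return record["guid"].encode("utf-8"), serialize(record)
```

The broker only ever sees the two byte strings, so the producer and the consumer can agree on any encoding without involving the data queue layer.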

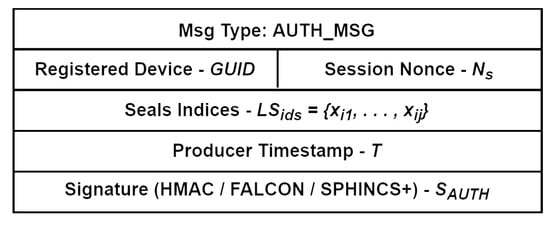

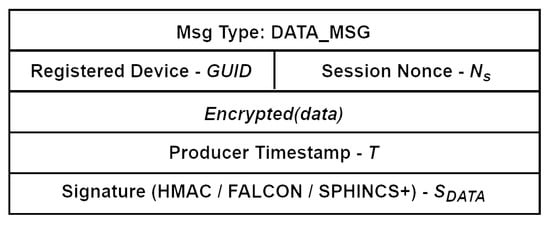

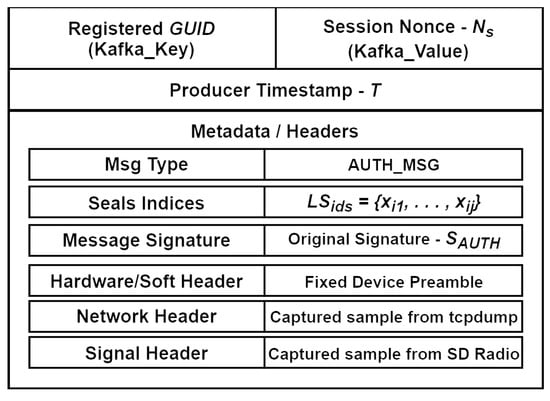

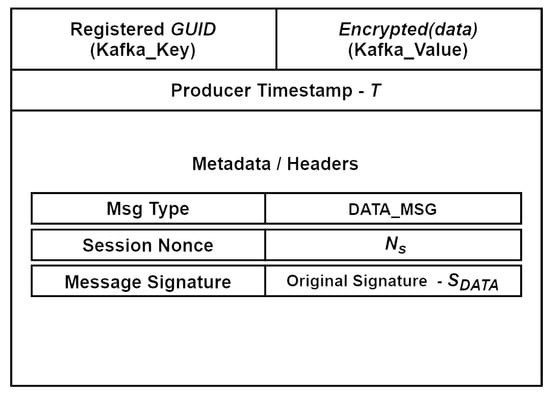

Within our environment, we proposed two primary types of messages that can be assigned to a single data stream. The first type is the data stream authentication message, referred to as AUTH_MSG (Figure 4). The second type is the session-related data stream message, known as DATA_MSG (Figure 5). Specific message fields are described below:

- Globally Unique Identifier (GUID): This is assigned to the entity during the registration process in the device maintain layer. It functions as a unique identifier, ensuring that each device can be distinctly recognized within a set of registered devices. For example, it may be represented as a human-readable combination of the federated organization name, type, and number, such as ORG1-SENSOR-0001;

- Session Nonce: This is a unique pseudo-random value generated by the entity that identifies a specific data stream, thus facilitating the correlation between the AUTH_MSG and the DATA_MSG;

- Seals Indices: These consist of a subset of indexes of the seals selected from the secure seal store. For each specific seal, we proposed to use a hashed exclusive OR (⊕) combination of the entity fingerprint sample, recorded in the entity features store, and internal parameters from the hardware security module;

- Timestamp, T: This is used as a protection mechanism against replay attacks. When the Kafka topic is configured to use CreateTime for timestamps, the timestamp of a record will be the time that the producer sets when the record (message) is created;

- Message Signatures: These are utilized to guarantee both the integrity and authenticity of the data streams. They are compared to signature values calculated during the processing of messages within the streams microservice layer. The seal, or subset of seals, and the session nonce work in conjunction with a key-derivation function to generate the key for the signature function. The use of signatures fits naturally into the architecture of our system because of the Kafka message format autonomy (independence).
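The way the fields above fit together on the producer side can be sketched as follows. This is an illustrative sketch under several assumptions: HMAC-SHA256 stands in for both the key-derivation function and the signature function, the fields are encoded as canonical JSON, and the exact field sets of AUTH_MSG and DATA_MSG as well as all function names are hypothetical.

```python
import hashlib
import hmac
import json
import secrets
import time


def derive_session_key(seals, nonce):
    """Derive a session seal key from the selected seals and the session nonce.

    Assumption: HMAC-SHA256 keyed with the nonce stands in for the KDF.
    """
    return hmac.new(nonce, b"".join(seals), hashlib.sha256).digest()


def sign(key, fields):
    """HMAC-SHA256 over a canonical JSON encoding of the message fields."""
    canonical = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hmac.new(key, canonical, hashlib.sha256).hexdigest()


def now_ms():
    return int(time.time() * 1000)


def build_auth_msg(guid, seal_store, indices, nonce=None, timestamp=None):
    """Build a sealed AUTH_MSG and return it together with the session key."""
    nonce = secrets.token_bytes(16) if nonce is None else nonce
    key = derive_session_key([seal_store[i] for i in indices], nonce)
    fields = {
        "guid": guid,
        "nonce": nonce.hex(),
        "indices": list(indices),
        "timestamp": now_ms() if timestamp is None else timestamp,
    }
    return {**fields, "signature": sign(key, fields)}, key


def build_data_msg(key, nonce, payload, timestamp=None):
    """Build a sealed DATA_MSG correlated with its AUTH_MSG via the nonce."""
    fields = {
        "nonce": nonce.hex(),
        "payload": payload.hex(),  # may already be AES-encrypted by the producer
        "timestamp": now_ms() if timestamp is None else timestamp,
    }
    return {**fields, "signature": sign(key, fields)}
```

Only the seal indices travel in the message; the seals themselves never leave the device, so a verifier needs access to the same seal store to rebuild the key.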

Figure 4.

AUTH_MSG structure.

Figure 5.

DATA_MSG structure.

The message structures of AUTH_MSG and DATA_MSG that the Kafka Broker can handle (store and queue) are illustrated in Figure 6 and Figure 7. The Kafka_Key and Kafka_Value consist of sequences of bytes that form the message. The Kafka_Key plays a crucial role in directing a message to a specific partition within the Kafka topic. When a key is provided, all messages associated with that key are directed to the same partition, ensuring they are processed sequentially. The Kafka_Value contains the data that consumers will read and process. Additionally, we have included optional header fields related to the implementation of specialized feature (behavior-based) analyzers deployed within the fingerprint enrichment layer (see Figure 6).
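The effect of the Kafka_Key on ordering can be illustrated with a small model of key-based partitioning. The CRC32 hash is a stand-in chosen for brevity (Kafka's default Java partitioner uses a murmur2 hash for keyed records), and the `partition_for` and `route` helpers are hypothetical.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a record key to a partition; equal keys always map to the same one."""
    return zlib.crc32(key) % num_partitions


def route(records, num_partitions):
    """Append each (key, value) record to the partition selected by its key."""
    partitions = {p: [] for p in range(num_partitions)}
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions
```

Because the mapping is deterministic, all messages of one device land in a single partition in their arrival order, which is what allows the AUTH_MSG to be processed before the DATA_MSG records that depend on it.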

Figure 6.

AUTH_MSG Kafka structure.

Figure 7.

DATA_MSG Kafka structure.

We acknowledge the critical need to safeguard data throughout their various states, whether in process, in transit, or at rest. In our deployment, we have employed SSL/TLS communication solely between the streams microservice layer and the distributed ledger layer. Our framework follows a data-centric approach, which facilitates the encryption of data stream payloads using AES, along with data authentication through device fingerprints. This approach allows us to bypass the need for communication protection within the data queue layer, particularly among producers, subscribers, and brokers. This decision reduces additional overhead affecting microservice verification latency.

In conjunction with the Kafka serialization and deserialization mechanism, the aforementioned approach facilitates independent data exchange between producers and subscribers. It is essential to highlight that a microservice is unable to access the data payload, as it may be encrypted using a key that has been exchanged in advance between producers and subscribers. Nonetheless, this limitation does not impact the verification process of the data streams. A detailed discussion of this topic was provided in our previous work [].

Additionally, to safeguard the entity features store and the secure seal store during transit, we propose utilizing an encryption mechanism that employs one-time pre-shared keys, which will be securely maintained solely within the entity (e.g., event listener) and the microservice instance. To protect these stores in an off-chain environment (at-rest state), we recommend implementing the confidential computing strategy and secure enclaves, as detailed in Section 3.8.

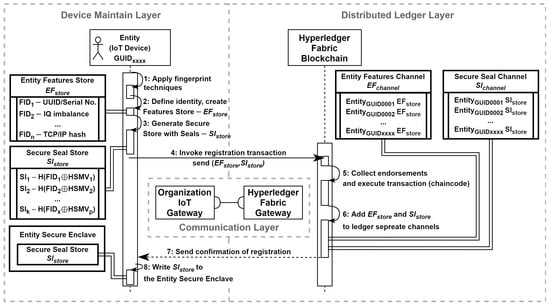

4.2. Entity Registration

The top-level sequence diagram (Figure 8) outlines the message flow and the specific actions (steps) that are related to the operation of registering entity identity:

- Step 1: The device registration process begins with the device maintain layer defining an entity’s identity through hybrid fingerprint techniques. This operation is conducted within a secure enclave (e.g., TEE);

- Step 2: The entity’s identity, represented by fingerprint samples, is recorded in the entity features store;

- Step 3: A set of seals is generated based on a subset of fingerprint samples from the features store. For each specific seal, we proposed to use a hashed exclusive OR (⊕) combination of the feature sample and internal parameters from the hardware security module;

- Step 4: The chaincode is invoked, a component of the distributed ledger layer that manages the secure transaction of adding a new identity to the ledger. This transaction incorporates the entity features and secure seal stores as part of its payload;

- Step 5: The transaction is executed upon receiving the necessary approvals from the organizations specified by the endorsement policy. This step is crucial for ensuring the integrity and transparency of the ledger, as it guarantees that all identities are accurately and securely recorded;

- Step 6: The entity features and secure seal store are recorded in the distributed ledger through separate channels: an entity features channel and a secure seal channel, respectively;

- Step 7: A confirmation of the registration is sent back to the device maintain layer;

- Step 8: Considering the confidential computing strategy, the secure seal store is written to the entity’s secure enclave. Any cached values related to the registration process should be cleared (wiped out).
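Steps 2 to 6 above can be sketched in a few lines. The sketch assumes one possible reading of the seal construction (SHA-256 over the byte-wise XOR of a fingerprint sample and an equally long HSM parameter) and a simple "all listed organizations must approve" endorsement policy; the dictionary-based ledger and all function names are hypothetical.

```python
import hashlib


def make_seal(sample, hsm_param):
    """Hash of the byte-wise XOR of a fingerprint sample and an HSM parameter."""
    if len(sample) != len(hsm_param):
        raise ValueError("sample and HSM parameter must have equal length")
    xored = bytes(a ^ b for a, b in zip(sample, hsm_param))
    return hashlib.sha256(xored).digest()


def build_seal_store(samples, hsm_params):
    """Step 3: one seal per fingerprint sample."""
    return [make_seal(s, p) for s, p in zip(samples, hsm_params)]


def endorsed(approvals, required_orgs):
    """Step 5: assumed policy in which every required organization approves."""
    return set(required_orgs) <= set(approvals)


def register(ledger, guid, samples, hsm_params, approvals, required_orgs):
    """Steps 2-6: build the stores and record them if the policy is satisfied."""
    seal_store = build_seal_store(samples, hsm_params)
    if not endorsed(approvals, required_orgs):
        return None
    ledger["features"][guid] = list(samples)  # entity features channel
    ledger["seals"][guid] = seal_store        # secure seal channel
    return seal_store
```

The returned seal store is what Step 8 would write to the entity's secure enclave; the samples and HSM parameters used here should not be retained afterwards.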

Figure 8.

Top-level sequence diagram for registering entity identity.

4.3. Blockchain Event Listener Application

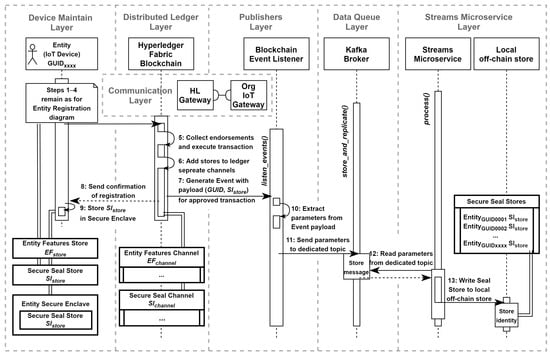

As an enhancement to the entity registration operation, we proposed to incorporate device identity into the local off-chain data store, which is a component of the streams microservice layer. This improvement aims to reduce time delays during message verification by eliminating the need for a ledger query step (micro-caching mechanism). Figure 9 presents a top-level sequence diagram for the operation, illustrating the flow of messages and interactions involved (steps):

- Steps 1–4: These remain the same as in the top-level sequence diagram for registering entity identity (Figure 8);

- Step 5: Upon receiving the necessary approvals from the organizations specified by the endorsement policy, the transaction is executed (the distributed ledger layer);

- Step 6: The entity features and secure seal stores are recorded in the distributed ledger through separate channels: an entity features channel and a secure seal channel;

- Step 7: An application called the blockchain event listener monitors events that are emitted by the distributed ledger layer. This application represents a special entity within the publisher’s layer. As a result of the approved and executed transaction, the and the seal store are written to the event payload. Then, the event is emitted by the distributed ledger layer.

- Step 8: A confirmation of the registration is sent back to the device maintain layer;

- Step 9: The secure seal store is written to the entity’s secure enclave (the device maintain layer);

- Step 10: The blockchain event listener (the publisher’s layer) interprets the occurrence of the event, and the seal store is extracted from the event payload;

- Step 11: The seal store extracted from the event payload is written to the data queue layer (Kafka Cluster). The dedicated Kafka topic is utilized for this purpose;

- Step 12: The streams microservice layer reads the seal store from the cluster in a sequential manner;

- Step 13: During the process() method handled by the streams microservice layer, the seal store is added to the local off-chain data store and can be utilized by other streams microservices within the pool.
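Steps 10 to 13 above amount to a small pipeline from ledger events to the local off-chain store. The following sketch models it with an in-memory queue standing in for the dedicated Kafka topic; the event payload layout (a `guid` plus a `seal_store`), the `OffChainStore` class, and the function names are assumptions made for illustration.

```python
from collections import deque


class OffChainStore:
    """Local store that lets a streams microservice skip the ledger query."""

    def __init__(self):
        self._seals = {}

    def put(self, guid, seal_store):
        self._seals[guid] = seal_store

    def get(self, guid):
        return self._seals.get(guid)


def on_ledger_event(event, topic):
    """Steps 10-11: extract the seal store from the event and enqueue it."""
    payload = event["payload"]
    topic.append((payload["guid"], payload["seal_store"]))


def process(topic, store):
    """Steps 12-13: read records sequentially and add them to the store."""
    while topic:
        guid, seal_store = topic.popleft()
        store.put(guid, seal_store)
```

Routing the seal store through the queue rather than writing it to the store directly means that every microservice instance reading the topic can populate its own local copy.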

Figure 9.

Top-level sequence diagram for adding identities using a blockchain event listener.

4.4. Data Streams Verification

A tailored stream processing algorithm has been proposed. The top-level sequence diagram (Figure 10) outlines the message flow and the specific actions (steps) that are related to the operation of data streams verification. Additionally, the local off-chain data store has been incorporated into the streams microservice layer, which can enhance the overall performance of the system:

- Step 1: When an entity (IoT device) from the publisher’s layer intends to transmit a data stream, it invokes the generate_data_stream() method. During this execution, the initial parameters for the cryptographic primitive (sealing) are chosen: a seal, or a subset of seals, is selected from the secure seal store along with the corresponding seals indices, and a session nonce is generated;

- Step 2a: For the chosen seal and the session nonce, a session seal key is generated. Next, the AUTH_MSG is crafted and sealed using a signature algorithm based on these parameters;

- Step 2b: Subsequently, the session-related data stream messages are sealed using the session seal key generated in Step 2a;

- Step 3a: The sealed AUTH_MSG is transmitted through a reliable communication channel via the fingerprint enrichment layer to the data queue layer (Kafka Cluster), where it is stored under a designated topic. The fields of the AUTH_MSG are shown in Figure 4;

- Step 3b: The sealed DATA_MSG messages are transmitted in the same manner as described in Step 3a. The fields of the DATA_MSG are shown in Figure 5;

- Step 4: Optionally, within the fingerprint enrichment layer, specialized behavior-based analyzers handle the sampling() method to capture fingerprint samples associated with a specific device’s AUTH_MSG;

- Step 5a: The handle_auth() method transforms the raw AUTH_MSG message into a structure suitable for loading into the Kafka Broker;

- Step 5b: The handle_data() method transforms the raw DATA_MSG message into a format that can be processed by the data queue layer;

- Step 6a: The data stream authentication message, AUTH_MSG, is forwarded to the data queue layer;

- Step 6b: The session-related messages, DATA_MSG, are forwarded;

- Step 7: The process() method of the streams microservice layer sequentially reads the AUTH_MSG message from the specified topic;

- Step 8: The parameters are extracted from the AUTH_MSG for subsequent verification;

- Step 9a: The micro-caching mechanism is utilized. The verify streams microservice queries local off-chain storage to retrieve the device fingerprint (identity) that sealed the message. The query is composed of the GUID and the seals indices. As an extension, a stored procedure or a trigger-like mechanism can be employed with the local off-chain storage to generate the session seal key. In this case, the query body is extended with the session nonce parameter, allowing for a reduction in the number of steps necessary before proceeding to Step 12. The confidential computing strategy should be implemented to ensure the strict protection of data in transit;

- Step 9b: The appropriate identity is returned, or an identity Not Found Error is generated. If the extension mentioned in Step 9a is applied, the session seal key is returned instead;

- Step 10: If the session seal key is successfully generated (or obtained), the steps related to querying the distributed ledger layer (Hyperledger Fabric) are omitted, and Step 12 is executed instead;

- Step 11a: Otherwise, the identity Not Found Error results in a query via the communication layer to the Hyperledger Fabric Gateway service of the distributed ledger layer;

- Step 11b: The chaincode (transaction) is executed to generate the session seal key based on the device GUID, a list of seal indices, and the session nonce;

- Step 11c: The device GUID along with the session seal key is returned from the ledger, or a relevant error is produced;

- Step 12: Entity identities are compared by verifying the extracted signature that sealed the AUTH_MSG against the signature calculated with the session seal key returned from Step 10, Step 11c, or optionally, Step 9b. If the signatures match, the AUTH_MSG undergoing the verification process will be preserved. If they do not match, the message may either be discarded or stored in a separate queue for the identification of potentially malicious or faulty devices;

- Step 13: The streams microservice sequentially reads and verifies the session-related DATA_MSG messages;

- Step 14: The session seal key from either Step 10 or Step 11c is used, and the signatures of the DATA_MSG are compared;

- Step 15: Verified DATA_MSG is sent to the appropriate topic;

- Step 16: An entity representing the subscriber layer reads the verified data streams using the read_streams() method, based on the subscribed topics and the authorization policy.
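The verification path in Steps 7 to 15 can be sketched as follows. The sketch rests on several assumptions: HMAC-SHA256 stands in for the key-derivation and signature functions, message fields are encoded as canonical JSON, the freshness window `MAX_AGE_MS` is a hypothetical value, the cache is a plain dictionary from GUID to seal store, and `ledger_query` is a hypothetical callable standing in for the Fabric Gateway query that returns a session seal key or `None`.

```python
import hashlib
import hmac
import json

MAX_AGE_MS = 5_000  # hypothetical freshness window for replay protection


def session_key(seals, nonce_hex):
    """Rebuild the session seal key from seals and the hex-encoded nonce."""
    nonce = bytes.fromhex(nonce_hex)
    return hmac.new(nonce, b"".join(seals), hashlib.sha256).digest()


def expected_signature(key, msg):
    """Recompute the signature over every field except the signature itself."""
    fields = {k: v for k, v in msg.items() if k != "signature"}
    canonical = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hmac.new(key, canonical, hashlib.sha256).hexdigest()


def signature_ok(key, msg):
    """Step 12/14: constant-time comparison of received and computed values."""
    return hmac.compare_digest(
        expected_signature(key, msg).encode("utf-8"),
        msg["signature"].encode("utf-8"),
    )


def verify_auth(msg, cache, ledger_query, now_ms):
    """Return the session seal key if the AUTH_MSG verifies, else None."""
    if abs(now_ms - msg["timestamp"]) > MAX_AGE_MS:
        return None  # stale timestamp: possible replay
    store = cache.get(msg["guid"])  # Step 9: micro-cache lookup
    if store is not None:
        if not all(0 <= i < len(store) for i in msg["indices"]):
            return None
        key = session_key([store[i] for i in msg["indices"]], msg["nonce"])
    else:  # Step 11: fall back to the distributed ledger
        key = ledger_query(msg["guid"], msg["indices"], msg["nonce"])
        if key is None:
            return None
    return key if signature_ok(key, msg) else None


def verify_stream(auth_msg, data_msgs, cache, ledger_query, now_ms):
    """Split a stream into verified records and records for the invalid queue."""
    key = verify_auth(auth_msg, cache, ledger_query, now_ms)
    if key is None:
        return [], [auth_msg, *data_msgs]
    verified, invalid = [], []
    for msg in data_msgs:  # Steps 13-15
        ok = msg["nonce"] == auth_msg["nonce"] and signature_ok(key, msg)
        (verified if ok else invalid).append(msg)
    return verified, invalid
```

Records returned in the second list correspond to the separate queue mentioned in Step 12, where they can be inspected to identify potentially malicious or faulty devices.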

Figure 10.

Sequence diagram for verification of the data streams operation.

5. Security and Reliability Risk Assessment

In this section, we conduct a high-level security risk assessment that considers several security and reliability threats across the application, network, and perception layers of the dissemination framework.

5.1. Analysis of Attack Resilience

The proposed framework for data distribution within the federation may be exposed to risks due to the valuable data collected, transmitted, and processed. These data can be particularly sensitive, and their disclosure may expose mission participants to serious human or material losses. The attacker’s goal in federated IoT ecosystems is most often to steal or forge data through manipulated IoT devices, disrupt transmissions, or use IoT devices to launch other attacks, including DoS/DDoS attacks that prevent access to federated services. The attacks that can be carried out on federated IoT networks are the same as those that can be carried out on non-federated networks. However, when analyzing attacks on federated networks, it is essential to consider an attacker who belongs to a federated organization other than the one that owns the targeted devices or services. Such an attacker is likely to have more rights than a non-federated entity, but fewer than a member of the organization that owns the devices or services. Table 1 lists possible attacks on the developed data dissemination system. The criterion for selecting attacks is the possibility of violating information security properties. The list of attacks is based on the work on the security of the federated environment []. Some attacks presented in Table 1 are generalized categories encompassing different attack techniques. An example of such an attack is the DoS/DDoS attack, which can include flood attacks, fragmentation attacks, and reflection amplification attacks.

Table 1.

Types of attacks that can be attempted against the framework.

Cryptanalysis includes a set of attacks aimed at obtaining the plaintext of a transmitted message, which can be carried out in the three distinguished layers of the framework. Examples of this attack include brute-force, dictionary, and statistical attacks. To make it challenging to obtain the plaintext of a message sent between an IoT device and an application gateway or another IoT device, it is recommended to use encryption and HMAC algorithms that are currently considered secure. The parameters used to create the key must also have sufficient entropy so that an attacker cannot guess the key used to secure the message.

Spoofing attacks include a large set of attacks designed to impersonate, in the case of our framework, a device, application gateway, or distributed ledger node. Since every message is authenticated in the framework, attacks of this type cannot be launched unless the attacker gains access to the parameters from which the key can be created. Furthermore, mutual authentication of the application gateway with the ledger node is carried out using TLS based on the certificates of both parties.

5.1.1. General Attacks

An attack that can apply to all layers of the architecture of the adopted solution is a (distributed) denial of service (DoS/DDoS) attack. When applied to the perception layer, it can involve the unauthorized seizure of IoT device resources. The attack aims to exhaust an available resource; the most common targets are network bandwidth, CPU time, and internal memory. IoT devices are vulnerable to this type of attack due to their resource limitations. For the network layer, a DoS/DDoS attack can involve jamming communications between devices. The attacker tries to hijack the transmission bandwidth by sending a signal using the same frequencies as the communicating devices. As with the DoS/DDoS attack at the perception layer, defense options against this attack are very limited. At the application layer, the framework is also susceptible to DoS/DDoS attacks because of the transactions performed on the distributed ledger. These transactions either add data to the ledger (e.g., device registration) or read data from it (e.g., reading the identity parameters of IoT devices), and through them Hyperledger Fabric resources can be exhausted. However, the solutions adopted in this work, such as off-chain data storage and the multiplication of ledger nodes in each organization, significantly reduce the effectiveness of DoS attacks at the application layer. Furthermore, in our framework, the number of Kafka brokers, microservices, and IoT gateways could be increased to handle more requests. Moreover, using security information and event management (SIEM), we can identify specific properties of the requests involved in a DoS attack to detect the source of the overload and reject all malicious requests at the gateway level.

5.1.2. Perception Layer Attacks

- Device capture: The attacker can access the IoT device and generate messages sealed with its identity. In this situation, it is assumed that such a device’s behavioral pattern (distinctive features) will change. Consequently, it will be possible to use analytics tools (SIEM) to detect these changes, mainly related to network fingerprints. Moreover, when a compromised device is detected, it can be immediately marked and revoked from the distributed ledger. Also, security enclaves (e.g., HSM) can increase device resilience against capture and manipulation.

- Malicious devices: The attack involves adding a fake IoT device to the network. In our framework, a device is registered once in a protected environment, and we assume that the process is coordinated by an authorized person. Under this assumption, a fake device cannot be registered. An unregistered device cannot be authenticated, so its messages are rejected and the device is consequently detected and blocked.

- Device tampering: The attack consists of changing software or hardware components of the IoT device. In our framework, any changes to a unique device fingerprint would generate numerous failed verification attempts.

- Sybil attacks: The attack consists of a single IoT device presenting multiple identities. This situation is prevented by the secure registration process.

- Side-channel (timing) attacks: Attacks consist of obtaining the key by analyzing the implementation of the protocol (e.g., current power consumption and time dependencies). The framework could be susceptible to a timing attack when the device uses unique data to seal messages. In this situation, it is possible to predict from where these data are read, but not the values of these data; therefore, we believe that this attack is rather difficult to perform in practice.
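
The perception-layer mitigations above rely on comparing what a device presents with what was recorded at registration. The sketch below illustrates that check under simplifying assumptions: the fingerprint is a dictionary of features hashed with SHA-256, and an in-memory `DeviceRegistry` (a hypothetical name) stands in for the identity records kept on the distributed ledger. The feature names and return labels are illustrative only.

```python
import hashlib
import json


def fingerprint_digest(features: dict) -> str:
    # Canonical JSON so that the same features always yield the same digest.
    canonical = json.dumps(features, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class DeviceRegistry:
    """In-memory stand-in for device identity records on the ledger."""

    def __init__(self):
        self._records = {}

    def register(self, device_id: str, features: dict) -> None:
        # Assumed to run once, in a protected environment.
        self._records[device_id] = {
            "digest": fingerprint_digest(features),
            "revoked": False,
        }

    def revoke(self, device_id: str) -> None:
        # Marks a captured or compromised device.
        self._records[device_id]["revoked"] = True

    def check(self, device_id: str, observed_features: dict) -> str:
        record = self._records.get(device_id)
        if record is None:
            return "unregistered"          # malicious (fake) device
        if record["revoked"]:
            return "revoked"               # captured device
        if record["digest"] != fingerprint_digest(observed_features):
            return "fingerprint-mismatch"  # tampered device
        return "ok"
```

Repeated `fingerprint-mismatch` results for one device are the kind of signal that an analytics tool could use to flag it for revocation.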

5.1.3. Network Layer Attacks

- Eavesdropping: This attack involves eavesdropping on transmissions and obtaining messages (credentials). In our framework, we have separated the key used to secure the communication channel for IoT devices from the key used for the data authenticity protection mechanism. Only the registration phase is critical and must be carried out in a protected, trusted environment.

- Replay attack: This attack is based on intercepting a message and sending it later. In the developed solution, this attack is prevented because each message contains a creation timestamp, which is verified. When decrypting the message, the IoT device or the gateway compares this timestamp with the current time. The disadvantage of this solution is that it requires correctly synchronized time on each IoT device.

- Packet injection: This attack involves injecting packets to disrupt communications. In the case of our framework, the attack can consist of duplicating transmitted packets or creating invalid packets. Since the packets are verified by the streams microservice layer in both cases, the recipient will ignore them.

- Session hijacking: This attack compromises the session key by stealing or predicting it. Within our framework, it is mitigated through the session nonce, timestamps, and the device-based key used for data authenticity.

- Man-in-the-Middle: This attack consists of changing the messages sent between the IoT device and the verifying microservices. Any change to the message will prevent it from being verified due to the data authenticity protection mechanism. Moreover, invalid messages can be logged to identify faulty (malicious) devices.
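
The network-layer mitigations above combine a data authenticity seal, a verified timestamp, and a nonce. The following minimal sketch shows how such checks can fit together. It assumes HMAC-SHA256 as the seal, a 30-second freshness window, and a plain in-memory nonce set; these choices and all names are assumptions for illustration, not the framework's actual message format.

```python
import hashlib
import hmac
import json

MAX_SKEW_SECONDS = 30  # assumed freshness window


def seal(key: bytes, payload: str, timestamp: float, nonce: str) -> dict:
    # Serialize the fields once and authenticate the exact bytes.
    body = json.dumps(
        {"payload": payload, "ts": timestamp, "nonce": nonce}, sort_keys=True
    )
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


class Verifier:
    def __init__(self, key: bytes):
        self.key = key
        self.seen_nonces = set()  # unbounded here; a real system would expire entries

    def verify(self, message: dict, now: float) -> str:
        expected = hmac.new(
            self.key, message["body"].encode("utf-8"), hashlib.sha256
        ).hexdigest()
        # Constant-time comparison avoids leaking the tag through timing.
        if not hmac.compare_digest(expected, message["tag"]):
            return "rejected: bad seal"          # modified or injected message
        fields = json.loads(message["body"])
        if abs(now - fields["ts"]) > MAX_SKEW_SECONDS:
            return "rejected: stale timestamp"   # delayed replay
        if fields["nonce"] in self.seen_nonces:
            return "rejected: duplicate"         # immediate replay or duplicate
        self.seen_nonces.add(fields["nonce"])
        return "accepted"
```

The timestamp check only works if sender and verifier clocks agree to within the chosen window, which is the synchronization requirement noted for the replay defense.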

5.1.4. Application Layer Attacks

- Storage attack: This attack consists of changing device identity features. To prevent this attack, access to the device should be properly secured to prevent changes to data stored in it. In our framework, if the device identity is changed, the device will not be able to authenticate itself. It is almost impossible to change data in a distributed ledger without the knowledge and consent of the organization that owns the IoT device.

- Malicious insider attack: This attack consists of an authorized person misusing their credentials. In the framework, access to data stored in Hyperledger Fabric is possible only for an authenticated and authorized entity that uses an appropriate private key and a valid X.509 certificate. Each access attempt is logged. Resistance to this attack can be enhanced by using SIEM.

- Abuse of authority: The possibility of a successful attack is minimized through chaincodes designed to verify every operation performed on data stored in the distributed ledger. As a result, each organization can, among other things, modify the permissions of its own IoT devices, whereas unauthorized entities cannot.

- Permissions modification by unauthorized users: In the framework, the modification of permissions is only possible through chaincodes; thus, any modification requires the conditions written in the chaincode to be met. If the chaincode is written correctly, only the device owner or an authorized entity can modify the IoT device's permissions.
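
The ownership condition described above can be sketched as follows. This is plain Python modeling the logic only; actual Hyperledger Fabric chaincode would be written against the Fabric contract API, and the class, method names, and organization identifiers here are hypothetical.

```python
class PermissionLedger:
    """Models the owner-only rule that a chaincode could enforce."""

    def __init__(self):
        self._devices = {}   # device_id -> {"owner": org, "permissions": set}
        self.audit_log = []  # every attempt is recorded, allowed or not

    def register_device(self, device_id: str, owner_org: str) -> None:
        self._devices[device_id] = {"owner": owner_org, "permissions": set()}

    def set_permissions(self, caller_org: str, device_id: str, permissions) -> bool:
        device = self._devices.get(device_id)
        # Only the organization that owns the device may change its permissions.
        allowed = device is not None and device["owner"] == caller_org
        self.audit_log.append((caller_org, device_id, "set_permissions", allowed))
        if not allowed:
            return False
        device["permissions"] = set(permissions)
        return True

    def permissions(self, device_id: str) -> set:
        # Return a copy so callers cannot bypass set_permissions.
        return set(self._devices[device_id]["permissions"])
```

Logging denied attempts alongside successful ones gives the audit trail that a SIEM could inspect for insider misuse.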

5.2. Analysis of Fault Resilience

- Distributed ledger node failures: In our framework, we propose that each organization has a minimum of two nodes. Since the data in the blockchain are replicated, the failure of a single node does not affect the operation of the entire Hyperledger Fabric network.

- Kafka cluster (brokers) failure: In our framework, it is possible to maintain the availability of data generated by IoT devices by using the built-in synchronization mechanism and setting an appropriate replication factor.
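
To make the effect of the replication factor concrete, the sketch below computes how many replica failures a single Kafka partition can tolerate. It assumes producers use `acks=all` together with the `min.insync.replicas` setting and that unclean leader election is disabled; the function name and the returned keys are illustrative.

```python
def tolerated_replica_failures(replication_factor: int, min_insync_replicas: int) -> dict:
    """Per-partition failure tolerance under acks=all (simplified model)."""
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("require 1 <= min_insync_replicas <= replication_factor")
    return {
        # Writes keep succeeding while enough in-sync replicas remain.
        "writes_acks_all": replication_factor - min_insync_replicas,
        # Committed records stay readable while one in-sync replica survives.
        "reads": replication_factor - 1,
    }
```

For example, a replication factor of 3 with `min.insync.replicas=2` keeps a partition writable after one replica failure and readable after two.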

6. Framework Evaluation

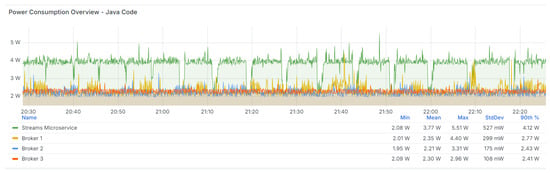

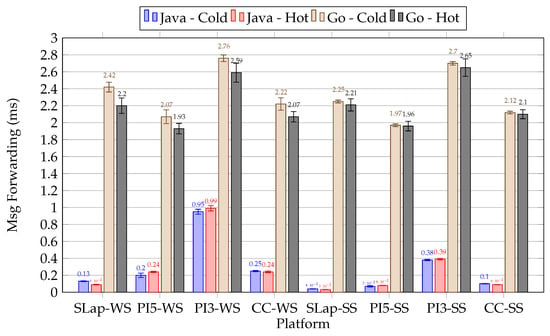

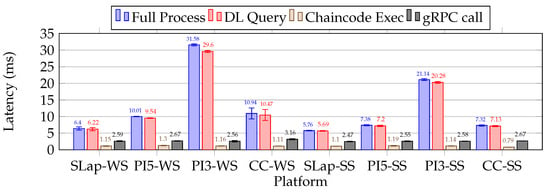

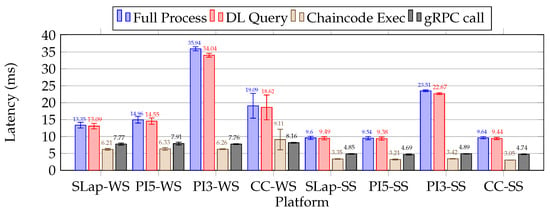

Benchmarking the performance of streaming data processing systems poses a considerable challenge due to the complexities of the global concept of time. This section provides benchmarks for our framework in the Amazon Web Services (AWS) cloud environment and the Raspberry Pi device-based environment. In the AWS environment, we measured the average times for consumers to read the data stream processed through microservices utilizing the Java Kafka Streams API. Our objective was to validate the applicability of our framework in the context of audiovisual streams and to assess its computational stability. Conversely, the Raspberry Pi setup focused on gathering the latency metrics of key internal operations on resource-constrained devices. We also compared the operation latencies of implementations in Java (Kafka Streams API library) and Go (Sarama library) programming languages.

6.1. Cloud Setup

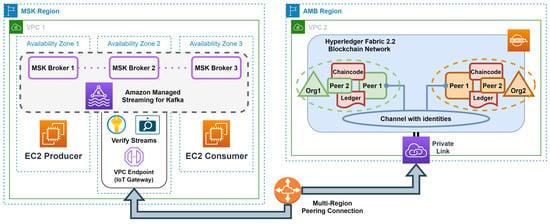

Our experiment seeks to verify our framework’s capability to process audiovisual streams in a distributed and federated cloud environment. Table 2 describes and Figure 11 illustrates the various components of our experimental framework deployed using AWS technology (Setup I).

Table 2. Setup I: An overview of AWS cloud-based environment components.

Figure 11. Setup I: An overview of the AWS cloud-based environment.

The AWS cloud, due to its pay-as-you-go model and pluggable architecture for the COTS services Amazon Managed Streaming–Apache Kafka and Amazon Managed Blockchain–Hyperledger Fabric, enables efficient deployment of our framework while simultaneously minimizing the operational costs associated with its various components’ provisioning, configuration, and maintenance. Consequently, our framework is suitable for federated environments, which are required to ensure zero-day interoperability.

6.2. Resource-Constrained Setup

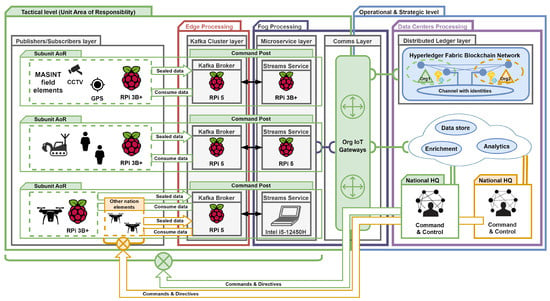

In the second benchmark (Setup II), we chose resource-constrained devices to evaluate their utilization and computational power. We positioned the specific layers of our framework within the IoT environment locations: actuators/sensors, edge, Fog, data center (Cloud). Moreover, our setup simulates infrastructure placement in tactical military networks and can be adapted for scenarios involving civil components during HADR operations. Table 3 and Figure 12 represent the device-based environment.

Table 3. Setup II: An overview of the Raspberry Pi device-based environment components.

Figure 12. Setup II: An overview of the Raspberry Pi device-based environment.

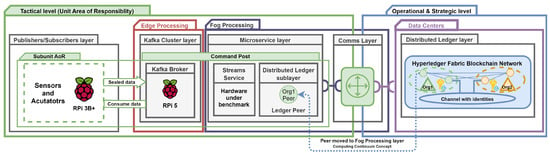

Furthermore, in Section 3, we presented the meaning of the computing continuum concept. By relocating the ledger peer from the data center to the Fog location, we refined Setup II to facilitate benchmarking with the mentioned concept (Figure 13).

Figure 13. Setup II: The enabled computing continuum concept.

The Raspberry Pi device-based environment facilitates the straightforward out-of-the-box deployment of our framework while maintaining low operational costs. Moreover, the presented environment promotes the computing continuum concept, which can enhance the deployment of services at the tactical level in settings characterized by DIL networks, as well as in federated environments that require zero-day interoperability.

Additionally, we considered integrating information classification approaches with the CC concept to enable the processing and sharing of relevant IOs based on their value of information. The versatility of Raspberry Pi devices allows for integration with a neural processing unit, a specialized processor designed to accelerate artificial intelligence and machine learning tasks. This feature can facilitate parallel processing of large data volumes within the Fog component, making it especially well-suited for applications involving contextual dissemination through image recognition or natural language processing, as well as for data quality assessment.

Moreover, the hardware of these devices is compatible with various operating systems, including Windows 10 IoT Core and Linux OS. Lastly, the general-purpose input/output (GPIO) pins provide the flexibility to experiment with a range of communication protocols such as Sigfox, LoRaWAN, NB-IoT, Zigbee, and BLE.

6.3. Processing Systems Benchmarking

When conducting performance studies (benchmarks) of streaming data processing systems, it is necessary to consider the following key metrics: latency, throughput, the usage of hardware–software resources (CPU and RAM), and power consumption (PC). Furthermore, the overall performance evaluation can be affected by the input parameters (e.g., system configuration) and processing scenarios (workloads). In the context of the proposed framework, the relevant parameters are listed below:

- Configuration of the data queue layer: number of brokers, partitions, and data stream replication factor;

- Parallelization (horizontal-scaling) of stream processors (microservices);

- Kind (e.g., windowed aggregation) and type of operations (e.g., stateless);

- Number of organizations that joined a federated IoT environment and registered devices (identity count);

- Number of peers of the distributed ledger layer;

- Selected programming language for microservices and chaincodes.

Generally, latency is the time it takes for a system (platform) under test (SUT) to process a message, measured from the moment the input message is read from the source until the output message is written by the SUT. It is important to distinguish two types of latency: event-time latency and processing-time latency. The first refers to the interval between the timestamp assigned to the input message by the source and the time the SUT generates an output message. The second refers to the interval between the time the SUT ingests (reads) an input message and the time the SUT generates an output message.
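
The two latency types differ only in their starting timestamp, as the following minimal sketch shows. The field names are assumptions, and all three timestamps are assumed to be expressed in seconds on a common clock.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordTimes:
    created_at: float    # timestamp assigned to the input message by the source
    ingested_at: float   # time the SUT reads the input message
    emitted_at: float    # time the SUT generates the output message

    def event_time_latency(self) -> float:
        # Includes any delay before the SUT ingests the message.
        return self.emitted_at - self.created_at

    def processing_time_latency(self) -> float:
        # Covers only the time spent inside the SUT.
        return self.emitted_at - self.ingested_at


def mean(values) -> float:
    values = list(values)
    return sum(values) / len(values)
```

The difference between the two values for a record is the time the message waited between creation and ingestion, which is why event-time latency also depends on clock synchronization between the source and the SUT.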

6.3.1. Data Dissemination System Benchmarking

In the context of Apache Kafka, latency (end-to-end, E2E) is defined as the total time from when application logic produces a record using KafkaProducer.send() to when the application logic can consume that record through KafkaConsumer.poll(). This E2E latency includes various intermediate operations that can affect the overall duration. The publish operation time encompasses flying time, queuing time, and internal record processing time. Flying time refers to the duration for transmitting the record to the leader broker, queuing time pertains to the time taken by the network thread to add the request to the broker queue, and record processing time involves reading the request from the queue and appending the records to the log. Furthermore, the replication factor and cluster load impact commit time, which is the time required for the slowest in-sync follower broker to retrieve a record from the leader. Moreover, the catch-up operation refers to the time a consumer takes to reach and read the latest record while its offset pointer is lagging behind the latest offset. Lastly, fetch operation time impacts the Kafka consumer record read latency, as the consumer continually polls the leader broker for more data from its subscribed topic partition.
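
The components named above can be organized as in the sketch below. It assumes, as a simplification, that the components are measured per record in milliseconds and add up to the E2E latency; the class and field names are illustrative and are not part of the Kafka API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class E2ELatency:
    """Per-record latency components in milliseconds (additive model assumed)."""
    flying_ms: float      # transmission of the record to the leader broker
    queuing_ms: float     # request added to the broker queue
    processing_ms: float  # request read from the queue and appended to the log
    commit_ms: float      # slowest in-sync follower retrieves the record
    catch_up_ms: float    # consumer works through its offset lag
    fetch_ms: float       # consumer poll retrieves the record

    @property
    def publish_ms(self) -> float:
        return self.flying_ms + self.queuing_ms + self.processing_ms

    @property
    def total_ms(self) -> float:
        return self.publish_ms + self.commit_ms + self.catch_up_ms + self.fetch_ms

    def dominant_component(self) -> str:
        parts = {
            "publish": self.publish_ms,
            "commit": self.commit_ms,
            "catch_up": self.catch_up_ms,
            "fetch": self.fetch_ms,
        }
        return max(parts, key=parts.get)
```

Breaking a measurement down this way indicates which setting to examine first, for instance the replication factor when commit time dominates.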

As previously noted, various factors can influence latency. Our experiments focused on the detailed configuration of the Kafka parameters for our microservice that operates both as a producer and a consumer during single record processing. Consequently, the following configuration was uniformly applied across all scenarios (Table 4).

Table 4. All scenarios configuration parameters.

6.3.2. Distributed Ledger System Benchmarking

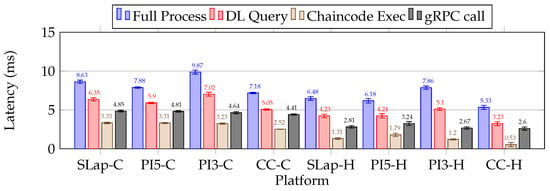

Regarding the distributed ledger operations benchmarking, we focused on collecting latency metrics from two sources: one from the microservice ledger query operation (DL query), and another directly from the log entries of the ledger peer server. In the peer logs, we focused on two types of entries: the duration of the gRPC call and the time required to evaluate the chaincode (chaincode execution), specifically to acquire the device’s seal from the ledger. The first metric is essential for monitoring and performance analysis, as it offers valuable insights into the efficiency and responsiveness of the services involved in gRPC communication. Conversely, the second metric pertains to a peer node that endorses transactions, reflecting the computational burden.
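
Collecting gRPC call durations from peer log entries can be sketched as below. The sketch assumes that each relevant log line carries a field of the form `grpc.call_duration=<value><unit>` with a single Go-style duration unit; the exact log layout depends on the peer version and logging configuration, so the pattern should be treated as an assumption and adjusted to the actual logs.

```python
import re
import statistics

# Conversion factors from a single Go-style duration unit to milliseconds.
_UNIT_TO_MS = {"ns": 1e-6, "µs": 1e-3, "us": 1e-3, "ms": 1.0, "s": 1000.0}
_PATTERN = re.compile(r"grpc\.call_duration=([0-9]*\.?[0-9]+)(ns|µs|us|ms|s)\b")


def call_durations_ms(lines):
    """Extract call durations (in ms) from log lines; other lines are skipped."""
    durations = []
    for line in lines:
        match = _PATTERN.search(line)
        if match:
            durations.append(float(match.group(1)) * _UNIT_TO_MS[match.group(2)])
    return durations


def summarize(durations):
    """Return basic statistics for a non-empty list of durations."""
    return {
        "count": len(durations),
        "mean_ms": statistics.mean(durations),
        "max_ms": max(durations),
    }
```

The same approach applies to chaincode execution entries once the corresponding field in the peer log has been identified.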