DuSiamIE: A Lightweight Multidimensional Infrared-Enhanced RGBT Tracking Algorithm for Edge Device Deployment

Abstract

1. Introduction

- Dual-stream Siamese network: We introduce a novel dual-stream Siamese network architecture for optical sensing on edge devices. Compared with a single-stream Siamese network, it improves the precision rate (PR) and success rate (SR) by 10.5 and 12.4 percentage points, respectively.

- Infrared feature enhancement module: We present a specialized module that enhances infrared features and mitigates feature loss during extraction, in particular the under-utilization of infrared features that is common in optical sensing. Adding this module improves the PR and SR by a further 2.7 and 3.3 percentage points, respectively.

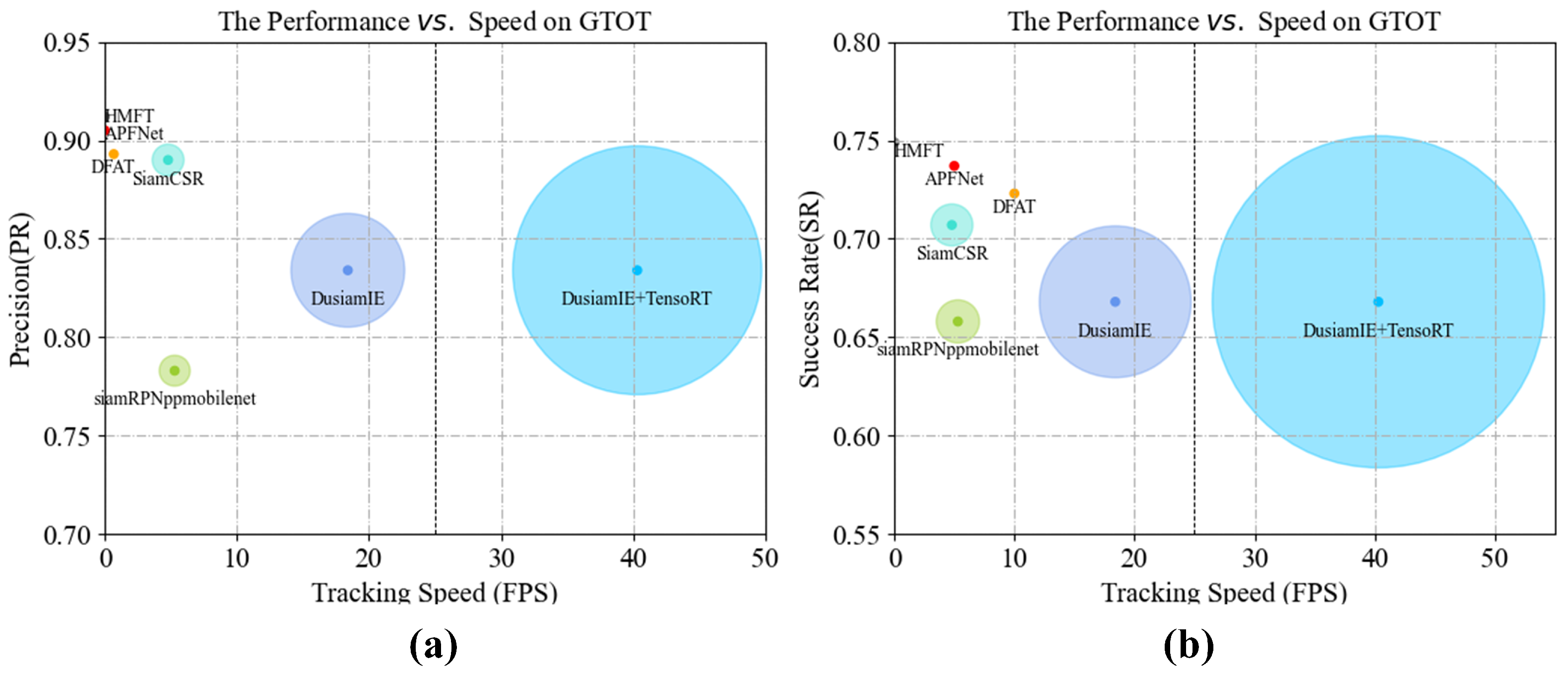

- Real-time inference with NVIDIA TensorRT: To enable real-time tracking on resource-constrained edge devices, we optimize inference with NVIDIA TensorRT. This raises the tracking speed from 18.4 frames per second (FPS) to 40.3 FPS, which meets the real-time requirement for target tracking.
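
The speed figures quoted in the last contribution translate directly into a per-frame time budget. The following minimal sketch is plain arithmetic on the two FPS values stated above; the function and constant names are illustrative and do not come from the paper.

```python
def frame_latency_ms(fps: float) -> float:
    """Average processing time per frame in milliseconds at a given frame rate."""
    return 1000.0 / fps


def speedup(fps_before: float, fps_after: float) -> float:
    """Ratio of the optimized frame rate to the original frame rate."""
    return fps_after / fps_before


# FPS values as quoted in the contribution list (before and after TensorRT).
FPS_BEFORE = 18.4
FPS_AFTER = 40.3

print(f"latency before: {frame_latency_ms(FPS_BEFORE):.1f} ms/frame")
print(f"latency after:  {frame_latency_ms(FPS_AFTER):.1f} ms/frame")
print(f"speedup:        {speedup(FPS_BEFORE, FPS_AFTER):.2f}x")
```

Under these figures the per-frame time drops from roughly 54 ms to roughly 25 ms, a speedup of about 2.2x.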

2. Related Work

2.1. RGB Tracking

2.2. RGBT Tracking

3. Methodology

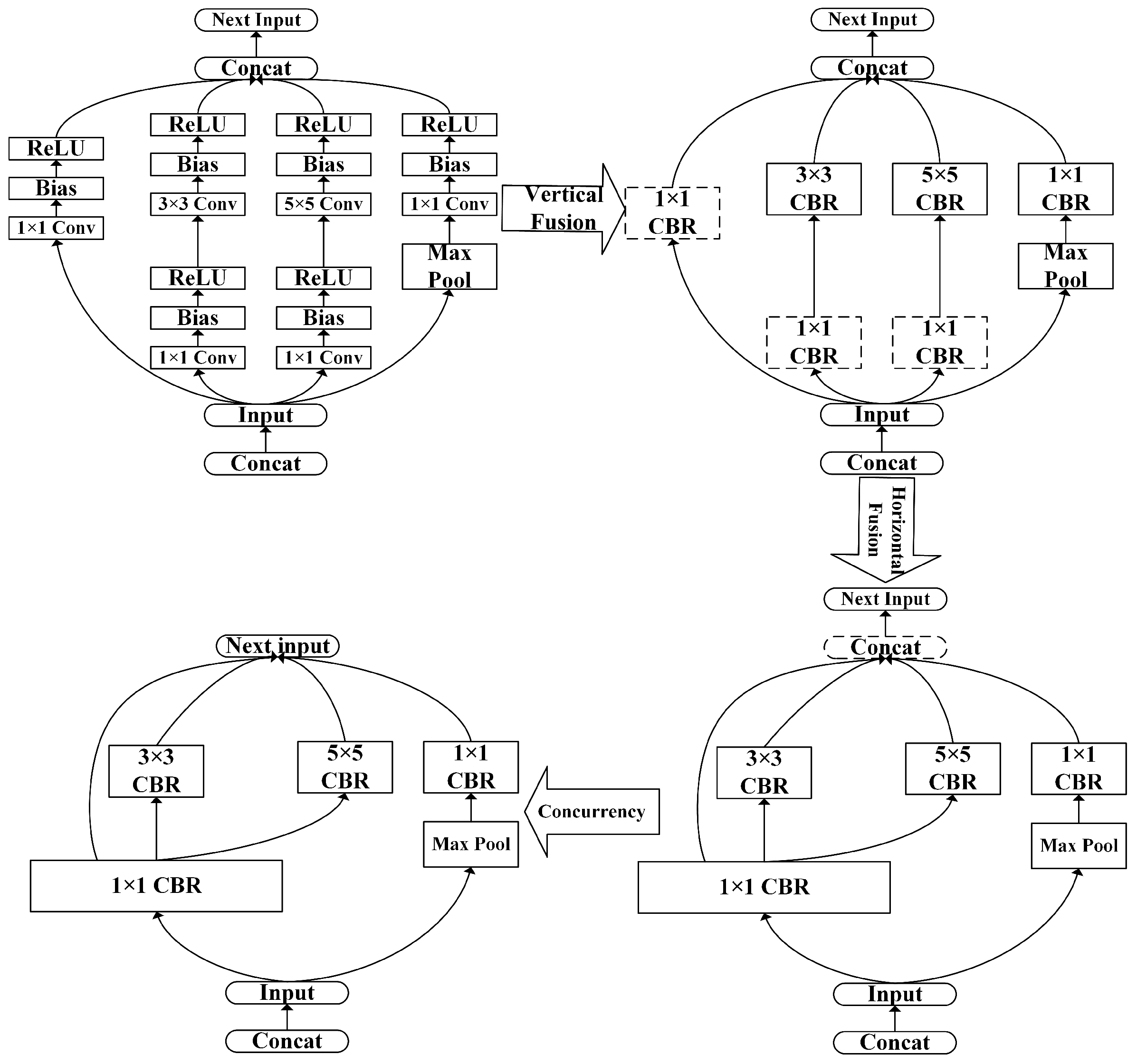

3.1. Lightweight Dual-Stream Siamese Network Optimized for Edge Deployment

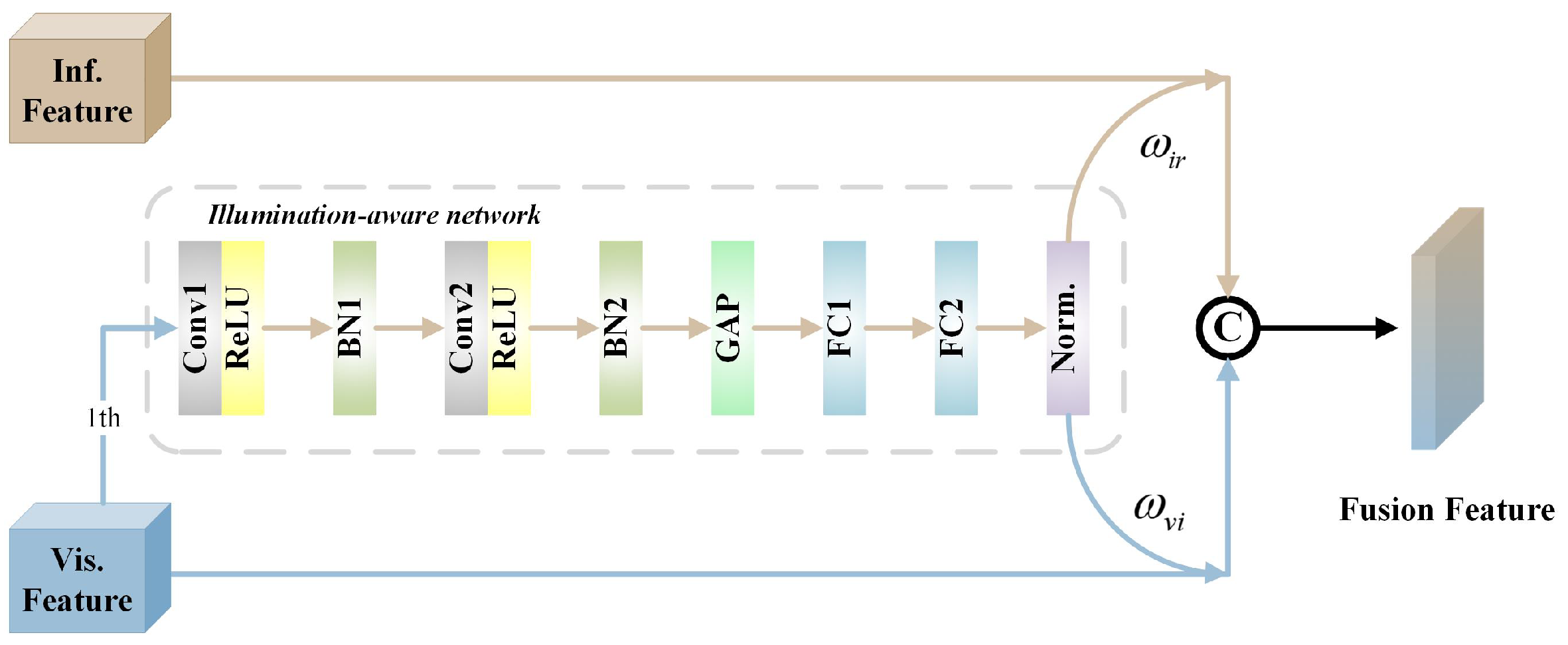

3.2. Multidimensional Infrared Feature Enhancement Module

3.3. Optimization of Inference Speed for Embedded Devices

4. Experiments and Discussions

4.1. Datasets and Evaluation Indicators

4.1.1. GTOT Dataset and Metrics

4.1.2. RGBT234 Dataset and Metrics

4.2. Experimental Environment

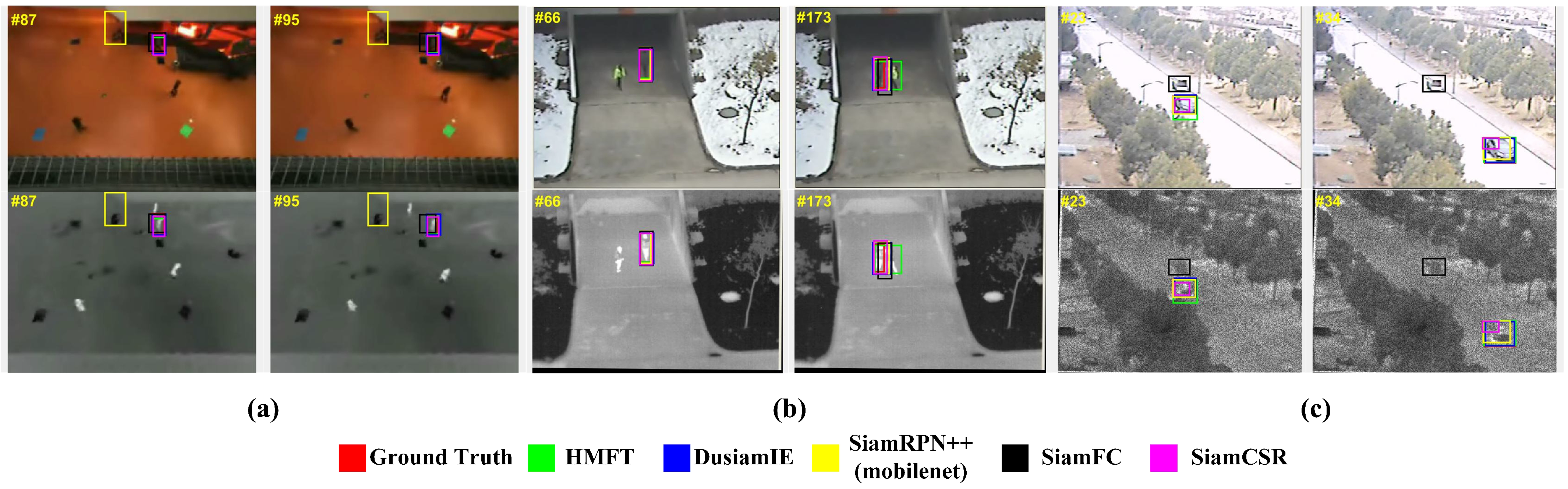

4.3. Comparison with Other Methods

4.3.1. Comparison of Results on GTOT

4.3.2. Comparison of Results on RGBT234

4.4. Ablation Study

4.5. Discussions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Metric | SiamRPNppMobile | SiamCSR | DFAT | APFNet | HMFT | DuSiamIE | DuSiamIE + TensorRT |
|---|---|---|---|---|---|---|---|
| PR (%) | 78.3 | 89.0 | 89.3 | 90.5 | 91.2 | 83.4 | 83.4 |
| SR (%) | 65.8 | 70.7 | 72.3 | 73.7 | 74.9 | 66.8 | 66.8 |
| FPS | 5.3 | 4.8 | 0.69 | 0.02 | 0.02 | 18.4 | 40.3 |

| Attribute (PR/SR) | SiamRPNppMobile | SiamFT | DFAT | HMFT | APFNet | DuSiamIE + TensorRT |
|---|---|---|---|---|---|---|
| NO | 83.8/64.2 | 84.8/62.0 | 80.9/55.7 | 90.9/67.4 | 80.8/54.1 | 82.2/61.2 |
| PO | 73.5/54.3 | 72.7/50.6 | 68.4/50.3 | 85.7/62.1 | 81.8/58.2 | 81.7/58.2 |
| HO | 59.4/43.4 | 57.6/40.7 | 75.4/55.6 | 66.4/46.9 | 84.7/59.6 | 67.5/45.1 |
| LI | 59.3/42.4 | 68.8/47.4 | 72.9/52.5 | 83.3/59.1 | 83.7/58.8 | 61.4/42.4 |
| LR | 66.4/46.5 | 69.6/46.5 | 74.1/53.3 | 76.3/57.1 | 83.4/58.8 | 74.1/46.4 |
| TC | 70.6/53.0 | 70.7/50.3 | 80.2/56.9 | 72.2/50.4 | 84.6/64.2 | 74.9/54.8 |
| DEF | 69.5/53.2 | 69.7/51.8 | 71.6/52.3 | 77.6/57.9 | 86.6/60.7 | 75.3/55.0 |
| FM | 65.3/46.9 | 62.0/42.2 | 71.4/52.2 | 65.9/46.9 | 79.9/59.1 | 75.8/51.2 |
| SV | 72.7/55.5 | 71.1/50.5 | 73.9/53.6 | 80.0/59.2 | 85.6/59.4 | 78.7/56.2 |
| MB | 64.5/48.7 | 59.0/43.3 | 76.9/53.1 | 70.6/50.9 | 89.0/62.7 | 66.3/47.3 |
| CM | 66.4/49.9 | 63.2/45.7 | 70.9/49.5 | 77.9/56.2 | 85.8/60.9 | 69.1/48.3 |
| BC | 57.8/39.3 | 60.5/40.3 | 79.5/57.7 | 73.8/49.8 | 84.5/61.2 | 64.8/41.9 |
| ALL | 69.7/51.7 | 68.8/48.6 | 75.8/55.2 | 78.8/56.8 | 82.7/57.9 | 75.3/52.6 |
| FPS | 5.3 | - | 0.7 | 0.32 | 0.5 | 34.7 |
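
Each accuracy cell in the table above packs two numbers into one string, which makes side-by-side comparison awkward. As a minimal sketch, assuming each cell reads as `PR/SR` in percent, the snippet below parses the ALL row for two of the columns and reports the accuracy gap alongside the FPS ratio. The helper name `parse_pr_sr` and the dictionary layout are illustrative choices, not part of the paper.

```python
from typing import Tuple


def parse_pr_sr(cell: str) -> Tuple[float, float]:
    """Split a 'PR/SR' table cell into two floats (assumed cell layout)."""
    pr, sr = cell.split("/")
    return float(pr), float(sr)


# Values copied from the ALL and FPS rows of the table above.
ALL_ROW = {"SiamRPNppMobile": "69.7/51.7", "DuSiamIE + TensorRT": "75.3/52.6"}
FPS_ROW = {"SiamRPNppMobile": 5.3, "DuSiamIE + TensorRT": 34.7}

ref_pr, ref_sr = parse_pr_sr(ALL_ROW["SiamRPNppMobile"])
our_pr, our_sr = parse_pr_sr(ALL_ROW["DuSiamIE + TensorRT"])

# Differences in percentage points, rounded to the table's precision.
delta_pr = round(our_pr - ref_pr, 1)
delta_sr = round(our_sr - ref_sr, 1)
fps_ratio = FPS_ROW["DuSiamIE + TensorRT"] / FPS_ROW["SiamRPNppMobile"]

print(f"PR gap: {delta_pr:+.1f} points, SR gap: {delta_sr:+.1f} points")
print(f"FPS ratio: {fps_ratio:.2f}x")
```

The same parsing applies to any attribute row, so per-attribute gaps can be computed by swapping in the corresponding cells.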

| DuSiamIE | LIFA | MIFE | TensorRT | PR (%) | SR (%) | FPS |
|---|---|---|---|---|---|---|
| ✓ | | | | 70.2 | 51.1 | |
| ✓ | ✓ | | | 80.7 | 63.5 | |
| ✓ | ✓ | ✓ | | 83.4 | 66.8 | 18.3 |
| ✓ | ✓ | ✓ | ✓ | 83.4 | 66.8 | 40.3 |
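
The ablation rows can be read as a cumulative build-up, so the contribution of each component is the difference between consecutive rows. The sketch below computes those differences from the PR and SR values in the table above. The row labels are an interpretation of the check marks (the first row is assumed to be the configuration without LIFA or MIFE), and the function name is hypothetical.

```python
from typing import List, Tuple

# (configuration label, PR %, SR %) taken from the ablation table above.
# Labels are an assumed reading of the check-mark columns.
ABLATION: List[Tuple[str, float, float]] = [
    ("base", 70.2, 51.1),
    ("+ LIFA", 80.7, 63.5),
    ("+ LIFA + MIFE", 83.4, 66.8),
]


def incremental_gains(
    rows: List[Tuple[str, float, float]]
) -> List[Tuple[str, float, float]]:
    """Gain in PR and SR (percentage points) of each row over the previous row."""
    gains = []
    for (_, prev_pr, prev_sr), (label, pr, sr) in zip(rows, rows[1:]):
        gains.append((label, round(pr - prev_pr, 1), round(sr - prev_sr, 1)))
    return gains


for label, d_pr, d_sr in incremental_gains(ABLATION):
    print(f"{label}: PR +{d_pr}, SR +{d_sr}")
```

The two steps come out at 10.5/12.4 and 2.7/3.3 percentage points, which matches the gains quoted in the contribution list. The TensorRT row is omitted because it leaves PR and SR unchanged and only affects FPS.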

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Wu, H.; Gu, Y.; Lu, J.; Sun, X. DuSiamIE: A Lightweight Multidimensional Infrared-Enhanced RGBT Tracking Algorithm for Edge Device Deployment. Electronics 2024, 13, 4721. https://doi.org/10.3390/electronics13234721
