Abstract

With the wide deployment of edge devices, distributed network traffic data are rapidly increasing. Traditional detection methods for malicious traffic rely on centralized training, in which a single server is often used to aggregate private traffic data from edge devices, so as to extract and identify features. However, these methods face difficult data collection, heavy computational complexity, and high privacy risks. To address these issues, this paper proposes a federated learning-based distributed malicious traffic detection framework, FL-CNN-Traffic. In this framework, edge devices utilize a convolutional neural network (CNN) to process local detection, data collection, feature extraction, and training. A server aggregates model updates from edge devices using four federated learning algorithms (FedAvg, FedProx, Scaffold, and FedNova) to build a global model. This framework allows multiple devices to collaboratively train a model without sharing private traffic data, addressing the “Data Silo” problem while ensuring privacy. Evaluations on the USTC-TFC2016 dataset show that for independent and identically distributed (IID) data, this framework can reach or exceed the performance of centralized deep learning methods. For Non-IID data, this framework outperforms other neural networks based on federated learning, with accuracy improvements ranging from 2.59% to 4.73%.

1. Introduction

With the widespread adoption of edge devices, distributed private traffic data are growing rapidly. As a medium for information transmission and interaction in cyberspace, traffic contains a wealth of critical information on sensitive users, behavioral patterns, and interaction habits. While providing a foundation for the intelligence of network services, such information also presents opportunities for malicious attackers. Consequently, monitoring malicious traffic has become a key focus in network security research [1]. Traditionally, malicious traffic detection relies on centralized data collection, uniform processing, and modeling. This approach not only requires significant resources, but also increases the risk of data privacy breaches. As a result, users become reluctant to share raw traffic data that may contain private information, ultimately creating “Data Silos” [2]. Therefore, it is necessary to develop more secure, distributed methods for malicious traffic detection so as to protect privacy effectively [3].

As a distributed machine learning framework, federated learning differs from traditional centralized detection methods, because it eliminates the need to share raw data, while allowing multiple edge devices, also known as clients, to process data and build models locally. In this way, participants only need to upload model parameters to a central server for aggregation, resulting in a shared global detection model [4]. Moreover, this approach alleviates the substantial workload associated with centralized data collection. While reducing data processing and storage costs, it can effectively safeguard user privacy and enable cross-domain learning and utilization of data [5].

Existing deep learning methods generally rely on centralized traffic data collection for unified processing and detection. In contrast, this paper investigates federated learning-based distributed malicious traffic detection. This approach can effectively leverage data and computational resources from diverse organizations and devices, while ensuring data privacy [6]. Facilitating information sharing and collaboration among various data owners reduces the dependence of global detection models on any single data source [7].

The contributions of this paper are summarized as follows:

- Propose a federated learning-based distributed malicious traffic detection framework (FL-CNN-Traffic): This framework combines a CNN with several federated learning aggregation algorithms (FedAvg, FedProx, Scaffold, and FedNova) to enable local detection on edge devices and global model aggregation on a central server. In malicious traffic detection tasks, the framework integrates data privacy preservation with cross-user collaboration, effectively addressing the “Data Silo” issue while mitigating the privacy leakage risks inherent in centralized detection methods.

- Establish a dynamic collaboration mechanism between the independent client models and the global model: In this framework, clients train and update their models independently on local data and then upload only the updated parameters to the server, which aggregates them to generate a global model. This collaboration mechanism avoids the centralized transmission of raw traffic data, protecting data privacy while learning the diverse data features across devices.

- Validate performance and evaluate adaptability in multiple data scenarios: In the IID data scenario, experiments show that the distributed detection framework for malicious traffic can effectively use computing resources on clients, reduce training time and memory usage, and achieve accuracy comparable to or even better than that of centralized detection methods. In the Non-IID data scenario, the dataset is partitioned using Dirichlet and log-normal distributions to systematically analyze the performance and applicability of the four aggregation algorithms. Moreover, a comparison with other deep neural networks based on federated learning reveals an accuracy improvement of 2.59–4.73%, confirming this framework’s advantages.

2. Related Work

With the development of the internet and continuous technological advancements, security issues such as malicious traffic have become increasingly severe. Many experts have proposed various methods for detecting malicious traffic. This paper divides the research into two categories: the current state of malicious traffic detection and the research on the application of federated learning in traffic detection.

2.1. Malicious Traffic

Traditional malicious traffic detection techniques primarily rely on feature matching and behavioral analysis. These methods depend on known signature databases or expert-defined rules to identify attacks by matching captured network traffic against known characteristics, as seen in intrusion detection systems (IDS) and firewalls. While these approaches are straightforward and achieve high accuracy in identifying known attacks, they are ineffective at detecting novel threats and incur high costs for feature labeling.

With the advancement of machine learning, researchers have begun applying these techniques to malicious traffic detection to improve accuracy and efficiency. Moore et al. [8] were among the first to combine network traffic statistical features with machine learning, using a supervised Multinomial Naive Bayes (MNB) classifier, which achieved a 50–70% improvement in accuracy compared to traditional statistical feature classification. Li et al. [9] proposed a semi-supervised Support Vector Machine (SVM) approach that requires only a few labeled samples to achieve results comparable to supervised methods. Fu et al. [10] developed an unsupervised real-time traffic detection system called HyperVision, which, for the first time, utilizes traffic interaction graphs to analyze connectivity, sparsity, and statistical features for detecting abnormal interaction patterns, thereby identifying unknown traffic as potentially malicious.

Deep learning techniques, leveraging neural networks for automatic feature learning, have further enhanced detection systems’ accuracy and generalization capabilities. Aldwairi et al. [11] designed a two-layer Restricted Boltzmann Machine (RBM) for network intrusion detection, achieving an accuracy of 89.7% in binary classification. Belarbi et al. [12] employed a Deep Belief Network (DBN) for multiclass testing on the CICIDS2017 dataset, reaching an accuracy of 94%. Li [13] proposed the AE-IDS system, which uses a three-layer auto-encoder combined with a random forest algorithm for feature selection, applies AP clustering for feature grouping, and utilizes K-means for anomaly detection, thereby reducing data dimensionality and improving detection accuracy. Wang et al. [14] were the first to apply Convolutional Neural Networks (CNNs) to malicious traffic detection by converting raw traffic data into grayscale images for input into a two-dimensional CNN, achieving an average accuracy of 99.41% on the USTC-TFC2016 dataset collected from natural environments. Cui et al. [15] compared the applications of CNNs and Recurrent Neural Networks (RNNs) in network traffic detection, concluding that CNNs perform best in binary classification tasks. Addressing the issue that a single deep learning model may not fully capture the features of raw traffic data, Li Xiangjun et al. [16] combined a CNN with Bidirectional Long Short-Term Memory (LSTM) networks to create a multi-model parallel fusion detection method, thereby improving detection accuracy. These seminal studies demonstrate that deep learning techniques offer advantages over traditional machine learning methods in malicious traffic detection. With their weight-sharing and dimensionality reduction capabilities, CNN-based detection models significantly enhance training efficiency and detection accuracy. Therefore, this paper selects a two-dimensional CNN as the detection training model for the client-side application.

While the methods above have achieved varying degrees of success in malicious traffic detection and classification, they rely on centralized data processing, which poses significant privacy concerns. Consequently, a key research focus has shifted toward ensuring data security while maintaining the effectiveness of detection methods.

2.2. Federated Learning for Malicious Traffic Detection

Deep learning methods are widely used in malicious traffic detection. Despite their high accuracy, the centralized processing of traffic data poses security risks related to privacy breaches. In 2016, McMahan [17] introduced federated learning, a technique designed to address privacy concerns in the “cloud-edge” paradigm. As a distributed learning method, federated learning has been applied to malicious traffic detection. By leveraging steps such as global model distribution, local model training, and uploading and aggregating model parameters, traffic data generators can collaboratively build a global malicious traffic detection model without sharing raw data, thus effectively safeguarding data privacy and security. Mothukuri et al. [18] proposed an FL-based anomaly detection method for identifying abnormal intrusions in the Internet of Things (IoT). Man et al. [19] developed a Federated Learning-assisted Convolutional Neural Network (FedACNN) mechanism for distributed anomaly traffic detection. Chen Hexiong et al. [20] introduced a collaborative anomaly traffic detection technique using FL, leveraging detection nodes in a Stochastic Gradient Descent (SGD) network to construct a multi-node collaborative detection framework. Zhang Shuaihua et al. [21] designed a semi-supervised FL model for malicious traffic detection, addressing the issue of performance degradation in the global model caused by differing data labels among participants. To tackle the challenge of reduced classification accuracy in heterogeneous environments, Gao et al. [22] proposed a Federated Analysis (FA)-assisted FL method, FEAT, which analyzes the skewness of parameter estimates from client uploads and uses Thompson sampling to select less-skewed data for global model aggregation, thereby enhancing classification accuracy. Sarhan et al. [23] proposed a federated learning-based collaborative network threat intelligence sharing scheme, where participants extract valuable information using a standardized network traffic data format. By adopting a federated learning approach, the scheme effectively identifies multiple types of malicious traffic while ensuring data security. Wang Wenyue [24] was the first to define the problem of federated continual learning in network traffic scenarios and proposed a personalized and sustainable FL algorithm. This algorithm mitigates the issue of catastrophic forgetting by aggregating weights based on the similarity of client models, ultimately improving the accuracy of malicious traffic detection.

3. FL-CNN-Traffic Framework

This section introduces FL-CNN-Traffic, a framework combining a CNN with federated learning for distributed malicious traffic detection. We provide a detailed explanation of each step involved in the framework.

3.1. Overview

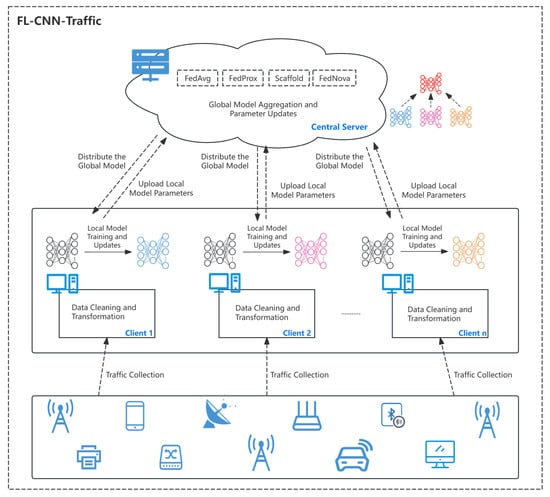

FL-CNN-Traffic is illustrated in Figure 1. Multiple edge clients collect traffic data from local devices and perform preprocessing operations such as data cleaning and transformation. The central server acts as a coordinator, randomly selecting clients to participate in training during each round and distributing the global model parameters from the previous round to these clients. The clients then train local models using their local data and return the updated model parameters to the server. The server aggregates and updates the global model, repeating the process of distributing updates and aggregating uploads until the global model converges.

Figure 1.

Federated learning-based distributed malicious traffic detection framework.

3.2. Edge Client Design

Due to individual devices’ limited storage resources and computational capabilities, handling large-scale data and tasks requiring intensive computation is challenging. For instance, during the training of a deep learning model, the device not only needs to store a large amount of training data but also requires powerful computational capabilities to complete complex mathematical operations like gradient descent, which is beyond the capacity of ordinary devices [25]. Therefore, tasks can be distributed across multiple edge devices, reducing storage and computational burdens [26]. These devices act as clients in federated learning, and each client needs to perform the following tasks:

- Data Collection and Preprocessing: Capture raw traffic data from devices, conduct data preprocessing and feature extraction, and use the processed data as local data for clients to use in subsequent model training.

- Global Model Reception: Receive the global model transmitted by the server and use it as the foundation for updating the local model.

- Local Model Training: Upon receiving the global model parameters sent by the server, initialize them as the local model parameters. Then, the model will be updated using the local dataset through optimization algorithms, such as stochastic gradient descent (SGD).

- Uploading Local Model Parameters: Return the updated model parameters to the server, where they will be aggregated to update the new global model. This process enables collaborative training across multiple clients without disclosing local traffic data.

In this paper, a CNN is employed as the local training detection model on the client side, with the structure shown in Figure 2. A CNN is a feedforward neural network commonly used for image recognition, primarily consisting of convolutional layers, pooling layers, and fully connected layers. The model designed in this paper consists of four convolutional layers and three fully connected layers. Each layer is optimized through batch normalization, ReLU activation functions, and max-pooling operations. Specifically, the preprocessed traffic data are first passed through the convolutional layers to extract features. The pooling layers then perform downsampling to reduce data dimensionality and computational complexity. Batch normalization accelerates the training process and enhances model stability, while the ReLU activation function introduces non-linearity, enabling the model to learn complex patterns. After multiple convolutional and pooling operations, the extracted feature maps are flattened and fed into the fully connected layers. ReLU functions and dropout layers mitigate the risk of overfitting and improve the model’s generalization ability. Finally, the feature vectors are mapped to the final class labels, thereby enabling the classification and detection of the input data.

Figure 2.

Structure of the convolutional neural network model.

3.3. Central Server Aggregation Design

The server acts as a coordinator, providing a trusted third party for the participating clients and aggregating model knowledge from different clients. Clients do not need to upload raw data, thus preserving user privacy while constructing an effective collaborative training model in a distributed environment. The server performs the following tasks:

- Global Model Initialization: The server randomly initializes the global model parameters to provide a starting point for the entire federated learning training process.

- Global Model Distribution: In the first round, the initialized global model is sent to the participating clients. In subsequent rounds of iteration, the server distributes the updated global model parameters to the participating training clients.

- Global Model Aggregation: To effectively aggregate the local traffic data knowledge from each client, the server receives the updated model parameters returned by the clients and performs aggregation according to various methods after each round of federated learning.
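The interplay between these server tasks and the client tasks of Section 3.2 can be sketched as a simple round loop. This is a toy illustration under stated assumptions, not the paper's Algorithm 1 or Algorithm 2: the model is a single scalar weight fitted by least squares, and the function names `local_train` and `run_rounds`, the learning rate, the sampling probability, and the toy data are all hypothetical.

```python
import random

def local_train(w_global, data, epochs=3, lr=0.1):
    """Client side: start from the global weight and run a few SGD epochs.

    Toy model (an assumption): y = w * x with squared-error loss.
    """
    w = w_global
    for _ in range(epochs):
        for x, y in data:
            grad = 2.0 * (w * x - y) * x  # d/dw of (w*x - y)^2
            w -= lr * grad
    return w

def run_rounds(client_data, rounds=50, sample_prob=0.5, seed=0):
    """Server side: initialise, distribute, collect, aggregate."""
    rng = random.Random(seed)
    w_global = rng.uniform(-1.0, 1.0)  # random global initialisation
    for _ in range(rounds):
        # Randomly select the clients that take part in this round.
        selected = [k for k in range(len(client_data))
                    if rng.random() < sample_prob]
        if not selected:
            continue  # nobody sampled: keep the previous global model
        # Each selected client trains locally and returns only its weight.
        updates = [(len(client_data[k]), local_train(w_global, client_data[k]))
                   for k in selected]
        # Aggregate with a data-size-weighted average (FedAvg-style).
        total = sum(n_k for n_k, _ in updates)
        w_global = sum(n_k * w_k for n_k, w_k in updates) / total
    return w_global

# Three toy clients whose private data all follow y = 2x.
clients = [[(x, 2.0 * x) for x in (0.5, 1.0)],
           [(x, 2.0 * x) for x in (0.2, 0.8, 1.2)],
           [(x, 2.0 * x) for x in (1.0,)]]
w_final = run_rounds(clients)
```

Only the scalar weight crosses the client boundary in this sketch; the `(x, y)` pairs never leave `local_train`, which mirrors the privacy argument made above.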

This paper employs four federated learning algorithms as aggregation methods for the server. A detailed analysis is conducted for each federated learning server aggregation and client update method. The related symbol explanations are provided in Table 1, following the parameter definition method in reference [27]. The processes of the central server and edge client are shown in Algorithm 1 and Algorithm 2, respectively.

Table 1.

List of symbols and descriptions.

Algorithm 1.

Implementation of central server.

Algorithm 2.

Implementation of edge client.

We define some terms used in the paper:

- Client parameters: the total number of clients n, the unique client index (Client ID) k, the number of samples per client, the number of local training epochs E, the learning rate, and the gradient of the local training loss function.

- Server parameters for the global model: the global model, the total number of communication rounds, and the control variate c for the aggregation algorithm.

3.3.1. FedAvg Weighted Average Aggregation

In FedAvg [17], the server receives model update parameters from clients in each iteration and aggregates them using a weighted average. The server first sends the current global model to the participating clients. The clients then initialize their local model parameters with this global model and train on their local data to compute updated model parameters. After receiving the clients’ updates, the server computes a weighted average, weighting each client by the ratio of its data size to the global data size n, to obtain the new global model parameters, as shown in Equation (1).
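In its standard form, the FedAvg aggregation rule is $w^{t+1} = \sum_{k} \frac{n_k}{n} w_k^{t+1}$, where $n_k$ is the data size of client $k$ and $n = \sum_k n_k$ is taken over the participating clients. The symbols here are assumed notation for illustration. A minimal sketch with parameter vectors as plain Python lists (the function name `fedavg` is hypothetical):

```python
def fedavg(client_weights, client_sizes):
    """Data-size-weighted average of client parameter vectors."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one sample count per client update")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(n_k * w_k[i] for w_k, n_k in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

# Two clients with 100 and 300 samples: the larger client counts three times as much.
w_new = fedavg([[1.0, 0.0], [3.0, 4.0]], [100, 300])
```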

3.3.2. FedProx Regularization Constraint Aggregation

In FedProx [28], a regularization term is added to FedAvg to address the degradation in model training quality caused by non-independent and identically distributed (Non-IID) data and by device heterogeneity. Like FedAvg, the server first distributes the global model to the clients participating in the training. The critical difference is that FedProx requires clients not only to minimize their local loss functions during training but also to account for the deviation between the local and global models. A regularization term is added to the local loss function to correct the model update direction, thereby constraining the local model updates with respect to the global model. This term limits the deviation between the local and global models, making the algorithm suitable for scenarios with significant data distribution differences or hardware performance inconsistencies. The client model update follows Equation (2), in which the regularization coefficient controls the penalty for the deviation between the global and local models. The server aggregation process remains consistent with that of FedAvg.
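In the FedProx paper [28], the client minimizes $F_k(w) + \frac{\mu}{2}\lVert w - w^{t} \rVert^2$, so one local gradient step becomes $w \leftarrow w - \eta\,(\nabla F_k(w) + \mu\,(w - w^{t}))$. The sketch below shows that single step. The symbols $\mu$, $\eta$, and $w^{t}$ follow the FedProx paper rather than Table 1, and the function name and default learning rate are assumptions.

```python
def fedprox_step(w, w_global, grad, mu=0.1, lr=0.01):
    """One local SGD step on F_k(w) + (mu / 2) * ||w - w_global||^2.

    `grad` is the gradient of the plain local loss F_k evaluated at `w`.
    """
    return [
        w_i - lr * (g_i + mu * (w_i - wg_i))
        for w_i, wg_i, g_i in zip(w, w_global, grad)
    ]

# With a zero loss gradient, the proximal term alone pulls w toward w_global.
w_next = fedprox_step(w=[1.0, -1.0], w_global=[0.0, 0.0], grad=[0.0, 0.0],
                      mu=0.1, lr=1.0)
```

Setting `mu=0` recovers the plain SGD step used by FedAvg clients, which is consistent with the observation that a small coefficient yields results close to FedAvg.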

3.3.3. Scaffold Control Variable Aggregation

Scaffold [29] reduces the bias in model updates by introducing a control variate mechanism. Initially, the server sends the global model parameters and the global control variate c to the participating clients. During local training, each client uses the difference between its local control variate and the global control variate c to adjust its model updates, resulting in the updated model parameters, as shown in Equation (3). The clients then upload the updated model parameters and control variates to the server. Next, the server updates the global control variate and performs a weighted average aggregation of the clients’ model parameters to obtain the new global model parameters. The initial control variates share the same structure as the global model parameters, facilitating consistent adjustment and control throughout the training process.
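For reference, the control variate updates of the Scaffold paper [29] can be written as follows. The notation is assumed for this illustration and is not taken from Table 1: $w^{t}$ is the global model, $w_k$ the local model of client $k$, $c_k$ its local control variate, $g_k$ its mini-batch gradient, $\eta$ the local learning rate, $K$ the number of local steps, $N$ the total number of clients, and $S$ the set of participating clients. The second line is one of the two options that paper gives for refreshing the local control variate.

```latex
\begin{aligned}
w_k &\leftarrow w_k - \eta\,\bigl(g_k(w_k) - c_k + c\bigr), \\
c_k^{+} &= c_k - c + \frac{1}{K\eta}\,\bigl(w^{t} - w_k\bigr), \\
c &\leftarrow c + \frac{1}{N}\sum_{k \in S}\bigl(c_k^{+} - c_k\bigr).
\end{aligned}
```

The correction $-c_k + c$ in the first line is what steers each local step away from the client's own drift and toward the average update direction.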

3.3.4. FedNova Normalized Averaging Aggregation

FedNova [30] employs a normalized averaging method to mitigate the objective inconsistency caused by Non-IID data and device heterogeneity while maintaining a fast convergence rate. Clients update their local model parameters during local training based on their local training rounds and data. Due to differences in the computational capabilities of clients, the number of local training iterations may vary; the server first normalizes these updates upon receiving the model parameters from the clients to eliminate the effects of varying training rounds across clients. Then, the server adjusts the updates based on the clients’ computational loads, ensuring that each client’s contribution to the global model corresponds to its resource capacity. Finally, the server aggregates the updates to generate a new global model, as shown in Equation (4).
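The normalization step can be sketched as follows for clients that run plain local SGD. Following the FedNova paper [30], each client's cumulative change is divided by its number of local steps $\tau_k$, and the weighted mean is rescaled by the effective step count $\tau_{\mathrm{eff}} = \sum_k p_k \tau_k$ with $p_k = n_k / n$. The symbols and the function name `fednova` are assumptions for illustration, and the paper's Equation (4) may be written differently.

```python
def fednova(w_global, client_weights, client_sizes, client_steps):
    """FedNova aggregation for clients that ran plain local SGD."""
    total = sum(client_sizes)
    p = [n_k / total for n_k in client_sizes]
    # Effective number of local steps across the participating clients.
    tau_eff = sum(p_k * tau_k for p_k, tau_k in zip(p, client_steps))
    new_w = []
    for i, wg_i in enumerate(w_global):
        # Weighted mean of per-step (normalised) client changes.
        d_i = sum(
            p_k * (wg_i - w_k[i]) / tau_k
            for p_k, w_k, tau_k in zip(p, client_weights, client_steps)
        )
        new_w.append(wg_i - tau_eff * d_i)
    return new_w

# Equal data sizes, but the second client ran four times as many local steps.
w_new = fednova([0.0], [[2.0], [4.0]], client_sizes=[50, 50], client_steps=[1, 4])
```

In this toy case a plain average would give 3.0, while the normalized rule gives 3.75, because the client that took four steps no longer dominates the direction of the update. When all clients take the same number of steps, the rule reduces to the FedAvg weighted average.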

4. Experiments

4.1. Experimental Setup

4.1.1. Experimental Environment

The experiments were conducted on a system with an Intel(R) Core(TM) i7-12700H 2.30 GHz processor, 16 GB of memory, and the Windows 11 operating system. The code was written in Python 3.9, with primary libraries including NumPy 1.21.5, Pandas 1.2.5, and PyTorch 1.8.1.

4.1.2. Dataset Processing

The dataset used in this study is the USTC-TFC2016 dataset, which includes ten types of malicious traffic collected by CTU researchers from real-world network environments between 2011 and 2015, as well as normal traffic collected using IXIA BPS equipment [14], resulting in eleven classification categories. The details are shown in Table 2.

Table 2.

List of traffic types and corresponding counts.

In this paper, each PCAP file is split at the flow level. To effectively extract and represent traffic features while ensuring compatibility with the constructed convolutional neural network model, the first 784 bytes of each flow are used as feature inputs. This decision is based on several considerations. First, the initial portion of a flow contains critical information for establishing connections and initiating sessions, which is essential for identifying traffic types. Second, the first 784 bytes typically include sufficient application-layer data to reflect the flow’s behavior and characteristics. Lastly, selecting a fixed byte length as the model input standardizes the data format, simplifies the data processing workflow, and enhances the efficiency of model training and testing.

To further enhance data representation, a visualization approach is employed: each byte value is mapped to a grayscale pixel, transforming the raw network traffic data into a 28 × 28 two-dimensional grayscale image (784 bytes). On the one hand, this image format transforms the temporal and spatial correlation features inherent in the original traffic data into spatial distributions of pixels. The information conveyed by brightness and texture can demonstrate the relationships within traffic data and reveal its structures and patterns in a more intuitive manner. Additionally, benign and malicious traffic patterns produce different visual features in an image, which aids in identifying abnormal traffic. On the other hand, such images leverage the strengths of CNNs in capturing spatial hierarchical structures and patterns. Through convolutional operations, local features in an image can be extracted efficiently, and complex feature combinations can be learned layer by layer. Compared to tabular data, such an image format can not only represent traffic features at different scales but also reduce the reliance on manual feature engineering, simplifying preprocessing and improving efficiency. As shown in Figure 3, the grayscale images include both benign traffic and malicious traffic. The processed dataset is divided into 80% for model training and 20% for model testing.

Figure 3.

Traffic grayscale image showing (a) Benign Traffic and (b) Malicious Traffic.
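The byte-to-pixel mapping described above can be sketched in a few lines. This is a minimal illustration, assuming that flows shorter than 784 bytes are zero-padded (the text does not state how short flows are handled); the function name `flow_to_image` is hypothetical.

```python
def flow_to_image(flow_bytes, side=28):
    """Map the first side*side bytes of a flow to a side x side grayscale grid.

    Flows shorter than side*side bytes are zero-padded (an assumption).
    Each pixel is an integer in 0..255, one per byte.
    """
    n = side * side
    head = bytes(flow_bytes[:n]).ljust(n, b"\x00")
    return [list(head[r * side:(r + 1) * side]) for r in range(side)]

# A toy 203-byte "flow": three header-like bytes followed by a byte ramp.
image = flow_to_image(b"\x16\x03\x01" + bytes(range(200)))
```

The result is a list of 28 rows with 28 pixel values each, which can be converted to a tensor of shape 1 × 28 × 28 before being fed to a two-dimensional CNN.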

4.2. Evaluation Metrics

The evaluation metrics used in this experiment include Accuracy, Precision, Recall, and F1. True Positive (TP) refers to the number of malicious traffic samples correctly identified in the test set. False Positive (FP) denotes the number of benign traffic samples that were incorrectly classified as malicious. True Negative (TN) is the count of benign traffic samples correctly identified in the test set. False Negative (FN) represents the number of malicious traffic samples that were incorrectly classified as benign in the test set, as shown in Table 3.

Table 3.

Model evaluation metrics.
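The four metrics follow the usual definitions: Accuracy = (TP + TN) / (TP + TN + FP + FN), Precision = TP / (TP + FP), Recall = TP / (TP + FN), and F1 = 2 · Precision · Recall / (Precision + Recall). A minimal sketch that computes them from label lists is given below; the function name, the label strings, and the convention of returning 0.0 for an undefined ratio are assumptions.

```python
def binary_metrics(y_true, y_pred, positive="malicious"):
    """Accuracy, precision, recall and F1 from two equal-length label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

truth = ["malicious", "malicious", "benign", "benign", "malicious"]
preds = ["malicious", "benign", "benign", "malicious", "malicious"]
scores = binary_metrics(truth, preds)
```

For the eleven-class setting, the same function can be applied once per category by passing that category as `positive`, which treats all other categories as negatives.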

4.3. Performance Comparison Between Centralized and Federated Learning for Traffic Classification

This paper aims to validate the applicability of federated learning for malicious traffic detection and classification by comparing centralized and federated distributed models under the assumption of IID data. Table 4 presents the results of centralized training for traffic classification detection, while Table 5 displays the outcomes for federated learning-based distributed malicious traffic classification. IID means that each client’s traffic data samples are independently generated, and the traffic categories and labels share the same statistical properties and distribution patterns.

Table 4.

Centralized malicious traffic classification performance.

Table 5.

Distributed malicious traffic classification performance.

Table 4 and Table 5 show that centralized classification detection provides ideal training results. Under IID data, however, distributed federated learning achieves results comparable to centralized training for each category and, in some cases, even outperforms centralized detection for specific categories. This indicates that relying solely on centralized data for detection has limitations; centralized detection is also prone to data privacy breaches. In contrast, federated learning protects data privacy and integrates knowledge from multiple sources, achieving results comparable to centralized classification. This demonstrates the necessity and effectiveness of federated learning in malicious traffic detection.

Additionally, a comparison of training time and memory usage between centralized and distributed training modes was conducted, with the results shown in Figure 4. Centralized training demands longer training time and greater memory capacity, whereas distributed training requires relatively less memory and reduced training time. The results demonstrate that distributed training can utilize computational resources more efficiently. By leveraging parallel processing across multiple clients, training time is significantly reduced. Furthermore, because data and model parameters are distributed across various device nodes, the memory burden on individual devices is notably alleviated. This finding further supports the feasibility of distributed methods for malicious traffic detection.

Figure 4.

Comparison of (a) Training Time and (b) Memory Space under different training modes.

4.4. Performance of the FL-CNN-Traffic

4.4.1. Non-IID Traffic Scenario Segmentation

In the previous experiment, we validated the effectiveness of applying federated learning to malicious traffic detection under the assumption of IID data. However, in real-world network environments, clients often experience varying conditions, with discrepancies in the types and amounts of traffic they handle. These differences can impact federated learning models’ performance and generalization capabilities. To better simulate this scenario, the experiment in this section employs a combination of Dirichlet and log-normal distributions to partition the data in a Non-IID manner, thus creating a more complex and diverse distributed traffic detection environment.

Class Imbalance: A Dirichlet distribution is used to divide the data categories among clients, with its probability density function shown in Equation (5). The concentration parameter, chosen from the range 0–1, controls the degree of divergence in category distribution across clients. A smaller value indicates a greater disparity in the distribution of the same data category among clients. In this experiment, the parameter is set to 0.6.
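One common way to realise such a class-wise Dirichlet split is sketched below using only the standard library. It is an illustration under assumptions, not the paper's partitioning code: the symbol `alpha` for the concentration parameter, the function name, and the cut-point rounding are all hypothetical choices.

```python
import random

def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """Split sample indices across clients, class by class.

    For every class, client shares are drawn from Dirichlet(alpha, ..., alpha);
    a smaller alpha gives more skewed shares.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    clients = [[] for _ in range(num_clients)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # A Dirichlet draw is a vector of Gamma(alpha, 1) draws, normalised.
        gammas = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(gammas)
        # Cumulative shares give the cut points within this class.
        start, cumulative = 0, 0.0
        for k, g in enumerate(gammas):
            cumulative += g / total
            if k == num_clients - 1:
                end = len(indices)
            else:
                end = round(cumulative * len(indices))
            clients[k].extend(indices[start:end])
            start = end
    return clients

labels = [i % 11 for i in range(1100)]  # eleven toy classes, 100 samples each
parts = dirichlet_partition(labels, num_clients=20, alpha=0.6)
```

Every sample index is assigned to exactly one client, while the per-class counts differ from client to client.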

Data Imbalance: The log-normal distribution is employed to create disparities in client data volumes. The imbalance parameter is the standard deviation of the underlying normal distribution. When it is 0, each client receives an equal number of samples. Larger values indicate more significant differences in client data volumes. Given the total data n and the number of clients k, the mean of the normal distribution is calculated, and samples are drawn from the log-normal distribution to determine the data volume for each client, as shown in Equation (6). In this experiment, the imbalance parameter is set to 0.5. Figure 5 shows the distribution of data among clients, where each Client ID represents a unique client, and different colors denote different traffic categories. The length of each colored segment reflects the quantity within each category. The figure shows that the traffic categories and quantities vary across clients, effectively simulating the Non-IID data scenario.

Figure 5.

View of Non-IID partitioning of traffic data.
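The data-volume imbalance described above can be sketched as follows. This is a hedged illustration of one common approach: the mean of the underlying normal distribution is taken as the logarithm of the average client size, and the draws are rescaled so that they sum to the total. The rescaling, the remainder handling, and the function name are assumptions, and Equation (6) may differ in detail.

```python
import math
import random

def lognormal_client_sizes(total, num_clients, sigma, seed=0):
    """Draw per-client sample counts that sum to `total`.

    sigma == 0 gives equal sizes; a larger sigma spreads the sizes out.
    """
    rng = random.Random(seed)
    mean = math.log(total / num_clients)  # log of the average client size
    if sigma > 0:
        draws = [rng.lognormvariate(mean, sigma) for _ in range(num_clients)]
    else:
        draws = [math.exp(mean)] * num_clients
    scale = total / sum(draws)
    sizes = [int(d * scale) for d in draws]
    # Hand the samples lost to rounding down to the first few clients.
    for i in range(total - sum(sizes)):
        sizes[i % num_clients] += 1
    return sizes

sizes = lognormal_client_sizes(total=10_000, num_clients=20, sigma=0.5)
```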

4.4.2. Performance of the FL-CNN-Traffic

The experiment involves 20 clients, each using the CNN from Section 3.2 as its local training model. The server employs a binomial distribution to randomly sample each client, determining whether it will participate in the current training round. If the sampling result is 1, the client is selected and added to the list of participating clients, while its local data size is recorded for global model aggregation. To ensure fairness in the experiment, the server consistently updates the parameters over 100 rounds, and each client communicates with the server after three local training updates. The regularization term of FedProx is set to 0.1, and the global and local control variables of Scaffold are initialized as zero matrices with the same structure as the global model.
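The per-round client selection can be sketched as one Bernoulli trial per client. The participation probability is not stated in the text, so the value 0.5 below is purely an assumption, as are the function name and the toy data sizes.

```python
import random

def sample_clients(client_sizes, participation_prob, rng):
    """Run one Bernoulli trial per client; keep those that draw a 1.

    Returns the selected client IDs and their local data sizes, which the
    server later uses as aggregation weights.
    """
    selected, sizes = [], []
    for client_id, n_k in enumerate(client_sizes):
        draw = 1 if rng.random() < participation_prob else 0
        if draw == 1:
            selected.append(client_id)
            sizes.append(n_k)
    return selected, sizes

rng = random.Random(42)
client_sizes = [500] * 20          # 20 clients, toy data sizes
for round_idx in range(100):       # 100 communication rounds
    selected, sizes = sample_clients(client_sizes, 0.5, rng)
```

Because the number of participants varies from round to round, a round may involve only a few clients, which is relevant to the Scaffold behaviour discussed below.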

Table 6 presents the performance of FL-CNN-Traffic under various aggregation methods. FedAvg obtains the global model by averaging the parameters from client models. Although this method is straightforward, the significant differences in network traffic data distributions across clients can cause the model to be influenced by a few extreme values, leading to inadequate generalization. FedProx introduces a regularization term to limit the magnitude of client model updates, alleviating training instability caused by imbalanced data distribution and varying computational capabilities. Although this method improves the robustness of model aggregation, the performance gains are limited due to the relatively small regularization term used in the experiment, resulting in accuracy levels close to those of FedAvg.

Table 6.

FL-CNN-Traffic malicious traffic detection performance.
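To make the difference between FedAvg and FedProx concrete, the sketch below shows a data-size-weighted parameter average and a single FedProx local step. It is a simplified illustration, not the paper's code: parameters are flat Python lists rather than CNN tensors, the function names are hypothetical, and weighting by local data size is assumed from the statement that client data sizes are recorded for aggregation.

```python
def fedavg(client_params, client_sizes):
    """Average client parameter vectors, weighted by local data size."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(n * params[j] for params, n in zip(client_params, client_sizes)) / total
        for j in range(dim)
    ]


def fedprox_step(w, w_global, grad, lr, mu=0.1):
    """One local gradient step on loss(w) + (mu / 2) * ||w - w_global||^2.

    The extra term mu * (w - w_global) pulls the local model back toward
    the global model; mu = 0.1 mirrors the value used in the experiment.
    """
    return [
        wi - lr * (gi + mu * (wi - wgi))
        for wi, wgi, gi in zip(w, w_global, grad)
    ]


# Two hypothetical clients holding 1 and 3 samples.
print(fedavg([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
# With a zero loss gradient, only the proximal pull moves the weights.
print(fedprox_step([1.0], [0.0], [0.0], lr=0.5))
```

With a small `mu`, the proximal pull is weak compared with the loss gradient, which is consistent with FedProx behaving much like FedAvg in this setting.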

The dynamic and diverse nature of network traffic data results in substantial discrepancies in model update directions across clients. Although Scaffold is designed to reduce this deviation through control variables, it performs the worst in this experiment. This poor performance may be attributed to the limited number of clients randomly selected in each communication round, which impairs the precision of the local update corrections estimated from the difference between client and server control variables.
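The control-variable mechanism can be sketched as follows, using the update rules from the Scaffold paper (its second option for refreshing the client control variable). This is a hedged illustration with flat parameter lists and hypothetical function names, not the experiment's code. The example at the end shows one possible reading of the explanation above: when only one of 20 clients participates, the server control variable moves by just 1/20 of that client's change.

```python
def scaffold_local_update(x, c_global, c_local, grad_fn, lr, local_steps=3):
    """Run corrected local steps and refresh the client control variable."""
    y = list(x)
    for _ in range(local_steps):
        g = grad_fn(y)
        # Corrected step: subtract the client drift estimate (c_local - c_global).
        y = [
            yi - lr * (gi - cli + cgi)
            for yi, gi, cli, cgi in zip(y, g, c_local, c_global)
        ]
    c_new = [
        cli - cgi + (xi - yi) / (local_steps * lr)
        for cli, cgi, xi, yi in zip(c_local, c_global, x, y)
    ]
    return y, c_new


def scaffold_server_update(x, c_global, results, old_controls, num_clients,
                           server_lr=1.0):
    """Aggregate (model, control) pairs from the sampled clients."""
    m = len(results)
    new_x, new_c = [], []
    for j in range(len(x)):
        dy = sum(y[j] - x[j] for y, _ in results) / m
        # The control change is averaged over ALL clients, not just sampled ones.
        dc = sum(
            c_new[j] - c_old[j]
            for (_, c_new), c_old in zip(results, old_controls)
        ) / num_clients
        new_x.append(x[j] + server_lr * dy)
        new_c.append(c_global[j] + dc)
    return new_x, new_c


# Hypothetical round: one of 20 clients participates, constant gradient of 1.
y, c_new = scaffold_local_update([1.0], [0.0], [0.0], lambda w: [1.0], lr=0.1)
new_x, new_c = scaffold_server_update([1.0], [0.0], [(y, c_new)], [[0.0]], 20)
print(new_x, new_c)
```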

FedNova improves upon this by normalizing client updates, which addresses the issue of update direction bias and significantly enhances model robustness on heterogeneous traffic data. Experimental results demonstrate that FedNova performs best across all metrics, effectively handling the complexities and distributional imbalances inherent in malicious traffic detection tasks. Although imbalances in traffic data distribution still impact detection performance, the algorithm holds significant practical value in network security.
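The normalization step can be sketched for plain local SGD, where each client's accumulated change is divided by its number of local steps before averaging. This is a minimal sketch with flat parameter lists; the function name `fednova_aggregate` and the toy numbers are assumptions, and the paper's actual configuration (for example, how many gradient steps each client runs) is not reproduced here.

```python
def fednova_aggregate(x, client_models, client_steps, client_sizes):
    """FedNova-style aggregation for vanilla local SGD.

    Each client's total change (x - y_i) is normalized by its local step
    count tau_i, the normalized changes are averaged with data-size
    weights p_i, and the result is rescaled by the effective step count
    tau_eff = sum(p_i * tau_i).
    """
    total = sum(client_sizes)
    p = [n / total for n in client_sizes]
    tau_eff = sum(pi * tau for pi, tau in zip(p, client_steps))
    new_x = []
    for j in range(len(x)):
        d = sum(
            pi * (x[j] - y[j]) / tau
            for pi, y, tau in zip(p, client_models, client_steps)
        )
        new_x.append(x[j] - tau_eff * d)
    return new_x


# Hypothetical round: client 0 took 2 local steps, client 1 took 4.
print(fednova_aggregate([0.0], [[-2.0], [-8.0]], [2, 4], [1, 1]))
```

In this toy case a plain average of the two client models would give -5.0, because the client with more local steps contributes a larger raw change. The normalized rule weights each client's per-step change instead, which is the bias correction the paragraph describes.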

4.5. Comparison of Different Neural Networks Combined with Federated Learning for Malicious Traffic Detection

In this section, we compare the malicious traffic detection performance of the proposed framework integrated with three different neural networks (KNN, MLP, and LeNet) within a federated learning setting, using the same parameters as those in Section 4.3. The experimental results are presented in Figure 6.

Figure 6.

The performance of different neural networks combined with federated learning aggregation methods.

The proposed Federated Learning-based Distributed Malicious Traffic Detection Framework outperforms other neural network models combined with federated learning in all evaluated metrics. The framework achieves an accuracy improvement of 2.59% to 4.73%, a precision increase of 3.75% to 5.61%, and an F1-score enhancement of 4.51% to 6.57% compared to alternative models. These results indicate that the proposed framework more effectively differentiates between malicious and benign traffic, reducing misclassification significantly, lowering the false positive rate, and enhancing the ability to capture malicious traffic, thereby improving overall performance.

5. Conclusions

This paper proposes a distributed malicious traffic detection framework based on federated learning (FL) to address the challenges of traditional centralized malicious traffic detection, such as difficult data collection, heavy computational complexity, and privacy risks. In this framework, each client uses a CNN as its local model to extract features of malicious traffic and perform local training, and the server builds the global model using different aggregation methods. Experimental results indicate that, on IID data, the proposed framework achieves performance comparable to or exceeding that of centralized malicious traffic detection methods. The effectiveness of the framework is also validated on Non-IID data: compared with other neural networks combined with federated learning, the proposed method improves accuracy by 2.59% to 4.73% and F1-score by 4.51% to 6.57%.

However, this framework still has limitations. Although multiple aggregation algorithms are used to optimize performance, the framework remains affected by differences in data distribution, which leads to a slight decline in overall detection accuracy. Furthermore, the framework uses only a CNN as the local detection model on clients, and other neural networks suited to different traffic features still need to be validated. Future research could adopt different deep learning models on different clients to capture local network traffic features more precisely. Personalized processing strategies could also be developed to address the heterogeneity of client traffic data. In this way, the collaboratively trained global model could better match the traffic features of individual clients and further improve detection performance.

Author Contributions

Conceptualization, Y.L. and Z.W.; methodology, Y.L. and S.P.; software, Y.L.; validation, Y.L., Z.W. and S.P.; formal analysis, Y.L. and S.P.; investigation, Y.L.; resources, Z.W.; data curation, Y.L.; writing—original draft preparation, Y.L. and S.P.; writing—review and editing, Z.W. and L.J.; visualization, Z.W.; supervision, L.J. and Z.W.; project administration, L.J.; funding acquisition, Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Fundamental Research Funds for the Central Universities (Grant Nos. 3282024050 and 3282024021) and the key field science and technology plan project of Yunnan Province Science and Technology Department (Grant No. 202402AD080004).

Data Availability Statement

Data supporting the results of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| FL-CNN-Traffic | Federated Learning-based Distributed Malicious Traffic Detection Framework |
| FL | Federated Learning |
| CNN | Convolutional Neural Network |
| IID | Independent and Identically Distributed |
| Non-IID | Non-Independent and Identically Distributed |

References

- Jayaraman, B.; Thai, M.T.N.T.; Anand, A.; Anandan, K.R. Detecting malicious IoT traffic using machine learning techniques. Rom. J. Inf. Technol. Autom. Control 2023, 33, 47–58.

- Chen, F.; Li, P.; Miyazaki, T. In-network aggregation for privacy-preserving federated learning. In Proceedings of the 2021 International Conference on Information and Communication Technologies for Disaster Management (ICT-DM), Hangzhou, China, 3–5 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 49–56.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated learning for internet of things: A comprehensive survey. IEEE Commun. Surv. Tutorials 2021, 23, 1622–1658.

- Chen, M.; Shlezinger, N.; Poor, H.V.; Eldar, Y.C.; Cui, S. Communication-efficient federated learning. Proc. Natl. Acad. Sci. USA 2021, 118, e2024789118.

- Li, L.; Fan, Y.; Tse, M.; Lin, K.Y. A review of applications in federated learning. Comput. Ind. Eng. 2020, 149, 106854.

- Lu, W.; Zhang, X. Based on federated learning and convolutional neural networks intrusion detection model. J. Inf. Secur. Res. 2024, 10, 642.

- Yin, Z.W. Design and Implementation of a Distributed Network Security Knowledge Update Mechanism Based on Federated Learning. Master’s Thesis, Beijing Jiaotong University, Beijing, China, 2023.

- Moore, A.W.; Zuev, D. Internet traffic classification using bayesian analysis techniques. In Proceedings of the 2005 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems, Banff, AB, Canada, 6–10 June 2005; pp. 50–60.

- Li, X.; Qi, F.; Xu, D.; Qiu, X.S. An Internet traffic classification method based on semi-supervised support vector machine. In Proceedings of the 2011 IEEE International Conference on Communications (ICC), Kyoto, Japan, 5–9 June 2011; pp. 1–5.

- Fu, C.; Li, Q.; Xu, K. Detecting unknown encrypted malicious traffic in real time via flow interaction graph analysis. arXiv 2023, arXiv:2301.13686.

- Aldwairi, T.; Perera, D.; Novotny, M.A. An evaluation of the performance of Restricted Boltzmann Machines as a model for anomaly network intrusion detection. Comput. Netw. 2018, 144, 111–119.

- Belarbi, O.; Khan, A.; Carnelli, P.; Spyridopoulos, T. An intrusion detection system based on deep belief networks. In Proceedings of the International Conference on Science of Cyber Security, Cham, Switzerland, 15–17 August 2022; Springer International Publishing: Cham, Switzerland, 2022; pp. 377–392.

- Li, X. Research and Implementation of an Intrusion Detection System Based on Autoencoders. Master’s Thesis, Nanjing University of Posts and Telecommunications, Nanjing, China, 2020.

- Wang, W.; Zhu, M.; Zeng, X.; Ye, X. Malware traffic classification using convolutional neural network for representation learning. In Proceedings of the 2017 International Conference on Information Networking (ICOIN), Da Nang, Vietnam, 11–13 January 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 712–717.

- Cui, J.; Long, J.; Min, E.; Liu, Q.; Li, Q. Comparative study of CNN and RNN for deep learning based intrusion detection system. In Proceedings of the Cloud Computing and Security: 4th International Conference, ICCCS 2018, Haikou, China, 8–10 June 2018; Revised Selected Papers, Part V. Springer International Publishing: Cham, Switzerland, 2018; pp. 159–170.

- Li, X.; Wang, J.; Wang, S.; Chen, J.; Sun, J.; Wang, J. A malicious traffic detection method based on multi-model parallel fusion network. J. Comput. Appl. 2023, 43 (Suppl. S2), 122–129.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Artificial Intelligence and Statistics; PMLR: New York, NY, USA, 2017; pp. 1273–1282.

- Mothukuri, V.; Khare, P.; Parizi, R.M.; Pouriyeh, S.; Dehghantanha, A.; Srivastava, G. Federated-learning-based anomaly detection for IoT security attacks. IEEE Internet Things J. 2021, 9, 2545–2554.

- Man, D.; Zeng, F.; Yang, W.; Yu, M.; Lv, J.; Wang, Y. Intelligent intrusion detection based on federated learning for edge-assisted Internet of Things. Secur. Commun. Netw. 2021, 2021, 9361348.

- Chen, H.; Luo, Y.; Xie, L.; Zhang, Z.; Luo, Z.; Guo, W. Collaborative Detection Technology of SDN Abnormal Traffic Based on Federated Learning. Comput. Eng. 2023, 49, 168–176.

- Zhang, S.; Zhang, S.; Zhou, M.; Xu, C.; Chen, X. Malicious traffic detection model based on semi-supervised federated learning. J. Comput. Appl. 2024, 11, 3487–3494.

- Guo, Y.; Wang, D. Feat: A federated approach for privacy-preserving network traffic classification in heterogeneous environments. IEEE Internet Things J. 2022, 10, 1274–1285.

- Sarhan, M.; Layeghy, S.; Moustafa, N.; Portmann, M. Cyber threat intelligence sharing scheme based on federated learning for network intrusion detection. J. Netw. Syst. Manag. 2023, 31, 3.

- Wang, W. Research on Malicious Traffic Detection Methods for the Internet of Things Based on Federated Learning. Master’s Thesis, Jinan University, Guangzhou, China, 2023.

- Bonawitz, K. Towards federated learning at scale: System design. arXiv 2019, arXiv:1902.01046.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Li, H.; Cao, H.; Feng, Y.; Li, X.; Pei, J. Optimization of Graph Clustering Inspired by Dynamic Belief Systems. IEEE Trans. Knowl. Data Eng. 2023, 36, 6773–6785.

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450.

- Karimireddy, S.P.; Kale, S.; Mohri, M.; Reddi, S.; Stich, S.; Suresh, A.T. Scaffold: Stochastic controlled averaging for federated learning. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; PMLR: New York, NY, USA, 2020; pp. 5132–5143.

- Wang, J.; Liu, Q.; Liang, H.; Joshi, G.; Poor, H.V. Tackling the objective inconsistency problem in heterogeneous federated optimization. Adv. Neural Inf. Process. Syst. 2020, 33, 7611–7622.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).