Abstract

Recently, deep-learning-based low-light image enhancement (LLIE) methods have made great progress. Benefiting from elaborately designed model architectures, these methods enjoy considerable performance gains. However, their generalizability may be weak, and they risk overfitting when training data are insufficient. At the same time, their complex model design brings serious computational burdens. To further improve performance, we exploit dual information, including spatial and channel (contextual) information, in the high-dimensional feature space. Specifically, we introduce customized spatial and channel blocks according to the feature differences between layers. In shallow layers, the feature resolution is close to that of the original input image, and the spatial information is well preserved. Therefore, the spatial restoration block is designed to leverage such precise spatial information to achieve better spatial restoration, e.g., revealing the textures and suppressing the noise in the dark. In deep layers, the features contain abundant contextual information, which is distributed across various channels. Hence, the channel interaction block is incorporated for better feature interaction, resulting in stronger model representation capability. Our method combines a U-Net-like model with the customized spatial and channel blocks, and thereby effectively utilizes dual information for image enhancement. Through extensive experiments, we demonstrate that our method, despite its simplicity of design, can provide advanced or competitive performance compared to some state-of-the-art deep learning-based methods.

1. Introduction

Low-light image enhancement (LLIE) is a key image processing task that has received considerable attention from researchers in recent years. It involves the enhancement of images captured under poor lighting conditions, where the image quality is affected by inappropriate exposure, noise, blurring, color cast, and low contrast. As a critical step, LLIE can benefit many applications, such as night photography, autonomous driving, and underwater imaging [1,2,3]. In night photography, it can improve the photo quality and provide more creative space for photographers. In autonomous driving systems, LLIE can improve vehicle perception at night or under adverse weather conditions, enhancing road safety. In the underwater environment, LLIE can resolve common issues such as blurred details, low contrast, color distortion, poor clarity, and uneven illumination. In short, LLIE is of wide significance in numerous applications.

Traditional image processing methods, such as histogram equalization [4], gamma correction [5], and Retinex models [6,7], can be applied to the LLIE task, yet they show limited performance because their simple handcrafted designs or models cannot handle complex image quality degradation.

In recent years, deep learning techniques, especially convolutional neural networks (CNNs), have become an indispensable tool for LLIE [8]. In the data-driven manner, these methods either learn image-to-image mappings [9] directly or incorporate prior information (Retinex model [10,11,12] or structure information [13], etc.) to guide their architecture designs. Recent model design advances can be classified into two types, including interblock design and intrablock design. A typical example of the former is that of Retinex-related methods [11], where multiple subnetworks are employed to accomplish Retinex decomposition, illumination adjustment, and reflectance restoration. The same goes for multistage networks [14] and deep unfolding networks [15]. By such interblock design, they could decouple or separate the problem into multiple stages to better address each subproblem, which is considered to be simpler. Differently, the intrablock design includes various attention mechanisms, structure information exploitation [13], semantic information guidance, and so on. However, these recent methods have a tendency to become increasingly complex. Although carefully designed architectures and advanced tricks have brought some performance gains, these methods may not guarantee good generalizability and efficiency. Their complex designs may also discourage researchers from analyzing, validating, and comparing these methods [16]. The results of several typical low-light image enhancement methods are shown in Figure 1. It can be seen that some large model methods, such as EnlightenGAN and KinD++, may not work well. To resolve those issues, this paper designs a simple but effective network architecture based on dual information.

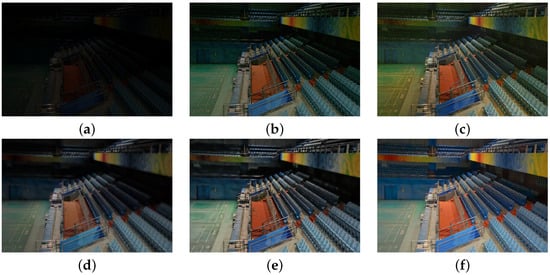

Figure 1.

Results of several typical low-light image enhancement methods. (a) Input, (b) Zero-DCE [17], (c) EnlightenGAN [18], (d) KinD [11], (e) KinD++ [19], (f) ours.

In this paper, we propose to utilize dual information, i.e., spatial and channel information, to construct a simple yet effective network for better low-light image enhancement. To achieve this goal, we perform different operations in different layers of the model based on the feature properties in order to fully capitalize on the different attention mechanisms. Specifically, in the shallow layers (in this paper, we refer to the layers that are close to the inputs and outputs as shallow layers, whereas the layers in the middle are referred to as deep layers due to the U-shape), features have not been excessively downsampled at the encoder, or have been adequately upsampled at the decoder, so that their resolution is similar to that of the original input. Such features contain relatively comprehensive and accurate spatial information, such as fine texture and edges in the image. Therefore, we introduce the proposed spatial restoration block into these layers to guide the model in recovering weak details hidden in the dark as well as suppressing noise. Note that these operations in shallow layers are highly correlated with spatial information. In contrast, in the deep layers, the features are spatially compacted while the channels are sufficiently expanded. Plenty of information about the image, such as color and brightness, is embedded and distributed in these channels. Therefore, the designed channel interaction block is incorporated for feature interaction in the channel domain to better cope with global attribute-related subproblems, such as brightness and color correction, thus enhancing the model representation capability. Finally, following [16], we apply the above motivation to a fairly common U-shaped network structure to construct our method. Despite its simple design, our method exploits the dual information effectively and achieves better enhancement performance than some state-of-the-art methods, such as multistage networks. Our contributions can be summarized as follows.

- We construct a dual information-based network for low-light image enhancement which makes effective use of spatial and channel (contextual) information, providing compelling enhancement performance.

- We propose to perform different operations for features with different properties based on designed spatial and channel blocks for better exploiting dual information.

- Our proposed method is simple but effective, which introduces two simple and lightweight designs on the basis of U-Net, achieving competitive performance.

- Extensive experiments validate that our method could offer advanced or competitive performance compared to some state-of-the-art methods.

2. Related Works

Deep Learning-Based Low-Light Image Enhancement: Although early traditional methods such as histogram-based and Retinex model-based methods have made great progress, they are not sufficiently generalizable due to their handcrafted priors or rules. In a data-driven manner, deep learning with its powerful learning capabilities has become a mainstream tool in the LLIE field. LLNet [9] is a pioneer in deep learning applications for low-light image enhancement, using stacked sparse denoising autoencoders to build a network that simultaneously brightens and denoises low-light images. MBLLEN [20] designs a multibranch network to perform feature extraction, enhancement, and fusion to generate final results. The training datasets of the aforementioned methods are constructed by simulating low-light conditions, e.g., applying gamma transformation and adding noise. In order to construct a dataset that is closer to the real world, the SICE dataset is built in [21], which contains a large number of multiexposure images obtained by changing the exposure value (EV). Image enhancers can then be easily trained on the SICE dataset. RetinexNet [10] designs several subnetworks based on the Retinex model to perform Retinex decomposition and illumination and reflectance adjustment, and finally produce the enhanced result. It also sets up the LOL dataset, which contains paired images of extremely dark scenes. The dataset was obtained by adjusting the cameras’ shooting parameters, such as ISO and the exposure time. Similarly, KinD [11] also employs multiple networks for decomposition and optimization, along with a designed loss function, to constrain the learning process. KinD is further improved by multi-illumination attention [19]. EnlightenGAN [18] learns the mapping from the low-light domain to the normal one based on generative adversarial network (GAN) [22] techniques, thus eliminating the need for strictly paired image datasets.
DRBN [23] first iteratively obtains the band representation of low-light images in a supervised manner and then performs band recomposition through GAN in an unsupervised manner for better perception quality. TBEFN [24] first obtains two preliminary results through a network with two branches to cope with different degradation and then produces the final result by fusion and refinement. DSLR [25] introduces a Laplacian pyramid of images into the model design, aiming to guide the enhancement from coarse to fine. HWMNet [26] proposes a half wavelet attention block based on discrete wavelet transformation in their architecture. URetinex [27] unfolds the optimization of Retinex decomposition into a learning process based on several subnetworks. DCC-Net [28] proposes a divide-and-conquer strategy to decompose a low-light image into a gray one and its color histogram, aiming at color consistency. SMG [13] exploits the structure information to produce results with richer details and clearer edges.

Attention Mechanisms: Embedding attention mechanisms into deep networks has become a common practice in image processing tasks, particularly in image restoration-related tasks. SENet [29] proposes a squeeze-and-excitation reweighting network in the channel domain, which is also known as channel attention. GE [30] further introduces the spatial relationship. BAM [31] constructs a bottleneck attention module that integrates channel and spatial attention in parallel, while CBAM [32] considers them in series. CBAM also argues that common global average pooling (GAP) operators may result in information loss. Thus, CBAM additionally introduces global max pooling into the attention module. Inspired by non-local means, non-local operations [33] are proposed to capture long-range dependencies based on the dense relationship between any two positions. SKNet [34] introduces a dynamic selection mechanism between two features with different receptive field sizes. In recent years, the transformer [35] family has grown in popularity, and a variety of self-attention mechanisms that can capture global dependencies have been proposed. In this paper, we separately introduce attention mechanisms in the channel and spatial domains for features with different properties, which better leverages their respective roles and achieves compelling performance for low-light image enhancement.

3. Materials and Methods

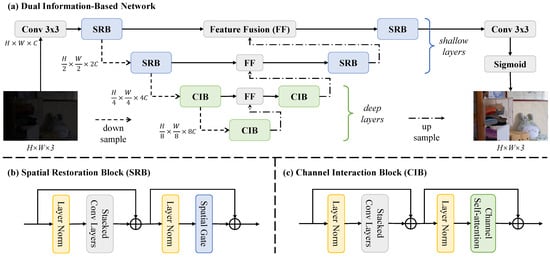

The framework of our proposed method is illustrated in Figure 2. In this section, we first detail the overall pipeline of the proposed dual information-based network. Then, we elaborate our designed spatial restoration block and channel interaction block. We finally design the loss function to train the network.

Figure 2.

The framework of our dual information-based network for low-light image enhancement. (a) dual information-based network, (b) spatial restoration block, (c) channel interaction block.

3.1. Overall Pipeline

We adopt the common U-shape network as our backbone for multiscale learning and efficiency improvement [16]. Specifically, our network is a typical encoder–decoder structure with four-scale learning as shown in Figure 2a. The spatial restoration blocks (SRBs) are deployed in shallow layers, which process features in two large scales to capture spatial information. Furthermore, the channel interaction blocks (CIBs) are inserted into deep layers, which handle features with multiple channels to achieve feature interaction, i.e., exchange high-level information distributed in different channels.

Given a low-light image with the shape of H × W × 3, where H, W, and 3 are the height, width, and channel number of the image, a convolution layer is first used to project the image to the feature space, producing the shallow feature. Then, the obtained feature is fed into the four-scale encoder–decoder network, generating the dual information-aware feature. Finally, the feature is transformed by a convolution layer to produce the enhanced results. During the process, we use max-pooling for downsampling in the encoder and transposed convolution layers for upsampling in the decoder. With each downsampling, the height and width of the feature map are halved and the number of channels is doubled, and vice versa for upsampling. Meanwhile, we employ a lightweight feature fusion module to connect the encoder and decoder, which is composed of concatenation and a depthwise separable convolution layer [36]. We use PReLU as the activation function across the whole network.
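The scale bookkeeping described above (halve the height and width and double the channels at each downsampling, and the reverse at each upsampling) can be traced with a few lines of Python. This is only an illustrative sketch: the function name `unet_feature_shapes`, the 256 × 256 input size, and the base width of 32 channels are assumptions made for the example and are not taken from the paper.

```python
def unet_feature_shapes(height, width, base_channels, num_scales=4):
    """Return the (H, W, C) feature shapes of the encoder and decoder scales."""
    encoder = []
    h, w, c = height, width, base_channels
    for scale in range(num_scales):
        encoder.append((h, w, c))
        if scale < num_scales - 1:
            # Max-pooling halves H and W; the channel count is doubled.
            h, w, c = h // 2, w // 2, c * 2
    # The decoder mirrors the encoder: each transposed convolution doubles
    # H and W and halves the channel count, so its shapes are the encoder
    # shapes (excluding the deepest one) in reverse order.
    decoder = encoder[-2::-1]
    return encoder, decoder


# Hypothetical configuration: 256 x 256 input, 32 channels after projection.
encoder, decoder = unet_feature_shapes(256, 256, 32)
for name, shapes in (("encoder", encoder), ("decoder", decoder)):
    for shape in shapes:
        print(name, shape)
```

With four scales there are three downsampling steps, so the deepest features have 1/8 of the input resolution and 8 times the base channel count, which is where the channel interaction blocks operate.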

3.2. Spatial Restoration Block and Channel Interaction Block

As mentioned before, to better exploit dual information, we apply different modules to features with different properties in a U-Net-like network. Specifically, we design the spatial restoration block (SRB) to process high-resolution features in shallow layers, capturing spatial information. Meanwhile, we also design the channel interaction block (CIB) to handle channel-expanded features in deep layers, achieving feature interaction for exchanging contextual information.

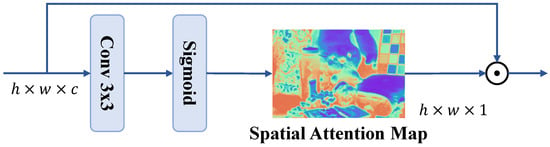

The framework of our SRB is shown in Figure 2b. We first use Layer Norm [37] to stabilize the data distribution of the features. Then, stacked convolution layers, which are composed of several convolutional layers and a skip connection, are employed to extract local features and improve representation capability. The obtained feature is further processed by Layer Norm. Finally, we apply spatial attention to produce the spatial-aware feature. Applying spatial self-attention to a high-resolution map is known to be computationally expensive, since each pixel is normally regarded as a token and the complexity is quadratic with respect to the number of tokens [38]. Hence, it is impractical to apply spatial self-attention to aggregate pair-wise spatial information in high-resolution cases. Instead, inspired by [32], we introduce the spatial gate mechanism to capture spatial information in a lightweight manner, as shown in Figure 3. The feature is transformed by a convolutional layer to produce the desired spatial map. Finally, the input feature is modulated by the spatial map through element-wise multiplication, thus producing spatial-aware features. The whole SRB can be expressed as

$$X' = \mathrm{Convs}(\mathrm{LN}(X)), \qquad Y = \mathrm{SG}(\mathrm{LN}(X')), \tag{1}$$

where $X$, $X'$, and $Y$ are the input, intermediate, and output features of SRB, and $\mathrm{LN}(\cdot)$, $\mathrm{Convs}(\cdot)$, and $\mathrm{SG}(\cdot)$ are the Layer Norm, stacked convolutions, and Spatial Gate, respectively.
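The spatial gate can be illustrated with a small pure-Python sketch. It is not the authors' implementation: the text does not preserve the kernel size or the squashing function, so the sketch assumes a 1 × 1 convolution to a single channel (one weight per input channel) followed by a sigmoid, and the name `spatial_gate` is hypothetical.

```python
import math


def spatial_gate(feature, weights, bias=0.0):
    """Modulate an H x W x C feature (nested lists) by a one-channel spatial map.

    weights holds one coefficient per channel, i.e. an assumed 1 x 1
    convolution that maps C channels to a single logit per pixel.
    """
    gated = []
    for row in feature:
        gated_row = []
        for pixel in row:
            # Assumed 1 x 1 convolution: one logit per spatial position.
            logit = sum(w * v for w, v in zip(weights, pixel)) + bias
            # Assumed activation: sigmoid keeps the spatial map in (0, 1).
            gate = 1.0 / (1.0 + math.exp(-logit))
            # Element-wise modulation: all channels of this pixel share
            # the same gate value.
            gated_row.append([gate * v for v in pixel])
        gated.append(gated_row)
    return gated


# Toy 2 x 2 feature with 2 channels and hypothetical weights.
feature = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, -2.0], [5.0, 6.0]]]
print(spatial_gate(feature, [0.5, -0.25]))
```

The cost grows linearly with the number of pixels, in contrast to pair-wise spatial self-attention, whose attention map grows quadratically with the number of pixels.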

Figure 3.

The illustration of the spatial gate.

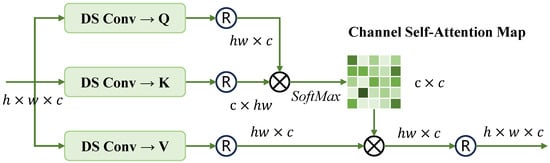

As for CIB, we utilize a structure similar to that of SRB, as shown in Figure 2c. The only difference is that the spatial gate is replaced by channel attention for feature interaction in deep layers. Early channel attention re-weights individual channels through global average pooling and fully connected layers. Different from such plain channel attention, we introduce channel self-attention to capture the channel-wise relationship, inspired by [39]. Concretely, the input feature is first transformed by three parallel depthwise separable convolution layers to generate the Query Q, Key K, and Value V, all of which have the same shape. By transposing K, matrix multiplying it with Q, and applying SoftMax, we obtain the channel self-attention map. Finally, the output of CIB is derived by applying matrix multiplication to V and the attention map, achieving channel interaction and contextual information awareness. The whole process of CIB can be formulated as

$$X' = \mathrm{Convs}(\mathrm{LN}(X)), \qquad Y = \mathrm{CSA}(\mathrm{LN}(X')), \tag{2}$$

where $\mathrm{CSA}(\cdot)$ is the introduced channel self-attention, and the other symbols have the same meaning as in Equation (1), i.e., $X$, $X'$, and $Y$ are the input, intermediate, and output features, and $\mathrm{LN}(\cdot)$ and $\mathrm{Convs}(\cdot)$ are the Layer Norm and stacked convolutions. For an input $Z$, $\mathrm{CSA}(\cdot)$ can be mathematically expressed as

$$Q = \mathrm{DSConv}_Q(Z), \quad K = \mathrm{DSConv}_K(Z), \quad V = \mathrm{DSConv}_V(Z), \qquad \mathrm{CSA}(Z) = V \otimes \mathrm{SoftMax}\!\left(\frac{K^{\top} \otimes Q}{\sqrt{d}}\right), \tag{3}$$

where $\mathrm{DSConv}$ represents the depthwise separable convolution, ⊗ denotes matrix multiplication, and d is the Q/K dimension. Note that we omit the multihead mechanism in self-attention for simplicity.
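The channel self-attention step (transpose K, multiply with Q, apply SoftMax, then multiply V by the resulting map) can be sketched in pure Python as below. Several points are assumptions rather than details confirmed by the text: Q, K, and V are taken as already computed and flattened to (H·W) × C matrices, the depthwise separable convolutions are omitted, the scores are divided by √d with d defaulting to the number of pixels, SoftMax is applied row-wise, and all function names are hypothetical.

```python
import math


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def softmax_rows(m):
    out = []
    for row in m:
        peak = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def channel_self_attention(q, k, v, d=None):
    """q, k, v: (H*W) x C matrices with pixels as rows and channels as columns."""
    if d is None:
        d = len(q)  # assumed scaling dimension: length of each channel vector
    scale = math.sqrt(d)
    # K^T (C x HW) times Q (HW x C) gives a C x C channel-to-channel map.
    scores = [[s / scale for s in row] for row in matmul(transpose(k), q)]
    attention = softmax_rows(scores)
    # V (HW x C) times the C x C map mixes information across channels.
    return matmul(v, attention), attention


# Toy example: 4 pixels, 3 channels, hypothetical values.
q = [[0.1, 0.2, 0.3], [0.0, 0.5, 0.1], [0.4, 0.1, 0.0], [0.2, 0.2, 0.2]]
out, attention = channel_self_attention(q, q, q)
print(len(attention), len(attention[0]))  # size of the channel attention map
print(len(out), len(out[0]))              # same shape as the input
```

Under these assumptions the attention map has size C × C regardless of the spatial resolution, which is why this form of attention remains affordable in the deep, channel-expanded layers.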

3.3. Loss Function

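To encourage fidelity and structure similarity, we adopt the following hybrid loss to train our network,

$$\mathcal{L} = \mathcal{L}_{fid} + \mathcal{L}_{str}, \tag{4}$$

where $\mathcal{L}_{fid}$ is implemented as the sum of the L1 loss and the L2 loss to compensate for their respective deficiencies, and $\mathcal{L}_{str}$ is measured by the SSIM (Structural Similarity Index Measure) index [40]. The mathematical form is given as

$$\mathcal{L}_{fid} = \frac{1}{N}\sum_{i=1}^{N} \left| X_i - Y_i \right| + \frac{1}{N}\sum_{i=1}^{N} \left( X_i - Y_i \right)^2, \qquad \mathcal{L}_{str} = 1 - \mathrm{SSIM}(X, Y), \tag{5}$$

where N is the total number of pixels, and X and Y are the input and output, respectively.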
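A hybrid loss of this kind can be sketched as follows. The sketch makes several assumptions that the text does not confirm: the terms are summed with unit weights, the structure term is written as 1 − SSIM, and SSIM is computed once over the whole image (a single global window) instead of the usual sliding local windows of [40]. The constants follow the common choice K1 = 0.01 and K2 = 0.03 for intensities in [0, 1], and the name `hybrid_loss` is hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def hybrid_loss(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """x, y: equal-length flat lists of pixel intensities in [0, 1]."""
    n = len(x)
    # Fidelity term: mean absolute error plus mean squared error.
    l1 = sum(abs(a - b) for a, b in zip(x, y)) / n
    l2 = sum((a - b) ** 2 for a, b in zip(x, y)) / n

    # Structure term: global SSIM (simplification: one window = whole image).
    mu_x, mu_y = mean(x), mean(y)
    var_x = mean([(a - mu_x) ** 2 for a in x])
    var_y = mean([(b - mu_y) ** 2 for b in y])
    cov = mean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    ssim = ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return (l1 + l2) + (1.0 - ssim)


# Hypothetical 4-pixel "images".
a = [0.1, 0.5, 0.9, 0.3]
b = [0.2, 0.4, 0.8, 0.5]
print(hybrid_loss(a, b))
```

The L1 and L2 terms penalize per-pixel deviations, while the SSIM term compares means, variances, and covariance, so it responds to changes in luminance, contrast, and structure.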

4. Experimental Results

In this section, we first present our implementation details. Then, we make a quantitative comparison with some state-of-the-art methods on popularly adopted benchmarks. Finally, we show some visual cases to provide a qualitative comparison.

4.1. Implementation Details

To evaluate the effectiveness of our method, we train and test it on the LOL dataset [10], as shown in Figure 4. LOL contains 500 low-light/normal-light image pairs, of which 485 pairs are used for training and 15 pairs are used for testing. To further validate the generalization capability of various methods, we also perform a cross-dataset evaluation on the VE-LOL dataset [1] using the model trained on LOL, following [41]. During training, we randomly crop image patches from the original images. As for data augmentation, we use random horizontal and vertical flipping and saturation enhancement. The batch size is set to 4. We adopt Adam as the optimizer. The learning rate decays by a factor of 0.8 every 200 epochs, and training runs for a total of 2000 epochs. All experiments are conducted on an NVIDIA GeForce RTX 2080Ti GPU with TensorFlow.
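The step decay described above (a factor of 0.8 every 200 epochs over 2000 epochs) corresponds to the following schedule. The function name `step_decay_lr` and the initial learning rate of 1e-3 used in the demonstration are hypothetical placeholders, since the actual initial value is not preserved in the text.

```python
def step_decay_lr(epoch, initial_lr, decay_factor=0.8, decay_every=200):
    """Learning rate at a given 0-based epoch under staircase decay."""
    return initial_lr * decay_factor ** (epoch // decay_every)


# Hypothetical initial learning rate, for illustration only.
initial_lr = 1e-3
for epoch in (0, 199, 200, 1000, 1999):
    print(epoch, step_decay_lr(epoch, initial_lr))
```

With 0-based epochs, the rate is reduced nine times during the 2000 epochs (at epochs 200, 400, ..., 1800), so the final rate is 0.8^9 ≈ 0.134 times the initial one.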

Figure 4.

The illustration of channel self-attention.

We compare our method with RetinexNet [10], MBLLEN [20], Zero-DCE [17], KinD [11], DeepUPE [42], EnlightenGAN [18], DRBN [23], 3D-LUT [43], KinD++ [19], Sparse [44], LLFlow [41], MAXIM [45], IAT [46], Restormer [39], URetinex [27], DCC-Net [28], and HWMNet [26]. For quantitative comparisons, we adopt the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity (SSIM) index as metrics. The larger the PSNR and SSIM, the better the performance.
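For reference, PSNR can be computed from the mean squared error as in the minimal sketch below. It assumes images are given as flat lists of intensities in [0, 1]; the function name `psnr` and the toy inputs are illustrative and not taken from the evaluation code of the paper.

```python
import math


def psnr(reference, estimate, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length pixel lists."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: a uniform error of 0.1 on a [0, 1] intensity scale.
reference = [0.5, 0.5, 0.5, 0.5]
estimate = [0.6, 0.6, 0.6, 0.6]
print(psnr(reference, estimate))
```

Because PSNR is logarithmic in the mean squared error, a gain of about 0.3 dB corresponds to a reduction of the mean squared error by roughly 7%.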

4.2. Quantitative Comparison

The detailed performance comparison on the LOL dataset is shown in Table 1. The statistics demonstrate that our method outperforms or achieves competitive results compared to other state-of-the-art methods. Specifically, our method offers the best PSNR, i.e., 24.56 dB, which surpasses the second best method by ∼0.3 dB. Meanwhile, our method takes the second place in terms of SSIM. In conclusion, our method provides excellent recovery of low-light images.

Table 1.

Quantitative comparison on the LOL dataset in terms of PSNR ↑, SSIM ↑. The best results are in bold and the second best results are underlined.

As mentioned before, we evaluate the generalization capability on the VE-LOL, and the detailed results are shown in Table 2. Again, we see that our method achieves the second place in PSNR and the best score in SSIM. These results indicate that our method generalizes well to diverse low-light situations.

Table 2.

Cross-dataset evaluation on the VE-LOL dataset in terms of PSNR ↑, SSIM ↑. The best results are in bold and the second best results are underlined.

4.3. Qualitative Comparison

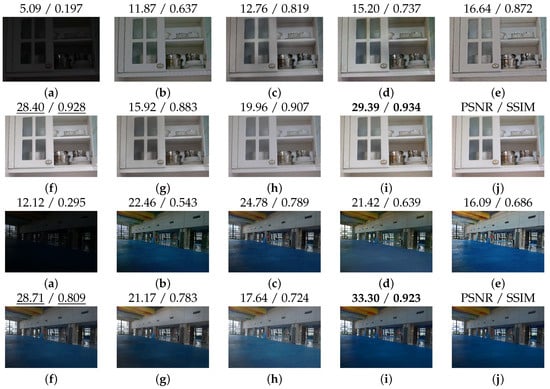

We show a typical example of the LOL dataset in Figure 5. We see that the results of our method are perceptually closer to the ground truth, as evidenced by the overall brightness and color. Zero-DCE produces results with color bias, and noise still remains. There are annoying artifacts in the results of KinD, EnlightenGAN, and KinD++, which degrade their enhancement quality. The enhancement of LLFlow is slightly underexposed. URetinex offers results with slightly low contrast, which may result from over-enhancement. Overall, HWMNet and our method achieve the best enhancement. However, our method is closer to the ground truth in terms of color. It is also noteworthy that our method preserves the subtle textures on the glass as much as possible, without the over-smoothing that denoising can cause. These visual results confirm that our method successfully exploits the dual information, i.e., spatial and channel (contextual) information, for better low-light image enhancement. We further provide another typical example of LOL in Figure 5. The problems described above can still be found in these methods, while our method consistently presents the closest visual effect to the ground truth.

Figure 5.

Visual comparison through a typical image of the LOL dataset. The best and second best scores are highlighted and underlined, respectively. (a) Input, (b) Zero-DCE [17], (c) KinD [11], (d) EnlightenGAN [18], (e) KinD++ [19], (f) HWMNet [26], (g) LLFlow [41], (h) URetinex [27], (i) ours, (j) ground truth.

Finally, the visual comparison on the VE-LOL dataset is presented in Figure 6. Again, color bias is observed in the results of Zero-DCE, EnlightenGAN, KinD++, and URetinex, and under-enhancement exists in the results of KinD and LLFlow. As a whole, HWMNet and our method provide the best enhancement in terms of visual perception. Nevertheless, the colors of the walls and of the signage above in our results are much closer to the ground truth. All these results demonstrate the excellent enhancement capability of our method.

Figure 6.

Visual comparison through a typical image of the VE-LOL dataset. The best and second best scores are highlighted and underlined, respectively. (a) Input, (b) Zero-DCE [17], (c) KinD [11], (d) EnlightenGAN [18], (e) KinD++ [19], (f) HWMNet [26], (g) LLFlow [41], (h) URetinex [27], (i) ours, (j) ground truth.

5. Conclusions

In this paper, we construct a dual information-based network for low-light image enhancement. Concretely, we effectively utilize dual information, i.e., spatial and channel (contextual) information through performing different operations on features in different layers. For features in shallow layers, the resolution is close to that of the original input image, and the spatial information is well preserved and abundant. Thus, we design a spatial restoration block for these features, which aims to capture spatial information and can facilitate the handling of spatial-related subproblems, such as detail restoration and noise suppression. For features in deep layers, the channels are sufficiently expanded, and various contextual information is distributed into different channels. Therefore, we design a channel interaction block to exchange high-level information among all channels, which allows the model to better cope with the global attribute-related subproblems, such as brightness and color correction. We implement the U-Net-like network and fully validate its effectiveness. Extensive experiments confirm the superiority of our method against some state-of-the-art methods.

Author Contributions

Material preparation, methodology, first draft writing and funding were performed by M.L. Investigation, analysis and concept development were performed by X.L. Supervision, data annotation, software and writing reviewing were performed by Y.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Defense Basic Scientific Research Program of China under Grant JCKY2020404C001 and in part by the Fund of Robot Technology Used for Special Environment Key Laboratory of Sichuan Province under Grants 23kftk02 and 23kfkt02.

Data Availability Statement

Our data come from public datasets, LOL [10] and VE-LOL [1].

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Liu, J.; Xu, D.; Yang, W.; Fan, M.; Huang, H. Benchmarking low-light image enhancement and beyond. Int. J. Comput. Vis. 2021, 129, 1153–1184.

2. Loh, Y.P.; Chan, C.S. Getting to know low-light images with the exclusively dark dataset. Comput. Vis. Image Underst. 2019, 178, 30–42.

3. Gong, T.; Zhang, M.; Zhou, Y.; Bai, H. Underwater Image Enhancement Based on Color Feature Fusion. Electronics 2023, 12, 4999.

4. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vision Graph. Image Process. 1987, 39, 355–368.

5. Huang, S.C.; Cheng, F.C.; Chiu, Y.S. Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image Process. 2012, 22, 1032–1041.

6. Jobson, D.J.; Rahman, Z.U.; Woodell, G.A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

7. Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2016, 26, 982–993.

8. Li, C.; Guo, C.; Han, L.H.; Jiang, J.; Cheng, M.M.; Gu, J.; Loy, C.C. Low-light image and video enhancement using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 9396–9416.

9. Lore, K.G.; Akintayo, A.; Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662.

10. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep Retinex Decomposition for Low-Light Enhancement. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018.

11. Zhang, Y.; Zhang, J.; Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1632–1640.

12. Wang, J.; Sun, Y.; Yang, J. Multi-Modular Network-Based Retinex Fusion Approach for Low-Light Image Enhancement. Electronics 2024, 13, 2040.

13. Xu, X.; Wang, R.; Lu, J. Low-Light Image Enhancement via Structure Modeling and Guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9893–9903.

14. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 14821–14831.

15. Mou, C.; Wang, Q.; Zhang, J. Deep generalized unfolding networks for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 17399–17410.

16. Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple baselines for image restoration. In Proceedings of the European Conference on Computer Vision; Springer: Cham, Switzerland, 2022; pp. 17–33.

17. Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1780–1789.

18. Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

19. Zhang, Y.; Guo, X.; Ma, J.; Liu, W.; Zhang, J. Beyond brightening low-light images. Int. J. Comput. Vis. 2021, 129, 1013–1037.

20. Lv, F.; Lu, F.; Wu, J.; Lim, C. MBLLEN: Low-Light Image/Video Enhancement Using CNNs. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018; Volume 220, p. 4.

21. Cai, J.; Gu, S.; Zhang, L. Learning a deep single image contrast enhancer from multi-exposure images. IEEE Trans. Image Process. 2018, 27, 2049–2062.

22. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

23. Yang, W.; Wang, S.; Fang, Y.; Wang, Y.; Liu, J. From fidelity to perceptual quality: A semi-supervised approach for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3063–3072.

24. Lu, K.; Zhang, L. TBEFN: A two-branch exposure-fusion network for low-light image enhancement. IEEE Trans. Multimed. 2020, 23, 4093–4105.

25. Lim, S.; Kim, W. DSLR: Deep stacked Laplacian restorer for low-light image enhancement. IEEE Trans. Multimed. 2020, 23, 4272–4284.

26. Fan, C.M.; Liu, T.J.; Liu, K.H. Half wavelet attention on M-Net+ for low-light image enhancement. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 3878–3882.

27. Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. URetinex-Net: Retinex-Based Deep Unfolding Network for Low-Light Image Enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5901–5910.

28. Zhang, Z.; Zheng, H.; Hong, R.; Xu, M.; Yan, S.; Wang, M. Deep color consistent network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1899–1908.

29. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

30. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-excite: Exploiting feature context in convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; Volume 31.

31. Park, J.; Woo, S.; Lee, J.Y.; Kweon, I.S. BAM: Bottleneck attention module. arXiv 2018, arXiv:1807.06514.

32. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

33. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

34. Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 510–519.

35. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

36. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

37. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

38. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

39. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

40. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

41. Wang, Y.; Wan, R.; Yang, W.; Li, H.; Chau, L.P.; Kot, A. Low-light image enhancement with normalizing flow. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–2 March 2022; Volume 36, pp. 2604–2612.

- Wang, R.; Zhang, Q.; Fu, C.W.; Shen, X.; Zheng, W.S.; Jia, J. Underexposed photo enhancement using deep illumination estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6849–6857. [Google Scholar]

- Zeng, H.; Cai, J.; Li, L.; Cao, Z.; Zhang, L. Learning image-adaptive 3D lookup tables for high performance photo enhancement in real-time. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2058–2073. [Google Scholar] [CrossRef] [PubMed]

- Yang, W.; Wang, W.; Huang, H.; Wang, S.; Liu, J. Sparse gradient regularized deep Retinex network for robust low-light image enhancement. IEEE Trans. Image Process. 2021, 30, 2072–2086. [Google Scholar] [CrossRef] [PubMed]

- Tu, Z.; Talebi, H.; Zhang, H.; Yang, F.; Milanfar, P.; Bovik, A.; Li, Y. MAXIM: Multi-axis MLP for image processing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5769–5780. [Google Scholar]

- Cui, Z.; Li, K.; Gu, L.; Su, S.; Gao, P.; Jiang, Z.; Qiao, Y.; Harada, T. You only need 90K parameters to adapt light: A light weight transformer for image enhancement and exposure correction. In Proceedings of the British Machine Vision Conference, London, UK, 21–24 November 2022; p. 238. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).