1. Introduction

In recent years, the technical landscape has been profoundly transformed by myriad advancements in various domains, with LLMs standing out as one of the most influential. These models, driven by advancements in artificial intelligence (AI) and machine learning (ML), have become essential not only in the realm of NLP but also in various areas such as business intelligence [

1,

2,

3], healthcare [

4,

5,

6], legal analytics [

1,

7,

8], and even creative arts like music and literature [

9]. The sheer scale, complexity, and potential of LLMs necessitate an analysis of their influence across the most impactful scientific works. This endeavor is not a chronological recounting of events but an insight into the expanding growth and the multitudinous challenges and solutions that have surfaced over time. This article seeks to fill this knowledge gap by reviewing the most impactful scientific literature on LLMs’ influence, applications, advantages, disadvantages, challenges, benefits, and risks. The rationale for this undertaking, the chosen methodology, and its prospective contribution to the academic community are delineated below.

The importance of LLMs in today’s technical sphere cannot be understated. At a fundamental level, they signify the convergence of computational power [

10,

11,

12,

13], advanced algorithms [

14,

15,

16], and vast datasets [

10,

11,

17], which have collectively propelled the capabilities of these models to levels previously deemed unattainable. These models are at the core of many contemporary applications, from chatbots that offer near-human conversational experiences [

6,

8,

18,

19,

20] to automated content generation tools [

6,

7,

8] that are revolutionizing industries worldwide. The global economy, in terms of both industry and academia, has borne witness to the transformative power of LLMs [

7,

8,

18], adapting to and evolving with these technological advancements. As such, understanding their progression and their ever-expanding influence is not just an academic exercise but a necessity for anyone invested in the future of technology.

In the realm of AI and NLP, LLMs have emerged as a disruptive force, reshaping our understanding and interaction with human language through computational means. The evolution of these models from early rule-based systems and statistical methods to complex neural network-based architectures represents a significant shift in the field. These early models, while foundational, were inherently limited in their ability to grasp the complexities and subtleties inherent in human language.

The advent of neural networks, particularly recurrent neural networks (RNNs) and the innovative transformer architecture, marked a fundamental development. The introduction of transformers, as presented in the seminal work “Attention Is All You Need” by Vaswani et al., brought to light the self-attention mechanism [

21]. This mechanism enables models to assign varying degrees of importance to different words within a sentence, thereby capturing context and relationships that were previously elusive. The architecture of LLMs comprises at its core the embedding layer, which is responsible for translating tokens, be they words or sub-words, into numerical vectors, which are further used by the ML algorithms. The self-attention mechanism, a hallmark of this architecture, allows the model to dynamically focus on different segments of the input sequence. This ability to discern context and relationships between words is what sets these models apart in their understanding of language.

The transformer architecture revolutionized NLP. This architecture is characterized by its distinct separation into two main components: the encoder and the decoder. Each encoder layer, typically six in total, comprises a self-attention mechanism and a feed-forward neural network. This structure allows the model to weigh the importance of different words in a sentence, a process known as self-attention. The decoder mirrors the encoder’s structure but includes an additional layer that focuses attention on the encoder’s output, facilitating translation or summarization tasks [

21].

Moving to the generative pretrained transformer (GPT) architecture, it deviates significantly from the original transformer by employing a decoder-only structure. This design choice is essential for its functionality in generating text. The GPT model processes text input through multiple decoder layers to predict the next word in a sequence. This architecture underpins its ability to generate coherent and contextually relevant text. GPT’s training involves two key stages: pretraining on a large corpus of text to learn language patterns and fine-tuning for specific tasks, allowing it to adapt to a wide range of applications [

1].

In architectures like the GPT, the emphasis is on the decoder, which is tasked with generating language outputs. In contrast, architectures like the bidirectional encoder representations from transformers (BERT) employ the encoder part to understand profoundly the nuances of language context. These models also incorporate a fully connected neural network, which processes the outputs from the attention mechanism and encoder/decoder layers, culminating in the generation of the final output. The training process of these models involves two critical phases: pretraining and fine-tuning. During pretraining, the model undergoes training on vast datasets, absorbing general language patterns through unsupervised learning techniques. This phase often involves predicting missing words or sentences in a given text. The fine-tuning phase, on the other hand, tailors the pretrained model to specific tasks using smaller, task-oriented datasets, thereby enhancing its precision and applicability to specialized domains [

2].

The BERT architecture represents another significant shift in transformer-based models, focusing exclusively on an encoder-only structure. This design enables BERT to understand the context from both sides of a word in a sentence, a capability known as bidirectional context. Central to BERT’s functionality is the masked language model (MLM), where some input tokens are intentionally masked and the model learns to predict them, thereby gaining a deeper understanding of language context and structure.

As one explores the capabilities and advancements in LLMs, they encounter a variety of model variants, each contributing unique features and improvements. The GPT series, developed by OpenAI, is renowned for its proficiency in generating coherent and contextually relevant text. Google’s BERT excels in understanding contextual nuances in language, proving invaluable in applications like question answering and sentiment analysis [

22,

23].

Other models like T5 and XLNet have further expanded the possibilities, introducing novel mechanisms that continue to push the frontiers of language modeling [

24,

25]. The applications and impacts of these models are vast and varied. They have demonstrated exceptional skills in natural language understanding and generation, facilitating tasks ranging from text summarization to complex language translation. Beyond the realm of language, their usefulness extends to interdisciplinary applications, aiding research and development in fields as diverse as bioinformatics and law. Nevertheless, the development and deployment of LLMs are not without challenges and ethical considerations. The scalability of these models comes at the cost of substantial computational resources, raising concerns about their environmental impact. Additionally, the potential for these models to learn and perpetuate biases present in training data is a pressing issue, necessitating a thorough design and rigorous evaluation to ensure fairness and responsible usage.

A comparative overview of the main architectures, namely transformer, GPT, and BERT, reveals both their shared characteristics and distinct functionalities. The original transformer model presents a balanced encoder–decoder structure, adept at handling a variety of language processing tasks. In contrast, GPT, with its decoder-only design, excels in text generation, harnessing its layers to predict subsequent words in a sequence. BERT, with its encoder-only approach, focuses on understanding and interpreting language, and is adept at tasks like sentence classification and question answering. This comparative analysis underscores the versatility and adaptability of the transformer architecture, thereby setting a foundation for continual advancements in the field of generative AI (

Table 1).

While the present-day achievements of LLMs are visible and palpable, understanding their trajectory requires a thorough analysis of the specific scientific literature. Several innovative works, incremental improvements, and defining moments have contributed to the current state of LLMs [

26,

27,

28]. A review of the most impactful scientific literature on LLMs is not just about tracing this journey, but about understanding the patterns, identifying significant works, and foreseeing potential future directions. Such a review has the potential to inspire researchers, guiding both newcomers and veteran practitioners in the field and ensuring that the cumulative knowledge is accessible, comprehensible, and actionable.

The landscape of LLMs, while vast, is also replete with uncharted scientific domains and areas awaiting exploration. This review not only maps the known but also attains insights into the unknown, providing a comprehensive understanding while also igniting the desire for knowledge. By analyzing and presenting the most impactful scientific literature on LLMs, this paper serves as a foundation for future research endeavors. It seeks to foster collaboration, inspire innovation, and guide the direction of subsequent research, ensuring that the journey of LLMs is not just acknowledged but also aptly leveraged for the betterment of technology and society at large.

Consequently, the inexorable rise of LLMs in the technical domain, coupled with the profound impact they have had across various sectors, necessitates a detailed review. Leveraging the robust capabilities of the WoS indexing database [

29], this article endeavors to provide an insightful and impactful overview of the most influential papers regarding LLMs, thus enriching the existing body of knowledge and paving the way for future explorations.

This study offers a critical and in-depth exploration of LLMs from a multifaceted perspective. The review’s contributions extend across important bibliometric, technological, societal, ethical, and institutional insights, serving as a guide for future research, implementation, and policymaking in the field of AI. The main contributions of the conducted review study comprise insights into:

Technological impact and integration of LLMs: This article achieves an encompassing evaluation of the technological impact and integration of LLMs. It catalogues the multitude of ways in which LLMs have been assimilated into various technological sectors, offering readers a holistic view of these models’ influence on current technology paradigms. This study presents a detailed analysis of LLMs, enhancing the understanding of their functionality within different research areas. This review contributes by mapping out the interdisciplinary applications of LLMs, revealing their role as a milestone in the evolution of machine interaction with human languages.

The personalization revolution in technology and its societal impact: This study elucidates the transformative role of LLMs in personalizing technology, spotlighting the shift toward more intuitive user experiences. By exploring the adaptation of LLMs in personalizing user interaction, this article underscores a significant move toward more inclusive and democratized digital access. It contributes to the scientific literature by evaluating the societal impacts of these personalized experiences, balancing the advantages of global communication with the critical analysis of associated challenges, such as privacy concerns.

Trust, reliability, and ethical considerations of LLMs: Through a thorough examination, this review sheds light on the trust and reliability concerns surrounding LLMs, as well as their ethical implications. This article serves as an important discussion on the creation of trust in the digital realm, offering evidence of LLMs enhancing human decision-making. Furthermore, it highlights the necessity for ethical frameworks and bias mitigation strategies in LLM development, thereby contributing to the foundation for future policy and ethical guidelines in AI.

Institutional challenges and the road ahead: This review addresses the institutional challenges in integrating LLMs into existing frameworks, providing a strategic perspective for future adoption. It identifies the obstacles that institutions face, from infrastructural limitations to the need for enhanced AI literacy, offering valuable insights for overcoming these barriers. The article posits a forward-looking approach, considering potential regulatory and technological evolutions, and in doing so, it charts a path for institutions looking to responsibly harness the potential of LLMs.

The subsequent structure of this paper is as follows.

Section 2 depicts the rationale for the database querying, filtering, and data curation approach.

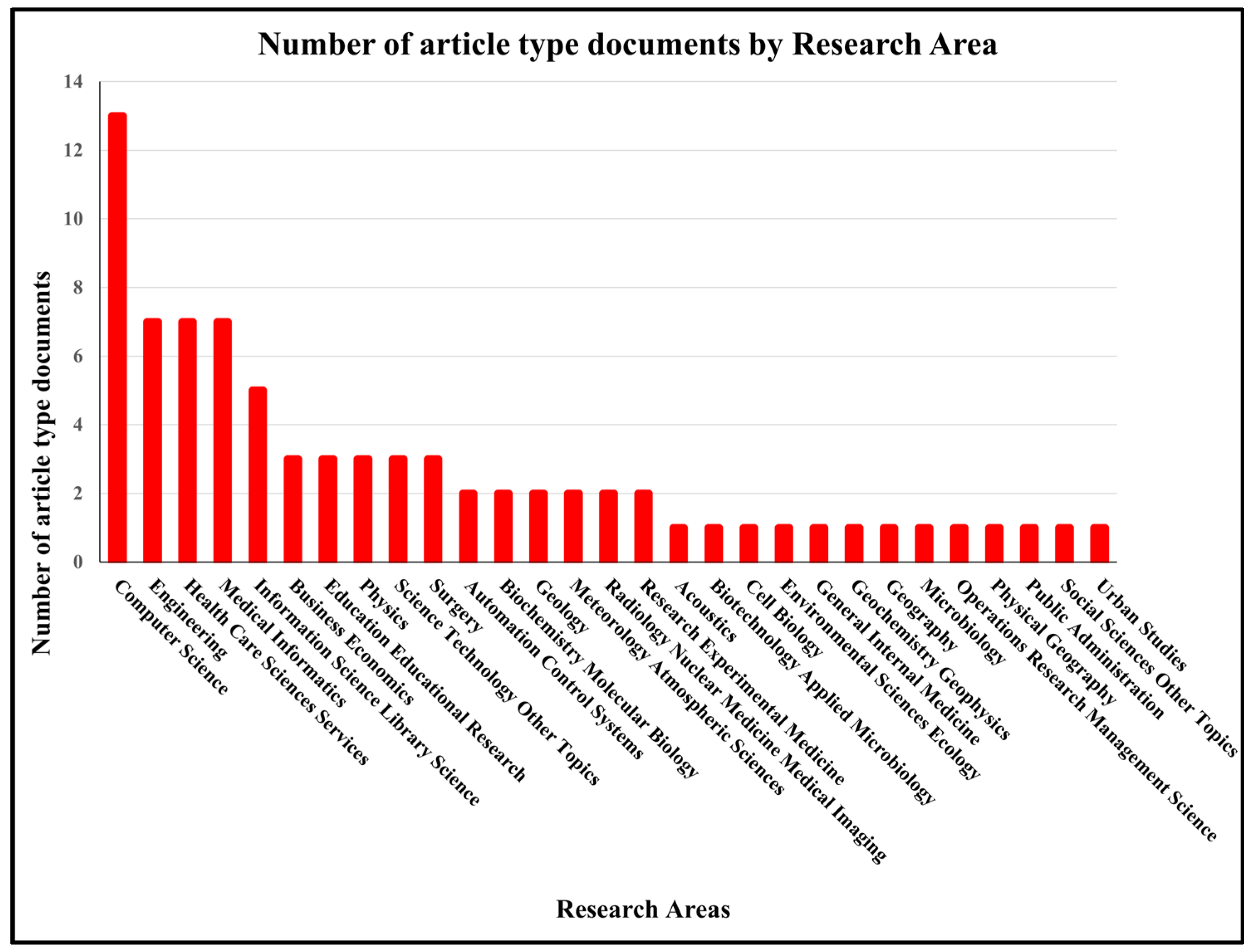

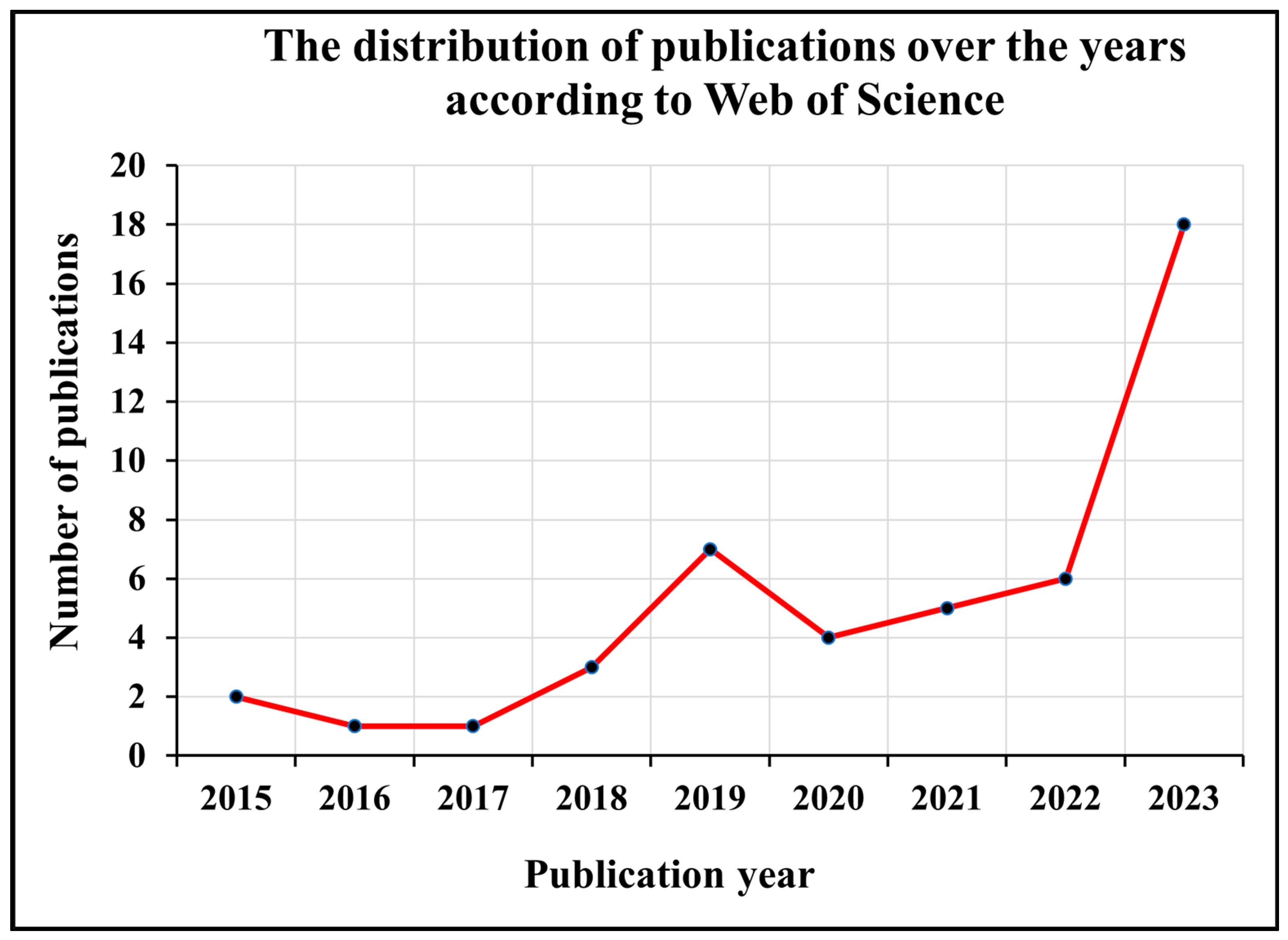

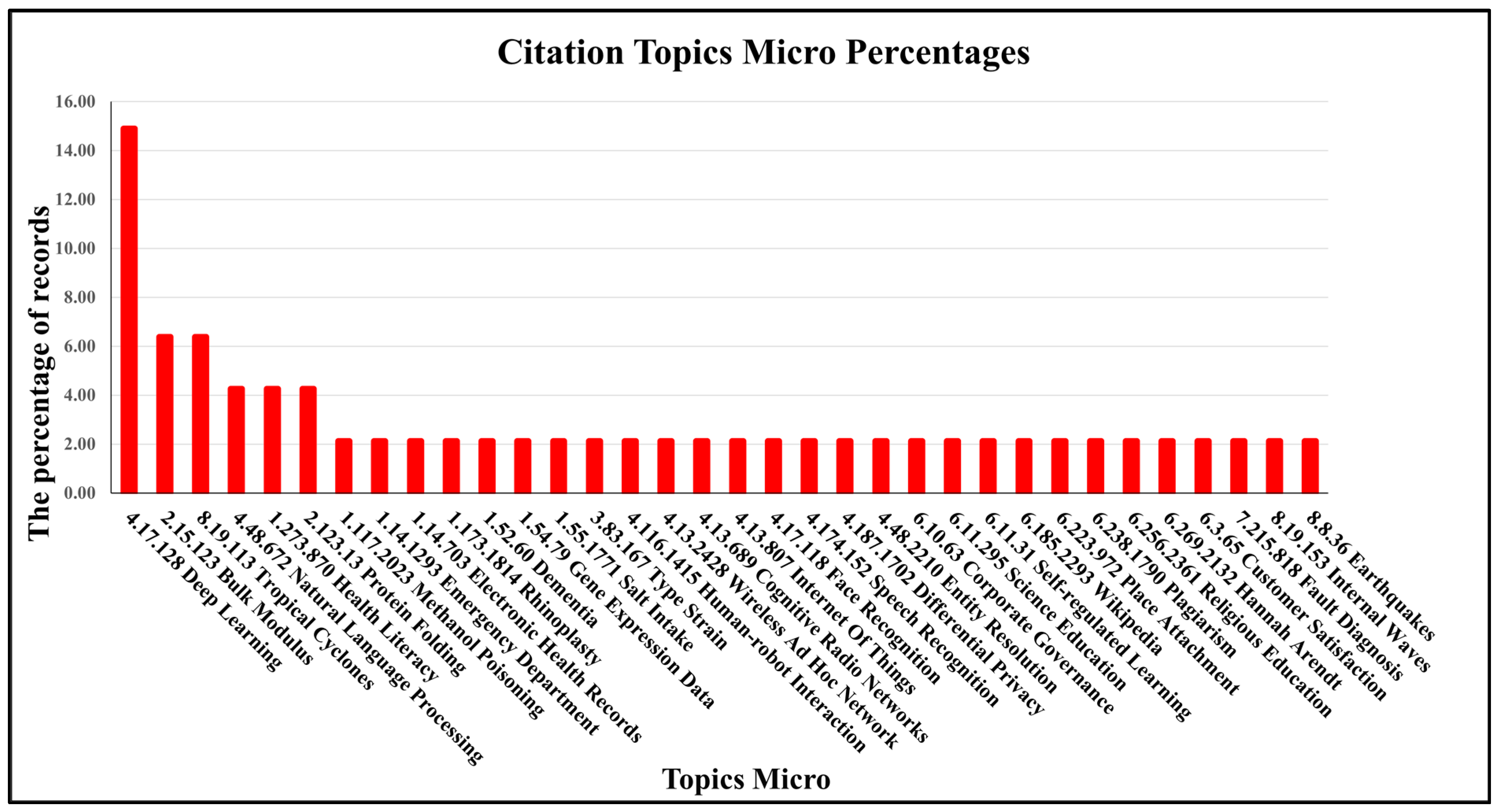

Section 3 presents outcomes achieved after analyzing the obtained scientific pool of articles, followed by an analysis regarding the expanding and multidisciplinary influence of LLMs within the scientific literature, along with a synthesis of relevant papers from the scientific pool of articles.

Section 4 contrasts advantages and benefits with the potential disadvantages and risks of LLMs, while

Section 5 depicts important insights of the conducted study.

4. Discussion

The advent and subsequent success of LLMs in the contemporary technological environment have presented multifaceted implications and advancements. Central to this discussion is the superior capability of LLMs in the realm of NLU. As elucidated in the preceding sections, LLMs are not just another incremental advancement in computational linguistics but represent a significant leap in how machines comprehend and engage with human language.

The quintessence of LLM success lies in their profound NLU. Their training, which encompasses an eclectic mix of text sources, has empowered these models to grasp the subtleties, nuances, and complexities inherent to human languages. Traditional language models, while effective, often falter when confronted with intricate human language, marked by idioms, metaphors, cultural context, and emotional undertones. However, LLMs, with their expansive dataset and sophisticated algorithms, have managed to significantly bridge this gap.

The enhanced NLU capabilities have notably transformed sentiment analysis. In the past, discerning the sentiment behind texts, especially those replete with complex emotions or sarcasm, proved challenging. LLMs have heralded an era where machines can identify and interpret layered sentiments with a higher degree of precision. This has vast implications for sectors such as market research, social media analytics, and customer feedback processing, where accurate sentiment interpretation is essential.

LLMs have also made a mark in question-answering systems. Previous models often provided answers based on keyword matching or simplistic logic. The deep NLU of LLMs enables them to grasp the essence of queries, considering context, intent, and depth, to generate more relevant and accurate responses. This enhancement bolsters domains like customer support, academic research, and intelligent tutoring systems.

Content summarization, another beneficiary of LLMs’ prowess, has evolved from the mere extraction of key sentences to a more refined abstraction of core ideas. LLMs can process vast amounts of text, understand the overarching themes, and produce concise yet comprehensive summaries. This is invaluable in areas like academic research, news aggregation, and business intelligence, where distilling vast quantities of information into accessible formats is essential.

The evolutionary trajectory of LLMs, as analyzed in this article, underscores an important shift toward a more harmonious machine–human language interface. As these models continue to learn and adapt, they are close to mimicking human-like text comprehension. This is not merely a technical achievement but signifies a broader cultural and societal shift. Machines that truly “understand” can lead to more meaningful human–machine interactions and enrich user experiences.

While the present accomplishments of LLMs in NLU are commendable, it is important to recognize the future steps that need to be taken. Continuous research, refinements, and ethical considerations will shape the future trajectory of LLMs. It is anticipated that as LLMs evolve, their NLU capabilities will further refine, ushering in an era where the delineation between human and machine language understanding becomes increasingly blurred.

Historically, analysis of extensive datasets required significant human labor, time, and computational resources. Traditional methods, although effective, were constrained by the sheer volume and complexity of the data. With LLMs, there is a paradigm shift in which largescale document analysis can be achieved swiftly and accurately. The automated analytical capabilities of LLMs transcend the linear growth of traditional methods, offering exponential improvements in both speed and scale. This not only allows for more extensive data analysis but also ensures a depth of analysis that would be cumbersome, if not impossible for humans to achieve within realistic timeframes.

Data extraction, especially from unstructured sources, is a bottleneck in information processing. The ability of LLMs to comprehend context, discern patterns, and extract relevant information from vast volumes of text is revolutionary. Organizations can now seamlessly derive insights from diverse sources without the need for exhaustive manual parsing. This efficiency translates to faster decision-making processes and more informed strategy development.

Beyond analysis and extraction, the scalability of LLMs has ushered in a new era in content generation. Their capacity to produce vast amounts of coherent and contextually relevant content, whether for research, marketing, or entertainment purposes, is a true milestone. This not only augments productivity but also allows for the tailoring of content to specific audiences on an unprecedented scale.

In the case of businesses and institutions, the scalable processing capabilities of LLMs represent both an opportunity and a challenge. The opportunity lies in harnessing this power for enhanced productivity, tailored solutions, and robust data-driven strategies. Nevertheless, the challenge arises in ensuring ethical use, data privacy, and avoiding over-reliance on these tools. Organizations must strike a balance between leveraging the potential of LLMs and ensuring that the human element, with its critical thinking and ethical considerations, remains integral to the decision-making process.

While the current capabilities of LLMs in scalable information processing are profound, it is essential to consider potential future trajectories. As LLMs continue to evolve, there could be further advancements in their efficiency, accuracy, and contextual understanding. The integration of LLMs with other advanced technologies, like quantum computing or neuromorphic chips, might redefine scalability limits. Furthermore, as LLMs become more ubiquitous, there might be a need for standardized benchmarks, best practices, and regulations to ensure their optimal and ethical use.

The pervasive integration of LLMs into diverse sectors, as underscored by the review conducted in this article, ushers in an era characterized by profound personalization in technology. One of the most salient impacts of LLMs is their ability to cultivate personalized user experiences, a dimension worth analyzing in-depth due to its ubiquity in contemporary applications and its potential to revolutionize user–technology interactions.

LLMs’ proficiency in generating contextually relevant content is paramount. Traditional systems, which are governed by predefined algorithms, often provide uniform outputs irrespective of the user’s unique attributes or histories. Nevertheless, LLMs, with their expansive training on diverse datasets and superior computational capabilities, can comprehend nuances and deliver outputs that resonate with a user’s specific context. For instance, in the realm of chatbots, while earlier iterations could only offer generic responses, LLM-driven chatbots can now understand user sentiment, previous interactions, and contextual clues, enabling a conversation that feels uniquely tailored to the individual.

The repercussions of such personalization extend well beyond chatbots. Recommendation systems, an integral part of e-commerce platforms, streaming services, and even news aggregators, have witnessed significant enhancements with the integration of LLMs. By analyzing user behaviors, preferences, and histories, LLMs can suggest products, songs, movies, or articles that align more closely with individual tastes, thereby increasing user engagement and satisfaction. Similarly, content curation platforms now have the tools to offer a bespoke content feed, ensuring that users are not just passive recipients but also active participants in a dialogue shaped by their preferences.

Personalization, enabled by LLMs, is not just about enhancing user satisfaction in the short term. By ensuring that each interaction is tailored to individual users, platforms can foster a deeper sense of loyalty and connection. Users are more likely to return to platforms that “understand” them, creating a symbiotic relationship in which the more the user interacts, the better the LLM becomes at providing personalized experiences, and the more likely the user is to continue engaging with the platform.

In light of the insights presented in this article, it is evident that the integration of LLMs into various technological platforms offers a paradigm shift in how users interact with technology. The journey from generic to personalized experiences, while filled with opportunities, also requires careful navigation to ensure that the potential of LLMs is harnessed responsibly and ethically. As researchers and practitioners continue to analyze LLMs more profoundly, the promise of creating more meaningful, personalized, and ethically grounded user experiences remains an exciting frontier for future explorations.

The ascent of LLMs and their subsequent applications has fostered a new paradigm in the computational world. One of the most salient features and arguably a cornerstone in their transformative capacity is their multilingual capabilities. When trained on text from diverse linguistic backgrounds, LLMs demonstrate the prowess to process and generate multilingual content, a feature that is a great challenge for traditional systems.

In the case of global businesses, this multilingual capability has metamorphosed their operational dynamics. Prior to the advent of LLMs with multilingual capacities, companies seeking to operate in different linguistic territories had to invest heavily in translators, local content creators, and region-specific marketing teams. The challenges were not solely monetary; the time-consuming nature of these translations and the potential loss of context or cultural nuance were considerable operational hurdles. With LLMs, these concerns are substantially alleviated. Now, businesses can generate content, answer queries, and address concerns in multiple languages with decreased lead times and increased accuracy. This not only enhances their global reach but also fosters an environment of inclusivity, where consumers and stakeholders from different linguistic backgrounds feel catered to and acknowledged.

This multilingual proficiency of LLMs has broader implications beyond business. In the realm of academia, researchers can now access and analyze content from multiple languages without the need for translation, thereby ensuring original context and meaning are preserved. This is particularly significant in fields like cultural studies, linguistics, and history, where the nuances of language play an essential role.

Furthermore, the integration of multilingual LLMs into applications like chatbots or customer service platforms has the potential to create a unified global digital interface. One can imagine a scenario in which a single chatbot can cater to queries from different parts of the world without any linguistic barrier. Such a scenario not only elevates the user experience but also signifies a step toward a truly globalized digital ecosystem.

Conversely, while the benefits are manifold, it is crucial to approach this capability with a degree of caution. Training LLMs on multilingual datasets requires a comprehensive understanding of the cultural, contextual, and colloquial nuances of each language. The potential for mistranslation or misinterpretation remains, which could lead to misunderstandings or even unintended consequences in certain scenarios. It emphasizes the importance of continuous refinement, feedback, and updates to these models to ensure that their multilingual capabilities are both accurate and culturally sensitive.

LLMs have undeniably etched a transformative mark on the technological landscape. As analyzed in this article, their foundation lies in their advanced processing capabilities, advanced algorithms, and vast datasets. Nevertheless, one of the most salient characteristics of LLMs, as observed in their practical application, is their remarkable flexibility across diverse domains. This facet of adaptability emerges as a defining factor in their widespread integration into various industries.

At the core of an LLM’s ability to function effectively across domains is its generalized training. These models, originally trained on large and diverse datasets, possess a broad understanding of language and concepts. It is this foundation that grants them the capacity to be fine-tuned to cater to specific industrial or academic needs. While some critics may argue that such generalized training might make the model lack specialized skills and therefore irrelevant, in reality, this broad foundation allows LLMs to be highly adaptable, making them relevant and invaluable across myriad sectors.

Industries, be it finance, healthcare, entertainment, or law, each have their unique lexicon, idioms, and conceptual intricacies. The versatility of LLMs lies in their ability to be trained further on domain-specific data, allowing them to comprehend and generate content that resonates with the particularities of each sector. For instance, in finance, an LLM fine-tuned with sectoral data can understand intricate terminologies and market dynamics, offering insights or analytics that are contextually relevant. Similarly, in the realm of law, an LLM can be trained to understand legal terminologies and case law references, aiding in tasks ranging from legal research to contract analysis.

This adaptability of LLMs is not merely about fitting into existing systems but also about fostering innovation within them. By catering to niche requirements, LLMs enable businesses and researchers to push boundaries and explore new avenues. In healthcare, for instance, LLMs could aid in parsing through vast amounts of medical literature, assisting doctors in diagnosis or treatment suggestions. In entertainment, they might be used to generate creative content or scripts, collaborating with human creators in unique ways.

Moreover, the flexibility of LLMs presents an exciting potential for cross-industry collaborations. A model trained in both healthcare and law might aid in navigating the intricate labyrinth of healthcare regulations. Similarly, the intersection of finance and technology could see LLMs playing important roles in fintech innovations.

The rise of LLMs and their transformative impact on diverse sectors, as highlighted in the preceding sections, brings with it a multitude of challenges and considerations, not least of which are the computational demands associated with their training. The findings of this review reveal some critical insights into the complexities and implications of these computational necessities, which warrant an in-depth discussion.

Central to the operation and optimization of LLMs is the undeniable requirement for vast computational resources. The training processes for these models often necessitate the deployment of clusters comprising high-performance “Graphics Processing Units” (GPUs) or “Tensor Processing Units” (TPUs). Such hardware-intensive processes underscore the immense computational prowess that undergirds the functioning of LLMs. Nonetheless, the implications are multifaceted. On one hand, the need for such powerful computational infrastructures means that the barrier to entry in the realm of LLM research and application is significantly high. The financial overhead associated with procuring, maintaining, and running these powerful clusters can be a significant deterrent for many organizations, especially for smaller entities or those from resource-limited settings. This potentially leads to the centralization of capabilities and expertise in well-funded organizations or institutions, therefore raising concerns about equity and accessibility in the LLM landscape.

Beyond the financial implications, there is a growing awareness and concern about the environmental footprint of LLM operations. The extensive energy consumption associated with training these models, particularly in vast data centers, has raised alarms about their carbon footprint. In an age increasingly defined by concerns over climate change and environmental degradation, the sustainability of LLMs has become a pressing issue. Extensive energy requirements not only magnify operational costs but also position LLMs at an intersection where technological advancement could potentially be at odds with environmental stewardship. This necessitates a reevaluation of practices, as well as innovations in energy-efficient training methods or the incorporation of renewable energy sources in data centers.

Given these insights, it becomes imperative for the scientific community to address these challenges proactively. Potential pathways include the development of more efficient training algorithms that reduce computational demands, collaborative efforts that pool resources to democratize access, and a conscious push toward sustainable practices in LLM research and deployment. Additionally, a deeper engagement with interdisciplinary experts, especially from the environmental science and sustainable energy sectors, could pave the way for solutions that reconcile the dual imperatives of technological advancement and environmental responsibility.

In the ever-evolving landscape of LLMs, their remarkable capabilities, as analyzed within the conducted review, are closely intertwined with some pressing challenges, notably the matters of data sensitivity and privacy. The benefits of LLMs, ranging from advanced NLP applications to groundbreaking contributions in creative arts, business intelligence, and healthcare, are fundamentally grounded in their training on expansive datasets. Conversely, this very strength has given rise to pressing concerns regarding the potential for these models to inadvertently leak sensitive data.

LLMs’ ability to generalize from vast datasets, which often include user-generated content, poses a significant risk. There is an underlying possibility, albeit minimal, that these models might memorize specific patterns or even direct inputs from the training data. Given the diverse nature of their training data, which may span from public web pages to academic articles, the inadvertent reproduction of sensitive or personally identifiable information is a tangible concern. Such occurrences, although rare, could have far-reaching implications, including potential breaches of confidentiality agreements, exposure of proprietary information, or even the unauthorized disclosure of personal data.

While the field has made strides in addressing these concerns, notably through techniques such as differential privacy, these solutions are not infallible. Differential privacy, which adds a degree of randomness to data or outputs to obfuscate individual data points, has shown promise in curbing the likelihood of data leakage. Nevertheless, ensuring absolute anonymity, especially in a domain characterized by the enormity and diversity of data as with LLMs, remains an elusive goal. Additionally, introducing differential privacy can sometimes come at the cost of model performance, creating a tradeoff between usefulness and privacy.

Recognizing the profound societal and technological impact of LLMs, it becomes paramount for researchers, developers, and policymakers to place increased emphasis on fortifying data privacy measures. Beyond merely refining existing techniques like differential privacy, there is a pressing need to innovate novel methodologies that can ensure data privacy without compromising the efficiency and efficacy of LLMs.

Collaborative efforts between academia and industry can lead the way in setting standardized protocols for training data curation, ensuring that sensitive information is systematically identified and excluded. Moreover, developing mechanisms for regular audits of model outputs against known sensitive data patterns can act as a safeguard against unintentional disclosures.

The emergence and proliferation of LLMs in various technical and nontechnical sectors have undeniably created environments of increased automation, efficiency, and innovation. While the transformative power of these models has brought forth numerous advantages, it has simultaneously highlighted pressing concerns regarding their societal and economic implications, specifically in the domain of job displacement.

The rapid integration of LLMs into different industries implies a significant shift in the nature of many tasks previously undertaken by humans. For instance, the deployment of advanced chatbots that offer near-human conversational experiences may decrease the demand for customer service representatives in certain sectors. Similarly, automated content generation tools could challenge the roles of writers, journalists, and content creators. Such changes inevitably raise pertinent questions about the potential for job losses and their subsequent impact on the workforce.

The economic ramifications of LLMs cannot be viewed in isolation. A broader sequence of events emerges when one considers the trajectory of technological advancements over history. Historically, every major technological upheaval, from the Industrial Revolution to the rise of computerization, has been accompanied by fears of widespread job displacement. While it is true that certain jobs become obsolete with technological progress, new roles, industries, and opportunities often emerge in tandem. Then again, the transition is not always seamless, and the displacement of one job does not always equate to the immediate creation of another.

The issue at hand is multifaceted. There is an immediate economic impact, in which sectors heavily reliant on tasks that LLMs can automate might witness a sharp decline in job opportunities. Even more significantly, there is also the long-term concern of a mismatch between the skills that the future job market demands and the skills that the current workforce possesses. Consequently, the importance of upskilling and workforce retraining becomes paramount.

Without parallel initiatives aimed at upskilling or retraining, widespread LLM adoption could exacerbate socioeconomic inequalities. The vulnerable sections of the workforce that are easily automatable are at heightened risk. Addressing these aspects requires concerted efforts from policymakers, industry leaders, and educational institutions to ensure that the workforce is prepared for the evolving job landscape. Programs that focus on equipping individuals with skills that are complementary to what LLMs offer are significant. Moreover, a multipronged approach that not only emphasizes technical skills but also soft skills like critical thinking, creativity, and emotional intelligence, which are less susceptible to automation, will be essential.

The extensive deployment and growing influence of LLMs in a plethora of technical sectors have ushered in a contemporary era of AI and NLP. While the potential benefits of LLMs are substantial, it is also critical to acknowledge and address the intricacies and challenges they introduce into the human–technology interface.

A salient concern that surfaces from the evolving LLM landscape is the propensity for overreliance on these models. The advanced capabilities of LLMs, such as their ability to generate coherent and often insightful outputs, may lead users to an unchecked acceptance of the information that LLMs produce. This raises the critical issue of trust calibration. It is of utmost importance that users of LLMs understand the inherent limitations and potential biases present in these models. Misplaced trust or an overestimation of an LLM’s accuracy can lead to misinformed decisions, especially in essential in sectors such as healthcare, legal analytics, and business intelligence.

As demonstrated in this review, LLMs have numerous applications and have the potential to bring significant solutions to numerous domains, such as the medical field (one of the most encountered research area applications). Consequently, serious problems that have affected during the COVID-19 pandemic and are still plaguing the medical field, such as the need for psychotherapeutic support 24/7 for medical personnel suffering from severe burnouts [

59], or supporting the medical decisional process in order to avoid mistakes that are more likely to occur in telemedicine environments [

60], may someday be alleviated by LLMs.

While LLMs often produce outputs that mimic human-like expertise, it is important to remember that they are inherently a product of their training data and algorithms. They lack human intuition, ethical reasoning, and the vastness of experiential knowledge. As such, there exists an imperative need to ensure that users are educated about the model’s nature, thereby calibrating their trust and reliance on its outputs appropriately.

Beyond individual users, institutional challenges must also be overcome. Organizations that deploy LLMs in their operations need to establish guidelines and protocols to ensure that the model’s outputs are cross-verified, especially when consequential decisions are at stake. Moreover, feedback loops should be incorporated to rectify any inaccuracies or biases, thereby refining the model over time.

Addressing these challenges is not just a technological endeavor but also an ethical and societal endeavor. Technological innovation is only one facet of the solution. Ethical considerations come into play when determining the boundaries of LLM usage, especially in areas in which misinformation or biases could have severe consequences. Meanwhile, societal collaboration is extremely important for creating a collective awareness of LLMs’ capabilities and limitations, fostering an environment in which technology complements human expertise rather than unquestionably overriding it.

The next section presents the conclusions of this study and its importance to the scientific community, specialists, and users.

5. Conclusions

The transformative potential of LLMs, particularly in the domain of NLU, has been substantially attained. Their ability to provide enhanced sentiment analysis, question-answering, and content summarization is proof of their efficiency and efficacy. As the technical community stands on this precipice of advancement, it is imperative to harness the potential of LLMs judiciously, ensuring that their contributions align with societal betterment and technological enlightenment.

The scalability in information processing offered by LLMs is reshaping the landscape of data analysis, extraction, and content creation. While the benefits are manifold, it is imperative for the scientific and professional communities to engage in continuous dialogue, ensuring that as we harness the potential of LLMs, we do so responsibly, ethically, and innovatively. The trajectory of LLMs, as analyzed in the preceding sections, underscores their transformative potential. As we anticipate future developments, a holistic understanding and a forward-looking perspective will be paramount in guiding this technological behemoth toward societal and technological advancement.

While the potential of LLM-driven personalization is vast, it is also important to address associated challenges. Personalization, if not wielded judiciously, can lead to the creation of “echo chambers”, in which users are only exposed to content that aligns with their existing beliefs or preferences. Furthermore, ethical considerations regarding user data privacy and the extent to which personalization algorithms should influence user decisions need to be rigorously examined.

The multilingual capabilities of LLMs have ushered in a new era of global communication and operation, breaking down linguistic barriers and creating a more interconnected world. The burden is on developers, researchers, businesses, and policymakers to harness this potential responsibly, ensuring that as we embrace a multilingual digital future, we do so with precision, empathy, and understanding.

The adaptability of LLMs across domains underscores their revolutionary potential. Their ability to seamlessly integrate, adapt, and innovate within varied industries is illustrative of their transformative power. The literature review of the most impactful scientific works offers insights into their growth, especially into their flexibility, which truly highlights their potential future trajectory. As industries continue to evolve and intersect, the role of LLMs, with their unparalleled adaptability, will undoubtedly be at the forefront of technological and societal advancement.

Although the evolution of LLMs paints a promising picture of technological progress and myriad applications, it is accompanied by pressing challenges. Addressing the computational and environmental demands of LLMs is not only a technical necessity but also a moral and ecological imperative. The journey forward will require a harmonization of innovation with responsibility, ensuring that the LLM landscape evolves in a manner that is both pioneering and sustainable.

The remarkable ascent of LLMs in the technical domain, highlighted in the preceding sections, underscores their transformative potential. As we stand on the cusp of further advancements and wider LLM adoption, responsibly addressing the intertwined challenges of data sensitivity and privacy will be decisive. Through collaborative research, innovation, and vigilance, the goal is to harness the unparalleled capabilities of LLMs, ensuring that they serve as a boon for societal and technological progress while safeguarding individual and collective data rights.

Although LLMs represent an exciting frontier in the realm of technology, their widespread adoption must be approached with a nuanced understanding of their broader socioeconomic implications. Embracing the benefits of LLMs should not come at the expense of the workforce. A balanced approach that acknowledges the transformative potential of LLMs while actively addressing the challenges they pose will be key to harnessing their power for the collective advancement of society.

Even though the evolutionary trajectory of LLMs as charted in this review underscores their transformative potential, it is imperative that the scientific and global community at large remain vigilant. The very essence of our reliance on technology, and more specifically on LLMs, must be anchored in informed and judicious trust. Only by navigating these multifaceted challenges with a holistic approach that encompasses technological, ethical, and societal dimensions can we truly harness the full potential of LLMs for the advancement of society and technology.

Despite the numerous opportunities and technological advancements, there are considerable limitations inherent in LLMs, primarily revolving around their dependency on vast datasets and computational resources that raise considerable concerns. Considering the previous work related to LLMs and the foregoing literature review and discussions, it is evident that while LLMs represent a significant leap forward in artificial intelligence, their current limitations necessitate a cautious and discerning application.

A paramount concern is the phenomenon of “hallucinations”, in which LLMs generate plausible but factually incorrect or nonsensical information. This not only questions the reliability of these models but also raises serious concerns in applications in which accuracy is critical, such as in informational and educational contexts. Furthermore, the potential for misuse of LLMs in generating misleading information or “deepfakes” poses a profound societal risk. The ease with which persuasive, but factually incorrect or manipulative content can be generated necessitates robust safeguards and ethical guidelines to prevent harm.

Another important limitation is the fact that these models are constrained by the biases inherent in their training data. Despite advancements in algorithmic neutrality, LLMs continue to reflect and sometimes amplify societal prejudices, underscoring the need for more rigorous and inclusive data curation.

Another salient limitation lies in the interpretability of LLMs. As these models grow in complexity, their decision-making processes become increasingly opaque, posing challenges not only for validation and trust but also for compliance with emerging regulations that mandate explainability in AI systems. This “black box” nature hinders the capacity for human oversight and raises ethical concerns, especially in high-stakes domains such as healthcare and law.

Furthermore, the environmental impact of LLMs cannot be overlooked. The immense computational resources required for training and operating these models translate into significant carbon footprints, which is contrary to global efforts aimed at sustainability. Although strides are being made toward more energy-efficient algorithms, the scale of improvement required is substantial.

Just as LLMs offer transformative potential, their current limitations highlight an essential need for continued research and responsible stewardship. Addressing these challenges will not only enhance the efficacy and reliability of LLMs but also ensure their alignment with societal values and ethical norms. As we are part of this disruptive technological evolution, it is imperative that we manage these limitations with a balanced approach, harmonizing technological advancement with human-centric principles.

Future research should therefore focus on developing more efficient, unbiased, and environmentally sustainable LLMs. This includes exploring novel training methodologies, implementing rigorous ethical guidelines, and embracing energy-efficient technologies. Future research should aim to enhance the contextual understanding of LLMs, thereby bridging the gap between technological complexity and human-centric applications. This involves deepening the models’ grasp of cultural nuances and ethical considerations, ensuring that their outputs are not only accurate but also culturally sensitive and morally sound. Moreover, the societal implications of LLMs, particularly in terms of job displacement and data privacy, require a balanced and multidisciplinary approach. Future research should collaborate across fields such as economics, sociology, and law to develop frameworks that mitigate the risks of automation while harnessing its benefits. This includes creating upskilling programs, advocating for fair data usage policies, and ensuring equitable access to these technologies.

The future of LLMs should be guided by a commitment to responsible innovation, in which technological advancement coexists with ethical integrity, environmental sustainability, and societal well-being. Embracing this complex approach from multiple angles will not only maximize the potential of LLMs but also ensure their evolution aligns with the overarching goal of bringing about a more inclusive, equitable, and enlightened digital future.

In addressing the intricacies of LLMs, this study has endeavored to provide a comprehensive analysis within its stated parameters. Nevertheless, it is important to acknowledge a notable limitation in our research scope. The current investigation did not extend to an examination of patents related to LLMs. This omission is not an oversight but rather a deliberate scope delineation, considering the complex and extensive nature of patent data. Consequently, the insights derived from patent analyses, which can offer valuable perspectives on technological advancements and intellectual property trends in LLMs, remain unexplored in this study. This gap in our research underscores a significant avenue for future work, in which a detailed exploration of patents could expose additional dimensions of LLM development and deployment, thereby enriching our understanding of this rapidly evolving field.

In conclusion, while LLMs offer transformative advantages that can revolutionize various sectors, it is fundamental to navigate their challenges and disadvantages with caution and foresight. The balanced harnessing of their potential, while addressing their pitfalls, will determine their trajectory in reshaping the technological landscape for the betterment of humankind.