Abstract

Malware is a significant threat in the field of cyber security. There is a wide variety of malware, which can be programmed to threaten computer security by exploiting vulnerabilities in networks, operating systems, software and physical security. Detecting malware has therefore become a significant part of maintaining network security. In this paper, data enhancement techniques are used in the data preprocessing stage, and a novel detection model, CPL-Net, which operates on malware texture images, is proposed. The model consists of a feature extraction component, a feature fusion component and a classification component, the core of which is the parallel fusion of spatio-temporal features extracted by Convolutional Neural Networks (CNN) and Long Short-Term Memory networks (LSTM). Experiments show that CPL-Net achieves an accuracy of 98.7% and an F1 score of 98.6% for malware detection. The model uses a novel feature fusion approach and achieves comprehensive and precise malware detection.

1. Introduction

In 1971, the first computer worm, Creeper, appeared on the TENEX operating system on the DEC PDP-10. In response, the Reaper program, the earliest malware detection technology, was created to locate and destroy Creeper. Elk Cloner, created in 1982 by 15-year-old high school student Rich Skrenta and spread via floppy disks, targeted the Apple II operating system and is considered to be the first computer virus to spread in the wild. Brain, considered to be the first PC virus, was created by the Pakistani brothers Basit and Amjad Farooq Alvi to attack the IBM PC platform.

To date, the number of computer viruses has increased sharply, by over 40,000. As a result, computer systems and stakeholders are seriously threatened in daily life by the increase in malware activity. For stakeholders, and end-users in particular, one of the most urgent security issues is mitigating virus attacks on data. Because firewalls only monitor incoming and outgoing connections, scientists all over the world design security tools and antivirus packages to detect and remove viruses. Malware evasion techniques, in turn, are constantly updated in step with antivirus methods.

Malware detection technology has been developed for a long time. According to the execution status of malware in the analysis process, malware detection technology can be divided into static and dynamic detection.

Static detection is the use of analysis tools to analyze the features and functional modules of malware without executing binary programs. Malware feature strings and feature code segments can be found by this detection method, and functional modules and flowcharts of each functional module can be obtained. Although the cost of static detection is relatively low, there is the problem of detection lag.

Dynamic detection is the use of program debugging tools to observe and track the work process of malware when it is executed. Although dynamic detection can detect malware in time, it also increases the detection cost because it requires the support of a virtual environment.

In response to the limitations of traditional detection methods, machine learning approaches for malware detection have been proposed. Machine learning algorithms can automatically analyze data patterns and then predict and analyze unknown data based on these patterns. They have been applied in computer security domains such as malware and intrusion detection. However, machine learning methods have not achieved optimal results in training and still depend on feature analysis techniques. Therefore, malware detection techniques based on deep learning have received attention. Because deep learning performs well in image processing, researchers have proposed detection methods based on malware texture images.

After a long period of development from machine learning to deep learning, researchers have continued to look for better solutions to this problem. Machine learning shows unique advantages in classification, but it cannot keep up with demand when it comes to larger amounts of data and more computation. The advent of deep learning has made up for these shortcomings. This article mainly classifies malware texture images, which can overcome the effect of obfuscation techniques on classification []. Because deep learning is highly capable at processing images, deep learning algorithms are used to process the data. In the experiments, the malware texture image was used as the study object to eliminate the detection techniques’ dependence on code and APIs (application programming interfaces). As a result, malware detection techniques become easier to understand, and malware categorization methods can achieve better results.

This study found that feature fusion helps the classifier improve classification accuracy []. This paper proposes a deep learning model that fuses temporal and spatial features for malware classification. The parallel feature fusion method enables the model to capture both spatial and temporal features of malware texture images, which not only improves the accuracy of the model but also enhances its robustness. The method fills the gap in parallel feature fusion for malware. The main contributions of this paper are the following:

- We propose a new deep learning model to detect malware.

- This is the first time that the spatio-temporal features of malware grayscale images have been used for malware detection.

- We show through ablation experiments that feature fusion improves the accuracy of malware classification.

2. Related Work

Currently, detection methods for malware are mainly divided into dynamic detection and static detection. Both forms of detection have their own advantages and disadvantages. Dynamic detection needs to build a virtual environment to parse the application’s APK (Android application package) []. This approach adds to the cost of researching malware, but dynamic detection can find malware faster than static detection. Static detection uses specific tools to check whether executable files are malicious through a detailed search of the Portable Executable (PE) files that come along with the executable files []. Although static detection can achieve malware detection at a lower cost, it cannot prevent the destructive behavior of malware on the computer, and when malware uses hiding techniques to conceal its aggressive code, static detection can no longer detect it.

With the development of machine learning, researchers gradually applied it to malware detection. However, machine learning still depends on manual feature extraction, which makes it challenging to develop for malware detection. Researchers therefore turned to deep learning, which extracts features automatically. In 2011, Nataraj [] succeeded in converting malware into grayscale texture images, and deep learning detection methods based on malware texture images were subsequently proposed.

Malware texture images are obtained by transforming the binary representation of malware into a hexadecimal representation and then converting the hexadecimal values into corresponding pixel values. A texture image of malware contains the attack features of that malware. Even if malware hides its attacking code through shell code and obfuscation techniques, the texture image of malware can still serve as a basis for detecting it. Research has shown that using malware texture images as research objects can effectively detect new types of malware and reduce detection difficulties []. Researchers used ANNs (Artificial Neural Networks) to classify malware texture images in 2021 []. In the field of machine learning, the highest accuracy rate of SVM classifiers using feature fusion technology can reach 93.24% []. Feature fusion methods are therefore an effective means of improving model accuracy. Sreeparna proposed using InceptionV3 and an SVM model for feature extraction and prediction on malware RGB images []. Irshad [] used a genetic algorithm for malware feature selection and classified the samples using a single classifier to select the best performing features for malware detection. On the basis of machine learning, some articles proposed an automated Android malware detection method using an optimal ensemble learning approach for cyber security technology []. Deepa combines deep learning and machine learning approaches by first using the VGG16 model and a DNN for feature extraction and then classifying the extracted features using a machine learning approach []. Although machine learning performs exceptionally well in binary malware classification [], research shows that deep learning algorithms classify malware more accurately than traditional machine learning methods [].
There is also a lot of research on enhancing CNN networks to improve classification accuracy; Awan uses attention enhancement via CNNs to solve the malware recognition problem []. Bagane proposed a method for detecting IoT (Internet of Things) malware by cascading CNN and BiLSTM networks. Existing CNN frameworks [], such as VGG-16 and ResNet-50, perform well in the classification of malware grayscale images [,]. Vasan proposed IMCFN based on the CNN network. Only fine-tuned layers, including FC2, FC1, and Block5, are used to lower the computational cost and speed up classification, which fulfills the demand of most practical applications []. Lee [] used multiple autoencoder models for identifying malware images. Some researchers focus on the study of deep learning models themselves. Yuxin [] proposes a new model based on deep learning, whose accuracy is significantly improved by training on enhanced samples. In addition to CNN, researchers use BiTCN for malware detection. Their method mainly uses n-gram sequence features of malware opcodes, operands and application programming interfaces (APIs) []. However, this method relies too much on understanding malware programming languages and programming logic, which places high demands on researchers. Currently, in the field of feature fusion, there is a model based on PV-DM (Paragraph Vector-Distributed Memory) []. Empirical evidence shows that feature fusion can effectively solve the problem of overfitting []. Paardekooper used a genetic algorithm (GA) to optimize the CNN topology and hyperparameters for image-based malware classification []. Reddy created a highly scalable framework known as MDC-Net to detect, classify and organize malware [].

3. Methodology

This part mainly introduces the dataset of this article, the methods used in this experiment and the experimental environment.

3.1. Dataset

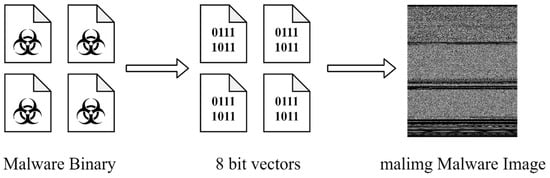

In this paper, the malimg dataset was selected after surveying publicly available datasets on the web. This dataset contains most malware classes. Although the malimg dataset is imbalanced, this can be addressed with the data enhancement described in Section 3.2. The dataset contains 9339 grayscale images of malware from 25 classes. To generate it, a given malware binary is read as a vector of 8-bit unsigned integers and then organized into a 2D array []. This can be visualized as a grayscale image in the range [0,255] (0: black, 255: white) []. The generation process of the grayscale images is depicted in Figure 1 and the details of the dataset are shown in Table 1.
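The byte-to-image principle described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the procedure used to build malimg: the function name `bytes_to_grayscale`, the fixed `width` parameter and the synthetic input bytes are all assumptions made for the example.

```python
import numpy as np

def bytes_to_grayscale(data: bytes, width: int = 64) -> np.ndarray:
    """Read raw bytes as 8-bit unsigned integers and reshape them into a 2D array."""
    pixels = np.frombuffer(data, dtype=np.uint8)
    height = len(pixels) // width            # drop the incomplete final row, if any
    if height == 0:
        raise ValueError("input is shorter than one image row")
    return pixels[: height * width].reshape(height, width)

# Synthetic bytes standing in for a malware binary (hypothetical input).
sample = bytes(range(256)) * 4               # 1024 bytes
image = bytes_to_grayscale(sample, width=32)
print(image.shape, image.dtype, image.min(), image.max())
```

Each array element is already a valid pixel intensity in [0,255], so the result can be saved or displayed as a grayscale image without further scaling.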

Figure 1.

The Principle of Generating Grayscale Images of Malware.

Table 1.

Families in the malimg dataset.

3.2. Data Enhancement

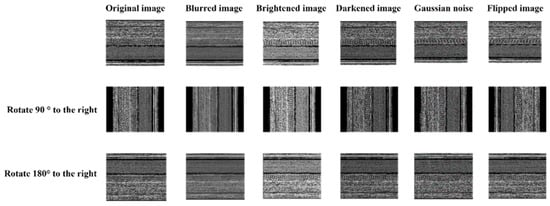

If the experiment had used the imbalanced dataset directly, issues such as overlooking certain malware features could have arisen, leading to lower F1 scores and accuracy rates or, in severe cases, training failure. Moreover, the relatively small number of image samples fails to satisfy the training requirements of deep learning networks. In this experiment, each image was expanded to 17 images by using techniques such as rotating, flipping, brightening, darkening and adding Gaussian noise. This addresses both the data imbalance and the small sample size. The deep learning network is trained using the enhanced dataset, which is divided into a training set and a test set at a ratio of 6:4.
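The individual operations named above can be sketched as follows. The text does not specify which combinations produce the 17 images, so this example only shows one variant per operation; the rotation angles, the brightness factors 1.2 and 0.8, and the noise standard deviation of 10 are hypothetical choices, not values taken from the experiment.

```python
import numpy as np

def augment(image: np.ndarray, rng: np.random.Generator) -> list[np.ndarray]:
    """Return simple augmented variants of a uint8 grayscale image."""
    img = image.astype(np.float32)
    variants = [
        np.rot90(img, k=1),                       # rotate by 90 degrees
        np.rot90(img, k=2),                       # rotate by 180 degrees
        np.fliplr(img),                           # horizontal flip
        np.flipud(img),                           # vertical flip
        img * 1.2,                                # brighten (hypothetical factor)
        img * 0.8,                                # darken (hypothetical factor)
        img + rng.normal(0.0, 10.0, img.shape),   # Gaussian noise (hypothetical sigma)
    ]
    # Clip back to the valid pixel range before converting to uint8.
    return [np.clip(v, 0, 255).astype(np.uint8) for v in variants]

rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)  # stand-in image
augmented = augment(original, rng)
print(len(augmented), augmented[0].shape)
```

Clipping before the cast matters: without it, brightened or noisy pixels above 255 would wrap around when converted to 8-bit integers.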

3.3. Feature Fusion

Currently, feature fusion is a critical method for improving model performance. According to when fusion takes place, it can be classified into early, intermediate and late fusion. Early fusion refers to fusion in the input layer, which involves fusing features first and then training the predictor on the fused features. Intermediate fusion first converts features from different data sources into intermediate high-dimensional feature representations, then performs the fusion, and finally trains the predictor []. Late fusion refers to fusion in the prediction layer, first making predictions on different features and then fusing the results of these predictions. According to the model structure, fusion can be divided into serial fusion and parallel fusion. Serial fusion refers to the entire model having only one branch, while parallel fusion refers to the model having multiple branches, each dealing with different features.

Amenova showed that the CNN-LSTM network classifier can improve the accuracy of malware classification []. Therefore, this paper proposes a method that combines early feature fusion and spatio-temporal feature fusion. First, the experiment employs the CNN to extract the spatial features from the grayscale images of malware. Subsequently, the LSTM network is used to extract the temporal features from the same grayscale images. Finally, the spatial and temporal features are merged based on their corresponding file names, and the spatio-temporal fused features serve as the basis for malware texture image classification, thus effectively improving the robustness of the network.
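Merging the two feature sets by file name can be sketched as a keyed concatenation. This is an illustrative sketch only: the function `fuse_by_name`, the file names and the random stand-in features are hypothetical, and the vector lengths (9216 spatial, 25 temporal) are taken from the feature sizes reported in Section 4.

```python
import numpy as np

def fuse_by_name(spatial: dict[str, np.ndarray],
                 temporal: dict[str, np.ndarray]) -> dict[str, np.ndarray]:
    """Concatenate the two feature vectors that share the same file name."""
    shared = sorted(spatial.keys() & temporal.keys())
    return {name: np.concatenate([spatial[name], temporal[name]]) for name in shared}

# Hypothetical file names with random stand-in features.
rng = np.random.default_rng(0)
names = ["sample_a.png", "sample_b.png"]
cnn_features = {n: rng.random(9216, dtype=np.float32) for n in names}
lstm_features = {n: rng.random(25, dtype=np.float32) for n in names}

fused = fuse_by_name(cnn_features, lstm_features)
print(fused["sample_a.png"].shape)
```

Keying on the file name ensures that the spatial and temporal vectors of the same sample are joined even if the two branches process the images in different orders. Keeping both branches in the same floating-point type avoids silent type promotion when the vectors are concatenated.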

3.4. Experimental Equipment

The hardware used in this paper is as follows: an Intel(R) Xeon(R) Silver 4110 CPU @ 2.10 GHz; 512 GB of memory; and an NVIDIA Tesla P100-PCIE-16GB GPU (NVIDIA Corporation, Santa Clara, CA, USA).

4. Experiments

In this section, we first used a data enhancement method to enlarge the dataset and solve the problem of insufficient training samples in the malware dataset, which causes overfitting. Operations such as rotation, flipping, brightening, darkening and adding Gaussian noise are applied to each original image. By combining these operations, a single original image is expanded into seventeen images, thereby supporting deep learning training. Expanding the entire dataset in this way addresses both the data imbalance and the insufficient sample size. Some of the results of the data enhancement method are shown in Figure 2.

Figure 2.

Data augmentation results.

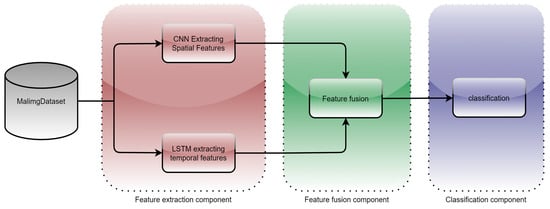

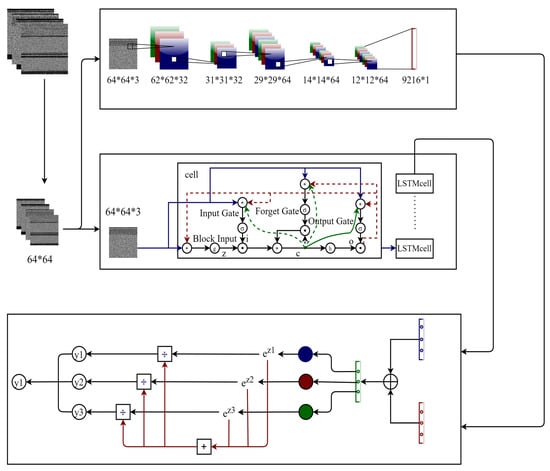

CPL-Net has three components: a feature extraction component, a feature fusion component, and a classification component. The overall flowchart of CPL-Net is shown in Figure 3.

Figure 3.

CPL-Net flowchart.

CPL-Net consists of a CNN-LSTM network and a parallel feature fusion approach. Because the grayscale images of malware vary in size, their sizes must be normalized. In this study, all grayscale images are first resized to 64 × 64 and then fed separately into the CNN and LSTM networks for feature extraction []. The CNN takes each 64 × 64 grayscale image as three (RGB) channels, resulting in an input size of 64 × 64 × 3 []. The first convolution transforms the image into 62 × 62 × 32. The convolution layer is the most crucial part of the CNN framework, primarily responsible for discovering higher-dimensional features within the input image []. Convolutional kernels are an essential tool for extracting image features, so their size directly affects the experimental results. In this experiment, the size of the convolutional kernel is 3 × 3 and the stride is 1. The convolution is followed by a pooling operation that halves the size of the texture image, resulting in 31 × 31 × 32. The pooling layer mainly decreases the dimensionality of the features, thereby reducing the computational load required for data processing []. A second convolution turns the feature map into 29 × 29 × 64, and a second pooling operation reduces it to 14 × 14 × 64. Finally, a third convolution produces a 12 × 12 × 64 feature map []. This feature map is then flattened into a single dimension, resulting in a vector of size 9216 × 1. This spatial feature is passed on to the feature fusion component.
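The sequence of feature map sizes quoted above can be checked with a short calculation. The sketch assumes unpadded convolutions and 2 × 2 pooling with stride 2; neither is stated explicitly in the text, but both are consistent with the reported sizes. The helper names `conv_out` and `pool_out` are introduced only for this example.

```python
def conv_out(size: int, kernel: int = 3, stride: int = 1) -> int:
    """Output width/height of an unpadded convolution."""
    return (size - kernel) // stride + 1

def pool_out(size: int, window: int = 2) -> int:
    """Output width/height of non-overlapping pooling (floor division)."""
    return size // window

# (layer type, number of output channels) in the order described in the text.
layers = [("conv", 32), ("pool", 32), ("conv", 64), ("pool", 64), ("conv", 64)]

size, shapes = 64, []
for kind, channels in layers:
    size = conv_out(size) if kind == "conv" else pool_out(size)
    shapes.append((size, size, channels))

height, width, channels = shapes[-1]
flattened = height * width * channels
print(shapes)
print(flattened)
```

Under these assumptions the calculation gives 62, 31, 29, 14 and 12 for the spatial size after each layer, and 12 × 12 × 64 = 9216 for the flattened vector, matching the values in the text.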

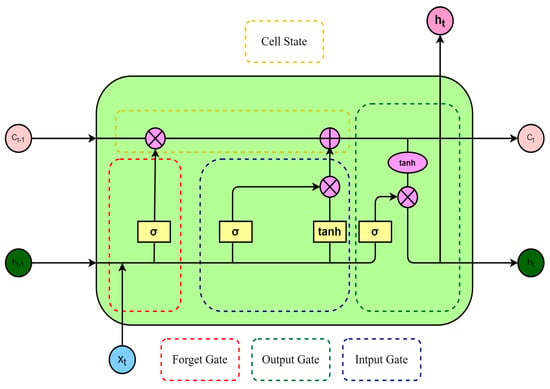

Similarly, the LSTM network extracts temporal features from grayscale images of size 64 × 64. The LSTM network model was proposed by Hochreiter and Schmidhuber []. Compared to the traditional RNN (recurrent neural network), the LSTM model introduces three gating units: the forget gate, the input gate and the output gate. In digital circuits, a gate is a binary variable (0,1), where ‘0’ represents the closed state, not allowing any information to pass through, and ‘1’ represents the open state, allowing all data to pass []. In the LSTM network, a gate is essentially a fully connected layer that takes a vector as input and outputs a real-valued vector with entries ranging from ‘0’ to ‘1’, which determines the proportion of information permitted to pass through. Memory cells, as the core of LSTM, are responsible for storing and transmitting information. Memory cells are composed of a linear unit and a nonlinear unit. The linear unit is a simple adder used to add the memory cell from the previous time step and the input from the current time step. The nonlinear unit is a sigmoid function used to control the flow of information. The following are the main functions of the three gates of LSTM:

The forget gate (f): It determines how much information from the memory cell state at the previous timestep should be forgotten and how much should be retained in the current memory cell state. Equation (1) shows the calculation of the forget gate.

The input gate (i): It controls how much information from the current input information candidate state needs to be saved to the current memory cell []. Equation (2) shows a vector within the range of (0,1), activated by the Sigmoid function. Equation (3) illustrates the calculation process for updating the cell state, using the tanh function to activate the updated cell values. Equation (4) shows the cell state at this moment, and this function adds the calculation results of the forget gate and the input gate to determine the current memory cell, which is then used for the next cell.

The output gate (o): It controls how much information from the current memory cell needs to be outputted to the next memory cell. Equation (5) displays the output state of the output gate, and Equation (6) uses the tanh activation function, scaling the new memory cell obtained from Equation (4).

As part of the feature extraction component in this experiment, Equations (1)–(6) mainly explain the working principles of forget gates, input gates, and output gates in the LSTM model. These three gating mechanisms are essential parts of the regular operation of the LSTM model.
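For reference, the standard LSTM formulation can be written in the order in which the gates are described above. The notation here is an assumption and may differ from the symbols used in the original equations: $x_t$ is the input at time step $t$, $h_{t-1}$ the previous hidden state, $C_{t-1}$ the previous memory cell, $W$ and $b$ the weights and biases of each gate, $\sigma$ the sigmoid function and $\odot$ element-wise multiplication.

```latex
\begin{align}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \tag{1}\\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \tag{2}\\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) \tag{3}\\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{4}\\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \tag{5}\\
h_t &= o_t \odot \tanh\left(C_t\right) \tag{6}
\end{align}
```

In this form, Equation (4) is the additive update that combines the forget-gate and input-gate results, and Equation (6) scales the new memory cell by the output gate.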

LSTM is a special type of recurrent neural network that can capture long-term dependencies in data. In this experiment, images of size 64 × 64 are fed into the LSTM model, which is configured with 64 cells. We then add a fully connected layer that maps the output to 25 features.
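A forward pass through such a branch can be sketched in NumPy. Several points are assumptions made for illustration: each image row is treated as one time step (the text does not state how the image is sequenced), the weights are random rather than trained, and the function name `lstm_branch` and the gate ordering inside the stacked weight matrix are arbitrary choices.

```python
import numpy as np

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def lstm_branch(image, W, b, W_fc, b_fc):
    """Run one LSTM layer over the rows of `image`, then a fully connected layer."""
    units = b.shape[0] // 4
    h = np.zeros(units)                          # hidden state
    c = np.zeros(units)                          # memory cell
    for x_t in image:                            # assumption: one row per time step
        z = W @ np.concatenate([h, x_t]) + b     # pre-activations of all four blocks
        f, i, o = (sigmoid(z[k * units:(k + 1) * units]) for k in range(3))
        g = np.tanh(z[3 * units:])               # candidate cell state
        c = f * c + i * g                        # forget old content, add new content
        h = o * np.tanh(c)                       # gated output
    return W_fc @ h + b_fc                       # map the final state to 25 features

rng = np.random.default_rng(0)
units, width, n_features = 64, 64, 25
W = rng.normal(0.0, 0.1, (4 * units, units + width))   # random, untrained weights
b = np.zeros(4 * units)
W_fc = rng.normal(0.0, 0.1, (n_features, units))
b_fc = np.zeros(n_features)

image = rng.random((64, 64))                     # stand-in for a normalized image
features = lstm_branch(image, W, b, W_fc, b_fc)
print(features.shape)
```

The output is a 25-element vector, which corresponds to the temporal feature that is later combined with the 9216-element spatial feature.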

The specific situation between different gating mechanisms and the memory cell of LSTM is shown in Figure 4.

Figure 4.

LSTM architecture diagram.

When feature extraction is completed, the corresponding spatial and temporal features are combined based on the image name. This is the main work of our feature fusion component. After feature fusion, the fused features are handed over to the classification component. The classification component uses the Softmax function, which compresses the values into the (0,1) interval, thereby achieving classification. The Softmax regression function (multinomial or multiclass logistic regression) is an extension of logistic regression to multiclass problems, in which the category label of an output sample can take k values. The calculation method for Softmax regression function prediction is shown in Formula (7).

where $k$ denotes the number of categories in the grayscale images of malware, $v$ represents the output vector, $v_j$ refers to the $j$th output in $v$, and $i$ signifies the category being calculated.
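In its standard form, the Softmax function maps the $i$th output to $e^{v_i} / \sum_{j=1}^{k} e^{v_j}$. A minimal sketch of this calculation follows; subtracting the maximum before exponentiating is a common numerical-stability step added here, not something described in the text, and the example scores are made up.

```python
import numpy as np

def softmax(v: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = v - np.max(v, axis=-1, keepdims=True)   # does not change the result
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

scores = np.array([2.0, 1.0, 0.1])    # hypothetical outputs for k = 3 categories
probs = softmax(scores)
print(probs, probs.sum())
```

Every output lies in the (0,1) interval and the outputs sum to 1, so the category with the largest value can be read off as the prediction.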

In CPL-Net, the CNN model and the LSTM model serve as the feature extraction components. From malware texture images, we extract spatial features through the CNN model and temporal features through the LSTM model. The spatial and temporal features are then combined by file name, and the fused features are given to the classification component with Softmax as the activation function, so CPL-Net outputs a 25-dimensional probability distribution.

The principle of parallel feature fusion in this study is illustrated in Figure 5.

Figure 5.

Parallel CNN-LSTM feature fusion.

In this work, accuracy is used as an evaluation criterion for the model. Accuracy intuitively reflects the rate of correct predictions and is calculated as shown in Formula (8). However, because of data imbalance, accuracy does not reflect classification performance across all classes. Therefore, the F1 value, which reflects classification performance across all classes, is used to evaluate the comprehensive performance of the model. Formula (9) gives the F1 value. For multiclass problems, the macro-average F1 value (the average of the individual F1 scores obtained for each class) is a commonly used evaluation standard and is used in this experiment, as shown in Formula (10). The confusion matrix of prediction results is shown in Table 2.

where Q represents the number of classes in the dataset.
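The metrics can be computed from a confusion matrix using their standard definitions: accuracy is the share of correct predictions, the per-class F1 value is the harmonic mean of precision and recall, and the macro-average F1 is the mean of the per-class F1 values over the Q classes. The sketch below assumes rows are true classes and columns are predicted classes; the function name and the 2 × 2 example matrix are made up for illustration.

```python
import numpy as np

def accuracy_and_macro_f1(cm: np.ndarray) -> tuple[float, float]:
    """cm[i, j] counts samples of true class i that were predicted as class j."""
    cm = cm.astype(float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)                  # TP + FP for each class
    actual = cm.sum(axis=1)                     # TP + FN for each class
    # Classes with no predictions or no samples get a score of 0.
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return float(tp.sum() / cm.sum()), float(f1.mean())

cm = np.array([[8, 2],
               [1, 9]])                         # hypothetical two-class example
acc, macro_f1 = accuracy_and_macro_f1(cm)
print(round(acc, 4), round(macro_f1, 4))
```

Because the macro average weights every class equally, a poorly classified small family lowers the score as much as a poorly classified large one, which is why it complements accuracy on an imbalanced dataset.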

Table 2.

The confusion matrix of prediction results.

This article designs ablation experiments for the deep learning feature fusion method. An ablation experiment determines the cause of an outcome or phenomenon by removing certain factors. We compare the accuracy and F1 score of the CNN, the LSTM network and CPL-Net under identical conditions, so that the improvement contributed by CPL-Net can be assessed directly.

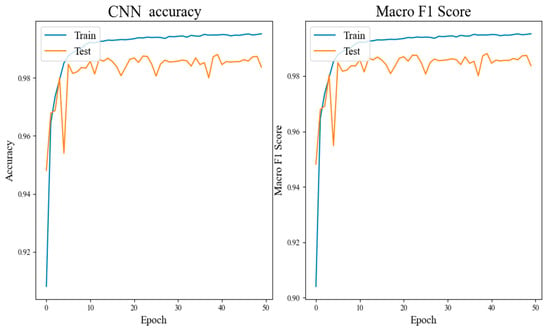

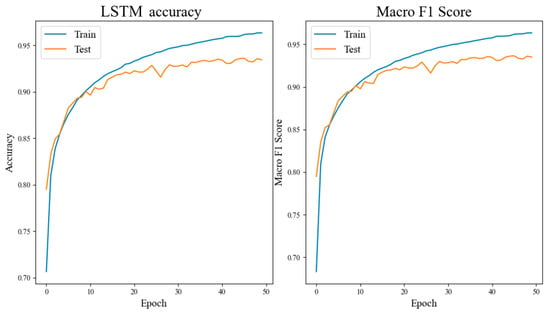

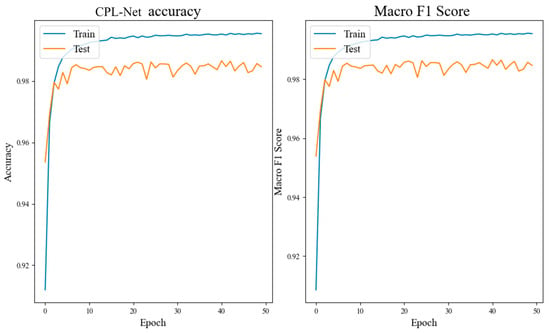

Figures 6–8 and Table 3 show that the CNN achieves a classification accuracy of 98.3% for grayscale images of malicious code. The LSTM, which is better suited to tasks such as sentiment analysis of text, reaches an accuracy of only 92.7% when no preprocessing is applied to the dataset. The accuracy of CPL-Net reaches 98.7%. Although CPL-Net improves on the CNN by only 0.4% in accuracy and 0.2% in F1 value, the curves show that CPL-Net is more stable than the CNN. The CNN showed a significant decrease in accuracy and F1 value during the fifth training epoch, while CPL-Net remained within a relatively stable range throughout training. From the ablation experiments, we conclude that CPL-Net improves the accuracy of detecting malware texture images and offers better stability, although its improvement over the CNN is not particularly significant. Future experiments will refine the model’s feature fusion and classification components to further improve the accuracy and stability of CPL-Net.

Figure 6.

Experimental results of CNN.

Figure 7.

Experimental results of LSTM.

Figure 8.

Experimental results of CPL-Net.

Table 3.

Accuracy rate of ablation experiment.

Table 4 compares the accuracy and F1 score of the model presented in this article with those of models proposed by others in recent years on the malimg dataset. Deep learning methods generally perform better than machine learning methods. The MDC-Net studied by Reddy in 2023 achieved the highest accuracy, but it used only a CNN for feature extraction. This again supports the feasibility of deep learning methods and indicates that feature fusion methods can improve the model’s performance. The results show that the accuracy of our model is higher than that of other models, which demonstrates the effectiveness of our work. At the same time, this article proposes and implements a feature fusion method between spatial and temporal features for malware detection, which is more robust than previous models, makes the features extracted by the model more diverse and helps the model identify malware from various aspects. In this experiment, the feature fusion method uses simple combination, which may cause certain features to be ignored by the model. In the future, feature fusion methods more suitable for malware texture image classification will be studied to ensure that each feature can play a role.

Table 4.

Comparison of different models.

5. Conclusions and Future Work

This paper focuses on the classification of malware using deep learning feature fusion, which is extremely promising for malware texture image classification. Feature fusion is therefore used to classify malware texture images. Ablation experiments demonstrated that deep learning feature fusion has higher accuracy and robustness than single deep learning models. The biggest challenge in this experiment was a data type error encountered during feature stitching, which was resolved after a long period of exploration and debugging. In the future, we will train a generative adversarial network to further enhance the features of the dataset. CPL-Net uses Softmax classification, and the accuracy of the model is limited by this simple classification method. Therefore, we will introduce the self-attention mechanism for classification. We will also explore the possibility of multimodal feature fusion for malware detection, using the multimodal feature fusion proposed [].

Author Contributions

Methodology, X.R.; Software, X.R.; Validation, X.R.; Formal analysis, J.Z.; Investigation, J.L.; Data curation, T.W.; Writing—original draft, X.R.; Writing—review & editing, J.L.; Supervision, J.L.; Project administration, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was sponsored by Gansu University of Political Science and Law’s research and innovation team. The funding number is 2017C-16.

Data Availability Statement

The code for this article is publicly available on GitHub at https://github.com/immortal001/CPL-Net.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kumar, N.; Meenpal, T. Texture-Based Malware Family Classification. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019.

- Singh, J.; Thakur, D.; Gera, T.; Shah, B.; Abuhmed, T.; Ali, F. Classification and Analysis of Android Malware Images Using Feature Fusion Technique. IEEE Access 2021, 9, 90102–90117.

- Jing, P.; An, N.; Yue, S. Dynamic detection method for Android terminal malware based on Native layer. In Proceedings of the 2023 4th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 7–9 April 2023.

- Tyagi, S.; Baghela, A.; Dar, K.M.; Patel, A.; Kothari, S.; Bhosale, S. Malware Detection in PE files using Machine Learning. In Proceedings of the 2022 OPJU International Technology Conference on Emerging Technologies for Sustainable Development (OTCON), Raigarh, India, 8–10 February 2023.

- Nataraj, L.; Karthikeyan, S.; Jacob, G.; Manjunath, B.S. Malware Images: Visualization and Automatic Classification. In Proceedings of the 8th International Symposium on Visualization for Cyber Security 2011, Pittsburgh, PA, USA, 20 July 2011.

- Patil, V.; Shetty, S.; Tawte, A.; Wathare, S. Deep Learning and Binary Representational Image Approach for Malware Detection. In Proceedings of the 2023 International Conference on Power, Instrumentation, Control and Computing (PICC), Thrissur, India, 19–21 April 2023.

- Faruk, M.J.H.; Shahriar, H.; Valero, M.; Barsha, F.L.; Sobhan, S.; Khan, M.A.; Whitman, M.; Cuzzocrea, A.; Lo, D.; Rahman, A.; et al. Malware Detection and Prevention using Artificial Intelligence Techniques. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021.

- Sreejay, O.P.S.; Joseph, S. Architectural Design of Malware Infected File Detection Using Deep Learning. In Proceedings of the 2023 International Conference on Power, Instrumentation, Control and Computing (PICC), Thrissur, India, 19–21 April 2023.

- Irshad, A.; Maurya, R.; Dutta, M.K.; Burget, R.; Uher, V. Feature Optimization for Run Time Analysis of Malware in Windows Operating System using Machine Learning Approach. In Proceedings of the 2019 42nd International Conference on Telecommunications and Signal Processing (TSP), Budapest, Hungary, 1–3 July 2019.

- Alamro, H.; Mtouaa, W.; Aljameel, S.; Salama, A.S.; Hamza, M.A.; Othman, A.Y. Automated Android Malware Detection Using Optimal Ensemble Learning Approach for Cybersecurity. IEEE Access 2023, 11, 72509–72517.

- Deepa, K.; Adithyakumar, K.S.; Vinod, P. Malware Image Classification using VGG16. In Proceedings of the 2022 International Conference on Computing, Communication, Security and Intelligent Systems (IC3SIS), Kochi, India, 23–25 June 2022.

- Sharma, N.; Sangal, A.L. Machine Learning Approaches for Analysing Static features in Android Malware Detection. In Proceedings of the 2023 Third International Conference on Secure Cyber Computing and Communication (ICSCCC), Jalandhar, India, 26–28 May 2023.

- Sundharakumar, K.B.; Bhalaji, N.; Prithvikiran. Malware Classification using Deep Learning Methods. In Proceedings of the 2023 3rd International Conference on Smart Data Intelligence (ICSMDI), Trichy, India, 30–31 March 2023.

- Awan, M.J.; Masood, O.A.; Mohammed, M.A.; Yasin, A.; Zain, A.M.; Damaševičius, R.; Abdulkareem, K.H. Image-based malware classification using VGG19 network and spatial convolutional attention. Electronics 2021, 10, 2444.

- Bagane, P.; Joseph, S.G.; Singh, A.; Shrivastava, A.; Prabha, B.; Shrivastava, A. Classification of Malware using Deep Learning Techniques. In Proceedings of the 2021 9th International Conference on Cyber and IT Service Management (CITSM), Bengkulu, Indonesia, 22–23 September 2021.

- Alam, M.; Akram, A.; Saeed, T.; Arshad, S. DeepMalware: A Deep Learning based Malware Images Classification. In Proceedings of the 2021 International Conference on Cyber Warfare and Security (ICCWS), Islamabad, Pakistan, 23–25 November 2021.

- Aslan, Ö.; Yilmaz, A.A. A New Malware Classification Framework Based on Deep Learning Algorithms. IEEE Access 2021, 9, 87936–87951.

- Vasan, D.; Alazab, M.; Wassan, S.; Naeem, H.; Safaei, B.; Zheng, Q. IMCFN: Image-based Malware Classification using Fine-tuned Convolutional Neural Network Architecture. Comput. Netw. 2020, 171, 107138.

- Lee, J.; Lee, J. A classification system for visualized malware based on multiple autoencoder models. IEEE Access 2021, 9, 144786–144795.

- Yuxin, D.; Guangbin, W.; Yubin, M.; Haoxuan, D. Data Augmentation in Training Deep Learning Models for Malware Family Classification. In Proceedings of the 2021 International Conference on Machine Learning and Cybernetics (ICMLC), Adelaide, Australia, 4–5 December 2021.

- Xuan, B.; Li, J.; Song, Y. BiTCN malware classification method based on multi-feature fusion. In Proceedings of the 2022 International Conference on Image Processing, Computer Vision and Machine Learning (ICICML), Xi’an, China, 28–30 October 2022.

- Gui, H.; Zhang, C.; Huang, Y.; Liu, F. A PV-DM-based feature fusion method for binary malware clustering. In Proceedings of the 2022 4th International Conference on Communications, Information System and Computer Engineering (CISCE), Shenzhen, China, 27–29 May 2022.

- Li, S.; Li, Y.; Wu, X.; Otaibi, S.A.; Tian, Z. Imbalanced Malware Family Classification Using Multimodal Fusion and Weight Self-Learning. IEEE Trans. Intell. Transp. Syst. 2023, 24, 7642–7652.

- Paardekooper, C.; Noman, N.; Chiong, R.; Varadharajan, V. Designing Deep Convolutional Neural Networks using a Genetic Algorithm for Image-based Malware Classification. In Proceedings of the 2022 IEEE Congress on Evolutionary Computation (CEC), Padua, Italy, 18–23 July 2022.

- Reddy, V.S.K.; Nagaraju, I.; Gayatri, M.; Chandrika, R.R.; Dileep, P.; Revathy, P. MDC-Net:Intelligent Malware Detection and Classification using Extreme Learning Machine. In Proceedings of the 2023 Third International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 2–4 February 2023. [Google Scholar]

- Han, X.; Mou, J.; Lu, J.; Banerjee, S. Two Discrete Memristive Chaotic Maps and Its DSP Impletementation. Fractals 2023, 31, 2340104. [Google Scholar] [CrossRef]

- Amenova, S.; Turan, C.; Zharkynbek, D. Android Malware Classification by CNN-LSTM. In Proceedings of the 2022 International Conference on Smart Information Systems and Technologies (SIST), Nur-Sultan, Kazakhstan, 28–30 April 2022. [Google Scholar]

- Gao, X.; Mou, J.; Li, B.; Banerjee, S.; Sun, B. Multi-image hybrid encryption algorithm based on pixel substitution and gene theory. Fractals 2023, 31, 2340111. [Google Scholar] [CrossRef]

- Gao, X.; Sun, B.; Cao, Y.; Banerjee, S.; Mou, J. A color image encryption algorithm based on hyperchaotic map and DNA mutation. Chin. Phys. B 2023, 32, 030501. [Google Scholar] [CrossRef]

- Elalem, M.; Jabir, T. Malware Analysis in Cyber Security based on Deep Learning; Recognition and Classification. In Proceedings of the 2023 IEEE 3rd International Maghreb Meeting of the Conference on Sciences and Techniques of Automatic Control and Computer Engineering (MI-STA), Benghazi, Libya, 21–23 May 2023. [Google Scholar]

- Ma, Y.; Mou, J.; Lu, J.; Banerjee, S.; Cao, Y. A Discrete Memristor Coupled Two-Dimensional Generalized Square Hyperchaotic Maps. Fractals 2023, 31, 2340136. [Google Scholar] [CrossRef]

- Sha, Y.; Mou, J.; Wang, J.; Banerjee, S.; Sun, B. Chaotic Image Encryption with Hopfield Neural Network. Fractals 2023, 31, 2340107. [Google Scholar] [CrossRef]

- Guo, M.; Zhu, Y.; Liu, R.; Zhao, K.; Dou, G. An associative memory circuit based on physical memristors. Neurocomputing 2022, 472, 12–23. [Google Scholar] [CrossRef]

- Guo, M.; Zhao, K.; Sun, J.; Wen, S.; Dou, G. Implementing Bionic Associate Memory Based on Spiking Signal. Inf. Sci. 2023, 649, 119613. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).