Intelligent Risk Prediction System in IoT-Based Supply Chain Management in Logistics Sector

Abstract

1. Introduction

1.1. Research Motivation

1.2. Problem Statement

1.3. Research Questions

1.4. Research Contributions

- (i) We present a multi-model hybrid DL technique based on CNN and BiGRU for forecasting delivery timing and status as indicators of supply chain (SC) risk.

- (ii) We select, from several more complex DL configurations, the one that shows the best performance during training.

- (iii) We compare the performance of the proposed model with that of other advanced DL networks.

2. Related Work

2.1. Machine Learning Techniques for Intelligent Supply Chain Risk Prediction Systems

2.2. Deep Learning Techniques for Intelligent Supply Chain Risk Prediction Systems

2.3. Miscellaneous Techniques for Intelligent Supply Chain Risk Prediction Systems

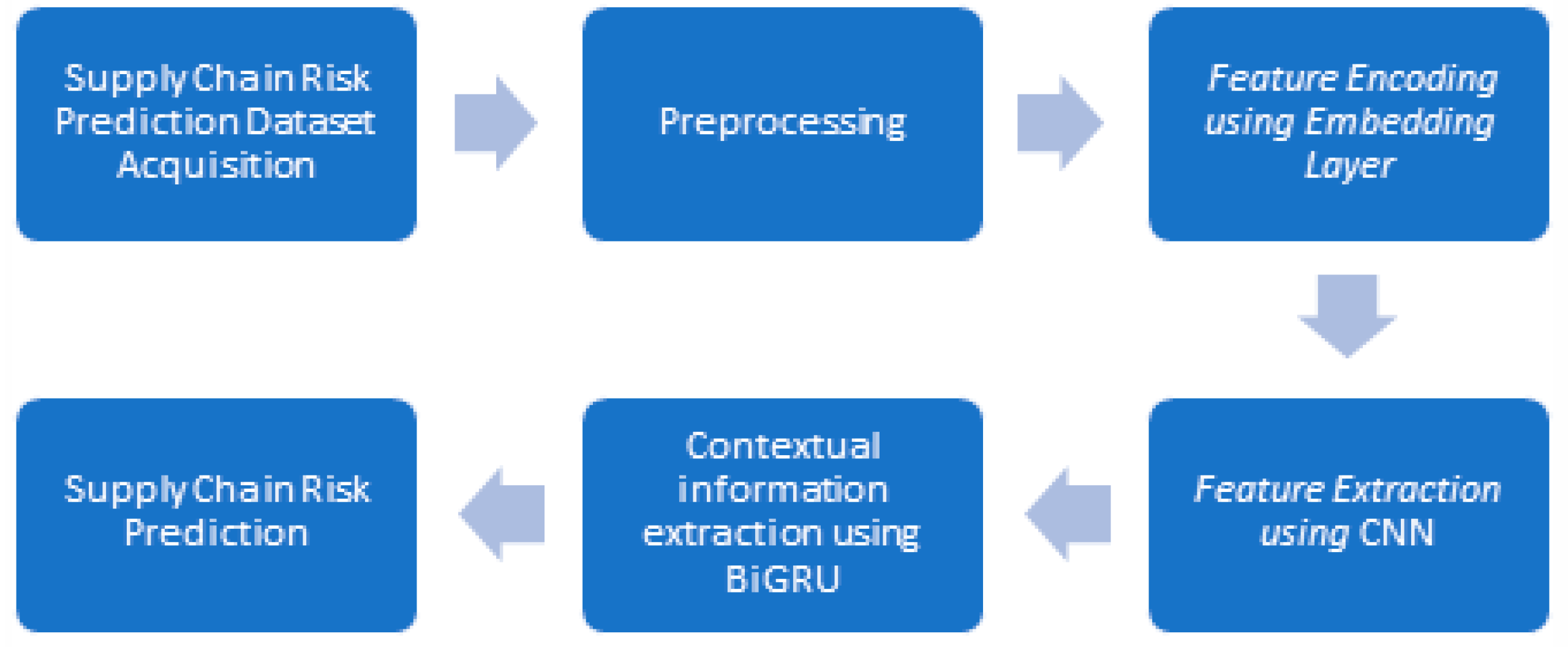

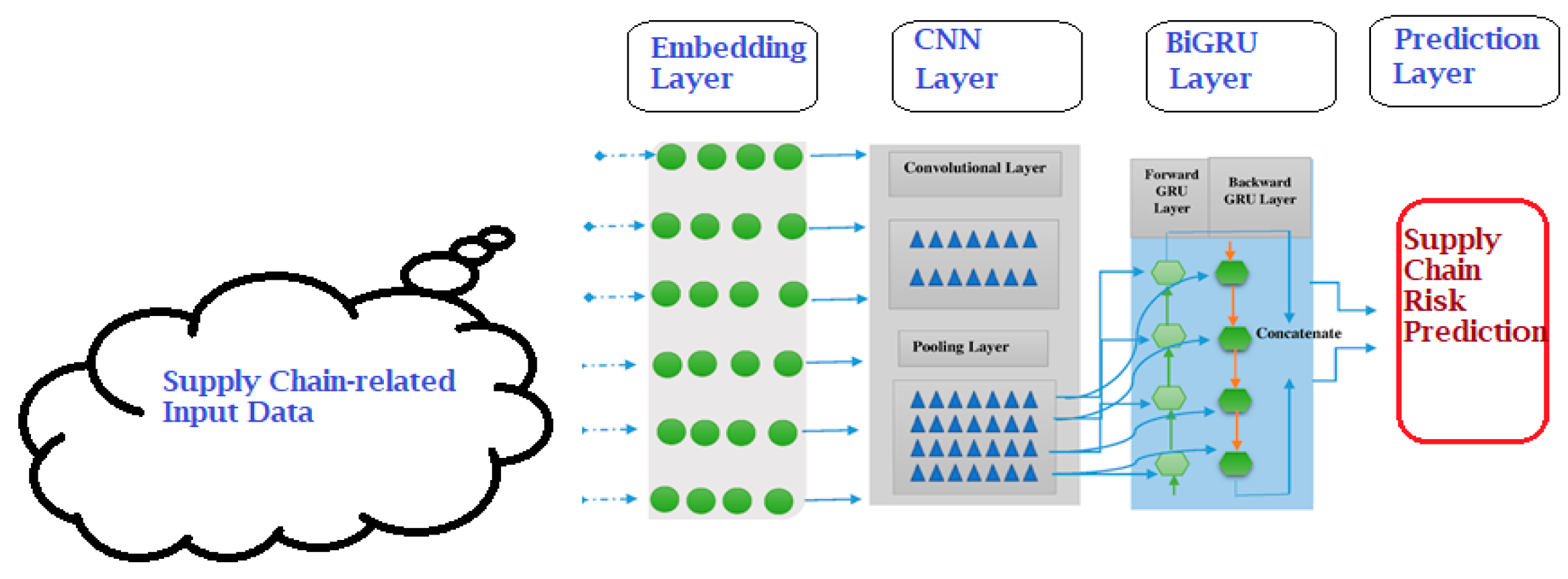

3. Proposed Methodology

3.1. Supply Chain Risk Prediction Dataset Acquisition

3.2. Pre-Processing

3.3. Feature Encoding Using an Embedding Layer

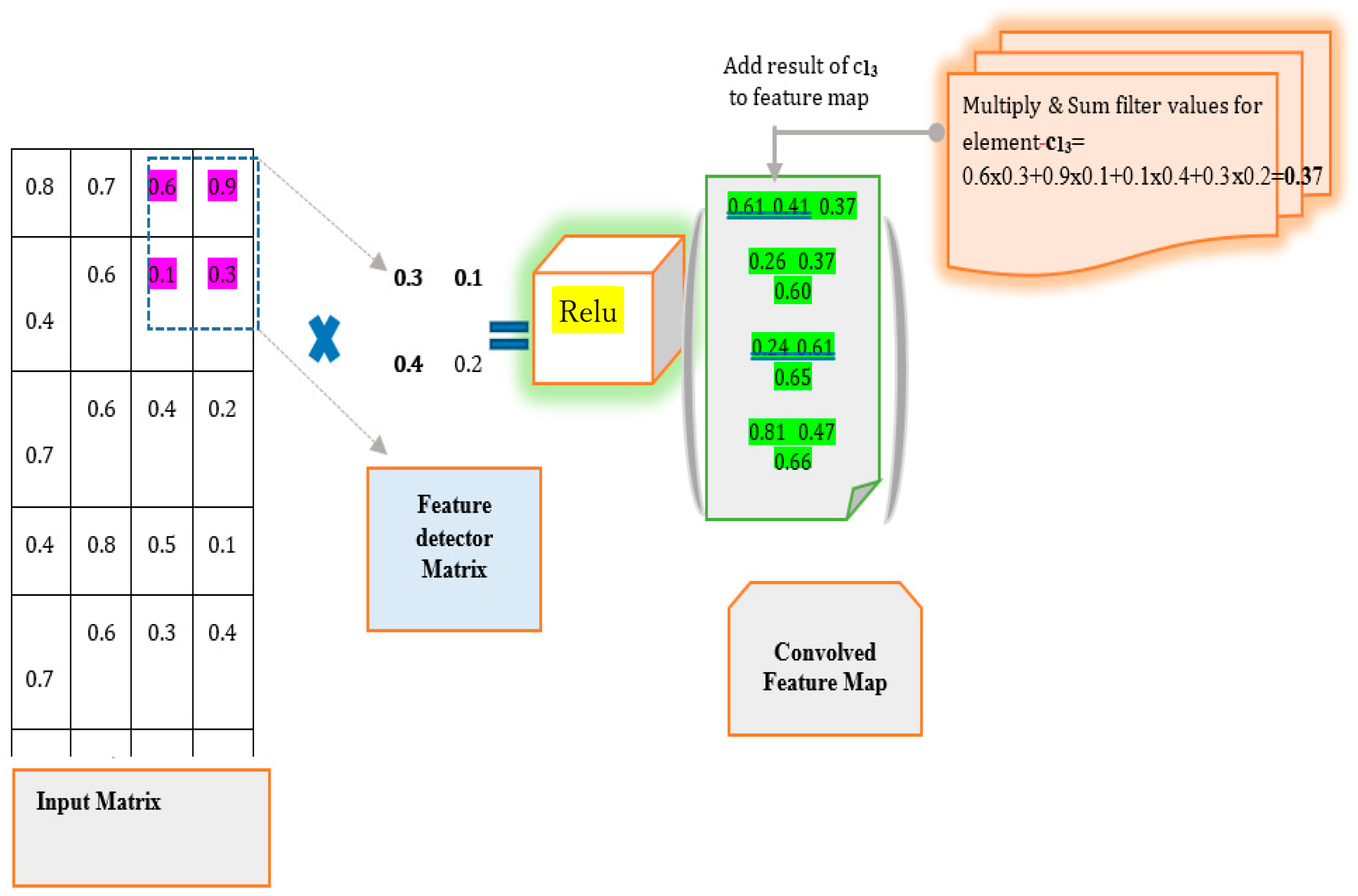
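An embedding layer is a trainable lookup table: row i of its weight matrix is the dense vector for integer token i, so encoding a record is plain row indexing. The NumPy sketch below illustrates that lookup under stated assumptions: tokens are already integer-encoded, the sizes are tiny illustrative values, and the matrix is randomly initialized rather than trained. In the configuration reported later in this paper the matrix would be max_features × embed_dim = 2000 × 128.

```python
import numpy as np

def embed(token_ids: np.ndarray, embedding_matrix: np.ndarray) -> np.ndarray:
    """Map a (batch, seq_len) array of integer ids to (batch, seq_len, embed_dim) vectors.

    Row i of embedding_matrix is the vector for token id i, so the forward
    pass of an embedding layer is just fancy indexing into that matrix.
    """
    return embedding_matrix[token_ids]

rng = np.random.default_rng(0)
vocab_size, embed_dim = 10, 4            # illustrative sizes, not the paper's
E = rng.normal(size=(vocab_size, embed_dim))
ids = np.array([[3, 7, 0], [1, 1, 2]])   # two hypothetical encoded records
vectors = embed(ids, E)                  # shape (2, 3, 4)
```

Because identical ids always select the same row, equal tokens receive identical vectors before training adjusts them.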

3.4. Feature Extraction Using a CNN Layer

3.5. Pooling Layer
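Sections 3.4 and 3.5 apply a one-dimensional convolution followed by max pooling. The NumPy sketch below mirrors the settings listed for the model (kernel size 3, padding = same, ReLU activation, pool size 2) on a single embedded sequence. It is written for readability rather than speed, and the weights are random placeholders rather than trained values.

```python
import numpy as np

def conv1d_same_relu(x, kernels, bias):
    """x: (seq_len, in_ch); kernels: (k, in_ch, filters); bias: (filters,).

    'same' padding zero-pads the sequence so the output keeps seq_len steps;
    each output step is the kernel's dot product with a k-step window.
    """
    k = kernels.shape[0]
    pad_left = (k - 1) // 2
    pad_right = k - 1 - pad_left
    xp = np.pad(x, ((pad_left, pad_right), (0, 0)))
    out = np.stack([
        np.tensordot(xp[t:t + k], kernels, axes=([0, 1], [0, 1])) + bias
        for t in range(x.shape[0])
    ])
    return np.maximum(out, 0.0)  # ReLU yields the rectified feature map

def max_pool1d(x, pool_size=2):
    """Non-overlapping max pooling over time (stride = pool_size, remainder dropped)."""
    n = x.shape[0] // pool_size
    return x[: n * pool_size].reshape(n, pool_size, x.shape[1]).max(axis=1)

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 5))          # 8 time steps, 5 embedding dimensions (illustrative)
W = rng.normal(size=(3, 5, 6))       # kernel size 3, 6 filters
b = np.zeros(6)
feature_map = conv1d_same_relu(x, W, b)  # (8, 6)
pooled = max_pool1d(feature_map)         # (4, 6)
```

Pooling halves the sequence length while keeping the strongest response of each filter, which shortens the input the BiGRU layer has to process.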

3.6. Contextual Information Extraction Using BiGRU

- Reset gate: The reset gate manages the network's short-term memory. It determines how much of the previous hidden state is combined with the new input when the candidate state is computed. It is calculated with Equation (8).

- Update gate: Similarly, the update gate manages long-term memory by regulating how much of the previous hidden state is carried forward into the new output. It is calculated with Equation (9).

- How it works: A GRU computes the hidden state h_t in two steps. First, it generates a candidate hidden state from the current input and the reset-gated previous hidden state. Second, it uses the update gate to interpolate between the previous hidden state and this candidate.
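The gate descriptions above can be made concrete with a NumPy sketch of one GRU step and a bidirectional wrapper. This assumes the commonly used GRU formulation (reset gate applied before the recurrent candidate weights, and h_t = z ⊙ h_{t-1} + (1 − z) ⊙ h̃_t, the convention Keras also uses); the paper's Equations (8) and (9) are assumed, not confirmed, to match it. The parameter names (W_r, U_r, and so on) are illustrative.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, p):
    """One GRU step in the classic formulation (reset gate applied before U_h)."""
    r = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])            # reset gate
    z = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])            # update gate
    h_cand = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r * h_prev) + p["b_h"])  # step 1: candidate
    return z * h_prev + (1.0 - z) * h_cand                                 # step 2: interpolate

def init_params(in_dim, units, rng):
    """Small random weights and zero biases for the three gate blocks."""
    p = {}
    for g in ("r", "z", "h"):
        p[f"W_{g}"] = rng.normal(scale=0.1, size=(units, in_dim))
        p[f"U_{g}"] = rng.normal(scale=0.1, size=(units, units))
        p[f"b_{g}"] = np.zeros(units)
    return p

def run_gru(xs, p, units):
    """Process a (seq_len, in_dim) sequence and return the final hidden state."""
    h = np.zeros(units)
    for x_t in xs:
        h = gru_step(x_t, h, p)
    return h

def bigru(xs, p_fwd, p_bwd, units):
    """Concatenate the final states of a forward pass and a pass over the reversed sequence."""
    return np.concatenate([run_gru(xs, p_fwd, units), run_gru(xs[::-1], p_bwd, units)])

rng = np.random.default_rng(0)
units = 5
xs = rng.normal(size=(4, 6))  # 4 pooled time steps with 6 features (illustrative)
out = bigru(xs, init_params(6, units, rng), init_params(6, units, rng), units)  # shape (10,)
```

Reading the sequence in both directions is what lets the BiGRU use context from both earlier and later positions.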

3.7. Prediction Layer
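Algorithm 1 ends in a dense layer with two sigmoid units whose output is reported as a positive or negative shipment outcome. A minimal NumPy sketch of that final step follows. The label order and the argmax decision rule are assumptions made for illustration, and the weights are hand-picked toy values.

```python
import numpy as np

def predict_outcome(features, W, b, labels=("negative", "positive")):
    """Dense layer with 2 sigmoid units; each unit scores one outcome independently.

    The reported class is the unit with the higher score (an assumed decision rule).
    """
    scores = 1.0 / (1.0 + np.exp(-(features @ W + b)))
    return scores, [labels[i] for i in scores.argmax(axis=1)]

features = np.array([[1.0, 0.0], [0.0, 1.0]])   # toy BiGRU outputs
W = np.array([[2.0, -2.0], [-2.0, 2.0]])        # toy weights
b = np.zeros(2)
scores, predicted = predict_outcome(features, W, b)
```

With two independent sigmoid units the scores need not sum to one; a single sigmoid unit or a two-unit softmax are the more common choices for a binary outcome.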

3.8. Applied Example

3.8.1. Embedding Layer

3.8.2. CNN Layer

3.8.3. BiGRU Layer

3.8.4. Output Layer

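Algorithm 1: Predicting supply chain risks with a CNN + BiGRU model

```python
# Steps I-IV of Algorithm 1 in runnable Keras form. MAX_LEN, test_size, epochs,
# batch_size, and the record-to-text serialization are assumed values.
import pandas as pd
import tensorflow as tf
from sklearn.model_selection import train_test_split
from tensorflow.keras import layers

MAX_FEATURES, EMBED_DIM, MAX_LEN = 2000, 128, 32

def cnn_bigru_supply_chain_risk(xlsx_path, label_col):
    # Step I: import the dataset from an .xlsx file (label_col holds 0/1 outcomes).
    df = pd.read_excel(xlsx_path)
    texts = df.drop(columns=[label_col]).astype(str).agg(" ".join, axis=1).to_numpy()
    labels = tf.keras.utils.to_categorical(df[label_col], num_classes=2)
    # Step II: split into train and test sets with scikit-learn.
    x_train, x_test, y_train, y_test = train_test_split(texts, labels, test_size=0.2)
    # Step III: build the dictionary that associates each dataset token with an integer.
    vectorizer = layers.TextVectorization(max_tokens=MAX_FEATURES,
                                          output_sequence_length=MAX_LEN)
    vectorizer.adapt(x_train)
    x_train, x_test = vectorizer(x_train), vectorizer(x_test)
    # Step IV: layered model [E, C, M, B, S], in the order of Sections 3.3-3.7.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(MAX_LEN,), dtype="int64"),
        layers.Embedding(MAX_FEATURES, EMBED_DIM),  # integers -> low-dimensional vectors
        layers.Conv1D(filters=6, kernel_size=3, padding="same", activation="relu"),
        layers.MaxPooling1D(pool_size=2),
        layers.Bidirectional(layers.GRU(100)),
        layers.Dense(2, activation="sigmoid"),      # scores for the two shipment outcomes
    ])
    # The model must be compiled before it is fitted on the training data.
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    model.fit(x_train, y_train, epochs=5, batch_size=64, validation_split=0.1)
    # Assess the model on the test set; report each shipment as a positive or negative outcome.
    _, test_accuracy = model.evaluate(x_test, y_test)
    predicted_risk = model.predict(x_test).argmax(axis=1)
    return predicted_risk, test_accuracy
```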

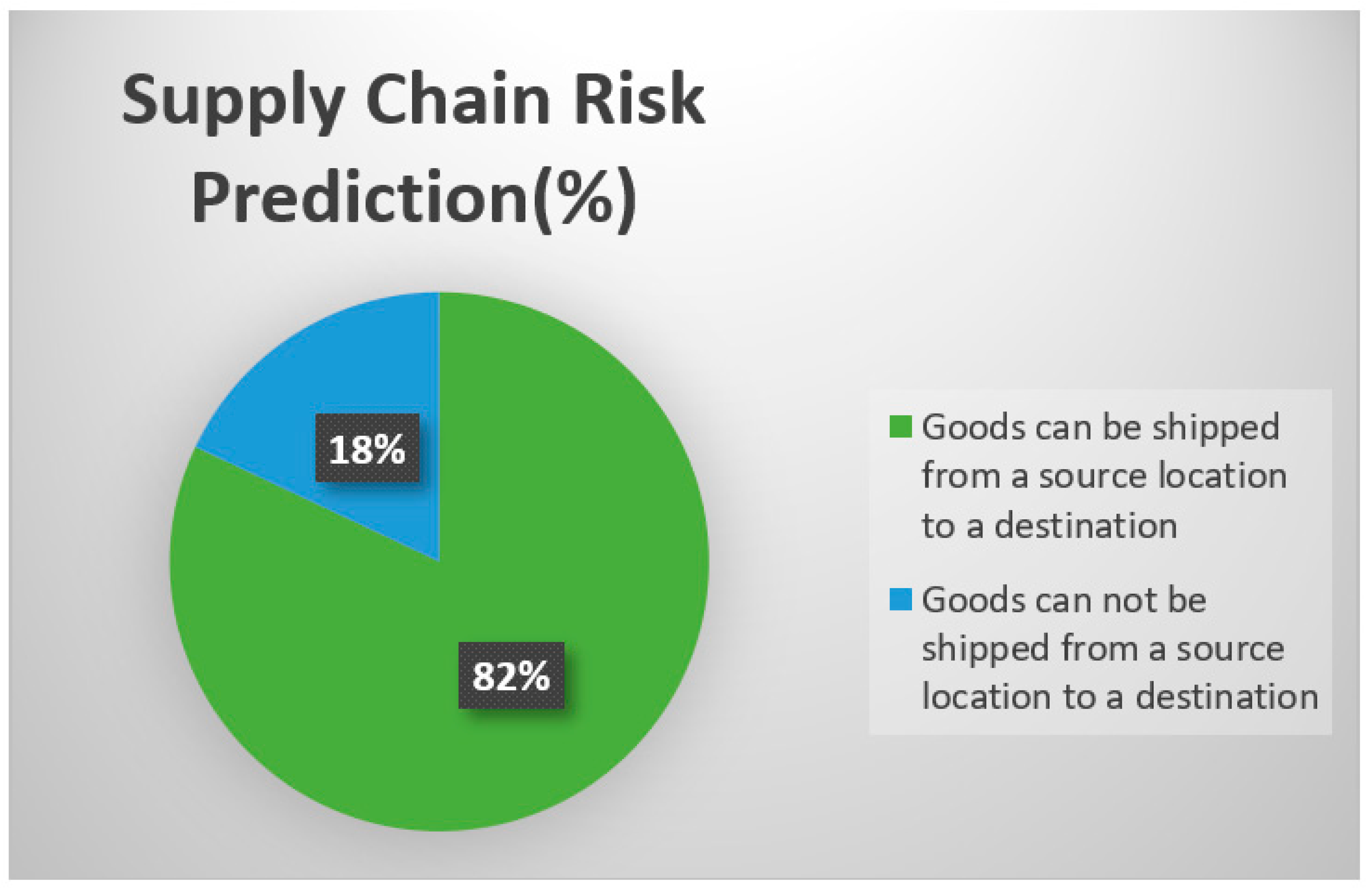

3.9. The Supply Chain Risk Prediction Model’s User Interface

4. Experimental Results

4.1. Addressing the Research Questions

4.1.1. Addressing the First Research Question

Performance Evaluation Metrics
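The results report accuracy, precision, recall, and F1-score. For reference, the plain-Python sketch below computes them for a binary task from true positives (TP), false positives (FP), false negatives (FN), and the count of correct predictions. Treating label 1 as the positive class is an assumption; the averaging scheme behind the tabulated values (binary, macro, or weighted) is not stated in this section.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0   # TP / (TP + FP)
    recall = tp / (tp + fn) if tp + fn else 0.0      # TP / (TP + FN)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Toy labels: TP = 3, FP = 2, FN = 1, 5 of 8 predictions correct.
m = binary_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 0, 1, 0, 1, 1, 1, 0])
```

For multi-class or averaged scores, scikit-learn's `precision_recall_fscore_support` exposes the averaging choice through its `average` parameter.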

4.1.2. Addressing the Second Research Question

4.1.3. Addressing the Third Research Question

4.2. Comparison with Baselines

4.3. Summary of the Results and Discussion

4.3.1. Analysis of the Results

4.3.2. Summary of Theoretical and Practical Contributions

4.3.3. Generalizability of Experimental Results

4.3.4. Research Implications and Insights

4.4. Complexity Analysis
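One concrete, checkable view of model size is the trainable-parameter count implied by the reported hyperparameters. The sketch below derives it layer by layer under stated assumptions: layer order Embedding → Conv1D → MaxPooling1D → BiGRU → Dense, 6 filters (the CNN + BIGRU3 setting), and the Keras default `reset_after=True` for the GRU bias layout. Under these assumptions the embedding accounts for roughly 79% of the total. Computation per sequence also grows linearly with sequence length, since the GRU processes time steps sequentially.

```python
def embedding_params(vocab_size, embed_dim):
    return vocab_size * embed_dim                          # one vector per vocabulary entry

def conv1d_params(kernel_size, in_channels, filters):
    return kernel_size * in_channels * filters + filters   # weights + one bias per filter

def gru_params(input_dim, units, reset_after=True):
    # Three gate blocks (reset, update, candidate): input kernel, recurrent kernel, biases.
    # With reset_after=True, Keras keeps separate input and recurrent bias vectors.
    biases = (2 if reset_after else 1) * 3 * units
    return 3 * units * input_dim + 3 * units * units + biases

def dense_params(input_dim, units):
    return input_dim * units + units

# Assumed configuration: Embedding(2000, 128) -> Conv1D(6, 3) -> MaxPooling1D -> BiGRU(100) -> Dense(2)
counts = {
    "embedding": embedding_params(2000, 128),
    "conv1d": conv1d_params(3, 128, 6),
    "max_pooling": 0,                        # pooling has no trainable weights
    "bigru": 2 * gru_params(6, 100),         # forward and backward GRUs
    "dense": dense_params(2 * 100, 2),       # concatenated BiGRU output feeds the dense layer
}
total = sum(counts.values())
```

MAX_LEN does not appear in the count: convolution and recurrent weights are shared across time steps.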

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hu, H.; Xu, J.; Liu, M.; Lim, M.K. Vaccine supply chain management: An intelligent system utilizing blockchain, IoT and machine learning. J. Bus. Res. 2023, 156, 113480.

2. Karumanchi, M.D.; Sheeba, J.I.; Devaneyan, S.P. Blockchain Enabled Supply Chain using Machine Learning for Secure Cargo Tracking. Int. J. Intell. Syst. Appl. Eng. 2022, 10, 434–442.

3. Bassiouni, M.M.; Chakrabortty, R.K.; Hussain, O.K.; Rahman, H.F. Advanced deep learning approaches to predict supply chain risks under COVID-19 restrictions. Expert Syst. Appl. 2023, 211, 118604.

4. Singh, S.; Kumar, R.; Panchal, R.; Tiwari, M.K. Impact of COVID-19 on logistics systems and disruptions in food supply chain. Int. J. Prod. Res. 2021, 59, 1993–2008.

5. Dong, Z.; Liang, W.; Liang, Y.; Gao, W.; Lu, Y. Blockchained supply chain management based on IoT tracking and machine learning. EURASIP J. Wirel. Commun. Netw. 2022, 2022, 127.

6. Vo, N.N.; He, X.; Liu, S.; Xu, G. Deep learning for decision making and the optimization of socially responsible investments and portfolio. Decis. Support Syst. 2019, 124, 113097.

7. Asghar, M.Z.; Ullah, I.; Shamshirband, S.; Khundi, F.M.; Habib, A. Fuzzy-based sentiment analysis system for analyzing student feedback and satisfaction. Comput. Mater. Contin. 2020, 62, 631–655.

8. Asghar, M.Z.; Habib, A.; Habib, A.; Khan, A.; Ali, R.; Khattak, A. Exploring deep neural networks for rumor detection. J. Ambient Intell. Humaniz. Comput. 2021, 12, 4315–4333.

9. Cai, X.; Qian, Y.; Bai, Q.; Liu, W. Exploration on the financing risks of enterprise supply chain using back propagation neural network. J. Comput. Appl. Math. 2020, 367, 112457.

10. Liu, Y.; Huang, L. Supply chain finance credit risk assessment using support vector machine–based ensemble improved with noise elimination. Int. J. Distrib. Sens. Netw. 2020, 16, 1550147720903631.

11. Xu, C.; Ji, J.; Liu, P. The station-free sharing bike demand forecasting with a deep learning approach and large-scale datasets. Transp. Res. Part C 2018, 95, 47–60.

12. Nikolopoulos, K.; Punia, S.; Schäfers, A.; Tsinopoulos, C.; Vasilakis, C. Forecasting and planning during a pandemic: COVID-19 growth rates, supply chain disruptions, and governmental decisions. Eur. J. Oper. Res. 2021, 290, 99–115.

13. Pan, W.; Miao, L. Dynamics and risk assessment of a remanufacturing closed-loop supply chain system using the internet of things and neural network approach. J. Supercomput. 2023, 79, 3878–3901.

14. Liu, C.; Ji, H.; Wei, J. Smart SC risk assessment in intelligent manufacturing. J. Comput. Inf. Syst. 2022, 62, 609–621.

15. Palmer, C.; Urwin, E.N.; Niknejad, A.; Petrovic, D.; Popplewell, K.; Young, R.I. An ontology supported risk assessment approach for the intelligent configuration of supply networks. J. Intell. Manuf. 2018, 29, 1005–1030.

16. Lorenc, A.; Burinskiene, A. Improve the orders picking in e-commerce by using WMS data and BigData analysis. FME Trans. 2021, 49, 233–243.

17. Keller, S. US Supply Chain Information for COVID19. Available online: https://www.kaggle.com/skeller/us-supply-chain-information-for-covid19 (accessed on 2 March 2023).

18. Baryannis, G.; Dani, S.; Antoniou, G. Predicting supply chain risks using machine learning: The trade-off between performance and interpretability. Future Gener. Comput. Syst. 2019, 101, 993–1004.

19. Asghar, M.Z.; Albogamy, F.R.; Al-Rakhami, M.S.; Asghar, J.; Rahmat, M.K.; Alam, M.M.; Lajis, A.; Nasir, H.M. Facial Mask Detection Using Depthwise Separable Convolutional Neural Network Model During COVID-19 Pandemic. Front. Public Health 2022, 10, 855254.

20. Liu, J.; Yang, Y.; Lv, S.; Wang, J. Attention-based BiGRU-CNN for Chinese question classification. J. Ambient Intell. Humaniz. Comput. 2019, 1–12.

21. Agarwal, R. NLP Learning Series: Part 3—Attention, CNN and What Not for Text Classification. 2019. Available online: https://towardsdatascience.com/nlp-learning-series-part-3-attention-cnn-and-what-not-for-text-classification-4313930ed566 (accessed on 9 April 2023).

22. All Things Embedding. Available online: https://keras.io/layers/embeddings/ (accessed on 21 February 2023).

23. She, X.; Zhang, D. Text Classification Based on Hybrid CNN-LSTM Hybrid Model. In Proceedings of the 2018 11th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 8–9 December 2018; Volume 2, pp. 185–189.

24. Rachel, D. How Computers See: Intro to Convolutional Neural Networks. 2019. Available online: https://glassboxmedicine.com/2019/05/05/how-computers-see-intro-to-convolutional-neural-networks/ (accessed on 25 March 2023).

25. Rabie, O.; Alghazzawi, D.; Asghar, J.; Saddozai, F.K.; Asghar, M.Z. A Decision Support System for Diagnosing Diabetes Using Deep Neural Network. Front. Public Health 2022, 10, 861062.

26. Alghazzawi, D.; Bamasag, O.; Ullah, H.; Asghar, M.Z. Efficient detection of DDoS attacks using a hybrid deep learning model with improved feature selection. Appl. Sci. 2021, 11, 11634.

27. Alghazzawi, D.; Bamasag, O.; Albeshri, A.; Sana, I.; Ullah, H.; Asghar, M.Z. Efficient prediction of court judgments using an LSTM+ CNN neural network model with an optimal feature set. Mathematics 2022, 10, 683.

| Research Questions | Motivation |
|---|---|
| RQ1: Given a benchmark dataset, how accurately does the composite CNN + BiGRU DL model predict supply chain risks in the logistics sector? | To investigate and apply deep neural network models, specifically CNN + BiGRU, for forecasting supply chain risks in the logistics sector. |
| RQ2: How does the proposed CNN + BiGRU model compare with classical ML methods? | To examine the efficacy of traditional feature representation methods, such as Bag of Words (BoW), combined with ML classifiers. |
| RQ3: How effective is the proposed method at predicting supply chain risks in the logistics sector compared with benchmark studies and other DL methods? | To evaluate and compare the outcomes of the proposed CNN + BiGRU DL model with those of competing approaches using precision, recall, F1-score, and accuracy. |
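RQ2 contrasts the proposed model with classical ML classifiers on Bag-of-Words (BoW) features. The scikit-learn sketch below shows how such baselines can be assembled, one pipeline per classifier named in the ML comparison. The token strings are hypothetical stand-ins for serialized shipment records, `LinearSVC` is used as the linear SVM variant, and all classifier settings are defaults or assumptions rather than the paper's reported configuration.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC
from sklearn.tree import DecisionTreeClassifier

def bow_baselines():
    """One Bag-of-Words pipeline per classical classifier in the ML comparison."""
    return {
        "Support vector machine": make_pipeline(CountVectorizer(), LinearSVC()),
        "Logistic regression": make_pipeline(CountVectorizer(), LogisticRegression(max_iter=1000)),
        "Random forest": make_pipeline(CountVectorizer(), RandomForestClassifier(random_state=0)),
        "Decision tree": make_pipeline(CountVectorizer(), DecisionTreeClassifier(random_state=0)),
    }

# Hypothetical serialized shipment records and 0/1 outcome labels (toy data only).
records = [
    "orig_06 dest_36 mode_04 hazmat_n",
    "orig_48 dest_12 mode_05 hazmat_p",
    "orig_06 dest_12 mode_04 hazmat_n",
    "orig_48 dest_36 mode_05 hazmat_h",
]
outcomes = [0, 1, 0, 1]

fitted = {name: pipe.fit(records, outcomes) for name, pipe in bow_baselines().items()}
predictions = {name: list(pipe.predict(records)) for name, pipe in fitted.items()}
```

BoW discards token order, which is the main representational difference from the embedding plus CNN + BiGRU pipeline.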

| Study | Objectives | Techniques/Methods | Results | Limitations |
|---|---|---|---|---|
| Liu and Huang [10] | - | Machine learning (ensemble SVM) | Acc: 83.26% | The study applied only an ensemble SVM; additional methods could be explored to increase accuracy. |
| Cai et al. [9] | - | Machine learning (SVM) | The linear approach outperforms the other models in precision, recall, and accuracy. | Models are implemented on a limited dataset. |
| Xu et al. [11] | - | Deep learning (LSTM-NN) | The model produces satisfactory outcomes. | The framework could be improved by incorporating feature selection into the DL models. |
| Lorenc et al. [16] | - | Deep learning (LSTM) | LSTM improves precision, reduces runtimes, and optimizes memory utilization. | The proposed model does not handle contextual information. |
| Bassiouni et al. [3] | - | Deep learning (TCN) | Computational results show that the proposed TCN model is well suited to estimating the transportation risk to a given destination under COVID-19 restrictions. | Only a limited number of shipments are available for training, testing, and validation; the total number of shipments needs to be increased. |
| Pan et al. [13] | Supply chain risk evaluation | Deep learning (BP neural network) | Maximum relative error (0.03076923%) | Integrating feature selection and hybrid DL approaches could improve model performance. |
| Hu et al. [1] | Vaccine supply chain (SC) in the context of the COVID-19 pandemic | - | The results are promising (Acc: 87.23%, Pre: 85.51%, Rec: 86.85%, F1-score: 87.34%). | Further improvement is possible by combining the approach with more advanced techniques such as transfer learning. |
| Liu et al. [14] | To identify risks associated with the digital supply chain | - | The model produced positive results and had high predictive power. | Lacks automated, enhanced risk assessment methods based on hybrid deep learning. |
| Palmer et al. [15] | Supply chain risk evaluation | Reference ontology method | The proposed models achieve an accuracy of 80–86% and an average recall of 75–83%. | Efficient risk prediction in supply chains requires combining different ML and DL methods. |

| Field | Description | Valid Values | Type | Length |
|---|---|---|---|---|
| SHIPMT_ID | Shipment identifier | 0000001–4,547,661 | CHAR | 7 |
| ORIG_STATE | FIPS state code of shipment origin | 01–56 | CHAR | 2 |
| ORIG_MA | Metro area of shipment origin | See .csv file | CHAR | 5 |
| ORIG_CFS_AREA | CFS area of shipment origin | Concatenation of ORIG_STATE and ORIG_MA | CHAR | 8 |
| DEST_STATE | FIPS state code of shipment destination | 01–56 | CHAR | 2 |
| DEST_MA | Metro area of shipment destination | See .csv file | CHAR | 5 |
| DEST_CFS_AREA | CFS area of shipment destination | Concatenation of DEST_STATE and DEST_MA | CHAR | 8 |
| NAICS | Industry classification of shipper | See .csv file | CHAR | 6 |
| QUARTER | Quarter of 2012 in which the shipment occurred | 1, 2, 3, 4 | CHAR | 1 |
| SCTG | 2-digit SCTG commodity code of the shipment | See .csv file | CHAR | 5 |
| MODE | Mode of transportation of the shipment | See .csv file | CHAR | 2 |
| SHIPMT_VALUE | Value of the shipment in dollars | $0–999,999,999 | NUM | 8 |
| SHIPMT_WGHT | Weight of the shipment in pounds | 0–999,999,999 | NUM | 8 |
| SHIPMT_DIST_GC | Great circle distance between shipment origin and destination (in miles) | 0–99,999 | NUM | 8 |
| SHIPMT_DIST_ROUTED | Routed distance between shipment origin and destination (in miles) | 0–99,999 | NUM | 8 |
| TEMP_CNTL_YN | Temperature-controlled shipment (Yes or No) | Y, N | CHAR | 1 |
| EXPORT_YN | Export shipment (Yes or No) | Y, N | CHAR | 1 |
| EXPORT_CNTRY | Export final destination | C = Canada; M = Mexico; O = Other | CHAR | 1 |
| HAZMAT | Hazardous material (HAZMAT) code | P = class 3 HAZMAT (flammable liquids); H = other HAZMAT; N = not HAZMAT | CHAR | 1 |
| WGT_FACTOR | Shipment tabulation weighting factor | 0–999,999 | NUM | 8 |
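Several fields in the table above are CHAR codes with leading zeros (for example FIPS state codes 01–56), which a default numeric parse would silently strip. The pandas sketch below reads every column as text, converts only the NUM fields, and derives the CFS-area fields by concatenation. The sample rows are hypothetical, and plain concatenation without a separator is an assumption. `pd.read_excel` accepts the same `dtype=str` argument if the data arrive as an .xlsx file.

```python
import io

import pandas as pd

NUM_FIELDS = ["SHIPMT_VALUE", "SHIPMT_WGHT", "SHIPMT_DIST_GC", "SHIPMT_DIST_ROUTED", "WGT_FACTOR"]

def load_cfs_records(source):
    """Read all columns as text so CHAR codes keep leading zeros, then parse NUM fields."""
    df = pd.read_csv(source, dtype=str)
    for col in NUM_FIELDS:
        if col in df.columns:
            df[col] = pd.to_numeric(df[col])
    # CFS area = concatenation of the state and metro-area codes (no separator assumed).
    for side in ("ORIG", "DEST"):
        if f"{side}_CFS_AREA" not in df.columns:
            df[f"{side}_CFS_AREA"] = df[f"{side}_STATE"] + df[f"{side}_MA"]
    return df

# Hypothetical two-row extract using field names from the table.
sample = io.StringIO(
    "SHIPMT_ID,ORIG_STATE,ORIG_MA,DEST_STATE,DEST_MA,SHIPMT_VALUE,HAZMAT\n"
    "0000001,01,12345,06,99999,1500,N\n"
    "0000002,48,00000,36,12345,250,P\n"
)
records = load_cfs_records(sample)
```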

| Layer | Mathematical Symbol | Description |
|---|---|---|
| Embedding | | Dataset |
| | | Represents the words in a dataset |
| | | Set of real numbers |
| | I | Input matrix |
| CNN | | Filter matrix |
| | | Output matrix |
| | | Pooled feature matrix |
| | I | Input matrix |
| | | Real number |
| | | Bias term |
| | | Activation function |
| | | Rectified feature map |
| BiGRU | | Update gate |
| | | Reset gate |
| | | Input vector |
| | | Cell state |
| | | Input weight |
| | | Output weight |

| CNN Model | BiGRU Model | Other Parameters |
|---|---|---|
| Convolutional layer (filter size = 3, padding = same); pooling layer (pool size = 2) | GRU layer (units = 100) | Dense layer (optimizer: adam, activation: sigmoid); embedding layer (max_features: 2000, embed_dim: 128) |

| Model | Parameters |
|---|---|
| CNN + BIGRU1 | filter count (2) |
| CNN + BIGRU2 | filter count (4) |
| CNN + BIGRU3 | filter count (6) |
| CNN + BIGRU4 | filter count (8) |
| CNN + BIGRU5 | filter count (10) |
| CNN + BIGRU6 | filter count (12) |
| CNN + BIGRU7 | filter count (14) |
| CNN + BIGRU8 | filter count (16) |
| CNN + BIGRU9 | filter count (18) |
| CNN + BIGRU10 | filter count (20) |

| Model | Common Parameters |
|---|---|
| CNN + BIGRU1 to CNN + BIGRU10 | filter size (3), pool size (2), units (100) |
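The ten variants differ only in the number of convolutional filters (2 to 20 in steps of 2) and share filter size 3, pool size 2, and 100 GRU units. A small sketch generating that grid as configuration dictionaries follows; the dictionary key names are hypothetical and would be mapped onto whatever model-building code is used.

```python
def cnn_bigru_variants(n_variants=10, step=2):
    """Hyperparameter grid for CNN + BIGRU1..10: only the filter count varies."""
    common = {"kernel_size": 3, "pool_size": 2, "gru_units": 100}
    return {
        f"CNN + BIGRU{i}": {"filters": step * i, **common}
        for i in range(1, n_variants + 1)
    }

variants = cnn_bigru_variants()
```

Holding every other setting fixed isolates the effect of filter count on the results that follow.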

| Model | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| CNN + BIGRU1 | 93 | 93 | 93 |
| CNN + BIGRU2 | 91 | 91 | 91 |
| CNN + BIGRU3 | 94 | 94 | 94 |
| CNN + BIGRU4 | 91 | 91 | 91 |
| CNN + BIGRU5 | 92 | 92 | 92 |
| CNN + BIGRU6 | 91 | 91 | 91 |
| CNN + BIGRU7 | 93 | 93 | 93 |
| CNN + BIGRU8 | 91 | 91 | 91 |
| CNN + BIGRU9 | 91 | 91 | 91 |
| CNN + BIGRU10 | 93 | 93 | 93 |

| Model | Accuracy (%) | Loss |
|---|---|---|
| CNN + BIGRU1 | 93 | 0.43 |
| CNN + BIGRU2 | 91 | 0.27 |
| CNN + BIGRU3 | 94 | 0.32 |
| CNN + BIGRU4 | 91 | 0.37 |
| CNN + BIGRU5 | 92 | 0.40 |
| CNN + BIGRU6 | 91 | 0.41 |
| CNN + BIGRU7 | 94 | 0.42 |
| CNN + BIGRU8 | 91 | 0.43 |
| CNN + BIGRU9 | 91 | 0.44 |
| CNN + BIGRU10 | 93 | 0.45 |
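Two variants, CNN + BIGRU3 and CNN + BIGRU7, tie at 94% accuracy in the accuracy/loss table. One simple selection rule, assumed here rather than taken from the paper, ranks by accuracy and breaks ties by lower loss. Applied to the tabulated values, it picks CNN + BIGRU3 (6 filters), which also has the highest precision, recall, and F1-score among the variants.

```python
# Accuracy (%) and loss per variant, copied from the accuracy/loss table.
results = {
    "CNN + BIGRU1": (93, 0.43), "CNN + BIGRU2": (91, 0.27),
    "CNN + BIGRU3": (94, 0.32), "CNN + BIGRU4": (91, 0.37),
    "CNN + BIGRU5": (92, 0.40), "CNN + BIGRU6": (91, 0.41),
    "CNN + BIGRU7": (94, 0.42), "CNN + BIGRU8": (91, 0.43),
    "CNN + BIGRU9": (91, 0.44), "CNN + BIGRU10": (93, 0.45),
}

def select_best(results):
    """Highest accuracy first; ties broken by the lower loss (an assumed rule)."""
    return max(results, key=lambda name: (results[name][0], -results[name][1]))

best = select_best(results)
```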

| Model | Acc. (%) | Prec. (%) | Rec. (%) | F1-Score (%) |
|---|---|---|---|---|
| Support vector machine (ML) | 86 | 88 | 86 | 86 |
| Logistic regression (ML) | 80 | 84 | 81 | 80 |
| Random forest (ML) | 82 | 83 | 82 | 82 |
| Decision tree (ML) | 87 | 87 | 87 | 87 |
| ML [13] | 83.21 | 83.21 | 83.21 | 83.21 |
| CNN + BIGRU (DL) | 94 | 94 | 94 | 94 |

| Model | Acc. (%) | Pre. (%) | Rec. (%) | F1-Score (%) |
|---|---|---|---|---|
| RNN | 87 | 87 | 87 | 87 |
| BIGRU | 91 | 91 | 91 | 91 |
| CNN | 90 | 90 | 90 | 90 |
| GRU | 90 | 90 | 90 | 90 |
| CNN + BIGRU | 94 | 94 | 94 | 94 |

| Technique | Acc. (%) | Prec. (%) | Rec. (%) | F1-Score (%) |
|---|---|---|---|---|
| Supply chain prediction with DL [3] | 85.71 | 86.52 | 85.11 | 87.32 |
| Supply chain prediction with ML [13] | 83.21 | 84.62 | 84.09 | 84.56 |
| Supply chain prediction with DL (proposed CNN + BIGRU) | 94 | 94 | 94 | 94 |
| Hu et al. [1] | 86.88 | 87.71 | 88.31 | 87.97 |
| Dong et al. [5] | 81 | 80.52 | 80.0 | 81.0 |
| Liu et al. [10] | 84.22 | 87.17 | 86.71 | 88.21 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alzahrani, A.; Asghar, M.Z. Intelligent Risk Prediction System in IoT-Based Supply Chain Management in Logistics Sector. Electronics 2023, 12, 2760. https://doi.org/10.3390/electronics12132760

Alzahrani A, Asghar MZ. Intelligent Risk Prediction System in IoT-Based Supply Chain Management in Logistics Sector. Electronics. 2023; 12(13):2760. https://doi.org/10.3390/electronics12132760

Chicago/Turabian style: Alzahrani, Ahmed, and Muhammad Zubair Asghar. 2023. "Intelligent Risk Prediction System in IoT-Based Supply Chain Management in Logistics Sector" Electronics 12, no. 13: 2760. https://doi.org/10.3390/electronics12132760

APA style: Alzahrani, A., & Asghar, M. Z. (2023). Intelligent Risk Prediction System in IoT-Based Supply Chain Management in Logistics Sector. Electronics, 12(13), 2760. https://doi.org/10.3390/electronics12132760