Abstract

Epilepsy is a chronic neurological disease that, as defined by the World Health Organization, is characterized by excessive and uncontrolled electrical discharges in the brain. It affects people of all ages. Electroencephalography (EEG) is the widely used reference method for studying epileptic seizures and recording changes in the brain's electrical activity. Early prediction and detection of seizures are necessary to provide timely preventive interventions that spare patients the harmful consequences of epileptic seizures. Despite decades of research, accurately predicting these seizures remains an unresolved problem. In this article, we propose five deep learning models for automatically predicting epileptic seizures from intracranial electroencephalogram (iEEG) datasets. The proposed models are based on a Convolutional Neural Network (CNN), the fusion of two CNNs (2-CNN), the fusion of three CNNs (3-CNN), the fusion of four CNNs (4-CNN), and transfer learning with ResNet50. The experimental results show that the 3-CNN and 4-CNN models perform best, both achieving an accuracy of 95%. Finally, we compare our methods with previous studies; the comparison indicates that our models improve seizure prediction accuracy.

1. Introduction

Epilepsy is a neurological disease caused by abnormal brain activity. Like other convulsive disorders, it causes sudden, repeated seizures. These seizures result from the synchronous activity of brain cells that should be inactive, a phenomenon often compared to a thunderstorm.

Electroencephalography (EEG) provides real-time information on the electrical activity generated by nerve cells in the cerebral cortex, with excellent temporal resolution on the order of ten milliseconds [1].

Detecting seizures from EEG signals requires direct inspection by a physician, which takes considerable time and effort. In addition, experts often reach differing diagnostic conclusions [2]. There is therefore a need for automatic, computer-assisted methods for diagnosing epilepsy.

Several researchers have recently developed algorithms to detect epileptiform EEG activity. Existing seizure detection methods rely on hand-designed feature extraction from EEG signals, using nonlinear signal analysis or features from the time domain, the frequency domain, and the time-frequency domain. The selected features are then passed to a classifier that distinguishes between the different types of EEG signals.

EEG makes it possible to study the different phases of an epileptic patient's brain activity [3]. In this work, we process iEEG recordings of epileptic subjects to predict upcoming seizures by distinguishing between two phases: the interictal phase, the period separating two seizures, during which the patient appears well; and the preictal phase, the period preceding a seizure, which is characterized by the sudden onset of sporadic isolated spikes of large amplitude and during which the patient may show behavioral changes.

Predictions are generated by deep learning models that achieve high accuracy in classifying iEEG segments as interictal or preictal. Such predictions could enable fast, necessary medical intervention and help flag seizure activity before the annotated onset that the epileptologist may have missed.

The main contributions of the present work may be summarized as follows:

- The use of five models, each with its own architecture: 1-CNN, 2-CNN, 3-CNN, 4-CNN, and ResNet50 with transfer learning. We investigate these models to find the one that predicts epileptic seizures with the highest precision.

- The 3-CNN and 4-CNN models outperform state-of-the-art methods in terms of accuracy.

- Our models show that epileptic seizures can be predicted automatically by distinguishing the preictal phase from the interictal phase.

2. Related Works

Beyond conventional threshold-based and machine-learning-based predictors, deep-learning-based methods have recently demonstrated high seizure prediction performance, using long short-term memory (LSTM) networks [4]; convolutional neural networks (CNNs) [5,6,7]; semi-supervised [8] and mixed models [9,10]; and models with multivariate spatiotemporal features from the time, frequency, and time-frequency domains [11].

In [6], the authors proposed a method that splits the raw EEG signal into 30 s windows. The short-time Fourier transform (STFT) of each window was computed and used as input to a CNN model. They used 64 seizures from 13 patients in the CHB-MIT dataset, reaching a false prediction rate (FPR) of 0.16 and a sensitivity of 81.2%. The three datasets studied in that work are not large enough for a thorough evaluation, since each patient's recording lasts at most 3 days. The method could also be enhanced with non-EEG data, such as information about when seizures occur.

In [12], the authors proposed an algorithm using cooperative multi-scale CNNs for automatic feature learning from iEEG datasets. Their method reached an accuracy of 84% and an average sensitivity of 87.85%. However, such a multi-scale network takes longer to train because of its higher model complexity [13]. This research [12] could be extended by merging EEG and ECG data, improving the classifiers, and applying simpler feature extraction methods. Since the EEG can show signs of a seizure hours before its onset, the trend is to analyze data from more than one hour before seizure onset.

In [14], the authors predicted epileptic seizures by feeding preprocessed features (statistical moments, Hjorth parameters, and spectral band power) into a multiframe 3D CNN model, reaching an FPR of 0.096 and a sensitivity of 85.71%. Their method generalizes across patients, since no patient-specific engineering was performed at any stage. However, because the CHB-MIT Scalp EEG dataset consists mainly of pediatric patients, the method needs more extensive testing on subjects of different age groups and in various clinical conditions to assess its overall performance.
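The Hjorth parameters cited above have standard definitions: activity is the variance of the signal, mobility is the square root of the ratio between the variance of its first derivative and the variance of the signal, and complexity is the mobility of the first derivative divided by the mobility of the signal. The sketch below computes them for one EEG channel with NumPy. Approximating the derivatives by first differences is our assumption, and `hjorth_parameters` is a hypothetical helper, not the feature pipeline of [14].

```python
import numpy as np


def hjorth_parameters(x):
    """Return the Hjorth (activity, mobility, complexity) of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)    # first derivative, approximated by first differences
    ddx = np.diff(dx)  # second derivative
    var_x, var_dx, var_ddx = np.var(x), np.var(dx), np.var(ddx)
    activity = var_x
    mobility = np.sqrt(var_dx / var_x)
    # Complexity: mobility of the derivative divided by mobility of the signal
    complexity = np.sqrt(var_ddx / var_dx) / mobility
    return float(activity), float(mobility), float(complexity)


# Usage: a 10 Hz sine sampled at 400 Hz for 4 s
t = np.arange(1600) / 400.0
activity, mobility, complexity = hjorth_parameters(np.sin(2 * np.pi * 10 * t))
```

For a pure sinusoid the complexity is close to 1; it grows as the signal departs from a single sinusoid, which is what makes it useful as an EEG feature.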

In this research work, we propose a method for predicting epileptic seizures using deep learning models. We propose five models: 1-CNN, 2-CNN, 3-CNN, 4-CNN, and ResNet50 with transfer learning. ResNet50 is one of the most commonly used residual neural networks, which use shortcut connections to skip over some layers. The first part of our transfer learning model is a pre-trained ResNet50 without its top layers. After global average pooling, batch normalization, dropout, dense, batch normalization, dropout, and dense layers are added. The output of the network is a softmax layer with two neurons.

3. Methodology

Deep learning (DL) models have recently demonstrated high efficiency and performance in computer vision and image processing tasks such as segmentation, detection, and classification.

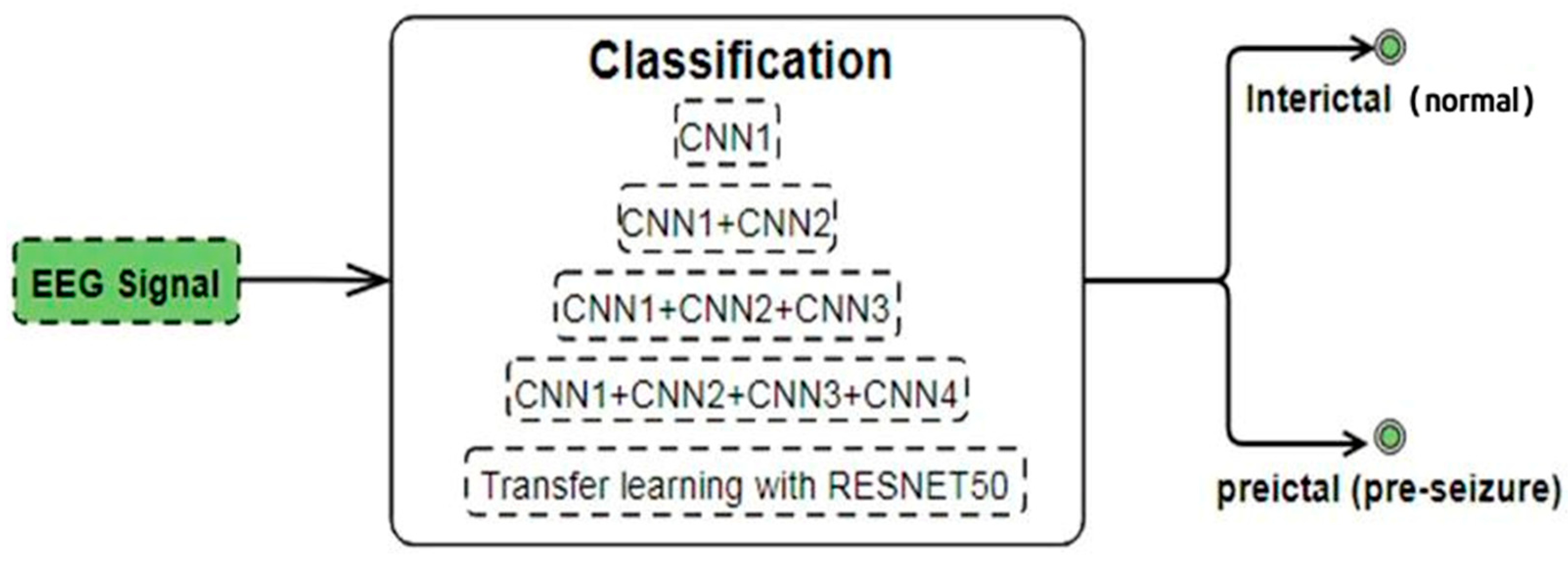

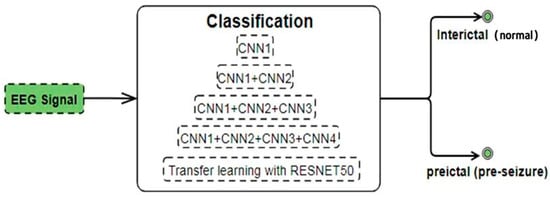

We propose five DL models for this classification: a single CNN (1-CNN), the combination of two CNN models, the combination of three CNN models, the combination of four CNN models, and transfer learning with a ResNet50 model. This section presents the proposed methodology for seizure prediction (Figure 1).

Figure 1.

Overview of proposed method.

3.1. Dataset Description

We used the dataset of the American Epilepsy Society Seizure Prediction Challenge (https://www.kaggle.com/c/seizure-prediction/data, accessed on 15 January 2022). It contains intracranial EEG (iEEG) signals from five dogs and two human patients, with 48 seizures over a total of 627 h of monitoring.

3.2. Pre-Processing

The original dataset consists of .mat files, each containing 10 min of 15-channel EEG readings. We sample 1 s snippets from a single electrode and compute their spectrograms using "scipy.signal". Fourier analysis over 1 s windows smooths the noisy, non-stationary signal into a time-frequency image, turning the task into a vision problem to which powerful and efficient CNNs can be applied.
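A minimal sketch of this preprocessing step, assuming each 10 min segment has already been loaded from its .mat file (for example with `scipy.io.loadmat`) into a NumPy array of shape (channels, samples). The 400 Hz sampling rate, the 64-sample window, the 50% overlap, and the log scaling are illustrative assumptions rather than reported values, and `snippet_spectrogram` is a hypothetical helper.

```python
import numpy as np
from scipy import signal

FS = 400           # assumed sampling rate in Hz (hypothetical; read it from each file)
SNIPPET_SEC = 1.0  # 1 s snippets, as in the text


def snippet_spectrogram(segment, channel, start, fs=FS):
    """Log-power spectrogram of a 1 s snippet taken from a single electrode.

    segment: array of shape (n_channels, n_samples) holding one recording.
    channel: index of the electrode to sample from.
    start:   index of the first sample of the snippet.
    """
    n = int(SNIPPET_SEC * fs)
    snippet = segment[channel, start:start + n]
    # Short-time Fourier analysis: 64-sample windows, 50% overlap (assumed values)
    _, _, sxx = signal.spectrogram(snippet, fs=fs, nperseg=64, noverlap=32)
    # Log scaling compresses the dynamic range before the image is fed to a CNN
    return np.log10(sxx + 1e-10)


# Usage with synthetic noise standing in for a 15-channel recording
rng = np.random.default_rng(0)
segment = rng.standard_normal((15, 10 * FS))
image = snippet_spectrogram(segment, channel=3, start=2 * FS)
```

With these settings each snippet becomes a small 2-D array (frequency bins by time bins) that can be stacked with a trailing channel axis and fed to a 2-D CNN.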

The spectrograms of these 1 s windows, each sampled from a single electrode of the multichannel recording, are then used to train the five DL models to distinguish between the preictal and interictal classes.

3.3. Dataset Splitting

Splitting the dataset is an important step toward obtaining good results. The training set must not be too small, which would increase the variance of the estimated parameters, and the test set must be large enough to avoid a high variance in the performance statistics. We therefore split the initial dataset into two independent subsets: 97% of the data are used for training and 3% for testing.
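The 97%/3% split can be sketched as a random permutation of sample indices with NumPy. The helper name `split_train_test` and the fixed seed are hypothetical.

```python
import numpy as np


def split_train_test(x, y, test_fraction=0.03, seed=0):
    """Randomly split samples into disjoint training and test subsets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))                      # shuffled sample indices
    n_test = max(1, int(round(test_fraction * len(x))))
    test_idx, train_idx = idx[:n_test], idx[n_test:]   # disjoint index sets
    return x[train_idx], x[test_idx], y[train_idx], y[test_idx]


# Usage: 1000 dummy samples -> 970 for training, 30 for testing
x = np.arange(1000).reshape(1000, 1)
y = np.arange(1000) % 2
x_train, x_test, y_train, y_test = split_train_test(x, y)
```

Because neighboring 1 s snippets from the same recording are strongly correlated, splitting by whole recordings rather than by individual snippets is one way to keep the two subsets truly independent.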

3.4. Proposed Methods

For the classification task, we first applied a single CNN classifier (1-CNN). We then proposed classifiers that combine two CNN models (2-CNN), three CNN models (3-CNN), and four CNN models (4-CNN). Finally, we implemented a transfer learning model based on ResNet50.

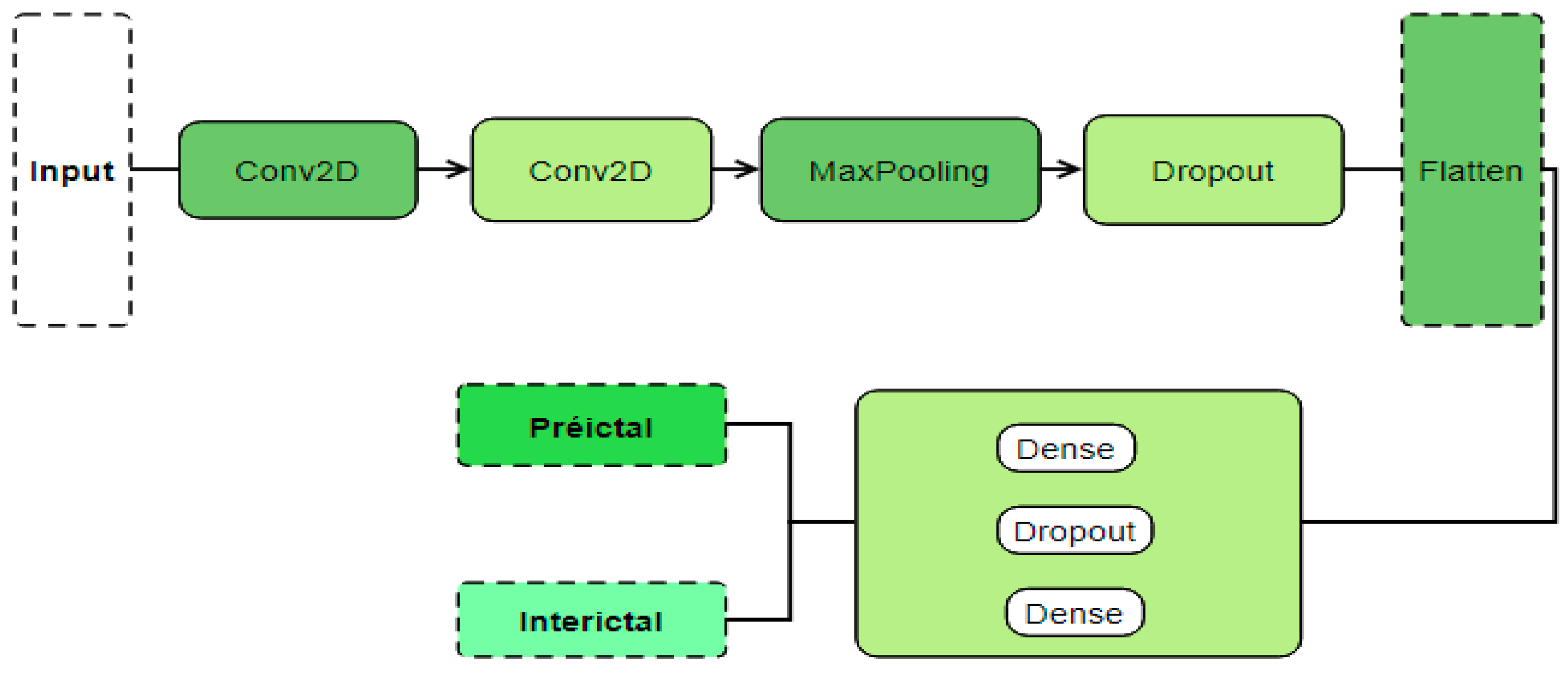

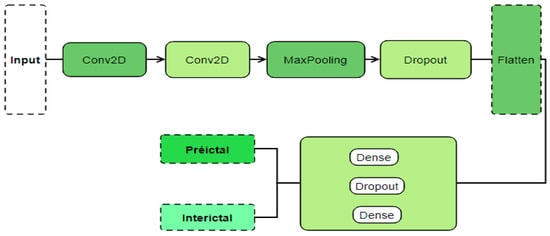

3.4.1. 1-CNN Architecture

To differentiate between preictal and interictal events, we propose a simple 1-CNN (Figure 2), composed of the following elements:

Figure 2.

Architecture of the 1-CNN model.

- Two convolutional layers, each with 32 filters, a kernel size of (3, 3), and the ReLU activation function.

- A max pooling layer with a pool size of (2, 2).

- A dropout layer, which randomly ignores a fraction of the neurons during the training phase; our rate is 0.25.

- A flattening layer.

- A dense (fully connected) layer, in which every input is connected to every neuron of the layer; ours has 64 neurons and uses the ReLU activation function.

- Another dropout layer with a rate of 0.5.

- A dense layer with a sigmoid activation function.

- The number of epochs, a hyperparameter that defines how many complete passes the training algorithm makes over the training dataset. In one epoch, each sample in the training dataset has had the opportunity to update the model's internal parameters. We used 30 epochs.
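The layer list above maps directly onto a Keras `Sequential` model. In this sketch, the input shape (33 × 11 × 1, an assumed spectrogram size), the single sigmoid output unit, the binary cross-entropy loss, and the default `valid` padding are assumptions; the text does not specify them. `build_1cnn` is a hypothetical name.

```python
from tensorflow.keras import layers, models


def build_1cnn(input_shape=(33, 11, 1)):
    """Sketch of the 1-CNN layer stack described above (input shape assumed)."""
    model = models.Sequential([
        layers.Input(shape=input_shape),
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2)),
        layers.Dropout(0.25),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dropout(0.5),
        # One sigmoid unit: probability of the preictal class (assumed encoding)
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


model = build_1cnn()
```

Training for the 30 epochs mentioned above would then be `model.fit(x_train, y_train, epochs=30, validation_data=(x_test, y_test))`, where the arrays are assumed to hold spectrograms with a trailing channel axis and 0/1 labels.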

Figure 2 shows the 1-CNN architecture we used, with the ReLU and sigmoid activation functions.

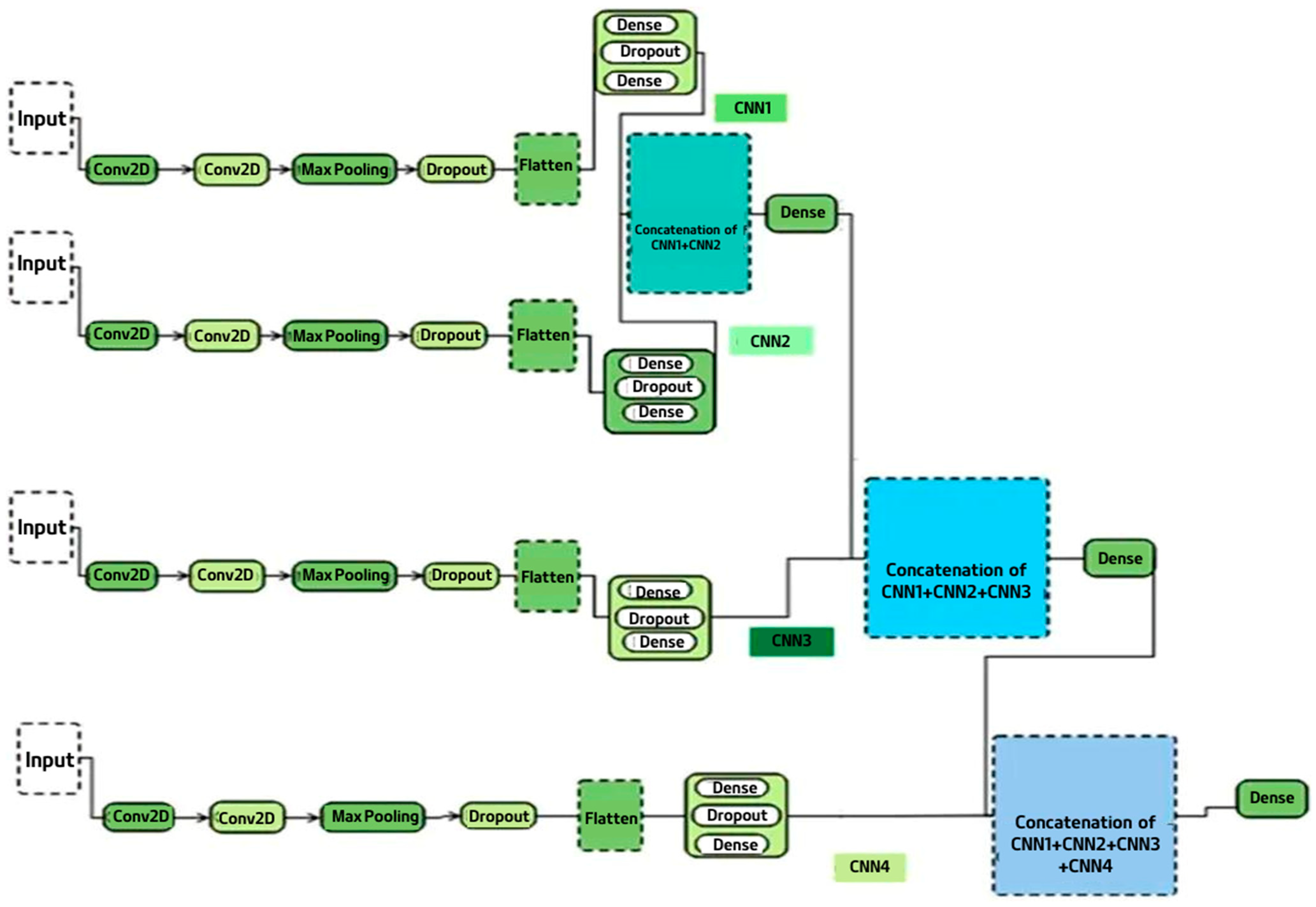

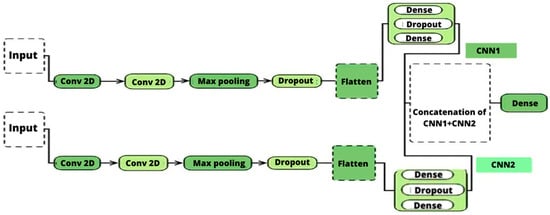

3.4.2. 2-CNN Architecture

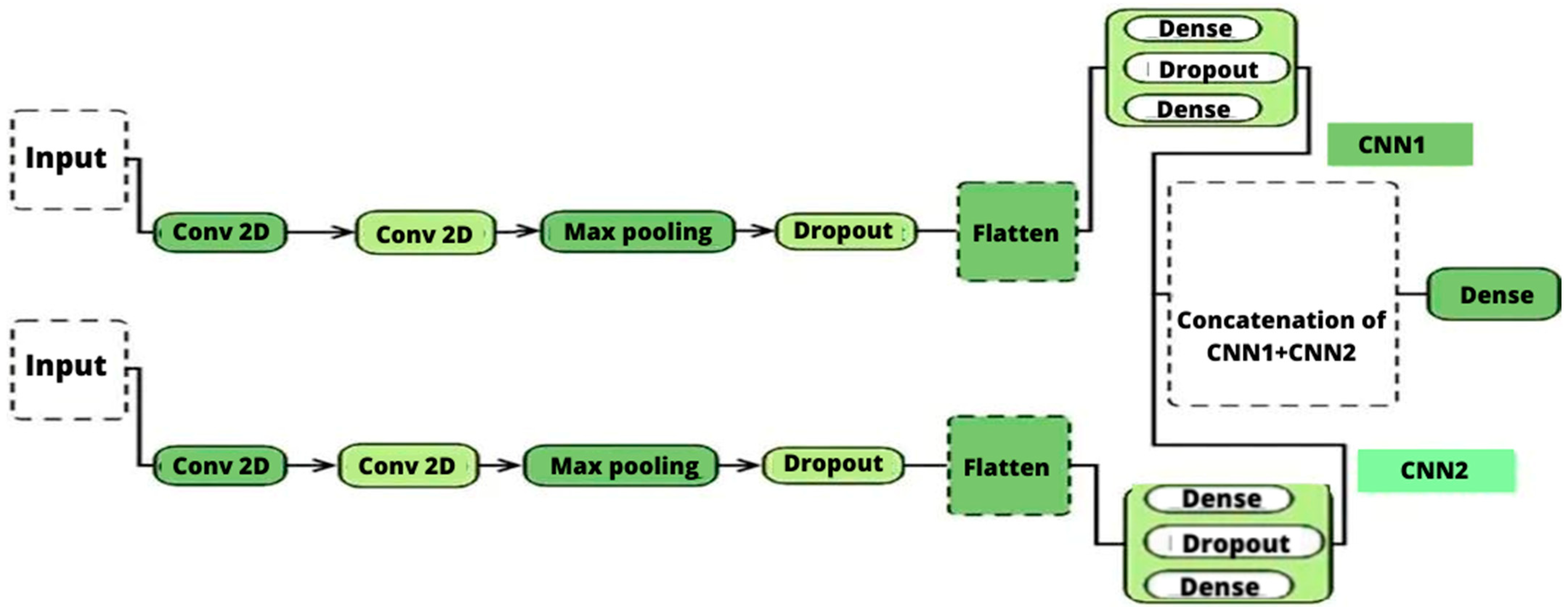

We propose a fusion method that combines two CNN models for iEEG classification. Different CNNs may extract different types of iEEG features, so merging them can help create more generic features for predicting epileptic seizures.

The 2-CNN model is composed of two CNN models with the same architecture as the 1-CNN model; we merge CNN1 and CNN2 as shown in Figure 3. The two models are combined by simple concatenation, followed by a dense layer with a softmax activation function.

Figure 3.

2-CNN model architecture.

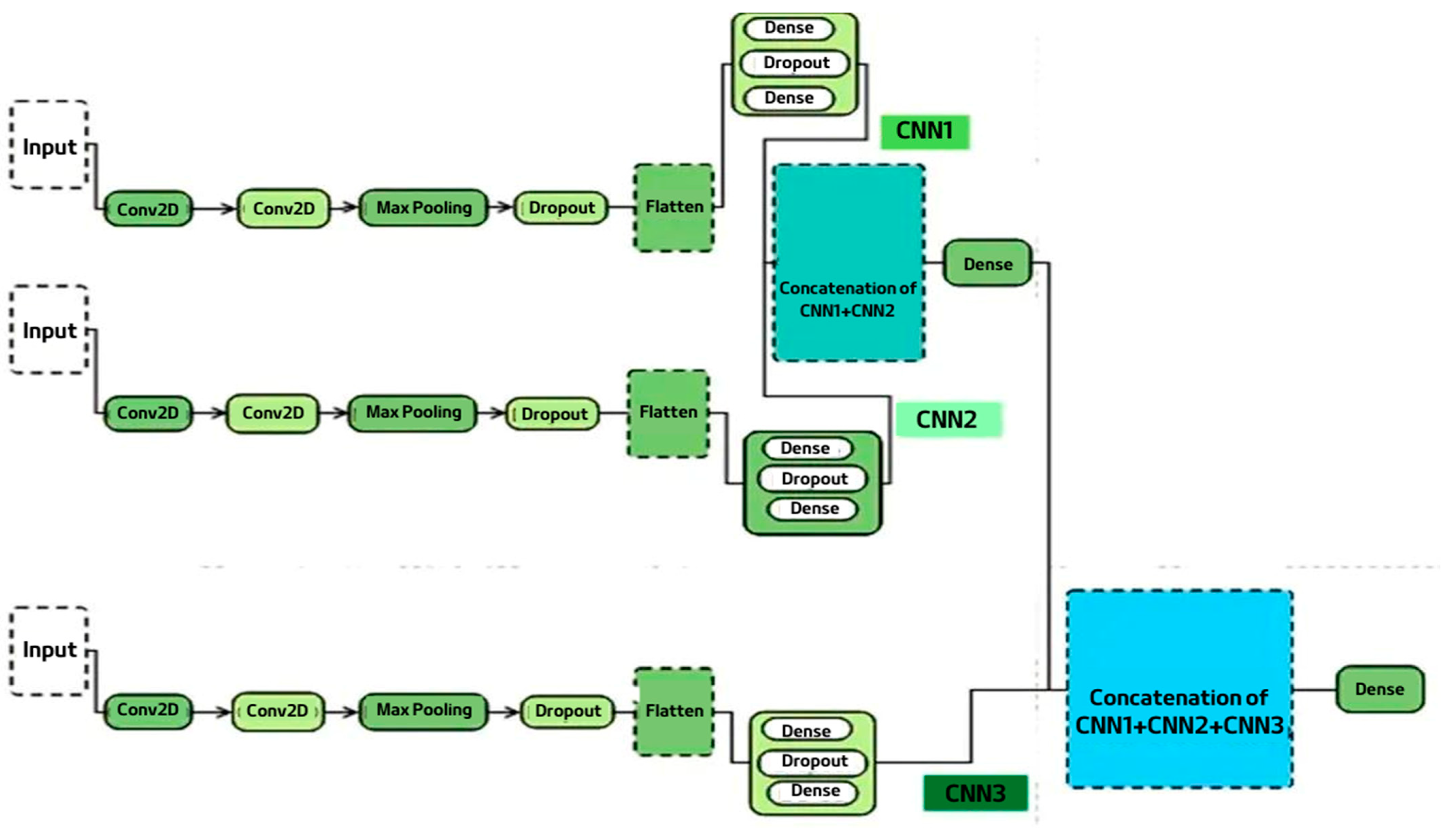

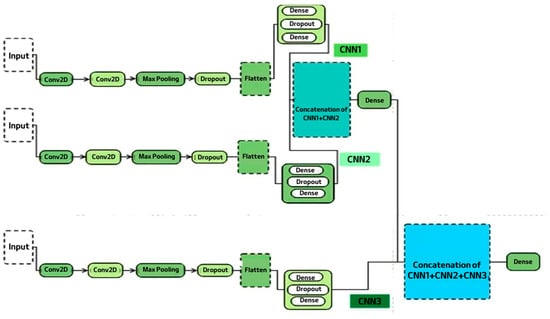

3.4.3. 3-CNN Architecture

The 3-CNN model is composed of three similar CNN models. We merge CNN1, CNN2, and CNN3 as shown in Figure 4. The first two CNN models are combined by simple concatenation, the result is concatenated with the third model, and finally a dense layer with a softmax activation function is added.

Figure 4.

3-CNN model architecture.

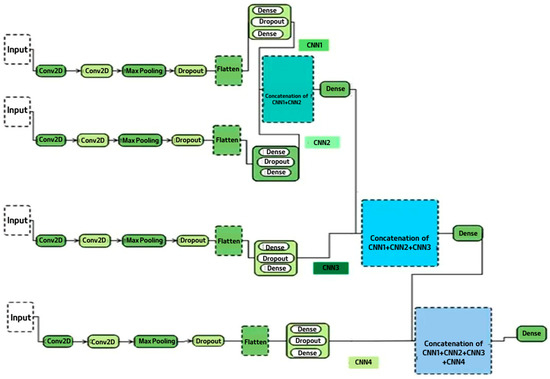

3.4.4. 4-CNN Architecture

The 4-CNN model is composed of four similar CNN models. We merge CNN1, CNN2, CNN3, and CNN4 as shown in Figure 5. The first two CNN models are combined by simple concatenation, the result is concatenated with the third model, and that result is concatenated with the fourth model. Finally, we add a dense layer with a softmax activation function.

Figure 5.

4-CNN model architecture.
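The 2-CNN, 3-CNN, and 4-CNN models differ only in their number of branches, so one builder can sketch all three with the Keras functional API. Several details are assumptions: every branch receives the same spectrogram input, each branch is truncated after its 64-unit dense layer and dropout (its sigmoid output is dropped), and labels are integer class indices for the two-unit softmax. `cnn_branch` and `build_fused_cnn` are hypothetical names.

```python
from tensorflow.keras import layers, models


def cnn_branch(inputs, name):
    """One 1-CNN-style feature extractor, truncated before its sigmoid output."""
    x = layers.Conv2D(32, (3, 3), activation="relu", name=f"{name}_conv1")(inputs)
    x = layers.Conv2D(32, (3, 3), activation="relu", name=f"{name}_conv2")(x)
    x = layers.MaxPooling2D((2, 2), name=f"{name}_pool")(x)
    x = layers.Dropout(0.25, name=f"{name}_drop1")(x)
    x = layers.Flatten(name=f"{name}_flat")(x)
    x = layers.Dense(64, activation="relu", name=f"{name}_dense")(x)
    return layers.Dropout(0.5, name=f"{name}_drop2")(x)


def build_fused_cnn(n_branches, input_shape=(33, 11, 1), n_classes=2):
    """n_branches = 2, 3, 4 sketch the 2-CNN, 3-CNN and 4-CNN models."""
    inputs = layers.Input(shape=input_shape)
    branches = [cnn_branch(inputs, f"cnn{i + 1}") for i in range(n_branches)]
    fused = branches[0]
    for branch in branches[1:]:
        # Successive concatenation: (CNN1, CNN2), then CNN3, then CNN4
        fused = layers.Concatenate()([fused, branch])
    outputs = layers.Dense(n_classes, activation="softmax")(fused)
    model = models.Model(inputs, outputs, name=f"fused_{n_branches}cnn")
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


model_4cnn = build_fused_cnn(4)
```

Concatenating pairwise, as described in the text, yields the same feature vector as a single `Concatenate` over all branches; the loop simply mirrors the description.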

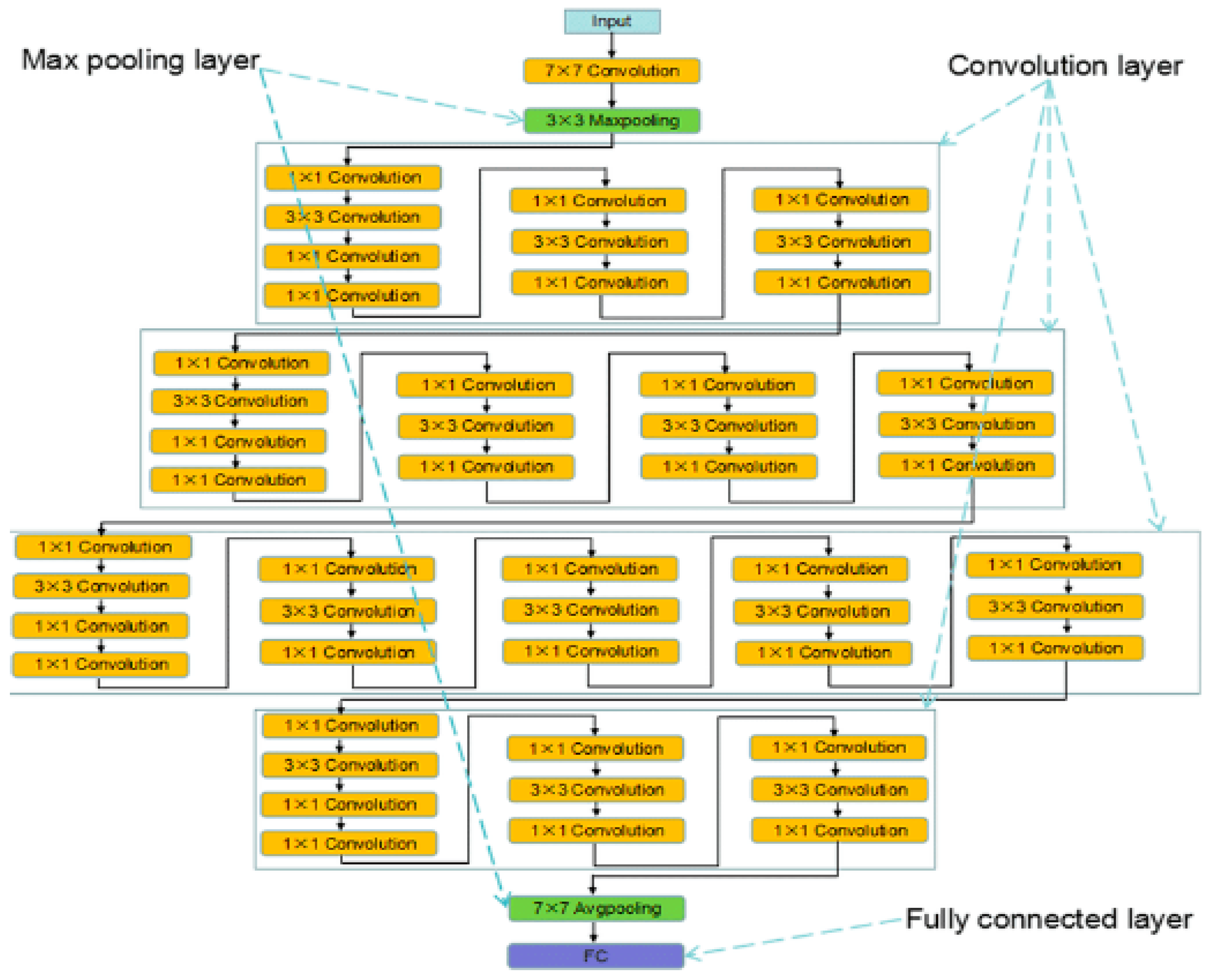

3.4.5. ResNet50 Model Architecture

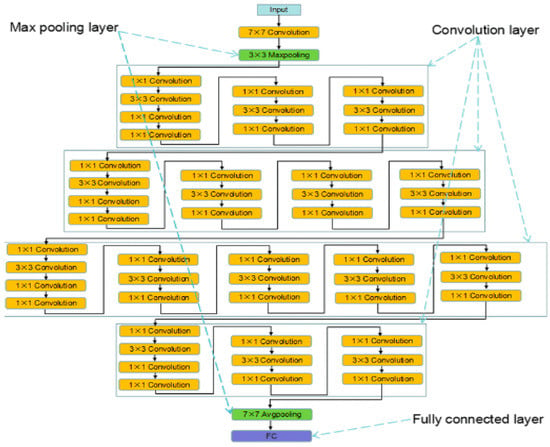

The ResNet50 model (Figure 6) is a 50-layer-deep convolutional neural network whose distinctive component is the residual learning block. Besides the usual connection from each layer to the next, each block has a shortcut (skip) connection that jumps over two to three layers and adds the block's input to their output, allowing information to flow directly through the network.
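To make the shortcut idea concrete, the sketch below builds a single bottleneck identity block of the kind stacked in ResNet50: 1 × 1, 3 × 3, and 1 × 1 convolutions whose output is added to the block input. It is a simplified illustration: the blocks that change resolution or channel count use a projection shortcut, omitted here, and in practice the pre-built `tf.keras.applications.ResNet50` would be used instead of a hand-written block. `bottleneck_identity_block` is a hypothetical name.

```python
from tensorflow.keras import layers


def bottleneck_identity_block(x, filters):
    """Simplified ResNet50-style bottleneck block with an identity shortcut.

    The input must already have 4 * filters channels so that it can be
    added to the block output without a projection.
    """
    shortcut = x
    y = layers.Conv2D(filters, (1, 1))(x)               # reduce channels
    y = layers.BatchNormalization()(y)
    y = layers.Activation("relu")(y)
    y = layers.Conv2D(filters, (3, 3), padding="same")(y)
    y = layers.BatchNormalization()(y)
    y = layers.Activation("relu")(y)
    y = layers.Conv2D(4 * filters, (1, 1))(y)           # restore channels
    y = layers.BatchNormalization()(y)
    y = layers.Add()([y, shortcut])                     # residual connection: F(x) + x
    return layers.Activation("relu")(y)


inputs = layers.Input(shape=(8, 8, 256))
outputs = bottleneck_identity_block(inputs, filters=64)
```

Because the block only has to learn the residual F(x) on top of the identity, gradients can pass through the addition unchanged, which is what makes very deep stacks such as ResNet50 trainable.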

Figure 6.

ResNet50 model architecture.

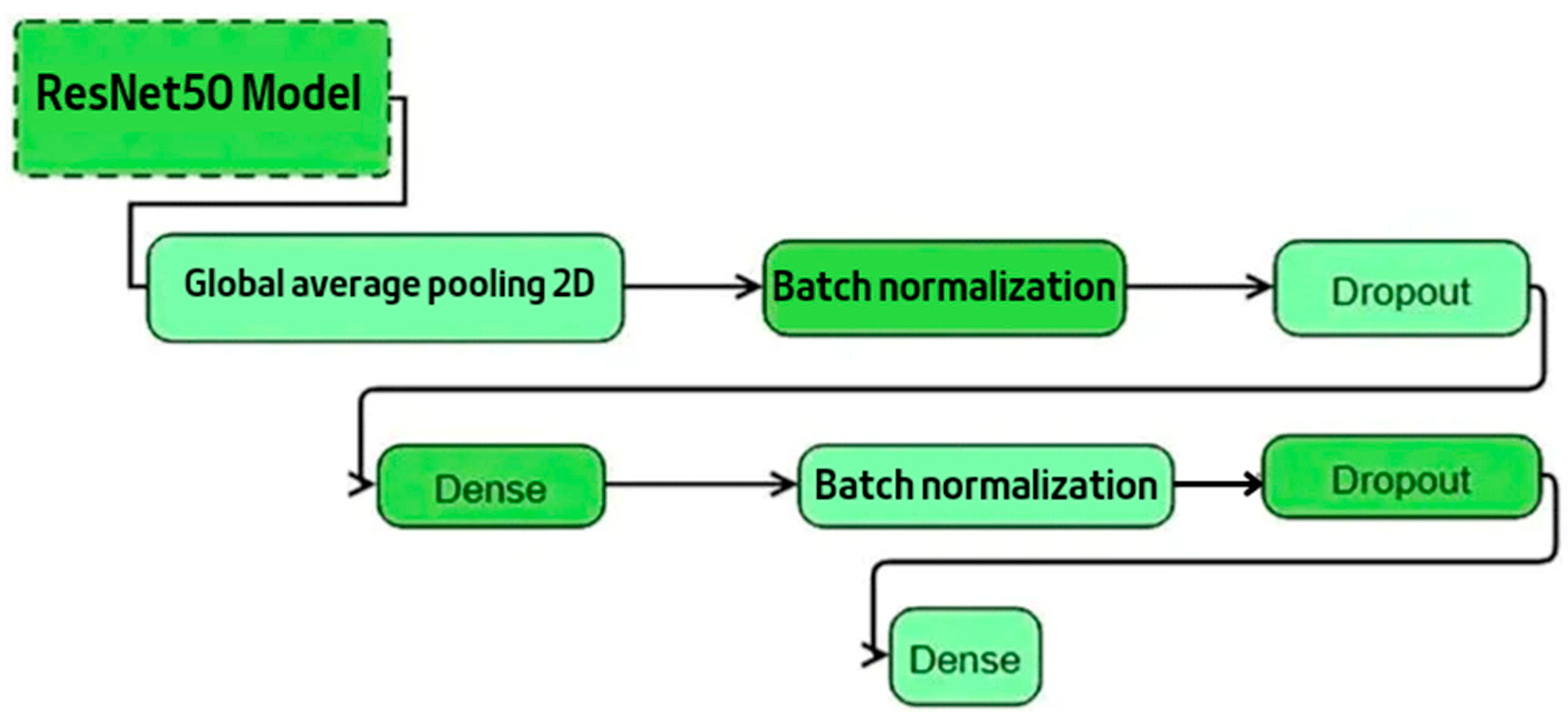

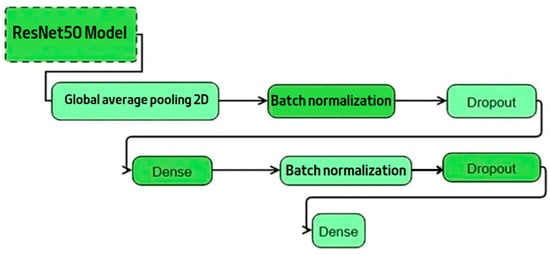

In this work, we used the pre-trained ResNet50 model, whose output is passed through the following layers (Figure 7):

Figure 7.

Architecture of the transfer learning with ResNet50 model.

- GlobalAveragePooling2D, which averages over the spatial dimensions and leaves the channel dimension unchanged. The individual spatial values are therefore not kept; only their average is.

- Batch normalization, a technique for training very deep neural networks that normalizes the inputs of a layer over each mini-batch. This stabilizes the learning process and considerably reduces the number of training epochs required to train deep networks.

- Dropout, a regularization technique that reduces overfitting in neural networks, with a rate of 0.5.

- A dense layer with 512 neurons and the ReLU activation function.

- Batch normalization.

- Dropout with a rate of 0.5.

- A dense layer with two neurons and the softmax activation function.
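The head described above can be sketched with `tf.keras.applications.ResNet50` and the functional API. The 64 × 64 × 3 input size, the frozen backbone, and the loss are assumptions: the text states only that a pre-trained ResNet50 without its top layers is used. Single-channel spectrograms would also need to be resized and repeated across three channels to match the ImageNet weights. `build_resnet50_transfer` is a hypothetical name.

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def build_resnet50_transfer(input_shape=(64, 64, 3), weights="imagenet", n_classes=2):
    """Pre-trained ResNet50 backbone (no top) followed by the head listed above."""
    base = tf.keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = False  # assumption: backbone frozen, only the new head is trained
    x = layers.GlobalAveragePooling2D()(base.output)
    x = layers.BatchNormalization()(x)
    x = layers.Dropout(0.5)(x)
    x = layers.Dense(512, activation="relu")(x)
    x = layers.BatchNormalization()(x)
    x = layers.Dropout(0.5)(x)
    outputs = layers.Dense(n_classes, activation="softmax")(x)
    model = models.Model(base.input, outputs)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

`build_resnet50_transfer()` downloads the ImageNet weights on first use; `build_resnet50_transfer(weights=None)` builds the same architecture with random weights, which is convenient for a quick shape check.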

4. Performance Criteria

4.1. Used Optimizer

An optimizer is used to minimize the prediction error (the loss function) and make the predictions as accurate as possible. We used the Adam (Adaptive Moment Estimation) optimizer, a first-order gradient-based algorithm for optimizing stochastic objective functions based on adaptive estimates of lower-order moments; it is among the most widely used optimizers.
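The Adam update rule can be written in a few lines of NumPy. This sketch follows the algorithm of Kingma and Ba with their suggested β1 = 0.9, β2 = 0.999, and ε = 1e-8; the learning rate of 0.1 is chosen only for the toy problem below (their suggested default is 0.001). `adam_minimize` is a hypothetical helper, not the TensorFlow optimizer used to train the models.

```python
import numpy as np


def adam_minimize(grad_fn, theta0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a function, given its gradient, with the Adam update rule."""
    theta = np.array(theta0, dtype=float)
    m = np.zeros_like(theta)  # first-moment estimate (running mean of gradients)
    v = np.zeros_like(theta)  # second-moment estimate (running mean of squared gradients)
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g**2
        m_hat = m / (1 - beta1**t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2**t)
        theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta


# Usage: minimize f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3)
theta_star = adam_minimize(lambda th: 2 * (th - 3.0), theta0=[0.0])
```

Dividing by the square root of the second-moment estimate gives each parameter its own effective step size, which is why Adam usually needs little learning-rate tuning.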

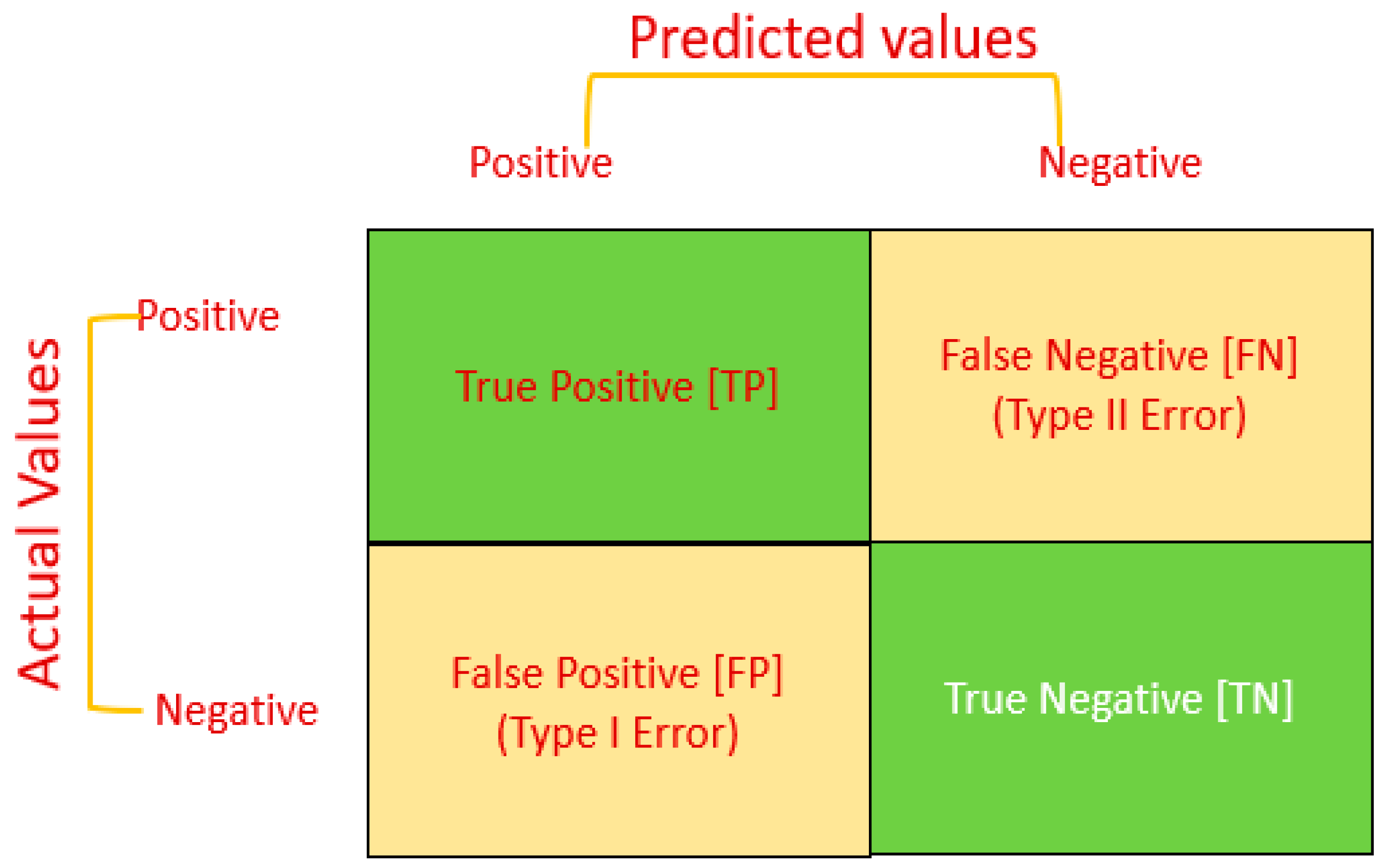

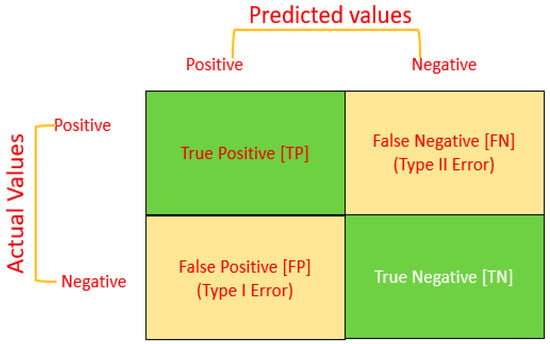

4.2. Definition of Terms

To determine the effectiveness of a classifier, four types of outcomes must be distinguished for the target class: TP (true positive), TN (true negative), FP (false positive), and FN (false negative).

- TP: correctly predicted positive values, i.e., the actual class is yes and the predicted class is also yes.

- TN: correctly predicted negative values, i.e., the actual class is no and the predicted class is also no.

False positives and false negatives occur when the actual class conflicts with the predicted class.

- FP: the actual class is no but the predicted class is yes.

- FN: the actual class is yes but the predicted class is no.

Arranged in a confusion matrix, these four counts show where the classification model is confused when making predictions, revealing both how many mistakes it makes and of which type.
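Counting the four outcomes from label vectors is straightforward. The sketch below treats the preictal class as positive (label 1), which is an assumption about the label encoding; `confusion_counts` is a hypothetical helper.

```python
import numpy as np


def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, TN, FP, FN) for a binary classification."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    actual_pos = y_true == positive
    predicted_pos = y_pred == positive
    tp = int(np.sum(actual_pos & predicted_pos))    # actual yes, predicted yes
    tn = int(np.sum(~actual_pos & ~predicted_pos))  # actual no, predicted no
    fp = int(np.sum(~actual_pos & predicted_pos))   # actual no, predicted yes
    fn = int(np.sum(actual_pos & ~predicted_pos))   # actual yes, predicted no
    return tp, tn, fp, fn


counts = confusion_counts([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])  # -> (2, 1, 1, 1)
```

scikit-learn's `sklearn.metrics.confusion_matrix` gives the same counts; for 0/1 labels, `confusion_matrix(y_true, y_pred).ravel()` returns them in the order TN, FP, FN, TP.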

From the values of the confusion matrix (Figure 8), we calculated the following evaluation metrics: accuracy, precision, recall, and F1-score.

Figure 8.

Confusion matrix.
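For reference, the standard definitions of these metrics in terms of the confusion-matrix counts are given below. The text does not state how the per-model values in Table 2 are averaged over the two classes (for example, macro or weighted averaging), so these are the per-class formulas for the positive class.

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
F_1              &= 2\,\frac{\text{Precision}\cdot\text{Recall}}{\text{Precision} + \text{Recall}}
\end{aligned}
```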

4.3. Experimental Results

In this section, we present the main results obtained with the 1-CNN, 2-CNN, 3-CNN, and 4-CNN models and with transfer learning with ResNet50.

Each model was trained for 30 epochs.

The models were implemented in Python 3 with the TensorFlow framework, using Jupyter Notebook.

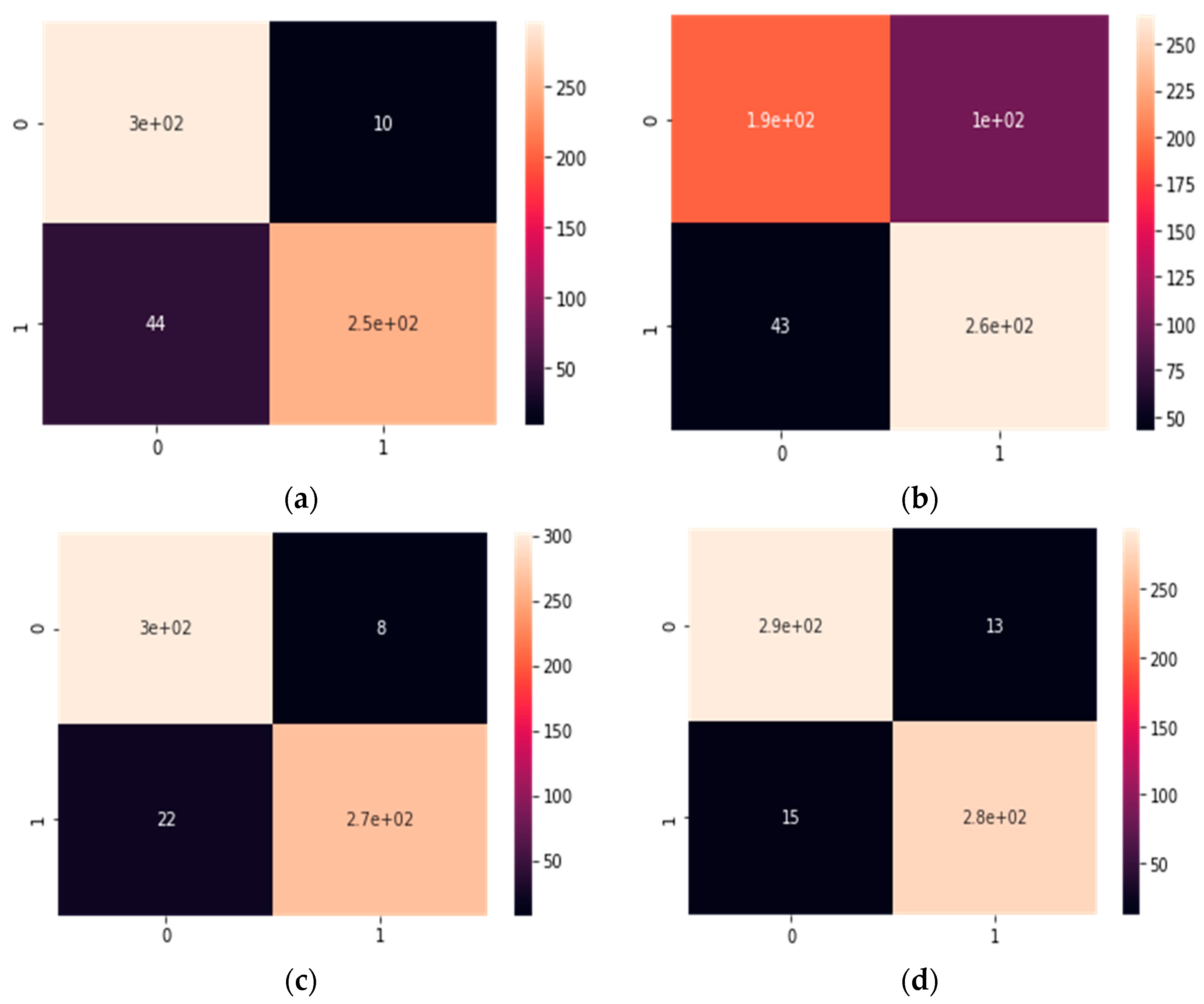

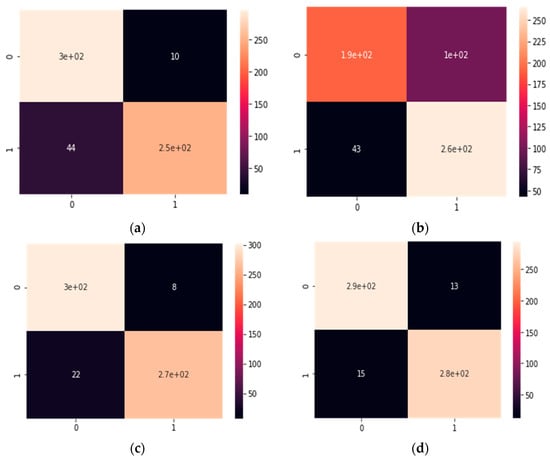

The confusion matrices obtained by the 1-CNN, 2-CNN, 3-CNN, 4-CNN, and transfer learning with ResNet50 models are shown in Figure 9.

Figure 9.

Confusion matrix obtained by the (a) 1-CNN, (b) 2-CNN, (c) 3-CNN, (d) 4-CNN, and (e) transfer learning with ResNet50 models.

From Figure 9, we can extract the total numbers of correctly classified and misclassified segments obtained by the 1-CNN, 2-CNN, 3-CNN, 4-CNN, and transfer learning with ResNet50 models. The total numbers of predicted preictal and interictal events, together with the precision and error rates, are presented in Table 1.

Table 1.

Precision and error rate of 1-CNN, 2-CNN, 3-CNN, 4-CNN, and transfer learning with ResNet50 models.

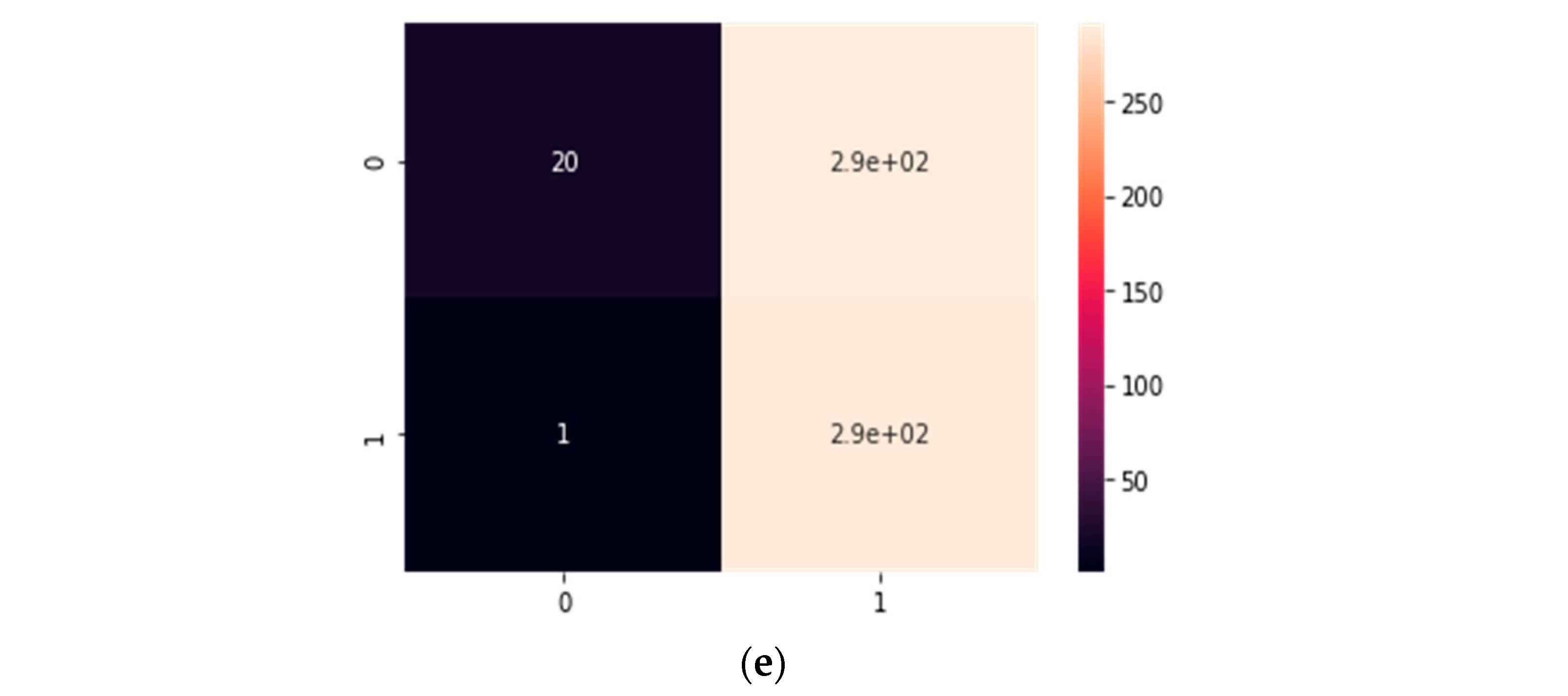

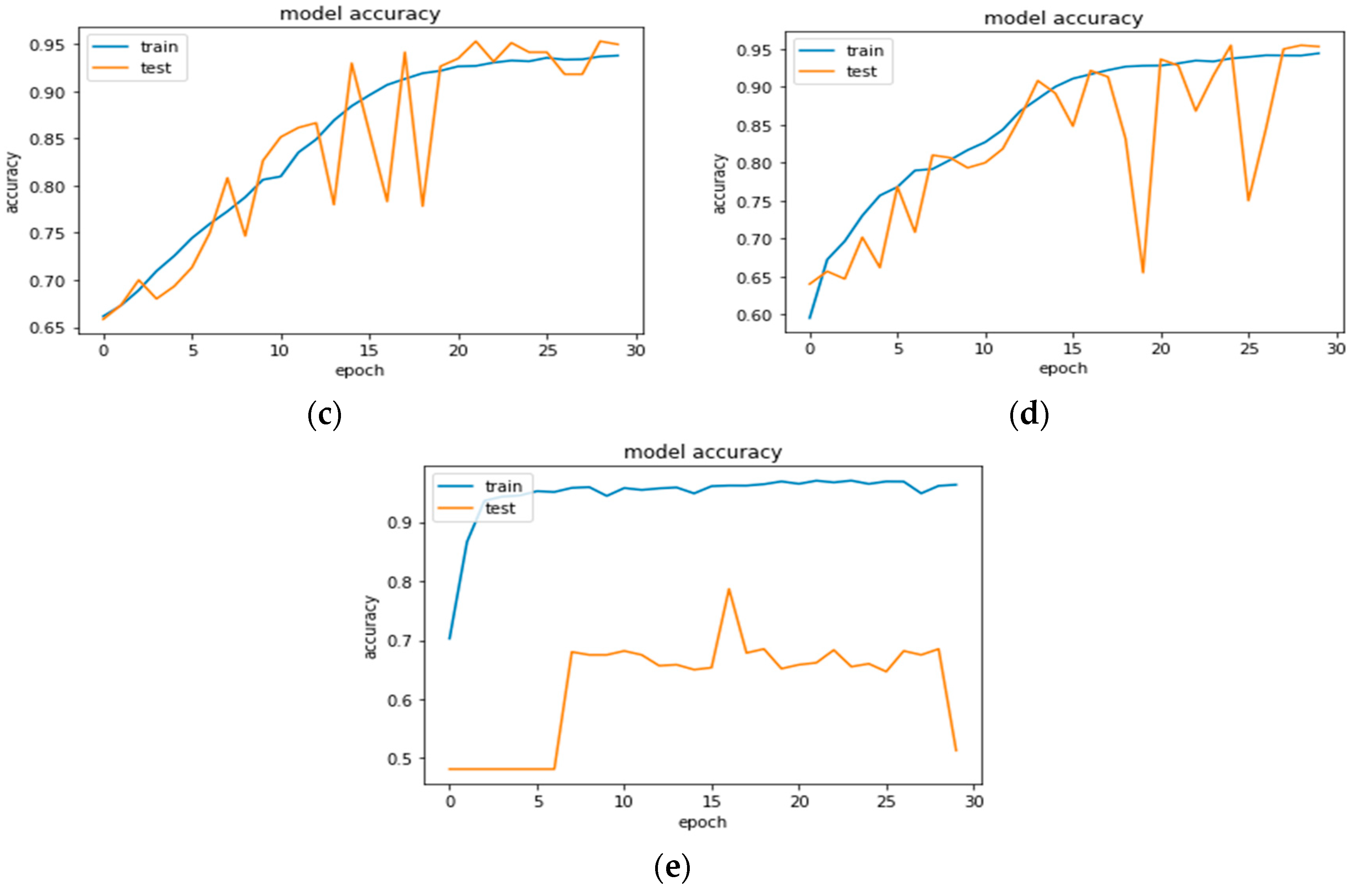

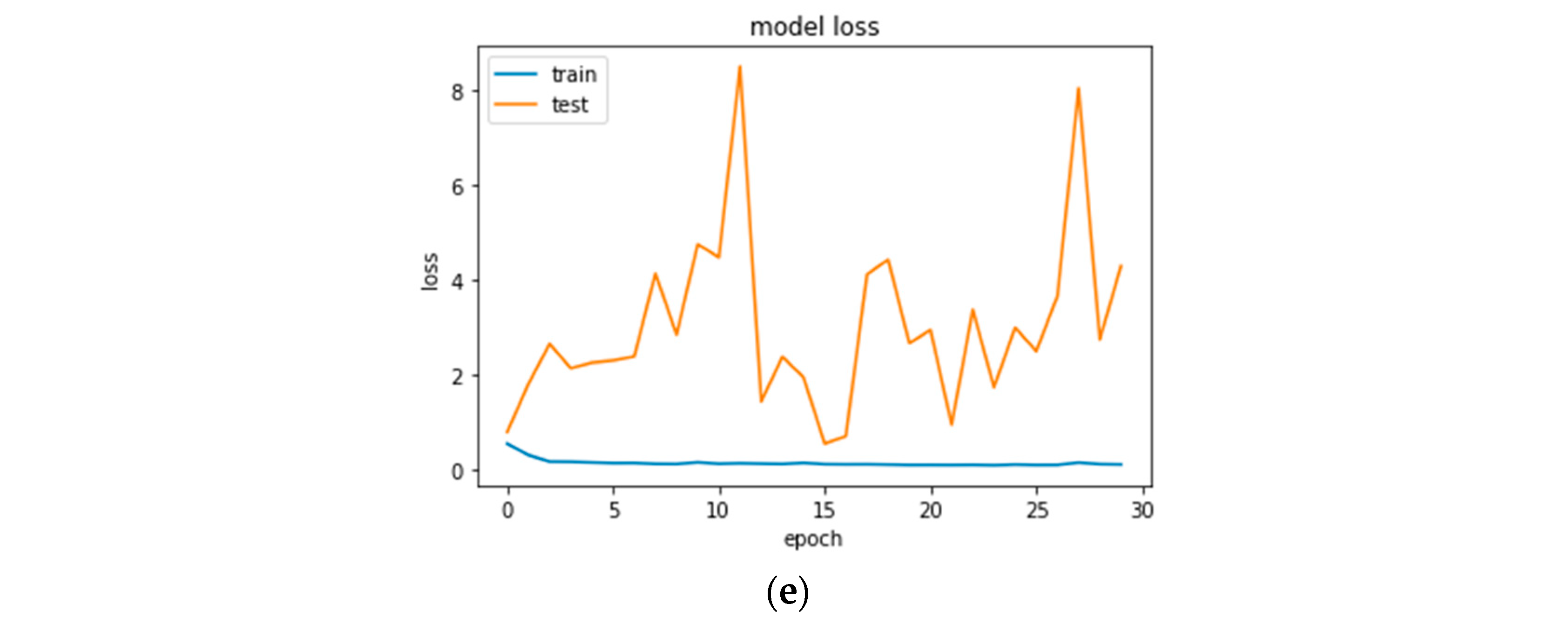

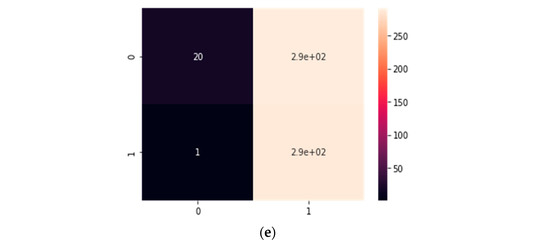

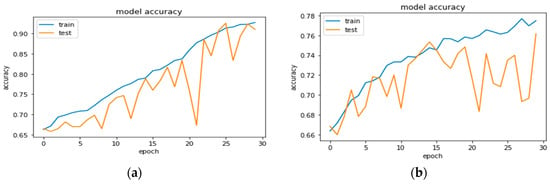

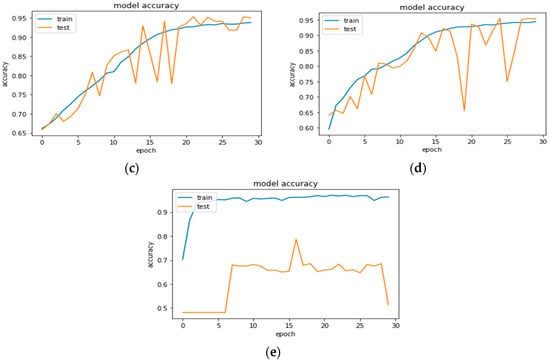

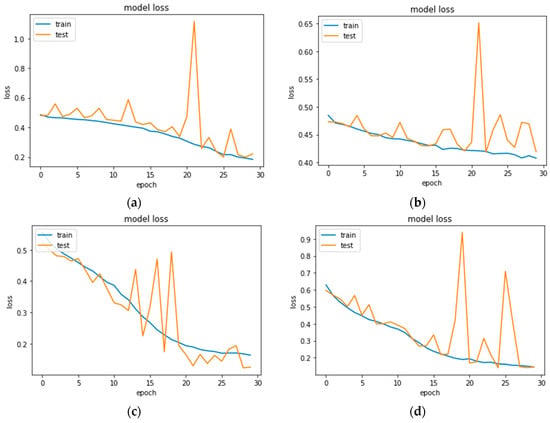

The training and validation accuracy and loss curves are plotted in Figure 10 and Figure 11.

Figure 10.

Accuracy plots for (a) 1-CNN, (b) 2-CNN, (c) 3-CNN, (d) 4-CNN, and (e) transfer learning with ResNet50.

Figure 11.

Loss plots for (a) 1-CNN, (b) 2-CNN, (c) 3-CNN, (d) 4-CNN, and (e) transfer learning with ResNet50.

Figure 10 and Figure 11 show the accuracy and loss of our five proposed models during the training and validation phases. The training loss and accuracy continued to improve, and both training and test accuracy increased over the epochs. A gap remained between the test curves and the training curves, with the training curve above the test curve, but training stabilized. Figure 10a,c,d and Figure 11a,c,d show a good fit. In Figure 10b and Figure 11b, a large gap remains between the two curves. In Figure 10e and Figure 11e, the curves suggest that the test dataset may be easier for the model to predict than the training dataset.

Table 2 summarizes the evaluation metrics obtained by the five models.

Table 2.

Classification report of DL models.

5. Discussion

People with epilepsy suffer from unexpected seizures; predicting these events can help prevent sudden accidents.

In this work, we used iEEG data for the prediction and diagnosis of epileptic seizures.

The results in Table 2 show that the two methods based on the fusion of three CNNs (3-CNN) and of four CNNs (4-CNN) each achieve an accuracy of 95%. The 3-CNN gives an accuracy value of 95.0%, a recall of 94.5%, and an F1-score of 95.0%. The 4-CNN gives an accuracy value of 95.5%, a recall of 95.5%, and an F1-score of 95.0%.

The transfer learning method based on ResNet50 gives the lowest performance of the proposed methods, with an accuracy of 51%, a precision of 72.5%, a recall of 53.0%, and an F1-score of 39.0%.

Deep learning opens new doors to intelligent diagnostics in medicine and healthcare, especially in iEEG signal processing.

In our analysis, we use accuracy to compare the models: because our data are patient data, the true positives and true negatives matter most for giving a general picture of the interictal phase.

Table 3 compares the accuracy of our models for the automatic prediction of epileptic seizures with that of other methods. It indicates that our models outperform these state-of-the-art models in terms of accuracy.

Table 3.

Comparison value of accuracy for each model.

In [15], the authors propose a new hybrid DL model, DenseNet-LSTM, for patient-specific epileptic seizure prediction from scalp EEG data. This method achieves an accuracy of 93.28%.

In [16], the author proposes a method for recognizing preictal and interictal EEG signals, an important step toward the early detection of epileptic seizures. Features were extracted from spectrogram images of the EEG signals with pre-trained CNN models and then classified with an SVM, reaching an accuracy of around 90%.

6. Conclusions

This work addresses seizure prediction using deep learning methods. Electrical brain activity has become a highly active area of scientific research for exploring essential pathologies of the human brain.

We proposed five models (1-CNN, 2-CNN, 3-CNN, 4-CNN, and transfer learning with ResNet50) for the prediction of epileptic seizures. The results show that the two methods based on the fusion of three CNNs (3-CNN) and of four CNNs (4-CNN) each achieve an accuracy of 95%. The 3-CNN gives an accuracy value of 95.0%, a recall of 94.5%, and an F1-score of 95.0%. The 4-CNN gives an accuracy value of 95.5%, a recall of 95.5%, and an F1-score of 95.0%.

Future work could further improve prediction accuracy, allowing doctors to plan treatment more quickly and in a more informed manner. Future research could also reduce the number of model parameters. This work could also be extended by merging EEG and electrocardiogram (ECG) data, improving the classifiers, and applying simpler feature extraction methods.

Author Contributions

Methodology, O.O. and A.E.; Project administration, H.H.; Writing—original draft, O.O.; Writing—review & editing, A.E. All authors have read and agreed to the published version of the manuscript.

Funding

The authors thank the Natural Sciences and Engineering Research Council of Canada (NSERC) and the New Brunswick Innovation Foundation (NBIF) for the financial support of the global project. These granting agencies did not contribute to the design of the study or to the collection, analysis, and interpretation of data.

Data Availability Statement

The dataset used in this study is public and can be found at the following link: https://www.kaggle.com/c/seizure-prediction/data, accessed on 15 January 2022.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cesar, O.E.; Omar, P.; Sebastian, S.C.; Juvenal, R.R.; Rebeca, R.V. A Comparative Study of Time and Frequency Features for EEG Classification. In Proceedings of the VIII Latin American Conference on Biomedical Engineering and XLII National Conference on Biomedical Engineering, Cancún, Mexico, 2–5 October 2020; pp. 91–97.

- Yang, Y.; Zhou, M.; Niu, Y.; Li, C.; Cao, R.; Wang, B.; Yan, P.; Ma, Y.; Xiang, J. Epileptic seizure prediction based on permutation entropy. Front. Comput. Neurosci. 2018, 12, 55.

- Sánchez-Reyes, L.M.; Rodríguez-Reséndiz, J.; Avecilla-Ramírez, G.N.; García-Gomar, M.-L.; Robles-Ocampo, J.-B. Impact of EEG Parameters Detecting Dementia Diseases: A Systematic Review. IEEE Access 2021, 9, 78060–78074.

- Tsiouris, K.M.; Pezoulas, V.C.; Zervakis, M.; Konitsiotis, S.; Koutsouris, D.D.; Fotiadis, D.I. A long short-term memory deep learning network for the prediction of epileptic seizures using EEG signals. Comput. Biol. Med. 2018, 99, 24–37.

- Khan, H.; Marcuse, L.; Fields, M.; Swann, K.; Yener, B. Focal onset seizure prediction using convolutional networks. IEEE Trans. Biomed. Eng. 2018, 65, 2109–2118.

- Truong, N.D.; Nguyen, A.D.; Kuhlmann, L.; Bonyadi, M.R.; Yang, J.; Ippolito, S.; Kavehei, O. Convolutional neural networks for seizure prediction using intracranial and scalp electroencephalogram. Neural Netw. 2018, 105, 104–111.

- Usman, S.M.; Khalid, S.; Aslam, M.H. Epileptic seizures prediction using deep learning techniques. IEEE Access 2020, 8, 39998–40007.

- Truong, N.D.; Zhou, L.; Kavehei, O. Semi-supervised seizure prediction with generative adversarial networks. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Berlin, Germany, 23–27 July 2019.

- Daoud, H.; Bayoumi, M.A. Efficient epileptic seizure prediction based on deep learning. IEEE Trans. Biomed. Circuits Syst. 2019, 13, 804–813.

- Wei, X.; Zhou, L.; Zhang, Z.; Chen, Z.; Zhou, Y. Early prediction of epileptic seizures using a long-term recurrent convolutional network. J. Neurosci. Methods 2019, 327, 108395.

- Ortiz-Echeverri, C.J.; Salazar-Colores, S.; Rodríguez-Reséndiz, J.; Gómez-Loenzo, R.A. A New Approach for Motor Imagery Classification Based on Sorted Blind Source Separation, Continuous Wavelet Transform, and Convolutional Neural Network. Sensors 2019, 19, 4541.

- Asharindavida, F.; Shamim Hossain, M.; Thacham, A.; Khammari, H.; Ahmed, I.; Alraddady, F.; Masud, M. A forecasting tool for prediction of epileptic seizures using a machine learning approach. Concurr. Comput. Pract. Exp. 2020, 32, e5111.

- Hussein, R.; Ahmed, M.O.; Ward, R.; Wang, Z.J.; Kuhlmann, L.; Guo, Y. Human Intracranial EEG Quantitative Analysis and Automatic Feature Learning for Epileptic Seizure Prediction. IEEE Trans. Biomed. Eng. 2019, 66, 1–13.

- Ozcan, A.R.; Erturk, S. Seizure prediction in scalp EEG using 3D convolutional neural networks with an image-based approach. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2284–2293.

- Ryu, S.; Joe, I. A Hybrid DenseNet-LSTM Model for Epileptic Seizure Prediction. Appl. Sci. 2021, 11, 7661.

- Toraman, S. Preictal and interictal recognition for epileptic seizure prediction using pre-trained 2D-CNN models. Traitement Signal 2020, 37, 1045–1054.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).