Abstract

Although educational robots are known for their capability to support language learning, how actual interaction processes lead to positive learning outcomes has not been sufficiently examined. To explore the instructional design and the interaction effects of robot-assisted language learning (RALL) on learner performance, this study systematically reviewed twenty-two empirical studies published between 2010 and 2020. Through an inclusion/exclusion procedure, general research characteristics such as the context, target language, and research design were identified. Further analysis of oral interaction design, including language teaching methods, interactive learning tasks, interaction processes, interactive agents, and interaction effects, showed that the communicative and storytelling approaches served as the dominant methods, complemented by total physical response and audiolingual methods in RALL oral interactions. The review provides insights into how educational robots can facilitate oral interactions in language classrooms, as well as how such learning tasks can be designed to effectively utilize robotic affordances to fulfill functions that used to be provided by human teachers alone. Future research directions point to a focus on meaning-based communication and intelligibility in oral production among language learners in RALL.

1. Introduction

Educational robots are known as capable interactive pedagogical agents in language learning situations. Previous research has reported on educational robots’ affordances for training skills in one’s first, second, or foreign language [1,2,3]. Despite claims about the potential of educational robots for helping learners improve language skills [4], no previous review has focused on instructional design that leads to positive learning outcomes in robot-assisted oral interactions. This review study, therefore, aims to fill this gap by analyzing 22 empirical studies in terms of the interactive design of oral tasks by highlighting the teaching methods used, the oral task types, the role served by the robot and the instructor/facilitator, as well as their effectiveness in improving oral competence.

1.1. Scope and Definitions

Educational robots can be divided into hands-on robots and service robots [5]. While hands-on robots are programmable robots for engineering-related practice (e.g., LEGO Mindstorms), service robots are intelligent robots that can be used by teachers as complementary tools for incorporating specific learning content and activities suitable for their teaching contexts [5,6]. This study focuses on educational robots used in language education. In language learning, the use of educational service robots can effectively facilitate the presentation of digital content, task repeatability, interactivity, flexibility for incorporating different learning theories, and embodied interactions conducive to learning [7,8]. In particular, interactions that enable oral communication between learners and robots serve as the core of robot-assisted language learning (RALL).

Defined as interactive language learning through systems that involve the physical presence of a robot, RALL provides learners with face-to-face communication opportunities that resemble real conversation situations [9]. In RALL, verbal (e.g., question-and-answer) and non-verbal modalities (e.g., gesturing, nodding, face tracking) can be used to facilitate language practice, leading to increased learning motivation, interest, and engagement, as well as cognitive gains [9]. Furthermore, based on principles of instructional design for technology-enhanced language learning, the appropriate use of language teaching methods for designing learning activities [10], as well as the roles played by various interactive agents in RALL, needs to be examined closely in order to yield insights on effective pedagogy [11]. This systematic review thus provides details about actions taken by various interacting agents (e.g., learner, robot, instructor/facilitator) in RALL and their effects on learning outcomes to help language practitioners develop interactive course design using robots in their classrooms.

1.2. The Review Study

This study aimed to conduct a systematic review, which is a type of review under the Search, Appraisal, Synthesis, and Analysis (SALSA) framework [12,13]. A systematic review adheres to a set of guidelines to address research questions by identifying reliable and quality data on a topic. Researchers who conduct this type of review (a) undertake exhaustive, comprehensive searching, (b) apply inclusion/exclusion to appraise the data, (c) synthesize the data through a narrative accompanied by tabular results, and (d) analyze what is known to provide recommendations for practice, or analyze what is unknown and state uncertainty around findings with recommended directions for future research [12].

Previous research has investigated the affordances of educational robots and analyzed the learning goals of robot use for different age groups [7]. However, one research topic that remains unexplored in RALL is the cooperation between the teacher and the robot, and the language teaching and learning model that results from this cooperation mode [5]. It is therefore necessary to delve into the implementation of RALL in the classroom by focusing on the interactions, including the activity design, the interactive agents involved, and the interaction processes. It is also important to identify how these interaction elements affect the learning outcomes and shape learners’ experiences in RALL. Four research questions were therefore formulated as follows:

- RQ1: What language teaching methods are incorporated in the design of oral interactions in RALL?

- RQ2: Which types of oral interaction task design are employed in RALL?

- RQ3: What roles do robots and instructors fulfill when facilitating oral interactions in RALL?

- RQ4: What are the learning outcomes of RALL oral interactions in terms of learners’ cognition, language skills, and affect?

2. Literature Review

2.1. Oral Interactions in Language Classrooms

Traditionally, interaction is the process of “face-to-face” action channeled either verbally through written or spoken words, or non-verbally through physical means such as eye contact, facial expressions, and gesturing [14]. In second or foreign language development, comprehensible input plays an important role [15]. That is, language learners must be able to understand the linguistic input provided to them in order to communicate authentically through spoken or written forms. In particular, classroom oral interaction involves listening to authentic linguistic output from others and responding appropriately to continue in a communicative event such as role play, dialogue, or problem-solving [16,17]. Classroom oral exchanges involve two interlocutors speaking and listening to each other in order to predict the upcoming content of the communicative event and prepare for a response [18]. As a consequence, providing the context for negotiation of meaning becomes a crucial part of facilitating classroom oral exchanges that range from formal drilling to authentic, meaning-focused communication such as information exchange [19,20]. Aside from establishing the context for oral interactions, eliciting intended communication behaviors among learners is another goal for language instructors. According to Robinson [14], two types of interaction can be found in a classroom: verbal and non-verbal interaction. Verbal interaction refers to communicative events such as speaking to others in class, answering and asking questions, making comments, and taking part in discussions. Non-verbal interaction, on the other hand, refers to interacting through behaviors such as head nodding, hand raising, body gestures, and eye contact [17]. As educational robots assume humanoid forms, they can help achieve various types of classroom oral interactions in RALL.

2.2. Affordances of Educational Robots for Language Learning

As [21] reported, educational robots began to emerge in North America, South Korea, Taiwan, and Japan in the mid-2000s. These robots took anthropomorphic forms and assumed the role of peer tutors, care receivers, or learning companions. They have an outer appearance of anthropomorphized robots with faces, arms, mobile devices, and tablet interfaces attached to their chests [21]. With different functions such as voice/sound, facial, gestural, and position recognition, RALL is perceived to be more fun, credible, enjoyable, and interactive than computer-assisted language learning, which relies on mobile devices (e.g., smartphones or tablets) only. Different stimuli can be provided as robots assume roles such as human or animal characters that speak, move, or make gestures [21] to tell stories. The various multimodal sources of input and interactions make RALL a promising field with numerous possibilities in interactive design for language learning. In addition, as the robot-assisted learning mode is still in its infancy, there remains great potential for researchers and educators to postulate language learning models for best practices.

2.3. Human-Robot Interaction in RALL

Prior research has shown that human–robot interaction (HRI) can lead to language development. In a review study [22], comprehensive insights were provided about the effects of HRI on language improvement, including robots’ positive impact on learner motivation and emotions due to novelty effects, and the multifaceted robotic behaviors that provide social and pedagogical support to learners. By being immersed in real-life physical environments and manipulating real-life objects, learners can also experience embodied learning to improve their vocabulary, speaking, grammar, and reading. For example, whole-body movements and gestures have been found conducive to vocabulary learning.

Robots are capable of complementing humans in language learning scenarios that focus on specific language skills such as speaking, grammar, or reading. Studies have concluded that robots can help children gain vocabulary as well as human teachers can. Furthermore, the use of robots in language learning has a great impact on learners’ affective state, including learning-related emotions. In the presence of a humanoid robot instead of a human teacher, learners’ anxiety is reduced, and they are less afraid of making mistakes. Higher confidence has also been reported among teenage students when they practiced speaking skills in robot-assisted situations [22].

2.4. Applying Language Teaching Methods in Interactive Design in RALL

Cheng et al. [7] claimed that language education is ranked at the top as a learning domain with the application of educational robots. The reported types of language learning ranged from general and foreign language skills to second or additional language skills, and the popular age levels for applying RALL were between the ages of three and five (preschool) and prior to puberty (primary school), as these are two critical periods for language learning. Further connection needs to be made between language teaching methods and RALL instructional design. In this regard, the notion of Didaktik can be applied [23]. Didaktik is a German term comparable to the North American concept of instructional design that considers learner needs, task design, and learning materials. Jahnke and Liebscher [23] argued that an emphasis should be put on the role of the teacher and how his/her course design translates or connects to student learning and performance. The Didaktik system has three components: the instructor, the learner, and the course content or design. The design of second and/or foreign language learning activities involves the incorporation of teaching methods as a basis for the intended learning experience.

As outlined by [24], twentieth-century language instruction mainly employed a number of language teaching methodologies in second or foreign language learning settings. According to [24], language practitioners continuously swing between methodologies that are strictly managed and those that are more laissez-faire in terms of content and amounts. On one side of the pendulum swing stand the traditional methods developed in the early twentieth century, which include grammar translation, the direct method, and the reading method. By the mid-twentieth century, the audiolingual method (ALM) emerged mainly for teaching oral skills. Highlighting drill-based practice, ALM presents specific language structures (e.g., sentence patterns) to learners in a systematic and organized manner and helps them replace native language habits with target language habits. The method also includes pronunciation and grammar correction through drills.

Following ALM was the emergence of total physical response (TPR) and teaching proficiency through reading and storytelling (TPRS). As a method, TPR [25] directs learners to listen to commands in the target language and immediately respond with the commanded physical action. TPRS extended TPR and aimed to develop oral and reading fluency in the target language. By having learners tell interesting and comprehensible stories in the classroom, TPRS has been perceived as a useful technique for fostering 21st century speaking skills, connecting closely with the concept of comprehensible input and the natural approach [26].

As ALM gradually faded in the 1980s, communicative approaches such as communicative language teaching (CLT) became the dominant foreign and second language teaching paradigm, and have continued to gain popularity worldwide in the 21st century [27]. In a way, CLT makes up for the shortcomings of ALM by focusing on the functional aspect of language rather than the formal aspect. Therefore, CLT mainly trains learners’ communicative competence through authentic interactions (e.g., role-play scenarios) instead of ensuring pronunciation or grammatical accuracy [28]. CLT activities usually incorporate meaningful tasks such as interviews, role-play, and opinion giving [29].

3. Methods

3.1. Search Strategy

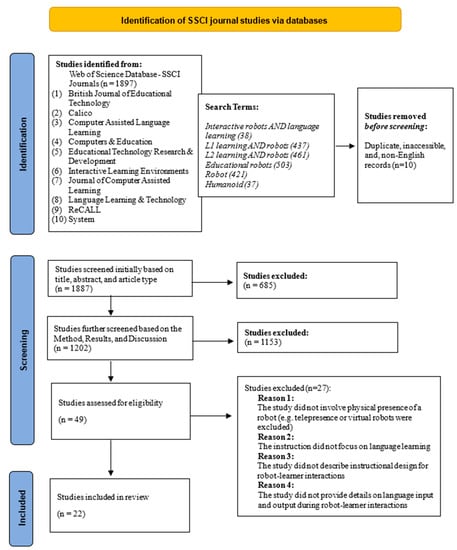

The authors employed a search strategy to retrieve articles published between 2010 and 2020 [30,31] in order to survey the development of RALL in the past decade. The databases included Web of Science, ERIC, and EBSCO, while journal sources included ten journals, most of which were from the Social Sciences Citation Index, in the field of educational technology and computer-assisted language instruction (e.g., Computers & Education, British Journal of Educational Technology, Computer-Assisted Language Learning, Educational Technology Research & Development, Interactive Learning Environments, System). The researchers conducted six searches using the following key terms: “Interactive robots AND language learning,” “L1 learning AND robots,” “L2 learning AND robots,” “Educational robots,” “Robot,” and “Humanoid,” which led to the retrieval of 1897 articles.

3.2. Study Selection

After the initial article retrieval, the researchers carried out a study selection process. The researchers first eliminated inaccessible, duplicate, and non-English articles, which reduced the number of articles to 1887. After these articles were removed, the remaining studies were screened by title, abstract, and type of study. Specifically, titles and abstracts that indicated the use of robots for language learning were selected. Also, only empirical studies were selected. Therefore, other article types such as review studies, book reviews, proceedings, and editorials were eliminated, leaving 1202 studies for further screening based on the Method, Results, and Discussion sections. In particular, the researchers evaluated the rigor of the Method section, the evidence of learning outcomes in the Results, and the pedagogical implications in the Discussion. This led to 49 eligible studies for inclusion/exclusion.

3.3. Eligibility: Inclusion/Exclusion Criteria

With a total of 49 studies eligible for assessment, rigorous inclusion/exclusion criteria were applied to obtain valid data on interactions in RALL. The criteria were as follows:

- The study must present physical use of robots;

- The study must focus on language learning;

- The study must employ rigorous methodology with sufficient details;

- The study must report robot-learner interactions in detail, including the specific language input and output during the interactions.

As shown in Figure 1, articles that failed to meet the inclusion criteria were removed. For example, studies that used virtual robots or studies with a focus on subjects other than language learning were removed. Similarly, studies that did not provide thorough accounts of the instructional design for oral interactions (including the language input and output in RALL) were eliminated. The final number of selected articles was twenty-two with the publication period spanning from 2010 to 2020.

Figure 1.

PRISMA flow chart showing the selection process (available online: http://www.prisma-statement.org/, accessed on 7 March 2021).
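The counts reported in Sections 3.1 to 3.3 can be tallied as a simple screening funnel. The following is a minimal sketch in Python that only restates the reported numbers and derives how many records were removed at each step; the stage labels and the helper name `exclusions` are paraphrases introduced for this illustration, not the authors' wording or tooling.

```python
# Screening funnel built from the counts reported in Sections 3.1-3.3.
# Stage labels are paraphrases chosen for this sketch (assumption).
stages = [
    ("Records retrieved from the six searches", 1897),
    ("After removing inaccessible, duplicate, and non-English articles", 1887),
    ("After screening by title, abstract, and study type", 1202),
    ("After screening the Method, Results, and Discussion sections", 49),
    ("After applying the inclusion/exclusion criteria", 22),
]


def exclusions(stages):
    """Return (label, remaining, removed_at_this_stage) for each stage after the first."""
    rows = []
    for (_, before), (label, after) in zip(stages, stages[1:]):
        if after > before:
            raise ValueError(f"Count increased at stage: {label}")
        rows.append((label, after, before - after))
    return rows


funnel = exclusions(stages)
for label, remaining, removed in funnel:
    print(f"{label}: {remaining} remaining ({removed} removed)")
```

A tally of this kind is mainly a consistency check: the removals at each stage should sum to the difference between the records retrieved and the studies finally included.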

3.4. Data Extraction

The data extraction process involved close reading of the 22 selected studies. First, the general research profile (see Table A1), with characteristics such as country, target language, implementation duration, research design, and technological components, was coded. Second, based on the Didaktik instructional design model, which includes three components (the instructor, the learner, and the course design), the researchers coded content on the learning activity, role of the robot as a pedagogical agent, interactive task design, language input and output, and learning outcome in terms of cognition, affect, and skill (see Table A2 and Table A3). Table 1 provides the coding scheme for the interactive oral task design (see Table A3).

Table 1.

Coding Scheme for Task Design for Oral Interactions in RALL.

3.5. Tabulations

A series of tabulations was conducted by one of the co-authors and one experienced research assistant. First, general characteristics were identified. For example, the target language for each study was categorized as (a) a first language, (b) a foreign language, or (c) a second language (see Table A1). Another general characteristic identified was the major theoretical foundations in RALL and their benefits and drawbacks across the 22 studies. The last general characteristic concerned the technological affordances in RALL, including the type of robot and the sensors used (see Table A1).

Second, the distribution of major language teaching methods (e.g., audiolingual method, communicative language teaching) applied in the 22 reviewed studies was tabulated (see Table A2). Many studies employed more than one language teaching method in their activities. Third, oral interaction tasks that were considered effective in the selected studies were categorized into (a) storytelling, (b) role-play, (c) action command, (d) question-and-answer, (e) drills (e.g., repeating/reciting), and (f) dialogue (see Table A3). Fourth, the roles played by the robot and the support provided by the instructor/facilitator were coded (see Table A2). The robot’s main roles included (a) role-play character, (b) action commander, (c) dialogue interlocutor, (d) learning companion, and (e) teacher assistant, while the support by human instructors/facilitators included (a) procedural support, (b) learning support, and (c) technical support. Fifth, the language input and output were coded (see Table A3). Specifically, the language input mode was categorized into (a) linguistic, (b) visual, (c) aural, (d) audiovisual, and (e) gestural/physical modes, and the language output was categorized into four levels based on linguistic complexity: (a) phonemic level (referring to the smallest sound unit in speech, e.g., the phonemes /b/, /æ/, and /t/ in the word bat), (b) lexical level, (c) phrasal level, and (d) sentential level. Throughout the inter-coding process, one of the researchers served as the first coder and created a coding scheme to train the second coder. Then, after initial coding trials on three studies, the two coders met and discussed the resulting discrepancies before engaging in another trial. After all the studies were coded, the inter-coder reliability in terms of percent agreement was calculated to be 87%.
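Percent agreement, the reliability measure named above, is the share of coded items on which the two coders assigned the same category. The following minimal sketch in Python illustrates the calculation; the function name `percent_agreement` and the eight example codes are hypothetical and serve only as an illustration, and they do not reproduce the review's coding data or its 87% result.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of items on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b):
        raise ValueError("Both coders must code the same number of items")
    if not coder_a:
        raise ValueError("At least one coded item is required")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical task-type codes for eight items (illustrative only, not the review's data).
first_coder = ["dialogue", "storytelling", "drill", "role-play",
               "dialogue", "question-and-answer", "action command", "drill"]
second_coder = ["dialogue", "storytelling", "drill", "dialogue",
                "dialogue", "question-and-answer", "action command", "question-and-answer"]

agreement = percent_agreement(first_coder, second_coder)
print(f"Percent agreement: {agreement:.1f}%")  # 6 of 8 items match -> 75.0%
```

In this toy example the coders disagree on two of the eight items, so the agreement is 75%. Percent agreement does not adjust for agreement that would occur by chance, which is worth keeping in mind when interpreting any single figure.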

3.6. Synthesis

Synthesis of the detailed instructional design for oral interactions in RALL was based on the type of task design and the actions performed by the robot, learners, and human facilitators/instructors. The researchers synthesized the coded data to connect the nature of each task type to the actual interactions induced by the task. For example, through storytelling, a robot could read a story aloud for the learner to listen to and receive the linguistic input. The learners could then be asked to recite, repeat, or act out the story in a role-play task to produce language output following the robot’s content delivery or action commands. Furthermore, the language input and output, as well as the type of teacher talk afforded by the robot in each oral interactive task among the 22 studies, were analyzed to help the researchers understand the mechanisms that enriched the oral interactions. The researchers sought evidence of stimulating and engaging elements in the designed oral tasks and found that the oral interactive tasks were conducive to heightening the level of motivation, interest, and cognitive engagement, which in turn fostered the development of oral skills in language education.

4. Results

4.1. General Characteristics

Several characteristics in the general profile of the 22 studies were worth noting: the geographic research settings, education levels, the target language for acquisition with the robot-assisted activities, the research design, theoretical bases, and technological affordances in RALL. The countries that implemented robot-assisted oral interactions for language learning included Taiwan (n = 6), Japan (n = 3), Sweden (n = 3), Iran (n = 3), South Korea (n = 2), the United States (n = 2), Turkey (n = 2), and Italy (n = 1). In terms of the distribution of RALL by learners’ education levels, the results showed that primary schools engaged their learners in RALL most frequently (n = 11), followed by preschools (n = 4), higher education (n = 4), and secondary schools (n = 3). This finding indicates that robots best serve children in formal, primary schooling years, as children between the ages of 7 and 12 (the primary schooling age in most countries) still find robots fun and appealing, as opposed to older teenagers, who might find them somewhat childish or less intellectually engaging. The second age group that benefited most from RALL was preschoolers. Similarly, toddlers and young children still enjoy interacting with humanoid robots. Coincidentally, primary school children and preschoolers belong to the two critical periods for language development. It is possible that since learners from these two developmental stages benefit most from enriched language learning activities, language educators devote more effort to incorporating robot-assisted oral interactive learning activities to engage learners from these two age cohorts.

Target languages in the 22 RALL studies were primarily foreign languages: English as a foreign language occurred most frequently (n = 14), followed by Russian (n = 1) and Dutch (n = 1). First and second language learning occurred less frequently, with three studies in each category. As for the research design, the majority of the studies employed either single-group (n = 7) or between-group (n = 6) experiments; some of these experiments adopted pre-/post-test instruments (n = 6), while others adopted a survey evaluation design (n = 2). Other research designs included quasi-experiments (n = 4), an ethnographic study design (n = 1), and system design and implementation evaluation (n = 1). Overall, the research instruments revealed a trend of using quantitative, summative assessment in RALL. Specifically, over 70% of the studies employed tests such as listening, speaking, word-picture association, vocabulary, reading, and writing tests to measure learners’ performance of target skills. Fewer than 15% used qualitative, formative assessment on skills such as storytelling and drawing artifacts. Although 29% of the studies did use video recording to collect data on learning performance, the assessment methods remained test-oriented in RALL.

Two major theoretical bases were identified among the RALL studies: technologies for creating human–robot relationships and embodied cognition through robot-based content design. The first theoretical basis was developing robots for forming human–robot relationships through HRI. Attempts to enable humanoid robots to autonomously interact with children using visual, auditory, and tactile sensors were realized [36]. Also, RFID tags enabled mechanisms such as identifying individual learners and adapting to their interactive behaviors to successfully engage learners in actual language use. Such findings support theoretical perspectives from social psychology by highlighting similarity and common ground in learning. When this perspective was applied to RALL, it was imperative that robots have attributes and knowledge similar to those of target users [36]. Doing so led to benefits such as engaged language use, improved oral skills, and higher motivation and interest in learning. However, novelty effects were reported [37]. Also, highly structured activities for autonomous robot responses led to little variation among learner responses. Recommendations were thus made about adapting robot behaviors to learners’ responses.

The second theoretical basis was applying embodied cognition through robot-based content design. Computer-based content design consists of a static user model and two-dimensional visual and audio content displayed on screen. Robot-based content design, by contrast, consists of dynamic user models with visual, audio, and tangible content delivered by humanoids whose human-like appearance and body parts allow them to perform face-to-face interactions [37]. In addition to tangible, interactive design, RALL design provided bidirectional interactive content through installed e-book materials, reaping the combined benefits of e-learning tools and embodied language learning to improve learners’ reading literacy, motivation, and habits [38].

As for technological affordances in RALL, the general functionalities included identifying multiple learners, recalling interaction history, speech recognition and synthesis, body movements, oral interactions, teaching, explaining, song playing, dancing, face recognition, language understanding and generation, dialogue interactions, motions on wheels, and interaction event tracking. Sensors such as wireless ID tags, eye/stomach/arm LEDs, RFID readers/sensors, infrared sensors, tactile sensors, and sonars were used to support the various affordances.

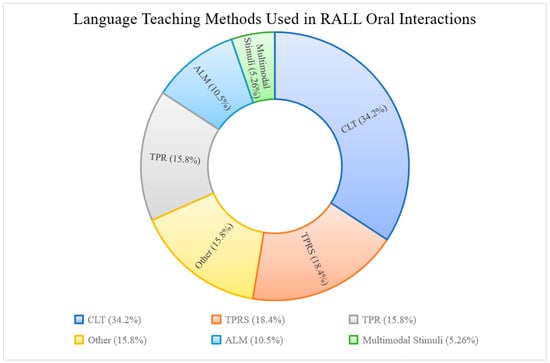

4.2. Language Methods Used in RALL Oral Interactions (RQ1)

The language teaching methods that were used to create RALL oral interactions were based on language instruction theories that emerged during the 20th and 21st centuries. Moreover, some studies employed more than one language teaching method in their RALL oral interaction activity design. Figure 2 shows that the most popular method adopted was CLT (n = 13), followed by TPRS (n = 7) and TPR (n = 6). Other methods, such as multimedia-enhanced instruction, learning by teaching, and socio-cognitive conflict (n = 6), as well as ALM (n = 4) and multimodal stimuli (n = 2), were also used. In addition, studies that adopted multiple language teaching methods employed combinations such as CLT plus TPR plus TPRS (n = 4), CLT plus TPR (n = 2), CLT plus TPR plus ALM (n = 1), ALM plus TPRS plus TPR (n = 1), and CLT plus TPRS (n = 1).

Figure 2.

Language teaching methods in RALL oral interactions. NOTE: CLT = Communicative Language Teaching. TPRS = Teaching Proficiency through Reading and Storytelling. TPR = Total Physical Response. ALM = Audiolingual Method.
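Because a single study could be coded with more than one teaching method, the per-method counts are not mutually exclusive: the counts reported above (13, 7, 6, 6, 4, and 2) sum to 38, which exceeds the 22 reviewed studies. The following minimal sketch in Python shows how such a multi-label tally can be produced; the five study records and the variable names are hypothetical and introduced only for illustration, and they are not the coded data in Table A2.

```python
from collections import Counter

# Hypothetical coding of five studies (illustrative only, not the review's data).
# Each study may be assigned more than one teaching method.
coded_methods = {
    "Study A": {"CLT"},
    "Study B": {"CLT", "TPR", "TPRS"},
    "Study C": {"ALM"},
    "Study D": {"CLT", "TPR"},
    "Study E": {"TPRS"},
}

# Number of studies that used each method (a study counts once per method).
method_counts = Counter(
    method for methods in coded_methods.values() for method in methods
)

# Number of studies using each distinct combination of two or more methods.
combo_counts = Counter(
    " plus ".join(sorted(methods))
    for methods in coded_methods.values()
    if len(methods) > 1
)

for method, n in method_counts.most_common():
    print(f"{method}: n = {n}")
print("Total method assignments:", sum(method_counts.values()))
print("Number of studies:", len(coded_methods))
```

In this toy data set, five studies yield eight method assignments, which mirrors why the category totals in a multi-label tabulation should be read as frequencies of use rather than as a partition of the studies.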

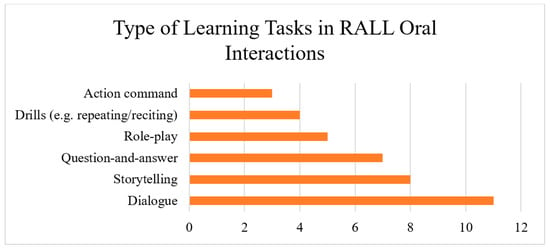

4.3. Task Design for Oral Interactions in RALL (RQ2)

The task design for oral interactions was analyzed from a learner-centered perspective. The instructional design elements included (a) the task itself, (b) the language input provided by the robot and received by the learner, and (c) the oral language output produced by the learner. The task types that led to oral interactions included dialogue (n = 11), storytelling/story acting (n = 8), question-and-answer (n = 7), role-play (n = 5), drill (n = 4), and action commands (n = 3). The instruction embedded in the task design was more often form-focused (n = 12) than meaning-focused (n = 8), with only a few studies including both in the design (n = 2). Figure 3 presents the results on the interactive task design.

Figure 3.

Type of task design for RALL oral interactions.

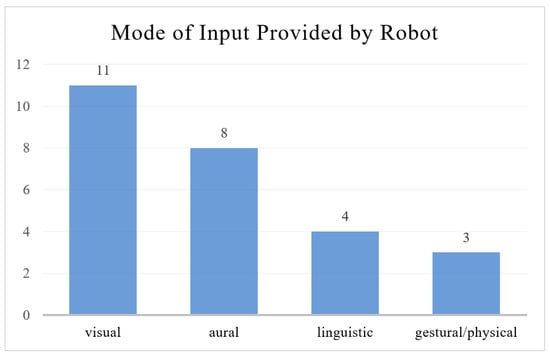

The mode of language input provided by the robot served as input from the learner’s perspective, and mainly consisted of aural input (n = 18), followed by visual (n = 11), linguistic (n = 4), and gestural/physical input (n = 3), as shown in Figure 4.

Figure 4.

The mode of input the robot provides to the learner.

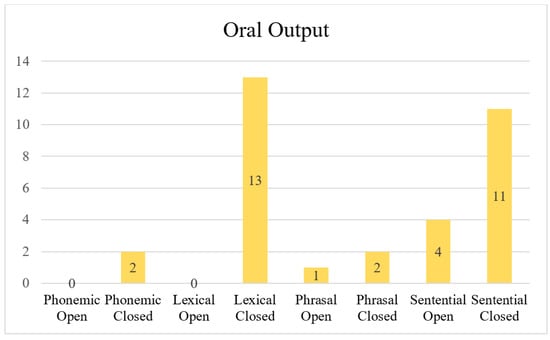

Language output produced by the learners mostly consisted of sentential, closed answers (n = 11), followed by lexical, closed answers (n = 13), and others (See Figure 5).

Figure 5.

Type of oral output produced by learners.

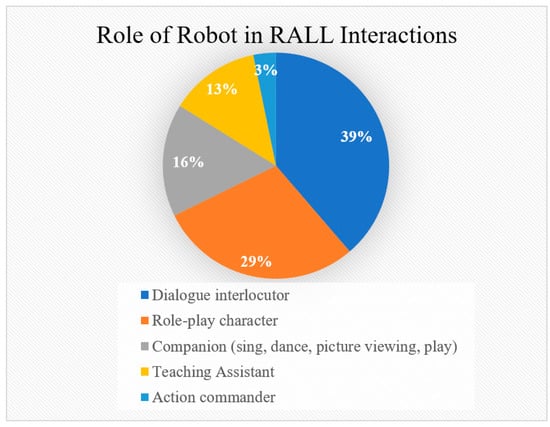

4.4. Role of Robots and Instructors (RQ3)

From a design-based perspective, there were five possible roles the robots played in RALL oral interactions (Figure 6). The most common role was a dialogue interlocutor (n = 12). This referred to pre-determined dialogues where the robot conversed with the learners using fixed phrases or sentences. The second most frequent role fulfilled by the robot was a role-play character, where the robot acted out a story as one of the characters in the story (n = 9), followed by a companion that sang, danced, played with the learner, or showed pictures on its screen (n = 5), a teaching assistant that helped the teacher with any part of the instructional procedure (n = 4), and an action commander that acted out certain movements commanded by the learner during an activity (n = 1).

Figure 6.

Roles played by robots in oral interactions.

In addition, the robot served a major function of providing teacher talk. Five kinds of teacher talk were provided, including skill training (n = 12), affective feedback (n = 11), knowledge teaching (n = 7), motivational elements (n = 3), and procedural prompts (n = 2). Finally, the instructor or facilitator would, in some studies, serve to provide additional support in RALL. The types of support included procedural support (n = 9), learning support (n = 7), and technical support (n = 1) for those studies that mentioned them.

The interactive oral task design allowed the robot, human facilitators, and learners to engage in well-orchestrated speaking practice in a contextualized and meaningful way. Some example actions performed by the interacting agents are summarized in Table 2. It is evident that RALL oral interactive mechanisms can be multifarious, each specific to the oral communicative goal and context. In most cases, the interactions were based on robotic functions such as (a) speaking [32], (b) making gestures and movements [39], (c) singing [34], (d) object detection [40,41], (e) voice recognition [42], and (f) display of digital content on the accompanying tablets [43]. While robots were used to facilitate bi-directional communication by initiating or engaging in verbal, gestural, and physical interactive processes to allow learners to practice receptive (e.g., listening and reading) and productive (e.g., speaking and writing) language use, human facilitators constantly provided procedural, learning, and technical support [34,38] to learners during the interactive tasks.

Table 2.

Synthesis of Actions Performed by Interacting Agents in RALL.

Learners engaged mostly in productive language practice such as asking questions [33], repeating or creating words or sentences orally [34,39], creating stories orally [44] or in writing [33], performing movements [39], and acting in role plays [45]. They also relied on the guidance of human facilitators for various task needs such as game introduction [46] and provision of feedback [39].

4.5. Learning Outcomes of RALL Oral Interactions (RQ4)

The cognitive learning outcomes of engaging learners in RALL oral interactions were reflected in effective academic achievement [35], increased concentration [35], understanding of new words through pictures, animation, and visual aids [44], and significant improvement in word–picture association abilities [46]. Children also gained picture-naming ability [41]. In terms of the acquisition of language skills, there was significant improvement in learners’ speaking skills [45]. Specifically, student-talk rate and response ratio increased [39], and the RALL system helped to significantly improve speech complexity, grammatical and lexical accuracy, number of words spoken per minute, and response time [43]. Pronunciation also became more native-like [43]. Efficient vocabulary gains [37,40,42] and retention [42] also occurred.

Beyond speaking, there was significant improvement in listening and reading skills [39]. The slightly structured, repetitive interaction pattern was perceived as beneficial for adult learners of Swedish with low proficiency levels [47]. Evidence of the development of other skills, such as physical motor skills due to the use of the robot [33] and children’s ability in teaching [40], was also reported. As for affective learning outcomes, increased satisfaction, interest, confidence, and motivation, as well as more positive attitudes [34,45,47,48,49], were found toward the use of RALL and toward learning English [48,50]. In RALL, students became more active in a native-like setting [49]. The robots also reduced learner anxiety about making mistakes in front of native speakers [51]. The class atmosphere also improved due to RALL.

Moreover, positive emotional responses were identified in various studies. Of the coded emotional responses, over 91% were positive. Only a few negative responses were identified, which showed learners’ dissatisfaction with the robot’s synthesized voice and facial expressions, as well as feelings of anxiety and fear of making mistakes in RALL. The positive responses are summarized as bolded keywords, which reflect the affective states of learners during RALL (see Table 3). The positive affect included emotional states such as eagerness, enthusiasm, satisfaction, appreciation, motivation, and enjoyment.

Table 3.

Positive Cognitive, Skill, and Affective Learning Outcome.

5. Discussion

The review identified recent efforts in the field of RALL that applied various types of robotic sensing technologies (e.g., personal identification mechanisms with RFID tags) to enrich robot–human interactive design. The integration of other tools such as e-books into robots has also advanced the field of RALL with more diverse instructional design. Detailed findings concerning each research question are described below and summarized in Table 4.

Table 4.

Alignment of research questions to review findings on RALL.

With regard to the first research question, findings about the language teaching methods incorporated in RALL oral interactions revealed a heavy emphasis on communicative skill training with the use of Communicative Language Teaching and Teaching Proficiency through Reading and Storytelling. On the other hand, many studies also applied Total Physical Response and the Audiolingual Method to train bottom-up language skills such as word recognition. Through RALL interactions, learners were able to experience receptive language learning [52] of vocabulary and sentences by mimicking authentic scenarios, reading the storylines, or seeing pictures in word-association tasks. Moreover, they engaged in productive language use by giving robot commands or creating stories. Such interaction opportunities in RALL can effectively enhance both productive communication (e.g., oral skills) and creative skills, which are important for 21st century learners [53].

Although the dominant language teaching methods were communicative and storytelling approaches, existing affordances of educational robots such as giving commands and voice recognition have allowed traditional methods such as audiolingual and total physical response methods to complement the top-down, communicative approach in many of the studies reviewed. To a certain extent, the audiolingual and total physical response methods reflect a bottom-up approach that drills learners with simple instructional design (e.g., dialogues or question-and-answer). This implies that activity design using CLT, TPRS, ALM, and TPR may be easy for RALL practitioners to implement and is especially applicable to the majority of RALL research settings in East Asian contexts. Because many traditional English classrooms rely on grammar translation and audiolingual methods for English learning, drill-based practices that combine ALM or TPR with communicative approaches appear to be a feasible design combination.

To address the second research question on the types of oral interaction task design in RALL, the designed tasks were aligned to language teaching methods such as teaching proficiency through reading and storytelling to fulfill such goals as (a) learning the meaning of a set of vocabulary confined to the content of a story, (b) forming personalized questions through a spoken class story, (c) reading specific language structures in a story, and (d) acting out parts of a story by repeating certain language structures in the actors’ lines [54]. The results showed that through communicative, meaning-based language teaching methods, RALL practitioners could create interactive language learning tasks such as storytelling and role play with robots acting as human- or animal-like characters. However, it is worth noting that the oral output produced by learners tended to be closed answers at lexical and sentential levels, which points to future efforts to develop tasks that highlight intelligibility to fulfill meaning-focused instruction.

The pedagogical implication for RALL instructional design therefore highlights oral and reading fluency as well as communicative competence instead of grammatical accuracy. Language teachers who integrate RALL can adopt a wide array of methods along the skill-training spectrum. On one end, the tasks can focus on communicating in situated dialogues, and on the other end, the tasks can aim to improve accuracy in pronunciation or word–picture association. The instructional design consisting of these methods allows educational robots to engage learners in a context-specific manner and to appeal to learners at various educational levels. This further confirms previous researchers’ arguments that RALL is a feasible and valuable language learning mode for oral language development [55]. Furthermore, robots are no longer perceived as merely machines that automatically carry out a sequence of programmed actions, but as interactive pedagogical agents with multi-sensory affordances conducive to language learners’ oral communication development [56].

In response to the third research question concerning the roles played by the robots and instructors, the findings showed that the robot usually played the most essential role during oral interactions in RALL, with timely support from a human instructor or facilitator. The findings are in line with previous claims that, compared to books, audio, and web-based instruction, humanoid robots can best engage learners in language learning through human-like interactions [21]. The input–output process of comprehensible linguistic content that is vital in language learning [15] can be effectively fulfilled by oral interactions provided by robots.

As for the fourth research question, various learning outcomes in terms of cognition, language skills, and affect were identified. For cognitive learning outcomes, RALL effectively facilitated learners’ understanding of vocabulary across all age levels. This echoes the findings of [57] that robot-assisted learning can effectively lead to cognitive gains in target subject domains (e.g., mathematics and science) through robots’ complex, multi-sensorial content and interactions. In this study, the subject domain is language; therefore, the cognitive learning gain is mostly focused on vocabulary comprehension (e.g., closed answers at the lexical level), which was reported as a major focus in the RALL oral instructional design. For the skill-based learning outcomes, significant improvement in speaking abilities, including complexity, accuracy, and pronunciation, was evident in numerous studies. This suggests that oral interactions facilitated by robots are promising for improving oral proficiency among language learners. As put forth by Mubin et al. [58], robots have efficient information and processing affordances, which can reduce learners’ cognitive workload and anxiety compared to traditional instructional modes. The review findings support the view that robots can foster speaking abilities without incurring anxiety or extra cognitive demands on the learners.

In terms of the affective learning outcome, which is an important aspect of language acquisition, the presence and affordances of educational robots made the learning experience more exciting, enjoyable, fun, and encouraging. The learners became more eager, enthusiastic, and confident in class under RALL conditions. These positive emotional states serve as advantages of incorporating educational robots in language education. In this respect, previous research has included emotional design as one of the instructional conditions in multimedia learning that enhanced learning [59] with increased motivation and better performance. According to [59], positive emotional states during learning can promote retention and comprehension. The review thus confirms the positive impact of robot-assisted interactions in language learning scenarios.

This review study had three limitations. The first limitation concerns the small sample size of the articles reviewed (n = 22). This limitation is mainly due to the currently limited number of studies on RALL oral interactions in existing databases, as RALL is a new research niche with gradually growing efforts focusing on the analysis of instructional design involving various interacting agents. However, with a narrow research focus and strict inclusion/exclusion procedures, the review did reach data saturation since the studies provided rather rich data for answering the research questions. Other systematic reviews with relatively small sample sizes have also proven to be valuable with rigorous systematic review procedures [60]. Secondly, the studies varied in terms of educational levels, which in part was also due to the constraint of a small sample size. Despite this limitation, the authors were able to obtain the expected patterns, as the focus was on analyzing instructional design for interactions in language learning with the use of educational robots. The third limitation was the duration of the 22 studies: most were not longitudinal, so the researchers cannot make claims about the validity of learning outcomes in the long run.

6. Conclusions

This systematic review reported on general research trends for RALL and analyzed interactions among various agents (the robot, the learners, and the human facilitator) across educational levels. Specifically, the research questions focused on (a) the language teaching methods, (b) instructional design, (c) roles of robot and instructors/facilitators, and (d) cognitive, skill-based, and affective learning outcomes. The review findings suggested that RALL instructional design employs communicative language teaching and storytelling as the dominant language teaching methods, and these two methods are often complemented by audiolingual and total physical response methods. The learning tasks are based on the principles of the identified language teaching methods, and the resulting interaction processes and effects proved to be conducive to language acquisition. Interaction effects from the learning tasks led to positive cognitive, skill-based, and affective outcomes in language learning.

By examining the benefits and drawbacks of RALL theoretical perspectives and design practices, the review contributes to the research field of robot-assisted language teaching and learning with in-depth exploration and discovery about effective instructional design elements and their effects on interaction processes and language learning. The detailed analysis helps to add new insights and provide specific design elements to guide RALL practitioners including teachers, instructional designers, and researchers.

Future research should aim to develop more sophisticated functions to improve the accuracy and adaptivity of mechanisms such as speech recognition, feedback giving, and personal identification, and to engage multiple learners in RALL interactions via collaborative oral tasks. In addition, as storytelling appears as a recent trend of activity design in RALL, forming detailed and applicable storytelling rubrics that emphasize intelligibility in oral production via functions such as automatic speech recognition will help ensure the meaning-focused nature of interactive RALL. It will also be worthwhile to investigate innovative ways to design and assess interactions for learners at different educational levels using innovative teaching methods. Efforts should also aim to combine RALL with other emerging technologies such as the use of tangible objects and internet-of-things technology [61] to better facilitate authentic and embodied language learning for young learners. Finally, specific emotional design in RALL leading to socio-emotional development among young learners holds promise in the RALL research area.

Author Contributions

Conceptualization, V.L. and N.-S.C.; methodology, V.L. and N.-S.C.; validation, V.L. and H.-C.Y.; investigation, V.L.; writing—original draft preparation, V.L.; writing—review and editing, H.-C.Y.; supervision, N.-S.C.; funding acquisition, N.-S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by the Ministry of Science and Technology, Taiwan under grant numbers MOST-109-2511-H-003-053-MY3, MOST-108-2511-H-003-061-MY3, MOST-107-2511-H-003-054-MY3, and MOST 108-2511-H-224-009. This work was also financially supported by the “Institute for Research Excellence in Learning Sciences” of National Taiwan Normal University (NTNU) from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE) in Taiwan.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

General Profile of the Reviewed Studies on RALL.

| No. | Authors and Year | Country/Language (TL = Target Language) | Participant Profile | Implementation Duration | Research Purpose | Robot Type and Affordances | Sensors and Accompanying Tools | Research Design | Instruments |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Kanda, Hirano, Eaton, and Ishiguro, 2004 [36] | Japan; L1: Japanese; TL: English | 119 first-grade students and 109 sixth-grade students | 2 weeks | Analyze the effect of the robots on social interaction over time and learning | Humanoid robot/Robovie | | Single-group experiment with pretest–posttest design | Quantitative |
| 2 | Han, Jo, Jones, and Jo, 2008 [35] | Korea; L1: Korean; TL: English | 90 fifth to sixth graders | Forty minutes | Investigate whether the use of home robots in children’s learning is more effective for their concentration, learning interest, and academic achievement than the other two types of instructional media | Humanoid robot/IROBI | | Between-group experiment | Quantitative |
| 3 | Chang, Lee, Chao, Wang, and Chen, 2010 [32] | Taiwan; L1: Mandarin; TL: An unspecified second language | 100 fifth graders | 5 weeks | Explore the possibility of using robots to teach a second language | Humanoid robot | Unknown | Quasi-experimental intervention | Qualitative |
| 4 | Chen, Quadir, and Teng, 2011 [44] | Taiwan; L1: Mandarin; TL: English | 5 EFL fifth graders | 80 min | Investigate the effect of the integration of book, digital content, and robots on elementary school students’ English learning | Humanoid robot (pedagogical social agent) | | Test-driven experiment: system design and implementation | Qualitative |
| 5 | Lee, Noh, Lee, Lee, Lee, Sagong, and Kim, 2011 [45] | South Korea; L1: Korean; TL: English | 21 EFL third to fifth graders | Eight weeks | Investigate the effect of RALL on elementary school students | Animal-like robots (Mero and Engkey) | RFID sensors | Single-group experiment with pretest–posttest design | Quantitative |
| 6 | Hsiao, Chang, Lin, and Hsu, 2015 [38] | Taiwan; L1: Mandarin; TL: Mandarin | 57 pre-kindergarteners | 11 months | Explore the influence of educational robots on fostering kindergarteners’ reading motivation, literacy, and behavior | Humanoid robot/iRobiQ | Infrared sensors | Between-group experiment | Quantitative |
| 7 | Tanaka and Matsuzoe, 2012 [40] | Japan; L1: Japanese; TL: English | 18 preschool students | Phase 1: six days; Phase 2: one month | Investigate the effect of care-receiving robots on preschool students’ vocabulary learning | Humanoid robot/NAO (care-receiving robot) | | Single-group experiment with pretest–posttest design | Quantitative |
| 8 | Wang, Young, and Jang, 2013 [50] | Taiwan; L1: Mandarin; TL: English | 63 fifth graders | Not available | Investigate the effectiveness of tangible learning companions on students’ English conversation | Animal-like robot | Unknown | Quasi-experiment | Quantitative |
| 9 | Alemi, Meghdari, and Ghazisaedy, 2014 [42] | Iran; L1: Iranian; TL: English | 46 seventh graders | Five weeks | Investigate the effect of RALL on students’ vocabulary learning and retention | Humanoid robot/NAO | | Quasi-experiment | Quantitative |
| 10 | Alemi, Meghdari, and Ghazisaedy, 2015 [48] | Iran; L1: Iranian; TL: English | Seventy female students between 12 and 13 years of age in junior high | 5 weeks | Examine the effect of robot-assisted language learning (RALL) on anxiety level and attitude in English vocabulary acquisition | Humanoid robot/NAO | Tablet for display | Between-group experiment | Quantitative |
| 11 | Mazzoni and Benvenuti, 2015 [46] | Italy; L1: Italian; TL: English | 10 preschool students | Three days | Investigate whether humanoid robots can assist students in learning English as effectively as a human counterpart in terms of social-cognitive conflict paradigm | Humanoid robot/MecWilly | Sensors for recognizing human language | Between-group experiment | Quantitative |
| 12 | Wu, Wang, and Chen, 2015 [34] | Taiwan; L1: Mandarin; TL: English | 64 EFL third graders | 200 min | Investigate the effect of in-house built teaching assistant robots on EFL elementary school students’ English learning | Humanoid robot/PET | LEDs (head, face, ears, arms) | Between-group experiment | Quantitative |
| 13 | Hong, Huang, Hsu, and Shen, 2016 [39] | Taiwan; L1: Mandarin; TL: English | 52 fifth graders | Not available | Investigate the effects of design robot-assisted instructional materials on elementary school students’ learning performance | Humanoid robot/Bioloid | | Between-group experiment | Quantitative |
| 14 | Lopes, Engwall, and Skantze, 2017 [47] | Sweden; L1: 14 different mother tongues; TL: Swedish | 22 L2 Swedish learners (average age 29.1) | Two 15 min interactions | Explore using a social robot in a conversational setting to practice a second language | Humanoid robot/Furhat | | Quantitative | |
| 15 | Westlund, Dickens, Jeong, Harris, DeSteno, and Breazeal, 2017 [41] | USA; L1: English; TL: English | 36 preschool students | Not available | Investigate the effects of non-verbal cues on children’s vocabulary learning | Animal-like robot/DragonBot | | Single-subject experiment | Quantitative |
| 16 | Crompton, Gregory, and Burke, 2018 [33] | USA; L1: English; TL: English | Three teaching assistants and 50 preschool students | Not available | Investigate how the use of humanoid robots can support preschool students’ learning | Humanoid robot/NAO | Unknown | Ethnographic study design | Qualitative |
| 17 | Sisman, Gunay, and Kucuk, 2018 [62] | Turkey; L1: Turkish; TL: English | 232 secondary school students broken into small sessions of 20 students each | Four months | Investigate an educational robot attitude scale (ERAS) for secondary school students | Humanoid robot/NAO | Unknown; Mobile phone | Experiment with evaluation survey design | Quantitative |
| 18 | Lio, Maede, Ogawa, Yoshikawa, Ishiguro, Suzuki, Aoki, Maesaki, and Hama, 2019 [43] | Japan; L1: Japanese; TL: English | Nine university students | Seven days | Investigate the effect of RALL system on college students’ English-speaking development | Humanoid robot/CommU | Unknown; Tablet for display | Single-group experiment with pretest–posttest design | Quantitative |
| 19 | Wedenborn, Wik, Engwall, and Beskow, 2019 [63] | Sweden; L1: Unknown; TL: Russian | Fifteen university students | 15 min per participant | Investigate the effect of a physical robot on vocabulary learning | Humanoid robot/Furhat | Java-based framework for constructing multi-modal dialogue systems | Quasi-experiment | Quantitative |
| 20 | Alemi and Haeri, 2020 [49] | Iran; L1: Iranian; TL: English | 38 kindergarteners | Two months | Investigate the impact of applying the robot-assisted language learning (RALL) method to teach request and thanking speech acts to young children | Humanoid robot/NAO | Unknown; Robot control software: Choregraphe program | Single-group experiment with pretest–posttest design | Quantitative |
| 21 | Engwall, Lopes, and Ålund, 2020 [51] | Sweden; L1: Varied; TL: Swedish | Robot-led conversations: 6 adults beyond tertiary education level; Survey: 32 participants | Three days | Investigate how the post-session ratings of the robot’s behavior along different dimensions are influenced by the robot’s interaction style and participant variables | Humanoid robot/Furhat | | Experiment with evaluation survey design | Quantitative |
| 22 | Leeuwestein, Barking, Sodacı, Oudgenoeg, Verhagen, Vogt, Aarts, Spit, Haas, Wit, and Leseman, 2020 [37] | Turkey; L1: Turkish; TL: Dutch | 67 kindergarteners | 2.4 days with 40 min sessions | Investigate the effects of providing translations in L1 on the learning of L2 in a vocabulary learning experiment using social robots | Humanoid robot/NAO | Unknown | Single-group experiment with pretest–posttest design | Quantitative |

Appendix B

Table A2.

Instructional Design and Learning Outcome of RALL.

| No. | Communicative Skill | Learning Activity | Language Teaching Method | Role of Robot | Role of Instructor or Facilitator | Learning Outcomes |
|---|---|---|---|---|---|---|
| 1 | Vocabulary | Engaging students in learning a vocabulary of about 300 sentences for speaking and 50 words for recognition in an 18-day trial | | Dialogue interlocutor; Play mate | Teacher/facilitator is absent/not mentioned | Skill |
| 2 | Speaking | Engaging the students in speaking and dialogue with NCB, WBI, or HRL for about 40 min | | Role-play character | Teacher/facilitator is absent/not mentioned | Cognition |
| 3 | Listening; Speaking | Five weekly practice scenarios, each with a different interaction mode | | Role-play character; Action commander | Learning support | Affect |
| 4 | Vocabulary | A system containing five RALL activities: students took turns test-driving the system for a total of 40 min | Multimedia-enhanced instruction | Role-play character; Dance- and sing-along partner | Teacher/facilitator is absent/not mentioned | Cognition |
| 5 | Listening; Speaking | Engaging students in learning 68 English lessons in four different RALL classrooms | | Role-play character (sales clerk) | Technical support | Skills |
| 6 | Reading; Vocabulary; Grammar | Experimental group: read e-book with the aid of iRobiQ; Control group: read e-book with the aid of tablet-PC | | Content display on robot partner screen | Procedural support | Affect |
| 7 | Vocabulary | Engaging students in four verb-learning games with the aid of care-receiving robot for 30 min per section | | Respondent to learners’ action commands | Procedural support | Skill |
| 8 | Speaking | Experimental group: engaging 32 students in practicing English conversation with tangible learning robot; Control group: engaging 31 students in practicing English conversation with classmates | | Dialogue interlocutor; Dance- and sing-along partner | Procedural support | Skill |
| 9 | Vocabulary | Experimental group: learn English vocabulary from humanoid robot; Control group: learn English vocabulary from human teachers | | Dialogue interlocutor; Teacher assistant | Procedural support | Skill |
| 10 | Vocabulary | Experimental group: learn English vocabulary through the RALL system; Control group: learn English vocabulary based on the Communicative Approach | | Dialogue interlocutor; Teacher assistant (show vocabulary-related motions) | Procedural support | Affect |
| 11 | Vocabulary | Experimental group: learn English vocabulary in children-SCC condition; Control group: learn English vocabulary in robot-SCC condition | | Dialogue interlocutor (remotely controlled) | Procedural support | Cognition |
| 12 | English Alphabets; Listening; Speaking | Experimental group: learn English with PET; Control group: learn English with human teacher | | Teacher assistant; Dialogue interlocutor; Role-play character | Learning support | Skill |
| 13 | Listening; Speaking; Reading; Writing | Experimental group: have English class by humanoid robot; Control group: have English class by human teacher | | Role-play character; Dialogue interlocutor | Learning support | Skill |
| 14 | Speaking | Experimental group: have conversational setting to practice with two second language learners, one native moderator and a human; Control group: have conversational setting to practice with two second language learners, one native moderator and a robot | | Dialogue interlocutor | Procedural support | Skill |
| 15 | Vocabulary | Engaging students in vocabulary learning with the aid of robot and human teacher | | Picture viewing partner | Teacher/facilitator is absent/not mentioned | Cognition |
| 16 | Listening; Speaking | Phase 1: planning RALL lessons; Phase 2: RALL lessons implementation; Phase 3: reflect on the process of designing and implementing RALL lessons | | Dialogue interlocutor | Procedural support | Cognition |
| 17 | Listening; Speaking | Engaging students in four robot-assisted English tasks for 40 min per class | | Role-play character (remotely controlled) | Procedural support | Affect |
| 18 | Speaking | Engaging the students in speaking practices with the aid of RALL system for a total of 30 min per day for seven days | | Dialogue interlocutor | Teacher/facilitator is absent/not mentioned | Skill |
| 19 | Vocabulary | Learn vocabulary exercises in three different conditions: first condition: disembodied voice; second condition: screen; third condition: robot | | Teacher assistant | Learning support | Cognition |
| 20 | Speaking; Vocabulary | Experimental group: learn English with a humanoid robot and the teacher; Control group: learn English with the teacher | | Dialogue interlocutor; Teacher assistant | Learning support | Affect |
| 21 | Speaking | Engaging the students in four stereotypic interaction styles with social robot Furhat for three days | | Role-play character; Dialogue interlocutor | Teacher/facilitator is absent/not mentioned | Affect |
| 22 | Vocabulary | Engaging students in vocabulary learning with the monolingual or the bilingual robot for 40 min | | Role-play character | Teacher/facilitator is absent/not mentioned | Skill |

Appendix C

Table A3.

Interactive Oral Task Design in RALL.

| No. | Interactive Task Design | Interaction Mode | Instructional Focus | Teacher Talk by Robot | Input Mode | Oral Output |
|---|---|---|---|---|---|---|
| 1 | | Robot–learner | Form-focused | Skill training | Aural | |
| 2 | | Robot–learner | Form-focused | Skill training | Visual | |
| 3 | | Robot–learner | Form-focused; Meaning-focused | Knowledge teaching | Aural | |
| 4 | | Robot–learner | Form-focused | Knowledge teaching | Linguistic | |
| 5 | | Robot–learner | Meaning-focused | Motivational elements | Aural | |
| 6 | | Robot–learner | Form-focused | Skill training | Visual | |
| 7 | | Robot–learner | Meaning-focused | Procedural prompts | Linguistic | |
| 8 | | Robot–learner | Meaning-focused | Skill training | Aural | |
| 9 | | Robot–learner | Meaning-focused | Knowledge teaching | Visual | |
| 10 | | Robot–learner | Form-focused | Knowledge teaching | Aural | |
| 11 | | Robot–learner | Form-focused | Motivational elements | Visual | |
| 12 | | Robot–learner | Form-focused and meaning-focused | Knowledge teaching | Visual | |
| 13 | | Robot–learner | Form-focused | Skill training | Aural | |
| 14 | | Robot–learner | Meaning-focused | Skill training | Aural | |
| 15 | | Robot–learner (remote human control of robot) | Form-focused | Knowledge teaching | Visual | |
| 16 | | Robot–learner | Form-focused | Skill training | Aural | |
| 17 | | Robot–learner (remote human control of robot) | Meaning-focused | Skill training | Aural | |
| 18 | | Robot–learner | Form-focused | Skill training | Linguistic | |
| 19 | | Robot–learner | Form-focused | Skill training | Linguistic | |
| 20 | | Robot–learner | Meaning-focused | Skill training | Visual | |
| 21 | | Robot–learner | Meaning-focused | Skill training | Visual | |
| 22 | | Robot–learner | Form-focused | Knowledge teaching | Visual | |

References

- Kory-Westlund, J.M.; Breazeal, C. A long-term study of young children’s rapport, social emulation, and language learning with a peer-like robot playmate in preschool. Front. Robot. AI 2019, 6, 1–17.

- Liao, J.; Lu, X.; Masters, K.A.; Dudek, J.; Zhou, Z. Telepresence-place-based foreign language learning and its design principles. Comput. Assist. Lang. Learn. 2019.

- So, W.C.; Cheng, C.H.; Lam, W.Y.; Wong, T.; Law, W.W.; Huang, Y.; Ng, K.C.; Tung, H.C.; Wong, W. Robot-based play-drama intervention may improve the narrative abilities of Chinese-speaking preschoolers with autism spectrum disorder. Res. Dev. Disabil. 2019, 95, 103515.

- Alemi, M.; Bahramipour, S. An innovative approach of incorporating a humanoid robot into teaching EFL learners with intellectual disabilities. Asian-Pac. J. Second Foreign Lang. Educ. 2019, 4, 10.

- Han, J. Robot-Aided Learning and r-Learning Services. In Human-Robot Interaction; Chugo, D., Ed.; IntechOpen: London, UK, 2010; Available online: https://www.intechopen.com/chapters/8632 (accessed on 5 July 2021).

- Spolaor, N.; Benitti, F.B.V. Robotics applications grounded in learning theories on tertiary education: A systematic review. Comput. Educ. 2017, 112, 97–107.

- Cheng, Y.W.; Sun, P.C.; Chen, N.S. The essential applications of educational robot: Requirement analysis from the perspectives of experts, researchers and instructors. Comput. Educ. 2018, 126, 399–416.

- Merkouris, A.; Chorianopoulos, K. Programming embodied interactions with a remotely controlled educational robot. ACM Trans. Comput. Educ. 2019, 19, 1–19.

- Khalifa, A.; Kato, T.; Yamamoto, S. Learning effect of implicit learning in joining-in-type robot-assisted language learning system. Int. J. Emerg. Technol. 2019, 14, 105–123.

- Warschauer, M.; Meskill, C. Technology and second language learning. In Handbook of Undergraduate Second Language Education; Rosenthal, J., Ed.; Lawrence Erlbaum: Mahwah, NJ, USA, 2000; pp. 303–318.

- Woo, D.J.; Law, N. Information and communication technology coordinators: Their intended roles and architectures for learning. J. Comput. Assist. Learn. 2020, 36, 423–438.

- Grant, M.J.; Booth, A. A typology of reviews: An analysis of 14 review types and associated methodologies. Health Inf. Libr. J. 2009, 26, 91–108.

- Samnani, S.S.S.; Vaska, M.; Ahmed, S.; Turin, T.C. Review Typology: The Basic Types of Reviews for Synthesizing Evidence for the Purpose of Knowledge Translation. J. Coll. Physicians Surg. Pak. 2017, 27, 635–641.

- Robinson, H.A. The Ethnography of Empowerment: The Transformative Power of Classroom Interaction, 2nd ed.; The Falmer Press; Taylor & Francis Inc.: Bristol, PA, USA, 1994.

- Pica, T. From input, output and comprehension to negotiation, evidence, and attention: An overview of theory and research on learner interaction and SLA. In Contemporary Approaches to Second Language Acquisition; Mayo, M.D.P.G., Mangado, M.J.G., Martínez-Adrián, M., Eds.; John Benjamins Publishing Company: Philadelphia, PA, USA, 2013; pp. 49–70.

- Rivers, W.M. Interactive Language Teaching; Cambridge University Press: New York, NY, USA, 1987.

- Tuan, L.T.; Nhu, N.T.K. Theoretical review on oral interaction in EFL classrooms. Stud. Lit. Lang. 2010, 1, 29–48.

- Council of Europe. The Common European Framework of Reference for Languages: Learning, Teaching, Assessment; Council of Europe: Strasbourg Cedex, France, 2004; Available online: http://www.coe.int/T/DG4/Linguistic/Source/Framework_EN.pdf (accessed on 6 July 2021).

- Brown, H.D. Teaching by Principles: Interactive Language Teaching Methodology; Prentice Hall Regents: New York, NY, USA, 1994.

- Ellis, R. Instructed Second Language Acquisition: Learning in the Classroom; Basil Blackwell Ltd.: Oxford, UK, 1990.

- Han, J. Emerging technologies: Robot assisted language learning. Lang. Learn. Technol. 2012, 16, 1–9.

- Van den Berghe, R.; Verhagen, J.; Oudgenoeg-Paz, O.; van der Ven, S.; Leseman, P. Social Robots for Language Learning: A Review. Rev. Educ. Res. 2019, 89, 259–295.

- Jahnke, I.; Liebscher, J. Three types of integrated course designs for using mobile technologies to support creativity in higher education. Comput. Educ. 2020, 146, 103782.

- Mitchell, C.B.; Vidal, K.E. Weighing the ways of the flow: Twentieth century language instruction. Mod. Lang. J. 2001, 85, 26–38.

- Asher, J. The Total Physical Response Approach to Second Language Learning. Mod. Lang. J. 1969, 53, 3–17.

- Muzammil, L.; Andy, A. Teaching proficiency through reading and storytelling (TPRS) as a technique to foster students’ speaking skill. J. Engl. Educ. Linguist. Stud. 2017, 4, 19–36.

- Chen, Y.M. How a teacher education program through action research can support English as a foreign language teachers in implementing communicative approaches: A case from Taiwan. Sage Open 2020, 10, 2158244019900167.

- Savignon, S.J. Communicative competence. TESOL Encycl. Engl. Lang. Teach. 2018, 1, 1–7.

- Bagheri, M.; Hadian, B.; Vaez-Dalili, M. Effects of the Vaughan Method in Comparison with the Audiolingual Method and the Communicative Language Teaching on Iranian Advanced EFL Learners’ Speaking Skill. Int. J. Instr. 2019, 12, 81–98.

- Lin, V.; Liu, G.Z.; Hwang, G.J.; Chen, N.S.; Yin, C. Outcomes-based appropriation of context-aware ubiquitous technology across educational levels. Interact. Learn. Environ. 2019.

- Petticrew, M.; Roberts, H. Systematic Reviews in the Social Sciences: A Practical Guide; Blackwell: Oxford, UK, 2006.

- Chang, C.W.; Lee, J.H.; Chao, P.Y.; Wang, C.Y.; Chen, G.D. Exploring the Possibility of Using Humanoid Robots as Instructional Tools for Teaching a Second Language in Primary School. Educ. Technol. Soc. 2010, 13, 13–24.

- Crompton, H.; Gregory, K.; Burke, D. Humanoid robots supporting children’s learning in early childhood setting. Br. J. Educ. Technol. 2018, 49, 911–927.

- Wu, W.C.V.; Wang, R.J.; Chen, N.S. Instructional design using an in-house built teaching assistant robot to enhance elementary school English-as-a-foreign-language learning. Interact. Learn. Environ. 2015, 23, 696–714.

- Han, J.; Jo, M.; Jones, V.; Jo, J.H. Comparative Study on the Educational Use of Home Robots for Children. J. Inf. Process. Syst. 2008, 4, 159–168.

- Kanda, T.; Hirano, T.; Eaton, D.; Ishiguro, H. Interactive robots as social partners and peer tutors for children: A field trial. Hum.-Comput. Interact. 2004, 19, 61–84.

- Leeuwestein, H.; Barking, M.; Sodacı, H.; Oudgenoeg-Paz, O.; Verhagen, J.; Vogt, P.; Aarts, R.; Spit, S.; Haas, M.D.; Wit, J.D.; et al. Teaching Turkish-Dutch kindergartners Dutch vocabulary with a social robot: Does the robot’s use of Turkish translations benefit children’s Dutch vocabulary learning? J. Comput. Assist. Learn. 2020, 37, 603–620.

- Hsiao, H.S.; Chang, C.S.; Lin, C.Y.; Hsu, H.L. “iRobiQ”: The influence of bidirectional interaction on kindergarteners’ reading motivation, literacy, and behavior. Interact. Learn. Environ. 2015, 23, 269–292.

- Hong, Z.W.; Huang, Y.M.; Hsu, M.; Shen, W.W. Authoring robot-assisted instructional materials for improving learning performance and motivation in EFL classrooms. Educ. Technol. Soc. 2016, 19, 337–349.

- Tanaka, F.; Matsuzoe, S. Children teach a care-receiving robot to promote their learning: Field experiments in a classroom for vocabulary learning. J. Hum.-Robot. Interact. 2012, 1, 78–95.

- Westlund, J.M.K.; Dickens, L.; Jeong, S.; Harris, P.L.; DeSteno, D.; Breazeal, C.L. Children use non-verbal cues to learn new words from robots as well as people. Int. J. Child-Comput. Interact. 2017, 13, 1–9.

- Alemi, M.; Meghdari, A.; Ghazisaedy, M. Employing humanoid robots for teaching English language in Iranian junior high-schools. Int. J. Hum. Robot. 2014, 11, 1450022.

- Iio, T.; Maeda, R.; Ogawa, K.; Yoshikawa, Y.; Ishiguro, H.; Suzuki, K.; Aoki, T.; Maesaki, M.; Hama, M. Improvement of Japanese adults’ English speaking skills via experiences speaking to a robot. J. Comput. Assist. Learn. 2019, 35, 228–245.

- Chen, N.S.; Quadir, B.; Teng, D.C. Integrating book, digital content and robot for enhancing elementary school students’ learning of English. Aust. J. Educ. Technol. 2011, 27, 546–561.

- Lee, S.; Noh, H.; Lee, J.; Lee, K.; Lee, G.G.; Sagong, S.; Kim, M. On the effectiveness of robot-assisted language learning. ReCALL 2011, 23, 25–58.

- Mazzoni, E.; Benvenuti, M. A robot-partner for preschool children learning English using socio-cognitive conflict. Educ. Technol. Soc. 2015, 18, 474–485.

- Lopes, J.; Engwall, O.; Skantze, G. A first visit to the robot language café. In Proceedings of the 7th ISCA Workshop on Speech and Language Technology in Education, Stockholm, Sweden, 25–26 August 2017; pp. 25–26.

- Alemi, M.; Meghdari, A.; Ghazisaedy, M. The impact of social robotics on L2 learners’ anxiety and attitude in English vocabulary acquisition. Int. J. Soc. Robot. 2015, 7, 523–535.

- Alemi, M.; Haeri, N.S. Robot-assisted instruction of L2 pragmatics: Effects on young EFL learners’ speech act performance. Lang. Learn. Technol. 2020, 24, 86–103.

- Wang, Y.H.; Young, S.S.C.; Jang, J.S.R. Using tangible companions for enhancing learning English conversation. Educ. Technol. Soc. 2013, 16, 296–309.

- Engwall, O.; Lopes, J.; Ålund, A. Robot interaction styles for conversation practice in second language learning. Int. J. Soc. Robot. 2020, 13, 251–276.

- Uriarte, A.B. Vocabulary teaching: Focused tasks for enhancing acquisition in EFL contexts. MEXTESOL J. 2013, 37, 1–12.

- Rios, J.A.; Ling, G.; Pugh, R.; Becker, D.; Bacall, A. Identifying critical 21st-century skills for workplace success: A content analysis of job advertisements. Educ. Res. 2020, 49, 80–89.

- Lichtman, K. Teaching Proficiency through Reading and Storytelling (TPRS): An Input-Based Approach to Second Language Instruction; Routledge: New York, NY, USA, 2018.

- Neumann, M.M. Social robots and young children’s early language and literacy learning. Early Child. Educ. J. 2019, 48, 157–170.

- Toh, L.P.E.; Causo, A.; Tzuo, P.W.; Chen, I.M.; Yeo, S.H. A Review on the Use of Robots in Education and Young Children. Educ. Technol. Soc. 2016, 19, 148–163.

- Papadopoulos, I.; Lazzarino, R.; Miah, S.; Weaver, T.B.; Koulouglioti, C.T. A systematic review of the literature regarding socially assistive robots in pre-tertiary education. Comput. Educ. 2020, 155, 103924.

- Mubin, O.; Stevens, C.; Shahid, S.; Mahmud, A.; Dong, J.-J. A review of the applicability of robots in education. Technol. Educ. Learn. 2013, 1, 13.

- Heidig, S.; Muller, J.; Reichelt, M. Emotional design in multimedia learning: Differentiation on relevant design features and their effects on emotions and learning. Comput. Hum. Behav. 2015, 44, 81–95.

- Barrett, N.; Liu, G.Z. Global trends and research aims for English Academic Oral Presentations: Changes, challenges, and opportunities for learning technology. Rev. Educ. Res. 2016, 86, 1227–1271.

- Lin, V.; Yeh, H.C.; Huang, H.H.; Chen, N.S. Enhancing EFL vocabulary learning with multimodal cues supported by an educational robot and an IoT-Based 3D book. System 2021, 104, 102691.

- Sisman, B.; Gunay, D.; Kucuk, S. Development and validation of an educational robot attitude scale (ERAS) for secondary school students. Interact. Learn. Environ. 2018, 27, 377–388.

- Wedenborn, A.; Wik, P.; Engwall, O.; Beskow, J. The effect of a physical robot on vocabulary learning. arXiv 2019, arXiv:1901.10461.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).