Abstract

A reconfigurable match table (RMT) is a programmable pipeline architecture for packet processing. To enable the programmable data plane to support segment routing over IPv6 (SRv6) and other network protocols, this paper extends the RMT deparser. Using the extended deparser and two RMT pipelines, we build a protocol-independent programmable data plane model for network slicing, named the programmable SRv6 processor, which is designed primarily for SRv6 segment identifier (SID) processing. We demonstrate that it supports SRv6, multiple semantics for SIDs, micro segment IDs (uSIDs), multi-protocol label switching (MPLS), and service function chaining (SFC), which gives the architecture broad applicability. Experimental results on an FPGA show that the extended deparser achieves 100 Gbps throughput for 512 B packets with low resource usage.

1. Introduction

Programmability of the data plane is an important trend for both programmable switches and SmartNICs. Current switching chips and the OpenFlow protocol [1] have two limitations: (1) match-action processing is allowed only on a fixed set of fields, and (2) the OpenFlow specification defines only a limited set of packet processing actions. McKeown's group proposed the RMT (reconfigurable match tables) model, a RISC (reduced instruction set computing)-inspired pipeline architecture for packet forwarding on a switching chip, and focused on identifying the minimal set of action primitives needed to specify how packet headers are processed in hardware [2]. The RMT model allows the forwarding plane to be changed by modifying the match fields and arithmetic logic unit (ALU) programs without modifying the hardware. As in OpenFlow, designers can specify as many match tables of arbitrary width and depth as resources allow, and each table can be configured to match on any field. Unlike OpenFlow, however, RMT allows comprehensive modification of all header fields.

However, the deparser module in the current RMT architecture performs only simple merge operations. It writes the packet header vector (PHV) back to the corresponding byte offsets in the packet header, merges the header with the corresponding payload held in the packet cache, and transmits the merged packet out of the pipeline. RMT performs one match-action per stage and only parses and rewrites header fields through very long instruction word (VLIW) actions, so it cannot support more complex network protocol processing. This paper extends the RMT architecture by improving its deparser. Instead of simply combining PHVs and payloads, the extended deparser parses the PHV containers and performs more complex packet processing, such as inserting, encapsulating, and compressing packet headers, as well as other stateful operations. As a result, it better supports the processing and forwarding of different network protocols. Like the other modules, the deparser is programmable.

To enable the forwarding plane to support different network protocols, this paper investigates the RMT pipeline architecture. The main contributions are as follows:

- CacheP4 is based on an observation about the data plane: when a forwarding device forwards packets of the same stateless flow, those packets repeatedly match the same table entries [3]. CacheP4 speeds up forwarding by avoiding this repeated matching. Inspired by CacheP4, we argue that the match-action table (MAT) cache need not sit at the start of the RMT pipeline. Instead, the fast path can be regarded as a multi-level pipeline in which each processing stage acts as one level of cache, with a slow path added at the end for complex stateful packet processing. We treat this slow path as a cache ALU for the RMT architecture and, drawing on CacheP4, extend the RMT deparser into a deparser plus. The RMT pipeline handles simple stateless processing and corresponds to the pipeline architecture of the traditional dedicated network processor (NP) forwarding model. Deparser plus handles complex, stateful operations, such as encapsulating packet headers and inserting protocol headers. It uses a complex instruction set and follows the run-to-completion (RTC) architecture of the NP forwarding model. A comparison of the RTC and pipeline architectures is shown in Table 1. Combining the RTC and pipeline architectures enables the design of a protocol-independent packet processing architecture.

Table 1. RTC vs. pipeline.

- We combine an ingress RMT, deparser plus, and an egress RMT into a pipeline architecture called the programmable SRv6 (segment routing over IPv6) processor. It is so named because it is designed primarily for SRv6 segment identifier (SID) processing. The processor can parse and process packets of different formats and lengths, including SRv6, multi-protocol label switching (MPLS), and virtual extensible local area network (VXLAN) packets. It also supports multiple semantics for SIDs as well as micro SID (uSID) compression and decompression. This paper also investigates applications of the programmable SRv6 processor, such as segment routing over UDP (SRoU), in-band network telemetry (INT), and service function chain (SFC) orchestration.

- We test the deparser plus module on the Corundum platform using SRv6 packets to verify that it applies different instructions to packets depending on the SID.

2. Related Work

Network technologies usually develop in response to practical needs. Network architecture has changed profoundly in recent years, especially under the cloud computing model. This model requires network resources in the cloud to be abstracted and flexibly scheduled in the same way as the central processing unit (CPU), memory, and disk, that is, to be virtualized. As network virtualization and network functions develop, the requirements for a programmable data plane are also increasing, and developers need to be able to reprogram the network's forwarding process to suit their needs.

2.1. Network Virtualization

In network virtualization, virtual network nodes are connected not by physical cables but by virtualized links [4]. The development of network virtualization has been driven largely by cloud computing [5]. Network virtualization focuses on abstracting network resources (ports, bandwidth, and IP addresses) and supports their dynamic allocation and management according to tenants and applications. It creates multiple virtual networks on shared physical network resources, and each virtual network can be deployed and managed independently. As a branch of virtualization technology, network virtualization is essentially a resource-sharing technology. Common network virtualization applications include virtual local area networks (VLANs) [6], VXLAN [7], virtual private networks (VPNs) [8], and virtual network devices.

Network slicing is an on-demand networking approach that allows operators to create multiple separate virtual end-to-end networks on a unified infrastructure to accommodate various types of applications [9]. The core technology of network slicing is network function virtualization (NFV) [10]. NFV decouples the software of traditional network equipment from its hardware. Unified servers provide the hardware, and different virtual network functions (VNFs) implement the software, which allows services to be assembled flexibly. These VNFs include the virtual evolved packet core (vEPC) [11], the virtual radio access network (vRAN) [12], and applications from different parties.

Segment routing (SR) is a protocol designed to forward data packets over a network based on the concept of source routing, which divides the network path into segments and assigns SIDs to these segments and network nodes. A forwarding path can be obtained by ordering the SIDs [13].

2.2. Programmable Data Plane

Network programmability is the ability to define the processing algorithms executed in the network, particularly in individual processing nodes such as switches, routers, and load balancers. In the past, the functions of network devices were bound to the device manufacturer, and users could not modify them to meet their own needs. This tight coupling of devices and functions increases the cost of deploying new network protocols. A programmable network, unlike a traditional one, lets equipment vendors and users build the network that best suits their needs, faster and more cheaply, without compromising equipment performance or quality. For example, they can develop custom network monitoring, analysis, and diagnosis systems to achieve unprecedented network visibility and correlation, which can dramatically reduce network operating costs. They can also jointly optimize the network and the applications that run on it to ensure the best user experience. Software-defined networking (SDN) was the first attempt to make devices (in particular, their control plane) programmable. In this architecture, an individual switch (or forwarding node) does not need to implement all the logic required to maintain a packet-forwarding policy. For example, it does not run routing protocols to build routing tables. Instead, it obtains precomputed policies from the network control plane. Controller–switch communication is handled through a standardized southbound application programming interface (API), such as OpenFlow, P4Runtime [14], or the Open vSwitch database management protocol.

A major reason for the high memory and processing overhead inherent in packet processing applications is that common network interface cards (NICs) use memory and I/O resources inefficiently. FlexNIC [15] implements a new network direct memory access (DMA) interface that allows operating systems and applications to install simple packet handling rules on the NIC. The NIC then applies these operations before transferring packets to host memory.

OpenFlow specifies a messaging API for controlling data plane functionality, and SDN applications use this API to implement network control. However, the data plane underlying OpenFlow cannot be changed. It executes only the algorithms defined by the OpenFlow specification, albeit with some flexibility. Data plane algorithms can be expressed in standard programming languages, but such code does not map well onto specialized hardware, such as high-speed application-specific integrated circuits (ASICs). Several data plane models have therefore been proposed as hardware abstractions. Data plane programming languages are tailored to these models and provide ways to express algorithms abstractly. The resulting code is then compiled for execution on packet processing nodes that support the corresponding data plane programming model. On this basis, the protocol-independent switch architecture (PISA) was proposed [16]. As a generic programmable data plane, PISA makes both protocol parsing and packet processing operations programmable. As network programmability matures, a single device will have to support multiple independently developed modules at the same time. Menshen addresses this by adding a lightweight isolation mechanism to RMT that isolates the processing of different modules on the same device [17].

A new generation of SDN solutions must also make the data forwarding plane programmable, so that software truly defines the network and network devices. The P4 language (Programming Protocol-independent Packet Processors) provides this capability. It removes the limitations that hardware devices impose on the data forwarding plane and makes packet parsing and forwarding programmable as well, opening networks and devices to users from top to bottom.

Disaggregated RMT (dRMT) separates computational resources (e.g., match-action processors) from memory and interconnects them via a crossbar [18]. Each match-action (MA) processor holds a P4 program and processes packets in an RTC manner. The FlexCore architecture extends the dRMT-style disaggregated design by storing an indirection data structure called a program description table (PDT) in the local memory of each MA processor [19]. This table contains metadata about the program's control flow and can be used to reconfigure the processor's processing flow.

The term programmable NIC now broadly refers to any NIC that is programmable enough to offload various virtual network functions or application services. By technical route, programmable NICs fall into three types: ASIC-based, multicore-based, and FPGA-based. An ASIC-based programmable NIC has fixed logic and can be programmed only through instructions (as with a CPU). It therefore needs other external programmable modules to realize the main functions of a programmable NIC. However, it runs at a high clock frequency and achieves a high performance-per-watt ratio through dedicated pipelines and other mechanisms. Its processing latency is also highly deterministic. For example, the Intel IPU uses an ASIC to implement the basic logic of the NIC's programmable switching layer and implements other data plane acceleration logic on multiple cores [20]. A multicore-based programmable NIC generally has one or more embedded core arrays. These arrays connect to the NIC's data and control paths through an on-chip bus, which makes the NIC programmable in much the same way as an embedded server. Examples include Mellanox’s BlueField [21] programmable NIC and Fungible’s DPU [22]. Multicore architectures have lower programming complexity and higher flexibility than ASICs. However, because they also use the von Neumann computing architecture, they consume more energy than ASIC or FPGA logic. FPGA-based programmable NICs fall between these two types. An FPGA is field programmable, so users can use a hardware description language (HDL) such as Verilog or VHDL to reconfigure the NIC's functional logic offline at the logic gate level, which gives it better programmability. The disadvantage is that its programming complexity is higher than that of a CPU. In addition, routing between configurable logic blocks consumes part of the timing margin, so an FPGA generally runs at a lower clock frequency than an ASIC built on the same process node.

The key idea of in-network computing is to exploit the unique capabilities of switches to perform part of the computation directly in the network, thereby reducing latency and improving performance. Programmable switches have been an important enabler of this new paradigm. However, PISA is often unsuitable for emerging in-network applications, which limits further development and prevents widespread adoption of in-network computing. Juniper therefore introduced Trio, a programmable architecture for in-network computing [23]. Trio is built on a custom processor core with an instruction set optimized for network applications. As a result, the chipset offers the performance of a traditional ASIC together with the flexibility of a fully programmable processor, so new features can be installed through software. Trio's flexible architecture allows it to support features and protocols developed long after the chipset is released.

3. Protocol-Independent Pipeline Design

To support processing of any type of packet, we propose deparser plus, which extends the RMT pipeline, and combine it with RMT to form the programmable SRv6 processor. The RMT architecture handles simple stateless processing, and deparser plus handles complex, stateful operations. The processor can therefore better adapt to the processing and forwarding of different protocol families, forming a protocol-independent pipeline architecture.

3.1. Overall Architecture of Programmable SRv6 Processor

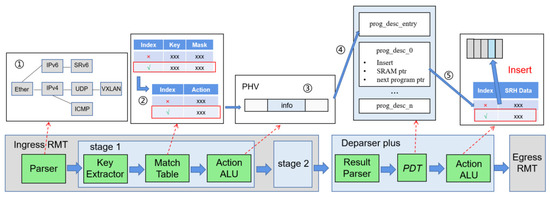

As shown in Figure 1, the processor consists of an ingress RMT, deparser plus, and an egress RMT. Deparser plus replaces the original deparser of the ingress RMT. The final phase of the ingress RMT therefore does not merge the packet payload with the header. Instead, it sends the parsing results (metadata and PHV) and the cached payload directly to deparser plus.

Figure 1. Overall architecture of the programmable SRv6 processor.

The ingress RMT uses a reduced instruction set. It is mainly responsible for receiving packets, determining their type, and performing simple preprocessing. It writes the parsing results needed for further processing, including the packet type and flow ID, into the metadata, which deparser plus then processes.

Deparser plus receives the PHVs and metadata sent by the ingress RMT and performs stateful packet processing based on the flow ID and packet type in the metadata. It modifies PHV fields according to the complex instructions in the action table and then sends the modified PHV to the egress RMT for further modification and forwarding.

The egress RMT uses a reduced instruction set. It receives the PHVs sent by deparser plus and rewrites their destination IP addresses. It then searches the forwarding table and adjacency table to generate layer 2 headers and finally forwards the packets.

When a packet enters the pipeline, the parser parses its header and extracts the fields to be matched in later stages. The ingress RMT performs simple processing on the packet, such as modifying specific fields and searching flow tables. Deparser plus then receives the parsing results and performs complex operations, such as inserting and encapsulating headers. The modified packet is passed to the egress RMT for final processing and then sent out.
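The data flow between the three stages can be sketched as a small Python model. This is an illustrative software analogy, not the hardware design. The `Metadata` fields mirror the packet type and flow ID named in the text. The function names, packet-type labels, and table contents are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Metadata:
    packet_type: str   # illustrative labels such as "ipv6" or "source_srv6"
    flow_id: int

@dataclass
class Packet:
    phv: dict          # parsed header fields (a stand-in for the PHV)
    payload: bytes
    trace: list = field(default_factory=list)

def ingress_rmt(pkt, flow_table):
    """Stateless preprocessing: classify the packet and record its flow ID."""
    key = (pkt.phv["src_ip"], pkt.phv["dst_ip"])
    flow_id, packet_type = flow_table.get(key, (0, "ipv6"))
    pkt.trace.append("ingress")
    return pkt, Metadata(packet_type, flow_id)

def deparser_plus(pkt, meta, action_table):
    """Complex step selected by (packet type, flow ID)."""
    for operation in action_table.get((meta.packet_type, meta.flow_id), []):
        operation(pkt.phv)
    pkt.trace.append("deparser_plus")
    return pkt

def egress_rmt(pkt, fib):
    """Final stateless rewrite and output-port lookup on the (possibly new) DA."""
    pkt.phv["hop_limit"] -= 1
    pkt.trace.append("egress")
    return fib.get(pkt.phv["dst_ip"], -1), pkt   # -1 stands for "no route"

# Usage: a packet classified as a hypothetical source-SRv6 flow gets a new DA.
flow_table = {("2001:db8::1", "2001:db8::2"): (7, "source_srv6")}
action_table = {("source_srv6", 7): [lambda phv: phv.update(dst_ip="fc00::a")]}
fib = {"fc00::a": 3}
pkt = Packet({"src_ip": "2001:db8::1", "dst_ip": "2001:db8::2", "hop_limit": 64}, b"data")
pkt, meta = ingress_rmt(pkt, flow_table)
pkt = deparser_plus(pkt, meta, action_table)
port, pkt = egress_rmt(pkt, fib)
print(port, pkt.trace)
```

Keeping metadata separate from the PHV mirrors the text's design. The ingress stage only classifies packets, so the stateful choice of action is deferred to deparser plus.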

The ingress RMT, deparser plus, and egress RMT are all programmable modules, so programmers can change the processor's functionality as needed. The processor therefore meets the requirements of a programmable data plane and realizes a protocol-independent pipelined processing architecture.

3.2. Programmable MAT

A match-action table (MAT) is the core module of the RMT architecture. As shown in Figure 2, in each stage the key extractor constructs a fixed-size key, which is looked up by exact match in the MAT. The lookup result serves as an index into the VLIW action table and identifies the action to perform. Each VLIW action table entry specifies which PHV fields each ALU uses as operands (i.e., the configuration of each ALU's operand crossbar). It also specifies which opcode each ALU controlled by the VLIW instruction executes (e.g., addition or subtraction). There is one ALU per PHV container, and each ALU's output connects directly to its own container, so no output crossbar is needed. After a stage's ALUs have modified the PHV, the modified PHV is passed to the next stage.
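The following Python sketch models a single MAT stage to make the key extractor, the exact-match lookup, and the one-ALU-per-container VLIW action concrete. It rests on several assumptions: 16-bit containers, a dictionary standing in for the exact-match table, and hypothetical opcode names `add`, `sub`, and `set`. Every ALU reads the stage's input PHV, so all slots act in parallel.

```python
MASK = 0xFFFF  # assume 16-bit PHV containers for illustration

def alu(opcode, a, b):
    """One container ALU; the opcode names are illustrative."""
    if opcode == "add":
        return (a + b) & MASK
    if opcode == "sub":
        return (a - b) & MASK
    if opcode == "set":
        return b & MASK
    raise ValueError(f"unknown opcode {opcode!r}")

def run_stage(phv, key_fields, match_table, vliw_table):
    """One match-action stage: exact-match lookup, then one VLIW action."""
    key = tuple(phv[i] for i in key_fields)        # key extractor
    index = match_table.get(key)                   # exact match in the MAT
    if index is None:
        return list(phv)                           # miss: PHV passes unchanged
    out = list(phv)
    # One VLIW slot per container: (opcode, source container or None, immediate).
    for c, slot in enumerate(vliw_table[index]):
        if slot is None:
            continue                               # container left untouched
        opcode, src, imm = slot
        b = phv[src] if src is not None else imm   # operand crossbar selection
        out[c] = alu(opcode, phv[c], b)            # ALU c writes only container c
    return out

# Usage: match on container 2 (an EtherType-like field) and update two containers.
phv = [10, 64, 0x86DD]
match_table = {(0x86DD,): 0}
vliw_table = [[("add", None, 1), ("sub", None, 1), None]]
print(run_stage(phv, [2], match_table, vliw_table))   # [11, 63, 34525]
```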

Figure 2. Example of PDT processing.

3.3. Protocol-Independent Programmable Deparser Plus

Deparser plus receives the metadata and PHVs sent by the ingress RMT, as shown in Figure 2. The metadata carries the packet type and flow ID, which determine the operations to perform on the packet. Deparser plus can operate on any location in the packet, for example, by inserting a segment routing header (SRH) or encapsulating VXLAN packets. It then sends the modified packet and parsing results to the egress RMT for modification and forwarding. Deparser plus is responsible for complex stateful operations, such as header compression, encapsulation, and protocol header insertion. It uses a complex instruction set and follows the RTC architecture of the NP forwarding model.

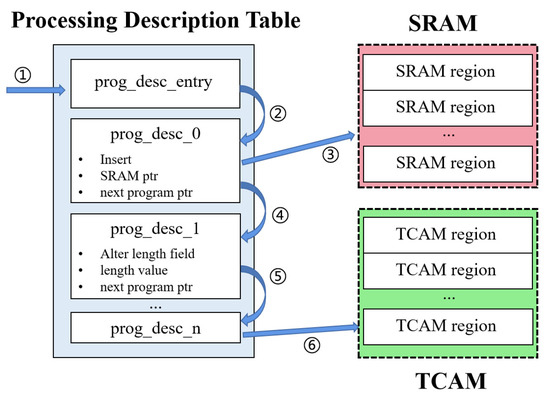

We use the processing description table (PDT) module in deparser plus to control run-to-completion processing of packets by the action module, as shown in Figure 3. The PDT can be configured by the result parser module or compiled by a developer from a P4 program. Each MA processor maintains a local PDT, and every packet arriving at the port first hits a default PDT entry that activates packet processing. Each entry stores the instruction for one program element, which represents a specific operation, together with a pointer to the next instruction. An instruction contains an opcode and other information needed to process data, such as pointers to the resources the instruction uses. A pointer can address either a static random access memory (SRAM) location or a ternary content addressable memory (TCAM) location, but only one pointer type is valid in a given PDT entry. Figure 3 shows the PDT entries and control flow for SRH insertion as an example.
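The PDT control flow can be sketched as a linked list of instruction entries walked in run-to-completion fashion. In this Python model, the entry layout, opcode names, SRAM address, and the `max_steps` guard are all hypothetical. The check that an entry carries at most one resource pointer reflects the SRAM/TCAM rule stated above.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PDTEntry:
    opcode: str
    next_entry: Optional[int]        # pointer to the next instruction; None ends the program
    sram_ptr: Optional[int] = None   # resource pointer into SRAM ...
    tcam_ptr: Optional[int] = None   # ... or into TCAM, but never both

    def __post_init__(self):
        if self.sram_ptr is not None and self.tcam_ptr is not None:
            raise ValueError("a PDT entry holds an SRAM or a TCAM pointer, not both")

def run_to_completion(pdt, packet, handlers, default_entry=0, max_steps=64):
    """Start at the default entry and follow next pointers until the program ends."""
    pc = default_entry
    for _ in range(max_steps):
        if pc is None:
            return packet
        entry = pdt[pc]
        handlers[entry.opcode](packet, entry)
        pc = entry.next_entry
    if pc is not None:
        raise RuntimeError("PDT program exceeded max_steps")
    return packet

# Usage: a hypothetical three-entry program for SRH insertion.
log = []
handlers = {
    "classify":   lambda pkt, e: log.append("classify"),
    "insert_srh": lambda pkt, e: log.append(f"insert SRH from SRAM[{e.sram_ptr}]"),
    "fix_length": lambda pkt, e: log.append("update payload length"),
}
pdt = {
    0: PDTEntry("classify", 1),                 # default entry hit by every packet
    1: PDTEntry("insert_srh", 2, sram_ptr=5),   # hypothetical SRH location
    2: PDTEntry("fix_length", None),
}
run_to_completion(pdt, packet={}, handlers=handlers)
print(log)
```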

Figure 3. Example of PDT processing.

As shown in Figure 1, deparser plus consists of the result parser, the PDT, and the action module. The result parser receives the metadata and PHVs from two FIFO queues in the previous stage. It determines the packet type from the metadata and searches the action table to obtain action instructions. Each instruction contains an opcode and additional fields that carry other information. The action table holds the specific operations corresponding to each instruction, such as inserting data and modifying the packet length. The result parser parses the instructions and configures the PDT accordingly. The PDT then sets up the program that calls the action module to process the PHV according to the instructions. The mapping table contains the data the PDT needs to process the header, such as an SRH. Under the control of the PDT, the action module obtains the offset, the length, and the data to insert or modify. The offset indicates the position in the packet to operate on. Finally, the modified PHV is sent to the egress RMT.

Simple operations such as add, subtract, and replace can be performed in the RMT pipeline, so deparser plus only needs instructions for complex operations. We have currently defined seven instructions: insert data into the packet header, delete packet header data, encapsulate the packet header, decapsulate the packet header, alter instructions in the action table, compress the packet header, and decompress the packet header. Their definitions and formats are shown in Table 2.
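Table 2's exact formats are not reproduced here, so the following Python sketch uses hypothetical opcode values and field names. It shows how an insert or delete instruction could act on header bytes. Each instruction carries an offset, a length, a mapping-table index, and a length-field offset, which are the fields listed for the insert instruction in Section 3.4.1. Only INSERT and DELETE are modelled; the other five operations are left unimplemented.

```python
from dataclasses import dataclass
from enum import Enum

class Op(Enum):
    """The seven deparser plus operations; the numeric codes are hypothetical."""
    INSERT = 1
    DELETE = 2
    ENCAP = 3
    DECAP = 4
    ALTER = 5          # alter instructions in the action table
    COMPRESS = 6
    DECOMPRESS = 7

@dataclass
class Instruction:
    op: Op
    offset: int         # byte offset where the operation applies
    length: int         # number of bytes inserted or deleted
    len_field: int      # offset of a 16-bit big-endian length field to adjust
    map_index: int = 0  # mapping-table index of the data to insert

def _adjust_len(hdr, pos, delta):
    value = int.from_bytes(hdr[pos:pos + 2], "big") + delta
    hdr[pos:pos + 2] = value.to_bytes(2, "big")

def execute(instr, hdr, mapping_table):
    """Apply INSERT or DELETE to a copy of the header bytes.

    Assumes the length field lies before instr.offset, so its position does
    not move when bytes are inserted or removed."""
    out = bytearray(hdr)
    if instr.op is Op.INSERT:
        data = mapping_table[instr.map_index]
        if len(data) != instr.length:
            raise ValueError("mapping-table entry does not match instruction length")
        out[instr.offset:instr.offset] = data
        _adjust_len(out, instr.len_field, instr.length)
    elif instr.op is Op.DELETE:
        del out[instr.offset:instr.offset + instr.length]
        _adjust_len(out, instr.len_field, -instr.length)
    else:
        raise NotImplementedError(f"{instr.op.name} is not modelled in this sketch")
    return out

# Usage: a 2-byte length field (value 4) followed by 4 bytes of header data.
hdr = bytearray(b"\x00\x04ABCD")
grown = execute(Instruction(Op.INSERT, offset=2, length=2, len_field=0), hdr, [b"XY"])
print(grown)                                                       # bytearray(b'\x00\x06XYABCD')
print(execute(Instruction(Op.DELETE, 2, 2, 0), grown, []) == hdr)  # True
```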

Table 2. Deparser plus instruction definitions.

3.4. Programmable SR Forwarding for Network Slice

The programmable SRv6 processor has a wide range of application scenarios, such as SR, VXLAN, SRoU [24], INT [25], and SFC orchestration [26]. Its SR support covers MPLS SR [27], SRv6, and multiple semantics for SIDs.

MPLS SR and SRv6 are the two main forms of SR technology. Thanks to its flexible SRH extension header, SRv6 offers much more powerful network programmability than MPLS SR.

3.4.1. SRv6 Mapping in Processor

SRv6 [28], that is, SR over IPv6, reuses existing IPv6 forwarding technology and realizes network programming through the flexible IPv6 extension header mechanism. To implement SR on the IPv6 forwarding plane, SRv6 defines the SRH as a type of IPv6 Routing extension header (Routing Type 4). The SRH specifies an explicit IPv6 path and stores the IPv6 segment list. During packet forwarding, the Segments Left and Segment List fields jointly determine the IPv6 destination address (DA), which guides the packet's forwarding path and behavior.
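The interplay of Segments Left and Segment List can be illustrated with a simplified Python model of RFC 8754 endpoint processing. The Segment List is stored in reverse order, so entry 0 is the last segment. An endpoint decrements Segments Left before copying Segment List[Segments Left] into the DA. The model omits TLVs, hop-limit handling, and error cases, and the addresses are documentation examples.

```python
import ipaddress
from dataclasses import dataclass

@dataclass
class SRH:
    segments_left: int
    segment_list: list   # Segment List[0] holds the LAST segment of the path

def build_srh(path):
    """Encode a path (first hop first) as an SRv6 source would."""
    sids = [ipaddress.IPv6Address(s) for s in path]
    srh = SRH(segments_left=len(sids) - 1, segment_list=list(reversed(sids)))
    return srh, srh.segment_list[srh.segments_left]    # DA = first segment

def endpoint_process(da, srh, local_sids):
    """Simplified SRH handling (no TLVs, no hop-limit or ICMP processing)."""
    if da not in local_sids:
        return "forward", da                      # transit: forward on the DA
    if srh.segments_left == 0:
        return "deliver", da                      # last segment: process payload
    srh.segments_left -= 1                        # decrement first ...
    return "forward", srh.segment_list[srh.segments_left]   # ... then update DA

# Usage: three SRv6 endpoints visited in order.
path = ["2001:db8:1::1", "2001:db8:2::1", "2001:db8:3::1"]
srh, da = build_srh(path)
for hop in path:
    action, da = endpoint_process(da, srh, {ipaddress.IPv6Address(hop)})
    print(hop, action, da, srh.segments_left)
```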

In this paper, we enable SRv6 forwarding by extending the deparser of the RMT pipeline into deparser plus. Using a source node as an example, Figure 3 shows how the SRv6 forwarding process maps onto the pipeline. The ingress RMT parses the packet, extracts its flow ID, and writes metadata indicating whether the packet needs an SRH inserted, before sending it to deparser plus. In deparser plus, the metadata determines the packet type. For an SRv6 packet at its source node, the result parser uses the flow ID and packet type to search the action table, obtains the insert instruction, and configures the PDT. The instruction contains the insert opcode, the insertion offset, the mapping table index, the length of the inserted data, and the offset of the packet length field.

The PDT module obtains the SRH through the mapping table index. It then invokes the action module to insert the SRH into the IPv6 packet at the offset and with the length given in the insert instruction, and adds the SRH length to the Payload Length field of the IPv6 header. The egress RMT performs normal SRv6 packet processing, such as replacing the destination IP address, rewriting the Segments Left field, and replacing the MAC address. It then searches the forwarding table to obtain the output port and sends out the SRv6 packet. A P4 program describes how the processor handles SRv6 packets, as shown in Algorithm 1.

Algorithm 1. The pseudocode of SRv6.

```
Input:  packet
Output: modified_packet

if (hop_limit == 0) drop(packet);
else if (SRH) {
    if (Des_IP == Local_IP) {
        if (Segment_Left == 0) {
            // last segment: remove the SRH and deliver locally
            Des_IP = Segment_List[0];
            Delete(SRH);
            hop_limit--;
            Submit(modified_packet);
        }
        else {
            // endpoint: decrement first so that Des_IP == Segment_List[Segment_Left]
            Segment_Left--;
            Des_IP = Segment_List[Segment_Left];
            hop_limit--;
            out_port = Look_up(FIB, Des_IP);
            Des_MAC = Look_up(ADJ, Des_IP);
            Forward(out_port, modified_packet);
        }
    }
    else {
        // transit node: plain IPv6 forwarding on Des_IP
        hop_limit--;
        out_port = Look_up(FIB, Des_IP);
        Des_MAC = Look_up(ADJ, Des_IP);
        Forward(out_port, modified_packet);
    }
}
else {
    flow_id = Look_up(Flow_table);
    if (flow_id == IPv6) {
        out_port = Look_up(FIB, Des_IP);
        Des_MAC = Look_up(ADJ, Des_IP);
        Forward(out_port, modified_packet);
    }
    else if (flow_id == source_SRv6) {
        instruction = Look_up(Action_table, flow_id);
        Config(PDT, instruction);
        SRH = Look_up(Mapping_table, instruction[index]);
        Insert(PHV, SRH, offset, SRH_length);
        payload_length = payload_length + SRH_length;
        // assumes the stored SRH already has Segment_Left = n - 1 (the first
        // segment), so the DA is set without decrementing Segment_Left
        Des_IP = Segment_List[Segment_Left];
        out_port = Look_up(FIB, Des_IP);
        Des_MAC = Look_up(ADJ, Des_IP);
        Forward(out_port, modified_packet);
    }
}
```
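The source branch of Algorithm 1 inserts the SRH, grows the payload length, and sets the DA. The following Python sketch shows these steps at the byte level under several assumptions: the RFC 8754 SRH wire format without TLVs, an IPv6 packet with no other extension headers, and zero Flags and Tag. It builds the SRH directly in its final form, with Segments Left = n - 1 and the DA set to the first segment.

```python
import ipaddress
import struct

IPV6_HDR_LEN = 40
NH_ROUTING = 43          # IPv6 Next Header value of the Routing header
SRH_ROUTING_TYPE = 4     # Routing Type assigned to the SRH (RFC 8754)

def make_srh(next_header, path):
    """SRH without TLVs; path is given first hop first."""
    sids = [ipaddress.IPv6Address(s).packed for s in reversed(path)]
    n = len(sids)
    # Next Header, Hdr Ext Len (8-octet units after the first 8), Routing Type,
    # Segments Left, Last Entry, Flags, Tag
    fixed = struct.pack("!BBBBBBH", next_header, 2 * n, SRH_ROUTING_TYPE, n - 1, n - 1, 0, 0)
    return fixed + b"".join(sids)

def insert_srh(packet, path):
    """Insert an SRH right after the fixed IPv6 header (no other extension headers assumed)."""
    pkt = bytearray(packet)
    srh = make_srh(pkt[6], path)                        # SRH inherits the old Next Header
    pkt[6] = NH_ROUTING
    payload_len = struct.unpack_from("!H", pkt, 4)[0] + len(srh)
    struct.pack_into("!H", pkt, 4, payload_len)         # payload_length += SRH_length
    pkt[24:40] = ipaddress.IPv6Address(path[0]).packed  # Des_IP = first segment
    pkt[IPV6_HDR_LEN:IPV6_HDR_LEN] = srh
    return bytes(pkt)

# Usage: a UDP-carrying IPv6 packet with a 5-byte payload, steered over two SIDs.
src = ipaddress.IPv6Address("2001:db8::1").packed
dst = ipaddress.IPv6Address("2001:db8::ff").packed
payload = b"hello"
packet = struct.pack("!IHBB", 0x60000000, len(payload), 17, 64) + src + dst + payload
out = insert_srh(packet, ["2001:db8:1::1", "2001:db8:2::1"])
print(len(packet), len(out), struct.unpack_from("!H", out, 4)[0])   # 45 85 45
```

Placing the SRH directly after the fixed header matches the offset-based insert described in Section 3.4.1. A packet that already carries other extension headers would need a different insertion offset.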

3.4.2. Multiple Semantics and Function for SID

The programmability of SRv6 comes from three sources. (1) Multiple segments can be combined to form an SRv6 path, that is, paths are editable. (2) In SRv6 network programming, segments define network instructions that indicate where to go and how to get there. The identifier of an SRv6 segment is called an SRv6 SID. An SRv6 SID is a 128-bit value in the form of an IPv6 address and consists of three parts: the locator, the function, and the arguments. Each 128-bit SID can be flexibly divided into these fields, and the meaning and length of each field can be customized. SIDs therefore provide flexible programming capability, that is, services are editable. (3) When packets are transmitted through the network, additional information sometimes needs to be carried on the forwarding plane. This can be accomplished by flexibly combining optional type–length–value (TLV) objects in the SRH, that is, applications are editable.
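Treating a SID as a 128-bit integer shows how the locator, function, and arguments fields are split. Field widths are deployment-specific. The 64/16/48-bit split used in this Python sketch is only an illustrative assumption.

```python
import ipaddress

def split_sid(sid, loc_bits, func_bits):
    """Split a 128-bit SRv6 SID into (locator, function, arguments) integers."""
    if not (0 <= loc_bits and 0 <= func_bits and loc_bits + func_bits <= 128):
        raise ValueError("invalid field lengths")
    value = int(ipaddress.IPv6Address(sid))
    arg_bits = 128 - loc_bits - func_bits
    locator = value >> (128 - loc_bits)
    function = (value >> arg_bits) & ((1 << func_bits) - 1)
    arguments = value & ((1 << arg_bits) - 1)
    return locator, function, arguments

# Usage: a 64-bit locator, 16-bit function, and 48-bit argument field.
loc, func, args = split_sid("2001:db8:1:2:100::5", loc_bits=64, func_bits=16)
print(hex(loc), hex(func), args)   # 0x20010db800010002 0x100 5
```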

In the programmable SRv6 processor, complex packet processing is performed by adding SID entries to the action table of deparser plus and using a SID's function field to look up the instructions to execute. The instructions are then passed to the PDT module, which controls the action module to execute the behavior that the function field represents.
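Below is a minimal Python sketch of looking up deparser plus instructions by a SID's function field. The action-table contents, the function values, and the layout of a 64-bit locator followed by a 16-bit function are hypothetical. The instruction names come from the instruction set listed in Section 3.3.

```python
import ipaddress

# Hypothetical action-table contents: function value -> instruction sequence.
ACTION_TABLE = {
    0x0100: ["insert"],
    0x0200: ["decapsulate"],
    0x0300: ["compress"],
}

def function_field(sid, loc_bits=64, func_bits=16):
    """Extract the function field, assuming a 64-bit locator and 16-bit function."""
    value = int(ipaddress.IPv6Address(sid))
    return (value >> (128 - loc_bits - func_bits)) & ((1 << func_bits) - 1)

def instructions_for(sid):
    """Return the instructions the PDT should run for this SID."""
    func = function_field(sid)
    if func not in ACTION_TABLE:
        raise LookupError(f"no action-table entry for function {func:#06x}")
    return ACTION_TABLE[func]

print(instructions_for("2001:db8:1:2:200::"))   # ['decapsulate']
```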

3.4.3. Support for uSID

SRv6 introduces much higher protocol overhead than SR-MPLS and therefore places high demands on network devices. SRv6 has major advantages in network programmability and load balancing, but realizing them requires urgently addressing SRv6's protocol overhead, maximum transmission unit (MTU), and hardware requirements.

These issues reduce to a single question: how can the encoding efficiency of SRv6 segments be improved? To solve this problem, the uSID (micro SID) and G-SID (generalized SID) header compression schemes have been proposed [29,30]. Cisco extended the existing SRv6 framework and defined a new segment type, the uSID.

In a normal SRH, every SID carries the same common prefix, so these identical parts are redundant. uSID extracts the common prefix (the network segment) from the segment list and uses it as the prefix block of the compressed header. The node ID and function ID of each SID serve as the compressed SID (uSID), and together with the common prefix they form the compressed segment list.

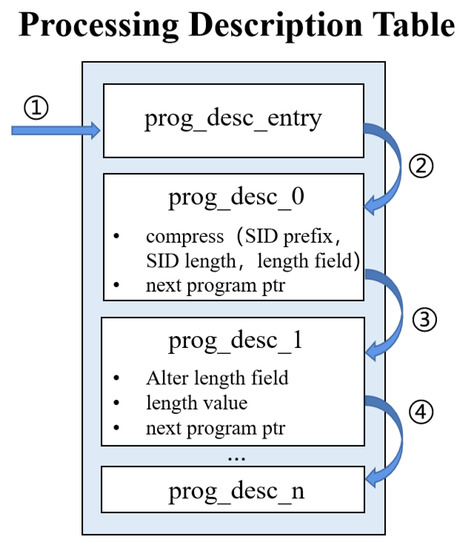

The programmable SRv6 processor supports uSID header compression, which is a stateful process. When SRv6 packets enter the pipeline, only the packet type and flow ID are written into the metadata. The result parser analyzes the metadata and searches the action table to obtain the compress instruction to execute. The instruction contains the compress opcode, SID prefix, SID length, packet length field offset, and other fields, which are used to configure the PDT module. Figure 4 shows a PDT program for compressing the uSID header. The PDT module sends the compress instruction and SID prefix to the action module for SRH compression. The action module places the uSID prefix in the first 16 bits of a 128-bit SID and then appends the last 16 bits of each subsequent SID that shares the same prefix. Once seven uSIDs have been packed, a new 128-bit SID is started. Compression continues until a SID with a different prefix is encountered or all SIDs have been compressed. Finally, the difference between the header length before and after compression is calculated and used as the offset that guides re-fragmentation and forwarding of the packet.
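The packing rule described above can be sketched in Python: a 16-bit prefix followed by up to seven 16-bit uSIDs per 128-bit SID, with the uSID taken from the last 16 bits of each SID. Two details are assumptions not stated in the text. Unused uSID slots are zero-filled, and a SID with a different prefix starts a new 128-bit container rather than being left uncompressed.

```python
import ipaddress

PREFIX_BITS = 16                                   # prefix width given in the text
USID_BITS = 16                                     # uSID width given in the text
PER_CONTAINER = (128 - PREFIX_BITS) // USID_BITS   # = 7 uSIDs per 128-bit SID

def _pack(prefix, usids):
    """Prefix in the top 16 bits, uSIDs left to right, unused slots zero."""
    value = prefix << (128 - PREFIX_BITS)
    for i, usid in enumerate(usids):
        value |= usid << (128 - PREFIX_BITS - USID_BITS * (i + 1))
    return ipaddress.IPv6Address(value)

def compress_usid(path):
    """Compress a segment list (first hop first) into 128-bit uSID containers."""
    groups = []                                    # [(prefix, [usid, ...]), ...]
    for sid in path:
        value = int(ipaddress.IPv6Address(sid))
        prefix, usid = value >> (128 - PREFIX_BITS), value & 0xFFFF
        if groups and groups[-1][0] == prefix and len(groups[-1][1]) < PER_CONTAINER:
            groups[-1][1].append(usid)
        else:
            groups.append((prefix, [usid]))        # new container: prefix change or full
    return [_pack(prefix, usids) for prefix, usids in groups]

def length_saving(path):
    """Bytes removed from the segment list; the value used to fix length fields."""
    return 16 * (len(path) - len(compress_usid(path)))

path = ["fc00::1", "fc00::2", "fc00::3"]
print(compress_usid(path), length_saving(path))   # [IPv6Address('fc00:1:2:3::')] 32
```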

Figure 4. The uSID compression program in the PDT.

4. Application and Validation

4.1. Prototype System

The prototype system in this paper is implemented on the Corundum prototype platform running on a VCU118 FPGA development board [31]. Corundum is an open-source, FPGA-based NIC prototyping platform for developing network interfaces at 100 Gbps and beyond. It consists of three nested modules: the FPGA top-level module, the interface module, and the port module. The top-level module contains the PCIe IP core, the DMA interface, the PTP hardware clock, and the Ethernet interfaces, as well as one or more interface module instances. Each interface module contains an operating-system-level Ethernet interface, queue management, packet descriptor and completion-handling logic, and one or more port module instances. The port module provides the AXI-Stream interface to the MAC, together with the transmit scheduler and the transmit and receive engines. Corundum provides the core functions needed for real-time, high-speed operation, including a high-performance datapath, 10G/100G Ethernet interfaces, PCIe Gen 3, and scalable queue management and transmit scheduling. Because it combines multiple network interfaces, multiple ports per interface, and per-port transmit scheduling, Corundum can be used to develop and test advanced network applications. Corundum supports a variety of FPGA boards, including the VCU118 development board.

The prototype system test environment is shown in Figure 5. In our system, Corundum is connected via Peripheral Component Interconnect Express (PCIe) to a host equipped with an Intel Xeon E5645 CPU clocked at 2.40 GHz, and via a QSFP28 (quad small form-factor pluggable 28) interface to a Spirent FX3-100GO-T2 tester [32]. The SRv6 processor is built by combining two RMT pipelines with the deparser plus module.

Figure 5.

Prototype system test environment.

4.2. Application Scenarios for the Prototype System

The programmable SRv6 processor can support a wide range of application scenarios, such as VXLAN, SRoU, INT, and SFC. Table 3 compares and analyzes these four application scenarios.

Table 3.

Comparison and analysis of application scenarios.

VXLAN encapsulates the Layer 2 frames of a virtual network in UDP packets carried over the physical network. In this architecture, the VXLAN headers can be added with the encapsulate instruction.

SRoU essentially inserts an SRH into the UDP payload. Its processing in this architecture is similar to that of SRv6 packets: SRoU packets can be generated and processed by changing the position at which the SRH is inserted.

INT (in-band network telemetry) is a framework in which the data plane collects and reports network state without intervention from the control plane. As a telemetry packet passes through a switching device, the telemetry instructions tell each telemetry-capable device which network state information to collect and write into the packet. In this architecture, the INT header and telemetry data can be inserted with the insert instruction to generate and process INT packets.

SFC steers network traffic through a sequence of service functions in the order required by the service logic. The SR forwarding paradigm can also be applied to SFC. The insert instruction can add SFC headers to different traffic flows, and the delete instruction can remove the SFC header before the packet is passed to the host for further processing.
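The insert and delete instructions used for INT and SFC headers can be modeled as byte-level splices at a given offset, with the packet length field adjusted afterwards. The following is a hypothetical software model; the function names, the 16-bit big-endian length field, and the example SFC header bytes are assumptions, not the deparser plus instruction encoding.

```python
import struct


def insert_header(packet: bytes, offset: int, header: bytes) -> bytes:
    """Hypothetical 'insert': splice header bytes into the packet at offset."""
    if not 0 <= offset <= len(packet):
        raise ValueError("offset outside packet")
    return packet[:offset] + header + packet[offset:]


def delete_header(packet: bytes, offset: int, length: int) -> bytes:
    """Hypothetical 'delete': remove length bytes starting at offset."""
    if offset < 0 or length < 0 or offset + length > len(packet):
        raise ValueError("range outside packet")
    return packet[:offset] + packet[offset + length:]


def adjust_length_field(packet: bytearray, field_offset: int, delta: int) -> None:
    """Add delta to a 16-bit big-endian length field, in place."""
    (old,) = struct.unpack_from("!H", packet, field_offset)
    struct.pack_into("!H", packet, field_offset, old + delta)


if __name__ == "__main__":
    # Toy 6-byte packet whose first two bytes hold its total length (6).
    pkt = bytes([0x00, 0x06, 0xAA, 0xBB, 0xCC, 0xDD])
    sfc = bytes([0x5F, 0xC0])  # hypothetical 2-byte SFC header
    grown = bytearray(insert_header(pkt, 2, sfc))
    adjust_length_field(grown, 0, len(sfc))
    print(grown.hex())  # 00085fc0aabbccdd
    restored = bytearray(delete_header(bytes(grown), 2, len(sfc)))
    adjust_length_field(restored, 0, -len(sfc))
    print(bytes(restored) == pkt)  # True
```

Keeping the length update separate from the splice mirrors the idea that the instruction carries the offset of the packet length field as its own parameter.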

4.3. Performance Analysis

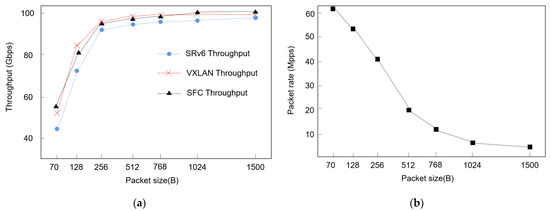

The programmable SRv6 processor was tested with SRv6, SFC, and VXLAN packets of different sizes. Each SRv6 packet carried up to five segments, and each SFC header contained a unique SFC ID.

If the packet needed to be encapsulated as a VXLAN packet, the outer IP header, outer UDP header, and VXLAN header were generated based on the IP address and VXLAN network identifier (VNI) in the action table. The outer MAC header was generated by the egress RMT.
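As an illustration of what the action-table VNI feeds into, the sketch below builds the 8-byte VXLAN header and the outer UDP header defined in RFC 7348. The outer IP header is omitted because this section does not state whether it is IPv4 or IPv6; the function names and the caller-supplied UDP source port are assumptions.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags (8) | reserved (24) | VNI (24) | reserved (8)."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def outer_udp_header(src_port: int, inner_frame_len: int) -> bytes:
    """8-byte outer UDP header; the checksum is left as zero."""
    length = 8 + 8 + inner_frame_len  # UDP header + VXLAN header + inner Ethernet frame
    return struct.pack("!HHHH", src_port, VXLAN_UDP_PORT, length, 0)


if __name__ == "__main__":
    vni = 0x123456                              # hypothetical VNI from the action table
    print(vxlan_header(vni).hex())              # 0800000012345600
    print(outer_udp_header(49152, 100).hex())   # c00012b500740000
```

RFC 7348 recommends a zero UDP checksum for IPv4 outer headers and suggests deriving the source port from a hash of the inner headers; here the source port is simply passed in.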

In our current implementation, the number of clock cycles the pipeline needs to process a packet depends on the packet size, because the cycles spent on the header and on the payload scale with their respective lengths. As shown in Figure 6, with the Corundum prototype platform's interface rate of up to 100 Gbps, deparser plus processes packets at line rate in Corundum once the packet size reaches 512 bytes.
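The 512-byte threshold can be related to line-rate arithmetic. The sketch below computes the packet rate needed to fill a 100 Gbps link, assuming 20 bytes of per-frame Ethernet overhead (preamble, SFD, and minimum inter-frame gap) on top of the stated packet size, and compares it with a simple datapath model in which a packet takes one cycle per bus word plus a fixed number of extra cycles. The bus width, clock frequency, and extra-cycle count are hypothetical values, not the parameters of this design.

```python
import math

ETH_OVERHEAD_BYTES = 20  # preamble + SFD (8 B) and minimum inter-frame gap (12 B)


def line_rate_pps(frame_bytes: int, link_bps: float = 100e9) -> float:
    """Packets per second needed to saturate the link at this frame size."""
    return link_bps / ((frame_bytes + ETH_OVERHEAD_BYTES) * 8)


def datapath_pps(frame_bytes: int, bus_bytes: int, clock_hz: float, extra_cycles: int) -> float:
    """Hypothetical datapath: one cycle per bus word plus fixed per-packet cycles."""
    cycles = math.ceil(frame_bytes / bus_bytes) + extra_cycles
    return clock_hz / cycles


if __name__ == "__main__":
    for size in (64, 256, 512, 1024):
        wire = line_rate_pps(size)
        # Made-up parameters: 64-byte (512-bit) bus, 250 MHz, 2 extra cycles
        dp = datapath_pps(size, bus_bytes=64, clock_hz=250e6, extra_cycles=2)
        print(f"{size:5d} B: line rate {wire / 1e6:7.2f} Mpps, "
              f"datapath {dp / 1e6:7.2f} Mpps, line rate reached: {dp >= wire}")
```

With these made-up parameters the datapath falls short of line rate at 64 and 256 bytes but keeps up at 512 and 1024 bytes (line rate for 512-byte packets is about 23.50 Mpps), showing how a fixed per-packet cost penalizes small packets. Whether Figure 6 counts the Ethernet overhead the same way is not stated.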

Figure 6.

Results for performance. (a) Throughput of the SRv6 processor; (b) packet rate of the SRv6 processor.

Table 4 shows the resource utilization of deparser plus on the Corundum prototype platform in terms of configurable logic blocks (CLBs), CLB lookup tables (LUTs), CLB registers, block RAM tiles, and F7 multiplexers (F7 Muxes). The experimental results show that deparser plus can perform protocol-independent packet processing at line rate with low resource usage.

Table 4.

Resource utilization of VCU118 FPGA.

4.4. Remarks

The design of the SRv6 processor shows that complex packet operations can be handled in a programmable pipeline. The design has not yet been optimized for performance. For instance, with additional design effort the data width of the pipeline could be increased, improving both throughput and latency. Latency can also be reduced by optimizing how packets are read out of the packet cache, updated, and sent out. Moving from an FPGA to an ASIC would allow a higher clock frequency and a wider datapath. Deeper pipelining or multiple parallel parsers could further increase throughput.

5. Conclusions and Outlook

This article extends the deparser module of the RMT architecture into deparser plus, which uses a complex instruction set to support complex stateful packet processing. The ingress and egress RMT pipelines, which use a reduced instruction set, are combined with deparser plus to form a programmable architecture, the programmable SRv6 processor, for SRv6 packet processing. Testing shows that deparser plus adds only moderate overhead to existing RMT pipelines while processing packets at line rate for packet sizes of 512 bytes and above.

Currently, seven instructions are defined in deparser plus. Together with the reduced instructions of the RMT architecture, these seven complex instructions cover most of the operations required for complex packet processing. Future work could extend the complex instruction set of deparser plus by using the existing alter instruction to insert new instructions into the action table, thereby supporting more complex packet processing.

Author Contributions

Conceptualization, Z.L. and G.L.; methodology, J.W.; validation, Z.L., X.Y. and J.W.; formal analysis, Z.L.; investigation, Z.L.; resources, Z.L.; data curation, J.W.; writing—original draft preparation, Z.L.; writing—review and editing, G.L.; visualization, X.Y.; supervision, G.L.; project administration, X.Y.; funding acquisition, G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China, grant number 2020YFB1805603.

Conflicts of Interest

The authors declare no conflict of interest.

References

- McKeown, N.; Anderson, T.; Balakrishnan, H.; Parulkar, G.; Peterson, L.; Rexford, J.; Shenker, S.; Turner, J. OpenFlow: Enabling innovation in campus networks. ACM SIGCOMM Comput. Commun. Rev. 2008, 38, 69–74.

- Bosshart, P.; Gibb, G.; Kim, H.S.; Varghese, G.; McKeown, N.; Izzard, M.; Mujica, F.; Horowitz, M. Forwarding metamorphosis: Fast programmable match-action processing in hardware for SDN. ACM SIGCOMM Comput. Commun. Rev. 2013, 43, 99–110.

- Ma, Z.; Bi, J.; Zhang, C.; Zhou, Y.; Dogar, A.B. CacheP4: A behavior-level caching mechanism for P4. In Proceedings of the SIGCOMM Posters and Demos, Los Angeles, CA, USA, 21–25 August 2017; pp. 108–110.

- Chowdhury, N.M.M.K.; Boutaba, R. A survey of network virtualization. Comput. Netw. 2010, 54, 862–876.

- Sadeeq, M.M.; Abdulkareem, N.M.; Zeebaree, S.R.; Ahmed, D.M.; Sami, A.S.; Zebari, R.R. IoT and Cloud computing issues, challenges and opportunities: A review. Qubahan Acad. J. 2021, 1, 1–7.

- Baykara, M.; DAŞ, R. SoftSwitch: A centralized honeypot-based security approach using software-defined switching for secure management of VLAN networks. Turk. J. Electr. Eng. Comput. Sci. 2019, 27, 3309–3325.

- Salazar-Chacón, G.; Naranjo, E.; Marrone, L. Open networking programmability for VXLAN Data Centre infrastructures: Ansible and Cumulus Linux feasibility study. Rev. Ibérica De Sist. E Tecnol. De Inf. 2020, E32, 469–482.

- Ezra, P.J.; Misra, S.; Agrawal, A.; Oluranti, J.; Maskeliunas, R.; Damasevicius, R. Secured communication using virtual private network (VPN). In Cyber Security and Digital Forensics; Springer: Singapore, 2022; pp. 309–319.

- Wijethilaka, S.; Liyanage, M. Survey on network slicing for Internet of Things realization in 5G networks. IEEE Commun. Surv. Tutor. 2021, 23, 957–994.

- Barakabitze, A.A.; Ahmad, A.; Mijumbi, R.; Hines, A. 5G network slicing using SDN and NFV: A survey of taxonomy, architectures and future challenges. Comput. Netw. 2020, 167, 106984.

- Hawilo, H.; Liao, L.; Shami, A.; Leung, V.C. NFV/SDN-based vEPC solution in hybrid clouds. In Proceedings of the 2018 IEEE Middle East and North Africa Communications Conference (MENACOMM), Jounieh, Lebanon, 18–20 April 2018; pp. 1–6.

- Pujolle, G. Fabric, SD-WAN, vCPE, vRAN, vEPC; Wiley: Hoboken, NJ, USA, 2020.

- Ventre, P.L.; Salsano, S.; Polverini, M.; Cianfrani, A.; Abdelsalam, A.; Filsfils, C.; Camarillo, P.; Clad, F. Segment routing: A comprehensive survey of research activities, standardization efforts, and implementation results. IEEE Commun. Surv. Tutor. 2020, 23, 182–221.

- P4 Runtime. Available online: https://p4.org/p4-runtime/ (accessed on 7 September 2022).

- Kaufmann, A.; Peter, S.; Sharma, N.K.; Anderson, T.; Krishnamurthy, A. High performance packet processing with FlexNIC. In Proceedings of the Twenty-First International Conference on Architectural Support for Programming Languages and Operating Systems, Atlanta, GA, USA, 2–6 April 2016; pp. 67–81.

- Bosshart, P.; Daly, D.; Gibb, G.; Izzard, M.; McKeown, N.; Rexford, J.; Schlesinger, C.; Talayco, D.; Vahdat, A.; Varghese, G.; et al. P4: Programming protocol-independent packet processors. ACM SIGCOMM Comput. Commun. Rev. 2014, 44, 87–95.

- Wang, T.; Yang, X.; Antichi, G.; Sivaraman, A.; Panda, A. Isolation Mechanisms for High-Speed Packet-Processing Pipelines. In Proceedings of the 19th USENIX Symposium on Networked Systems Design and Implementation (NSDI 22), Renton, WA, USA, 4–6 April 2022; pp. 1289–1305.

- Chole, S.; Fingerhut, A.; Ma, S.; Sivaraman, A.; Vargaftik, S.; Berger, A.; Mendelson, G.; Alizadeh, M.; Chuang, S.T.; Keslassy, I.; et al. dRMT: Disaggregated programmable switching. In Proceedings of the Conference of the ACM Special Interest Group on Data Communication, Los Angeles, CA, USA, 21–25 August 2017; pp. 1–14.

- Xing, J.; Hsu, K.F.; Kadosh, M.; Lo, A.; Piasetzky, Y.; Krishnamurthy, A.; Chen, A. Runtime programmable switches. In Proceedings of the 19th USENIX Symposium on Networked Systems Design and Implementation (NSDI 22), Renton, WA, USA, 4–6 April 2022; pp. 651–665.

- Intel Infrastructure Processing Unit for Data Centers. Available online: https://www.intel.com/content/www/us/en/newsroom/news/infrastructure-processing-unit-data-center.html#gs.abuzma (accessed on 12 September 2022).

- NetXtreme® Ethernet Adapters. Available online: https://www.broadcom.com/how-to-buy/hardware-partners/ethernet-network-adapters/broadcom (accessed on 12 September 2022).

- ConnectX-5 Product Brief. Available online: https://www.nvidia.com/en-us/networking/ethernet/connectx-5/ (accessed on 12 September 2022).

- Yang, M.; Baban, A.; Kugel, V.; Libby, J.; Mackie, S.; Kananda, S.S.R.; Wu, C.H.; Ghobadi, M. Using Trio: Juniper Networks' programmable chipset for emerging in-network applications. In Proceedings of the ACM SIGCOMM 2022 Conference, Amsterdam, The Netherlands, 22–26 August 2022; pp. 633–648.

- Exploration of Cloud Native Routing Architecture. Available online: https://www.136.la/jingpin/show-121807.html (accessed on 12 September 2022).

- Tan, L.; Su, W.; Zhang, W.; Lv, J.; Zhang, Z.; Miao, J.; Liu, X.; Li, N. In-band network telemetry: A survey. Comput. Netw. 2021, 186, 107763.

- Sun, G.; Li, Y.; Yu, H.; Vasilakos, A.V.; Du, X.; Guizani, M. Energy-efficient and traffic-aware service function chaining orchestration in multi-domain networks. Future Gener. Comput. Syst. 2019, 91, 347–360.

- Segment Routing with MPLS Data Plane. Available online: https://datatracker.ietf.org/doc/html/rfc8660 (accessed on 8 September 2022).

- Wu, Y.; Zhou, J. Dynamic Service Function Chaining Orchestration in a Multi-Domain: A Heuristic Approach Based on SRv6. Sensors 2021, 21, 6563.

- Tulumello, A.; Mayer, A.; Bonola, M.; Lungaroni, P.; Scarpitta, C.; Salsano, S.; Abdelsalam, A.; Camarillo, P.; Dukes, D.; Clad, F.; et al. Micro SIDs: A solution for efficient representation of segment IDs in SRv6 networks. In Proceedings of the 2020 16th International Conference on Network and Service Management (CNSM), Izmir, Turkey, 2–6 November 2020; pp. 1–10.

- Cheng, W.; Liu, Y.; Jiang, W.; Zhang, G.; Yang, F.; Han, T. G-SID: SRv6 Header Compression Solution. In Proceedings of the 2020 IEEE 20th International Conference on Communication Technology (ICCT), Nanning, China, 28–31 October 2020; pp. 126–130.

- Forencich, A.; Snoeren, A.C.; Porter, G.; Papen, G. Corundum: An open-source 100-Gbps NIC. In Proceedings of the 2020 IEEE 28th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Fayetteville, AR, USA, 3–6 May 2020; pp. 38–46.

- Spirent Quint-Speed High-Speed Ethernet Test Modules. Available online: https://assets.ctfassets.net/wcxs9ap8i19s/12bhgz12JBkRa66QUG4N0L/af328986e22b1694b95b290c93ef6c21/Spirent_fX3_HSE_Module_datasheet.pdf (accessed on 7 September 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).