1. Introduction

According to 2020 data from the Korea National Statistical Office on registered persons with disabilities by gender and disability type, there are more than 2.61 million people with disabilities in Korea, of whom more than 250,000 are blind. More than 45% of them are engaged in economic activities and interact actively with the wider world.

Korea has implemented a “special university admission system for those subject to special education” since 1995, and the number of universities and departments participating in it has steadily expanded [1]. Since the 1998 College Scholastic Ability Test, which provided test papers recorded on cassette tape for blind students and enlarged test papers for low-vision students, blind students have shown increasing interest in entering college [2]. As a result, the educational attainment of students with disabilities in Korea has risen [3].

The college admission rate of students with disabilities who graduated from high school was 50.9% in 2020, more than twice the 24.5% recorded in 2004. Blind students want to go to college to realize their dreams, to pursue a variety of careers, and to overcome prejudice against disability; in short, their needs for higher education are no different from those of other students [4]. However, of 32 visually impaired students attending college, 19 (59.4%) said that they were seriously considering transferring, and 16 (50%) said that they had considered taking a leave of absence [1,5].

In recent years, the climate surrounding college admission for students with disabilities has shifted considerably toward guaranteeing their rights and opportunities. However, the various types of disability are still not adequately considered, and practical support remains hard to obtain [6]. Visually impaired students adapting to college life face difficulties across daily life and social activities because of the limited scope and diversity of their experiences, limited mobility, and limited interaction with their environment. They also need help from others to acquire the skills required to perform various tasks independently [3,6].

Universities accommodate students with disabilities in many respects [7], but many forms of practical assistance are still lacking. As a result, the difficulties that visually impaired students encounter on entering college are left for them to resolve on their own. In some cases, the visible outcome is a leave of absence, an academic warning, or withdrawal [6].

To date, studies on the education of students with disabilities have focused on supporting admission opportunities in the transition from secondary to higher education, and studies on adaptation to college life after admission are insufficient [8]. An analysis of previous research [9,10,11] shows that many studies address blind adults as well as blind children, but studies in Korea are mostly child-centered. Therefore, further research needs to extend to adults, including blind college students [5,12], and support programs that meet the needs of blind college students, such as scribing (ghost-writing), word-processing assistance, and voice-supported computers, need to be developed [4].

In this paper, we design and implement VTR4VI, short for “Voice to Report for Visually Impaired”, a software system for students with visual impairment or blindness that converts their speech into a report. With VTR4VI, these students can write reports independently, without help from others. Many programs can generate reports or take notes using speech recognition. However, because they are not designed for students with visual impairment or blindness, these users find it difficult to check in real time whether the content has been generated correctly. In addition, existing programs do not provide voice notifications or voice-command-based functionality, which causes considerable inconvenience and difficulty for students with visual impairment or blindness. VTR4VI provides a simple interface and voice feedback so that visually impaired users can operate it without assistance. To implement VTR4VI, we focus on the following three design principles:

First, all commands are given by voice and are easy for people with visual impairment or blindness to use;

Second, voice feedback is provided whenever a function is performed, so that users know the current progress of the report;

Third, all commands can be customized in any language so that people with visual impairment or blindness can use the app easily.

2. Related Research

This section describes applications and services with functions similar to those of VTR4VI that can be used by blind people. It covers seven voice-based services and compares them with VTR4VI. Finally, we discuss the limitations of the existing services.

2.1. Relevant Existing Voice-Based Services

Many services allow users to study or write reports by voice. However, few of them are designed with the accessibility needs of visually impaired users in mind. This section describes the characteristics of various services that people with and without disabilities use to study or write reports by voice.

2.1.1. Job Access with Speech (JAWS)

JAWS [13] is a screen reader developed for computer users who cannot see screen content or navigate with a mouse because of a visual impairment. JAWS provides speech and braille output for popular applications on PCs. Users with visual impairments can use JAWS to control all key functions of the Microsoft Windows operating system through keyboard shortcuts and voice feedback. Every aspect of JAWS can be customized, including all keystrokes and settings such as reading speed, the level of detail used when reading punctuation, and hints. However, to use the many functions needed to operate modern software effectively, users must memorize a large number of specific keystrokes.

2.1.2. Dragon NaturallySpeaking

Nuance’s Dragon NaturallySpeaking [14] can quickly and accurately create documents and reports by voice using a speech engine based on deep learning technology. Dragon NaturallySpeaking is standard speech-recognition word-processing software that learns the speaker’s voice and can automatically and quickly adapt to vernacular expressions, intonation, and personal pronunciation [15].

Users can also synchronize it with Dragon Anywhere, a separate cloud-based mobile dictation solution, to create and edit documents by voice on iOS or Android devices. Several versions are available, so users can choose one according to their usage and situation. However, the program can be a financial burden because users must pay more than USD 150 a year.

2.1.3. Web-Based Co-Authoring Framework for Blind (WCFB)

WCFB [16] provides an interactive, intelligent interface that blind and visually impaired people can use to collaborate on educational activities such as editing, writing, or reviewing documents. The system sends instant voice notifications of actions and events occurring in the shared environment and offers special voice command inputs that allow visually impaired people to interact efficiently with the application.

It also supports communication services that make it easier for blind and visually impaired people to exchange information. Although the system targets the visually impaired and offers voice notifications and voice command input, it can only be used in specific situations, such as a shared environment, and is therefore not suitable for visually impaired individuals writing reports on their own.

2.1.4. Google Live Transcribe

Google Live Transcribe [17], powered by Google Cloud [18], converts spoken conversations into captions in real time and supports over seventy languages spoken by 80% of the world’s population. The system uses automatic speech recognition to detect spoken language, convert it into readable text, and display it as captions, so that deaf and hard-of-hearing people can communicate seamlessly and gain proper access to information.

Automatic speech recognition has improved in recent years, and Google now applies automatic captioning to everyday conversations, making real-time conversations more accessible to deaf and hard-of-hearing users. Users can select, copy, and paste the transcribed text, for example to move it to another platform such as Google Docs. Although the system is intended primarily for deaf and hard-of-hearing users, its technology for detecting spoken language and converting it into text is also valuable for the visually impaired. However, although the system can be used to create documents by voice, it does not provide voice notifications, and its interface is designed for sighted users. Therefore, it is not suitable for visually impaired people writing reports.

2.1.5. Trint

Trint [19] is a collaborative platform for searching, editing, and reusing audio and video content using an automated speech-to-text algorithm. Trint converts audio and video files to text and also provides subtitles and search across audio and video files. Because it relies on Trint’s automatic transcription and verification tools instead of time-consuming manual transcription, it is much faster and cheaper. The price is USD 15 per hour, and the basic version costs USD 120 per month. It supports more than one hundred languages, including Korean, English, European Spanish, European French, German, and Italian. However, because Korean is not supported free of charge, it is inconvenient for people with visual impairment or blindness in Korea. In addition, because reports cannot be edited or modified with voice feedback or voice commands, it is considerably inconvenient for the visually impaired.

2.1.6. Braina

Brainasoft’s Braina [20] is an intelligent personal assistant and speech recognition software for Windows PCs. Over a Wi-Fi network, its iOS and Android apps let users use their smartphone as a wireless microphone to operate Braina on their PC. The Braina Lite version, which supports only English, is free, whereas the multilingual Braina Pro version, which includes Korean voice commands, costs USD 49 a year. In addition to converting speech into text, the Braina app provides voice control for notification settings and web browsing [21]. However, because users can neither verify the status of a report nor modify it in real time while it is being written, it is difficult for people with visual impairment or blindness to use.

2.1.7. Google Docs

Google Docs [22] is a word processor included in Google’s free web-based office suite. Google Docs is available as a web application, as apps for Android, iOS, and Windows, and as a desktop application on Google Chrome OS, and it is compatible with Microsoft Office file formats. It allows users to create, view, and edit files online while collaborating with others in real time. Using the Chrome browser on a computer, users can also type, edit, and format documents by voice. However, the application does not provide voice feedback, so visually impaired users who create a document with Google Docs cannot know its current state.

2.2. Limitations of Existing Services

Table 1 summarizes existing voice-based learning services that can be used by both visually impaired and sighted users. For each service, the table lists the target users and purpose, the input and output types, the user group, and the platform on which the service runs. JAWS and WCFB were developed with visually impaired users in mind. JAWS is a screen reader used worldwide that reads the screen aloud; however, reading alone does not make it convenient to write reports or other documents. WCFB provides voice feedback while documents are being written but is less portable because it runs only on the desktop. The remaining voice-based learning services, Dragon NaturallySpeaking, Google Live Transcribe, Trint, Braina, and Google Docs, are designed for sighted users and therefore do not offer the visually impaired convenient access. All of these programs accept voice input, but only JAWS and WCFB provide voice feedback. When visually impaired users write documents, feedback is needed to verify that the input is accurate, yet the other programs provide no feedback of any kind.

In addition, visually impaired people need extra assistive devices to use the above programs. A large proportion of college students with visual impairments use computer assistive technology devices, including braille information terminals, screen-reading software, and alternative input devices. These students usually spend 10 to 15 h using computer assistive technology for learning and homework. However, 84.6% of college students with disabilities who use assistive devices report difficulties in school life, and the reasons they give for dissatisfaction with assistive devices are inconvenient use, limited functions, difficult operation, and high prices [23].

Therefore, this paper proposes a system that lets visually impaired students perform tasks independently with a simple, easy-to-use assistive device instead of expensive or hard-to-operate ones. Voice feedback is essential for visually impaired students to write assignments in report format without assistants, screen-reading software, braille information terminals, or alternative input devices. Users could check whether their input was entered correctly through various channels, such as touch or hearing, but the auditory channel is an effective way to do so.

3. Design and Implementation of VTR4VI

The smartphone used to implement VTR4VI runs Android 8.1.0, and the Bluetooth remote control is compatible with Bluetooth 3.0 or later. In addition, VTR4VI was implemented on Ubuntu 16.04. The operation of the Bluetooth remote control is shown in Figure 1.

Figure 1 shows how the Bluetooth remote control is used in VTR4VI. The user moves up and down a list with the joystick, pushes the joystick to the right to select an item, and pushes it to the left to go back. The user then enters voice commands with the upper button and report content with the lower button. These command input and content input buttons are the main controls of VTR4VI. In addition, the X button loads an existing file so that the user can add content to it, and the triangle button takes the user directly to edit mode.
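The remote-control behaviour described above amounts to a fixed table from physical inputs to app actions. The following Python sketch is illustrative only: VTR4VI itself runs on Android, and the enum member names and action strings are hypothetical labels chosen here, not identifiers from its source.

```python
from enum import Enum, auto


class RemoteInput(Enum):
    """Hypothetical names for the Bluetooth remote inputs described in the text."""
    JOYSTICK_UP = auto()
    JOYSTICK_DOWN = auto()
    JOYSTICK_RIGHT = auto()
    JOYSTICK_LEFT = auto()
    UPPER_BUTTON = auto()
    LOWER_BUTTON = auto()
    X_BUTTON = auto()
    TRIANGLE_BUTTON = auto()


# Each physical input triggers exactly one app-level action (names are illustrative).
ACTIONS = {
    RemoteInput.JOYSTICK_UP: "move_up",              # previous list item
    RemoteInput.JOYSTICK_DOWN: "move_down",          # next list item
    RemoteInput.JOYSTICK_RIGHT: "select",            # select the highlighted item
    RemoteInput.JOYSTICK_LEFT: "back",               # return to the previous screen
    RemoteInput.UPPER_BUTTON: "listen_for_command",  # next utterance is a command
    RemoteInput.LOWER_BUTTON: "listen_for_content",  # next utterance is report text
    RemoteInput.X_BUTTON: "load_existing_file",
    RemoteInput.TRIANGLE_BUTTON: "enter_edit_mode",
}


def handle(event: RemoteInput) -> str:
    """Return the action bound to a remote input."""
    return ACTIONS[event]


if __name__ == "__main__":
    print(handle(RemoteInput.UPPER_BUTTON))
```

Using separate buttons for commands and content lets the app know, before recognition starts, whether the next utterance should be interpreted as a command or written into the report verbatim.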

When users dictate report content through the Android app, VTR4VI converts the speech into text with Android’s built-in speech-to-text (STT) engine. The converted text is written in HTML, which encodes the report format. Once users have created a report, they can check and edit it in “check mode” and “edit mode”. Both modes use Android’s built-in text-to-speech (TTS) engine to read aloud the item the user has selected in the report. When users tell VTR4VI that all checking and editing is complete, the completed report is sent to the server, where it is converted to a PDF file and saved. Both the HTML and PDF versions of each report are stored on the server.

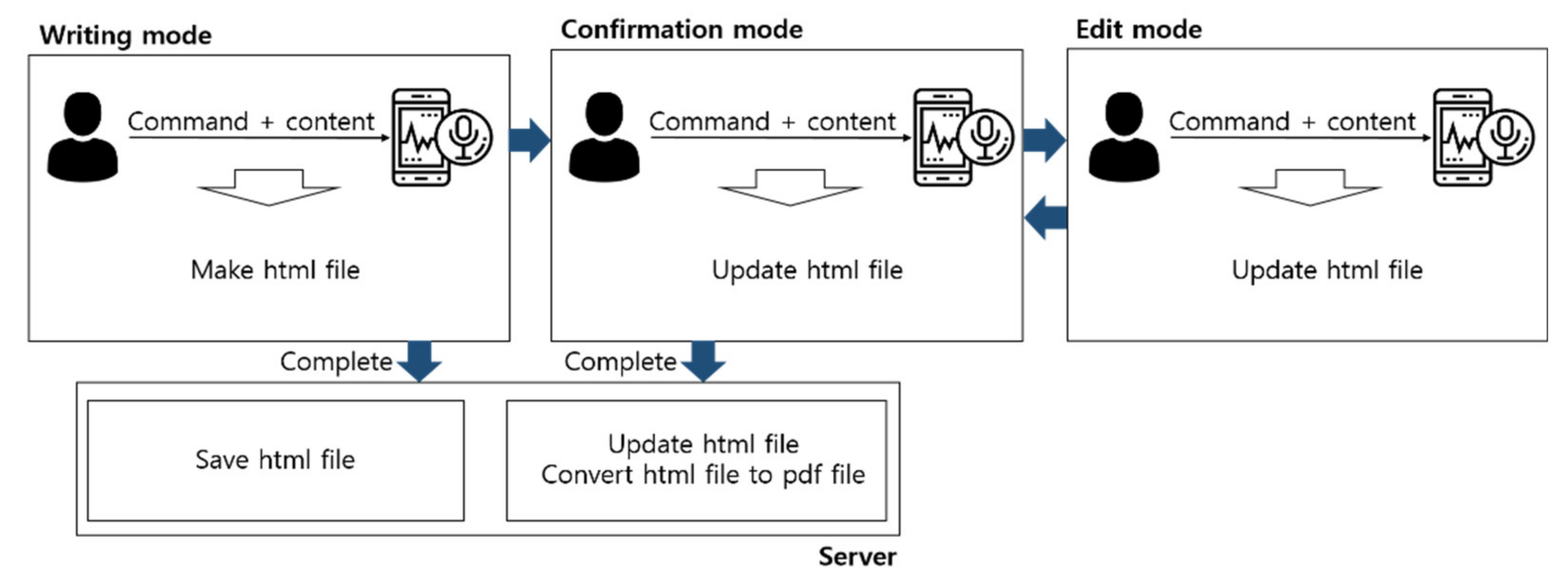

3.1. Execution Mode of VTR4VI

VTR4VI is a program that allows people with visual impairment or blindness to generate written reports easily. A simplified flowchart of VTR4VI is shown in Figure 2. VTR4VI recognizes speech using Android’s SpeechRecognizer class, sends it to the server, converts it into text, and generates a report. All commands needed to generate a report are voice-based: to issue a voice command, users press the button on the Bluetooth remote and then speak. In writing mode, VTR4VI has thirteen commands: “File Name”, “Name”, “Title”, “Paragraph”, “Close”, “Insert table”, “Add row”, “End table”, “Add Chapters”, “Add Section”, “Add Lines”, “Add Spaces”, and “Complete”. Users combine these commands to create reports.
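Because every writing-mode command is spoken, the app must map each recognized utterance to one of the thirteen commands. The Python sketch below assumes exact matching after lower-casing and whitespace normalization; the paper does not say how VTR4VI matches commands. The optional alias dictionary illustrates the third design principle (commands customizable in any language), and the Korean alias in the example is hypothetical.

```python
from typing import Dict, Optional

# The thirteen writing-mode commands, spelled as in the text.
WRITING_COMMANDS = (
    "File Name", "Name", "Title", "Paragraph", "Close",
    "Insert table", "Add row", "End table", "Add Chapters",
    "Add Section", "Add Lines", "Add Spaces", "Complete",
)


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so minor recognizer differences still match."""
    return " ".join(text.lower().split())


def build_lookup(aliases: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Map normalized phrases to canonical commands.

    `aliases` adds user-defined phrases, e.g. {"문단": "Paragraph"} (hypothetical).
    """
    lookup = {normalize(c): c for c in WRITING_COMMANDS}
    for phrase, command in (aliases or {}).items():
        if command not in WRITING_COMMANDS:
            raise ValueError(f"unknown command: {command}")
        lookup[normalize(phrase)] = command
    return lookup


def match_command(recognized: str, lookup: Dict[str, str]) -> Optional[str]:
    """Return the canonical command for an utterance, or None if unrecognized."""
    return lookup.get(normalize(recognized))


if __name__ == "__main__":
    table = build_lookup({"문단": "Paragraph"})
    for utterance in ("add  ROW", "문단", "open window"):
        print(utterance, "->", match_command(utterance, table))
```

Returning None instead of guessing the closest command lets the app give voice feedback that the command was not recognized, so the user can simply press the button and retry.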

In writing mode, an HTML file in report format is created and sent to the server. After writing, users review the content in check mode and, if editing is required, enter edit mode. Once words, paragraphs, chapters, and sections have been modified in edit mode, the updated report is sent to the server.

In check mode, users can review and edit the report with six commands: “Search”, “Paragraph”, “Add chapter”, “Add section”, “Add row”, and “Delete”. In this mode, users can search the report or listen to its content paragraph by paragraph, and add paragraphs, chapters, sections, and rows as needed. They can also delete unwanted paragraphs, chapters, and sections, and send the completed report to the server with the “Complete” command.

In edit mode, users can edit the report in detail with two commands: “Edit” and “Delete”. In this mode, users listen to the words that make up a paragraph, chapter, or section one at a time; as each word is read, they can delete unwanted words or correct incorrectly entered ones.

Finally, the “Complete” command signals that the report is finished and sends the completed HTML file to the server, where it is converted to PDF format and saved.
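The flow through the modes described in this section can be viewed as a small state machine. The Python sketch below is a simplified reading of the text, not code from VTR4VI: it models only the “Complete” command and the left/right joystick pushes, omits the X-button and triangle-button shortcuts, and treats any other command as leaving the current mode unchanged.

```python
from enum import Enum


class Mode(Enum):
    WRITING = "writing"
    CHECK = "check"
    EDIT = "edit"
    DONE = "done"  # report sent to the server for PDF conversion


# (current mode, trigger) -> next mode; "right"/"left" are joystick pushes.
TRANSITIONS = {
    (Mode.WRITING, "Complete"): Mode.CHECK,
    (Mode.CHECK, "right"): Mode.EDIT,
    (Mode.EDIT, "left"): Mode.CHECK,
    (Mode.CHECK, "Complete"): Mode.DONE,
}


def next_mode(mode: Mode, trigger: str) -> Mode:
    """Return the next mode; triggers without a transition keep the current mode."""
    return TRANSITIONS.get((mode, trigger), mode)


def run(triggers):
    """Replay triggers starting in writing mode and return every visited mode."""
    mode, visited = Mode.WRITING, [Mode.WRITING]
    for trigger in triggers:
        mode = next_mode(mode, trigger)
        visited.append(mode)
    return visited


if __name__ == "__main__":
    path = run(["Paragraph", "Complete", "right", "left", "Complete"])
    print([m.value for m in path])
```

Keeping transitions in one table makes it easy to confirm that every mode has a way back to check mode and that only “Complete” in check mode ends the session.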

3.2. Writing Mode of VTR4VI

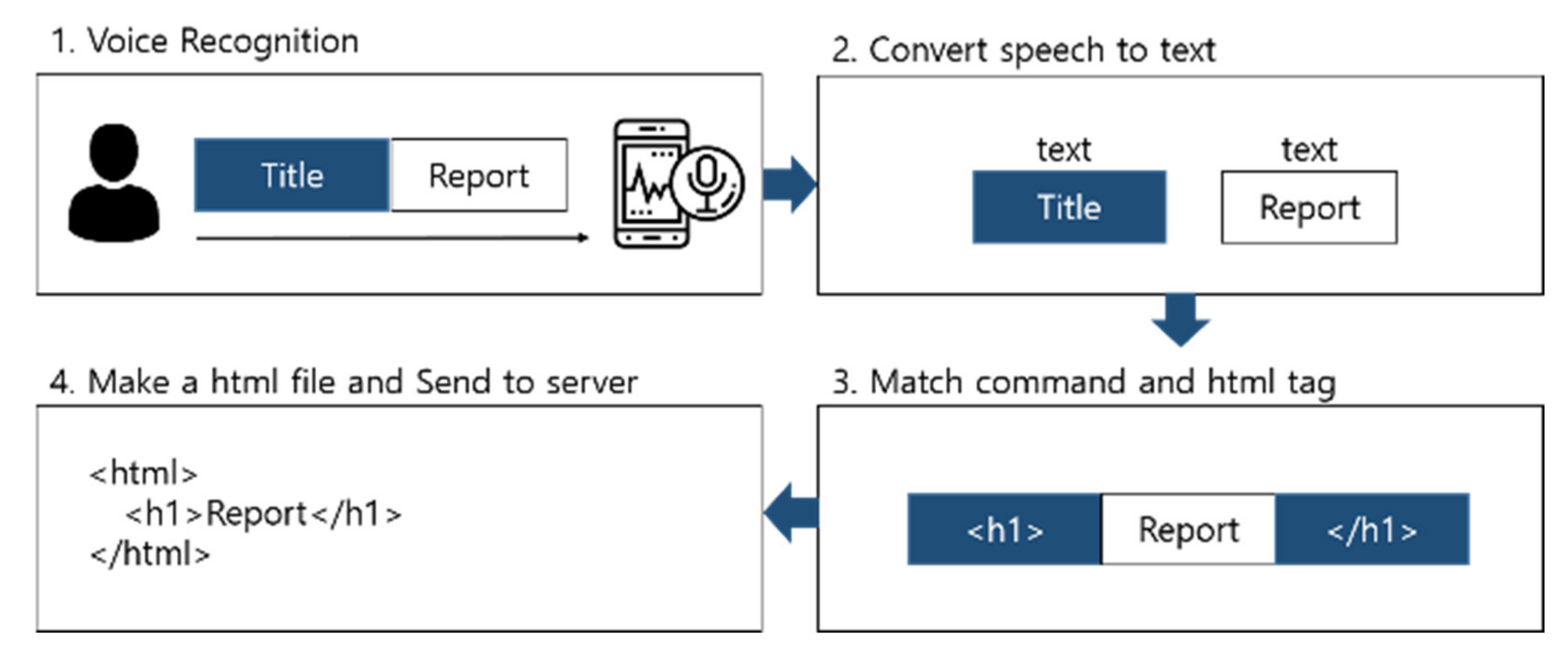

The writing function of VTR4VI is shown in Figure 3. Users create a report by speaking commands and content. Every voice command is entered after pressing a button on the Bluetooth remote; each button press accepts one voice command, and voice feedback after each command tells users whether it was recognized correctly. The command flow of the writing function is shown in Figure 4. Users specify the name of the HTML and PDF files with the “File Name” command and the report title with the “Title” command. The “Name” command writes the author’s name on the report. Users write a paragraph with the “Paragraph” command; after the content has been entered, the “Close” command completes the paragraph. Chapters and sections are created with the “Add Chapters” and “Add Section” commands, respectively. Users insert a table with “Insert table” and add rows with “Add row”; after the table content has been entered with these commands, the “End table” command completes the table. The “Add Lines” and “Add Spaces” commands add one line and one space, respectively. As shown in Table 2, each command is mapped to an HTML tag that is written into the report. Finally, once users have finished writing, they enter check mode with the “Complete” command.
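Table 2 is not reproduced here, so the tag mapping in the following Python sketch is an assumption chosen for illustration (for example, `<h2>` for chapters and `<h3>` for sections), not the mapping VTR4VI actually uses. The sketch shows the general technique: replay the dictated (command, content) pairs in order, append the HTML fragment for each, and escape dictated text so that characters such as `&` or `<` cannot break the markup. For simplicity, each table row holds a single cell.

```python
from html import escape
from typing import Iterable, Tuple

# Assumed command-to-HTML templates; "{}" receives the escaped dictated content.
TEMPLATES = {
    "Title": "<h1>{}</h1>",
    "Name": '<p class="author">{}</p>',
    "Add Chapters": "<h2>{}</h2>",
    "Add Section": "<h3>{}</h3>",
    "Paragraph": "<p>{}",  # left open until "Close"
    "Close": "</p>",
    "Insert table": "<table><tr><td>{}</td></tr>",
    "Add row": "<tr><td>{}</td></tr>",
    "End table": "</table>",
    "Add Lines": "<br>",
    "Add Spaces": "&nbsp;",
}


def build_report(commands: Iterable[Tuple[str, str]]) -> Tuple[str, str]:
    """Turn dictated (command, content) pairs into (file name, HTML document)."""
    file_name, body = "report", []
    for command, content in commands:
        if command == "File Name":
            file_name = content
        elif command == "Complete":
            break  # writing finished; the app would switch to check mode
        else:
            # str.format ignores the argument for templates without "{}".
            body.append(TEMPLATES[command].format(escape(content)))
    return file_name, "<!DOCTYPE html><html><body>" + "".join(body) + "</body></html>"


if __name__ == "__main__":
    name, html_doc = build_report([
        ("File Name", "assignment1"),
        ("Title", "Accessible Reports"),
        ("Paragraph", "Speech & text"),
        ("Close", ""),
        ("Complete", ""),
    ])
    print(name)
    print(html_doc)
```

Because the whole report is rebuilt from the ordered command list, later modes can edit that list and regenerate the HTML instead of patching markup in place.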

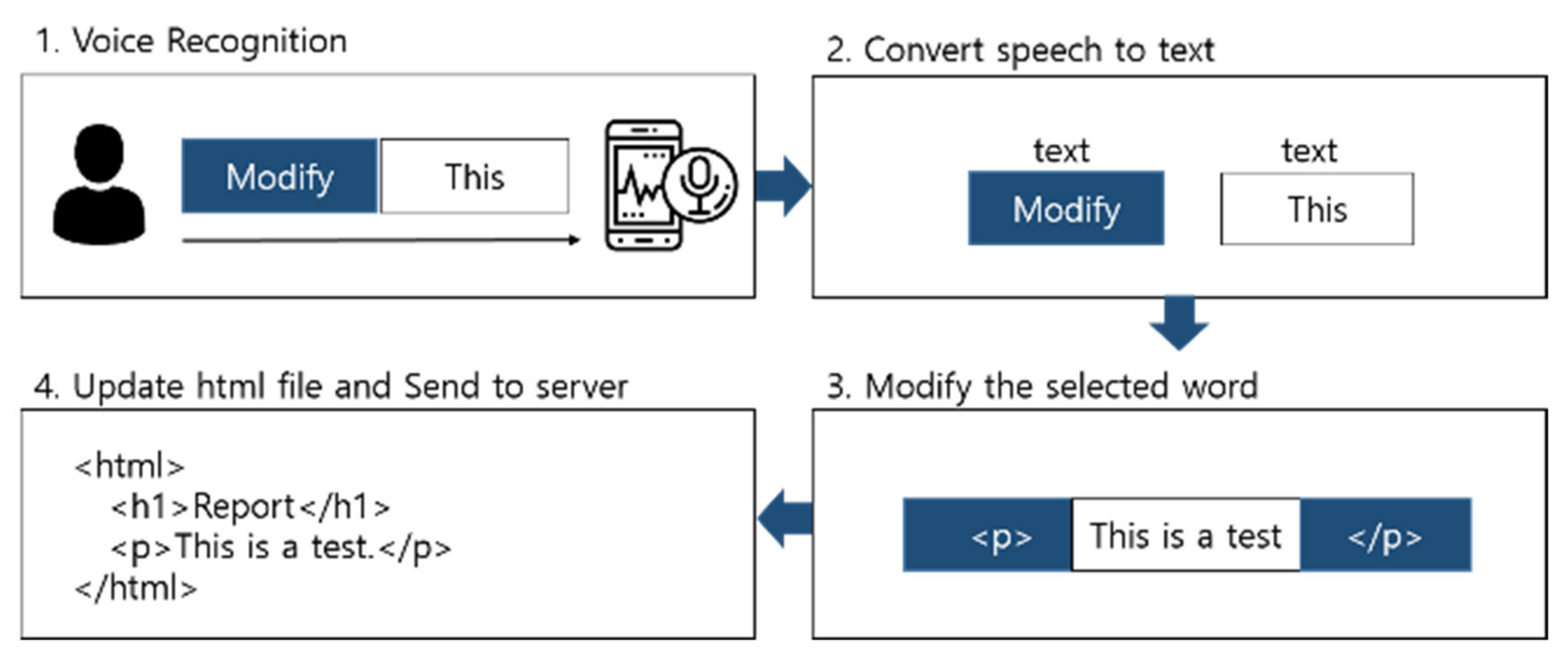

3.3. Check Mode of VTR4VI

The check function of VTR4VI is shown in Figure 5. Users load the completed HTML file and edit it. In check mode, the commands and content entered in writing mode are organized into a content list. Users press the up or down button to select an item from the list, and the selected command and its content are read aloud so that users can confirm they were entered correctly. The “Search” command finds a desired keyword. The “Paragraph”, “Add chapter”, “Add section”, and “Add row” commands add paragraphs, chapters, sections, and rows; each new item is inserted after the currently selected position. The “Delete” command deletes the selected command and its content. Users can select an item to edit and enter edit mode by pressing the right button. Once the report is complete, the “Complete” command sends the finished HTML report file to the server.
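Check mode can be modelled as a cursor over a list of (command, content) items that is read aloud after every move. The following Python model is illustrative, not the VTR4VI implementation: the `speak` callback stands in for Android's TTS, the search wraps around from the current position, and the class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Item = Tuple[str, str]  # (command, content), e.g. ("Paragraph", "Speech is ...")


@dataclass
class CheckMode:
    items: List[Item]
    speak: Callable[[str], None] = print  # stand-in for text-to-speech output
    pos: int = 0

    def _announce(self) -> None:
        command, content = self.items[self.pos]
        self.speak(f"{command}: {content}")

    def move(self, step: int) -> None:
        """Up (-1) or down (+1); the cursor stops at either end of the list."""
        if not self.items:
            self.speak("The report is empty")
            return
        self.pos = max(0, min(len(self.items) - 1, self.pos + step))
        self._announce()

    def search(self, keyword: str) -> bool:
        """Jump to the next item whose content contains keyword, wrapping around."""
        n = len(self.items)
        for offset in range(1, n + 1):
            i = (self.pos + offset) % n
            if keyword.lower() in self.items[i][1].lower():
                self.pos = i
                self._announce()
                return True
        self.speak(f"{keyword} was not found")
        return False

    def insert_after(self, command: str, content: str) -> None:
        """Insert a new item after the selected one and select it."""
        index = min(self.pos + 1, len(self.items))
        self.items.insert(index, (command, content))
        self.pos = index
        self._announce()

    def delete(self) -> None:
        """Remove the selected item and keep the cursor inside the list."""
        if not self.items:
            self.speak("Nothing to delete")
            return
        command, _ = self.items.pop(self.pos)
        self.pos = max(0, min(self.pos, len(self.items) - 1))
        self.speak(f"Deleted {command}")


if __name__ == "__main__":
    report = CheckMode([("Title", "Accessible Reports"),
                        ("Paragraph", "Speech input"),
                        ("Paragraph", "Voice feedback")])
    report.search("feedback")
    report.insert_after("Add section", "Results")
    report.delete()
```

Announcing the item after every operation mirrors the paper's emphasis on voice feedback: the user always hears where the cursor is before issuing the next command.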

3.4. Edit Mode of VTR4VI

The editing function of VTR4VI is shown in Figure 6. Users select the desired content in check mode and edit it in detail. The selected content is split on whitespace into a list of words. Users select a word from the list by pushing the up or down button, and the selected word is read aloud. Two commands are available in edit mode: “Edit” and “Delete”. After selecting the word they want to change, users can modify it with the “Edit” command or remove it with the “Delete” command. Users return to check mode by pressing the left button.
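The word-level editing just described, splitting the selected content on whitespace and moving a cursor over the resulting words, can be sketched as follows. This Python class is a hypothetical illustration, not VTR4VI code; note that rejoining with single spaces discards any original runs of whitespace, which is harmless for dictated prose but would matter for preformatted text.

```python
from typing import Callable, List


class EditMode:
    """Word-level editing of one selected item (illustrative model)."""

    def __init__(self, text: str, speak: Callable[[str], None] = print) -> None:
        self.words: List[str] = text.split()  # split on any run of whitespace
        self.speak = speak
        self.pos = 0

    def move(self, step: int) -> None:
        """Up (-1) or down (+1), then read the selected word aloud."""
        if not self.words:
            self.speak("No words left")
            return
        self.pos = max(0, min(len(self.words) - 1, self.pos + step))
        self.speak(self.words[self.pos])

    def edit(self, replacement: str) -> None:
        """Replace the selected word with a newly dictated word."""
        if not self.words:
            return
        self.words[self.pos] = replacement
        self.speak(f"Changed to {replacement}")

    def delete(self) -> None:
        """Delete the selected word; the cursor stays on the word that followed it."""
        if not self.words:
            return
        removed = self.words.pop(self.pos)
        self.pos = max(0, min(self.pos, len(self.words) - 1))
        self.speak(f"Deleted {removed}")

    def text(self) -> str:
        """Rebuild the content with single spaces between words."""
        return " ".join(self.words)


if __name__ == "__main__":
    editor = EditMode("the quick brwn fox")
    editor.move(2)
    editor.edit("brown")
    print(editor.text())
```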

4. Evaluation

In this section, we compare the efficiency and accuracy of the VTR4VI program developed in this study with those of an existing program through a usability evaluation. We also conduct a usability evaluation to verify whether the assistive device used with VTR4VI (the Bluetooth remote control) is effective.

4.1. Participants

For the empirical evaluation, end users were asked to evaluate the system. More participants uncover more usability problems, but considering cost versus benefit, three to five participants are appropriate [24,25]. Accordingly, the evaluation was conducted with five participants, whose information is shown in Table 3. The participants were Korean college students in their 20s with visual impairments.
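The cost-versus-benefit argument for small samples is commonly explained with Nielsen and Landauer's model, in which the expected share of usability problems found by n participants is 1 - (1 - L)^n, where L is the probability that a single participant reveals a given problem (about 0.31 on average in their data). We do not know whether [24,25] rely on this exact model; the Python sketch below only illustrates the diminishing returns behind the three-to-five recommendation.

```python
def share_found(n: int, p: float = 0.31) -> float:
    """Expected fraction of usability problems found by n participants.

    Assumes each participant independently reveals a given problem with
    probability p (Nielsen and Landauer's average estimate is about 0.31).
    """
    if n < 0 or not 0.0 <= p <= 1.0:
        raise ValueError("n must be >= 0 and p must lie in [0, 1]")
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    previous = 0.0
    for n in range(1, 9):
        found = share_found(n)
        print(f"n={n}: {found:.0%} found (+{found - previous:.0%} from this participant)")
        previous = found
```

With p = 0.31, five participants are expected to reveal roughly 84% of the problems, and each additional participant contributes progressively less.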

4.2. Preparation and Limitation

In the usability assessment, VTR4VI was compared with two alternatives. The first was Google Docs. To be compared with VTR4VI, a program must be able to generate and edit documents in report format using voice commands. However, a fully equivalent comparison is difficult because no existing program provides voice commands, report formatting, and sound notifications together. As shown in Table 4, we used Google Docs as the comparison program because it allows users to create and edit reports with voice commands.

WCFB lets visually impaired users write documents with voice commands, but it is designed for specific situations such as sharing and collaboration and does not define a report format, which limits its use as a comparison. The other programs likewise lack the report formatting and voice feedback functions of VTR4VI, which made them difficult to compare. Both Google Docs and VTR4VI use Google speech-to-text technology, so speech recognition accuracy does not affect the evaluation results. Although Google Docs was used as a desktop program, this difference in environment did not affect the evaluation. Therefore, Google Docs was selected as the comparison program.

However, because Google Docs is not designed for the visually impaired and does not provide voice feedback, participants were assisted by a helper when evaluating it, and the helper provided voice feedback in its place. Participants could have the helper read the report aloud using the “From Start”, “Stop”, and “Proceed” commands. In addition, the helper indicated whether each voice command had been recognized correctly with “Ding Dong” and “Beep” sounds. In this way, VTR4VI and Google Docs were evaluated under equivalent conditions. The second comparison was VTR4VI operated by voice alone, without the Bluetooth remote control. This comparison was performed to determine whether the assistive device is effective when visually impaired people use VTR4VI.

All participants were trained on VTR4VI and Google Docs for 10 min before the evaluation and were given a manual for each program. After training, each participant performed each experiment twice. Participants were given two reports and used each program to produce the same results. Because participants could become familiar with a particular program if the programs were always evaluated in the same order, the order of the usability evaluation was randomized.

Table 5 shows the tasks and evaluation methods of the usability evaluation. Participants used the assistive device when evaluating VTR4VI and were assisted by helpers when evaluating Google Docs. When evaluating the voice-only version of VTR4VI, participants used only their voice instead of the assistive device. For the usability evaluation, we measured the efficiency and accuracy of each task and the satisfaction with each program. Efficiency was defined as the time required to complete each task.

Accuracy was defined as whether the desired task was performed correctly with a voice command, and we recorded the number of times each voice command was retried until the task was completed. The number of voice commands used in the report is shown in Table 6. A voice command that required no retries scored 1 point and one that required a single retry scored 0.5 points; the voice command success rate was then calculated over all commands, including those retried two or more times.
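The scoring rule can be written as a small function. The text specifies 1 point for no retries and 0.5 points for one retry; the Python sketch below assumes that two or more retries score 0 points, which the paper does not state explicitly, and averages the per-command scores into a success rate.

```python
from typing import Iterable


def command_score(retries: int) -> float:
    """Score one voice command by how many retries it needed."""
    if retries < 0:
        raise ValueError("retries cannot be negative")
    if retries == 0:
        return 1.0
    if retries == 1:
        return 0.5
    return 0.0  # ASSUMPTION: two or more retries are scored as a failure


def success_rate(retry_counts: Iterable[int]) -> float:
    """Mean score over all measured commands, between 0.0 and 1.0."""
    scores = [command_score(r) for r in retry_counts]
    if not scores:
        raise ValueError("no commands measured")
    return sum(scores) / len(scores)


if __name__ == "__main__":
    print(success_rate([0, 1, 0, 2]))  # 0.625
```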

The experiments were divided into three categories: Experiment 1 covered writing a report, Experiment 2 editing by paragraph, and Experiment 3 editing by word. In Experiment 1, we measured the time required to complete a given chapter, paragraph, and table and the voice command success rate. A chapter was written with one voice command, a paragraph with two, and a table with three or more. In Experiment 2, we measured the time required to find and delete a specific paragraph in each report and the voice command success rate. In Experiment 3, we measured the time required to find, delete, and correct a specific word in each report and the voice command success rate. For the finding tasks in Experiments 2 and 3 (finding a given paragraph or word), the voice command success rate was excluded because these tasks could not be compared command by command across the programs. The evaluation was conducted twice in total. Before the evaluation, participants were given a full explanation of the voice commands of each program and how to use them.

5. Results and Discussion

5.1. Usability Evaluation Results for Report Writing and Editing

The time required and the number of retries were used as dependent variables in the t-test. The t-test is a widely used statistical technique that compares the means of two datasets measured on the same scale under two different conditions and determines whether they differ significantly [16,26]. We used the t-test to analyze the results. The time required was measured from when the participant started writing the report until the participant finished editing it, and both the time required and the voice command success rate were recorded for each task.
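The paper does not state whether an independent or a paired t-test was used. Because each participant performed the tasks with both programs, a paired test is one reasonable choice; the standard-library Python sketch below computes the paired t statistic and its degrees of freedom under that assumption. The measurements in the usage example are invented for illustration and are not the study's data. For a p-value, `scipy.stats.ttest_rel(a, b)` performs the same paired test.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for matched samples a[i], b[i].

    t = mean(d) / (sd(d) / sqrt(n)) with d[i] = a[i] - b[i], where sd is the
    sample standard deviation; the test has n - 1 degrees of freedom.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    d = [x - y for x, y in zip(a, b)]
    sd = stdev(d)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean(d) / (sd / math.sqrt(len(d))), len(d) - 1


if __name__ == "__main__":
    # Hypothetical task times (s) for the same three participants on two programs.
    google_docs = [30.0, 35.0, 40.0]
    vtr4vi = [25.0, 33.0, 34.0]
    t, df = paired_t(google_docs, vtr4vi)
    print(f"t = {t:.3f}, df = {df}")
```

Pairing removes between-participant variation (for example, differences in speaking speed), which usually gives a more sensitive test than treating the two programs' measurements as independent groups.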

Table 7 shows the average results for the measured time required and the success rate of voice commands. The following sections provide an in-depth comparative analysis of the results shown in Table 7 for each experiment.

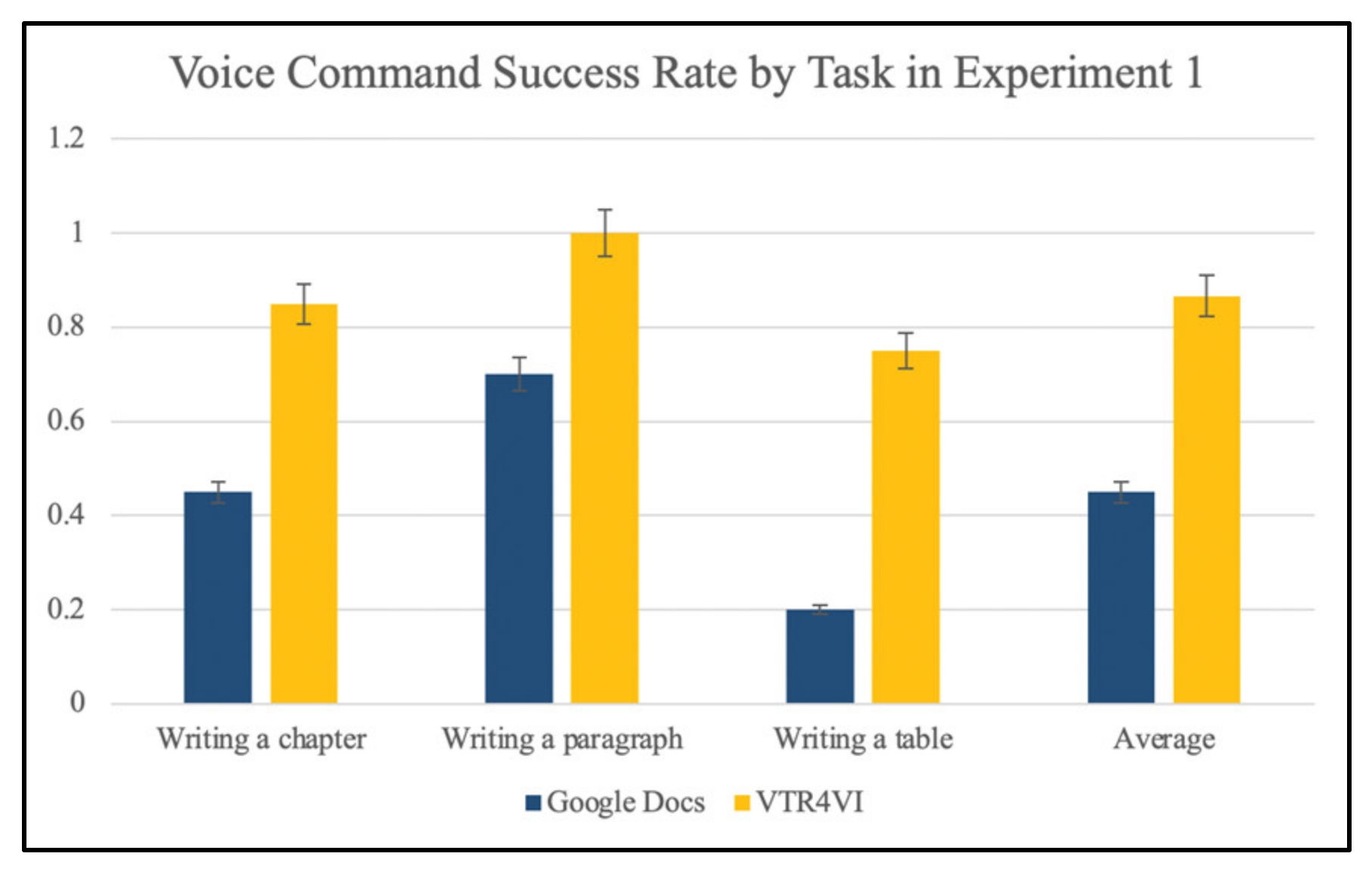

5.1.1. Analysis of Usability Evaluation Results for Experiment 1 (Writing a Report)

To compare efficiency and accuracy in Experiment 1, we compared the average time required and the average voice command success rate. To write a given chapter, VTR4VI took an average of 26.9 s with an average voice command success rate of 0.85, whereas Google Docs took an average of 33.8 s with a success rate of 0.45. For chapter writing, Google Docs thus took 1.257 times as long as VTR4VI on average, and VTR4VI’s voice command success rate was 1.8889 times that of Google Docs. However, a t-test on the time required for chapter writing showed no significant difference between the two programs (t = 0.92, p = 0.37 > 0.05).

To write a given paragraph, VTR4VI took an average of 62.7 s with an average voice command success rate of 1.0, whereas Google Docs took an average of 77.5 s with a success rate of 0.7. For paragraph writing, Google Docs took 1.236 times as long as VTR4VI on average, and VTR4VI’s voice command success rate was 1.4286 times that of Google Docs. A t-test on the time required for paragraph writing showed no significant difference between the two programs (t = 0.76, p = 0.46 > 0.05).

To write a given table, VTR4VI took an average of 100.3 s with an average voice command success rate of 0.75, whereas Google Docs took an average of 162.9 s with a success rate of 0.2. For table writing, Google Docs took 1.624 times as long as VTR4VI on average, and VTR4VI’s voice command success rate was 3.75 times that of Google Docs.

Averaged over all three tasks, VTR4VI took 63.3 s with a voice command success rate of 0.8667, whereas Google Docs took 91.4 s with a success rate of 0.45. Therefore, when writing a given chapter, paragraph, or table, Google Docs took 1.444 times as long as VTR4VI on average, and VTR4VI’s voice command success rate was 1.926 times that of Google Docs.
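The ratios above follow directly from the reported averages. The Python sketch below recomputes them from the Experiment 1 values quoted in this section, reading “n times as long” as Google Docs time divided by VTR4VI time, and the success-rate comparison as VTR4VI's rate divided by Google Docs' rate; the overall figures are unweighted means over the three tasks.

```python
from statistics import mean
from typing import Dict, Tuple

# Experiment 1 averages reported in the text: task -> (time in s, success rate).
VTR4VI: Dict[str, Tuple[float, float]] = {
    "chapter": (26.9, 0.85),
    "paragraph": (62.7, 1.0),
    "table": (100.3, 0.75),
}
GOOGLE_DOCS: Dict[str, Tuple[float, float]] = {
    "chapter": (33.8, 0.45),
    "paragraph": (77.5, 0.7),
    "table": (162.9, 0.2),
}


def compare(vtr: Tuple[float, float], docs: Tuple[float, float]) -> Tuple[float, float]:
    """Return (Google Docs time / VTR4VI time, VTR4VI rate / Google Docs rate)."""
    (vtr_time, vtr_rate), (docs_time, docs_rate) = vtr, docs
    return docs_time / vtr_time, vtr_rate / docs_rate


def overall(results: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Unweighted mean time and success rate across the three tasks."""
    return (mean(t for t, _ in results.values()),
            mean(r for _, r in results.values()))


if __name__ == "__main__":
    for task in VTR4VI:
        time_ratio, rate_ratio = compare(VTR4VI[task], GOOGLE_DOCS[task])
        print(f"{task:>9}: time x{time_ratio:.3f}, success x{rate_ratio:.4f}")
    time_ratio, rate_ratio = compare(overall(VTR4VI), overall(GOOGLE_DOCS))
    print(f"{'overall':>9}: time x{time_ratio:.3f}, success x{rate_ratio:.3f}")
```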

Figure 7 and Figure 8 show the average time required for each task and the voice command success rate in Experiment 1 for VTR4VI and Google Docs. Even though Google Docs was evaluated under equivalent conditions, with an assistant providing voice feedback, VTR4VI was more efficient for tasks requiring three or more commands, such as writing a table, and for report writing on average. In addition, regardless of the number of commands, VTR4VI achieved a higher voice command success rate than Google Docs in every task, indicating better accuracy.

5.1.2. Analysis of Usability Evaluation Results for Experiment 2 (Editing by Paragraph)

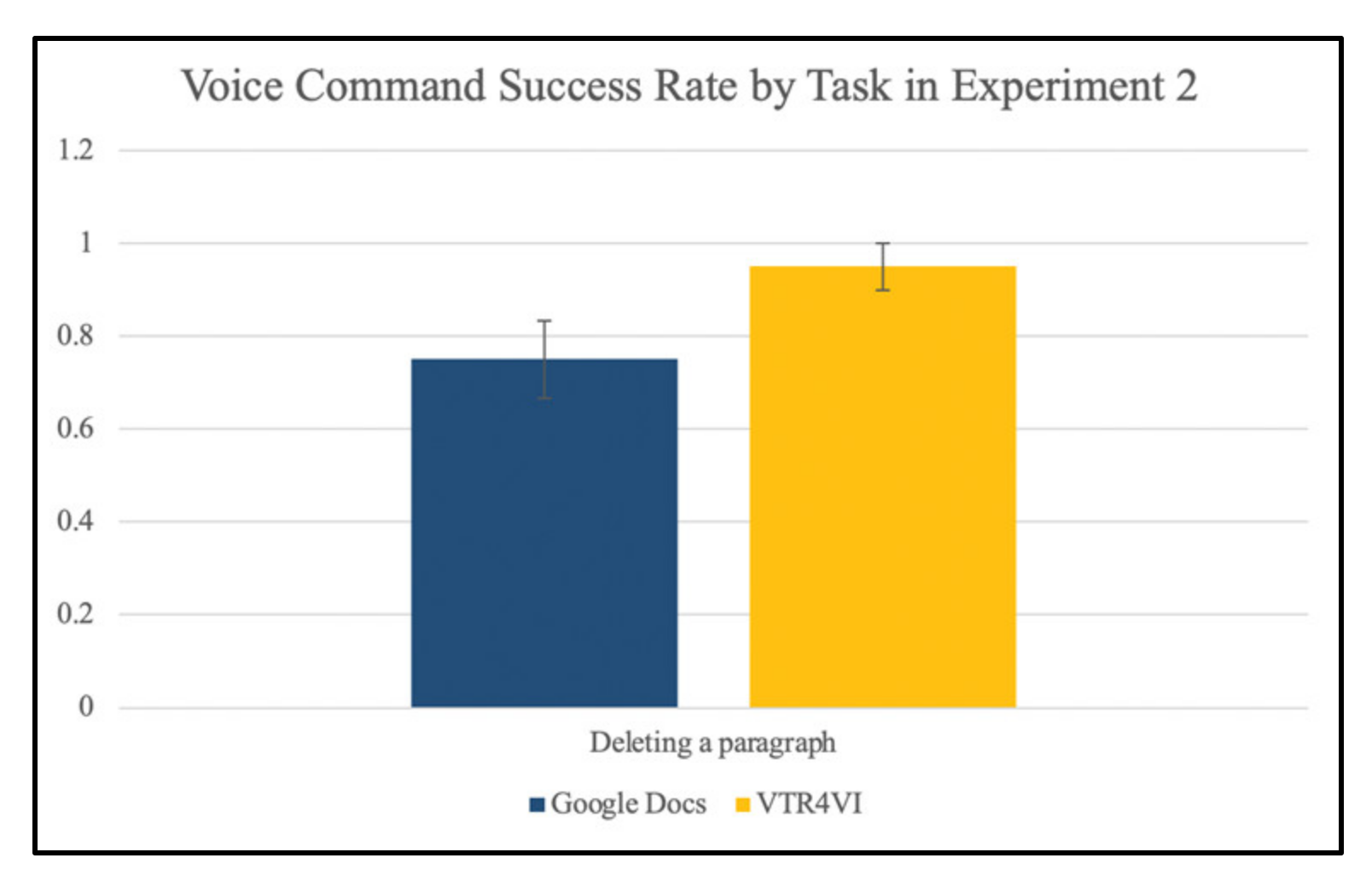

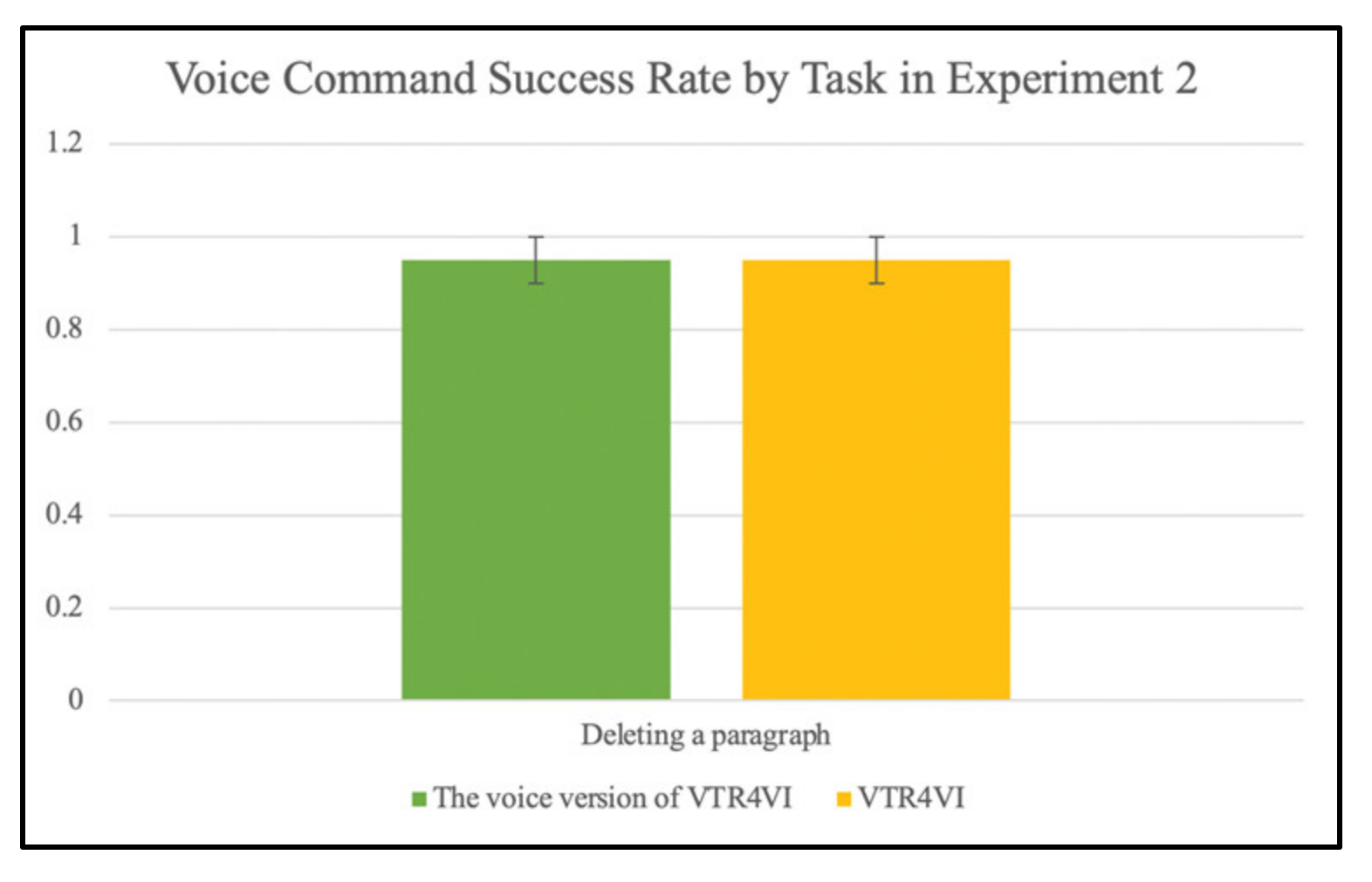

To compare efficiency and accuracy in Experiment 2, we compared the average time required and the average voice command success rate. To find a specific paragraph in each report, VTR4VI took an average of 16.1 s, whereas Google Docs took an average of 39.6 s, i.e., 2.46 times as long.

To delete a specific paragraph, VTR4VI took an average of 24.7 s with an average voice command success rate of 0.95, whereas Google Docs took an average of 40.9 s with a success rate of 0.75. For paragraph deletion, Google Docs took 1.656 times as long as VTR4VI on average, and VTR4VI’s voice command success rate was 1.2667 times that of Google Docs. However, a t-test on the voice command success rates for paragraph deletion showed no significant difference between the two programs (t = 1.809, p = 0.103 > 0.05).

The overall average times were 20.4 s for VTR4VI and 40.25 s for Google Docs. Therefore, when editing by paragraph, Google Docs took on average 1.973 times as long as VTR4VI.

Figures 9 and 10 show the average time required and the voice command success rate for each task in Experiment 2, comparing VTR4VI and Google Docs. Compared with Google Docs, VTR4VI was more efficient and more accurate for editing by paragraph, although the difference in paragraph-deletion success rates was not statistically significant.

5.1.3. Analysis of Usability Evaluation Results for Experiment 3 (Editing by Word)

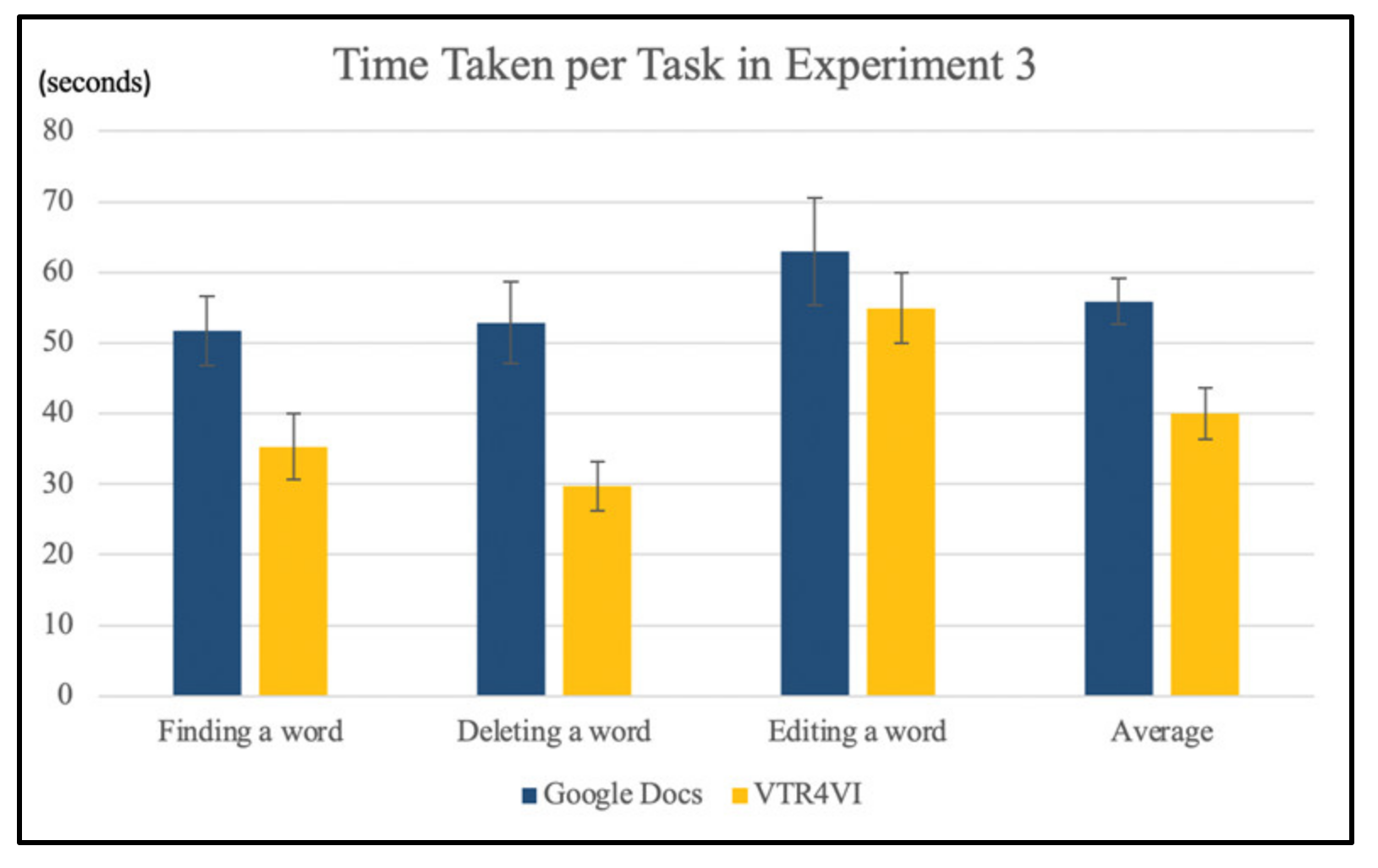

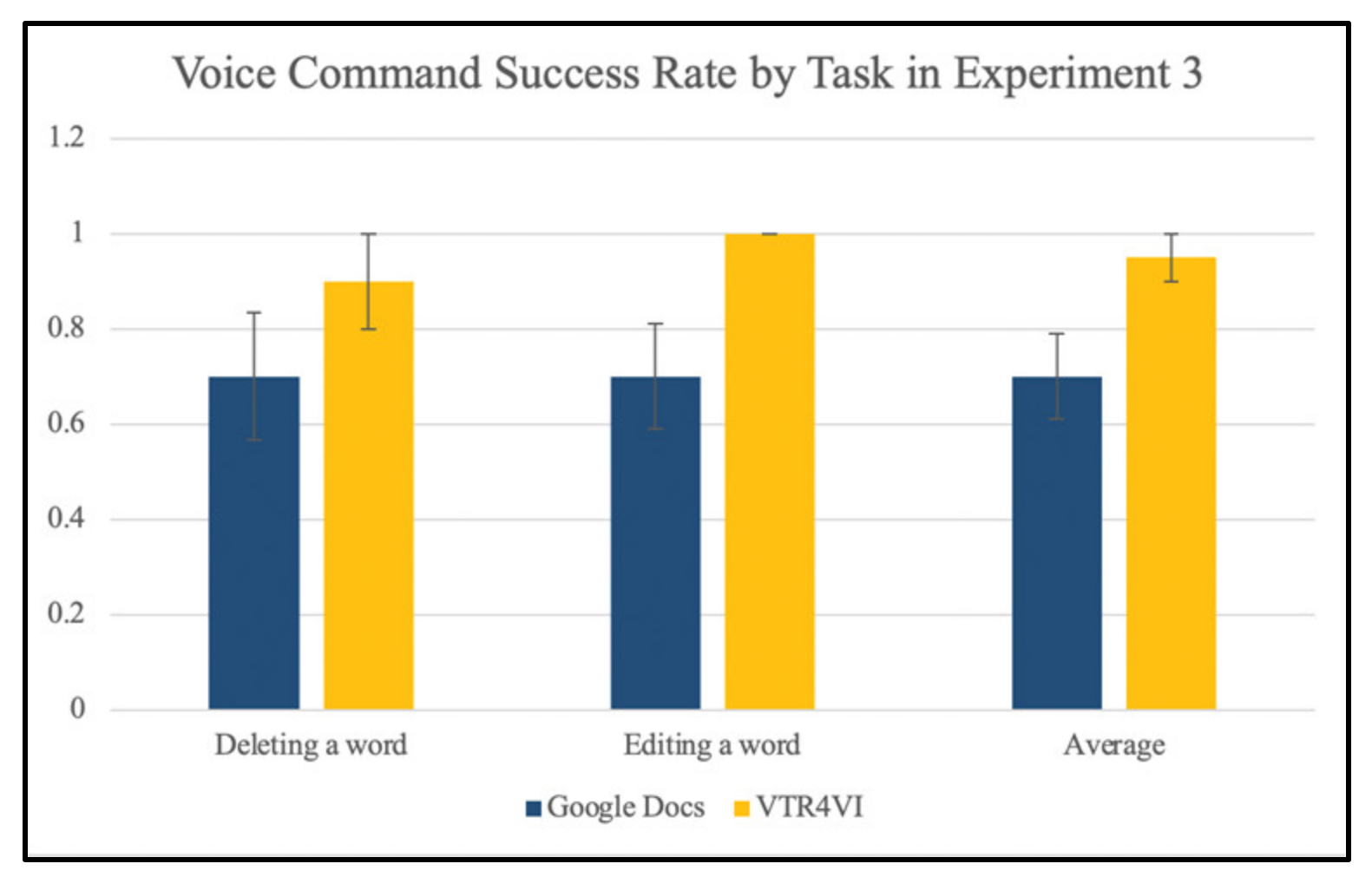

To compare efficiency and accuracy in Experiment 3, the average time required and the average voice command success rates were compared. To find a specific word in each report, VTR4VI took an average of 35.3 s, whereas Google Docs took an average of 51.7 s; that is, Google Docs took 1.465 times as long as VTR4VI.

To delete a specific word, VTR4VI took an average of 29.7 s with an average voice command success rate of 0.9, whereas Google Docs took an average of 52.9 s with a success rate of 0.7. Google Docs thus took 1.781 times as long as VTR4VI, and VTR4VI's success rate was 1.2857 times that of Google Docs. A t-test on the word-deletion success rates of the two programs showed no significant difference between them (t = 1.309, p = 0.222 > 0.05).

To change a specific word into another word, VTR4VI took an average of 54.9 s with an average voice command success rate of 1, whereas Google Docs took an average of 62.9 s with a success rate of 0.7. Google Docs thus took 1.1457 times as long as VTR4VI, and VTR4VI's success rate was 1.4286 times that of Google Docs. A t-test on the word-correction times of the two programs showed no significant difference between them (t = 1.021, p = 0.333 > 0.05).

The overall averages for each program were as follows: VTR4VI averaged 39.96 s with an average voice command success rate of 0.95, whereas Google Docs averaged 55.83 s with an average success rate of 0.7. Therefore, when editing by word, Google Docs took on average 1.397 times as long as VTR4VI, and VTR4VI's average success rate was 1.357 times that of Google Docs.

Figures 11 and 12 show the average time required and the voice command success rate for each task in Experiment 3, comparing VTR4VI and Google Docs. Compared with Google Docs, VTR4VI was more efficient when searching for or deleting a specific word and when editing by word on average. It was also more accurate when correcting a specific word and when editing by word on average.
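The summary figures in Sections 5.1.1 through 5.1.3 follow from Table 7 by simple averaging. The Python sketch below, offered only as a cross-check, transcribes Table 7 and recomputes them. The names `TABLE7` and `summarize` are illustrative, and the sketch assumes, as the reported values imply, that mean times include every task while mean success rates include only tasks that issue a voice command (the "-" entries for finding a paragraph or word are excluded).

```python
# Per-task results transcribed from Table 7 as (time in seconds, voice command success rate).
# None stands for the "-" entries: finding tasks issue no voice command, so they have no success rate.
TABLE7 = {
    "Experiment 1": {
        "Writing a chapter": {"Google Docs": (33.8, 0.45), "VTR4VI": (26.9, 0.85)},
        "Writing a paragraph": {"Google Docs": (77.5, 0.7), "VTR4VI": (62.7, 1.0)},
        "Writing a table": {"Google Docs": (162.9, 0.2), "VTR4VI": (100.3, 0.75)},
    },
    "Experiment 2": {
        "Finding a paragraph": {"Google Docs": (39.6, None), "VTR4VI": (16.1, None)},
        "Deleting a paragraph": {"Google Docs": (40.9, 0.75), "VTR4VI": (24.7, 0.95)},
    },
    "Experiment 3": {
        "Finding a word": {"Google Docs": (51.7, None), "VTR4VI": (35.3, None)},
        "Deleting a word": {"Google Docs": (52.9, 0.7), "VTR4VI": (29.7, 0.9)},
        "Editing a word": {"Google Docs": (62.9, 0.7), "VTR4VI": (54.9, 1.0)},
    },
}


def summarize(experiment, program):
    """Return (mean time over all tasks, mean success rate over tasks that have one)."""
    results = [task[program] for task in TABLE7[experiment].values()]
    times = [time for time, _ in results]
    rates = [rate for _, rate in results if rate is not None]
    mean_rate = sum(rates) / len(rates) if rates else None
    return sum(times) / len(times), mean_rate


for experiment in TABLE7:
    gd_time, gd_rate = summarize(experiment, "Google Docs")
    vt_time, vt_rate = summarize(experiment, "VTR4VI")
    # Time ratio: how many times as long Google Docs took; rate ratio: VTR4VI rate / Google Docs rate.
    print(f"{experiment}: time ratio {gd_time / vt_time:.3f}, "
          f"success-rate ratio {vt_rate / gd_rate:.3f}")
```

Run as-is, it prints time ratios of 1.444, 1.973, and 1.397 and success-rate ratios of 1.926, 1.267, and 1.357 for Experiments 1 to 3, in line with the figures reported in the text; the Experiment 2 rate ratio rests on the single paragraph-deletion task.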

5.1.4. Analysis of Satisfaction Evaluation Results

For the satisfaction evaluation, subjective satisfaction was measured on the three items listed in Table 8. As shown in Figure 13, VTR4VI averaged 4.4 points and Google Docs averaged 2.73 points, so VTR4VI scored approximately 1.6 times higher than Google Docs. Participants commented that “VTR4VI was easier to use because the command was simpler than Google Docs”, and that “VTR4VI seems to be more effective when editing documents than direct document writing”.

5.2. Usability Evaluation Results for the Use of an Auxiliary Device

The purpose of this evaluation was to verify whether using an auxiliary device is effective when the visually impaired use VTR4VI. VTR4VI was compared with its voice version, which operates by voice alone, without the auxiliary device. As in the previous evaluation, a t-test was used to analyze the results, and we measured the time taken and the voice command success rate for each task.
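Both evaluations report t statistics and p-values, but the paper does not state which variant of the t-test was used. As an illustration only, the Python sketch below assumes Welch's unequal-variance two-sample test and computes the t statistic and its Welch–Satterthwaite degrees of freedom with the standard library; the function name `welch_t` and the input values are hypothetical, not study data. A two-sided p-value could then be read from the t distribution, for example with SciPy as `2 * scipy.stats.t.sf(abs(t), df)`.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    se2_a = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


# Hypothetical per-participant task times in seconds (illustrative values, not study data).
with_device = [30, 32, 34, 36, 38]
voice_only = [34, 36, 38, 40, 42]
t, df = welch_t(with_device, voice_only)
print(f"t = {t:.3f}, df = {df:.1f}")  # t = -2.000, df = 8.0
```

Welch's form is used here because it does not assume equal variances in the two groups; if the study's design paired each participant's two measurements, a paired t-test on the per-participant differences would be the appropriate variant instead.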

Table 9 shows the average time taken and the voice command success rate for each task. The following sections provide a detailed comparative analysis of the results of each experiment shown in Table 9.

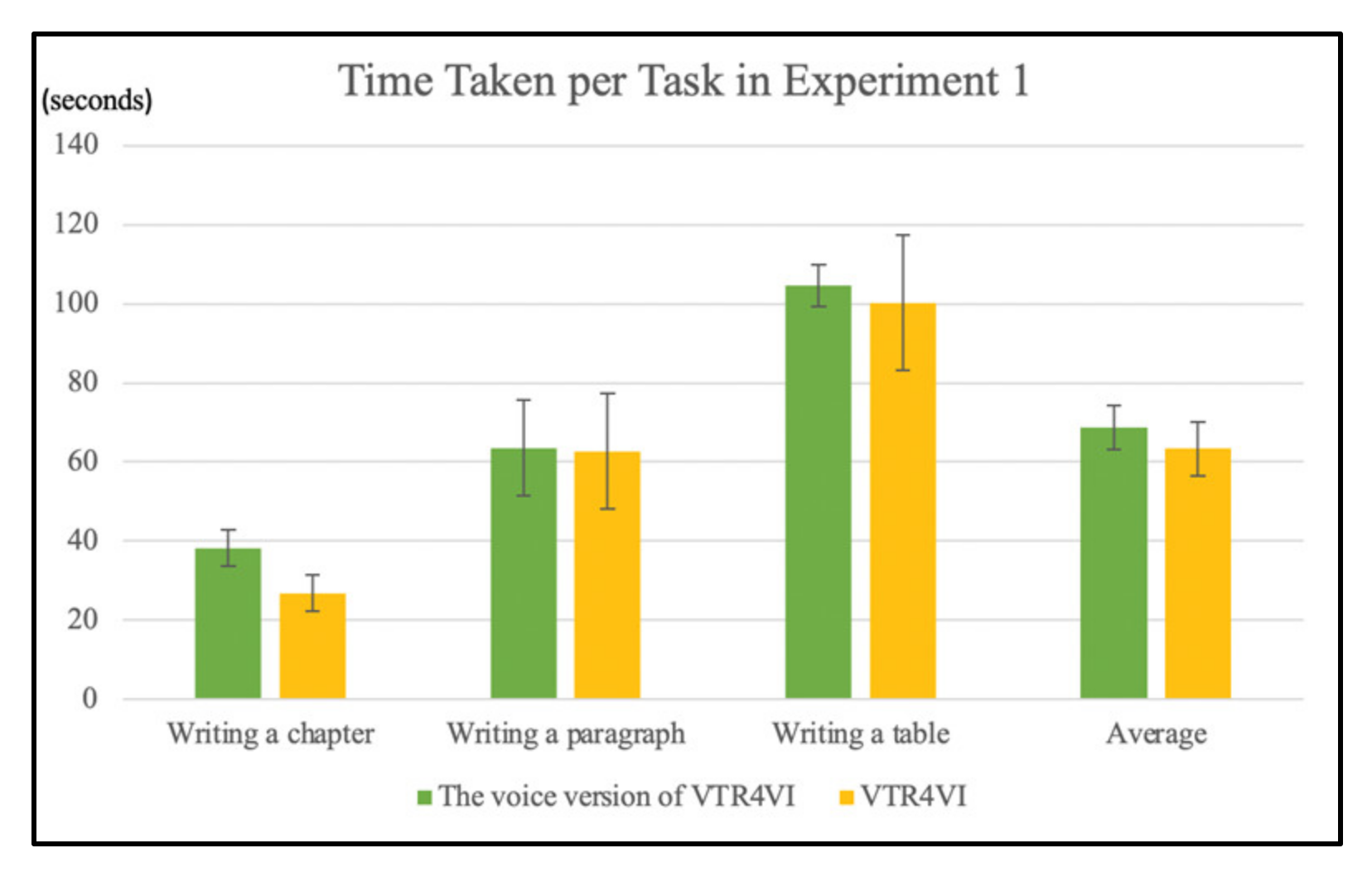

5.2.1. Analysis of Usability Evaluation Results for Experiment 1 (Writing a Report)

To compare efficiency and accuracy in Experiment 1, the average time required and the average voice command success rates were compared. To write a given chapter, VTR4VI (with auxiliary devices) took an average of 26.9 s with an average voice command success rate of 0.85, whereas the voice version of VTR4VI took an average of 38.3 s with a success rate of 0.7. When writing a chapter, the voice version thus took 1.42 times as long as VTR4VI with auxiliary devices, and VTR4VI's success rate was 1.21 times that of the voice version.

To write a given paragraph, VTR4VI took an average of 62.7 s with an average voice command success rate of 1, whereas the voice version took an average of 63.6 s with a success rate of 0.65. The voice version thus took 1.01 times as long as VTR4VI, and VTR4VI's success rate was 1.54 times that of the voice version. A t-test on the paragraph-writing times of the two programs showed no significant difference between them (t = 0.074, p = 0.942 > 0.05).

To create a given table, VTR4VI took an average of 100.3 s with an average voice command success rate of 0.75, whereas the voice version took an average of 104.6 s with a success rate of 0.8. The voice version thus took 1.04 times as long as VTR4VI, but its success rate was 1.07 times that of VTR4VI. Separate t-tests showed no significant difference between the two programs in either table-writing time (t = 0.275, p = 0.789 > 0.05) or success rate (t = 0.557, p = 0.591 > 0.05).

The overall averages for each program were as follows: VTR4VI took an average of 63.3 s with a voice command success rate of 0.8667, whereas the voice version took an average of 68.83 s with a success rate of 0.7167. Therefore, across writing a chapter, a paragraph, and a table, the voice version took on average 1.09 times as long as VTR4VI, and VTR4VI's average success rate was 1.21 times that of the voice version. A t-test on the average task time of the two programs showed no significant difference between them (t = 0.782, p = 0.454 > 0.05).

Figures 14 and 15 show the average time required and the voice command success rate for each task in Experiment 1, comparing VTR4VI and its voice version. Compared with the voice version, VTR4VI was more efficient for tasks requiring fewer commands, such as writing a chapter. It was also more accurate for tasks requiring fewer commands, such as writing a chapter or a paragraph, and for writing a report on average.

5.2.2. Analysis of Usability Evaluation Results for Experiment 2 (Editing by Paragraph)

To compare efficiency and accuracy in Experiment 2, the average time required and the average voice command success rates were compared. To find a specific paragraph in each report, VTR4VI took an average of 16.1 s, whereas the voice version took an average of 30.2 s, that is, 1.88 times as long.

To delete a specific paragraph, VTR4VI took an average of 24.7 s with an average voice command success rate of 0.95, whereas the voice version took an average of 36.5 s with the same success rate of 0.95. The voice version thus took 1.48 times as long as VTR4VI, with an identical success rate.

The overall average times were 20.4 s for VTR4VI and 33.35 s for the voice version. Therefore, when editing by paragraph, the voice version took on average 1.63 times as long as VTR4VI.

Figures 16 and 17 show the average time required and the voice command success rate for each task in Experiment 2, comparing VTR4VI and its voice version. For editing by paragraph, VTR4VI was considerably more efficient than the voice version.

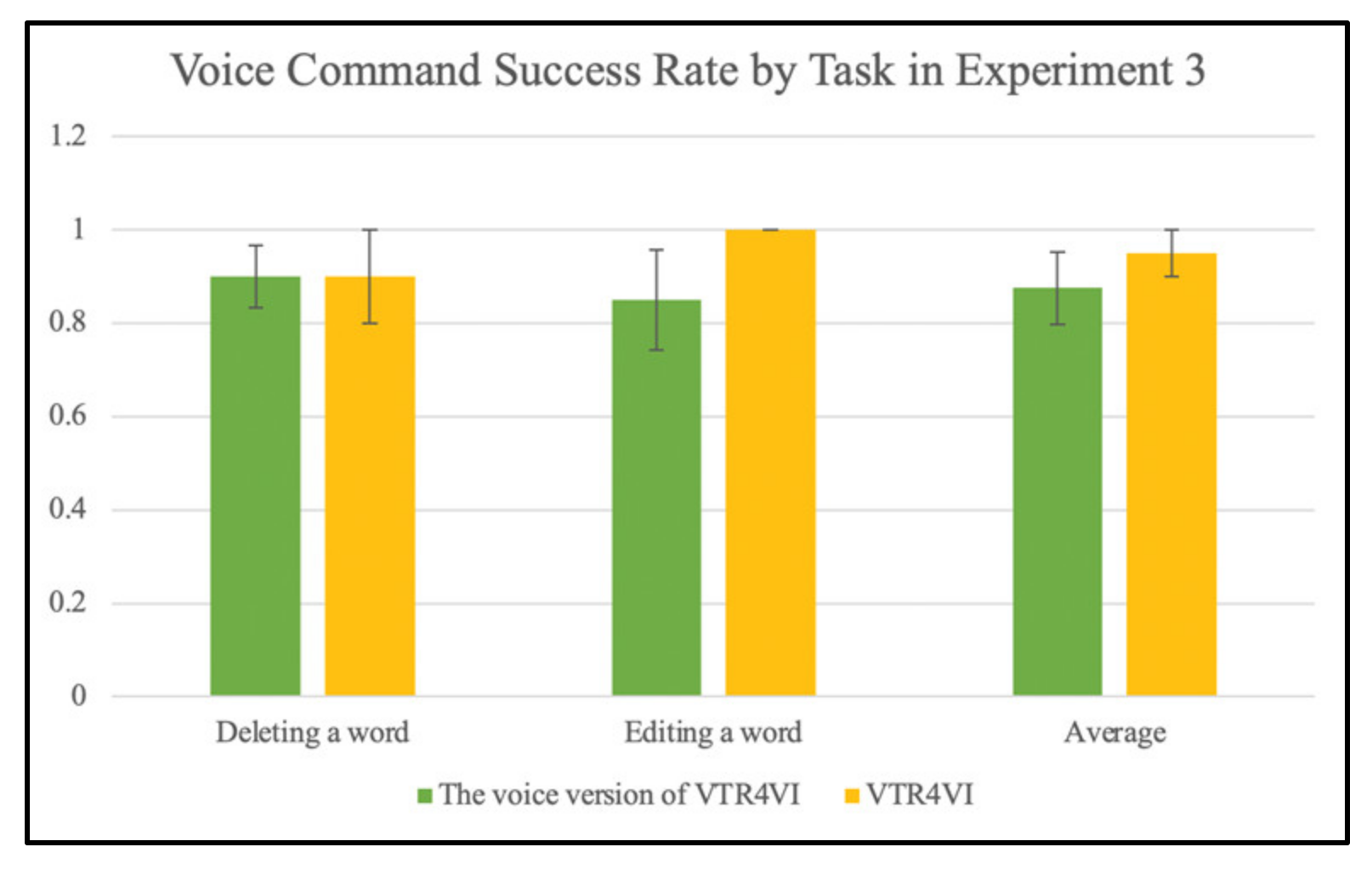

5.2.3. Analysis of Usability Evaluation Results for Experiment 3 (Editing by Word)

To compare efficiency and accuracy in Experiment 3, the average time required and the average voice command success rates were compared. To find a specific word in each report, VTR4VI took an average of 35.3 s, whereas the voice version took an average of 41.7 s, that is, 1.18 times as long. A t-test on the word-finding times of the two programs showed no significant difference between them (t = 1.90, p = 0.089 > 0.05).

To delete a specific word, VTR4VI took an average of 29.7 s with an average voice command success rate of 0.9, whereas the voice version took an average of 42 s with the same success rate of 0.9. The voice version thus took 1.4 times as long as VTR4VI, with an identical success rate.

To change a specific word into another word, VTR4VI took an average of 54.9 s with an average voice command success rate of 1, whereas the voice version took an average of 61.9 s with a success rate of 0.85. The voice version thus took 1.13 times as long as VTR4VI, and VTR4VI's success rate was 1.18 times that of the voice version. A t-test on the word-correction times of the two programs showed no significant difference between them (t = 1.383, p = 0.199 > 0.05).

The overall averages for each program were as follows: VTR4VI averaged 39.96 s with an average voice command success rate of 0.95, whereas the voice version averaged 48.53 s with an average success rate of 0.875. Therefore, when editing by word, the voice version took on average 1.2 times as long as VTR4VI, and VTR4VI's average success rate was 1.1 times that of the voice version. A t-test on the average voice command success rates of the two programs showed no significant difference between them (t = 0.757, p = 0.467 > 0.05).

Figures 18 and 19 show the average time required and the voice command success rate for each task in Experiment 3, comparing VTR4VI and its voice version. Compared with the voice version, VTR4VI was more efficient when deleting a specific word and when editing by word on average, and more accurate when correcting a specific word.

5.2.4. Analysis of Satisfaction Evaluation Results

To evaluate satisfaction, the items in Table 8 were measured; the results are shown in Figure 20. VTR4VI averaged 4.4 points and the voice version of VTR4VI averaged 4 points, so VTR4VI scored 1.1 times higher on average. Participants commented that “VTR4VI was easier to use because the command was simpler than Google Docs”, and that “VTR4VI seems to be more effective when editing documents than direct document writing”.

5.3. Discussion

We identified the requirements of visually impaired learners for writing reports and developed a voice report generator accordingly. We analyzed similar services in order to add functions that are essential for visually impaired users but had not previously been provided, and we linked Bluetooth devices to make operation more convenient and to increase usability. To evaluate the usability of the developed application, we conducted an evaluation with visually impaired participants, and the results were favorable, although several of the individual differences were not statistically significant. First, compared with Google Docs, VTR4VI allowed participants to write reports more quickly and with higher voice command success rates in all tasks, and it also received higher satisfaction scores. Second, VTR4VI used with the auxiliary Bluetooth device allowed reports to be written in less time and with high voice command accuracy.

However, several points about this evaluation are worth noting. First, although Google Docs is a desktop program and VTR4VI is a mobile app, the difference in environment between the two was small during the usability evaluation: all participants sat in chairs and provided voice input in the same way. Second, among existing voice memo programs, Google Docs offers the features most similar to those of VTR4VI, but it does not provide voice feedback; to keep the evaluation fair, a helper provided voice feedback instead. Finally, Google Docs supports relatively many voice commands, whereas VTR4VI supports a limited set; we therefore used the same number of voice commands to create a single report in both VTR4VI and Google Docs.

These results suggest that services designed with the accessibility needs of people with disabilities in mind, such as VTR4VI, are very useful to the visually impaired, because they allow blind students to learn independently, write reports, and submit assignments. Previously, it was very difficult for blind people to write a report without a writing assistant; VTR4VI makes report writing more accurate and convenient. Other services have been provided to blind people, but they have not been sufficient, and blind people have the right to more convenient and accurate services. By allowing visually impaired students to write documents on their own, VTR4VI reduces the learning difficulties that arise when helpers are scarce and supports independent learning. Currently, VTR4VI supports only the input and correction of text and tables, whereas actual reports also contain figures, equations, and graphs; more elements will therefore be needed before college students with visual impairments can use it to write real reports.

6. Conclusions

In this paper, we proposed VTR4VI, a program that enables people with visual impairments to write reports by voice without assistance. VTR4VI is designed for people with visual impairment or blindness and is operated through voice commands. Each time a function is performed, the program gives voice feedback to inform users of the progress of the report. VTR4VI implements three modes: writing mode, check mode, and edit mode. The VTR4VI app recognizes speech on a mobile device and converts it into text. The converted text consists of command and content pairs, and each command is matched with an HTML tag. From writing mode, users can switch to check mode to review the report they have created. After verifying the commands and their contents by voice, they can add or delete paragraphs, chapters, and sections. For detailed modifications, users can select the command and content to be edited and enter edit mode, where they can edit or delete specific words by voice.
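Writing mode, as summarized above, splits each transcribed utterance into a command and its content and matches the command with an HTML tag (Table 2; Figure 3 shows "Title Report" becoming an h1 heading). A minimal Python sketch of that matching step follows; it is not the authors' implementation. The function name `to_html`, the case-insensitive prefix matching, and the choice to close each tag immediately (Table 2 actually closes a paragraph with a separate "Close" command) are assumptions, and the table-related commands are omitted.

```python
import html

# Command-to-tag pairs taken from Table 2 (content-wrapping commands only; table
# commands such as "Insert Table" and "Add row" are omitted in this sketch).
COMMAND_TAGS = {
    "filename": "title",
    "title": "h1",
    "name": "div",
    "add chapter": "h2",
    "add section": "h3",
    # Simplification: in Table 2, "Paragraph" opens <p> and a separate "Close" command ends it.
    "paragraph": "p",
}


def to_html(utterance: str) -> str:
    """Split a transcribed utterance into (command, content) and wrap the content in the command's tag."""
    text = utterance.strip()
    lowered = text.lower()
    # Check longer phrases first so a multi-word command wins over any shorter command sharing its prefix.
    for command in sorted(COMMAND_TAGS, key=len, reverse=True):
        if lowered == command or lowered.startswith(command + " "):
            content = text[len(command):].strip()
            tag = COMMAND_TAGS[command]
            return f"<{tag}>{html.escape(content)}</{tag}>"
    raise ValueError(f"unrecognized command: {utterance!r}")


print(to_html("Title Report"))            # <h1>Report</h1>
print(to_html("Paragraph It is a test"))  # <p>It is a test</p>
```

Escaping the content with `html.escape` keeps dictated characters such as `<` or `&` from being interpreted as markup in the generated HTML file.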

The application was assessed through a usability evaluation, whose results indicate that VTR4VI is promising and useful for the visually impaired. When participants used the program alongside Google Docs, VTR4VI was more efficient, even though an assistant provided voice feedback for Google Docs to keep the comparison fair. To investigate whether the auxiliary device is effective, VTR4VI was also compared with its voice version, which operates by voice alone without the auxiliary device; this comparison likewise showed VTR4VI to be more efficient. Participants also commented that “It is unique to generate a report using an auxiliary device and it is easy to operate” and that “Voice feedback is very convenient”.

This research contributes to digital equality by providing convenient services to visually impaired students, who experience considerable inconvenience in their studies. Although many new software programs continue to appear, few consider accessibility for people with disabilities, so people with disabilities are likely to experience an information gap. Continuous research and development for people with disabilities is therefore essential, and we sought to develop software that could help the visually impaired in real life. With VTR4VI, visually impaired students can carry out their assignments and studies more conveniently.

In future work, VTR4VI will support spell checking and the creation and editing of additional elements such as equations, images, and graphs. We also plan to improve VTR4VI based on feedback from usability evaluations with more blind participants. If the program is commercialized, many visually impaired students may be able to enjoy a more convenient college life.

Author Contributions

Conceptualization, J.C. and J.L.; methodology, J.C.; software, J.C.; validation, J.C., Y.S. and J.L.; formal analysis, Y.S.; writing—original draft preparation, J.C.; writing—review and editing, Y.S.; project administration, J.L. These authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 2022R1F1A1063408, NRF-2022H1D8A303739411).

Institutional Review Board Statement

We obtained Sookmyung Women’s University IRB approval before we began this research.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kim, H.Y. A Survey of the Factors on Major Decision & Vocational Preparations of College Students with Visual Impairments. Korean J. Vis. Impair. 2013, 29, 1–23. [Google Scholar]

- Lourens, H.; Swartz, L. Experiences of visually impaired students in higher education: Bodily perspectives on inclusive education. Disabil. Soc. 2016, 31, 240–251. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Lee, W.; Lee, J.H.; Lee, H.N. A Study on the Relationship between the Disability Identity of Students with Disabilities and their Adjustment to College: Moderating Effect of Perceived Social Support. Spec. Educ. Res. 2011, 10, 245–268. [Google Scholar] [CrossRef]

- Won, J.R. A Study on Support Service Program of College Students with Disabilities. J. Spec. Educ. 2001, 8, 47–70. [Google Scholar]

- Jo, H.G. A Narrative Inquiry into College Life of Student with Severe Visual Impairment. J. Korean Soc. Wellness 2006, 11, 209–226. [Google Scholar]

- Choi, S.K. Phenomenological Research on the Meaning of Visually-Impaired Undergraduate Freshmen’s University Entrance and their School Life Experiences: At a crossroads before taking a leave of absence. J. Korea Contents Assoc. 2018, 18, 36–50. [Google Scholar]

- Chung, C.C. A Study on Improvement Plans for Educational Support Systems for College Students with Disabilities. J. Spec. Educ. Theory Pract. 2007, 8, 109–132. [Google Scholar]

- Son, S.H.; Lee, Y.; Cho, S.H.; Moon, J.Y.; Ma, J.S.; Kang, M. An Analysis of Research Trends and Practices in Higher education for college students with Disabilities. Korea Educ. Rev. 2013, 19, 189–217. [Google Scholar]

- Grant, P.; Spencer, L.; Arnoldussen, A.; Hogle, R.; Nau, A.; Szlyk, J.; Seiple, W. The functional performance of the BrainPort V100 device in persons who are profoundly blind. J. Vis. Impair. Blind. 2016, 110, 77–88. [Google Scholar] [CrossRef] [Green Version]

- McKenzie, A.R. The use of learning media assessments with students who are deaf-blind. J. Vis. Impair. Blind. 2007, 101, 587–600. [Google Scholar] [CrossRef]

- Evans, S.; Douglas, G. E-learning and blindness: A comparative study of the quality of an e-learning experience. J. Vis. Impair. Blind. 2008, 102, 77–88. [Google Scholar] [CrossRef]

- Kang, J.G.; Kim, R.K. An Analysis of the Recent Study Trend of Visual Impairments. Korean J. Vis. Impair. 2011, 27, 103–133. [Google Scholar]

- JAWS. Available online: https://www.freedomscientific.com/products/software/jaws/ (accessed on 9 May 2022).

- Nuance. Available online: https://www.nuance.com/index.html (accessed on 9 May 2022).

- Baker, J. The DRAGON system—An overview. IEEE Trans. Acoust. Speech Signal Process. 1975, 23, 24–29. [Google Scholar] [CrossRef]

- Waqar, M.M.; Aslam, M.; Farhan, M. An Intelligent and Interactive Interface to Support Symmetrical Collaborative Educational Writing among Visually Impaired and Sighted Users. Symmetry 2019, 11, 238. [Google Scholar] [CrossRef] [Green Version]

- Google Live Transcribe. Available online: https://www.android.com/accessibility/live-transcribe (accessed on 9 May 2022).

- Google Cloud. Available online: https://cloud.google.com (accessed on 9 May 2022).

- Trint. Available online: https://trint.com (accessed on 9 May 2022).

- Braina. Available online: https://www.brainasoft.com/braina (accessed on 9 May 2022).

- Suciu, G.; Dobre, R.A.; Butca, C.; Suciu, V.; Mihaila, I.; Cheveresan, R. Search based applications for speech processing. In Proceedings of the 2016 8th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Ploiesti, Romania, 30 June–2 July 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Google Docs. Available online: https://docs.google.com/ (accessed on 9 May 2022).

- Chae, H.T. A study on the Actual Condition and needs of University Student with Disabilities to support Assistive Technology Service. J. Vocat. Rehabil. 2011, 21, 73–93. [Google Scholar]

- Nielsen, J.; Landauer, T.K. A mathematical model of the finding of usability problems. In Proceedings of the INTERACT ’93 and CHI ’93 Conference on Human Factors in Computing Systems (CHI ’93), Amsterdam, The Netherlands, 24–29 April 1993; pp. 206–213. [Google Scholar] [CrossRef]

- Conyer, M. User and usability test-how it should be undertaken? Aust. J. Educ. Technol. 1995, 11, 38–51. [Google Scholar]

- Raju, T.N.K. William Sealy Gosset and William A. Silverman: Two “Students” of science. Pediatrics 2005, 116, 732–735. [Google Scholar] [CrossRef] [PubMed]

Figure 1.

How to use VTR4VI’s auxiliary device.

Figure 2.

Simple flowchart of VTR4VI. This figure is the overall operational process of VTR4VI.

Figure 3.

An example of writing mode operation. This figure shows how the writing mode works. Entering the voice “Title Report” will divide the command and its content into text. Then, the command is matched with “h1” tag of HTML and written in the HTML file.

Figure 4.

Command processing in writing mode.

Figure 5.

An example of check mode operation. This figure shows the operation of the check mode. When the voice command “Paragraph It is a test” is entered, the command and its content are divided and converted into text. “Paragraph” is converted into a “p” tag of HTML and added to the HTML file.

Figure 6.

An example of edit mode operation. This figure shows the operation of the check mode. When the voice command “Paragraph It is a test” is entered, the command and its content are divided and converted into text. “Paragraph” is converted into a “p” tag.

Figure 7.

The graph of time taken per task in Experiment 1, comparing Google Docs and VTR4VI.

Figure 8.

The graph of voice command success rate per task in Experiment 1, comparing Google Docs and VTR4VI.

Figure 9.

The graph of time taken per task in Experiment 2, comparing Google Docs and VTR4VI.

Figure 10.

The graph of voice command success rate per task in Experiment 2, comparing Google Docs and VTR4VI.

Figure 11.

The graph of time taken per task in Experiment 3, comparing Google Docs and VTR4VI.

Figure 12.

The graph of voice command success rate per task in Experiment 3, comparing Google Docs and VTR4VI.

Figure 13.

Satisfaction evaluation graph of Google Docs and VTR4VI.

Figure 14.

The graph of time taken per task for Experiment 1, comparing the voice version of VTR4VI and VTR4VI.

Figure 15.

The graph of voice command success rate per task for Experiment 1, comparing the voice version of VTR4VI and VTR4VI.

Figure 16.

The graph of time taken per task for Experiment 2, comparing the voice version of VTR4VI and VTR4VI.

Figure 17.

The graph of voice command success rate per task for Experiment 2, comparing the voice version of VTR4VI and VTR4VI.

Figure 18.

The graph of time taken per task for Experiment 3, comparing the voice version of VTR4VI and VTR4VI.

Figure 19.

The graph of voice command success rate per task for Experiment 3, comparing the voice version of VTR4VI and VTR4VI.

Figure 20.

Satisfaction evaluation graph of the voice version of VTR4VI and VTR4VI.

Table 1.

Comparison Table of Existing Services.

| | JAWS | Dragon NaturallySpeaking | WCFB | Google Live Transcribe | Trint | Braina | Google Docs |
|---|---|---|---|---|---|---|---|
| User | Visually impaired | Non-visually impaired | Visually impaired | Non-visually impaired and Deaf | Non-visually impaired | Non-visually impaired | Non-visually impaired |
| Purpose | Screen reader | Speech to text conversion | Word processor | Speech to text conversion | Speech to text conversion | Speech to text conversion | Word processor |
| Input | Voice | Voice | Voice | Voice | Voice | Voice | Voice and Text |
| Output | Voice feedback | Text | Text and Voice feedback | Text | Text | Text | Text |
| Number of users | Single | Single | Group | Single | Single | Single | Single and Group |
| Equipment used | Desktop | Desktop and Mobile | Desktop | Desktop and Mobile | Desktop | Desktop | Desktop and Mobile |

Table 2.

HTML tag matching command of VTR4VI.

| Command | HTML Tag |
|---|---|
| Filename | <title></title> |
| Title | <h1></h1> |
| Name | <div></div> |
| Add Chapter | <h2></h2> |
| Add Section | <h3></h3> |
| Insert Table | <table> |
| Add row | <tr></tr> |
| End Table | </table> |
| Paragraph | <p> |
| Add Line | <br/> |
| Add Space | |
| Close | </p> |

Table 3.

Details of usability evaluation participants.

| No | Age | Gender | Degree of Disability | Frequency of Speech Recognition (Usually/Sometimes/Rarely) |
|---|---|---|---|---|
| 1 | 24 | Female | Total blindness | Usually |
| 2 | 20 | Male | Total blindness | Rarely |
| 3 | 21 | Female | Total blindness | Sometimes |
| 4 | 21 | Male | The first degree of visual impairment level | Usually |
| 5 | 24 | Male | Total blindness | Usually |

Table 4.

Comparison table of related research with VTR4VI. VTR4VI and 7 similar services are listed below. For each service, whether or not the functions required for the voice report generator of the blind are supported is marked with O and X.

| | VTR4VI | JAWS | Dragon NaturallySpeaking | WCFB | Google Live Transcribe | Trint | Braina | Google Docs |
|---|---|---|---|---|---|---|---|---|
| Write the voice as text | O | X | O | O | O | O | O | O |
| Write in report format | O | X | X | X | X | X | X | O |
| Real-time feedback on what users have written | O | X | X | X | X | X | X | X |
| Modify or edit the content using voice command | O | X | O | O | X | X | X | O |
| Voice feedback for the visually impaired | O | X | X | O | X | X | X | X |

Table 5.

The tasks and evaluation methods of usability evaluation.

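| Experiment | Task | Google Docs | VTR4VI Used with Bluetooth Devices | VTR4VI with Voice Only without Bluetooth Device |
|---|---|---|---|---|
| Experiment 1 (Writing a report) | Writing a chapter | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |
| | Writing a paragraph | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |
| | Writing a table | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |
| Experiment 2 (Editing by paragraph) | Finding a paragraph | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |
| | Deleting a paragraph | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |
| Experiment 3 (Editing by word) | Finding a word | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |
| | Deleting a word | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |
| | Editing a word | Participants proceed with a helper. | Participants proceed using an auxiliary device. | Participants proceed using only their own voices. |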

Table 6.

Number of voice commands per task.


| Experiment | Task | Voice Commands: Google Docs | Voice Commands: VTR4VI Used with Bluetooth Devices | Voice Commands: VTR4VI with Voice Only (No Bluetooth Device) |
|---|---|---|---|---|
| Experiment 1 (Writing a report) | Writing a chapter | 1 | 1 | 1 |
| | Writing a paragraph | 2 | 2 | 2 |
| | Writing a table | 4 | 4 | 4 |
| Experiment 2 (Editing by paragraph) | Finding a paragraph | - | - | - |
| | Deleting a paragraph | 1 | 1 | 1 |
| Experiment 3 (Editing by word) | Finding a word | - | - | - |
| | Deleting a word | 1 | 1 | 1 |
| | Editing a word | 1 | 1 | 1 |

Table 7.

Efficiency and accuracy measurement results for each task of Google Docs and VTR4VI.


| Experiment | Task | Google Docs: Time (s) | Google Docs: Voice Command Success Rate | VTR4VI: Time (s) | VTR4VI: Voice Command Success Rate |
|---|---|---|---|---|---|
| Experiment 1 (Writing a report) | Writing a chapter | 33.8 | 0.45 | 26.9 | 0.85 |
| | Writing a paragraph | 77.5 | 0.7 | 62.7 | 1 |
| | Writing a table | 162.9 | 0.2 | 100.3 | 0.75 |
| Experiment 2 (Editing by paragraph) | Finding a paragraph | 39.6 | - | 16.1 | - |
| | Deleting a paragraph | 40.9 | 0.75 | 24.7 | 0.95 |
| Experiment 3 (Editing by word) | Finding a word | 51.7 | - | 35.3 | - |
| | Deleting a word | 52.9 | 0.7 | 29.7 | 0.9 |
| | Editing a word | 62.9 | 0.7 | 54.9 | 1 |
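Table 7 reports raw times and success rates. Relative gains are easier to compare as percentages, so the Python sketch below recomputes them from the transcribed values. It defines the time reduction as (Google Docs time - VTR4VI time) / Google Docs time and takes an unweighted mean of success rates over the six tasks that report one. Both choices are assumptions for illustration: the unweighted mean ignores the different command counts per task in Table 6, and the derived figures are arithmetic on the published values, not additional results. `TABLE_7`, `time_reduction`, and `summarize` are hypothetical names.

```python
# Per-task values transcribed from Table 7:
# (Google Docs time [s], Google Docs success rate, VTR4VI time [s], VTR4VI success rate).
# None marks tasks for which no voice command success rate is reported.
TABLE_7 = {
    "Writing a chapter": (33.8, 0.45, 26.9, 0.85),
    "Writing a paragraph": (77.5, 0.7, 62.7, 1.0),
    "Writing a table": (162.9, 0.2, 100.3, 0.75),
    "Finding a paragraph": (39.6, None, 16.1, None),
    "Deleting a paragraph": (40.9, 0.75, 24.7, 0.95),
    "Finding a word": (51.7, None, 35.3, None),
    "Deleting a word": (52.9, 0.7, 29.7, 0.9),
    "Editing a word": (62.9, 0.7, 54.9, 1.0),
}


def time_reduction(baseline, candidate):
    """Fraction of the baseline completion time saved by the candidate (> 0 means faster)."""
    return (baseline - candidate) / baseline


def summarize(table):
    """Return per-task reductions, the reduction in summed time, and mean success rates."""
    per_task = {task: time_reduction(row[0], row[2]) for task, row in table.items()}
    total_docs = sum(row[0] for row in table.values())
    total_vtr = sum(row[2] for row in table.values())
    # Average success rates only over tasks that report them (unweighted mean).
    rated = [row for row in table.values() if row[1] is not None]
    mean_docs = sum(row[1] for row in rated) / len(rated)
    mean_vtr = sum(row[3] for row in rated) / len(rated)
    return per_task, time_reduction(total_docs, total_vtr), mean_docs, mean_vtr


if __name__ == "__main__":
    per_task, overall, mean_docs, mean_vtr = summarize(TABLE_7)
    for task, reduction in per_task.items():
        print(f"{task:<24} {reduction:6.1%}")
    print(f"{'All tasks (summed time)':<24} {overall:6.1%}")
    print(f"Mean success rate: Google Docs {mean_docs:.2f}, VTR4VI {mean_vtr:.2f}")
```

On the transcribed values, the per-task reductions range from about 12.7% (editing a word) to about 59.3% (finding a paragraph), and the summed task time falls by about 32.9%.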

Table 8.

Satisfaction evaluation indicators.


| No | Item | Scale |
|---|---|---|
| 1 | Are you willing to recommend it to others? | 5-point scale |
| 2 | Do you intend to use it again? | 5-point scale |
| 3 | Do you find this report generator convenient? | 5-point scale |

Table 9.

Efficiency and accuracy measurement results for each task: the voice version of VTR4VI compared with VTR4VI.


| Experiment | Task | Voice Version of VTR4VI: Time (s) | Voice Version of VTR4VI: Voice Command Success Rate | VTR4VI: Time (s) | VTR4VI: Voice Command Success Rate |
|---|---|---|---|---|---|
| Experiment 1 (Writing a report) | Writing a chapter | 38.3 | 0.7 | 26.9 | 0.85 |
| | Writing a paragraph | 63.6 | 0.65 | 62.7 | 1 |
| | Writing a table | 104.6 | 0.8 | 100.3 | 0.75 |
| Experiment 2 (Editing by paragraph) | Finding a paragraph | 30.2 | - | 16.1 | - |
| | Deleting a paragraph | 36.5 | 0.95 | 24.7 | 0.95 |
| Experiment 3 (Editing by word) | Finding a word | 41.7 | - | 35.3 | - |
| | Deleting a word | 42 | 0.9 | 29.7 | 0.9 |
| | Editing a word | 61.9 | 0.85 | 54.9 | 1 |
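Table 9 can be read as a slowdown comparison between the two variants. The sketch below divides each voice-version time by the corresponding VTR4VI time, where a ratio above 1 means the voice version took longer. It also lists the tasks whose voice command success rates differ. `TABLE_9`, `slowdown`, and `compare` are illustrative names, and the ratios are derived from the table rather than reported in it.

```python
# Per-task values transcribed from Table 9:
# (voice-version time [s], voice-version success rate, VTR4VI time [s], VTR4VI success rate).
# None marks tasks for which no voice command success rate is reported.
TABLE_9 = {
    "Writing a chapter": (38.3, 0.7, 26.9, 0.85),
    "Writing a paragraph": (63.6, 0.65, 62.7, 1.0),
    "Writing a table": (104.6, 0.8, 100.3, 0.75),
    "Finding a paragraph": (30.2, None, 16.1, None),
    "Deleting a paragraph": (36.5, 0.95, 24.7, 0.95),
    "Finding a word": (41.7, None, 35.3, None),
    "Deleting a word": (42.0, 0.9, 29.7, 0.9),
    "Editing a word": (61.9, 0.85, 54.9, 1.0),
}


def slowdown(candidate, reference):
    """Ratio of candidate to reference completion time (> 1 means the candidate is slower)."""
    return candidate / reference


def compare(table):
    """Return per-task time ratios, the ratio of summed times, and differing success rates."""
    ratios = {task: slowdown(row[0], row[2]) for task, row in table.items()}
    overall = slowdown(sum(row[0] for row in table.values()),
                       sum(row[2] for row in table.values()))
    # Tasks where the two variants report different voice command success rates.
    differing = {task: (row[1], row[3]) for task, row in table.items()
                 if row[1] is not None and row[1] != row[3]}
    return ratios, overall, differing


if __name__ == "__main__":
    ratios, overall, differing = compare(TABLE_9)
    for task, ratio in ratios.items():
        print(f"{task:<24} x{ratio:.2f}")
    print(f"{'All tasks (summed time)':<24} x{overall:.2f}")
    for task, (voice, vtr) in differing.items():
        print(f"{task}: success rate {voice} (voice version) vs {vtr} (VTR4VI)")
```

With the transcribed values, every ratio exceeds 1. The largest is for finding a paragraph (30.2 s versus 16.1 s, roughly 1.88). Writing a table is the only task in which the voice version shows the higher success rate (0.8 versus 0.75).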

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).