Abstract

Cloud computing is now established as a viable alternative to on-premise infrastructure from both a system administration and a cost perspective. This research provides insight into cluster computing performance and variability in cloud-provisioned infrastructure from two popular public cloud providers, Amazon Web Services (AWS) and Google Cloud Platform (GCP). To evaluate the performance variability between these two providers, three synthetic benchmarks were used: STREAM for memory bandwidth, IOR (Interleaved or Random) for I/O performance, and the NAS Parallel Benchmarks Embarrassingly Parallel kernel (NPB-EP) for computational (CPU) performance. A comparative examination of the two cloud platforms is provided in the context of our research methodology and design. We conclude with a discussion of the results of the experiment and an assessment of the suitability of public cloud platforms for certain types of computing workloads. Both AWS and GCP have their strong points, and this study provides recommendations depending on user needs for high throughput and/or performance predictability across CPU, memory, and Input/Output (I/O). In addition, the study discusses other factors that help users decide between cloud vendors, such as ease of use, documentation, and types of instances offered.

1. Introduction

Cloud computing is now well established, with characteristics such as on-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service []. Leading Cloud Service Providers (CSPs) include Amazon, Google, Microsoft Azure, and Oracle, among others. When selecting between CSPs, consumers need to evaluate them against their own needs and factors such as performance, security, price, reliability, and storage.

CSPs generally offer three types of service models, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). IaaS provides user access to the Application, Data, Runtime, Middleware, and OS layers in the stack. This would include access to virtual machines (VMs), storage, networking, etc. On the other hand, PaaS lets users manage the Application and Data layers and is suited to developers without being concerned about the underlying infrastructure. In SaaS, the provider provides access to their software and manages all underlying servers and networking.

This research provides a vendor-neutral assessment of IaaS cloud performance and variability, using benchmarks to compare the memory bandwidth, I/O performance, and computational (CPU) performance of cloud-hosted clusters of VMs. We measure these metrics with an increasing number of nodes in the cluster. The benchmarks used for memory bandwidth, I/O performance, and computational performance are STREAM, IOR (Interleaved or Random), and NPB-EP, respectively. The Message Passing Interface (MPI) versions of the benchmarks were used in the cluster. The benchmark results were analyzed to determine the scalability, statistical significance, and performance unpredictability of AWS as compared to GCP. Due to the multi-tenant nature of public IaaS clouds, user applications may be impacted by the noisy neighbor scenario, leading to performance unpredictability. This research attempts to capture this impact.

2. Cloud Computing Platform

AWS Elastic Compute Cloud (EC2) and Google Cloud Platform (GCP) Services

We researched the services provided by AWS Elastic Compute Cloud (EC2) and GCP. Factors such as architecture, types of instances offered, performance, security, and reliability were researched and are documented here. Details regarding the two platforms are shown in Table 1. Please note that this is intended only as an introduction to the services offered; it is not an exhaustive list and is subject to change by the cloud vendors at any time.

Table 1.

Cloud Services.

3. Related Work

Li et al. in [] also performed a similar study to compare the performance of GCP with EC2. They measured four service features—communication, memory, storage, and computation []. For memory, they used data throughput as the capability metric and the STREAM benchmark []. For storage, they used transaction speed and data throughput as the capability metric and Bonnie++ as their benchmark []. For computation, they used transaction speed and performance/price ratio as the capability metric and NPB-MPI as their benchmark []. The authors revealed both potential advantages and disadvantages of applying GCP to scientific computing. They suggested that GCP may be better suited in comparison to EC2 for applications that require frequent disk operations []. However, GCP may not be best suited for VM-based parallel computing.

Villalpando et al. in [] showcased a design of a performance analysis platform that included infrastructure and a framework for performance measurement for the design, validation, and comparison of performance models and algorithms for cloud computing []. The aim of this study was to help users better understand and compare the relationships between performance attributes of different cloud systems [].

Shoeb et al. in [] performed a comparative study on I/O performance between computing- and storage-optimized instances of Amazon EC2. The study compared the performance and cost of these instances. The authors concluded that the I/O performance of the same instance type varies over different time periods [].

Prasetio et al. in [] discuss the performance of distributed database systems in a cloud computing environment, where such systems can suffer from performance issues. The objective of this study was to help users choose the most appropriate cloud computing platform for their needs. They used AWS as the cloud platform for their study and concluded that the choice between cloud and local hosting may depend on factors such as query complexity and network latency, among others [].

The research presented in this paper is comprehensive because we used system-level benchmarks that capture performance metrics across the memory, computing, and I/O tiers. It is known that public clouds are multi-tenant and can show performance variability over time. This study provides insight into the performance variability observed for these metrics across varying time periods spread over two weeks. For each experiment, the number of active nodes in the cloud cluster processing the benchmark was varied. Five configurations (scenarios) were chosen: 1, 2, 4, 6, and 8 nodes. Furthermore, the research includes two of the leading public cloud providers in order to provide a more representative study of the major cloud providers.

4. Results

4.1. Test Bed

The virtual machine node specifications are provided in Table 2.

Table 2.

VM Instance Specifications.

4.2. Benchmarks and Metrics

Each benchmark was compiled on the virtual machine instance from which the results were collected.

4.2.1. STREAM

This benchmark measures and outputs memory bandwidth or best rate in MB/s with 4 different functions (Add, Copy, Scale, Triad). According to [], “The STREAM benchmark is a simple synthetic benchmark program that measures sustainable memory bandwidth (in MB/s) and the corresponding computation rate for simple vector kernels”.

Each execution of the benchmark performs the four operations (Copy, Scale, Add, and Triad) and reports the best result obtained across ten runs. The average memory bandwidth (MB/s), average time (microseconds), minimum time (microseconds), and maximum time (microseconds) are collected. This benchmark is important for measuring the memory capabilities of VMs and the effects of the ‘memory wall’ on high-performance computing capabilities in a cloud-provisioned cluster infrastructure.
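To make the "best rate" idea concrete, the following is a minimal Python sketch of the Triad operation and of how a best rate in MB/s can be derived from the fastest of several timed runs. It is an illustration only, not the STREAM source: the function name, array size, and scalar are assumptions, and Python lists do not exercise memory the way the compiled benchmark does, so the absolute numbers are not comparable to STREAM output.

```python
import time

def triad_best_rate(n=200_000, scalar=3.0, runs=10):
    """Time a[i] = b[i] + scalar * c[i] several times; return (best MB/s, a)."""
    b = [1.0] * n
    c = [2.0] * n
    a = [0.0] * n
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        a = [bi + scalar * ci for bi, ci in zip(b, c)]
        times.append(time.perf_counter() - start)
    # Triad is counted as three 8-byte words per element: two reads, one write.
    bytes_moved = 3 * 8 * n
    # The best rate corresponds to the fastest (minimum-time) run.
    best_mb_per_s = bytes_moved / min(times) / 1e6
    return best_mb_per_s, a

rate, a = triad_best_rate()
print(f"Triad best rate (illustrative only): {rate:.1f} MB/s")
```

Taking the minimum time rather than the mean reflects the benchmark's aim of reporting sustainable peak bandwidth; run-to-run variability is examined separately in the statistical analysis.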

4.2.2. NPB (NAS Parallel Benchmarks)

This benchmark is designed to measure the computing performance of cluster or grid supercomputers in highly parallel tasks []. While NPB has several execution modes, we are only concerned with the embarrassingly parallel (EP) configuration. For EP, the benchmark measures and outputs computational performance with two metrics: execution time to completion in seconds and millions of operations per second (MOPS).

According to [], “NPB are a small set of programs designed to help evaluate the performance of parallel supercomputers”. The benchmark suite consists of eight programs: five kernels and three pseudo-applications [].
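The embarrassingly parallel pattern and the two reported metrics can be sketched as follows. This is a simplified stand-in, not the NPB-EP code: each "rank" independently turns uniform random pairs into Gaussian pairs and tallies them, and the only combination step is a final sum. The function names, problem size, seeds, and the choice to count each generated uniform random number as one operation are assumptions for illustration, and the ranks run sequentially here rather than under MPI.

```python
import math
import random
import time

def ep_chunk(num_pairs, seed):
    """One rank's independent share: tally accepted Gaussian pairs by annulus."""
    rng = random.Random(seed)
    counts = [0] * 10
    for _ in range(num_pairs):
        x = 2.0 * rng.random() - 1.0
        y = 2.0 * rng.random() - 1.0
        t = x * x + y * y
        if 0.0 < t <= 1.0:  # acceptance test of the polar method
            factor = math.sqrt(-2.0 * math.log(t) / t)
            # Bin by the larger absolute coordinate of the Gaussian pair.
            k = int(max(abs(x * factor), abs(y * factor)))
            if k < len(counts):
                counts[k] += 1
    return counts

def run_ep(total_pairs=200_000, ranks=4):
    """Run every rank's chunk, reduce the tallies, and report time and MOPS."""
    per_rank = total_pairs // ranks
    start = time.perf_counter()
    partials = [ep_chunk(per_rank, seed=r) for r in range(ranks)]
    # The final reduction is the only step that combines data across ranks.
    counts = [sum(column) for column in zip(*partials)]
    elapsed = time.perf_counter() - start
    # Assumed operation count: two uniform random numbers per pair.
    mops = (2 * per_rank * ranks) / elapsed / 1e6
    return counts, elapsed, mops

counts, elapsed, mops = run_ep()
print(f"time = {elapsed:.3f} s, throughput = {mops:.2f} MOPS")
```

Because the chunks share no data until the final sum, the workload isolates per-node compute capability rather than interconnect performance.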

4.2.3. IOR (Interleaved or Random)

I/O performance is increasingly important for data-intensive computing. IOR measures parallel I/O performance in HPC environments. It is an MPI-based application that can generate I/O workloads in interleaved or random patterns. The benchmark measures input/output performance in MiB/s (1 MiB/s = 1.048576 MB/s) or in read/write operations per second (Op/s). The IOR software is used for benchmarking parallel file systems using POSIX, MPIIO, or HDF5 interfaces []. I/O performance in MB/s is collected for both read and write operations, as well as the number of Op/s.
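Since the text mixes MiB/s and MB/s, a small conversion helper can keep the two units straight. This is a sketch with hypothetical function names; it relies only on the standard definitions 1 MiB = 1024² bytes and 1 MB = 1000² bytes.

```python
BYTES_PER_MIB = 1024 ** 2  # 1,048,576 bytes (binary prefix)
BYTES_PER_MB = 1000 ** 2   # 1,000,000 bytes (decimal prefix)

def mib_per_s_to_mb_per_s(rate_mib):
    """Convert a rate in MiB/s to MB/s."""
    return rate_mib * BYTES_PER_MIB / BYTES_PER_MB

def mb_per_s_to_mib_per_s(rate_mb):
    """Convert a rate in MB/s to MiB/s."""
    return rate_mb * BYTES_PER_MB / BYTES_PER_MIB

print(mib_per_s_to_mb_per_s(1.0))  # 1.048576
```

The difference is under 5%, but it matters when comparing IOR output against figures reported in decimal megabytes.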

4.3. Research Methodology

IaaS cloud platforms are generally multi-tenant, and user applications may be impacted by “noisy neighbors”. This occurs when one tenant’s excessive resource usage degrades the performance or availability of other tenants on the same shared infrastructure, typically through slower performance and higher latency. The impact of a noisy neighbor tends to be random; to capture some of it, the benchmarks were run on two randomly chosen days at three different periods each day. It was ensured that the background processes within each VM used in the experiments were identical. Furthermore, to capture the performance of both a single node and a cluster of nodes in the cloud, the benchmarks were run using node counts of 1, 2, 4, 6, and 8.

A series of experiments was conducted on each cluster using the benchmarks described in Section 4.2. For each benchmark, there were 30 executions on each cluster. The experiments were split in half, and two random days were chosen for the execution. Each day, the benchmark was tested three times: early morning, mid-day (early afternoon), and late evening. For each experiment, the number of active nodes processing the test was varied. Five configurations were chosen: 1 node, 2 nodes, 4 nodes, 6 nodes, and 8 nodes, respectively. A test matrix is shown in Table 3.

Table 3.

Test Execution Matrix.
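One way to read the design described above is as the cross product of days, time periods, and node counts, which yields the 30 executions per benchmark on each provider's cluster. The sketch below enumerates that matrix under this interpretation; the labels and variable names are placeholders, not values taken from the study's test plan.

```python
from itertools import product

DAYS = ["day 1", "day 2"]                               # two randomly chosen days
PERIODS = ["early morning", "mid-day", "late evening"]  # three periods per day
NODE_COUNTS = [1, 2, 4, 6, 8]                           # five cluster configurations

# One entry per (day, period, node count) combination.
test_matrix = [
    {"day": day, "period": period, "nodes": nodes}
    for day, period, nodes in product(DAYS, PERIODS, NODE_COUNTS)
]

print(len(test_matrix))  # 30
```

Grouping these entries by node count gives six samples per configuration, which is the grouping used when results are compared by node count.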

4.4. Results

4.4.1. Stream Benchmark Results

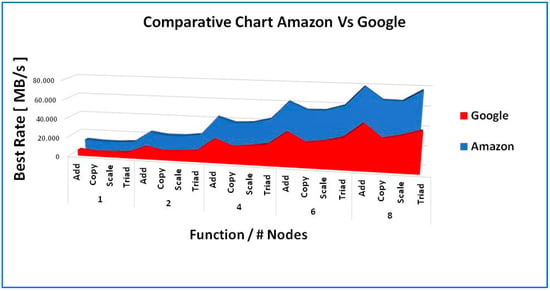

Executing the STREAM benchmark on both EC2 and GCP showed that both platforms are capable of scaling to increase throughput. There is, however, a marked difference in total throughput (MB/s), with AWS EC2 providing the higher rate. A graphical representation of the best rate in MB/s is shown in Figure 1. Overall, AWS outperforms GCP irrespective of the number of nodes and the measuring function.

Figure 1.

Scalability Comparison Across All Functions.

4.4.2. Stream Benchmark Statistical Analysis

After executing the STREAM benchmark on the planned test bed, a basic statistical analysis was conducted; the results are documented in Table 4 and Table 5. For both platforms, the standard deviation is very small.

Table 4.

EC2 Statistical Analysis (Stream).

Table 5.

GCP Statistical Analysis (Stream).
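A basic statistical summary of the kind referred to above can be computed with the Python standard library. The sketch below is an assumption about which statistics are useful, not a reproduction of Tables 4 and 5; the coefficient of variation is added as one convenient way to compare spread between platforms whose means differ. The sample values are placeholders, not measurements from this study.

```python
import statistics

def summarize(samples):
    """Return mean, median, sample standard deviation, and coefficient of variation."""
    mean = statistics.mean(samples)
    stdev = statistics.stdev(samples)  # sample (n - 1) standard deviation
    return {
        "mean": mean,
        "median": statistics.median(samples),
        "stdev": stdev,
        "cv_percent": 100.0 * stdev / mean,
    }

# Placeholder rates in MB/s, for illustration only.
placeholder_rates = [10.0, 12.0, 14.0]
print(summarize(placeholder_rates))
```

A small coefficient of variation corresponds to the narrow spread described for STREAM, while a large one would indicate less predictable performance.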

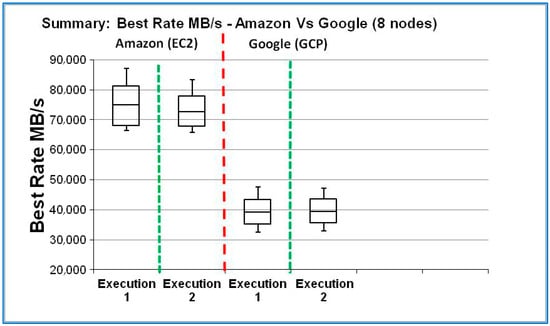

4.4.3. Stream Benchmark Box-Plot Analysis

Figure 2 depicts the inter-quartile range (IQR) and median calculated from the data gathered during the statistical analysis. The box plots show that both providers behave comparably across both sets of test executions. It is also visible that the MB/s rate achieved by AWS EC2 is greater than that of GCP.

Figure 2.

Stream Benchmark Box-plot Analysis.
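The quantities drawn in a box plot, and a simple check of whether two IQRs overlap, can be sketched as follows. The function names are hypothetical, and the quartile convention used here (the "inclusive" method of `statistics.quantiles`) is an assumption; plotting tools differ in how they interpolate quartiles, so values may not match a given figure exactly.

```python
import statistics

def box_stats(samples):
    """Return the quartiles and inter-quartile range of a sample."""
    q1, median, q3 = statistics.quantiles(samples, n=4, method="inclusive")
    return {"q1": q1, "median": median, "q3": q3, "iqr": q3 - q1}

def iqrs_overlap(samples_a, samples_b):
    """True if the [Q1, Q3] intervals of the two samples intersect."""
    a, b = box_stats(samples_a), box_stats(samples_b)
    return a["q1"] <= b["q3"] and b["q1"] <= a["q3"]

# Placeholder values, for illustration only.
print(box_stats([1, 2, 3, 4, 5]))
print(iqrs_overlap([1, 2, 3, 4, 5], [10, 11, 12, 13, 14]))  # False
```

Non-overlapping IQRs between two groups indicate that the middle halves of their results occupy entirely different ranges.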

4.4.4. Stream Benchmark Significance Test

Further analysis showed that the difference in throughput (MB/s) between AWS and GCP is statistically significant. The data used in performing the t-tests and deducing significance are shown in Table 6, along with the calculated p-values (test results were grouped by node count).

Table 6.

Stream Benchmark Significance Test.
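For readers who want to reproduce this kind of comparison, the sketch below computes a two-sample t statistic and its degrees of freedom. The text does not state which t-test variant was used, so Welch's unequal-variance form is an assumption here, and the function name and sample values are placeholders rather than data from Table 6.

```python
import math
import statistics

def welch_t(sample_a, sample_b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    # Squared standard error contributed by each sample.
    va = statistics.variance(sample_a) / len(sample_a)
    vb = statistics.variance(sample_b) / len(sample_b)
    t = (statistics.mean(sample_a) - statistics.mean(sample_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (
        va ** 2 / (len(sample_a) - 1) + vb ** 2 / (len(sample_b) - 1)
    )
    return t, df

# Placeholder values, for illustration only.
t, df = welch_t([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
print(t, df)
```

The p-value then follows from the t distribution with `df` degrees of freedom; a statistics package can provide it directly, for example `scipy.stats.ttest_ind(a, b, equal_var=False)`.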

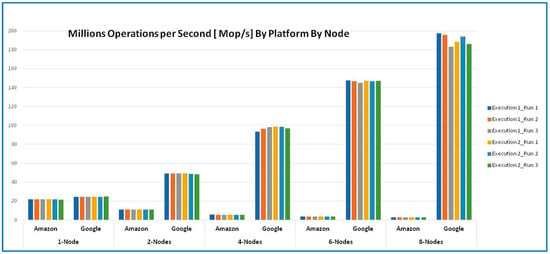

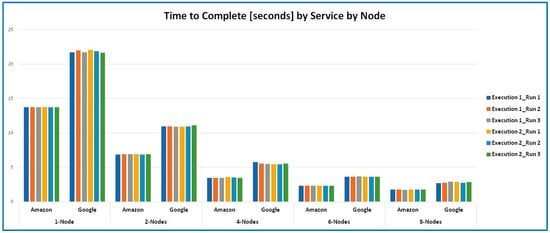

4.4.5. NPB Benchmark Results

The NPB results were measured in millions of operations per second (MOPS) as well as in total time to completion. The number of tests performed matches that used for the other two benchmarks. Interestingly, Figure 3 shows an inverse relation between the EC2 and GCP results: while the total MOPS for EC2 gradually decreases as the number of nodes increases, the total MOPS for GCP increases substantially as nodes are added. The total execution time in seconds gradually decreased for both EC2 and GCP, as shown in Figure 4.

Figure 3.

NPB Benchmark Scalability—MOPS Throughput.

Figure 4.

NPB Benchmark Scalability: Total Time in Seconds.

4.4.6. NPB Benchmark Statistical Analysis

The statistical analysis of the NPB results is shown in Table 7. Unlike the STREAM benchmark, the NPB results were less predictable, as the corresponding standard deviations show. Also noticeable is the wide difference between the means and medians of EC2 and those of GCP.

Table 7.

NPB Millions of Operations Per Second.

4.4.7. NPB Benchmark Significance Test

The results for execution time and MOPS were found to be statistically significant with p-values of 0.0125 and 0.0002, respectively.

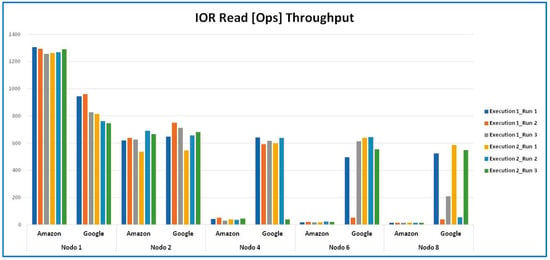

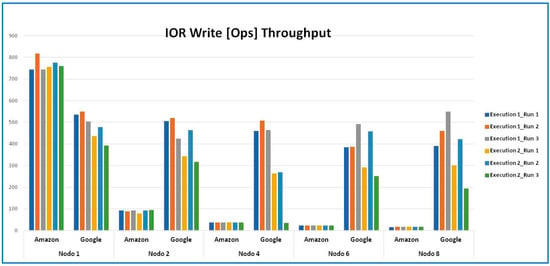

4.4.8. IOR Benchmark Results

The results for the IOR benchmark were measured in operations per second (Op/s) for two functions: read and write. The number of tests performed matches that used for the STREAM and NPB benchmarks. As with the NPB results, the EC2 total Op/s decreased as the number of nodes increased. For the IOR benchmark, however, the GCP results did not move in the opposite direction to EC2; the total Op/s for GCP also decreased as nodes were added. Figure 5 and Figure 6 show the decrease in total Op/s for the read and write operations, respectively. Also noticeable in the graphs is the variability in the results on the GCP platform. In contrast, Amazon’s EC2 performed consistently across all IOR executions.

Figure 5.

IOR Benchmark Read Operation Throughput.

Figure 6.

IOR Benchmark Write Operation Throughput.

4.4.9. IOR Benchmark Statistical Analysis

The statistical analysis supports the trends visible in Figure 5 and Figure 6. For both the read and write functions, the measured standard deviations for EC2 are quite similar and close to the mean, whereas the standard deviations for GCP are disparate. Table 8 lists the statistical analysis performed for the IOR benchmark.

Table 8.

IOR Benchmark Statistical Analysis.

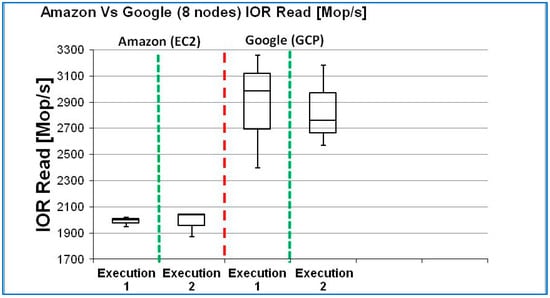

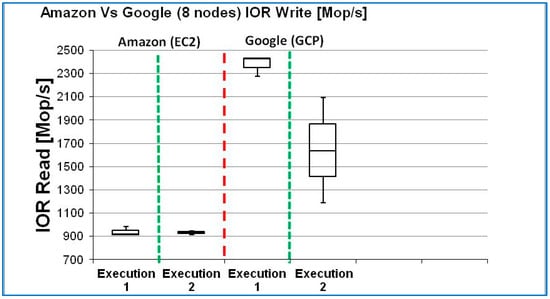

4.4.10. IOR Benchmark Box-Plot Analysis

Figure 7 and Figure 8 show the wide variability in the IOR results on the GCP platform. While the spread within the inter-quartile ranges (IQRs) varies for both the read and write operations, it is also notable that the IQRs for the write operation do not even overlap. AWS EC2 is considerably more predictable than GCP, as shown by the IQRs recorded.

Figure 7.

IOR Read Box-plot Analysis.

Figure 8.

IOR Write Box-plot Analysis.

Given the proprietary nature of these cloud platforms, it is difficult to know the exact cause of performance variability. For the memory and CPU benchmarks, the two clouds did not show a significant difference in performance variability. For the IOR benchmark, AWS showed more predictable results than GCP. One possible factor is I/O resource contention, which occurs when multiple virtual machines (VMs) or workloads simultaneously read from or write to the same limited storage or network I/O resources. Such contention creates performance bottlenecks that manifest as increased latency and reduced throughput. Another possible factor is network infrastructure: AWS has more edge locations worldwide, and its global network is designed to provide low latency and consistent performance across AWS services and EC2 instances, with optimized routes between regions for high transfer speeds.

4.4.11. IOR Benchmark Significance Test

Statistical significance analysis using t-tests for the IOR read and write operations did not produce findings that meet the significance criterion of a p-value less than or equal to 0.05. The only exception was the 8-node cluster, where the p-value approached the 0.05 threshold. The data used in performing the t-tests are shown in Table 9, along with the calculated p-values (test results were grouped by node count).

Table 9.

IOR Benchmark Significance Test.

5. Conclusions

The results gathered from the experiments showed that AWS Elastic Compute Cloud (EC2) is relatively more predictable than Google Cloud Platform (GCP) across multiple executions at random times for the benchmarks used. In general, AWS exhibited less performance variability across the benchmark runs conducted.

For example, the IOR results showed that AWS is more predictable when performing read and write operations. In terms of net throughput, however, GCP outperformed AWS EC2. Hence, GCP is recommended if a large number of I/O operations are needed, so long as the use case can tolerate variance in total execution time. If the use case cannot tolerate variability in I/O operations, then AWS is recommended.

As for scalability, both EC2 and GCP scaled as expected when tested with the STREAM benchmark. However, the NPB results are more favorable to GCP.

In terms of usability, GCP proved slightly easier to use when administering the target nodes for the experiment. AWS also presented no major issues once the nodes were created and the code was compiled, with trouble-free execution and collection of results.

Looking at other points of comparison, GCP has a simple and transparent billing model and offers VMs ranging from basic to performance-driven configurations. AWS has a comprehensive set of VMs, together with thorough documentation and best-practice white papers that support the migration of traditional data-center-based companies to cloud technology. Paired with its excellent predictability, this makes AWS a good option for organizations that require control over the hosting and reliability of their information technology assets.

It is important to point out the limitations of this study. The testing approach entailed randomly choosing days and times for the execution of the test beds. Such tests were not synchronized, nor did the researchers have control over the collateral performance impacts of shared resources. Further research with hard constraints for external variables is necessary to firmly establish the predictability of one platform over the other. In addition, this study can be extended to other types of virtual machines to broaden the use cases to include memory-intensive or CPU-intensive instances. Performance testing with machine learning workloads is another approach to future research.

Author Contributions

S.A.: Conceptualization, methodology, formal analysis, investigation, resources, writing—original draft, writing—review and editing. H.R. and V.L.: Software, data curation, writing—original draft (in part). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sandhiya, V.; Suresh, A. Analysis of Performance, Scalability, Availability and Security in Different Cloud Environments for Cloud Computing. In Proceedings of the 2023 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 23–25 January 2023; pp. 1–7.

- Amazon Web Services, Inc. Available online: https://aws.amazon.com/ec2/ (accessed on 15 March 2024).

- Google Cloud. Available online: https://cloud.google.com/compute/docs/machine-resource (accessed on 15 April 2024).

- Li, Z.; O’Brien, L.; Ranjan, R.; Zhang, M. Early Observations on Performance of Google Compute Engine for Scientific Computing. In Proceedings of the 2013 IEEE 5th International Conference on Cloud Computing Technology and Science, Bristol, UK, 2–5 December 2013.

- University of Virginia. STREAM Benchmark Reference Information. Department of Computer Science, School of Engineering and Applied Science. Available online: http://www.cs.virginia.edu/stream/ref.html (accessed on 15 April 2024).

- Villalpando, L.E.B.; April, A.; Abran, A. CloudMeasure: A Platform for Performance Analysis of Cloud Computing Systems. In Proceedings of the 2016 IEEE 9th International Conference on Cloud Computing (CLOUD), San Francisco, CA, USA, 27 June–2 July 2016; pp. 975–979.

- Shoeb, A.A.M. A Comparative Study on I/O Performance between Compute and Storage Optimized Instances of Amazon EC2. In Proceedings of the IEEE International Conference on Cloud Computing, Anchorage, AK, USA, 27 June–2 July 2014.

- Prasetio, N.; Ferdinand, D.; Wijaya, B.C.; Anggreainy, M.S.; Kumiawan, A. Performance Analysis of Distributed Database System in Cloud Computing Environment. In Proceedings of the 2023 IEEE 9th International Conference on Computing, Engineering and Design (ICCED), Kuala Lumpur, Malaysia, 31 October 2023; pp. 1–6.

- NASA Website. NAS Parallel Benchmarks. NASA Advanced Supercomputing Division, 28 March 2019. Available online: https://www.nas.nasa.gov/publications/npb.html (accessed on 15 April 2024).

- Sourceforge.net. “IOR HPC Benchmark”. Available online: https://sourceforge.net/projects/ior-sio/ (accessed on 14 April 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).