Abstract

Industry 5.0 represents a paradigm shift toward human–AI collaboration in manufacturing, incorporating unprecedented volumes of robots, Internet of Things (IoT) devices, Augmented/Virtual Reality (AR/VR) systems, and smart devices. This extensive interconnectivity introduces significant cybersecurity vulnerabilities. While AI has proven effective for cybersecurity applications, including intrusion detection, malware identification, and phishing prevention, cybersecurity professionals have shown reluctance toward adopting black-box machine learning solutions due to their opacity. This hesitation has accelerated the development of explainable artificial intelligence (XAI) techniques that provide transparency into AI decision-making processes. This systematic review examines XAI-based intrusion detection systems (IDSs) for Industry 5.0 environments. We analyze how explainability impacts cybersecurity through the critical lens of adversarial XAI (Adv-XIDS) approaches. Our comprehensive analysis of 135 studies investigates XAI’s influence on both advanced deep learning and traditional shallow architectures for intrusion detection. We identify key challenges, opportunities, and research directions for implementing trustworthy XAI-based cybersecurity solutions in high-stakes Industry 5.0 applications. This rigorous analysis establishes a foundational framework to guide future research in this rapidly evolving domain.

1. Introduction

The integration of artificial intelligence and machine learning (ML) in smart industries, particularly in Industry 5.0 applications, has created an urgent need for transparent and interpretable AI-based solutions [,,]. As in other sensitive application domains such as business, healthcare, education, and defense systems, the opaque nature of AI models raises significant concerns regarding decision-making transparency in smart industries. Beyond user acceptance and technology adoption issues, system developers must ensure that AI solutions are fair and unbiased. This need to understand the causal reasoning behind deep ML model inferences has directed research attention toward explainable AI (XAI) []. The DARPA-funded XAI initiative aimed to develop interpretable machine learning models for reliable, human-trusted decision-making systems, which are crucial to integrating IoT and intelligent systems in Industry 5.0 [,,,].

Cybersecurity represents a critical challenge in smart industries with extensive interconnected devices. While AI-based solutions have proven effective for cybersecurity applications, the opacity of complex AI models in cybersecurity solutions, including intrusion detection systems (IDSs), intrusion prevention systems (IPSs), malware detection, zero-day vulnerability discovery, and digital forensics, exacerbates transparency and trust issues [,]. XAI can address these concerns by demonstrating the trustworthiness and transparency of AI algorithms in critical cybersecurity applications. Security analysts need to understand the internal decision mechanisms of deployed intelligent models and reason precisely about input–output relationships to stay ahead of attackers. XAI-derived insights could enhance cybersecurity solutions through human–AI collaboration, improving development, training, deployment, and debugging processes. However, applying XAI in cybersecurity is a double-edged sword: while it improves security practices, it simultaneously makes explainable models vulnerable to adversarial attacks [,,].

Industry 5.0’s increased reliance on IoT devices makes systems more vulnerable to cyber threats, potentially causing serious damage and financial losses. While a compromised smart home device creates privacy and security risks, failures in critical infrastructure such as smart grids, nuclear power plants, or water treatment facilities raise the stakes significantly []. To address rising cybersecurity challenges in evolving smart cities and industries, various advanced security measures have been implemented, including Security Information and Event Management (SIEM) systems, vulnerability assessment solutions, IDSs, and user behavior analytics [,,]. This study evaluates advancements in explainable IDSs as a security measure and highlights the remaining challenges.

The adoption of ML and deep learning (DL) algorithms in IDSs has produced intelligent IDSs with significantly improved detection rates. These ML/DL-based IDSs are adopted because they demonstrate superior robustness, accuracy, and extensibility compared with traditional detection techniques such as rule-based, signature-based, and anomaly-based detection [,]. These algorithms build on mathematical and statistical concepts to perform pattern discovery and correlation analysis and to represent disparities in structured data through probabilities and confidence intervals [,]. The primary types of ML include supervised, semi-supervised, unsupervised, reinforcement, and active learning techniques, each serving specific security application purposes []. Despite the effectiveness of intelligent AI-based modules, the transparency of opaque (black-box) models and the justification of their predictions remain uncertain. This lack of insight into the decision making of opaque AI models raises trust issues for Industry 5.0 adoption [].

Several critical research questions require investigation: What cybersecurity challenges emerge from the integration of AI and IoT devices in industrial applications? How trustworthy and transparent are AI solutions in decision making? How can trustworthy, transparent AI-based security and privacy solutions be developed for Industry 5.0? How vulnerable are AI-based solutions to adversarial attacks? How can explainability mechanisms be defined in IDSs to effectively interpret temporal and contextual dependencies for specific cyber threats?

1.1. Industry 5.0: Characteristics and AI Integration Context

Industry 5.0 represents the next industrial paradigm evolution, emphasizing human-centric approaches that integrate artificial intelligence, robotics, and Internet of Things (IoT) technologies to create collaborative human–machine environments []. Unlike Industry 4.0’s automation and digitization focus, Industry 5.0 prioritizes sustainability, resilience, and human centricity while leveraging advanced AI capabilities for enhanced decision making and operational efficiency.

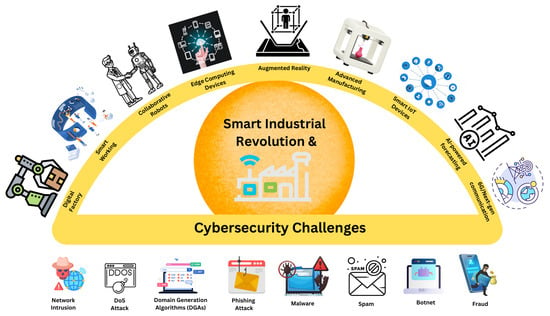

This paradigm shift manifests through several interconnected technological pillars (Figure 1): digital factories with cyber–physical systems, AR/VR interfaces for human–machine collaboration, edge computing with 5G/6G networks enabling real-time processing, and collaborative robotics working alongside human operators. These technologies enable mass customization, adaptive manufacturing, and AI-driven optimization []. However, this convergence expands the attack surface, introducing critical cybersecurity vulnerabilities across multiple vectors.

Figure 1.

Industry 5.0 technological landscape and cybersecurity threat vectors. The upper arc illustrates enabling technologies (digital factories, edge computing, AR/VR, collaborative robotics, 5G/6G networks, smart IoT, etc.) defining Industry 5.0’s human-centric paradigm. The lower section depicts major cybersecurity threats (network intrusion, DDoS, phishing, malware, botnets, ransomware, etc.) exploiting the expanded attack surface from extensive interconnectivity.

The cybersecurity threat landscape in Industry 5.0 encompasses both traditional and emerging attack paradigms (Figure 1). Network intrusion attempts target the interconnected IoT infrastructure, while Distributed Denial-of-Service (DDoS) attacks threaten operational continuity. Phishing and social engineering exploit the human element in collaborative environments, whereas malware, spam, and botnet infiltrations compromise system integrity. Advanced persistent threats leverage supply chain vulnerabilities inherent in multi-stakeholder industrial ecosystems. These threats are amplified by Industry 5.0’s characteristics: extensive device interconnectivity creates cascading failure risks, reduced human oversight in automated processes limits real-time threat detection, and the integration of AR/VR systems introduces novel attack vectors through immersive interfaces.

This threat landscape creates unprecedented requirements for AI transparency and explainability in cybersecurity solutions. Human operators must understand, trust, and effectively collaborate with intelligent security systems in critical industrial processes, necessitating explainable AI approaches that balance transparency with adversarial robustness. The extensive AI and machine learning integration in Industry 5.0 environments introduces a fundamental tension: while explainability mechanisms enable human–AI collaboration and trust building essential to security operations, they simultaneously provide adversaries with insights into model decision making that can be exploited for sophisticated attacks. Understanding this dual nature of explainability—as both security enabler and potential vulnerability—is critical to developing trustworthy cybersecurity solutions in Industry 5.0 contexts, motivating the systematic analysis presented in this survey.

1.2. Scope of the Survey

This survey focuses on network-based intrusion detection problems within Industry 5.0 and how XAI can provide a deeper understanding of machine learning-based intrusion detection systems to mitigate associated cybersecurity risks. The paper provides a taxonomy of XAI methods, discussing each approach’s advantages and disadvantages. It also examines XAI pitfalls in cybersecurity and how attackers could use the explanations produced by XAI methods to exploit vulnerabilities in AI-based IDSs.

1.3. Related Surveys

The evolution of industrial paradigms has introduced transformative goals that emphasize the creation of a resource-efficient and intelligent society. This trajectory seeks to elevate living standards and mitigate economic disparities through the integration of hyperconnected, automated, data-driven industrial ecosystems [,,]. This digital transformation promises significant productivity and efficiency gains across entire production processes. These milestones become possible through the integration of AI and generative AI as collaborative landscapes, fostering innovation, optimizing resource utilization, and driving economic growth in smart industries []. They are accompanied by large-scale developments in smart critical infrastructure, such as smart grids and IoT-controlled dams. However, such advancements expose systems to an elevated risk of sophisticated cyber attacks [,,].

Connected devices and networks in autonomous industrial infrastructure are more prone to hijacking, malfunctioning, and resource misuse because of expansive attack surfaces from pervasive connectivity, reduced human oversight in automated processes, and high-value data assets targeted by adversaries. These vulnerabilities necessitate additional security layers for risk protection []. Conventional AI-based cybersecurity systems are still under active development and have not yet reached full maturity, and establishing robust, trustworthy security systems has emerged as a prominent objective for defenders [,].

As shown in Table 1, while existing research has explored either XAI taxonomies [,,] or cybersecurity applications [,,,], gaps remain in understanding how explainability affects adversarial contexts in intrusion detection systems. The adoption of explainability mechanisms in cybersecurity, specifically in intrusion detection and prevention systems, is reviewed in surveys by Chandre et al. [] and Moustafa et al. []. Recent research has focused on autonomous transportation, smart cities, and energy management systems [,,,]. Table 1 demonstrates that existing surveys address these topics in isolation: studies examining Industry 5.0 [,,] provide general technological overviews without analyzing adversarial robustness of explainability mechanisms, while XAI-focused surveys [,] lack application to cybersecurity contexts. Multi-domain surveys [] cover cybersecurity broadly but without depth in adversarial XAI exploitation or Industry 5.0-specific human-centric security requirements. Additionally, XAI has itself been used offensively: attackers who gain insight into a model through its explanations can exploit that knowledge against the ML system. Our work uniquely addresses the intersection of XAI, cybersecurity, and adversarial approaches, examining how explainability affects cybersecurity practices and emphasizing the emerging adversarial explainable IDS (Adv-XIDS) trend, which poses significant challenges for explainable AI-based cybersecurity decision models.

Table 1.

Comparison of related surveys on explainable AI and cybersecurity.

1.4. Contributions

Based on serious threat vectors and their implications, this paper analyzes different instances of XAI method adoption in IDSs and examines interpretability impacts on cybersecurity practices in Industry 5.0 applications. We provide a comprehensive literature overview on XAI-based cybersecurity solutions for Industry 5.0 applications, focusing on existing solutions, associated challenges, and future research directions for challenge mitigation. For self-containment, we provide an XAI taxonomy overview.

This systematic review distinguishes itself through three key aspects. First, we analyze cybersecurity threats within Industry 5.0’s human-centric paradigm, examining vulnerabilities in human–robot collaboration, AR/VR manufacturing, and federated edge architectures. These contexts require explainability for real-time human intervention and cross-organizational threat sharing. Second, we document explainability’s dual nature: as an IDS enhancement enabling transparency (Section 5, Table 2) and as an adversarial attack vector exploitable through SHAP, LIME, and gradient-based methods (Section 6, Table 3). Third, our PRISMA-guided analysis of 135 studies quantifies XAI technique distributions, dataset prevalence, and vulnerability–protection mappings (Table 4).

Table 2.

Explainable AI-based intrusion detection systems.

Table 3.

Adversarial techniques targeting intrusion detection systems in Industry 5.0: Compilation of methods exploiting IDS vulnerabilities, with and without leveraging explainable AI mechanisms, in the context of cybersecurity.

The main contributions of this paper are summarized as follows:

- We provide a clear and comprehensive taxonomy of XAI systems with categorization of ante hoc and post hoc methods, analyzing their applicability and limitations in cybersecurity contexts.

- We provide a detailed overview of current state-of-the-art IDSs, their limitations, and the deployment of XAI approaches in IDSs, systematically analyzing 135 empirical studies to identify implementation patterns, commonly used datasets, and explainability technique preferences.

- We systematically discuss the exploitation of XAI methods for launching more advanced adversarial attacks on IDSs, mapping specific XAI techniques to documented attack vectors and vulnerability levels.

- We analyze Industry 5.0-specific cybersecurity challenges and identify research directions for adversarially robust, human-centered explainable security systems, including federated learning architectures and SOC workflow integration.

The remainder of this paper is organized as follows: Section 2 presents the survey methodology, including the research questions that guide it. Section 3 provides an explainable AI taxonomy overview. Section 4 describes key Industry 5.0 cybersecurity challenges. Section 5 presents explainable AI in cybersecurity, specifically focusing on XAI-based IDSs. Section 6 presents adversarial XAI and IDS techniques. Section 7 discusses XAI-based IDS lessons learned, challenges, and future research directions. Finally, Section 8 concludes this survey.

2. Methodology

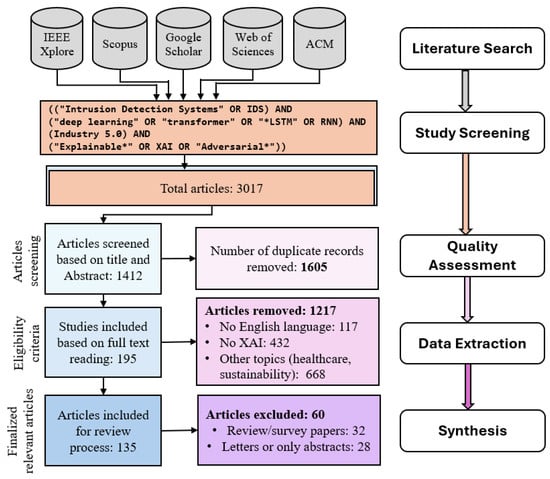

This systematic review implements a structured methodology to investigate explainable artificial intelligence (XAI)-based intrusion detection systems (IDSs) and adversarial approaches exploiting their explainability (Adv-XIDS) within Industry 5.0 environments. Conducting this review according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 guidelines [], we employ a reproducible process for literature identification, screening, and analysis. The complete PRISMA 2020 checklist is provided in Supplementary Material, and the flow diagram illustrating study selection is presented in Figure 2. While the review protocol was developed prior to data collection (July 2024), it was not prospectively registered in PROSPERO—an acknowledged limitation. The methodological design minimizes selection bias and enhances finding reliability through systematic exploration of key research questions, rigorous search protocols, and precise selection criteria, establishing a foundation for understanding complex interactions among explainability mechanisms, security frameworks, and adversarial challenges in human-centric industrial systems.

Figure 2.

PRISMA flow diagram and systematic review methodology. Study selection process (left) and five-stage methodological framework (right) illustrating our systematic review approach from literature search to synthesis.

2.1. Research Questions

We formulated five research questions to explore critical dimensions of cybersecurity, explainability, and adversarial robustness in Industry 5.0:

- What are the key cybersecurity challenges in Industry 5.0, and why are explainable AI-based intrusion detection systems (X-IDSs) essential to addressing these threats?

- What techniques and methods enhance transparency and interpretability in X-IDS implementations?

- What are the primary challenges and limitations of X-IDS in cybersecurity applications?

- What are the security implications of adversaries exploiting X-IDS decision mechanisms, and how can these systems be protected against such attacks?

- What are the emerging trends and future research directions for X-IDSs in Industry 5.0 contexts?

These questions serve as the analytical framework for subsequent methodological stages, ensuring focused examination aligned with the study’s objectives while maintaining comprehensive coverage of explainable intrusion detection systems and their adversarial implications.

2.2. Search Strategy

We conducted a systematic literature search across five authoritative databases: IEEE Xplore, Scopus, Web of Science, ACM Digital Library, and Google Scholar. We selected these repositories for their comprehensive coverage of research in cybersecurity, artificial intelligence, and industrial systems, providing a robust foundation for identifying relevant studies on XAI-based IDSs and Adv-XIDSs in Industry 5.0.

The search strategy employed a structured terminology framework integrating intrusion detection terms (“Intrusion Detection System,” “IDS,” “Network Intrusion,” “Anomaly Detection,” “Threat Detection”, etc.), explainability terms (“Explainable AI,” “XAI,” “Interpretable Machine Learning,” “SHAP,” “LIME,” “Explainability,” “Transparency”, etc.), and industrial/adversarial context terms (“Industry 5.0,” “Industry 4.0,” “Industrial IoT,” “IIoT,” “Smart Manufacturing,” “Adversarial Attack,” “Adversarial XAI,” “Adversarial Machine Learning”, etc.). These terminology groups were combined using Boolean operators (AND, OR) with syntax adapted to each database’s specific requirements. Representative search strings included combinations such as (“Intrusion Detection System” OR “IDS” OR “Network Intrusion”) AND (“Explainable AI” OR “XAI” OR “Interpretable Machine Learning”) AND (“Industry 5.0” OR “Industrial IoT” OR “Adversarial Attack”). Database-specific filters were applied, including document type restrictions (journal articles and conference papers), language requirements (English only), and temporal boundaries (January 2015 to October 2024). Searches were executed during August–September 2024, capturing both foundational works and recent advancements in these rapidly evolving domains. The search protocol was developed through iterative refinement to optimize precision and recall, ensuring comprehensive coverage while maintaining relevance.
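The way the term groups combine can be sketched in a few lines of Python. This is only an illustration of the Boolean structure of the representative search string quoted above; the function names `or_group` and `build_query` are hypothetical, and the database-specific syntax adaptations mentioned in the text are not modeled.

```python
# Term groups copied from the representative search string in the text.
INTRUSION_TERMS = ["Intrusion Detection System", "IDS", "Network Intrusion"]
XAI_TERMS = ["Explainable AI", "XAI", "Interpretable Machine Learning"]
CONTEXT_TERMS = ["Industry 5.0", "Industrial IoT", "Adversarial Attack"]


def or_group(terms):
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """Join the OR-groups with AND, so a hit must match every group."""
    return " AND ".join(or_group(group) for group in groups)


query = build_query(INTRUSION_TERMS, XAI_TERMS, CONTEXT_TERMS)
print(query)
```

Keeping each terminology group as a separate list makes it straightforward to swap in the longer term lists given above or to re-emit the query in another database's syntax.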

2.3. Study Selection

We followed the PRISMA guidelines for the selection process, as illustrated in Figure 2. The initial search yielded 3017 articles, which we reduced to 1412 unique records after eliminating 1605 duplicates through automated detection and manual verification. Independent reviewers screened titles and abstracts for relevance to XAI-based IDSs and Adv-XIDSs in Industry 5.0 contexts, with conflicts being resolved through structured consensus discussion. Figure 2 details this systematic screening process, showing the progressive elimination of studies at each stage.

Title and abstract screening excluded 1217 studies based on predetermined criteria: non-English publications (117 studies, 9.6%), studies lacking substantive focus on XAI methods or IDS applications (432 studies, 35.5%), and studies addressing tangentially related topics, including healthcare applications without industrial cybersecurity context, general sustainability discussions, or other computing domains with insufficient relevance to our research questions (668 studies, 54.9%).

Full-text assessment of the 195 remaining articles employed independent evaluation by multiple reviewers with consensus-based conflict resolution, resulting in the exclusion of 60 studies: review or survey papers without primary empirical contributions (32 studies, 53.3%) and publications with insufficient methodological detail such as conference abstracts or position papers lacking adequate description of methods, datasets, or validation procedures (28 studies, 46.7%).
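The selection counts reported above can be cross-checked with simple arithmetic. The following sketch uses only the figures stated in the text; the dictionary keys and the helper `shares` are illustrative names, not part of the review protocol.

```python
# Counts reported in the study selection text.
identified = 3017
duplicates = 1605
screening_exclusions = {
    "non_english": 117,
    "no_xai_or_ids_focus": 432,
    "tangential_topic": 668,
}
full_text_exclusions = {
    "review_or_survey": 32,
    "insufficient_method_detail": 28,
}

# Each stage subtracts its exclusions from the previous stage's total.
unique_records = identified - duplicates
screened_out = sum(screening_exclusions.values())
full_text_assessed = unique_records - screened_out
included = full_text_assessed - sum(full_text_exclusions.values())


def shares(counts):
    """Percentage of each exclusion reason within its stage, one decimal."""
    total = sum(counts.values())
    return {reason: round(100 * n / total, 1) for reason, n in counts.items()}


print(unique_records, screened_out, full_text_assessed, included)
print(shares(screening_exclusions))
print(shares(full_text_exclusions))
```

The stage totals and percentages produced this way agree with the values reported in the text (1412 unique records, 1217 screening exclusions, 195 full-text articles, 135 included studies).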

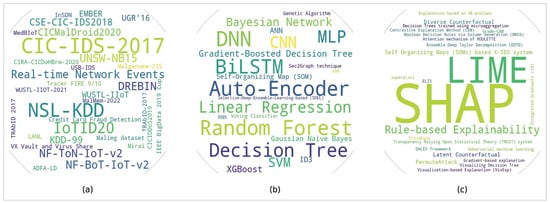

The Industry 5.0-focused cybersecurity literature remains nascent, with limited industrial datasets. Therefore, we classify the 135 included studies into three categories: (1) research explicitly addressing ICS, IIoT, or SCADA environments using domain-specific datasets like WUSTL-IIOT-2021 or Edge-IIoTset (n = 23, 17.0%); (2) research using established benchmarks (NSL-KDD, CICIDS-2017, UNSW-NB15, etc.) while investigating Industry 5.0-applicable challenges such as real-time detection, human-interpretable explainability, federated learning, and adversarial robustness (n = 78, 57.8%); and (3) foundational XAI-IDS research establishing broadly applicable techniques (n = 34, 25.2%). This classification enables systematic assessment of current research applicability to Industry 5.0 deployment requirements while identifying critical gaps between existing evaluation contexts and operational demands of human-centric industrial systems.

2.4. Inclusion and Exclusion Criteria

We included studies that satisfied the following criteria:

- Investigated XAI-based IDSs or Adv-XIDSs within the cybersecurity context of Industry 5.0.

- Employed deep learning architectures (e.g., transformers and LSTMs) or shallow computational models for intrusion detection.

- Comprised peer-reviewed articles or high-quality gray literature containing empirical findings or theoretical frameworks with substantive insights.

We defined exclusion criteria as follows:

- Non-English studies that would introduce language-based analytical barriers.

- Articles with peripheral relevance to XAI-based IDSs or cybersecurity paradigms.

- Publications with insufficient methodological detail or inadequate empirical support (e.g., conference abstracts and letters to editors).

Quality assessment was integrated throughout the selection process, with studies being evaluated for clarity of research objectives, appropriateness and rigor of methodology, suitability of datasets and validation procedures, sufficiency of technical documentation, and significance of contributions to XAI-based IDS or adversarial XAI domains. Only studies demonstrating methodological rigor and substantive contributions across these dimensions were retained for analysis. These criteria ensured that the review maintained analytical focus on high-quality, relevant research contributions.

2.5. Data Extraction and Synthesis

We extracted data from the 135 included studies using a standardized protocol template, documenting research objectives, methodological approaches, key findings, and specific relevance to the established research questions. This process involved collaborative validation among multiple reviewers to ensure extraction accuracy and interpretive consistency through structured discussion and consensus building. We conducted narrative synthesis with thematic organization addressing the research questions, culminating in an analytical framework for understanding XAI-based IDS implementations in Industry 5.0 environments. This approach enhances the reliability of the conclusions while establishing a clear trajectory for future research and practical applications in this critical domain of cybersecurity.

3. Explainable AI (XAI) Taxonomies

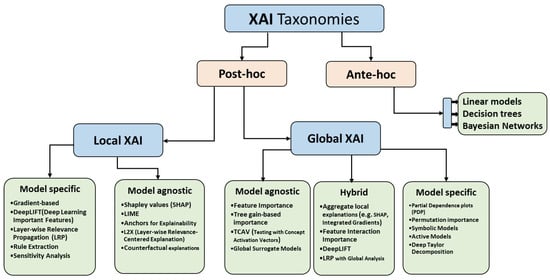

Similar to other application domains, XAI tools could be very effective in cybersecurity by incorporating human insights in decision making. These tools allow cybersecurity experts and analysts to understand why a threat is flagged, leading to better threat detection, root cause analysis, and improved trust in AI decisions. They also facilitate more efficient utilization of human resources when dealing with false positives, which are prevalent in cybersecurity and often consume significant manual analysis time. To support cybersecurity analysts, various XAI techniques have been developed, broadly categorized into “ante hoc” and “post hoc” explainability methods []. This section explores the taxonomy of XAI in the security domain, as illustrated in Figure 3, with a specific focus on XAI-based intrusion detection systems (X-IDSs), including a comparative analysis of advantages and disadvantages inherent in prominent approaches.

Figure 3.

Comprehensive taxonomy of XAI methods categorized by interpretability approach (ante hoc vs. post hoc) and explanation scope (local vs. global), with specific techniques listed for each category.

3.1. Ante Hoc Explainability

Ante hoc explainability refers to models designed to be inherently interpretable, providing transparency into their decision-making processes at algorithmic, parametric, and functional levels [,]. Such models—e.g., linear regression, logistic regression, Decision Trees, Random Forest, naive Bayes, Bayesian networks, and rule-based learning—are often applied in intrusion detection systems (IDSs) for Industry 5.0 cybersecurity, where understanding attack patterns is critical. However, their simplicity frequently limits their ability to handle the complex, high-dimensional, and non-linear data typical of modern cyber threats.

For example, linear regression uses coefficients to quantify how features (e.g., network traffic metrics) influence predictions, offering a clear view of linear relationships []. Yet, it fails to model the non-linear attack signatures common in IDS datasets. Similarly, logistic regression provides interpretable probabilities through coefficient signs and magnitudes [], but its linear assumptions restrict its effectiveness against sophisticated threats. Decision Trees generate human-readable rules (e.g., “if packet size > 100, then flag intrusion”) by partitioning data, though their transparency diminishes with increased depth, and they are prone to overfitting noisy IDS inputs []. Random Forest, an ensemble of Decision Trees, reveals global feature importance (e.g., IP address frequency), but its aggregated decisions obscure local interpretability. Bayesian networks depict probabilistic dependencies (e.g., between malware presence and port activity) via directed acyclic graphs, yet their computational complexity scales poorly with the large feature sets of IDSs []. Lastly, rule-based learning employs explicit “if-then-else” conditions (e.g., “if port = 80 and traffic > 1 MB, then alert”), ensuring straightforward interpretation, but its fixed rules struggle to adapt to evolving attack patterns [].
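To make the notion of reading an ante hoc model concrete, the sketch below shows how logistic regression coefficients translate into odds ratios and how an explicit rule is evaluated. The coefficient values, feature names, and intercept are hypothetical and chosen only for illustration; they do not come from any of the reviewed studies.

```python
import math

# Hypothetical coefficients for standardized flow features (illustrative values only).
COEFFICIENTS = {"packet_size": 1.2, "failed_logins": 0.8, "session_duration": -0.5}
INTERCEPT = -2.0


def intrusion_probability(flow):
    """Logistic regression: sigmoid of the weighted feature sum."""
    z = INTERCEPT + sum(w * flow[name] for name, w in COEFFICIENTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def odds_ratios():
    """exp(coefficient) is the factor by which the odds of 'intrusion'
    change when the feature rises by one unit, all else held fixed."""
    return {name: math.exp(w) for name, w in COEFFICIENTS.items()}


def rule_alert(port, traffic_mb):
    """The rule quoted in the text: port 80 and more than 1 MB of traffic."""
    return port == 80 and traffic_mb > 1.0


flow = {"packet_size": 2.0, "failed_logins": 1.5, "session_duration": 0.2}
print(round(intrusion_probability(flow), 3))
print({name: round(ratio, 2) for name, ratio in odds_ratios().items()})
print(rule_alert(port=80, traffic_mb=2.5))
```

A positive coefficient yields an odds ratio above 1 (the feature pushes toward "intrusion"), while a negative one yields a ratio below 1. The rule, by contrast, is fully transparent but cannot adapt unless someone rewrites it.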

The aforementioned inherently transparent models have achieved competitive performance in many regression and classification problems. However, these interpretability and explainability methods are limited to model families of lower complexity. Key constraints such as model size, sparsity, and monotonicity requirements create an inevitable trade-off between performance and transparency. Consequently, more complex model architectures—including ensembles, Artificial Neural Networks (ANNs), Deep Neural Networks (DNNs), Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, transformers, and support vector machines (SVMs)—which often lack transparency in their decision-making processes, require post hoc methods for interpretation. A more recent category called hybrid approaches combines multiple explainable AI techniques to achieve appropriate interpretability while maintaining high predictive performance [].

3.2. Post Hoc Explainability

Complex black-box models typically offer superior predictive capabilities at the expense of limited explainability regarding their decision-making processes. In intelligent systems, both accuracy and interpretability are essential characteristics. To meet this transparency requirement, surrogate explainers must be developed and applied to interpret the rationale behind the decisions of intricate models [].

Post hoc explainability methods have emerged as a prominent research avenue to address the opacity challenge of complex black-box model families. These methods elucidate the decision-making process of trained models by addressing two primary types of explanations: local explanations and global explanations. Local explanations focus on how a model predicts outcomes for specific instances, providing insights into the influence of particular inputs on classification decisions []. Conversely, global explanations assess the impact of all input features on the model’s overall output, enabling comprehension of feature importance hierarchies and decision boundaries across entire datasets.

Global and Local Explanations

In cybersecurity contexts, global explanations provide strategic insights by revealing which network attributes, behavioral patterns, or file characteristics consistently contribute to threat classification across diverse attack scenarios. These explanations support defensive strategy development and resource allocation by identifying systemic vulnerabilities and attack vectors. However, they may obscure nuanced attack behaviors crucial to precise incident analysis [].

Local explanations focus on interpreting individual predictions, enabling security analysts to understand why specific network flows, files, or user behaviors were classified as malicious or benign. This granular insight is essential to incident response, forensic investigation, and distinguishing between legitimate anomalies and actual threats. While valuable for tactical operations, local explanations may not capture broader attack campaign patterns requiring strategic intervention [].

Model-Agnostic Approaches: Both local and global explanations are implemented through model-agnostic techniques—applicable to any AI-based model regardless of internal structure—and model-specific techniques tailored for particular architectures []. Model-agnostic post hoc explainability techniques focus directly on model predictions rather than internal representations, enabling deployment with any learning model irrespective of internal logic []. Most model-agnostic techniques quantify feature influence through simulated feature removal, termed removal-based explanations [].
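A removal-based explanation can be sketched without any knowledge of the model's internals. In the minimal example below, "removing" a feature is simulated by replacing it with its mean over a small background set, which is one common convention among several. The function `black_box`, the feature names, and all numeric values are hypothetical stand-ins for a trained detector and its data.

```python
FEATURES = ["bytes_sent", "failed_logins", "distinct_ports"]


def black_box(x):
    """Stand-in for an opaque detector that returns an anomaly score."""
    scan_like = 1.0 if x["distinct_ports"] > 10 else 0.0
    return (0.001 * x["bytes_sent"]
            + 0.5 * x["failed_logins"] * scan_like
            + 0.02 * x["distinct_ports"])


# Small background sample of benign-looking flows (hypothetical values).
BACKGROUND = [
    {"bytes_sent": 500, "failed_logins": 0, "distinct_ports": 3},
    {"bytes_sent": 800, "failed_logins": 1, "distinct_ports": 5},
    {"bytes_sent": 650, "failed_logins": 0, "distinct_ports": 4},
]


def removal_attributions(instance):
    """Score drop when each feature is replaced by its background mean."""
    means = {f: sum(row[f] for row in BACKGROUND) / len(BACKGROUND) for f in FEATURES}
    full_score = black_box(instance)
    attributions = {}
    for f in FEATURES:
        ablated = dict(instance)
        ablated[f] = means[f]  # simulate removing feature f
        attributions[f] = full_score - black_box(ablated)
    return attributions


instance = {"bytes_sent": 700, "failed_logins": 6, "distinct_ports": 40}
attributions = removal_attributions(instance)
print(attributions)
```

Because only `black_box(...)` is called, the same procedure applies to any model. Note that single-feature removal attributes an interaction effect fully to each interacting feature, which is one motivation for the Shapley-based weighting discussed next in the text.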

LIME (Local Interpretable Model-agnostic Explanation) primarily provides local explanations by approximating complex decision boundaries in the local neighborhood of specific instances using interpretable surrogate models []. In cybersecurity applications, LIME helps analysts understand why particular network connections were classified as intrusions, supporting incident-level decision validation. LIME offers domain-specific implementations for text, image, tabular, and temporal data analysis [].
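The local-surrogate idea behind LIME can be sketched from scratch with NumPy. The code below is a simplified illustration of the principle (perturb, query, weight by proximity, fit a linear model), not the API or exact algorithm of the `lime` package; the toy `black_box`, the kernel width, and the sampling scale are all assumptions made for the example.

```python
import numpy as np


def black_box(X):
    """Stand-in for an opaque IDS: probability that each flow is malicious."""
    z = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.5 * X[:, 0] * X[:, 1]
    return 1.0 / (1.0 + np.exp(-z))


def local_surrogate(instance, n_samples=2000, scale=0.3, kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance to sample its neighborhood.
    samples = instance + rng.normal(0.0, scale, size=(n_samples, instance.size))
    # 2. Query the black box on the perturbed samples.
    targets = black_box(samples)
    # 3. Weight samples by proximity to the instance (exponential kernel).
    distances = np.linalg.norm(samples - instance, axis=1)
    weights = np.exp(-(distances ** 2) / kernel_width ** 2)
    # 4. Weighted least squares: scale rows by sqrt(weight), then solve.
    design = np.hstack([np.ones((n_samples, 1)), samples - instance])
    root_w = np.sqrt(weights)[:, None]
    coef, *_ = np.linalg.lstsq(design * root_w, targets * root_w[:, 0], rcond=None)
    return coef[0], coef[1:]  # local intercept, local slopes per feature


instance = np.array([0.2, 0.1])
intercept, slopes = local_surrogate(instance)
print("local intercept:", intercept)
print("local slopes:", slopes)
```

The sign and magnitude of each slope indicate how the corresponding feature moves the prediction in the vicinity of this one flow; the explanation makes no claim about the model's behavior elsewhere.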

SHAP (SHapley Additive exPlanations) uniquely provides both local and global explanations by employing game theory principles to assign importance values representing each feature’s contribution to specific predictions []. Individual SHAP values offer local explanations for specific predictions, while aggregating SHAP values across instances enables global interpretability of feature importance patterns. The SHAP framework includes specialized variants: Kernel SHAP, Tree SHAP, Deep SHAP, and Linear SHAP [].
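
The relationship between local and global SHAP-style explanations can be sketched with exact Shapley values on a toy model. This is an illustrative computation by full subset enumeration, not the SHAP library, whose variants use approximations or model-specific shortcuts. Replacing absent features with a fixed `baseline` value is an assumption of this sketch, as are the function names and the toy model.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude feature i
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    """Hypothetical score with one additive term and one interaction term."""
    return 2.0 * z[0] + z[1] * z[2]


baseline = [0.0, 0.0, 0.0]
flows = [[1.0, 1.0, 1.0], [0.0, 2.0, 1.0]]

# Local explanations: one attribution vector per instance.
local = [shapley_values(toy_model, f, baseline) for f in flows]

# Global view: mean absolute attribution per feature across instances.
global_importance = [sum(abs(p[i]) for p in local) / len(local) for i in range(3)]
```

The attributions for each instance sum to the difference between the model output for that instance and for the baseline, which is the additivity property that gives the method its name. Enumeration is exponential in the number of features, so it is only practical for small examples like this one.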

Other prominent approaches include visualization techniques that primarily provide global explanations: Accumulated Local Effect (ALE) plots and partial dependence plots (PDPs) illustrate relationships between features and model predictions []. Individual Conditional Expectation (ICE) plots bridge local and global perspectives by displaying predictions for individual instances while enabling pattern recognition across multiple instances [].
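
The difference between ICE curves and a PDP can be shown with a short sketch. The function names and the toy interaction model are assumptions for illustration. Each ICE curve varies one feature for a single instance, and the PDP is the pointwise average of those curves.

```python
def ice_curves(model, rows, feature, grid):
    """One curve per instance: model output as `feature` sweeps over `grid`."""
    curves = []
    for row in rows:
        curve = []
        for g in grid:
            z = list(row)
            z[feature] = g
            curve.append(model(z))
        curves.append(curve)
    return curves


def partial_dependence(model, rows, feature, grid):
    """PDP: the average of the ICE curves at each grid point."""
    curves = ice_curves(model, rows, feature, grid)
    return [sum(c[k] for c in curves) / len(curves) for k in range(len(grid))]


def toy_model(z):
    """Hypothetical model whose two features interact."""
    return z[0] * z[1]


rows = [[0.0, 1.0], [0.0, -1.0]]
grid = [0.0, 1.0, 2.0]
ice = ice_curves(toy_model, rows, 0, grid)
pdp = partial_dependence(toy_model, rows, 0, grid)
```

Here the two ICE curves slope in opposite directions while the PDP is flat, a case where the averaged global view hides instance-level behavior that the ICE curves expose.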

Model-Specific Approaches: Model-specific post hoc explainability addresses models with design transparency but complex internal decision structures requiring specialized interpretation approaches. These techniques leverage architectural characteristics to provide targeted explanations based on the model’s inherent structure [].

Ensemble methods typically provide global explanations through feature importance analysis and model simplification techniques []. Simplification approaches include weighted averaging, Model Distillation [,], G-REX (Genetic-Rule Extraction) [], and feature importance analysis through permutation importance or information gain [].
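
Permutation importance, mentioned above, can be sketched in a few lines. The detector, the data, and the function names are hypothetical. A feature's importance is taken as the average drop in accuracy when its column is shuffled, which breaks its relationship with the labels.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Mean accuracy drop per feature after shuffling that feature's column."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    importances = []
    for f in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[f] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:f] + [v] + r[f + 1:] for r, v in zip(rows, column)]
            drops.append(base - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_detector(row):
    """Hypothetical detector that only looks at feature 0."""
    return 1 if row[0] > 0.5 else 0


rows = [[i % 2, (i * 7) % 5] for i in range(20)]
labels = [r[0] for r in rows]
importances = permutation_importance(toy_detector, rows, labels)
```

Because the toy detector ignores the second feature, shuffling that column never changes accuracy and its importance is exactly zero, while the first feature receives a positive score.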

Support vector machines (SVMs) demonstrate versatility by supporting multiple explanation types. Model simplification techniques provide global explanations through decision boundary interpretation, while counterfactual explanations offer local explanations by identifying minimal changes required for decision alteration. Example-based explanations bridge local and global perspectives by utilizing representative dataset instances to illustrate SVM decision processes [].
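
For the counterfactual case, a minimal sketch is possible under the assumption of a linear SVM, where the smallest Euclidean change that alters the decision is a step along the weight vector to just past the hyperplane. The weights, bias, and instance below are hypothetical. Kernel SVMs have no such closed form and would need an iterative search.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def classify(w, b, x):
    """Linear SVM decision: +1 on one side of the hyperplane, -1 on the other."""
    return 1 if dot(w, x) + b > 0 else -1


def counterfactual(w, b, x, overshoot=1e-3):
    """Smallest L2 change to x that crosses the hyperplane w.x + b = 0."""
    score = dot(w, x) + b
    step = (score / dot(w, w)) * (1.0 + overshoot)  # project, then nudge past
    return [xi - step * wi for xi, wi in zip(x, w)]


w, b = [2.0, -1.0], -1.0       # hypothetical trained weights and bias
x = [2.0, 1.0]                 # instance currently classified as +1
x_cf = counterfactual(w, b, x)
changes = [c - o for c, o in zip(x_cf, x)]  # per-feature change to report
```

The per-feature differences in `changes` are what an analyst would read as the explanation: how far each feature would have to move for the decision to change.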

Deep learning models including Multi-Layer Perceptrons (MLPs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs) require primarily post hoc explanation techniques due to their complex black-box characteristics. These approaches include model simplification, feature importance estimation, Saliency Map visualizations, and integrated local–global explanation frameworks []. Recent research has explored hybrid approaches incorporating expert-authored rules with algorithm-generated knowledge to achieve robust explainability [].

The diversity of post hoc explainability methods enables cybersecurity practitioners to select appropriate explanation granularity based on operational requirements. Local explanations support tactical decision making for individual incidents, while global explanations inform strategic security posture development. This flexibility becomes particularly crucial in Industry 5.0 environments, where both rapid incident response and comprehensive threat landscape understanding are essential to effective cybersecurity.

Having established this XAI taxonomy framework, we now proceed to examine the growing demand for explainability in AI-based cybersecurity applications, with particular emphasis on interpretable intrusion detection systems. This represents a critical research domain that has evolved toward sophisticated IDS implementations. As this paradigm becomes integrated into Industry 5.0 infrastructure, the transparency of IDSs has become increasingly essential.

4. Industry 5.0 and Associated Cybersecurity Challenges

The enhanced interconnectivity characteristic of Industry 5.0 environments simultaneously exposes smart industrial systems to multifaceted cybersecurity vulnerabilities and threats, potentially compromising operational integrity, worker safety, and production continuity. These evolving threat landscapes create complex security challenges that traditional cybersecurity approaches struggle to address effectively, necessitating more sophisticated and transparent security solutions.

Contemporary industrial ecosystems require extensive data collection and analysis for operational objectives, including consumer behavior modeling, supply chain optimization, and predictive maintenance. This interconnectivity has substantially expanded potential entry vectors and exploitable vulnerabilities within Industry 5.0 frameworks, complicating threat detection and mitigation efforts []. The attack surface expansion becomes particularly critical in infrastructure contexts where electrical generation stations and water treatment facilities increasingly rely on Industrial IoT (IIoT) systems, introducing unprecedented risk levels affecting thousands of individuals. The complexity of these interconnected systems demands security solutions that can provide clear explanations of threat detection decisions to enable rapid human intervention and response.

Social engineering attacks exploit human cognitive vulnerabilities rather than technical system weaknesses, constituting significant threat vectors in human-centric Industry 5.0 environments. These attacks include phishing campaigns, pretexting scenarios, and voice phishing (vishing), which frequently serve as malware delivery mechanisms []. Within Industry 5.0 contexts, where human–machine collaborative interfaces are intensified, social engineering presents escalating security concerns. The human-centric nature of these threats requires security systems that can clearly communicate threat indicators and attack patterns to human operators, emphasizing the need for explainable AI approaches that bridge technical detection capabilities with human understanding.

Cloud computing infrastructure delivers essential capabilities for Industry 5.0 implementations, supporting manufacturing operations through IoT-based monitoring systems and application programming interfaces for data normalization [,]. However, cloud architectures introduce distinct security challenges, including third-party software vulnerabilities, inadequately secured APIs, and complex data governance requirements. The distributed and multi-tenant nature of cloud environments creates intricate attack patterns that require sophisticated detection mechanisms capable of providing clear explanations of security events across diverse infrastructure components.

IoT systems enable comprehensive data acquisition across industrial domains through interconnected sensors and devices but present substantial security challenges due to large-scale deployment complexities and inconsistent protection measures []. Security implications vary considerably across implementation contexts, with medical devices and smart grid relays presenting higher risk profiles than consumer-grade devices. The heterogeneous nature of IoT ecosystems, combined with inconsistent vulnerability patching and limited on-device protection capabilities, creates complex threat landscapes requiring intelligent security solutions that can adapt to diverse device behaviors while providing transparent explanations of anomalous activities.

Supply chain vulnerabilities emerge from the inherent complexities and interdependencies among multiple stakeholders in modern industrial networks. While Industry 5.0 enhances supply chain management through human–robot collaboration, these interdependencies create potential attack vectors that can propagate across organizational boundaries []. The multi-organizational nature of supply chain attacks requires security solutions capable of correlating threats across different domains while providing clear attribution and explanation of attack progression to facilitate coordinated response efforts.

These cybersecurity challenges collectively demonstrate the limitations of traditional black-box security approaches in Industry 5.0 environments [,,]. The complexity, criticality, and human-centric nature of these threats necessitate security solutions that not only detect attacks accurately but also provide transparent explanations of their decision-making processes [,]. This requirement for explainability becomes particularly crucial when security systems must enable rapid human intervention, facilitate cross-organizational threat communication, and maintain trust in human–machine collaborative environments. Recent advancements in intrusion prevention systems (IPSs) have enhanced network monitoring capabilities, with intrusion detection systems (IDSs) serving as critical components for preliminary threat identification. However, the evolving threat landscape in Industry 5.0 demands more sophisticated, explainable approaches to intrusion detection that can address these complex security challenges while maintaining transparency and human trust.

5. Intrusion Detection Systems for Cybersecurity in Industry 5.0

In Industry 5.0, the proliferation of interconnected systems and increasingly sophisticated automation has dramatically elevated cyber attack risks. Security breaches in these environments can precipitate severe industrial operational disruptions, substantial financial losses, and critical safety hazards. Consequently, the development and implementation of advanced cybersecurity measures, particularly machine learning (ML)-based intrusion detection systems (IDSs), are essential to safeguarding the integrity and security of Industry 5.0 ecosystems [,].

ML-based IDSs have demonstrated remarkable efficacy in addressing cybersecurity challenges within industrial environments [,]. In the Industry 5.0 context, the strategic importance of intelligent IDSs has grown substantially [,]. Given the expanded attack surface resulting from device interconnectivity discussed in Section 4, these systems enable the effective monitoring of networks and systems for malicious activities, behavioral anomalies, and potential violations of data management policies. Furthermore, IDSs offer valuable post-incident capabilities following social engineering attacks by detecting suspicious device behaviors, network anomalies, unusual access patterns, unauthorized data flows, and known malicious payloads. They also play a crucial role in mitigating cloud and IoT vulnerabilities, which are prevalent concerns in Industry 5.0 environments, through advanced detection methodologies such as anomaly-based approaches [].

Beyond protecting conventional personal and enterprise networks, the imperative to secure critical infrastructure represents a paramount concern. While the compromising of personal networks and smart devices creates privacy, financial, and psychological risks for individuals, the threat level escalates significantly when considering enterprise networks that process information about numerous individuals [,]. The risk profile becomes exponentially more severe when addressing critical infrastructure security. Protection of these systems is fundamentally important because disruptions to essential services such as electrical power distribution and water supply can profoundly impact thousands of individuals and organizations. Although the compromising of a personal health monitoring device presents a significant threat to an individual, this pales in comparison to the catastrophic consequences potentially resulting from security breaches in nuclear power plant control systems, which could affect thousands or millions of people. This criticality elevates the protection of industrial infrastructure to the highest priority within cybersecurity frameworks, simultaneously emphasizing the essential role of explainable machine learning approaches in this domain [,,].

This section provides a comprehensive examination of ML-based IDSs, highlighting their key operational aspects and exploring how explainable AI (XAI) enhances their effectiveness within Industry 5.0 security contexts.

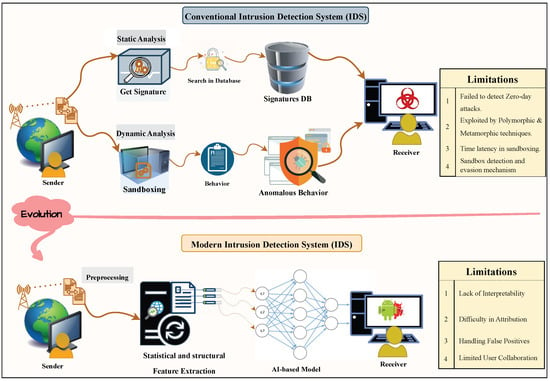

5.1. Traditional Intrusion Detection System (IDS)

Conventional approaches to IDS implementation typically employ either signature-based intrusion detection systems (S-IDSs) or anomaly-based intrusion detection systems (A-IDSs), as illustrated in the upper section of Figure 4. S-IDS methodologies operate by matching new patterns against previously identified attack signatures, a technique also referred to as knowledge-based detection [,]. These approaches rely on constructing comprehensive databases of intrusion instance signatures, against which each new instance is compared. However, this detection methodology demonstrates significant limitations in identifying zero-day attacks and is further compromised by polymorphic and metamorphic techniques incorporated into modern malware, which enable the same malicious software to manifest in multiple forms, evading signature-based identification.
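
The signature-matching principle and its weakness against re-encoded payloads can be sketched as follows. The signature database, the signature identifiers, and the payloads are hypothetical. Production S-IDSs use far richer rule languages than plain byte-substring matching.

```python
# Hypothetical signature database: identifier -> byte pattern of a known attack.
SIGNATURES = {
    "sig-001": b"/etc/passwd",
    "sig-002": b"' OR '1'='1",
}


def match_signatures(payload):
    """Return the identifiers of all signatures found in the payload."""
    return sorted(name for name, pattern in SIGNATURES.items() if pattern in payload)


known = b"GET /../../etc/passwd HTTP/1.1"
variant = b"GET /..%2F..%2Fetc%2Fpasswd HTTP/1.1"  # same request, URL-encoded

hits_known = match_signatures(known)
hits_variant = match_signatures(variant)
```

The URL-encoded variant carries the same intent but matches no stored pattern, a small-scale analogue of how polymorphic and metamorphic variants evade signature databases.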

Figure 4.

Evolution of intrusion detection systems: conventional versus modern architectures. (Upper): Conventional IDS using signature-based and behavior-based (sandboxing) detection with limitations including zero-day attack vulnerability and polymorphic technique susceptibility. (Lower): Modern ML-based IDS with preprocessing and AI-based detection, exhibiting limitations in interpretability, false-positive handling, and user collaboration—motivating XAI adoption.

The challenges posed by polymorphic and metamorphic malware variants have been addressed through anomaly-based intrusion detection systems (A-IDSs), which analyze suspicious variants within controlled sandbox environments to evaluate behavioral characteristics. An alternative analytical approach involves establishing behavioral baselines for normal computer system operations using machine learning, statistical analysis, or knowledge-based methodologies []. Following the development of a decision model, any significant deviation between observed behavior and established model parameters is classified as an anomaly and flagged as a potential intrusion. From a traditional sandbox analysis perspective, A-IDSs demonstrate superior capabilities in detecting zero-day attacks and identifying polymorphic and metamorphic intrusion variants. However, this approach is constrained by detection speed limitations compared with S-IDS implementations. The integration of advanced ML techniques has substantially mitigated these limitations by automatically identifying essential differentiating characteristics between normal and anomalous data patterns with high accuracy rates [,].
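
The baseline-and-deviation principle can be illustrated with a minimal statistical sketch. The traffic figures, the three-standard-deviation threshold, and the function names are assumptions. Practical A-IDSs model many features jointly instead of a single scalar.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    return abs(value - mu) / sigma > threshold


# Hypothetical packets-per-second readings observed during normal operation.
normal_traffic = [100, 102, 98, 101, 99, 100, 103, 97]
baseline = fit_baseline(normal_traffic)

within_baseline = is_anomalous(104, baseline)  # two standard deviations away
far_outside = is_anomalous(150, baseline)      # far beyond the baseline
```

No attack signature is involved, which is why this style of detection can flag previously unseen behavior, at the cost of depending on how well the baseline reflects normal operation.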

Machine learning solutions operate on the principle of data generalization to formulate accurate predictions for previously unencountered scenarios. These approaches demonstrate optimal performance with sufficient training data volumes. The ML domain encompasses two primary methodological categories: supervised learning, which utilizes labeled data for model training, and unsupervised learning, which extracts valuable insights from unlabeled datasets. The performance of ML-based IDS models is contingent upon the type and quality of the data, the accessibility and speed of its acquisition, and the fidelity with which the data reflects source behavior (i.e., host or network characteristics) []. Common data sources leveraged in ML-based solutions include network packets, function or action logs, session data, and traffic flow information. The cybersecurity research community frequently employs feature-based benchmark datasets such as DARPA 1998, KDD99, NSL-KDD, and UNSW-NB15 for standardized evaluation purposes [,,].

Multiple data types serve distinct functions in detecting various attack categories, as each data type reflects specific attack behavior patterns. For instance, system function and action logs primarily reveal host behavior characteristics, while session and network flow data illuminate network-level activities. Consequently, appropriate data sources must be selected based on specific attack characteristics to ensure the collection of relevant and actionable information []. Header and application data contained within communication packets provide valuable information for detecting User-to-Root (U2R) and Remote-to-Local (R2L) access attacks. Packet-based IDS implementations incorporate both packet parsing-based and payload analysis-based detection methodologies. Network flow-based attack detection represents another significant approach, particularly effective against Denial-of-Service (DoS) and Probe attacks, employing feature engineering-based and deep learning-based detection techniques [].

Session creation-based attacks can be identified using statistical information derived from session data as input vectors for decision models. Sequence analysis of session packets provides detailed insights into session interaction patterns, an approach frequently implemented using text processing technologies such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Long Short-Term Memory (LSTM) networks to extract spatial features from session packets. System logs recorded by operating systems and application programs represent another important attack detection vector, containing system calls, alerts, and access records. However, effective interpretation of these logs typically requires specialized cybersecurity expertise. Recent detection methodologies increasingly incorporate hybrid approaches that combine rule-based detection with machine learning techniques. Additional detection methodologies include text analysis techniques that process system logs as plain text, with n-gram algorithms commonly employed to extract features from text files for subsequent classification tasks [].
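
The n-gram feature extraction mentioned for log analysis can be sketched as follows. The whitespace tokenization, the bigram window, and the sample system-call trace are assumptions for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous runs of n tokens, in order of appearance."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_features(log_line, n=2):
    """Count n-grams over whitespace-separated tokens of one log line."""
    return Counter(ngrams(log_line.split(), n))


# Hypothetical system-call trace recorded as plain text.
trace = "open read write read write close"
features = ngram_features(trace)
```

The resulting counts (for example, how often `read` is immediately followed by `write`) form a sparse feature vector that a downstream classifier can consume.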

5.2. Explainable IDS (X-IDS)

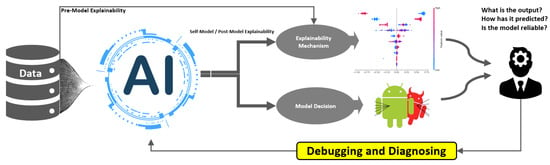

The preceding analysis demonstrates that most contemporary intelligent intrusion detection systems employ complex ML techniques that deliver exceptional intrusion detection performance. However, another critical aspect requiring consideration in IDS design is decision-making process transparency. System developers must address fundamental “Why” and “How” questions regarding IDS model operations to develop more reliable, secure, and effective security solutions (Figure 5). Explanatory elements within IDS implementations may include rationales for generated alerts, justifications for anomaly or benign classifications, and compromise indicators for security operations center analysts [,]. The necessity and utility of explanations in security systems were initially proposed by Viganò et al. [], who emphasized explanations’ role in comprehending intelligent systems’ core functionality. They introduced the “Six Ws” framework, expanding the traditional “Five Ws” (Who, What, When, Where, and Why) by incorporating “How” to explore methodological aspects. Although “How” deviates from the “W” pattern, its conventional inclusion ensures comprehensive analytical coverage. Viganò et al. demonstrated that addressing these six dimensions enhances security system transparency and operational effectiveness.

Figure 5.

Explainable intrusion detection system (X-IDS) operational framework. Data flows through the AI-based model generating dual outputs: (1) model decision (classification/alert) and (2) explainability mechanism providing decision rationale. Security analysts evaluate both streams to answer “What is the output? How was it predicted? Is the model reliable?”, creating a feedback loop for debugging, diagnosis, and model improvement.

The explainable and interpretable IDS concepts assume heightened significance in Industry 5.0 contexts as organizations strive to address emerging cyber threats effectively while keeping their security measures transparent and interpretable. Explainability in IDSs represents a collaborative initiative between AI systems and human operators that addresses technical challenges at both implementation and operational levels, enhancing detection and response capabilities. This collaborative approach enables IDSs to transcend black-box model limitations by integrating fundamental knowledge and insights, facilitating interpretable decision-making processes [,].

The cybersecurity research community is actively revising traditional intelligent intrusion detection systems to incorporate explainability features tailored to diverse stakeholder requirements [,]. These revisions have established three distinct explainability domains: self-model explainability, pre-modeling explainability, and post-modeling explainability. Self-model explainability encompasses simultaneous generation of explanations and predictions, leveraging problem-specific insights derived from domain expert knowledge. Pre-modeling explainability utilizes refined attribute sets to facilitate clearer system behavior interpretations before model training. Post-modeling explainability focuses on modifying trained model behavior to enhance responsiveness to input–output relationships, improving overall system transparency and effectiveness within the dynamic Industry 5.0 cybersecurity landscape [,]. The subsequent sections examine these explainability approaches in greater detail.

5.2.1. Self-Model Explainability

The X-IDS models generated from self-explaining models are designed to inherently explain their own decision-making procedure. These models have simple architectures capable of identifying the crucial attributes that trigger the decision for a given input. Accordingly, several explainability techniques have been proposed to suit the model’s complexity. For instance, Sinclair et al. [] suggested a rule-based explanation by developing an ante hoc explainability application, the NEDAA system, which combines ML methods such as genetic algorithms and Decision Trees to aid intrusion detection experts by generating rules, based on domain knowledge, that distinguish normal network connections from anomalous ones. The NEDAA approach employs analyst-designed training sets to develop rules for intrusion detection and decision support. Mahbooba et al. [] explained and interpreted known attacks in the form of rules that highlight the target of each attack and its causal reasoning using Decision Trees. They used ID3 to construct a Decision Tree on the KDD-99 dataset, where the decision rules traverse from top to bottom nodes, and generated the rules from the model using the Rattle package in R. Manoj et al. [,] proposed Decision Tree-based explainability to explain the actions taken by the industrial control system against IoT network activities. These rules can be compiled into an expert system for detecting intrusive events or used to simplify training data into concise rule sets for analysts. Rule-based explanations offer valuable insights into decision making, promote transparency, and allow domain expertise integration. However, they have limitations in handling complex and evolving threats, scalability, and potential conflicts [].

Another recent host-based intrusion detection system (HIDS) was proposed by Yang et al. [], who used Bayesian networks (BNs) to create a self-explanatory hybrid detection system by combining data-driven training with expert knowledge. BNs are a specific type of Probabilistic Graphical Model (PGM) that models the probabilistic relationships among variables using Bayes’ Rule []. The authors first extracted expert-informed interpretable features from two datasets, Tracer FIRE 9 (TF9) and Tracer FIRE 10 (TF10), which consist of normal and suspect system event logs generated through Zeek and Sysmon by the Sandia National Laboratories (SNL) Tracer FIRE team. They used Bayes Server as an engine for evaluating multiple BN architectures to find the best-performing model, while explanations are provided by visualizing the network graph, which conveys feature importance information via conditional probability tables []. Self-explanatory models in IDSs offer notable advantages by enhancing transparency and interpretability. They provide insights into decision making, enabling analysts to understand the reasoning behind alerts. This aids in trust building, model validation, and effective response. However, self-explanatory models might struggle with complex relationships, limiting their capacity to capture nuanced attack patterns.

5.2.2. Pre-Modeling Explainability

Pre-modeling explainability techniques involve preprocessing methods that summarize large feature sets into an information-centric set of attributes that align with human understanding and support downstream modeling and analysis. Zolanvari et al. [] proposed an explainable model that transforms the input features into representative variables through the factor analysis of mixed data (FAMD) method and then uses mutual information to quantify the information carried by each representative and its dependence on the class labels, which helps identify the top explainable representatives for an artificial neural network (ANN). Le et al. [] used information gain (IG) to calculate the most informative feature values, which are then used in Ensemble Tree classification. The model’s outputs are then plotted in the form of a heatmap and decision plot using the SHAP explanation technique. Alani et al. [] proposed a method based on Recursive Feature Elimination (RFE) using feature importance, where the features with the lowest importance are removed during the training and test rounds of different classifiers, including RF, LR, DT, GNB, XGB, and SVM classifiers. After identifying the smallest feature set on which the model performs well, TreeExplainer, a type of SHAP explainer, is applied to the RF model to measure the contribution of each selected feature. Gurbuz et al. [] addressed the security and privacy issues of the IoT-based healthcare network data flow by applying the least computationally expensive machine learning models, including KNN, DT, RF, NB, SVM, MLP, and ANN. The procedure involves first retrieving important features using a linear regression classifier and then leveraging the Shapash Monitor explanation interface to visualize feature importance plots, prediction distributions, and partial dependence plots for healthcare professionals, data scientists, and other stakeholders. Patil et al. [] analyzed the correlation between features using a heatmap and excluded outliers in the preprocessing step. The LIME technique was then used to explain their Voting Classifier, consisting of RF, DT, and SVM classifiers. Zebin et al. [] addressed the explainability problem in a DNS over HTTPS (DoH) protocol attack detection system. To understand the underlying distribution of the dataset, the Kernel Density Estimation (KDE) technique was deployed to estimate the probability density function of the features. After thorough preprocessing of the datasets, optimal hyperparameters for the base RF classifier were found with the GridSearchCV function. To explain the model, they used SHAP values to highlight the features that contributed to its underlying decision. Sivamohan et al. [] selected information-rich features by using the Krill Herd Optimization (KHO) algorithm for BiLSTM-XAI-based classification, where the explanation is provided using both LIME and SHAP mechanisms. Wang et al. [] proposed a hybrid explanatory mechanism that first finds the most important feature set by applying the LIME technique to a CNN+LSTM structure. A Decision Tree-based model, XGBoost, is then trained on the selected important features, while the explanations for the important features are generated through the SHAP mechanism. Another hybrid mechanism was proposed by Tanuwidjaja et al. [], who used both LIME and SHAP mechanisms to cover both the local and global explanations of an SVM-based IDS.
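
Information gain, used above for feature selection, can be computed directly from its definition as the reduction in label entropy after splitting on a feature. The sketch below assumes discrete features, and the four-record dataset is hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels):
    """Label entropy minus the weighted entropy after splitting on `values`."""
    n = len(labels)
    remainder = 0.0
    for v in set(values):
        subset = [y for x, y in zip(values, labels) if x == v]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder


# Hypothetical connection records: two candidate features and the class label.
labels = ["attack", "attack", "normal", "normal"]
protocol = ["tcp", "tcp", "udp", "udp"]   # perfectly separates the classes
flag = ["a", "b", "a", "b"]               # carries no class information

ig_protocol = information_gain(protocol, labels)
ig_flag = information_gain(flag, labels)
```

Ranking features by this score and keeping the top entries yields the reduced, more interpretable attribute set that pre-modeling approaches aim for.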

Another pre-modeling explainability technique involves visualization, where the focus is on providing intuitive visualizations of data and model behavior to help users, analysts, and stakeholders gain insights into how the model works and why it makes certain predictions. Mills et al. [] proposed a graphical representation for understanding the Decision Trees of the Random Forest (RF) classifier. In the same context, Self-Organizing Maps (SOMs), also known as Kohonen maps [], are used as an exploratory tool to gain a deeper understanding of the data that the decision model is trained on. Ables et al. [,] trained and evaluated different extensions of Kohonen Map-based Competitive Learning algorithms, including Self-Organizing Map (SOM), Growing Self-Organizing Map (GSOM), and Growing Hierarchical Self-Organizing Map (GHSOM), which are capable of producing explanatory visualizations. The core design of these extensions is to organize and represent high-dimensional data in a lower-dimensional space while preserving the topological relationships and structures of the original data. For this reason, SOMs can also be used for dimensionality reduction. In terms of IDS explainability, statistical and visual explanations were created by visualizing global and local feature significance charts, U-matrices, feature heatmaps, and label maps from the models trained on the NSL-KDD and CIC-IDS-2017 benchmark datasets. Lundberg et al. [] proposed a visual explanation method, named VisExp, that applies SHAP to find feature importance values, “SHAP-values”, for explaining the behavior of an in-vehicle intrusion detection system (IV-IDS). The visual explanation is generated as a dual swarm plot using standard Python visualization libraries, which presents the normal Controller Area Network (CAN) traffic at the top and the intruder’s traffic at the bottom according to the SHAP-values distribution. Al et al. [] used the Fast Gradient Sign Method (FGSM) as an adversarial sample generator and, in the next step, used the DALEX framework to identify the most influential features that enhance the Deep Neural Network (DNN) model’s decision performance. The same fine-tuning of a deep cyber threat detection model was also explored by Malik et al. [], who coupled the same adversarial sample generator, FGSM, with explanations generated through SHAP values. Lanfer et al. [] addressed false alarms and dataset limitations in available network-based IDS datasets by utilizing SHAP summaries and Gini impurity. Their contribution lies in demonstrating how imbalances in datasets can affect XAI methods such as SHAP and how retraining models on specific attack types can improve classification and align better with domain knowledge. They utilized SHAP beeswarm plots to visualize the explanations of each target class individually. Visual explanations thus offer intuitive insights into the system’s behavior, but their dependence on visualization quality makes them subjective and may lead to inconsistency when the visualization technique changes []. To mitigate these limitations, a combination of explainability methods, including both visual and non-visual approaches, should be employed to provide a more comprehensive understanding of IDS behaviors and enhance threat detection and prevention capabilities.

Lu et al. [] proposed a feature attribution explainability mechanism based on an economic theory called modern portfolio theory (MPT). Features are treated as assets, and the expected feature attribution values obtained through perturbation serve as the explanation. Feature attribution based on modern portfolio theory minimizes the variance in prediction score changes about attribution values, whereby a higher feature attribution value signifies a substantial impact on the model’s prediction score with a small feature change.
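
The FGSM adversarial sample generator referenced above perturbs each input feature by a fixed step in the direction of the sign of the loss gradient. The sketch below applies it to a logistic-regression stand-in, where that gradient has a closed form. The stand-in model, its weights, the input, and the step size `eps` are assumptions, whereas the cited works attack Deep Neural Networks.

```python
import math


def predict(w, b, x):
    """Probability of the positive class under a logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """x_adv = x + eps * sign(dLoss/dx) for binary cross-entropy loss."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]  # closed-form input gradient
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


w, b = [2.0, -3.0], 0.0        # hypothetical trained parameters
x, y = [1.0, 0.2], 1           # sample correctly scored above 0.5
eps = 0.5
x_adv = fgsm(w, b, x, y, eps)
```

Comparing attributions for `x` and `x_adv` (for example with SHAP or DALEX, as in the studies above) is one way to see which features the perturbation exploits.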

5.2.3. Post-Model Explainability

Post-model explainability refers to the techniques used to interpret and understand the decisions made by a trained learning model. Unlike self- and pre-model explainability techniques, post-model explainability is applied after training and allows stakeholders to gain insights into model decisions, detect biases, and validate model behavior, contributing to better-informed decision making and building trust in AI systems []. The most widely adopted techniques in the literature are feature importance methods, where the impact of each input feature on the trained model’s performance is analyzed. Sarhan et al. [] used the SHAP method to explain ML model detection performance. After finding the best-performing sets of hyperparameters for both the MLP and RF classifiers through partial grid search, they analyzed the models’ internal operations by calculating the Shapley values of the features. Tcydenova et al. [] used the LIME explainability approach to detect adversarial attacks on IDSs, where the normal data boundaries are explained for a trained SVM-based model. Gaitan et al. [] used horizontal bar plots to visualize the global explanation of the model prediction using the SHAP mechanism. Oseni et al. [] proposed a SHAP mechanism for improving the interpretation and resiliency of DL-based IDSs in IoT networks. The use of the SHAP mechanism was also proposed by Alani et al. [] and Kalutharage et al. [] to explain a deep learning-based Industrial IoT (DeepIIoT) intrusion detection system. Muna et al. [] employed LIME and SHAP mechanisms to explain the predictions made by the Extreme Gradient Boosting (XGBoost) classifier and also used ELI5 (“Explain Like I’m 5”), a Python package (https://www.python.org/, accessed on 26 October 2025), to interpret Random Forest feature weights. This package supports tree-based explanations that show how much each feature contributes, across all parts of the tree, to the final prediction. Abou et al. [] used RuleFit and SHAP mechanisms to explore the local and global interpretations for DL-based IDS models. Marino et al. [] utilized an adversarial ML approach to find an explanation for the input features. They took the samples that were incorrectly predicted by the trained model and computed the minimum modifications to the feature values required to classify them correctly. This allowed for the generation of a satisfactory explanation for the relevant features that contributed to the misclassification by the MLP model. da Silveira Lopes et al. [] combined the SHAP and adversarial approaches to accurately identify false-positive predictions by an IDS model. Szczepanski et al. [] proposed a prototype system in which a Feedforward ANN with PCA was trained as a classifier and, in parallel, a Decision Tree was generated from the samples along with their outputs from the classifier. The resulting tree was passed to the DtreeViz library to visualize an explanation for the classifier’s decision. Wang et al. [] improved the explanation of an IDS by combining local and global interpretations generated using the SHAP technique. The local explanation gives the reason for an individual decision taken by the model, and the global explanation shows the relationships between the features and different attacks. They used the NSL-KDD dataset and two different classifiers, namely, one-versus-all and multiclass classifiers, to compare the interpretation results. Nguyen et al. [] adopted the same mechanism to explain the decisions made by a CNN and a DT-based IDS model by using SHAP values. The aim was to build trust in the design of the intrusion detection model among security experts. Roy et al. [,] proposed a SHAP-LIME hybrid explainability technique to explain the results generated by a DNN both globally and locally. Mane et al. [] presented the same hybrid approach for explaining a Deep Neural Network-based IDS. To provide quantifiable insights into which features impact the prediction of a cyber attack and to what extent, they used the SHAP, LIME, Contrastive Explanation Method (CEM), ProtoDash, and Boolean Decision Rules via Column Generation (BRCG) approaches.
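To make the Shapley-value attribution underlying the SHAP-based studies above concrete, the following is a minimal sketch that computes exact Shapley values for a single sample by enumerating all feature coalitions. It is an illustration under stated assumptions, not the procedure of any cited work: the scoring function `toy_score`, its three flow features, and the use of a fixed `baseline` vector to stand in for "absent" features are all hypothetical, and practical SHAP implementations approximate this computation rather than enumerating coalitions.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one sample via coalition enumeration.

    Assumption: a feature that is "absent" from a coalition takes its
    value from `baseline` instead of from `x`.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` come from x.
        masked = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(masked)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size (excluding feature i).
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_score(features):
    """Hypothetical IDS score over (duration, bytes_sent, failed_logins)."""
    duration, bytes_sent, failed_logins = features
    # Failed logins only matter when the flow is also large (an interaction).
    return 0.1 * duration + 0.002 * bytes_sent + 0.5 * failed_logins * (bytes_sent > 1000)


x = [2.0, 1500.0, 4.0]         # sample to explain (illustrative values)
baseline = [1.0, 500.0, 0.0]   # reference "benign" flow (illustrative values)
phi = shapley_values(toy_score, x, baseline)
```

In this sketch the attributions sum to the difference between the score of `x` and the score of `baseline`, which is the local (per-sample) explanation; averaging the absolute attributions over many samples would give one common form of global explanation. The enumeration grows exponentially with the number of features, so it is only practical for very small feature sets.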

By learning compact representations of input data through an encoding and decoding process, autoencoders aid in uncovering underlying patterns and essential features within the data. This latent representation often corresponds to meaningful characteristics of the input data, making it easier to understand and interpret the model’s behavior []. Autoencoders are trained to reconstruct the input samples by minimizing the reconstruction error between the input and the decoder’s output. In addition to being well suited to anomaly detection, reconstruction error-based methods also provide an explanation of the connection between the inputs and the corresponding outputs. In this context, Khan et al. [] proposed an autoencoder-based IDS architecture that adopts a CNN and an LSTM-based autoencoder to discover threats in the Industrial Internet of Things (IIoT) and to explain the model internals. For model explainability, the LIME technique was used to explain the predictions of the proposed autoencoder-based IDS. Ha et al. [] proposed the same LSTM-based autoencoder model for anomaly detection in industrial control systems, where the explainability of the model was achieved by the Gradient SHAP mechanism. Nguyen et al. [] used a variational autoencoder (VAE) to detect network anomalies and a gradient-based explainability technique to explain the model’s decisions. Antwarg et al. [] used reconstruction error as an anomaly score and computed the explanation for the prediction error by relating the SHAP values of the reconstructed features to the true anomalous input values. Aguilar et al. [] proposed a Decision Tree-based interpretable autoencoder, in which the correlation between tuples of categorical attributes is learned through the Decision Tree encoding and decoding process, and interpretability is achieved by extracting rules from the decoder that show how it decodes each tuple accurately. Lanvin et al. [] proposed a novel explainability mechanism named AE-values, where the explanation is based on the p-values of the reconstruction errors produced by an unsupervised autoencoder-based anomaly detection method. They handle anomaly detection as a one-class classification problem using the Sec2graph method, and a threshold value is computed from the reconstruction errors of the benign input files. Error values above the threshold are considered responsible for the anomaly. Javeed et al. [,] proposed a multiclass prediction model by combining BiLSTM, bidirectional gated recurrent unit (Bi-GRU), and fully connected layers. They applied the SHAP mechanism to the last fully connected layer to obtain the local and global interpretations for the model decision.
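The general pattern shared by the reconstruction error-based approaches above (score a sample by its reconstruction error, compare it with a threshold derived from benign data, and attribute the anomaly to the features that reconstruct worst) can be sketched as follows. This is a simplified illustration and not the AE-values or SHAP-based method of the cited works: `fit_mean_reconstructor` is a hypothetical stand-in for a trained autoencoder that simply reconstructs every input as the benign mean, and the quantile-based threshold and toy data are assumptions.

```python
def fit_mean_reconstructor(benign):
    """Hypothetical stand-in for a trained autoencoder.

    It "reconstructs" every input as the per-feature mean of the benign
    data; a real autoencoder would run an encode-decode pass instead.
    """
    n = len(benign[0])
    mean = [sum(row[i] for row in benign) / len(benign) for i in range(n)]
    return lambda x: list(mean)


def feature_errors(reconstruct, x):
    """Squared reconstruction error of each feature of one sample."""
    x_hat = reconstruct(x)
    return [(a - b) ** 2 for a, b in zip(x, x_hat)]


def fit_threshold(reconstruct, benign, quantile=0.95):
    """Threshold taken as an empirical quantile of benign total errors."""
    errors = sorted(sum(feature_errors(reconstruct, row)) for row in benign)
    index = min(len(errors) - 1, int(quantile * len(errors)))
    return errors[index]


def explain(reconstruct, x, threshold):
    """Return (is_anomaly, features ranked by error, per-feature errors)."""
    errs = feature_errors(reconstruct, x)
    ranked = sorted(range(len(errs)), key=lambda i: errs[i], reverse=True)
    return sum(errs) > threshold, ranked, errs


# Illustrative two-feature benign traffic and one suspicious sample.
benign = [[1.0, 10.0], [1.2, 11.0], [0.8, 9.0], [1.0, 10.0]]
reconstruct = fit_mean_reconstructor(benign)
threshold = fit_threshold(reconstruct, benign)
is_anomaly, ranked, errs = explain(reconstruct, [1.1, 25.0], threshold)
```

Here the second feature dominates the reconstruction error of the suspicious sample, so it is ranked first in the explanation. Replacing the raw per-feature errors with p-values or SHAP values, as in the studies above, changes how the contributions are scored but not the overall flow.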

Another post-model explainability technique involves Saliency Map or Attention Map methods, which aim to explain the decisions of a Convolutional Neural Network (CNN) by highlighting the regions of an input image that contribute the most to a specific prediction. Yoon et al. [] proposed a method named Memory Heat Map (MHM) to characterize and segregate the anomalous and benign behavior of the operating system. Lin et al. [] used the region perturbation technique to generate a heatmap for visualizing the predictions made by the image-based CNN model. Iadarola et al. [] proposed a cumulative heatmap generated using the Gradient-weighted Class Activation Mapping (Grad-CAM) technique, where the gradients of the convolutional layer are converted into a heatmap through Grad-CAM to balance the trade-off between CNN accuracy and transparency. Andresini et al. [] addressed the network traffic classification and decision explanation task through image classification, where the network flow traces are transformed into a pixel frame of single-channel square images. A CNN model is incorporated with the attention layer to capture the contextual relationships between different features of the network traffic and the observed intrusion classes. These techniques help users understand not only which regions are important but also the extent to which different regions influence the model’s predictions. They can be particularly useful for gaining a finer-grained understanding of the relationships between input features and model responses [].
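As an illustration of the region perturbation idea mentioned above, the sketch below builds a heatmap by occluding one patch of the input at a time and recording how much the model score drops. It is a minimal example under stated assumptions rather than the method of any cited study: `toy_score` is a hypothetical classifier score that responds only to the top-left corner of a 4×4 single-channel image, and the patch size and fill value are arbitrary choices.

```python
def occlusion_heatmap(score, image, patch=2, fill=0.0):
    """Heatmap of score drops when each patch-by-patch region is occluded.

    A larger drop means the region mattered more to the prediction.
    """
    height, width = len(image), len(image[0])
    base = score(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            # Copy the image and overwrite the current region with `fill`.
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - score(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


def toy_score(img):
    """Hypothetical model score: mean intensity of the top-left 2x2 block."""
    return sum(img[r][c] for r in range(2) for c in range(2)) / 4.0


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_heatmap(toy_score, image)
```

In this toy case only the top-left patch receives a nonzero value, matching the region the hypothetical model actually uses. Gradient-based maps such as Grad-CAM reach a similar visualization from the network's internal gradients instead of repeated perturbation.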

From the literature review, it is evident that machine learning-based intrusion detection systems (IDSs) predominantly employ rule-based, LIME, and SHAP techniques to achieve local and global explainability. Despite their widespread adoption, these methods exhibit limitations in fully interpreting the complex decision-making processes of black-box models, particularly in capturing nuanced attack patterns and ensuring robustness across diverse threat scenarios. Consequently, there is a pressing need for advanced explainability techniques that enhance transparency, improve interpretability, and align more effectively with domain-specific requirements in cybersecurity applications for Industry 5.0 environments.

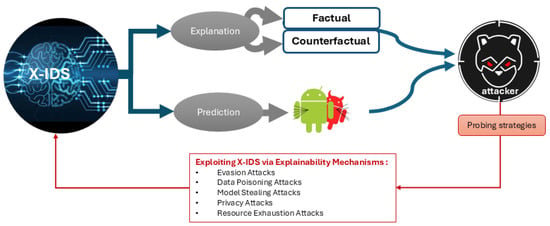

6. Adversarial XAI and IDSs

While XAI enhances trust and transparency in cybersecurity systems, it simultaneously introduces new vulnerabilities that sophisticated attackers can exploit. This paradox represents a fundamental challenge in Industry 5.0 cybersecurity: the same explainability mechanisms that enable human understanding and system debugging also provide adversaries with insights into model decision-making processes [,].

Adversarial methodologies, wherein attackers systematically subvert intelligent models by crafting adversarial examples, are prevalent and target both black-box and white-box models []. These attacks exploit factual and counterfactual explanations generated by XAI methods to facilitate feature manipulation, evasion, poisoning, and oracle attacks, as illustrated in Figure 6. Oracle attacks encompass techniques where adversaries exploit model outputs (predictions, confidence scores, or XAI-generated explanations) to infer sensitive information or manipulate system behavior, including membership inference and model vulnerability extraction []. This exploitation becomes particularly critical in Industry 5.0, where interconnected systems rely on real-time IDSs.

Figure 6. Exploiting X-IDSs: Attacker probes predictions and explanations (factual and counterfactual) to launch adversarial attacks, undermining system security.

From our analysis in Section 5 and Table 2, prominent explainability methods include regression model coefficients, rule-based approaches, LIME, SHAP, and gradient-based explanations. These techniques are primarily evaluated based on descriptive accuracy and relevance []. However, access to detailed model decision-making information enables attackers to manipulate both target security models and their explainability mechanisms [,]. This vulnerability necessitates robust defenses against adversarial attacks in AI-based cybersecurity systems for Industry 5.0 [].

Before machine learning adoption, network anomaly detection relied on carefully designed rules that expert attackers could reverse-engineer to bypass detection mechanisms []. While intelligent ML-based systems have shown promise in mitigating these threats, the ongoing adversary–defender rivalry drives sophisticated adversarial strategy development. Deep learning models remain susceptible to adversarial attacks that manipulate their behavior, leading to outcomes contrary to intended functionality [].

Table 3 provides a comprehensive overview of adversarial techniques targeting IDSs, categorized by attack type and explainability utilization. The analysis reveals a progression from traditional black-box attacks to sophisticated explainability-aware attacks, highlighting the evolving threat landscape in XAI-based cybersecurity systems.

6.1. Adversarial Attacks Without Utilizing Explainability

Adversarial attacks on ML systems are categorized into white-box attacks (complete target system knowledge), black-box attacks (limited knowledge with model querying capability), and gray-box attacks (partial classifier information) []. Attack vectors include privacy attacks, poisoning attacks, and evasion attacks. Perturbation and evasion mechanisms represent the most straightforward approaches, where perturbation attacks subtly alter input data to mislead ML models and evasion attacks create inputs that bypass detection entirely [,,].

Generative Adversarial Networks (GANs) and variants are widely employed in cybersecurity for generating synthetic data, addressing class imbalance, and creating adversarial examples [,]. Piplai et al. [] targeted GAN-based solutions using discriminator neural networks as classifiers, successfully attacking through Fast Gradient Sign Method (FGSM) perturbations. Ayub et al. [] employed Jacobian-based Saliency Map Attack (JSMA) for adversarial sample generation, while Pujari et al. [] utilized Carlini–Wagner white-box evasion attacks. Alshahrani et al. [] performed evasion attacks using Deep Convolutional GANs for synthetic sample generation.
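To illustrate the gradient-based perturbation used in FGSM-style attacks, the sketch below applies the Fast Gradient Sign Method to a small logistic regression detector. It is a toy example under stated assumptions and does not reproduce the setup of any cited study: the weights `w`, bias `b`, sample `x`, and step size `eps` are hypothetical, and a real attack on network traffic would additionally have to keep the perturbed features valid and functional.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability that sample x is malicious under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: x_adv = x + eps * sign(dL/dx).

    For binary cross-entropy with a logistic model, dL/dx = (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical trained detector and a sample whose true label is malicious (1).
w, b = [2.0, -1.0, 0.5], -0.5
x, y = [1.0, 0.2, 0.4], 1

x_adv = fgsm(w, b, x, y, eps=0.6)
p_clean = predict_proba(w, b, x)
p_adv = predict_proba(w, b, x_adv)
```

With these illustrative values, the detector scores the clean sample above 0.5 and the perturbed sample below 0.5, even though no feature moves by more than `eps`. This is a white-box attack, since it requires the model gradient; black-box variants estimate the perturbation direction from queries instead.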

GAN training instability has led to variants including Wasserstein GAN with gradient penalty (WGAN-GP) and AdvGAN. Duy et al. [] investigated ML-based IDS vulnerabilities in software-defined networks using these variants, targeting non-functional features for malicious traffic evasion. Zhang et al. [] evaluated classifier robustness using gradient-free methods, while Lan et al. [] introduced poisoning attacks through malicious file injection into benign Android APKs.

Membership inference attacks pose severe threats to AI model privacy by revealing training data information []. Qiu et al. [] presented black-box adversarial attacks on DL-based NIDSs in IoT environments, achieving 94.31% attack success rates while modifying minimal packet bytes. Chen et al. [] introduced Anti-Intrusion Detection Auto-Encoder (AIDAE) for generating features mimicking normal network traffic. Jiang et al. [] demonstrated perturbation attacks against LSTM and RNN models, proposing Feature Grouping and Multi-model fusion Detector (FGMD) for enhanced robustness.