Abstract

Transmission lines in complex outdoor environments often suffer external damage in construction areas, severely affecting the stability of power systems. Traditional manual detection methods suffer from low efficiency and poor real-time performance. In deep learning-based detection methods, standard convolution has a large parameter count and high computational complexity, making it difficult to deploy on edge devices; lightweight depthwise separable convolution offers low computational cost but suffers from insufficient feature extraction capability because it processes each channel’s information independently. As a result, neither option simultaneously meets the practical requirements for lightweight design and high detection accuracy in transmission line monitoring applications. To address these problems, this study proposes LFRE-YOLO, a lightweight external damage detection algorithm for transmission lines based on YOLOv10n. First, we design a lightweight feature reuse and enhancement convolution (LFREConv) that overcomes the limitations of traditional depthwise separable convolution through a cascaded dual depthwise convolution structure and a residual connection mechanism, significantly expanding the effective receptive field with minimal parameter increment and compensating for information loss caused by independent channel processing in depthwise convolution through feature reuse strategies. Second, based on LFREConv, we propose an efficient lightweight feature extraction module (LFREBlock) that achieves cross-channel information interaction enhancement and channel importance modeling. 
Additionally, we propose a lightweight feature reuse and enhancement detection head (LFRE-Head) that applies LFREConv to the regression branch, achieving comprehensive lightweight design of the detection head while maintaining spatial localization accuracy. Finally, we employ layer-adaptive magnitude-based pruning (LAMP) to prune the trained model, further optimizing the network structure through layer-wise adaptive pruning. Experimental results demonstrate significant improvements over the YOLOv10n baseline: mAP50 increased from 92.0% to 94.1% and mAP50-95 improved from 66.2% to 70.2%, while parameters were reduced from 2.27 M to 0.99 M and computational complexity from 6.5 GFLOPs to 3.1 GFLOPs. The model achieves an inference speed of 86.9 FPS, making it suitable for resource-constrained edge computing environments.

1. Introduction

Transmission line failures are predominantly overhead line failures, with external force damage consistently being one of their primary causes []. Traditional transmission line inspection mainly relies on manual periodic patrols, which suffer from low efficiency, high costs, limited coverage, and poor real-time performance, making it difficult to meet the demands of modern power grid safety monitoring []. With the rapid development of artificial intelligence and computer vision technologies [,,], intelligent detection technologies based on deep learning provide new solutions for transmission line external damage target detection, enabling all-weather automated monitoring and significantly improving detection efficiency and accuracy. Currently, deep learning-based object detection algorithms can be categorized into two types. The first category comprises two-stage object detection algorithms (e.g., R-CNN series []), which first generate candidate regions (for example, through selective search or a region proposal network) and typically outperform single-stage detectors in detection accuracy and small object detection. However, they have high computational complexity and relatively slow inference speeds, making them unsuitable for scenarios requiring high real-time performance.

The second category consists of single-stage object detection algorithms (e.g., YOLO series []), which demonstrate excellent real-time performance compared to two-stage detectors. However, most single-stage detectors are large-scale server detectors [], and for edge devices with limited computational resources, the FLOPs of most single-stage models remain unacceptable and require further optimization. As intelligent detection technology expands to resource-constrained platforms such as unmanned aerial vehicles (UAVs) and edge devices, model lightweighting has become a critical issue that urgently needs to be addressed. However, in the specific application scenario of transmission line inspection, lightweight models must overcome two key challenges: first, the complex environmental interference—the transmission line corridor backgrounds include forests, farmland, buildings, and similarly colored objects (such as yellow engineering vehicles and withered autumn grass), forming strong background noise that easily leads to false detections and missed detections by the model; second, the issue of target scale variation and small target detection—due to the dynamic changes in UAV perspectives and distances, the same machinery may appear extremely large in close-ups and very small in distant views, exhibiting multi-scale characteristics, and the features of distant small targets are easily overwhelmed in deep networks, resulting in failures to detect or accurately recognize them.

To address these challenges, researchers have proposed various improvement methods. At the convolution level, traditional lightweighting approaches primarily focus on the decomposition and reconstruction of convolutional kernels. Depthwise separable convolution [] significantly reduces the number of parameters and computational cost by decomposing standard convolution into depthwise convolution and pointwise convolution. However, due to the channel-independent processing mechanism of depthwise convolution, the feature representation capacity may be compromised, resulting in inadequate feature discriminability when dealing with complex background interference. To mitigate this issue, group convolution [] divides input channels into multiple groups and performs convolution operations independently within each group, reducing computational complexity by decreasing the number of connections. Nevertheless, group convolution still restricts information exchange between different groups, potentially affecting global feature learning. ShuffleNet [] introduces channel shuffle operations based on group convolution, enhancing information flow between different groups by reorganizing inter-channel connections. MobileNetV2 [] adopts an inverted residual structure design, using 1 × 1 convolution to first expand the number of channels, then performing depthwise convolution, and finally compressing feature dimensions through linear bottleneck layers. These methods all aim to strike a balance between efficiency and feature richness to enhance model robustness in noisy backgrounds.

At the detection head level, early methods achieved lightweighting by uniformly reducing the number of convolutional layers and channels in detection heads, but ignored the differences between classification and regression tasks. To address this problem, decoupled detection heads [] separate classification and regression tasks into different branches, allowing for specialized optimization design for each task. Despite this, existing decoupled designs still face the problem of lightweighting imbalance, where classification branches can be effectively compressed through lightweight operations such as depthwise separable convolution, while regression branches still rely on computationally expensive standard convolution structures due to the need for precise spatial localization capability [,]. Moreover, existing designs still remain limited in their ability to preserve and enhance features of small targets within deep networks when handling multi-scale variations.

In summary, lightweight models for transmission line external damage detection must address three critical challenges under a constrained computational budget: the inherent computational complexity of the model itself, robust noise resistance in complex backgrounds, and precise detection of multi-scale objects, particularly small targets. Current feature extraction methods exhibit limitations in receptive field and feature representation capacity, while prevailing detection head structures struggle to achieve accurate perception of diverse object scales concurrently with model lightweighting.

Therefore, this paper proposes a novel lightweight network that significantly reduces model complexity and computational requirements while ensuring detection accuracy through innovative feature extraction modules, optimized detection head structure, elimination of model redundancy, and integration of adaptive pruning techniques.

The main contributions of this paper are summarized as follows:

- (1) Lightweight Feature Reuse and Enhancement Convolution (LFREConv): Addressing the insufficient cross-channel information interaction and limited receptive field issues in traditional depthwise separable convolution, we design a cascaded dual depthwise convolution structure with residual connection mechanisms. This significantly expands the effective receptive field with minimal parameter increment while compensating for information loss caused by independent channel processing in depthwise convolution through feature reuse strategies, enhancing feature representation capability while maintaining lightweight advantages.

- (2) Efficient Feature Extraction Module (LFREBlock) based on LFREConv: Through cascaded stacking of multi-layer LFRE convolutions, we progressively expand the receptive field and extract high-level semantic information. Combined with feature concatenation, channel shuffle, and SE attention mechanisms, this achieves cross-channel information interaction enhancement and channel-level importance modeling, effectively improving feature extraction capability in complex scenarios.

- (3) Lightweight Feature Reuse and Enhancement Detection Head (LFRE-Head): Based on LFREConv, we redesign the regression head to propose LFRE-Head, which retains as much feature layer information as possible before prediction while expanding the effective receptive field on a lightweight basis.

- (4) LAMP Application: We apply the layer-adaptive magnitude-based pruning (LAMP) algorithm to compress the trained LFRE-YOLO, maximally reducing model complexity while maintaining detection accuracy.

2. Related Work

2.1. Transmission Line External Damage Target Detection Related Work

Transmission lines serve as critical components in the power energy supply system, making their safe and stable operation paramount. However, China’s complex terrain results in transmission lines being predominantly distributed in outdoor environments, often passing through construction areas where external force damage accidents caused by construction machinery occur frequently, leading to frequent transmission line failures and seriously threatening power grid safety. With the rapid development of computer vision technology and modern sensor technology, intelligent detection technologies based on deep learning provide new solutions for preventing external force damage to transmission lines.

Early research primarily focused on traditional two-stage detection frameworks. Xiang et al. [] achieved power grid engineering vehicle intrusion detection by adjusting the ROI pooling layer position in Faster R-CNN and optimizing the feature classification structure. Qu et al. [] proposed the E-OHEM-enhanced Faster R-CNN, improving detection accuracy through online hard example mining technology, though with relatively slow inference speed. With the rise of single-stage detection algorithms, YOLO series models have gained attention due to their excellent speed–accuracy balance.

Subsequently, Liu et al. [] improved YOLOv3 according to engineering vehicle characteristics and proposed an engineering vehicle detection model around transmission lines. Li et al. [] focused on algorithm deployment on embedded devices and proposed a lightweight transmission line safety hazard detection algorithm based on YOLOv5s. Zou et al. [] conducted in-depth research on complex scenarios containing random feature targets and improved YOLOX-s, enhancing the algorithm’s recognition capability for diverse external damage targets. Li et al. [] proposed an intelligent detection method with detection and three-dimensional ranging capabilities based on YOLOv7-Tiny and 3D ranging technology, adding distance perception capability to object detection and providing richer information for risk assessment. Zou et al. [] combined YOLOv8s with attention mechanisms to improve model accuracy in complex environments, addressing the problem of small target missed detection in actual complex transmission line scenarios.

In recent years, improvements in the YOLO series algorithms have further pushed the performance boundaries for transmission line defect detection under complex backgrounds and for small targets. Li et al. [] proposed the RKM-YOLO algorithm, based on lightweight improvements to YOLOv8n, focusing on optimizing the detection capability for small-target defects in complex environments. By designing modules to enhance the receptive field and proposing a multi-scale collaborative attention mechanism, they significantly improved the detection performance for small targets such as broken insulators, contamination flashovers, and rusted vibration dampers. Wang et al. [] proposed an improved transmission line insulator defect detection algorithm based on YOLO11, focusing on solving the challenges of small target detection and complex background interference. By substituting novel convolutional layers for the original ones, introducing attention mechanisms, and improving the loss function, they achieved a lightweight design and real-time performance while maintaining a high level of accuracy. Ji et al. [] proposed an improved detection algorithm based on YOLOv12, focusing on achieving efficient real-time detection of transmission line defects on edge devices. This research introduced a Bidirectional Weighted Feature Pyramid Network (BiFPN) to enhance multi-scale feature fusion, embedded a Cross-stage Channel-Position Collaborative Attention (CPCA) module to suppress complex background interference, and adopted ShuffleNetV2 to make the backbone network lightweight. Ultimately, it reached a detection speed of 142.7 FPS, demonstrating strong potential for edge deployment.

Existing methods mainly improve upon classical detection frameworks and have achieved certain effects in specific scenarios, but they share common limitations. Specifically, most methods focus on enhancing detection accuracy while paying insufficient attention to model lightweighting, and they lack optimized designs tailored for resource-constrained devices.

2.2. Lightweight Technology Related Work

With the widespread application of deep learning models across various fields, deployment costs and computational resource requirements have increasingly become key factors constraining their practical implementation. Network lightweight technology aims to significantly reduce model storage overhead, computational complexity, and inference time while maintaining model performance, enabling deep learning models to operate efficiently in resource-constrained environments. Current mainstream network lightweight technologies primarily include lightweight network structure design and model pruning methods.

Initial lightweight techniques mainly focused on lightweight network structure design. The MobileNet series represents pioneering work in lightweight networks. Howard et al. [] first introduced depthwise separable convolution in MobileNetV1, decomposing standard convolution into depthwise convolution and pointwise convolution. This reduced computational complexity from $D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F$ to $D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F$, where $D_K$ is the kernel size, $M$ and $N$ are the numbers of input and output channels, and $D_F$ is the spatial size of the feature map, achieving significant parameter and computation reduction. The ShuffleNet series [] took a different approach, using channel shuffle operations to address insufficient inter-group information interaction in grouped convolution. ShuffleNetV1 combined pointwise grouped convolution with channel shuffle, achieving good performance under extremely low computational budgets. GhostNet [], based on redundant feature maps, proposed the Ghost module, generating more feature maps through inexpensive linear transformations to achieve effects similar to ordinary convolution with fewer parameters.
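The size of this reduction can be checked with a few lines of arithmetic. The sketch below counts multiply-accumulate operations for a standard convolution and for its depthwise separable counterpart, assuming stride 1 and a square feature map; the function name `conv_costs` and the example layer sizes are illustrative assumptions, not values taken from the works cited here.

```python
def conv_costs(kernel, in_ch, out_ch, fmap):
    """Multiply-accumulate counts for one layer (stride 1 assumed).

    kernel: side length of the square kernel
    in_ch / out_ch: input and output channel counts
    fmap: side length of the square output feature map
    """
    positions = fmap * fmap
    standard = kernel * kernel * in_ch * out_ch * positions
    depthwise = kernel * kernel * in_ch * positions  # one filter per input channel
    pointwise = in_ch * out_ch * positions           # 1x1 convolution mixes channels
    return standard, depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 64 -> 128 channels, 40x40 feature map.
standard, separable = conv_costs(3, 64, 128, 40)
ratio = separable / standard  # algebraically 1/out_ch + 1/kernel**2
print(standard, separable, round(ratio, 4))
```

For a 3 × 3 kernel the ratio is dominated by the 1/9 term, which is why the saving is usually quoted as roughly eight- to nine-fold.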

Following lightweight network structure design, model pruning techniques emerged. Model pruning optimizes model structure by systematically removing redundant parameters and connections in neural networks, representing one of the most intuitive and effective lightweight methods. The core of pruning technology lies in identifying and removing weights that contribute minimally to overall network performance, thus simplifying the model while maintaining performance. Based on operation granularity differences, pruning methods can be divided into unstructured pruning and structured pruning.

Unstructured pruning achieves model compression by removing individual weight connections, representing the most intuitive pruning method. Han et al. [] pioneered magnitude-based iterative pruning methods through “training–pruning–fine-tuning” cycles to progressively optimize network structure. However, sparse models generated by unstructured pruning have difficulty achieving actual acceleration effects on existing hardware, limiting their practical value. Structured pruning operates at channel, filter, or layer levels, producing hardware-friendly regular model structures compared to unstructured pruning that generates irregular sparse models. Li et al. [] proposed L1-norm-based filter importance evaluation methods, representing early structured pruning work. He et al. [] proposed channel pruning methods by minimizing reconstruction error to select important channels. Luo et al. [] developed filter pruning based on next-layer statistical information, formulating filter selection as an optimization problem.

Although lightweight techniques have a solid theoretical foundation, relying solely on a single aspect of lightweighting is often insufficient. It is necessary to combine lightweight network architecture design with model pruning to further compress the model, thereby better meeting the demands of real-world deployment.

3. Materials and Methods

3.1. Overall Network Architecture

As an important milestone in the YOLO series, YOLOv10 introduces an NMS-free design and a consistency-matching strategy, fundamentally addressing the issue of inconsistent label assignment between training and inference. Compared with traditional models that require post-processing, this end-to-end design significantly improves inference efficiency, providing a key guarantee for real-time detection scenarios. Meanwhile, its dual label assignment mechanism achieves accuracy close to two-stage methods while maintaining the speed advantage of single-stage detectors. Moreover, YOLOv10 itself is optimized for edge devices. Its efficiency–accuracy driven designs, such as the lightweight classification head and spatial-channel decoupled downsampling, result in YOLOv10n having only 2.3 M parameters, offering an ideal starting point for further lightweight improvements. Therefore, this paper chooses YOLOv10n as the baseline model.

However, in practical application scenarios, edge computing devices have limited computational power and storage space. Although YOLOv10 can effectively detect external damage targets, it still suffers from insufficient lightweight design and limited receptive field expansion capability. To address these issues, this paper introduces LFRE-YOLO, a lightweight transmission line external damage target detection model built on YOLOv10n. The network structure is shown in Figure 1. The improvements are as follows. First, we replace the C2f modules in the YOLOv10n backbone network with the proposed LFREBlock feature extraction modules, which adopt feature reuse and enhancement strategies to significantly improve feature representation capability while maintaining lightweight advantages. Second, we redesign the regression branch of the YOLOv10n detection head to propose the LFRE-Head lightweight detection head, achieving comprehensive lightweight design of the detection head while ensuring spatial localization accuracy. Finally, we apply the LAMP pruning algorithm to the trained model, achieving further lightweight design through a layer-wise adaptive scoring mechanism that identifies and removes redundant connections in the network.

Figure 1.

The overall framework of LFRE-YOLO.

3.2. Lightweight Feature Reuse and Enhancement Convolution (LFREConv)

In object detection tasks, a common lightweight strategy involves using depthwise separable convolution to replace standard convolution operations. This method decomposes standard convolution into depthwise convolution and pointwise convolution, significantly reducing computational complexity and parameter count. However, depthwise separable convolution suffers from two issues. First, depthwise convolution uses single-channel convolution kernels that independently perform spatial convolution on each input channel, lacking cross-channel information interaction mechanisms and failing to effectively capture correlations and dependencies between channels. Second, the receptive field determines how much context a convolutional neural network can draw on during feature extraction, and the limited receptive field of a single-layer 3 × 3 depthwise convolution restricts the model’s ability to acquire contextual information, leading to limited feature extraction capability.

To address these issues, this paper redesigns depthwise separable convolution using residual connections and a cascaded dual depthwise convolution structure, proposing Lightweight Feature Reuse and Enhancement Convolution (LFREConv), as shown in Figure 2.

Figure 2.

The newly designed LFREConv.

Considering that pointwise convolution accounts for the majority of parameters in depthwise separable convolution while depthwise convolution has a relatively small parameter overhead, we expand the effective receptive field by cascading a second depthwise convolution layer $DW_2$ after the first depthwise convolution $DW_1$. This design significantly enhances contextual information capture capability with minimal parameter increment. Simultaneously, we introduce a residual connection mechanism that performs element-wise addition between the original input feature map $X$ and the feature map processed by dual-layer depthwise convolution to obtain $X'$, effectively compensating for information loss caused by independent channel processing in depthwise convolution and ensuring complete preservation of important original feature information. Then, pointwise convolution (ordinary convolution with 1 × 1 kernel) integrates inter-channel information in feature map $X'$ and extracts features to obtain feature layer $Y$. This structure maintains the lightweight advantages of depthwise separable convolution while significantly improving feature representation capability through feature reuse and receptive field enhancement strategies.

The mathematical formulation is as follows:

$$X' = X + DW_2(DW_1(X))$$

$$Y = PW(X')$$

where $DW_1(X)$ represents a depthwise convolution (DW) operation on input feature map $X$, $DW_2(DW_1(X))$ represents a depthwise convolution operation on the feature map obtained from $X$ through depthwise convolution, and $PW(X')$ represents a pointwise convolution (PW) operation on the sum of the input feature map $X$ and $DW_2(DW_1(X))$. Compared to the original depthwise separable convolution, LFREConv can obtain more important original feature information during pointwise convolution operations.
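To make the "minimal parameter increment" concrete, the following sketch compares weight counts for a standard convolution, a depthwise separable convolution, and the LFREConv structure described in this section (two cascaded depthwise convolutions, a residual addition, then a pointwise convolution). It also computes the receptive field of stacked stride-1 convolutions. The sketch assumes bias-free layers, ignores normalization parameters, and assumes both depthwise layers use the same kernel size; the function names and channel widths are illustrative and do not come from the paper.

```python
def standard_conv_params(k, c_in, c_out):
    return k * k * c_in * c_out


def dw_separable_params(k, c_in, c_out):
    # one k x k filter per input channel, then a 1x1 pointwise convolution
    return k * k * c_in + c_in * c_out


def lfreconv_params(k, c_in, c_out):
    # two cascaded depthwise layers; the residual addition adds no weights
    return 2 * k * k * c_in + c_in * c_out


def stacked_receptive_field(kernels):
    # for stride-1 convolutions each layer widens the receptive field by (k - 1)
    rf = 1
    for k in kernels:
        rf += k - 1
    return rf


c_in, c_out = 64, 64  # hypothetical channel widths
print(standard_conv_params(3, c_in, c_out))   # 36864
print(dw_separable_params(3, c_in, c_out))    # 4672
print(lfreconv_params(3, c_in, c_out))        # 5248
print(stacked_receptive_field([3]), stacked_receptive_field([3, 3]))  # 3 5
```

Under these assumptions the second depthwise layer adds only 576 weights to a 4672-weight block, while the spatial receptive field grows from 3 × 3 to 5 × 5.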

3.3. Lightweight Feature Reuse and Enhancement Module (LFREBlock)

To address the issues of inter-channel information loss after depthwise convolution and small receptive fields in backbone network feature extraction modules, this paper designs LFREBlock based on LFREConv to expand receptive fields and enhance feature extraction capability under lightweight conditions.

As shown in Figure 3, our proposed LFREBlock consists of LFREConv, channel shuffle operation, and SE attention mechanism.

Figure 3.

The newly designed LFREBlock.

We perform deep feature extraction on input features through $n$ cascaded LFREConv layers, progressively expanding receptive fields and extracting higher-level semantic information through multi-layer stacking to obtain feature layers $X_1, X_2, \dots, X_n$.

$$X_i = \mathrm{LFREConv}_i(X_{i-1}), \quad i = 1, \dots, n, \quad X_0 = X$$

Here, $\mathrm{LFREConv}_i$ represents feature extraction using the $i$-th LFREConv on the feature map.

The obtained feature maps are then concatenated with the original feature map $X$ to obtain feature map $X_{cat}$.

$$X_{cat} = \mathrm{Concat}(X, X_1, \dots, X_n)$$

The merged features undergo channel shuffle to obtain $X_s$, breaking inherent grouping patterns between channels to promote inter-channel interaction and ensure complete feature representation.

$$X_s = \mathrm{Shuffle}(X_{cat})$$

Then, 1 × 1 pointwise convolution is used for channel information fusion and dimensionality reduction. The SE module excels at allocating weights to network channels []. For better performance, we add the SE module to the end of the module, achieving channel importance allocation through global context modeling, highlighting important features and suppressing redundant information.

$$Y = \mathrm{SE}(f(X_s))$$

Here, $f$ represents a pointwise convolution operation on feature map $X_s$.
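The channel shuffle step can be illustrated on a plain list of channel indices. The sketch below follows the ShuffleNet-style formulation (view the channels as a `groups × channels_per_group` grid, transpose, flatten); the number of groups is not stated in this section, so `groups=2` is purely an assumption for illustration.

```python
def channel_shuffle(channels, groups):
    """Reorder channels: view as (groups, n // groups), transpose, flatten."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i]
            for i in range(per_group)
            for g in range(groups)]


# Six channels, e.g. three from the input X and three from an LFREConv output.
shuffled = channel_shuffle(list(range(6)), groups=2)
print(shuffled)  # [0, 3, 1, 4, 2, 5]
```

After the shuffle, neighbouring channels come from different sources, so the subsequent 1 × 1 convolution and SE weighting operate on interleaved rather than block-ordered features.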

3.4. Lightweight Feature Reuse and Enhancement Detection Head (LFRE-Head)

The detection head serves as a core component of object detection networks, occupying nearly 1/5 of the entire model’s computational load and typically including two key branches: the classification branch and the regression branch.

To reduce detection head computational load, YOLOv10 employs depthwise separable convolution to make its classification branch lightweight, effectively reducing model overhead without affecting model performance. However, for the regression branch, YOLOv10 still uses standard convolution. This is because the regression branch’s task is more complex and precise, requiring accurate prediction of target spatial position information. This spatial localization task has higher requirements for feature extraction capability, while traditional depthwise separable convolution has relatively weak feature extraction capability, potentially leading to localization accuracy degradation and affecting overall detection performance.

However, this design strategy has an obvious problem: as shown in Figure 4a, YOLOv10’s regression branch employs two consecutive 3 × 3 standard convolution layers. While ensuring feature extraction capability, this also becomes the primary source of parameters and computational load in the entire detection head, severely constraining the model’s lightweight effect.

Figure 4.

(a) YOLOv10 detection head architecture. (b) The newly designed LFRE-Head.

Addressing this issue, our proposed LFREConv provides new insights. LFREConv combines the lightweight advantages of depthwise separable convolution with significantly enhanced feature representation capability through feature reuse and receptive field enhancement strategies, effectively meeting requirements for both lightweight regression branch design and model performance maintenance.

Therefore, this paper redesigns the regression branch in the detection head based on LFREConv, proposing LFRE-Head. As shown in Figure 4b, our processing of input feature layers differs from YOLOv10’s regression branch processing.

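First, we replace the first 3 × 3 convolution with 1 × 1 convolution for lightweight design, reducing parameters from $3 \times 3 \times C_{in} \times C_{out}$ to $1 \times 1 \times C_{in} \times C_{out}$, where $C_{in}$ and $C_{out}$ are the numbers of input and output channels, achieving approximately 9× parameter compression. This 1 × 1 convolution primarily handles feature transformation and dimensionality reduction in the channel dimension.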

Subsequently, we replace the second 3 × 3 standard convolution with LFREConv, maintaining lightweight characteristics while enhancing feature extraction capability through feature reuse and receptive field enhancement, effectively compensating for potential information loss from first-layer convolution lightweight design and ensuring sufficient spatial perception capability in the regression branch.

Finally, the regression output layer generates bounding box parameters through 1 × 1 convolution.

$$B = f_{out}(\mathrm{LFREConv}(f(X)))$$

where $X$ is the input feature layer, $B$ represents predicted boxes, $f$ and $f_{out}$ represent 1 × 1 convolution operations, and $\mathrm{LFREConv}$ represents the LFREConv operation. This achieves lightweight design of the regression branch while ensuring regression accuracy.
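A rough weight count shows where the saving in the regression branch comes from. The sketch below compares two consecutive 3 × 3 standard convolutions with the replacement described in this section (a 1 × 1 convolution followed by LFREConv). It leaves out the final 1 × 1 output convolution, which both designs share, and assumes bias-free layers with no normalization parameters; the channel widths and function names are hypothetical.

```python
def conv_weight_count(k, c_in, c_out):
    return k * k * c_in * c_out


def lfreconv_weight_count(k, channels):
    # two depthwise k x k layers plus a 1x1 pointwise layer, same width in and out
    return 2 * k * k * channels + channels * channels


def regression_branch_weights(c_in, c_mid):
    baseline = conv_weight_count(3, c_in, c_mid) + conv_weight_count(3, c_mid, c_mid)
    lightweight = conv_weight_count(1, c_in, c_mid) + lfreconv_weight_count(3, c_mid)
    return baseline, lightweight


baseline, lightweight = regression_branch_weights(c_in=64, c_mid=64)
print(baseline, lightweight)  # 73728 9344
```

With these illustrative widths the two feature layers of the branch shrink by a factor of roughly eight; the actual figure depends on the channel configuration of each detection scale.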

3.5. Model Compression Using the LAMP Pruning Method

Although LFRE-YOLO has achieved lightweighting of the model structure through the design of LFREBlock and LFRE-Head, its computational overhead and storage requirements can still pose bottlenecks for real-time detection on edge devices and embedded systems with extremely limited resources. This is particularly true in scenarios demanding mobile deployment and high real-time performance, where further model compression is crucial. To address this, this paper adopts a pruning strategy to optimize the trained LFRE-YOLO model, aiming to minimize model complexity while preserving detection accuracy to meet practical deployment needs.

This paper employs layer-adaptive magnitude-based pruning (LAMP) [] to compress LFRE-YOLO. LAMP is a global pruning method whose core lies in abandoning traditional pruning methods’ “one-size-fits-all” unified threshold strategy, instead assigning adaptive scores to weights in each layer for layer-wise pruning.

The core of the LAMP algorithm lies in its adaptive scoring mechanism for each layer. For the weight matrix $W$ of a given layer (first flattened to a one-dimensional vector), with entries sorted by absolute value in ascending order (so that $u < v$ implies $|W[u]| \le |W[v]|$), the LAMP score for the $u$-th weight is:

$$\mathrm{score}(u; W) = \frac{(W[u])^2}{\sum_{v \ge u} (W[v])^2}$$

where the numerator term represents the square of the weight, reflecting the weight’s own magnitude, and the denominator term represents the sum of squares of the weight and all more important weights, reflecting the weight’s relative contribution among remaining weights. The smaller the denominator, the higher the weight’s proportion among remaining weights and the stronger its importance.

Based on the scoring results, the algorithm employs a progressive pruning strategy, prioritizing the removal of weight connections with the smallest LAMP scores until a predefined speed-up target is reached. By integrating dynamic importance assessment with hierarchical optimization strategies, the LAMP algorithm effectively identifies and preserves connections critical to feature extraction capabilities. This enables it to maintain the model’s feature representation capacity while significantly reducing computational complexity, allowing the pruned LFRE-YOLO model to achieve a favorable balance between performance and efficiency in resource-constrained scenarios for detecting external damage to transmission lines.
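The scoring and global selection steps can be sketched in plain Python. The code below computes LAMP scores for individual weights of each flattened layer and then removes the globally lowest-scoring fraction. It is a minimal illustration of the scoring rule only: the function names, the toy weights, and the use of a sparsity fraction as the stopping criterion are assumptions, and it does not reproduce the pruning pipeline actually applied to LFRE-YOLO.

```python
def lamp_scores(weights):
    """LAMP scores for one flattened layer, returned in the original weight order."""
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    scores = [0.0] * len(weights)
    tail = 0.0
    # walk from largest to smallest magnitude, accumulating the tail sum of squares
    for i in reversed(order):
        squared = weights[i] ** 2
        tail += squared
        scores[i] = squared / tail if tail > 0 else 0.0
    return scores


def global_lamp_mask(layers, sparsity):
    """Per-layer keep-masks after removing the `sparsity` fraction with lowest scores."""
    scored = [(score, li, wi)
              for li, layer in enumerate(layers)
              for wi, score in enumerate(lamp_scores(layer))]
    scored.sort(key=lambda item: item[0])
    n_prune = int(len(scored) * sparsity)
    masks = [[True] * len(layer) for layer in layers]
    for _, li, wi in scored[:n_prune]:
        masks[li][wi] = False
    return masks


# Toy example: the second layer has much smaller weights than the first.
layers = [[1.0, -2.0, 3.0], [0.1, 0.2]]
print(global_lamp_mask(layers, sparsity=0.5))
```

In this toy case a single global magnitude threshold would remove both weights of the second layer, whereas LAMP removes one weight from each layer: the largest weight in every layer always scores 1, so no layer is pruned away entirely.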

4. Results

4.1. Dataset Construction

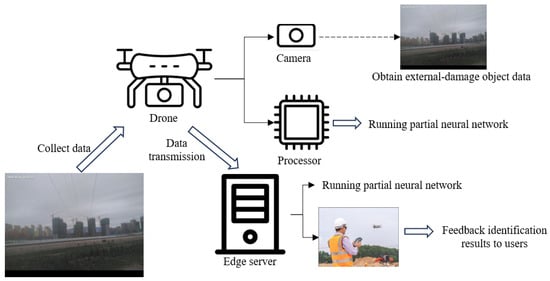

The original image dataset entirely comes from real business scenarios provided by a certain power company—power inspection videos collected by intelligent video inspection systems such as drones (as shown in Figure 5). The initial external force damage hazard dataset contained three common hazard targets: cranes, tower cranes, and construction machinery, totaling 1360 images. The original images of the dataset samples are shown in Figure 6.

Figure 5.

Transmission line external damage detection system framework.

Figure 6.

Dataset sample examples.

To prevent network model overfitting and improve model generalization capability, we selected four strategies from commonly used image data augmentation methods []: Gaussian noise, brightness adjustment, rotation, and mirroring to augment the original dataset. After augmentation, the dataset expanded to 4080 images. Sample quantities before and after augmentation are shown in Table 1. The dataset was divided into training, validation, and test sets in a 7:1:2 ratio.

Table 1. Dataset sample statistics.

4.2. Experimental Environment and Hyperparameter Settings

To verify the effectiveness of the proposed algorithm, we ran all experiments on Ubuntu 20.04 with an RTX 2080Ti GPU (11 GB), using Python 3.8.0, PyTorch 1.12.1, and CUDA 11.6. All training runs used identical hyperparameters: a batch size of 32, 400 training epochs, an input image size of 640 × 640, and an initial learning rate of 0.01. Specific hyperparameters are shown in Table 2.
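For readers who want to reproduce the setup, the four hyperparameters stated above can be collected in a small configuration object. The class name `TrainConfig` and its field names are illustrative only, and the iteration count assumes that the training subset holds 2856 images (70% of 4080) and that the last partial batch is kept.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters stated in the text; field names are hypothetical."""
    batch_size: int = 32
    epochs: int = 400
    image_size: int = 640
    initial_lr: float = 0.01


def iterations_per_epoch(num_train_images: int, cfg: TrainConfig) -> int:
    """Number of optimizer steps per epoch when the last partial batch is kept."""
    return math.ceil(num_train_images / cfg.batch_size)


cfg = TrainConfig()
steps = iterations_per_epoch(2856, cfg)  # 2856 = assumed size of the training split
print(steps, steps * cfg.epochs)  # 90 36000
```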

Table 2. Training hyperparameters.

4.3. Evaluation Metrics

To comprehensively verify the performance of our proposed method, we adopt two evaluation metrics for detection performance: the F1 score, which measures the model's overall performance and stability by balancing precision and recall, and mean Average Precision (mAP), which measures detection accuracy.

The calculation formulae are as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}$$

$$AP = \int_{0}^{1} P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $P$ and $R$ denote precision and recall, TP (True Positive) represents the number of correctly predicted positive samples, FP (False Positive) represents the number of other samples predicted as positive samples, and FN (False Negative) represents the number of positive samples predicted as other samples. $N$ represents the number of classification categories, and $AP_i$ represents the average precision value of the i-th category. Additionally, we introduce metrics for evaluating model complexity and computational efficiency, including giga floating-point operations (GFLOPs), Parameters (Params), Model Size, and frames per second (FPS). GFLOPs and Params are used to evaluate model computational complexity, while FPS represents model detection speed.
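The metric definitions above translate directly into code. The following sketch uses the standard definitions of precision, recall, F1, and mAP. The counts and per-class AP values in the usage lines are made-up numbers for illustration, not results from this study.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """Average of the per-class AP values (each AP is assumed to be given)."""
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical counts for one class: 90 TP, 10 FP, 30 FN.
p = precision(90, 10)   # 0.9
r = recall(90, 30)      # 0.75
print(round(f1_score(p, r), 4))

# Hypothetical AP values for the three hazard classes.
print(round(mean_average_precision([0.95, 0.93, 0.94]), 4))
```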

4.4. Impact of Large Convolution Kernels in Single Layers on Model Performance

Convolution kernel size often affects final network performance. In MixNet [], the authors analyzed the impact of different kernel sizes on network performance and ultimately mixed kernels of different sizes within the same layer of the network. However, this mixing slows down model inference. Therefore, to ensure low latency and high accuracy, we use only one kernel size in a single layer; using large convolution kernels (5 × 5 depth-wise convolution) in the last two layers of the feature extraction modules in the network backbone has been reported to be more competitive []. We examine the impact of large convolution kernels in single layers on model performance through experiments, with results shown in Table 3. A value of 1 means that the depth-wise convolution kernel in LFREConv is 5 × 5, and 0 means that it is 3 × 3.

Table 3. Impact of using large convolution kernels in single layers on model performance.

The experimental results show that large-kernel convolution can improve model performance with almost no increase in inference time, and that model performance is best when large convolution kernels are used in the last two layers of the network backbone.
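One reason the larger kernel is cheap is that a depth-wise convolution has only one k × k filter per channel. The sketch below compares parameter counts for an assumed channel width of 256. This width is chosen for illustration and is not taken from the LFRE-YOLO configuration; bias terms are ignored.

```python
def depthwise_params(channels: int, kernel: int) -> int:
    """One k x k filter per channel (bias ignored)."""
    return channels * kernel * kernel


def standard_conv_params(c_in: int, c_out: int, kernel: int) -> int:
    """Every output channel sees every input channel (bias ignored)."""
    return c_in * c_out * kernel * kernel


channels = 256  # hypothetical width, for illustration only
dw3 = depthwise_params(channels, 3)
dw5 = depthwise_params(channels, 5)
std3 = standard_conv_params(channels, channels, 3)

print(dw3, dw5, dw5 - dw3)  # 2304 6400 4096
print(std3)                 # 589824
```

Under these assumptions, moving from 3 × 3 to 5 × 5 adds 4096 parameters, which is small next to the 589,824 parameters of a standard 3 × 3 convolution of the same width.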

4.5. Performance Comparison Under Different Speeds

For the lightweight LFRE-YOLO model, we prune at different speed-up ratios, denoted Speed (computational complexity after pruning = computational complexity before pruning/Speed), and fine-tune the pruned models to recover accuracy, as shown in Table 4. The results show that, as Speed increases, model parameters and computational complexity fall markedly, but this does not necessarily reduce expression capability. Within the 1.0–1.3 Speed range, detection accuracy improves noticeably, with mAP50 rising from 93.0% to 94.1% and mAP50-95 rising from 68.5% to 70.2%. This indicates that the original LFRE-YOLO model contains some parameter redundancy and repeated feature learning. By identifying and removing these redundant connections, the LAMP algorithm not only makes the model lighter but also improves its feature expression capability, validating LAMP's effectiveness in eliminating network redundancy.

Table 4. Model performance comparison under different Speeds.

When the Speed reaches 1.3, the model achieves a favorable performance–efficiency balance point: relative to the unpruned LFRE-YOLO, parameters compress to 0.99 M (35.7% reduction) and computations reduce to 3.1 G (22.5% reduction), while mAP50 reaches 94.1%. This result shows that moderate structured pruning can not only significantly reduce model complexity but also improve model detection accuracy by eliminating feature redundancy.
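The relation between Speed and the pruning target, and the reduction percentages quoted above, can be checked with a short calculation. The function names are hypothetical. The unpruned values (1.54 M parameters, 4.0 G) are those reported for LFRE-YOLO before LAMP is applied.

```python
def flops_after_pruning(flops_before: float, speed: float) -> float:
    """Target complexity under the stated rule: after = before / Speed."""
    if speed < 1.0:
        raise ValueError("Speed is expected to be >= 1.0")
    return flops_before / speed


def reduction_percent(before: float, after: float) -> float:
    """Relative reduction in percent."""
    return (before - after) / before * 100.0


unpruned_gflops, unpruned_params = 4.0, 1.54  # LFRE-YOLO before pruning

for speed in (1.0, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6):
    target = flops_after_pruning(unpruned_gflops, speed)
    print(f"Speed {speed:.1f}: target {target:.2f} GFLOPs")

print(round(reduction_percent(unpruned_params, 0.99), 1))  # 35.7
print(round(reduction_percent(unpruned_gflops, 3.1), 1))   # 22.5
```

At Speed 1.3 the target is roughly 3.08 GFLOPs, consistent with the reported 3.1 G.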

As Speed increases further to 1.4–1.6, the model becomes lighter still, but detection accuracy begins to decline noticeably, with mAP50 gradually decreasing from 94.1% to 93.4% and mAP50-95 declining from 70.2% to 67.8%. This indicates that once pruning intensity exceeds a critical point, some connections crucial for detection performance are removed, damaging the model's feature extraction and expression capabilities.

Considering detection accuracy, parameter count, and computational complexity together, at 1.3× Speed the LFRE-YOLO model's parameter count and model size are significantly reduced, while detection performance not only shows no obvious decline but actually improves. Therefore, we select the pruned model under this configuration for subsequent deployment and applications.

4.6. Ablation Experiments

To verify the accuracy and lightweight effect of the proposed improved model in transmission line external force damage hazard target detection tasks, this paper designs four groups of ablation experiments with YOLOv10n as the baseline model, targeting each module. Ablation experiment results are shown in Table 5, where ✓ indicates adoption of the corresponding module.

Table 5. Ablation experiments.

From experimental results, we can see that after introducing LFREBlock as a feature extraction module in the backbone, the model shows significant improvements in both detection accuracy and computational efficiency. Compared to the baseline model YOLOv10n, mAP50 improves from 92.0% to 92.6%, and mAP50-95 increases from 66.2% to 68.0%. Parameters reduce from 2.27 M to 1.87 M, computational complexity decreases from 6.5 G to 5.3 G, and model size compresses from 5.5 M to 4.8 M. This demonstrates that LFREBlock achieves lightweight model design while enhancing feature representation capability through feature reuse and receptive field enhancement strategies.

Adding LFRE-Head on top of LFREBlock further improves model performance. The mAP50 value reaches 93.0%, and mAP50-95 improves to 68.5%. Parameters further reduce to 1.54 M, FLOPs decrease to 4.0 G, and model size shrinks to 3.6 M. These results show that LFRE-Head addresses the difficulty of lightening the regression branch of traditional detection heads: applying LFREConv to the regression branch makes the whole detection head lightweight while preserving spatial localization accuracy.

Finally, compressing the improved model with the LAMP algorithm yields the lightest model and the highest detection accuracy. The mAP50 value reaches 94.1% and mAP50-95 improves to 70.2%, improvements of 2.1 and 4.0 percentage points, respectively, over the baseline model. Parameters compress to 0.99 M, computational complexity reduces to 3.1 G, model size is only 2.4 M, and inference speed improves to 86.9 FPS. This indicates that some redundancy remains in the model after the LFREBlock and LFRE-Head modules are introduced. The LAMP algorithm identifies and removes these redundant connections through its layer-wise adaptive scoring mechanism, further optimizing the network structure and improving model performance and inference speed. Figure 7 illustrates the precision–recall curves for the ablation studies of the proposed components.

Overall, the proposed LFRE-YOLO model improves on the YOLOv10n model in lightweight design, detection accuracy, and inference speed, making it more suitable for deployment in resource-constrained practical application scenarios such as edge computing devices.
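To make the cumulative effect of each component easier to follow, the figures quoted in this subsection can be tabulated and compared against the baseline. The dictionary below contains only values stated in the text; the helper `summarize` and the key names are hypothetical.

```python
# Values quoted in the ablation discussion
# (mAP in %, params in M, GFLOPs in G, model size in M).
ABLATION = {
    "YOLOv10n (baseline)": {"mAP50": 92.0, "mAP50_95": 66.2, "params": 2.27, "gflops": 6.5, "size": 5.5},
    "+ LFREBlock": {"mAP50": 92.6, "mAP50_95": 68.0, "params": 1.87, "gflops": 5.3, "size": 4.8},
    "+ LFRE-Head": {"mAP50": 93.0, "mAP50_95": 68.5, "params": 1.54, "gflops": 4.0, "size": 3.6},
    "+ LAMP": {"mAP50": 94.1, "mAP50_95": 70.2, "params": 0.99, "gflops": 3.1, "size": 2.4},
}


def summarize(stage: str, baseline: str = "YOLOv10n (baseline)") -> dict:
    """Accuracy gains in percentage points and cost reductions in percent."""
    base, cur = ABLATION[baseline], ABLATION[stage]
    return {
        "mAP50_gain_pp": round(cur["mAP50"] - base["mAP50"], 1),
        "mAP50_95_gain_pp": round(cur["mAP50_95"] - base["mAP50_95"], 1),
        "params_cut_pct": round((base["params"] - cur["params"]) / base["params"] * 100, 1),
        "gflops_cut_pct": round((base["gflops"] - cur["gflops"]) / base["gflops"] * 100, 1),
    }


for stage in ABLATION:
    print(stage, summarize(stage))
```

For the final pruned model this gives gains of 2.1 and 4.0 percentage points and reductions of 56.4% in parameters and 52.3% in computation relative to YOLOv10n.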

Figure 7. The precision–recall curves: (a) baseline, (b) ours.

4.7. Comparison Experiments with Different Models

To further verify the proposed model, we compare LFRE-YOLO with other YOLO series models, including YOLOv5n, YOLOv6n, Gold-YOLO, YOLOv7-tiny, YOLOv8n, GELAN-t, and YOLOv11n, from three perspectives: detection accuracy, lightweight design, and inference speed. All comparative models were trained and tested on the transmission line external damage dataset constructed in this study, using the same training–validation–test split and the same environment. Results are shown in Table 6.

In terms of detection accuracy, LFRE-YOLO achieves mAP50 of 94.1% and mAP50-95 of 70.2%. Compared to YOLOv8n (92.4% mAP50), Gold-YOLO (92.3% mAP50), and YOLOv11n (91.9% mAP50), this is an improvement of 1.7, 1.8, and 2.2 percentage points, respectively.

In lightweight design, LFRE-YOLO has a clear advantage, with 0.99 M parameters, 3.1 GFLOPs, and 2.4 M model size. Compared to YOLOv8n, parameters reduce by 67.1% and computations decrease by 56.3%. Compared to YOLOv11n, parameters reduce by 61.6% and computations decrease by 50.8%. Even compared to the smallest official model, YOLOv5n, LFRE-YOLO still achieves a 43.8% parameter reduction and a 24.4% computation reduction.

In inference speed, LFRE-YOLO achieves 86.9 FPS, which is higher than most of the compared models. It differs from YOLOv5n by only 10.8 FPS while achieving a significant accuracy improvement, with mAP50 higher by 3.1 and mAP50-95 higher by 8.7 percentage points. Although YOLOv7-tiny's inference speed reaches 108.1 FPS, its mAP50 is 86.2% and its mAP50-95 is 54.9%, which are 7.9 and 15.3 percentage points lower than those of LFRE-YOLO, respectively. This further verifies LFRE-YOLO's balance between detection accuracy and inference speed.

Table 6. Comparison experiments with different models.

5. Discussion

This paper proposes an efficient method for detecting external damage targets in transmission lines. To address the insufficient cross-channel information interaction and limited receptive field of traditional depthwise separable convolution, we introduce LFREConv, LFREBlock, and LFRE-Head as the feature extraction modules and detection head of the original YOLOv10n framework. The improved LFRE-YOLO model achieves higher accuracy while significantly reducing computational cost. Because all three modules are designed around feature reuse, feature enhancement, and lightweight design, they enhance feature richness and improve model performance. LFREConv alleviates the insufficient cross-channel information interaction of traditional depthwise separable convolution, LFREBlock optimizes the feature extraction process through multi-layer cascading and attention mechanisms, and LFRE-Head makes the detection head lightweight while maintaining spatial localization accuracy. Additionally, the LAMP method further compresses model parameters through its layer-wise adaptive scoring mechanism, reducing model complexity while maintaining detection accuracy.

Experimental results demonstrate that the proposed lightweight method achieves higher accuracy on the transmission line external damage hazard dataset, with mAP50 improving from 92.0% to 94.1% and mAP50-95 increasing from 66.2% to 70.2%, while compressing parameters by 56.4% to 0.99 M, reducing computational complexity by 52.3% to 3.1 G, and achieving an inference speed of 86.9 FPS. Furthermore, comparative experiments with other mainstream YOLO series models validate its advantages in detection accuracy and lightweight design, with computational efficiency meeting real-time requirements. In summary, the method presented in this paper shows promising application prospects for edge deployment of intelligent monitoring systems for transmission lines and provides new approaches for related research.

Future work will address two limitations. First, due to experimental limitations in this study, we have not verified detection performance under different climatic conditions (such as fog, rain, and snow scenarios). Future research will construct larger-scale datasets covering various climatic conditions to improve model generalization capability. Second, although the model theoretically meets the conditions for edge deployment, its effectiveness and stability in actual engineering applications on real platforms such as unmanned aerial vehicles and embedded devices still need to be validated.

Author Contributions

M.L.: Concept design, data organization, formal analysis, research investigation, methodology, software development, initial draft writing, review and editing; B.W.: Concept design, data organization, research investigation, methodology, initial draft writing, review and editing; M.C.: Concept design, research investigation, resource management, software development, validation, review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Hubei Province, grant number 2022CFA007, and the Hubei Provincial Central Guidance Local Science and Technology Development Project, grant number 2023EGA027.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

The authors wish to thank the editor and reviewers for their valuable suggestions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jia, H.; Han, Z.; Xu, X.; Wu, P.; Qin, R.; Jin, Y.; Wang, X.; Huang, W. A Background Reasoning Framework for External Force Damage Detection in Distribution Network. In Proceedings of the Annual Conference of China Electrotechnical Society, Beijing, China, 17–18 September 2022; Springer: Singapore, 2022; pp. 771–778.

- Liu, Z.; Wu, G.; He, W.; Fan, F.; Ye, X. Key target and defect detection of high-voltage power transmission lines with deep learning. Int. J. Electr. Power Energy Syst. 2022, 142, 108277.

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

- Xu, S.; Wang, J.; Shou, W.; Ngo, T.; Sadick, A.M.; Wang, X. Computer vision techniques in construction: A critical review. Arch. Comput. Methods Eng. 2021, 28, 3383.

- Ai, D.; Jiang, G.; Lam, S.K.; He, P.; Li, C. Computer vision framework for crack detection of civil infrastructure—A review. Eng. Appl. Artif. Intell. 2023, 117, 105478.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Yu, G.; Chang, Q.; Lv, W.; Xu, C.; Cui, C.; Ji, W.; Dang, Q.; Deng, K.; Wang, G.; Du, Y.; et al. PP-PicoDet: A better real-time object detector on mobile devices. arXiv 2021, arXiv:2111.00902.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Song, G.; Liu, Y.; Wang, X. Revisiting the sibling head in object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11563–11572.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 23 November 2025).

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. Yolov10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Xiang, X.; Lv, N.; Guo, X.; Wang, S.; El Saddik, A. Engineering vehicles detection based on modified faster R-CNN for power grid surveillance. Sensors 2018, 18, 2258.

- Qu, L.; Liu, K.; He, Q.; Tang, J.; Liang, D. External damage risk detection of transmission lines using e-ohem enhanced faster r-cnn. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018; Springer: Cham, Switzerland, 2018; pp. 260–271.

- Liu, P.; Song, C.; Li, J.; Yang, S.X.; Chen, X.; Liu, C.; Fu, Q. Detection of transmission line against external force damage based on improved YOLOv3. Int. J. Robot. Autom. 2020, 35, 460–468.

- Li, H.; Jiang, F.; Guo, F.; Meng, W. A real-time detection method of safety hazards in transmission lines based on YOLOv5s. In Proceedings of the International Conference on Artificial Intelligence and Intelligent Information Processing (AIIIP 2022), Qingdao, China, 17–19 June 2022; SPIE: Cergy-Pontoise, France, 2022; Volume 12456, pp. 283–287.

- Zou, H.; Ye, Z.; Sun, J.; Chen, J.; Yang, Q.; Chai, Y. Research on detection of transmission line corridor external force object containing random feature targets. Front. Energy Res. 2024, 12, 1295830.

- Li, J.; Zheng, H.; Cui, Z.; Huang, Z.; Liang, Y.; Li, P.; Liu, P. Intelligent detection method with 3D ranging for external force damage monitoring of power transmission lines. Appl. Energy 2024, 374, 123983.

- Zou, H.; Yang, J.; Sun, J.; Yang, C.; Luo, Y.; Chen, J. Detection method of external damage hazards in transmission line corridors based on YOLO-LSDW. Energies 2024, 17, 4483.

- Li, H.; Wu, Z.; Sun, Y.; Wu, X.; Luo, K.; Zhu, Q.; Wang, G. A lightweight RKM-YOLO algorithm for transmission line fault inspection. Energy Rep. 2025, 14, 1509–1522.

- Wang, J.; Wang, Y.; Li, X.; Yuan, B.; Wang, M. SCI-YOLO11: An improved defect detection algorithm for transmission line insulators based on YOLO11. PLoS ONE 2025, 20, e0322561.

- Ji, Y.; Ma, T.; Shen, H.; Feng, H.; Zhang, Z.; Li, D.; He, Y. Transmission Line Defect Detection Algorithm Based on Improved YOLOv12. Electronics 2025, 14, 2432.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both weights and connections for efficient neural network. In Proceedings of the 29th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015.

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning filters for efficient convnets. arXiv 2016, arXiv:1608.08710.

- He, Y.; Zhang, X.; Sun, J. Channel pruning for accelerating very deep neural networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1389–1397.

- Luo, J.H.; Wu, J.; Lin, W. Thinet: A filter level pruning method for deep neural network compression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5058–5066.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Lee, J.; Park, S.; Mo, S.; Ahn, S.; Shin, J. Layer-adaptive sparsity for the magnitude-based pruning. arXiv 2020, arXiv:2010.07611.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Tan, M.; Le, Q.V. Mixconv: Mixed depthwise convolutional kernels. arXiv 2019, arXiv:1907.09595.

- Cui, C.; Gao, T.; Wei, S.; Du, Y.; Guo, R.; Dong, S.; Lu, B.; Zhou, Y.; Lv, X.; Liu, Q.; et al. PP-LCNet: A lightweight CPU convolutional neural network. arXiv 2021, arXiv:2109.15099.

- Ultralytics. Ultralytics/Yolov5: v7.0—YOLOv5 SOTA Realtime Instance Segmentation. 2022. Available online: https://github.com/ultralytics/yolov5 (accessed on 7 May 2023).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism. arXiv 2023, arXiv:2309.11331.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. Yolov9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 1–21.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).