BERT Fine-Tuning for Software Requirement Classification: Impact of Model Components and Dataset Size

Abstract

1. Introduction

1.1. Research Problem

- Assessing the sensitivity of model performance to different hyperparameter settings

- Identifying the key components of the fine-tuning process that contribute most to classification accuracy

- Determining the minimum and optimal dataset sizes required for effective fine-tuning

1.2. Research Questions

- RQ1: Which hyperparameters have the most significant impact on BERT fine-tuning effectiveness for the SRC task?

- RQ2: How does dataset size affect BERT performance in the SRC task?

- RQ3: What is the optimal combination of dataset size and hyperparameter settings for maximizing performance in SRC tasks?

1.3. Research Contribution

- Optimally tuned BERT model for SRC

- Empirical analysis of fine-tuning factors

2. Literature Review

3. Materials and Methods

3.1. Datasets

- (A) PROMISE Dataset:

- (B) PURE Dataset:

3.2. BERT Model Configuration

3.3. Fine-Tuning Process

4. Results and Discussion

4.1. Experimental Results

4.2. Fine-Tuned BERT Model Results for the SRC Task

| (a) | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| FR | 0.98 | 0.98 | 0.98 | 255 |
| NFR | 0.99 | 0.99 | 0.99 | 370 |
| Accuracy | | | 0.98 | 625 |
| Macro Avg | 0.98 | 0.98 | 0.98 | 625 |
| Weighted Avg | 0.98 | 0.98 | 0.98 | 625 |

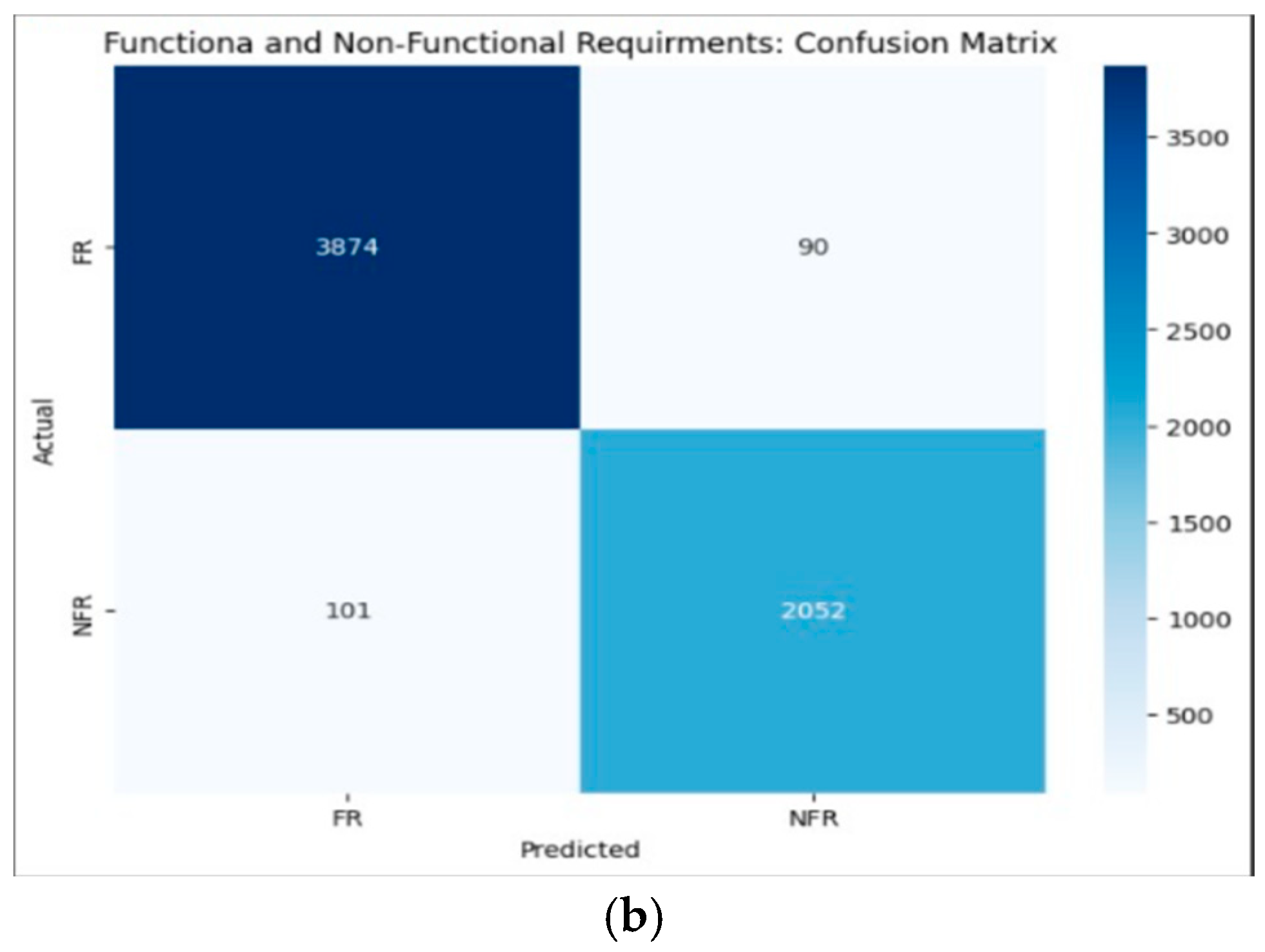

| (b) | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| FR | 0.97 | 0.98 | 0.98 | 3964 |
| NFR | 0.96 | 0.95 | 0.96 | 2153 |
| Accuracy | | | 0.97 | 6117 |
| Macro Avg | 0.97 | 0.97 | 0.97 | 6117 |
| Weighted Avg | 0.97 | 0.97 | 0.97 | 6117 |
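The averaged rows of the two reports can be related to the per-class rows with a few lines of arithmetic. The following is a minimal sketch, assuming the reports follow the usual convention in which the macro average is the unweighted mean over classes and the weighted average is the support-weighted mean. The function names are illustrative and do not come from the paper. Because the inputs are the already-rounded per-class values, the results only approximate the reported averages.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def macro_avg(values):
    """Unweighted mean over classes."""
    return sum(values) / len(values)


def weighted_avg(values, supports):
    """Mean over classes, weighted by the number of samples per class."""
    total = sum(supports)
    return sum(v * s for v, s in zip(values, supports)) / total


# Per-class (F1, support) pairs copied from reports (a) and (b).
# These are rounded to two decimals in the source.
report_a = {"FR": (0.98, 255), "NFR": (0.99, 370)}
report_b = {"FR": (0.98, 3964), "NFR": (0.96, 2153)}

for name, report in (("a", report_a), ("b", report_b)):
    f1s = [f1 for f1, _ in report.values()]
    supports = [n for _, n in report.values()]
    print(name, sum(supports), macro_avg(f1s), weighted_avg(f1s, supports))
```

The support totals recovered this way (625 and 6117) match the totals listed in the reports, which is a quick consistency check on the per-class rows.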

4.3. Hyperparameter Ablation

4.3.1. Effect of Training Epochs on BERT Fine-Tuning Performance

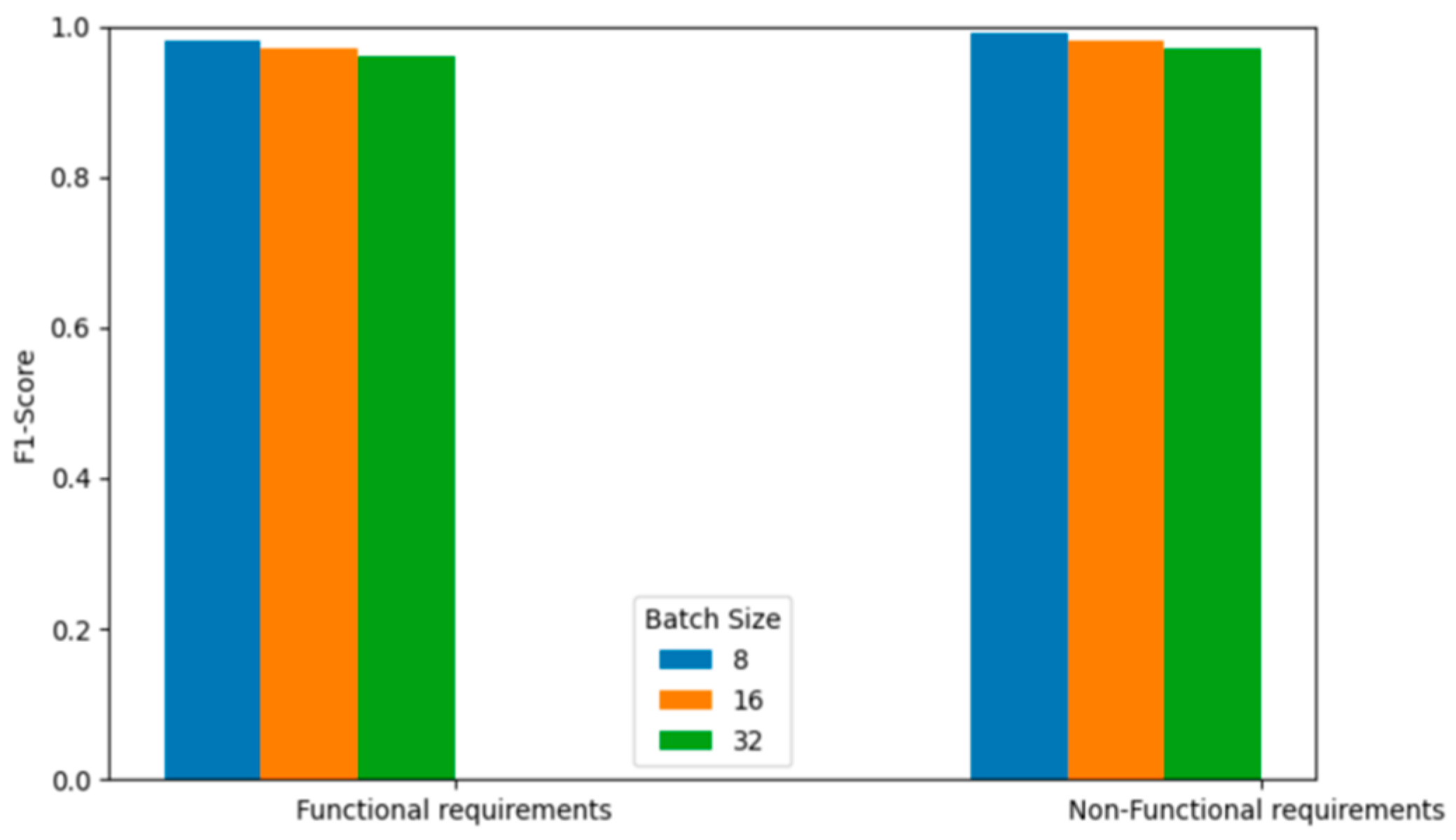

4.3.2. Effect of Batch Size Selection on BERT Fine-Tuning Performance

4.3.3. Effect of Learning Rate on BERT Fine-Tuning Performance

| Parameter | PROMISE Dataset | FR_NFR Dataset |
|---|---|---|
| Optimizer | Adam | Adam |
| Max Seq Length | 256 | 256 |
| Dropout Rate | 0.2 | 0.2 |
| Batch Size | 8 | 16 |
| Learning Rate | 1 × 10⁻⁵ | 1 × 10⁻⁵ |
| Epochs | 8 | 4 |
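The settings in the table can be captured as a small configuration object. This is a minimal sketch, not the authors' code: the class name `FineTuneConfig`, the method `total_steps`, and the training-set size `n_train_example` are hypothetical and used only for illustration. The train/test split is not stated in this section, so the step counts below are not results from the paper.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    """Hyperparameters from the table; defaults are the shared values."""
    optimizer: str = "Adam"
    max_seq_length: int = 256
    dropout: float = 0.2
    learning_rate: float = 1e-5
    batch_size: int = 8
    epochs: int = 8

    def total_steps(self, n_train: int) -> int:
        """Number of optimizer updates for a training set of n_train samples."""
        return math.ceil(n_train / self.batch_size) * self.epochs


# Only batch size and epoch count differ between the two datasets.
PROMISE = FineTuneConfig(batch_size=8, epochs=8)
FR_NFR = FineTuneConfig(batch_size=16, epochs=4)

# Hypothetical training-set size, chosen only to illustrate the arithmetic.
n_train_example = 500
print(PROMISE.total_steps(n_train_example))
print(FR_NFR.total_steps(n_train_example))
```

Keeping the shared values as defaults makes the per-dataset differences (batch size and epochs) explicit at the point where each configuration is created.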

| Study | Technique | FR P | FR R | FR F | NFR P | NFR R | NFR F | F1 Avg |
|---|---|---|---|---|---|---|---|---|
| [7] | SVM (word features) | 0.92 | 0.93 | 0.93 | 0.93 | 0.92 | 0.92 | 0.93 |
| [8] | Naïve Bayes (TF-IDF) | 0.80 | 0.93 | 0.86 | 0.95 | 0.85 | 0.90 | 0.88 |
| [17] | BERT classifier | 0.92 | 0.88 | 0.90 | 0.92 | 0.95 | 0.93 | 0.92 |
| [8] | BERT+GAT | 0.94 | 0.90 | 0.92 | 0.94 | 0.98 | 0.96 | 0.94 |
| [10] | Processed data | 0.90 | 0.97 | 0.93 | 0.98 | 0.93 | 0.95 | 0.94 |
| [6] | PRCBERT with RoBERTa-large | 0.92 | 0.95 | 0.93 | 0.94 | 0.96 | 0.95 | 0.94 |
| Our model | BERT (fine-tuned) | 0.98 | 0.98 | 0.98 | 0.99 | 0.99 | 0.99 | 0.99 |
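The F1 Avg column appears consistent with the mean of the FR and NFR F-scores, rounded half up to two decimals. The source does not state how the column was computed, so this is an assumption. The sketch below checks that assumption against every row of the table, using `Decimal` so that values such as 0.925 round predictably. The helper name `f1_avg` is illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

# (FR F, NFR F, reported F1 Avg) copied from the comparison table.
rows = {
    "[7] SVM (word features)": ("0.93", "0.92", "0.93"),
    "[8] Naive Bayes (TF-IDF)": ("0.86", "0.90", "0.88"),
    "[17] BERT classifier": ("0.90", "0.93", "0.92"),
    "[8] BERT+GAT": ("0.92", "0.96", "0.94"),
    "[10] Processed data": ("0.93", "0.95", "0.94"),
    "[6] PRCBERT with RoBERTa-large": ("0.93", "0.95", "0.94"),
    "Our model: BERT (fine-tuned)": ("0.98", "0.99", "0.99"),
}


def f1_avg(fr_f: str, nfr_f: str) -> Decimal:
    """Mean of the two class F-scores, rounded half up to two decimals."""
    mean = (Decimal(fr_f) + Decimal(nfr_f)) / 2
    return mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


for label, (fr_f, nfr_f, reported) in rows.items():
    print(label, f1_avg(fr_f, nfr_f), "reported:", reported)
```

Under this assumption the last row's mean of 0.985 rounds up to 0.99, whereas report (a) in Section 4.2 lists a macro-average F1 of 0.98. The gap is within the rounding of the per-class values.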

5. Limitations and Future Work

- Scaling the model to handle larger software requirements datasets while maintaining efficiency and accuracy.

- Developing specialized models for fine-grained classification of individual NFR types.

- Creating adaptive tuning mechanisms that automatically adjust hyperparameters based on dataset characteristics.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ozkaya, M.; Kardas, G.; Kose, M.A. An Analysis of the Features of Requirements Engineering Tools. Systems 2023, 11, 576.

2. Wiegers, K.E.; Beatty, J. Software Requirements, 3rd ed.; Best practices; Microsoft Press: Redmond, WA, USA, 2013; ISBN 978-0-7356-7966-5.

3. Kaur, K.; Kaur, P. The Application of AI Techniques in Requirements Classification: A Systematic Mapping. Artif. Intell. Rev. 2024, 57, 57.

4. Shreda, Q.A.; Hanani, A.A. Identifying Non-Functional Requirements from Unconstrained Documents Using Natural Language Processing and Machine Learning Approaches. IEEE Access 2025, 13, 124159–124179.

5. Kaur, K.; Kaur, P. BERT-CNN: Improving BERT for Requirements Classification Using CNN. Procedia Comput. Sci. 2023, 218, 2604–2611.

6. Luo, X.; Xue, Y.; Xing, Z.; Sun, J. PRCBERT: Prompt Learning for Requirement Classification Using BERT-Based Pretrained Language Models. In Proceedings of the 37th IEEE/ACM International Conference on Automated Software Engineering, Rochester, MI, USA, 10–14 October 2022; ACM: New York, NY, USA, 2022; pp. 1–13.

7. Kurtanović, Z.; Maalej, W. Automatically Classifying Functional and Non-Functional Requirements Using Supervised Machine Learning. In Proceedings of the 2017 IEEE 25th International Requirements Engineering Conference (RE), Lisbon, Portugal, 4–8 September 2017; pp. 490–495.

8. Li, G.; Zheng, C.; Li, M.; Wang, H. Automatic Requirements Classification Based on Graph Attention Network. IEEE Access 2022, 10, 30080–30090.

9. EzzatiKarami, M.; Madhavji, N.H. Automatically Classifying Non-Functional Requirements with Feature Extraction and Supervised Machine Learning Techniques: A Research Preview. In Proceedings of the Requirements Engineering: Foundation for Software Quality, Virtual, 12–15 April 2021; Dalpiaz, F., Spoletini, P., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 71–78.

10. Abad, Z.S.H.; Karras, O.; Ghazi, P.; Glinz, M.; Ruhe, G.; Schneider, K. What Works Better? A Study of Classifying Requirements. In Proceedings of the 2017 IEEE 25th International Requirements Engineering Conference (RE), Lisbon, Portugal, 4–8 September 2017; pp. 496–501.

11. Haque, M.A.; Rahman, M.A.; Siddik, M.S. Non-Functional Requirements Classification with Feature Extraction and Machine Learning: An Empirical Study. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), Dhaka, Bangladesh, 3–5 May 2019; pp. 1–5.

12. Jindal, R.; Malhotra, R.; Jain, A.; Bansal, A. Mining Non-Functional Requirements Using Machine Learning Techniques. e-Inform. Softw. Eng. J. 2021, 15, 85–114.

13. Airlangga, G. Enhancing Software Requirements Classification with Semisupervised GAN-BERT Technique. J. Electr. Comput. Eng. 2024, 2024, 4955691.

14. Navarro-Almanza, R.; Juarez-Ramirez, R.; Licea, G. Towards Supporting Software Engineering Using Deep Learning: A Case of Software Requirements Classification. In Proceedings of the 2017 5th International Conference in Software Engineering Research and Innovation (CONISOFT), Merida, Mexico, 25–27 October 2017; pp. 116–120.

15. Baker, C.; Deng, L.; Chakraborty, S.; Dehlinger, J. Automatic Multi-Class Non-Functional Software Requirements Classification Using Neural Networks. In Proceedings of the 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC), Milwaukee, WI, USA, 15–19 July 2019; Volume 2, pp. 610–615.

16. Kici, D.; Malik, G.; Cevik, M.; Parikh, D.; Başar, A. A BERT-Based Transfer Learning Approach to Text Classification on Software Requirements Specifications. In Proceedings of the 34th Canadian Conference on Artificial Intelligence, Vancouver, BC, Canada, 25–28 May 2021.

17. Hey, T.; Keim, J.; Koziolek, A.; Tichy, W.F. NoRBERT: Transfer Learning for Requirements Classification. In Proceedings of the 2020 IEEE 28th International Requirements Engineering Conference (RE), Zurich, Switzerland, 31 August–4 September 2020; IEEE: New York, NY, USA, 2020; pp. 169–179.

18. PURE: A Dataset of Public Requirements Documents. In Proceedings of the 2017 IEEE 25th International Requirements Engineering Conference (RE), Lisbon, Portugal, 4–8 September 2017; Available online: https://ieeexplore.ieee.org/document/8049173 (accessed on 16 September 2024).

19. Sonali, S.; Thamada, S. FR_NFR_Dataset 2024. Available online: https://data.mendeley.com/datasets/4ysx9fyzv4/1 (accessed on 12 September 2025).

20. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, Minnesota, 3–5 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Volume 1 (Long and Short Papers); Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 4171–4186.

21. Gardazi, N.M.; Daud, A.; Malik, M.K.; Bukhari, A.; Alsahfi, T.; Alshemaimri, B. BERT Applications in Natural Language Processing: A Review. Artif. Intell. Rev. 2025, 58, 166.

22. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

23. Gkouti, N.; Malakasiotis, P.; Toumpis, S.; Androutsopoulos, I. Should I Try Multiple Optimizers When Fine-Tuning Pre-Trained Transformers for NLP Tasks? Should I Tune Their Hyperparameters? arXiv 2024, arXiv:2402.06948.

24. Taj, S.; Daudpota, S.M.; Imran, A.S.; Kastrati, Z. Aspect-Based Sentiment Analysis for Software Requirements Elicitation Using Fine-Tuned Bidirectional Encoder Representations from Transformers and Explainable Artificial Intelligence. Eng. Appl. Artif. Intell. 2025, 151, 110632.

25. Mosbach, M.; Andriushchenko, M.; Klakow, D. On the Stability of Fine-Tuning BERT: Misconceptions, Explanations, and Strong Baselines. arXiv 2021, arXiv:2006.04884.

26. Zhao, L.; Alhoshan, W. Machine Learning for Requirements Classification. In Handbook on Natural Language Processing for Requirements Engineering; Ferrari, A., Ginde, G., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 19–59. ISBN 978-3-031-73143-3.

| Requirement | Class | Dataset |
|---|---|---|
| “The system shall refresh the display every 60 s.” | NFR | PROMISE |
| “The system shall filter data by: Venues and Key Events.” | FR | PROMISE |
| “The app shall run on a smart phone with Android OS least version 2.3.” | NFR | FR_NFR dataset |
| “User shall be able to add, modify, or remove user data, with changes reflected successfully.” | FR | FR_NFR dataset |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Eltahier, S.; Dawood, O.; Saeed, I. BERT Fine-Tuning for Software Requirement Classification: Impact of Model Components and Dataset Size. Information 2025, 16, 981. https://doi.org/10.3390/info16110981
