Agent-Poster: A Multi-Scale Feature Fusion Emotion Recognition Model Based on an Agent Attention Mechanism

Abstract

1. Introduction

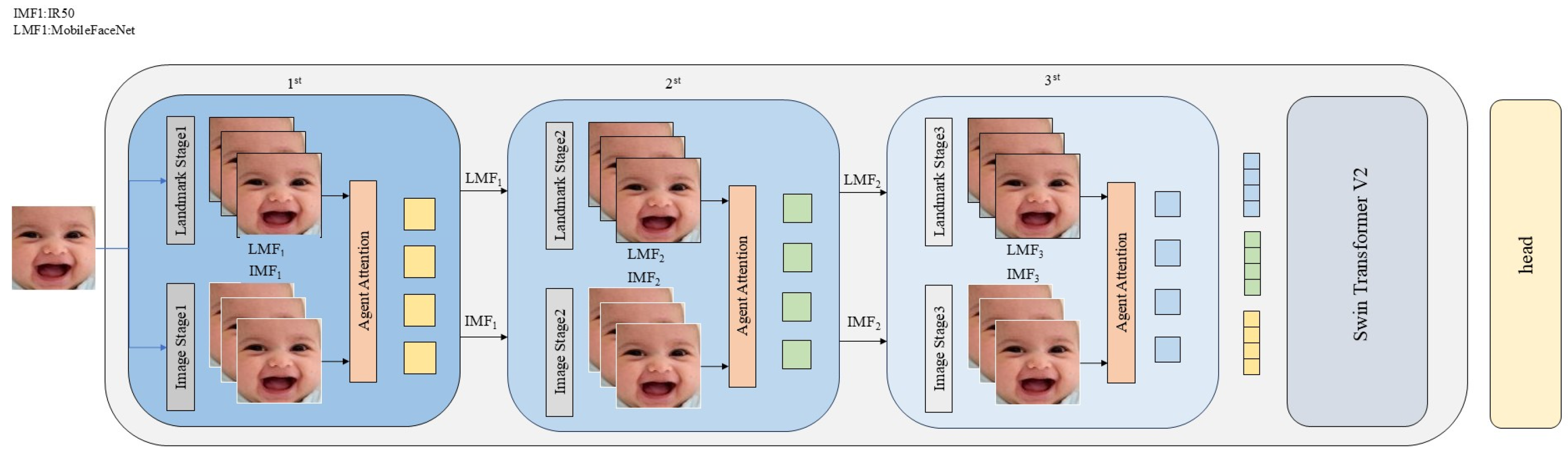

- Introducing Agent Attention: We introduce an Agent Attention mechanism [19] to enhance cross-modal interaction between facial images and landmarks in facial expression recognition. It adds agent tokens that act as intermediaries between queries and key–value pairs, reducing computational complexity while retaining global modeling capability.

- Simplifying the Dual-Stream Architecture: We streamline the original bidirectional cross-attention by removing the redundant image-to-landmark branch and retaining only the unidirectional landmark-to-image attention.

- Optimizing Multi-Scale Feature Fusion: Multi-scale features are extracted directly from the backbone network and fused via a lightweight module comprising a two-layer Swin Transformer V2, replacing the original ViT. This approach achieves efficient cross-scale integration and improves robustness to scale variations.
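The agent-token idea in the first contribution can be sketched in a few lines of NumPy. This is an illustrative sketch, not the paper's implementation: it follows the two-step formulation of Agent Attention [19] (agent aggregation, then agent broadcast) for a single head, omits the bias and depthwise-convolution terms of the original method, and assumes that landmark tokens serve as queries and image tokens as keys and values. The function name `agent_cross_attention`, the token counts, and the choice of building agents by average-pooling the queries are assumptions made for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def agent_cross_attention(q, k, v, agents):
    """Single-head agent attention (hypothetical helper, simplified).

    q:      (N, d) query tokens (assumed: landmark features)
    k, v:   (M, d) key/value tokens (assumed: image features)
    agents: (n, d) agent tokens, with n much smaller than N and M
    """
    scale = 1.0 / np.sqrt(q.shape[-1])
    # Step 1, agent aggregation: agents act as queries over keys/values.
    agent_values = softmax(agents @ k.T * scale) @ v      # (n, d)
    # Step 2, agent broadcast: the real queries attend to the agents.
    return softmax(q @ agents.T * scale) @ agent_values  # (N, d)


rng = np.random.default_rng(0)
landmark_tokens = rng.standard_normal((49, 64))   # N = 49 (assumed size)
image_tokens = rng.standard_normal((196, 64))     # M = 196 (assumed size)
# Assumption: agents are obtained by average-pooling groups of 7 queries.
agents = landmark_tokens.reshape(7, 7, 64).mean(axis=1)  # n = 7

fused = agent_cross_attention(landmark_tokens, image_tokens, image_tokens, agents)
print(fused.shape)  # (49, 64)
```

With these sizes the two softmax maps hold 7 × 196 + 49 × 7 = 1715 scores, compared with 49 × 196 = 9604 for full softmax cross-attention, which is where the reduction in complexity comes from. Only the landmark-to-image direction is computed here, mirroring the unidirectional design described in the second contribution.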

2. Related Work

3. Methods

3.1. Overall Architecture

3.2. Agent Attention

3.3. Swin Transformer V2

4. Experiments

4.1. Datasets

4.2. Experiment Setup

4.3. Ablation Study

4.4. Cross-Dataset Experiment

4.5. Comparison

4.6. FLOPs and Param Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region Attention Networks for Pose and Occlusion Robust Facial Expression Recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

- Yang, Y.; Jia, B.; Zhi, P.; Huang, S. PhyScene: Physically Interactable 3D Scene Synthesis for Embodied AI. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 1–18.

- Gao, Z.; Patras, I. Self-Supervised Facial Representation Learning with Facial Region Awareness. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 2081–2088.

- Liu, Y.; Wang, W.; Zhan, Y.; Feng, S.; Liu, K.; Chen, Z. Pose-Disentangled Contrastive Learning for Self-supervised Facial Representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9717–9728.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Han, D.; Yun, S.; Heo, B.; Yoo, Y.J. REXNet: Diminishing Representational Bottleneck on Convolutional Neural Network. arXiv 2020, arXiv:2007.00992.

- Mao, J.; Xu, R.; Yin, X.; Chang, Y.; Nie, B.; Huang, A. POSTER++: A Simpler and Stronger Facial Expression Recognition Network. Pattern Recognit. 2025, 157, 110951.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems 30; Curran Associates, Inc.: New York, NY, USA, 2017; pp. 5998–6008.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations, Virtual Event, 3–7 May 2021.

- Mollahosseini, A.; Chan, D.; Mahoor, M.H. Going Deeper in Facial Expression Recognition Using Deep Neural Networks. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision, Lake Placid, NY, USA, 7–10 March 2016; pp. 1–10.

- Shao, J.; Qian, Y. Three Convolutional Neural Network Models for Facial Expression Recognition in the Wild. Neurocomputing 2019, 355, 82–92.

- Gürsesli, M.C.; Lombardi, S.; Duradoni, M.; Guazzini, A. Facial Emotion Recognition (FER) Through Custom Lightweight CNN Model: Performance Evaluation in Public Datasets. IEEE Access 2024, 12, 45543–45559.

- Zhong, L.; Liu, Q.; Yang, P.; Liu, B.; Huang, J.; Metaxas, D.N. Learning Active Facial Patches for Expression Analysis. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2562–2569.

- Savchenko, A.V. Facial Expression and Attributes Recognition Based on Multi-Task Learning of Lightweight Neural Networks. In Proceedings of the 2021 IEEE 19th International Symposium on Intelligent Systems and Informatics, Subotica, Serbia, 16–18 September 2021; pp. 119–124.

- Sang, D.V.; Ha, P.T. Discriminative Deep Feature Learning for Facial Emotion Recognition. In Proceedings of the 2018 1st International Conference on Multimedia Analysis and Pattern Recognition, Hanoi, Vietnam, 16–17 April 2018; pp. 1–6.

- Kim, S.; Nam, J.; Ko, B.C. Facial Expression Recognition Based on Squeeze Vision Transformer. Sensors 2022, 22, 3729.

- Li, H.; Sui, M.; Zhu, Z.; Zhao, F. MFEViT: A Robust Lightweight Transformer-Based Network for Multimodal 2D+3D Facial Expression Recognition. arXiv 2021, arXiv:2109.13086.

- Han, D.; Ye, T.; Han, Y.; Xia, Z.; Song, S.; Huang, G. Agent Attention: On the Integration of Softmax and Linear Attention. arXiv 2023, arXiv:2312.08874.

- Zhao, G.; Pietikäinen, M. Dynamic Texture Recognition Using Local Binary Patterns with an Application to Facial Expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 915–928.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Liu, C.; Hirota, K.; Dai, Y. Patch Attention Convolutional Vision Transformer for Facial Expression Recognition with Occlusion. Inf. Sci. 2023, 619, 781–794.

- Chen, X.; Zheng, X.; Sun, K.; Liu, W.; Zhang, Y. Self-Supervised Vision Transformer-Based Few-Shot Learning for Facial Expression Recognition. Inf. Sci. 2023, 634, 206–226.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4690–4699.

- Chen, C.J. PyTorch Face Landmark: A Fast and Accurate Facial Landmark Detector. 2021. Available online: https://github.com/cunjian/pytorch_face_landmark (accessed on 19 October 2025).

- Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11999–12009.

- Wang, Z.; Yan, C.; Hu, Z. Lightweight Multi-Scale Network with Attention for Facial Expression Recognition. In Proceedings of the 4th International Conference on Advanced Electronic Materials, Computers and Software Engineering, Zhuhai, China, 26–28 March 2021; pp. 695–698.

- Chen, Y.; Wu, L.; Wang, C. A Micro-Expression Recognition Method Based on Multi-Level Information Fusion Network. Acta Autom. Sin. 2024, 50, 1445–1457.

- Li, Y.; Li, S.; Sun, G.; Han, X.; Liu, Y. Lightweight Swin Transformer Combined with Multi-Scale Feature Fusion for Face Expression Recognition. Opt.-Electron. Eng. 2025, 52, 240234.

- Zheng, C.; Mendieta, M.; Chen, C. POSTER: A Pyramid Cross-Fusion Transformer Network for Facial Expression Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Paris, France, 2–3 October 2023; pp. 3138–3147.

- Hazirbulan, I.; Zafeiriou, S.; Pantic, M. Facial Expression Recognition Using Enhanced Deep 3D Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1234–1243.

- Li, H.; Wang, N.; Yu, Y.; Wang, X. Facial Expression Recognition with Grid-Wise Attention and Visual Transformer. Inf. Sci. 2021, 580, 35–54.

- Li, S.; Deng, W.; Du, J. Reliable Crowdsourcing and Deep Locality-Preserving Learning for Expression Recognition in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2584–2593.

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild. IEEE Trans. Affect. Comput. 2019, 10, 18–31.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Xue, F.; Wang, Q.; Guo, G. TransFER: Learning Relation-Aware Facial Expression Representations with Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3601–3610.

- Zhang, Y.; Wang, C.; Ling, X.; Deng, W. Learn from All: Erasing Attention Consistency for Noisy Label Facial Expression Recognition. In Proceedings of the 17th European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 418–434.

- Zhang, S.; Zhang, Y.; Zhang, Y.; Wang, Y.; Song, Z. A Dual-Direction Attention Mixed Feature Network for Facial Expression Recognition. Electronics 2023, 12, 3595.

- Lee, I.; Lee, E.; Yoo, S.B. Latent-OFER: Detect, Mask, and Reconstruct with Latent Vectors for Occluded Facial Expression Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 1536–1546.

- Chen, Y.; Li, J.; Shan, S.; Wang, M.; Hong, R. From Static to Dynamic: Adapting Landmark-Aware Image Models for Facial Expression Recognition in Videos. IEEE Trans. Affect. Comput. 2024, 16, 624–638.

- Colares, W.G.; Costa, M.G.F.; Costa Filho, C.F.F. Enhancing Emotion Recognition: A Dual-Input Model for Facial Expression Recognition Using Images and Facial Landmarks. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Orlando, FL, USA, 15–18 July 2024; pp. 1–5.

- Huang, Y. FERMixNet: An Occlusion Robust Facial Expression Recognition Model With Facial Mixing Augmentation and Mid-Level Representation Learning. IEEE Trans. Affect. Comput. 2025, 16, 639–654.

| Dataset | Train Size | Test Size | Classes |
|---|---|---|---|
| RAF-DB | 12,271 | 3068 | 7 |
| AffectNet | 280,401 | 3500 | 7 |

| Method | Components | RAF-DB (%) | AffectNet (%) | Statistical Significance |
|---|---|---|---|---|
| A1 Baseline | - | 0.12 | 0.15 | - |
| A2 = A1+ | Multi-scale feature extraction | 0.14 | | p < 0.05 |
| A3 = A2+ | Agent Attention | 0.16 | | p < 0.01 |
| A4 = A3+ | Lightweight Swin Transformer V2 | 0.13 | | p < 0.001 |
| Agent-Poster (Ours) | All components with L → I fusion | 0.09 | 0.11 | p < 0.001 |

| Method | RA (%) | AR (%) |
|---|---|---|
| POSTER++ [8] | 60.13 | 87.62 |
| Agent-Poster (Ours) | 63.45 | 89.17 |

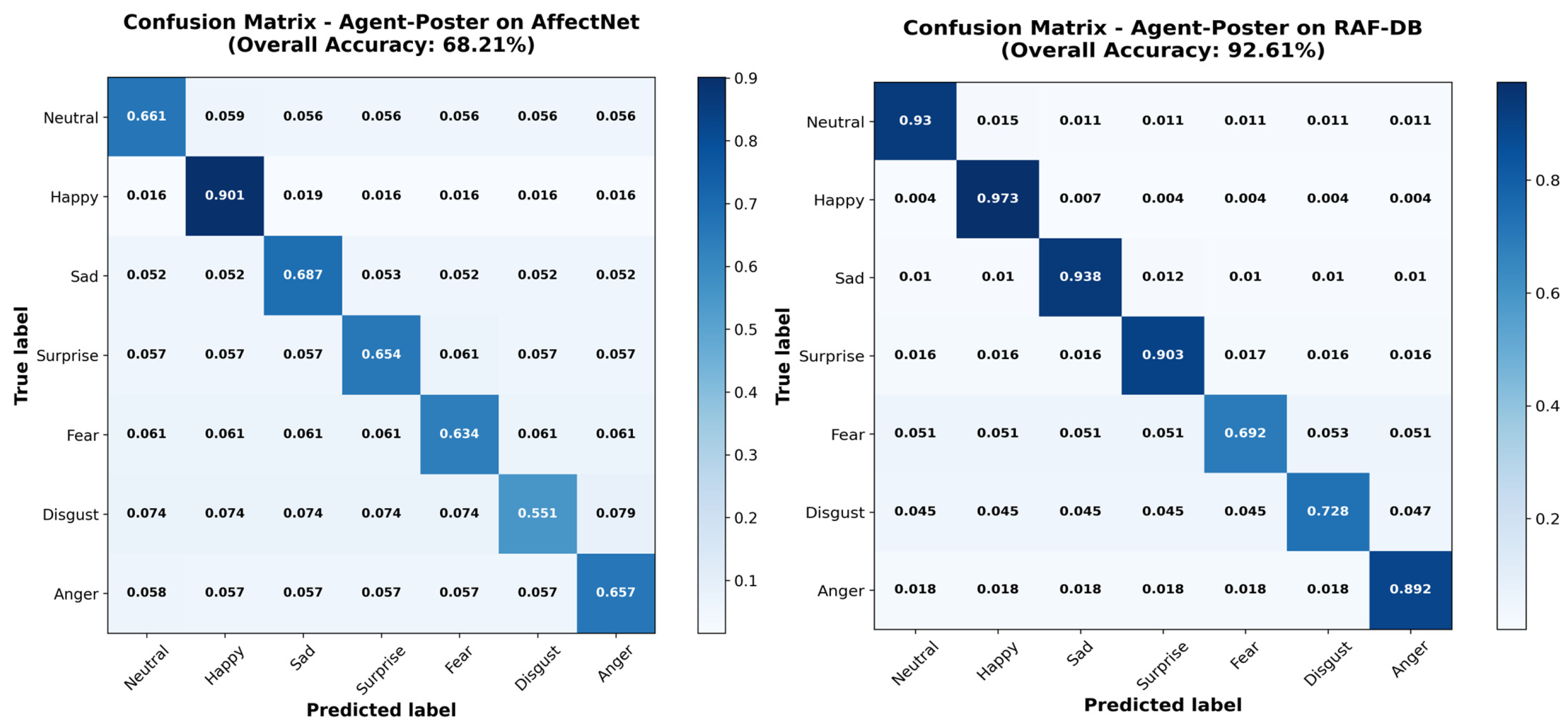

| Dataset | Method | Neutral (%) | Happy (%) | Sad (%) | Surprise (%) | Fear (%) | Disgust (%) | Anger (%) | Mean Acc. (%) |
|---|---|---|---|---|---|---|---|---|---|
| RAF-DB | POSTER++ | 92.06 | 97.22 | 92.89 | 90.58 | 68.92 | 71.88 | 88.27 | 85.97 |
| RAF-DB | Agent-Poster | 93.01 | 97.37 | 93.81 | 90.32 | 69.26 | 72.84 | 89.20 | 86.54 |
| AffectNet | POSTER++ | 65.40 | 89.40 | 68.00 | 66.00 | 64.20 | 54.40 | 65.00 | 67.45 |
| AffectNet | Agent-Poster | 66.19 | 90.14 | 68.70 | 65.46 | 63.42 | 55.17 | 65.78 | 67.84 |
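As a quick check on how the Mean Acc. column relates to the per-class columns, the short sketch below recomputes it for the two Agent-Poster rows. It assumes that Mean Acc. is the unweighted average of the seven per-class accuracies; the source does not state the formula, and the names `PER_CLASS` and `mean_class_accuracy` are illustrative only.

```python
from statistics import mean

# Per-class accuracies (%) for the Agent-Poster rows, copied from the table.
# Order: Neutral, Happy, Sad, Surprise, Fear, Disgust, Anger.
PER_CLASS = {
    "RAF-DB": [93.01, 97.37, 93.81, 90.32, 69.26, 72.84, 89.20],
    "AffectNet": [66.19, 90.14, 68.70, 65.46, 63.42, 55.17, 65.78],
}


def mean_class_accuracy(per_class):
    """Unweighted mean over classes (assumed definition), to two decimals."""
    return round(mean(per_class), 2)


for dataset, accuracies in PER_CLASS.items():
    print(dataset, mean_class_accuracy(accuracies))
# RAF-DB 86.54
# AffectNet 67.84
```

Because every class counts equally, this metric is sensitive to the weaker Fear and Disgust classes in a way that overall accuracy on an imbalanced test set is not.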

| Method | Reference | RAF-DB (%) | AffectNet (%) |
|---|---|---|---|
| TransFER [37] | ICCV 2021 | 90.91 | 66.23 |
| EAC-Net [38] | ECCV 2022 | 90.35 | 65.32 |
| POSTER [31] | ICCVW 2023 | 86.03 | 67.31 |
| PCL [4] | CVPR 2023 | 85.92 | 66.16 |
| DDAMFN [39] | Electronics 2023 | 91.35 | 67.03 |
| Latent-OFER [40] | ICCV 2023 | 89.60 | 63.90 |
| S2D [41] | TAC 2024 | 92.57 | 67.62 |
| FRA [3] | CVPR 2024 | 89.95 | 66.16 |
| 1D-CNN + DenseNet [42] | EMBC 2024 | - | 60.17 |
| FERMixNet [43] | TAFFC 2024 | 91.62 | 66.40 |
| POSTER++ [8] | PR 2025 | 90.12 | 67.49 |
| Agent-Poster (Ours) | - | 92.61 | 68.21 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fu, L.; Wan, Y.; Zou, G. Agent-Poster: A Multi-Scale Feature Fusion Emotion Recognition Model Based on an Agent Attention Mechanism. Information 2025, 16, 982. https://doi.org/10.3390/info16110982