Abstract

The rapid emergence of Generative Artificial Intelligence (GenAI) has sparked a growing debate about its ethical, methodological, and epistemological implications for qualitative research. This study aimed to examine and deeply understand researchers’ perceptions regarding the use of GenAI tools in different phases of the qualitative research process. The study involved a sample of 214 researchers from diverse disciplinary areas, with publications indexed in Web of Science or Scopus that apply qualitative methods. Data collection was conducted using an open-ended questionnaire, and analysis was carried out using coding and thematic analysis procedures, which allowed us to identify patterns of perception, user experiences, and barriers. The findings show that, while GenAI is valued for its ability to optimize tasks such as corpus organization, initial coding, transcription, translation, and information synthesis, its implementation raises concerns regarding privacy, consent, authorship, the reliability of results, and the loss of interpretive depth. Furthermore, a dual ecosystem is observed, where some researchers already incorporate it, mainly generative text assistants like ChatGPT, while others have yet to use it or are unfamiliar with it. Overall, the results suggest that the most solid path is an assisted model, supported by clear ethical frameworks, adapted methodological guidelines, and critical training for responsible and humanistic use.

1. Introduction

Currently, the integration of Generative Artificial Intelligence (GenAI) into qualitative research has sparked an increasingly intense and multidimensional debate, focusing on its ethical, methodological, and epistemological implications. As emerging technologies continue to evolve at a rapid pace, researchers employing qualitative approaches face the challenge of incorporating GenAI tools into their data collection, analysis, and interpretation processes. This integration raises fundamental questions about how these technologies may impact data authenticity, methodological transparency, and ultimately, the validity and reliability of research findings [1] (Ngozwana, 2018).

The automation of traditionally human-driven processes, such as transcription, thematic analysis, or category generation, introduces new dynamics that require critical examination. In particular, there is growing concern about the potential dehumanization of qualitative analysis, the loss of contextual and subjective nuances, and the overreliance on algorithmic tools that often function as “black boxes,” offering little insight into how results are produced. Within this context, the present study aims to deeply explore and understand researchers’ perceptions regarding the use of GenAI tools in qualitative research processes.

Five research questions were formulated: (1) What are the ethical implications of using Generative Artificial Intelligence (GenAI) in qualitative research, particularly concerning authorship, interpretation, and data integrity? (2) What protocols, guidelines, or regulatory frameworks currently exist to guide the responsible use of GenAI in qualitative research, and how effective are they in practice? (3) What practical implications arise from incorporating GenAI tools into qualitative research in terms of accessibility, researcher training, and the quality of outcomes? (4) What specific applications of GenAI are being used or considered by qualitative researchers, and for what methodological or analytical purposes? (5) What barriers and challenges emerge when using GenAI tools in qualitative research?

This study presents a broad, cross-disciplinary qualitative mapping (n = 214) based on employed researchers with proven expertise in qualitative methods, documenting how GenAI is conceptualized and used in real work settings. The methodological strategy is described in detail as a transparent, replicable protocol. By combining expert discourses with rigorous procedures, the study provides a solid basis for understanding practices, ethical dilemmas, and training needs for the responsible adoption of GenAI.

2. Theoretical Framework

2.1. The Role of GenAI in Contemporary Qualitative Research

GenAI represents one of the most disruptive areas of development within the broader field of Artificial Intelligence (AI), as it focuses on the automatic creation of new content based on patterns learned from large datasets. This generative capability is grounded in complex models such as Generative Adversarial Networks (GANs), an algorithmic architecture composed of two neural networks, a generator and a discriminator, that are trained in opposition to produce highly realistic synthetic data [2]. Moreover, GenAI integrates both bottom-up and top-down approaches through open systems, enabling it to explore dynamic informational environments, adapt to changing contextual conditions, and optimize solution generation based on feedback [3].

From a scientific perspective, generative modeling not only facilitates data production but also allows for the simulation of complex phenomena through transformative representations of knowledge. This capability has proven instrumental in understanding multidimensional systems and generating innovative hypotheses across various disciplines [4]. In this regard, GenAI transcends mere task automation and emerges as an epistemic tool capable of expanding the frontiers of contemporary research.

In the realm of qualitative research, the incorporation of GenAI has introduced significant transformations to traditional methodologies. Currently, generative systems are employed across multiple stages of the research process, from automatic transcription of interviews [5] to automated coding and data analysis using specialized software such as NVivo, MAXQDA, or ATLAS.ti [6,7]. Additionally, generative models have been used for emotion and sentiment analysis in qualitative corpora [8], as well as for assisting in the writing of scientific and academic texts, supporting tasks such as editing, summarization, stylistic enhancement, and argument structuring [9].

Beyond its instrumental value, GenAI enables advanced functionalities such as acting as a conversational assistant in interviews or virtual focus groups, and as a support agent in idea formulation through context-aware content generation [10]. This redefines the modes of interaction between researchers and data, facilitating a more fluid and continuous engagement with collected information. Nevertheless, the adoption of these technologies also raises serious ethical and methodological concerns. The automation of analytical processes may compromise interpretive authenticity if not accompanied by reflective oversight from the human researcher [11]. Furthermore, the opacity of GenAI’s internal mechanisms complicates the traceability of reasoning behind generated inferences, potentially affecting the validity and reliability of findings [10]. Another critical issue is the handling of sensitive data: since many AI systems rely on data for training, ensuring privacy, obtaining informed consent, and complying with data protection regulations becomes imperative in qualitative contexts [12].

Additionally, the attribution of work produced with GenAI support raises new debates around authorship, intellectual responsibility, and originality in scientific production. The lack of consensus on how to disclose the use of these tools may lead to ambiguity in the transparency of the research process [9]. While platforms like ChatGPT have demonstrated utility in enhancing efficiency and depth in qualitative analysis, their implementation requires clear ethical guidelines to ensure responsible use aligned with the core principles of qualitative research [11].

2.2. Ethical Foundations in Qualitative Research in the Face of the Advance of GenAI

The incorporation of GenAI into research contexts has introduced new and complex ethical challenges that demand a critical reassessment of the principles that have historically guided qualitative inquiry. The use of automated systems capable of autonomously generating textual, visual, or auditory content from large-scale datasets not only reshapes traditional methodologies but also introduces ethical dilemmas related to privacy, consent, authorship, social justice, and transparency [13].

One of the most sensitive issues concerns the extensive use of personal data for training generative models. GenAI systems require vast amounts of information, often harvested en masse from open or private sources without the explicit consent of the individuals involved. This practice undermines fundamental research ethics principles such as autonomy, confidentiality, and individual control over personal information [14]. Despite the existence of regulatory frameworks such as the General Data Protection Regulation (GDPR), the rapid pace of technological development has outstripped the capacity of such frameworks to effectively regulate novel forms of automated data processing.

Another critical axis of ethical debate lies in the generation and dissemination of misinformation. GenAI’s ability to produce highly realistic yet false content, including text, images, videos, or audio, poses a significant threat to the integrity of knowledge, particularly within academic and media environments. The difficulty of distinguishing between human and AI-generated outputs jeopardizes the credibility of sources, the reliability of evidence, and the robustness of public discourse [15]. In academia, this raises concerns about knowledge originality, automated plagiarism, and the legitimate attribution of scientific authorship, all of which necessitate a regulatory reexamination of intellectual property in the AI era [16] (Zohny et al., 2023).

Moreover, GenAI models are not neutral: they reproduce the patterns embedded in their training data. This means they can perpetuate and amplify historical, social, cultural, or racial biases present in those corpora, thereby affecting equity in sensitive domains such as hiring, education, justice, or healthcare [17]. In practice, this may result in algorithmic discrimination, the systemic exclusion of underrepresented groups, or inappropriate recommendations that disproportionately impact vulnerable populations. For instance, in healthcare, a lack of representativeness in training datasets can lead to less effective diagnoses or treatments for specific ethnic or socioeconomic communities, thereby exacerbating structural inequalities [18,19]. The ethical dimension of access also warrants particular attention. GenAI technologies tend to benefit individuals and institutions with greater technological resources and digital capabilities, thus widening the gap between researchers or organizations with and without access to such tools. In health, education, and research contexts, this imbalance in access may compromise principles of distributive justice and epistemic equity [20].

Despite its advantages, such as improving efficiency, fostering creativity, and enabling the personalization of research experiences, the deployment of GenAI in qualitative research requires robust ethical governance [21]. The absence of adequate regulatory frameworks may lead to significant collateral effects: erosion of public trust, data manipulation, infringement of individual rights, and the legitimization of biased outcomes [22]. Hence, it is essential to construct a multidimensional ethical framework that integrates normative principles, transparent practices, and accountability mechanisms.

Such a framework should foster interdisciplinary collaboration among experts in ethics, data science, law, sociology, and the humanities to ensure that the design, training, and implementation of generative systems are conducted responsibly. Furthermore, the development of auditable models—with explicit review criteria and control mechanisms—is necessary to mitigate algorithmic opacity and to enable the detection of biases in generated outputs [14]. Finally, promoting critical digital literacy among both researchers and participants is vital for understanding the implications of GenAI use, in both technical and ethical dimensions. Such training would strengthen user autonomy vis-à-vis AI tools and consolidate a culture of reflective, informed, and ethically engaged use [23,24].

2.3. Researcher Perceptions and Emerging Regulatory Frameworks

The incorporation of GenAI into academic research has produced a heterogeneous landscape regarding attitudes, uses, and ethical evaluations within the scientific community. The study by [25] identifies three attitudinal profiles toward the use of GenAI in academic research: the group “GenAI as a workhorse,” which employs it as an operational tool for technical tasks; the profile “GenAI as a linguistic assistant,” which restricts its use to auxiliary functions due to concerns about reliability; and the group “GenAI as a research accelerator,” which strategically integrates it across multiple phases of the scientific process.

This latter group is particularly representative of younger researchers or those with stronger technological expertise, who consider GenAI useful for idea exploration, article drafting, initial thematic analysis, and even suggestions for research design [26]. However, even among the most favorable users, there is consensus on the need for critical human oversight of AI-generated interpretations, reaffirming the principle of a symmetrical collaboration between humans and machines [27].

Attitudinal differences also correlate with disciplinary backgrounds. Researchers in technical, medical, or quantitative social sciences display greater openness toward GenAI adoption, in contrast with those in the humanities or qualitative social sciences, where the interpretive nature of data raises more significant epistemic and ethical barriers [28]. These differences can also be linked to underlying epistemological frameworks: positivist approaches tend to value efficiency and replicability, whereas interpretive approaches privilege deep understanding, reflexivity, and situated knowledge construction [29].

It is worth noting a general trend toward cautious adoption: while many researchers already incorporate GenAI into their workflows, the depth and scope of its use vary depending on factors such as experience level, disciplinary culture, and access to technological resources [30]. The technology is perceived as transformative, but its implementation requires continuous training to preserve essential competencies such as critical thinking and creativity, thereby preventing mechanical or uncritical integration [31]. Researchers consistently raise ethical concerns, focusing on three main dimensions: (1) academic integrity (authorship and originality), (2) privacy and confidentiality of qualitative data, and (3) the risk of bias and misinformation generated by poorly trained or opaque models [11,27,32]. These concerns are particularly acute in research involving sensitive populations or contexts where personal data could be misused or exposed through insecure platforms [33].

The emerging consensus underscores the need for robust institutional policies, continuous training programs, and ethical oversight mechanisms to balance the advantages of GenAI with the safeguarding of fundamental principles of qualitative research. This aligns with broader recommendations from international bodies such as the United Nations Educational, Scientific and Cultural Organization (UNESCO) and the American Psychological Association (APA), which emphasize preserving human oversight, ethically validating tools before adoption, training educators and researchers in critical use, and promoting cultural inclusion and diversity. Furthermore, ref. [34] calls for regulatory frameworks to protect privacy and intellectual property rights, while encouraging reflection on the long-term implications of these technologies for knowledge production and educational systems. Likewise, the [34] policy on the use of GenAI in academic publications establishes clear criteria to ensure transparency and scientific integrity: any use of GenAI in manuscript preparation must be disclosed in the methods section and appropriately cited, and the tool must not be credited as an author. Authors must verify the accuracy of generated content and provide it as supplementary material. The policy also warns against privacy risks when inputting data into AI systems and prohibits editors or reviewers from using GenAI with manuscripts under review, to safeguard editorial confidentiality.

Therefore, the use of GenAI in academic research requires advancing toward a culture of shared responsibility, in which technological innovation is coupled with clear ethical principles, effective institutional oversight, and the critical training of researchers. The convergence between disciplinary perceptions and emerging regulatory frameworks suggests that the transformative potential of these tools can only be fully realized through reflective, transparent, and human-centered implementation. As ref. [35] argues, the use of AI in scientific research can be beneficial, provided that its ethical limits and virtues are rigorously addressed.

3. Materials and Methods

3.1. Design

A phenomenological qualitative research design was adopted, ideally suited to address questions related to the ethical and practical implications of GenAI, as it enables an in-depth exploration of the lived experiences and subjective perceptions of the actors involved [36]. This approach allowed participants to critically reflect on their interaction with specific GenAI applications in qualitative research, providing nuanced and detailed accounts that reveal both perceived benefits and emerging concerns [37].

3.2. Participants and Setting

We used a purposive, non-probability sampling strategy to recruit experienced qualitative researchers [38]. The sampling frame was constructed by identifying authors with indexed publications in Web of Science or Scopus that applied qualitative methods. Eligible researchers were invited via email to complete an open-ended online questionnaire. Data were collected between April and September 2024. We monitored sample heterogeneity during recruitment to maximize variation across disciplines, world regions, gender, age, and years of experience. The final sample comprised 214 researchers, reaching theoretical data saturation [39]. We do not claim statistical representativeness; instead, our aim was maximum variation and analytical transferability. The achieved diversity is detailed in Table 1 and supports cross-disciplinary coverage.

Table 1.

Sociodemographic characteristics of the sample.

The gender distribution included 120 male participants (56.1%) and 94 female participants (43.9%). The mean age was 45.37 years (±12.59), with an average professional experience of 14.01 years (±10.97). Geographically, 41.6% of participants resided in Europe, 14.5% in North America, 19.2% in Asia, 10.3% in South America, 10.7% in Africa, and 3.7% in Oceania. With respect to academic fields, 43.9% were affiliated with the Social and Political Sciences, 34.1% with Health Sciences, 9.8% with the Natural Sciences, 8.4% with Arts and Humanities, and 3.7% with Engineering and Architecture.
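As a quick consistency check on the figures above, the reported percentages can be recomputed from the counts. The sketch below is illustrative only: the variable names are our own, and only the gender counts are available as raw numbers in the text, so the regional and disciplinary figures are checked as reported percentages.

```python
# Illustrative check of the descriptive figures reported above.
# Variable names are hypothetical; values are taken from the text.
n_total = 214
gender_counts = {"male": 120, "female": 94}

# The two gender groups should account for the whole sample.
assert sum(gender_counts.values()) == n_total

# Percentages rounded to one decimal, as reported in the text.
gender_pct = {g: round(100 * c / n_total, 1) for g, c in gender_counts.items()}

# Reported percentages by region and by academic field.
region_pct = [41.6, 14.5, 19.2, 10.3, 10.7, 3.7]
field_pct = [43.9, 34.1, 9.8, 8.4, 3.7]

# Totals may deviate slightly from 100 because each value is rounded.
region_total = round(sum(region_pct), 1)
field_total = round(sum(field_pct), 1)

print(gender_pct, region_total, field_total)
```

The disciplinary percentages sum to 99.9 rather than 100, which is consistent with rounding each category to one decimal place.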

3.3. Data Collection

The data collection process began with individual contact with potential participants, who were informed about the study objectives and asked to provide their informed consent to participate [40]. Once their willingness was confirmed, basic sociodemographic information was gathered to contextualize subsequent data. Participants then received a digital open-ended questionnaire, designed to encourage extensive and reflective responses. This format was chosen for its suitability in eliciting rich and detailed narratives, thereby facilitating the identification of emerging patterns and the exploration of unanticipated themes. Furthermore, the use of digital tools expanded the geographical reach of the sample, ensured accessibility, and provided participants with the flexibility to respond at their convenience in terms of time and location [41].

The questionnaire was structured around five thematic categories (C): C1. Ethical implications of GenAI in qualitative research; C2. Protocols and guidelines to ensure the ethical use of GenAI in qualitative research; C3. Practical uses and implications of GenAI in qualitative research; C4. GenAI applications currently employed in qualitative research; C5. Barriers to the adoption of GenAI in qualitative research. Furthermore, the instrument was constructed based on the study’s objectives and the literature on qualitative research practices. Content and face validity were established through an internal peer review, involving several experts in the field (qualitative data analysts, qualitative methodology experts, and an educational technology technician). Each reviewer independently assessed the relevance, clarity, and coverage of each item using a structured rubric and proposed alternative wordings. Consensus meetings were subsequently held to resolve discrepancies and agree on possible modifications.

In the final version, each content axis was addressed through the following questions: What ethical implications do you believe GenAI poses in qualitative research? What protocols or guidelines should be implemented to ensure the ethical and responsible use of GenAI? How do you or your research team currently use GenAI, and what practical implications do you perceive? What GenAI applications are you currently employing in your qualitative research? This methodological strategy enabled the collection of substantive data directly aligned with the study objectives, while preserving participants’ voices and ensuring the interpretive richness required for qualitative analysis. However, we did not conduct an external pilot test, which we acknowledge as a limitation. At the beginning of data collection, we monitored the interpretability of the responses, and no issues were identified that warranted further modifications.

Confidentiality safeguards were applied at every stage of the study. First, data minimization: the questionnaire did not request names, institutional affiliations, IP addresses, or other direct identifiers. Second, pseudonymization: each submission received a random code, and any linkage key was stored separately on an encrypted drive with role-based restricted access. Third, secure handling: data were collected over HTTPS, stored on encrypted servers, and accessible only to the analysis team. Finally, reporting controls: when presenting illustrative quotations, potentially identifying details were removed or paraphrased to mitigate the risk of deductive disclosure.
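The pseudonymization step described above can be illustrated with a minimal sketch. This is not the procedure actually used in the study: the function name, the code format ("P-" plus eight hexadecimal characters), and the data structures are assumptions made for illustration. The sketch only shows the general idea of assigning a random code to each submission and keeping the linkage key apart from the analysis data.

```python
import secrets


def pseudonymize(submissions):
    """Assign a random code to each submission (hypothetical helper).

    Returns the coded records for analysis and a separate linkage key
    that maps each code back to the position of the original submission.
    """
    coded_records = []
    linkage_key = {}
    for index, response in enumerate(submissions):
        code = "P-" + secrets.token_hex(4)
        # Regenerate in the unlikely event of a duplicate code.
        while code in linkage_key:
            code = "P-" + secrets.token_hex(4)
        linkage_key[code] = index
        coded_records.append({"code": code, "response": response})
    return coded_records, linkage_key


# Example with placeholder responses (not study data).
records, key = pseudonymize(["response A", "response B", "response C"])
```

In practice, the linkage key would be written to separate, access-restricted storage, while only the coded records would be shared with the analysis team.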

3.4. Data Analysis

Data analysis was conducted using a thematic analysis approach, following the systematic procedure described by [42], which consists of six phases: familiarization with the data, initial coding, theme generation, review, definition and naming of themes, and the production of the final report. This method, widely validated in qualitative research for its flexibility and analytical rigor, enabled the identification of relevant patterns and the organization of the narrative material into thematic axes aligned with the study objectives.

To optimize data management, coding, and analysis, Computer-Assisted Qualitative Data Analysis Software (CAQDAS), specifically NVivo version 14, was employed. This tool facilitated the systematic organization of transcripts, the development of initial codes, the clustering of excerpts into thematic nodes, and the visualization of relationships across categories [43]. Additionally, NVivo supported a rigorous monitoring of the analytical process, ensuring both traceability of decisions and methodological transparency [44].

To ensure that interpretations remained grounded in participants’ accounts, in vivo coding in the first cycle (privileging participants’ own terms) was combined with the iterative, consensual development of a codebook. A reflective audit trail was maintained, and peer debriefing sessions were held within the team to challenge initial explanations and surface assumptions. We applied a calibration–consensus workflow in NVivo 14. Three analysts independently coded an initial subset of 20 participants, reconciled discrepancies in consensus meetings, and then fixed the codebook to ensure credibility. The remainder of the corpus was coded with a midstream double-coding check to verify fit within categories and monitor coder drift. Researcher triangulation was ensured through role rotation (lead coder, critical challenger, methodological auditor) and peer debriefings that stress-tested provisional themes against the data, including negative-case searches and constant comparison across disciplines and areas. The final codebook that supported the data analysis is provided in Appendix A (Table A1).
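To make the calibration step more concrete, the following sketch shows one possible way to list the excerpts on which independent coders disagree, so that they can be taken to a consensus meeting. It is an illustration under stated assumptions rather than a description of the NVivo workflow used here: the function name, the analyst labels, the excerpt identifiers, and the example codes are all hypothetical.

```python
def flag_discrepancies(codings):
    """Return excerpts whose assigned codes differ between analysts.

    codings: {analyst: {excerpt_id: set of codes}} (hypothetical structure).
    An excerpt left uncoded by an analyst is treated as an empty code set.
    """
    excerpt_ids = set()
    for assigned in codings.values():
        excerpt_ids.update(assigned)

    flagged = {}
    for excerpt_id in sorted(excerpt_ids):
        per_analyst = {
            analyst: frozenset(assigned.get(excerpt_id, ()))
            for analyst, assigned in codings.items()
        }
        # More than one distinct code set means the analysts disagree.
        if len(set(per_analyst.values())) > 1:
            flagged[excerpt_id] = {a: sorted(c) for a, c in per_analyst.items()}
    return flagged


# Placeholder example with three analysts and two excerpts.
example = {
    "analyst_1": {"e1": {"bias"}, "e2": {"privacy"}},
    "analyst_2": {"e1": {"bias"}, "e2": {"privacy", "consent"}},
    "analyst_3": {"e1": {"bias"}, "e2": {"privacy"}},
}
to_discuss = flag_discrepancies(example)
```

Here only excerpt "e2" would be flagged, because one analyst assigned an additional code; excerpts with identical coding across analysts need no reconciliation.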

In line with the recommendations of [45], qualitative rigor was ensured through the application of criteria such as credibility, transferability, dependability, and confirmability. These strategies included researcher triangulation, peer review, and comprehensive documentation of analytical decisions, thereby guaranteeing that the interpretations derived were firmly grounded in empirical evidence and that the findings were valid, consistent, and transferable to similar contexts. All references to frequency in results are descriptive indicators of relevance within the coded data set and do not represent prevalence or numerical proportions of respondents. Interpretations are based on thematic patterns and illustrative quotes.

4. Results

The results are organized around five thematic axes. First, participants highlighted ethical concerns related to the integration of GenAI, including academic integrity, data privacy, and algorithmic bias. Second, they proposed protocols and guidelines to ensure ethical and responsible use, spanning institutional frameworks and practical recommendations. Third, reflections were shared on the practical implications of GenAI in everyday research, particularly regarding efficiency and analytical quality. Fourth, participants described specific applications and functions of GenAI considered most useful for qualitative inquiry. Finally, barriers and limitations to adoption were identified. This structure provides a comprehensive view of both the potential and challenges of incorporating GenAI into qualitative research.

4.1. Ethical Implications of GenAI in Qualitative Research

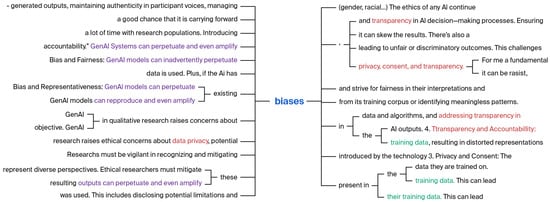

Algorithmic bias emerged as a central concern among participants, reflected in the main node of the word tree “biases” and its branches related to bias and fairness and bias and representativeness (Figure 1). Most participants emphasized that GenAI systems can “perpetuate and even amplify” biases embedded in training data, leading to unfair or discriminatory outcomes. The word tree also highlighted connections between bias, privacy, consent, and transparency, with calls for greater traceability and clearer communication regarding how GenAI is used in qualitative research. The responses reveal broad agreement that the ethical use of GenAI in research requires a comprehensive framework: “Its use must be transparent… so that it does not undermine the credibility of the conclusions.” The analysis further revealed concerns about training data and distorted representations, underscoring the need to recognize and mitigate exclusionary patterns or inaccuracies derived from incomplete or biased datasets. Many participants warned that reliance on culturally limited or partial data could yield non-representative interpretations.

Figure 1.

Word tree linked to the concept of “biases”.

Another recurring theme was the risk of generating unreliable or fabricated results. Several participants referred to the danger of producing “pseudo-findings” lacking empirical grounding, noting the ethical risks of inappropriate reliance on GenAI, particularly among early-career researchers. Concerns were also raised about the loss of human insight and empathy, with automated interpretation perceived as unable to capture cultural and emotional nuances: “Lack of richness of interpretation of human experiences” and “GenAI may fail to empathize with the participants.” Finally, issues of intellectual property and authorship were discussed, particularly regarding the legitimacy of attributing results to researchers when GenAI played a predominant role. As one participant cautioned: “Researchers cannot be considered authors if the entire manuscript is written by GenAI.”

4.2. Protocols and Guidelines to Ensure the Ethical Use of GenAI in Qualitative Research

Responses commonly pointed to the need for a comprehensive framework to support the ethical use of GenAI in research that combines regulation, ethical principles, human oversight, interdisciplinary participation, continuous training, and technical safeguards. First, the need to establish clear regulatory frameworks governing the use of GenAI at institutional, national, and international levels was emphasized. Several participants proposed harmonizing protocols with existing regulations, such as those in Europe, and developing global consensus: “Regulate guidelines and protocols for the use of GenAI at the European and international levels,” and “We need a harmonization of approaches… not only at the country level, but also globally.” These regulations should precisely define permitted functions and tool limitations, preventing indiscriminate use.

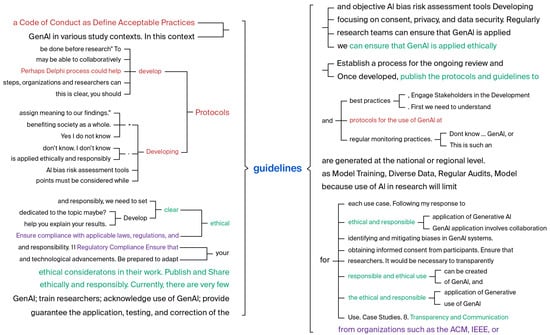

As shown in Figure 2, in the area of protocols, participants occasionally suggested the prior definition of acceptable practices through a Code of Conduct applicable to different study contexts: “a Code of Conduct as Define Acceptable Practices… in various study contexts.” The development of such protocols could be carried out using participatory methodologies, such as the Delphi process or inter-institutional working groups, involving organizations, researchers, and other relevant stakeholders: “Perhaps Delphi process could help… organizations and researchers can develop steps.” These protocols should clearly delimit human and AI functions, avoiding unsupervised assignment of meaning by GenAI: “You should help explain your results.”

Figure 2.

Word tree linked to the concept of “guidelines”.

Regarding guidelines, the emphasis was placed on establishing clear principles centered on transparency, privacy, and data security. Several responses highlighted the need to focus on “consent, privacy, and data security” as well as to implement regular assessments of risks and biases: “objective AI bias risk assessment tools.” Guidelines should result from a collaborative process involving stakeholders and should include ongoing review mechanisms: “Establish a process for the ongoing review and… publish the protocols and guidelines to best practices.”

In addition, a few participants stressed that guidelines must align with international ethical and regulatory standards, such as those of the Association for Computing Machinery (ACM) and the Institute of Electrical and Electronics Engineers (IEEE): “from organizations such as the ACM, IEEE.” They should also include training for researchers, ensuring awareness of ethical implications and the responsible use of technology: “Currently, there are very few GenAI; train researchers; acknowledge use of GenAI.” Further importance was given to specifying modeling and auditing practices (“as Model Training, Diverse Data, Regular Audits”), along with informed consent and process traceability: “obtaining informed consent from participants… ensure the ethical and responsible use.”

Second, ethical principles and transparency emerged as guiding pillars. Calls for explicit disclosure of GenAI in research outputs appeared consistently across responses: “The usage of GenAI in research should invariably be communicated… make it completely clear how the AI came up with their set of codes.” Honesty and traceability in the process were viewed as indispensable guarantees of scientific integrity, alongside respect for the role of the human researcher: “It is unacceptable that GenAI completely, or even partially, replaces the role of researchers.” A third recurring theme was human oversight and process control. Participants often portrayed GenAI as a support tool rather than a substitute for the researcher. This entails clearly delimiting tasks suitable for AI intervention and those that must be performed by humans: “You cannot use AI to generate data or results… but you can use it to help you explain your results,” and “Clearly delimit what needs to be done by humans… most tasks should be clearly performed by humans.”

The fourth axis identified was the creation of ethics committees and interdisciplinary collaboration. Several proposals suggested the establishment of working groups integrating specialists in ethics, law, technology, and scientific disciplines, with participation from the international academic community: “There should be an educational ethics committee that issues a prior memorandum,” and “The protocols should be developed collaboratively… universities from several countries.” Participants also recognized that a lack of knowledge could result in unrealistic regulations, which introduces the fifth theme: training and AI literacy. A subset of participants proposed creating training spaces to better understand the functioning, potential, and risks of GenAI: “Create training spaces so that we better understand what we’re going to try to regulate,” and “Universities need to invest… in training of researchers to use the tools for efficiency and correctly.”

A significant block of comments focused on data protection, privacy, and bias mitigation. Here, participants stressed the importance of guaranteeing anonymization, preventing the use of data in model training, and establishing protocols to detect and correct biases: “It would be important for AI to have a way of guaranteeing that it doesn’t share data with anyone… or share it anonymously,” and “Implement strategies to detect and mitigate biases… diverse training data, regular audits, and bias detection tools.” Finally, many accounts described uncertainty or limited preparedness to propose specific protocols or guidelines, reflecting both the complexity of the issue and the nascent state of academic discussion on the ethical use of GenAI. Expressions such as “I don’t know,” “Not sure,” or “It is beyond my expertise” evidenced a lack of technical and regulatory knowledge that hinders the formulation of solid proposals. This uncertainty is linked, in some cases, to the perception that technology evolves too quickly to establish stable rules, and in others, to an explicit acknowledgment of the need for further training, debate, and collective reflection before defining binding standards. The presence of such responses suggests that any development of protocols and guidelines must be accompanied by training programs and dialogue spaces enabling the research community to better understand the capacities, limitations, and risks of GenAI.

Without attempting statistical comparisons, the analysis revealed consistent patterns. By region, settings with stronger regulation prioritized privacy and compliance, whereas others emphasized practical consent and platform reliability. By discipline, social sciences and health highlighted participant protection and confidentiality in small communities; in engineering, the focus was on methodological transparency, validation, and traceability of GenAI outputs. By career stage, early-career researchers reported lower procedural preparedness and a need for hands-on guidance, while senior researchers emphasized governance, documentation, and auditability. These variations indicate the value of context-specific protocols and training—guidance aligned with local legal frameworks, discipline-specific materials with practical cases, and tiered programs by experience level (operational modules for early-career researchers and governance/audit modules for senior leads).

4.3. Practical Uses and Implications of GenAI in Qualitative Research

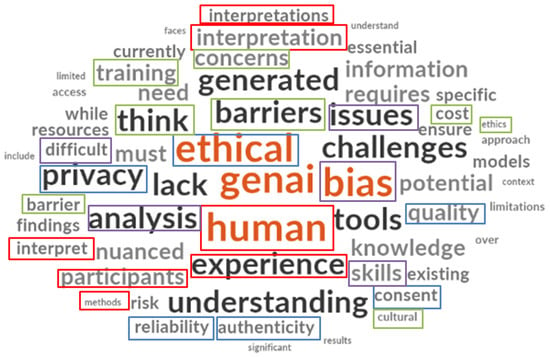

The word cloud in Figure 3 synthetically reflects the most recurrent concepts in the responses analyzed, showing a wide heterogeneity in the degree of adoption, concrete uses, and perceptions of GenAI in the field of qualitative research. It highlights terms such as “qualitative,” “analysis,” “research,” “GenAI,” “using,” and “coding,” which directly refer to the central functions attributed to GenAI in qualitative research. Likewise, the presence of words such as “ethical,” “biases,” “human,” and “interpretation” underscores concerns about human oversight, interpretive quality, and ethical implications. Other terms, including “literature,” “interview,” “themes,” and “findings,” connect to specific stages of the research process and to practices identified in the thematic analysis, such as literature review, interview analysis, theme identification, or the generation of findings. This visual representation anticipates the central axes of the analysis presented below, where the uses and implications of incorporating GenAI into qualitative research are examined in detail.

Figure 3.

Wordcloud of use and practical implications of GenAI in qualitative research.

At one end of the spectrum, some participants stated that they do not use GenAI, citing reasons related to the sensitivity of research topics, distrust of the technology, or the lack of institutional maturity to manage it. As some expressed: “We don’t use it; the research topics we address are very sensitive and require careful interpretation,” or “I do not use GenAI and nobody in my team does. I don’t see how this will change in the future.” In other cases, rejection stemmed from matters of principle, as reflected in the statement “not ready. If GenAI can write it for us, then it really is not original enough to be worth pursuing,” or from the perception that its use could be considered plagiarism in certain institutional contexts: “I am yet to explore it because certain institutions are still taking GenAI as plagiarism.”

A second group is in very early phases of experimentation, describing their use as anecdotal or exploratory—“Currently, its use is anecdotal, as a first exploratory phase”—or acknowledging that “We are not using it at the moment.” Among those who do use it, one of the most frequent areas is support for routine tasks that are time-consuming but do not require interpretive judgment. GenAI is used for transcription, proofreading, and text translation, as reported by several participants: “Lately, it has been useful to quickly transcribe a first version of a set of interviews… ‘cockatoo’,” “I’ve primarily used it to reduce the time spent transcribing… AI has also been useful for proofreading and translating texts,” or “automatic transcriptions… worked pretty well as long as the transcriptions are in English.” Its use for data organization and mining, as well as for literature review, was also reported. Some examples include: “For the organization of data and texts,” “linked to data mining actions provided by programs,” or “support the identification of relevant and reliable articles… compare a set of articles…”.

At the analytical level, several responses pointed to the usefulness of GenAI for generating codes, identifying themes, and creating groupings or clusters. One researcher stated “My team and I use GenAI for content analysis to establish thematic clusters,” while another explained: “Practically, I use it to generate codes from transcripts and compare that with my independent coding.” Its potential was also recognized for initial coding and the identification of key concepts: “ChatGPT can be very useful for initial coding and identification of key concepts and themes.” Another line of use is linked to the production of intermediate or final outputs. Applications mentioned included drafting summaries and structuring reports: “develop rounded synthesis for metadata mapping…”, “Automated summaries… generate summaries and reports of the qualitative data,” or “ask you to give me a summary of a document or a script… more efficient for me.”

In the creative and ideation phase, GenAI is used to support brainstorming and improve textual quality: “I use it for brainstorming and to stimulate ideation,” and “improve writing, check spelling… rephrase… better, concise.” Advanced scenarios were also reported, including experimentation with visualizations, chatbots, or simulations: “create interactive tools or chatbots that engage participants,” “simulate various scenarios based on qualitative data,” and “create visual representations… word clouds, thematic maps, network diagrams.” In terms of technological integration, some participants mentioned its incorporation into CAQDAS tools such as Atlas.ti version 25 or NVivo version 15, particularly valuing the security provided by these environments: “I use it within the Atlas.ti program because it guarantees data security… identifying categories and verbatim,” “Nvivo includes some part of AI, where you are able to overlook a high amount of data,” and “Tools like MAXQDA version 24.4 are offering AI already.” In more peripheral uses, documentation creation was identified, including drafting questionnaires or preparing documents for ethics committees: “To generate draft questionnaires… help in completing approval forms eg for ethics approval; participant information sheets”.

Nevertheless, issues of quality, bias, and authenticity constitute another critical point in the use of GenAI for data analysis. While some highlighted technical deficiencies—“I tried to use AI for automatic analysis, this was terrible,” and “the results still seem insufficient”—others warned of the risk of bias and loss of authenticity: “GenAI systems can introduce and even reinforce biases… distort research findings,” and “It easy our work but decrease data authenticity.” Across responses, the need for human supervision was stressed, both to detect errors and to control “hallucinations”: “double-checking each result… using more than one tool… to reduce errors or hallucinations,” and “corroborate that what the GenAI interpreted is correct.” This review also requires specific competencies and training, particularly for non-native speakers: “Skill Development: Train researchers… understand limitations and capabilities of GenAI,” and “as I’m not a native speaker, I use AI to revise my writing… teach me”.

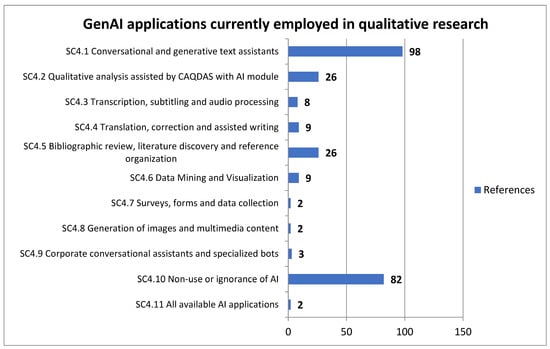

4.4. GenAI Applications Currently Employed in Qualitative Research

The results reveal a clear predominance of conversational and text-generative assistants, with 98 references (Figure 4). Within this category, ChatGPT accounts for the majority of mentions (66), followed by Copilot (10), Gemini (7), and Claude (5). This indicates that applications based on natural language generation constitute the core of the GenAI ecosystem used by participants. The second most frequently cited tools were AI-assisted qualitative analysis software, with 26 mentions, primarily Atlas.ti (11) and NVivo (9), reflecting their integration into academic research processes. A comparable proportion was observed for literature review and discovery tools, where Consensus (3), Elicit (4), Perplexity (5), and reference managers such as Mendeley and Zotero were highlighted.

Figure 4.

GenAI applications currently employed in qualitative research.

Audio analysis through transcription and subtitling tools (8 mentions), along with translation, proofreading, and writing assistance (9 mentions), with applications such as DeepL, Grammarly, and OtterAI, showed intermediate frequency, indicating their supportive role in communication and textual data processing. Similarly, data mining and visualization (9 mentions), linked to platforms such as Tableau, KNIME, and PowerBI, reflected a more technical and specialized use, adopted primarily by researchers with solid technical expertise. In contrast, areas such as data collection via surveys (2 mentions), image and multimedia generation (2 mentions), and corporate assistants or specialized bots (3 mentions) showed only marginal levels of adoption.

A particularly relevant finding is that 82 participants reported either no use or lack of awareness of these tools, making this the second largest category and revealing a significant gap in technological familiarity and adoption. This suggests that, despite the high visibility of text-generative assistants, there remains a substantial sector of researchers who do not yet integrate AI into their practices, whether due to lack of knowledge, limited access, or low perceived usefulness: “Not used any yet. But will try to explore to understand more about GenAI in research”; “We don’t use it yet, but we are interested in doing so”; “None. I reject the technology and find its embrace by some researchers’ offensive”.
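The counts reported in this section can be checked with a short tally. The following is only an illustrative sketch, not part of the study's analysis: the dictionary names and helper functions are hypothetical, and the literature review category is left out because the text gives no category total for it.

```python
# Mention counts as reported in Section 4.4. The literature review and
# discovery category is omitted because no category total is stated.
category_mentions = {
    "Conversational and text-generative assistants": 98,
    "No use or lack of awareness": 82,
    "AI-assisted qualitative analysis software": 26,
    "Translation, proofreading, and writing assistance": 9,
    "Data mining and visualization": 9,
    "Transcription and subtitling": 8,
    "Corporate assistants or specialized bots": 3,
    "Data collection via surveys": 2,
    "Image and multimedia generation": 2,
}

# Named tools within the conversational-assistant category.
assistant_mentions = {"ChatGPT": 66, "Copilot": 10, "Gemini": 7, "Claude": 5}


def rank(counts):
    """Return (label, count) pairs sorted from most to least mentioned."""
    return sorted(counts.items(), key=lambda item: item[1], reverse=True)


def share(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


ranked = rank(category_mentions)
assistant_total = category_mentions["Conversational and text-generative assistants"]
named_total = sum(assistant_mentions.values())
other_assistants = assistant_total - named_total  # mentions of other assistants
chatgpt_share = share(assistant_mentions["ChatGPT"], assistant_total)

if __name__ == "__main__":
    for label, count in ranked:
        print(f"{count:>3}  {label}")
    print(f"ChatGPT share of assistant mentions: {chatgpt_share}%")
    print(f"Assistant mentions outside the four named tools: {other_assistants}")
```

Under these figures, the four named assistants account for 88 of the 98 mentions, ChatGPT alone for roughly two thirds, and non-use or lack of awareness ranks second among the categories whose totals are stated.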

4.5. Barriers to the Adoption of GenAI in Qualitative Research

The analysis of participants’ responses reveals a complex set of barriers that hinder the adoption of GenAI tools in qualitative research. These barriers are organized around epistemological and methodological (red), ethical (blue), technical, cultural, and educational (green) dimensions, as well as those related to data validity and interpretation (purple), which are represented in the word cloud of texts in Figure 5.

Figure 5.

Wordcloud of barriers to using GenAI in qualitative research.

First, epistemological and methodological barriers emerge, related to the apparent incompatibility between the interpretive nature of qualitative research and the automated character of GenAI. As one participant emphasized, “epistemologically… the point of research work presupposes the existence of a human researcher who carries out the interpretive work.” The artisanal essence of qualitative analysis, which involves reflexivity, context, and subjectivity, is perceived as being at risk of dilution in the face of automated processes that, according to another testimony, “may simplify complex processes, which becomes a barrier to the qualitative researcher’s learning”.

Ethical barriers represent another central axis, particularly concerning data privacy, confidentiality, and academic integrity. Several participants stressed the difficulty of ensuring informed consent and the protection of sensitive information: “Data privacy: handling sensitive or confidential information poses significant challenges.” Others directly questioned the legitimacy of using these tools in qualitative research, noting that “the humanity of the responses is lost, there is a lack of empathy in the analysis,” or even that “what GenAI represents is the opposite of what qualitative research means”.

From a technical and resource perspective, significant limitations were identified. Restricted access to high-quality platforms, elevated costs, and the need for specialized infrastructure are seen as obstacles, especially in Global South contexts: “In Latin America, the costs… to access the best-quality tools and process large amounts of data,” or “paying fund for it is a fundamental barrier in the context of Nepal.” Additional challenges include current model limitations such as hallucinations, lack of transparency in their functioning, and technological immaturity: “Currently, some methodologies… require immersion in the data; shortcuts are counterproductive”.

Another set of responses highlighted cultural and acceptance barriers, associated with resistance to change and traditional paradigms in qualitative research. As one participant stated, “the main one is the fear of change and the unknown that humans have,” while another attributed the resistance to “the older generation of researchers’ commitment to their old ways of doing things.” These perceptions are linked to the fear that adopting GenAI entails a loss of authenticity and a process of dehumanization in analysis. Equally relevant are barriers related to training and digital literacy. The lack of specific competencies for using these tools limits their potential. Several participants acknowledged that researchers are “poorly trained in the use of GenAI” and that there are “few guides for combining human and intelligent agent interpretations.” Another stressed that the “lack of training in prompting” results in low-quality outputs and biases, undermining the credibility of analyses.

As a final point, barriers of validity and interpretation emerge strongly. Trust in GenAI outputs is questioned, as researchers believe that models tend to generate “unverifiable data” or that automation leads to “oversimplify complex human experiences, leading to a loss of depth.” The risk that interpretations may become generic and disconnected from contextual meanings is a particular concern: “by letting GenAI interpret our data for us… the results could become so generic that they become useless”.

5. Discussion

Drawing on a qualitative mapping with a large sample of contracted specialists and grounded in a replicable analytic protocol, our findings characterize expert discourses on GenAI in qualitative research and delineate the practical and ethical pressure points that should guide the design of targeted guidance and training.

The findings of this study confirm that the incorporation of GenAI into qualitative research is shaped by a set of challenges articulated across ethical, methodological, practical, and epistemological dimensions. Within the first category of analysis, ethical implications emerge as the primary concern among researchers, particularly in relation to privacy, informed consent, and process transparency. As noted in the literature [13,14], the use of sensitive data and the opacity of algorithms threaten the integrity of qualitative inquiry. The results reinforce this concern by highlighting risks such as the production of pseudo-findings, the loss of empathy and interpretive richness, and the potential for plagiarism or misattribution of authorship. These perceptions reflect the urgency of consolidating ethical protocols that safeguard both participant protection and the interpretive essence of qualitative analysis [11].

In the second category of analysis, the data indicate a consensus on the need for clear protocols and guidelines to regulate the use of GenAI, which aligns with recommendations from international organizations such as UNESCO and the APA. These bodies propose preserving human oversight, ethically validating tools prior to adoption, and explicitly declaring their use in manuscripts [46]. Participants call for the development of codes of conduct and ongoing review processes, echoing prior proposals for adaptive and collaborative regulatory frameworks [16,21]. Likewise, the demand for interdisciplinary ethics committees and training programs resonates with the warnings of [28,31], who emphasize that regulation must be accompanied by processes of critical digital literacy to enable researchers to understand both the capacities and limitations of GenAI.

The third category of analysis highlights the ambivalent practical implications of GenAI. On the one hand, researchers recognize benefits in streamlining routine tasks such as transcription, translation, data organization, or summarization, in line with previous studies [5,6]. On the other hand, distrust persists with respect to the reliability of outputs, technical limitations, and the potential for bias, which necessitates maintaining human oversight as a guiding principle [10]. Moreover, disparities in access and high costs constitute significant barriers in certain geographical contexts, reproducing epistemic inequalities already noted in the literature [20].

In the fourth category of analysis, the results show that the most commonly used GenAI applications are conversational and text-generative assistants, particularly ChatGPT, followed by Copilot, Gemini, and Claude. This predominance confirms the centrality of language models within the current ecosystem, consistent with reports by [47,48,49], who attribute ChatGPT’s dominance to its support for writing, textual analysis, and efficiency in information processing. At the same time, the use of CAQDAS platforms such as Atlas.ti or NVivo for AI-assisted qualitative analysis confirms the trend identified by [6,7] regarding the integration of generative modules into specialized software. However, the fact that a substantial proportion of participants reported either not using or being unaware of such tools reveals a gap in digital literacy, as already identified by [28], which may result in unequal appropriation of technological innovations across disciplines and generations of researchers.

Finally, the fifth category of analysis confirms the existence of multiple barriers limiting the adoption of GenAI in qualitative research. These include the perceived incompatibility with the interpretive nature of this approach, concerns about the loss of authenticity and subjectivity, lack of specialized training, high access costs, and doubts about the validity of outputs. Such barriers, also identified in recent studies [11,50], demonstrate that resistance to GenAI integration cannot be explained solely by technical factors, but is rooted in deeper epistemological, cultural, and philosophical considerations.

Overall, the integration of GenAI into qualitative research requires moving toward a balance between innovation and the preservation of foundational principles. The challenge lies in leveraging its capacities to optimize instrumental tasks without compromising the interpretive, reflective, and humanistic dimensions that define qualitative inquiry. Achieving this goal demands the development of robust ethical frameworks [21], critical training programs [31], and a collective commitment by the scientific community to ensure a responsible, transparent, and methodologically coherent use of GenAI in alignment with the core principles of qualitative research.

6. Conclusions

The voices of the participating researchers indicate that the incorporation of GenAI into qualitative research is perceived as an ambivalent process, where opportunities for methodological support coexist with profound ethical and epistemological concerns. Their perspectives converge on the idea that, although these tools can enhance efficiency in routine tasks and broaden analytical possibilities, their use without appropriate regulation and critical oversight may jeopardize both the credibility and the very essence of qualitative inquiry. Participants repeatedly emphasized that ethical dilemmas—linked to algorithmic bias, data privacy, transparency in authorship, and respect for academic integrity—cannot be separated from the need to establish protocols and guidelines that ensure the responsible use of GenAI. Proposed measures include codes of conduct, interdisciplinary committees, and continuous training processes, reflecting a collective concern to situate the technology within a shared regulatory framework rather than leaving it to individual discretion.

At the same time, the experiences reported show that the practical value of GenAI is recognized primarily in the automation of operational tasks—such as transcription, translation, data organization, or assistance in initial coding—but always under the condition that interpretive analysis remains in the hands of the researcher. In this regard, the expressed opinions stress that cultural sensitivity, empathy, and reflexivity—central elements of the qualitative tradition—cannot be replicated by algorithms. The diversity of positions also reveals a heterogeneous academic community: while some researchers are open to experimentation and integration of these tools in specialized settings, others express rejection or distrust, whether due to epistemological convictions, fear of losing authenticity, or lack of training and access to resources. This variety of perceptions highlights the existence of educational, institutional, and regional gaps that shape the adoption of GenAI across different contexts.

Among the limitations of this study, it is important to note that, as it relies on self-reported testimonies, the results reflect subjective experiences conditioned by researchers’ familiarity with GenAI and their institutional contexts. Furthermore, the emergent nature of the phenomenon under study implies that the perceptions gathered are subject to rapid change as technology, regulation, and training in this field evolve. This requires interpreting the conclusions with caution, while recognizing their contribution to strengthening the regulatory foundations of this area of inquiry. These conclusions are based on qualitative thematic interpretation aimed at analytical, rather than statistical, generalization. Although descriptive counts are included, we do not infer prevalence. Rigor was ensured through coding, calibration-consensus, triangulation, audit trails, and saturation checks. Future work should quantitatively validate these patterns, using multinational surveys, scenario experiments, and organizational audits, to estimate magnitudes and verify external validity.

Moreover, several avenues for future research emerge that could expand and consolidate knowledge in this field. It is necessary to comparatively explore how perceptions and practices vary across institutional, disciplinary, and regional contexts; to examine how attitudes evolve as regulatory frameworks and digital competencies are consolidated; and to conduct empirical studies that assess the effects of GenAI on the quality of qualitative research processes and outcomes. Likewise, longitudinal studies could shed light on how research practices transform according to the degree of integration and trust placed in these technologies.

Author Contributions

Conceptualization, J.L.C.-G. and M.C.S.-G.; methodology, J.L.C.-G. and M.C.S.-G.; software, J.L.C.-G.; validation, J.L.C.-G., I.d.B.-A. and M.C.S.-G.; formal analysis, J.L.C.-G.; investigation, J.L.C.-G.; resources, I.d.B.-A. and M.C.S.-G.; data curation, J.L.C.-G.; writing—original draft preparation, I.d.B.-A.; writing—review and editing, I.d.B.-A. and J.L.C.-G.; visualization, J.L.C.-G. and I.d.B.-A.; supervision, J.L.C.-G., I.d.B.-A. and M.C.S.-G.; project administration, M.C.S.-G. and I.d.B.-A.; funding acquisition, M.C.S.-G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because, under Spanish law (Law 14/2007, of July 3, on Biomedical Research) and the University of Extremadura’s Research Ethics Commission guidelines, non-interventional social/educational studies that collect anonymous, non-identifiable data from competent adults and pose minimal risk are exempt from ethics committee review. The study comprised anonymous, voluntary online questionnaires/interviews with university faculty; no clinical procedures, no biomedical experimentation, and no collection of personal or sensitive data were carried out. All procedures complied with the 2013 revision of the Declaration of Helsinki; participants were informed about the purpose of the study, confidentiality, and their right to withdraw at any time without consequences. No personally identifiable information was collected.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original data presented in the study are openly available in Zenodo Repository at: https://doi.org/10.5281/zenodo.16905164.

Acknowledgments

We sincerely thank each of the researchers who participated in this study for generously sharing their time, experiences, and reflections. Their contributions were essential in providing a plural and critical understanding of the challenges and opportunities that GenAI brings to qualitative research. Without their voices and commitment, this work would not have been possible.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| GenAI | Generative Artificial Intelligence |
| AI | Artificial Intelligence |
| GAN | Generative Adversarial Networks |
| GDPR | General Data Protection Regulation |
| UNESCO | United Nations Educational, Scientific and Cultural Organization |
| APA | American Psychological Association |
| C | Category |
| SC | Subcategory |
| CAQDAS | Computer-Assisted Qualitative Data Analysis Software |
| ACM | Association for Computing Machinery |
| IEEE | Institute of Electrical and Electronics Engineers |

Appendix A

The codebook was structured into five main categories and a total of 39 subcategories, covering ethical, normative, practical, application, and barrier dimensions to GenAI adoption in qualitative research.

Table A1.

Codebook.

| Category | Subcategory |
|---|---|
| C1. Ethical implications of GenAI in qualitative research | SC1.1 Algorithmic Bias; SC1.2 Declaration of GenAI Use and Integration; SC1.3 Recognizing and Mitigating Patterns of Exclusion or Inaccurate Representations; SC1.4 Data Truth, Rigor, and Risk of Falsification; SC1.5 Loss of the Human Component and Empathy as an Inherent Risk |
| C2. Protocols and guidelines to ensure the ethical use of GenAI in qualitative research | SC2.1 Need to establish clear regulatory frameworks governing the use of GenAI; SC2.2 Ethical principles and researcher transparency; SC2.3 Human oversight and process control; SC2.4 Creation of ethics committees and interdisciplinary collaboration; SC2.5 AI training and literacy; SC2.6 Data protection, privacy, and bias mitigation; SC2.7 Not knowing or feeling prepared to propose specific protocols or guidelines |
| C3. Practical uses and implications of GenAI in qualitative research | SC3.1 Does not use GenAI in qualitative research, either individually or as a team; SC3.2 Initial phase of GenAI experimentation in qualitative research; SC3.3 Support for routine tasks that are time-consuming but do not require interpretive judgment (transcription, translation, review and summarization of texts, etc.); SC3.4 Data organization and mining; SC3.5 Literature review; SC3.6 Tasks related to content analysis (generating codes, identifying themes, or creating groupings or clusters); SC3.7 Preparation of intermediate or final products (writing summaries or structuring reports); SC3.8 Technological integration of GenAI into CAQDAS (Atlas.ti version 25, NVivo version 15, or MAXQDA version 24.4); SC3.9 Creation of documentation (questionnaires or dossiers for ethics committees); SC3.10 Critical points in the analysis of qualitative data with GenAI (quality, bias, authenticity, or need for human supervision) |
| C4. GenAI applications currently employed in qualitative research | SC4.1 Conversational and generative text assistants (ChatGPT version 5, Claude version Opus 4.1, Gemini version 2.0 Flash Thinking, Google Bard, Jasper version 9.0.1, JenniAI, Copilot version 22H2, MetaAI, AIWriter version 1.1.6, AIAssistant version 8, ScholarGPT, Bing version 31.4.2110003555); SC4.2 Qualitative analysis assisted by CAQDAS with AI module (Atlas.ti version 25, NVivo version 15, MAXQDA version 24.4, Quirkos version 3.0, Leximancer version 4.5); SC4.3 Transcription, subtitling and audio processing (Amberscript, Cockatoo version 3.6.5, OtterAI version 3.7.0, Trint, SpeechLogger version 0.16, Julius version 4.3.1, Kahubi); SC4.4 Translation, correction and assisted writing (DeepL version 25.42, Grammarly version 1.2.207, Word translator included in Office 365 package); SC4.5 Bibliographic review, literature discovery and reference organization (Connected Papers, Consensus version 3.1, Elicit version 1.1.7, Research Rabbit, SciteAI version 1.37.0, Scispace version 9.8, Scholarcy version 5.3.0, Mendeley version 1.19.5, Zotero version 7, NotebookLM version 2025.10.14.820451664, Pinpoint version 0.1.9, Perplexity iOS app v2.251016.0); SC4.6 Data Mining and Visualization (PowerBI version 2.148.878.0, Tableau version 2025.1.1, D3.js version 7.8.5, Matlab version R2025b, KNIME version 5.8, RapidMiner version 2025.1, Alteryx version 2025.1.2.79, MonkeyLearn, Lexalytics); SC4.7 Surveys, forms and data collection (Qualtrics version 3.2.1, SurveyMonkey version 4.5.5); SC4.8 Generation of images and multimedia content (DALL·E version 3, Midjourney version 7); SC4.9 Corporate conversational assistants and specialized bots (IBM Watson Assistant 5.1.1, Microsoft Azure Bot Service version 4, ChatbaseAI); SC4.10 Non-use or ignorance of AI; SC4.11 All available AI applications |
| C5. Barriers to the adoption of GenAI in qualitative research | SC5.1 Epistemological and methodological barriers; SC5.2 Ethical barriers; SC5.3 Technical and resource accessibility barriers; SC5.4 Cultural resistance to change and attachment to traditional approaches in qualitative research; SC5.5 Training and digital literacy barriers; SC5.6 Barriers to validity and interpretation of results |

References

- Ngozwana, N. Ethical Dilemmas in Qualitative Research Methodology: Researcher’s Reflections. Int. J. Educ. Methodol. 2018, 4, 19–28.

- Salvaris, M.; Dean, D.; Tok, W.H. Generative Adversarial Networks. In Deep Learning with Azure: Building and Deploying Artificial Intelligence Solutions on the Microsoft AI Platform; Salvaris, M., Dean, D., Tok, W.H., Eds.; Apress: Berkeley, CA, USA, 2018; pp. 187–208.

- van der Zant, T.; Kouw, M.; Schomaker, L. Generative Artificial Intelligence. In Philosophy and Theory of Artificial Intelligence; Müller, V.C., Ed.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 107–120.

- Griffith, T.W. A Computational Theory of Generative Modeling in Scientific Reasoning. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 1999.

- Yépez-Reyes, V.; Cruz-Silva, J. Inteligencia artificial en la transcripción de entrevistas. Contratexto 2024, 183–202.

- Tai, R.H.; Bentley, L.R.; Xia, X.; Sitt, J.M.; Fankhauser, S.C.; Chicas-Mosier, A.M.; Monteith, B.G. An Examination of the Use of Large Language Models to Aid Analysis of Textual Data. Int. J. Qual. Methods 2024, 23, 16094069241231168.

- dos Anjos, J.R.; de Souza, M.G.; de Andrade Neto, A.S.; de Souza, B.C. An analysis of the generative AI use as analyst in qualitative research in science education. Rev. Pesqui. Qual. 2024, 12, 1–29.

- Gamboa, A.J.P.; Díaz-Guerra, D.D. Artificial Intelligence for the development of qualitative studies. LatIA 2023, 1, 4.

- Owoahene Acheampong, K.; Nyaaba, M. Review of Qualitative Research in the Era of Generative Artificial Intelligence. SSRN Electron. J. 2024.

- Dahal, N. How Can Generative AI (GenAI) Enhance or Hinder Qualitative Studies? A Critical Appraisal from South Asia, Nepal. Qual. Rep. 2024, 29, 722–733.

- Chiu, T.K.F. Future research recommendations for transforming higher education with generative AI. Comput. Educ. Artif. Intell. 2024, 6, 100197.

- Davison, R.M.; Chughtai, H.; Nielsen, P.; Marabelli, M.; Iannacci, F.; van Offenbeek, M.; Tarafdar, M.; Trenz, M.; Techatassanasoontorn, A.A.; Díaz Andrade, A.; et al. The ethics of using generative AI for qualitative data analysis. Inf. Syst. J. 2024, 34, 1433–1439.

- Ferrara, E. GenAI against humanity: Nefarious applications of generative artificial intelligence and large language models. J. Comput. Soc. Sci. 2024, 7, 549–569.

- Al-kfairy, M.; Mustafa, D.; Kshetri, N.; Insiew, M.; Alfandi, O. Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective. Informatics 2024, 11, 58.

- Bale, A.S.; Dhumale, R.B.; Beri, N.; Lourens, M.; Varma, R.A.; Kumar, V.; Sanamdikar, S.; Savadatti, M.B. The Impact of Generative Content on Individuals Privacy and Ethical Concerns. Int. J. Intell. Syst. Appl. Eng. 2024, 12, 697–703.

- Zohny, H.; McMillan, J.; King, M. Ethics of generative AI. J. Med. Ethics 2023, 49, 79–80.

- Chichande, X.S.F.; Gallardo, N.M.M.; Márquez, T.B.M.; Rodriguez, M.C.T. La etica en la inteligencia artificial desafíos y oportunidades para la sociedad moderna. Sage Sphere Int. J. 2024, 1, 1–24. Available online: https://sagespherejournal.com/index.php/SSIJ/article/view/1 (accessed on 8 August 2025).

- Tal, A.; Elyoseph, Z.; Haber, Y.; Angert, T.; Gur, T.; Simon, T.; Asman, O. The Artificial Third: Utilizing ChatGPT in Mental Health. Am. J. Bioeth. 2023, 23, 74–77.

- Kumar, D.; Dhalwal, R.; Chaudhary, A. Exploring the Ethical Implications of Generative AI in Healthcare. In The Ethical Frontier of AI and Data Analysis; IGI Global Scientific Publishing: Palmdale, PA, USA, 2024; pp. 180–195.

- Piedra-Castro, W.I.; Cajamarca-Correa, M.A.; Burbano-Buñay, E.S.; Moreira-Alcívar, E.F. Integración de la inteligencia artificial en la enseñanza de las Ciencias Sociales en la educación superior. J. Econ. Soc. Sci. Res. 2024, 4, 105–126.

- Nguyen-Trung, K. ChatGPT in thematic analysis: Can AI become a research assistant in qualitative research? Qual. Quant. 2025.

- Adarkwah, M.A. GenAI-Infused Adult Learning in the Digital Era: A Conceptual Framework for Higher Education. Adult Learn. 2025, 36, 149–161.

- Islam, G.; Greenwood, M. Generative Artificial Intelligence as Hypercommons: Ethics of Authorship and Ownership. J. Bus. Ethics 2024, 192, 659–663.

- Cabanillas-García, J.L. International Trends and Influencing Factors in the Integration of Artificial Intelligence in Education with the Application of Qualitative Methods. Informatics 2025, 12, 61.

- Andersen, J.P.; Degn, L.; Fishberg, R.; Graversen, E.K.; Horbach, S.P.J.M.; Schmidt, E.K.; Schneider, J.W.; Sørensen, M.P. Generative Artificial Intelligence (GenAI) in the research process—A survey of researchers’ practices and perceptions. Technol. Soc. 2025, 81, 102813.

- Yan, L.; Echeverria, V.; Fernandez-Nieto, G.M.; Jin, Y.; Swiecki, Z.; Zhao, L.; Gašević, D.; Martinez-Maldonado, R. Human-AI Collaboration in Thematic Analysis Using ChatGPT: A User Study and Design Recommendations. In Extended Abstracts of the CHI Conference on Human Factors in Computing Systems (CHI EA ’24); Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–7.

- Kang, R.; Xuan, Z.; Tong, L.; Wang, Y.; Jin, S.; Xiao, Q. Nurse Researchers’ Experiences and Perceptions of Generative AI: Qualitative Semistructured Interview Study. J. Med. Internet Res. 2025, 27, e65523.

- Herrera, J.D. La Comprensión de lo Social: Horizontes Hermenéutico de las Ciencias Sociales; Universidad de los Andes: Bogotá, Colombia, 2023.

- Ning, Y.; Teixayavong, S.; Shang, Y.; Savulescu, J.; Nagaraj, V.; Miao, D.; Mertens, M.; Ting, D.S.W.; Ong, J.C.L.; Liu, M.; et al. Generative artificial intelligence and ethical considerations in health care: A scoping review and ethics checklist. Lancet Digit. Health 2024, 6, e848–e856.

- Saz-Pérez, F.; Pizà-Mir, B. Necesidades y perspectivas de la integración de la inteligencia artificial generativa en el contexto educativo español. UTE. Teach. Technol. Univ. Tarracon. 2024, 2. Available online: https://raco.cat/index.php/UTE/article/view/429956 (accessed on 8 August 2025).

- Pereira, R.; Reis, I.W.; Ulbricht, V.; Santos, N. dos. Generative artificial intelligence and academic writing: An analysis of the perceptions of researchers in training. Manag. Res. J. Iberoam. Acad. Manag. 2024, 22, 429–450.

- Fawaz, M.; El-Malti, W.; Alreshidi, S.M.; Kavuran, E. Exploring Health Sciences Students’ Perspectives on Using Generative Artificial Intelligence in Higher Education: A Qualitative Study. Nurs. Health Sci. 2025, 27, e70030.

- de la Fuente Tambo, D.; Moreno, S.I.; Ruiz, M.A. Barriers and Enablers for Generative Artificial Intelligence in Clinical Psychology: A Qualitative Study Based on the COM-B and Theoretical Domains Framework (TDF) Models. Res. Sq. 2024, 1–20.

- APA Journals Policy on Generative AI: Additional Guidance. Available online: https://www.apa.org/pubs/journals/resources/publishing-tips/policy-generative-ai (accessed on 8 August 2025).

- Rivera, L.C.; Mamani, I.P.; Chalco, J.R.M.; Jáuregui, C.A.G.; Castillo, N.S.C. Ética en el uso de la Inteligencia Artificial en la Investigación Científica: Desafíos y consideraciones. Aula Virtual 2024, 5, 1488–1509.

- Sánchez-Gómez, M.C.; Cabanillas-García, J.L.; Verdugo-Castro, S. Percepción y experiencias de los estudiantes de la Universidad de Salamanca sobre el ocio y el tiempo libre. Pedagog. Soc. Rev. Interuniv. 2024, 45, 231–255.

- Badil; Muhammad, D.D.M.D.; Aslam, Z.A.Z.; Khan, K.K.K.; Ashiq, A.A.A.; Bibi, U.B.U. The Phenomenology Qualitative Research Inquiry: A Review Paper. Pak. J. Health Sci. 2023, 4, 9–13.

- Emerson, R.W. Convenience Sampling Revisited: Embracing Its Limitations Through Thoughtful Study Design. J. Vis. Impair. Blind. 2021, 115, 76–77.

- Braun, V.; Clarke, V. To saturate or not to saturate? Questioning data saturation as a useful concept for thematic analysis and sample-size rationales. Qual. Res. Sport Exerc. Health 2021, 13, 201–216.

- Zhong, R.; Northrop, J.; Sahota, P.; Glick, H.; Rostain, A. Informed About Informed Consent: A Qualitative Study of Ethics Education. J. Health Ethics 2019, 15, 2.

- Cabanillas-García, J.L. The Application of Active Methodologies in Spain: An Investigation of Teachers’ Use, Perceived Student Acceptance, Attitude, and Training Needs Across Various Educational Levels. Educ. Sci. 2025, 15, 210.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Cabanillas-García, J.L.; Rodríguez-Jiménez, C.J.; Sánchez-Gómez, M.C.; Losada-Vázquez, Á.; Losada-Moncada, M.; Corrales-Vázquez, J.M. Observational Study of Experiential Activities Linked to Astronomy with CAQDAS NVivo. In Computer Supported Qualitative Research; Costa, A.P., Moreira, A., Freitas, F., Costa, K., Bryda, G., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 180–198.

- Brandão, C. P. Bazeley and K. Jackson, Qualitative Data Analysis with NVivo (2nd ed.): (2013). London: Sage. Qual. Res. Psychol. 2015, 12, 492–494.

- Nowell, L.S.; Norris, J.M.; White, D.E.; Moules, N.J. Thematic Analysis: Striving to Meet the Trustworthiness Criteria. Int. J. Qual. Methods 2017, 16, 1609406917733847.

- Miao, F.; Holmes, W. Guía Para el Uso de IA Generativa en Educación e Investigación; UNESCO: Paris, France, 2024; Available online: https://unesdoc.unesco.org/ark:/48223/pf0000389227 (accessed on 25 March 2025).

- Morgan, D.L. Exploring the Use of Artificial Intelligence for Qualitative Data Analysis: The Case of ChatGPT. Int. J. Qual. Methods 2023, 22, 16094069231211248.

- Sun, H.; Kim, M.; Kim, S.; Choi, L. A methodological exploration of generative artificial intelligence (AI) for efficient qualitative analysis on hotel guests’ delightful experiences. Int. J. Hosp. Manag. 2025, 124, 103974.

- Li, Z.; Ironsi, C.S. The Efficacy of Generative Artificial Intelligence in Developing Science Education Preservice Teachers’ Writing Skills: An Experimental Approach. J. Sci. Educ. Technol. 2024, 1–12.

- Chatzichristos, G. Qualitative Research in the Era of AI: A Return to Positivism or a New Paradigm? Int. J. Qual. Methods 2025, 24, 16094069251337583.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).