Abstract

The paper presents a case study on using artificial intelligence (AI) for preliminary grading of student programming assignments. By integrating Verificator, our previously introduced interface for learning programming, with the Gemini 2.5 large language model via Google AI Studio, C++ student submissions were evaluated automatically and compared with teacher-assigned grades. The results showed moderate to high correlation, although the AI was stricter. The study demonstrates that AI tools can improve grading speed and consistency while highlighting the need for human oversight due to limitations in interpreting non-standard solutions. It also emphasizes ethical considerations such as transparency, bias, and data privacy in educational AI use. A hybrid grading model combining AI efficiency and human judgment is recommended.

1. Introduction

Artificial intelligence (AI) is increasingly being used in higher education, especially in STEM fields, where automation and analytical support can significantly relieve teachers and improve the student experience. In programming courses, grading student assignments traditionally requires significant time, expert code review, and additional communication with students for clarification or correction. With the increasing availability of advanced generative models and tools for text and code processing, it becomes possible to perform preliminary grading of assignments with AI systems that can analyze, comment on, and classify student work in real time.

To support teaching in our programming courses, we use the Verificator software v5.09 [1], developed by co-authors of this article. Verificator has been improved over many years and now offers several features that support the teaching process: personalization of programs, time limits for solving assignments, prevention of copying programs from colleagues, encouragement of good programming habits, and easier detection of syntax and logic errors [1]. In addition, Verificator requires students to test the program at various stages of development, which may result in a partial penalty on the points achieved, e.g., one point for each failed test [2].

In our teaching practice, Verificator has already been combined with other tools, such as tools for comparing students' exercises with one another (to detect copying), collecting results from many student exercises, and detecting unauthorized activities. With the emergence of AI tools based on large language models (LLMs), the idea of automatic preliminary assessment of student assignments using AI has also emerged. Many LLMs are available both through web interfaces and through programming interfaces. One such interface is offered by Google AI Studio. Among the several models offered, we chose Gemini 2.5 because of its relatively large context window of 1 million tokens. The idea was to ask the AI to grade each student assignment from the undergraduate course Programming 2.

For the purposes of the experiment, the regular student exercises in the form of C++ code, together with Verificator’s metadata, were exported from the Moodle system and used to test the AI system. The evaluation included three phases: (1) code analysis using the LLM in the context of the given criteria (functionality, form, penalties), (2) comparison of results with teacher ratings, and (3) quantitative and qualitative analysis of deviations and accuracy of grading.

The scientific contribution of this work is twofold. First, a new methodology for semi-automated grading of student assignments using AI systems integrated into openly and commercially accessible tools is presented. Second, the application of configured, contextually rich AI models in a real educational environment is demonstrated, including prototype scripts for processing and grading code.

These developments reflect a broader trend in higher education, where artificial intelligence is not only reshaping content delivery and learner engagement but also redefining assessment practices. The integration of AI tools into programming education offers new opportunities to improve grading efficiency, ensure consistency, and support personalized feedback [3,4]. By leveraging advanced models such as Gemini 2.5, educators can begin to automate parts of the evaluation process while maintaining pedagogical quality and oversight.

Finally, it is important to emphasize that this study presents a prototype implementation of an AI-assisted grading workflow and should be interpreted as a feasibility exploration rather than a validated grading solution. The evaluation was conducted using a single version of the LLM and a fixed rubric, without formal calibration or tuning. As such, the results are intended to illustrate potential capabilities and challenges, rather than to confirm generalizable performance.

2. The Use of AI in Education

AI has increasingly been leveraged to improve educational processes and outcomes. Recent literature reviews have summarized numerous applications of AI in education [5]:

Personalized Learning (PL). PL systems use AI to analyze learner data and adjust content dynamically, fostering equitable access to high-quality education compared to traditional one-size-fits-all approaches [6,7,8].

Adaptive Learning Platforms (ALP). ALPs collect and analyze learner data to adjust content delivery, pacing, and difficulty, creating personalized learning experiences that enhance outcomes across diverse educational contexts [9].

Intelligent Tutoring Systems and Learning Assistants (ITS). ITSs monitor student progress, identify difficulties, and offer tailored feedback and tasks, simulating one-on-one tutoring through AI-driven personalization [10,11].

Intelligent Assessment and Feedback. AI-powered assessment systems streamline grading and provide personalized feedback, enhancing student understanding and reducing administrative burdens for educators [12].

Some studies already claim that the integration of artificial intelligence in education is significantly enhancing both the quality and accessibility of learning [13,14]. AI enables personalized approaches through adaptive learning systems, intelligent tutoring, and automated assessment tools, thereby improving student engagement and outcomes. These technologies not only tailor educational content to individual needs but also reduce the administrative burden on educators, allowing for more meaningful student-teacher interactions. Overall, current developments highlight the transformative potential of AI in modern educational environments.

2.1. General AI Tools with Web Interface

As part of research into the preliminary assessment of student programming assignments, several general-purpose AI tools with web interfaces were analyzed. These tools require no local installation and provide immediate, accessible interaction via standard web browsers. The evaluated tools included ChatGPT 4.5 (OpenAI), Claude 3.5 (Anthropic), Perplexity Pro, DeepSeek R1, and Minimax ver. 01. All these tools are based on advanced large language models (LLMs), differing in their underlying architectures, context window length, support for code-related tasks, and capabilities for complex analytical operations.

Among them, ChatGPT emerged as the most versatile tool for the project due to its integrated extensions, such as DeepResearch and Data Analyst, as also noted in [15,16]. These features enable the uploading of CSV files, data analysis, and the execution of complex spreadsheet-like operations within an interactive environment. In the educational context, ChatGPT was used to automate the review and refinement of teaching materials, including evaluating task descriptions for clarity, checking the logical consistency of instructions, and correcting language and grammar. Through several iterations, task descriptions were refined to ensure content coherence, cognitive alignment with the course level, and linguistic accessibility for non-native English speakers.

Additionally, ChatGPT’s Data Analyst feature facilitated the upload and analysis of student performance data across the semester. Based on this analysis, dynamic reports were generated to identify high-performing students (potential candidates for exemption from partial exams), detect anomalies (e.g., sudden changes in performance), and compute descriptive statistics (mean, median, and standard deviation per assignment). This considerably sped up pedagogical decision-making during the semester and made it more objective.
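The per-assignment statistics and anomaly checks described above were produced interactively in ChatGPT. For readers who want a reproducible equivalent, a minimal sketch using only Python's standard library might look as follows. The data layout (a dictionary from student to an ordered list of per-assignment points, all lists of equal length), the exemption threshold, and the two-point drop used to flag a "sudden change" are hypothetical choices, not values from the study.

```python
from statistics import mean, median, stdev

# Hypothetical layout: student -> ordered list of points per assignment (0-4 each).
Scores = dict[str, list[float]]

def describe_assignments(scores: Scores) -> list[dict[str, float]]:
    """Mean, median and sample standard deviation for each assignment."""
    columns = list(zip(*scores.values()))  # transpose: one tuple per assignment
    return [
        {
            "mean": mean(col),
            "median": median(col),
            "stdev": stdev(col) if len(col) > 1 else 0.0,
        }
        for col in columns
    ]

def top_performers(scores: Scores, min_average: float) -> list[str]:
    """Students whose average reaches a (hypothetical) exemption threshold."""
    return sorted(s for s, pts in scores.items() if mean(pts) >= min_average)

def sudden_drops(scores: Scores, drop: float = 2.0) -> list[tuple[str, int]]:
    """(student, assignment index) pairs where points fell by at least `drop`
    compared with the previous assignment."""
    flagged = []
    for student, pts in scores.items():
        for i in range(1, len(pts)):
            if pts[i - 1] - pts[i] >= drop:
                flagged.append((student, i))
    return flagged
```

Keeping such checks in a script rather than in a chat session makes the thresholds explicit and the reports repeatable from one semester to the next.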

Claude.ai, built on Anthropic’s Claude 3.0 model series, proved especially useful for working with extended textual inputs (exceeding 100,000 tokens), such as full repositories of student code with comments. Its strength lies in the ability to maintain consistency when evaluating multiple assignments within a single conversational context [17].

Perplexity demonstrated value as a tool for quickly generating contextual summaries and comparing student solutions to best practices [18]. DeepSeek was tested due to its advertised ability to handle multiple programming languages and generate technically accurate responses in C/C++, Python, and JavaScript [19]. Minimax, though more limited in availability and features, was used experimentally to contrast its capabilities with other tools—particularly in processing annotated code [20].

Despite their advanced capabilities, these tools still require manual validation of outputs. Errors were observed in the interpretation of complex semantic relationships in code, as well as occasional oversimplification of problems. Additional challenges include limitations of free-tier versions, usage quotas, and privacy concerns when processing student data on commercial platforms.

In conclusion, general AI tools with web interfaces demonstrate substantial potential for supporting educators in preparing teaching materials, analyzing results, and conducting preliminary assessments. However, their integration into the educational process must be guided by a carefully designed usage framework, clear evaluation criteria, adherence to ethical guidelines, and continuous monitoring of output quality [21,22].

2.2. Using AI Through a Programming Interface

The use of artificial intelligence via programming interfaces (APIs) offers powerful and flexible alternatives to general-purpose AI tools with graphical web interfaces. While tools like ChatGPT or Claude are optimized for direct interaction with human users, programming interfaces enable automated, scalable, and script-based AI integration into complex workflows. These interfaces allow developers and educators to embed AI models directly into applications, data pipelines, or custom tools, thereby enabling batch processing, advanced data manipulation, and context-specific querying [23,24].

Modern AI APIs provided by platforms such as OpenAI (ChatGPT API), Anthropic (Claude API), Cohere, Mistral, and Google (Gemini API) can be used from multiple programming languages, most commonly Python (e.g., Python 3.10). According to [24,25], they enable users to:

- Submit textual and code-based prompts programmatically;

- Receive structured outputs (e.g., JSON) for automated parsing and decision-making;

- Leverage fine-tuned or specialized models for domain-specific tasks (e.g., code review, language translation, summarization);

- Handle large-scale or high-frequency tasks, such as evaluating hundreds of student assignments;

- Access advanced features like file uploads, long-context windows, or external tool integration (e.g., spreadsheets, databases, learning management systems).
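To illustrate the point about structured outputs, the sketch below shows how a grading script might validate a JSON reply before using it. The schema (a `subtasks` list of objects with a `points` field, plus a `file` field) is hypothetical and is not the format used in this study, which relied on a plain-text template (Section 3.2). It also assumes, for illustration, that the four points of a task are split evenly across its four subtasks.

```python
import json

SUBTASKS = 4           # each task has four subtasks (Section 3)
MAX_PER_SUBTASK = 1.0  # assumption: the four points are split evenly

def parse_grade(reply: str) -> dict:
    """Parse a model reply expected to be a JSON object and reject anything
    that falls outside the grading scale."""
    data = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    subtasks = data.get("subtasks")
    if not isinstance(subtasks, list) or len(subtasks) != SUBTASKS:
        raise ValueError(f"expected {SUBTASKS} subtask entries")
    for entry in subtasks:
        pts = entry.get("points") if isinstance(entry, dict) else None
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(pts, bool) or not isinstance(pts, (int, float)):
            raise ValueError(f"non-numeric points: {pts!r}")
        if not 0 <= pts <= MAX_PER_SUBTASK:
            raise ValueError(f"points outside the 0-{MAX_PER_SUBTASK} scale: {pts}")
    return data
```

Rejecting malformed replies early lets the script retry or route the submission to an instructor instead of silently recording an invalid score.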

These APIs are particularly useful in educational settings, where AI can be embedded into automated grading systems, feedback generators, curriculum design tools, or data analytics dashboards. Through programmatic access, educators gain greater control over input formatting, evaluation rules, and reproducibility of results [24,26].

In the context of this experiment, Google AI Studio was used as the development and execution environment. It enables direct interaction with the Gemini model family (versions 1.5, 2.0, and 2.5) through Python scripting and supports various forms of input and output, including:

- Textual prompts and source code;

- Data files (e.g., CSV, Excel, Google Sheets);

- Predefined grading rubrics and instructions.

One of the distinguishing strengths of the Gemini models is their large context window, up to 1,000,000 tokens in some configurations. This feature allowed for the simultaneous analysis of entire batches of student submissions, task descriptions, and grading criteria within a single prompt, eliminating the need to split inputs across multiple requests. This significantly reduced the risk of losing semantic continuity, which can be critical when evaluating code quality and alignment with assignment specifications.
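Before sending a whole batch in one request, a script can check roughly whether it fits the context window. The sketch below uses a crude heuristic of about four characters per token (an assumption; exact counts require the provider's own token-counting facility) and a hypothetical safety margin to compensate for that crudeness.

```python
CONTEXT_WINDOW = 1_000_000  # tokens, the Gemini 2.5 figure quoted in the text
CHARS_PER_TOKEN = 4         # rough heuristic only; real tokenizers differ

def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)

def fits_in_one_request(parts: list[str], safety_margin: float = 0.8) -> bool:
    """True if the estimated size of all prompt parts (template, task text,
    submissions, instruction) stays below a fraction of the context window."""
    total = sum(estimate_tokens(p) for p in parts)
    return total <= CONTEXT_WINDOW * safety_margin
```

If the check fails, the batch would have to be split after all, reintroducing the loss of shared context that the large window is meant to avoid.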

This experimental setup showcased the effectiveness of combining AI programming interfaces with structured educational data to automate parts of the assessment process, laying the groundwork for scalable, explainable, and personalized feedback systems in programming education.

2.3. Ethical Issues of Using AI Tools

The growing integration of artificial intelligence in education brings opportunities for personalization, automation, and data-driven decision-making. However, it also raises critical ethical questions. As AI systems become more embedded in educational tools and decision processes, educators and institutions must address the challenges that are mostly associated with transparency, bias, data privacy, and equitable access [27,28].

General Ethical Challenges in Educational AI Use:

- Data Privacy and Security: AI systems often require access to large amounts of student data, which can expose individuals to risks if data is mishandled, improperly stored, or shared without consent. Compliance with data protection regulations such as GDPR is essential to preserve student rights [24,29].

- Bias and Fairness: AI models trained on unbalanced datasets may reinforce social, linguistic, or cultural biases. This could result in discriminatory outputs that negatively impact students from underrepresented backgrounds [30].

- Transparency and Explainability: Many AI systems operate as black boxes, offering limited insight into their decision-making. This opacity reduces accountability and makes it harder for educators or students to trust the system’s outputs [31].

- Over-reliance and Critical Thinking: If students use AI to bypass engagement with learning tasks (e.g., generating essays or solving problems without understanding), it may hinder their development of higher-order cognitive skills [32].

- Digital Equity: The uneven availability of advanced AI tools and Internet infrastructure may widen existing educational gaps, especially in lower-income or rural regions [33].
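One practical step toward the data-privacy requirement is to pseudonymize identifying file names before submissions are sent to a commercial platform. The sketch below uses a keyed hash (HMAC-SHA-256 from Python's standard library), so pseudonyms are stable across runs but cannot be linked back to students without a secret key that stays on the institution's side. The `student_` prefix and 12-character digest are arbitrary choices. Names embedded in the file contents, such as author metadata in comments, would need to be scrubbed separately.

```python
import hashlib
import hmac
from pathlib import Path

def pseudonymize(filename: str, key: bytes) -> str:
    """Replace an identifying file name with a stable keyed-hash pseudonym."""
    path = Path(filename)
    digest = hmac.new(key, path.stem.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"student_{digest[:12]}{path.suffix}"

def build_mapping(filenames: list[str], key: bytes) -> dict[str, str]:
    """Pseudonym -> original name. Keep this table local; never send it to the API."""
    return {pseudonymize(f, key): f for f in filenames}
```

Because the mapping is deterministic for a given key, AI reports returned under pseudonyms can be matched back to students locally without storing names on the remote side.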

When AI is applied to student grading and feedback, the ethical landscape becomes even more sensitive. These systems directly influence academic outcomes and must therefore meet high standards of fairness, accuracy, and accountability [26,29].

Key concerns specific to AI-assisted student assessment include:

- Assessment Validity and Reliability: AI systems must generate grades and feedback that are aligned with human judgment and pedagogical standards. Any discrepancy between human and AI assessments can undermine the credibility of grading [34].

- Algorithmic Bias: There is a risk that AI tools unfairly penalize certain coding styles, language usage, or problem-solving strategies—particularly those differing from the training data norm. This can disadvantage students whose backgrounds or thinking styles are underrepresented [30].

- Academic Integrity and Manipulation Risks: Automated grading systems can be exploited if students learn how to game the system (e.g., prompt manipulation, filler text). Without human oversight, this can lead to inflated or misleading grades [34].
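As a hedged illustration of the manipulation risk, a grading pipeline could pre-screen C++ submissions for comments addressed to the grader before calling the model. The phrase patterns below are hypothetical, and a keyword match is easy to evade, so this is only a flagging aid for human review, not a defense. The comment regex is also naive: it does not skip string literals that happen to contain `//`.

```python
import re

# Hypothetical phrases suggesting text aimed at an AI grader rather than at C++ readers.
SUSPICIOUS = [
    r"ignore (all|any|the)? ?(previous|above) instructions",
    r"(give|award|assign) (me )?(full|maximum|all) points",
    r"you are (now )?(a|the) grader",
]
# Line comments and block comments (naive: string literals are not excluded).
COMMENT = re.compile(r"//[^\n]*|/\*.*?\*/", re.DOTALL)

def flag_injection(source: str) -> list[str]:
    """Return C++ comments that match any suspicious pattern (case-insensitive)."""
    hits = []
    for comment in COMMENT.findall(source):
        if any(re.search(p, comment, re.IGNORECASE) for p in SUSPICIOUS):
            hits.append(comment.strip())
    return hits
```

Submissions with any hits would simply be routed to an instructor rather than graded automatically.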

While AI tools offer significant potential to enhance educational processes, their ethical deployment requires careful planning, transparency, and institutional oversight. Particularly in assessment, where student outcomes are directly affected, the use of AI must be guided by robust principles of fairness, accountability, and validity. Addressing these ethical challenges is essential not only for maintaining academic integrity but also for ensuring that AI-driven innovations contribute positively and equitably to educational experience.

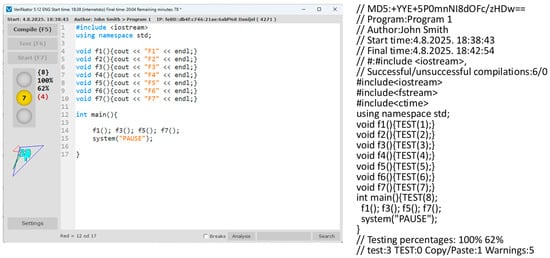

3. Case Study: Preliminary Assessment of Student Programming Assignments

As mentioned in the introduction, teaching in the Programming 2 course relies on the Verificator programming interface. For this experiment, it is important that Verificator individualizes student assignments in C++ (by adding metadata about the author and the time of code creation), makes copying assignments difficult (e.g., it prevents pasting code from outside the program), and records data on the tests performed [35]. This substantially standardizes student assignments and thus makes preliminary grading with AI easier (Figure 1).

Figure 1.

Verificator interface and output code.

Each programming task consists of four subtasks, collectively worth a maximum of four points. In addition to solving the tasks, students are required to perform testing. During testing, all program blocks that make up the current phase of development must be executed. Testing is expected to cover specified intervals, which are defined based on the number of program blocks. The percentage recorded reflects the proportion of completed program blocks relative to the total number of blocks in the program. For each interval that was not tested, a penalty of one point is applied—something the AI was expected to account for in the preliminary evaluation.
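The penalty rule can be expressed as a small function. The sketch rests on assumptions not stated in the text: the program is divided into a hypothetical number of equal-width testing intervals of (0, 100] percent of completed blocks (four by default, mirroring the four subtasks), a test run recorded at p% covers the interval containing p, and the final score is floored at zero.

```python
import math

def untested_intervals(test_percentages: list[float], n_intervals: int = 4) -> int:
    """Count equal-width intervals of (0, 100] that contain no recorded test.

    Assumption: a test at p% of completed program blocks covers interval
    index ceil(p * n_intervals / 100), i.e. ((k-1)*w, k*w] with w = 100 / n.
    """
    covered = {
        math.ceil(p * n_intervals / 100)
        for p in test_percentages
        if 0 < p <= 100
    }
    return n_intervals - len(covered)

def final_score(points: float, test_percentages: list[float], n_intervals: int = 4) -> float:
    """Points minus one per untested interval, floored at zero (assumed)."""
    return max(0.0, float(points - untested_intervals(test_percentages, n_intervals)))
```

Computing the penalty deterministically in the script, rather than asking the model to do it, would also remove one source of disagreement with the instructor's grade.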

3.1. Experimental Setup

The experiment focused on preliminary automated grading of student assignments using a large language model (LLM). From each exercise included in the experiment, two groups of student tasks were selected, each consisting of approximately 20 student assignments. The assignments were collected from three separate exercises distributed across the semester: one at the beginning, one in the middle, and one at the end. This ensured coverage of different learning phases and varying levels of task complexity.

All student assignments had previously been graded manually by instructors. For the purposes of this experiment, the same assignments were re-evaluated using AI. Specifically, Python scripts were developed to automate the assessment process, leveraging the Gemini 2.5 large language model, available via Google’s API. The AI system received structured task data, student code submissions, and relevant evaluation criteria (including subtask descriptions and expected output). It then generated scores and feedback for each assignment.

The AI was prompted using a well-structured instruction that included the task description, grading rubric, and student code. No advanced prompt engineering techniques (e.g., few-shot learning or chain-of-thought prompting) were applied; rather, the prompt directed the model to assign scores and comments according to the provided criteria. This clear, template-based input was designed to guide the LLM toward consistent output without ambiguity.

To evaluate the AI’s effectiveness, the results were compared with the original manual grades. This allowed for analysis of the consistency between human and AI grading, as well as identification of possible discrepancies, particularly in handling edge cases and partial solutions.

The goal of the experiment was to assess the feasibility and reliability of integrating AI-based preliminary grading into the workflow of programming education, with a focus on improving efficiency while preserving grading fairness and transparency.

3.2. Dataset

Student exercises were exported from the Moodle LMS. There were three exercises, each with two variants of the programming task. The number of student assignments per exercise and per task variant is shown in Table 1.

Table 1.

Number of student assignments per exercise and per task variant.

Each student assignment contains C++ program code together with metadata in the form of comments, including the testing percentages recorded by Verificator.

In addition to the student assignments, the AI needed the texts of the exercises, so that the submitted solutions could be compared with the tasks that had to be completed. Moreover, earlier tests showed that AI output is often not uniform: scores sometimes fall outside the given rating scale, the level of explanation varies, and so on. For this reason, a template was created as a text file that defines the layout of the report for each individual task. Variable positions are marked with placeholders, as in the following example:

---------------------------------------------------------------------

#task_file_name#

1. task (#task_topic1#):

#comment_for_task1#

#points_for_task1#

2. task (#task_topic2#):

#comment_for_task2#

#points_for_task2#

3. task (#task_topic3#):

#comment_for_task3#

#points_for_task3#

4. task (#task_topic4#):

#comment_for_task4#

#points_for_task4#

Points_without_penalization:#points_without_penalization#

Testing_percentages:#testing_percentages#

Homework points: #total_points_including_penalization#

The ‘#’ characters delimit placeholders; e.g., #task_file_name# stands for the file containing the student assignment (the file name is created by Verificator and includes the student's name and surname). In our experience, the AI can often infer what content should replace a placeholder, and where it cannot, it can be instructed explicitly.
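Because the placeholders follow a simple `#name#` pattern, a script can list them from the template and check whether the model left any of them unfilled in its report. The sketch below assumes placeholder names consist of lowercase letters, digits, and underscores, as in the template above; the function names are hypothetical.

```python
import re

# Placeholder names as used in the template: lowercase letters, digits, underscores.
PLACEHOLDER = re.compile(r"#([a-z_0-9]+)#")

def placeholders(template: str) -> list[str]:
    """Names of all placeholders, in order of first appearance."""
    seen = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen

def fill(template: str, values: dict[str, str]) -> str:
    """Substitute known placeholders; unknown ones are left untouched."""
    return PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), template)

def unfilled(report: str) -> list[str]:
    """Placeholders the model failed to replace (an empty list is expected)."""
    return placeholders(report)
```

A non-empty result from `unfilled` is a cheap signal that a report should be regenerated or reviewed by hand.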

3.3. Python Scripting

To automate the grading of student assignments using AI, a custom Python script was developed, leveraging the Google AI SDK made available through Google AI Studio. Access to the system was enabled via an API key, which authenticated the script and allowed communication with the Gemini 2.5 language model.

The script was configured with parameters chosen for the grading task: nucleus sampling with top_p = 0.95, a moderate level of output randomness (temperature = 1), and an output token limit set sufficiently high (up to 200,000 tokens) to accommodate detailed feedback. The selected model version, gemini-2.5-flash-preview-05-20, supports input contexts of up to one million tokens, allowing full student assignments and the associated grading criteria to be processed in a single request.
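The parameter values above correspond to fields of a Gemini `generateContent` request. The authors' script used the Google AI SDK; purely for illustration, and without making any network call, the sketch below builds the equivalent JSON body for the public REST endpoint, using the field names `contents`, `generationConfig`, `temperature`, `topP`, and `maxOutputTokens`. The output-token value is passed in explicitly and should not exceed the model's documented maximum output length. The API key is supplied with the HTTP request, not in this body.

```python
import json

MODEL = "gemini-2.5-flash-preview-05-20"  # model version named in the text
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    f"{MODEL}:generateContent"
)

def build_request(prompt: str, max_output_tokens: int,
                  temperature: float = 1.0, top_p: float = 0.95) -> str:
    """JSON body for a single-turn generateContent call (no network I/O here)."""
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "temperature": temperature,
            "topP": top_p,
            "maxOutputTokens": max_output_tokens,
        },
    }
    return json.dumps(body)
```

Lowering the temperature below 1 is a common way to make repeated runs on the same submission more similar, which may be worth testing in a follow-up.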

To provide the model with the necessary information, the script compiled a structured input that consisted of:

- A template defining the layout of the grading report (including placeholders for comments and scores);

- The textual description of the programming task assigned to students;

- All submitted C++ source files;

- And a final instruction prompt directing the AI to evaluate the assignments according to specified criteria.

Each element of the input was tagged with an identifier derived from the corresponding file name to ensure clarity and separation between sources. The model then processed this structured input and generated a grading report for each assignment. The output included: the file name of the student submission, per-task comments and scores, a total score before penalties, testing percentages (as recorded by Verificator), and the final score after applying any penalties due to failed tests. This approach was designed to yield consistent, structured AI evaluation across a diverse set of student submissions.
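The tagged input can be assembled with a few lines of Python. The text states only that each element was tagged with an identifier derived from its file name; the `=== BEGIN name ===` / `=== END name ===` delimiters and the function names below are hypothetical.

```python
from pathlib import Path

def tagged(name: str, content: str) -> str:
    """Delimit one input element with markers derived from its file name."""
    return f"=== BEGIN {name} ===\n{content.rstrip()}\n=== END {name} ==="

def build_prompt(template: Path, task: Path, submissions: list[Path],
                 instruction: str) -> str:
    """Template, task text, every C++ file (sorted by name), then the final
    instruction, in that order."""
    parts = [
        tagged(template.name, template.read_text(encoding="utf-8")),
        tagged(task.name, task.read_text(encoding="utf-8")),
    ]
    parts += [tagged(p.name, p.read_text(encoding="utf-8")) for p in sorted(submissions)]
    parts.append(instruction)
    return "\n\n".join(parts)
```

Sorting the submissions makes the prompt identical across runs for the same set of files, which helps when comparing outputs.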

3.4. Hallucinations

One notable limitation in the use of large language models for assessment tasks is the phenomenon of hallucinations—instances where the AI generates confident but factually incorrect or unfounded output. In the context of programming assignment grading, such hallucinations could manifest as incorrect feedback, misinterpretation of student code logic, or even fabricated explanations for deducted points. This poses a serious risk to the reliability and fairness of the grading process, particularly when human oversight is limited or absent. Although no significant hallucinations were observed in our controlled experimental setup, their potential occurrence warrants caution. Future implementations should include systematic validation procedures and flagging mechanisms to detect and correct such anomalies before feedback is delivered to students.
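As a sketch of such a flagging mechanism, the check below compares an already-parsed AI report with facts the script can verify on its own: the set of submitted file names, the per-subtask scale, the sum of subtask points, and the testing percentages recorded by Verificator. The dictionary keys, the one-point-per-subtask scale, and the tolerance are assumptions made for illustration.

```python
def flag_report(report: dict, submitted: set[str], recorded_testing: list[float],
                max_per_task: float = 1.0, tol: float = 1e-6) -> list[str]:
    """Return human-readable reasons to hold a report for instructor review."""
    reasons = []
    if report["file"] not in submitted:
        reasons.append(f"unknown file name {report['file']!r}")
    points = report["task_points"]
    if any(not 0 <= p <= max_per_task for p in points):
        reasons.append("subtask points outside the grading scale")
    if abs(sum(points) - report["points_without_penalization"]) > tol:
        reasons.append("subtotal does not equal the sum of subtask points")
    if sorted(report["testing_percentages"]) != sorted(recorded_testing):
        reasons.append("testing percentages differ from Verificator metadata")
    if report["total"] > report["points_without_penalization"] + tol:
        reasons.append("final score exceeds the unpenalized subtotal")
    return reasons
```

These checks cannot detect a wrong but internally consistent explanation of the code; they only catch fabrications that contradict verifiable data.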

4. Experiment Results

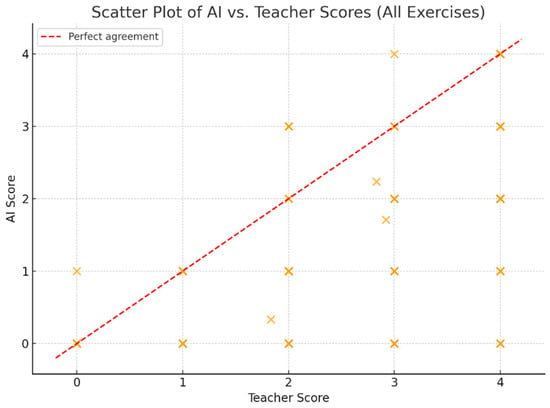

Based on the collected student assignments, the evaluation results were analyzed by comparing AI-generated scores to those assigned by human instructors. Table 2 presents the average scores and correlation coefficients for three programming exercises administered at different points in the semester.

Table 2.

AI ratings compared to teacher ratings.

While the overall correlation between AI and human scores ranged from moderate to high (r ≈ 0.55–0.73), a consistent trend was observed: the AI system tended to assign lower scores than teachers across all exercises. This difference was particularly noticeable in the mid-semester exercise, where the AI was markedly stricter.

To visualize this relationship, a scatter plot (Figure 2) was created using all available individual scores from three exercises (E1, E5, and E9), with each point representing one student submission. The diagram illustrates how AI scores align with teacher scores, and includes a reference line denoting perfect agreement. A clear cluster of points below the diagonal line suggests a general tendency of the AI to underrate compared to human instructors.

Figure 2.

Scatter plot of AI vs. teacher scores for all exercises.

These results point to the AI’s limited flexibility in handling partial or unconventional solutions. While human instructors may account for student effort, style, or creativity, the AI appears to follow grading rubrics more rigidly. This may result in penalizing non-standard but valid submissions.

The scatter plot illustrates notable deviations from the ideal line of agreement, with the AI frequently assigning lower scores than instructors for the same submissions. Since no calibration or score normalization was applied, systematic differences between AI and human scores are expected. These findings highlight the current limitations of the system and underscore the need for human moderation. Correlation alone should not be interpreted as evidence of grading accuracy or fairness without further agreement analysis.

Such findings suggest that while AI can be helpful for preliminary assessment, it is not yet ready to replace human judgment in nuanced grading scenarios. The observed variation reinforces the importance of human oversight, particularly when AI-generated scores are used to inform high-stakes academic decisions.

5. Discussion and Conclusions

5.1. Implications

The integration of AI tools into the preliminary assessment of student programming assignments offers several clear benefits. Most notably, AI-assisted grading significantly accelerates the evaluation process, allowing instructors to reallocate time toward qualitative feedback and individualized student support. Manual grading of a single programming submission typically requires between 5 and 10 min of instructor time, whereas the automated system produced structured feedback within seconds. This reflects an approximate tenfold improvement in throughput, allowing the AI pipeline to process multiple submissions per minute rather than per hour. Furthermore, the use of large language models (LLMs) such as Gemini 2.5 shows potential for improving grading consistency, particularly in large cohorts where inter-rater variability is a concern. By applying a uniform rubric, AI systems can reduce subjective variation and ensure more standardized evaluation. However, the AI’s strict adherence to criteria may lead to underappreciation of student creativity, partial solutions, or unconventional logic, which instructors might otherwise consider valid. These observations suggest that AI can serve as a valuable assistant, but not as a replacement for human pedagogical judgment.
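The throughput figure can be made explicit. With a manual grading time $t_h$ of 5 to 10 min per submission, a tenfold speed-up $S$ requires an AI processing time $t_{\mathrm{AI}}$ of at most one tenth of that. For a group of about $n = 20$ submissions (the group size in Section 3.1), the corresponding manual effort is:

$$
\begin{aligned}
S &= \frac{t_h}{t_{\mathrm{AI}}} \ge 10 \iff t_{\mathrm{AI}} \le \frac{t_h}{10} = 30\ \mathrm{s} \text{ to } 60\ \mathrm{s} \quad (t_h = 5 \text{ to } 10\ \mathrm{min}),\\
T_h &= n\,t_h \approx 20 \times (5 \text{ to } 10)\ \mathrm{min} = 100 \text{ to } 200\ \mathrm{min}.
\end{aligned}
$$

Since the pipeline returned feedback within seconds, the tenfold figure reads as a conservative estimate under these assumptions. It does not include the time instructors spend reviewing AI output in the recommended hybrid model.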

5.2. Limitations

Despite promising results, several limitations affect the generalizability and robustness of this study. First, the AI model occasionally assigned significantly lower scores than human instructors, particularly for partially correct or atypical code, highlighting a need for further refinement in handling edge cases. Second, the study relied on correlation as a primary metric of agreement; while useful, correlation does not capture the magnitude or direction of discrepancies at the individual level. No systematic testing for hallucinations was conducted in the current setup. However, AI-generated grade reports were manually cross-checked against instructor-assigned grades to identify any clear inconsistencies. Subtle factual inaccuracies or logical errors may have gone unnoticed, as no multi-run consistency checks or adversarial prompts were applied. Future implementations would benefit from structured hallucination audits and validation protocols to ensure reliability prior to releasing feedback to students. Additionally, the use of a single LLM version and a specific assignment format limits the transferability of the findings to broader educational settings. Technical limitations such as API quotas, processing time, and dependence on cloud infrastructure also pose challenges for large-scale deployment.

5.3. Recommendations for Practice

To ensure responsible use of AI in grading, institutions should:

- Implement hybrid grading models in which AI provides preliminary scores that are reviewed or moderated by instructors.

- Provide transparency to students about how AI tools are used and ensure consent where applicable.

- Periodically validate AI grading accuracy against human evaluation, especially in high-stakes settings.

5.4. Future Work

Future studies should explore the performance of AI models in more diverse and complex educational contexts, such as open-ended assignments or cross-language code submissions. It is also important to develop explainability mechanisms that allow instructors and students to understand why a particular grade was assigned. This could include visual summaries of grading rationale or confidence indicators. Further research should investigate student perceptions of AI-assisted grading and assess whether transparency measures influence trust and acceptance. Finally, integrating self-assessment or peer-assessment data with AI output may offer a more holistic evaluation framework.

Author Contributions

Conceptualization, A.B. and D.R.; methodology, A.B. and D.R.; software, D.R.; validation, A.B., D.R. and A.Č.; formal analysis, A.B.; investigation, D.R.; resources, D.R.; writing—original draft preparation, D.R.; writing—review and editing, A.B.; visualization, A.B.; supervision, D.R.; funding acquisition, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were not required for this study, as it did not involve human participants, experiments, or interventions. No student participated in any phase of the research, nor were any personal or identifiable data collected. The study analyzed anonymized, previously graded programming assignments that were part of a regular university course. Artificial intelligence tools were applied retrospectively to evaluate how automated grading compared to the instructor’s original assessments. All data were fully anonymized before analysis, and no new tasks, experiments, or surveys were conducted with students. According to Article 8, paragraph 1 of the Croatian Act on Scientific Activity and Higher Education (Official Gazette 123/03, 198/03, 105/04, 174/04, 2/07, 46/07, 45/09, 63/11, 94/13, 139/13, 101/14, 60/15, 131/17, 96/18, 98/19, and 57/22), ethical approval is required only for research that directly involves human or animal subjects. As this study does not meet those conditions, no ethical approval or exemption from an ethics committee was necessary.

Informed Consent Statement

Informed consent was not required for this study, as it did not involve human participants, experiments, or interventions. No student participated in any phase of the research, nor were any personal or identifiable data collected.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to Danijel Radošević.

Conflicts of Interest

Author Andrej Čep was employed by the company Inpro d.o.o. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Radošević, D.; Orehovački, T.; Lovrenčić, A. Verificator: Educational Tool for Learning Programming. Inform. Educ. 2009, 8, 261–280.

- Radošević, D. Application of Verificator in Teaching Programming During the Pandemic. Politech. Des. 2020, 8, 245–252.

- Tseng, E.Q.; Huang, P.C.; Hsu, C.; Wu, P.Y.; Ku, C.T.; Kang, Y. CodEv: An Automated Grading Framework Leveraging Large Language Models for Consistent and Constructive Feedback. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 5442–5449.

- Cisneros-González, J.; Gordo-Herrera, N.; Barcia-Santos, I.; Sánchez-Soriano, J. JorGPT: Instructor-Aided Grading of Programming Assignments with Large Language Models (LLMs). Future Internet 2025, 17, 265.

- Čep, A.; Bernik, A. ChatGPT and Artificial Intelligence in Higher Education: Literature Review Powered by Artificial Intelligence. In Intelligent Computing; Arai, K., Ed.; SAI 2024; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2024; Volume 1018.

- Beese, J. AI and personalized learning: Bridging the gap with modern educational goals. arXiv 2019, arXiv:2503.12345.

- Walkington, C.; Bernacki, M.L. Personalization of instruction: Design dimensions and implications for cognition. J. Exp. Educ. 2017, 86, 50–68.

- Ayeni, O.O.; Al Hamad, N.M.; Chisom, O.N.; Osawaru, B.; Adewusi, O.E. AI in education: A review of personalized learning and educational technology. GSC Adv. Res. Rev. 2024, 18, 261–271.

- Tan, L.Y.; Hu, S.; Yeo, D.J.; Cheong, K.H. Artificial intelligence-enabled adaptive learning platforms: A review. Comput. Educ. Artif. Intell. 2025, 9, 100429.

- Tang, K.-Y.; Chang, C.-Y.; Hwang, G.-J. Trends in artificial intelligence-supported e-learning: A systematic review and co-citation network analysis (1990–2019). Interact. Learn. Environ. 2021, 29, 2134–2152.

- UNESCO. AI and Education: Guidance for Policy-Makers. United Nations Educational, Scientific and Cultural Organization. 2021. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000376709 (accessed on 2 October 2025).

- Kwid, G.; Sarty, N.; Dazni, Y. A Review of AI Tools: Definitions, Functions, and Applications for K-12 Education. AI Comput. Sci. Robot. Technol. 2024, 3, 1–22.

- Walter, Y. Embracing the future of Artificial Intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. Int. J. Educ. Technol. High. Educ. 2024, 21, 15.

- Vieriu, A.M.; Petrea, G. The Impact of Artificial Intelligence (AI) on Students’ Academic Development. Educ. Sci. 2025, 15, 343.

- Ahn, S. Data science through natural language with ChatGPT’s Code Interpreter. Transl. Clin. Pharmacol. 2024, 32, 73–82.

- Koçak, D. Examination of ChatGPT’s Performance as a Data Analysis Tool. Educ. Psychol. Meas. 2025, 85, 00131644241302721.

- Klishevich, E.; Denisov-Blanch, Y.; Obstbaum, S.; Ciobanu, I.; Kosinski, M. Measuring determinism in large language models for software code review. arXiv 2025, arXiv:2502.20747.

- Katz, A.; Shakir, U.; Chambers, B. The utility of large language models and generative AI for education research. arXiv 2023, arXiv:2305.18125.

- Zhu, Q.; Guo, D.; Shao, Z.; Yang, D.; Wang, P.; Xu, R.; Wu, Y.; Li, Y.; Gao, H.; Ma, S.; et al. Deepseek-coder-v2: Breaking the barrier of closed-source models in code intelligence. arXiv 2024, arXiv:2406.11931.

- Li, A.; Gong, B.; Yang, B.; Shan, B.; Liu, C.; Zhu, C.; Zhang, C.; Guo, C.; Chen, D.; Li, D.; et al. Minimax-01: Scaling foundation models with lightning attention. arXiv 2025, arXiv:2501.08313.

- Kılınç, S. Comprehensive AI assessment framework: Enhancing educational evaluation with ethical AI integration. J. Educ. Technol. Online Learn. 2024, 7, 521–540.

- Nguyen, K.V. The Use of Generative AI Tools in Higher Education: Ethical and Pedagogical Principles. J. Acad. Ethics 2025, 23, 1435–1455.

- Perron, B.E.; Luan, H.; Qi, Z.; Victor, B.G.; Goyal, K. Demystifying application programming interfaces (APIs): Unlocking the power of Large Language Models and other web-based AI services in social work research. J. Soc. Soc. Work Res. 2025, 16, 519–556.

- Pérez-Jorge, D.; González-Afonso, M.C.; Santos-Álvarez, A.G.; Plasencia-Carballo, Z.; Perdomo-López, C.d.l.Á. The Impact of AI-Driven Application Programming Interfaces (APIs) on Educational Information Management. Information 2025, 16, 540.

- Tsai, Y.C. Empowering learner-centered instruction: Integrating ChatGPT python API and tinker learning for enhanced creativity and problem-solving skills. In International Conference on Innovative Technologies and Learning; Springer Nature: Cham, Switzerland, 2023; pp. 531–541.

- Messer, M.; Brown, N.C.; Kölling, M.; Shi, M. Automated grading and feedback tools for programming education: A systematic review. ACM Trans. Comput. Educ. 2024, 24, 1–43.

- Gouseti, A.; James, F.; Fallin, L.; Burden, K. The ethics of using AI in K-12 education: A systematic literature review. Technol. Pedagog. Educ. 2024, 34, 161–182.

- Al-Zahrani, A.M. Unveiling the shadows: Beyond the hype of AI in education. Heliyon 2024, 10, e30696.

- Bulut, O.; Cutumisu, M.; Yilmaz, M. Ethical challenges in AI-based educational measurement. arXiv 2024, arXiv:2406.18900.

- Chinta, R.; Qazi, M.; Raza, M. FairAIED: A review of fairness, accountability, and bias in educational AI systems. arXiv 2024, arXiv:2407.18745.

- Chaudhry, A.; Wolf, J.; Gervasio, M. A Transparency Index for AI in education. arXiv 2022, arXiv:2206.03220.

- Zhai, X. AI-generated content and its impact on student learning and critical thinking: A pedagogical reflection. Smart Learn. Environ. 2024, 11, 12.

- Chan, C.K.Y. A comprehensive AI policy education framework for university teaching and learning. Int. J. Educ. Technol. High. Educ. 2023, 20, 38.

- Gobrecht, A.; Tuma, F.; Möller, M.; Zöller, T.; Zakhvatkin, M.; Wuttig, A.; Sommerfeldt, H.; Schütt, S. Beyond human subjectivity and error: A novel AI grading system. arXiv 2024, arXiv:2405.04323.

- Orehovački, T.; Radošević, D.; Konecki, M. Perceived Quality of Verificator in Teaching Programming. In Proceedings of the 37th International Convention on Information Technology, Electronics and Microelectronics (MIPRO 2014), Opatija, Croatia, 26–30 May 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).