Prediction of Postoperative ICU Requirements: Closing the Translational Gap with a Real-World Clinical Benchmark for Artificial Intelligence Approaches

Abstract

1. Introduction

2. Materials and Methods

- •

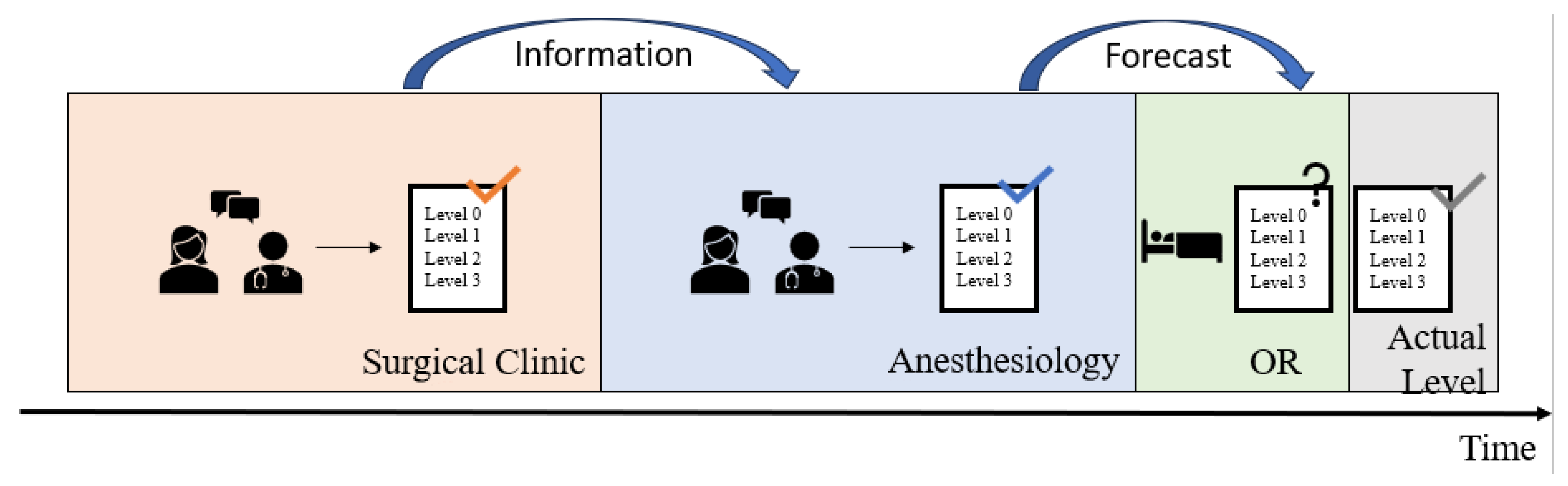

- A patient assigned to Level 0 requires standard postoperative care in the recovery room followed by timely transfer to a general ward (=standard care) when transfer criteria are met. This corresponds to the international standard of a PACU. This level was set as the system default.

- •

- Level 1 patients are expected to stay in the PACU for an extended period of at least 4 h, e.g., including overnight monitoring. Level 2 patients are monitored at the IMC during the postoperative phase.

- •

- For Level 3 patients, postoperative care in an ICU is assumed to be necessary.
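As a minimal sketch, the four care levels can be represented as an ordered enumeration with Level 0 as the fallback. The names `CareLevel`, `DEFAULT_LEVEL`, and `resolve_level` are hypothetical and are not taken from the hospital's scheduling system; the sketch only assumes that the levels are ordinal, with higher numbers meaning more intensive care.

```python
from enum import IntEnum


class CareLevel(IntEnum):
    """Postoperative care levels as listed above (identifier names are hypothetical)."""

    STANDARD_PACU = 0  # recovery room, then transfer to a general ward
    EXTENDED_PACU = 1  # PACU stay of at least 4 h, possibly overnight
    IMC = 2            # monitoring in intermediate care
    ICU = 3            # postoperative intensive care


# The text states that Level 0 was set as the system default.
DEFAULT_LEVEL = CareLevel.STANDARD_PACU


def resolve_level(requested=None):
    """Return the requested level, or the default when no level was entered."""
    if requested is None:
        return DEFAULT_LEVEL
    return CareLevel(requested)  # raises ValueError for values outside 0..3
```

Using an `IntEnum` keeps the levels comparable, so a check such as `predicted < actual` reads directly as "the prediction underestimated the required level of care".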

2.1. Clinical Practice at the University Hospital Augsburg

2.2. Statistics

3. Results

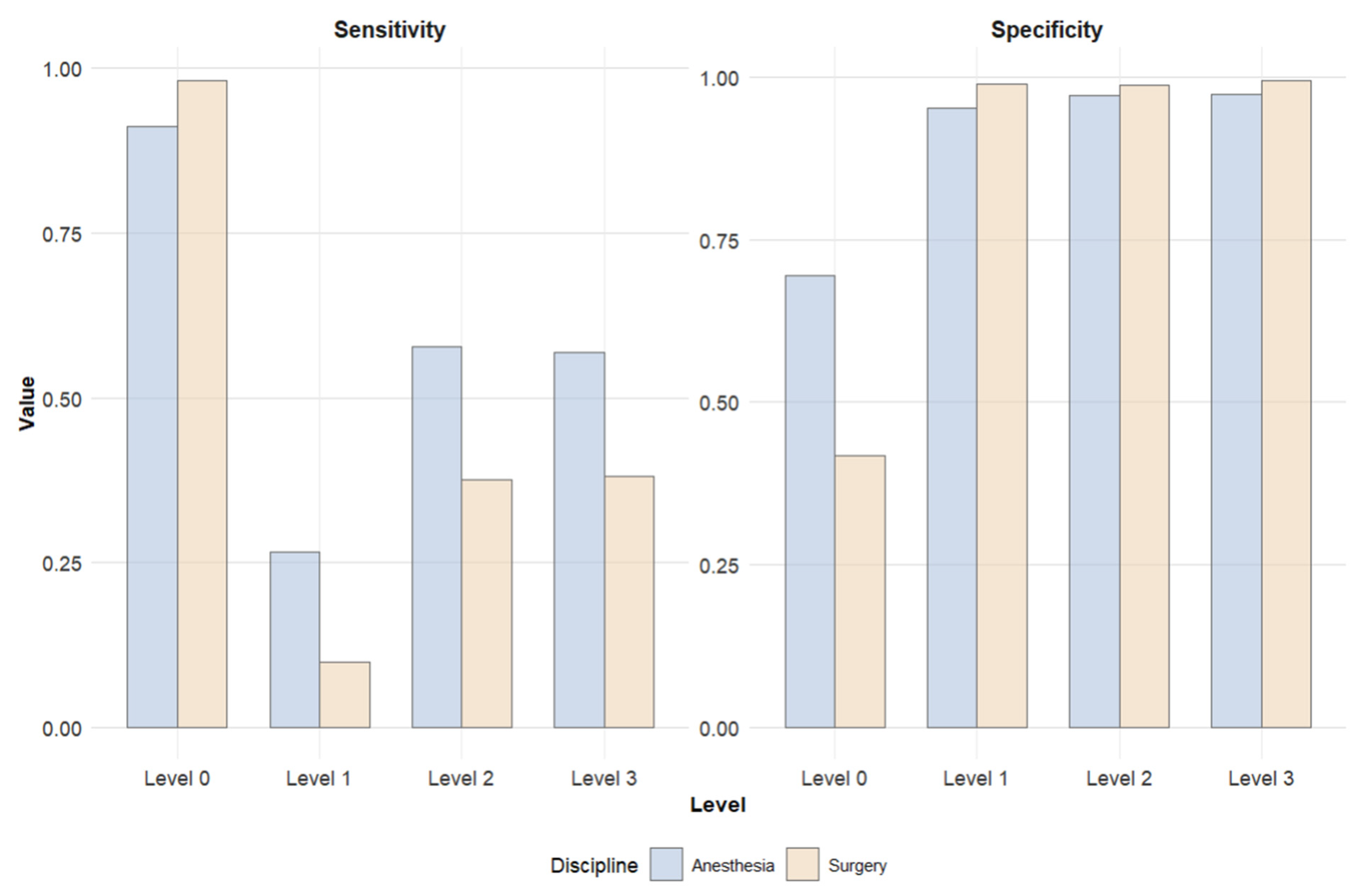

3.1. Surgery-Based Predictions

3.2. Anesthesiology-Based Predictions

3.3. Comparison of Predictions

4. Discussion

4.1. Summary of the Results and Their Significance for the Establishment of Future AI Models

4.2. Limitations

4.3. Strengths

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ICU | Intensive Care Unit |
| ML | Machine Learning |
| PACU | Post-Anesthesia Care Unit |
| IMC | Intermediate Care |
| SVM | Support Vector Machine |
| OR | Operating Room |
| AI | Artificial Intelligence |


| Actual Postoperative Level of Care | Surgery-Based Prediction: Level 0 | Level 1 | Level 2 | Level 3 | Total |
|---|---|---|---|---|---|
| Level 0 | 31,480 (98.21%) | 296 (0.92%) | 125 (0.39%) | 152 (0.47%) | 32,053 |
| Level 1 | 736 (86.59%) | 84 (9.88%) | 21 (2.47%) | 9 (1.06%) | 850 |
| Level 2 | 492 (57.14%) | 41 (4.76%) | 324 (37.63%) | 4 (0.46%) | 861 |
| Level 3 | 772 (44.78%) | 28 (1.62%) | 268 (15.55%) | 656 (38.05%) | 1724 |
| Total | 33,480 | 449 | 738 | 821 | 35,488 |

| Actual Postoperative Level of Care | Anesthesiology-Based Prediction: Level 0 | Level 1 | Level 2 | Level 3 | Total |
|---|---|---|---|---|---|
| Level 0 | 29,212 (91.14%) | 1544 (4.82%) | 532 (1.66%) | 765 (2.39%) | 32,053 |
| Level 1 | 517 (60.82%) | 226 (26.59%) | 85 (10.00%) | 22 (2.59%) | 850 |
| Level 2 | 197 (22.88%) | 78 (9.06%) | 498 (57.84%) | 88 (10.22%) | 861 |
| Level 3 | 335 (19.43%) | 64 (3.71%) | 345 (20.01%) | 980 (56.84%) | 1724 |
| Total | 30,261 | 1912 | 1460 | 1855 | 35,488 |
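Because the care levels are ordered, the two confusion matrices above can also be read in terms of exact agreement, under-prediction (predicted level lower than the actual level), and over-prediction. The following sketch counts these three groups from the tabulated cell counts. The function name `agreement_summary` and the variable names are illustrative assumptions, and the three-way split is an additional reading of the tables rather than an analysis reported by the authors.

```python
# Rows: actual level 0..3, columns: predicted level 0..3 (counts from the tables above).
SURGERY = [
    [31480, 296, 125, 152],
    [736, 84, 21, 9],
    [492, 41, 324, 4],
    [772, 28, 268, 656],
]
ANESTHESIOLOGY = [
    [29212, 1544, 532, 765],
    [517, 226, 85, 22],
    [197, 78, 498, 88],
    [335, 64, 345, 980],
]


def agreement_summary(matrix):
    """Split all cases into exact, under-predicted, and over-predicted."""
    n = len(matrix)
    exact = sum(matrix[i][i] for i in range(n))
    # Below the diagonal: the predicted level is lower than the actual level.
    under = sum(matrix[i][j] for i in range(n) for j in range(i))
    # Above the diagonal: the predicted level is higher than the actual level.
    over = sum(matrix[i][j] for i in range(n) for j in range(i + 1, n))
    return {"exact": exact, "under": under, "over": over,
            "total": exact + under + over}


for name, matrix in (("surgery", SURGERY), ("anesthesiology", ANESTHESIOLOGY)):
    summary = agreement_summary(matrix)
    shares = {k: round(v / summary["total"], 3) for k, v in summary.items()}
    print(name, summary, shares)
```

Under-prediction and over-prediction are kept separate because they have different consequences: an underestimated level can leave a patient without a reserved bed, while an overestimated level ties up capacity that is not needed.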

AIN: anesthesiology-based prediction; SUR: surgery-based prediction; PPV: positive predictive value; NPV: negative predictive value.

| Actual Postoperative Level of Care | Sensitivity AIN | Sensitivity SUR | Specificity AIN | Specificity SUR | PPV AIN | PPV SUR | NPV AIN | NPV SUR | Prevalence AIN | Prevalence SUR |
|---|---|---|---|---|---|---|---|---|---|---|
| Level 0 | 0.911 | 0.982 | 0.695 | 0.418 | 0.965 | 0.940 | 0.456 | 0.715 | 0.903 | 0.903 |
| Level 1 | 0.266 | 0.099 | 0.951 | 0.989 | 0.118 | 0.187 | 0.981 | 0.978 | 0.024 | 0.024 |
| Level 2 | 0.578 | 0.376 | 0.972 | 0.988 | 0.341 | 0.439 | 0.989 | 0.985 | 0.024 | 0.024 |
| Level 3 | 0.568 | 0.381 | 0.974 | 0.995 | 0.528 | 0.799 | 0.978 | 0.969 | 0.049 | 0.049 |
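The per-level values in the table above appear to follow from a one-vs-rest reading of the two confusion matrices: for each level, that level is treated as the positive class and the other three levels together as the negative class. The sketch below recomputes the metrics under that assumption. The function name `one_vs_rest_metrics` and the variable names are illustrative and do not come from the article.

```python
# Rows: actual level 0..3, columns: predicted level 0..3 (counts from the confusion matrices).
SUR = [
    [31480, 296, 125, 152],
    [736, 84, 21, 9],
    [492, 41, 324, 4],
    [772, 28, 268, 656],
]
AIN = [
    [29212, 1544, 532, 765],
    [517, 226, 85, 22],
    [197, 78, 498, 88],
    [335, 64, 345, 980],
]


def one_vs_rest_metrics(matrix, level):
    """Treat `level` as the positive class and all other levels as negative."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[level][level]
    fn = sum(matrix[level]) - tp                  # actual `level`, predicted otherwise
    fp = sum(row[level] for row in matrix) - tp   # predicted `level`, actual otherwise
    tn = total - tp - fn - fp
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "prevalence": (tp + fn) / total,
    }


for level in range(4):
    for name, matrix in (("AIN", AIN), ("SUR", SUR)):
        metrics = one_vs_rest_metrics(matrix, level)
        print(level, name, {k: round(v, 3) for k, v in metrics.items()})
```

Prevalence depends only on the actual level of care, which is why the AIN and SUR prevalence columns are identical.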


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Althammer, A.; Berger, F.; Spring, O.; Simon, P.; Girrbach, F.; Dieing, M.; Brunner, J.O.; Shmygalev, S.; Bartenschlager, C.C.; Heller, A.R. Prediction of Postoperative ICU Requirements: Closing the Translational Gap with a Real-World Clinical Benchmark for Artificial Intelligence Approaches. Information 2025, 16, 888. https://doi.org/10.3390/info16100888
