Abstract

Text classification (TC) is a subtask of natural language processing (NLP) that categorizes text pieces into predefined classes based on their textual content and thematic aspects. This process typically includes the training of a Machine Learning (ML) model on a labeled dataset, where each text example is associated with a specific class. Recent progress in Deep Learning (DL) enabled the development of deep neural transformer models, surpassing traditional ML ones. In any case, works of the topic classification literature prioritize high-resource languages, particularly English, while research efforts for low-resource ones, such as Greek, are limited. Taking the above into consideration, this paper presents: (i) the first Greek social media topic classification dataset; (ii) a comparative assessment of a series of traditional ML models trained on this dataset, utilizing an array of text vectorization methods including TF-IDF, classical word and transformer-based Greek embeddings; (iii) a fine-tuned GREEK-BERT-based TC model on the same dataset; (iv) key empirical findings demonstrating that transformer-based embeddings significantly increase the performance of traditional ML models, while our fine-tuned DL model outperforms previous ones. The dataset, the best-performing model, and the experimental code are made public, aiming to augment the reproducibility of this work and advance future research in the field.

1. Introduction

Natural Language Processing (NLP) focuses on the development of approaches that aim to address a series of challenges that are related to processing and understanding human language. One of them concerns the semantic processing of vast amounts of textual volumes available on the Internet. To efficiently address this challenge, NLP adopts a multi-disciplinary approach, integrating advancements from research areas such as linguistics, computer science, and artificial intelligence.

Text classification (TC) is a highly interesting subtask of NLP, which categorizes text pieces into predefined classes based on their textual content and thematic aspects. Manual annotation of large textual volumes by field experts is time-consuming and laborious [1], thus necessitating the automation of this subtask. TC typically includes training a Machine Learning (ML) model on a labeled dataset, where each text example is associated with a specific class. Several TC applications can be found in the literature, concerning among others: (i) email spam detection; (ii) sentiment analysis on product reviews, and (iii) topic classification of news articles [1,2,3].

Recent TC reviews distinguish between two types of TC approaches, namely the traditional and the deep learning (DL) ones; the former utilize conventional ML models, while the latter achieve state-of-the-art results [1,2,3]. Furthermore, these reviews show that the Transformer-based [4] TC approaches outperform all previously considered DL models. Specifically, Transformer models utilize the encoder-decoder architecture combined with attention mechanisms, enabling parallel processing of the entire input text, rather than the sequential processing of previous DL approaches (e.g., RNNs). This efficient processing makes them suitable for large-scale corpora.

In any case, TC research targets high-resource languages (e.g., English), while works on low-resource ones are limited. Recently, Transformer-based models have achieved state-of-the-art performance in Greek NLP tasks [5,6,7]. However, DL techniques for modern Greek are still understudied [7] and the required resources (e.g., datasets, models) for their development are limited [8]. To the best of our knowledge, there are no previous works that consider the creation of a social media dataset for Greek topic classification. Furthermore, previous works on Greek text classification do not investigate possible performance improvements for traditional ML classifiers using transformer-based embeddings.

Aiming to fill the above research gaps, this study expands the limited number of Greek NLP datasets by introducing a novel one and studies the performance benefits of applying various transformer-based models for Greek TC. Overall, the contribution of our work includes: (i) the introduction of the first social media Greek topic classification dataset; (ii) a comparative assessment of a series of traditional ML models trained on this dataset, utilizing an array of text vectorization methods including TF-IDF, classical word and transformer-based Greek embeddings; (iii) a fine-tuned GREEK-BERT-based topic classification model on the same dataset; (iv) key empirical findings demonstrating that transformer-based embeddings significantly increase the performance of traditional ML models, while our fine-tuned DL model outperforms all previous ones. Aiming to enhance the reproducibility of this work and advance future research efforts in the area under consideration, we have made our dataset, the best-performing fine-tuned model (https://huggingface.co/IMISLab accessed on 23 July 2024), and the experimental code publicly available (https://github.com/NC0DER/GreekReddit accessed on 23 July 2024).

The rest of this work is organized as follows. A list of traditional ML and recent DL model architectures is presented in Section 2, along with Greek TC datasets and text vectorization methods. Section 3 elaborates on the proposed Greek NLP dataset for topic classification, accompanied by a comparative analysis with other Greek datasets from the NLP literature. Section 4 describes the proposed TC approach, the experimental setup, and the evaluation procedure. Finally, Section 5 discusses concluding remarks, provides a series of insights about current Greek NLP techniques and models, and sketches directions for future research endeavors.

2. Related Work

This section presents noteworthy works on Greek NLP and topic classification; specifically, Section 2.1 reports on ML and DL architectures that can be adapted for Greek TC; Section 2.2 presents Greek embedding models for TC; Section 2.3 reviews Greek NLP TC datasets; Section 2.4 presents various works for social media topic classification.

2.1. Greek Text Classification Models

Traditional ML classification approaches rely on conventional ML models, including a linear model fitted with Stochastic Gradient Descent (SGD) [9], the Passive Aggressive (PA) family of models [10], Gradient Boosting Decision Trees (GB) [11], and shallow neural networks with zero to two hidden layers [3], such as Multi-Layer Perceptron (MLP) [12]. This work focuses on the SGD, GB, PA, and MLP models. In general, such approaches rely on extensive data preprocessing and meticulous feature engineering to obtain competitive results [3]. For the case of TC, these features are textual vector representations, such as TF-IDF or several word- or transformer-based embeddings (more information is given below, in Section 2.2).

GREEK-BERT [5] is a pre-trained Greek language model (LM) built using BERT [13]. This model is trained on a large generic dataset that contains Greek textual data from three different sources: (i) Wikipedia articles, (ii) documents from the European Parliament Proceedings [14], and (iii) the OSCAR dataset (https://oscar-project.org/ accessed on 23 July 2024). This large dataset is formed using multiple preprocessing steps, such as lowercasing and accent removal. The same corpus is used to obtain a 35,000-token vocabulary for the model. GREEK-BERT’s pre-training process includes two learning tasks, namely Masked Language Modelling and Next Sentence Prediction. GREEK-BERT has been fine-tuned for various downstream tasks (e.g., TC and Named Entity Recognition, NER) and has demonstrated better performance than previously introduced DL models.

GREEK-LEGAL-BERT [15] is a pre-trained Greek LM, which is introduced for NER on Greek legal texts. The model’s pre-training includes a 5 GB dataset that contains the entirety of Greek Legislation. The model has been evaluated against GREEK-BERT and achieved similar results with respect to the NER task. Another recent work [6] evaluates GREEK-LEGAL-BERT on a Greek classification dataset, alongside other TC models (traditional ML, DL, and GREEK-BERT models), and reveals that the two BERT-based models have the best overall performance.

2.2. Greek Embeddings

spaCy is an open-source Python toolkit that offers support for several NLP tasks including TC. It provides pre-trained NLP models for numerous languages, including Greek. Some of these models (https://spacy.io/models/el accessed on 23 July 2024) contain Greek word embeddings, which are created from Common Crawl and Wikipedia datasets using fastText models [16].

Similar works for Greek classical word embeddings are presented below. Outsios and colleagues [17] have developed and evaluated multiple Greek word embedding models, based on Word2Vec [18] and fastText [19], utilizing multiple datasets (i.e., Wikipedia, Common Crawl, and the Greek web pages corpus [20]). They evaluate all these models through two evaluation datasets that focus on word analogies and word similarities. Their experimental results reveal that all considered models can create meaningful word-embedding representations. Lioudakis and colleagues [21] have introduced a new method for creating word embeddings, called Continuous-Bag-of-Skip-grams (CBOS). Their method combines earlier word embedding models and outperforms them on multiple NLP tasks, including Greek TC.

Sentence-transformers is an open-source Python library that offers state-of-the-art sentence embeddings created using the Sentence-BERT model [22]. This library provides a collection of pre-trained embedding models, which perform exceptionally in many NLP tasks, such as semantic search and TC. Amongst the provided models, there are several multilingual ones supporting over 100 languages, including Greek.

Finally, Papadopoulos and colleagues [23] have trained a semantic textual similarity model using Sentence-Transformers which can be used to infer Greek sentence embeddings for many NLP tasks. This model is called lighteternal/stsb-xlm-r-greek-transfer and is used in an automated claim validation framework for online news portals, namely FarFetched.

2.3. Greek Text Classification Datasets

Greek Legal Code (GLC) [6] is designed for the task of legal TC and contains a collection of 47,563 labeled documents that are classified into multi-level categories. These categories include 47 legislative volumes, 389 chapters, and 2285 subject categories. These documents are retrieved from the Official Government Gazette of the Hellenic Parliament and converted from their original form into a JSON format. This dataset has been utilized to evaluate a variety of TC models, including traditional ML and recent DL ones.

Pitenis and colleagues [24] have introduced a manually annotated TC dataset, called Offensive Greek Tweet Dataset (OGTD), which consists of 4779 Greek Twitter posts labeled as offensive or non-offensive. These posts were randomly selected from a collection of more than 49,000 tweets and preprocessed to remove unnecessary information. Three volunteers participated in the manual annotation process, following detailed instructions on the definition of offensive language. The authors use their proposed dataset to assess various TC models (i.e., five classical ML models and six DL ones), which are trained on it.

Makedonia [21] is a Greek TC dataset that consists of approximately 8000 articles from the online portal of the Makedonia newspaper. The articles are classified into 18 diverse news categories (e.g., Sports, Economy, Politics, Arts-Culture, Society, etc.). A balanced subset of the dataset, which contains documents from 7 out of the 18 total news categories, has been used to evaluate a linear ML model using five different Greek word embedding models.

GreekSUM [7] is a multi-purpose Greek NLP dataset extracted from the online Greek news portal News 24/7. It can be used for distinct NLP tasks, such as TC, title generation, and abstract summarization for news articles. The TC dataset variant contains approximately 170,000 articles that fall into five different categories, namely politics, society, economy, culture, and world news. An experimental evaluation of four different DL TC models on the GreekSUM and Makedonia datasets is described in [7].

Some of the above works [6,24] have tested traditional ML approaches with classical word embeddings against Greek DL models under the same evaluation framework. To the best of our knowledge, current Greek TC works do not assess the performance of ML approaches incorporating transformer-based Greek embeddings against classical word embeddings. The approach proposed in Section 4 aims to fill this gap.

2.4. Topic Classification

Various works in the literature have already explored the task of topic classification in social media. In these works, user posts and their associated topics are collected from popular social media platforms (e.g., Facebook and X, formerly Twitter) to build topic classification datasets [25,26,27,28]. These datasets are annotated either by users who are mandated to select predefined categories before posting or by human experts, and they are used to train or fine-tune various classification models, including classical ML and transformer-based models (e.g., BERT). In the following paragraphs, we report on the works most relevant to this paper.

Omar et al. [25] explore the importance of social media topic classification and sentiment analysis for preventing unwanted content in Arabic. The authors highlight several research challenges, such as the specificities and different dialects of the language and the lack of resources for Arabic. Their work introduces a multi-class Arabic dataset on which they build a new technique for classifying short texts from Arabic social media. This dataset consists of 44,000 posts from Facebook and Twitter, which are uniformly divided into 11 categories, including politics, sports, economy, and more. Furthermore, the authors added an additional label to each post identifying it as positive, negative, or neutral, making it suitable for sentiment analysis tasks. They experimented with 9 ML algorithms to evaluate the performance of the dataset and their findings suggest that a large dataset size leads to improved results.

TAG-it [26] is a multi-label dataset composed of posts from the Italian ForumFree platform. The topic of each post along with the age and gender of each user constitute the three different labels of the dataset. In total, it contains more than 2.4 million posts from 7000 different users, divided into 30 different thematic groups, which can be either general (e.g., sports, culture, tourism, politics) or specific (e.g., bicycles, aesthetic medicine, watches). The authors experimented with the dataset to evaluate the task of classifying a user’s age based on lexical or linguistic information. Papucci et al. [27] utilized a subset of the dataset, consisting of more than 18,000 posts divided into 11 categories, to evaluate several transformer-based classification models for the topic classification task. Their results show that a fine-tuned BERT model for Italian outperformed all other considered models.

TweetTopic [28] is a dataset intended for the task of topic classification in social media posts. The authors collected a total of 1,264,037 raw tweets from the Twitter platform using specific keywords and time periods via the Twitter API. The authors undertook a series of data filtering steps to obtain a clean English corpus, excluding any irrelevant or unwanted content. Then they randomly selected a sample of tweets, which were manually labeled by five annotators from the Amazon Mechanical Turk platform. These annotators used a list of 23 topics, carefully curated by domain experts, to categorize the tweets. The considered topics included sports, arts and culture, food and dining, and science and technology. Finally, two versions of the dataset were created, one for multi-label and another one for single-label classification. The multi-label version consists of 11,267 tweets divided into 19 topics. In the single-label setting, the authors retained the most dominant topics, while merging other similar ones into a single label to create a balanced dataset consisting of 6997 tweets divided into six topics. The authors experimented on the dataset using several LMs and some classical ML models as baselines. Their experimental results revealed that some of these LMs outperformed the selected baselines.

In Table 1, the proposed approach is compared against the related social media topic classification approaches on various aspects such as the types of utilized models, the considered performance metrics, the language of the posts, and the social media from which the data are collected.

Table 1.

Summary of related work on social media topic classification.

3. GreekReddit

Reddit [29] is a social media platform with a particular structure; its entry point is the Reddit homepage, which is a post feed from the subreddits followed by a registered user (or from all subreddits in the case of an unregistered user). These posts are ranked according to user votes, posts’ age, and relevance. The user may further proceed to view a selected subreddit, where Reddit’s feed shows posts belonging solely to this subreddit; each one dedicated to specific topic(s). Each post can be upvoted or downvoted and allows users to add comments, which are structured as a rooted tree by the “reply-to” relation to other comments or the post itself.

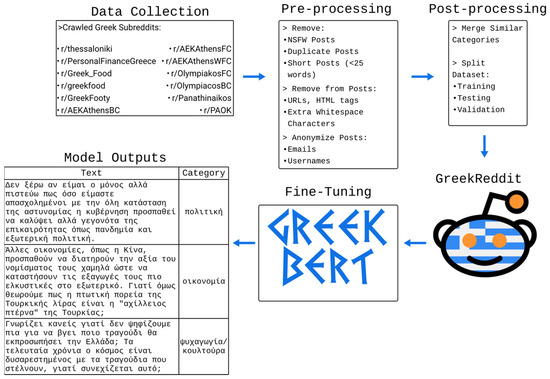

Due to its popularity and accessibility, Reddit is considered to be a high-quality source of data for several tasks [30]; Proferes and colleagues [30] have offered a systematic review of 727 literature works regarding the use of Reddit for data collection. Reddit is also popular in Greece: many Greek subreddits exist, with “Greece” being the most popular one. It has 248 K members and is ranked by Reddit as being in the top 1% in terms of user base size. Considering the limited amount of Greek TC resources, we introduce below the GreekReddit dataset, which comprises social media posts collected from Greek subreddits, and their respective titles, URLs, IDs, and user-assigned labels. To the best of our knowledge, there are no other Greek NLP datasets based on Reddit. Overall, the approach proposed in this work collects social media data, preprocesses them through anonymization, removes duplicate and inappropriate content, aggregates similar categories, and creates a multi-class classification dataset, which can be used to train/fine-tune an ML/DL model (see Figure 1).

Figure 1.

The creation of the proposed dataset and the fine-tuning process (the English translation of the model inputs/outputs is given in Appendix A).

3.1. Data Collection

Various third-party APIs for gathering Reddit data already exist [30]. However, these have become highly expensive lately (https://mashable.com/article/reddit-ceo-steve-huffman-api-changes accessed on 23 July 2024). In this work, we use the PRAW Python library (https://pypi.org/project/praw/ accessed on 23 July 2024), which is aligned with Reddit’s official API rules and freely offers a limited number of posts per subreddit, upon request. Since this work focuses on Greek topic classification, we relied on 13 subreddits that contain posts written in Greek and chose 12 different topic labels.

The selected subreddits either contain manually labeled posts belonging to different topics or contain posts of a specific topic. The considered subreddits are: “Greece”, “PersonalFinanceGreece”, “thessaloniki”, “greekfood”, “Greek_Food”, “GreekFooty”, “OlympiakosFC”, “OlympiacosBC”, “PAOK”, “Panathinaikos”, “AEKAthensFC”, “AEKAthensBC” and “AEKAthensWFC”. Most of these subreddits have specific rules that instruct members to refrain from posting offensive and off-topic material.

Most Greek subreddits accept posts that belong to a specific topic; off-topic posts are removed by moderators. In the case of the “Greece” subreddit, there are multiple topics under discussion; however, users are encouraged to post under specific single-topic categories. These categories are pre-determined by the administrators; users assign a tag when they make a new post, while moderators may amend the tag to better reflect the main topic discussed by the post. Although a post may belong to multiple topics, multi-topic tagging is not currently supported by Greek subreddits; we therefore created this dataset with the single-topic classification task in mind.

Based on user assigned tags for multi-topic subreddits (e.g., “Greece”) or a subreddit’s theme for single-topic subreddits (e.g., “greekfood”), we sorted posts into the following topics; English translations are provided in brackets: “κοινωνία (society)”, “εκπαίδευση (education)”, “πολιτική (politics)”, “οικονομία (economy)”, “ταξίδια (travel)”, “τεχνολογία (technology)”, “ψυχαγωγία (entertainment)”, “αθλητικά (sports)”, “κουλτούρα (culture)”, “φαγητό (food)”, “ιστορία (history)”, and “επιστήμη (science)”.

Since the Reddit API limits its results to approx. 200 posts per search request, we manually created a set of keywords for each topic. We combined these keywords with different sorting filters based on popularity (e.g., “hot”, “top”, “new” posts) and time (e.g., “all”, “year”, “month”) to create multiple search profiles. For example, one search profile extracts the top posts of all time that contain the keyword “οικονομική κρίση (economic crisis)”. Aggregating the results of all search profiles with our custom PRAW-based code overcame the above limitation and yielded thousands of posts. In this code, we also implement rate limiting, i.e., a delay of a few seconds between data retrieval requests, to respect the Reddit API policies. The full set of keywords is available at the GitHub repository of this work (https://github.com/NC0DER/GreekReddit accessed on 23 July 2024).
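The search-profile idea described above can be sketched as follows. This is a minimal illustration, not the authors' released code: the function names `build_search_profiles` and `collect_posts` and the two-second default delay are assumptions, and `reddit` is assumed to be an authenticated `praw.Reddit` instance whose `subreddit(...).search(...)` call accepts the `sort` and `time_filter` arguments.

```python
import time
from itertools import product


def build_search_profiles(keywords,
                          sorts=("hot", "top", "new"),
                          time_filters=("all", "year", "month")):
    """Return every (keyword, sort, time filter) combination as a dict."""
    return [
        {"query": keyword, "sort": sort, "time_filter": time_filter}
        for keyword, sort, time_filter in product(keywords, sorts, time_filters)
    ]


def collect_posts(reddit, subreddit_name, profiles, delay_seconds=2.0):
    """Run each search profile and merge the results, keyed by post ID.

    `reddit` is assumed to be an authenticated praw.Reddit instance.
    """
    posts = {}
    subreddit = reddit.subreddit(subreddit_name)
    for profile in profiles:
        results = subreddit.search(
            profile["query"],
            sort=profile["sort"],
            time_filter=profile["time_filter"],
        )
        for post in results:
            # The same post may match several profiles; keep one copy.
            posts[post.id] = post
        # Hypothetical delay value: pause between requests.
        time.sleep(delay_seconds)
    return posts
```

Keying the merged results by post ID means that overlapping profiles only add posts that have not been seen before, so each extra profile can extend the collection beyond the per-request cap.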

3.2. Preprocessing

A series of preprocessing steps was applied to the collected data. Firstly, we kept only posts whose text was mostly written in Greek and excluded posts with fewer than 25 words, as well as posts with explicit content that are marked as NSFW (Not Safe For Work). From the main text and title of each post, we removed URLs, HTML tags, emojis, and extra whitespace characters, and performed anonymization by replacing referenced usernames or emails with special tokens (i.e., <user>, <email>). Finally, we removed duplicate posts.
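A minimal sketch of these cleaning steps, using only the Python standard library, is given below. The regular expressions, the emoji ranges, the `u/name` username pattern, and the 0.5 threshold for "mostly Greek" are all assumptions made for illustration; the exact rules used to build the dataset may differ.

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
HTML_TAG_RE = re.compile(r"<[^>]+>")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# Assumed Reddit-style mentions such as u/name or /u/name.
USER_RE = re.compile(r"(?<!\w)/?u/[\w-]+")
# Assumed emoji ranges; a complete list would be longer.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF\uFE0F]")


def clean_text(text):
    """Remove URLs, HTML tags and emojis, anonymize, and collapse whitespace."""
    text = URL_RE.sub(" ", text)
    text = HTML_TAG_RE.sub(" ", text)  # before inserting <user>/<email> tokens
    text = EMAIL_RE.sub("<email>", text)
    text = USER_RE.sub("<user>", text)
    text = EMOJI_RE.sub(" ", text)
    return re.sub(r"\s+", " ", text).strip()


def greek_ratio(text):
    """Fraction of alphabetic characters that fall in the Greek Unicode blocks."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    greek = sum(
        "\u0370" <= ch <= "\u03ff" or "\u1f00" <= ch <= "\u1fff" for ch in letters
    )
    return greek / len(letters)


def keep_post(text, is_nsfw, min_words=25, min_greek_ratio=0.5):
    """Apply the NSFW, length, and language filters to one post."""
    if is_nsfw or len(text.split()) < min_words:
        return False
    return greek_ratio(text) > min_greek_ratio


def deduplicate(texts):
    """Drop exact duplicates while preserving the original order."""
    return list(dict.fromkeys(texts))
```

HTML tags are stripped before the anonymization tokens are inserted, since the tokens themselves are written in angle brackets and would otherwise be removed.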

3.3. Post-Processing

Upon inspection, we noticed that some of the acquired topic labels contained highly similar posts. Thus, we merged similar categories into individual ones; “τεχνολογία (technology)” and “επιστήμη (science)” were merged into “τεχνολογία/επιστήμη (technology/science)”; “ψυχαγωγία (entertainment)”, and “κουλτούρα (culture)” were merged into “ψυχαγωγία/κουλτούρα (entertainment/culture)”. The resulting dataset contains 6534 posts divided into 10 topic labels. Apart from the main text and the corresponding topic label, each dataset entry contains the title, unique ID, and URL of the original post. We used a stratified sampling strategy and separated data into train, test, and validation splits that contain 5530, 504, and 500 posts, respectively. Thus, GreekReddit has a training, test, and validation split of ~85%, ~7.5%, and ~7.5%.
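As an illustration of stratified sampling, the sketch below splits the indices of each topic label separately, so that every split keeps roughly the same label proportions. It is a plain-Python approximation under assumed fractions of 7.5% for the test and validation splits; the helper name `stratified_split` is hypothetical, and the tooling actually used for the released splits is not specified here.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_frac=0.075, val_frac=0.075, seed=0):
    """Return (train, test, val) index lists with per-label proportions kept."""
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)

    rng = random.Random(seed)
    train, test, val = [], [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_frac)
        n_val = round(len(indices) * val_frac)
        test += indices[:n_test]
        val += indices[n_test:n_test + n_val]
        train += indices[n_test + n_val:]
    return train, test, val
```

Because the rounding is done per label, the overall split sizes can differ by a few documents from the nominal percentages.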

3.4. Analysis

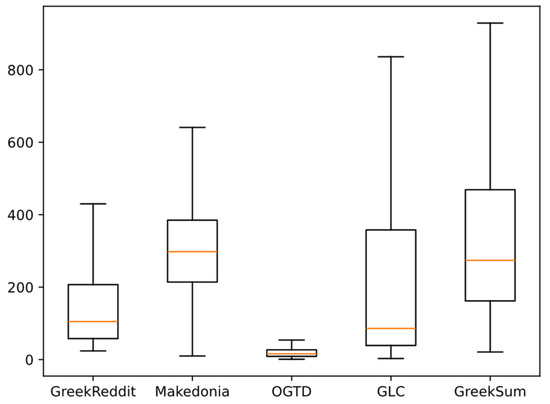

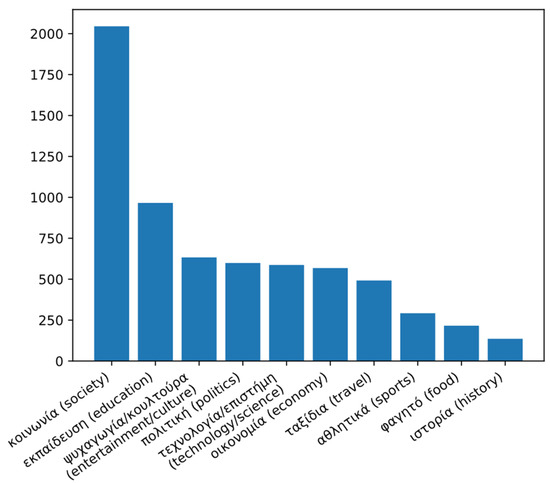

Table 2 compares GreekReddit with other Greek TC datasets in terms of their total number of documents and dataset splits. In terms of dataset splits, OGTD has no reported validation split, while no splits at all are reported for the Makedonia dataset. GreekReddit has a similar split percentage distribution to GreekSUM. According to Figure 2, in terms of the mean document lengths (measured in words), the GreekSUM and Makedonia news TC datasets have the largest ones. OGTD is a social media dataset collected from Twitter, which imposes a 280-character limit on posts; thus, this dataset has the shortest mean document length. Our dataset is also a social media dataset; however, it has a larger mean document length than OGTD, yet a smaller one than the news TC datasets. The distribution of document lengths (measured in words) for each dataset is illustrated in Figure 2; statistical outliers (<2.5%) are disregarded. The distribution of documents per class label for GreekReddit is elaborated in Table 3 and visualized in Figure 3. As shown in Table 3, the dataset shows a relatively uneven document distribution between categories. The two most populous categories (i.e., “society” and “education” with 2045 and 966 documents, respectively) and the two least populous ones (i.e., “food” and “history” with 216 and 136 documents, respectively) deviate considerably from the rest. It is further noted that we did not exclude or augment any data to balance the dataset.

Table 2.

Greek TC Dataset Analysis; # and % denote the number and the percentage of the documents, respectively. Dataset splits are given in the order of training/testing/validation splits.

Figure 2.

Document Length (words/document) Distributions for Greek TC Datasets.

Table 3.

Distribution of samples among the categories of GreekReddit; # and % denote the number and the percentage of the documents per category, respectively. Dataset documents are given in the order of training/testing/validation.

Figure 3.

Number of Documents per Class Distribution in GreekReddit.

4. A Series of ML and DL Models for Greek Social Media Topic Classification

This section presents a series of models for multi-class topic classification on GreekReddit, as well as the corresponding experimental evaluation. Specifically, Section 4.1 presents the hardware and software specifications used in our research; Section 4.2 describes the training and validation procedures for the development of the models under consideration, and reports on the data and metrics of the experimental setup; Section 4.3 reports on the experimental results.

4.1. Setup

As far as hardware specifications are concerned, we used a computer with an Intel Core i9-13900K 32 logical core CPU, 64 GB of RAM and an Nvidia RTX 3060 12 GB VRAM GPU. For the fine-tuning process, we used the GPU since popular DL libraries provide accelerated performance. With respect to software, we used the Transformers (https://huggingface.co/docs/transformers/ accessed on 23 July 2024) DL library and the Scikit-Learn (https://scikit-learn.org/ accessed on 23 July 2024) ML library. Our experiments also included several Greek embedding models; we used fastText word embeddings provided by spaCy (https://spacy.io/models/el accessed on 23 July 2024) and transformer-based embeddings using the Sentence-Transformers (https://www.sbert.net/ accessed on 23 July 2024) library. Finally, we utilized the precision, recall, F1-score, and Hamming Loss evaluation metrics as implemented in Scikit-Learn.

4.2. Validation and Training

In this subsection, we report on the development of classical ML models incorporating various text vectorization methods, such as TF-IDF, classical word, and transformer-based embeddings, as well as on the development of a fine-tuned model based on GREEK-BERT. For our classical ML models, we utilized Scikit-Learn’s SGDClassifier (SGDC), PassiveAggressiveClassifier (PAC), GradientBoostingClassifier (GBC), MLPClassifier (MLPC), based on the ML models discussed in the first paragraph of Section 2.1.

We trained the models and performed parameter tuning using all hyperparameter combinations from Table 4 and evaluated them on the validation split of GreekReddit. For each model, we chose the best-performing configuration in which the validation and training losses were both as low as possible and close to each other, so as to avoid overfitting (early stopping). These ML models have a maximum of 1000 iterations; however, the actual number of iterations is smaller due to the early stopping strategy. We note that the hyperparameter search was conducted manually; the selected hyperparameters yielded the highest F1 scores and lowest loss values when evaluated on the validation subset of the GreekReddit dataset.

Table 4.

Hyperparameter search space for all models.

For the SGDC, we used the hinge loss function, which is equivalent to a linear support vector machine (L-SVM). Through the penalty parameter, different regularization terms (i.e., None, L1, L2, ElasticNet) were considered in the loss function; L1 was the term corresponding to the best setup. We did not use the automatic adjustment of the weights associated with the statistical distribution of the dataset’s classes, since we observed a performance decrease.

For the PAC, similarly to SGDC, we enable the early stopping strategy, while setting the maximum number of iterations to 1000. We enable the shuffling parameter of this classifier, which shuffles the data after each epoch. For the loss function, we considered both the hinge and squared_hinge, which are defined as equations PA-I and PA-II in the original publication [10]. We also experimented with the automatic adjustment of the weights associated with the statistical distribution of the dataset’s classes. During our validation, the best results were achieved by using the squared_hinge loss function and without using the class weight adjustment.

For the GBC, we considered two split-quality criteria, namely the mean squared error (mse) and the mse with improvement score by Friedman (mse-friedman) [11]. We also used the log-loss function, which is suitable for classification problems. Unlike the other considered classifiers, this one does not expose the max_iter and early_stopping parameters that control the maximum number of iterations and enable the early stopping strategy. The best results during validation were achieved with the mse criterion.

For the MLPC, we considered a hidden neural network (NN) layer with a ReLU activation function [31] and a size of 100 neurons. We examined different weight optimization solvers (Adam [32], L-BFGS [33], SGD [34]) and after validation, the best results were achieved with L-BFGS.
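The best validation setups reported above for the four classifiers can be sketched in Scikit-Learn as follows. This is an assumed configuration rather than the released experimental code: it covers only the parameters named in the text (all others are left at their defaults), the helper name `build_classifiers` is hypothetical, and it assumes a Scikit-Learn release (1.1 or later) in which the `log_loss` and `squared_error` option names are available.

```python
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import PassiveAggressiveClassifier, SGDClassifier
from sklearn.neural_network import MLPClassifier


def build_classifiers(seed=0):
    """Return the four classical models with the settings named in the text."""
    return {
        # Hinge loss (linear SVM) with L1 regularization, no class weighting.
        "SGDC": SGDClassifier(
            loss="hinge", penalty="l1", max_iter=1000,
            early_stopping=True, class_weight=None, random_state=seed,
        ),
        # squared_hinge corresponds to PA-II; data are shuffled each epoch.
        "PAC": PassiveAggressiveClassifier(
            loss="squared_hinge", max_iter=1000, early_stopping=True,
            shuffle=True, class_weight=None, random_state=seed,
        ),
        # Log-loss objective with the (non-Friedman) squared error criterion.
        "GBC": GradientBoostingClassifier(
            loss="log_loss", criterion="squared_error", random_state=seed,
        ),
        # One hidden layer of 100 ReLU units, optimized with L-BFGS.
        "MLPC": MLPClassifier(
            hidden_layer_sizes=(100,), activation="relu",
            solver="lbfgs", max_iter=1000, random_state=seed,
        ),
    }
```

Passing the seed through `random_state` makes it straightforward to repeat each setup under several random seeds.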

Regarding the different Greek vectorization methods, we considered TF-IDF as implemented in Scikit-Learn’s TfidfVectorizer, fastText embeddings from spaCy (el_core_news_lg), and three different transformer-based embedding models used as input to the sentence-transformers library. These are: (i) MPNet-V2 (https://huggingface.co/sentence-transformers/paraphrase-multilingual-mpnet-base-v2 accessed on 23 July 2024), (ii) XLM-R-GREEK (https://huggingface.co/lighteternal/stsb-xlm-r-greek-transfer accessed on 23 July 2024), and (iii) GREEK-BERT (https://huggingface.co/nlpaueb/bert-base-greek-uncased-v1 accessed on 23 July 2024).

We applied a series of steps for each vectorization technique used for training, validation, and evaluation, which are described below. One common preprocessing step between them is that we made the input document lowercase in training. In the case of GREEK-BERT, we also replaced accented vowels with non-accented ones, since GREEK-BERT was pre-trained on non-accented Greek corpora.
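The lowercasing and accent removal step can be illustrated with the standard library alone. The sketch below decomposes each character with Unicode NFD normalization and drops the combining marks; the function name is an assumption chosen for this example.

```python
import unicodedata


def strip_accents_and_lowercase(text):
    """Lowercase the text and remove combining accent marks."""
    decomposed = unicodedata.normalize("NFD", text.lower())
    # Category "Mn" (nonspacing mark) covers the Greek tonos and dialytika.
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")
```

For instance, `strip_accents_and_lowercase("Καλημέρα Ελλάδα")` yields `"καλημερα ελλαδα"`.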

The TfidfVectorizer extracts n-grams from text that do not contain stopwords, and then creates TF-IDF vectors from the input text. In our experiments, we used spaCy’s Greek stopwords, set the n-gram range to (1, 3), applied sublinear term frequency scaling, and ignored terms that appear in fewer than 1% or more than 99% of the documents.
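A possible Scikit-Learn configuration matching this description is sketched below. The helper name `build_tfidf_vectorizer` is hypothetical, and the stopword list is passed in as an argument; with spaCy installed, it could be taken from `spacy.lang.el.stop_words.STOP_WORDS`, which is assumed here to be the list referred to in the text.

```python
from sklearn.feature_extraction.text import TfidfVectorizer


def build_tfidf_vectorizer(stop_words):
    """TF-IDF over 1- to 3-grams with sublinear TF and document-frequency cuts."""
    return TfidfVectorizer(
        lowercase=True,
        stop_words=list(stop_words),
        ngram_range=(1, 3),
        sublinear_tf=True,   # replaces tf with 1 + log(tf)
        min_df=0.01,         # drop terms found in fewer than 1% of documents
        max_df=0.99,         # drop terms found in more than 99% of documents
    )
```

The vectorizer is fitted on the training split only (`fit_transform`) and then applied to the validation and test splits with `transform`, so that document frequencies are not estimated from held-out data.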

For the spaCy vectorization method, we used spaCy’s part of speech (POS) tagger and extracted only (proper) nouns and adjectives from each document. For each token, we inferred the word embedding using the el_core_news_lg model. We then averaged the word embeddings to obtain the document embedding.

For the transformer-based vectorization method, we tokenized the text into sentences using spaCy and we passed each sentence through the sequence encoder of each model to obtain the sentence embedding. Then, we averaged the sentence embeddings to obtain the document embedding. This was done since transformer-based models have input limitations of a few hundred tokens per sequence. By utilizing the validation subset, we experimented with different parametric setups (see Table 4), and we selected the best overall parameter combination according to the best results for most vectorization methods for each traditional ML classifier.
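The sentence-level averaging described above (and the analogous word-level averaging used for the spaCy embeddings) amounts to mean pooling. The sketch below shows the idea with plain Python lists; `mean_pool`, `embed_document`, and `toy_encode` are hypothetical names, and `toy_encode` is only a stand-in so the example runs without downloading a model. In practice, `encode` would wrap the sequence encoder of the chosen embedding model.

```python
from typing import Callable, List, Sequence


def mean_pool(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise average of equally sized vectors."""
    if not vectors:
        raise ValueError("need at least one vector to average")
    dim = len(vectors[0])
    if any(len(v) != dim for v in vectors):
        raise ValueError("all vectors must have the same dimension")
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def embed_document(
    sentences: Sequence[str],
    encode: Callable[[str], Sequence[float]],
) -> List[float]:
    """Encode each sentence separately, then average into one document vector."""
    return mean_pool([encode(s) for s in sentences])


# Hypothetical stand-in encoder so the sketch runs without a model:
# it maps a sentence to [number of characters, number of words].
def toy_encode(sentence: str) -> List[float]:
    return [float(len(sentence)), float(len(sentence.split()))]
```

Because every sentence is encoded on its own, no single input exceeds the model's token limit, and the document vector keeps the same dimensionality as a sentence vector regardless of document length.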

Based on previous works utilizing GREEK-BERT [5,6,7,15], we examined various learning rates and batch sizes for validation (see Table 4). Our best results were achieved with a batch size of 16 and a learning rate of 5 × 10⁻⁵. We set the dropout rate to 0.1 based on the findings of previous works [15,34]. After validation, we fine-tuned GREEK-BERT for 4 epochs; our findings show that the model’s performance increases and its loss decreases over these epochs. However, the model performance starts to decline after the fifth epoch; thus, our findings agree with the suggestion of having 2–4 training epochs as indicated in previous works [5,15].

4.3. Experiments

We conducted an experimental evaluation in which we assessed the performance of all the developed models on the test split of GreekReddit. The evaluation metrics used in our experiments are macro-averaged Precision, Recall, and F1 score, as well as Hamming Loss. We employed a set of 20 different random seeds, i.e., {0, 1, 42, 5, 11, 10, 67, 45, 23, 20, 88, 92, 53, 31, 13, 2, 55, 3, 39, 72}, to train and evaluate each model setup presented in Table 4. For each setup, we report the mean of each evaluation metric over the 20 runs (seeds), along with the standard deviation. The aggregated results of our experiments are presented in Table 5; the values highlighted in bold indicate the best result on each metric, while the underlined values indicate the best vectorization method for each of the two ML models (higher values are better in Precision, Recall, and F1 score, while lower values are better in Hamming Loss).
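For readers who want to see how these metrics are computed, the following is a minimal pure-Python sketch of macro-averaged Precision, Recall, and F1 score, of Hamming Loss, and of the per-metric aggregation across seed runs. The function names are hypothetical, and two choices are assumptions rather than details given in the text: classes with an undefined score are counted as 0, and the spread is computed as the sample standard deviation.

```python
from statistics import mean, stdev


def macro_scores(y_true, y_pred):
    """Macro-averaged precision, recall, and F1 over all labels that occur."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    # Macro averaging weights every class equally, regardless of its size.
    return mean(precisions), mean(recalls), mean(f1s)


def hamming_loss(y_true, y_pred):
    """Fraction of wrong labels; for single-label data this is 1 - accuracy."""
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)


def summarize(values):
    """Mean and sample standard deviation of one metric across seed runs."""
    return mean(values), stdev(values)
```

Macro averaging is a natural fit for an imbalanced dataset, since a model cannot obtain a high score by performing well only on the largest categories.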

Table 5.

Experimental results on GreekReddit (±variance across 20 distinct random seed runs). The score of the best-performing experimental setup for each model is underlined, while the score of the best-performing model overall is highlighted in bold.

Table 5 presents the results of the social media topic classification task on GreekReddit. Firstly, we observe a significant overall improvement in the classical ML models when sentence transformer embeddings are used instead of TF-IDF and word embeddings. In particular, the SGDC showed its greatest performance gains when combined with the MPNet-V2 embeddings, while the MLPC, PAC, and GBC improved the most when combined with the XLM-R-GREEK embeddings. Although the incorporation of GREEK-BERT embeddings improves several classifiers, it does not outperform the two other sentence-transformers embedding models. We argue that this occurs because XLM-R-GREEK and MPNet-V2 were trained using the Sentence-BERT model, which considers the context of the entire sentence(s) instead of individual tokens (as in the case of GREEK-BERT). Finally, our experimental results reveal that the fine-tuned GREEK-BERT model outperforms all other ML models on the Recall and F1 metrics by a significant margin.

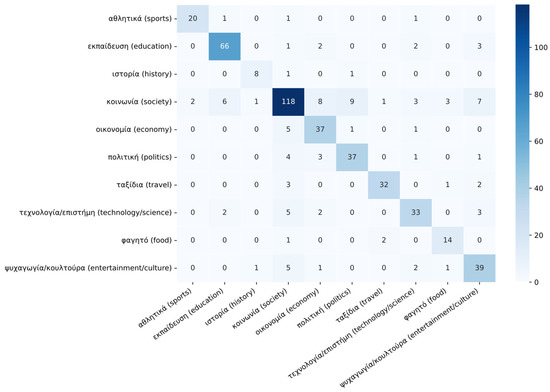

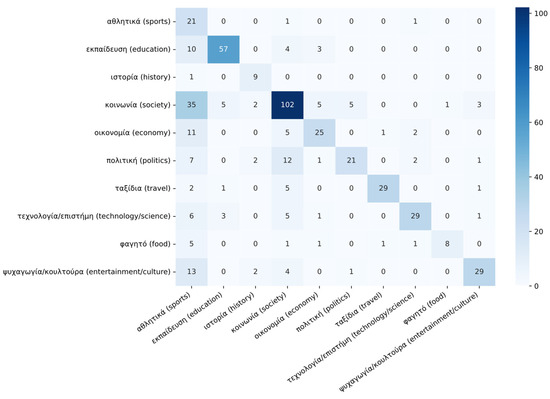

To further analyze the top two performing models (i.e., GREEK-BERT and MLPC), we calculated the confusion matrices to investigate the model performance on each dataset category (see Figure 4 and Figure 5). As shown in Figure 4, the fine-tuned DL model produces very few false positives and false negatives, since the off-diagonal elements are zero (or near zero) compared to the number of correct predictions on the diagonal (highlighted in darker blue). In contrast, as shown in Figure 5, the MLPC produces significantly more false negatives, i.e., elements of the lower triangular submatrix (bottom left). Potential reasons for the falsely predicted elements include: (i) the dataset class imbalance (as analyzed in Table 3 and visualized in Figure 3); and (ii) the ambiguity of classifying texts belonging to multiple categories (correctly and falsely classified examples can be found in Table A1 and Table A2 in Appendix A).

Figure 4.

Confusion Matrix for the fine-tuned GREEK-BERT model.

Figure 5.

Confusion Matrix for the trained MLPC model.

5. Discussion

This paper has introduced a novel social media topic classification dataset, namely GreekReddit. This dataset contains 6534 Reddit posts accompanied by their corresponding topic label, title, unique ID, and URL. Our analysis revealed that, on average, its documents are of medium length compared to those of other Greek NLP datasets for TC. We developed a series of models for multi-class topic classification on GreekReddit, using ML classifiers with several vectorization methods (TF-IDF, word embeddings, transformer-based embeddings), as well as a GREEK-BERT model fine-tuned for topic classification.

5.1. Interpretation of the Results

As shown in our experimental results, the ML models using pre-trained transformer-based embeddings (i.e., XLM-R-GREEK or MPNet-V2-Multilingual) outperform those using other vectorization methods. In any case, our fine-tuned GREEK-BERT model outperforms all considered ML models on the evaluation metrics of Recall and F1 score. To evaluate the performance of the top ML and DL models for each dataset category, we calculated the confusion matrices. The results show that the DL model produces very few false negatives and false positives, while the ML model produces a considerable number of false negatives. We argue that potential reasons for these falsely predicted elements include the dataset class imbalance and the ambiguity of classifying texts belonging to multiple categories.

5.2. Ethical Implications

The data collected and processed in this study are public and openly accessible through a Python library that utilizes the official Reddit API. All retrieved data in this work was collected and processed exclusively for academic purposes. We respected possible rate limits by delaying a few seconds between data retrieval requests. We also performed additional preprocessing on the proposed dataset to remove any identifying information including usernames, emails, and URLs.

5.3. Limitations

The GreekReddit dataset contains posts from Greek subreddits. Since Reddit is a social media platform, these posts use colloquial and everyday language. To facilitate the development of general-purpose TC models, multiple datasets with distinct themes and writing styles are needed for training and evaluation. However, due to the limited number of Greek NLP datasets for TC, this is not currently feasible. A second limitation concerns the generalization of our findings. Given the small number of LMs supporting modern Greek, a comprehensive assessment of our proposed models against future Greek LMs, using multiple NLP datasets, is much needed. A third limitation stems from certain data biases in this dataset, which are inherited from the Reddit posts; despite the user conduct rules of the considered subreddits, the collected posts may include inappropriate or toxic language, as well as political and historical biases.

5.4. Future Work Directions

The creation of more Greek NLP datasets is needed to facilitate the development of a series of general-purpose TC models. The proposed dataset can also be used for the development and benchmarking of future deep-learning models. Another research direction concerns the utilization of GreekReddit for title generation, as posts on Reddit are accompanied by corresponding titles. Moreover, our dataset could be further analyzed to explore the thematic aspects, important keywords, linguistic idiosyncrasies, and potential biases associated with Greek subreddit posts. Apart from the dataset analysis, the fine-tuned model based on GREEK-BERT could be further studied using model interpretability techniques (e.g., attention weight diagrams), which highlight the parts of the input that significantly influence the model’s decision-making process for the TC task.

Finally, future work in Greek TC should consider the evaluation of ML classifiers using transformer-based embeddings over classical vectorization methods (TF-IDF, word embeddings) alongside Greek LMs, using multiple Greek TC datasets, to further generalize the findings of this work.

6. Conclusions

Since the introduction of the transformer architecture, there has been a paradigm shift toward using pre-trained transformer-based embeddings for TC tasks. However, previous Greek NLP works have not examined the benefits of using them over classical word embedding methods. We argue that the use of these transformer-based embeddings is important, since the computational cost of inferring embeddings from LMs is lower than that of fine-tuning them for TC. Our key empirical findings also align with other Greek NLP works [5,6,15], where fine-tuning GREEK-BERT yields the best results. Overall, the contributions of this paper include: (i) the creation of a novel social media Greek TC dataset; (ii) a comparative assessment of various traditional ML models trained on this dataset; (iii) a fine-tuned BERT TC model that can be used for topic inference in a zero-shot fashion; (iv) key empirical findings on the performance of transformer-based embeddings and DL model fine-tuning.

Author Contributions

Conceptualization, C.M. and N.G.; methodology, N.G.; software, N.G. and C.M.; validation, N.G. and C.M.; investigation, C.M. and N.G.; resources, N.K.; data curation, C.M. and N.G.; writing—original draft preparation, C.M. and N.G.; writing—review and editing, N.G., C.M. and N.K.; visualization, N.G. and C.M.; supervision, N.K.; project administration, N.K.; funding acquisition, N.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset and the best-performing model are available at https://huggingface.co/IMISLab (accessed on 23 July 2024), and the experimental code is available at https://github.com/NC0DER/GreekReddit (accessed on 23 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

GREEK-BERT Model inputs and correct outputs. (English Translations are provided).

| Model Inputs | Model Outputs |
|---|---|
| Δεν ξέρω αν είμαι ο μόνος αλλά πιστεύω πως όσο είμαστε απασχολημένοι με την όλη κατάσταση της αστυνομίας η κυβέρνηση προσπαθεί να καλύψει αλλά γεγονότα της επικαιρότητας όπως πανδημία και εξωτερική πολιτική. | πολιτική |
| I don’t know if I’m the only one but I believe that while we are busy with the whole police situation the government is trying to cover up current events like the pandemic and foreign policy. | politics |
| Άλλες οικονομίες, όπως η Κίνα, προσπαθούν να διατηρούν την αξία του νομίσματος τους χαμηλά ώστε να καταστήσουν τις εξαγωγές τους πιο ελκυστικές στο εξωτερικό. Γιατί όμως θεωρούμε πως η πτωτική πορεία της Τουρκικής λίρας είναι η “αχίλλειος πτέρνα” της Τουρκίας; | οικονομία |
| Other economies, such as China, try to keep the value of their currency low to make their exports more attractive abroad. But why do we think that the downward course of the Turkish lira is the “Achilles heel” of Turkey? | economy |
| Γνωρίζει κανείς γιατί δεν ψηφίζουμε πια για να βγει ποιο τραγούδι θα εκπροσωπήσει την Ελλάδα; Τα τελευταία χρόνια ο κόσμος είναι δυσαρεστημένος με τα τραγούδια που στέλνουν, γιατί συνεχίζεται αυτό; | ψυχαγωγία/κουλτούρα |
| Does anyone know why we no longer vote for which song will represent Greece? In recent years people are unhappy with the songs they send, why does this continue? | entertainment/culture |

Table A2.

GREEK-BERT Model inputs and erroneous outputs. (English Translations are provided).

| Model Inputs | Model Outputs |
|---|---|
| Βγήκαν τα αποτελέσματα των πανελληνίων και από ότι φαίνεται περνάω στη σχολή που θέλω - HΜΜΥ. H ερώτηση μου είναι τι είδους υπολογιστή να αγοράσω. Προσωπικά προτιμώ σταθερό αλλά δε ξέρω αν θα χρειαστώ λάπτοπ για εργασίες ή εργαστήρια. Επίσης μπορώ να τα βγάλω πέρα με ένα απλό λάπτοπ ή θα πρέπει να τρέξω πιο <<βαριά>> προγράμματα; Edit: budget 750–800 € Θα περάσω στη πόλη που μένω. | τεχνολογία/επιστήμη (human assigned); εκπαίδευση (predicted) |
| The results of the Panhellenic exams are out and it seems that I am getting into the school I want - ECE. My question is what kind of computer should I buy. Personally, I prefer a desktop one, but I don’t know if I will need a laptop for course or laboratory assignments. Also can I get by with a simple laptop or should I run more <<heavy>> programs? Edit: budget 750–800 € I will study in the city where I live. | technology/science (human assigned); education (predicted) |
| Καλησπέρα και Καλή Χρονιά! Έχω λογαριασμό στην Freedom 24 αλλά δεν θέλω να ασχοληθώ άλλο με αυτή την πλατφόρμα. Την βρήκα πολύ δύσχρηστη και περίεργη. Υπάρχει κάποια άλλη πλατφόρμα που μπορείς να επενδύσεις σε SP500 από Ελλάδα; | οικονομία (human assigned); εκπαίδευση (predicted) |
| Good evening and Happy New Year! I have an account with Freedom 24 but I don’t want to deal with this platform anymore. I found it very unusable and weird. Is there any other platform where you can invest in SP500 from Greece? | economy (human assigned); education (predicted) |
| Παιδιά θέλω προτάσεις για φτηνό φαγητό στην κέντρο Aθήνας, φάση all you can eat μπουφέδες ή να τρως πολύ φαγητό με λίγα χρήματα. Επίσης μοιραστείτε και ενδιαφέροντα εστιατόρια/μπαρ/ταβέρνες που έχουν happy hours. Sharing is caring. | φαγητό (human assigned); κοινωνία (predicted) |
| Guys, I want suggestions for cheap food in the center of Athens, like all you can eat buffets or eating a lot of food for little money. Also share interesting restaurants/bars/taverns that have happy hours. Sharing is caring. | food (human assigned); society (predicted) |

References

1. Minaee, S.; Kalchbrenner, N.; Cambria, E.; Nikzad, N.; Chenaghlu, M.; Gao, J. Deep Learning-Based Text Classification: A Comprehensive Review. ACM Comput. Surv. 2021, 54, 1–40.
2. Li, Q.; Peng, H.; Li, J.; Xia, C.; Yang, R.; Sun, L.; Yu, P.S.; He, L. A Survey on Text Classification: From Traditional to Deep Learning. ACM Trans. Intell. Syst. Technol. 2022, 13, 1–41.
3. Gasparetto, A.; Marcuzzo, M.; Zangari, A.; Albarelli, A. A Survey on Text Classification Algorithms: From Text to Predictions. Information 2022, 13, 83.
4. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.
5. Koutsikakis, J.; Chalkidis, I.; Malakasiotis, P.; Androutsopoulos, I. GREEK-BERT: The Greeks Visiting Sesame Street. In Proceedings of the 11th Hellenic Conference on Artificial Intelligence, Athens, Greece, 2–4 September 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 110–117.
6. Papaloukas, C.; Chalkidis, I.; Athinaios, K.; Pantazi, D.; Koubarakis, M. Multi-Granular Legal Topic Classification on Greek Legislation. In Proceedings of the Natural Legal Language Processing Workshop 2021, Punta Cana, Dominican Republic, 10 November 2021; Aletras, N., Androutsopoulos, I., Barrett, L., Goanta, C., Preotiuc-Pietro, D., Eds.; Association for Computational Linguistics: Kerrville, TX, USA, 2021; pp. 63–75.
7. Evdaimon, I.; Abdine, H.; Xypolopoulos, C.; Outsios, S.; Vazirgiannis, M.; Stamou, G. GreekBART: The First Pretrained Greek Sequence-to-Sequence Model. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; Calzolari, N., Kan, M.Y., Hoste, V., Lenci, A., Sakti, S., Xue, N., Eds.; ELRA: Paris, France; ICCL: Prague, Czech Republic, 2024; pp. 7949–7962.
8. Giarelis, N.; Mastrokostas, C.; Siachos, I.; Karacapilidis, N. A Review of Greek NLP Technologies for Chatbot Development. In Proceedings of the 27th Pan-Hellenic Conference on Progress in Computing and Informatics, Lamia, Greece, 24–26 November 2023; Association for Computing Machinery: New York, NY, USA, 2024; pp. 15–20.
9. Zhang, T. Solving Large Scale Linear Prediction Problems Using Stochastic Gradient Descent Algorithms. In Proceedings of the Twenty-First International Conference on Machine Learning, Banff, AB, Canada, 4–8 July 2004; Association for Computing Machinery: New York, NY, USA, 2004; p. 116.
10. Crammer, K.; Dekel, O.; Keshet, J.; Shalev-Shwartz, S.; Singer, Y.; Warmuth, M.K. Online Passive-Aggressive Algorithms. J. Mach. Learn. Res. 2006, 7, 551–585.
11. Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. Ann. Stat. 2001, 29, 1189–1232.
12. Murtagh, F. Multilayer Perceptrons for Classification and Regression. Neurocomputing 1991, 2, 183–197.
13. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Association for Computational Linguistics: Kerrville, TX, USA, 2019; pp. 4171–4186.
14. Koehn, P. Europarl: A Parallel Corpus for Statistical Machine Translation. In Proceedings of the Machine Translation Summit X: Papers, Phuket, Thailand, 13 September 2005; pp. 79–86.
15. Athinaios, K.; Chalkidis, I.; Pantazi, D.A.; Papaloukas, C. Named Entity Recognition Using a Novel Linguistic Model for Greek Legal Corpora Based on BERT Model. Bachelor’s Thesis, School of Science, Department of Informatics and Telecommunications, National and Kapodistrian University of Athens, Athens, Greece, 2020.
16. Grave, E.; Bojanowski, P.; Gupta, P.; Joulin, A.; Mikolov, T. Learning Word Vectors for 157 Languages. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018; Calzolari, N., Choukri, K., Cieri, C., Declerck, T., Goggi, S., Hasida, K., Isahara, H., Maegaard, B., Mariani, J., Mazo, H., et al., Eds.; European Language Resources Association (ELRA): Paris, France, 2018.
17. Outsios, S.; Karatsalos, C.; Skianis, K.; Vazirgiannis, M. Evaluation of Greek Word Embeddings. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; Calzolari, N., Béchet, F., Blache, P., Choukri, K., Cieri, C., Declerck, T., Goggi, S., Isahara, H., Maegaard, B., Mariani, J., et al., Eds.; European Language Resources Association: Paris, France, 2020; pp. 2543–2551.
18. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.
19. Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.
20. Outsios, S.; Skianis, K.; Meladianos, P.; Xypolopoulos, C.; Vazirgiannis, M. Word Embeddings from Large-Scale Greek Web Content. arXiv 2018, arXiv:1810.06694.
21. Lioudakis, M.; Outsios, S.; Vazirgiannis, M. An Ensemble Method for Producing Word Representations Focusing on the Greek Language. In Proceedings of the 3rd Workshop on Technologies for MT of Low Resource Languages, Suzhou, China, 4–7 December 2020; Karakanta, A., Ojha, A.K., Liu, C.-H., Abbott, J., Ortega, J., Washington, J., Oco, N., Lakew, S.M., Pirinen, T.A., Malykh, V., et al., Eds.; Association for Computational Linguistics: Kerrville, TX, USA, 2020; pp. 99–107.
22. Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings Using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Inui, K., Jiang, J., Ng, V., Wan, X., Eds.; Association for Computational Linguistics: Kerrville, TX, USA, 2019; pp. 3982–3992.
23. Papadopoulos, D.; Metropoulou, K.; Papadakis, N.; Matsatsinis, N. FarFetched: Entity-Centric Reasoning and Claim Validation for the Greek Language Based on Textually Represented Environments. In Proceedings of the 12th Hellenic Conference on Artificial Intelligence, Corfu, Greece, 7–9 September 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1–10.
24. Pitenis, Z.; Zampieri, M.; Ranasinghe, T. Offensive Language Identification in Greek. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; Calzolari, N., Béchet, F., Blache, P., Choukri, K., Cieri, C., Declerck, T., Goggi, S., Isahara, H., Maegaard, B., Mariani, J., et al., Eds.; European Language Resources Association: Paris, France, 2020; pp. 5113–5119.
25. Omar, A.; Mahmoud, T.M.; Abd-El-Hafeez, T.; Mahfouz, A. Multi-Label Arabic Text Classification in Online Social Networks. Inf. Syst. 2021, 100, 101785.
26. Maslennikova, A.; Labruna, P.; Cimino, A.; Dell’Orletta, F. Quanti Anni Hai? Age Identification for Italian. In Proceedings of the CLiC-it, Bari, Italy, 13–15 November 2019; Available online: https://ceur-ws.org/Vol-2481/ (accessed on 23 July 2024).
27. Papucci, M.; De Nigris, C.; Miaschi, A. Evaluating Text-To-Text Framework for Topic and Style Classification of Italian Texts. In Proceedings of the Sixth Workshop on Natural Language for Artificial Intelligence (NL4AI 2022) Co-Located with 21th International Conference of the Italian Association for Artificial Intelligence (AI*IA 2022), Udine, Italy, 30 November 2022; Available online: https://ceur-ws.org/Vol-3287/ (accessed on 23 July 2024).
28. Antypas, D.; Ushio, A.; Camacho-Collados, J.; Silva, V.; Neves, L.; Barbieri, F. Twitter Topic Classification. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; Calzolari, N., Huang, C.R., Kim, H., Pustejovsky, J., Wanner, L., Choi, K.S., Ryu, P.M., Chen, H.H., Donatelli, L., Ji, H., et al., Eds.; International Committee on Computational Linguistics: Prague, Czech Republic, 2022; pp. 3386–3400.
29. Medvedev, A.N.; Lambiotte, R.; Delvenne, J.-C. The Anatomy of Reddit: An Overview of Academic Research. In Proceedings of the Dynamics On and Of Complex Networks III; Ghanbarnejad, F., Saha Roy, R., Karimi, F., Delvenne, J.-C., Mitra, B., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 183–204.
30. Proferes, N.; Jones, N.; Gilbert, S.; Fiesler, C.; Zimmer, M. Studying Reddit: A Systematic Overview of Disciplines, Approaches, Methods, and Ethics. Soc. Media Soc. 2021, 7, 205630512110190.
31. Agarap, A.F. Deep Learning Using Rectified Linear Units (ReLU). arXiv 2018, arXiv:1803.08375.
32. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.
33. Liu, D.C.; Nocedal, J. On the Limited Memory BFGS Method for Large Scale Optimization. Math. Program. 1989, 45, 503–528.
34. El Anigri, S.; Himmi, M.M.; Mahmoudi, A. How BERT’s Dropout Fine-Tuning Affects Text Classification? In Proceedings of the Business Intelligence, Beni Mellal, Morocco, 27–29 May 2021; Fakir, M., Baslam, M., El Ayachi, R., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 130–139.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).