Underwater Image Enhancement Using Dynamic Color Correction and Lightweight Attention-Embedded SRResNet

Abstract

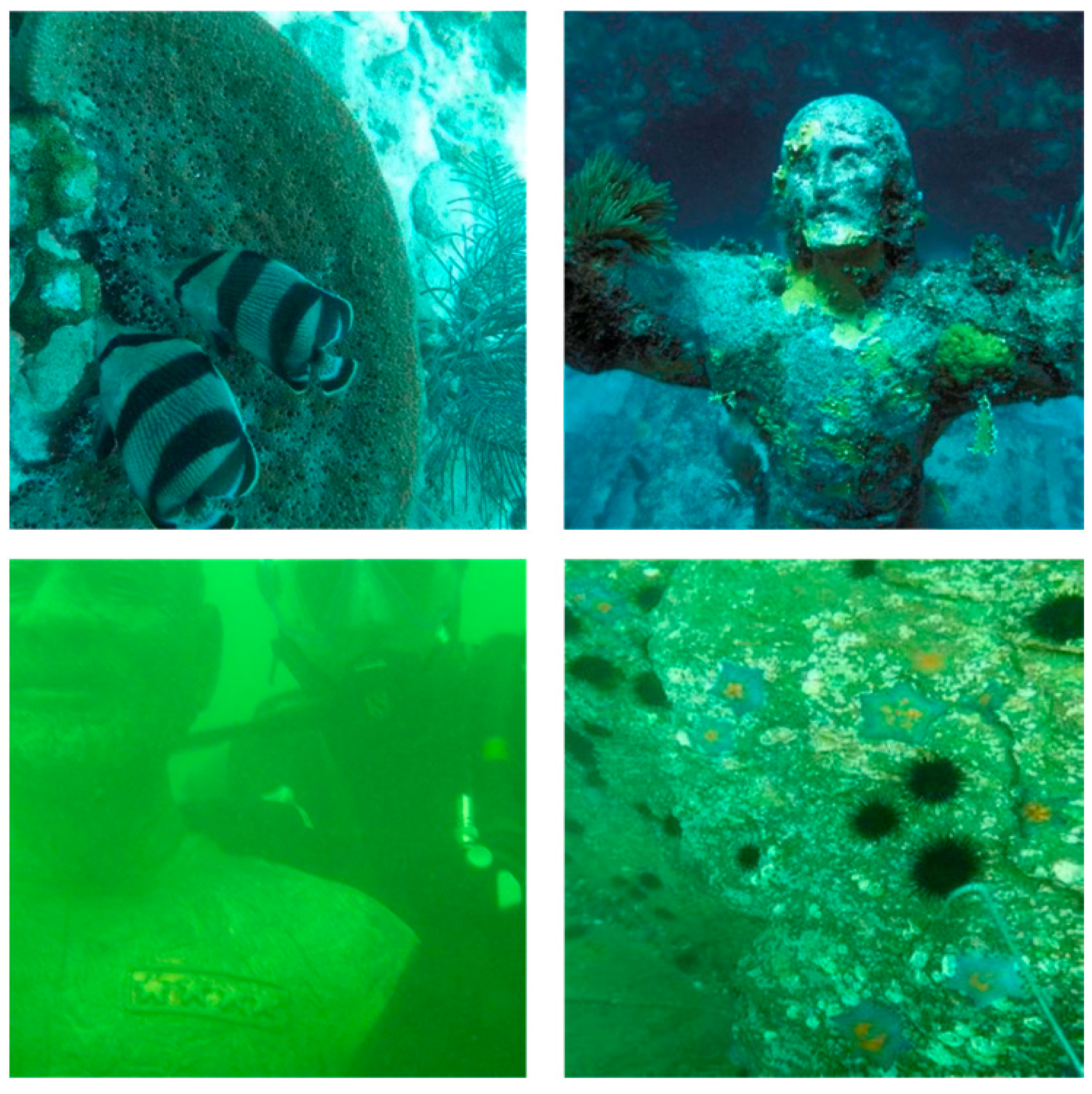

1. Introduction

- Dynamic Color-Recovery Module: Derives per-channel correction factors directly from the image's channel means, without relying on prior assumptions, to remove color casts.

- Lightweight Residual Blocks: An SRResNet-derived architecture leveraging depthwise separable convolutions with reduced channel dimensions and layer counts to minimize computational overhead, while preserving feature propagation through residual connections.

- Comprehensive Experimental Validation: Conducts comparative and ablation studies on the UIEB dataset against traditional and established deep-learning methods, using qualitative comparisons and quantitative metrics to substantiate the method’s superiority in color correction and detail enhancement.
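The first contribution, per-channel correction factors derived from image means, can be illustrated with a minimal sketch. It assumes a gray-world-style rule in which each channel's gain is the ratio of the average of the three channel means to that channel's own mean. The exact formula used by the module is not reproduced in this section, so the gain rule, the clipping range, and the names `channel_gains` and `dynamic_color_correct` are assumptions made for illustration only.

```python
from statistics import fmean

def channel_gains(pixels):
    """Per-channel gains from image means (gray-world-style assumption)."""
    means = [fmean(p[c] for p in pixels) for c in range(3)]
    target = fmean(means)  # common level that all channels are pulled toward
    # Guard against an all-zero channel to avoid division by zero.
    return [target / m if m > 0 else 1.0 for m in means]

def dynamic_color_correct(pixels):
    """Scale each RGB channel by its gain and clip the result to [0, 1]."""
    gains = channel_gains(pixels)
    return [
        tuple(min(1.0, max(0.0, v * g)) for v, g in zip(p, gains))
        for p in pixels
    ]

# Hypothetical pixels with a green-blue cast: red is strongly attenuated.
image = [(0.10, 0.60, 0.50), (0.20, 0.70, 0.60), (0.15, 0.50, 0.40)]
corrected = dynamic_color_correct(image)
corrected_means = [fmean(p[c] for p in corrected) for c in range(3)]
print([round(g, 3) for g in channel_gains(image)])
print([round(m, 3) for m in corrected_means])
```

In this toy input the red channel receives a gain above 1 while green and blue are scaled down, so the three channel means coincide after correction. No scene prior is needed, only statistics of the input image itself.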

2. Proposed Method

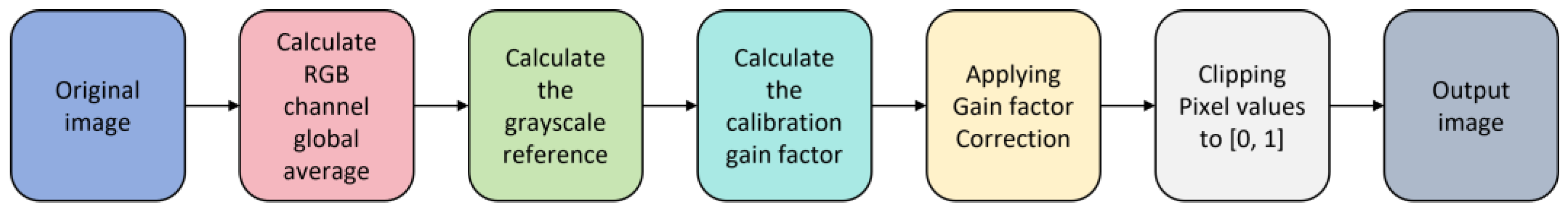

2.1. Dynamic Color Correction Module

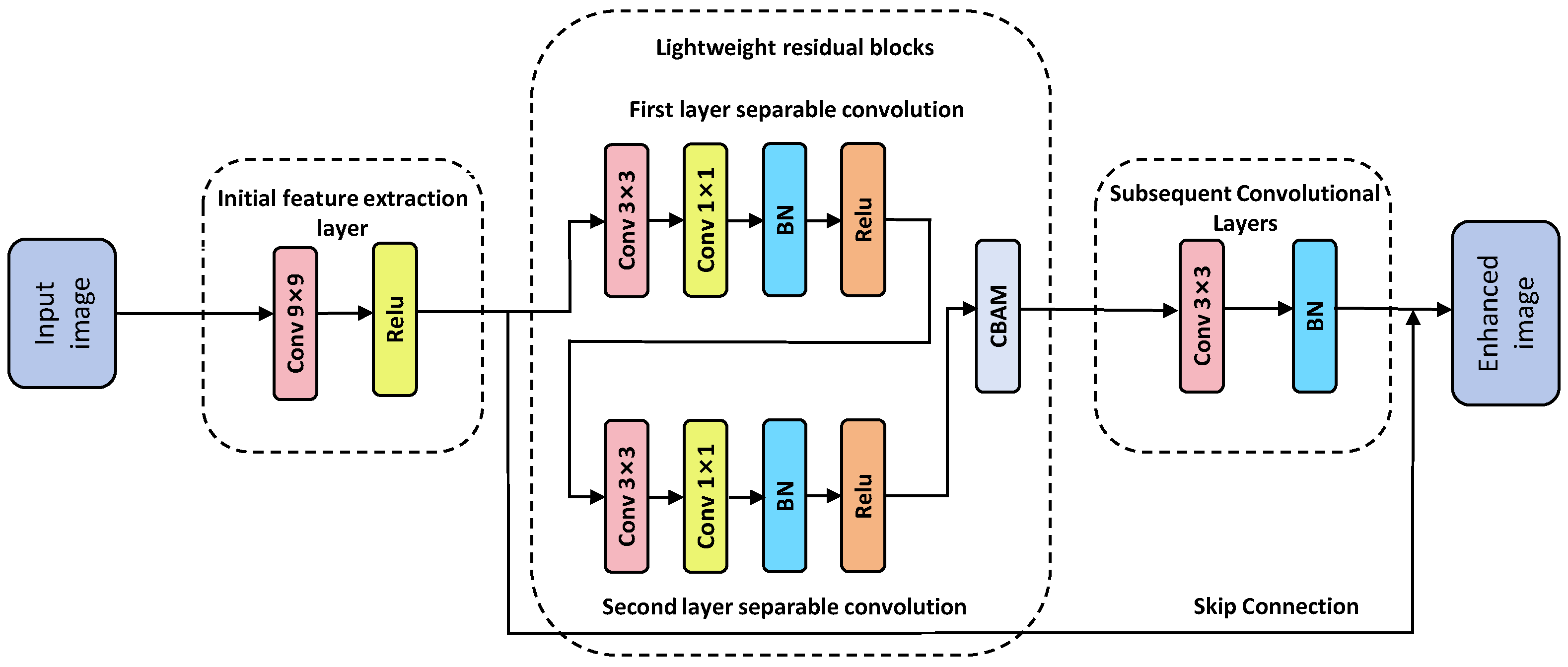

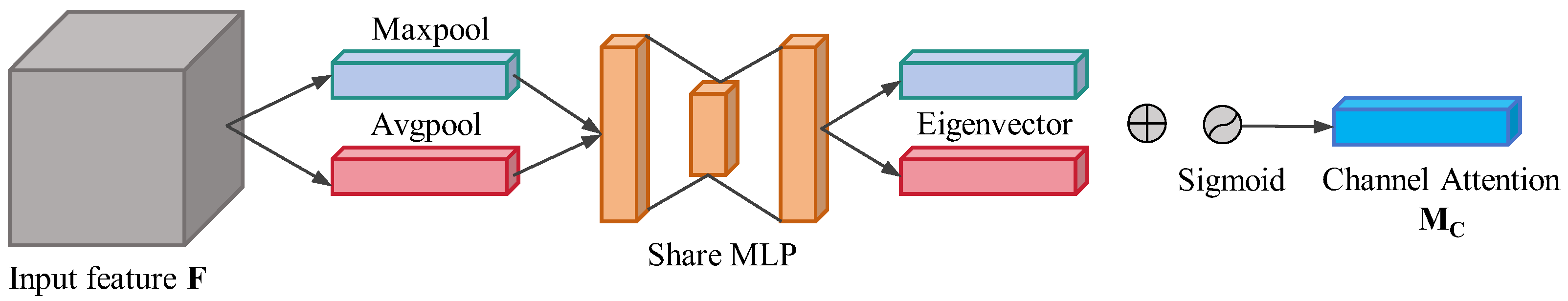

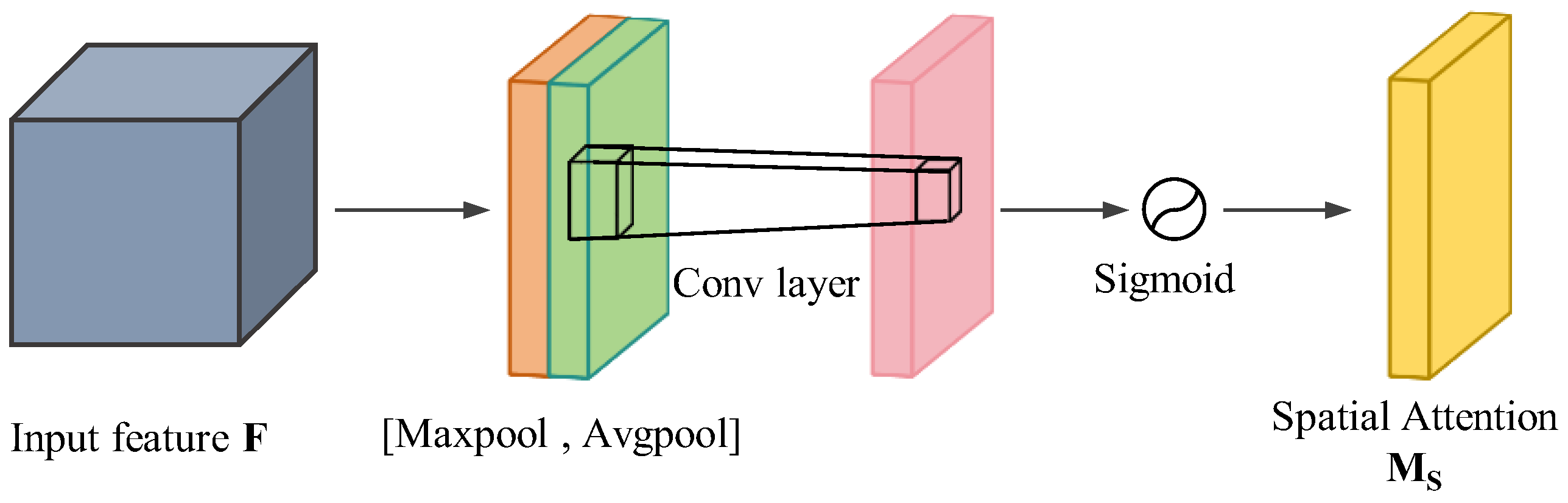

2.2. Lightweight Residual Enhancement Network

Core Design of Lightweight Residual Block
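As a rough illustration of why depthwise separable convolutions with reduced channel widths lower the cost of a residual block, the following sketch counts the weights of a standard 3 × 3 convolution and of its depthwise separable counterpart (a depthwise 3 × 3 convolution followed by a 1 × 1 pointwise convolution). The channel widths 64 and 32 are illustrative assumptions, not values taken from the paper, and biases are omitted.

```python
def conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out

def depthwise_separable_params(c_in, c_out, k=3):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out

# Illustrative channel widths; the network's actual widths are not given here.
for channels in (64, 32):
    standard = conv_params(channels, channels)
    separable = depthwise_separable_params(channels, channels)
    print(
        f"{channels} channels: standard={standard}, "
        f"separable={separable}, ratio={separable / standard:.3f}"
    )
```

For equal input and output widths the ratio is 1/C + 1/k², so with 3 × 3 kernels a separable layer needs roughly one eighth of the weights, and halving the channel width cuts the count further.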

2.3. Loss Functions and Optimization Strategy

3. Experimental Validation

3.1. Dataset Selection and Experimental Configuration

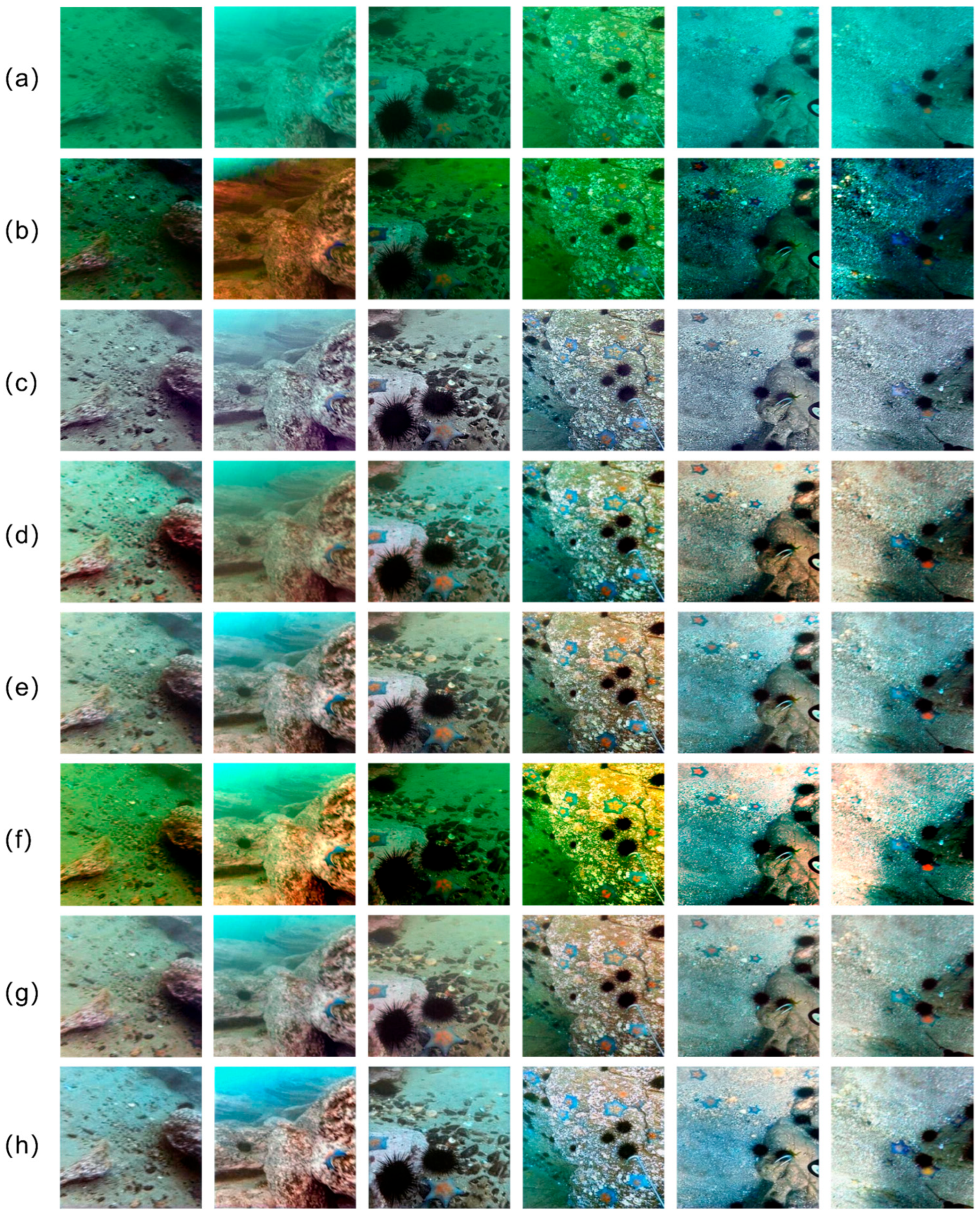

3.2. Qualitative Comparison

3.3. Quantitative Comparison

3.4. Ablation Studies

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement


| Methods | UIEB PSNR | UIEB SSIM | UIEB UCIQE | UIEB UIQM | RUIE UCIQE | RUIE UIQM |
|---|---|---|---|---|---|---|
| UDCP | 14.147 | 0.628 | 0.223 | 0.357 | 0.195 | 0.328 |
| MLLE | 17.756 | 0.717 | 0.253 | 1.340 | 0.259 | 1.623 |
| DICAM | 18.208 | 0.755 | 0.261 | 1.105 | 0.251 | 1.348 |
| FA+Net | 19.174 | 0.836 | 0.273 | 1.208 | 0.246 | 1.468 |
| DeepWater | 17.079 | 0.680 | 0.315 | 0.641 | 0.317 | 0.895 |
| PUIE-Net | 19.048 | 0.829 | 0.829 | 1.080 | 0.242 | 1.559 |
| Ours | 20.093 | 0.805 | 0.965 | 1.563 | 0.492 | 1.611 |
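PSNR, one of the full-reference metrics reported in the comparison table above, follows the standard definition 10·log10(peak² / MSE). The sketch below implements that definition for flat sequences of pixel values in [0, 1]; the sample values are hypothetical and are not drawn from UIEB or RUIE.

```python
import math

def psnr(reference, enhanced, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(enhanced):
        raise ValueError("inputs must have the same number of samples")
    mse = sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(peak ** 2 / mse)

# Hypothetical pixel values with a uniform error of 0.1 on a [0, 1] scale.
reference = [0.2, 0.4, 0.6, 0.8]
enhanced = [0.3, 0.5, 0.7, 0.9]
print(round(psnr(reference, enhanced), 2))
```

A uniform error of 0.1 on a unit scale corresponds to about 20 dB, which gives a sense of scale for the PSNR column. For 8-bit images, `peak=255` would be used instead.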

| Configuration | Added Modules | PSNR | SSIM | UCIQE | UIQM |
|---|---|---|---|---|---|
| Method 1 | None | 15.344 | 0.492 | 0.523 | 1.361 |
| Method 2 | +DCR | 17.826 | 0.663 | 0.701 | 1.511 |
| Method 3 | +CBAM | 16.087 | 0.618 | 0.690 | 1.448 |
| Method 4 | DCR + CBAM | 19.236 | 0.747 | 0.729 | 1.587 |
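To make the ablation results easier to read, the short script below computes each configuration's gain over the row with no added modules. The numbers are copied from the ablation table above; the label `baseline` and the helper name `gains_over_baseline` are introduced here for illustration only.

```python
# PSNR, SSIM, UCIQE, UIQM copied from the ablation table above.
ablation = {
    "baseline": (15.344, 0.492, 0.523, 1.361),  # row with no added modules
    "+DCR": (17.826, 0.663, 0.701, 1.511),
    "+CBAM": (16.087, 0.618, 0.690, 1.448),
    "DCR + CBAM": (19.236, 0.747, 0.729, 1.587),
}
metrics = ("PSNR", "SSIM", "UCIQE", "UIQM")

def gains_over_baseline(name):
    """Difference between a configuration and the baseline, per metric."""
    base = ablation["baseline"]
    return {m: round(v - b, 3) for m, v, b in zip(metrics, ablation[name], base)}

for name in ("+DCR", "+CBAM", "DCR + CBAM"):
    print(name, gains_over_baseline(name))
```

In PSNR, the combined configuration gains 3.892 dB, which is more than the sum of the two individual gains (2.482 dB and 0.743 dB). This is consistent with the two modules being complementary, although a single table cannot establish that on its own.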

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, K.; Zhang, Y.; Yuan, D.; Feng, X. Underwater Image Enhancement Using Dynamic Color Correction and Lightweight Attention-Embedded SRResNet. J. Mar. Sci. Eng. 2025, 13, 1546. https://doi.org/10.3390/jmse13081546