A Ship Heading Estimation Method Based on DeepLabV3+ and Contrastive Learning-Optimized Multi-Scale Similarity

Abstract

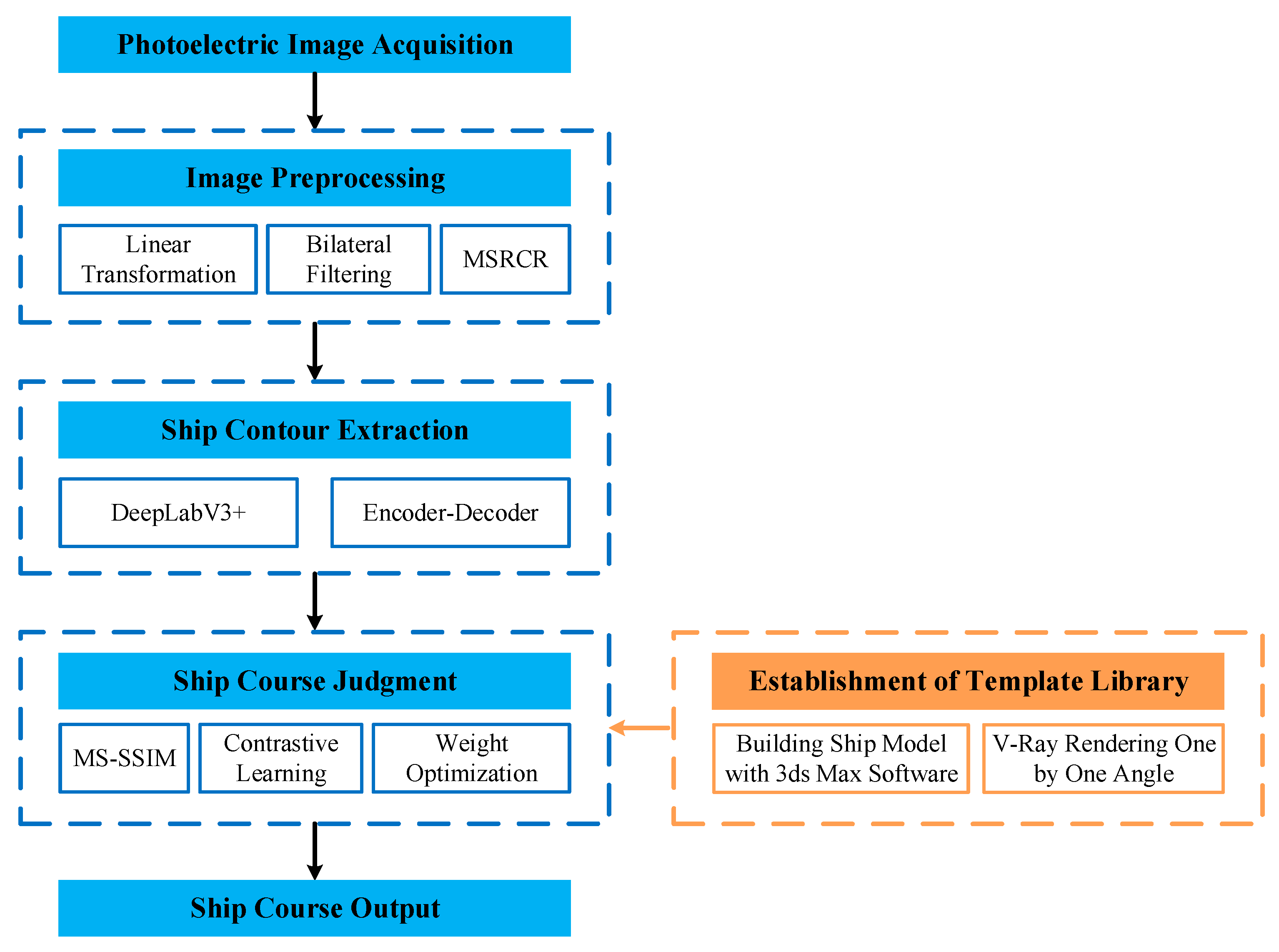

1. Introduction

- (1)

- A cascaded image enhancement framework is proposed, which integrates linear transformation, bilateral filtering, and the MSRCR algorithm to achieve brightness enhancement, denoising, and dehazing, thereby significantly improving the visibility of ship targets.

- (2)

- High-precision vessel contour extraction is achieved based on the DeepLabV3+ network, providing robust support for subsequent heading estimation through template matching.

- (3)

- A novel heading estimation method based on multi-scale similarity matching optimized via contrastive learning is proposed. By employing triplet training, the method dynamically adjusts the scale weights in the MS-SSIM algorithm, significantly enhancing robustness against image degradation and partial occlusion.

- (4)

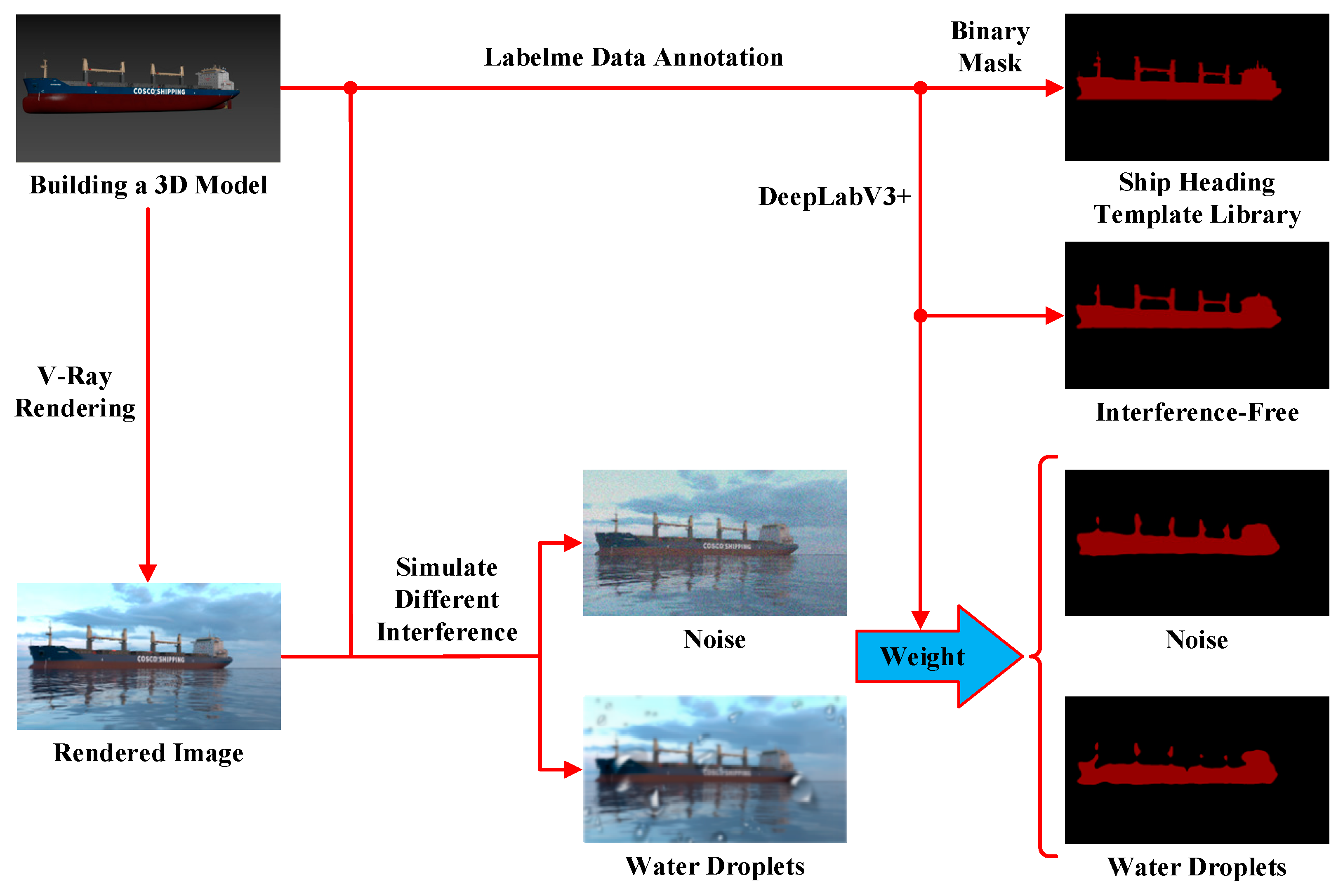

- A 3D model template library of ships at multiple orientations is constructed, and the proposed method is validated in simulated scenarios, demonstrating comprehensive advantages in both robustness and accuracy.

2. Methodology

2.1. Image Preprocessing

2.1.1. Brightness Enhancement

2.1.2. Denoising

2.1.3. Defogging

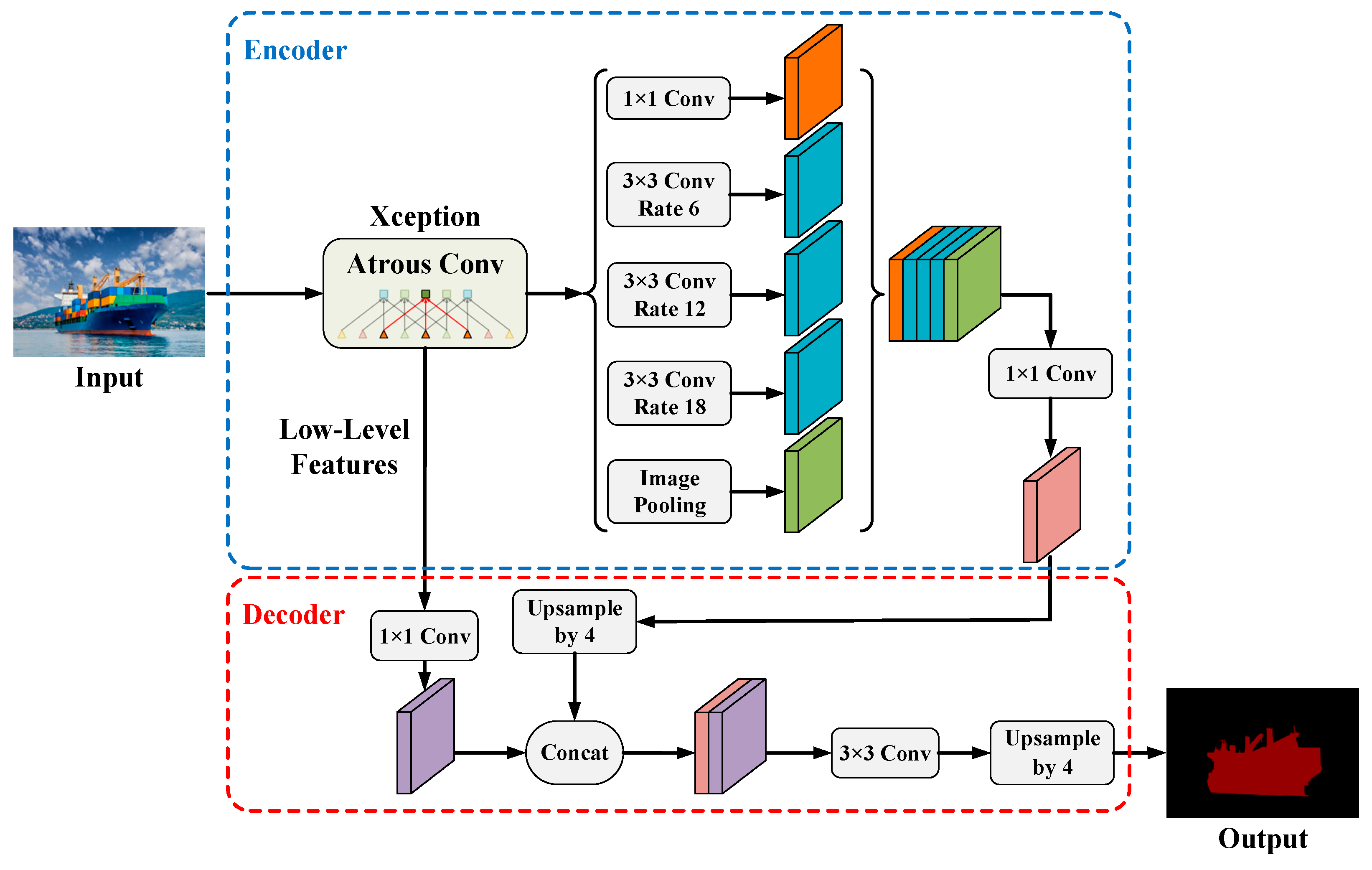

2.2. Ship Target Contour Extraction

- (1) Multi-scale receptive field via atrous convolution: In the encoder part of DeepLabV3+, atrous convolution allows the model to capture the semantic features of ship targets at different scales with multi-scale receptive fields, making it particularly suitable for maritime images where targets are unevenly distributed over various distances.

- (2) Contextual fusion through the ASPP module: The Atrous Spatial Pyramid Pooling (ASPP) module effectively integrates local detail information and global contextual information, enhancing the model’s segmentation capabilities in complex environments and supporting multi-target ship segmentation tasks.

- (3) Edge feature restoration through the decoder: The decoder of DeepLabV3+ fuses high-level semantic features with low-level edge features extracted by the encoder, generating high-resolution segmentation results, which are well suited for the accurate segmentation of elongated ship structures and contours.
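To make the receptive-field argument in item (1) concrete, the following is a minimal, dependency-free sketch of a 1-D atrous (dilated) convolution. It is not the DeepLabV3+ implementation; the function names, the difference kernel, and the rates (1, 2, 4) are illustrative assumptions. It shows that a kernel with k taps at rate r spans k + (k − 1)(r − 1) samples, so running the same kernel at several rates in parallel, as the ASPP module does, covers several scales without adding parameters.

```python
def effective_kernel_size(kernel_size: int, rate: int) -> int:
    """Span covered by a kernel whose taps are spaced `rate` samples apart."""
    return kernel_size + (kernel_size - 1) * (rate - 1)


def atrous_conv1d(signal, kernel, rate):
    """'Valid' 1-D atrous convolution, written as a correlation (no kernel flip)."""
    span = effective_kernel_size(len(kernel), rate)
    out = []
    for start in range(len(signal) - span + 1):
        # Taps are read every `rate` samples instead of from adjacent samples.
        out.append(sum(w * signal[start + i * rate] for i, w in enumerate(kernel)))
    return out


def aspp_like(signal, kernel, rates):
    """Apply the same kernel at several rates, one output branch per rate."""
    return {rate: atrous_conv1d(signal, kernel, rate) for rate in rates}


if __name__ == "__main__":
    ramp = list(range(16))  # toy input: a linear ramp
    for rate, branch in aspp_like(ramp, [1, 0, -1], (1, 2, 4)).items():
        print(f"rate={rate} span={effective_kernel_size(3, rate)} out={branch}")
```

On the ramp input, each branch responds to the intensity change over its own span, which is the 1-D analogue of seeing near and distant ships at different scales.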

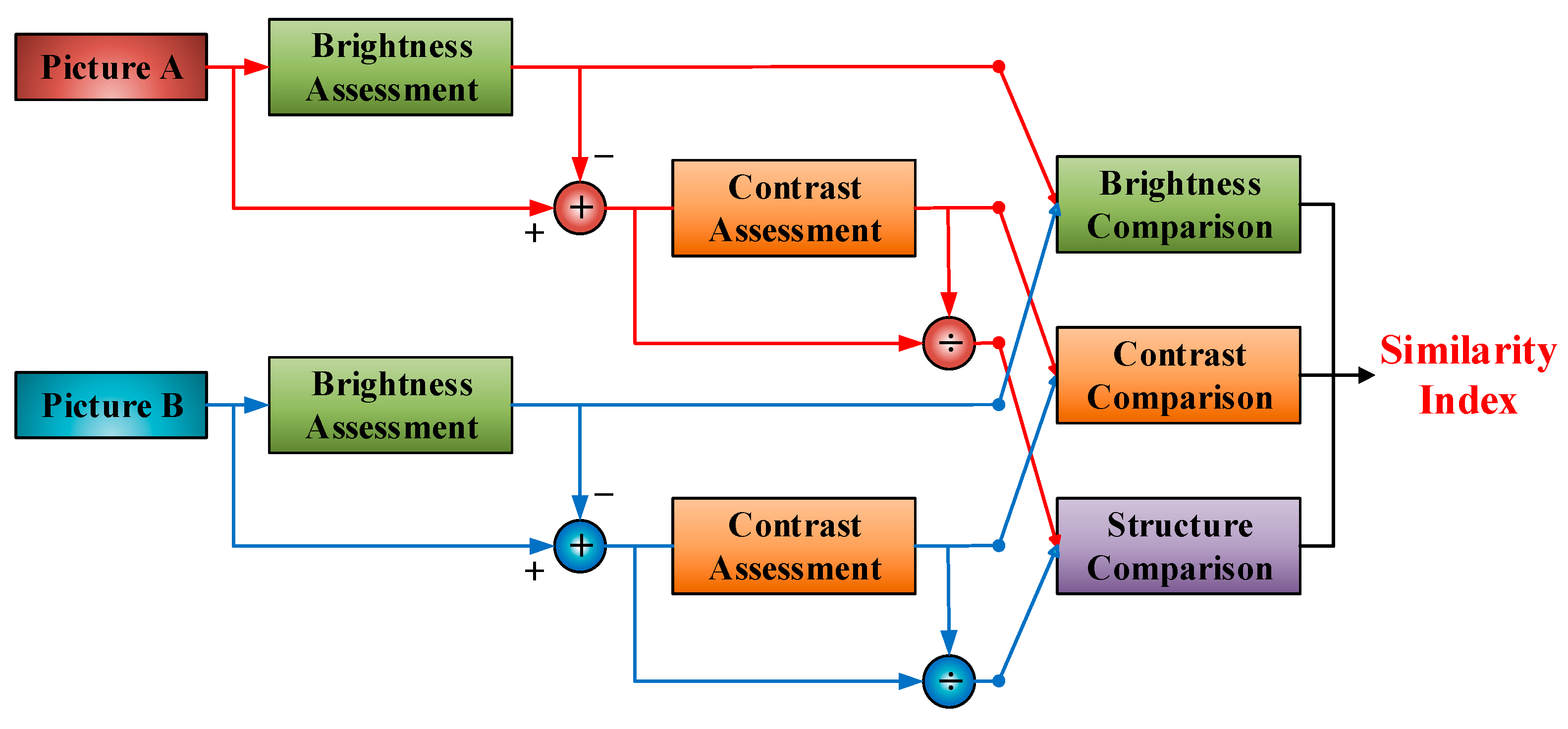

2.3. Dynamic Multi-Scale Similarity-Based Heading Matching

2.3.1. SSIM

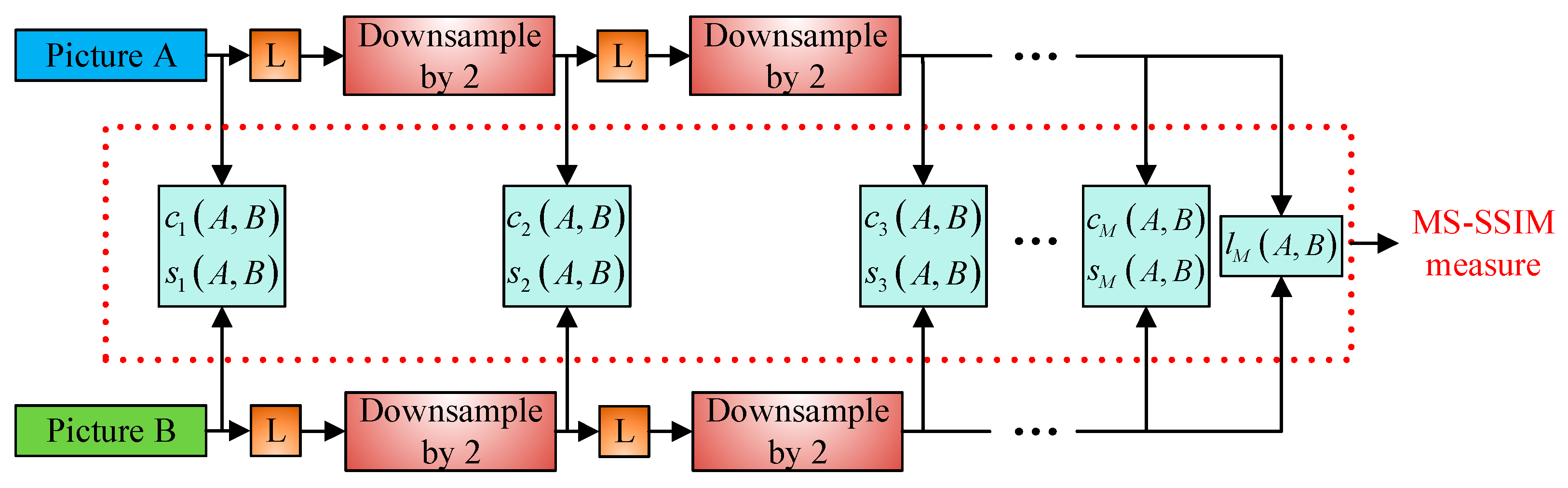

2.3.2. MS-SSIM

2.3.3. Contrastive Learning for Weight Optimization

- (1) The sensitivity of structural features at different scales to heading variations changes dynamically with factors such as ship size, distance, and the degree of occlusion.

- (2) Environmental noise and atmospheric disturbances at sea often degrade feature consistency at certain scales, so some scale-specific features become invalid and their weights must be effectively suppressed during the dynamic adjustment.

2.3.4. Algorithm Flow

| Algorithm 1 Ship heading matching algorithm based on MS-SSIM with contrastive-learning-based weight optimization | |
|---|---|
| Input: the segmented ship image to be matched D, the set of template library images Z, positive sample images from the template library P, negative sample images G, initial weights η<sub>init</sub>, learning rate ξ, total number of scales for MS-SSIM computation M, margin threshold ψ, and maximum number of iterations δ<sub>max</sub>. | |
| Output: optimal heading estimate θ<sub>opt</sub> and optimal matching similarity S<sub>max</sub>. | |
| // Step 1. Contrastive Learning-Based Weight Optimization | |
| 1: | η ← η<sub>init</sub> // Weight initialization |
| 2: | for δ = 1 to δ<sub>max</sub> do |
| 3: | ∆η ← 0 |
| 4: | for each positive sample P<sub>n</sub> ∈ P and negative sample G<sub>n</sub> ∈ G do |
| 5: | S<sub>DP</sub> ← MS-SSIM(D, P<sub>n</sub>, η) // Compute the similarity of the positive sample according to Equations (10)–(16) and (19). |
| 6: | S<sub>DG</sub> ← MS-SSIM(D, G<sub>n</sub>, η) // Compute the similarity of the negative sample according to Equations (10)–(16) and (19). |
| 7: | F<sub>DPG</sub> ← max(S<sub>DG</sub> − S<sub>DP</sub> + ψ, 0) // Compute the contrastive loss according to Equation (21). |
| 8: | if F<sub>DPG</sub> > 0 then |
| 9: | ∆η<sub>DP</sub> ← ∂S<sub>DP</sub>/∂η // Compute the gradient of the positive sample with respect to the weights according to Equations (23) and (24). |
| 10: | ∆η<sub>DG</sub> ← ∂S<sub>DG</sub>/∂η // Compute the gradient of the negative sample with respect to the weights according to Equations (23) and (24). |
| 11: | ∆η ← ∆η + (∆η<sub>DG</sub> − ∆η<sub>DP</sub>) |
| 12: | end if |
| 13: | end for |
| 14: | η ← η − ξ · ∆η // Update the weights according to Equation (25). |
| 15: | // Apply weight projection and normalization constraint according to Equation (26). |
| 16: | end for |
| // Step 2. Template Library Matching | |
| 17: | for each template library sample Z<sub>n</sub> ∈ Z do |
| 18: | S<sub>λn</sub> ← MS-SSIM(D, Z<sub>n</sub>, η) // Parallel computing |
| 19: | S<sub>λ</sub>[n − 1] ← S<sub>λn</sub> |
| 20: | end for |
| 21: | θ<sub>opt</sub>, S<sub>max</sub> ← argmax(S<sub>λ</sub>) |
| 22: | return θ<sub>opt</sub>, S<sub>max</sub> |
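The control flow of Algorithm 1 can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' implementation: Equations (10)–(26) are not reproduced in this text, so the sketch assumes the weighted MS-SSIM is the product of per-scale similarities s_j raised to the weights η_j (which gives the gradient ∂S/∂η_j = S · ln s_j), that the per-scale similarities are already computed and lie in (0, 1], that positives and negatives are paired one to one, and that the projection step clips weights at zero and renormalizes them to sum to 1. All function names are hypothetical.

```python
import math


def weighted_ms_ssim(scale_scores, eta):
    """Assumed form: S = prod_j s_j ** eta_j, with every s_j in (0, 1]."""
    return math.prod(s ** w for s, w in zip(scale_scores, eta))


def grad_wrt_eta(scale_scores, eta):
    """Gradient of the assumed product form: dS/d eta_j = S * ln(s_j)."""
    total = weighted_ms_ssim(scale_scores, eta)
    return [total * math.log(s) for s in scale_scores]


def project(eta):
    """Assumed projection: clip at zero, then rescale so the weights sum to 1."""
    clipped = [max(w, 0.0) for w in eta]
    total = sum(clipped)
    if total == 0.0:  # degenerate case: fall back to uniform weights
        return [1.0 / len(eta)] * len(eta)
    return [w / total for w in clipped]


def optimize_weights(pos, neg, eta_init, lr, margin, max_iter):
    """Step 1 (lines 1-16): hinge-loss updates over positive/negative pairs."""
    eta = list(eta_init)
    for _ in range(max_iter):
        delta = [0.0] * len(eta)
        for p, g in zip(pos, neg):
            s_dp = weighted_ms_ssim(p, eta)
            s_dg = weighted_ms_ssim(g, eta)
            if s_dg - s_dp + margin > 0:  # loss is active (line 8)
                g_dp, g_dg = grad_wrt_eta(p, eta), grad_wrt_eta(g, eta)
                delta = [d + (b - a) for d, a, b in zip(delta, g_dp, g_dg)]
        eta = project([w - lr * d for w, d in zip(eta, delta)])
    return eta


def match_heading(templates, eta):
    """Step 2 (lines 17-22): best (heading, similarity) over the template library."""
    scored = [(weighted_ms_ssim(scores, eta), heading) for heading, scores in templates]
    s_max, theta_opt = max(scored)
    return theta_opt, s_max


if __name__ == "__main__":
    # Toy per-scale scores: the negative only looks similar at the coarsest scale.
    eta = optimize_weights([[0.9, 0.8, 0.4]], [[0.5, 0.6, 0.9]],
                           [1 / 3] * 3, lr=0.1, margin=0.2, max_iter=50)
    print([round(w, 3) for w in eta])
    print(match_heading([(0, [0.5, 0.6, 0.9]), (90, [0.9, 0.8, 0.4])], eta))
```

In the toy data the third scale favours the negative sample, so the update shifts weight away from it, which mirrors the suppression of unreliable scales that motivates the dynamic weighting.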

3. Results

3.1. Experimental Environment and Dataset Construction

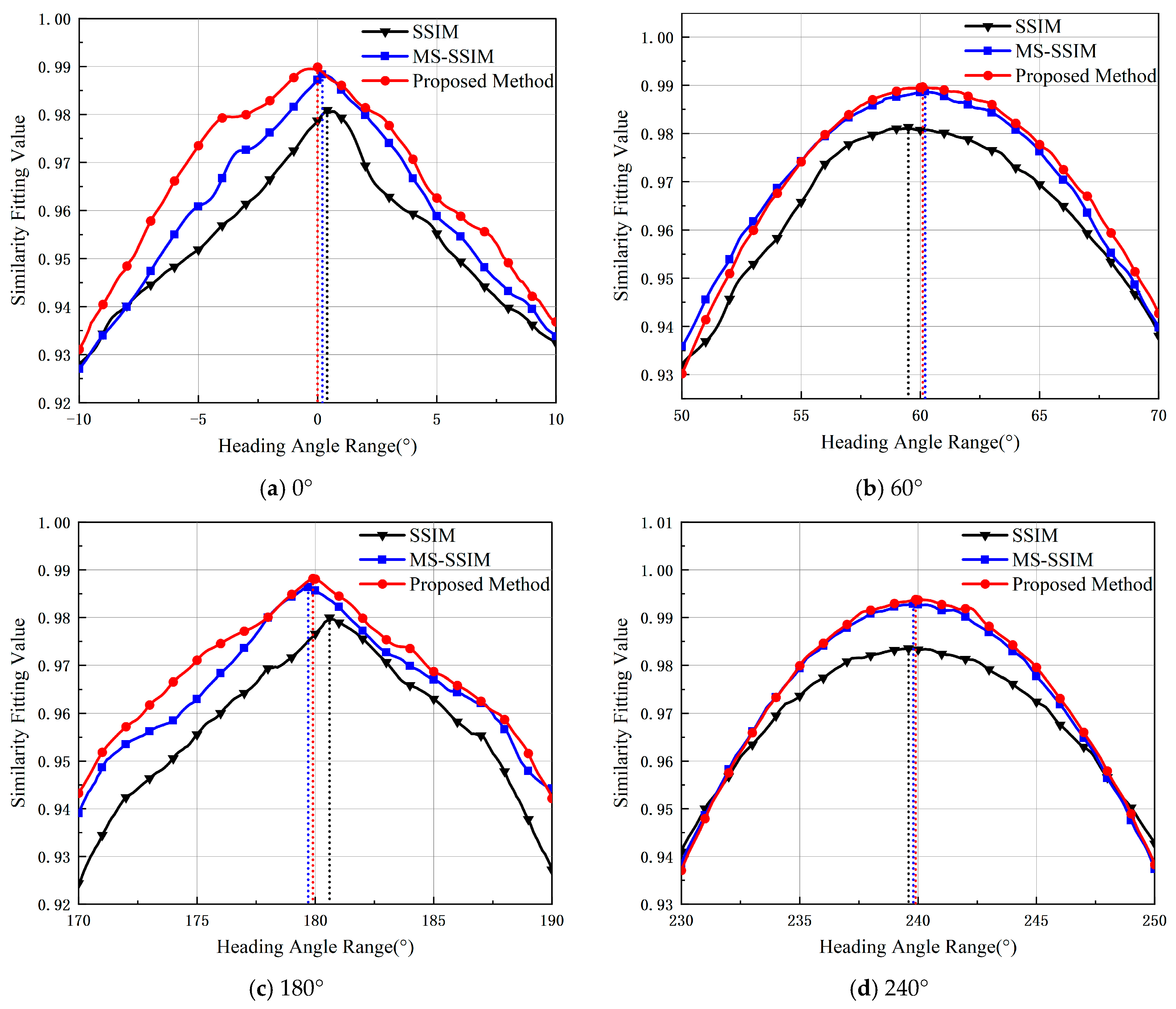

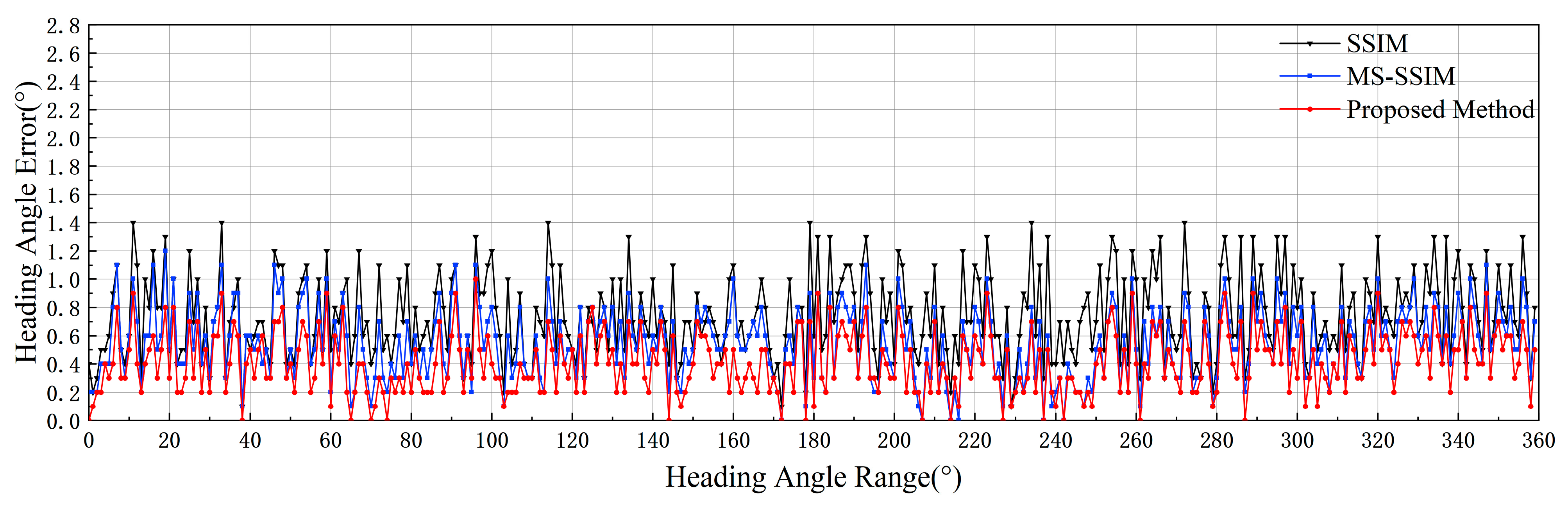

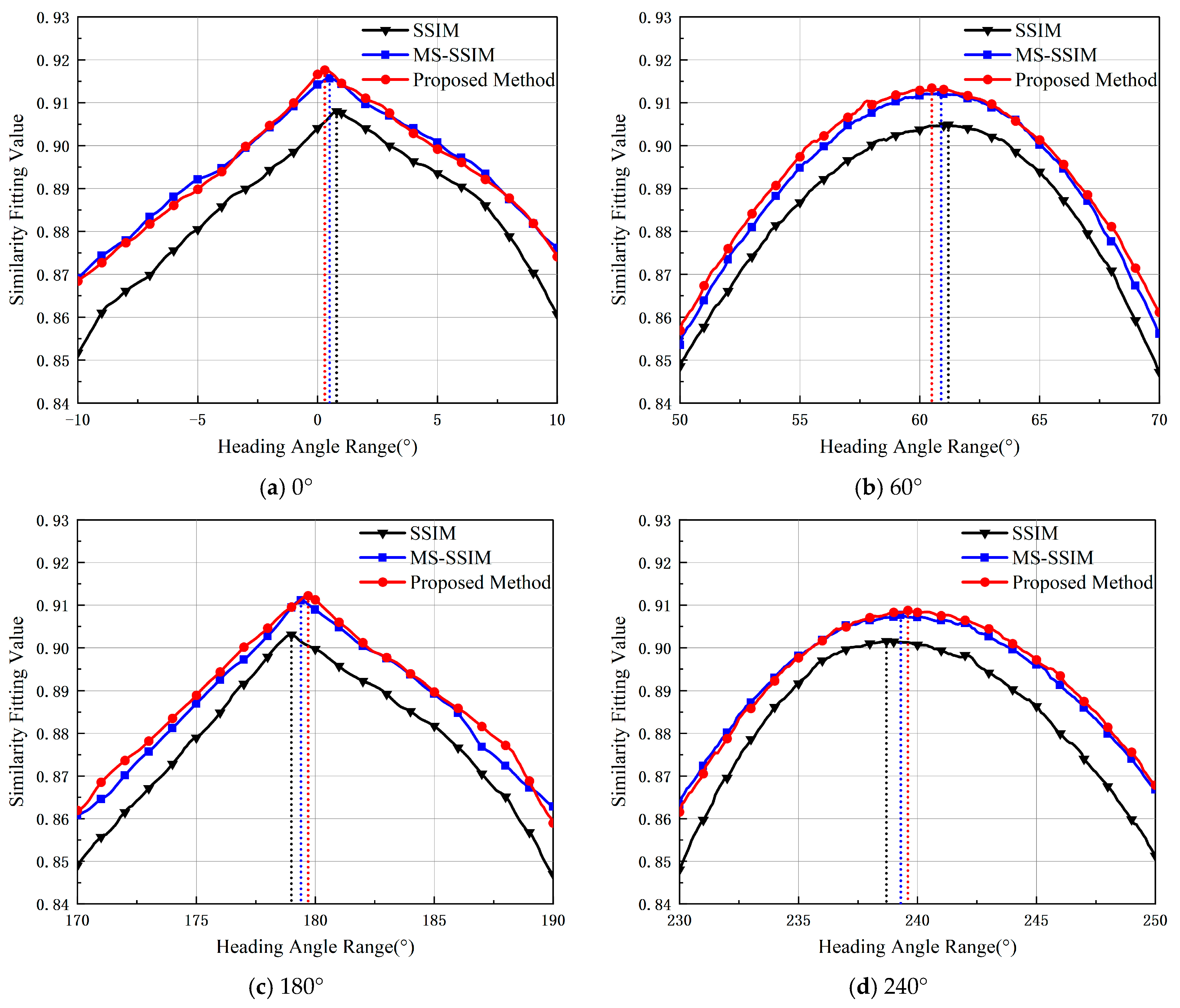

3.2. Algorithm Simulation and Validation

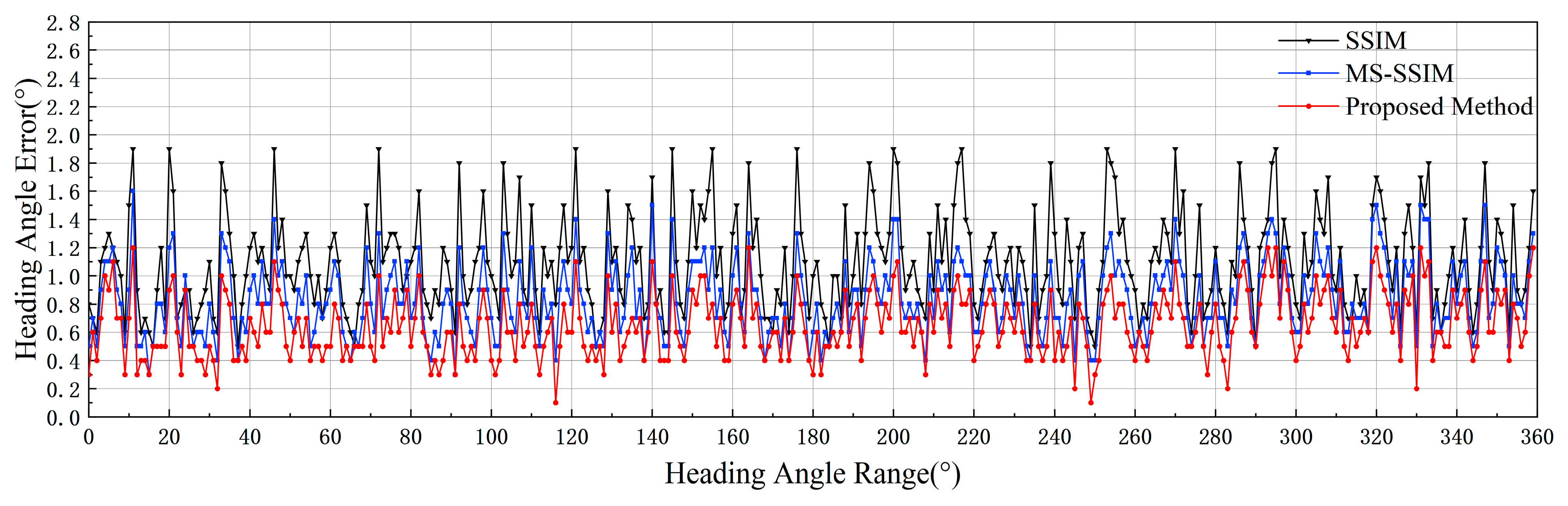

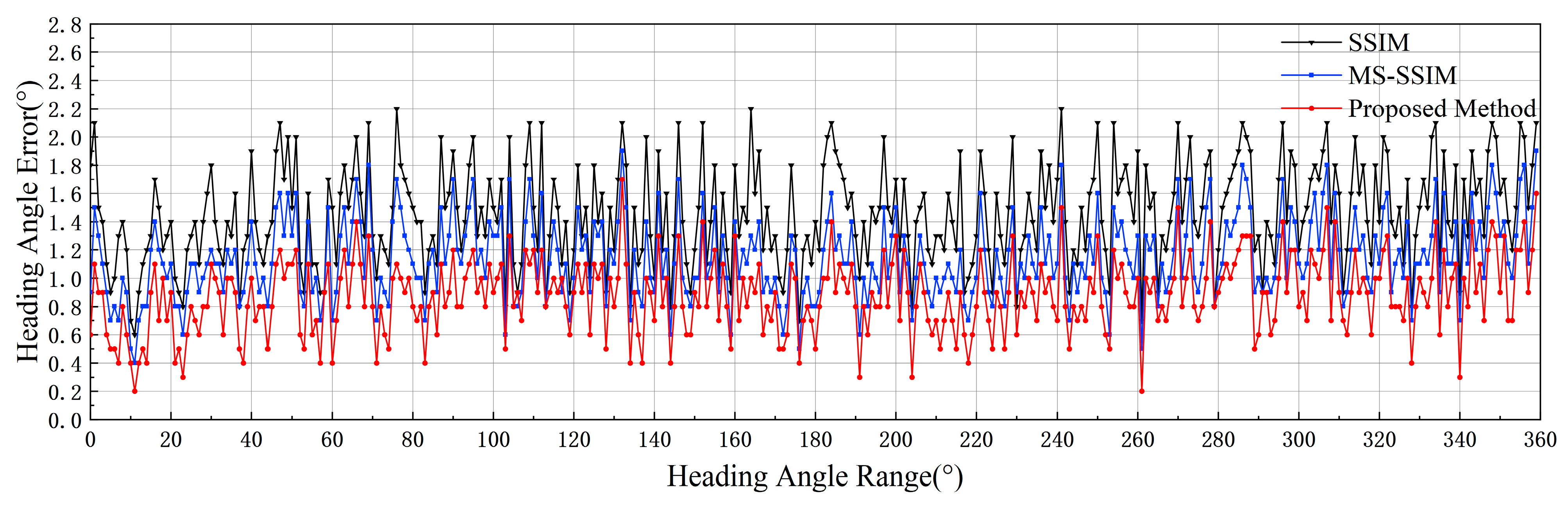

- (1) Single-scale SSIM algorithm;

- (2) Fixed-weight MS-SSIM algorithm, following the default multi-scale weight settings proposed by Wang et al. [28] (δ = [0.0448, 0.2856, 0.3001, 0.2363, 0.1333]);

- (3) The proposed contrastive-learning-based, dynamically weighted MS-SSIM algorithm (with initial weights identical to those in method (2)).
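The difference between baselines (1) and (2) can be illustrated with a small, dependency-free sketch. It makes several simplifications that are assumptions of this example rather than the paper's formulation: SSIM is computed over a single global window instead of local Gaussian windows, the full SSIM value is used at every scale, scales are produced by 2×2 block averaging, and non-positive per-scale values are clamped to a small `eps` before exponentiation. The constants use K1 = 0.01, K2 = 0.03, and a dynamic range of 255, as in the original SSIM paper; the function names are hypothetical.

```python
WANG_WEIGHTS = [0.0448, 0.2856, 0.3001, 0.2363, 0.1333]  # defaults quoted in (2)
C1 = (0.01 * 255) ** 2  # luminance stabilising constant
C2 = (0.03 * 255) ** 2  # contrast/structure stabilising constant


def ssim_global(x, y):
    """SSIM over one global window, for equally sized 2-D lists of 0..255 values."""
    a = [v for row in x for v in row]
    b = [v for row in y for v in row]
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((v - mu_a) ** 2 for v in a) / n
    var_b = sum((v - mu_b) ** 2 for v in b) / n
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(a, b)) / n
    return ((2 * mu_a * mu_b + C1) * (2 * cov + C2)) / (
        (mu_a ** 2 + mu_b ** 2 + C1) * (var_a + var_b + C2))


def downsample2(img):
    """Halve the resolution by averaging each 2x2 block."""
    return [[(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
             for c in range(0, len(img[0]) - 1, 2)]
            for r in range(0, len(img) - 1, 2)]


def ms_ssim(x, y, weights=WANG_WEIGHTS, eps=1e-6):
    """Simplified MS-SSIM: weighted product of per-scale global SSIM values."""
    score = 1.0
    for i, w in enumerate(weights):
        score *= max(ssim_global(x, y), eps) ** w  # clamp keeps the power real
        if i < len(weights) - 1:
            x, y = downsample2(x), downsample2(y)
    return score


if __name__ == "__main__":
    # 16x16 toy image (five scales: 16, 8, 4, 2, 1) and its intensity inverse.
    image = [[r * 16 + c for c in range(16)] for r in range(16)]
    inverse = [[255 - v for v in row] for row in image]
    print(ssim_global(image, image), ms_ssim(image, image))
    print(ssim_global(image, inverse), ms_ssim(image, inverse))
```

Method (3) keeps this multi-scale structure and replaces the fixed weight vector with weights learned by the contrastive update.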

3.2.1. Interference-Free

3.2.2. Noise Interference

3.2.3. Water Mist Occlusion Interference

3.3. Real Image Experimental Validation

4. Research Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zhu, L.; Li, T. Observer-based autopilot heading finite-time control design for intelligent ship with prescribed performance. J. Mar. Sci. Eng. 2021, 9, 828.
2. Liu, J.; Tian, L.; Fan, X. Ship heading detection based on optical remote sensing images. Command Control Simul. 2021, 43, 112–117. (In Chinese)
3. Dong, K.; Zhang, Y.; Li, Z. Ship target detection and parameter estimation based on remote sensing images. Electron. Sci. Technol. 2015, 28, 102–106. (In Chinese)
4. Li, X.; Chen, P.; Yang, J.; An, W.; Luo, D.; Zheng, G.; Lu, A. Extracting ship and heading from Sentinel-2 images using convolutional neural networks with point and vector learning. J. Oceanol. Limnol. 2025, 43, 16–28.
5. You, Y.; Ran, B.; Meng, G.; Li, Z.; Liu, F.; Li, Z. OPD-Net: Prow detection based on feature enhancement and improved regression model in optical remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2020, 59, 6121–6137.
6. Gao, M.; Fang, S.; Wan, L.; Kang, W.; Ma, L.; He, Y.; Zhao, K. Ship-VNet: An algorithm for ship velocity analysis based on optical remote sensing imagery containing Kelvin wakes. Electronics 2024, 13, 3468.
7. Graziano, M.D.; D’Errico, M.; Rufino, G. Ship heading and velocity analysis by wake detection in SAR images. Acta Astronaut. 2016, 128, 72–82.
8. Chen, T. Research on Ship Heading Estimation and Imaging Method for Single-Base Large Squint SAR. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2024. (In Chinese)
9. Joshi, S.K.; Baumgartner, S.V. A Fast Ship Size and Heading Angle Estimation Method for Focused SAR and ISAR Images. In Proceedings of the 2024 International Radar Symposium (IRS), Berlin, Germany, 24–26 June 2024; pp. 39–43.
10. Li, X.; Chen, P.; Yang, J.; An, W.; Zheng, G.; Luo, D. TKP-net: A three keypoint detection network for ships using SAR imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 364–376.
11. Niu, Y.; Li, Y.; Huang, J.; Chen, Y. Efficient encoder-decoder network with estimated direction for SAR ship detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.
12. Qu, J.; Luo, Y.; Chen, W.; Wang, H. Research on the identification method of key parts of ship target based on contour matching. AIP Adv. 2023, 13, 115011.
13. Jiang, B.; Lu, Y.; Zhang, B.; Lu, G. Few-shot learning for image denoising. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4741–4753.
14. Ding, B.; Zhang, R.; Xu, L.; Liu, G.; Yang, S.; Liu, Y. U2D2-Net: Unsupervised unified image dehazing and denoising network for single hazy image enhancement. IEEE Trans. Multimed. 2023, 26, 202–217.
15. Sheng, Z.; Liu, X.; Cao, S.Y.; Shen, H.L.; Zhang, H. Frequency-domain deep guided image denoising. IEEE Trans. Multimed. 2022, 25, 6767–6781.
16. Dehghani Tafti, A.; Mirsadeghi, E. A novel adaptive Recursive Median Filter in image noise reduction based on using the entropy. In Proceedings of the 2012 IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 23–25 November 2012; pp. 520–523.
17. Xu, G.; Zheng, C.; Gu, Y. Model for single image enhancement based on adaptive contrast stretch and multiband fusion. In Proceedings of the 2022 IEEE Conference on Telecommunications, Optics and Computer Science (TOCS), Dalian, China, 11–12 December 2022; pp. 1–7.
18. Tang, H.; Zhang, W.; Zhu, H.; Zhao, K. Self-supervised real-world image denoising based on multi-scale feature enhancement and attention fusion. IEEE Access 2024, 12, 49720–49734.
19. Galdran, A.; Vazquez-Corral, J.; Pardo, D.; Bertalmío, M. Fusion-based variational image dehazing. IEEE Signal Process. Lett. 2017, 24, 151–155.
20. Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the Sixth International Conference on Computer Vision (ICCV), Bombay, India, 4–7 January 1998; pp. 839–846.
21. Munteanu, C.; Rosa, A. Color image enhancement using evolutionary principles and the retinex theory of color constancy. In Neural Networks for Signal Processing XI: Proceedings of the 2001 IEEE Signal Processing Society Workshop, Falmouth, MA, USA, 23–25 September 2001; pp. 393–402.
22. Rahman, Z.; Jobson, D.J.; Woodell, G.A. Multi-scale retinex for color image enhancement. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 16–19 September 1996; Volume 3, pp. 1003–1006.
23. Xue, W.; Zhang, Y.; Zhu, Y.; Ye, H.; Yang, X.; Liu, W. An Improved Instance Segmentation Method of Visible Occluded Ship Images Based on Residual Feature Fusion Module and Ship Contour Predictor. IEEE Access 2024, 12, 139974–139987.
24. Zhang, T.; Zhang, X. A Mask Attention Interaction and Scale Enhancement Network for SAR Ship Instance Segmentation. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4511005.
25. Yang, F.; Liu, Z.; Bai, X.; Zhang, Y. An Improved Intuitionistic Fuzzy C-Means for Ship Segmentation in Infrared Images. IEEE Trans. Fuzzy Syst. 2022, 30, 332–344.
26. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.
27. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.
28. Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the 37th Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402.
29. Chen, L.C. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.
30. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.
31. Liu, W.; Shu, Y.; Tang, X.; Liu, J. Semantic segmentation of remote sensing images using DeepLabv3+ with dual attention mechanism. Trop. Geogr. 2020, 40, 303–313. (In Chinese)
32. Fu, H.; Gu, Z.; Wang, B.; Wang, Y. Marine target segmentation based on improved DeepLabv3+. In Proceedings of the 2022 41st Chinese Control Conference (CCC), Hefei, China, 25–27 July 2022; pp. 7314–7319.
33. Cheng, L.; Xiong, R.; Wu, J.; Yan, X.; Yang, C.; Zhang, Y. Fast segmentation algorithm of USV accessible area based on attention fast DeepLabV3. IEEE Sens. J. 2024, 24, 24168–24177.
34. Chen, C.; Hao, X.; Long, H.; Sun, X. Asphalt pavement crack detection method based on improved DeepLabv3+ network. Semicond. Optoelectron. 2024, 45, 493–500. (In Chinese)
35. Alexander, S.T. The Mean Square Error (MSE) Performance Criteria; Springer: New York, NY, USA, 1986.
36. Horé, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

| Methods | Real-Time Capability | Resource Dependency | Edge Preservation | Color Fidelity |
|---|---|---|---|---|
| FSLID [13] | ×¹ | × | √² | ∆³ |
| U2D2Net [14] | ∆ | ∆ | × | × |
| FGDNet [15] | × | × | √ | ∆ |
| ARMF [16] | ∆ | × | ∆ | √ |
| MF-ACS [17] | × | ∆ | ∆ | √ |
| MA-BSN [18] | × | × | √ | ∆ |
| FVID [19] | √ | ∆ | × | ∆ |
| This Paper | √ | √ | √ | √ |

| Item | Configuration Parameters |
|---|---|
| Operating System | Windows 11 (64-bit) |
| Processor | Intel® Core™ i9-14900KF @ 3.2 GHz¹ |
| Graphics Card | NVIDIA GeForce RTX 4090 (24 GB) |
| RAM | 64 GB DDR5, 6400 MHz |
| Deep Learning Framework | Python 3.9 / PyTorch 1.12.0 / CUDA 12.8 |
| Modeling and Rendering Framework | 3ds Max 2023 / V-Ray 6.0 |

| Item | Parameters |
|---|---|
| Linear Transformation | ω = 1.2, λ = 0 |
| Bilateral Filtering | σ<sub>r</sub> = 40, σ<sub>s</sub> = 40 |
| MSRCR | K = 3, α = 1, β = 2 |
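To show how the first two rows of the parameter table above might be applied, here is a minimal, dependency-free sketch of the linear transformation and a naive bilateral filter on a grayscale image. Several points are assumptions of this example: the linear transformation is taken to be g = ω·f + λ clipped to the 8-bit range, σ<sub>r</sub> is read as the range (intensity) standard deviation and σ<sub>s</sub> as the spatial one, the window radius of 2 is arbitrary, and the function names are hypothetical. The MSRCR stage is omitted because its formulation is not given in this text.

```python
import math


def linear_transform(img, omega=1.2, lam=0.0):
    """Assumed form g = omega * f + lam, clipped to [0, 255]."""
    return [[min(255.0, max(0.0, omega * v + lam)) for v in row] for row in img]


def bilateral_filter(img, sigma_r=40.0, sigma_s=40.0, radius=2):
    """Naive bilateral filter on a 2-D list; border pixels use in-bounds neighbours."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            num = den = 0.0
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        # Spatial term: penalise distance from the centre pixel.
                        spatial = (dr * dr + dc * dc) / (2 * sigma_s ** 2)
                        # Range term: penalise intensity difference, preserving edges.
                        tonal = (img[rr][cc] - img[r][c]) ** 2 / (2 * sigma_r ** 2)
                        weight = math.exp(-(spatial + tonal))
                        num += weight * img[rr][cc]
                        den += weight
            out[r][c] = num / den
    return out


if __name__ == "__main__":
    # Toy image with a vertical step edge between columns 2 and 3.
    step = [[0, 0, 0, 200, 200, 200] for _ in range(5)]
    brightened = linear_transform(step)
    smoothed = bilateral_filter(brightened)
    print([round(v, 2) for v in smoothed[0]])
```

With σ<sub>r</sub> = 40, neighbours across a 200-level step receive a weight near exp(−12.5), so the edge stays sharp while flat regions are averaged; this is the edge-preserving property that motivates bilateral filtering for contour extraction.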

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tao, W.; Luo, Y.; Tong, J.; Xia, Q.; Qu, J. A Ship Heading Estimation Method Based on DeepLabV3+ and Contrastive Learning-Optimized Multi-Scale Similarity. J. Mar. Sci. Eng. 2025, 13, 1085. https://doi.org/10.3390/jmse13061085
