FACMamba: Frequency-Aware Coupled State Space Modeling for Underwater Image Enhancement

Abstract

1. Introduction

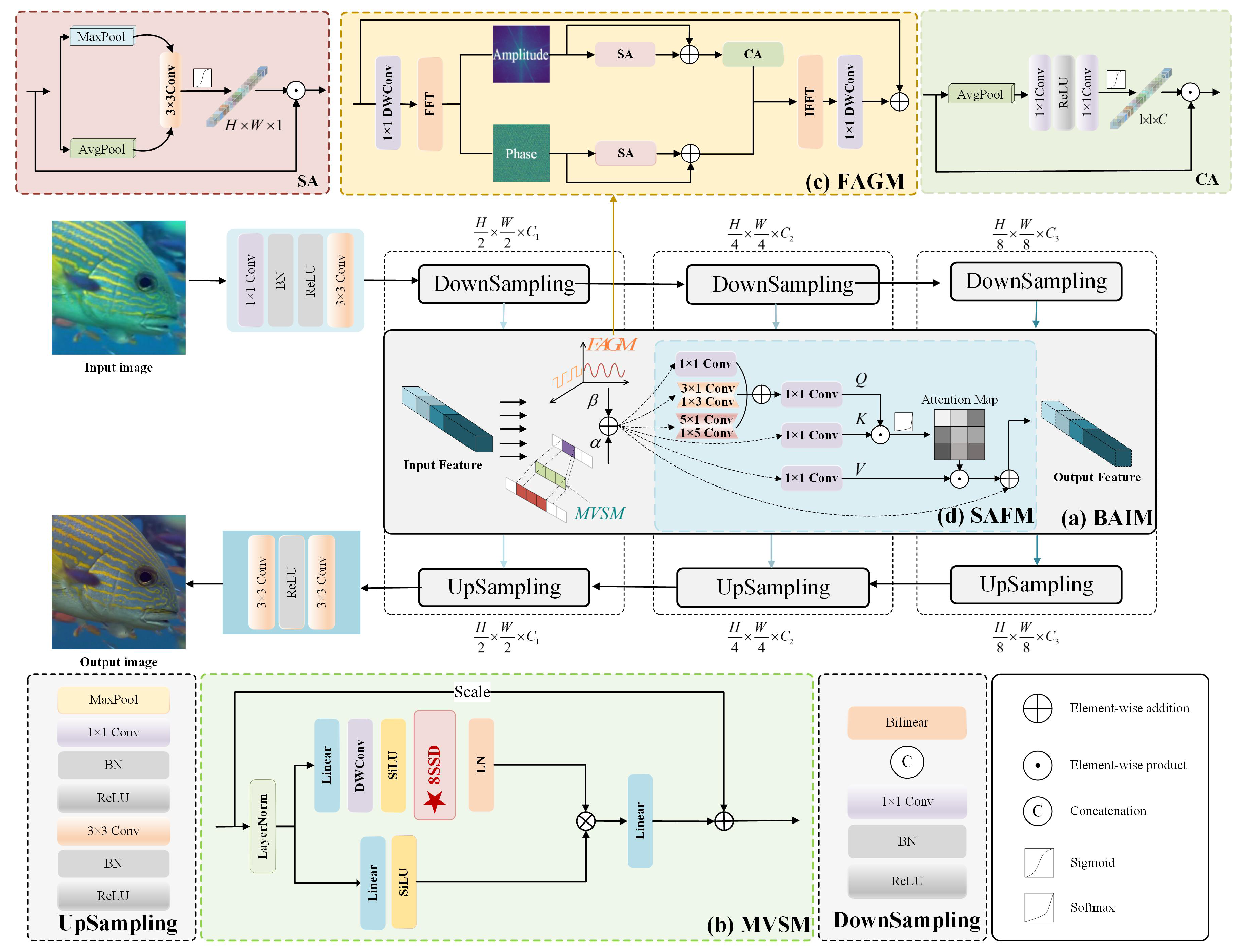

- To address the inefficiency of global modeling and detail enhancement in underwater image enhancement (UIE) tasks, a novel state-space-based U-Net architecture named FACMamba is proposed. FACMamba places a lightweight yet expressive Bidirectional Awareness Integration Module (BAIM) between the encoder and decoder stages to support efficient bidirectional information flow across scales. This design lets the network combine frequency-domain information with long-range spatial modeling while keeping computational complexity linear.

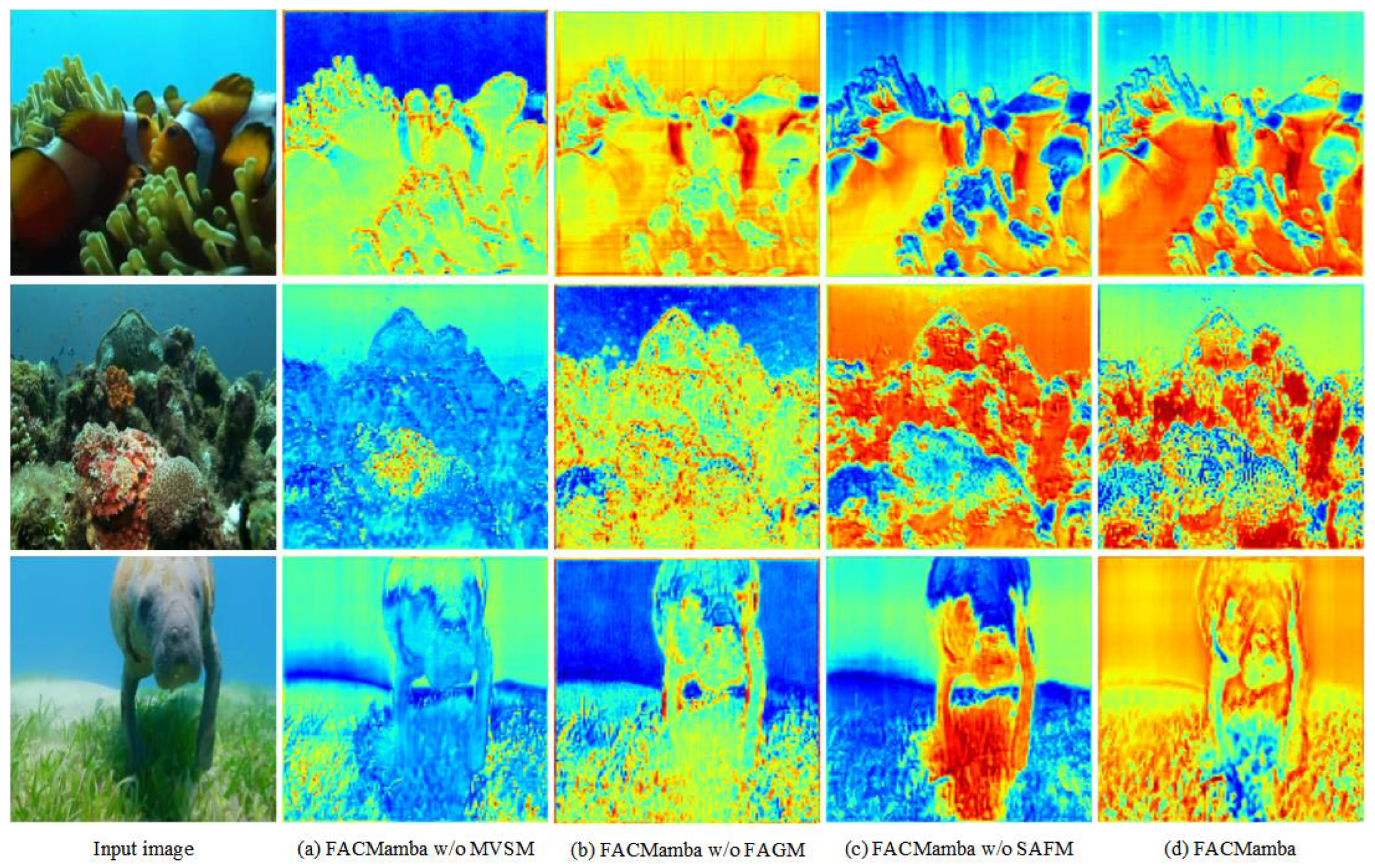

- To cope with the inherent challenges of underwater image degradation, the proposed BAIM incorporates three submodules: the Multi-Directional Vision State-Space Module (MVSM) for long-range spatial interaction, the Frequency-Aware Guidance Module (FAGM) for high-frequency detail restoration, and the Structure-Aware Fusion Module (SAFM) for adaptive integration of global and local features. This comprehensive integration enhances the model’s perceptual quality and robustness in diverse underwater scenarios.

- Comprehensive evaluations on multiple UIE benchmarks show that FACMamba delivers performance comparable to state-of-the-art methods while maintaining markedly lower computational complexity.
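The frequency-domain idea behind the contributions above can be illustrated with a generic Fourier split of an image into low- and high-frequency parts. This is only a minimal sketch under stated assumptions: it is not the FAGM itself, whose internals are not described in this text, and the function name `split_frequency_bands` and the parameter `cutoff_ratio` are hypothetical names chosen for illustration.

```python
import numpy as np


def split_frequency_bands(image, cutoff_ratio=0.25):
    """Split a 2-D array into low- and high-frequency parts.

    A circular low-pass mask is applied to the centered 2-D spectrum;
    the high-frequency part uses the complementary mask. `cutoff_ratio`
    (hypothetical) sets the mask radius as a fraction of half the
    shorter image side.
    """
    h, w = image.shape
    # Centered spectrum: the zero-frequency (DC) term sits at (h // 2, w // 2).
    spectrum = np.fft.fftshift(np.fft.fft2(image))

    # Distance of every frequency bin from the DC term.
    yy, xx = np.ogrid[:h, :w]
    radius = np.hypot(yy - h // 2, xx - w // 2)
    low_mask = radius <= cutoff_ratio * min(h, w) / 2

    # Invert each masked spectrum back to the spatial domain.
    low = np.fft.ifft2(np.fft.ifftshift(spectrum * low_mask)).real
    high = np.fft.ifft2(np.fft.ifftshift(spectrum * ~low_mask)).real
    return low, high


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((16, 16))
    low, high = split_frequency_bands(img)
    # The two masks partition the spectrum, so the bands sum to the input.
    print(np.allclose(low + high, img))
```

Because the two masks are complementary, the bands always sum back to the input, so a network could in principle process the high-frequency part separately without losing information. How FACMamba actually uses the spectrum may differ from this split.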

2. Related Work

2.1. Underwater Image Enhancement

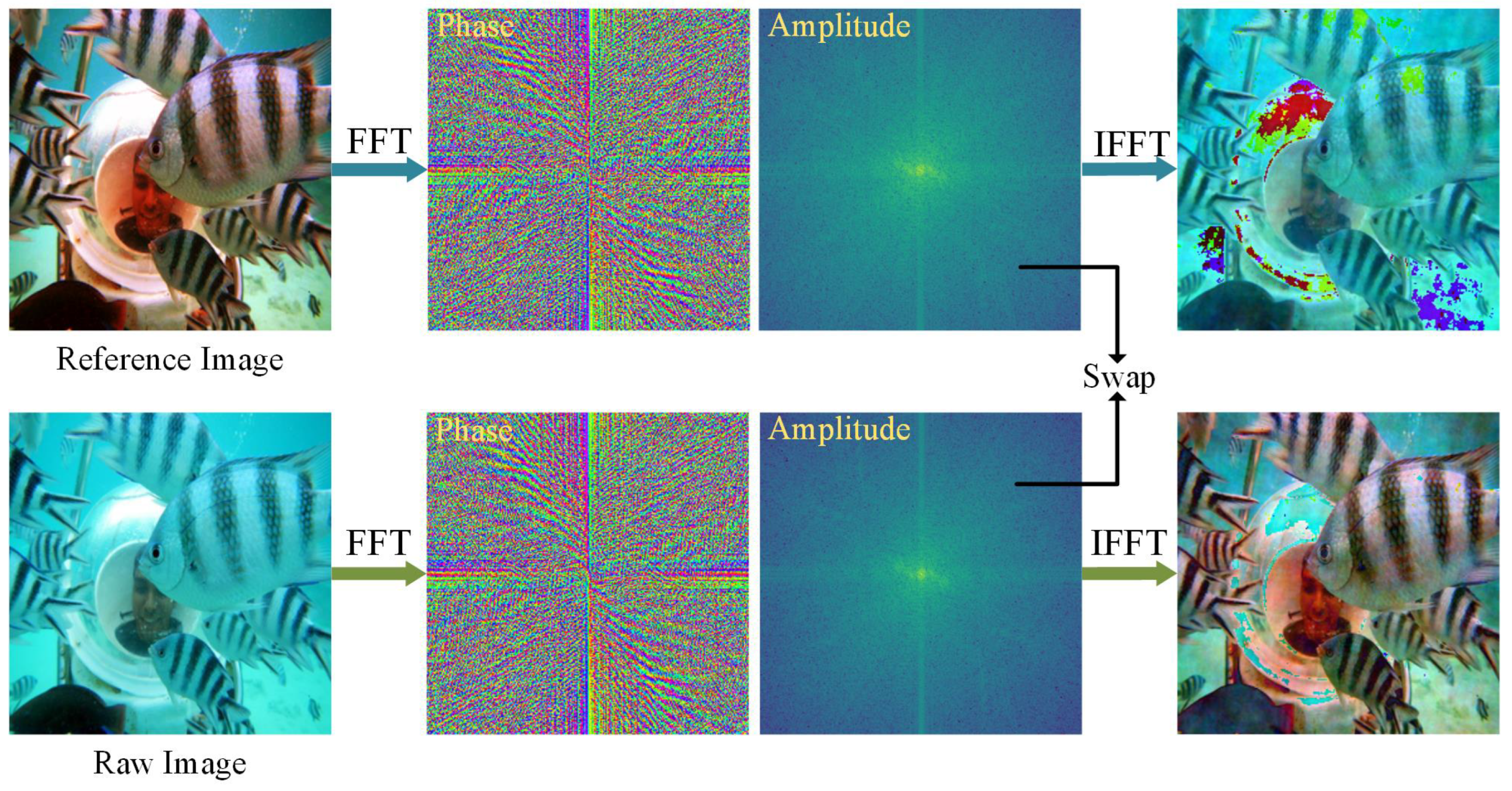

2.2. Fourier Transform

3. Proposed Method

3.1. Architecture

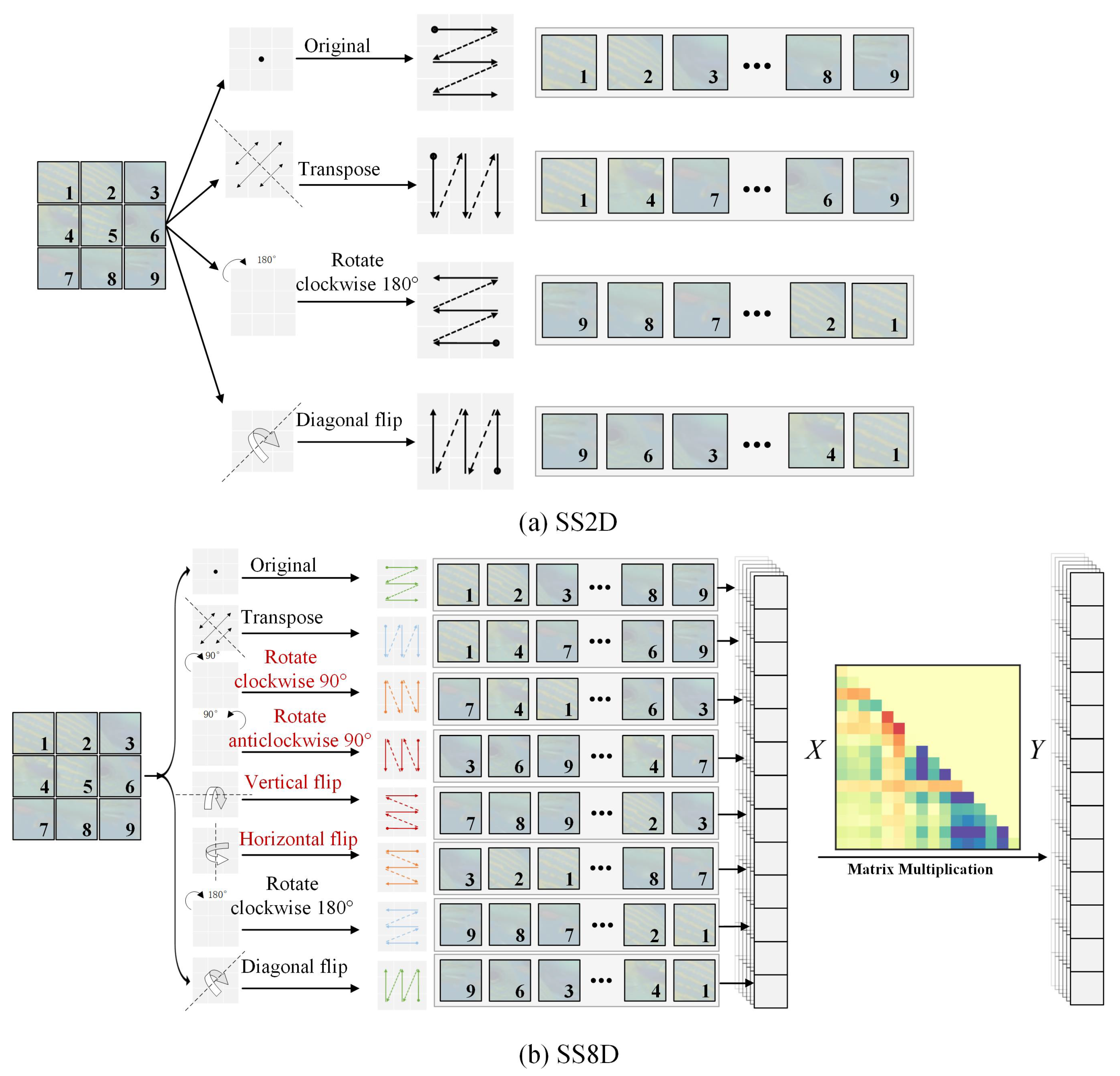

3.2. Multi-Directional Vision State-Space Module

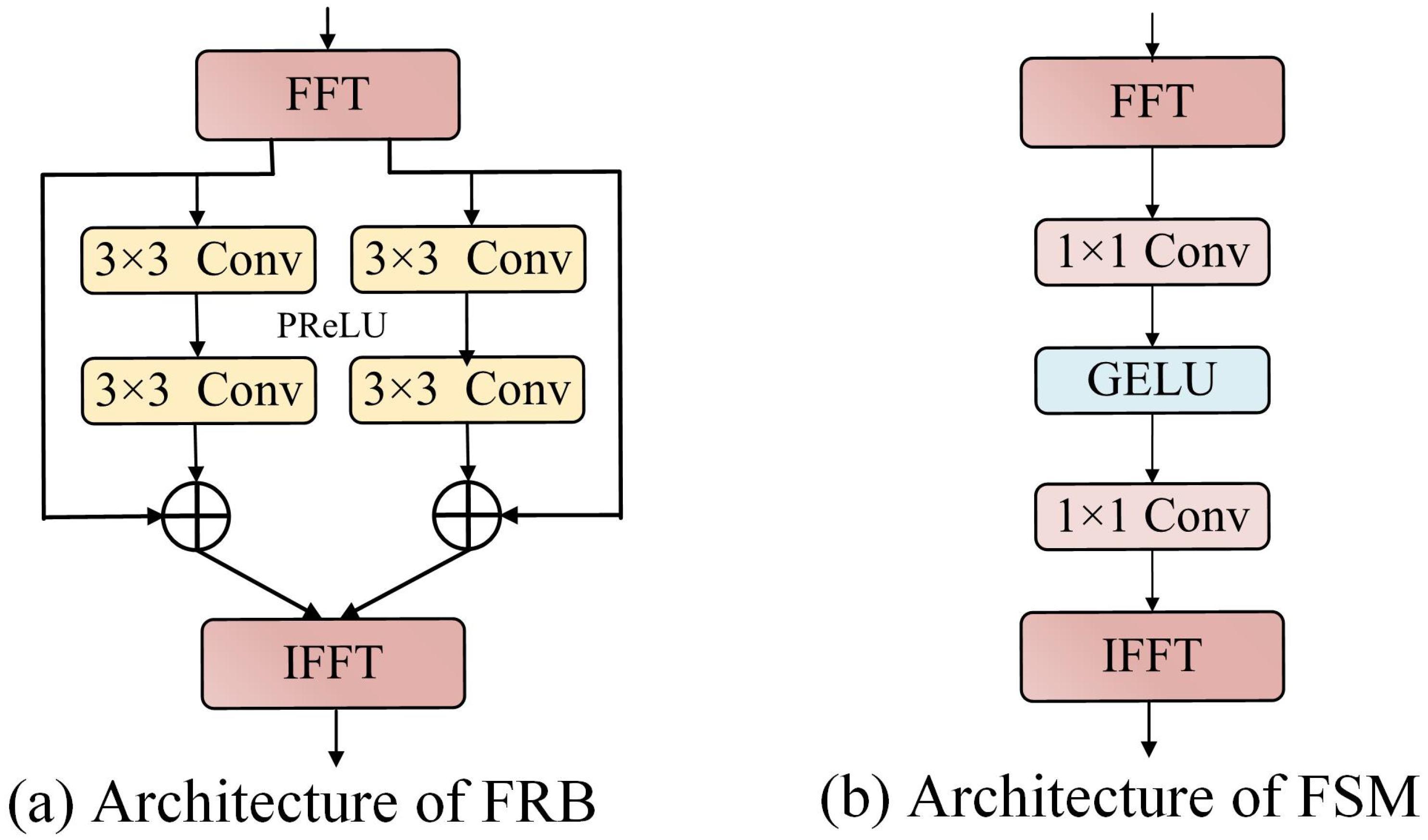

3.3. Frequency-Aware Guidance Module

3.4. Structure-Aware Fusion Module

3.5. Loss Function

4. Experiments

4.1. Datasets

4.2. Implementation Details

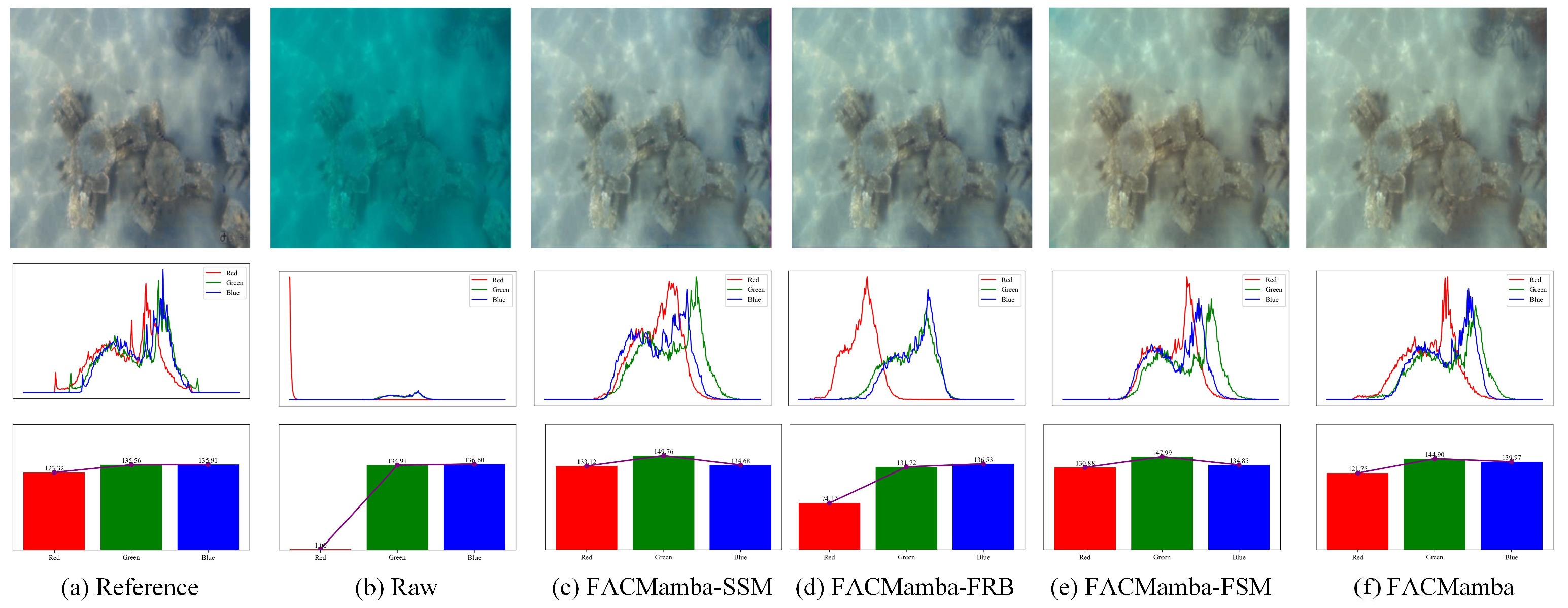

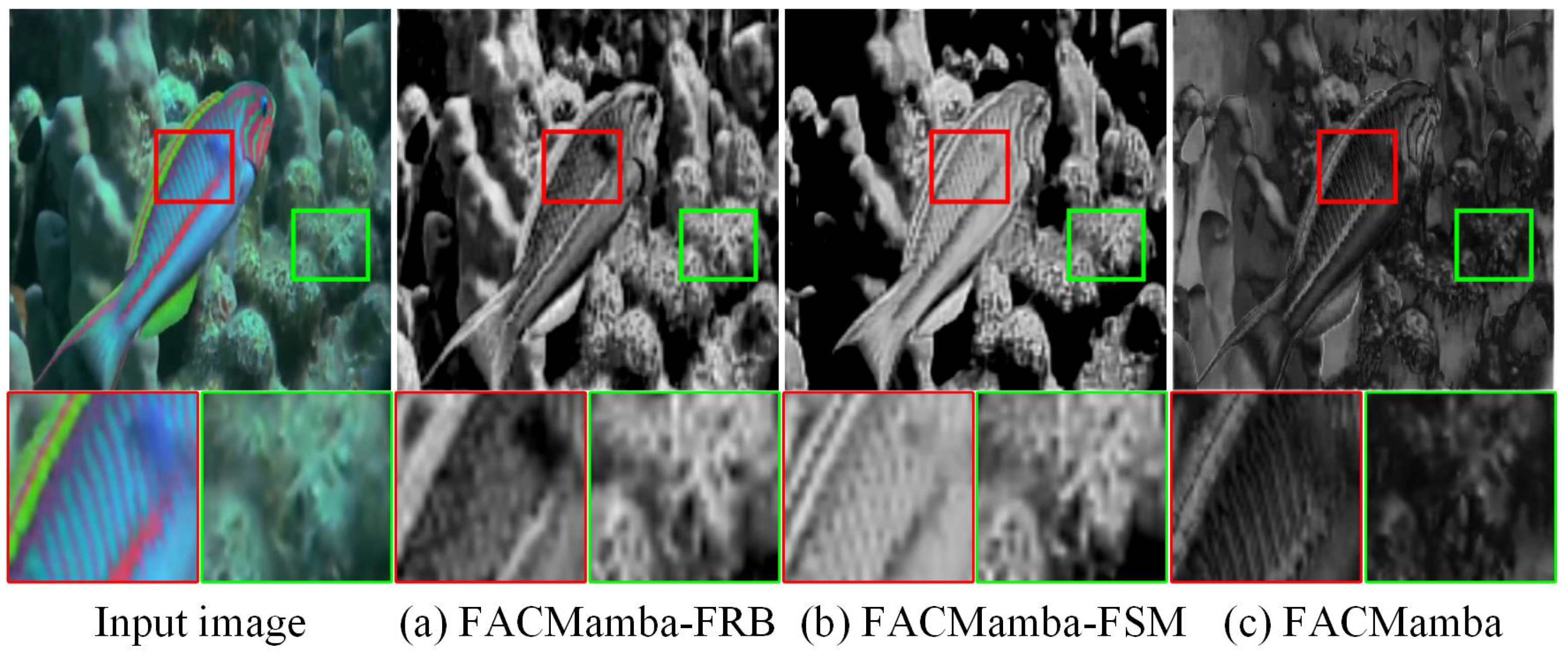

4.3. Model Analysis

4.4. Ablation Study

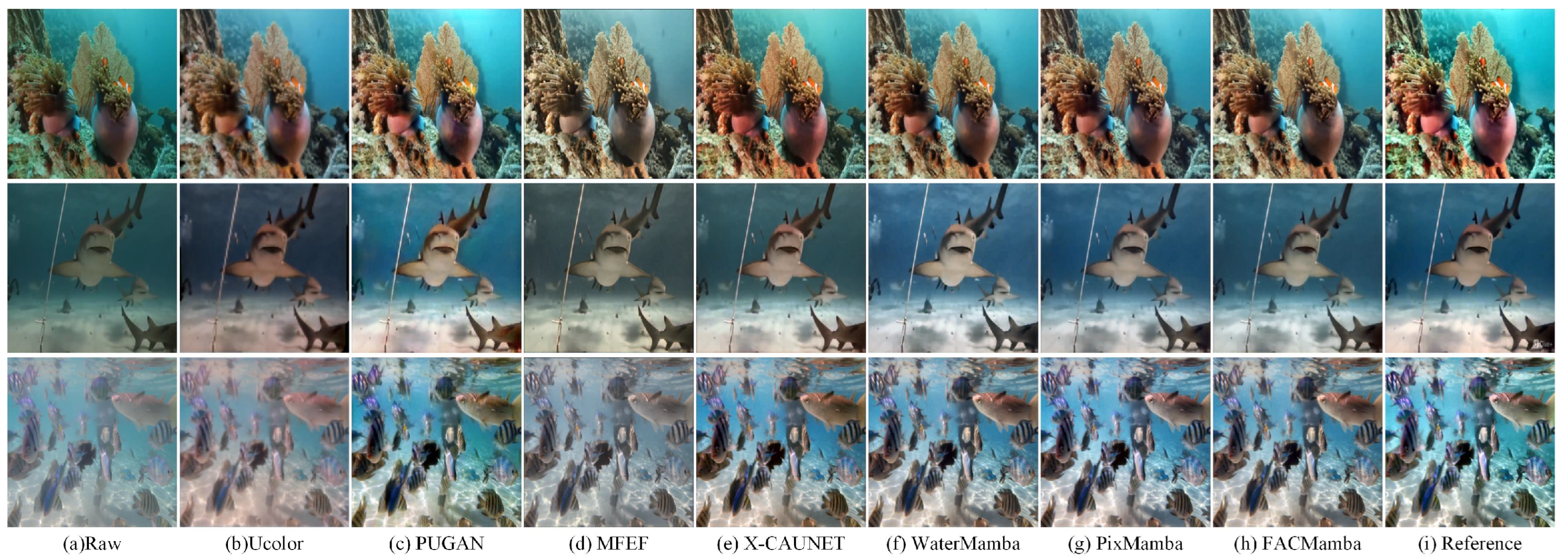

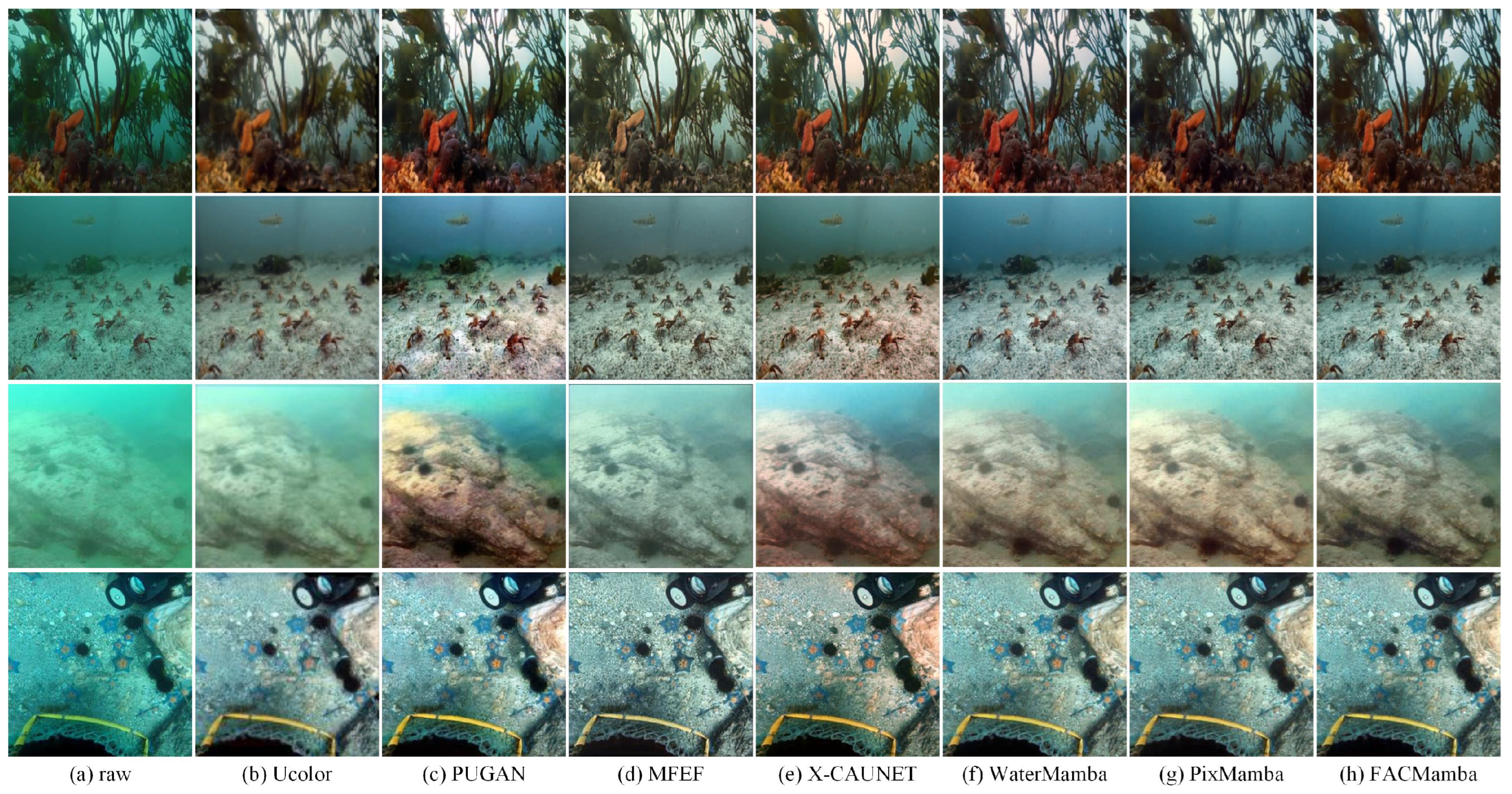

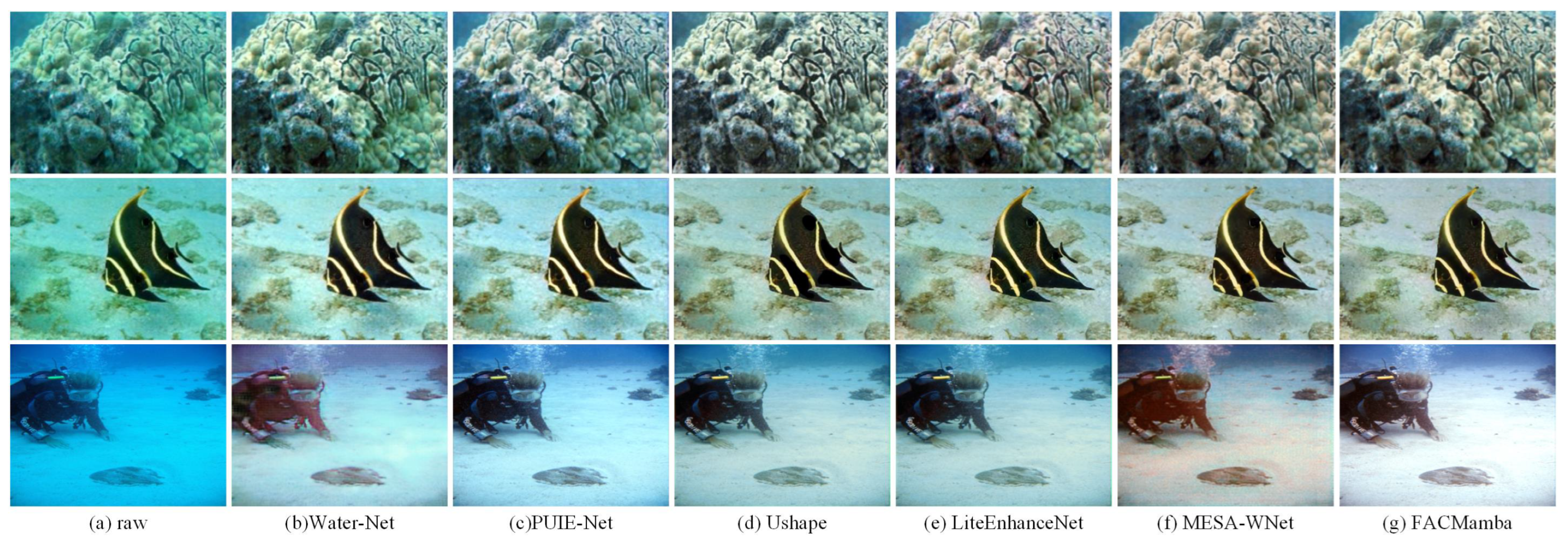

4.5. Comparison with SOTA Methods

4.6. Model Efficiency

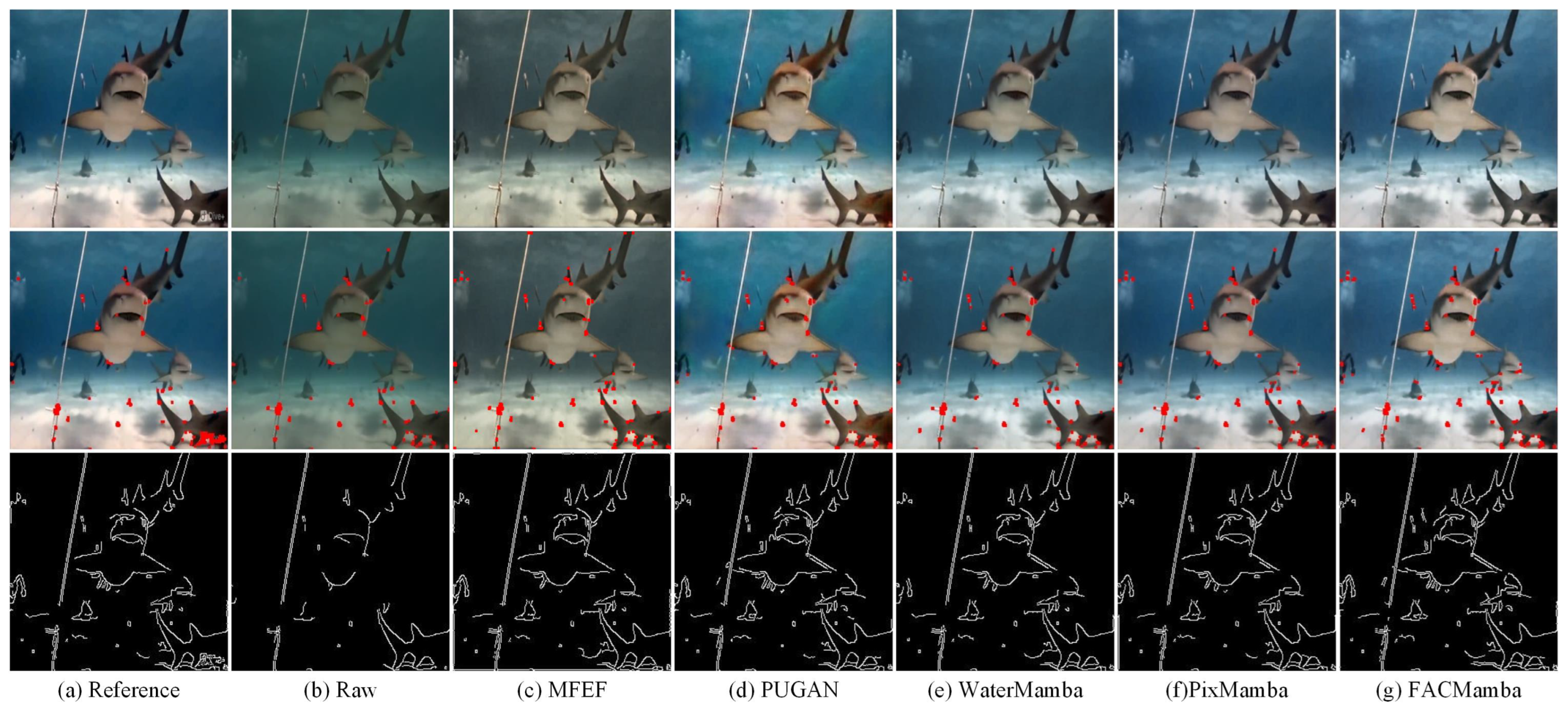

4.7. Applications

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater Image Enhancement via Medium Transmission-Guided Multi-Color Space Embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

2. Peng, L.; Zhu, C.; Bian, L. U-Shape Transformer for Underwater Image Enhancement. IEEE Trans. Image Process. 2023, 32, 3066–3079.

3. Han, G.; Wang, M.; Zhu, H.; Lin, C. UIEGAN: Adversarial Learning-Based Photorealistic Image Enhancement for Intelligent Underwater Environment Perception. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5611514.

4. Tao, Y.; Tang, J.; Zhao, X.; Zhou, C.; Wang, C.; Zhao, Z. Multi-scale network with attention mechanism for underwater image enhancement. Neurocomputing 2024, 595, 127926.

5. Chen, W.; Lei, Y.; Luo, S.; Zhou, Z.; Li, M.; Pun, C.M. Uwformer: Underwater image enhancement via a semi-supervised multi-scale transformer. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–8.

6. Fan, J.; Xu, J.; Zhou, J.; Meng, D.; Lin, Y. See Through Water: Heuristic Modeling Toward Color Correction for Underwater Image Enhancement. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4039–4054.

7. Zhang, W.; Li, X.; Huang, Y.; Xu, S.; Tang, J.; Hu, H. Underwater image enhancement via frequency and spatial domains fusion. Opt. Lasers Eng. 2025, 186, 108826.

8. Liu, X.; Gu, Z.; Ding, H.; Zhang, M.; Wang, L. Underwater image super-resolution using frequency-domain enhanced attention network. IEEE Access 2024, 12, 6136–6147.

9. Pramanick, A.; Megha, D.; Sur, A. Attention-Based Spatial-Frequency Information Network for Underwater Single Image Super-Resolution. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 3560–3564.

10. Gu, A.; Dao, T. Mamba: Linear-Time Sequence Modeling with Selective State Spaces. In Proceedings of the First Conference on Language Modeling, Philadelphia, PA, USA, 7–10 October 2024.

11. Guo, H.; Li, J.; Dai, T.; Ouyang, Z.; Ren, X.; Xia, S.T. MambaIR: A Simple Baseline for Image Restoration with State-Space Model. arXiv 2024, arXiv:2402.15648.

12. Guan, M.; Xu, H.; Jiang, G.; Yu, M.; Chen, Y.; Luo, T.; Song, Y. WaterMamba: Visual State Space Model for Underwater Image Enhancement. arXiv 2024, arXiv:2405.08419.

13. Lin, W.T.; Lin, Y.X.; Chen, J.W.; Hua, K.L. PixMamba: Leveraging State Space Models in a Dual-Level Architecture for Underwater Image Enhancement. arXiv 2024, arXiv:2406.08444.

14. Dong, C.; Zhao, C.; Cai, W.; Yang, B. O-Mamba: O-shape State-Space Model for Underwater Image Enhancement. arXiv 2024, arXiv:2408.12816.

15. Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

16. Guan, M.; Xu, H.; Jiang, G.; Yu, M.; Chen, Y.; Luo, T.; Zhang, X. DiffWater: Underwater Image Enhancement Based on Conditional Denoising Diffusion Probabilistic Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 2319–2335.

17. Li, C.; Guo, J.; Chen, S.; Tang, Y.; Pang, Y.; Wang, J. Underwater image restoration based on minimum information loss principle and optical properties of underwater imaging. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 1993–1997.

18. Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Montenegro Campos, M.F. Underwater Depth Estimation and Image Restoration Based on Single Images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

19. Wang, Y.; Liu, H.; Chau, L.P. Single Underwater Image Restoration Using Adaptive Attenuation-Curve Prior. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 65, 992–1002.

20. Cong, R.; Yang, W.; Zhang, W.; Li, C.; Guo, C.L.; Huang, Q.; Kwong, S. PUGAN: Physical Model-Guided Underwater Image Enhancement Using GAN with Dual-Discriminators. IEEE Trans. Image Process. 2023, 32, 4472–4485.

21. Qi, Q.; Li, K.; Zheng, H.; Gao, X.; Hou, G.; Sun, K. SGUIE-Net: Semantic Attention Guided Underwater Image Enhancement with Multi-Scale Perception. IEEE Trans. Image Process. 2022, 31, 6816–6830.

22. An, G.; He, A.; Wang, Y.; Guo, J. UWMamba: UnderWater Image Enhancement with State Space Model. IEEE Signal Process. Lett. 2024, 31, 2725–2729.

23. Xiao, Y.; Yuan, Q.; Jiang, K.; Chen, Y.; Zhang, Q.; Lin, C.W. Frequency-Assisted Mamba for Remote Sensing Image Super-Resolution. arXiv 2024, arXiv:2405.04964.

24. Zhang, X.; Su, Q.; Yuan, Z.; Liu, D. An efficient blind color image watermarking algorithm in spatial domain combining discrete Fourier transform. Optik 2020, 219, 165272.

25. Yang, Y.; Soatto, S. FDA: Fourier Domain Adaptation for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4085–4095.

26. Xu, Q.; Zhang, R.; Zhang, Y.; Wang, Y.; Tian, Q. A Fourier-Based Framework for Domain Generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14383–14392.

27. Cheng, Z.; Fan, G.; Zhou, J.; Gan, M.; Chen, C.L.P. FDCE-Net: Underwater Image Enhancement with Embedding Frequency and Dual Color Encoder. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 1728–1744.

28. Walia, J.S.; Venkatraman, S.; LK, P. FUSION: Frequency-guided Underwater Spatial Image recOnstructioN. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025.

29. Zhao, C.; Cai, W.; Dong, C.; Hu, C. Wavelet-based Fourier Information Interaction with Frequency Diffusion Adjustment for Underwater Image Restoration. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 8281–8291.

30. Zhu, Z.; Li, X.; Ma, Q.; Zhai, J.; Hu, H. FDNet: Fourier transform guided dual-channel underwater image enhancement diffusion network. Sci. China Technol. Sci. 2025, 68, 1100403.

31. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Liu, Y. VMamba: Visual State Space Model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

32. Wang, C.; Jiang, J.; Zhong, Z.; Liu, X. Spatial-Frequency Mutual Learning for Face Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 22356–22366.

33. Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-World Underwater Enhancement: Challenges, Benchmarks, and Solutions Under Natural Light. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4861–4875.

34. Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

35. Islam, M.J.; Luo, P.; Sattar, J. Simultaneous Enhancement and Super-Resolution of Underwater Imagery for Improved Visual Perception. arXiv 2020, arXiv:2002.01155.

36. Li, H.; Li, J.; Wang, W. A Fusion Adversarial Underwater Image Enhancement Network with a Public Test Dataset. arXiv 2019, arXiv:1906.06819.

37. Chang, B.; Yuan, G.; Li, J. Mamba-enhanced spectral-attentive wavelet network for underwater image restoration. Eng. Appl. Artif. Intell. 2025, 143, 109999.

38. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

39. Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. arXiv 2017, arXiv:1608.03983.

40. Korhonen, J.; You, J. Peak signal-to-noise ratio revisited: Is simple beautiful? In Proceedings of the 2012 Fourth International Workshop on Quality of Multimedia Experience, Melbourne, Australia, 5–7 July 2012; pp. 37–38.

41. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

42. Panetta, K.; Gao, C.; Agaian, S. Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

43. Liu, L.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Proc. Image Commun. 2014, 29, 856–863.

44. Fu, Z.; Wang, W.; Huang, Y.; Ding, X.; Ma, K.K. Uncertainty Inspired Underwater Image Enhancement. In Computer Vision—ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 465–482.

45. Ren, T.; Xu, H.; Jiang, G.; Yu, M.; Zhang, X.; Wang, B.; Luo, T. Reinforced Swin-Convs Transformer for Simultaneous Underwater Sensing Scene Image Enhancement and Super-resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4209616.

46. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

47. Zhou, J.; Sun, J.; Zhang, W.; Lin, Z. Multi-view underwater image enhancement method via embedded fusion mechanism. Eng. Appl. Artif. Intell. 2023, 121, 105946.

48. Huang, S.; Wang, K.; Liu, H.; Chen, J.; Li, Y. Contrastive Semi-Supervised Learning for Underwater Image Restoration via Reliable Bank. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 18145–18155.

49. Wang, B.; Xu, H.; Jiang, G.; Yu, M.; Ren, T.; Luo, T.; Zhu, Z. UIE-Convformer: Underwater Image Enhancement Based on Convolution and Feature Fusion Transformer. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 1952–1968.

50. Pramanick, A.; Sarma, S.; Sur, A. X-CAUNET: Cross-Color Channel Attention with Underwater Image-Enhancing Transformer. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 3550–3554.

51. Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed Model for Underwater Image Enhancement (Student Abstract). Proc. AAAI Conf. Artif. Intell. 2021, 35, 15853–15854.

52. Wang, Y.; Guo, J.; Gao, H.; Yue, H. UIEC^2-Net: CNN-based underwater image enhancement using two color space. Signal Proc. Image Commun. 2021, 96, 116250.

53. Guo, C.; Wu, R.; Jin, X.; Han, L.; Zhang, W.; Chai, Z.; Li, C. Underwater Ranker: Learn Which Is Better and How to Be Better. Proc. AAAI Conf. Artif. Intell. 2023, 37, 702–709.

54. Zhang, W.; Zhuang, P.; Sun, H.H.; Li, G.; Kwong, S.; Li, C. Underwater Image Enhancement via Minimal Color Loss and Locally Adaptive Contrast Enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

55. Xie, J.; Hou, G.; Wang, G.; Pan, Z. A Variational Framework for Underwater Image Dehazing and Deblurring. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3514–3526.

56. Park, C.W.; Eom, I.K. Underwater image enhancement using adaptive standardization and normalization networks. Eng. Appl. Artif. Intell. 2024, 127, 107445.

57. Zhang, S.; Zhao, S.; An, D.; Li, D.; Zhao, R. LiteEnhanceNet: A lightweight network for real-time single underwater image enhancement. Expert Syst. Appl. 2024, 240, 122546.

58. Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 23.1–23.6.

59. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

| Model | PSNR ↑ | SSIM ↑ | UIQM ↑ | UCIQE ↑ | Params | FLOPs |
|---|---|---|---|---|---|---|
| FACMamba-SSM | 23.45 | 0.918 | 2.977 | 0.550 | 1.92 M | 25.91 G |
| FACMamba-FRB | 23.51 | 0.915 | 3.004 | 0.569 | 8.78 M | 31.48 G |
| FACMamba-FSM | 23.49 | 0.916 | 3.001 | 0.566 | 3.62 M | 25.90 G |
| FACMamba | 23.53 | 0.920 | 3.011 | 0.572 | 3.03 M | 25.91 G |

| Model | PSNR ↑ | SSIM ↑ | UIQM ↑ | UCIQE ↑ | Params | FLOPs |
|---|---|---|---|---|---|---|
| FACMamba w/o MVSM | 22.94 | 0.899 | 2.942 | 0.542 | 0.56 M | 24.24 G |
| FACMamba w/o FAGM | 23.44 | 0.915 | 2.988 | 0.567 | 3.01 M | 25.90 G |
| FACMamba w/o SAFM | 23.32 | 0.903 | 2.983 | 0.554 | 2.63 M | 4.44 G |
| FACMamba | 23.53 | 0.920 | 3.011 | 0.572 | 3.03 M | 25.91 G |
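To read the ablation table at a glance, the per-module contribution can be expressed as the metric drop observed when each module is removed. The short script below is only an illustrative calculation on the values reported in the table; the helper name `metric_drops` is hypothetical.

```python
# Values copied from the ablation table (quality metrics only).
FULL_MODEL = {"PSNR": 23.53, "SSIM": 0.920, "UIQM": 3.011, "UCIQE": 0.572}
ABLATIONS = {
    "w/o MVSM": {"PSNR": 22.94, "SSIM": 0.899, "UIQM": 2.942, "UCIQE": 0.542},
    "w/o FAGM": {"PSNR": 23.44, "SSIM": 0.915, "UIQM": 2.988, "UCIQE": 0.567},
    "w/o SAFM": {"PSNR": 23.32, "SSIM": 0.903, "UIQM": 2.983, "UCIQE": 0.554},
}


def metric_drops(full, ablated):
    """Return full-model score minus ablated score for every metric."""
    return {name: round(full[name] - ablated[name], 3) for name in full}


drops = {variant: metric_drops(FULL_MODEL, row) for variant, row in ABLATIONS.items()}

# Variant whose removal costs the most PSNR.
largest_psnr_drop = max(drops, key=lambda variant: drops[variant]["PSNR"])

if __name__ == "__main__":
    for variant, row in drops.items():
        print(variant, row)
    print("largest PSNR drop:", largest_psnr_drop)
```

On these numbers, removing MVSM costs the most PSNR (0.59 dB), followed by SAFM (0.21 dB) and FAGM (0.09 dB).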

| Method | C60 UIQM ↑ | C60 UCIQE ↑ | UCCS UIQM ↑ | UCCS UCIQE ↑ | Params ↓ | FLOPs ↓ |
|---|---|---|---|---|---|---|
| Ucolor [1] | 2.482 | 0.553 | 3.019 | 0.550 | 157.4 M | 34.68 G |
| PUIE-Net [44] | 2.521 | 0.558 | 3.003 | 0.536 | 1.41 M | 30.09 G |
| URSCT [45] | 2.642 | 0.543 | 2.947 | 0.544 | 11.41 M | 18.11 G |
| Restormer [46] | 2.688 | 0.572 | 2.981 | 0.542 | 26.10 M | 140.99 G |
| PUGAN [20] | 2.652 | 0.566 | 2.977 | 0.536 | 95.66 M | 72.05 G |
| MFEF [47] | 2.652 | 0.566 | 2.977 | 0.556 | 61.86 M | 26.52 G |
| Semi-UIR [48] | 2.667 | 0.574 | 3.079 | 0.554 | 1.65 M | 36.44 G |
| UIE-Convformer [49] | 2.684 | 0.572 | 2.946 | 0.555 | 25.9 M | 36.9 G |
| X-CAUNET [50] | 2.683 | 0.564 | 2.922 | 0.541 | 31.78 M | 261.48 G |
| WaterMamba [12] | 2.853 | 0.582 | 3.057 | 0.550 | 3.69 M | 7.53 G |
| PixMamba [13] | 2.868 | 0.586 | 3.053 | 0.561 | 8.68 M | 7.60 G |
| FACMamba (Ours) | 2.690 | 0.570 | 3.074 | 0.597 | 3.03 M | 25.91 G |

| Method | T90 PSNR ↑ | T90 SSIM ↑ | T90 MSE ↓ | T90 UIQM ↑ | T90 UCIQE ↑ |
|---|---|---|---|---|---|
| Ucolor [1] | 21.093 | 0.872 | 0.096 | 3.049 | 0.555 |
| Shallow-UWnet [51] | 18.278 | 0.855 | 0.131 | 2.942 | 0.544 |
| UIEC^2-Net [52] | 22.958 | 0.907 | 0.078 | 2.999 | 0.599 |
| PUIE-Net [44] | 21.382 | 0.882 | 0.093 | 3.021 | 0.566 |
| NU2Net [53] | 23.061 | 0.923 | 0.086 | 2.936 | 0.587 |
| WaterMamba [12] | 24.715 | 0.931 | - | - | - |
| PixMamba [13] | 23.587 | 0.921 | 0.061 | 3.048 | 0.617 |
| FACMamba (Ours) | 23.727 | 0.938 | 0.060 | 3.074 | 0.602 |
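For readers unfamiliar with the full-reference metrics in this table, the sketch below shows the standard definitions of MSE and PSNR on a toy pair of pixel lists. It assumes intensities normalized to [0, 1] (`max_value=1.0`); the scaling behind the MSE column above is not stated in this text, so the snippet illustrates the definitions only and does not reproduce the reported values.

```python
import math


def mse(reference, enhanced):
    """Mean squared error between two equally long pixel sequences."""
    if len(reference) != len(enhanced) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)


def psnr(reference, enhanced, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    error = mse(reference, enhanced)
    if error == 0:
        return math.inf  # identical inputs
    return 10 * math.log10(max_value ** 2 / error)


if __name__ == "__main__":
    # Toy data, not taken from any benchmark.
    reference = [0.0, 0.5, 1.0, 0.25]
    enhanced = [0.1, 0.5, 0.9, 0.25]
    print(f"MSE  = {mse(reference, enhanced):.4f}")
    print(f"PSNR = {psnr(reference, enhanced):.2f} dB")
```

Higher PSNR and lower MSE both indicate a result closer to the reference, which is why the table marks them with ↑ and ↓ respectively.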

| Method | EUVP UIQM ↑ | EUVP UCIQE ↑ | EUVP NIQE ↓ | UFO-120 UIQM ↑ | UFO-120 UCIQE ↑ | UFO-120 NIQE ↓ | U45 UIQM ↑ | U45 UCIQE ↑ | U45 NIQE ↓ |
|---|---|---|---|---|---|---|---|---|---|
| FUnIE-GAN [34] | 2.763 | 0.588 | 4.323 | 2.755 | 0.601 | 4.376 | 4.981 | 0.534 | 4.981 |
| Water-Net [15] | 3.079 | 0.587 | 4.639 | 3.023 | 0.592 | 4.423 | 3.125 | 0.567 | 6.048 |
| PUIE-Net [44] | 3.039 | 0.588 | 4.523 | 3.015 | 0.601 | 4.367 | 3.189 | 0.566 | 4.107 |
| MLLE [54] | 2.229 | 0.611 | 4.636 | 2.309 | 0.621 | 4.628 | 2.484 | 0.597 | 6.352 |
| UNTV [55] | 2.497 | 0.619 | 5.307 | 2.523 | 0.621 | 5.174 | 1.766 | 0.627 | 8.939 |
| U-shape [2] | 2.947 | 0.574 | 4.478 | 2.898 | 0.575 | 4.387 | 3.104 | 0.553 | 4.569 |
| ASNet [56] | 3.064 | 0.616 | 4.579 | 3.015 | 0.618 | 4.473 | 3.217 | 0.603 | 6.165 |
| LiteEnhanceNet [57] | 2.957 | 0.620 | 4.593 | 2.954 | 0.622 | 4.436 | 3.171 | 0.580 | 4.064 |
| MESA-WNet [37] | 3.057 | 0.622 | 4.228 | 3.032 | 0.623 | 4.027 | 3.238 | 0.604 | 4.048 |
| FACMamba (Ours) | 3.090 | 0.607 | 4.387 | 3.057 | 0.612 | 4.365 | 3.260 | 0.593 | 4.004 |

| Index | Water-Net | PUIE-Net | U-Shape | LiteEnhanceNet | MESA-WNet | WaterMamba | PixMamba | FACMamba (Ours) |
|---|---|---|---|---|---|---|---|---|
| Category | CNN-Based | CNN-Based | CNN-Based | CNN-Based | Mamba-Based | Mamba-Based | Mamba-Based | Mamba-Based |
| Params (M) | 24.81 | 1.41 | 31.59 | 0.01 | 26.87 | 3.69 | 8.68 | 3.03 |
| FLOPs (G) | 193.7 | 30.09 | 26.10 | 0.64 | 26.38 | 7.53 | 7.60 | 25.91 |
| Inference Time (s) | 0.82245 | 4.3551 | 1.5238 | 0.7608 | 2.9241 | 2.2382 | 2.7109 | 2.2674 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, L.; Shen, K.; Sun, H.; Cheng, X.; Zhu, J.; Wang, B. FACMamba: Frequency-Aware Coupled State Space Modeling for Underwater Image Enhancement. J. Mar. Sci. Eng. 2025, 13, 2258. https://doi.org/10.3390/jmse13122258
