Abstract

Underwater debris poses a significant threat to marine ecosystems, fisheries, and the tourism industry, necessitating the development of automated vision-based detection systems. Although recent studies have sought to enhance detection performance through underwater image enhancement, improvements in visual quality do not necessarily translate into higher detection accuracy and may, in some cases, degrade performance. To address this discrepancy between perceptual quality and detection reliability, we propose DiCAF, a dual-input co-attention fusion network built upon the latest You Only Look Once v11 detector. The proposed architecture processes both original and enhanced images in parallel and fuses their complementary features through a co-attention module, thereby improving detection stability and consistency. To mitigate high-frequency noise amplified during the enhancement process, a lightweight Gaussian filter is applied as a post-processing step, enhancing robustness against speckle noise commonly introduced by suspended particles in underwater environments. Furthermore, DiCAF incorporates a non-maximum suppression (NMS)-based ensemble that integrates detection outputs from three branches—original, enhanced, and fused—enabling complementary detection of objects missed by individual models and maximizing overall detection performance. Experimental results demonstrate that the proposed single-model DiCAF with Gaussian post-processing achieves an AP@0.5 of 0.87 and an AP@0.5:0.95 of 0.71 on a marine trash dataset. With the NMS-based ensemble, performance improves to 0.91 and 0.75, respectively. Under artificially injected speckle noise conditions, the proposed method maintains superior robustness, achieving an AP@0.5 of 0.62 and consistently outperforming conventional enhancement-based models.

1. Introduction

Underwater debris has become a critical global environmental concern, threatening not only marine ecosystems but also the productivity of fisheries and the sustainability of tourism resources [1]. Such debris—including plastic waste, metal fragments, glass, and discarded fishing nets—is frequently ingested by marine organisms or accumulates on the seabed. Among these, plastic waste is particularly hazardous because it gradually degrades into microplastics, which enter the biological food chain and ultimately pose risks to human health [2]. Consequently, there is an increasing need for automated technologies capable of detecting and monitoring underwater debris.

Conventional approaches to underwater debris detection have primarily relied on manual inspection by divers or on the post-analysis of video footage. These methods are time-consuming, labor-intensive, and difficult to scale for large or repeated surveys. Moreover, the dynamic nature of marine environments—characterized by variations in depth, current, and visibility—poses challenges for operator safety and substantially increases operational costs as the monitoring area expands. To overcome these limitations, recent studies have explored the integration of deep learning–based object detection with autonomous underwater vehicles (AUVs), facilitating real-time and scalable monitoring solutions [3,4]. Among the various algorithms developed, the You Only Look Once (YOLO) family of object detectors [5,6,7] has gained wide adoption for underwater visual detection tasks owing to its favorable balance between detection speed and accuracy [8,9,10].

However, real-world underwater environments pose substantially greater challenges than conventional datasets, including limited visibility, severe color distortion, and noise from suspended particles. These factors significantly impair the performance of general-purpose object detectors, which are often sensitive to low-light conditions, turbidity, and color attenuation. A promising strategy to alleviate these issues is to enhance the visual quality of underwater images prior to detection. Both physics-based restoration methods and learning-based enhancement models have demonstrated effectiveness in correcting common underwater artifacts such as color shifts, low contrast, and blurring [11,12]. Nevertheless, an important limitation is that image enhancement does not always translate into improved detection accuracy [13]. In many cases, enhanced images exaggerate background details or distort object boundaries, thereby hindering the internal feature learning of detectors.

This structural mismatch between image enhancement and object detection constitutes a major bottleneck in developing reliable underwater detection systems. To address this issue, a more integrated framework that bridges these two stages is required. In this study, we propose DiCAF, a dual-input co-attention fusion network built upon the latest YOLOv11 detector. The DiCAF framework simultaneously processes both the original and enhanced images, fusing their features through a co-attention mechanism to perform joint object detection. In parallel, two additional YOLOv11 detectors operate independently on the original and enhanced images, respectively. Consequently, three independent detection outputs are obtained: one from the DiCAF-fused branch and two from the single-input branches. These outputs are subsequently combined using a non-maximum suppression (NMS)-based ensemble strategy. This multi-branch fusion compensates for missed detections in individual branches, thereby enhancing the overall robustness and accuracy of underwater object detection.

Moreover, since image enhancement can amplify high-frequency noise caused by suspended particles or debris—potentially increasing false detections—a low-intensity Gaussian filter is applied as a post-processing step immediately after enhancement within the DiCAF framework. This filtering step suppresses noise while preserving object contours, resulting in more stable feature extraction and fewer false positives, as demonstrated in our experiments. The proposed ensemble framework also supports flexible deployment strategies: the single DiCAF model can be employed for real-time applications where inference speed is critical, whereas the full NMS-based ensemble of all three branches is suitable for scenarios that prioritize detection accuracy.

The main contributions of this work are summarized as follows:

- A dual-backbone architecture based on YOLOv11 is designed, incorporating a co-attention fusion module to effectively integrate complementary features from the original and enhanced images, thereby improving robustness to the structural variability of underwater scenes.

- A lightweight Gaussian filter is introduced as a post-processing step to suppress high-frequency noise amplified during image enhancement, thereby reducing false detections caused by speckle noise from suspended particles.

- An NMS-based ensemble strategy is proposed to integrate detection outputs from three independent branches—DiCAF-fused, original-only, and enhanced-only—achieving superior detection performance compared to any single branch.

2. Related Works

2.1. Underwater Image Enhancement

Underwater image enhancement techniques can be broadly classified into physics-based and learning-based approaches. Early research primarily focused on mathematically modeling underwater light absorption and scattering processes to restore color balance and contrast. For example, Song et al. [14] integrated the dark channel prior method with guided filtering to mitigate color overcorrection in underwater images, whereas Zhang et al. [15] achieved stable restoration performance under varying conditions using a wavelet-based weighted fusion strategy. However, physics-based methods are highly sensitive to environmental parameters such as water quality, illumination, and visibility, often leading to unstable parameter estimation and degradation in restoration quality.

Recent advancements have increasingly leveraged deep learning–based techniques to overcome the limitations of physics-based approaches. Zhang et al. [16] integrated cycle-consistent generative adversarial network (CycleGAN) with physical modeling, while Cong et al. [17] proposed a GAN-based enhancement guided by an image formation model, combining physical parameter estimation with adversarial learning to improve visual quality and scene adaptability. Wang et al. [18] further advanced this approach by incorporating transformer modules into a U-Net-based feature extractor, achieving generalized restoration under more complex distortion conditions. Although these learning-based methods show notable improvements in visual quality compared to traditional techniques, most studies focus primarily on visual evaluation metrics and overlook the impact of enhancement on downstream recognition tasks, such as object detection. Enhancement may inadvertently sharpen background regions or amplify high-frequency noise from suspended particles, potentially disrupting the feature extraction process of detectors.

2.2. Object Detection in Underwater Environment

Deep learning–based object detectors are typically categorized into two-stage and one-stage methods based on their architecture. Although two-stage detectors generally achieve higher accuracy, their computational complexity limits real-time applicability in underwater detection scenarios [19]. In contrast, one-stage detectors, such as the YOLO family, provide a favorable trade-off between inference speed and detection accuracy, making them widely adopted for underwater debris detection. Zhou et al. [20] introduced YOLOTrashCan, an enhanced version of YOLOv4 with a lightweight backbone, improving both detection accuracy and efficiency. More recent studies have focused on further enhancing YOLO performance underwater by incorporating multi-scale feature encoding [21] and compressing the backbone network [22].

Nevertheless, real-world underwater conditions—such as increased turbidity, uneven illumination, and color distortion—continue to obscure foreground-background distinctions and compromise detector reliability. To address these challenges, recent studies have investigated integrated frameworks that combine underwater image enhancement with object detection. For instance, Lucas et al. [23] coupled a U-Net-based enhancement module with YOLO–Neural Architecture Search to improve detection accuracy, while Qiu et al. [24] proposed CFEC-YOLOv7, which integrates blur removal with YOLOv7 to maintain high performance under complex blur and turbidity. Liu et al. [25] jointly trained an object detector with a modified Koschmieder model–based enhancement unit, achieving enhanced detection performance across diverse color distortion scenarios.

Despite these advances, current approaches continue to face fundamental limitations arising from unstable feature distributions and increased background interference introduced by image enhancement. While enhancement can improve detection under certain conditions, it may also lead to false positives or missed detections in other scenarios. Consequently, achieving robust and stable underwater object detection remains an open challenge.

2.3. Research Gap and Motivation

Existing underwater image enhancement methods effectively improve visual quality but can compromise the performance of downstream object detection by amplifying high-frequency background noise and destabilizing feature distributions. Furthermore, most detectors rely exclusively on either the original or the enhanced image, limiting their ability to robustly handle the complex and variable underwater environment, which is characterized by turbidity, color distortion, and particulate noise.

Motivated by these challenges, this work proposes a structural solution that simultaneously leverages the complementary features of original and enhanced images, suppresses enhancement-induced noise through post-processing, and robustly integrates multi-branch detection results. Specifically, we design DiCAF based on YOLOv11, incorporating a low-intensity Gaussian filter immediately after enhancement. The final detection results are obtained via an NMS-based ensemble that fuses outputs from three independent branches: one from DiCAF processing fused features of the original and enhanced images, and two others from separate YOLOv11 detectors operating independently on the original and enhanced images. This approach aims to overcome the structural limitations of prior methods and achieve reliable underwater object detection in real-world scenarios.

3. Methodology

This section presents the overall architecture of the proposed framework for underwater object detection, along with detailed descriptions of its key components. First, the overall pipeline, which simultaneously utilizes both the original and enhanced images, is introduced. Next, the structure and operating principles of the DiCAF module—responsible for fusing complementary information through a dual-input architecture—are explained. Finally, the low-intensity Gaussian post-processing technique, designed to mitigate high-frequency noise introduced during image enhancement, and the NMS-based ensemble strategy for integrating detection results from the three branches are presented sequentially.

3.1. Overall Framework

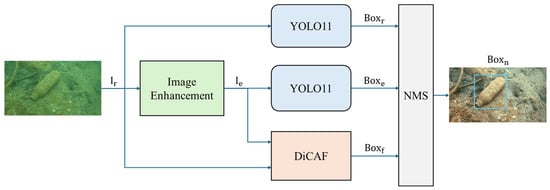

The overall pipeline proposed in this study is illustrated in Figure 1. The input image $I$ is first processed by a deep learning–based image enhancement module to generate the enhanced image $I_e$. Both $I$ and $I_e$ are then independently fed into separate YOLOv11 detectors, producing detection outputs $D_o$ and $D_e$, respectively. Simultaneously, the DiCAF module processes the two inputs in parallel through a dual-backbone architecture to extract multi-scale features. These feature maps are fused via a co-attention mechanism that enables mutual information exchange, generating an additional detection output, $D_f$. Finally, the three detection outputs (original branch $D_o$, enhanced branch $D_e$, and fused branch $D_f$) are integrated using NMS to produce the final detection results. This design effectively exploits the complementary information from both original and enhanced images, combining the strengths of each branch to achieve stable and robust underwater object detection.

Figure 1.

Overall architecture of the proposed underwater object detection framework. The blue box indicates the final detection result obtained by integrating the original-branch, enhanced-branch, and fused-branch outputs $D_o$, $D_e$, and $D_f$ using NMS.

3.2. Image Enhancement

Underwater images experience degradation that differs from atmospheric conditions due to the absorption and scattering properties of light. Specifically, wavelength-dependent absorption rapidly attenuates the red component even at shallow depths, while green and blue components are relatively preserved, resulting in cyan color casts and color distortions. Additionally, suspended particles and heterogeneous media cause forward and backward scattering, reducing image contrast and blurring object contours [26,27].

To address these degradation effects, this study adopts the underwater image enhancement method proposed by Chen et al. [28] as a preprocessing step. This method models the underwater image formation process physically and estimates the relevant parameters with a convolutional neural network (CNN)-based module. The underwater image formation can be expressed as

$$I(x) = J(x)\,t(x) + B\,\bigl(1 - t(x)\bigr), \tag{1}$$

where $I$ is the observed underwater image, $J$ is the restored ideal image, $t$ is the transmission map representing the proportion of light passing through the medium, and $B$ denotes the backscatter light caused by the underwater environment. Equation (1) describes how the observed underwater image is composed of the direct object reflection attenuated by the medium and the global background light caused by particulate scattering. Rearranging for the ideal image $J$, we obtain

$$J(x) = \bigl(I(x) - B\bigr)\,t(x)^{-1} + B. \tag{2}$$

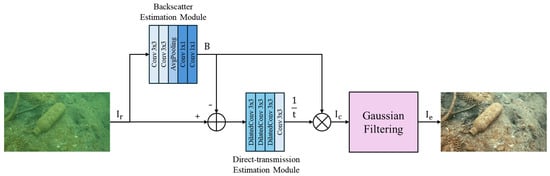

Since the transmission map $t$ and the backscatter light $B$ cannot be directly measured, they are estimated using a CNN-based approach. The complete restoration pipeline is illustrated in Figure 2 and consists of three components: the backscatter estimation module, the direct-transmission estimation module, and a Gaussian filtering step to further suppress high-frequency noise.

Figure 2.

Architecture of the underwater image enhancement module.

The backscatter estimation module predicts the underwater background light $B$ from the input image $I$ using convolutional operations and PReLU activation functions within the CNN, enabling reliable learning of global illumination components. The direct-transmission estimation module then takes the concatenation of $I$ and the estimated $B$ as input, predicting the inverse of the transmission map, $t^{-1}$. Dilated convolutions are employed to model scattering effects across multiple spatial scales, while the multiplicative restoration framework enhances training stability. The enhanced image $\hat{J}$ is subsequently computed using Equation (2) with the estimated parameters.
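As a minimal sketch of this restoration step, assuming the standard simplified formation model $I = J\,t + B\,(1 - t)$ and assuming that the two estimation networks have already produced a backscatter estimate and an inverse transmission map, the following NumPy snippet inverts the model. The function name `restore`, the array shapes, and the synthetic values are illustrative assumptions and are not taken from the method of Chen et al. [28].

```python
import numpy as np


def restore(observed, backscatter, inv_transmission):
    """Invert I = J * t + B * (1 - t) for J, given estimates of B and 1 / t.

    observed:         H x W x 3 float array in [0, 1] (degraded image I)
    backscatter:      length-3 array or H x W x 3 array (estimated B)
    inv_transmission: H x W x 1 or H x W x 3 array (estimated 1 / t)
    """
    restored = (observed - backscatter) * inv_transmission + backscatter
    # Keep the result in the valid intensity range.
    return np.clip(restored, 0.0, 1.0)


# Synthetic round trip: degrade a known image, then restore it.
rng = np.random.default_rng(0)
clean = rng.uniform(0.2, 0.8, size=(4, 4, 3))   # hypothetical ideal image J
t = np.full((4, 4, 1), 0.6)                     # hypothetical transmission map
B = np.array([0.1, 0.5, 0.6])                   # hypothetical bluish-green backscatter
observed = clean * t + B * (1.0 - t)
recovered = restore(observed, B, 1.0 / t)
```

Working with $t^{-1}$ instead of $t$ turns the restoration into a multiplication, which avoids a division by small transmission values at inference time.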

Although this approach effectively corrects color distortion and low contrast, fine-grained random noise may be amplified due to scattering and inter-pixel color estimation errors. To mitigate this, a Gaussian filtering post-processing step is applied, producing the final enhanced image $I_e$:

$$I_e = G_\sigma * \hat{J}, \tag{3}$$

where $G_\sigma$ is a normalized two-dimensional Gaussian kernel with standard deviation $\sigma$, $*$ denotes two-dimensional convolution, and $\hat{J}$ is the enhanced image obtained from Equation (2). This low-pass filtering suppresses amplified high-frequency noise, stabilizing feature distributions and reducing false positives in subsequent object detection stages.
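The Gaussian post-processing step can be sketched as follows. This is an illustration only: it assumes a kernel truncated at about three standard deviations and reflect padding at the borders, and the value `sigma=1.0` is a hypothetical choice rather than the setting used in the experiments. Because a two-dimensional Gaussian is separable, the filter is applied as a vertical pass followed by a horizontal pass.

```python
import numpy as np


def gaussian_kernel_1d(sigma, radius=None):
    """Normalized 1-D Gaussian kernel, truncated at about 3 * sigma by default."""
    if radius is None:
        radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_smooth(image, sigma):
    """Separable Gaussian blur of an H x W x C image with reflect padding."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    h, w = image.shape[:2]
    padded = np.pad(image, ((r, r), (r, r), (0, 0)), mode="reflect")
    # Vertical pass: weighted sum of row-shifted copies.
    rows = sum(k[i] * padded[i:i + h, :, :] for i in range(len(k)))
    # Horizontal pass: weighted sum of column-shifted copies.
    return sum(k[j] * rows[:, j:j + w, :] for j in range(len(k)))


# Hypothetical noisy enhanced image: constant gray plus random noise.
rng = np.random.default_rng(0)
noisy = 0.5 + 0.1 * rng.standard_normal((32, 32, 3))
smoothed = gaussian_smooth(noisy, sigma=1.0)
```

Since the kernel sums to one, flat regions keep their intensity while pixel-level fluctuations are averaged out.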

3.3. Dual-Input Co-Attention Fusion

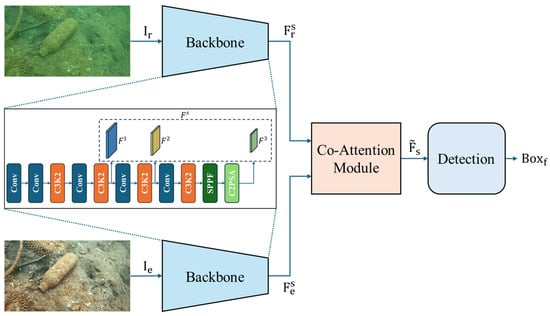

Underwater images exhibit diverse characteristics depending on environmental factors such as illumination, turbidity, and color distortion. The original image generally preserves structural information well, but color distortions and reduced contrast make it difficult to separate objects from the background. In contrast, the enhanced image restores color and object contours more distinctly; however, it may excessively emphasize background regions, potentially confusing the detector. Naively combining these complementary inputs can lead to information loss or increased false detections. To address these challenges, this study proposes a novel DiCAF architecture, which employs dual YOLOv11 backbones operating in parallel to independently extract multi-scale features from each input. The extracted feature maps are then aligned and selectively fused through a co-attention module, enabling mutual information exchange between the original and enhanced features. The overall DiCAF architecture is illustrated in Figure 3.

Figure 3.

Architecture of the proposed DiCAF framework.

Each input image—the original and the enhanced—is processed in parallel through independent YOLOv11-based backbone networks. Both backbones share an identical architecture and extract multi-scale feature maps from their respective inputs. Each backbone consists of four main blocks: Conv, C3K2, spatial pyramid pooling-fast (SPPF), and C2 partial spatial attention (C2PSA). The Conv block adjusts the number of channels and ensures compatibility between features at different resolutions through 1 × 1 and 3 × 3 convolution operations. The C3K2 block is a modified cross stage partial structure that splits the input into two paths: one path is forwarded directly, while the other passes through two bottleneck layers before merging. Internally, the block contains three convolutional paths, and the “K2” denotes the number of bottleneck repetitions. This design minimizes information loss while enabling efficient feature extraction.

The SPPF block integrates information from multiple receptive fields through multi-scale max-pooling operations of varying sizes, generating robust feature representations that are resilient to object scale variations. The C2PSA block combines channel attention and spatial attention to emphasize important object regions while suppressing background interference. Since both inputs originate from the same scene, their resolutions and spatial alignments are consistent. Each backbone extracts feature maps at three resolution levels, denoted as $F_o^{l}$ and $F_e^{l}$ for the original and enhanced inputs, respectively, where $l$ indexes the resolution level. Simple fusion strategies, such as addition or concatenation, do not fully exploit the complementary information between the two inputs. Therefore, each pair of multi-scale feature maps is processed by a co-attention module, which performs mutual reference-based alignment and selective fusion. The architecture of this module is illustrated in Figure 4.
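To illustrate how the SPPF block mentioned above can cover several receptive fields, here is a sketch under the assumption that it follows the commonly used SPPF layout, in which one stride-1 max-pooling of fixed size is applied three times in sequence and all intermediate maps are concatenated. The 1 × 1 convolutions that typically surround the pooling are omitted, and the helper names and the window size `k=5` are illustrative choices.

```python
import numpy as np


def max_pool_same(x, k=5):
    """Stride-1 max pooling over H and W of a C x H x W array (padding with -inf)."""
    r = k // 2
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (r, r), (r, r)),
                    mode="constant", constant_values=-np.inf)
    out = np.full_like(x, -np.inf)
    for dy in range(k):
        for dx in range(k):
            out = np.maximum(out, padded[:, dy:dy + h, dx:dx + w])
    return out


def sppf_pyramid(x, k=5):
    """Concatenate the input with three successively pooled versions of it."""
    y1 = max_pool_same(x, k)    # effective window k
    y2 = max_pool_same(y1, k)   # effective window 2k - 1
    y3 = max_pool_same(y2, k)   # effective window 3k - 2
    return np.concatenate([x, y1, y2, y3], axis=0)


rng = np.random.default_rng(0)
features = rng.standard_normal((2, 12, 12))   # hypothetical C x H x W feature map
pyramid = sppf_pyramid(features)
```

Chaining the same small pooling window reproduces larger windows (5, 9, and 13 for `k=5`) at lower cost than pooling with each large window directly.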

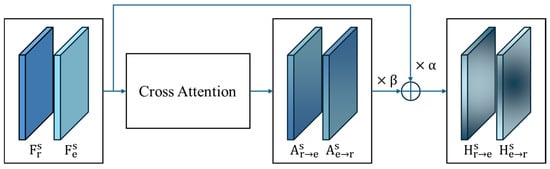

Figure 4.

Structure of the co-attention module, illustrating feature alignment and selective fusion of complementary information between the original and enhanced inputs.

The proposed co-attention module takes the original feature $F_o^{l}$ and the enhanced feature $F_e^{l}$ at scale $l$ as inputs and performs mutual cross-attention to effectively fuse information from both sources. Here, $l$ denotes three feature levels corresponding to 1/8, 1/16, and 1/32 of the input image resolution, which primarily contribute to detecting small, medium, and large objects, respectively. The module employs a single-head cross-attention structure, and no additional normalization layers are applied to avoid feature distribution distortion. The fused features produced by the co-attention module are aligned through a 1 × 1 convolution and then propagated to the standard YOLO neck and head for subsequent detection. The module aims to capture complementary relationships between the two feature maps, enhancing the focus on target objects. Each input feature is first projected into Query (Q), Key (K), and Value (V) representations. The attention outputs are computed as

$$A_{o \to e}^{l} = \mathrm{softmax}\!\left(\frac{Q_o^{l}\,(K_e^{l})^{\top}}{\sqrt{d}}\right) V_e^{l}, \tag{4}$$

$$A_{e \to o}^{l} = \mathrm{softmax}\!\left(\frac{Q_e^{l}\,(K_o^{l})^{\top}}{\sqrt{d}}\right) V_o^{l}. \tag{5}$$

Here, $Q_s^{l} = W_Q(F_s^{l})$, $K_s^{l} = W_K(F_s^{l})$, and $V_s^{l} = W_V(F_s^{l})$ for $s \in \{o, e\}$, where $W_Q$, $W_K$, and $W_V$ denote 1 × 1 convolution operations that transform the input features into Query, Key, and Value tensors, respectively. The variable $d$ represents the dimensionality of the inner product space and acts as a scaling factor to stabilize the softmax computation. Equation (4) identifies the regions in the enhanced feature that the original feature should attend to, while Equation (5) determines how the enhanced feature references the original feature. To prevent attention results from becoming overly biased toward one input, which could cause loss of intrinsic information from the other input, residual connections are applied. This preserves the original features while incorporating attention-enhanced information, producing the fused representations:

$$\hat{F}_o^{l} = F_o^{l} + \alpha\,A_{o \to e}^{l}, \tag{6}$$

$$\hat{F}_e^{l} = F_e^{l} + \beta\,A_{e \to o}^{l}. \tag{7}$$

Here, $\alpha$ and $\beta$ are learnable coefficients that regulate the relative contributions of the original features and the attention outputs. This process allows the original feature to retain its structural information while gaining enhanced color and contrast details, and the enhanced feature to incorporate improved structural stability alongside color information. The two features $\hat{F}_o^{l}$ and $\hat{F}_e^{l}$ are then concatenated along the channel dimension and passed through a 1 × 1 convolution to remove redundancy and preserve the most critical information, producing the fused feature:

$$F_{\mathrm{fused}}^{l} = \mathrm{Conv}_{1 \times 1}\!\bigl(\mathrm{Concat}(\hat{F}_o^{l},\ \hat{F}_e^{l})\bigr). \tag{8}$$

The fused feature $F_{\mathrm{fused}}^{l}$ is subsequently forwarded to the Neck and Head modules of YOLOv11, where it is used to predict object locations and confidence scores.
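The co-attention fusion at a single scale can be sketched in NumPy as below. This is a simplified illustration, not the trained module: it assumes single-head scaled dot-product cross-attention over flattened spatial positions, projection weights shared by both directions, scalar residual coefficients added as `feature + coefficient * attention`, and randomly initialized weights. All names (`conv1x1`, `cross_attention`, `co_attention_fuse`, `params`) are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def conv1x1(x, weight):
    """1 x 1 convolution: C_in x H x W input, C_out x C_in weight."""
    return np.einsum("oc,chw->ohw", weight, x)


def cross_attention(f_query, f_context, w_q, w_k, w_v):
    """Each position of f_query attends to all positions of f_context."""
    _, h, w = f_query.shape
    q = conv1x1(f_query, w_q).reshape(-1, h * w).T     # (HW, d)
    k = conv1x1(f_context, w_k).reshape(-1, h * w).T   # (HW, d)
    v = conv1x1(f_context, w_v).reshape(-1, h * w).T   # (HW, C)
    attn = softmax(q @ k.T / np.sqrt(q.shape[1]))      # (HW, HW), rows sum to 1
    return (attn @ v).T.reshape(-1, h, w)              # (C, H, W)


def co_attention_fuse(f_o, f_e, params, alpha=1.0, beta=1.0):
    w_q, w_k, w_v = params["w_q"], params["w_k"], params["w_v"]
    a_oe = cross_attention(f_o, f_e, w_q, w_k, w_v)    # original attends to enhanced
    a_eo = cross_attention(f_e, f_o, w_q, w_k, w_v)    # enhanced attends to original
    f_o_hat = f_o + alpha * a_oe                       # residual connections
    f_e_hat = f_e + beta * a_eo
    stacked = np.concatenate([f_o_hat, f_e_hat], axis=0)   # (2C, H, W)
    return conv1x1(stacked, params["w_fuse"])               # back to (C, H, W)


rng = np.random.default_rng(0)
C, H, W, d = 8, 4, 4, 4                                # hypothetical sizes
params = {
    "w_q": 0.1 * rng.standard_normal((d, C)),
    "w_k": 0.1 * rng.standard_normal((d, C)),
    "w_v": 0.1 * rng.standard_normal((C, C)),
    "w_fuse": 0.1 * rng.standard_normal((C, 2 * C)),
}
f_o = rng.standard_normal((C, H, W))                   # original-branch feature
f_e = rng.standard_normal((C, H, W))                   # enhanced-branch feature
fused = co_attention_fuse(f_o, f_e, params)
```

With both coefficients set to zero the module reduces to a plain concatenation followed by a 1 × 1 convolution, which makes the contribution of the attention terms easy to isolate.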

3.4. NMS-Based Ensemble Strategy

The proposed DiCAF architecture processes both the original and enhanced images as dual inputs, extracting complementary visual information in parallel and effectively fusing these features through the co-attention module to produce enriched representations. However, relying on a single model may not ensure consistent detection performance under all underwater conditions, where illumination, turbidity, and visibility can vary, causing fluctuations in detection results depending on image quality. To address this, an ensemble strategy based on NMS is employed to integrate predictions from three independent detection branches: the original input branch and the enhanced input branch, both using the YOLOv11 detector, and the DiCAF fusion branch, which performs detection using the fused features from the co-attention module. Each branch independently predicts candidate object bounding boxes from its respective input.

Each branch produces prediction results using a common intersection over union (IoU) threshold $\tau$. The candidate boxes from all three branches are merged into a single set, and NMS is applied to eliminate redundant overlapping predictions, retaining only the highest-confidence detection at each location. This process can be expressed as

$$D_{\mathrm{final}} = \mathrm{NMS}\bigl(D_o \cup D_e \cup D_f,\ \tau\bigr), \tag{9}$$

where $D_o$, $D_e$, and $D_f$ denote the sets of bounding boxes predicted by the original input YOLOv11 detector, the enhanced input YOLOv11 detector, and the DiCAF module, respectively.

This NMS-based ensemble strategy leverages the strengths of all three branches, integrating complementary information to robustly detect objects that may be missed by any single branch. Specifically, objects overlooked under certain conditions by one detector are likely to be recovered by the others, so the combined system can achieve higher detection performance than any individual branch.
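The ensemble step can be illustrated with a small pure-Python sketch. It assumes a single object class, boxes in `(x1, y1, x2, y2)` pixel coordinates, and plain greedy NMS; the function names, the threshold of 0.5, and the example detections are hypothetical and chosen only for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms_ensemble(branches, iou_threshold=0.5):
    """Pool (box, score) detections from all branches and apply greedy NMS."""
    pooled = [det for branch in branches for det in branch]
    pooled.sort(key=lambda det: det[1], reverse=True)   # highest confidence first
    kept = []
    for box, score in pooled:
        # Keep a box only if it does not overlap strongly with a kept box.
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical outputs of the three branches for one image.
d_orig = [((10, 10, 50, 50), 0.80)]
d_enh = [((12, 11, 52, 49), 0.70), ((100, 100, 140, 150), 0.60)]
d_fused = [((11, 10, 51, 50), 0.90)]

final = nms_ensemble([d_orig, d_enh, d_fused], iou_threshold=0.5)
```

In this example the three overlapping boxes collapse to the highest-scoring one from the fused branch, while the object found only by the enhanced branch is retained, which is the complementary behavior the ensemble relies on.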

4. Experimental Results

This section evaluates the performance of the proposed DiCAF-based detection framework under diverse underwater environmental conditions. The framework’s effectiveness is assessed through quantitative comparisons with existing methods. Experiments were conducted on a publicly available marine debris image dataset designed to capture a range of illumination and turbidity conditions commonly encountered in real underwater scenarios. Comparative baselines include conventional pipelines combining underwater image enhancement with simple detector concatenation, single-input YOLOv11 detectors, and recently proposed fusion-based detection architectures.

4.1. Experimental Setup

All experiments were implemented in PyTorch 2.1.2 and executed on an NVIDIA RTX 3060 GPU paired with an Intel Core i7-12700 CPU. Input images were uniformly resized to 640 × 640 pixels. Training was performed with a batch size of 12 for 1000 epochs, using stochastic gradient descent with a momentum of 0.9. To ensure stable convergence during the initial training phase, a warm-up learning rate strategy was applied. Several data augmentation techniques were employed to improve model generalization, including random cropping, horizontal flipping, rotation, brightness adjustment, and mosaic augmentation.
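For readers unfamiliar with warm-up schedules, the idea can be sketched as a linear ramp from a small starting rate to the base learning rate. The exact schedule, the base learning rate, and the warm-up length used in the experiments are not stated here, so the function name and every value below are hypothetical.

```python
def warmup_lr(step, warmup_steps, base_lr, start_lr=0.0):
    """Linearly increase the learning rate during the first warmup_steps steps."""
    if step >= warmup_steps:
        return base_lr
    return start_lr + (base_lr - start_lr) * step / warmup_steps


# Hypothetical settings: ramp to a base rate of 0.01 over 100 steps.
schedule = [warmup_lr(s, warmup_steps=100, base_lr=0.01) for s in (0, 50, 100, 500)]
```

Starting with small updates limits the effect of noisy early gradients, after which training proceeds at the base rate.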

Following the image enhancement process, a low-intensity Gaussian filter with standard deviation $\sigma$ was applied as a post-processing step to suppress the amplification of fine particulate noise caused by suspended materials. Experiments were conducted using the Enhanced Marine Debris Image Data [29], constructed with support from the Korea Intelligent Information Society Agency under funding from the Ministry of Science and ICT. The dataset contains various underwater debris objects, including plastic bottles, glass bottles, beverage cans, and discarded fishing gear. For this study, plastic bottles, glass bottles, and beverage cans (objects with significant ecological impact) were consolidated into a single “trash” class for labeling. The dataset was split into 1647 images for training, 200 for validation, and 376 for testing. The test set comprised 22 cans, 296 plastic bottles, and 124 glass objects. To further evaluate the generalization capability across different domains, the SeaClear Dataset [30] was utilized. This dataset consists of images captured along the Mediterranean coast using ROVs and AUVs, with imaging equipment and underwater illumination conditions that differ substantially from those of the previous dataset. Following the same labeling scheme, 2333 images were used for training, 200 for validation, and 433 for testing.

To quantitatively evaluate the proposed detection model, mean average precision (mAP), a standard metric in object detection, was adopted as the primary criterion. Both mAP@0.5, using an IoU threshold of 0.5, and mAP@0.5:0.95, calculated as the average mAP over IoU thresholds from 0.5 to 0.95 in increments of 0.05, were reported. This provides a comprehensive assessment of the model’s detection performance across varying levels of localization strictness. The mAP is computed by averaging the area under the precision–recall (PR) curve over all classes. Because the objects are consolidated into a single “trash” class in this study, mAP coincides with AP, and the results are reported as AP@0.5 and AP@0.5:0.95. Precision and recall are defined as

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \tag{10}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}. \tag{11}$$

Here, TP, FP, and FN represent true positives, false positives, and false negatives, respectively. Precision quantifies the proportion of correctly detected objects among all detected objects, reflecting the accuracy of the detector. Recall measures the proportion of correctly detected objects relative to the total number of ground truth objects, indicating the detector’s ability to capture true objects while minimizing missed detections.
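As a worked illustration of these definitions, the following pure-Python sketch computes precision, recall, and the area under the PR curve for one class at a single IoU threshold. It assumes that each detection has already been marked as a true or false positive by IoU matching and uses all-point interpolation of the PR curve; common evaluation toolkits may sample the curve differently, so exact values can differ slightly. The function names and the example data are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


def average_precision(matches, num_ground_truth):
    """Area under the PR curve (all-point interpolation).

    matches: list of (score, is_true_positive), one entry per detection.
    """
    ranked = sorted(matches, key=lambda m: m[0], reverse=True)
    precisions, recalls = [], []
    tp = fp = 0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical example: three detections, two ground-truth objects.
p, r = precision_recall(tp=8, fp=2, fn=2)
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_ground_truth=2)
```

In the example, the first detection yields precision 1 at recall 0.5 and the third yields precision 2/3 at recall 1, so the area is 0.5 × 1 + 0.5 × 2/3 = 5/6. AP@0.5:0.95 repeats this computation for IoU thresholds from 0.5 to 0.95 and averages the results.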

4.2. Ablation Study

An ablation study was conducted under identical experimental conditions to quantitatively evaluate the individual and combined contributions of the co-attention module, Gaussian filtering, and ensemble strategy within the proposed DiCAF framework. Specifically, each component was selectively disabled, and the resulting performance was measured using both AP@0.5 and AP@0.5:0.95 metrics. The corresponding performance variations are summarized in Table 1.

Table 1.

Ablation study on the effects of co-attention, Gaussian filtering, and ensemble integration.

The baseline model, which uses only the original image without enhancement, achieved AP@0.5 = 0.88 and AP@0.5:0.95 = 0.72, indicating a reasonably high detection accuracy even in the absence of multi-input fusion or post-processing modules. When only the co-attention module was activated, semantic correspondence between the original and enhanced inputs was established; however, the absence of noise suppression allowed background artifacts from the enhanced image to propagate through the feature space, reducing the overall performance to AP@0.5 = 0.84 and AP@0.5:0.95 = 0.67. This observation suggests that feature fusion without adequate filtering can inadvertently degrade detection accuracy.

The configuration employing only the filtering module combined with simple concatenation-based fusion yielded AP@0.5 = 0.85 and AP@0.5:0.95 = 0.66, demonstrating that straightforward concatenation is insufficient to fully leverage the complementary nature of the two input domains. By jointly applying the co-attention and filtering modules, performance was restored to AP@0.5 = 0.87 and AP@0.5:0.95 = 0.71, approaching that of the baseline. In this configuration, new object instances were successfully detected; however, a few previously detected objects were missed, implying a trade-off between precision and recall.

Finally, the full configuration incorporating the ensemble module achieved the highest performance, with AP@0.5 = 0.91 and AP@0.5:0.95 = 0.75. These results demonstrate that integrating the predictions from the original, enhanced, and fused branches through NMS effectively compensates for the trade-offs observed in preceding configurations, thereby enhancing overall detection robustness and consistency.

4.3. Quantitative Comparison

To quantitatively evaluate the impact of image enhancement on underwater object detection, comparative experiments were conducted using the YOLOv11 detector with various preprocessing methods. Six approaches were assessed: the physics-based restoration methods by Wang et al. [31] and Zhang et al. [15], the deep learning–based enhancement method by Chen et al. [28], the baseline detector using original images by Mughal et al. [32], and the proposed DiCAF with and without the NMS-based ensemble. The results of these comparisons are summarized in Table 2.

Table 2.

Detection performance comparison of YOLOv11 under different underwater image enhancement methods.

The baseline model by Mughal et al. [32], which applies YOLOv11 directly to the original images without enhancement, achieved an AP@0.5 of 0.88 and an AP@0.5:0.95 of 0.72. In contrast, the physics-based enhancement methods by Wang et al. [31] and Zhang et al. [15] led to degraded detection performance, with AP@0.5 scores of 0.79 and 0.83, respectively, a drop of up to 0.09 in absolute AP@0.5 (roughly 10% relative to the baseline). Their AP@0.5:0.95 metrics also decreased to 0.59 and 0.63. These results suggest that, although traditional color restoration methods may enhance visual quality for human observers, they can adversely affect the feature representations relied upon by object detectors. The deep learning–based enhancement method by Chen et al. [28] produced visually superior color correction; however, its detection performance remained lower than the baseline (AP@0.5 = 0.84, AP@0.5:0.95 = 0.66). This empirically demonstrates that image enhancement does not necessarily translate into improved performance for downstream tasks, particularly object detection.

In contrast, the proposed DiCAF architecture processes both the original and enhanced images in parallel and fuses their complementary information, effectively maintaining or improving detection performance. Using only the single fusion branch without NMS, the model achieved AP@0.5 = 0.87 and AP@0.5:0.95 = 0.71, comparable to the baseline. This demonstrates effective integration of complementary information between the two inputs. Notably, the ensemble configuration, which combines outputs from the original, enhanced, and fusion branches through NMS, achieved the highest performance, with AP@0.5 = 0.91 and AP@0.5:0.95 = 0.75. These results indicate that robustly combining features from multiple input sources is essential to approach the upper bound of detection performance.
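The ensemble step described above can be illustrated with a minimal sketch, assuming that each branch outputs axis-aligned boxes in (x1, y1, x2, y2) format with one confidence score per box, and that the pooled detections pass through a single greedy NMS. The function names and the IoU threshold of 0.5 are assumptions made for illustration, not the settings reported in this study.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of boxes, all (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter + 1e-9)

def nms(boxes, scores, iou_thr=0.5):
    """Greedy NMS; returns indices of kept boxes, highest score first."""
    order = np.argsort(-scores)
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        if rest.size == 0:
            break
        # Drop every remaining box that overlaps the kept box too strongly.
        overlaps = iou_one_to_many(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_thr]
    return keep

def ensemble(branch_outputs, iou_thr=0.5):
    """Pool (boxes, scores) pairs from several branches and apply one NMS pass."""
    boxes = np.concatenate([b for b, _ in branch_outputs], axis=0)
    scores = np.concatenate([s for _, s in branch_outputs], axis=0)
    keep = nms(boxes, scores, iou_thr)
    return boxes[keep], scores[keep]

# Toy example: the original and fused branches see the same object,
# while the enhanced branch finds a second object the others missed.
original = (np.array([[10.0, 10.0, 50.0, 50.0]]), np.array([0.6]))
enhanced = (np.array([[100.0, 100.0, 140.0, 150.0]]), np.array([0.7]))
fused = (np.array([[12.0, 11.0, 52.0, 49.0]]), np.array([0.9]))
boxes, scores = ensemble([original, enhanced, fused])
```

In this toy case the duplicate detection of the first object is suppressed in favor of the higher-scoring fused box, while the object found only by the enhanced branch is retained. A single-class setting is assumed, so no per-class grouping is performed before suppression.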

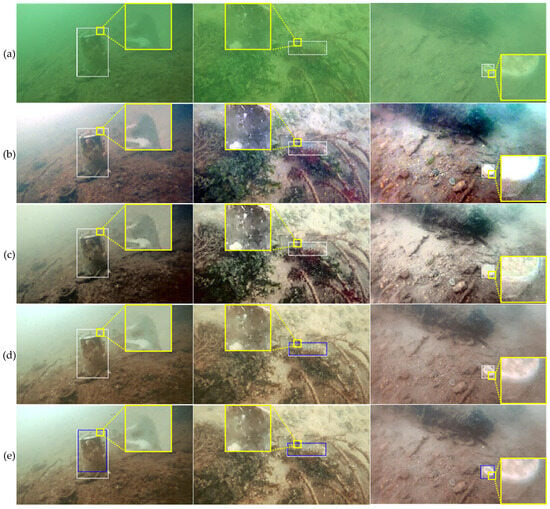

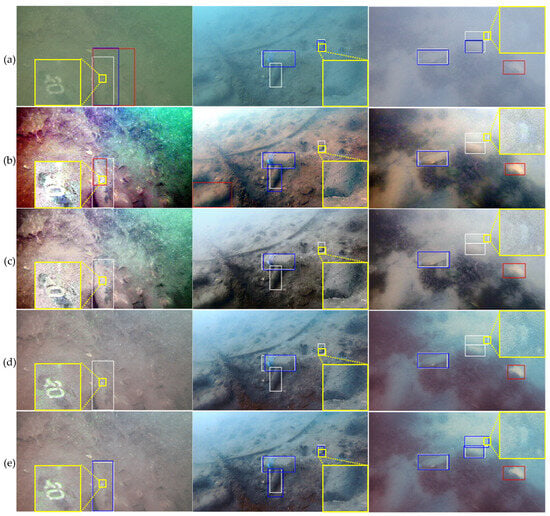

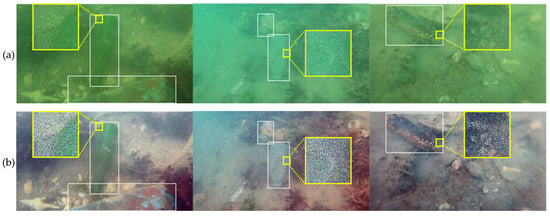

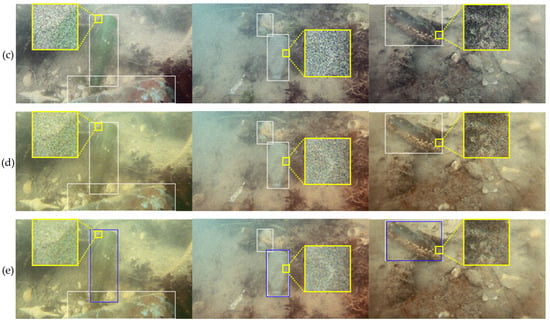

Beyond quantitative evaluation, the effectiveness of the proposed method was also assessed through visual comparisons. Figure 5 and Figure 6 present detection results under underwater conditions with visibility below and above 1 m, respectively. In all cases, results are shown on the same scenes, with blue boxes indicating correct detections, red boxes denoting false positives, and white boxes representing ground truth. Yellow boxes highlight the zoomed-in regions that provide a closer view of detection results under challenging underwater conditions. The proposed DiCAF method consistently produced stable detections, particularly in challenging environments, and successfully identified objects that were missed by existing techniques.
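The distinction between correct detections, false positives, and missed ground-truth objects can be sketched as follows. The greedy matching rule, the IoU threshold of 0.5 (chosen to mirror AP@0.5), and the function names are assumptions made for illustration; the exact matching procedure used to produce the figures is not specified in the text.

```python
def iou(a, b):
    """IoU of two boxes in (x1, y1, x2, y2) format."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def label_detections(detections, ground_truth, iou_thr=0.5):
    """Label each (box, score) detection as 'TP' or 'FP'.

    Detections are visited in descending score order, and each ground-truth
    box can be matched by at most one detection. Also returns the indices of
    ground-truth boxes that no detection matched.
    """
    labels = [None] * len(detections)
    matched = set()
    order = sorted(range(len(detections)), key=lambda i: -detections[i][1])
    for i in order:
        box, _ = detections[i]
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            labels[i] = "TP"
        else:
            labels[i] = "FP"
    missed = [j for j in range(len(ground_truth)) if j not in matched]
    return labels, missed
```

Under this rule, a second detection of an already matched object counts as a false positive, which is one way duplicate boxes can accumulate as errors.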

Figure 5.

Detection results under severely degraded underwater conditions (visibility < 1 m): (a) Mughal et al. [32]; (b) Wang et al. [31]; (c) Zhang et al. [15]; (d) Chen et al. [28]; (e) Ours (with NMS).

Figure 6.

Detection results under relatively clear underwater conditions (visibility ≥ 1 m): (a) Mughal et al. [32]; (b) Wang et al. [31]; (c) Zhang et al. [15]; (d) Chen et al. [28]; (e) Ours (with NMS).

Figure 5 compares object detection results of various image enhancement methods and the proposed DiCAF model under challenging underwater conditions with visibility below 1 m. In such low-visibility scenarios, rapid attenuation of the red channel results in a cyan-green color cast, causing pronounced color distortion and reduced contrast across the scene.

Figure 5a shows the detection results of Mughal et al. [32] without any preprocessing, where severe color distortion and low-contrast backgrounds result in complete failure of object detection. Figure 5b,c illustrate the outcomes of the physics-based color correction methods by Wang et al. [31] and Zhang et al. [15], respectively. Although these methods temporarily enhance contrast, excessive color correction causes object boundaries to blend with the background, leading to missed detections. Notably, while Figure 5b,c appear visually improved, detection performance does not improve, highlighting the discrepancy between visual quality and detector accuracy. Figure 5d presents results obtained with the deep learning–based enhancement method of Chen et al. [28], which detects some objects but remains sensitive to noise and produces inconsistent results. In contrast, Figure 5e shows the proposed DiCAF method, which fuses the structural stability of the original image with the color and contrast information of the enhanced image via a co-attention mechanism. This approach achieves robust and reliable detection, successfully identifying objects missed by prior methods.

Figure 6 presents visual comparisons of detection results for various enhancement methods and the proposed DiCAF model on underwater images captured in relatively clear environments with visibility of 1 m or more. Although these images still exhibit blurriness from suspended particles and fine particulate noise, overall contrast and clarity are improved compared to Figure 5. Figure 6a shows results from the study by Mughal et al. [32], where low contrast leads to indistinct object contours and frequent false positives. Figure 6b illustrates the method of Wang et al. [31], which fails to remove background interference despite color correction, resulting in multiple detection failures. Similarly, Figure 6c depicts the method of Zhang et al. [15], where excessive color correction distorts object boundaries and causes unstable detection performance. Figure 6d shows the deep learning–based enhancement method by Chen et al. [28], which detects some objects but demonstrates reduced consistency due to increased sensitivity to noise. In contrast, Figure 6e shows the proposed DiCAF method, which effectively fuses structural cues from the original image with color and contrast information from the enhanced image, achieving robust detection and precise localization and successfully identifying objects missed by previous methods. Some scenes, however, still exhibit accumulation of false positives due to limitations of the NMS technique.

To further assess the generalization capability of the proposed DiCAF framework, additional validation experiments were conducted using the publicly available SeaClear dataset [30]. This dataset presents a more challenging setting compared to the Enhanced Marine Debris Image Data, featuring complex backgrounds, motion blur caused by AUV movement, and higher variability in illumination and turbidity. Under identical experimental settings, the detection performance was evaluated to investigate the model’s robustness across diverse underwater conditions. The quantitative results are summarized in Table 3.

Table 3.

Comparison of YOLOv11 detection performance under different underwater image enhancement methods evaluated on the SeaClear dataset.

As shown in Table 3, all methods exhibited a decrease in overall detection accuracy, primarily due to the challenging conditions of the SeaClear dataset, which include complex backgrounds, strong color attenuation, and motion-induced blur. The baseline method of Mughal et al. [32], which directly applies object detection to the original images without enhancement, achieved AP@0.5 = 0.69 and AP@0.5:0.95 = 0.52, maintaining a relatively stable performance but still underperforming compared to the proposed method with NMS. In contrast, the restoration techniques by Wang et al. [31] and Zhang et al. [15] showed the most pronounced performance degradation, achieving AP@0.5 = 0.33 and 0.32, respectively. These results indicate that excessive color contrast enhancement and inaccurate restoration under motion blur can distort object boundaries, thereby reducing the discriminative power of feature representations essential for detection. The deep learning–based enhancement approach proposed by Chen et al. [28] improved moderately on the physics-based methods (AP@0.5 = 0.44, AP@0.5:0.95 = 0.32); however, its detection accuracy remained well below the baseline, likely due to increased sensitivity to noise.

By comparison, the proposed DiCAF framework effectively leveraged the complementary characteristics of the original and enhanced images. Even when employing only a single fusion branch (Ours without NMS), the model achieved AP@0.5 = 0.58, outperforming all prior enhancement-based methods, although it remained below the unenhanced baseline (AP@0.5 = 0.69). The integration of the NMS-based ensemble strategy (Ours with NMS) further improved performance to AP@0.5 = 0.71 and AP@0.5:0.95 = 0.53, yielding the best result among all compared models. These findings empirically demonstrate that the proposed dual-input fusion and ensemble approach can maintain stable and robust detection accuracy even under substantially different underwater conditions.

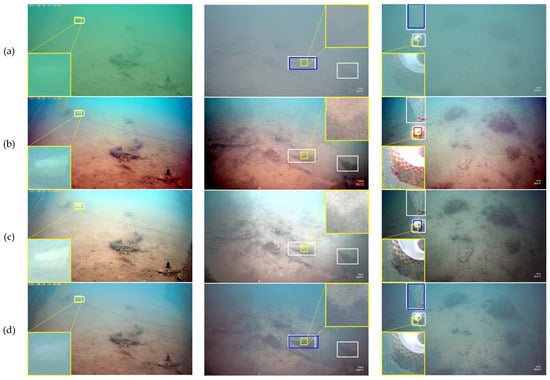

Figure 7 presents a visual comparison of detection results in underwater scenes captured by a moving AUV with active illumination. In this setting, strong light attenuation and increased turbidity reduce color contrast and blur object boundaries, making detection particularly challenging. In Figure 7a, the method by Mughal et al. [32] suffers from low contrast, causing object boundaries to merge with the background and resulting in numerous missed detections. Figure 7b,c, corresponding to the methods by Wang et al. [31] and Zhang et al. [15], exhibit excessive contrast enhancement that distorts object shapes and leads to the omission of previously detectable objects. Figure 7d demonstrates that the approach by Chen et al. [28] enhances visibility; however, the accompanying increase in noise compromises detection stability. By contrast, the proposed method (Figure 7e) effectively combines the structural features of the original image with the color and luminance information from the enhanced image, enabling robust detection of objects that were missed by other methods and demonstrating superior overall performance in this challenging dynamic underwater environment.

Figure 7.

Detection results in underwater scenes captured by a moving AUV with active illumination: (a) Mughal et al. [32]; (b) Wang et al. [31]; (c) Zhang et al. [15]; (d) Chen et al. [28]; (e) Ours (with NMS).

4.4. Noise Robustness Evaluation

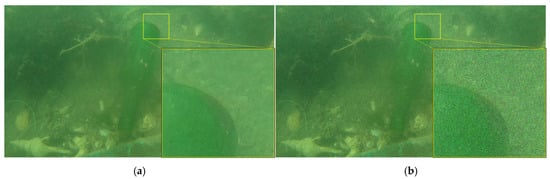

Underwater images inherently contain various types of noise caused by suspended particles. Such noise can bias the parameter estimation of image enhancement algorithms, blur object boundaries, and distort object shapes, thereby reducing the reliability of detectors. To evaluate the noise robustness of the proposed method, speckle noise was artificially added to the test images, and the detection performance of comparative models was analyzed, as illustrated in Figure 8. The speckle noise was sampled from a uniform distribution with zero mean and variance of 0.05.
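The noise injection can be sketched as follows, assuming the conventional multiplicative speckle model J = I + n·I applied to an image scaled to [0, 1], with n drawn from a zero-mean uniform distribution of variance 0.05 as stated above. The multiplicative form, the clipping step, and the function name `add_speckle` are assumptions made for illustration; the text specifies only the noise distribution.

```python
import numpy as np

def add_speckle(image, variance=0.05, rng=None):
    """Apply multiplicative speckle noise J = I + n * I to an image in [0, 1].

    n is sampled from a zero-mean uniform distribution with the given variance.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Uniform(-a, a) has variance a**2 / 3, so a = sqrt(3 * variance).
    half_width = np.sqrt(3.0 * variance)
    noise = rng.uniform(-half_width, half_width, size=image.shape)
    noisy = image + noise * image
    # Keep the result in the valid intensity range (an assumption of this sketch).
    return np.clip(noisy, 0.0, 1.0)
```

For a variance of 0.05, the uniform noise spans approximately ±0.387, so each pixel is perturbed by up to about 39% of its own intensity. Bright regions are therefore disturbed more strongly than dark ones, which distinguishes speckle from additive noise.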

Figure 8.

Visual comparison of detection results before and after speckle noise injection: (a) original images without noise; (b) images with added speckle noise.

Figure 8a shows the original test image, while Figure 8b presents the same image after the addition of artificial speckle noise. This noise simulates particulate matter commonly found in underwater environments by introducing pointwise random variations, creating distortion patterns that resemble the speckled appearance observed in real underwater scenes. The added noise blurs object contours and shapes, potentially reducing detector confidence and increasing false positives. Furthermore, speckle noise can negatively impact parameter estimation in image enhancement algorithms. Detection performance under these noise conditions is summarized in Table 4.

Table 4.

Detection performance comparison of various methods under speckle noise conditions.

The baseline model without preprocessing, Mughal et al. [32], achieved an AP@0.5 of 0.32 and an AP@0.5:0.95 of 0.21 under speckle noise conditions. The physics-based color correction methods by Wang et al. [31] and Zhang et al. [15] exhibited even greater performance degradation, with AP@0.5 dropping to 0.16 and 0.23, and AP@0.5:0.95 to 0.11 and 0.15, respectively. The deep learning–based enhancement method by Chen et al. [28] recorded the lowest performance, with AP@0.5 of 0.12 and AP@0.5:0.95 of 0.08. These results indicate that parameter uncertainty during enhancement, as well as the amplification of speckle-like artifacts, can severely impair downstream detection performance in noisy underwater environments.

In contrast, the proposed method with low-intensity Gaussian filtering as a post-processing step, referred to as Ours (without NMS), effectively mitigated high-frequency noise from suspended particles, achieving an AP@0.5 of 0.60 and an AP@0.5:0.95 of 0.42, representing a substantial improvement over existing methods. Furthermore, the configuration that integrates outputs from the original, enhanced, and fused branches using non-maximum suppression, denoted as Ours (with NMS), achieved slightly higher performance with an AP@0.5 of 0.62 and an AP@0.5:0.95 of 0.44. These results indicate that Gaussian filtering enhances the robustness of individual branches, while the NMS-based fusion effectively leverages complementary information to achieve further gains. Both configurations consistently outperformed the physics-based and deep learning–based enhancement methods under noisy conditions.

Figure 9 visually compares detection results under speckle noise across all models. Figure 9a shows the baseline model without enhancement by Mughal et al. [32], where detection fails completely due to severe color distortion and high-frequency noise, despite the object being clearly present. Figure 9b,c, representing the physics-based corrections by Wang et al. [31] and Zhang et al. [15], partially reduce color distortion but lack noise suppression, allowing speckle patterns to persist and causing object boundaries to blend into the background, which degrades detection performance. Figure 9d, obtained with the deep learning–based method of Chen et al. [28], provides moderate visual clarity but remains vulnerable to noise, often missing fine object structures. In contrast, Figure 9e shows the proposed DiCAF method, which combines Gaussian filtering with NMS-based multi-branch fusion to suppress speckle noise and robustly detect objects by leveraging both the structural cues from the original image and the contrast enhancements from the enhanced image.

Figure 9.

Detection results under speckle noise conditions: (a) Mughal et al. [32]; (b) Wang et al. [31]; (c) Zhang et al. [15]; (d) Chen et al. [28]; (e) Ours (with NMS).

These results empirically demonstrate the detrimental effects of speckle noise on underwater object detection and underscore the importance of a sophisticated pipeline that combines post-processing with multi-branch fusion, rather than relying solely on color correction. The proposed DiCAF method maintains high robustness even under noisy underwater conditions, achieving consistent and stable object detection while minimizing false positives.

5. Discussion

This study introduced a dual-backbone architecture with a co-attention-based fusion mechanism to address the observed disconnect between visual enhancement and object detection in underwater environments. Although the fusion architecture did not produce a substantial improvement in mAP over single-input models, detailed analysis revealed that each input modality—the original and the enhanced image—captured distinct subsets of objects. Importantly, the fusion branch successfully detected instances missed by both single-input detectors. These findings indicate that the proposed co-attention-based fusion primarily enhances detection stability and robustness rather than absolute mAP gains. This observation supports the broader challenge outlined in the introduction: underwater image enhancement, while visually beneficial, often introduces structural inconsistencies that impair detection. By explicitly fusing semantic and structural cues from both input domains, the proposed architecture effectively mitigates overfitting to enhancement-induced artifacts and preserves contextual information present in the original image.

Furthermore, the lightweight Gaussian filter introduced as a post-enhancement step played a pivotal role in suppressing high-frequency noise, particularly that originating from suspended particulate matter such as speckles and floating debris. In the absence of this filtering, such noise components could be amplified during enhancement, resulting in unstable feature learning and an increased number of false positives. Applying the filter prior to detection enabled the model to preserve clearer object boundaries. Additionally, during the NMS phase, the filtering reduced redundancy among noisy, low-confidence predictions, thereby mitigating the accumulation of false positives. Empirical evaluations with synthetically injected speckle noise further confirmed that models incorporating post-filtering exhibited slower performance degradation and maintained higher detection consistency under noisy conditions. However, the benefits of filtering must be carefully balanced against the risk of oversmoothing, which may obscure small or low-contrast object boundaries. This trade-off underscores the importance of tuning hyperparameters—such as kernel size and standard deviation—based on the statistical properties of the dataset. When appropriately calibrated, even lightweight filtering can exert a substantial positive influence on detection stability and reliability.
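The role of the two hyperparameters named above, kernel size and standard deviation, can be illustrated with a minimal separable Gaussian filter. This is a sketch under stated assumptions: the default values `kernel_size=3` and `sigma=0.8`, the reflective border handling, and the function names are hypothetical and are not the settings used in this study.

```python
import numpy as np

def gaussian_kernel_1d(kernel_size=3, sigma=0.8):
    """Normalized 1-D Gaussian kernel; kernel_size must be odd."""
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd")
    half = kernel_size // 2
    x = np.arange(-half, half + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_blur(image, kernel_size=3, sigma=0.8):
    """Separable Gaussian blur of a 2-D (H, W) or 3-D (H, W, C) image."""
    kernel = gaussian_kernel_1d(kernel_size, sigma)
    half = kernel_size // 2
    out = image.astype(np.float64)
    for axis in (0, 1):  # filter along the vertical axis, then the horizontal axis
        pad = [(0, 0)] * out.ndim
        pad[axis] = (half, half)
        padded = np.pad(out, pad, mode="reflect")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="valid"), axis, padded
        )
    return out
```

A larger kernel or a larger standard deviation removes more high-frequency noise but also smooths object edges more strongly, which is the oversmoothing trade-off discussed above.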

To further enhance detection robustness, an ensemble strategy was proposed that integrates outputs from three independent detection branches: one operating on the original image, another on the enhanced image, and a third utilizing the DiCAF fusion. The NMS-based ensemble effectively leveraged the strengths of each branch, recovering detections missed by individual models and achieving performance approaching an upper-bound limit. This ensemble validated the complementarity between different feature domains and established a scalable framework suitable for performance-critical applications. Depending on deployment constraints, practitioners may opt for a single-branch configuration to achieve real-time operation or employ the full ensemble for maximum accuracy.

Despite these contributions, several limitations remain. First, the dataset used in this study contained only a single object class (“trash”), leaving the method’s generalizability to multi-class detection untested. Second, the NMS-based ensemble requires three detectors to operate in parallel, substantially increasing computational overhead and limiting its practicality in resource-constrained environments. Third, both the co-attention fusion mechanism and post-processing filtering introduce additional computational costs, highlighting the need for future work in model compression and optimization.

In summary, this study reinforces the critical insight that underwater image enhancement does not inherently improve detection accuracy and may, in fact, degrade performance if not appropriately constrained. By combining dual-input fusion, noise-aware filtering, and multi-branch ensemble integration, a robust and scalable framework for underwater object detection has been established. Future work will focus on extending this pipeline to multi-class detection tasks and dynamic underwater environments, as well as exploring more efficient, edge-preserving filters and post-processing-aware training strategies to further enhance the stability and computational efficiency of underwater detection systems.

6. Conclusions

This study empirically demonstrated that, although underwater image enhancement can improve visual perception for human observers, it does not necessarily enhance object detection performance in deep learning models. On the contrary, enhancement processes may amplify noise or distort object boundaries, thereby reducing detection accuracy. To address this limitation, we proposed DiCAF, a dual-input co-attention fusion module that simultaneously processes both original and enhanced images. Instead of relying on a single input, the DiCAF framework exploits the complementary characteristics of both representations, thereby improving detection robustness under complex and variable underwater conditions.

Experimental results demonstrated that although the DiCAF module did not consistently surpass single-input models in terms of average precision, it provided notable advantages in detection stability. Specifically, it successfully identified objects that were missed by either the original or enhanced input branch, confirming the complementary nature of the two input domains. Furthermore, the application of a low-intensity Gaussian filter immediately after image enhancement effectively suppressed noise arising from suspended particles, thereby reducing false positives and preserving detection performance under noisy conditions. This post-processing step served as a practical and lightweight noise mitigation strategy, particularly valuable for real-world underwater applications.

Furthermore, an NMS-based ensemble was proposed to integrate detection outputs from three independent branches—original, enhanced, and DiCAF-fused. This ensemble achieved performance approaching the upper bound attainable by the model configuration, demonstrating that such integration effectively recovers missed detections and enhances overall accuracy. These results indicate that the DiCAF architecture provides a practical baseline for deployment in resource-constrained scenarios, whereas the ensemble configuration offers a high-performance alternative when computational resources allow.

The main contributions of this work are summarized as follows: (1) empirical evidence was provided highlighting the discrepancy between human visual perception and machine-based object detection in enhanced underwater imagery; (2) the effectiveness of lightweight Gaussian filtering was validated in suppressing enhancement-induced noise, contributing to stable and reliable detection; (3) the dual-input co-attention fusion strategy was shown to enable complementary detection of objects missed by individual inputs; and (4) the NMS-based ensemble integration was demonstrated to approach the theoretical upper bound of detection performance, offering strategic flexibility between single-model deployment and ensemble-based optimization depending on application requirements.

Future work will focus on extending the proposed framework to multi-class detection tasks, improving computational efficiency for real-time deployment, and developing more efficient fusion and post-processing strategies to reduce the complexity of ensemble inference. Ultimately, the goal is to establish a robust and scalable underwater debris detection system adaptable to AUVs operating in diverse and dynamic marine environments.

Author Contributions

S.Y. and J.C. participated in the discussion of the work described in this paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MOE) (No. 2021R1I1A3055973) and by the Soonchunhyang University Research Fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Jalil, B.; Maggiani, L.; Valcarenghi, L. Convolutional neural networks and transformers-based techniques for underwater marine debris classification: A comparative study. IEEE J. Ocean. Eng. 2025, 50, 594–607.

2. Nyadjro, E.S.; Webster, J.A.B.; Boyer, T.P.; Cebrian, J.; Collazo, L.; Kaltenberger, G.; Larsen, K.; Lau, Y.H.; Mickle, P.; Toft, T.; et al. The NOAA NCEI marine microplastics database. Sci. Data 2023, 10, 726.

3. Sawant, S.; D’Souza, L.; Kulkarni, A.; Uchil, D.; Nagvenkar, K. Performance evaluation of YOLO variants on marine trash images: A comparative study of YOLOv5, YOLOv7, YOLOv8, and TinyYOLO. In Proceedings of the IEEE Bangalore Humanitarian Technology Conference, Karkala, India, 22–23 March 2024.

4. Chin, C.S.; Neo, A.B.H.; See, S. Visual marine debris detection using YOLOv5s for autonomous underwater vehicle. In Proceedings of the IEEE/ACIS International Conference on Computer and Information Science, Zhuhai, China, 26–28 June 2022.

5. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state of the art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

6. Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the International Conference on Advances in Data Engineering and Intelligent Computing Systems, Chennai, India, 18–19 April 2024.

7. Loh, A.I.L.; Goh, Y.X.; Bingi, L.; Ibrahim, R. YOLOv11-powered emergency vehicle detection algorithm using Tello quadrotor drone. In Proceedings of the IEEE International Conference on Robotics and Technologies for Industrial Automation, Kuala Lumpur, Malaysia, 12 April 2025.

8. Corrigan, B.C.; Tay, Z.Y.; Konovessis, D. Real-time instance segmentation for detection of underwater litter as a plastic source. J. Mar. Sci. Eng. 2023, 11, 1532.

9. Adimulam, R.P.; Thumu, R.R.; Lanke, N. Real-time underwater trash object detection using enhanced YOLOv8. In Proceedings of the International Conference on Intelligent Systems, Advanced Computing and Communication, Silchar, India, 27–28 February 2025.

10. Rasheed, S.; Mirza, A.; Saeed, M.S.; Yousaf, M.H. Trash detection in water bodies using YOLO with explainable AI insight. In Proceedings of the International Conference on Robotics and Automation in Industry, Rawalpindi, Pakistan, 18–19 December 2024.

11. Saleem, A.; Awad, A.; Paheding, S.; Lucas, E.; Havens, T.C.; Esselman, P.C. Understanding the influence of image enhancement on underwater object detection: A quantitative and qualitative study. Remote Sens. 2025, 17, 185.

12. Ouyang, W.; Wei, Y.; Hou, T.; Liu, J. An in-situ image enhancement method for the detection of marine organisms by remotely operated vehicles. ICES J. Mar. Sci. 2024, 81, 440–452.

13. Xu, S.; Zhang, M.; Song, W.; Mei, H.; He, Q.; Liotta, A. A systematic review and analysis of deep learning-based underwater object detection. Neurocomputing 2023, 527, 204–232.

14. Song, H.; Xia, W.; Kang, J.; Zhang, S.; Ye, C.; Kang, W.; Toe, T.T. Underwater image enhancement method based on dark channel prior and guided filtering. In Proceedings of the International Conference on Automation, Robotics and Computer Engineering, Wuhan, China, 16–17 December 2022.

15. Zhang, W.; Zhou, L.; Zhuang, P.; Li, G.; Pan, X.; Zhao, W.; Li, C. Underwater image enhancement via weighted wavelet visual perception fusion. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 2469–2483.

16. Zhang, G.; Li, C.; Yan, J.; Zheng, Y. ULD-CycleGAN: An underwater light field and depth map-optimized CycleGAN for underwater image enhancement. IEEE J. Ocean. Eng. 2024, 49, 1275–1288.

17. Cong, R.; Yang, W.; Zhang, W.; Li, C.; Guo, C.-L.; Huang, Q.; Kwong, S. PUGAN: Physical model-guided underwater image enhancement using GAN with dual-discriminators. IEEE Trans. Image Process. 2023, 32, 4472–4485.

18. Wang, B.; Xu, H.; Jiang, G.; Yu, M. UIE-convformer: Underwater image enhancement based on convolution and feature fusion transformer. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 1952–1968.

19. Yu, F.; Xiao, F.; Li, C.; Cheng, E.; Yuan, F. AO-UOD: A novel paradigm for underwater object detection using acousto-optic fusion. IEEE J. Ocean. Eng. 2025, 50, 919–940.

20. Zhou, W.; Zheng, F.; Yin, G.; Pang, Y.; Yi, J. YOLOTrashCan: A deep learning marine debris detection network. IEEE Trans. Instrum. Meas. 2022, 72, 1–12.

21. Li, X.; Zhao, Y.; Su, H.; Wang, Y.; Chen, G. Efficient underwater object detection based on feature enhancement and attention detection head. Sci. Rep. 2025, 15, 5973.

22. He, X.; Zhang, Y.; Zhang, Q. AIN-YOLO: A lightweight YOLO network with attention-based InceptionNext and knowledge distillation for underwater object detection. Adv. Eng. Inform. 2025, 66, 103504.

23. Lucas, E.; Awad, A.; Geglio, A.; Saleem, A. Underwater image enhancement and object detection: Are poor object detection results on enhanced images due to missing human labels? In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision Workshops, Tucson, AZ, USA, 28 February–4 March 2025.

24. Qiu, X.; Shi, Y. Multimodal fusion image enhancement technique and CFEC-YOLOv7 for underwater target detection algorithm research. Front. Neurorobot. 2025, 19, 1616919.

25. Liu, Z.; Wang, B.; Li, Y.; He, J.; Li, Y. UnitModule: A lightweight joint image enhancement module for underwater object detection. Pattern Recognit. 2025, 151, 110435.

26. Zhang, W.; Li, X.; Xu, S.; Li, X.; Yang, Y.; Xu, D.; Liu, T.; Hu, H. Underwater image restoration via adaptive color correction and contrast enhancement fusion. Remote Sens. 2023, 15, 4699.

27. Liu, W.; Xu, J.; He, S.; Chen, Y.; Zhang, X.; Shu, H.; Qi, P. Underwater-image enhancement based on maximum information-channel correction and edge-preserving filtering. Symmetry 2025, 17, 725.

28. Chen, X.; Zhang, P.; Quan, L.; Yi, C.; Lu, C. Underwater image enhancement based on deep learning and image formation model. arXiv 2021, arXiv:2101.00991.

29. AI Hub. Enhanced Marine Debris Image Data. Available online: https://www.aihub.or.kr/aihubdata/data/view.do?currMenu=115&topMenu=100&dataSetSn=71340 (accessed on 3 January 2025).

30. Đuraš, A.; Wolf, B.J.; Ilioudi, A.; Palunko, I.; De Schutter, B.A. Dataset of Detection and Segmentation of Underwater Marine Debris in Shallow Waters. Sci. Data 2024, 11, 921.

31. Wang, Z.; Zhou, D.; Li, Z.; Yuan, Z.; Yang, C. Underwater image enhancement via adaptive color correction and stationary wavelet detail enhancement. IEEE Access 2024, 12, 11066–11082.

32. Mughal, A.B.; Shah, S.M.; Khan, R.U. Real-time detection of underwater debris using YOLOv11: An optimized approach. In Proceedings of the International Conference on Emerging Technologies in Electronics, Computing, and Communication, Jamshoro, Pakistan, 23–25 April 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).