Hybrid Regularized Variational Minimization Method to Promote Visual Perception for Intelligent Surface Vehicles Under Hazy Weather Condition

Abstract

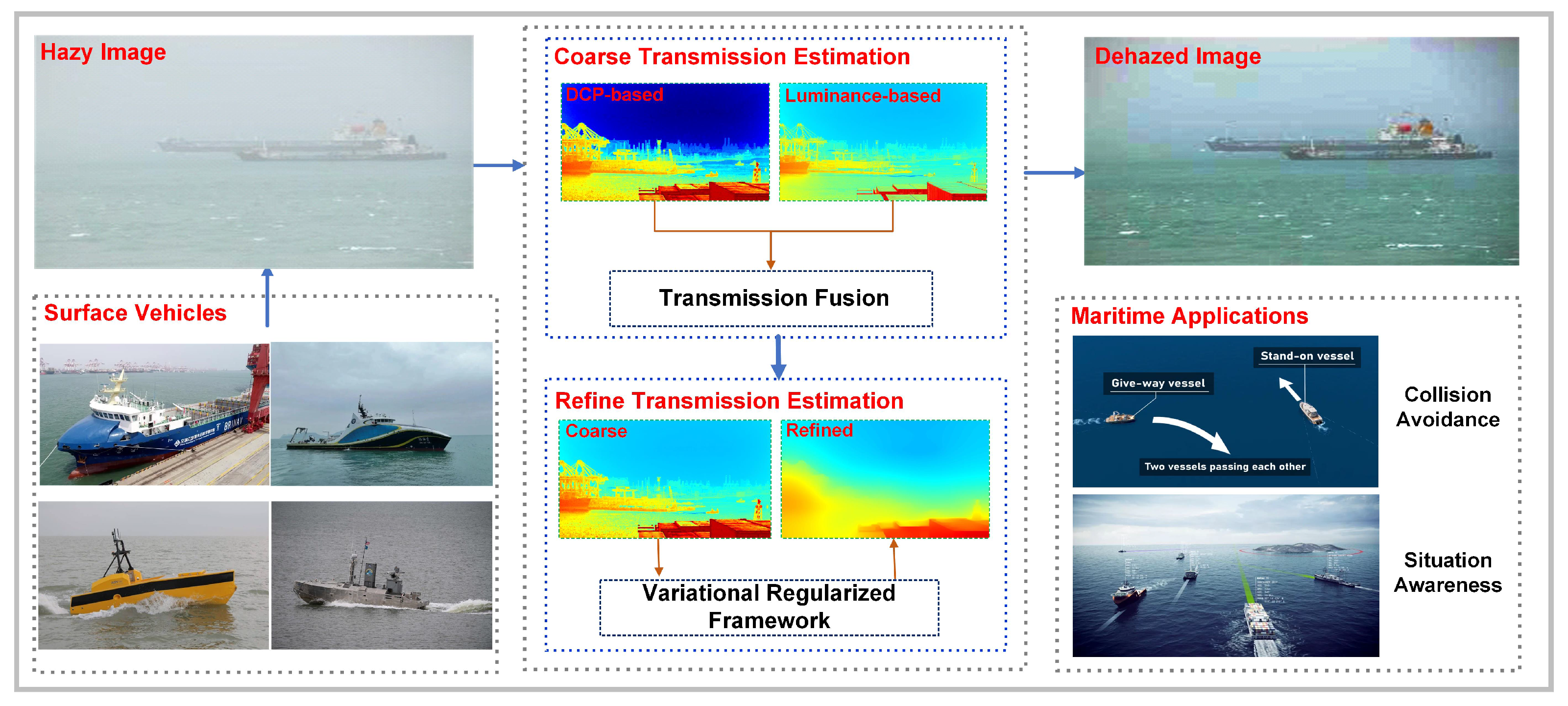

1. Introduction

1.1. Related Works

1.1.1. Contrast Enhancement Methods

1.1.2. Physical-Based Methods

1.1.3. Deep Learning-Based Methods

1.2. Motivation and Contributions

Algorithm 1 Hybrid Regularized Variational Dehazing

- Hybrid Variational Method. A hybrid variational model that combines the total generalized variation (TGV) and relative total variation (RTV) regularizers is proposed to refine the transmission map. The hybrid regularizer mitigates both over-smoothing and artifact interference.

- Numerical Optimization Algorithm. The transmission refinement model is a nonconvex, nonsmooth optimization problem. It is decomposed by the alternating direction method into simpler subproblems, each of which can be handled by existing numerical methods.

- Competitive Dehazing Performance. Experiments on synthetic and realistic maritime images show that the proposed approach robustly and effectively restores the visibility of hazy images in maritime scenes.
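As background for the contributions above, the following is a minimal sketch of the standard dark channel prior (DCP) pipeline of [11]: a coarse transmission map is estimated and then used to invert the atmospheric scattering model I = J·t + A·(1 − t). It is not the hybrid TGV/RTV refinement proposed in this paper, which would sit between the two steps. The function names and the defaults `radius=7`, `omega=0.95`, and `t_min=0.1` are illustrative assumptions, and images are assumed to be float arrays in [0, 1].

```python
import numpy as np

def min_filter(img2d, radius):
    """Sliding-window minimum over a (2*radius+1)^2 patch, edge-padded."""
    padded = np.pad(img2d, radius, mode="edge")
    h, w = img2d.shape
    out = np.full((h, w), np.inf)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out

def coarse_transmission(hazy, airlight, radius=7, omega=0.95):
    """DCP estimate: t = 1 - omega * dark_channel(I / A)."""
    normalized = hazy / airlight           # broadcast over the color axis
    dark = min_filter(normalized.min(axis=2), radius)
    return 1.0 - omega * dark

def recover_scene(hazy, transmission, airlight, t_min=0.1):
    """Invert I = J * t + A * (1 - t), bounding t from below (assumed t_min)."""
    t = np.maximum(transmission, t_min)[..., None]
    return np.clip((hazy - airlight) / t + airlight, 0.0, 1.0)
```

In a refinement-based method, the output of `coarse_transmission` would be smoothed or regularized before being passed to `recover_scene`; the lower bound `t_min` keeps the division from amplifying noise where the haze is dense.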

2. Problem Formulation

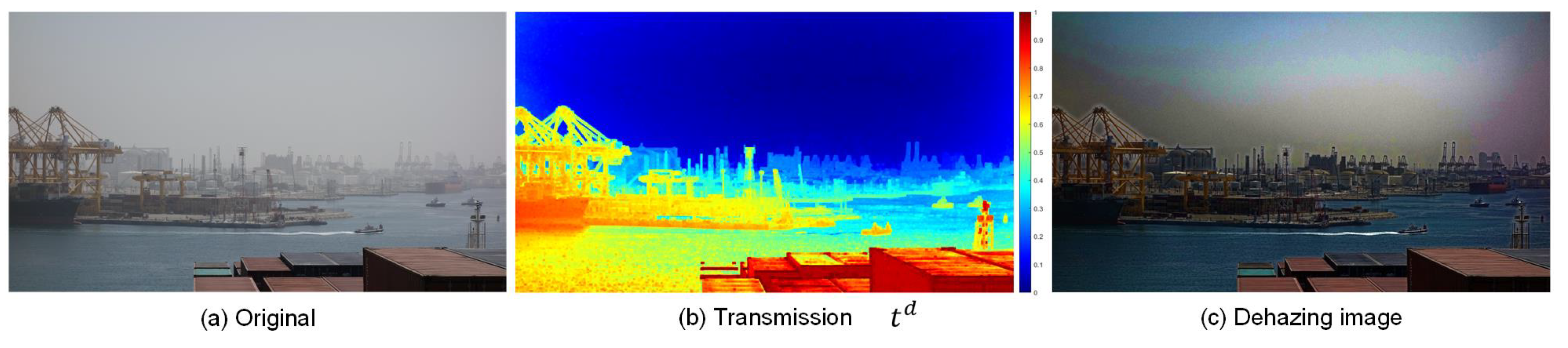

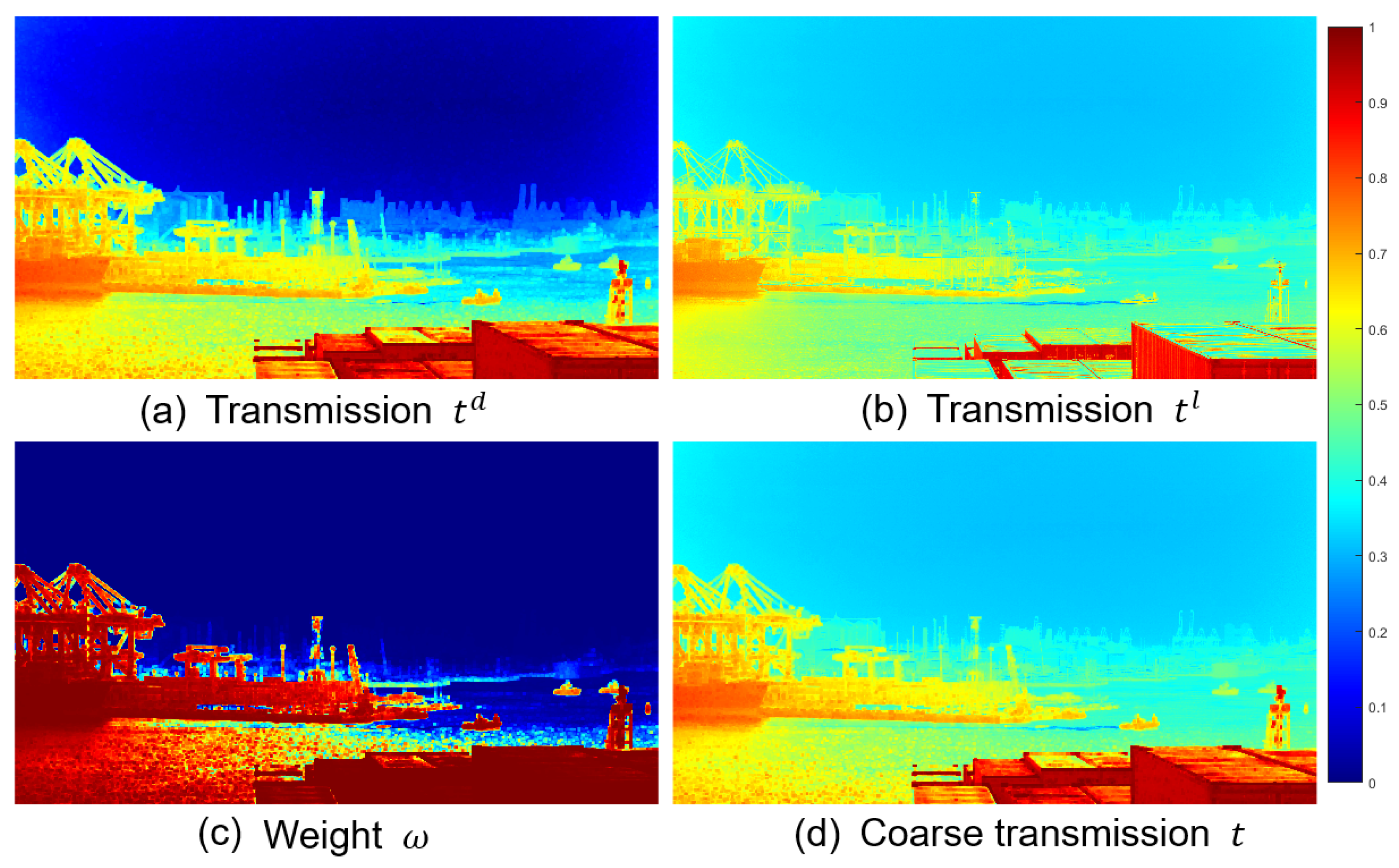

3. Estimation of Coarse Transmission Map

3.1. DCP-Based Transmission Map

3.2. Luminance-Based Transmission Map

3.3. Weighted Transmission Map

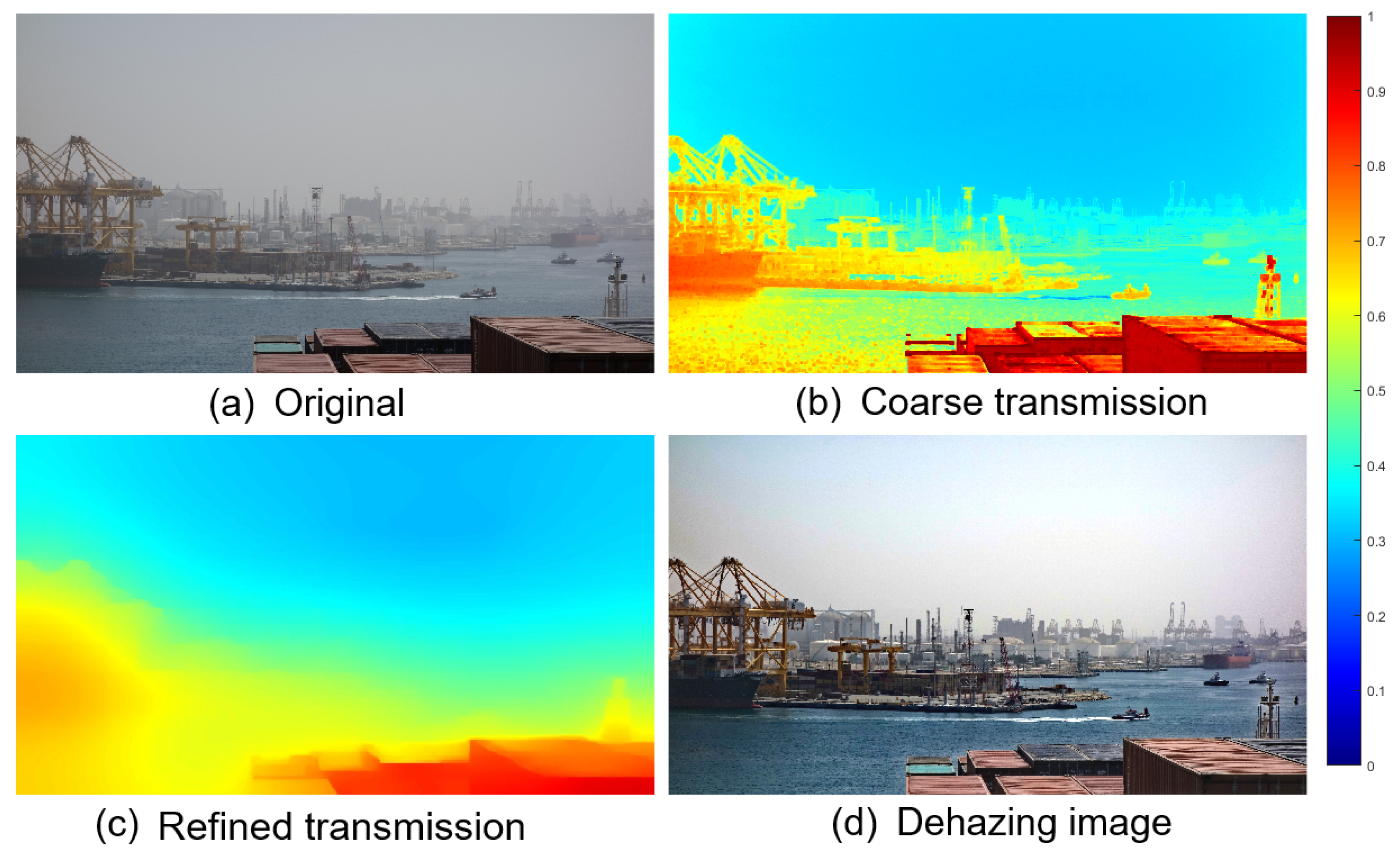

4. Estimation of Refined Transmission Map

4.1. Hybrid Variational Model

4.2. Numerical Optimization Algorithm

4.2.1. X Subproblem

4.2.2. Y Subproblem

4.2.3. Subproblem

4.2.4. T Subproblem

Algorithm 2 FISTA for t subproblem
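The steps of Algorithm 2 are not reproduced here, so the following is only a generic sketch of FISTA [30] on a stand-in problem, min_x 0.5·‖Ax − b‖² + λ‖x‖₁. The objective, the function names, and the fixed iteration count are assumptions for illustration and are not the paper's t subproblem.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def fista_lasso(A, b, lam, n_iter=200):
    """FISTA for 0.5 * ||A x - b||^2 + lam * ||x||_1 (illustrative objective)."""
    lipschitz = np.linalg.norm(A, 2) ** 2   # largest eigenvalue of A^T A
    x = np.zeros(A.shape[1])
    y = x.copy()                            # extrapolated point
    step = 1.0                              # momentum parameter
    for _ in range(n_iter):
        grad = A.T @ (A @ y - b)
        x_next = soft_threshold(y - grad / lipschitz, lam / lipschitz)
        step_next = (1.0 + np.sqrt(1.0 + 4.0 * step ** 2)) / 2.0
        y = x_next + ((step - 1.0) / step_next) * (x_next - x)
        x, step = x_next, step_next
    return x
```

Each iteration takes a gradient step on the smooth term at the extrapolated point `y`, applies the proximal operator of the nonsmooth term, and then updates the momentum. For a different nonsmooth term, only `soft_threshold` would need to be swapped for the matching proximal operator.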

4.3. Latent Sharp Image Restoration

5. Experimental Results and Analysis

5.1. Experimental Data

5.2. Experimental Settings

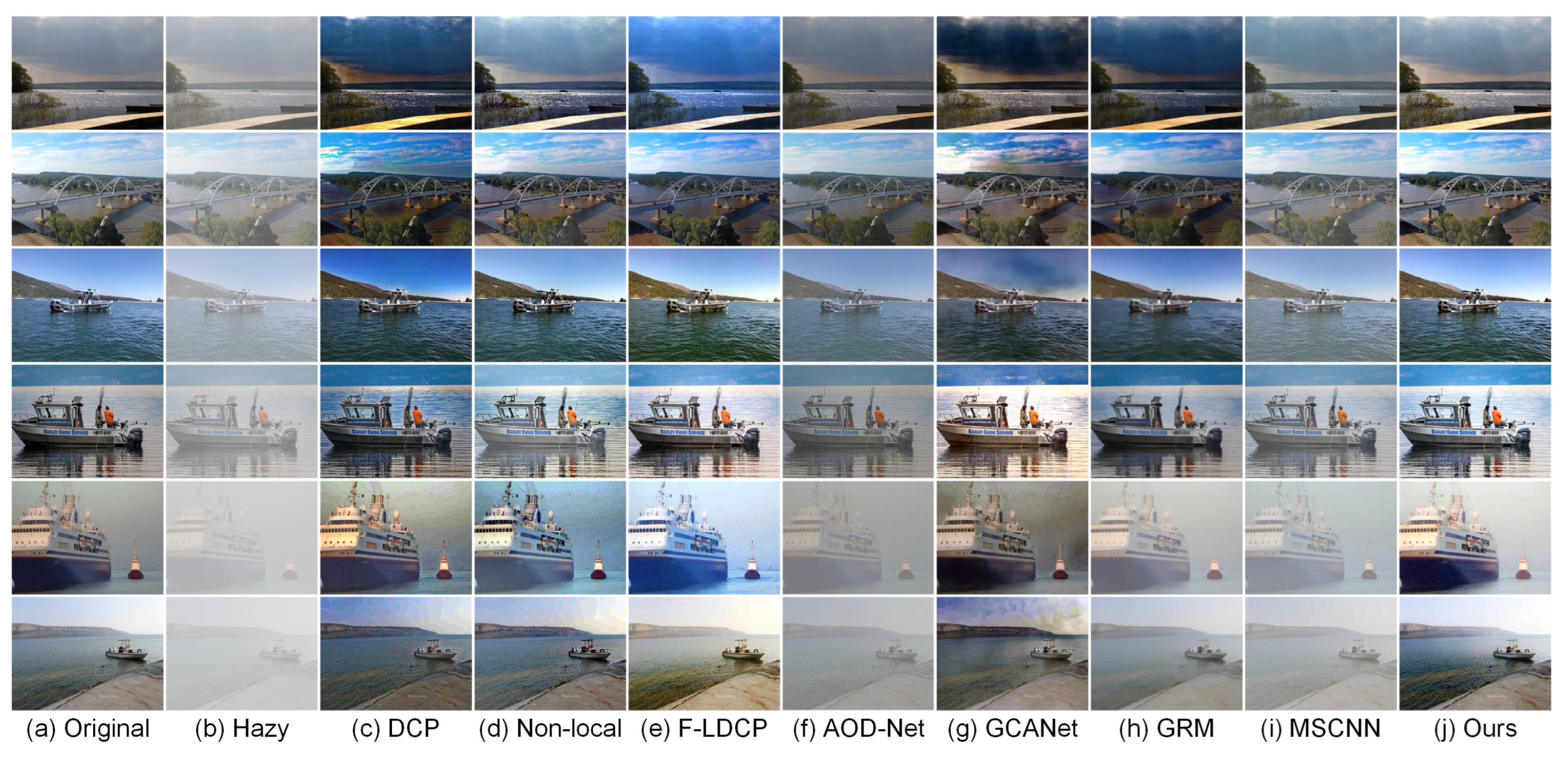

5.3. Dehazing Experiments on Synthetic Hazy Images

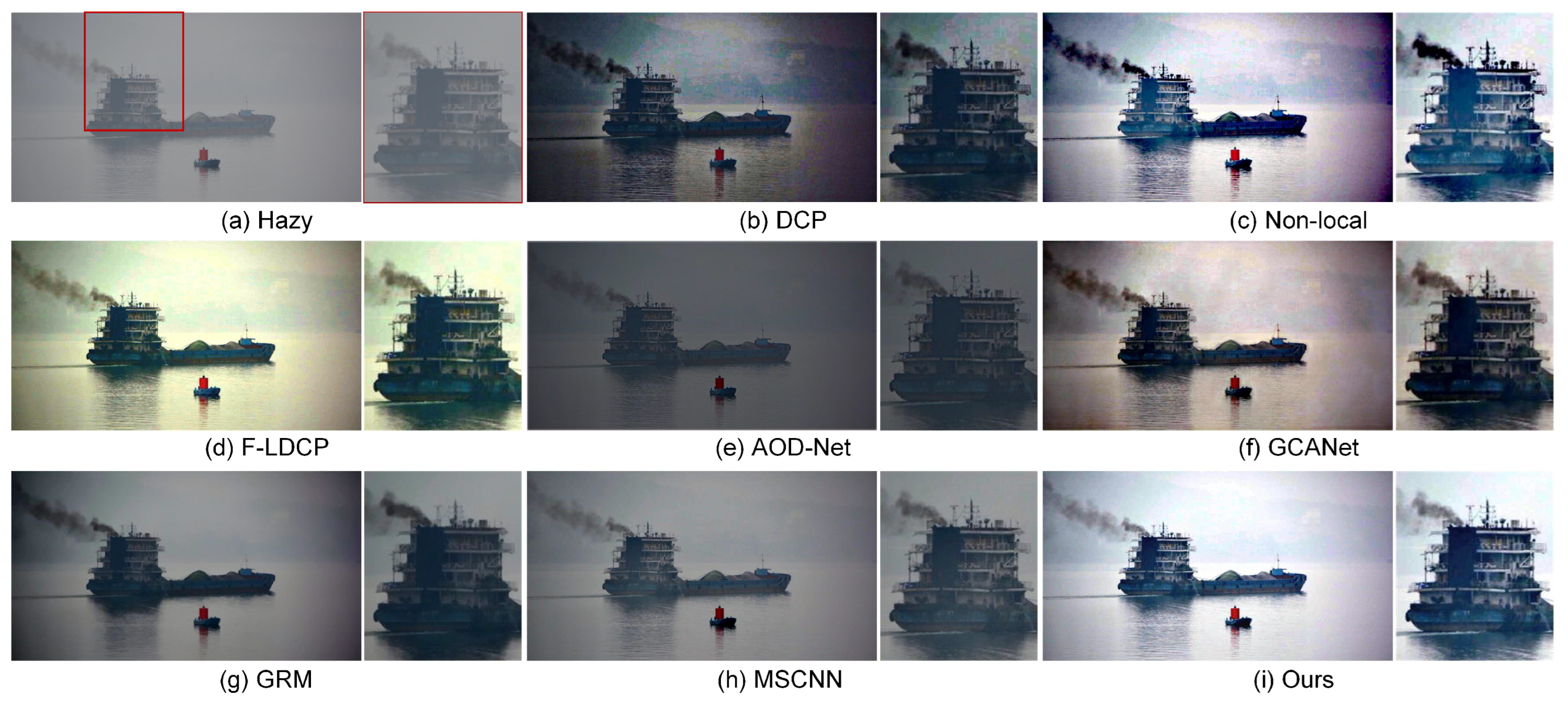

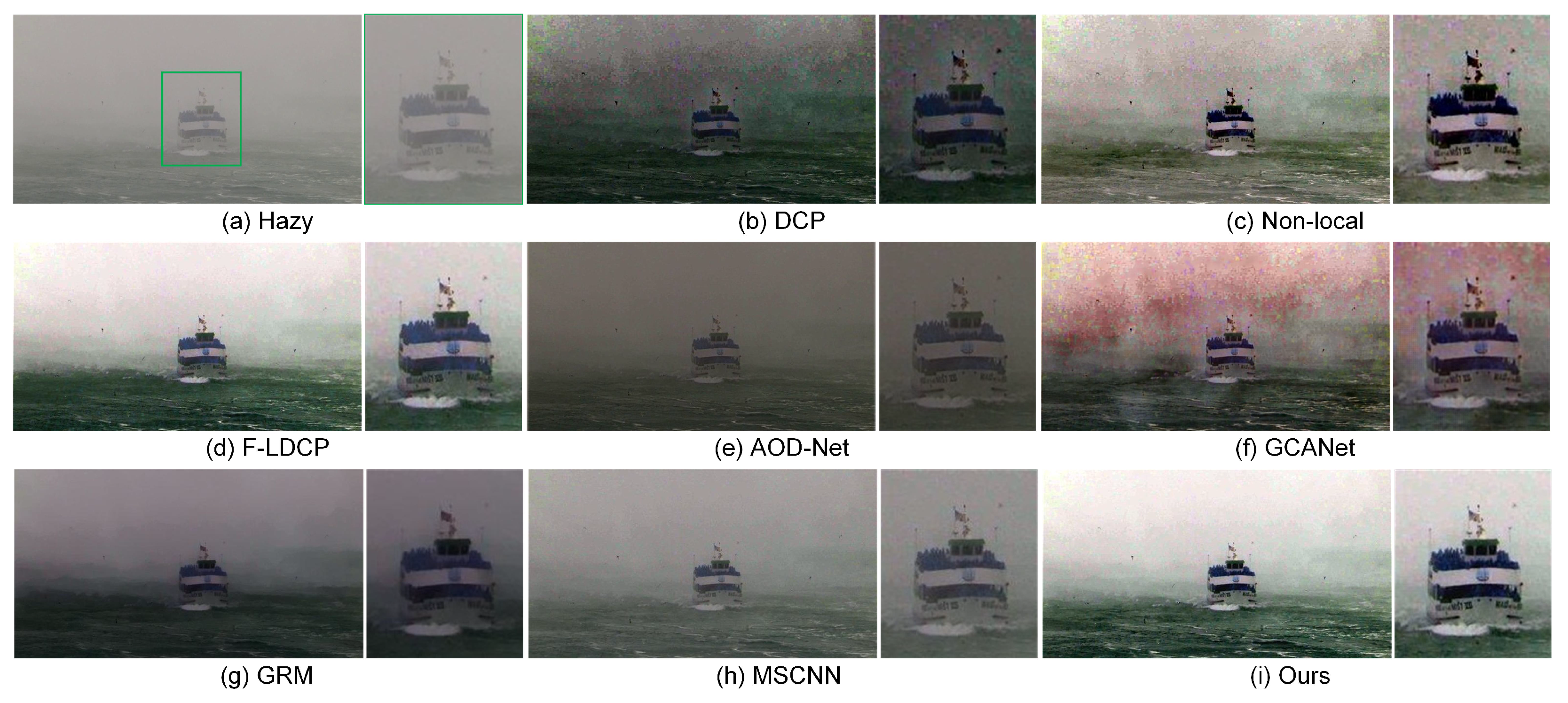

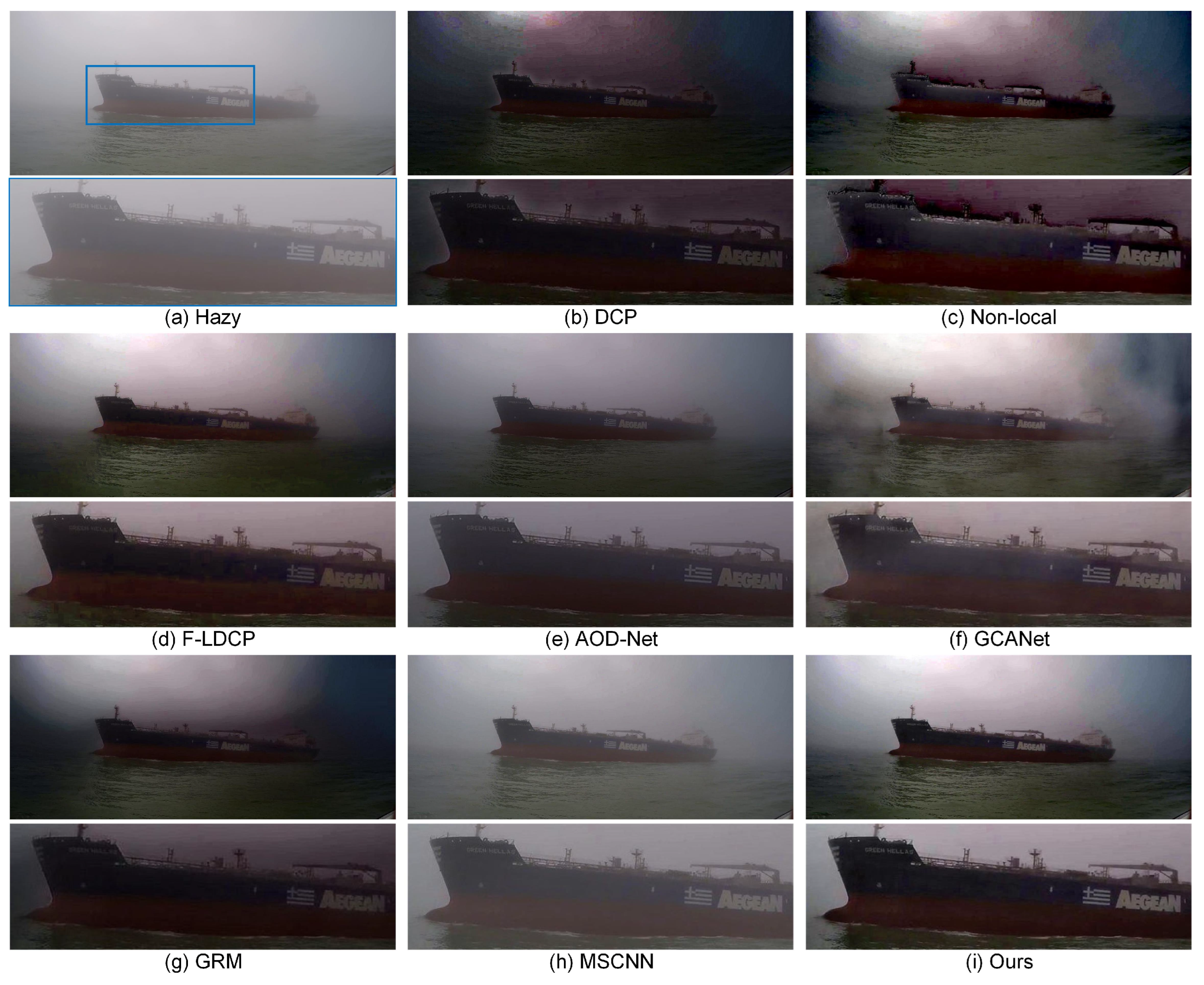

5.4. Dehazing Experiments on Realistic Hazy Images

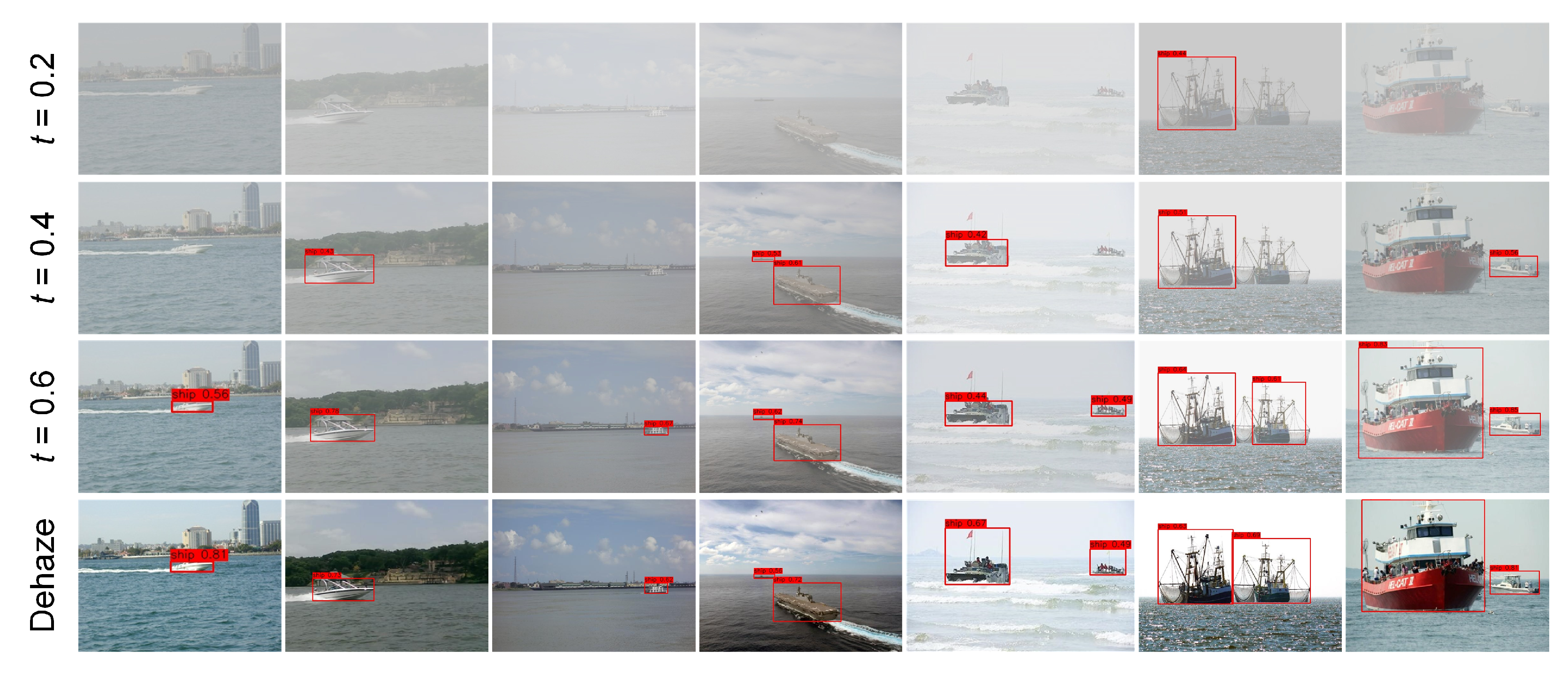

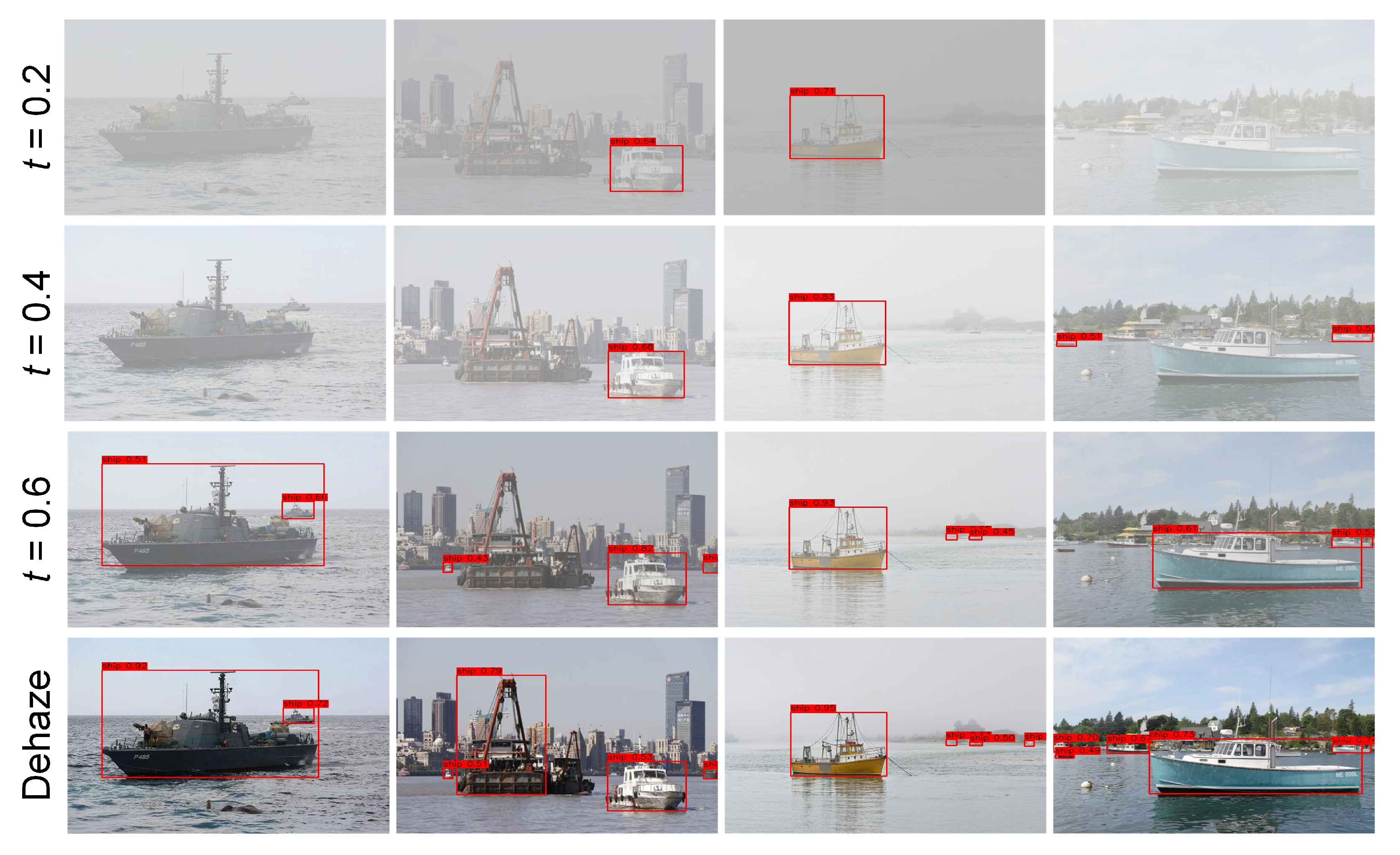

5.5. Influences of Dehazing on Ship Detection

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, R.W.; Lu, Y.; Guo, Y.; Ren, W.; Zhu, F.; Lv, Y. AiOENet: All-in-one low-visibility enhancement to improve visual perception for intelligent marine vehicles under severe weather conditions. IEEE Trans. Intell. Veh. 2024, 9, 3811–3826.

2. Qiao, Y.; Yin, J.; Wang, W.; Duarte, F.; Yang, J.; Ratti, C. Survey of deep learning for autonomous surface vehicles in marine environments. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3678–3701.

3. Stark, J.A. Adaptive image contrast enhancement using generalizations of histogram equalization. IEEE Trans. Image Process. 2000, 9, 889–896.

4. Soni, B.; Mathur, P. An improved image dehazing technique using CLAHE and guided filter. In Proceedings of the International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 27–28 February 2020; pp. 902–907.

5. Zhou, J.; Zhou, F. Single image dehazing motivated by retinex theory. In Proceedings of the 2nd International Symposium on Instrumentation and Measurement, Sensor Network and Automation (IMSNA), Toronto, ON, Canada, 20–22 December 2013; pp. 243–247.

6. Land, E.H. The retinex. Am. Sci. 1964, 52, 247–264.

7. Ancuti, C.O.; Ancuti, C. Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 2013, 22, 3271–3282.

8. Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 27, 1–9.

9. Berman, D.; Treibitz, T.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

10. Fattal, R. Dehazing using color-lines. ACM Trans. Graph. 2014, 34, 1–14.

11. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

12. Nair, D.; Sankaran, P. Color image dehazing using surround filter and dark channel prior. J. Vis. Commun. Image Represent. 2018, 50, 9–15.

13. Zhu, Y.; Tang, G.; Zhang, X.; Jiang, J.; Tian, Q. Haze removal method for natural restoration of images with sky. Neurocomputing 2018, 275, 499–510.

14. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

15. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.-H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 154–169.

16. Golts, A.; Freedman, D.; Elad, M. Unsupervised single image dehazing using dark channel prior loss. IEEE Trans. Image Process. 2019, 29, 2692–2701.

17. Chen, W.; Fang, H.; Ding, J.; Kuo, S. PMHLD: Patch map-based hybrid learning DehazeNet for single image haze removal. IEEE Trans. Image Process. 2020, 29, 6773–6788.

18. Liu, R.W.; Guo, Y.; Lu, Y.; Chui, K.T.; Gupta, B.B. Deep network-enabled haze visibility enhancement for visual IoT-driven intelligent transportation systems. IEEE Trans. Ind. Inform. 2023, 19, 1581–1591.

19. Sahu, G.; Seal, A.; Bhattacharjee, D.; Frischer, R.; Krejcar, O. A novel parameter adaptive dual channel MSPCNN based single image dehazing for intelligent transportation systems. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3027–3047.

20. Park, M.-H.; Choi, J.-H.; Lee, W.-J. Object detection for various types of vessels using the YOLO algorithm. J. Adv. Mar. Eng. Technol. 2024, 48, 81–88.

21. Liao, M.; Lu, Y.; Li, X.; Di, S.; Liang, W.; Chang, V. An unsupervised image dehazing method using patch-line and fuzzy clustering-line priors. IEEE Trans. Fuzzy Syst. 2024, 32, 3381–3395.

22. Guo, Y.; Lu, Y.; Liu, R.W.; Yang, M.; Chui, K.T. Low-light image enhancement with regularized illumination optimization and deep noise suppression. IEEE Access 2020, 8, 145297–145315.

23. Yang, M.; Nie, X.; Liu, R.W. Coarse-to-fine luminance estimation for low-light image enhancement in maritime video surveillance. In Proceedings of the IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 299–304.

24. Bredies, K.; Kunisch, K.; Pock, T. Total generalized variation. SIAM J. Imaging Sci. 2010, 3, 492–526.

25. Xu, L.; Yan, Q.; Xia, Y.; Jia, J. Structure extraction from texture via relative total variation. ACM Trans. Graph. 2012, 31, 1–10.

26. McCartney, E.J. Optics of the Atmosphere: Scattering by Molecules and Particles; John Wiley and Sons, Inc.: New York, NY, USA, 1976.

27. Shu, Q.; Wu, C.; Xiao, Z.; Liu, R.W. Variational regularized transmission refinement for image dehazing. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 2781–2785.

28. Gabay, D.; Mercier, B. A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 1976, 2, 17–40.

29. Goldstein, T.; Osher, S. The split Bregman method for l1-regularized problems. SIAM J. Imaging Sci. 2009, 2, 323–343.

30. Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

31. Chambolle, A.; Pock, T. A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 2011, 40, 120–145.

32. Condat, L. A primal–dual splitting method for convex optimization involving Lipschitzian, proximable and linear composite terms. J. Optim. Theory Appl. 2013, 158, 460–479.

33. Liu, Q.; Xiong, B.; Yang, D.; Zhang, M. A generalized relative total variation method for image smoothing. Multimed. Tools Appl. 2016, 75, 7909–7930.

34. He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

35. Zheng, S.; Sun, J.; Liu, Q.; Qi, Y.; Zhang, S. Overwater image dehazing via cycle-consistent generative adversarial network. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

36. Chen, C.; Do, M.N.; Wang, J. Robust image and video dehazing with visual artifact suppression via gradient residual minimization. In Computer Vision—ECCV 2016; Springer: Cham, Switzerland, 2016.

37. Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

38. Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated context aggregation network for image dehazing and deraining. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–10 January 2019; pp. 1375–1383.

39. Wang, Z.; Bovik, A.C. Mean squared error: Love it or leave it? A new look at signal fidelity measures. IEEE Signal Process. Mag. 2009, 26, 98–117.

40. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

41. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

42. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

43. Xu, J.; Hu, X.; Zhu, L.; Heng, P.-A. Unifying physically-informed weather priors in a single model for image restoration across multiple adverse weather conditions. IEEE Trans. Circuits Syst. Video Technol. 2025, 1.

44. He, W.; Wang, M.; Chen, Y.; Zhang, H. An unsupervised dehazing network with hybrid prior constraints for hyperspectral image. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5514715.

| Methods | PSNR | SSIM | FSIM | |
|---|---|---|---|---|
| DCP [11] | 14.70 ± 2.09 | 0.807 ± 0.044 | 0.913 ± 0.028 | 0.909 ± 0.029 |
| Non-local [9] | 18.53 ± 2.72 | 0.862 ± 0.051 | 0.926 ± 0.034 | 0.922 ± 0.034 |
| F-LDCP [13] | 20.91 ± 2.12 | 0.916 ± 0.034 | 0.956 ± 0.013 | 0.951 ± 0.015 |
| GRM [36] | 16.88 ± 1.42 | 0.761 ± 0.078 | 0.885 ± 0.033 | 0.882 ± 0.033 |
| AOD-Net [37] | 18.15 ± 1.01 | 0.870 ± 0.034 | 0.884 ± 0.017 | 0.882 ± 0.017 |
| GCANet [38] | 19.07 ± 4.37 | 0.874 ± 0.069 | 0.938 ± 0.037 | 0.930 ± 0.041 |
| MSCNN [15] | 18.71 ± 2.14 | 0.874 ± 0.044 | 0.939 ± 0.014 | 0.937 ± 0.014 |
| Ours | 21.92 ± 2.35 | 0.933 ± 0.019 | 0.971 ± 0.012 | 0.967 ± 0.013 |
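Each table entry is reported as a mean ± standard deviation over the test images. The sketch below shows how a PSNR entry of this form could be computed. The function names are hypothetical, `peak=1.0` assumes images scaled to [0, 1], and the population standard deviation is an assumption, since the convention used for the table is not stated here.

```python
import numpy as np

def psnr(reference, restored, peak=1.0):
    """Peak signal-to-noise ratio in dB for images with the given peak value."""
    diff = np.asarray(reference, dtype=float) - np.asarray(restored, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")                 # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

def summarize(scores):
    """Format per-image scores as 'mean ± std' (population std, an assumption)."""
    scores = np.asarray(scores, dtype=float)
    return f"{scores.mean():.2f} ± {scores.std():.2f}"
```

For example, a restored image that differs from its reference by a constant 0.1 has a mean squared error of 0.01 and therefore a PSNR of 20 dB.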

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, P.; Qiao, D.; Luo, C.; Wan, D.; Li, G. Hybrid Regularized Variational Minimization Method to Promote Visual Perception for Intelligent Surface Vehicles Under Hazy Weather Condition. J. Mar. Sci. Eng. 2025, 13, 1991. https://doi.org/10.3390/jmse13101991
